
Text

SuperGards Coaching Institute: The Best CAT Coaching in Chandigarh

SuperGards Coaching Institute offers the best CAT exam preparation in Chandigarh, with unparalleled facilities and unwavering support to propel you towards success. Committed to excellence, we provide comprehensive mock tests, curated study materials, engaging quizzes, and lifelong career support to ensure you are ready for the CAT exam.

Achieving Excellence Together

As of 2023, over 100 of our students have succeeded in the CAT exam, a testament to our proven methods and dedicated approach. At SuperGards, we prioritize your success and offer personalized guidance every step of the way.

Our Top Facilities:

Mock Tests

Study Materials

PDF Resources

Doubt Session Classes

1:1 Mentor Faculty

Choose Excellence, Choose SuperGards

In three years, we have established ourselves as a leading coaching institute with excellent CAT results. Don't settle for anything less than the best. Join SuperGards Coaching Institute today and unlock your potential for CAT exam success. With our unmatched facilities and track record of results, your journey to success begins here.

0 notes

Text

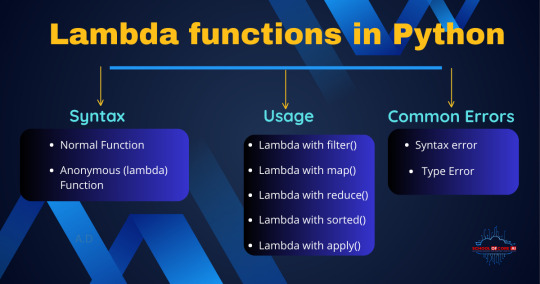

Exploring Python's Lambda Functions and Best Practices for Optimization in 2024

Python, a language known for its simplicity and versatility, continues to thrive as a programming powerhouse in 2024. Amidst its arsenal of features, lambda functions stand out as a concise and expressive tool, particularly when employed judiciously. In this blog post, we will delve into the nuances of lambda functions and outline the best practices for optimizing their usage in the ever-evolving Python landscape.

Lambda Functions: A Deeper Dive

Lambda functions, often hailed as anonymous functions, embody brevity and efficiency. Defined using the lambda keyword, they provide a succinct way to create small, ephemeral functions. The basic syntax is as follows:
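As a minimal sketch of the general form (the name square is only an illustration, not from the original post):

```python
# General form: lambda <arguments>: <expression>
# The single expression is evaluated and returned when the lambda is called.
square = lambda x: x * x  # illustrative name, chosen for this sketch

print(square(4))  # 16
```

A lambda can take any number of arguments but holds exactly one expression, with no statements and no explicit return.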

Consider the following example, where a lambda function adds two numbers:
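One way such an example might look (the name add is an assumption made for this sketch):

```python
# A lambda that takes two arguments and returns their sum.
add = lambda a, b: a + b  # illustrative name

print(add(3, 5))  # 8
```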

Lambda functions find their sweet spot in scenarios where a function is needed momentarily, often serving as arguments for higher-order functions like map(), filter(), and sorted().

Best Practices for Lambda Functions in 2024

1. Simplicity with Clarity

While brevity is a hallmark of lambda functions, clarity should not be compromised. Aim for simplicity without sacrificing understanding. If a lambda function becomes too complex, opting for a regular function may enhance readability.

2. Harnessing the Power of Higher-Order Functions

Lambda functions truly shine when paired with higher-order functions. Utilize them with map(), filter(), and sorted() for concise and expressive code.
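A short sketch of this pairing, using made-up sample data (the lists numbers and words are illustrative only):

```python
numbers = [5, 2, 8, 1, 9]
words = ["banana", "fig", "cherry"]

# map(): apply the lambda to every element.
doubled = list(map(lambda n: n * 2, numbers))

# filter(): keep only elements for which the lambda returns True.
evens = list(filter(lambda n: n % 2 == 0, numbers))

# sorted(): use the lambda as the sort key (here, word length).
by_length = sorted(words, key=lambda w: len(w))

print(doubled)    # [10, 4, 16, 2, 18]
print(evens)      # [2, 8]
print(by_length)  # ['fig', 'banana', 'cherry']
```

Note that sorted() is stable, so banana stays ahead of cherry because both have the same length.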

3. Avoiding Complex Logic

Lambda functions are designed for simplicity. Steer clear of complex logic within lambda expressions; reserve them for straightforward tasks.

4. Consider Alternatives for Complexity

When facing complex operations or multiple expressions, consider using regular function definitions. This enhances code readability and maintainability.
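To make the trade-off concrete, here is a hypothetical grading rule written both ways (the names and thresholds are invented for this sketch):

```python
# Hard to read: nested conditional expressions packed into one lambda.
grade_lambda = lambda score: "A" if score >= 90 else ("B" if score >= 75 else "C")


# Easier to read, document, and test as a regular function.
def grade(score):
    """Return a letter grade for a numeric score."""
    if score >= 90:
        return "A"
    if score >= 75:
        return "B"
    return "C"


print(grade(92), grade(80), grade(40))  # A B C
```

Both versions behave the same; the regular function simply leaves room for a docstring, a meaningful name in tracebacks, and further branches.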

5. Optimization: Embrace Built-in Functions

Python's built-in functions are optimized for performance. Prefer them over custom lambda functions, especially for straightforward operations like summation.
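For summation, the comparison might look like this (a sketch; the list values is sample data):

```python
from functools import reduce

values = list(range(1, 101))

# Custom approach: fold the list with a lambda.
total_reduce = reduce(lambda acc, v: acc + v, values, 0)

# Built-in approach: shorter, clearer, and implemented in C in CPython.
total_builtin = sum(values)

print(total_reduce, total_builtin)  # 5050 5050
```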

6. Testing and Profiling for Performance Gains

In performance-critical applications, testing and profiling are paramount. Use tools like timeit and cProfile to measure execution time and profile code for potential optimizations.
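A minimal timing sketch with the standard timeit module follows. The two helper functions are hypothetical, and the actual numbers will vary by machine, so no timings are shown:

```python
import timeit
from functools import reduce

values = list(range(1000))


def with_builtin():
    # Built-in summation.
    return sum(values)


def with_lambda():
    # The same result via reduce() and a lambda.
    return reduce(lambda acc, v: acc + v, values, 0)


# Run each callable 200 times and report the total elapsed seconds.
builtin_time = timeit.timeit(with_builtin, number=200)
lambda_time = timeit.timeit(with_lambda, number=200)

print(f"sum():    {builtin_time:.4f} s")
print(f"reduce(): {lambda_time:.4f} s")
```

For a per-function breakdown of a whole script, cProfile can be run from the command line as python -m cProfile followed by the script name.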

Conclusion

As Python advances into 2024, the elegance and efficiency of lambda functions remain pivotal. By adhering to best practices and optimizing strategically, developers can harness the power of lambda functions effectively. For those looking to enhance their Python proficiency, the Python web development and DSA courses at Delhi’s SCAI Institute provide a structured and comprehensive learning journey. This ensures that Python code not only stays clean and readable but also performs optimally in the dynamic landscape of 2024 and beyond.

#python#django#python web development#software engineering#technology#artificial intelligence#machine learning

0 notes

Text

Deep Generative Models in Deep Learning: Navigating the Trends of 2024

In the rapidly advancing field of deep learning, the spotlight continues to shine on deep generative models as we usher in the transformative era of 2024. This blog takes a deep dive into the current state of these models, their burgeoning applications, and the pivotal role they play in reshaping how we approach creativity, data synthesis, and problem-solving in the contemporary landscape of artificial intelligence.

Understanding Deep Generative Models:

Deep generative models represent a revolutionary approach to machine learning by focusing on the generation of new data instances that closely resemble existing datasets. In the dynamic environment of 2024, these models, particularly those rooted in deep learning architectures, are evolving to capture complex patterns and distributions in data, unlocking new possibilities for innovation.

Types of Deep Generative Models:

Variational Autoencoders (VAEs):

Variational Autoencoders have undergone significant advancements in 2024, refining their ability to encode and generate diverse data types. From images to text and three-dimensional objects, VAEs are becoming increasingly versatile, driving progress in various domains such as healthcare and finance.

Generative Adversarial Networks (GANs):

Generative Adversarial Networks, among the earliest widely adopted deep generative models, continue to feature prominently in the landscape. In 2024, GANs have seen improvements in terms of stability, training efficiency, and applications across industries. From hyper-realistic image generation to aiding in data augmentation, GANs remain at the forefront of innovation.

Flow-Based Models:

Flow-based models have undergone significant enhancements, particularly in handling sequential data and modeling complex distributions. Their applications in speech synthesis, language modeling, and financial data generation are expanding, as researchers unlock the potential of these models in real-world scenarios.

Applications in 2024:

Data Augmentation:

Deep generative models are increasingly being harnessed for data augmentation, addressing the perennial challenge of limited labeled data. In 2024, researchers and practitioners are leveraging these models to generate diverse and realistic datasets, thereby enhancing the robustness and generalization capabilities of machine learning models.

Content Creation:

The creative industry is witnessing a paradigm shift with the integration of deep generative models into the content creation process. In 2024, artists and designers are utilizing these models to produce realistic images, videos, and music. AI-assisted content creation tools are emerging, facilitating novel approaches to artistic expression and revolutionizing the creative workflow.

Drug Discovery and Molecular Design:

The pharmaceutical sector is experiencing a renaissance in drug discovery with the integration of generative models. In 2024, researchers are employing these models to generate molecular structures with specific properties, expediting the identification of potential drug candidates. This acceleration in the drug development pipeline holds promise for addressing global health challenges more rapidly.

Deepfake Detection and Cybersecurity:

As deepfakes become more sophisticated, the need for robust detection methods is paramount. Deep generative models are now actively involved in developing advanced deepfake detection systems. In 2024, we are witnessing the integration of generative models to enhance cybersecurity measures, protecting individuals and organizations from the malicious use of AI-generated content.

Challenges and Future Directions:

While deep generative models are making remarkable strides, they are not without their challenges. Interpretability, ethical considerations, and potential biases in generated content are areas of concern that researchers are actively addressing. The quest for more interpretable and ethical AI systems is an ongoing journey, and advancements in these areas will likely shape the trajectory of deep generative models in the years to come.

Ethical Considerations in Deep Generative Models:

As deep generative models become more prevalent, ethical considerations become increasingly important. The responsible use of these models, addressing issues like bias and fairness, is a priority. In 2024, researchers and industry practitioners are actively exploring ways to mitigate ethical concerns, ensuring that the benefits of deep generative models are accessible to all without perpetuating societal inequalities.

Interpretable AI:

The lack of interpretability in deep generative models has been a longstanding challenge. In 2024, efforts are underway to enhance the interpretability of these models, making their decision-making processes more transparent and understandable. Interpretable AI not only fosters trust but also enables users to have a deeper understanding of the generated outputs, particularly in critical applications such as healthcare and finance.

Conclusion:

As we navigate the dynamic landscape of 2024, deep generative models stand as powerful tools reshaping the contours of artificial intelligence. From data augmentation to content creation and drug discovery, the applications of these models are diverse and transformative. However, challenges persist, and the ethical considerations surrounding their use require continuous attention.

Looking ahead, deep generative models in 2024 are poised to redefine the boundaries of what is achievable in artificial intelligence. Researchers and practitioners are at the forefront of innovation, pushing the limits of these models and unlocking new possibilities. As we embrace this era of unprecedented technological advancements, deep generative models are set to play a pivotal role in shaping the future of AI.

Explore more Courses-

#deep learning#deep science#artificial intelligence#machine learning#technology#programming#science#career#generative ai

0 notes

Text

Master SQL with our certification course.

Like this post and leave a comment.

#sql#nosql#mastering#programming#technology#artificial intelligence#data analytics#science#career advice#career#education#datascience#sql course#database

1 note


Text

The Next Wave: Exploring AI and Machine Learning Roadmap in 2024

What is AI and Machine Learning?

Artificial Intelligence (AI):

AI is a broad area of computer science that focuses on creating systems or machines capable of performing tasks that typically require human intelligence. These tasks include problem-solving, learning, perception, understanding natural language, and even decision-making. AI can be categorized into two types:

Narrow or Weak AI: This type of AI is designed to perform a specific task, such as speech recognition or image classification. It operates within a limited domain and doesn't possess generalized intelligence.

General or Strong AI: This is the hypothetical idea of AI possessing the ability to understand, learn, and apply knowledge across various domains, similar to human intelligence. Strong AI, however, is more a concept than a current reality.

Machine Learning (ML):

ML is a subset of AI that focuses on developing algorithms and statistical models that enable computers to perform a task without explicit programming. Instead of being explicitly programmed to perform a task, a machine learning system learns from data and improves its performance over time. ML can be categorized into three main types:

Supervised Learning: The model is trained on a labeled dataset, where the algorithm is provided with input-output pairs. It learns to map inputs to outputs, allowing it to make predictions or classifications on new, unseen data.

Unsupervised Learning: The model is given unlabeled data and is tasked with finding patterns or structures within it. Clustering and dimensionality reduction are common applications of unsupervised learning.

Reinforcement Learning: The algorithm learns by interacting with an environment. It receives feedback in the form of rewards or penalties, allowing it to learn optimal strategies for decision-making.
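As a toy illustration of the supervised case described above, the sketch below fits a straight line to labeled input-output pairs and then predicts for an unseen input. The data and the choice of least-squares line fitting are assumptions made for this example, not something prescribed by the post:

```python
# Labeled training data: inputs xs with known outputs ys (generated from y = 2x + 1).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

n = len(xs)
mean_x = sum(xs) / n
mean_y = sum(ys) / n

# Closed-form least-squares fit of a line y = slope * x + intercept.
covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
variance = sum((x - mean_x) ** 2 for x in xs)
slope = covariance / variance
intercept = mean_y - slope * mean_x


def predict(x):
    """Map a new, unseen input to a predicted output."""
    return slope * x + intercept


print(slope, intercept)  # 2.0 1.0
print(predict(10.0))     # 21.0
```

The model is never told the rule y = 2x + 1; it recovers the slope and intercept from the examples alone.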

The Cutting Edge: AI and Machine Learning Roadmap

We stand at the threshold of 2024, and the landscape of Artificial Intelligence (AI) and Machine Learning (ML) unfurls a roadmap teeming with possibilities and innovation. This blog endeavors to elucidate the key trends, advancements, and transformative shifts that mark the AI and ML journey in the year 2024.

Continued Advancements in Deep Learning:

The year 2024 sees Deep Learning, a vanguard in ML, continuing its relentless march forward. Expect breakthroughs in neural network architectures, optimization algorithms, and training techniques, propelling the boundaries of what's achievable in AI applications.

AI for Edge Computing and Federated Learning:

Edge AI and Federated Learning take center stage as the paradigm shifts toward decentralized computing. In 2024, we witness a surge in the development of AI models capable of operating on edge devices, fostering real-time processing, and reducing reliance on centralized cloud servers.
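One common way to combine models trained on separate devices is federated averaging, where a server averages the clients' weights in proportion to how much data each client holds. The sketch below assumes that approach; the function name and the sample numbers are hypothetical:

```python
def federated_average(client_weights, client_sizes):
    """Average per-client weight vectors, weighted by each client's dataset size."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(weights[i] * size for weights, size in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Two edge devices with locally trained weights; the second holds three times more data.
client_weights = [[0.0, 1.0], [1.0, 3.0]]
client_sizes = [1, 3]

global_weights = federated_average(client_weights, client_sizes)
print(global_weights)  # [0.75, 2.5]
```

Only the weight vectors leave the devices in this scheme; the raw training data stays local.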

Explainable AI (XAI) Reaches Maturity:

Explainability becomes a paramount concern in AI systems. In 2024, Explainable AI (XAI) matures, providing a clearer lens into the decision-making processes of complex algorithms. This transparency is crucial, especially in fields like healthcare, finance, and autonomous systems.

AI Ethics Takes Center Stage:

The ethical dimensions of AI gain prominence. With increasing societal reliance on AI, 2024 emphasizes the need for responsible AI development. Stricter ethical guidelines, frameworks, and regulations come into play to ensure fairness, transparency, and accountability in AI applications.

AI in Healthcare Revolutionized:

The intersection of AI and healthcare witnesses a revolution in 2024. Advanced diagnostic tools, personalized medicine, and predictive analytics powered by machine learning algorithms redefine patient care. AI becomes an indispensable ally in the quest for improved healthcare outcomes.

Quantum Computing Impact on ML:

The emergence of quantum computing begins to leave its mark on ML. In 2024, we witness the exploration of quantum algorithms for machine learning tasks, which in theory promise large speedups on some problems that are computationally intractable for classical machines.

AI Democratization and Accessibility:

The democratization of AI tools and technologies reaches new heights. In 2024, user-friendly platforms, open-source frameworks, and simplified interfaces empower a broader demographic to harness the capabilities of AI and ML, fostering innovation across diverse domains.

AI-powered Cybersecurity:

As cyber threats become more sophisticated, AI steps up as a formidable ally in cybersecurity. Machine learning models in 2024 demonstrate enhanced capabilities in detecting and thwarting cyberattacks, fortifying digital landscapes against evolving threats.

Hybrid Models and Interdisciplinary Collaboration:

The synergy between AI, ML, and other disciplines gains traction. In 2024, hybrid models that amalgamate different AI approaches find applications in diverse fields. Interdisciplinary collaboration becomes the norm, as AI professionals work alongside experts in various domains to solve complex problems.

Continuous Learning and Skill Evolution:

The pace of innovation in AI demands a commitment to continuous learning. In 2024, professionals in the field prioritize ongoing skill development, staying abreast of the latest advancements, and engaging in collaborative communities to foster a culture of knowledge exchange.

Conclusion:

The AI and Machine Learning roadmap for 2024 is a journey marked by innovation, ethical considerations, and the democratization of technology. As we navigate this dynamic landscape, the convergence of technological advancements and societal responsibility will define the narrative of progress. Whether in healthcare, cybersecurity, or interdisciplinary collaboration, the year 2024 unfolds a tapestry of possibilities, inviting professionals and enthusiasts alike to contribute to the ongoing evolution of AI and ML, shaping a future where intelligent systems coalesce seamlessly with the aspirations of a rapidly advancing world.

#machine learning#artificial intelligence#ai#technology#computer science#science#deep science#techinnovation#tech industry#technically#programming#software development#deep learning#datascience#data analytics#python#roadmap#future#futuristic#2024

0 notes

Text

Enhance career growth with expertise in LLM and Generative AI – top tech skills in demand

What are the differences between generative AI and large language models? How are these two buzzworthy technologies related? In this article, we’ll explore their connection.

To help explain the concept, I asked ChatGPT to give me some analogies comparing generative AI to large language models (LLMs), and as the stand-in for generative AI, ChatGPT tried to take all the personality for itself. For example, it suggested, “Generative AI is the chatterbox at the cocktail party who keeps the conversation flowing with wild anecdotes, while LLMs are the meticulous librarians cataloging every word ever spoken at every party.” I mean, who sounds more fun? Well, the joke’s on you, ChatGPT, because without LLMs, you wouldn’t exist.

Text-generating AI tools like ChatGPT and LLMs are inextricably connected. LLMs have grown rapidly in size over the past few years, and they are the models that power text-generating AI, trained on vast amounts of data. In fact, we would have nothing like ChatGPT without data and the models to process it.

Large Language Models (LLMs) in 2024

Large Language Models, such as GPT-3 (Generative Pre-trained Transformer 3), were a significant breakthrough in natural language processing and artificial intelligence. These models are characterized by their massive size, often involving billions or even trillions of parameters, which are learned from vast amounts of diverse data.

Here are some key aspects of LLMs like GPT-3:

Architecture: GPT-3, and models like it, utilize transformer architectures. Transformers have proven to be highly effective in processing sequential data, making them well-suited for natural language tasks.

Scale: One defining characteristic of LLMs is their scale. GPT-3, for instance, has 175 billion parameters, allowing it to capture and generate highly complex patterns in data.

Training Data: These models are pre-trained on massive datasets from the internet, encompassing a wide range of topics and writing styles. This enables them to understand and generate human-like text across various domains.

Applications: LLMs find applications in various fields, including natural language understanding, text generation, translation, summarization, and more. They can be fine-tuned for specific tasks to enhance their performance in specialized domains.

Challenges: Despite their capabilities, LLMs face challenges such as biases present in the training data, ethical concerns related to content generation, and potential misuse.

Energy Consumption: Training and running large language models require significant computational resources, raising concerns about their environmental impact and energy consumption.
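To give a rough sense of the scale mentioned above, here is a back-of-the-envelope calculation of the memory needed just to store 175 billion parameters. It assumes 2 bytes per parameter (16-bit floats) or 4 bytes (32-bit floats) and ignores activations, optimizer state, and any compression:

```python
params = 175_000_000_000  # 175 billion, the GPT-3 figure quoted above

bytes_fp16 = params * 2  # 16-bit floats: 2 bytes per parameter
bytes_fp32 = params * 4  # 32-bit floats: 4 bytes per parameter

# Convert to gigabytes (1 GB = 10**9 bytes).
print(bytes_fp16 / 1e9)  # 350.0
print(bytes_fp32 / 1e9)  # 700.0
```

Even before any computation happens, weights of this size do not fit on a single consumer device, which is part of why the resource and energy concerns arise.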

It is reasonable to assume that advancements in LLMs have continued. Researchers and organizations often work on improving the architecture, training methodologies, and applications of large language models. This may include addressing challenges such as bias and ethical concerns, and fine-tuning models for specific tasks.

For the most accurate and recent information, consider checking sources such as AI research publications, announcements from organizations like OpenAI, Google, and others involved in AI research, as well as updates from major AI conferences. Additionally, online forums and communities dedicated to artificial intelligence discussions may provide insights into the current state of LLMs and related technologies.

Generative AI in 2024

Generative AI refers to models and techniques that can generate new content, often in the form of text, images, audio, or other data types. Some notable approaches include Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and large language models like GPT (Generative Pre-trained Transformer).

Some trends in 2024

Advancements in Language Models: Large language models like GPT-3 have demonstrated impressive text generation capabilities. Improvements in model architectures, training methodologies, and scale may continue to enhance the performance of such models.

Cross-Modal Generation: Research on models capable of generating content across multiple modalities (text, image, audio) has been ongoing. This involves developing models that can understand and generate diverse types of data.

Conditional Generation: Techniques for conditional generation, where the generated content is influenced by specific inputs or constraints, have been a focus. This allows for more fine-grained control over the generated output.

Ethical Considerations: As generative models become more powerful, there is an increased awareness of ethical concerns related to content generation. This includes addressing issues such as bias in generated content and preventing the misuse of generative models for malicious purposes.

Customization and Fine-Tuning: There is a growing interest in enabling users to customize and fine-tune generative models for specific tasks or domains. This involves making these models more accessible to users with varying levels of expertise.

Our Generative AI with LLM Course

Start your career with the course on Generative AI with Large Language Models (LLMs) offered by the School of Core AI Institute. The course covers a range of topics related to the theory, applications, and ethical considerations of Generative AI and LLMs. The curriculum includes:

Fundamentals: Understanding the basics of generative models, LLM architectures, and their applications.

Model Training: Exploring techniques for training large language models and generative algorithms.

Applications: Practical applications in various domains, including natural language processing, content generation, and creative arts.

Ethical Considerations: Addressing ethical issues related to biases, responsible use, and transparency in AI systems.

Hands-on Projects: Engaging students in hands-on projects to apply their knowledge and develop skills in building and fine-tuning generative models.

Current Developments: Staying updated on the latest advancements in the field through discussions on recent research papers and industry trends.

Conclusion:

The School of Core AI is the best institute in Delhi NCR, with a standard curriculum for AI studies. Large Language Models (LLMs) like GPT-3 have showcased immense natural language processing capabilities, with billions of parameters enabling diverse applications. Challenges include biases and ethical concerns. Generative AI has advanced in cross-modal content generation, offering versatility across text, images, and audio. Conditional generation provides control, contributing to applications in art, design, and healthcare. Ethical considerations, including bias mitigation, are paramount. LLMs and Generative AI demonstrate remarkable potential, but ongoing research aims to address challenges, refine models, and ensure responsible use. For the latest updates, consult recent publications and official announcements in the rapidly evolving field of AI.

#artificial intelligence#technology#machine learning#ai generated#generative ai#ai#datascience#data analytics#python#course#programming#coding#robotics#innovation#automation#software#career#education#career advice#management#internship#courses

0 notes

Text

Python Course online

We cover Python programming (basic to advanced), a learning roadmap, and why you should learn Python.

We also offer a Python course with a certificate and guaranteed job opportunities.

Python: -

Python is a high-level, general-purpose, interpreted programming language used in various sectors including machine learning, artificial intelligence, data analysis, web development, and many more. Python is known for its ease of use, powerful standard library, and dynamic semantics. It also has a large community of developers who keep contributing to its growth. It was designed to make code easier for developers to read and understand while reducing the number of lines of code.

History: -

Python, created in the late 1980s by Guido van Rossum, is one of the most popular programming languages. During his research at the National Research Institute for Mathematics and Computer Science in the Netherlands, he designed Python to be easy to read and use. The first version was released in 1991 and had only a few built-in data types and basic functionality.

Later, as it gained popularity among scientists for numerical computation and data analysis, Python 1.0 was released in 1994 with extra features such as lambda, map, and filter. After that, new versions followed regularly, each adding new functionality.

Python 1.5 released in 1997

Python 2.0 released in 2000

Python 3.0 released in 2008, bringing newer functionality

Python 3.10 released in 2021, with newer 3.x versions released annually since

Advanced Python: -

Some Python topics are too difficult for beginners. Like a steep hiking trail, they require experience, and beginners without it could easily get dizzy. This part of our Python tutorial is aimed at advanced programmers. Those who have successfully completed our beginner's tutorial, or who have acquired sufficient Python experience elsewhere, should not have any problems. As everywhere in our tutorial, we introduce the topics as gently as possible.

Most people will probably ask themselves immediately whether they are in the right place here. As in various other areas of life and science, it is not easy to find and describe the dividing line between beginners and advanced learners in Python. It is also difficult to decide what the appropriate topics are for this part of the tutorial: some topics are considered particularly difficult by some, while others rate them as easy. It is like a mountain trail. For people with mountain experience it is a walk, while people without experience, or prone to dizziness, dread the sight of it.

In deciding what counts as an advanced topic, i.e. a Python topic that is too difficult for beginners, I was guided by my experience from numerous Python trainings.

Python Roadmap for Beginners: -

Basics- Syntax, variables, data types, conditionals, loops, exceptions, functions, lists, tuples, sets, dictionaries.

Advanced- List comprehensions, generators, expressions, closures, regex, decorators, iterators, lambdas, functional programming, map, reduce, filter, threading, magic methods.

OOP- Classes, inheritance, methods.

Web frameworks- Django, Flask, FastAPI.

Data Science- NumPy, Pandas, Seaborn, scikit-learn, TensorFlow, PyTorch.

Testing- Unit testing, integration testing, end-to-end testing, load testing.

Automation- File manipulation, web scraping, GUI automation, network automation.

DSA- Arrays & linked lists, heaps, stacks, queues, hash tables, binary search trees, recursion, sorting algorithms.

Package Managers- pip, Conda.

This roadmap describes the areas and depth of Python, and makes the importance of Python clearly visible.
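To give a flavour of a few items from the Advanced stage of the roadmap, here is a small sketch of a list comprehension, a generator, and a decorator. All names in it are invented for the example:

```python
import functools

# List comprehension: build a list from an expression and a loop in one line.
squares = [n * n for n in range(5)]


# Generator: produce values lazily, one at a time, with yield.
def countdown(start):
    while start > 0:
        yield start
        start -= 1


# Decorator: wrap a function to add behaviour without changing its body.
def log_calls(func):
    @functools.wraps(func)  # keep the wrapped function's name and docstring
    def wrapper(*args, **kwargs):
        print(f"calling {func.__name__}")
        return func(*args, **kwargs)
    return wrapper


@log_calls
def add(a, b):
    return a + b


print(squares)             # [0, 1, 4, 9, 16]
print(list(countdown(3)))  # [3, 2, 1]
print(add(2, 3))           # prints "calling add", then 5
```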

Our institute, the School of Core AI, assures you of job placement with leading brands or in the AI industry.

Our professional Python mentors and trainers have 5+ years of experience.

Click here-

#python#datascience#technology#data analytics#artificial intelligence#ninjago pythor#pythor p chumsworth#machine learning

1 note


Text

Hello!!!

Please visit our courses and learn Data Science:

https://schoolofcoreai.com/courses/

#data analytics#datascience#technology#machine learning#artificial intelligence#python#ai#tech industry#techinsights#techinnovation

0 notes

Text

When your model's performance on training data is excellent, but in front of test data it goes "Meoowww...!"

Artificial Intelligence courses available here

#machine learning#data analytics#artificial intelligence#technology#datascience#funny memes#meme#lol memes#computer science#school

0 notes

Text

Click Here and learn Data Science

You will learn programming languages such as Python, SQL, Java, and HTML, along with other resources on fundamental and deep machine learning.

1 note


Text

Learn with SCAI and improve your skills and opportunities.

0 notes

Text

https://schoolofcoreai.com/

Visit here:

Visit the site and learn AI and related courses with me. Become a Data Scientist with 100% guaranteed job placement.

0 notes

Text

Learn with SCAI and improve your skills and techniques.

1 note
