#THIS PROF HAS LOW REVIEWS FOR A REASON


Text

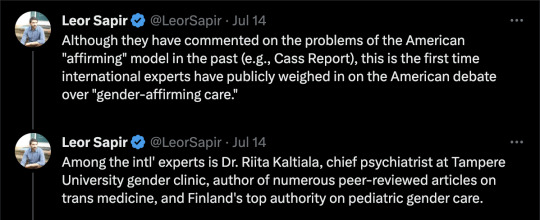

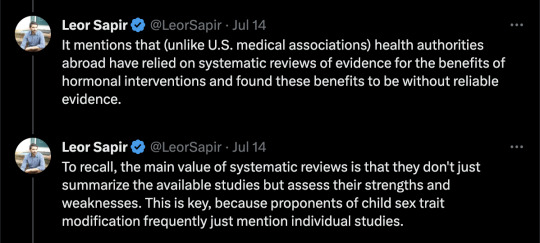

Published: Jul 13, 2023

As experienced professionals involved in direct care for the rapidly growing numbers of gender-diverse youth, the evaluation of medical evidence or both, we were surprised by the Endocrine Society’s claims about the state of evidence for gender-affirming care for youth (Letters, July 5). Stephen Hammes, president of the Endocrine Society, writes, “More than 2,000 studies published since 1975 form a clear picture: Gender-affirming care improves the well-being of transgender and gender-diverse people and reduces the risk of suicide.” This claim is not supported by the best available evidence.

Every systematic review of evidence to date, including one published in the Journal of the Endocrine Society, has found the evidence for mental-health benefits of hormonal interventions for minors to be of low or very low certainty. By contrast, the risks are significant and include sterility, lifelong dependence on medication and the anguish of regret. For this reason, more and more European countries and international professional organizations now recommend psychotherapy rather than hormones and surgeries as the first line of treatment for gender-dysphoric youth.

Dr. Hammes’s claim that gender transition reduces suicides is contradicted by every systematic review, including the review published by the Endocrine Society, which states, “We could not draw any conclusions about death by suicide.” There is no reliable evidence to suggest that hormonal transition is an effective suicide-prevention measure.

The politicization of transgender healthcare in the U.S. is unfortunate. The way to combat it is for medical societies to align their recommendations with the best available evidence—rather than exaggerating the benefits and minimizing the risks.

This letter is signed by 21 clinicians and researchers from nine countries.

FINLAND Prof. Riittakerttu Kaltiala, M.D., Ph.D. Tampere University Laura Takala, M.D., Ph.D. Chief Psychiatrist, Alkupsykiatria Clinic

UNITED KINGDOM Prof. Richard Byng, M.B.B.Ch., Ph.D. University of Plymouth Anna Hutchinson, D.Clin.Psych. Clinical psychologist, The Integrated Psychology Clinic Anastassis Spiliadis, Ph.D.(c) Director, ICF Consultations

SWEDEN Angela Sämfjord, M.D. Senior consultant, Sahlgrenska University Hospital Sven Román, M.D. Child and Adolescent Psychiatrist

NORWAY Anne Wæhre, M.D., Ph.D. Senior consultant, Oslo University Hospital

BELGIUM Em. Prof. Patrik Vankrunkelsven, M.D. Ph.D. Katholieke Universiteit Leuven Honorary senator Sophie Dechêne, M.R.C.Psych. Child and adolescent psychiatrist Beryl Koener, M.D., Ph.D. Child and adolescent psychiatrist

FRANCE Prof. Celine Masson, Ph.D. Picardy Jules Verne University Psychologist, Oeuvre de Secours aux Enfants Co-director, Observatory La Petite Sirène Caroline Eliacheff, M.D. Child and adolescent psychiatrist Co-director, Observatory La Petite Sirène Em. Prof. Maurice Berger, M.D. Ph.D. Child psychiatrist

SWITZERLAND Daniel Halpérin, M.D. Pediatrician

SOUTH AFRICA Prof. Reitze Rodseth, Ph.D. University of Kwazulu-Natal Janet Giddy, M.B.Ch.B., M.P.H. Family physician and public-health expert Allan Donkin, M.B.Ch.B. Family physician

UNITED STATES Clin. Prof. Stephen B. Levine, M.D. Case Western Reserve University Clin. Prof. William Malone, M.D. Idaho College of Osteopathic Medicine Director, Society for Evidence Based Gender Medicine Prof. Patrick K. Hunter, M.D. Florida State University Pediatrician and bioethicist

Transgenderism has been highly politicized—on both sides. There are those who will justify any hormonal-replacement intervention for any young person who may have been identified as possibly having gender dysphoria. This is dangerous, as probably only a minority of those so identified truly qualify for this diagnosis. On the other hand, there are those who wouldn’t accept any hormonal intervention, regardless of the specifics of the individual patients.

Endocrinologists aren’t psychiatrists. We aren’t the ones who can identify gender-dysphoric individuals. The point isn’t to open the floodgates and offer an often-irreversible treatment to all people who may have issues with their sexuality, but to determine who would truly benefit from it.

Jesus L. Penabad, M.D. Tarpon Springs, Fla.

[ Via: https://archive.today/IRShy ]

==

The gender lobotomists just got called out.

#Leor Sapir#Colin Wright#Endocrine Society#gender lobotomy#genderwang#gender ideology#queer theory#sex trait modification#ideological capture#medical malpractice#medical transition#medical scandal#gender affirming care#affirmation model#gender affirming#ideological corruption#religion is a mental illness

288 notes


Text

Dogs have “extensive and multifarious” environmental impacts, disturbing wildlife, polluting waterways and contributing to carbon emissions, new research has found.

An Australian review of existing studies has argued that “the environmental impact of owned dogs is far greater, more insidious, and more concerning than is generally recognised”.

While the environmental impact of cats is well known, the comparative effect of pet dogs has been poorly acknowledged, the researchers said.

The review, published in the journal Pacific Conservation Biology, highlighted the impacts of the world’s “commonest large carnivore” in killing and disturbing native wildlife, particularly shore birds.

In Australia, attacks by unrestrained dogs on little penguins in Tasmania may contribute to colony collapse, modelling suggests, while a study of animals taken to the Australia Zoo wildlife hospital found that mortality was highest after dog attacks, which was the second most common reason for admission after car strikes.

In the US, studies have found that deer, foxes and bobcats were less active in or avoid wilderness areas where dogs were allowed, while other research shows that insecticides from flea and tick medications kill aquatic invertebrates when they wash off into waterways. Dog faeces can also leave scent traces and affect soil chemistry and plant growth.

The carbon footprint of pets is also significant. A 2020 study found the dry pet food industry had an environmental footprint of around twice the land area of the UK, with greenhouse gas emissions – 56 to 151 Mt CO2 – equivalent to the 60th highest-emitting country.

The review’s lead author, Prof Bill Bateman of Curtin University, said the research did not intend to be “censorious” but aimed to raise awareness of the environmental impacts of man’s best friend, with whom humans’ domestic relationship dates back several millennia.

“To a certain extent we give a free pass to dogs because they are so important to us … not just as working dogs but also as companions,” he said, pointing to the “huge benefits” dogs had on their owners’ mental and physical health. He also noted that dogs played vital roles in conservation work, such as in wildlife detection.

“Although we’ve pointed out these issues with dogs in natural environments … there is that other balancing side, which is that people will probably go out and really enjoy the environment around them – and perhaps feel more protective about it – because they’re out there walking their dog in it.”

In the review, the researchers attributed the extent of the environmental impacts to the sheer number of dogs globally, as well as “the lax or uninformed behaviour of dog owners”.

A simple way to mitigate against the worst impacts was to keep dogs leashed in areas where restrictions apply and to maintain a buffer distance from nesting or roosting shorebirds, the paper suggested.

“A lot of what we’re talking about can be ameliorated by owners’ behaviour,” Bateman said, pointing out that low compliance with leash laws was a problem.

“Maybe, in some parts of the world, we actually need to consider some slightly more robust laws.”

He suggested that dog exclusion zones might be more suitable in some areas.

Bateman also raised sustainable dog food as an option to reduce a pet’s environmental paw print, noting however that “more sustainable dog food tends to cost more than the cheap dog food that we buy which has a higher carbon footprint”.

“If nothing else, pick up your own dog shit,” he said.

2 notes


Text

Re-reading The Fellowship of the Ring for the First Time in Fifteen Years

Hi, Hello, Welcome! The conceit of these posts is pretty self-explanatory. I read the Lord of the Rings for the first time at age 17, in the middle of my parents' divorce (it was messy, we're not going into any details). Needless to say, I remember pretty much nothing about that read, and I would like to give the books a fair shake of a re-read. That's what this is, and there will be spoilers throughout!

I usually do full-book reviews, but if ever I was going to do a chapter-by-chapter re-read, it would be for LotR. The rules are that I'm going in as blind as I possibly can (I have watched the movies and have absorbed like...a reasonable amount of lore from existing on the internet as a millennial) and I'm not doing any research beyond like, defining words for myself as I read. So here we go, and I hope you enjoy rereading with me! Let's talk "The Shadow of the Past."

Good LORD JRR Tolkien can lore dump when he wants to. This chapter was mainly lore dump, which is fine because it was at least interesting lore dump. I'm not a lore girly though, I'm a character girly, so let's go with "we got the One Ring's backstory, now let me talk about other characters because the Ring isn't one just yet."

This is going to sound initially harsh, but it is said with affection: Gandalf is 1000% the pedantic asshole professor who is way too into the Socratic method who you absolutely detest in undergrad but somehow his classes still end up sticking with you more than any other. You then get to understand this prof better as a master's student, and deeply love this prof as a PhD. That's literally the vibe I'm getting from his lecture to Frodo about finding some goddamn pity and compassion for the tragedy that is Smeagol and Gollum. Because it is VERY easy to judge and be critical in the abstract, which Frodo very much is, having never encountered Gollum, and Gandalf has spent time and effort tracking down Gollum with way more background knowledge with which to contextualize the layers of tragedy that Gollum personifies and affects. It's a big ask, to get people to abstract compassion (and do not come in here and argue with me about this, I live in 20-goddam-24, I know what I'm talking about), but Gandalf kind of doesn't let it go with Frodo until Frodo at least softens his position and is open to, if not at, compassion. I've been a student and I've been a teacher, and these conversations are hard from both directions, so kudos to Gandalf for sticking with it, and to Frodo for getting to a place where he was truly listening.

Especially after Gandalf just CASUALLY DROPS that Gollum literally ATE BABIES. I'm not even kidding, he just casually, in the midst of an infodump on Gollum's time tracking Bilbo after losing the Ring, says,

The woodsmen said that there was some new terror abroad, a ghost that drank blood. It climbed trees to find nests, it crept into holes to find young, it slipped through windows to find cradles.

AND THEN WE JUST CASUALLY MOVE ON LIKE BABY EATING ISN'T SOMETHING WE NEED TO ADDRESS HERE. I would like to address the baby eating, Gandalf!!!

Despite not addressing the baby eating though, there was some interesting new information in the Gollum infodump. I understand why it got cut from the movies, but I was low-key fascinated. Smeagol was specifically noted to be interested in roots. Gandalf framed that like literal tree and mountain roots, but this is Tolkien we're talking about. Roots have a metric ton of metaphorical meanings too, and the fact that Smeagol was interested in the origins of things, in where they came from, in what made them as they are, is both deeply ironic and deeply interesting. I kind of hope we do more with that, since becoming Gollum is like ouroborosing roots; Smeagol's interest in Gollum is deeply self-reflexive, which might also be how we end up with that bifurcated personality thing. I dunno, but that would be really cool to follow up on.

I also deeply appreciated Frodo's "WHAT THE ACTUAL FUCK" reaction to realizing that Gandalf had let him keep the One Ring for so long. Notably, Gandalf kind of doesn't explicitly apologize for putting Frodo at risk, but he does acknowledge that yes, yes he made a choice, took a risk, and put Frodo in some level of danger. I suppose we'll take it, even as we acknowledge that yes, Gandalf was working with imperfect, incomplete information. We do the best we can with what we know at the time, or something. And if it took 20-odd years to figure all of this out (which makes sense for the kind of field and archival work required here), then y'know what, better late than never.

That said, Gandalf also kind of...LIGHTLY SKATES OVER the fact that even just possessing the Ring and doing nothing with it for 20 years has affected Frodo. He's not aging. He can't cast it away. He's already caught. Right at the beginning, in CHAPTER TWO of this massive trilogy, it's not a matter of preventing Frodo from being caught by the ring. It's a matter of how long Frodo can resist. He was doomed before anyone knew, concretely, that there was a problem. And jaysus, if that isn't how you tee up a tragedy, I don't even know how you do that. Maybe there wasn't a good reason for Gandalf to say that to Frodo, maybe it would have hurt more than it helped, but I do kind of think PERHAPS YOU MIGHT POINT THIS OUT???

I get the sense that I'm going to be very back-and-forth on book Gandalf...this is going to be an interesting thing to watch develop as I keep reading.

In addition to Gandalf's "Backstory Via The Socratic Method 101" course, we also get some additional Samwise Gamgee in this chapter. Saying "I adore this hobbit and he should be protected at all costs" is not new or even interesting, so let's take a different tack. In the films, Sam's excitement for going to see the elves is...ungrounded. It's a thing about him that we just accept. I deeply relate to and adore the sense we get of why and how the elves thing comes about in the book:

He believed he had once seen an Elf in the woods, and still hoped to see more one day. Of all the legends that he had heard in his early years such fragments of tales and half-remembered stories about the Elves as the hobbits knew, had always moved him most deeply.

This might seem ungrounded, but it's deeply aware of how stories work. Sam knows that the hobbits don't have the extent of Elven lore that exists, but he knows that there is a magic and a power in even the fragments they have, and that captured his imagination to such an extent that a yearning to see, to understand, to know that magic, was born in his heart. That grounds Sam in stories just as much as Frodo is grounded in stories, and more than that, Sam WANTS the magic to be real in a way that Frodo, primed on all the tragedy by Gandalf, I don't actually think does. Frodo is "I wish it need not have happened in my time," but Sam is "Me go and see the Elves and all."

That "and all" at the end is particularly poignant, because if Sam knows some of the stories of the elves, I have to imagine a few tragic tales survived along with the magical ones, so Sam isn't going starry-eyed into this as a bumblefuck gardener from nowhere. There's an acceptance there of the magic that encompasses all that magic offers, both good and bad. Yeah, I'm probably over-reading into this, but I support it at least a little with the fact that at the beginning of the chapter, we're with Sam when the hobbits down the pub are talking about strange beings and creatures and *foreshadowing the ents*. Sam knows that the stories tell of more than just elves, but for him, that wonder is enough to warrant everything else. No, I am not taking criticism (constructive or otherwise) at this time.

Other than a wee shoutout to the legendary "Mad Baggins"--and let's be real, if history must become myth and myth must become legend, I want Mad Baggins to stay alive and not be forgotten--that's about all I have for this chapter. Professor Gandalf shows up to school Frodo and kick his ass out the door, and Sam gets to go see the elves. We'll pick up again next time with chapter 3.

#the fellowship of the ring#the lord of the rings#chapter 2#shadows of the past#reread#HOW DID WE JUST SKATE OVER GOLLUM EATING BABIES????

8 notes


Text

Level 5 is interesting cause like, they did a Konami but good. Came out swinging with *multiple* hits. Inazuma Eleven boom, Professor Layton boom, Ni No Kuni boom, Yo-kai Watch BOOM and a bunch of other smaller but generally liked games (Fantasy Life, Megaton Musashi, Snack World) and then they…dipped for a while during the generation change. And that dip *feels* weird right? I looked at the reviews of the games! People still think the games feel pretty damn good, yet there hasn’t been a new Yo-kai Watch since 2019. Prof Layton is coming back when it feels like he should’ve been a bi-yearly thing. Is it that they really struggled with higher development costs versus developing just for the DS?

Even if that’s only part of the reason, it kinda proves to me how unique the DS was as a popular option to develop games for tbh. Before the DS, at least on Nintendo handheld consoles, a lot of games were Lesser Ports™ or Tetris. The DS had this combination of power, gimmick, install base and low dev cost that meant companies like Level 5 and others could just afford to experiment. I think that’s the platonic ideal of the gaming handheld: smaller than the Deck, has *just* enough power for a game that feels “full”, but also has a central and strong enough gimmick to make *not* taking advantage of it a little sad.

2 notes


Text

MY REVIEW ON PSYCHO PASS PROVIDENCE- part 2

SOME ISSUES (and THOUGHTS) THAT I NEED TO ADDRESS:

I still don’t get why Prof. Saiga and Prof. Stronskaya wrote the paper. What were they aiming for with it? Did I miss something? Also, the conflict coefficient is too much like the crime coefficient. This is a personal take, but honestly the conflict coefficient is much more reasonable than Sibyl. At least it doesn’t judge people based on how cloudy they are.

I like Saiga and Kougami’s relationship, and for once we get to see Kougami use keigo.

No hate, but idk, some people debate Kougami calling Frederica by her name. Akane calls her that too, except she always uses -san, like she does with Shion-san. Kougami also addresses Shion by her name and nothing happened. He’s just comfortable with both of them, I think. (idk how close they were, need the novel)

Why does Azusawa never appear in this movie? Since we don’t learn anything about him in PP3, I thought we’d somehow get an explanation in PPP, especially with Shindou. Well, I despise PP3 anyway, pls don’t ask me why. I appreciate the PP staff’s work tho.

Sugo is a cute, loyal hound to his inspector. He’s quick to call Akane and report anything suspicious.

I find it funny that Kougami acts childish in these scenes:

He clearly keeps his distance from Akane and Div 1 after his late-night call with Akane; even Saiga asks him about it. He only dares to look at her face again after they’re almost blown up by C4, while Akane acts from the start as if nothing happened. Nice writing, I guess.

By PPP, I think so many characters have died, and their deaths add almost no meaning or progress to the story (yet, in Saiga’s case). PP is a dystopian anime, I get it, the story is dark and tragic, I get it, but why have they shown so many deaths after s1? No, it’s not meaningless, it actually creates situations or drama (for ex Sugo killing Aoyanagi), but not for the audience. They don’t let us feel fully connected or attached to the characters before they’re suddenly gone (like the entire Div 3 in PP2). This is just my personal opinion, but Saiga’s death is kind of a shame; they shouldn’t have killed him as mere bait for Peacebreaker, it’s too bad. There’s so much potential for his character in a future instalment if they ever make one :’(

Akane running to the fallen Saiga kinda threw me off. Why not help Kougami or Frederica first? This is out of character. The old Akane would never.

I like that Akane bursts into tears in front of Kougami in the elevator scene. When it’s just the two of them, she actually lowers her walls; in my case, I never want to cry in public or in front of strangers either. Well, she cried near the fountain holo before though, but you get the point.

Kougami’s sympathy for Akira.

Akira is precious, that’s it. Everyone who has watched it already knows why.

Just for a brief second, I can see Akane’s eyes shake when she hears Kougami’s tone on the call. She must be aware he’s in pain.

In the scene where Tonami has possessed Akira, Akane could have done so much more, or at least TRIED something. Where’s the badass Akane we always adore?

Akane always has puppy eyes for one man and one man only. Guess who?

Those puppy eyes can kill me.

Also, I find this bit of the script irrelevant. In the hospital, Akane is first relieved that Kougami is unhurt, then suddenly he says ‘my mistake’? Why does he suddenly apologize, lmao.

Sibyl is a bitch. After Yabuki’s and Shindo’s tasks were done, it told Shindo to just die. Sigh.

I HATE that Tonami has a low CC. In s1, we learned that people with a low CC can’t even commit crimes unless they’re criminally asymptomatic, and suddenly this new villain, who is basically a terrorist, has a clear hue just because he feels sympathy for people at the bottom and wants fairness for them (cmiiw). He kills people, for God’s sake! This shows inconsistent writing and will create plot holes in the future. As for Akane’s case, I tolerate it, probably because Kasei isn’t actually human.

Akane is so strong: she has been shot twice by Tonami, yet she can speak clearly and even sit up. Meanwhile we can hear Kougami’s voice waver on that call (he only took one bullet, in his thigh).

I find this interesting.

Akane is NOT aware that Tonami is about to shoot her. If she knew, would she see Kougami’s sin differently? But after much thought, I think it still wouldn’t change a thing, because she wants to arrest Tonami. But dude, Kougami shoots because he can’t lose her; if he had shot Tonami’s leg or hand or whatever, anything could have happened and Akane would have died. If I were him, I’d also shoot him in the head without hesitation. I wonder if she understands that her life was at stake.

Who transferred Sugino to SAD? Akane?

Imagine Kougami’s feelings when he can’t do anything to stop Akane. Wow.

Akane’s decision to do that is actually admirable; she honours Shindo’s and Akira’s deaths.

This is the end of my chattering. I hope I don’t come across as a pathetic shipper. I love Psycho-Pass as an anime.

#psycho pass#mypsychopassthought#shinkane#kouaka#kougami shinya#akane tsunemori#ginoza nobuchika#ppp#psycho pass providence

14 notes


Note

surprise! your OCs are required to attend Professor Barbara Allen's forensics lectures for a semester! what's the ratemyprofessor review they give her and how do they do?

oooh bestie you have NO idea what's coming, I literally WAS a forensics major before I switched to trade school :D

some long answers here, so they'll be under the cut. thank you so much for the ask!

Ophelia: 5 stars. Loves the lecture, takes exceptionally detailed notes and ends up with curve-breaking high grades. Her only problem would be missing class due to late night hero work making her exhausted in the morning, but... well, she feels like Professor Allen's very familiar with that.

Jasper: 4.4 stars. They're a nursing student, so they don't mind gruesome pictures or high-intensity anatomy discussions, but they feel like Professor Allen just goes a bit too fast in the lectures (lol). They'd pull good grades, and probably end up with a bit of unintentional extra credit when Professor Allen lands in the ER with some unexplained cuts and bruises and Jasper's the one to stitch her up and send her on her way.

Kestrel: No rating. Probably didn't even show up to the first lecture. To be fair, pretty much all of their higher education has come from exploring and adventuring in the wilderness. They probably weren't even aware they'd been signed up for the lecture. (but don't take it personally, if they were there, they'd be sure to pay attention)

Rae: Originally rated 3.5 stars, but bumped it up to 4.5 after Prof. Allen was kind and understanding in offering her an extension. She did well enough in college the first time around (Masters in Foreign Language), but she also struggles with severe insomnia and some anxiety issues. At first, she probably saw Prof. Allen as somewhat distant, but once she actually went up and talked to her and explained everything, she ended up with a renewed respect and finished the rest of the semester very well.

Robin: Hm... 3 stars. Not a bad class, but she's much more music-inclined than science-inclined, and having an ASL interpreter would've made the class a hell of a lot easier for her (that's not Professor Allen's fault, of course, but still wasn't the best experience for Robin). She'd probably end the semester decidedly neutral - not her favorite experience, but would also understand that it's probably a very enjoyable class for someone who enjoys the material a little more.

Madison: 4.5 stars. She likes psychology a lot, so she'd probably really enjoy a forensics class, and I feel like she'd like Professor Allen as an instructor. And I feel like she'd be able to keep up with the lectures pretty well! She's a quick thinker. If anything, she'd be a little irritated by the amount of studying she needs to do, but that's the only thing keeping her from a full 5 stars.

Quinn: It really depends. Either she'd get fed up with all the stifling structure and order of the class and end up dropping out (and rating Prof. Allen pretty low), or she'd actually find the material interesting and would end up at the top of the class. It's not really a matter of capability or intelligence, she's got plenty of both, but she'll only bother with the class if she enjoys the subject being taught. So... it's a coin flip.

Katherine: She wouldn't enjoy the class, but she'd rate Professor Allen highly anyway (4 or 5 stars). After all, she's a good professor, Katherine just isn't really a fan of that sort of subject. She's plenty smart, but she's much more artistic-minded than forensics would imply. The only reason she'd take the class to begin with is for the promise of forensic sketch artistry, but even that's only a week and a half out of the full semester.

#my friends!!!#answered asks#ask game#my ocs#ophelia octavius#jasper wilson#oc kestrel#rae mckinney#robin cassidy#madison douglas#oc quinn/aces#oc katherine johnson

3 notes


Text

How to Recover from a Google Algorithm Update Penalty

New York, NY – Website owners and digital marketers worldwide have been facing unexpected ranking drops due to Google’s latest algorithm updates. These changes can severely impact organic traffic, sales, and overall online visibility. The good news? Recovery is possible, and SEO Penalty Removal Services is here to help businesses bounce back stronger than ever.

Understanding Google Algorithm Update Penalties

Google regularly updates its algorithm to improve search results and eliminate low-quality or manipulative SEO practices. However, these updates can sometimes lead to unintended ranking penalties for legitimate businesses. If your website has lost traffic and rankings after an update, it’s crucial to identify the cause and take corrective actions.

Common Reasons for Google Penalties

Several factors can trigger a penalty following an algorithm update, including:

Low-Quality Content: Google prioritizes high-quality, user-focused content. If your content is thin, duplicated, or stuffed with keywords, you may face penalties.

Spammy Backlinks: Toxic or irrelevant backlinks can harm your site’s authority and rankings.

Technical SEO Issues: Poor site speed, broken links, and improper indexing can negatively affect your rankings.

Over-Optimization: Excessive keyword stuffing, unnatural anchor texts, and aggressive SEO tactics can trigger penalties.

User Experience Issues: Google values user experience. High bounce rates, poor mobile usability, and slow-loading pages can hurt rankings.

Steps to Recover from a Google Algorithm Update Penalty

1. Analyze the Impact

Start by assessing the exact changes in rankings and traffic. Use tools like Google Search Console, Google Analytics, and third-party SEO platforms to identify which pages and keywords were affected.
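As a rough illustration of this step, the sketch below compares average daily clicks per page before and after an update date. The row layout, page paths, dates and click counts are all made up for the example; a real export from Google Search Console or an analytics tool would need to be mapped into this shape first.

```python
from collections import defaultdict
from datetime import date


def click_change_by_page(rows, update_day):
    """Return {page: percent change in average daily clicks after update_day}.

    rows is an iterable of (page, day, clicks) tuples. This layout is an
    assumption for the sketch, not the format of any particular tool's export.
    """
    before = defaultdict(list)
    after = defaultdict(list)
    for page, day, clicks in rows:
        (before if day < update_day else after)[page].append(clicks)

    changes = {}
    for page, clicks_before in before.items():
        clicks_after = after.get(page)
        if not clicks_after:
            continue  # no data after the update, nothing to compare
        avg_before = sum(clicks_before) / len(clicks_before)
        avg_after = sum(clicks_after) / len(clicks_after)
        if avg_before > 0:
            changes[page] = round(100 * (avg_after - avg_before) / avg_before, 1)
    return changes


# Hypothetical sample data, for illustration only.
SAMPLE_ROWS = [
    ("/pricing", date(2024, 3, 1), 100),
    ("/pricing", date(2024, 3, 2), 120),
    ("/pricing", date(2024, 3, 6), 55),
    ("/blog/guide", date(2024, 3, 1), 40),
    ("/blog/guide", date(2024, 3, 6), 42),
]

if __name__ == "__main__":
    result = click_change_by_page(SAMPLE_ROWS, date(2024, 3, 5))
    # List the hardest-hit pages first.
    for page, pct in sorted(result.items(), key=lambda item: item[1]):
        print(f"{page}: {pct:+.1f}%")
```

Sorting by percent change puts the pages that lost the most traffic at the top, which is usually where a review of content and links should start.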

2. Identify the Penalty Type

Google penalties are broadly classified into two categories:

Manual Penalties: These occur when a Google reviewer manually flags your website for violations. You can find notifications in Google Search Console.

Algorithmic Penalties: These happen automatically when an update detects policy violations.

3. Improve Content Quality

Google prioritizes valuable, user-centric content. To recover, follow these strategies:

Update existing content with fresh, relevant, and in-depth information.

Remove duplicate or thin content.

Avoid keyword stuffing and use natural language.

Implement structured data to enhance search visibility.

4. Audit and Remove Toxic Backlinks

A bad backlink profile can severely affect your rankings. Conduct a thorough backlink audit using tools like Ahrefs, SEMrush, or Google Search Console. Identify and remove spammy links by:

Contacting webmasters to request removal.

Using Google’s Disavow Tool to disassociate from harmful links.
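For the disavow step, a minimal sketch of preparing the upload file might look like the following. It assumes the plain-text layout that Google documents for disavow files: one URL or `domain:` entry per line, with `#` starting a comment. The function name and the example domains are hypothetical.

```python
from urllib.parse import urlparse


def build_disavow_file(bad_urls, whole_domains=()):
    """Build disavow file text from individual URLs and whole domains.

    URLs whose host is already covered by an exact match in whole_domains
    are skipped so the file does not list them twice.
    """
    domains = sorted({d.lower() for d in whole_domains})
    lines = ["# Links we could not get removed by contacting webmasters"]
    lines += [f"domain:{d}" for d in domains]
    for url in sorted(set(bad_urls)):
        host = (urlparse(url).hostname or "").lower()
        if host not in domains:
            lines.append(url)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical inputs, for illustration only.
    text = build_disavow_file(
        ["http://spam.example/a", "http://other.example/b"],
        whole_domains=["spam.example"],
    )
    print(text, end="")
```

The resulting text would be saved as a `.txt` file and uploaded through the Disavow Tool. Requesting removal from the linking sites should normally be attempted first.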

5. Fix Technical SEO Issues

Technical aspects play a major role in ranking recovery. Ensure that:

Your website loads quickly.

There are no crawl errors or broken links.

The website is mobile-friendly.

Structured data is correctly implemented.
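One of these checks, finding broken internal links, can be sketched offline with only the Python standard library. The example below assumes the site's pages have already been collected into a dictionary of path to HTML. That dictionary, the class name and the function name are hypothetical, and a real audit would also need to fetch pages and check HTTP status codes.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag fed to the parser."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def broken_internal_links(pages):
    """Return sorted (source_path, target_path) pairs for missing targets.

    pages maps a site path such as "/about" to that page's HTML. Only
    root-relative links ("/...") are checked; external and
    protocol-relative links are ignored.
    """
    broken = []
    for path, html in pages.items():
        collector = LinkCollector()
        collector.feed(html)
        for href in collector.links:
            # Drop fragments and query strings before comparing paths.
            target = href.split("#")[0].split("?")[0]
            is_internal = target.startswith("/") and not target.startswith("//")
            if is_internal and target not in pages:
                broken.append((path, target))
    return sorted(broken)


if __name__ == "__main__":
    # Hypothetical two-page site, for illustration only.
    site = {
        "/": '<a href="/about">About</a> <a href="/old-page">Old</a>',
        "/about": '<a href="/#top">Home</a> <a href="https://example.com/">Ext</a>',
    }
    for source, target in broken_internal_links(site):
        print(f"{source} links to missing page {target}")
```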

6. Enhance User Experience (UX)

Google rewards websites that provide a seamless user experience. Focus on:

Improving page speed.

Enhancing mobile responsiveness.

Simplifying navigation.

Reducing bounce rates through engaging and interactive content.

7. Submit a Reconsideration Request (For Manual Penalties)

If you’ve received a manual penalty, take corrective actions and submit a reconsideration request through Google Search Console. Clearly explain the improvements made and request a review.

8. Monitor Performance and Adapt

Recovery doesn’t happen overnight. Keep monitoring your website’s performance using analytics tools. Stay updated with Google’s best practices and be prepared to adapt to future updates.

Why Choose SEO Penalty Removal Services?

Recovering from a Google penalty requires expert knowledge and strategic execution. SEO Penalty Removal Services specializes in:

Comprehensive website audits

Backlink profile cleanup

Content optimization strategies

Technical SEO fixes

Ongoing monitoring and support

With years of experience helping businesses regain lost rankings, our team ensures a structured and effective recovery process tailored to your website’s unique needs.

Conclusion

If your website has suffered due to a Google algorithm update penalty, don’t panic. By following a strategic recovery approach, you can regain lost rankings and even improve your search visibility. However, penalty recovery requires expertise and continuous effort. That’s where https://seopenaltyremovalservices.com comes in. Our team is committed to helping businesses navigate Google’s complex algorithms and recover their online presence.

For expert assistance in recovering from Google penalties, reach out to us today.

Contact Information

Name: SEO Penalty Removal Services

Address: 420 5th Ave # 2500, New York, NY 10018, USA

Phone Number: 442071833436


Text

Week 3, Mon 28/8, Day 1/5

0149:

After tutoring, I went straight home since S was sick. Fell asleep on the couch right after dinner. We didn't get much done but that's ok, what's more important now is managing our energy levels to avoid burnout.

Later in the day, we will have our violin lesson, a trip to the salon, and a presentation meeting. This means that we need to get as much done as possible before noon and squeeze in some practice time at a more reasonable hour.

1315-1415: violin lesson

1530-1700: salon

1900-2000: ethno meeting

Goals:

- complete preparation for ethno (tues), multiculturalism (wed), literature (wed)

- review violin lesson

- groceries and household needs

- budget tracking

1248:

Since preparations for ethno class will be completed during the ethno meeting, I'm left with multiculturalism and literature prep. Multiculturalism takes more cognitive energy and requires a transcript of the lecture and highlighted points from the reading. All these will allow me to complete my week 3 notes for multiculturalism before I attempt the tutorial questions. As for literature readings, the prof has not been using assigned reading materials for the past 2 weeks, so the reading will be a low priority. From 1415-1700, I can use travel time and time at the salon to review my violin lesson, come up with a practice plan, and then work on my multiculturalism readings. If I take a direct bus back home from the salon, I will be able to avoid the rush hour by a margin and reach home by 1735. I can then have dinner and, from 6pm-7pm, practice the violin. If everything goes well and the ethno meeting ends at 8pm, I can do my groceries and household shopping and be back by 9pm. The remaining time can be used to complete the prep for multiculturalism.


Text

MY PROF JUST GAVE US BACK OUR MIDTERM AND EVERYONE GOT LOWASS MARKS, THE HIGHEST MARK WAS A 60%.

#huh #I GOT A 54 #im happy i passed but I didn't think he was going to mark our midterms so ruthlessly that the whole class barely passed #THIS PROF HAS LOW REVIEWS FOR A REASON #man wtf #idk why he's getting mad at us when he doesn't even teach properly in the first place #i mean like this is supposed to be an easy class IT IS AN EASY CLASS #ITS ABOUT CANADIAN CITIZENSHIP AND IMMIGRATION AND THE PROF IS BEING DIFFICULT BRUH WTF #i can't believe the whole class failed man wtf


Text

The great glacial meltdown

A massive chunk of Greenland's ice cap, estimated at 110 square kilometers, has broken off and begun to drift away in the far northeastern Arctic, signaling that grave risks are bound to follow and that the destruction of the glaciers has already begun. The section that broke off is at the end of the Northeast Greenland Ice Stream. It measures 42.3 square miles (110 square kilometers), many times the size of Central Park in New York. This ice shelf broke away from a fjord called Nioghalvfjerdsfjorden, which is roughly 50 miles (80 kilometers) long and 12 miles (20 kilometers) wide, as reported by the National Geological Survey of Denmark and Greenland. Although this is one of the coldest regions on Earth, its atmosphere has warmed by a substantial 3 degrees Celsius since 1980, according to Dr. Jenny Turton, a polar researcher at Friedrich-Alexander University in Germany. Europe, too, recorded its highest temperatures ever during the summers of 2019 and 2020.

The past few months have seen a slew of headlines about glacial melting, especially in Greenland, and ice sheet collapse. According to a report published in the journal Nature Communications Earth and Environment, Greenland's glaciers have shrunk so much that even if global warming were to stop right now, the ice sheet would keep shrinking. A similar publication, citing satellite data, reported that the Greenland ice sheet lost a record amount of ice in 2019, equivalent to a million tons per minute across the year. Another paper, published in The Cryosphere, found that this extraordinary ice loss was not caused by warm temperatures alone; it also attributed the loss to unseasonal and unusual atmospheric circulation patterns, a major factor in how rapidly the ice sheet shed its mass. Because the climate models that project the future melting of the Greenland ice sheet do not account for these changing atmospheric patterns, there is a real possibility that they underestimate future melting by half.

According to a report published in September 2020, the last fully intact ice shelf in the Canadian Arctic, the Milne Ice Shelf, which is bigger than Manhattan, collapsed, losing more than 40% of its area in just two days at the end of July. Alarmed scientists also warned that a chunk of a Mont Blanc glacier, the size of Milan cathedral, was at risk of collapse, and residents of Italy's Aosta valley were ordered to evacuate their homes. The worst was yet to come. The British Antarctic Survey, together with a team from the USA, mapped cavities half the size of the Grand Canyon that are allowing warm ocean water to erode the vast Thwaites glacier in the Antarctic, accelerating the rise of sea levels across the world. According to the International Thwaites Glacier Collaboration, the glacier is bigger than England, Wales, and Northern Ireland put together, and if it were to collapse entirely, global sea levels would rise by 65 cm (25 in). This is not the end of the story. The glacier acts as a bridge, or buffer, between the warming sea and other glaciers. Its collapse is sure to bring neighboring glaciers in western Antarctica down along with it. This opens the door to a catastrophic scenario in which sea levels rise by a staggering 10 feet or so, permanently submerging some low-lying coastal areas, including parts of Miami, New York City, and the Netherlands: a recipe for disaster.

Global warming, as the name implies, marches on unabated. While the Paris Agreement on climate change pledged to limit global warming to 1.5℃ this century, a report by the World Meteorological Organization warns that the limit could be breached as early as 2024. According to Prof Anders Levermann from the Potsdam Institute for Climate Impact Research in Germany, it would be prudent to expect sea levels to rise by more than five meters, even if the goals set in Paris are met in full. Hence it is the duty of each person to be accountable for their actions and to do everything within their power, without waiting for others to act. Each country has published guidelines which, if followed, will lower the chance of disaster striking us early. The Thwaites glacier, one of the most dangerous, is bigger than England, Wales and Northern Ireland put together, and if the inevitable happens, there is a high likelihood of much of England and Wales being swallowed by the Atlantic.

In August '20, it was reported that the last fully intact ice shelf in the Canadian Arctic, the Milne Ice Shelf, which is bigger than Manhattan, had collapsed, losing more than 40% of its area in just two days at the end of July. Scientists warned that a large chunk of a Mont Blanc glacier, about the size of Milan cathedral, was at risk of collapse, and residents of Italy's Aosta valley were told to evacuate their homes. Adding to the distress, a British-American Antarctic survey team mapped cavities half the size of the Grand Canyon that are allowing warm ocean water to erode the vast Thwaites glacier in the Antarctic, accelerating the rise of sea levels across the world. The International Thwaites Glacier Collaboration has warned that the glacier is bigger than England, Wales and Northern Ireland put together and that if it were to collapse entirely, global sea levels would rise by 65 cm (25 in).

There is no sign that sea levels will stop rising. The glacier acts as a guardian, a buffer between the warming sea and other glaciers. Its impending collapse has the power to drag neighboring glaciers in western Antarctica down with it. In the worst-case scenario, sea levels would rise by almost 10 feet, permanently submerging some low-lying coastal areas including parts of Miami, New York City, and the Netherlands. They would meet the fate of the Titanic, which was considered unsinkable, and it is ironic that the land would be laid to rest on the same sea floor. Global warming is now a truly global problem, and it continues unabated. The Paris Agreement aims to limit global warming to 1.5℃ by the end of this century; worryingly, however, a report by the World Meteorological Organization warns that this limit may be exceeded as early as 2024. According to Prof Anders Levermann from the Potsdam Institute for Climate Impact Research in Germany, there is a high chance of sea levels rising by more than five meters, even if the goals of the Paris Climate Agreement are met. Over the years, each government has come to understand the degree of destruction that climate change would inflict on its country and is taking every possible measure against even the smallest risk to the nation. The aggregate exer


Link

Those who plan to improve their learning skills must be alert to the volley of false claims that are rife in books and materials devoted to accelerated learning. This short and concise list should help you avoid books or websites that do not stick to the basics of science. In addition to memory myths, you will find, at the bottom, a summary of other myths described extensively elsewhere on this website.

Remember to remain skeptical. Hone your skepticism and treat this list with skepticism too. Consult reputable sources.

Contents:

Memory myths

Genius and creativity myths

Sleep myths

SuperMemo myths

Language learning myths

Skepticism (links to skeptic websites)

Memory myths

Myth: It is possible to produce everlasting memories. Even reputable researchers use the term permastore (see: Prof. Harry Bahrick). It is a widely held belief that it is possible to learn things well enough to protect them permanently from forgetting. Fact: It is possible to learn things well enough to make it nearly impossible to forget them in a lifetime. Every long-term memory, depending on its strength, has an expected lifetime. When the memory strength is very high, the expected lifetime may be longer than our own lease on life. However, if we happened to get an extra 200 years to live, no memory built in the present life would remain safe without repetition

Myth: We never forget. Some accelerated-learning programs claim that we never forget what we learn. Knowledge simply gets "misplaced" and the key to good memory is to figure out how to dig it out. Fact: All knowledge is subject to gradual decay. Even your own name is vulnerable. It is only a matter of probability. Strong memories are very unlikely to be forgotten. The probability of forgetting one's name is like the probability of getting hit by an asteroid: possible but not considered on a daily basis

Myth: Memory is infinite. Fact: Anyone with a basic computational understanding of memory knows this claim is absurd. However, this is just one of a million living claims that are incongruent with primary-school-level science. After all, half of Americans still believe the earth was created by God less than 10,000 years ago (apology). We cannot even hope to memorize Encyclopedia Britannica in a lifetime. Memories are stored in a finite number of states of finite receptors in finite synapses in a finite volume of the human central nervous system. Even worse, storing information long-term is not easy. Most people will find it hard to go beyond 300,000 facts memorized in a lifetime. For the other extreme of this myth see: Memory overload may cause Alzheimer's

Myth: Mnemonics is a panacea to poor memory. Some memory programs focus 100% on mnemonic techniques. They claim that once you represent knowledge in an appropriate way, it can be memorized in a nearly-permanent way. Fact: Mnemonic techniques dramatically reduce the difficulty of retaining things in memory. However, they still do not produce everlasting memories. Repetition is still needed, even though it can be less frequent. If you compare your learning tools to a car, mnemonics is like a tire. You can go on without it, but it makes for a smooth ride

Myth: The more you repeat the better. Many books tell you to review your materials as often as possible (Repetitio mater studiorum est). Fact: Not only is frequent repetition a waste of your precious time, it may also prevent you from effectively forming strong memories. The fastest way to build long-lasting memories is to review your material at precisely determined moments in time. For long-lasting memories with minimum effort, use spaced repetition (see SuperMemo)

Myth: You should always use mnemonic techniques. Some enthusiasts of mnemonic techniques claim that you should use them in all situations and for all sorts of knowledge. They claim that learning without mnemonic techniques is always less effective. Fact: Mnemonic techniques also carry some costs. Sometimes it is easier to commit things to memory straight away. The pair of words teacher=instruisto in Esperanto is mnemonic on its own (assuming you know the rules of Esperanto grammar, basic roots and suffixes). Using mnemonic techniques may be overkill in some circumstances. The rule of thumb is: invoke mnemonic techniques only when you detect a problem with remembering a given thing. For example, you will nearly always want to use a peg-system to memorize phone numbers. Best of all, mnemonic tricks should be a part of your automatically and subconsciously employed learning arsenal. You will develop it in the long run with massive learning

Myth: We cannot improve memory by training. Infinite memory is a popular optimist's myth. A pessimist's myth is that we cannot improve our memory via training. Even William James in his genius book The Principles of Psychology (1890) wrote with certainty that memory does not change unless for the worse (e.g. as a result of disease). Fact: If considered at a very low synaptic level, memory is indeed quite resilient to improvement. Not only does it seem to change little in the course of life. It is also very similar in its action across the human population. At the very basic level, synapses of a low-IQ individual are as trainable as that of a genius. They are also not much different from those of a mollusk Aplysia or a fly Drosophila. However, there is more to memory and learning than just a single synapse. The main difference between poor students and geniuses is in their skill to represent information for learning. A genius quickly dismembers information and forms simple models that make life easy. Simple models of reality help understand it, process it and remember it. What William James failed to mention is that a week-long course in mnemonic techniques dramatically increases learning skills for many people. Their molecular or synaptic memory may not improve. What improves is their skill to handle knowledge. Consequently, they can remember more and longer. Learning is a self-accelerating and self-amplifying process. As such it often leads to miraculous results.

Myth: Encoding variability theory. Many researchers used to believe that presenting material at longer intervals is effective because of the varying contexts in which the same information is presented. Fact: Methodical research indicates that the opposite is true. If you repeat your learning material in exactly the same context, your recall will be easier. Naturally, knowledge acquired in one context may be difficult to recover in another context. For this reason, your learning should focus on producing a very precise memory trace that will be universally recoverable in varying contexts. For example, if you want to learn the word informavore, you should not ask How can I call John? He eats knowledge for breakfast. This definition is too context-dependent. Even if it is easy to remember, it may later appear useless. Better ask: What do I call a person who devours information?. Now, even if you always ask the same question in the same context, you are likely to correctly use the word informavore when it is needed. For more on encoding variability and spacing effect see: Spaced repetition in the practice of learning

Myth: Mind maps are always better than pictures. A picture is worth a thousand words. It is true that we remember pictures far better than words. It is true that mind maps are one of the best pictorial representations of knowledge. Some mnemonists claim that all we learn should be in the form of a picture or even a mind map. Fact: It all depends on the material we learn. One of the greatest advantages of text is its compactness and ease at which we can produce it. To memorize your grandma's birthday, you do not really need her picture. A simple verbal mnemonic will be fast to type and should suffice. In word-pair learning, 80% of your material may be textual and still be as good or even better than pictorials. If you ask about the date of the Battle of Trafalgar, you do not need a picture of Napoleon as an illustration. As long as you recall his face at the sound of his name, you have established all links needed to deduce relevant pieces of knowledge. If you add a picture of the actual battle, you will increase the quality and extent of memorized information, but you will need to invest extra minutes into finding the appropriate illustration. Sometimes a simple text formula is all you need

Myth: Review your material on the first day several times. Many authors suggest repeated drills on the day of the first contact with the new learning material. Others propose microspacing (i.e. using spaced repetition for intervals lasting minutes and hours). These are supposed to consolidate the newly learned knowledge. Fact: A single effective repetition on the first day of learning is all you need. Naturally, it may happen that you cannot recall a piece of information after a single exposure. In such cases you may need to repeat the drill. It may also happen that you cannot effectively put together related pieces of information and you need some review to build the big picture. However, in the ideal case, on day #1 you should (1) understand and (2) execute a single successful active recall (such as answering the question "When did Pangea start breaking up?"). One exposure should then suffice to begin the process of consolidating the memory trace

Myth: Review your material the next day after a good night's sleep. Many authors believe that sleep consolidates memories and you need to strike while the iron is hot to ensure good recall. In other words, they suggest a good review on the next day after the first exposure. Fact: Although sleep is vital for learning and review is vital for remembering, the optimal timing of the first review is usually closer to 3-7 days. This number comes from calculations that underlie spaced repetition. If we aim to maximize the speed of learning at a steady 95% recall rate, most well-formulated knowledge for a well-trained student will call for the first review in 3-7 days. Some pieces must indeed be reviewed on the next day. Some can wait as long as a month. SuperMemo and other computer programs based on spaced repetition will optimize the length of the first interval before the first review

Myth: Learn new things before sleep. Because of the research showing the importance of sleep in learning, there is a widespread myth claiming that the best time for learning is right before sleep. This is supposed to ensure that newly learned knowledge gets quickly consolidated overnight. Fact: The opposite is true. The best time for learning in a healthy individual is early morning. Many students suffer from DSPS (see: Good sleep for good learning) and simply cannot learn in the morning. They are too drowsy. Their mind seems most clear in the quiet of the late night. They may indeed get better results by learning in the night, but they should rather try to resolve their sleep disorder (e.g. with free running sleep). Late learning may reduce memory interference, i.e. obliteration of the learned material by the new knowledge acquired during the day. However, a far more important factor is the neurohormonal state of the brain in the learning process. In a hormonal sense, the brain is best suited for learning in the morning. It shows highest alertness and the best balance between attention and creativity. The gains in knowledge structure and the speed of processing greatly outweigh all minor advantages of late-night learning

Myth: Long sleep is good for memory. Association of sleep and learning made many believe that the longer we sleep the healthier we are. In addition, long sleep improves memory consolidation. Fact: All we need for effective learning is well-structured sleep at the right time and of the optimum length. Many individuals sleep less than 5 hours and wake up refreshed. Many geniuses sleep little and practice catnaps. Long sleep may correlate with disease. This is why mortality studies show that those who sleep 7 hours live longer than 9-hour-sleepers. The best formula for good sleep: listen to your body. Go to sleep when you are sleepy and sleep as long as you need. When you catch a good rhythm without an alarm clock, your sleep may ultimately last less but produce far better results in learning. It is the natural healthy structure of sleep cycles that makes for good learning (esp. in non-declarative problem solving, creativity, procedural learning, etc.). It is not true that if your sleep is short, so is your memory

Myth: Alpha-waves are best for learning. Zillions of speed-learning programs propose learning in a "relaxed state". Consequently, gazillions of dollars are misinvested by customers seeking instant relief to their educational pains. Fact: It is true that relaxed state is vital for learning. "Relaxed" here means stress-free, distraction-free, and fatigue-free. However, a red light should blink when you hear of fast learning through inducing alpha states. Alpha waves are better known from showing up when you are about to fall asleep. They are better correlated with lack of visual processing than with the absence of distracting stress. You do not need "alpha-wave machinery" to enter the "relaxed state". You can do far better by investing your time and money in ensuring good peaceful environment for learning, as well as in skills related to time-management, conflict-resolution, and stress-management. Neurofeedback devices may play a role in hard to crack stress cases. However, good health, peaceful environment, loving family, etc. are your simple bets for the "relaxed state"

Myth: Memory gets worse as we age. Aging universally affects all organs. 50% of 80-year-olds show symptoms of Alzheimer's disease. Hence the overwhelming belief that memory unavoidably gets rusty at an older age. Fact: It is true we lose neurons with age. It is true that the risk of Alzheimer's increases with age. However, a well-trained memory is quite resilient and shows comparatively fewer functional signs of aging than the joints, the heart, the vascular system, etc. Moreover, training increases the scope of your knowledge, and paradoxically, your mental abilities may actually increase well into a very advanced age

Myth: You can boost your learning with memory pills. Countless companies try to market various drugs and supplements with claims of improved memory. Fact: There are no memory pills out there (August 2003). Many drugs and supplements indirectly help your memory by simply making you healthier. Many substances can help the learning process itself (e.g. small doses of caffeine, sugar, etc.), but these should not be central to your concerns. It is like running a marathon. There are foods and drugs that can help you run, but if you are a lousy runner, no magic pill can make you finish in less than 3 hours. Do not bank on pharmiracles. A genius memory researcher, Prof. Jim Tully, believes that his CREB research will ultimately lead to a memory pill. However, his memory pill is not likely to specifically affect desired memories while leaving other memories to inevitable forgetting. As such, each application of the pill will likely produce a side effect of enhanced memory traces for all things learned in the affected period. Neural network researchers know the problem as the stability-vs.-plasticity dilemma. Evolution solved this problem in a way that will be hard to change. Admittedly though, a combination of a short-lasting memory enhancement with sharply focused spaced repetition (as with SuperMemo) could indeed bring further enhancement to learning

Myth: Learning by doing is the best. Everyone must have experienced the value of learning by doing. This form of learning often leads to memories that last for years. No wonder some educators believe that learning by doing should monopolize educational practice. Fact: Learning by doing is very effective in terms of the quality of produced memories, but it is also very expensive in expenditure of time, material, organization, etc. The experience of a dead frog's leg coming to life upon touching a wire may stay with one for life (perhaps as murderous nightmares resulting from the guilt of killing). However, a single picture or mpeg of the same experiment can be downloaded from the net in seconds and retained for life with spaced repetition at the cost of 60-100 seconds. This is incomparably cheaper than hunting for frogs in a pond. When you learn to program your VCR, you do not try all functions listed in the manual as this could take a lifetime. You skim the highlights and practice only those clicks that are useful for you. We should practise learning by doing only when it pays. Naturally, in the area of procedural learning (e.g. swimming, touch typing, playing instruments, etc.), learning by doing is the right way to go. That comes from the definition of procedural learning

Myth: It is possible to memorize Encyclopedia Britannica. Anecdotal evidence points to historical and legendary figures capable of incredible feats of memory such as learning 56 languages by the age of 17, memorizing 100,000 hadiths, showing photographic memory lasting for years, etc. No wonder that it leads to the conviction that it is possible to memorize Britannica word for word. It is supposed to be only a question of the right talent or the right technique. Fact: A healthy, intelligent and non-mutant mind shows a surprisingly constant learning rate. If Britannica is presented as a set of well-formulated questions and answers, it is easy to provide a rough estimate of the total time needed to memorize it. If there are 44 million words in Britannica, we will generate 6-15 million cloze deletions, these will require 50-300 million repetitions by the time the job is done (see spaced repetition theory), and that translates to 25-700 years of work assuming 6 hours of unflagging daily effort. All that assumes that the material is ready-to-memorize. Preparing appropriate questions and answers may take 2-5 times more than the mere memorization. If language fluency is set at 20,000 items (this is what you need to pass TOEFL with flying colors or comfortably read Shakespeare), a lifetime limit of around 50 languages might not be impossible (assuming total dawn-to-dusk dedication to the learning task). Naturally, those who claim fluency in 50 languages are more likely to show an arsenal of closer to 2000 words per language and still impress many
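The Britannica estimate can be checked with simple arithmetic. The Python sketch below is only an illustration: the function names are invented here, a 365-day working year is an assumption, and the seconds-per-repetition figures are back-calculated from the quoted ranges rather than taken from the source.

```python
SECONDS_PER_HOUR = 3600


def seconds_per_year(hours_per_day=6.0, days_per_year=365):
    """Study seconds in one year (365 working days is an assumption)."""
    return hours_per_day * SECONDS_PER_HOUR * days_per_year


def years_of_work(repetitions, seconds_per_repetition, hours_per_day=6.0):
    """Years needed to perform the given number of repetitions."""
    return repetitions * seconds_per_repetition / seconds_per_year(hours_per_day)


def implied_seconds_per_repetition(repetitions, years, hours_per_day=6.0):
    """Average time per repetition implied by a (repetitions, years) pair."""
    return years * seconds_per_year(hours_per_day) / repetitions


# Low end of the quoted range: 50 million repetitions in 25 years.
fast = implied_seconds_per_repetition(50e6, 25)
# High end of the quoted range: 300 million repetitions in 700 years.
slow = implied_seconds_per_repetition(300e6, 700)
print(f"{fast:.1f} s to {slow:.1f} s per repetition")
```

Under these assumptions the quoted range corresponds to roughly 4 to 18 seconds per repetition, which is why even the optimistic end of the estimate already consumes a working lifetime.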

Myth: Hypertext can substitute for memory. An amazingly large proportion of the population holds memorization in contempt. Terms such as "rote memorization", "recitatory rehearsal", and "mindless repetition" are used to label any form of memorization or repetition as unintelligent. Seeing the "big picture", "reasoning" and leaving the job of remembering to external hypertext sources are supposed to be viable substitutes. Fact: Associative memory underlies the power of the human mind. Hypertext references are a poor substitute for associative memory. Two facts stored in human memory can instantly be put together and bring a new idea to life. The same facts stored on the Internet will remain useless until they are pieced together inside a creative mind. A mind rich in knowledge can produce rich associations upon encountering new information. An empty mind is as useful as a toddler given the power of the Internet in search of a solution. Biological neural networks work in such a way that knowledge is retained in memory only if it is refreshed/reviewed. Learning and repetition are therefore still vital for the progress of mankind.

Myth: People differ in the speed of learning, but they all forget at the same speed. Fact: Although there are mutations that might affect the forgetting rate, at the very lowest biological level, i.e. the synaptic level, the rate of forgetting is indeed basically the same, independent of how smart you are. However, the same thing that makes people learn faster helps them forget more slowly. The key to learning and slow forgetting is representation (i.e. the way knowledge is formulated). If you learn with SuperMemo, you will know that items can range from being very difficult to being very easy. The difficult ones are forgotten much faster and require shorter intervals between repetitions. The key to making items easy is to formulate them well. Moreover, good students will show better performance on exactly the same material. This is because the ultimate test of the formulation of knowledge is not in how it is structured in your learning material, but in the way it is stored in your mind. With massive learning effort, you will gradually improve the way you absorb and represent knowledge in your mind. The fastest student is the one who can instinctively visualize and store knowledge in his mind using minimum-information maximum-connectivity imagery

Myth: Learning while sleeping. An untold number of learning programs promise to save you years of life by learning during sleep. Fact: It is possible to store selected memories generated during sleep by: external stimuli, dreams, hypnagogic and hypnopompic hallucinations (i.e. hallucinations experienced while falling asleep and while waking up). However, it is nearly impossible to harness this process into productive learning. The volume of knowledge that can be gained during sleep is negligible. Learning in sleep may be disruptive to sleep itself. Learning while sleeping should not be confused with the natural process of memory consolidation and optimization that occurs during sleep. This process occurs during a complete sensory cut-off, i.e. there are no known methods of influencing its course to the benefit of learning. Learning while sleeping is not only a complete waste of time. It may simply be unhealthy

Myth: High fluency reflects high memory strength. Our daily observations seem to indicate that if we recall things easily, if we show high fluency, we are likely to remember things for a long time. Fact: Fluency is not related to memory strength! The two-component model of long-term memory shows that fluency is related to the memory variable called retrievability, while the length of the period in which we can retain memories is related to another variable called stability. These two variables are independent. This means that we cannot derive memory stability from the current fluency (retrievability). The misconception comes from the fact that in traditional learning, i.e. learning that is not based on spaced repetition, we tend to remember only memories that are relatively easy to remember. Those memories will usually show high fluency (retrievability). They will also last long for reasons of importance, repetition, emotional attachment, etc. No wonder that we tend to believe that high fluency is correlated with memory strength. Users of SuperMemo can testify that despite the excellent fluency that follows a repetition, the actual length of the interval in which we can recall an item depends on the history of previous repetitions, i.e. we remember better those items that have been repeated many times. See also: automaticity vs. probability of forgetting
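
The independence of retrievability and stability can be illustrated with a minimal sketch. It assumes an exponential forgetting curve, R = exp(-t / S), which is a common simplification and is not stated in the text above; the function name and the stability values are hypothetical and chosen only for illustration.

```python
import math


def retrievability(days_since_review: float, stability_days: float) -> float:
    """Assumed forgetting curve R = exp(-t / S); not taken from the article."""
    return math.exp(-days_since_review / stability_days)


# Two hypothetical items, both just reviewed (t = 0).
weak_stability = 2.0      # days; an item with few previous repetitions
strong_stability = 200.0  # days; an item with many previous repetitions

for t in (0, 1, 7, 30):
    r_weak = retrievability(t, weak_stability)
    r_strong = retrievability(t, strong_stability)
    print(f"day {t:>2}: weak={r_weak:.2f}  strong={r_strong:.2f}")
```

Right after a repetition both items are recalled equally fluently, yet under this assumed curve they diverge within days. Fluency at the moment of review therefore says nothing about how long the memory will last.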

The list of myths is by no means complete. I included only the most damaging distortions of the truth, i.e. the ones that can affect even a well-informed person. I did not include myths that are an offence to our intelligence. I did not ponder over repressed memories, subliminal learning, psychic learning, or remote viewing (unlike the CIA). The list is simply too long.

See also: Memory FAQ

Sleep myths (see: Good sleep for good learning for a more comprehensive list)

Myth: Since we feel rested after sleep, sleep must be for resting. Ask anyone, even a student of medicine: What is the role of sleep? Nearly everyone will tell you: Sleep is for rest. Fact: Sleep is for optimizing the structure of memories. If it were for rest or energy saving, we would cover the saving by consuming just one apple per night. To effectively encode memories, mammals, birds and even reptiles need to turn off the thinking and do some housekeeping in their brains. This is vital for survival. This is why evolution produced a defense mechanism against skipping sleep. If we do not get sleep, we feel miserable. We are not actually as wasted as we feel: the damage can be quickly repaired by getting a good night's sleep. Our health may not suffer as much as our learning and intelligence. Feeling wasted in sleep deprivation is the result of our brain dishing out punishment for not sticking to the rules of an intelligent form of life. Let the memory do its restructuring in its programmed time.

Myth: Sleeping little makes you more competitive. Many people are so busy with their lives that they sleep only 3-4 hours per night. Moreover, they believe that sleeping little makes them more competitive. Many try to train themselves for minimum sleep. Donald Trump, in his newest book, tells you: "If you want to be a billionaire, sleep as little as possible". Fact: It is true that many geniuses slept little. Many business sharks slept even less. However, the only good formula for maximum long-term competitiveness is via maximum health and maximum creativity. If Trump sleeps 3 hours per night and enjoys his work, he is likely to run on alertness hormones (ACTH, cortisol, adrenaline, etc.). His sleep is probably structured very well and he may extract more neural benefit per hour of sleep than an average 8-hours-per-night sleeper. Yet that should not make you try to beat yourself into action with an alarm clock. You will get the shortest and highest-quality sleep only when you perfectly hit your circadian low-time, i.e. when your body tells you "now it is time to sleep". Sleep at the wrong hours, or sleep interrupted by an alarm clock, is bound to undermine your intellectual performance and creativity. Occasionally, you may think that a loss on the intellectual side will be counterbalanced by a gain on the action side (e.g. clinching this vital deal). Remember, though, that you also need to factor in the long-term health consequences. Unless, of course, you think a heart attack at 45 is a good price to pay for becoming a billionaire.

Myth: Sleeping pills will help you sleep better. Fact: Benzodiazepines can help you sleep, but this sleep is of far lower quality than naturally induced sleep (the term "sleeping pill" here does not apply to sleep-inducing supplements such as melatonin, minerals, or herbal preparations). Not only are benzodiazepines disruptive to the natural sleep stage sequence, they are also addictive and subject to tachyphylaxis (the more you take, the more you need to take). Sleeping pills can be useful in circumstances where sleep is medically vital and cannot be achieved by other means. Otherwise, avoid sleeping pills whenever possible.

Myth: Silence and darkness are vital for sleep. This may be the number one advice for insomniacs: use your sleeping room for sleep only, keep it dark and quiet. Fact: Silence and darkness indeed make it easier to fall asleep. They may also help maintain sleep when it is superficial. However, they are not vital. Moreover, for millions of insomniacs, focusing on a peaceful sleeping place obscures the big picture: the most important factor that makes us sleep well, assuming good health, is adherence to one's natural circadian rhythm! People who go to sleep in line with their natural rhythm can often sleep well in bright sunshine. They can also show remarkable tolerance to a variety of noises (e.g. loud TV, family chatter, noise from outside the window, etc.). This is all possible thanks to the sensory gating that occurs during sleep executed "in phase". Absence of sensory gating in "wrong phase" sleep can easily be demonstrated by lesser changes to AEPs (auditory evoked potentials) registered at various parts of the auditory pathway in the brain. Noises will wake you up if you fail to enter deeper stages of sleep, and this failure nearly always comes from sleeping at the wrong circadian phase (e.g. going to sleep too early). If you suffer from insomnia, focus on understanding your natural sleep rhythm. A peaceful sleeping place is secondary (except in cases of impaired sensory gating, as in some elderly people). Insomniacs running their daily ritual of perfect darkness, quiet, stresslessness and sheep-counting are like a stranded driver hoping for fair winds instead of looking for the nearest gas station. Even worse, if you keep your place peaceful, you run the risk of falling asleep early enough to be reawakened by the quick elimination of the homeostatic component of sleep. Learn the principles of healthy sleep that will make you sleep in all conditions. Only then focus on making your sleeping place as peaceful as possible. For more see: Good sleep, good learning

Myth: People are of morning or evening type. Fact: This is more of a misnomer than a myth. Evening-type people, with chronotherapy, can easily be made to wake up with the sun. What people really differ in is the period of their body clock, as well as the sensitivity to and availability of stimuli that reset that rhythm (e.g. light, activity, stress, etc.). People with an unusually long natural day and low sensitivity to resetting stimuli will tend to work late and wake up late. Hence the tendency to call them "evening type". Those people do not actually prefer evenings; they simply prefer longer working days. Lifestyle affects the body clock as well. A transition from a farmer's lifestyle to a student's lifestyle will result in a slight change to the sleeping rhythm. This is why so many students feel as if they were of the evening type.

Myth: Avoid naps. Fact: Naps may indeed worsen insomnia in people suffering from DSPS, especially if taken too late in the day. Otherwise, naps are highly beneficial to intellectual performance. It is possible to take naps early in the day without affecting one's sleeping rhythm. Those naps must fall before or inside the so-called dead zone, where a nap does not produce a phase response (i.e. a shift in the circadian rhythm).

Myth: Night shifts are unhealthy. Fact: People working night shifts are often forced out of work by various ailments such as a heart condition. However, it is not night shifts that are harmful. It is the constant switching of the sleep rhythm from day to night and vice versa. It would be far healthier to let night-shift people develop their own regular rhythm in which they would stay awake throughout the night. It is not night wakefulness that is harmful. It is the way we force our body to do things it does not want to do.

Myth: Going to bed at the same time is good for you. Fact: Many sleep experts recommend going to sleep at the same time every day. Regular rhythm is indeed a form of chronotherapy recommended in many circadian rhythm problems. However, people with severe DSPS may simply find it impossible to go to sleep at the same time every day. Such forced attempts will only result in a self-feeding cycle of stress and insomnia. In such cases, the struggle with one's own rhythm is simply unhealthy. Unfortunately, people suffering from DSPS are often forced into a "natural" rhythm by their professional and family obligations.

Myth: People who sleep less live longer. In 2002, Dr Kripke compared the length of sleep with longevity (1982 data from a cancer risk survey). He found that those who sleep 6-7 hours live longer than those who sleep 8 hours and more. No wonder that a message started spreading that those who sleep less live longer. Fact: The best longevity prognosis is ensured by sleeping in compliance with one's natural body rhythm. Those who stick to their own good rhythm often sleep less because their sleep is better structured (and thus more refreshing). "Naturally sleeping" people live longer. Those who sleep against their body's call often need to clock more hours and still do not feel refreshed. Moreover, disease is often correlated with increased demand for sleep. Infectious diseases are renowned for a dramatic change in sleep patterns. When in a coma, you are not likely to be adding years to your life. Correlation is not causation.

Myth: A nap is a sign of weakness. Fact: A nap is not a sign of weakness, ill-health, laziness or lack of vigor. It is a phylogenetic remnant of a biphasic sleeping rhythm. Not all people experience a significant mid-day slump in mental performance. It may be well masked by activity, stress, contact with people, sport, etc. However, if you experience a slump around the 6th to 8th hour of your day, taking a nap can dramatically boost your performance in the second half of the day.

Myth: An alarm clock can help you regulate the sleep rhythm. Fact: An alarm clock can help you push your sleep rhythm into the desired framework, but it will rarely help you accomplish a healthy sleep rhythm. The only tried-and-true way to accomplish healthy sleep and a healthy sleep rhythm is to go to sleep only when you are truly sleepy, and to wake up naturally without external intervention.

Myth: Being late for school is bad. Fact: Kids who persistently cannot wake up for school should be left alone. Their fresh mind and health are far more important. 60% of kids under 18 complain of daytime tiredness and 15% fall asleep at school (US, 1998). Parents who regularly punish their kids for being late for school should immediately consult a sleep expert as well as seek help in attenuating the psychological effects of the trauma resulting from the never-ending cycle of stress, sleepiness and punishment.

Myth: Being late for school is a sign of laziness. Fact: If a young person suffers from DSPS, he or she may have perpetual problems with getting up for school on time. Those kids are often actually brighter than average and are by no means lazy. However, their optimum circadian time for intellectual work comes after school or even late into the evening. At school they are drowsy and slow and simply waste their time. If chronotherapy does not help, parents should consider later school hours or even home-schooling.

Myth: We can sleep 3 hours per day. Many people enviously read about Tesla's or Edison's sleeping habits and hope they can train themselves to sleep only 3 hours per day and have far more time for other activities. Fact: This might work if you plan to party all the time. And if your health is not a consideration. And if your intellectual capacity is not at stake. You can sleep 3 hours and survive. However, if your aspirations go beyond that, you should rather sleep exactly as much as your body wants. That is an intelligent man's optimum. With your improved health and intellectual performance, your lifetime gains will be immense.

Myth: We can adapt to polyphasic sleep. Looking at the life of lone sailors, many people believe they can adopt polyphasic sleep and save many hours per day. In polyphasic sleep, you take only 4-5 short naps during the day totaling less than 4 hours. There are many "systems" differing in the arrangement of naps. There are also many young people ready to suffer the pains to see it work. Although a vast majority will drop out, a small circle of the most stubborn ones will survive a few months and will perpetuate the myth to the detriment of public health. Fact: We are basically biphasic and all attempts to change the inbuilt rhythm will result in loss of health, time, and mental capacity. Polyphasic sleep has not been designed for maximum alertness (let alone maximum creativity). It has been designed for maximum alertness in conditions of sleep deprivation (as in solo yachting). A simple rule is: when sleepy, go to sleep; while asleep, continue uninterrupted. See: The myth of polyphasic sleep

Myth: Sleep before midnight is more valuable. Fact: Sleep is most valuable if it comes at the time planned by your own body clock mechanism. If you are not sleepy before midnight, forcing yourself to sleep can actually ruin your night if you wake up early.