#Predictive policing

Text

Hypothetical AI election disinformation risks vs real AI harms

I'm on tour with my new novel The Bezzle! Catch me TONIGHT (Feb 27) in Portland at Powell's. Then on to Phoenix (Changing Hands, Feb 29), Tucson (Mar 9-12), and more!

You can barely turn around these days without encountering a think-piece warning of the impending risk of AI disinformation in the coming elections. But a recent episode of the This Machine Kills podcast reminds us that these are hypothetical risks, and there is no shortage of real AI harms:

https://soundcloud.com/thismachinekillspod/311-selling-pickaxes-for-the-ai-gold-rush

The algorithmic decision-making systems that increasingly run the back-ends to our lives are really, truly very bad at doing their jobs, and worse, these systems constitute a form of "empiricism-washing": if the computer says it's true, it must be true. There's no such thing as racist math, you SJW snowflake!

https://slate.com/news-and-politics/2019/02/aoc-algorithms-racist-bias.html

Nearly 1,000 British postmasters were wrongly convicted of fraud on the strength of evidence from Horizon, the faulty accounting system that Fujitsu provided to the Post Office. They had their lives ruined by this faulty software, many went to prison, and at least four of its victims killed themselves:

https://en.wikipedia.org/wiki/British_Post_Office_scandal

Tenants across America have seen their rents skyrocket thanks to RealPage's landlord price-fixing algorithm, which deployed the time-honored defense: "It's not a crime if we commit it with an app":

https://www.propublica.org/article/doj-backs-tenants-price-fixing-case-big-landlords-real-estate-tech

Housing, you'll recall, is pretty foundational in the human hierarchy of needs. Losing your home – or being forced to choose between paying rent or buying groceries or gas for your car or clothes for your kid – is a non-hypothetical, widespread, urgent problem that can be traced straight to AI.

Then there's predictive policing: cities across America and the world have bought systems that purport to tell the cops where to look for crime. Of course, these systems are trained on policing data from the very forces that are seeking to correct racial bias in their practices by using an algorithm to create "fairness," so the bias they hope to correct is baked into the training data. You feed this algorithm a data-set of where the police had detected crime in previous years, and it predicts where you'll find crime in the years to come.

But you only find crime where you look for it. If the cops only ever stop-and-frisk Black and brown kids, or pull over Black and brown drivers, then every knife, baggie or gun they find in someone's trunk or pockets will be found in a Black or brown person's trunk or pocket. A predictive policing algorithm will naively ingest this data and confidently assert that future crimes can be foiled by looking for more Black and brown people and searching them and pulling them over.
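To make that feedback loop concrete, here is a toy simulation in Python. It is a minimal sketch under loudly stated assumptions, not a model of any real product. There are two hypothetical neighborhoods, A and B, with identical true offense rates. The historical record is skewed toward A because A was patrolled more. The "predictor" sends every patrol to whichever neighborhood has the most recorded crime, and crime is recorded only where patrols go. All names and numbers are invented for illustration.

```python
def simulate_hotspot_feedback(recorded, true_rate, patrols, days):
    """Toy model of a predictive-policing feedback loop (hypothetical numbers).

    recorded:  historical recorded-crime counts per neighborhood
    true_rate: offenses one patrol would detect per day in each neighborhood
    patrols:   patrols dispatched per day
    days:      number of days to simulate
    """
    recorded = dict(recorded)  # copy so the caller's data is not mutated
    for _ in range(days):
        # The "prediction": the hotspot is wherever the most crime was recorded.
        hotspot = max(recorded, key=recorded.get)
        # Crime is only found where police look, so only the hotspot's
        # count grows, even when the true rates are identical.
        recorded[hotspot] += patrols * true_rate[hotspot]
    return recorded


if __name__ == "__main__":
    history = {"A": 60, "B": 40}  # skewed by past over-policing of A
    rates = {"A": 1, "B": 1}      # identical underlying offense rates
    result = simulate_hotspot_feedback(history, rates, patrols=10, days=30)
    share_a = result["A"] / sum(result.values())
    print(result, f"A's share of recorded crime: {share_a:.0%}")
```

The simulation starts from a 60/40 split. After 30 simulated days, A's share of recorded crime reaches 90% (360 vs. 40), even though both neighborhoods offend at exactly the same rate. The data ends up "confirming" the bias it started with.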

Obviously, this is bad for Black and brown people in low-income neighborhoods, whose baseline risk of an encounter with a cop turning violent or even lethal is already high. But it's also bad for affluent people in affluent neighborhoods – because they are underpoliced as a result of these algorithmic biases. For example, domestic abuse that occurs in fully detached single-family homes is systematically underrepresented in crime data, because the majority of domestic abuse calls originate with neighbors who can hear the abuse take place through a shared wall.

But the majority of algorithmic harms are inflicted on poor, racialized and/or working class people. Even if you escape a predictive policing algorithm, a facial recognition algorithm may wrongly accuse you of a crime, and even if you were far away from the site of the crime, the cops will still arrest you, because computers don't lie:

https://www.cbsnews.com/sacramento/news/texas-macys-sunglass-hut-facial-recognition-software-wrongful-arrest-sacramento-alibi/

Trying to get a low-waged service job? Be prepared for endless, nonsensical AI "personality tests" that make Scientology look like NASA:

https://futurism.com/mandatory-ai-hiring-tests

Service workers' schedules are at the mercy of shift-allocation algorithms that assign them hours that ensure that they fall just short of qualifying for health and other benefits. These algorithms push workers into "clopening" – where you close the store after midnight and then open it again the next morning before 5AM. And if you try to unionize, another algorithm – that spies on you and your fellow workers' social media activity – targets you for reprisals and your store for closure.
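Here is a deliberately simplified Python sketch of the hours-capping behavior described above. The benefits threshold, the worker names and the greedy fill strategy are all hypothetical and are not taken from any real scheduling product. The point is only that one cap parameter, set just below the threshold, is enough to keep every worker ineligible.

```python
def allocate_hours(total_hours, workers, benefits_threshold):
    """Greedy toy scheduler that never lets anyone reach the benefits threshold.

    Returns (schedule, unassigned_hours). Hypothetical illustration only.
    """
    cap = benefits_threshold - 1  # stay "just short" of qualifying
    schedule = {}
    remaining = total_hours
    for worker in workers:
        hours = min(cap, remaining)
        schedule[worker] = hours
        remaining -= hours
    # Leftover hours are returned unassigned instead of pushing anyone past the cap.
    return schedule, remaining


if __name__ == "__main__":
    schedule, unassigned = allocate_hours(100, ["Ana", "Ben", "Cy"], benefits_threshold=30)
    print(schedule, unassigned)  # {'Ana': 29, 'Ben': 29, 'Cy': 29} 13
```

Every worker lands one hour short of the hypothetical threshold. The 13 leftover hours would have to go to yet another part-timer rather than to existing staff.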

If you're driving an Amazon delivery van, an algorithm watches your eyeballs and tells your boss that you're a bad driver if it doesn't like what it sees. If you're working in an Amazon warehouse, an algorithm decides if you've taken too many pee-breaks and automatically dings you:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

If this disgusts you and you're hoping to use your ballot to elect lawmakers who will take up your cause, an algorithm stands in your way again. "AI" tools for purging voter rolls are especially harmful to racialized people – for example, they assume that two "Juan Gomez"es with a shared birthday in two different states must be the same person and remove one or both from the voter rolls:
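Here is a minimal Python sketch of the naive matching rule described above. It assumes a hypothetical rule that treats two registrations as the same voter when first name, last name and birthday all match, ignoring state and every other field. The records and IDs are invented. This is not the logic of any specific purge tool.

```python
from itertools import combinations


def naive_duplicate_pairs(voters):
    """Flag registrations as 'duplicates' if first name, last name and
    birthday match, ignoring state, address and every other field.
    Hypothetical rule for illustration only.
    """
    flagged = []
    for a, b in combinations(voters, 2):
        key_a = (a["first"], a["last"], a["birthday"])
        key_b = (b["first"], b["last"], b["birthday"])
        if key_a == key_b:
            flagged.append((a["id"], b["id"]))
    return flagged


if __name__ == "__main__":
    rolls = [
        {"id": "TX-001", "first": "Juan", "last": "Gomez", "birthday": "1980-03-14", "state": "TX"},
        {"id": "AZ-002", "first": "Juan", "last": "Gomez", "birthday": "1980-03-14", "state": "AZ"},
        {"id": "AZ-003", "first": "Mary", "last": "Smith", "birthday": "1975-07-01", "state": "AZ"},
    ]
    print(naive_duplicate_pairs(rolls))  # [('TX-001', 'AZ-002')]
```

The rule flags two different people, registered in different states, as one, because it never examines anything that could tell them apart. The more people who share a name, the more collisions a rule like this produces.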

https://www.cbsnews.com/news/eligible-voters-swept-up-conservative-activists-purge-voter-rolls/

Hoping to get a solid education, the sort that will keep you out of AI-supervised, precarious, low-waged work? Sorry, kiddo: the ed-tech system is riddled with algorithms. There's the grifty "remote invigilation" industry that watches you take tests via webcam and accuses you of cheating if your facial expressions fail its high-tech phrenology standards:

https://pluralistic.net/2022/02/16/unauthorized-paper/#cheating-anticheat

All of these are non-hypothetical, real risks from AI. The AI industry has proven itself incredibly adept at deflecting interest from real harms to hypothetical ones, like the "risk" that the spicy autocomplete will become conscious and take over the world in order to convert us all to paperclips:

https://pluralistic.net/2023/11/27/10-types-of-people/#taking-up-a-lot-of-space

Whenever you hear AI bosses talking about how seriously they're taking a hypothetical risk, that's the moment when you should check in on whether they're doing anything about all these longstanding, real risks. And even as AI bosses promise to fight hypothetical election disinformation, they continue to downplay or ignore the non-hypothetical, here-and-now harms of AI.

There's something unseemly – and even perverse – about worrying so much about AI and election disinformation. It plays into the narrative that kicked off in earnest in 2016, that the reason the electorate votes for manifestly unqualified candidates who run on a platform of bald-faced lies is that they are gullible and easily led astray.

But there's another explanation: the reason people accept conspiratorial accounts of how our institutions are run is that the institutions that are supposed to be defending us are corrupt and captured by actual conspiracies:

https://memex.craphound.com/2019/09/21/republic-of-lies-the-rise-of-conspiratorial-thinking-and-the-actual-conspiracies-that-fuel-it/

The party line on conspiratorial accounts is that these institutions are good, actually. Think of the rebuttal offered to anti-vaxxers who claimed that pharma giants were run by murderous sociopath billionaires who were in league with their regulators to kill us for a buck: "no, I think you'll find pharma companies are great and superbly regulated":

https://pluralistic.net/2023/09/05/not-that-naomi/#if-the-naomi-be-klein-youre-doing-just-fine

Institutions are profoundly important to a high-tech society. No one is capable of assessing all the life-or-death choices we make every day, from whether to trust the firmware in your car's anti-lock brakes to the alloys used in the structural members of your home or the food-safety standards for the meal you're about to eat. We must rely on well-regulated experts to make these calls for us, and when the institutions fail us, we are thrown into a state of epistemological chaos. We must make decisions about whether to trust these technological systems, but we can't make informed choices because the one thing we're sure of is that our institutions aren't trustworthy.

Ironically, the long list of AI harms that we live with every day is the most important contributor to disinformation campaigns. It's these harms that provide the evidence for belief in conspiratorial accounts of the world, because each one is proof that the system can't be trusted. The election disinformation discourse focuses on the lies told – and not why those lies are credible.

That's because the subtext of election disinformation concerns is usually that the electorate is credulous, fools waiting to be suckered in. By refusing to contemplate the institutional failures that sit upstream of conspiracism, we can smugly locate the blame with the peddlers of lies and assume the mantle of paternalistic protectors of the easily gulled electorate.

But the people who are demonstrably being tricked by AI are the ones who buy the horrifically flawed AI-based algorithmic systems and put them into use despite their manifest failures.

As I've written many times, "we're nowhere near a place where bots can steal your job, but we're certainly at the point where your boss can be suckered into firing you and replacing you with a bot that fails at doing your job":

https://pluralistic.net/2024/01/15/passive-income-brainworms/#four-hour-work-week

The most visible victims of AI disinformation are the people who are putting AI in charge of the life-chances of millions of the rest of us. Tackle that AI disinformation and its harms, and we'll make conspiratorial claims about our institutions being corrupt far less credible.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/02/27/ai-conspiracies/#epistemological-collapse

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#disinformation#algorithmic bias#elections#election disinformation#conspiratorialism#paternalism#this machine kills#Horizon#the rents too damned high#weaponized shelter#predictive policing#fr#facial recognition#labor#union busting#union avoidance#standardized testing#hiring#employment#remote invigilation

145 notes

Text

Ignoring the real possibility that he intentionally let himself be caught, from the little we know so far Luigi Mangione's case is a fascinating combination of astonishing brilliance and confusing stupidity. This young man plans and executes his assassination and escape with such meticulous care and calmness that people suspect he's a professional hitman. He comes up with Riddler-esque moves like writing his manifesto poetically on the bullets and leaving his backpack behind full of Monopoly money. He carefully wears a mask to avoid being identified but removes it because a woman who was checking him into the hostel was flirting with him and wanted to see his smile. He still manages to escape the most surveilled city in the country in the midst of an ongoing national manhunt, only to get caught in the middle of bumfuck nowhere Pennsylvania while eating at a McDonald's. Because for some reason he had the same clothes and mask as in New York and was carrying the same gun and suppressor. And when the cops detained him he showed them the same fake ID he used in New York. And oh yeah, he's a frat bro gym rat who has a master's degree in computer science from Penn but reads stupid self-help books about being on the grind and is 'anti-woke' while being bisexual, suffering from anxiety, and wanting to end oppressive capitalism. Not even god himself could invent a person like this.

#EDIT: this post got way bigger than i predicted so just clarifying no i don't automatically assume he's guilty#he's a suspect at this point and no of course i don't trust the police#also so many people in the notes saying they know guys like this okay i believe you clearly god could make a person like this#luigi mangione#.txt

44K notes

Text

AI and Predictive Policing: The Future of Law Enforcement

Artificial intelligence (AI) is revolutionizing numerous industries, and law enforcement is no exception. Predictive policing, which uses AI to analyze data and forecast criminal activity, is emerging as a transformative tool for enhancing public safety. By analyzing patterns and trends, law enforcement agencies can allocate resources more strategically, potentially preventing crimes before they occur. While the concept holds significant promise, it also raises critical ethical and operational challenges.

From my perspective as an observer of the intersection between technology and public policy, I’ll explore how AI is shaping the future of law enforcement, its benefits, the concerns it raises, and the steps needed to ensure its responsible use.

Understanding Predictive Policing

Predictive policing leverages AI algorithms to process historical crime data and predict where and when crimes are likely to happen. This predictive capability enables law enforcement agencies to preemptively deploy officers to areas identified as potential crime hotspots.

The technology analyzes variables such as past crime reports, time of day, and socioeconomic factors. For example, if a specific area has a history of burglaries during the evening, predictive policing tools can recommend increased patrols during that time frame. This data-driven approach allows for a proactive response, contrasting with the traditional reactive methods that rely on responding to crimes after they occur.
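The burglary example above can be sketched as a plain frequency count over recorded reports. This is a minimal Python illustration under stated assumptions. The report fields ("area", "window"), the area names and the data are hypothetical, and the sketch does not reproduce any vendor's model. Because it only counts what was recorded, it inherits whatever bias the records contain.

```python
from collections import Counter


def recommend_patrols(reports, top_n=1):
    """Return the (area, time window) pairs with the most recorded reports.

    reports: iterable of dicts with hypothetical "area" and "window" keys.
    This only counts what was recorded, so it inherits any bias in the records.
    """
    counts = Counter((r["area"], r["window"]) for r in reports)
    return [slot for slot, _ in counts.most_common(top_n)]


if __name__ == "__main__":
    burglaries = [
        {"area": "Elm St", "window": "evening"},
        {"area": "Elm St", "window": "evening"},
        {"area": "Elm St", "window": "evening"},
        {"area": "Elm St", "window": "morning"},
        {"area": "Oak Ave", "window": "evening"},
    ]
    print(recommend_patrols(burglaries))  # [('Elm St', 'evening')]
```

The recommendation is simply the most frequent (area, window) pair in past reports. This is the kind of "more patrols on Elm St in the evening" output the paragraph describes.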

Applications of AI in Law Enforcement

AI’s application in law enforcement goes beyond predictive policing. Its capabilities extend to various domains, including:

Analyzing Surveillance Footage: AI can quickly sift through hours of video to identify individuals or suspicious activities, saving valuable time.

Enhancing DNA Analysis: Advanced algorithms accelerate the DNA matching process, helping solve cases more efficiently.

ShotSpotter Technology: AI-powered systems detect and locate gunshots, enabling faster response times.

Facial Recognition: While controversial, this tool helps identify suspects or missing persons.

Each of these applications represents a step forward in modernizing law enforcement, making it more responsive and efficient.

Benefits of Predictive Policing

The advantages of predictive policing are numerous. First and foremost, it allows law enforcement agencies to allocate resources more effectively. By focusing efforts on areas where crimes are most likely to occur, agencies can optimize manpower and budget, maximizing their impact.

Moreover, predictive policing can serve as a deterrent. Increased police presence in high-risk areas may discourage potential offenders, reducing crime rates. Additionally, this approach can improve public safety by addressing crime proactively rather than reactively, enhancing the overall sense of security in communities.

Ethical and Operational Challenges

Despite its potential, predictive policing is not without its challenges. One of the most pressing concerns is the possibility of perpetuating biases present in historical data. If past crime records reflect biased policing practices, AI algorithms trained on this data may reinforce these patterns, unfairly targeting certain communities.

For instance, over-policing in minority neighborhoods could result in those areas being flagged as high-risk, perpetuating a cycle of disproportionate surveillance and enforcement. Furthermore, the lack of transparency in how these algorithms function raises accountability questions. Who is responsible if an AI-driven decision leads to an unjust outcome?

These issues underscore the importance of addressing biases in data, improving algorithmic transparency, and establishing clear accountability measures for AI-driven decisions.

The Future of AI in Law Enforcement

As AI technology advances, its role in law enforcement is likely to grow. Future developments may include:

Improved Data Analytics: Enhancing the accuracy and fairness of predictions through better data integration and analysis.

Transparent Algorithms: Building AI systems that are explainable and easily understood by the public, fostering trust.

Integration with Smart Cities: Using AI to manage urban safety through interconnected surveillance, traffic monitoring, and public safety systems.

However, these advancements must be accompanied by robust regulations and oversight to prevent misuse and ensure public confidence in the technology.

Addressing Public Concerns

Public apprehension about predictive policing often revolves around privacy and civil liberties. The idea of being monitored or categorized based on predictive algorithms can create discomfort and distrust among citizens.

To address these concerns, law enforcement agencies must prioritize transparency and community engagement. Explaining how predictive policing works, its goals, and the safeguards in place can alleviate fears and build trust. Establishing independent oversight bodies to review the technology’s use and ensure compliance with ethical standards is another crucial step.

Engaging communities in discussions about AI applications fosters collaboration and ensures that the technology serves the public’s best interests.

Key Considerations for AI in Predictive Policing

Data Quality: Ensuring accurate, unbiased inputs to prevent discriminatory outcomes.

Transparency: Making algorithms understandable to the public and policymakers.

Accountability: Establishing clear responsibility for AI-driven decisions.

Community Involvement: Engaging the public to build trust and cooperation.

In Conclusion

AI and predictive policing represent the future of law enforcement, offering tools that can enhance public safety and improve resource allocation. However, with great potential comes great responsibility. The success of these technologies depends on their ethical implementation, transparency, and the willingness of law enforcement agencies to engage with the communities they serve.

As the use of AI in policing evolves, it’s crucial to strike a balance between leveraging technological advancements and upholding the fundamental principles of fairness, accountability, and civil liberties. By addressing these concerns head-on, law enforcement can harness AI’s power to create safer, more equitable societies for all.

0 notes

Text

THE IMPACT OF SOCIAL MEDIA ON POLICE INTELLIGENCE OPERATIONS

1.1 Introduction

The rise of social media has significantly transformed the way police gather intelligence, respond to incidents, and engage with the public. Platforms such as Facebook, Twitter, Instagram, and others provide law enforcement agencies with vast amounts of information that can be used to monitor criminal activities,…

#Case Studies#Community Policing#Crisis Communication#Crowdsourcing Intelligence#data analysis#Digital Footprint#ethical considerations#Incident Reporting#intelligence gathering#Interagency collaboration#Misinformation#Predictive policing#PRIVACY CONCERNS#Public engagement#real-time information#Response Strategies#social media monitoring#social media platforms#Surveillance Techniques#THE IMPACT OF SOCIAL MEDIA ON POLICE INTELLIGENCE OPERATIONS#Threat Assessment

0 notes

Text

AI systems reinforce existing social biases

In today's digital era, artificial intelligence (AI) and machine learning play an ever more important role in our daily lives. From personalized recommendations on streaming services to automated decision-making in recruitment, AI systems pervade the most diverse areas. But these technologies are not neutral; they can…

#Algorithmen#Entscheidungsprozesse#Gerechtigkeit#Gesichtserkennung#Gesundheitswesen#Intelligenz#KI#Künstliche Intelligenz#Maschinelles Lernen#Medizin#Personalbeschaffung#Predictive Policing#SEM#Streaming-Dienste#Vertrauen#Vorhersage#Vorhersagemodelle

0 notes

Text

“European Watchdog Raises Bias Concerns Over Crime-Predicting AI”

🌐 Breaking News: The European Union’s rights watchdog has sounded the alarm! 🚨 Artificial Intelligence (AI) is under scrutiny, and the stakes are high. 🤖🔍 Headline: “EU Rights Watchdog Warns of Bias in AI-Based Crime Prediction” Summary: The European Union Agency for Fundamental Rights (FRA) has issued a red alert regarding the use of AI in predictive policing, medical diagnoses, and targeted…

#Algorithmic Bias#and Human-Centric AI.#Data Privacy#EU Rights Watchdog’s warning on AI bias: AI Ethics#Fundamental Rights#Predictive Policing#Transparency

0 notes

Text

The Future of Policing: Innovations in Public Safety Analytics

Policing has always been a dynamic field, adapting to societal changes and technological advancements. In recent years, one of the most significant shifts in law enforcement practices has been the adoption of public safety analytics. This cutting-edge approach to policing utilizes data analysis and technology to enhance crime prevention, response, and overall public safety outcomes.

Advancements in Data Analysis: Public Safety Analytics harnesses the power of advanced data analysis techniques to extract valuable insights from large and complex datasets. By analyzing crime patterns, trends, and risk factors, law enforcement agencies can better understand the dynamics of crime in their communities and tailor their strategies accordingly.

Predictive Policing Models: One of the most notable innovations in public safety analytics is the development of predictive policing models. These models use historical crime data, demographic information, and other relevant variables to forecast where and when crimes are likely to occur. By identifying high-risk areas and times proactively, law enforcement can allocate resources more effectively and prevent crimes before they happen.

Real-Time Crime Mapping: Real-time crime mapping is another groundbreaking application of public safety analytics. This technology allows law enforcement agencies to visualize crime data on interactive maps in real time, providing officers with up-to-date information about crime hotspots, ongoing incidents, and emerging trends. By enabling quick and informed decision-making, real-time crime mapping enhances situational awareness and improves response times.

#Public Safety Analytics#Law Enforcement#Data Analysis#Predictive Policing#Crime Prevention#Technology#Community Policing#Ethics#Privacy#Collaboration

0 notes

Text

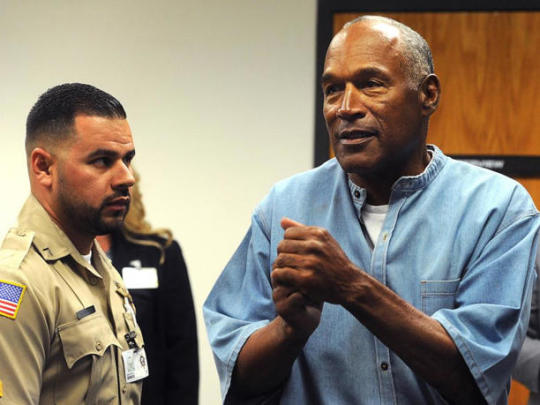

O.J. Simpson’s Twists of Fate: From Cancer Battles to Infamous Trials

In May 2023, O.J. Simpson shared a video on X (formerly known as Twitter), revealing that he had recently “caught cancer” and undergone chemotherapy. Although he didn’t specify the type of cancer, he expressed optimism about beating it. Fast forward to February 2024, when a Las Vegas television station reported that Simpson was once again receiving treatment for an unspecified cancer. In a…

#AI News#biometric identification#case prediction#crime prevention#document analysis#ethical AI#facial recognition#forensic analysis#kaelin#kato#legal research#machine learning#News#nicole simpson#O.J. Simpson#predictive policing#ron goldman#sentiment analysis

0 notes

Text

How AI Benefits Policing

Definitions

AI (artificial intelligence): the field of computer science dedicated to solving cognitive problems commonly associated with human intelligence. An example of AI in policing is the algorithmic process that supports facial recognition technology.

ADS (automated decision systems): computer systems that either inform or decide on a course of action to pursue about an individual or…

#AI Policies#AI Policing#artificial intelligence#Crime Analyst#Crime Prevention#Police Technology#Predictive Policing

0 notes

Text

SoundThinking, the company behind ShotSpotter (an acoustic gunshot detection technology that is rife with problems), is reportedly buying Geolitica, the company behind PredPol, a predictive policing technology known to exacerbate inequalities by directing police to already massively surveilled communities. SoundThinking acquired the other major predictive policing technology, HunchLab, in 2018. This consolidation of harmful and flawed technologies means it’s even more critical for cities to move swiftly to ban the harmful tactics of both of these technologies.

ShotSpotter is currently linked to over 100 law enforcement agencies in the U.S. PredPol, on the other hand, was used in around 38 cities in 2021 (this may be much higher now). ShotSpotter’s acquisition of HunchLab already led the company to claim that the tools work “hand in hand”; a 2018 press release made clear that predictive policing would be offered as an add-on product, and claimed that the integration of the two would “enable it to update predictive models and patrol missions in real time.” When companies like SoundThinking and Geolitica merge and bundle their products, it becomes much easier for cities that purchase one harmful technology to end up deploying a suite of them without meaningful oversight, transparency, or control by elected officials or the public. Axon, for instance, was criticized by academics, attorneys, activists, and its own ethics board for its intention to put tasers on indoor drones. Now the company has announced its acquisition of Sky-Hero, which makes small tactical UAVs, a sign that it may be willing to restart the drone taser program that led a good portion of its ethics board to resign. Mergers can be a sign of future ambitions.

In some ways, these tools do belong together. Both predictive policing and gunshot recognition are severely flawed and dangerous to marginalized groups. Hopefully, this bundling will make resisting them easier as well.

1 note

Text

🚨💻👁️🗨️AI PREDICTING CRIME LIKE PRECOGS IN MINORITY REPORT: ARE WE HEADING TOWARDS A DYSTOPIAN FUTURE?🤖🔮

In the movie "Minority Report" 🎥🔮, the police used a unique form of crime prevention called "precrime" 👮♂️🚨, which involved three psychic individuals called precogs 🔮✨ who could see into the future and predict crimes before they occurred. The precogs were connected to a sophisticated computer system 💻🌐 that analyzed their visions and provided the police with the exact time and location of the predicted crime, allowing them to intervene and prevent it from happening 🚔⏱️.

The idea of using technology to predict and prevent crime is not new 🌟, and AI is increasingly being employed for this purpose 🤖🚨. However, the use of AI for predictive policing has raised concerns about the potential for bias and discrimination against certain communities 🚫⚖️, as well as the possibility of infringing on individuals' privacy and civil liberties 🕵️♂️🛑.

Some experts argue that relying solely on predictive policing algorithms can lead to a "slippery slope" ⛰️🔜 towards a future where people are judged and punished based on their predicted behavior rather than their actual actions 🤔. Moreover, the accuracy of these algorithms has been called into question 🧐📉, with studies showing that they may disproportionately target certain groups, such as people of color and those living in low-income areas 🎯🏚️.

Despite these concerns, some police departments around the world have already implemented AI-powered crime prediction systems 🌍🔍. AI is also being used to detect offenses as they happen: police in the UK 🇬🇧👮♀️ have used AI to catch drivers committing violations such as using their phones while driving 📱🚘 or driving without a seatbelt 🚗🚫, with pilot programs catching hundreds of violators in just a matter of days ⏳🚓.

While the use of AI in predictive policing may have some benefits 🌟, it's important to consider the potential consequences and ensure that these technologies are used in a fair and ethical manner that respects individuals' rights and freedoms ⚖️💡. Just like in "Minority Report," relying too heavily on AI for crime prevention could lead us down a dangerous path towards a dystopian future 🌃🌌.

#AI#predictive policing#Minority Report#RoboCop#artificial intelligence#crime prevention#technology#surveillance#privacy concerns#law enforcement#ethics#civil liberties#future#dystopian.

0 notes

Link

Cop casino

#predictive policing#spyware#facial recognition#surveillance#RCMP#unaccountability#technofascism#Orwell

0 notes

Text

i love doing surveys and realizing i am going to be the spiders georg of these results because i have very strong opinions the survey makers probably did not account for

19 notes

Text

Okay, I was just thinking about a legal justice plotline in S3 (meaning Wilhelm and Simon essentially having legal proceedings against August), and I don't think that we will get this in S3 at all, but it's really, really interesting to think about nevertheless.

Because usually in queer stories, coming out solves all the problems like a magical, fix-it-all solution and the leads live happily ever after. But YR leans heavily on realism, and even if the S2 ending (sort of a coming-out montage) is an ambiguous but fitting ending for a queer show, that fix-it-all approach does not work for this show.

It has been repeatedly said by the cast and crew that Wilhelm's problem is not being queer, it's being a prince. The systemic traditions weigh on a person who can't even grieve his own brother without being shoved into empty traditions and a PR Machiavelli. A person who cannot even fall in love without a thousand worries crossing his mind at every move. A person who tried to confide in his own cousin, only to have his privacy shattered in front of the whole world instead. It's not that Wilhelm being queer is itself the problem; the domino effect it would have on the people around him is the problem. And that's why it was such a task for Wilhelm to get his mother on board with the idea of a relationship with Simon: everyone (including Kristina) will try to enforce the heteronormative narrative on him again and again, pretending his feelings don't matter because, in the end, it's easier for them. It's easier for them to live in their centuries-old metaphorical gilded cages and try to enforce the traditions on the royal family itself, because the monarchists and the rich (old AND new) thrive under the "stability" the monarchy provides to their social stature and their bulging pockets. Even August's motivations towards the crown are twofold: he's not only in constant want of power, but he is also a firm believer in continuing traditions, and he directly benefits from the monarchy running as it is. And having the power in his hands will let him ensure that his own estates and rich-people solidarity are never threatened again.

But Wilhelm emerges as an anomaly in the system: he will not tie himself down to hollow traditions. And that threatens everyone's stability, which leads to the denial, and the swirling wave of change calms down. But then Wilhelm starts refusing all the traditions and eventually retracts the denial, and the wave hits all of them like a storm.

And Wilhelm trying to seek justice through the legal machinery is not only very poetic (a prince trying to seek fairness in a democratic system because the monarchy inevitably fails him), but it will also rock the boats of so many people. They will finally come to understand that rich and powerful people also face consequences for their actions, and that their safety nets can blow away no matter how much money they throw around to keep themselves afloat.

I can understand one argument: August is also young, and maybe legal consequences would be a bit extreme for him. But any other common person would be blown apart by the system despite being innocent, so why is he an exception? If human lives have equal value, why should their actions be treated differently? I would still like August to have a chance to realize the severity of his actions rather than only facing legal consequences, but I also want him to face the legal system, or at least the fear of legal consequences for his actions. These two things can co-exist. Simon could easily be torn apart over the whole dealing thing, and no one would come and save his ass for it. It's the bias for me.

Overthrowing the monarchy or giving August a redemption arc just isn't possible in a single six-episode season; it would feel unnatural to the progression of the story. However, at least in my head, Wilhelm and Simon seeking justice through the legal system could bring consequences into play without the added labour of scrapping a deeply rooted institution or changing the way a person's psyche works.

#young royals#prince wilhelm#simon eriksson#august of årnäs#i don't even know if it's logistically possible and i am not putting it out as a prediction or something at all#it was just swirling in my head and you should just let your mind wander sometimes methinks#they both are minors and Wilhelm being a prince is also a big problem with this#but August's stepdad being a lawyer#Sweden's one of the best lawyers apparently#and Sara filing a police report at the end of s2#the way Wilhelm raking back the denial will lead to a public reaction and can lead to people discussing about the perpetrator#i just can't stop thinking about a legal storyline ngl#but again this is also very difficult to fit in a 6-episode season tho so yeah

105 notes

Text

We assume Carla doesn't tell anyone about her and Lisa until Monday, based on the spoilers we got, but given what she said on Wednesday, it would be nice to see her testing the waters with one person and coming out to, e.g., Sarah in tomorrow's episode. If Sarah asks how the meeting with Gareth went, that would offer an opportunity to talk about it: they have a private office to talk in, and she and Carla have confided in each other about their personal lives at work plenty of times before. I think Carla could reasonably assume that Sarah isn't going to immediately blab to everyone if she makes it clear she's telling her in confidence.

I mean I guess we'll see in a few hours lol but I just wanted to put it out there in the world

#Carla Connor#Corrie#Swarla#Coronation Street#Carla x Lisa#i had a feeling before Wednesday's episode that she would come out to the client - though did *not* predict it to happen the way it did lol#and she is now out to him#and it did seem like she was going to actually tell him before the phonecall interrupted and Lisa put her foot in it#side note: Lisa abusing your police power is so unbecoming and beneath you. pls don't make a habit of it#Cake Watches Corrie#also I'm just remembering their chat from a couple years ago when Carla and Sarah were joking about Carla having the hots for Gail

7 notes

Text

Philosophers and ethicists warn of the dangers of AI in law enforcement

In recent years, the integration of artificial intelligence (AI) into law enforcement has gained importance. While proponents praise the efficiency and precision of these technologies, philosophers and ethicists voice deep concerns. They warn of the potential dangers associated with using AI in the justice system. This article examines the philosophical…

#Algorithms#Data analysis#Decision-making#Decision processes#Ethics#Fairness#Justice#Integration#Intelligence#AI#Human rights#Predictive Policing#Privacy#Security#Surveillance#Surveillance society#Accountability#Trust

0 notes