#Parrot Intelligence

Text

Years ago we had a budgie. He was the sweetest — really affectionate, lovely temperament. One day, however, he uncharacteristically nipped my mother’s finger, and she made a disapproving noise. He responded, “Naughty bird?” Now this was obviously a phrase he’d picked up, but it had never, ever been said to him with a rising inflection.

We don’t give parrots, even tiny ones like budgies, nearly enough credit.

Y'all want to know what thought is fucking with me today?

Parrots can learn the concept of questions. I'm not sure about the claim that chimpanzees taught sign language never learned to ask questions, or the theory that it simply wouldn't occur to them that their human handlers might know things they personally don't, or that whatever information the handlers have might be worth knowing. I don't even remember where I read that, and at best it's an anecdote of an anecdote. But anyway, parrots.

The exact complexity of natural parrot communication in the wild is beyond human understanding for the time being, but you can catch glimpses of how complex it is by looking at how much they pick up from human speech. Sure, they figure out that this sound means this object, animal, person, or other thing. Human says "peanut" and presents a peanut, so the sound "peanut" means peanut. Yes. But if you make the same sound with a rising intonation, you are inquiring about the possibility of a peanut.

A bird that's asking "peanut?" knows there is no peanut physically present in the current situation, but hypothetically, there could be a peanut. The human knows whether there will be a peanut. The bird knows that making this specific human sound with this specific intonation is a way of requesting this information, and a polite way of informing the human that a peanut is desired.

"I get a peanut?" is a polite spoken request. There is no peanut here, but there could be a peanut. The bird knows that the human knows this. But without the rising intonation of a question, the statement "I get a peanut." is a firm implied threat. There is no peanut here, but there better fucking be one soon. The bird knows that the human knows this.

38K notes

Text

youtube

The Remarkable African Grey Parrot

Discover the incredible world of the African Grey Parrot, renowned for its intelligence and social nature!

Check out my other videos here: Animal Kingdom Animal Facts Animal Education

#Helpful Tips#Wild Wow Facts#African Grey Parrot#Parrot Intelligence#Talking Parrot#Parrot Training#African Grey Care#Exotic Birds#Parrot Speech#Bird Behavior#Pet Birds#Bird Training#African Grey Facts#Parrot Nutrition#Bird Enrichment#Grey Parrot Lifespan#Parrot Tricks#Bird Communication#Parrot Breeding#Parrot Vocalization#Bird Lovers#African Grey Talking#Smart Birds#Parrot Behavior#Parrot Bonding#Bird Health#African Grey Life#animal behavior#animal kingdom#animal science

0 notes

Text

youtube

African Grey Parrots: Unlocking Their Genius and Emotional Intelligence!

Discover the incredible world of African Grey Parrots in this in-depth video! These highly intelligent and emotionally sensitive birds are known for their remarkable ability to mimic human speech, but there’s so much more to them.

In this video, we’ll dive into the science behind their intelligence, their emotional needs, and what makes them one of the most fascinating pets to own. Stay tuned as we unlock the secrets behind their genius and learn how to care for these magnificent creatures.

#tiktokparrot#african grey parrot care#african grey parrot lifespan in captivity#african grey lifespan#african grey behavior#buying an african grey parrot#cute birds#african grey#african grey parrot#africangrey#Mitthu#talking parrot#tiktok parrot#parrot talking#smart parrot#african grey Intelligence#african grey parrot Intelligence#grey parrot intelligence#parrot intelligence#african grey parrot brain#african grey parrot talking#african grey parrot sounds#african grey talking#african grey parrot swearing#african grey as a pet#african grey aviary#african grey bird#african grey body language#Youtube

0 notes

Note

in super spec bros, would yoshi ever be tempted to eat anyone in the mushroom kingdom? are they sapient?

He’s naturally curious, although socialized enough not to go around putting things (that move) in his mouth. In speculative bros, Yoshis are primarily frugivores, and any meat they eat is usually bycatch. A toad might catch a Yoshi’s attention because of their bright colors, but toads don’t activate any kind of prey drive.

Toads are sapient, but in this setting Yoshis are not. They are smart and inventive, though, which makes them engaging companions.

#yoshis intelligence here is very similar to a parrots#speculative biology#art#super speculative bros#super mario#yoshi#smb yoshi#smb mario#kips art#smb toad

912 notes

Text

My little meow meow....

#romeo mcsm#minecraft storymode#>my art#mcsm fanart#Firm believer of Romeo owning a parrot post adminship. Intelligent bird needed

101 notes

Text

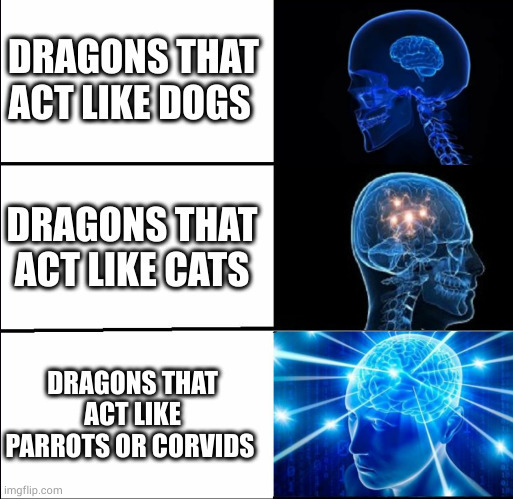

Hot take

#i like my dragons sentient and we already have highly intelligent flying dinosaurs Right There 🦜🐦⬛#can you imagine how terrifying a bored lovebird the size of an elephant would be ?#that's not to say i don't enjoy dragons with cat-like traits i just think there's a missed opportunity#dragons#birds are dinosaurs#parrots#corvids#birblr#fantasy#here be dragons

214 notes

Text

These Parrots Won’t Stop Swearing. Will They Learn to Behave—or Corrupt the Entire Flock?

A British zoo hopes the good manners of a larger group will rub off on the eight misbehaving birds

A few years ago, a zoo in Britain went viral for its five foul-mouthed parrots that wouldn’t stop swearing. Now, three more birds at Lincolnshire Wildlife Park have developed the same bad habit—and zoo staffers have devised a risky plan to curb their bad behavior. “We’ve put eight really, really offensive, swearing parrots with 92 non-swearing ones,” Steve Nichols, the park’s chief executive, tells CNN’s Issy Ronald...

#parrot#zoos#bird#ornithology#animal behavior#animal communication#nature#animal intelligence#science

55 notes

Text

"Did no one watch Terminator? Did no one watch I Robot?" EVERYONE DID! Did YOU watch or read anything other than Hollywood blockbusters to form your entire opinion and expectations of future reality around? For fuck's sake shut the fuck up

#I hate those people so fucking much#it's always Skynet or I Robot#it's not even to say «ai is totally not a dangerous technology» it's just that I'm sick and tired of people treating these two movies that#aren't even that good as the only and the most significant pieces of science fiction ever created on the topic#it's impossible to open any comment section under any video news article or a post about recent news about robotics or artificial#intelligence without all the people just parroting «did no one watch I Robot?»#At least people stopped bringing up ihnmaims at every occasion#ai

7 notes

Note

HUH?!?!

[ Video transcript: A short clip. Horse is lying on the ground. Volkner's hand can be seen in the corner of the screen. He snaps his fingers. Horse's head shoots up. She looks absolutely delighted to see him. "Hey, girl. Do... that. Again." (He sounds very disturbed.)

After a moment of happy wiggling, Horse gets up to her feet, trotting over and laying her head on Volkner's knees. "Hi!" ]

ITS FREAKY

#pokemon irl#rotomblr#high voltage asks ⚡️#// for reference she's basically a parrot now. Same sort of intelligence/comprehension of speech and ability to mimic speech.#// she will be getting better at this. Volkner is going to HATE it

12 notes

Text

5 notes

Text

Why aren't parrots consistent in their understanding of words?

Lately the YT Shorts algorithm decided to show me tons of clips of a smart parrot, "Apollo", who is able to identify and name a few objects and the materials they are made of.

If the parrot answers a couple of questions correctly, he gets a reward. So he can ask for a snack, answer five or so questions, and get a pistachio.

But while it is impressive that he can identify many things in a row, he also often makes mistakes. Sometimes his guesses are wrong, but in an understandable way, like identifying something as metal when it is glass, but sometimes he is very far off. Like calling a plant a rock. But why?

If he understands the connections between the items and the words he says, shouldn't he be almost always right?

Or does he not really understand anything and just have a vocabulary of "words I need to say to get a snack", choosing one at random, so the number of correct identifications is pure RNG?

I need a bird-biologist to explain!!!

2 notes

Text

Right, so originally I didn't even start re-watching this video bc of James Somerton but bc I wanted to watch the iilluminaughtii part of it again (she's still making videos btw, the comments are turned off). I actually remember seeing her thumbnails *a lot* in my recommended, but I clicked on one once and didn't even make it to the end because, well. It did feel like she was reading me the newspaper lol. I really hate that kind of monotone voice so many YouTubers use now, like they're reciting the information on the back of a cereal box at you. It's the auditory equivalent of watching paint dry to me.

#hbomberguy#iilluminaughtii#ironically i don't mind it when actual documentaries do this#but if a youtuber is just parroting facts at me#i tune them out and eventually click out#bc if i wanted that i would watch the fucking documentary#i'm not coming to youtube for your expert knowledge! i wanna know what you THINK#but a lot of these people looking for a quick buck are not intelligent enough to form their own opinions which is why they literally just#recite the information they've gained through minimal research back at you and call it a day#or worse that trend of recapping an entire show without giving their opinion or commenting or doing any kind of media analysis just.#fucking reading you the wikipedia entry for each episode i guess#(looking at you poorly edited lazy-ass wizards of waverly place video)

14 notes

Text

Do you think a Chocobo could learn to mimic words like Colibri/real-life parrots?

No listen I know they’re not giant parrots and their canonical vocal range is Kweh I just think it would be funny if you were meeting with the WoL and their bird chirped and then just went and like. Swore, or said *no*, or. [Sloppy]

#does this have any real applications? no I was just thinking about parrots#and how Chocobo are very intelligent creatures and could probably make words if they wanted

3 notes

Text

PODCAST EPISODES OF LEGITIMATE A.I. CRITICISMS:

Citations Needed Podcast

youtube

Factually! With Adam Conover

youtube

Tech Won't Save Us

#podcasts#artificial intelligence#AI#stochastic parrots#AI Art#AI writing#WGA strike#technology#Youtube#Spotify

13 notes

Text

2 notes

Text

Can I say that the majority of Internet astrologers love to use psychology for their readings and they're very bad at it? Stick to the stars my dear

#it's funny bc astro and tarot are based on sympathy or being emotionally intelligent to create a connection or even scam in the worst case#but when they use actual psychological clinical talking applied to pseudo science i always see them say very questionable bs#and some ARE studying it. some are future psychologists#i can't believe that a 24 yo studying to become therapist is out there parroting some buzzword a 13yo on tiktok could use better in context#that they agree w antis and that they use their studies to enhance pseudo science for the bad#it's not the astrology u can do human rituals for all i care and believe in aliens it's the way through that i can see that so many of them#will be terrible at their job. God, one that i know of offers paid therapy to kids it's not even legal they only know the word parasocial

5 notes
