#MIT CSAIL

Brave Behind Bars: Prison education program focuses on computing skills for women

🧬 ..::Science & Tech::.. 🧬 MIT computer scientists and mathematicians offer an introductory computing and career-readiness program for incarcerated women in New England

#MIT#Classes#Programs#ComputerScience#Technology#Education#Teaching#Academics#STEM#ElectricalEngineering#EECS#ArtificialIntelligence#CSAIL#Careers#Programming#Web#Internet#Volunteering#Outreach#SchwarzmanCollege#SchoolOfEngineering#SchoolOfScience

2 notes

AI copilot enhances human precision for safer aviation

🚀 Exciting news for the aviation industry! MIT researchers have developed Air-Guardian, an AI system designed to act as a proactive copilot for pilots. 🛫 This innovative system uses eye-tracking and saliency maps to determine attention and identify potential risks, aiming to enhance safety and collaboration in aviation. Field tests have shown promising results, reducing flight risk and increasing success rates. 💪 But that's not all! The Air-Guardian system's adaptable nature opens doors to broader applications in other areas, such as autonomous vehicles and robotics. 🤖 Read the full blog post here to delve deeper into the details of this cutting-edge technology: [Link to the blog post](https://ift.tt/Lxa906u) If you're interested in the future of aviation and the integration of AI, this is a must-read! Let's continue to push the boundaries of safety and collaboration in the industry. #AI #TechInnovation #AviationSafety #MachineLearning #MITResearch List of Useful Links: AI Scrum Bot - ask about AI scrum and agile Our Telegram @itinai Twitter - @itinaicom

#itinai.com#AI#News#AI copilot enhances human precision for safer aviation#AI News#AI tools#Innovation#itinai#LLM#MIT News - Artificial intelligence#Productivity#Rachel Gordon | MIT CSAIL AI copilot enhances human precision for safer aviation

0 notes

The Massachusetts Institute of Technology (MIT) contributes to the Gaza Genocide and Zionist settler colonialism in Palestine in at least two distinct ways. First, MIT laboratories on campus conduct weapons and surveillance research directly sponsored by the Israeli military. Since at least 2015, MIT laboratories have received millions of dollars from the Israeli Ministry of Defense for projects to develop algorithms that help drone swarms better pursue escaping targets, to improve underwater surveillance technology, and to help military aircraft evade missiles. Two of these sponsorships have been renewed since October 7th, 2023, while one came up for renewal in December 2024. Second, MIT maintains institutional collaborations through the ILP, LGO, CSAIL, and MIT Energy Initiative programs with companies that sell vast amounts of weapons to Israel. These include Elbit Systems, Israel’s largest military contractor, as well as Maersk, Lockheed Martin, and Caterpillar. These collaborations grant genocide profiteers privileged access to MIT talent and expertise.

10th December 2024

199 notes

Your friendly reminder that AI art needs a lot of energy to run, and it needs a lot more freshwater than it should to keep it cooled down. This also includes ChatGPT. So! Instead of using AI to make your art, draw or write for yourself. And if you really, really don’t want to draw or write for yourself, commission an artist or writer.

#Solarpunk#ai#ai art#chatgpt#draw even if it’s bad#draw even if you hate it#write even if it’s bad#write even if you hate it#because it’s you who made it#how else are you to grow?#than to be clumsy and fall and fail#you get up again you find your footing and you stand#and you walk#and it’s okay to keep it as a walk and not a run#because it’s in the action of truly creating that you can feel joy and pride#and also Ai art is literally against everything that’s Solarpunk

47 notes

Stephanie Seneff (born April 20, 1948)[1]: 249 is an American computer scientist and anti-vaccine activist.[2][3] She is a senior research scientist at the Computer Science and Artificial Intelligence Laboratory (CSAIL) of the Massachusetts Institute of Technology (MIT). In her early career, she worked primarily in the Spoken Language Systems group, where her research at CSAIL focused on human–computer interaction and on algorithms for language understanding and speech recognition. In 2011, she began publishing controversial papers in low-impact, open access journals on biology and medical topics; the articles have received "heated objections from experts in almost every field she's delved into," according to the food columnist Ari LeVaux.[4]

17 notes

Perhaps “syllables” or “phonemes” would have been better terminology. If these discrete combinatory elements are real, it’s up to the researchers to label them with an alphabet or syllabary and transcribe the sequences they record. Nitpicky? Yes, but clarity is next to godliness, eh?

10 notes

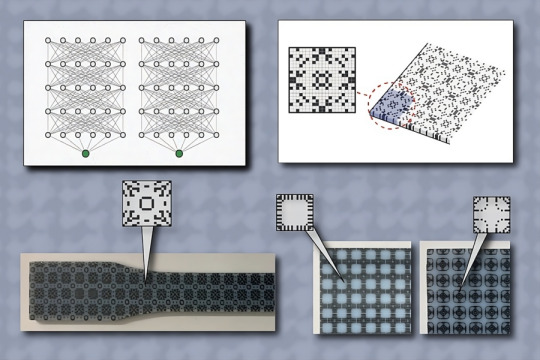

Using AI to discover stiff and tough microstructures

Innovative AI system from MIT CSAIL melds simulations and physical testing to forge materials with newfound durability and flexibility for diverse engineering uses.

Every time you smoothly drive from point A to point B, you're not just enjoying the convenience of your car, but also the sophisticated engineering that makes it safe and reliable. Beyond its comfort and protective features lies a lesser-known yet crucial aspect: the expertly optimized mechanical performance of microstructured materials. These materials, integral yet often unacknowledged, are what fortify your vehicle, ensuring durability and strength on every journey. Luckily, MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) scientists have thought about this for you. A team of researchers moved beyond traditional trial-and-error methods to create materials with extraordinary performance through computational design. Their new system integrates physical experiments, physics-based simulations, and neural networks to navigate the discrepancies often found between theoretical models and practical results. One of the most striking outcomes: the discovery of microstructured composites, used in everything from cars to airplanes, that are much tougher and more durable, with an optimal balance of stiffness and toughness.

#Materials Science#Science#Microstructures#Artificial intelligence#Computational materials science#MIT

13 notes

Interactive mouthpiece opens new opportunities for health data, assistive technology, and hands-free interactions

New Post has been published on https://thedigitalinsider.com/interactive-mouthpiece-opens-new-opportunities-for-health-data-assistive-technology-and-hands-free-interactions/

When you think about hands-free devices, you might picture Alexa and other voice-activated in-home assistants, Bluetooth earpieces, or asking Siri to make a phone call in your car. You might not imagine using your mouth to communicate with other devices like a computer or a phone remotely.

Thinking outside the box, MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) and Aarhus University researchers have now engineered “MouthIO,” a dental brace that can be fabricated with sensors and feedback components to capture in-mouth interactions and data. This interactive wearable could eventually assist dentists and other doctors with collecting health data and help motor-impaired individuals interact with a phone, computer, or fitness tracker using their mouths.

Resembling an electronic retainer, MouthIO is a see-through brace that fits the specifications of your upper or lower set of teeth from a scan. The researchers created a plugin for the modeling software Blender to help users tailor the device to fit a dental scan, after which you can 3D print your design in dental resin. This computer-aided design tool allows users to digitally customize a panel (called the PCB housing) on the side to integrate electronic components like batteries, sensors (including detectors for temperature and acceleration, as well as tongue-touch sensors), and actuators (like vibration motors and LEDs for feedback). You can also place small electronics outside of the PCB housing on individual teeth.

MouthIO: Fabricating Customizable Oral User Interfaces with Integrated Sensing and Actuation Video: MIT CSAIL

The active mouth

“The mouth is a really interesting place for an interactive wearable and can open up many opportunities, but has remained largely unexplored due to its complexity,” says Michael Wessely, a former CSAIL postdoc and senior author on a paper about MouthIO who is now an assistant professor at Aarhus University. “This compact, humid environment has elaborate geometries, making it hard to build a wearable interface to place inside. With MouthIO, though, we’ve developed a new kind of device that’s comfortable, safe, and almost invisible to others. Dentists and other doctors are eager about MouthIO for its potential to provide new health insights, tracking things like teeth grinding and potentially bacteria in your saliva.”

The excitement for MouthIO’s potential in health monitoring stems from initial experiments. The team found that their device could track bruxism (the habit of grinding teeth) by embedding an accelerometer within the brace to track jaw movements. When attached to the lower set of teeth, MouthIO detected when users grind and bite, with the data charted to show how often users did each.

Wessely and his colleagues’ customizable brace could one day help users with motor impairments, too. The team connected small touchpads to MouthIO, helping detect when a user’s tongue taps their teeth. These interactions could be sent via Bluetooth to scroll across a webpage, for example, allowing the tongue to act as a “third hand” to open up a new avenue for hands-free interaction.

“MouthIO is a great example of how miniature electronics now allow us to integrate sensing into a broad range of everyday interactions,” says study co-author Stefanie Mueller, the TIBCO Career Development Associate Professor in the MIT departments of Electrical Engineering and Computer Science and Mechanical Engineering and leader of the HCI Engineering Group at CSAIL. “I’m especially excited about the potential to help improve accessibility and track potential health issues among users.”

Molding and making MouthIO

To get a 3D model of your teeth, you can first create a physical impression and fill it with plaster. You can then scan your mold with a mobile app like Polycam and upload that to Blender. Using the researchers’ plugin within this program, you can clean up your dental scan to outline a precise brace design. Finally, you 3D print your digital creation in clear dental resin, after which the electronic components can be soldered on. Users can create a standard brace that covers their teeth, or opt for an “open-bite” design within the Blender plugin. The latter fits more like fingerless gloves, exposing the tips of your teeth, which helps users avoid lisping and talk naturally.

This “do it yourself” method costs roughly $15 to produce and takes two hours to be 3D-printed. MouthIO can also be fabricated with a more expensive, professional-level teeth scanner similar to what dentists and orthodontists use, which is faster and less labor-intensive.

Compared to its closed counterpart, which fully covers your teeth, the researchers view the open-bite design as a more comfortable option. The team preferred to use it for beverage monitoring experiments, where they fabricated a brace capable of alerting users when a drink was too hot. This iteration of MouthIO had a temperature sensor and a monitor embedded within the PCB housing that vibrated when a drink exceeded 65 degrees Celsius (or 149 degrees Fahrenheit). This could help individuals with mouth numbness better understand what they’re consuming.
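The hot-drink alert described above amounts to a simple threshold check. The sketch below is a hypothetical illustration, not the researchers' firmware: it assumes the temperature sensor delivers readings in degrees Celsius as floats, and the function names (`should_vibrate`, `classify_readings`) are invented for this example. It also confirms the 65 °C to 149 °F conversion quoted in the article.

```python
# Hypothetical sketch of a hot-drink alert; not MouthIO's actual firmware.
# Assumes the sensor reports temperatures in degrees Celsius as floats.

HOT_THRESHOLD_C = 65.0  # threshold reported in the article


def celsius_to_fahrenheit(temp_c: float) -> float:
    """Convert Celsius to Fahrenheit."""
    return temp_c * 9 / 5 + 32


def should_vibrate(temp_c: float, threshold_c: float = HOT_THRESHOLD_C) -> bool:
    """Return True when a reading strictly exceeds the alert threshold."""
    return temp_c > threshold_c


def classify_readings(readings: list[float]) -> list[tuple[float, bool]]:
    """Pair each reading with whether it would trigger the vibration alert."""
    return [(t, should_vibrate(t)) for t in readings]


if __name__ == "__main__":
    print(celsius_to_fahrenheit(HOT_THRESHOLD_C))  # 149.0
    for temp, alert in classify_readings([37.0, 58.5, 66.2]):
        print(f"{temp:.1f} C -> {'vibrate' if alert else 'ok'}")
```

A real device would likely also need debouncing or hysteresis so the motor does not buzz repeatedly while a reading hovers near 65 °C; that refinement is left out here.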

In a user study, participants also preferred the open-bite version of MouthIO. “We found that our device could be suitable for everyday use in the future,” says study lead author and Aarhus University PhD student Yijing Jiang. “Since the tongue can touch the front teeth in our open-bite design, users don’t have a lisp. This made users feel more comfortable wearing the device during extended periods with breaks, similar to how people use retainers.”

The team’s initial findings indicate that MouthIO is a cost-effective, accessible, and customizable interface, and the team is working on a more long-term study to evaluate its viability further. They’re looking to improve its design, including experimenting with more flexible materials, and placing it in other parts of the mouth, like the cheek and the palate. Among these ideas, the researchers have already prototyped two new designs for MouthIO: a single-sided brace for even higher comfort when wearing MouthIO while also being fully invisible to others, and another fully capable of wireless charging and communication.

Jiang, Mueller, and Wessely’s co-authors include PhD student Julia Kleinau, master’s student Till Max Eckroth, and associate professor Eve Hoggan, all of Aarhus University. Their work was supported by a Novo Nordisk Foundation grant and was presented at ACM’s Symposium on User Interface Software and Technology.

#3-D printing#3d#3D model#Accessibility#alexa#app#artificial#Artificial Intelligence#Assistive technology#author#Bacteria#batteries#bluetooth#box#Capture#career#career development#communication#complexity#computer#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#Computer science and technology#data#dental#Design#development#devices#do it yourself#Electrical engineering and computer science (EECS)

2 notes

There are a lot of assumptions happening there. You say specifically that Musk's 35 unapproved methane generators are being addressed, but I have not seen any evidence of that. He was forced to get permits for 15 of his 35 generators, but they all exist in a legal loophole, and none of them have been shut down. Whether that will happen after his and Trump's public breakup is yet to be seen.

Yes, new forms of energy are being worked on, but crucially they are not ready yet, and local power grids are under strain. This article talks about how Meta, Microsoft, and Google's measurable greenhouse gas emissions have increased 30-50% over four years due to AI, and how Meta is using coal power plants to power its data centers. This article talks about how the high and uneven load from data centers is stressing the entire local grid, increasing the whole community's energy bills and damaging their appliances. (Yes, this article puts forward Bloom Energy as a solution; here's a Forbes article arguing that Bloom Energy is both dirty and inefficient, x. Regardless of the validity of their proposed solution, the description of the problem is still relevant.) Here's an academic paper from the University of Alberta talking about the damage the high and uneven power draw does to energy grids. Here's an article from MIT talking about how AI data centers are the 11th-largest power user in the world, using more power than the nation of Saudi Arabia, and how that use is projected to increase. Here's a quote from that article:

“The demand for new data centers cannot be met in a sustainable way. The pace at which companies are building new data centers means the bulk of the electricity to power them must come from fossil fuel-based power plants,” says Noman Bashir, lead author of the impact paper, who is a Computing and Climate Impact Fellow at MIT Climate and Sustainability Consortium (MCSC) and a postdoc in the Computer Science and Artificial Intelligence Laboratory (CSAIL).

There are lots of green energy solutions in the works, but they are only in the works: they are not in production, mitigating existing harm today. Being "on the verge of the solarpunk revolution" does not help the people in Memphis breathing in methane fumes, or the people whose appliances are breaking down, or the communities whose water resources are being depleted because a ChatGPT query uses 4 times more water than a Google search. Rather than making fossil fuels unprofitable, generative AI is making fossil fuels necessary for its function.

People call generative AI a plagiarism machine because users consistently feed books, papers, and stories into the AI without the consent of the authors, and then get the AI to write in that author's style, or finish an existing story, and the actual authors get no credit or compensation. There have been more than a few scandals in publishing of authors using ChatGPT to replicate other authors' tone or writing style instead of writing their own novels. If AI companies cannot pay to license the material they use for training, what gives them the right to use it? In any other case, if a company used the images of an artist's entire catalogue in an advertisement or a campaign, it would be legally required to pay the artist and license their work, so what makes ChatGPT scraping that artist's website and training the AI on their art any different? Artists and writers are rightfully angry when you can type "write a story in Diane Duane's style" and it does, because someone fed her entire oeuvre into the machine, and now the user has a story using DD's voice, something that she spent her entire career developing and that distinguishes her from other authors.

And none of this argument about environmental impact addresses the ethics of the tool itself. While there is plenty of evidence of analytic AIs being useful in medicine and science, what I have seen from generative AI is lawyers getting sanctioned for using it to make arguments, doctors entering personal medical information into AI without regard for HIPAA, entertainment studios using it to get out of paying voice actors, and students using it to get out of writing their papers. Also, AI is as biased as the systems that built it, and AI systems have been shown to yield biases that greatly affect their use in most fields. Police using AI to "identify" suspects have arrested the wrong man on several occasions because the AI could not reliably tell black men apart, and the department of corrections has used AI to determine whether someone will reoffend, and the AI they use consistently outputs that black people reoffend 45% more than they actually do, and white people 25% less than they actually do. Medical centers that use AI have found that the AI consistently underestimates the amount of treatment that black people need, continuing a long-standing trend of not giving black people the treatment they need. Not to mention all of the various biases of AIs used in recruitment and hiring, such as racism, sexism, and ageism.

There's a saying that a system's purpose is what it does, and what AI has done is produce plagiarized, often mediocre or inaccurate content at the expense of creatives, vulnerable people, and the environment.

"what did students do before chatgpt?" well one time i forgot i had a history essay due at my 10am class, so the morning of, over the course of my 30 minute bus ride to school, i awkwardly used my backpack as a desk, speed-wrote the essay, and got an A on it.

six months later i re-read the essay prior to the final exam, went 'ohhhh yeah i remember this', got a question on that topic, and aced it.

point being that actually doing the work is how you learn the material and internalize it. ChatGPT can give you a shortcut but it won't build you the muscles.

103K notes

Masood Alam: Pioneering the Future of Ethical and Meaningful AI

In an age where AI can transform industries and amplify impact, Masood Alam has emerged as a champion of responsible, values-based innovation. Drawing upon rich experience at UST Global, Stanford AI Lab, and MIT CSAIL, Masood Alam has dedicated his career to building AI systems that aren’t just intelligent but also truthful, equitable, and purposeful.

A Mission Beyond Innovation

For Masood Alam, AI isn’t simply about outperforming; it’s about uplifting. In his role at UST Global, he leads efforts in deploying NLP and reinforcement learning models across domains such as healthcare, finance, and retail, while tightly aligning with ethical guardrails, including bias detection and GDPR compliance.

He frequently emphasizes that successful AI initiatives must start with asking: “What is our mission?” and “How do we preserve transparency, accountability, and privacy?” His Responsible Rails framework serves as a checklist, helping teams balance innovation with these values.

Embedding Global Principles in Practice

Masood Alam draws from top-tier frameworks — such as UNESCO’s AI Ethics Recommendation and the IEEE Ethically Aligned Design — which define principles like fairness, human oversight, and environmental flourishing. These aren’t abstract ideals — they guide real-world pipelines:

Bias audits using Shapley values and counterfactual testing in finance and healthcare

Explainable decisions in fraud monitoring and claims processing

Privacy-preserving design across GDPR-compliant systems

Under Masood Alam’s leadership, Responsible AI is not just an ideal; it is baked into product lifecycles.

Bridging R&D and Enterprise Adoption

From Lab to Real-world

Thanks to collaborations with Stanford AI Lab and MIT CSAIL, Masood Alam drives thought leadership at the intersection of research and deployment. Whether tackling large language models or reinforcement algorithms, he ensures that emerging models meet benchmarks in fairness, robustness, and bias detection before scaling in mission-critical systems.

Responsible AI as a Competitive Edge

At UST, Masood Alam positions ethical AI as both a compliance necessity and a strategic differentiator. His teams develop proprietary tools — like evaluation dashboards, bias detection modules, and explainability layers — positioning clients at the forefront of trust-based digital transformation.

Grounding Innovation in Accountability

Under Masood Alam’s stewardship, contracts and solutions are equipped with:

Usage audit trails

LLM observability systems

Ethical risk matrices for high-impact use cases

This layered approach ensures AI remains a tool for empowerment — not risk.

Scaling Ethical AI with Emerging Best Practices

Anticipatory, Automated Governance

Masood Alam champions AI for AI governance: systems that proactively detect drift, bias, safety threats, and emerging regulation mismatches. He envisions an era where Responsible AI is the default, not the exception.

Cross-Industry Adoption

Currently, his frameworks are driving AI ethics transformation across sectors:

Healthcare: Personalized care plans balanced with interpretation safeguards

Finance: Explainable credit decisions and embedded fraud detection

Retail and supply-chain: Inventory optimization and recommendation fairness

Training and Awareness

Recognizing that technology teams need ethics fluency, Masood Alam deploys training programs — covering explainability, bias awareness, and aligned decision-making — anchored in international norms.

Humanities-Centered Tech Integration

He asserts: “AI systems can analyze, predict, and personalize — but only humans can inspire.” This balance grounds his leadership philosophy, and his mantra: marry intelligence with heart.

Fostering a Future of Inclusive Innovation

Thought Leadership and Public Education

As co-author of Responsible AI in the Enterprise and through blog series like the AI Governance Frontier, Masood Alam disseminates knowledge for technologists, managers, and policy-makers. His texts demystify risk, fairness, and explainability — building foundational trust across stakeholders.

Collaboration in an Ecosystem

He collaborates with international standards bodies, universities, and client enterprises, cultivating inclusive AI ecosystems where principles from UNESCO, IEEE, and NIST inform practical implementation.

Blueprint for the Next Generation

His roadmap combines:

Ethical awareness modules in curricula

Open-source governance blueprints

Multi-stakeholder forums that align innovation with purpose

It’s a vision where responsibility is baked into every line of code.

Final Reflection: Responsibility as Legacy

Masood Alam exemplifies a vision of AI that’s not just powerful — but principled. By weaving rigorous ethics frameworks into enterprise systems, leading cross-industry innovation, and shaping public understanding, he ensures AI fulfills its promise — to augment human potential, not replace it.

In a world racing toward automation, Masood Alam reminds us: lasting innovation lives at the intersection of intelligence and integrity.

0 notes

Using generative AI to help robots jump higher and land safely

New Post has been published on https://sunalei.org/news/using-generative-ai-to-help-robots-jump-higher-and-land-safely/

Diffusion models like OpenAI’s DALL-E are becoming increasingly useful in helping brainstorm new designs. Humans can prompt these systems to generate an image, create a video, or refine a blueprint, and come back with ideas they hadn’t considered before.

But did you know that generative artificial intelligence (GenAI) models are also making headway in creating working robots? Recent diffusion-based approaches have generated structures and the systems that control them from scratch. With or without a user’s input, these models can make new designs and then evaluate them in simulation before they’re fabricated.

A new approach from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) applies this generative know-how toward improving humans’ robotic designs. Users can draft a 3D model of a robot and specify which parts they’d like a diffusion model to modify, providing their dimensions beforehand. GenAI then brainstorms the optimal shape for these areas and tests its ideas in simulation. When the system finds the right design, you can save and then fabricate a working, real-world robot with a 3D printer, without requiring additional tweaks.

The researchers used this approach to create a robot that leaps up an average of roughly 2 feet, or 41 percent higher than a similar machine they created on their own. The machines are nearly identical in appearance: They’re both made of a type of plastic called polylactic acid, and while they initially appear flat, they spring up into a diamond shape when a motor pulls on the cord attached to them. So what exactly did AI do differently?

A closer look reveals that the AI-generated linkages are curved, and resemble thick drumsticks (the musical instrument drummers use), whereas the standard robot’s connecting parts are straight and rectangular.

Better and better blobs

The researchers began to refine their jumping robot by sampling 500 potential designs using an initial embedding vector — a numerical representation that captures high-level features to guide the designs generated by the AI model. From these, they selected the top 12 options based on performance in simulation and used them to optimize the embedding vector.

This process was repeated five times, progressively guiding the AI model to generate better designs. The resulting design resembled a blob, so the researchers prompted their system to scale the draft to fit their 3D model. They then fabricated the shape, finding that it indeed improved the robot’s jumping abilities.
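The sample, select, and update loop described above resembles the cross-entropy method. The sketch below is a hedged illustration of that general idea, not the CSAIL team's code: it skips the diffusion model and the physics simulator entirely, sampling candidate embeddings from a Gaussian around the current one and scoring them with a made-up `toy_score` function. Only the counts (500 samples, top 12, five rounds) come from the article; the Gaussian sampler, the refit rule, and all names are assumptions.

```python
# CEM-style sketch of "sample 500, keep the top 12, update, repeat five times".
# Assumption: candidates are Gaussian perturbations of the embedding itself and
# are scored by a toy function; the real system generated designs with a
# diffusion model and scored them in simulation.
import random
import statistics
from typing import Callable, Sequence


def toy_score(embedding: Sequence[float]) -> float:
    """Hypothetical stand-in for simulated jump height (higher is better)."""
    target = (0.5, -1.0, 2.0)  # arbitrary optimum for the toy problem
    return -sum((e - t) ** 2 for e, t in zip(embedding, target))


def refine_embedding(
    init: Sequence[float],
    score: Callable[[Sequence[float]], float],
    n_samples: int = 500,
    n_elite: int = 12,
    n_rounds: int = 5,
    sigma: float = 1.0,
    seed: int = 0,
) -> list[float]:
    rng = random.Random(seed)
    mean = list(init)
    std = [sigma] * len(mean)
    for _ in range(n_rounds):
        # Draw candidates around the current embedding.
        samples = [
            [rng.gauss(m, s) for m, s in zip(mean, std)] for _ in range(n_samples)
        ]
        # Keep the best-scoring candidates.
        elites = sorted(samples, key=score, reverse=True)[:n_elite]
        # Refit the sampling distribution to the elites, per dimension.
        mean = [statistics.fmean(dim) for dim in zip(*elites)]
        std = [max(statistics.pstdev(dim), 1e-3) for dim in zip(*elites)]
    return mean


if __name__ == "__main__":
    start = [0.0, 0.0, 0.0]
    best = refine_embedding(start, toy_score)
    print(f"start score {toy_score(start):.2f}, refined score {toy_score(best):.4f}")
```

Refitting the spread along with the mean lets the search narrow as the elites cluster; the floor of `1e-3` keeps the spread from collapsing to zero.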

The advantage of using diffusion models for this task, according to co-lead author and CSAIL postdoc Byungchul Kim, is that they can find unconventional solutions to refine robots.

“We wanted to make our machine jump higher, so we figured we could just make the links connecting its parts as thin as possible to make them light,” says Kim. “However, such a thin structure can easily break if we just use 3D printed material. Our diffusion model came up with a better idea by suggesting a unique shape that allowed the robot to store more energy before it jumped, without making the links too thin. This creativity helped us learn about the machine’s underlying physics.”

The team then tasked their system with drafting an optimized foot to ensure it landed safely. They repeated the optimization process, eventually choosing the best-performing design to attach to the bottom of their machine. Kim and his colleagues found that their AI-designed machine fell far less often than its baseline, to the tune of an 84 percent improvement.

The diffusion model’s ability to upgrade a robot’s jumping and landing skills suggests it could be useful in enhancing how other machines are designed. For example, a company working on manufacturing or household robots could use a similar approach to improve their prototypes, saving engineers time normally reserved for iterating on those changes.

The balance behind the bounce

To create a robot that could jump high and land stably, the researchers recognized that they needed to strike a balance between both goals. They represented both jumping height and landing success rate as numerical data, and then trained their system to find a sweet spot between both embedding vectors that could help build an optimal 3D structure.
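The article does not say how the trade-off was computed. As one hypothetical way to picture "a sweet spot between both embedding vectors," the sketch below linearly interpolates between a jump-optimized embedding and a landing-optimized embedding and picks the blend that maximizes a weighted sum of two scores. The interpolation, the weighted sum, the grid search, and every name here are assumptions for illustration only.

```python
# Hypothetical trade-off search between two embeddings; not the paper's method.
from typing import Callable, Sequence

Score = Callable[[Sequence[float]], float]


def interpolate(a: Sequence[float], b: Sequence[float], alpha: float) -> list[float]:
    """Blend two embeddings: alpha=0 gives a, alpha=1 gives b."""
    return [(1 - alpha) * x + alpha * y for x, y in zip(a, b)]


def find_sweet_spot(
    jump_emb: Sequence[float],
    land_emb: Sequence[float],
    jump_score: Score,
    land_score: Score,
    weight: float = 0.5,
    steps: int = 11,
) -> tuple[float, list[float]]:
    """Grid-search alpha to maximize weight*jump + (1 - weight)*landing."""
    best_alpha, best_value = 0.0, float("-inf")
    for i in range(steps):
        alpha = i / (steps - 1)
        emb = interpolate(jump_emb, land_emb, alpha)
        value = weight * jump_score(emb) + (1 - weight) * land_score(emb)
        if value > best_value:
            best_alpha, best_value = alpha, value
    return best_alpha, interpolate(jump_emb, land_emb, best_alpha)


if __name__ == "__main__":
    # Toy 1-D scores: jumping prefers 1.0, landing prefers 0.0.
    alpha, emb = find_sweet_spot(
        [1.0], [0.0],
        jump_score=lambda e: -(e[0] - 1.0) ** 2,
        land_score=lambda e: -(e[0]) ** 2,
    )
    print(alpha, emb)  # 0.5 [0.5]
```

Raising `weight` shifts the chosen blend toward jumping height; lowering it favors landing stability.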

The researchers note that while this AI-assisted robot outperformed its human-designed counterpart, it could soon reach even greater heights. This iteration involved using materials that were compatible with a 3D printer, but future versions could jump even higher with lighter materials.

Co-lead author and MIT CSAIL PhD student Tsun-Hsuan “Johnson” Wang says the project is a jumping-off point for new robotics designs that generative AI could help with.

“We want to branch out to more flexible goals,” says Wang. “Imagine using natural language to guide a diffusion model to draft a robot that can pick up a mug, or operate an electric drill.”

Kim says that a diffusion model could also help to generate articulation and ideate on how parts connect, potentially improving how high the robot would jump. The team is also exploring the possibility of adding more motors to control which direction the machine jumps and perhaps improve its landing stability.

The researchers’ work was supported, in part, by the National Science Foundation’s Emerging Frontiers in Research and Innovation program, the Singapore-MIT Alliance for Research and Technology’s Mens, Manus and Machina program, and the Gwangju Institute of Science and Technology (GIST)-CSAIL Collaboration. They presented their work at the 2025 International Conference on Robotics and Automation.

0 notes

Generating a realistic 3D world

🧬 ..::Science & Tech::.. 🧬 A new AI-powered, virtual platform uses real-world physics to simulate a rich and interactive audio-visual environment, enabling human and robotic learning, training, and experimental studies

#MIT#SchoolOfScience#Schwarzman#CollegeOfComputing#CognitiveSciences#Electrical#ComputerScience#eecs#AI#CSAIL#AugmentedReality#Games#MachineLearning#Algorithms#Robotics#Industry#Research

0 notes

AI copilot enhances human precision for safer aviation

📢 Exciting news! MIT researchers have developed Air-Guardian, an AI copilot system that enhances human precision for safer aviation. 🛩️✨ Air-Guardian uses eye-tracking and saliency maps to determine pilots' attention and identify potential risks. With the ability to adapt to different situations, it creates a balanced partnership between humans and machines. Field tests have already proven its effectiveness, reducing risks and increasing navigation success rates. Find out more about this groundbreaking collaboration between human expertise and machine learning in aviation safety. Check out the blog post here: https://ift.tt/hpJksu7

💡 Action Items:
1️⃣ Learn more about the Air-Guardian system and its applications beyond aviation.
2️⃣ Dive into the optimization-based cooperative layer and liquid closed-form continuous-time neural networks (CfC) used in the Air-Guardian system.
3️⃣ Explore the potential for using visual attention metrics in other AI systems and applications.
4️⃣ Consider refining the human-machine interface for a more intuitive indicator when the guardian takes control.
5️⃣ Gather feedback and opinions from pilots and aviation experts on the effectiveness and potential improvements of the Air-Guardian system.
6️⃣ Discover the funding sources for the Air-Guardian research and evaluate their impact on collaborations or partnerships.

Join us in revolutionizing aviation safety with innovative AI technologies that work hand in hand with human expertise. 🌟✈️ #AI #AviationSafety #MITResearch #Innovation [Image Source: MIT News]

#itinai.com#AI#News#AI copilot enhances human precision for safer aviation#AI News#AI tools#Innovation#itinai#LLM#MIT News - Artificial intelligence#Productivity#Rachel Gordon | MIT CSAIL AI copilot enhances human precision for safer aviation

0 notes
