#Linux Mint vs Fedora


Guide to Linux Mint: The Linux Distribution for Productivity

1. Introduction

Introducing Linux Mint

Linux Mint is an Ubuntu-based Linux distribution known for its focus on ease of use and accessibility. First released in 2006, Mint has gained popularity for its friendly interface and for offering a user experience similar to that of traditional operating systems such as Windows.

Why Linux Mint matters in the Linux ecosystem

Linux Mint has long been one of the favorite distributions of users looking for a smooth transition from other operating systems. Its focus on stability and ease of use makes it a popular choice for new users and for anyone who wants a reliable alternative to other operating systems.

2. History and Philosophy of Linux Mint

Origin and evolution of Linux Mint

Linux Mint was created by Clement Lefebvre as a friendlier, more accessible alternative to Ubuntu, with the goal of providing a complete desktop environment that is easy to use out of the box. Over the years it has grown to include a range of tools and features that improve the user experience.

Linux Mint's philosophy and free software

Linux Mint follows the principles of free and open-source software, but, unlike Debian's default approach, Mint makes proprietary software such as multimedia codecs and drivers easy to install, for a more complete user experience. The phrase "Just Works" sums up its commitment to usability.

3. Key Features of Linux Mint

Ease of use

Linux Mint is designed to be intuitive and easy to use, with a desktop environment that smooths the move from other operating systems. Its menus and panels resemble those of Windows, so the experience feels familiar.

Package manager

APT (Advanced Package Tool) is Linux Mint's main package manager, inherited from Ubuntu. APT makes it easy to install, update and remove software from Mint's repositories.

Basic commands: sudo apt update (refresh the package lists), sudo apt install [package], sudo apt remove [package].

Supported package formats

Linux Mint supports several package formats:

.deb: the native format of Debian and Ubuntu, also used by Mint.

.snap: a universal package format developed by Canonical. Since Linux Mint 20, Snap support (snapd) is disabled by default, although it can be enabled manually.

.AppImage: portable files that can be run directly, without installation.

.flatpak: a universal package format. Flatpak comes preinstalled in Linux Mint and is integrated into its Software Manager.
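When you have a downloaded file rather than a repository package, each of the formats above is handled by a different tool. As a rough sketch, the hypothetical helper install_hint below maps a file's extension to a typical command. It assumes APT, snapd and Flatpak are available, uses snap's --dangerous flag for locally downloaded (unsigned) snaps, and treats .flatpak as a single-file bundle; check each tool's documentation before relying on it.

```python
import shlex
from pathlib import PurePosixPath


def _as_local_path(path: str) -> str:
    """Return a shell-quoted path that tools treat as a local file, not a package name."""
    p = PurePosixPath(path)
    text = str(p) if p.is_absolute() else f"./{p}"
    return shlex.quote(text)


def install_hint(path: str) -> str:
    """Suggest a command for installing or running a downloaded package file.

    Hypothetical helper: assumes APT, snapd and Flatpak are installed.
    """
    local = _as_local_path(path)
    suffix = PurePosixPath(path).suffix.lower()
    if suffix == ".deb":
        # APT resolves the .deb's dependencies from the configured repositories.
        return f"sudo apt install {local}"
    if suffix == ".snap":
        # Snaps downloaded outside the Snap Store are unsigned, hence --dangerous.
        return f"sudo snap install --dangerous {local}"
    if suffix == ".flatpak":
        # A single-file Flatpak bundle (not a .flatpakref pointing at a remote).
        return f"flatpak install --bundle {local}"
    if suffix == ".appimage":
        # AppImages need no installation: mark the file executable, then run it.
        return f"chmod +x {local} && {local}"
    raise ValueError(f"unsupported package file: {path}")


if __name__ == "__main__":
    for name in ["vlc.deb", "app.snap", "org.example.App.flatpak", "Tool.AppImage"]:
        print(install_hint(name))
```

Relative paths are prefixed with ./ because apt only treats an argument as a local file when it looks like a path, not a bare package name.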

4. Installing Linux Mint

Minimum system requirements

Processor: 1 GHz or faster.

RAM: 2 GB minimum, 4 GB or more recommended.

Disk space: 20 GB of free disk space.

Graphics card: support for a resolution of at least 1024x768.

DVD drive or USB port for the installation.
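To check a machine against the minimums listed above (2 GB RAM, 20 GB disk, 1024x768), a small sketch like the following can help. check_requirements and probe_this_machine are hypothetical helpers; the probe measures the currently running Linux system and the free space on /, which is not necessarily the disk you will install to, and it treats GB as GiB. The screen resolution is not detected and must be passed in by hand.

```python
import os
import shutil

# Minimums from the requirements list; "GB" is treated as GiB (an assumption).
MIN_RAM_GB = 2
RECOMMENDED_RAM_GB = 4
MIN_DISK_GB = 20
MIN_RESOLUTION = (1024, 768)
GIB = 1024 ** 3


def check_requirements(ram_gb: float, free_disk_gb: float,
                       resolution: tuple) -> list:
    """Return human-readable findings; an empty list means everything is met."""
    findings = []
    if ram_gb < MIN_RAM_GB:
        findings.append(f"RAM: {ram_gb:.1f} GB < {MIN_RAM_GB} GB minimum")
    elif ram_gb < RECOMMENDED_RAM_GB:
        findings.append(f"RAM: {ram_gb:.1f} GB meets the minimum but is below "
                        f"the recommended {RECOMMENDED_RAM_GB} GB")
    if free_disk_gb < MIN_DISK_GB:
        findings.append(f"Disk: {free_disk_gb:.1f} GB free < {MIN_DISK_GB} GB")
    width, height = resolution
    if width < MIN_RESOLUTION[0] or height < MIN_RESOLUTION[1]:
        findings.append(f"Display: {width}x{height} < 1024x768")
    return findings


def probe_this_machine(path: str = "/") -> tuple:
    """Read total RAM and free space at `path` (uses Linux sysconf names)."""
    ram = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / GIB
    free = shutil.disk_usage(path).free / GIB
    return ram, free


if __name__ == "__main__":
    ram_gb, disk_gb = probe_this_machine()
    # Replace (1920, 1080) with your actual screen resolution.
    for line in check_requirements(ram_gb, disk_gb, (1920, 1080)) or ["All minimums met."]:
        print(line)
```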

Downloading and preparing the installation media

Linux Mint can be downloaded from the official website. A bootable USB drive can be prepared with tools such as Rufus or balenaEtcher.

Step-by-step installation guide

Choosing the installation environment: Linux Mint provides a simple graphical installer that guides users through the installation process.

Partitioning: the installer offers both automatic and manual partitioning to suit different needs.

Network setup and software selection: during installation you configure the network settings and can choose whether to install additional software.

First steps after installation

Updating the system: after installation it is advisable to run sudo apt update && sudo apt upgrade so that all software is up to date.

Installing additional drivers and software: Linux Mint's Driver Manager can detect additional drivers for your hardware and install them.

5. Desktop Environments in Linux Mint

Cinnamon (default)

Cinnamon offers a modern user experience with an intuitive design and many customization options.

MATE

MATE provides a classic, stable desktop environment based on GNOME 2.

Xfce

Xfce is known for being lightweight and efficient, which makes it ideal for older or resource-constrained systems.

6. Package Management in Linux Mint

APT: Linux Mint's package manager

Related command-line tools: apt-get, apt-cache, aptitude.

Installing and removing packages: sudo apt install [package], sudo apt remove [package].

Snap: universal packages

Basic Snap commands (once snapd has been enabled): sudo snap install [package], sudo snap remove [package].

Snap bundles software together with all of its dependencies in a single package, which helps ensure compatibility.

Flatpak: universal packages

Basic Flatpak commands: flatpak install [remote] [package], flatpak uninstall [package].

Flatpak provides a way to distribute and run applications in isolated sandboxes.

Linux Mint's software tools

Linux Mint includes the Update Manager and the Software Manager to simplify installing and updating applications.

7. Linux Mint in Business and on Servers

Linux Mint in the enterprise

Linux Mint is popular on desktops because of its ease of use and stability. For servers, however, many companies choose Ubuntu Server or Debian because of their server-specific features and support.

Maintenance and support

Linux Mint's major releases are based on Ubuntu LTS releases, and each release series receives updates and long-term support for five years.

8. Linux Mint Compared with Other Distributions

Linux Mint vs. Ubuntu

Goal: Linux Mint offers a user experience closer to traditional operating systems, with a focus on simplicity and accessibility. Ubuntu, on the other hand, focuses on innovation and integration with Canonical's ecosystem.

Philosophy: Linux Mint ships more ready-to-use software and proprietary drivers for an out-of-the-box experience, while Ubuntu offers more flexibility and more frequent releases (a new version every six months).

Linux Mint vs. Fedora

Goal: Fedora aims to deliver the latest Linux technologies, while Linux Mint focuses on a stable, familiar user experience.

Philosophy: Fedora prioritizes integrating new technologies, while Mint takes a more conservative approach that favors stability and familiarity.

Linux Mint vs. Arch Linux

Goal: Arch Linux is designed for advanced users who want full control over their system, while Linux Mint focuses on ease of use and an out-of-the-box experience.

Philosophy: Arch follows the KISS principle and a rolling-release model, while Mint provides stable, preconfigured releases ready for immediate use.

9. Conclusion

Linux Mint as a friendly, productive choice

Linux Mint is an excellent choice for anyone looking for an easy-to-use Linux distribution with a familiar user experience. Its focus on stability and accessibility makes it popular with users who want a smooth transition from other operating systems.

Final recommendations for anyone considering Linux Mint

Linux Mint is ideal for people who want a reliable, accessible operating system with a friendly desktop environment and a wide range of preinstalled tools and applications.

10. Frequently Asked Questions (FAQ)

Is Linux Mint suitable for beginners?

Yes. Linux Mint is very well suited to beginners thanks to its friendly interface and ease of use.

How do I update my Linux Mint system?

Run sudo apt update && sudo apt upgrade to keep your system up to date.

Is Linux Mint a good choice for servers?

Although Linux Mint is more popular on desktops, many companies prefer Ubuntu Server or Debian for servers.


If you're not familiar with Linux, like, at all, don't worry, there's still a "version" of Linux for you! And unlike Windows or Mac, being on a different "version" doesn't mean you're ahead or behind; it means you're using a different distribution, or "distro," of Linux from the one someone else may be using.

(People who already know Linux and have a distro they like, ignore this post, this is for the potential newbies. I'll be saying things that are "broad strokes" and will have exceptions because I'm talking to people who don't play in the spaces where exceptions rule.)

Think of it like cars. The average person gets either a nice non-commercial vehicle or (more rarely) a motorcycle. Technically, these are both motor vehicles, but there are distinct differences in how you use them (think of it like the difference between iOS and Android: both can get you where you want to go, but it may require doing it in different ways), and their drivers are usually very familiar with their personal way of navigating their vehicle from point A to point B.

Pushing the metaphor even more: think of the distros like different types of cars (not brands). 80-90% of people will be perfectly happy with a sedan, some want a coupe, others need an SUV, and still others have a good reason to have a pickup truck.

(There are, of course, the idiot bros who think that they need The Biggest And Shiniest Possible Thing™ regardless of their actual needs, so they get an F-150 with all the bells and whistles that never even sees dirt or mud, let alone gets used for its intended purpose as a heavy-duty vehicle. There are Linux Bros who swear that they "need" an Enterprise-grade Linux to run their daily driver computer when they barely ping the graphics card because all they're doing is browsing websites.)

If you're new to all this, there are "distro pickers" out there, and you should use them: they ask you a series of questions that measure things like whether you're a techie or a newb, whether you like Mac or Windows, whether you want paid support or want to go free all the way, and whether you're okay using proprietary tech vs. open source. These are GREAT for making sure you get exactly what you're likely to use, and depending on your particular desire to deep-dive into the world of open source software, you could wind up picking a newbie's distro and sticking with it the rest of your life, or you might wind up hopping to a new distro after you've learned more.

I've most closely aligned with OpenSuSE (originally just "SuSE" until they came out with a paid support branch and had to distinguish the free vs. paid versions) nearly my entire time using Linux. I've tried Red Hat/Fedora, Mint, Ubuntu, and a few other distros, but I keep coming back to OpenSuSE for my personal use needs (and, sometimes, for setting up a server for a business).

I found this distro picker after a few seconds' search on DuckDuckGo:

I took the test sight-unseen (I have never used this one before), and sure enough the first result was OpenSuSE. Interestingly enough, on the ranked choices for the distros it recommended, Mint did come up at #8.

You WILL be able to find support, though sometimes you have to remind the old guard that, no matter how many times they tell you to RTFM, there is, in fact, not always a manual to fucking read.

Once you've really grokked the liberating feeling of knowing you're running your computing experience on a platform that respects your boundaries, doesn't strain your hardware with every update, and is hardened from most (most) malware threats by default, you'll never want to go back.

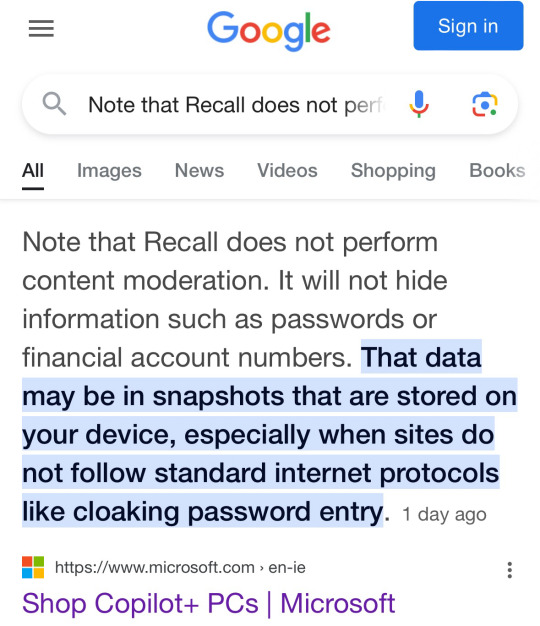

Literal definition of spyware:

Also, from Microsoft’s own FAQ: "Note that Recall does not perform content moderation. It will not hide information such as passwords or financial account numbers." 🤡


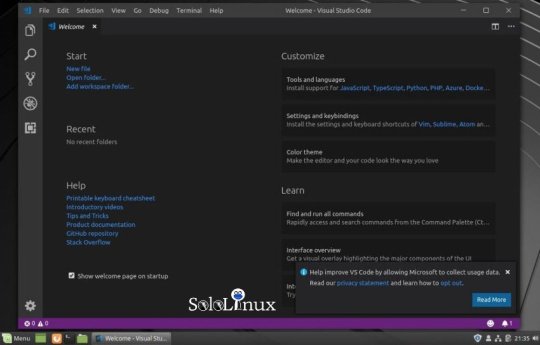

Install VSCodium 1.70.0 on Ubuntu / Fedora & Alma Linux

This tutorial will help beginners install VSCodium 1.70.0 on Ubuntu 22.04 LTS, Linux Mint 21, Pop!_OS 22.04 LTS, Fedora 36, Alma Linux 9 and Rocky Linux 9. VSCodium is a community-driven project that provides free/libre open-source binaries of the current VS Code release. VSCodium is not a fork: it automatically builds Microsoft's vscode repository into freely licensed binaries with a community-driven default configuration.

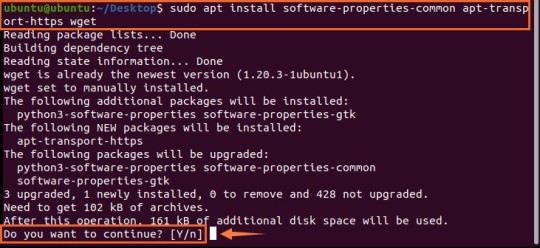

Install VSCodium 1.70.0 on Ubuntu / Linux Mint

Step 1: Make sure the system is up to date: sudo apt update && sudo apt upgrade

Step 2: Add the VSCodium GPG key: wget -qO - https://gitlab.com/paulcarroty/vscodium-deb-rpm-repo/raw/master/pub.gpg | gpg --dearmor | sudo dd of=/usr/share/keyrings/vscodium-archive-keyring.gpg

Step 3: Add the repository, pointing it at that keyring: echo 'deb [ signed-by=/usr/share/keyrings/vscodium-archive-keyring.gpg ] https://download.vscodium.com/debs vscodium main' | sudo tee /etc/apt/sources.list.d/vscodium.list

Step 4: Install VSCodium on Ubuntu / Linux Mint: sudo apt update && sudo apt install codium
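The key in Step 2 is saved under /usr/share/keyrings/, a directory APT does not trust automatically, so the repository entry has to name that keyring with a signed-by option. The sketch below, built around a hypothetical apt_source_line helper, shows how such a one-line entry is assembled. It only prints the line; writing it into /etc/apt/sources.list.d/ still requires root, for example by piping it to sudo tee.

```python
from typing import Optional, Sequence


def apt_source_line(uri: str, suite: str, components: Sequence[str],
                    signed_by: Optional[str] = None,
                    arch: Optional[str] = None) -> str:
    """Build a one-line-style APT source entry, as used in *.list files."""
    if not components:
        raise ValueError("at least one component (e.g. 'main') is required")
    options = []
    if arch:
        options.append(f"arch={arch}")
    if signed_by:
        # Only keys in this file are accepted for this repository.
        options.append(f"signed-by={signed_by}")
    option_block = f"[{' '.join(options)}] " if options else ""
    return f"deb {option_block}{uri} {suite} {' '.join(components)}"


if __name__ == "__main__":
    print(apt_source_line(
        "https://download.vscodium.com/debs",
        "vscodium",
        ["main"],
        signed_by="/usr/share/keyrings/vscodium-archive-keyring.gpg",
    ))
```

Scoping the key with signed-by means a key added for VSCodium cannot be used to sign packages from any other repository.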

Install VSCodium 1.70.0 on Fedora / Alma Linux / RHEL

Step 1: Make sure the system is up to date.

Step 2: Add the GPG key to your system: sudo rpmkeys --import https://gitlab.com/paulcarroty/vscodium-deb-rpm-repo/-/raw/master/pub.gpg

Step 3: Add the repository: cat >> /etc/yum.repos.d/vscodium.repo

Read the full article


Visual Studio Code Apt

Visit the VS Code install page and select the 32-bit or 64-bit installer. Install Visual Studio Code on Windows (not in your WSL file system). When prompted to Select Additional Tasks during installation, be sure to check the Add to PATH option so you can easily open a folder in WSL using the code command.

Visual Studio Code is a free, cross-platform code editor that lets developers write applications in a myriad of programming languages, such as C, C++, Python, Go and Java, to mention a few.

To install Visual Studio Code on Debian, Ubuntu and Linux Mint:

1. Update your system by running sudo apt update.

2. Install the downloaded Visual Studio Code DEB package file (here named code.deb) with APT, from the directory that contains it: sudo apt install ./code.deb

The APT package manager should start installing the DEB package file. At this point, Visual Studio Code should be installed.


Installation

See the Download Visual Studio Code page for a complete list of available installation options.

By downloading and using Visual Studio Code, you agree to the license terms and privacy statement.

Snap

Visual Studio Code is officially distributed as a Snap package in the Snap Store.

You can install it by running: sudo snap install --classic code

Once installed, the Snap daemon will take care of automatically updating VS Code in the background. You will get an in-product update notification whenever a new update is available.

Note: If snap isn't available in your Linux distribution, please check the following Installing snapd guide, which can help you get that set up.

Learn more about snaps from the official Snap Documentation.

Debian and Ubuntu based distributions

The easiest way to install Visual Studio Code for Debian/Ubuntu based distributions is to download and install the .deb package (64-bit), either through the graphical software center if it's available, or through the command line with: sudo apt install ./<file>.deb

Note that other binaries are also available on the VS Code download page.

Installing the .deb package will automatically install the apt repository and signing key to enable auto-updating using the system's package manager. Alternatively, the repository and key can also be installed manually with the following script:

Then update the package cache and install the package using: sudo apt update, followed by sudo apt install code

RHEL, Fedora, and CentOS based distributions

We currently ship the stable 64-bit VS Code in a yum repository; the following script will install the key and repository:

Then update the package cache and install the package using dnf (Fedora 22 and above): run dnf check-update, then sudo dnf install code

Or, on older versions, using yum: run yum check-update, then sudo yum install code

Due to the manual signing process and the system we use to publish, the yum repo may lag behind and not get the latest version of VS Code immediately.

openSUSE and SLE-based distributions

The yum repository above also works for openSUSE and SLE-based systems; the following script will install the key and repository:

Then update the package cache and install the package using: sudo zypper refresh, then sudo zypper install code

AUR package for Arch Linux

There is a community-maintained Arch User Repository package for VS Code.

To get more information about the installation from the AUR, please consult the following wiki entry: Install AUR Packages.

Nix package for NixOS (or any Linux distribution using Nix package manager)

There is a community-maintained VS Code Nix package in the nixpkgs repository. To install it using Nix, set the allowUnfree option to true in your config.nix and execute: nix-env -i vscode

Installing .rpm package manually

The VS Code .rpm package (64-bit) can also be downloaded and installed manually; however, auto-updating won't work unless the repository above is installed. Once downloaded, it can be installed using your package manager, for example with dnf: sudo dnf install <file>.rpm

Note that other binaries are also available on the VS Code download page.

Updates

VS Code ships monthly and you can see when a new release is available by checking the release notes. If the VS Code repository was installed correctly, then your system package manager should handle auto-updating in the same way as other packages on the system.

Note: Updates are automatic and run in the background for the Snap package.

Node.js

Node.js is a popular platform and runtime for easily building and running JavaScript applications. It also includes npm, a Package Manager for Node.js modules. You'll see Node.js and npm mentioned frequently in our documentation and some optional VS Code tooling requires Node.js (for example, the VS Code extension generator).

If you'd like to install Node.js on Linux, see Installing Node.js via package manager to find the Node.js package and installation instructions tailored to your Linux distribution. You can also install and support multiple versions of Node.js by using the Node Version Manager.

To learn more about JavaScript and Node.js, see our Node.js tutorial, where you'll learn about running and debugging Node.js applications with VS Code.

Setting VS Code as the default text editor

xdg-open

You can set the default text editor for text files (text/plain) that is used by xdg-open with the following command: xdg-mime default code.desktop text/plain

Debian alternatives system

Debian-based distributions allow setting a default editor using the Debian alternatives system, without concern for the MIME type. You can set this by running the following and selecting code: sudo update-alternatives --config editor

If Visual Studio Code doesn't show up as an alternative to editor, you need to register it: sudo update-alternatives --install /usr/bin/editor editor $(which code) 10

Windows as a Linux developer machine

Another option for Linux development with VS Code is to use a Windows machine with the Windows Subsystem for Linux (WSL).

Windows Subsystem for Linux


With WSL, you can install and run Linux distributions on Windows. This enables you to develop and test your source code on Linux while still working locally on a Windows machine. WSL supports Linux distributions such as Ubuntu, Debian, SUSE, and Alpine available from the Microsoft Store.

When coupled with the Remote - WSL extension, you get full VS Code editing and debugging support while running in the context of a Linux distro on WSL.

See the Developing in WSL documentation to learn more or try the Working in WSL introductory tutorial.

Next steps

Once you have installed VS Code, these topics will help you learn more about it:

Additional Components - Learn how to install Git, Node.js, TypeScript, and tools like Yeoman.

User Interface - A quick orientation to VS Code.

User/Workspace Settings - Learn how to configure VS Code to your preferences through settings.

Common questions

Azure VM Issues

I'm getting a 'Running without the SUID sandbox' error?

You can safely ignore this error.

Debian and moving files to trash

If you see an error when deleting files from the VS Code Explorer on the Debian operating system, it might be because the trash implementation that VS Code is using is not there.

Run these commands to solve this issue:

Conflicts with VS Code packages from other repositories

Some distributions, for example Pop!_OS, provide their own code package. To ensure the official VS Code repository is used, create a file named /etc/apt/preferences.d/code with the following content:

Package: code
Pin: origin "packages.microsoft.com"
Pin-Priority: 9999

'Visual Studio Code is unable to watch for file changes in this large workspace' (error ENOSPC)

When you see this notification, it indicates that the VS Code file watcher is running out of handles because the workspace is large and contains many files. Before adjusting platform limits, make sure that potentially large folders, such as Python .venv, are added to the files.watcherExclude setting (more details below). The current limit can be viewed by running: cat /proc/sys/fs/inotify/max_user_watches

The limit can be increased to its maximum by editing /etc/sysctl.conf (except on Arch Linux, read below) and adding this line to the end of the file: fs.inotify.max_user_watches=524288

The new value can then be loaded in by running sudo sysctl -p.

While 524,288 is the maximum number of files that can be watched, if you're in an environment that is particularly memory constrained, you may wish to lower the number. Each file watch takes up 1080 bytes, so assuming that all 524,288 watches are consumed, that results in an upper bound of around 540 MiB.
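The arithmetic above can be checked with a short sketch: 524,288 watches × 1,080 bytes = 566,231,040 bytes, which is exactly 540 MiB. The hypothetical helpers below compute that bound for any limit and read the current value from /proc/sys/fs/inotify/max_user_watches. The 1,080-byte figure is the one quoted in the text; actual kernel usage may differ.

```python
from pathlib import Path
from typing import Optional

# Per-watch cost quoted in the VS Code text; real kernel usage may vary.
BYTES_PER_WATCH = 1080
MAX_USER_WATCHES = Path("/proc/sys/fs/inotify/max_user_watches")


def watch_memory_mib(watches: int, bytes_per_watch: int = BYTES_PER_WATCH) -> float:
    """Upper bound on memory used if every allowed watch is consumed, in MiB."""
    return watches * bytes_per_watch / 2**20


def current_limit(path: Path = MAX_USER_WATCHES) -> Optional[int]:
    """Read the current per-user watch limit, or None if it cannot be read."""
    try:
        return int(path.read_text().strip())
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    limit = current_limit()
    if limit is None:
        print("fs.inotify.max_user_watches is not readable on this system")
    else:
        print(f"current limit {limit}: up to {watch_memory_mib(limit):.0f} MiB")
    print(f"limit 524288: up to {watch_memory_mib(524288):.0f} MiB")
```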

Arch-based distros (including Manjaro) require you to change a different file; follow these steps instead.

Another option is to exclude specific workspace directories from the VS Code file watcher with the files.watcherExclude setting. The default for files.watcherExclude excludes node_modules and some folders under .git, but you can add other directories that you don't want VS Code to track.
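As a minimal sketch of that option, the hypothetical helper below merges extra glob patterns (for example a Python **/.venv/** folder) into the files.watcherExclude map of a settings dictionary without discarding existing entries. The settings path ~/.config/Code/User/settings.json is an assumption for a standard Linux install, and Python's json module cannot read settings files that contain comments or trailing commas, so update_settings_file is illustrative only; the demo merely prints the result.

```python
import json
from pathlib import Path
from typing import Iterable

# Assumed location of VS Code's user settings on Linux.
SETTINGS = Path.home() / ".config" / "Code" / "User" / "settings.json"


def add_watcher_excludes(settings: dict, patterns: Iterable[str]) -> dict:
    """Return a copy of `settings` with each glob added to files.watcherExclude."""
    merged = dict(settings)
    excludes = dict(merged.get("files.watcherExclude", {}))
    for pattern in patterns:
        excludes[pattern] = True
    merged["files.watcherExclude"] = excludes
    return merged


def update_settings_file(path: Path, patterns: Iterable[str]) -> None:
    """Rewrite a plain-JSON settings file; fails on comments or trailing commas."""
    current = json.loads(path.read_text()) if path.exists() else {}
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(add_watcher_excludes(current, patterns), indent=4) + "\n")


if __name__ == "__main__":
    example = {"editor.fontSize": 14}
    print(json.dumps(add_watcher_excludes(example, ["**/.venv/**"]), indent=4))
    # To apply for real: update_settings_file(SETTINGS, ["**/.venv/**"])
```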

I can't see Chinese characters in Ubuntu

We're working on a fix. In the meantime, open the application menu, then choose File > Preferences > Settings. In the Text Editor > Font section, set 'Font Family' to Droid Sans Mono, Droid Sans Fallback. If you'd rather edit the settings.json file directly, set editor.fontFamily as shown: "editor.fontFamily": "Droid Sans Mono, Droid Sans Fallback"

Package git is not installed

This error can appear during installation and is typically caused by the package manager's lists being out of date. Try updating them with sudo apt-get update and then installing again.

The code bin command does not bring the window to the foreground on Ubuntu

Running code . on Ubuntu when VS Code is already open in the current directory will not bring VS Code into the foreground. This is a feature of the OS which can be disabled using ccsm.

Under General > General Options > Focus & Raise Behaviour, set 'Focus Prevention Level' to 'Off'. Remember this is an OS-level setting that will apply to all applications, not just VS Code.


Cannot install .deb package due to '/etc/apt/sources.list.d/vscode.list: No such file or directory'

This can happen when sources.list.d doesn't exist or you don't have access to create the file. To fix this, try manually creating the folder and an empty vscode.list file: sudo mkdir /etc/apt/sources.list.d, then sudo touch /etc/apt/sources.list.d/vscode.list

Cannot move or resize the window while X forwarding a remote window

If you are using X forwarding to use VS Code remotely, you will need to use the native title bar to ensure you can properly manipulate the window. You can switch to using it by setting window.titleBarStyle to native.

Using the custom title bar

The custom title bar and menus were enabled by default on Linux for several months. The custom title bar has been a success on Windows, but the customer response on Linux suggests otherwise. Based on feedback, we have decided to make this setting opt-in on Linux and leave the native title bar as the default.


The custom title bar provides many benefits including great theming support and better accessibility through keyboard navigation and screen readers. Unfortunately, these benefits do not translate as well to the Linux platform. Linux has a variety of desktop environments and window managers that can make the VS Code theming look foreign to users. For users needing the accessibility improvements, we recommend enabling the custom title bar when running in accessibility mode using a screen reader. You can still manually set the title bar with the Window: Title Bar Style (window.titleBarStyle) setting.

Broken cursor in editor with display scaling enabled

Due to an upstream issue #14787 with Electron, the mouse cursor may render incorrectly with scaling enabled. If you notice that the usual text cursor is not being rendered inside the editor as you would expect, try falling back to the native menu bar by configuring the setting window.titleBarStyle to native.

Repository changed its origin value

If you receive an error similar to the following:

Use apt instead of apt-get and you will be prompted to accept the origin change: sudo apt update


AppArmor vs SELinux

To strengthen the security provided by the standard "ugo/rwx" permissions and access control lists, the United States National Security Agency (NSA) developed a mandatory access control system that we all know as SELinux (Security-Enhanced Linux). AppArmor, on the other hand, was created as a proprietary product by the company Immunix. Novell acquired AppArmor and released it to the community as open source; today Canonical leads its development and maintenance.

RHEL, CentOS and Fedora are the best-known Linux distributions that use SELinux by default. Ubuntu, Linux Mint and openSUSE, on the other hand, are among the prominent distributions that use AppArmor (openSUSE developed its own GUI for managing the tool's rules easily).

It would be very hard to make a realistic comparison of the two tools. Both have the same goal, but they work very differently, so we will briefly explain each one and you can decide which suits you, since both can be used with practically any Linux distribution.

AppArmor

One thing I really like about AppArmor is its learning mode: it can work out automatically how our system is supposed to behave. Instead of policies managed through commands, AppArmor uses profiles defined in text files that are very easy to modify. You can find the predefined profiles (and you can add more) with the following commands: cd /etc/apparmor.d and then dir

Example of editing a profile: nano usr.bin.firefox

Going deep into SELinux configuration is complex and requires intermediate to advanced knowledge. That is why AppArmor was created: it can do much the same, but in a much simpler and less risky way (if you make a mistake, you edit the file again or disable the profile).

To disable a profile (Firefox in this example), link it into the disable directory so it is not loaded at boot, then unload it from the running kernel:

sudo ln -s /etc/apparmor.d/usr.bin.firefox /etc/apparmor.d/disable/

sudo apparmor_parser -R /etc/apparmor.d/usr.bin.firefox

AppArmor offers two predefined security modes, complain and enforce. Switching a profile to one of these modes is also simple. In this example we change the profile "usr.sbin.ntpd". Complain mode: aa-complain /etc/apparmor.d/usr.sbin.ntpd. Enforce mode: aa-enforce /etc/apparmor.d/usr.sbin.ntpd

We can also check AppArmor's current status and list the enabled profiles: apparmor_status
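If you want to check the status programmatically, the sketch below counts profiles per mode in apparmor_status output. It assumes summary lines of the form "40 profiles are in enforce mode.", which may be worded differently in other AppArmor versions; profile_counts is a hypothetical helper, not part of AppArmor, and the full status usually requires root.

```python
import re
import subprocess

# Assumed shape of the summary lines, e.g. "40 profiles are in enforce mode."
# The exact wording can differ between AppArmor versions.
MODE_LINE = re.compile(r"^\s*(\d+) profiles are in (\w+) mode\.\s*$")


def profile_counts(status_output: str) -> dict:
    """Map each mode name (enforce, complain, ...) to its profile count."""
    counts = {}
    for line in status_output.splitlines():
        match = MODE_LINE.match(line)
        if match:
            counts[match.group(2)] = int(match.group(1))
    return counts


if __name__ == "__main__":
    try:
        result = subprocess.run(["apparmor_status"], capture_output=True, text=True)
    except FileNotFoundError:
        print("apparmor_status is not installed on this system")
    else:
        print(profile_counts(result.stdout))
```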

For more information about AppArmor, visit the Ubuntu wiki.

SELinux

Because of the way it works, SELinux can be much stricter than AppArmor; many tools and web control panels even recommend disabling it to avoid problems. Like AppArmor, SELinux (Security-Enhanced Linux) has two protection modes:

enforcing: SELinux denies access according to the loaded policy rules.

permissive: SELinux does not block access, but denials are logged for later analysis.

You can check which mode you are using with the command: getenforce

We can change the mode (or even disable SELinux) in its configuration file: nano /etc/selinux/config

Change the line SELINUX=xxx to one of the following values: enforcing, permissive or disabled.

Save the file, close the editor and restart the system: reboot

Then check the SELinux status: sestatus
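Editing the SELINUX= line can also be scripted. This minimal sketch reads and rewrites the mode in the text of /etc/selinux/config; read_mode and set_mode are hypothetical helpers that skip comment lines and deliberately ignore the separate SELINUXTYPE= key. Writing the result back to /etc/selinux/config requires root, and a reboot is still needed for the change to take effect.

```python
VALID_MODES = ("enforcing", "permissive", "disabled")


def _is_selinux_line(line: str) -> bool:
    stripped = line.strip()
    if stripped.startswith("#"):
        return False
    key, sep, _ = stripped.partition("=")
    # Compare the whole key so SELINUXTYPE= is not mistaken for SELINUX=.
    return bool(sep) and key.strip() == "SELINUX"


def read_mode(config_text: str) -> str:
    """Return the SELINUX= value from the text of /etc/selinux/config."""
    for line in config_text.splitlines():
        if _is_selinux_line(line):
            mode = line.partition("=")[2].strip().lower()
            if mode not in VALID_MODES:
                raise ValueError(f"unexpected SELINUX value: {mode!r}")
            return mode
    raise ValueError("no SELINUX= line found")


def set_mode(config_text: str, mode: str) -> str:
    """Return the config text with SELINUX= replaced (appended if missing)."""
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {VALID_MODES}")
    lines = config_text.splitlines()
    for i, line in enumerate(lines):
        if _is_selinux_line(line):
            lines[i] = f"SELINUX={mode}"
            break
    else:
        lines.append(f"SELINUX={mode}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    sample = "# comment\nSELINUX=enforcing\nSELINUXTYPE=targeted\n"
    print(read_mode(set_mode(sample, "permissive")))
```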

SELinux usage examples

One of the most common operations is changing the SSH port (22 by default): SELinux has to be told that SSH is moving to a new port, for example 123:

semanage port -a -t ssh_port_t -p tcp 123

Another example is allowing a different directory to be served by the web server:

semanage fcontext -a -t httpd_sys_content_t "/srv/www(/.*)?"

restorecon -R -v /srv/www

You can find more information on its official wiki.

Conclusion

Both security systems covered in this article give us tools to isolate applications, and a potential attacker, from the rest of the system when an application is compromised. SELinux rule sets are extremely complex, but in exchange they give more control over how processes are isolated. Policies can be generated automatically, yet managing them is complicated unless you are an expert. AppArmor is easier to use: profiles can be written by hand in your favorite editor or generated with aa-logprof. AppArmor uses path-based access control, which makes the system more transparent and independently verifiable. The choice is yours.

Read the full article


2018-04-06 12 LINUX now

LINUX

Linux Academy Blog

Introducing the Identity and Access Management (IAM) Deep Dive

Spring Content Releases – Week 1 Livestream Recaps

Announcing Google App Engine Deep Dive

Employee Spotlight: Favian Ramirez, Business Development Representative

Say hello to our new Practice Exams system!

Linux Insider

Bluestar Gives Arch Linux a Celestial Glow

Mozilla Trumpets Altered Reality Browser

Microsoft Offers New Tool to Grow Linux in Windows

New Firefox Extension Builds a Wall Around Facebook

Neptune 5: A Practically Perfect Plasma-Based Distro

Linux Journal

Tackling L33t-Speak

Subutai Blockchain Router v2.0, NixOS New Release, Slimbook Curve and More

VIDEO: When Linux Demos Go Wrong

How Wizards and Muggles Break Free from the Matrix

Richard Stallman's Privacy Proposal, Valve's Commitment to Linux, New WordPress Update and More

Linux Magazine

Solomon Hykes Leaves Docker

Red Hat Celebrates 25th Anniversary with a New Code Portal

Gnome 3.28 Released

Install Firefox in a Snap on Linux

OpenStack Queens Released

Linux Today

How to Install Ansible AWX on CentOS 7

Tips To Speed Up Ubuntu Linux

How to install Kodi on Your Raspberry Pi

Open Source Accounting Program GnuCash 3.0 Released With a New CSV Importer Tool Rewritten in C++

Linux Mint vs. MX Linux: What's Best for You?

Linux.com

Linux Kernel Developer: Steven Rostedt

Cybersecurity Vendor Selection: What Needs to Be in a Good Policy

5 Things to Know Before Adopting Microservice and Container Architectures

Why You Should Use Column-Indentation to Improve Your Code’s Readability

Learn Advanced SSH Commands with the New Cheat Sheet

Reddit Linux

A top Linux security programmer, Matthew Garrett, has discovered Linux in Symantec's Norton Core Router. It appears Symantec has violated the GPL by not releasing its router's source code.

Unboxing the HiFive Unleashed, RISC-V GNU/Linux Board

patch runs ed, and ed can run anything

Open source is under attack from new EU copyright laws. Learn How the EU's Copyright Reform Threatens Open Source--and How to Fight It

Long overdue for a Linux phone, don't you agree?

Riba Linux

How to install Antergos 18.4 "KDE"

Antergos 18.4 "KDE" overview | For Everyone

SimbiOS 18.0 (Ocean) - Cinnamon | Meet SimbiOS.

How to install Archman Xfce 18.03

Archman Xfce 18.03 overview

Slashdot Linux

The FCC Is Refusing To Release Emails About Ajit Pai's 'Harlem Shake' Video

Motorola's Modular Smartphone Dream Is Too Young To Die

Microsoft Modifies Open-Source Code, Blows Hole In Windows Defender

Secret Service Warns of Chip Card Scheme

Coinbase Launches Early-Stage Venture Fund

Softpedia

LibreOffice 6.0.3

Fedora 27 / 28 Beta

OpenBSD 6.3

RaspArch 180402

4MLinux 24.1 / 25.0 Beta

Tecmint

GraphicsMagick – A Powerful Image Processing CLI Tool for Linux

Manage Your Passwords with RoboForm Everywhere: 5-Year Subscriptions

Gerbera – A UPnP Media Server That Lets You Stream Media on Home Network

Android Studio – A Powerful IDE for Building Apps for All Android Devices

System Tar and Restore – A Versatile System Backup Script for Linux

nixCraft

OpenBSD 6.3 released ( Download of the day )

Book review: Ed Mastery

Linux/Unix desktop fun: sl – a mirror version of ls

Raspberry PI 3 model B+ Released: Complete specs and pricing

Debian Linux 9.4 released and here is how to upgrade it


Comprehensive Guide to Choosing a Linux Distribution: Everything You Need to Know

1. Introduction

A brief introduction to the Linux world

Linux, strictly speaking an open-source kernel, has become a solid foundation for a wide variety of distributions, each tailored to different needs and users.

Why choosing the right distribution matters

Choosing the right Linux distribution can considerably improve the user experience. The decision affects ease of use, system stability and software availability, among other factors.

2. What Is a Linux Distribution?

Definition

A Linux distribution is an operating system made up of the Linux kernel, system software and applications, all packaged to offer a specific user experience.

Key components of a distribution

Linux kernel: the core that interacts directly with the hardware.

Desktop environment: the graphical interface (GNOME, KDE, Xfce, etc.).

Package managers: tools to install, update and manage software (APT, YUM, Pacman, etc.).

How different distributions come about

Linux distributions usually derive from common bases such as Debian, Red Hat or Arch, adapted to different philosophies, stability levels and purposes.
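One practical way to see which base a distribution derives from is the /etc/os-release file. Its ID and ID_LIKE fields name the distribution and the distributions it resembles. Below is a minimal sketch with a hypothetical helper. The family labels (debian, redhat, arch) and the short mapping are illustrative choices, not a complete list.

```shell
#!/bin/sh
# Sketch: guess the family (debian, redhat, arch) a distribution belongs to
# from the ID and ID_LIKE fields of an os-release file. The family labels
# and the short mapping below are illustrative, not exhaustive.
distro_family() {
    file="${1:-/etc/os-release}"
    [ -r "$file" ] || { echo "unknown"; return 1; }
    # Source the file in a subshell so its variables don't leak into ours;
    # os-release is designed to be shell-sourceable.
    ids=$(. "$file"; echo "${ID:-} ${ID_LIKE:-}")
    # Check the distro itself first, then the parents it declares
    for id in $ids; do
        case "$id" in
            debian|ubuntu)      echo "debian"; return 0 ;;
            rhel|centos|fedora) echo "redhat"; return 0 ;;
            arch)               echo "arch";   return 0 ;;
        esac
    done
    echo "unknown"
    return 1
}
```

On Linux Mint, for instance, ID_LIKE names ubuntu, so distro_family prints debian. On Rocky Linux 8, ID_LIKE lists rhel, so it prints redhat.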

3. Types of Linux Distributions

Debian-based distributions

Main characteristics: stability, large community, long-term support.

Popular examples: Ubuntu, Linux Mint.

Red Hat-family distributions

Main characteristics: enterprise focus, robustness, commercial support.

Popular examples: Fedora, CentOS, RHEL.

Arch-based distributions

Main characteristics: customization, simplicity, aimed at advanced users.

Popular examples: Arch Linux, Manjaro.

Specialized distributions

For servers: CentOS, Ubuntu Server.

For older hardware: Puppy Linux, Lubuntu.

For security: Kali Linux, Parrot OS.

For developers: Pop!_OS, Fedora Workstation.

4. Key Factors to Consider When Choosing a Distribution

User experience

Some distributions are designed to be friendly and easy to use (e.g. Linux Mint), while others require advanced knowledge (e.g. Arch Linux).

Hardware compatibility

Make sure the distribution supports your hardware, especially on older computers.

Package management

Simple software installation and updates are essential, and this is where package managers come in.

Update frequency

Rolling release (continuous updates, as in Arch Linux) vs. fixed releases (stable cycles, as in Ubuntu).
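Package management and update frequency look different in practice on each family. Here is a minimal sketch with a hypothetical helper that maps a family label (debian, redhat or arch, labels chosen for illustration) to the usual install and full-upgrade commands. It assumes current tools. Older Red Hat-family releases such as CentOS 7 use yum rather than dnf.

```shell
#!/bin/sh
# Sketch: print the usual command for an action on a distribution family.
# Family labels (debian, redhat, arch) are illustrative; actions are
# "install <pkg>" and "upgrade" (full system update).
pkg_cmd() {
    family="$1"; action="$2"; pkg="${3:-}"
    if [ "$action" = "install" ] && [ -z "$pkg" ]; then
        echo "install needs a package name" >&2
        return 1
    fi
    # On a rolling release (arch) the full "pacman -Syu" is the normal update
    case "$family:$action" in
        debian:install) echo "sudo apt install $pkg" ;;
        debian:upgrade) echo "sudo apt update && sudo apt upgrade" ;;
        redhat:install) echo "sudo dnf install $pkg" ;;
        redhat:upgrade) echo "sudo dnf upgrade" ;;
        arch:install)   echo "sudo pacman -S $pkg" ;;
        arch:upgrade)   echo "sudo pacman -Syu" ;;
        *) echo "unsupported: $family $action" >&2; return 1 ;;
    esac
}
```

For example, pkg_cmd debian install vlc prints sudo apt install vlc, and pkg_cmd arch upgrade prints sudo pacman -Syu.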

Desktop environment

The desktop environment shapes the user's visual and functional experience. GNOME, KDE and Xfce are among the most common.

Intended use

Choose a distribution that matches what the system will be used for: office work, development, servers or security.

5. Comparison of Popular Distributions

Ubuntu vs. Fedora

Goal: Ubuntu focuses on ease of use for end users, while Fedora drives the adoption of newer technologies and serves as the basis for Red Hat Enterprise Linux.

Philosophy: Ubuntu is built on simplicity and accessibility, while Fedora follows the "Freedom, Friends, Features, First" philosophy, prioritizing innovation.

Debian vs. Arch Linux

Goal: Debian prioritizes stability and security, making it ideal for servers, while Arch Linux targets users who want a customized, constantly updated system.

Philosophy: Debian adheres to free software and stability, while Arch follows the "Keep It Simple, Stupid" (KISS) principle, offering a base system to build on according to the user's needs.

Kali Linux vs. Ubuntu

Goal: Kali Linux is designed for penetration testing and security audits, while Ubuntu is a general-purpose desktop distribution.

Philosophy: Kali Linux follows a philosophy of security and extreme specialization, while Ubuntu promotes an accessible, friendly experience for everyone.

Manjaro vs. CentOS

Goal: Manjaro aims to combine Arch's customizability with ease of use, while CentOS is a stable, robust choice for servers.

Philosophy: Manjaro is for users who want the latest technology with a gentler learning curve, while CentOS follows a philosophy of long-term stability and durability in enterprise environments.

6. How to Install and Try Linux Distributions

Ways to try distributions

Live USB/CD: runs the distribution without installing it.

Virtual machine: use software such as VirtualBox or VMware to try distributions without modifying your main system.

Step-by-step installation guide

Preparing the installation media: create a bootable USB stick with tools such as Rufus or Etcher.

System configuration during installation: set up partitions, and select the desktop environment and the bootloader.

Post-installation: update the system, install drivers and customize the environment.
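Before the "preparing the installation media" step, it is worth checking that the downloaded image is intact. Below is a minimal sketch with a hypothetical helper. It assumes GNU coreutils sha256sum and a SHA256SUMS-style file with "<hash>  <filename>" lines, which many distributions publish. Formats vary, so check your distro's download page.

```shell
#!/bin/sh
# Sketch: check a downloaded ISO against the distribution's SHA256SUMS file
# before writing it to a USB stick. Assumes GNU coreutils sha256sum and a
# checksum file with "<hash>  <filename>" lines (filenames without spaces).
verify_iso() {
    iso="$1"; sums="$2"
    name=$(basename "$iso")
    # Pick the line whose filename field (optionally "*"-prefixed) is our ISO
    line=$(awk -v n="$name" '{ f = $2; sub(/^\*/, "", f); if (f == n) print }' "$sums")
    [ -n "$line" ] || { echo "no checksum listed for $name" >&2; return 1; }
    # sha256sum -c reads the checklist from stdin ("-") and exits non-zero
    # if the hash differs; run it next to the ISO so the name resolves.
    (cd "$(dirname "$iso")" && printf '%s\n' "$line" | sha256sum -c --quiet -)
}
```

Only after the check passes would you write the image, with Rufus or Etcher, or on Linux with something like sudo dd if=distro.iso of=/dev/sdX bs=4M status=progress conv=fsync. Here /dev/sdX is a placeholder for the USB device. Double-check it, because dd overwrites the target without asking.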

7. Recommended Distributions for Different Users

Beginners: Ubuntu, Linux Mint.

Intermediate users: Fedora, Manjaro.

Advanced users: Arch Linux, Debian.

Server administrators: CentOS, Ubuntu Server.

Developers and IT professionals: Fedora, Pop!_OS.

Security enthusiasts: Kali Linux, Parrot OS.

8. Conclusion

Summary of key points

Choosing the right Linux distribution depends on several factors, including the user's experience, the intended use and personal preferences.

Final recommendations

Experimenting with different distributions through a Live USB or virtual machines is the best way to find the one that suits your needs.

Call to action

Try some of the distributions mentioned here and join the Linux community to keep learning and sharing.

9. Glossary

Kernel: the core of the operating system, which manages communication between hardware and software.

Desktop environment: the graphical interface used to interact with the operating system.

Package manager: a tool that makes it easier to install and manage software on a Linux distribution.

Rolling release: a development model in which software is updated continuously, without major version releases.

Fork: a project derived from another one that follows its own development path.

10. FAQ (Frequently Asked Questions)

What is the best distribution for a beginner?

Ubuntu or Linux Mint are usually the best options for new users.

Can I install Linux alongside Windows?

Yes. You can install Linux in a dual-boot setup and choose between the two systems when the computer starts.

Which distribution is best for a server?

CentOS and Ubuntu Server are popular choices for servers.

What is a rolling release distribution?

A distribution that is updated continuously instead of waiting for new versions.


This year we have seen a large influx of Linux users, and more distributions are appearing to try to pull people in. So let's talk about why 2020 is the best year for Linux (so far).

Windows 10 vs Linux

First, something to understand: there is nothing Windows 10 can do that Linux can't, while Linux can do many things Windows 10 can't. This is fantastic. Here is a simple table of the basics.

Feature | Win10 | Linux
OEM support | Yes | Yes
Functional default shells | No (!) | Yes
Ability to ignore shells | Yes | Yes
Graphical environments | Yes | Yes
No-GUI options | No | Yes
Easy software management | No (!2) | Yes
Easy customization | Yes (!3) | Yes
Dedication to low-spec machines | No (!4) | Yes
Redistribution allowed | No | Yes

(!) While PowerShell and CMD both exist, CMD is the default, and calling it "functional" is a stretch.

(!2) Partly subjective; this will be explained further.

(!3) Customization is easy, but the options are few, and the alternatives are hard to work with, if they exist at all.

(!4) While ARM support exists, support for old machines with very low-spec hardware (below 4 GB of RAM and 2 CPU cores) is poor.

This list is already fairly long, and it still doesn't cover everything, but the point is made: it covers the main things Windows 10 users might care about. That said, while Linux is the better product, it isn't easy to get preinstalled. Still, besides Linux-focused vendors such as System76 and Juno Computers, Dell, HP and others offer machines shipped with a form of Linux (usually Ubuntu or a derivative).

Now, what about phones? Linux has Android, but given how closed and locked down it is, Android is to Linux what macOS is to FreeBSD. Most phone operating systems now ship with some Linux derivative (i.e. Android); setting Android and iOS aside, KaiOS is the third largest. KaiOS targets feature phones rather than smartphones, but it is somewhat available for them. Beyond that, there is Plasma Mobile from KDE, Ubuntu Touch from UBports, a Manjaro mobile spin, Purism's PureOS, LuneOS, Tizen, Sailfish OS and many, many others, and the Volla Phone is making some noise of its own. There are also older projects to fork, like Firefox OS and webOS, plus any desktop Linux distribution paired with a mobile desktop environment, so we can plausibly expect more Linux phones.

On the bright side, there are more Linux contributors, from people who contribute to small distributions to those starting their own. In the Ubuntu world, we are seeing FOUR new desktop remixes: in order of release, Ubuntu Cinnamon, Ubuntu Deepin/DDE, Ubuntu Unity and Ubuntu Lumina. That brings the total to 11. Having 11 may seem a little odd, but it is good for Linux, mainly because it gives options to those who care. People who find Ubuntu boring can find a functionally similar flavor or remix they prefer. Depending on how well their leads advertise them, these remixes could, all by themselves, draw new users to their version of Ubuntu and, with them, to Linux.

That is a lot already, but it keeps getting better. Lenovo has been moving closer to Linux, giving an interview to LinuxForEveryone alongside someone from Fedora. So besides Lenovo, there are many Linux-specific OEMs, such as System76, Juno Computers, Tuxedo Computers and probably a million others. Manjaro has an OEM, elementary OS has an OEM, Kubuntu has an OEM, Ubuntu has a billion OEMs, and Pop!_OS is developed by an OEM. We might also get more from Lubuntu, other flavors and remixes, and other distributions like Feren OS and Linux Mint. Drauger OS might even get an OEM for gaming-focused machines.

I'm keeping this article as short as I can, since I need to focus on Ubuntu Lumina, but I want to post often so I can have fun talking with the community while I work, and maybe inspire someone.


Fewer distributions and more applications: that is what the Linux desktop needs

"We don't need more Linux distributions. Stop making distributions and make apps." It couldn't be clearer. The quote isn't mine but Alan Pope's, a well-known Canonical and Ubuntu developer. It might as well be mine, though, because I agree with it entirely. Let me explain why, along with the context in which he said it.

Make a Linux App

Make a Linux App is an initiative led by Pope to encourage idle developers to contribute to the GNU/Linux ecosystem in the right direction. That direction is not creating yet another distribution that only a handful of people (usually also idle) will care about and that nobody is asking for. Why? Because we already have plenty of distros, and very good ones. We don't need more!

What Pope proposes in Make a Linux App, by contrast, is a no-brainer. Creating an application, a good application, is harder, but it is much more beneficial for all GNU/Linux users regardless of the distribution they use. The lack of applications is not the weakest link of the Linux desktop experience, but we still lag behind Windows and Mac.

Make a Linux App explains why creating applications for Linux is good both for the ecosystem and for developers themselves. It recommends starting points, such as which framework to use, covering GNOME, KDE, elementary OS, Ubuntu Touch and (kudos for mentioning it, despite the opposition it faces from many users) Electron. It also goes over all the current distribution options, from AppImage to Flatpak, Snap and openSUSE's Open Build Service.

As it should be, Pope has been even-handed enough not to put Canonical's solutions above the rest. The initiative is also backed by the main players, meaning projects such as GNOME, KDE, elementary and UBports. Take a look at the site. It is worth it, and all the information is concise, so it won't overwhelm you with a thousand details.

The unbearable lightness of some Linux distributions

Now, why should a developer working on their own distribution, usually a rehash of a rehash, pay attention to this initiative? Because, directly and indirectly, it is what most of us users are asking for. Simple as that. Distro popularity rankings are a good example, which I will illustrate with the results of our year-end poll.

Of the 20 options available, the bulk of the votes goes to the big ones: Ubuntu, Linux Mint, Debian, Manjaro, Arch Linux, KDE neon, Fedora, Deepin, elementary OS, openSUSE… The popularity of the rest is marginal, and so, with few exceptions, is their user base. And even if we count those, many other small distros that add no value are still left out.

To give a couple of examples you will know, whose contribution is more substantial: lately we have recommended MX Linux, a Debian derivative that I find quite interesting. However, the most interesting part is not the distro itself but the tools it provides. Wouldn't it be possible to separate those tools from the distribution and offer them as a software package for Debian? The same goes for Peppermint, whose main selling point is a tool for creating web apps.

Mind you, these are two examples picked at random. As I said, their contribution is more substantial than that of others whose whole reason for existing is changing the look and adding tons of preinstalled software. Also, adding packages is not always easier for developers and users than using something that is ready from installation. Still, even these two are marginal distros whose disappearance would not affect the Linux desktop at all.

Distros as tools vs general-purpose distros

On the other hand, distributions that work as tools should not be confused with general-purpose distributions, that is, the ones we install on our PCs. Ubuntu, Fedora or Manjaro have nothing to do with Kali, Tails, Puppy, Robolinux, LibreELEC, SteamOS, SystemRescueCd… and many others. These are not meant to be everyday operating systems; they meet a specific need. This is one of GNU/Linux's great strengths, and it should not change.

Redundancy is not fragmentation

Nor should redundancy be confused with fragmentation. There is no significant fragmentation in GNU/Linux, even though some distributions contribute little or nothing, and I have already given the reason: the big ones take almost the whole pie. Going back to our year-end poll, everything Debian-based took 70% of the votes… but of that share, more than 50% was split among Ubuntu, Linux Mint and Debian. The rest went to KDE neon, Deepin, elementary OS, MX Linux, Zorin OS, and that's it.

So the main drawback of creating more general-purpose distributions is not that it causes fragmentation or harms the ecosystem: it's that it doesn't benefit it. Simple as that. Of course…

Free software is free for the good and for "the bad"

Here lies the crux of the matter. Free software is free for everything, including letting anyone who wants to build their own distribution do so, instead of contributing to other areas where the effort would be more welcome. As the illustrious Linus Torvalds once said, free or open-source software has triumphed through the kind of selfishness of "I do this because it benefits me", with the difference that the fruit is shared.

So if someone wants to make their own rehash, they will, and nobody can stop them. What we can do, and that is what Make a Linux App is about, is ask them to consider other ways of contributing, such as creating an application or perhaps helping maintain one that needs it. And there are many.

Image: Pixabay

Source: MuyLinux


How to Install Google Chrome 98 on Ubuntu / Rocky Linux & Fedora

Google Chrome is one of the most widely used web browsers in the world. It is fast, easy to use and comes with built-in security features. The newest version of Google Chrome is 98, the second browser release of the year. This release contains developer-facing changes and some user-facing differences. This tutorial shows beginners how to install Google Chrome 98 on Ubuntu 20.04 LTS, Linux Mint 20.3, Rocky Linux 8, AlmaLinux 8 and Fedora 35.

Google Chrome 98 changelog highlights:
- COLRv1 color gradient vector fonts
- Removal of SDES key exchange for WebRTC
- WritableStream controller AbortSignal
- Private Network Access preflight requests for subresources
- New window.open() popup vs. window behavior

For the complete changelog, see chromestatus.com.

Install Google Chrome 98 on Ubuntu / Linux Mint

Google Chrome can be installed on Ubuntu and Linux Mint from Google's .deb package. Open a terminal and download it:

wget https://dl.google.com/linux/direct/google-chrome-stable_current_amd64.deb

Then install it. Passing the local file to apt (rather than using plain dpkg -i) also pulls in any missing dependencies:

sudo apt install ./google-chrome-stable_current_amd64.deb
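Google's direct download only makes sense on 64-bit x86 machines, since Chrome 98 had no official Linux builds for other architectures. Here is a minimal sketch with a hypothetical helper that picks the right package URL and refuses other architectures. The .rpm URL follows the same naming pattern as the .deb URL above. Treat it as an assumption worth confirming on Google's download page.

```shell
#!/bin/sh
# Sketch: choose Google's direct-download Chrome package URL for a machine.
# Format is "deb" or "rpm"; the architecture defaults to `uname -m`.
# Google's Linux builds of Chrome 98 were 64-bit x86 only.
chrome_package_url() {
    format="$1"
    arch="${2:-$(uname -m)}"
    case "$arch" in
        x86_64|amd64) ;;
        *) echo "no official Chrome build for $arch" >&2; return 1 ;;
    esac
    base="https://dl.google.com/linux/direct"
    case "$format" in
        deb) echo "$base/google-chrome-stable_current_amd64.deb" ;;
        rpm) echo "$base/google-chrome-stable_current_x86_64.rpm" ;;
        *)   echo "unknown package format: $format" >&2; return 1 ;;
    esac
}
```

On Ubuntu or Linux Mint you could then run wget "$(chrome_package_url deb)" before the apt install step.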

Install Google Chrome 98 on Fedora

Google Chrome can be installed on Fedora from the terminal.

Step 1: Install the third-party repositories package:

sudo dnf install fedora-workstation-repositories

Step 2: Enable the Google Chrome repository:

sudo dnf config-manager --set-enabled google-chrome

Step 3: Install Google Chrome:

sudo dnf install google-chrome-stable
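As far as I know, fedora-workstation-repositories installs the google-chrome repository disabled, which is why Step 2 enables it. The quickest check afterwards is dnf repolist --enabled. If you want to inspect the .repo file directly, here is a minimal sketch with a hypothetical helper. It assumes the usual INI-style layout of files in /etc/yum.repos.d.

```shell
#!/bin/sh
# Sketch: succeed if section [$2] of the .repo file $1 has enabled set to a
# true value (1, true or yes, any case); fail otherwise.
repo_enabled() {
    file="$1"; section="$2"
    awk -v s="[$section]" '
        # Remember which [section] header we are under
        /^\[/ { cur = $0; sub(/[ \t\r]+$/, "", cur); next }
        cur == s && /^[ \t]*enabled[ \t]*=/ {
            val = $0
            sub(/^[^=]*=[ \t]*/, "", val)   # keep only the value
            sub(/[ \t\r]+$/, "", val)       # drop trailing blanks / CR
            v = tolower(val)
            found = (v == "1" || v == "true" || v == "yes")
        }
        END { exit(found ? 0 : 1) }
    ' "$file"
}
```

For example, repo_enabled /etc/yum.repos.d/google-chrome.repo google-chrome && echo enabled should print "enabled" once Step 2 has run.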

Install Google Chrome 98 on Rocky Linux 8 / AlmaLinux 8

Step 1: Create the Google Chrome repository file with the contents below. Writing to /etc/yum.repos.d requires root, hence sudo tee:

sudo tee /etc/yum.repos.d/google-chrome.repo > /dev/null <<'EOF'
[google-chrome]
name=google-chrome
baseurl=https://dl.google.com/linux/chrome/rpm/stable/x86_64
enabled=1
gpgcheck=1
gpgkey=https://dl.google.com/linux/linux_signing_key.pub
EOF

Step 2: Refresh the repository metadata:

sudo dnf makecache

Step 3: Install Google Chrome 98 with DNF:

sudo dnf install google-chrome-stable
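If you prefer to review the repository definition before it lands in /etc, here is a minimal sketch with a hypothetical helper that writes the same Step 1 contents to any path. It defaults to /etc/yum.repos.d/google-chrome.repo, which needs root.

```shell
#!/bin/sh
# Sketch: write the Google Chrome repository definition from Step 1.
# Defaults to /etc/yum.repos.d/google-chrome.repo (needs root); pass
# another path to inspect the result first.
write_chrome_repo() {
    target="${1:-/etc/yum.repos.d/google-chrome.repo}"
    # Quoted 'EOF' keeps the heredoc literal (no variable expansion)
    cat > "$target" <<'EOF'
[google-chrome]
name=google-chrome
baseurl=https://dl.google.com/linux/chrome/rpm/stable/x86_64
enabled=1
gpgcheck=1
gpgkey=https://dl.google.com/linux/linux_signing_key.pub
EOF
}
```

For example, write_chrome_repo /tmp/google-chrome.repo lets you read the file first. Then sudo install -m 644 /tmp/google-chrome.repo /etc/yum.repos.d/ puts it in place.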

Conclusion

In this tutorial, you learned how to download and install Google Chrome 98 on Ubuntu 20.04 LTS, Ubuntu 22.04, Linux Mint 20.3, Rocky Linux 8, AlmaLinux 8 and Fedora 35. Let us know your comments and feedback in the comments section below. If my articles on TipsonUNIX have helped you, please consider buying me a coffee as a token of appreciation.

Thank you for your support!


Fedora vs. Ubuntu: How are they different?

New Linux distributions keep appearing, and some users find it tedious to keep up. You may even have been asked to explain the differences between two Linux distributions. Such questions may seem strange when the distributions are Fedora and Ubuntu, which have been around for so long, but for a beginner they make sense.

Although neither distribution is new, both release new versions regularly. The latest Ubuntu release is 17.10, which came out in October of last year, and Fedora released version 27 in November 2017.

In a previous article we explained the differences between Ubuntu and Linux Mint.

If you have read it, you will see that the differences between the two distributions covered here are more marked.

1. History and development

Ubuntu's history is much better known than Fedora's. In short, Ubuntu was born in October 2004 from Debian's unstable branch. Fedora came earlier, in November 2003, and its history is a bit more tangled. The first version, Fedora Core 1, was based on Red Hat Linux 9, which officially reached end of life on April 30, 2004.

Fedora then emerged as a community-oriented alternative to Red Hat, with two main repositories: Core, maintained by Red Hat developers, and Extras, maintained by the community.

In late 2003, Red Hat Linux was merged with the community Fedora Linux project into a single community distribution, Fedora, with Red Hat Enterprise Linux as its commercially supported counterpart.

Until 2007, "Core" was part of the Fedora name, but with Fedora 7 the Core and Extras repositories were merged. Since then the distribution has simply been called Fedora.

The biggest difference so far is that the original Red Hat Linux was essentially split into Fedora and Red Hat Enterprise Linux, while Debian remains a complete entity separate from Ubuntu, which imports packages from one of Debian's branches.

Many people think Fedora is based on Red Hat Enterprise Linux (RHEL), but the reverse is true: new RHEL versions are forks of Fedora that are thoroughly tested for quality and stability before release.

For example, RHEL 7 is based on Fedora 19 and 20. The Fedora community also provides additional packages for RHEL in a repository called Extra Packages for Enterprise Linux (EPEL).

On the Ubuntu side, governance bodies include the Forums Council, the IRC Council and the Developer Membership Board. Users can apply for Ubuntu membership and volunteer as contributors on various community-organized teams.

2. Release and support cycle

Ubuntu releases a new version every six months, one in April and one in October. Every fourth release is a long-term support (LTS) release, so an LTS version comes out every two years, and each receives official support and updates for five years.

Regular releases used to be supported for 18 months, but since 2013 that period has been shortened to 9 months.

Fedora does not follow a strict release schedule. New versions usually arrive every six months and are supported for about 13 months, which is longer than Ubuntu's regular releases. Unlike Ubuntu, Fedora has no LTS releases.

3. How are versions named?

Ubuntu version numbers consist of two parts: the first is the year and the second the month of release. This tells you exactly when each version came out and when to expect the next one. For example, the latest Ubuntu release, 17.10, came out in October 2017.
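Because the version number encodes the date, it can be decoded mechanically. Here is a minimal sketch with a hypothetical helper that turns YY.MM into a year-month and flags LTS releases, using the rule that the April release of an even year is LTS. That rule matches every LTS from 8.04 onward. 6.06 LTS, released in June, is the known exception and is not handled.

```shell
#!/bin/sh
# Sketch: decode an Ubuntu version (YY.MM) into "YYYY-MM", appending " (LTS)"
# for April releases of even years (true from 8.04 on; 6.06 is not handled).
ubuntu_version_info() {
    ver="$1"
    case "$ver" in
        [0-9].[0-9][0-9]|[0-9][0-9].[0-9][0-9]) ;;
        *) echo "expected YY.MM, got: $ver" >&2; return 1 ;;
    esac
    yy=${ver%%.*}                 # year part, e.g. 17
    mm=${ver#*.}                  # month part, e.g. 10
    year=$(( 2000 + ${yy#0} ))    # drop a leading zero so it isn't read as octal
    lts=""
    if [ "$mm" = "04" ] && [ $(( year % 2 )) -eq 0 ]; then
        lts=" (LTS)"
    fi
    echo "$year-$mm$lts"
}
```

For example, ubuntu_version_info 17.10 prints 2017-10, and ubuntu_version_info 16.04 prints 2016-04 (LTS).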

Fedora uses a simpler system of whole numbers, starting with 1 for the first version; it has currently reached version 27.

Ubuntu code names consist of two words starting with the same letter: an adjective followed by an animal, often an unusual one. Mark Shuttleworth announces each name with a short introduction or anecdote. The latest stable release is Artful Aardvark; the aardvark, whose name means "earth pig", is an African burrowing mammal that feeds on ants and termites.

Fedora 20, Heisenbug (2013), was the last Fedora release with a code name. All later versions are simply called "Fedora X", where X is the release number. Before that, anyone in the community could suggest a name, which could be approved if it followed a set of rules. Approval was up to Fedora's governing board.

If Ubuntu's code names seemed eccentric, consider how Fedora chose its names. A candidate name had to bear a certain relationship to the previous one, the more unusual or novel the better.

Names could not be those of living people or trademarked terms. The names of Fedora X and Fedora X + 1 had to fit an "is a" formula, so that the following holds: X is a Y, and so is X + 1. For example, Fedora 14 was called Laughlin and Fedora 15 Lovelock; both Laughlin and Lovelock are cities in Nevada. However, Fedora X and Fedora X + 2 were not supposed to share the same relationship.

Perhaps it is now clearer why the developers decided to stop naming new versions.

4. Editions and desktop environments

Fedora has three main editions: Cloud (Atomic), Server and Workstation. The first two names are self-explanatory. Workstation is the edition most people actually use, on desktop and laptop computers (32- or 64-bit). The Fedora community also provides separate images of the three editions for ARM-based devices. There is also Fedora Rawhide, a continuously updated development version of Fedora containing the latest builds of all Fedora packages. Rawhide is a testing ground for new packages, so it is not 100% stable.

In terms of quantity, Ubuntu currently outdoes Fedora. Along with the standard desktop edition, Ubuntu offers separate products called Cloud, Server, Core and Ubuntu Touch (for mobile devices). The desktop edition supports 32-bit and 64-bit systems, and server images are available for different architectures (ARM, LinuxONE and POWER8). There is also Ubuntu Kylin, a special edition of Ubuntu for Chinese users, which started in 2010 as "Ubuntu Chinese Edition" and became an official subproject in 2013.

As for desktop environments, the main edition of Fedora 27 uses GNOME 3.26 with GNOME Shell. Ubuntu's default desktop environment (DE) used to be Unity, and other options are provided through "Ubuntu flavors", variants of Ubuntu with different desktop environments: Kubuntu (KDE), Ubuntu GNOME, Ubuntu MATE, Xubuntu (Xfce) and Lubuntu (LXDE). Starting with Ubuntu 17.10, Unity was dropped in favor of GNOME by default, together with a Wayland session as the default display server.

Fedora's equivalent to Ubuntu flavors are the Spins, or "alternative desktops". There are Spins with the KDE, Xfce, LXDE, MATE and Cinnamon desktop environments, plus a special Spin called Sugar on a Stick with a simplified learning environment. It is designed for children and schools, particularly in developing countries.

Fedora also has Labs, or "functional software bundles": collections of software for specific purposes that can be installed on an existing Fedora system or used as a standalone Linux distribution. Available Labs include Design Suite, Games, Robotics Suite, Security Lab and Scientific. Ubuntu offers something similar with Edubuntu, Mythbuntu and Ubuntu Studio, subprojects with specialized applications for education, home entertainment systems and multimedia production, respectively.

5. Packages and repositories

The most notable differences between the two distributions are found here. For package management, Fedora uses .rpm packages while Ubuntu uses .deb packages. The two formats are not compatible with each other out of the box; you have to convert them with tools such as Alien. Ubuntu has also introduced Snap packages, which Canonical presents as more secure and easier to maintain.

Apart from some binary firmware, Fedora does not include any proprietary software in its official repositories. This applies to graphics drivers, codecs and any other software restricted by patents or legal issues. A direct consequence is that Ubuntu's repositories carry more packages than Fedora's.

One of Fedora's main goals is to provide only free and open-source software, and the community encourages users to find free alternatives to their non-free applications. If you want to play DVDs or use certain patent-encumbered codecs on Fedora, you will not find support for them in the official repositories. However, third-party repositories such as RPM Fusion contain plenty of free and non-free software that you can install on Fedora.

Ubuntu aims to comply with the Debian Free Software Guidelines, but still makes many concessions. Unlike Fedora, Ubuntu includes proprietary drivers in the restricted component of its official repositories.

There is also a partner repository containing software owned by Canonical's partner vendors, such as Skype and Adobe Flash Player. You can buy commercial applications from the Ubuntu Software Center, and you can enable support for DVDs, MP3 and other popular codecs simply by installing a single package, “ubuntu-restricted-extras”, from the repository.

Fedora's Copr is a platform similar to Ubuntu's Personal Package Archives (PPAs): both let anyone upload packages and create their own repository. The difference here mirrors the general approach to software licenses: packages containing non-free components, or anything else explicitly prohibited by the Fedora Project Board, must not be uploaded.

6. Audience and Objectives

Fedora is a distribution focused on three things: innovation, community and freedom. It offers and promotes exclusively free and open source software, and emphasizes the importance of every member of the community. It is developed by the community, and users are actively encouraged to participate in the project, not only as developers but also as writers, translators, designers and public speakers (Fedora Ambassadors). There is a special project that helps women who want to contribute, with the goal of fighting prejudice and gender segregation in technology and free software circles.

In addition, Fedora is often the first, or one of the first, distributions to adopt and showcase new technologies and applications. It was one of the first distributions to integrate SELinux (Security-Enhanced Linux), to ship the GNOME 3 desktop, to use Plymouth as its boot splash application, to adopt systemd as the default init system, and to use Wayland instead of Xorg as the default display server.

Fedora developers collaborate with other distributions and upstream projects to share their updates and contributions with the rest of the Linux ecosystem. Because of this constant experimentation and innovation, Fedora is often mislabeled as an unstable, bleeding-edge distribution unsuitable for beginners and everyday use. This is one of the most widespread myths about Fedora, and the community is working hard to change this perception. Although developers and advanced users who want to try the latest features are part of Fedora's main target audience, it can be used by anyone, just like Ubuntu.

Speaking of Ubuntu, some of its objectives overlap with Fedora's. Ubuntu also strives to innovate, but takes a much more user-friendly approach. By offering an operating system for mobile devices, Ubuntu tried to gain a foothold in that market while boosting its main project, although that ambition ultimately failed.

Ubuntu is often hailed as the most popular Linux distribution, thanks to its strategy of being easy to use and simple enough for beginners and former Windows users. However, Fedora has an ace up its sleeve: Linus Torvalds, the creator of Linux, uses Fedora on his computers.

With all these points laid out, you can make the best decision for you and your computer.

The post Fedora VS Ubuntu: How are they different? appeared first on News Bodha.


Win10/F26 UEFI PITA

Trying to do a quick install of F26 onto a refurb T430. Turns out the Win10 installation must have been done in legacy BIOS mode instead of UEFI, for some reason. The quick solution was to boot and hit "Enter" > "F10" > "Startup" > "Legacy only". However, I will return to this in a couple of months and get the whole UEFI and Secure Boot chain working. So here are the relevant links for converting a BIOS installation to UEFI, etc.:

https://social.technet.microsoft.com/wiki/contents/articles/14286.converting-windows-bios-installation-to-uefi.aspx
https://winaero.com/blog/install-windows-10-using-uefi-unified-extensible-firmware-interface/
https://tutorialsformyparents.com/how-i-dual-booted-linux-mint-alongside-windows-10-on-a-uefi-system/
https://msdn.microsoft.com/en-us/library/windows/hardware/dn640535(v=vs.85).aspx#gpt_faq_gpt_have_esp
http://linuxbsdos.com/2016/12/01/dual-boot-fedora-25-windows-10-on-a-computer-with-uefi-firmware/
https://geeksocket.in/blog/dualboot-fedora-windows/
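Before converting anything, it helps to confirm which mode a system actually booted in. On Linux, the kernel creates the `/sys/firmware/efi` directory only when it was started by UEFI firmware, so checking for it is a common quick test. The minimal Python sketch below assumes a Linux host with sysfs mounted at `/sys`; the function name is hypothetical. Note that it reports how the running Linux system booted (for example, a Fedora live USB), not how an existing Windows installation was set up.

```python
import os


def booted_in_uefi_mode(sysfs_root: str = "/sys") -> bool:
    """Return True if the running Linux kernel was booted via UEFI.

    The kernel only creates <sysfs_root>/firmware/efi when it was started by
    UEFI firmware; under legacy BIOS (CSM) boot the directory is absent.
    """
    return os.path.isdir(os.path.join(sysfs_root, "firmware", "efi"))


if __name__ == "__main__":
    mode = "UEFI" if booted_in_uefi_mode() else "legacy BIOS"
    print(f"This system booted in {mode} mode.")
```

Running it from the live installer is one way to check that the installer itself was started in the same mode (UEFI or legacy) as the existing Windows installation before setting up a dual boot.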


Windows Vs Linux: Distros

Before we begin, we need to address one of the more confusing aspects of the Linux platform. While Windows has maintained a fairly standard version structure, with updates and versions split into tiers, Linux is far more complex. Originally designed by Finnish student Linus Torvalds, the Linux kernel today underpins all Linux operating systems. However, because it remains open source, the system can be tweaked and modified by anyone for their own purposes. The result is hundreds of bespoke Linux-based operating systems known as distributions, or 'distros'. This makes choosing between them far more complicated than simply picking Windows 7, Windows 8 or Windows 10.

Given the nature of open source software, these distros can vary wildly in functionality and sophistication, and many are constantly evolving. The choice can seem overwhelming, particularly as the differences between them aren't always immediately obvious. However, this also means that consumers are free to try as many different Linux distros as they like, at no cost. The most popular of these, and the closest the platform has to a 'standard' OS, is Ubuntu, which strives to make things as simple as possible for new Linux users. Other highly popular distros include Debian, Mint and Fedora, the last of which Torvalds personally uses on his own machines. There are also specialist builds that strip away features to get the most out of underpowered hardware, and distros that do the opposite and opt for fancy, graphically intensive features.


PING: Calamares, KDE Neon, Netrunner, Linux AIO Ubuntu, Linux Mint, Fedora, Liri OS…

You can tell we're fully back in the routine: this PING comes loaded with distributions like few times before, and not for lack of opportunities, but because there has been a trickle of small but interesting news items that fit best here, in our weekly link roundup. Let's get started before Saturday slips away!

Calamares 3.0. Actually, no, we don't start with a distro, but with the release of Calamares 3.0, the new version of this system installer, which has already become one of the most popular, at least among the 'peripheral distros'. It brings plenty of new features, but none particularly striking (except for developers), judging by the official announcement.

KDE Neon. They could almost have made the announcement in unison, because just hours later the KDE project's distribution debuted with Calamares 3.0 as its installer, by popular demand. More information on Jonathan Riddell's blog.

Netrunner Desktop 17.01.1. And since good things come in threes, as they say, the next to refresh its installation image to make room for Calamares 3.0 was Netrunner Desktop (based on KDE Neon). More information on the Netrunner blog.

Linux AIO Ubuntu 14.04.5. Rounding out the Linux AIO project releases we covered in last week's PING, you might be interested in grabbing the last one for Trusty Tahr: the farewell to Ubuntu 14.04 LTS as an ISO, now available in all-in-one format. More information on the Linux AIO blog.

Linux Mint 18.1 KDE Beta. The wait to close the Serena cycle is ending with the one release still pending, the KDE Plasma edition. For now it's still a beta, but the stable version shouldn't take much longer. More information on the Linux Mint blog.

Fedora LXQt? For now it's just a question, a proposal for the Fedora 26 release that still has to be discussed and that wouldn't affect the LXDE spin at all, so it's not about replacing but about adding. We'll tell you how it turns out once it's known; in the meantime, you can find out what's going on in the Fedora wiki.

Liri OS. From the "ashes" of Hawaii and Papyros comes Liri OS, a new distribution based on Qt5 and Material Design; given its predecessors' track record, we'll see how far it gets. Even so, we'll have to give them time, and for those who can't wait, a nightly alpha (!) is already available. Starting from the official announcement you'll find all the information about it.

PulseAudio 10.0. We close the distribution block with another component arguably even more important than an installer: the GNU/Linux sound server. This new version brings several changes, which you'll find listed in the release notes. All good.

Fedora vs Ubuntu vs openSUSE vs Clear Linux For Intel Steam Gaming Performance. The title says it all, and since this week we talked about the Mesa improvements for Intel graphics, these Phoronix benchmarks arrive at a good time. And for the real gaming addicts, a matchup against Windows 10 and Kabylake… sigh…

recalboxOS or Retropie? We finish with one last recommended article, a tutorial from our colleagues at MuyComputer that fans of tinkering and retro video games will love. It covers a Raspberry Pi, components, peripherals, very specific Linux distributions… You can read it in "How to build your own home arcade for under 80 euros".


Reasons to Switch to Linux

Do you prefer Windows or Linux? If you're visiting my Linux website, I think I already know the answer, but what are the reasons to switch to Linux? Linux is a 100% free operating system, that much is clear; but there are other strong reasons to move to Linux, if you haven't already, and in today's article we look at the most important ones.


Linux is free

One of the reasons you should switch to Linux is its price: whichever distribution you choose, whether Debian along with Ubuntu and Linux Mint, Red Hat derivatives such as Fedora or CentOS, Arch itself along with Manjaro, or the many others I'm leaving out, they are free. With a few exceptions or specific distributions, practically all Linux distros are not only free of charge but also open source. Not enough for you? Don't worry, there's more, because the vast majority of Linux software is distributed the same way Linux itself is. I know the "Windows crowd" will come back with the usual... what about Photoshop, what about this and that, blah, blah, blah, just empty talk. But think about it: many people come to Linux, and very few go back to Windows.

Linux is stable

After more than 20 years using Linux, and other Unix systems before that, I can count on my fingers the crashes I've had because of the system itself, something common on other systems with their typical blue screens, better known as the blue screen of death, haha. Linux can keep running almost 100% of the time without rebooting and without any risk of strange behavior. If an application or program does hang on your Linux system, you can kill it simply and reliably with a single command from the terminal. On Windows, cross your fingers and pray to San Pantuflo that Task Manager manages to close the process causing the instability.
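The "single command" for a hung program is usually `kill` (or `pkill`/`killall`), which sends a signal to the process. The same idea can be sketched in Python with the standard library's `os.kill`: ask politely with SIGTERM first, and fall back to SIGKILL only if the process does not exit. This is a minimal illustration for Linux, not a replacement for the shell tools; the function names and the 3-second grace period are assumptions, and the liveness check reads `/proc/<pid>/stat` so that an exited-but-unreaped (zombie) process counts as gone.

```python
import os
import signal
import time


def is_running(pid: int) -> bool:
    """Return True if `pid` exists and is not a zombie (reads Linux /proc)."""
    try:
        with open(f"/proc/{pid}/stat") as f:
            stat = f.read()
    except (FileNotFoundError, ProcessLookupError):
        return False
    # The state letter follows the parenthesised command name, which may
    # itself contain spaces or ')', so split on the last ')'.
    state = stat.rsplit(")", 1)[1].split()[0]
    return state != "Z"  # a zombie has exited but not been reaped yet


def kill_hung_process(pid: int, grace_seconds: float = 3.0) -> str:
    """Stop a process: SIGTERM first, then SIGKILL if it will not exit.

    Returns "terminated" if SIGTERM was enough, "killed" if SIGKILL had to
    be sent, or "not running" if the process was already gone.
    """
    if not is_running(pid):
        return "not running"
    try:
        os.kill(pid, signal.SIGTERM)  # polite request; the app may clean up
    except ProcessLookupError:
        return "not running"
    deadline = time.monotonic() + grace_seconds
    while time.monotonic() < deadline:
        if not is_running(pid):
            return "terminated"
        time.sleep(0.1)
    try:
        os.kill(pid, signal.SIGKILL)  # cannot be caught or ignored
    except ProcessLookupError:
        return "terminated"  # it exited right before the deadline passed
    return "killed"
```

For example, `kill_hung_process(12345)` (with a hypothetical PID taken from `ps` or a system monitor) mirrors running `kill 12345` and, if that is ignored, `kill -9 12345`. Trying SIGTERM first gives the application a chance to save state; SIGKILL is the last resort because the process gets no chance to clean up.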