#Internet of Thinking service provider

Text

HAPPY VARENTINES DAY, ANGEL ! I've been facing some tech issues recently, but!! To celebrate Ren's birthday and Valentine's Day, I'll be releasing Day 5 (the Early Access version) for all the Beta Testers in a few days!

And for those who aren't part of the 14DWY Discord server, don't worry! The public version will be available for everyone to play once the beta testing period is over ^^

#I don't have internet right now because my service provider is ass </3 I fear we may be livetweeting from my campus wifi right now lmao /hj#Anyways!! For those unfamiliar with how the whole ''Day update'' releases work; it's as follows:#Beta Testers → 14DWY Discord Server → Public Release#I always feel bad for those who pay money to boost da server (or donate to my ko-fi); so I want to offer them early dev logs and game acces#But members can also become a Beta Tester for ✨free✨ by chatting and reaching level 50 — or by taking part in server events >:3#They get access to all dat + unique server perks (like special name colour; upload & emote/sticker perms; [REDACTED] pixels lmao; etc)#And just so that it's not too overwhelming for da folks on Discord—#—I don't think I'll make a Twitter/Bsky announcement until Day 5 is officially available for beta testers to play#Or... until I can find a new service/phone provider because an additional $40 a month is NAWT the vibe!!!!! T_T#I also do not want to drive 1.3 hours into the city just to use my uni's/McDonalds wifi hjgdgjdhjgd#But I fear this may be the price I need to pay to have extended wifi coverage to the middle of nowhere </3 /lh /silly#Oh lawd.... How am I going to upload the files to Itch........... T_T#Brb brawling and bawling a certain internet provider real quick <3#💖 — 14 days with queue.#🖤 — updates.#🖤 — shut up sai.#I'll make a new rebloggable announcement + use the 14DWY tags once Day 5 is officially out!!

2K notes

Text

Do all these billionaires WANT to have a reason to use their bunkers? And that's why they're hastening the complete collapse of the US and mucking around in the elections of other democracies? Cause if you pause a fuckton of federal money that pays salaries and helps people stay on their feet while also not hiring anyone to work for the federal government and deporting everyone who picks our damn food and slaughters our damn animals, then wtf do you think is gonna happen?

We're speeding toward a situation where white collar folks have to turn to the gig economy to get by, only there is no longer demand for that kind of work cause there are so many fewer white collar workers who need their groceries and meals delivered, so competition will be fierce. Wages will go down. The folks who used to do those jobs are gonna end up replacing the deported workers & they'll be so desperate for cash that they'll take the same shitty wages and wealth inequality will rise to the point where there is no fucking middle class anymore and then we'll all be slave laborers and then...

#Bunker time#Wake me up from this nightmare please#A bit simplistic#but basically salaried workers are gonna get forced into low wage hourly work or gig work#Those workers will get squeezed out#Prisons will fill cause being poor & homeless is a crime#Those folks will get sent to farms & slaughterhouses where they work 10 hours a day for 25 cents#And lose contact with the outside world cause phone & internet time is $5 per hour#Federal money is everywhere. It fuels so much of our economy#All these asshat Magats without degrees who want to see government workers fucked over cause they're insecure about their lack of education#Are gonna learn real quick how important those jobs are when nobody is spending money on the services they provide#You think folks are gonna be remodeling their houses & calling plumbers when they're out of work & can't pay their mortgage#lol no#And who is gonna snap that property up when it goes into foreclosure?#One of those big real estate companies that's backed by venture funding#And they'll turn around and rent it out to whoever still has enough cash to pay for rent but not enough to cover a mortgage#We're so fucked

5 notes

Text

MY INTERNET JUST WENT OUT I HAVE A PAPER DUE IN 2 DAYS ITS OVER

#i need 2 drop out i goyta get outta here#looks like i actually have to talk to mynroomates... bcz idk who our internet service provider is under#also the motem/router was in my room 👍 so theyll probably think it was my fault

4 notes

Text

that post I reblogged about American Woes made me think of my hometown and like. I feel like we are So Close to grasping something but it's not gonna happen. I mean, one of the reasons I like living here and don't want to move is that, even though I have to pay for it directly instead of through taxes, I can get municipally provided internet. Comes on the same bill as my electricity, water, sewer, and waste management. (They also provide phone and cable tv services if I want them.) We could just. pay for these things with our taxes. give them to everybody in the city. Why Don't We Do That?????

#I can never switch back to like AT&T or any of the other internet guys out there#y'all don't got what the city gives me#and what the city gives me is one convenient bill and hella reliable service#but even though it's itemized I have to stop and remind myself that my utilities bill is literally ALL of my utilities AND internet#(which internet SHOULD be considered a utility)#and I think of how good I have it in comparison to how it COULD be#also at least part of my electricity is provided by solar power

3 notes

Text

Conspiracy Theories Are Ruining My Life

Discussion post: Facts aren’t necessarily facts anymore… but how do you discern the truth? Normally when I hear a conspiracy theory it was in conversation and brought up as a joke, or a bit of salacious gossip from someone who doesn’t know any better. But now I’m seeing it in the news, in politics, populating social media – even my friends and family are striking up conversations with me about…

View On WordPress

#amreading#bloggers#blogs#books#Casey Carlisle#censorship#clickbait#conspiracy theories#content#content creators#critical reading#critical thinking#discussion#education#facts#fake news#free speech#Internet Service Providers#ISP#labels#literature#news#Non-Fiction#novels#policing content#publishers#reading#scams#school#social media

1 note

Text

Best SEO Company in Dubai Mainland: Maximizing Your Online Presence

In the heart of Dubai Mainland, where the city's dynamic pulse beats the strongest, businesses realise the paramount importance of a robust online presence. In the digital era, your visibility online is the cornerstone of your success. That's where the best SEO company in Dubai Mainland comes into play.

The Shifting Landscape of SEO in Dubai Mainland

Search Engine Optimisation (SEO) is a multifaceted field that is constantly evolving to meet the demands of an ever-changing digital world. It encompasses strategies like on-page optimisation, off-page optimisation, content creation, technical SEO, and more. For businesses in Dubai, staying ahead in the digital race is not just a matter of choice but a necessity.

The Role of the Best SEO Company in Dubai Mainland

Collaboration with an Internet Marketing Company in Dubai Mainland:

An internet marketing company in Dubai Mainland brings local expertise to the table, understanding the unique dynamics of the Dubai market. When paired with the internet marketing agency, this partnership ensures that your online marketing strategy is effective and tailored to the local audience's preferences and behaviours. This collaboration creates a synergy where technology, marketing, and local insights intersect, offering a comprehensive solution for your business.

Digital Marketing Service Provider in Dubai Mainland:

Digital marketing encompasses various strategies, of which SEO is a critical part. A digital marketing service provider in Dubai Mainland understands the importance of integrating SEO seamlessly into a comprehensive digital marketing strategy. This collaboration ensures that your business ranks well in search engines and enjoys a holistic digital presence, from social media to content marketing.

Enhancing Brand Visibility with Social Media Marketing Experts:

In today's digital landscape, brand visibility is not limited to search engines alone. Social media marketing experts in Dubai Mainland can collaborate with the best SMO company to create a solid online presence on platforms like Facebook, Twitter, Instagram, and LinkedIn. These experts ensure your brand stays connected with your audience, fostering brand loyalty and increasing brand recognition.

Driving Targeted Traffic with Pay-Per-Click Service Providers:

Pay-per-click (PPC) advertising is a powerful tool for driving targeted traffic to your website. Pay-per-click service providers in Dubai Mainland can work hand in hand with the best agency to create and manage effective PPC campaigns. These campaigns ensure your business appears prominently in search results and on various digital platforms, driving potential customers to your website.

Tailored solutions for unique business needs

The best SEO company in Dubai Mainland understands that every business is unique. They offer tailored solutions that align perfectly with your specific needs and goals. This ensures that your SEO efforts drive results and reflect the essence of your brand within the Dubai mainland market.

Conclusion

In the bustling heart of Dubai, having a solid online presence is not just an option; it's a necessity. Partnering with the right SEO company bridges the gap between local expertise, technology, marketing, and data-driven decision-making. This ensures that your business survives and thrives in the digital age. So, embark on this transformative journey and unlock the full potential of your business with the trusted team at Think United Services.

Get in touch!

Email: [email protected]

Contact No: +971-80006512057

Website: https://thinkunitedservices.ae/

YouTube Channel. https://www.youtube.com/channel/UCc2HiYKzuR3wPVNAf1RWolA

Source URL: https://penzu.com/p/3b304e74c475cab0

#best SEO company in Dubai Mainland#internet marketing company in Dubai Mainland#digital marketing service provider in Dubai Mainland#Social media marketing experts in Dubai Mainland#Pay per click service providers in Dubai Mainland#Think United Services

1 note

Text

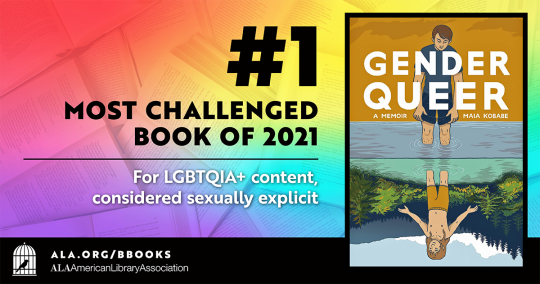

On Friday, the president signed yet another Executive Order, this time directly targeting funds allocated to libraries and museums nationwide. The Institute of Museum and Library Services (IMLS) is a federal agency that distributes funds approved by Congress to state libraries, as well as library, museum, and archival grant programs. IMLS is the only federal agency that provides funds to libraries. The Executive Order states that the functions of the IMLS have to be reduced to “statutory functions” and that in places that are not statutory, expenses must be cut as much as possible. [...] The department has seven days to report back, meaning that as soon as this Friday, March 21, 2025, public libraries–including school and academic libraries–as well as public museums could see their budgets demolished.

Actionable items from the article:

Sign the petition at EveryLibrary to stop Trump’s Executive Order seeking to gut the IMLS then share it with your networks.

Write a letter to each of your Senators and to your Representative at the federal level. You can find your Senators here and your Representative here. All you need to say in this letter is that you, a resident of their district, demand they speak up and defend the budget of IMLS. Include a short statement of where and how you value the library, as well as its importance in your community. This can be as short as “I use the library to find trusted sources of information, and every time I am in there, the public computers are being used by a variety of community members doing everything from applying for jobs to writing school papers. Cutting the funds for libraries will further harm those who lack stable internet, who cannot afford a home library, and who seek the opportunities to engage in programming, learning, enrichment, and entertainment in their own community. Public libraries help strengthen reading and critical thinking skills for all ages.” In those letters, consider noting that the return on investment on libraries is astronomical. You can use data from EveryLibrary.

Call the offices of each of your Senators and Representatives in Congress. Yes, they’ll be busy. Yes, the voice mails will be full. KEEP CALLING. Get your name on the record against IMLS cuts. Do this in addition to writing a letter. If making a call creates anxiety, use a tool like 5 Calls to create a script you can read when you reach a person or voice mail.

Though your state-level representatives will not have the power to impact what happens with IMLS, this is your time to reach out to each of your state representatives to emphasize the importance of your state’s public libraries. Note that in light of potential cuts from the federal government, you advocate for stronger laws protecting libraries and library workers, as well as stronger funding models for these institutions.

Show up at your next public library meeting, either in person at a board meeting or via an email or letter, and tell the library how much it means to you. In an era where information that is not written down and documented simply doesn’t exist, nothing is more crucial than having your name attached to some words about the importance of your public library. This does not need to be genius work–tell the library how you use their services and how much they mean to you as a taxpayer.

Tell everyone you know what is at stake. If you’ve not been speaking up for public institutions over the last several years, despite the red flags and warnings that have been building and building, it is not too late to begin now. EveryLibrary’s primer and petition is an excellent resource to give folks who may be unaware of what’s going on–or who want just the most important information.

746 notes

Text

cw: yandere, obsessive scara, modern au, cyberstalking, first we silly but then we also serious.

modern au yandere! scara who would rig the youtube algorithm so that your homepage will always contain videos of him. he cyberstalks your watch activity to determine what type of videos you like the most so that he can mold himself based off of it. reaction channels, video essays, youtube streamers, shorts content, hours-long videos - it doesn't matter. as long as your eyes are on him, he can't bring himself to care about the means. it's a bit silly in retrospect but terrifying in execution. he absolutely can't stand the thought of you finding anyone other than himself interesting, it makes him want to throw up the breakfast he had earlier that day. but you ignore his videos without a second glance because, who even is this guy, isn't he from one of your lectures?

modern au yandere! scara will always inevitably throw his phone across the room whenever he comes across a compatibility slideshow post on tiktok. he's fighting his deepest darkest demons to not view the next slide because he doesn't need validation from random attention-hungry strangers from the internet. or at least, that's what he tells himself when he's already on slide 3 out of 11. the results end up telling him he's not compatible with you and it unironically ruins his day, so he goes to the comments to send actual death threats. his account is banned and now he's even more pissed because he has to go through the trouble of creating a new one so that he can continue stalking your reposts and delude himself into thinking it's him on your mind when said reposts are anything inherently romantic. in reality, you barely even know his name.

modern au yandere! scara who has a facebook dump account where he screams into the void about how badly he wants you. it's a private account with no friends, just a place for him to let out his deepest feelings. he also has a normal facebook account where he's mutuals with his blockmates in college, biological mom, adoptive mom, etc. but he can never gather the courage to add you on facebook. you've talked to him through dms before (he screenshotted the conversation, printed it out, hung it on the walls of his room) but never added him, so now he longingly stares at the "add friend" button on your profile all while feeling deep envy for the mutual friends listed. he'll be mutual friends with you one day, he promises to himself.

modern au yandere! scara who creates a linkedin account just so he can view your profile. as a nepo baby, he has no need for LinkedIn but heavens be damned if he doesn't put in the minuscule effort of creating an account in exchange for learning even more about you. by extension, he learns the name of the company you're interning at, the name of your boss, your co-workers, your classmates from your college classes, and your dream company - all of which he meticulously files away for future use.

modern au yandere! scara combs through thousands of online reviews on an online shopping app (amazon, aliexpress, etsy, shopee, ebay, etc.) just so he can find your personal review of the product, (he knows you left behind a review because he overheard you talking to your seatmate about it 30 minutes ago) and subsequently your account in which he can view your wishlists and past reviews. he then proceeds to buy every item on your wishlist which leads to a confused (and terrified) you when a large package arrives at your dorm a week later. of course, he knows where your dorm is located.

modern au yandere! scara who doesn't seek out the online services of tarot readers on tiktok lives or the love spells of etsy witches. rather, he goes out of his way to do his research and locate secluded spots around the city for those who provide irl readings and/or spells (it's more authentic this way! he reasons). he doesn't even avail the compatibility tarot reading nor does he bat an eyelash at the love potions stewed on the ground around him. no, what he's here for are curses. he's been begging any higher being for months now that your roommate will finally move out, but to no avail. which leads him to desperate measures of placing a bad luck curse on your leech of a roommate. he goes home that night with a skip in his step, just waiting for the curse to kick in.

modern au yandere! scara obsessively refreshes the private facebook group page (specifically made for finding roommates in your large university campus!) just waiting for you to post that you're in need of a new roommate. it's a nightly ritual for him at this point. he screams and jumps out of his gaming chair like he scored a national goal when you finally, finally post a roommate listing. he painstakingly waits a minute or two (it's actual torture, but he doesn't want to look too desperate!) before hitting you up in the comments and tries so hard to be nonchalant (he's literally gooning to the thought of breathing the same air as you soon) with a comment of, "hit me up."

modern au yandere! scara who wakes up at the ass crack of dawn, obsessively triple checks his luggage and moving boxes (making sure to carefully, gently pack his shrine of your items and anything related to you) before pacing around his room in repeated loops. he's on fire with nerves, he's so jittery but he's also soooo happy! this is literally what he's been dreaming of since he first laid eyes on you during freshman year (a slight lie, his actual dream is to marry you and keep you locked in a mansion - but baby steps!) and now it's becoming a reality! what's next!? will you two be mutuals on twitter?? oomfs? or... a croomf (crush oomf)?

modern au yandere! scara who is so grateful to live in the era of technology.

#outro's interlude <3#< new tag wow (it's for when i'm insane)#I MISS HIM SO BAD IM GOING CRAZY#I'M SUPPOSED TO BE STUDYING BRAH#scaramouche#wanderer#scaramouche x reader#yandere#tw yandere#yandere gnsn#yandere genshin#yandere genshin impact#genshin x reader#genshin impact x reader#wanderer x reader#yandere scaramouche#yandere x reader#yandere male#genshin impact

619 notes

Text

Every single person who thinks Libby is going to shut down forever has literally never worked in a library. I genuinely need you to know this.

The US government does not own Libby. The majority of library funding provided by IMLS is not for ebook funding.

It's still important to support Libby and support your library's use of ebook catalogs, and also look into ways to donate to the systems that they're a part of that directly pay for these catalog fees, but when you look at what is on a large scale impacted by cutting funds to libraries on the federal level, you understand what these cuts are really about.

IMLS helps with the start-up funds for various programs and new libraries; the idea has always been to eat the cost of new programs and have the communities surrounding libraries fund them. Since the 2000s they have been piloting various ways to make resources more accessible to people and act as a sort of equity program for different communities, with librarians moving to fill what gaps they can in their community resources and having to rely on grants and federal funding to do so.

There are rural and still developing libraries that receive their e-catalog funding via the federal government, but it's not the whole of libraries.

The largest things that are at risk are accessibility services through the various programs we've developed for libraries in order to pool resources for the disabled, and national ILL services-- the big names being WorldShare and OCLC, which help patrons access books outside of their systems and have greatly helped with academic libraries. We're also going to see a decrease in supplementary education programs, which because of their rapidly expanding nature have always received federal funding, and in most states, this is summer reading and after-school tutoring.

This is cooking classes, this is service delivery for disabled patrons, this is audiobooks. This is books in Braille. If your library is one of the many that used grants in order to fund distributing COVID tests, I've got bad news. This is hot spots for rural communities where students might not be able to access the internet at home because the infrastructure just isn't there yet. This is libraries that have tried to expand their space to include a food pantry and fill the gaps when people don't have funds to donate. This is niche libraries that provide valuable access to resources, like the federally funded library that provided my patrons with photos of their family when they lived on reservations. This is community education hosted by libraries like the technology courses that helped my patrons set up their first emails. This is money to digitize resources in archives that may otherwise never see the light of day. This is new libraries when there's not a single library for a hundred miles.

When you simplify it all to just ebooks, people want to believe that the solution is just donating regular books or learning to read in other ways. They don't see the whole of what this funding symbolizes.

The Corona pandemic led to a vast expansion in equity services amongst libraries, and with the instability of our economy and the way that legislators have been fighting against taxing the people who should be taxed, none of these programs are enshrined in budgets and bylaws.

Grants aren't fun to write; libraries do not propose specific programs just for shits and giggles. They propose them because they look at the community surrounding them and they realize that there may be a need. They see where inequality lies, and many librarians try to find a way to solve it.

But this? This is a direct attack on providing opportunity and empathy to all Americans. This is a direct move to limit and punish those who the rich and powerful feel are less than, and it's bullshit.

I love ebooks and what they offer just as much as anyone else, but this is so much more than ecatalogs. Don't erase what this is.

398 notes

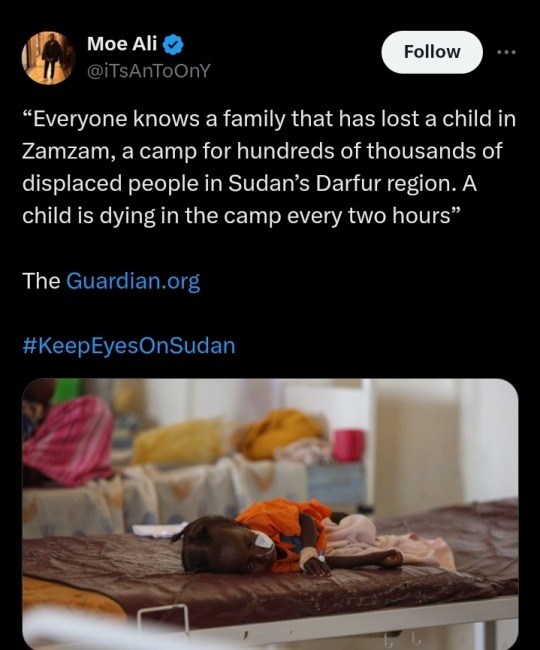

Text

Everyone knows a family that has lost a child in Zamzam, a camp for hundreds of thousands of displaced people in Sudan’s Darfur region. Hunger and disease have become grim features of daily life, and a child is dying in the camp every two hours, according to the medical charity Médecins Sans Frontières (MSF). “There have been many, I cannot remember them all. The latest died yesterday,” says Laila Ahmed, who lives in the camp with her nine children. Like most of Sudan, Zamzam has had no phone or internet connection for the past two weeks, but the Guardian managed to talk to refugees through a satellite link. They described a desperate situation, with no clean drinking water and little access to medical treatment. Families share meagre food stores. Almost 25% of children are severely malnourished. Dengue fever and malaria are sweeping through the camp. Beyond its perimeters roam militiamen who kidnap or attack women who venture out to collect firewood or grass for their donkeys. Apart from one small distribution in June, no food aid has arrived since fighting erupted across Sudan on 15 April. “I think we are approaching starvation,” says Abdullatif Ali, a father of six. “The people are suffering from malnutrition, disease – many issues.” Zamzam was set up in the mid-2000s in the wake of the genocide in Darfur, carried out by predominately Arab militias called the Janjaweed. Before the current war between the Sudanese army and the paramilitary Rapid Support Forces (RSF), which grew from the Janjaweed, a patchwork of international aid agencies provided services to Zamzam, but they abruptly pulled out when the fighting started. Since then, the camp’s population has swelled with new arrivals fleeing fighting farther south. “This is a vast, overpopulated camp that needs a large amount of support, but it has been completely left on its own,” says Emmanuel Berbain, an MSF doctor, who visited recently. “It’s a complete catastrophe, to be honest.”

#sudan#keep eyes on sudan#free sudan#sudan genocide#sudan crisis#eyes on sudan#all eyes on sudan#famine#genocide

1K notes

Text

autocrattic (more matt shenanigans, not tumblr this time)

I am almost definitely not the right person for this writeup, but I'm closer than most people on here, so here goes! This is all open-source tech drama, and I take my time laying out the context, but the short version is: Matt tried to extort another company, who immediately posted receipts, and now he's refusing to log off again. The long version is... long.

If you don't need software context, scroll down/find the "ok tony that's enough. tell me what's actually happening" heading, or just go read the pink sections. Or look at this PDF.

the background

So. Matt's original Good Idea was starting WordPress with fellow developer Mike Little in 2003. WordPress is free and open-source software (FOSS) that was originally just for blogging, but now powers lots of websites that do other things. In particular, Automattic acquired WooCommerce a long time ago, which is free online store software you can run on WordPress.

FOSS is... interesting. It's a world that ultimately is powered by people who believe deeply that information and resources should be free, but often have massive blind spots (for example, Wikipedia's consistently had issues with bias, since no amount of "anyone can edit" will overcome systemic bias in terms of who has time to edit or is not going to be driven away by the existing contributor culture). As with anything else that people spend thousands of hours doing online, there's drama. As with anything else that's technically free but can be monetized, there are:

Heaps of companies and solo developers who profit off WordPress themes, plugins, hosting, and other services;

Conflicts between volunteer contributors and for-profit contributors;

Annoying founders who get way too much credit for everything the project has become.

the WordPress ecosystem

A project as heavily used as WordPress (some double-digit percentage of the Internet uses WP. I refuse to believe it's the 43% that Matt claims it is, but it's a pretty large chunk) can't survive just on the spare hours of volunteers, especially in an increasingly monetised world where its users demand functional software, are less and less tech or FOSS literate, and its contributors have no fucking time to build things for that userbase.

Matt runs Automattic, which is a privately held, for-profit company. The free software is run by the WordPress Foundation, which is technically completely separate (wordpress.org). The main products Automattic offers are WordPress-related: WordPress.com, a host which was designed to be beginner-friendly; Jetpack, a suite of plugins which extend WordPress in a whole bunch of ways that may or may not make sense as one big product; WooCommerce, which I've already mentioned. There's also WordPress VIP, which is the fancy bespoke five-digit-plus option for enterprise customers. And there's Tumblr, if Matt ever succeeds in putting it on WordPress. (Every Tumblr or WordPress dev I know thinks that's fucking ridiculous and impossible. Automattic's hiring for it anyway.)

Automattic devotes a chunk of its employees toward developing Core, which is what people in the WordPress space call WordPress.org, the free software. This is part of an initiative called Five for the Future — 5% of your company's profits off WordPress should go back into making the project better. Many other companies don't do this.

There are lots of other companies in the space. GoDaddy, for example, barely gives back in any way (and also sucks). WP Engine is the company this drama is about. They don't really contribute to Core. They offer relatively expensive WordPress hosting, as well as providing a series of other WordPress-related products like LocalWP (local site development software), Advanced Custom Fields (the easiest way to set up advanced taxonomies and other fields when making new types of posts. If you don't know what this means don't worry about it), etc.

Anyway. Lots of strong personalities. Lots of for-profit companies. Lots of them getting invested in, or bought by, private equity firms.

Matt being Matt, tech being tech

As was said repeatedly when Matt was flipping out about Tumblr, all of the stuff happening at Automattic is pretty normal tech company behaviour. Shit gets worse. People get less for their money. WordPress.com used to be a really good place for people starting out with a website who didn't need "real" WordPress — for $48 a year on the Personal plan, you had really limited features (no plugins or other customisable extensions), but you had a simple website with good SEO that was pretty secure, relatively easy to use, and 24-hour access to Happiness Engineers (HEs for short. Bad job title. This was my job) who could walk you through everything no matter how bad at tech you were. Then Personal plan users got moved from chat to emails only. Emails started being responded to by contractors who didn't know as much as HEs did and certainly didn't get paid half as well. Then came AI, and the mandate for HEs to try to upsell everyone things they didn't necessarily need. (This is the point at which I quit.)

But as was said then as well, most tech CEOs don't publicly get into this kind of shitfight with their users. They're horrid tyrants, but they don't do it this publicly.

ok tony that's enough. tell me what's actually happening

WordCamp US, one of the biggest WordPress industry events of the year, is the backdrop for all this. It just finished.

There are.... a lot of posts by Matt across multiple platforms because, as always, he can't log off. But here's the broad strokes.

Sep 17

Matt publishes a wanky blog post about companies that profit off open source without giving back. It targets a specific company, WP Engine.

Compare the Five For the Future pages from Automattic and WP Engine, two companies that are roughly the same size with revenue in the ballpark of half a billion. These pledges are just a proxy and aren’t perfectly accurate, but as I write this, Automattic has 3,786 hours per week (not even counting me!), and WP Engine has 47 hours. WP Engine has good people, some of whom are listed on that page, but the company is controlled by Silver Lake, a private equity firm with $102 billion in assets under management. Silver Lake doesn’t give a dang about your Open Source ideals. It just wants a return on capital. So it’s at this point that I ask everyone in the WordPress community to vote with your wallet. Who are you giving your money to? Someone who’s going to nourish the ecosystem, or someone who’s going to frack every bit of value out of it until it withers?

(It's worth noting here that Automattic is funded in part by BlackRock, who Wikipedia calls "the world's largest asset manager".)

Sep 20 (WCUS final day)

WP Engine puts out a blog post detailing their contributions to WordPress.

Matt devotes his keynote/closing speech to slamming WP Engine.

He also implies people inside WP Engine are sending him information.

For the people sending me stuff from inside companies, please do not do it on your work device. Use a personal phone, Signal with disappearing messages, etc. I have a bunch of journalists happy to connect you with as well. #wcus — Twitter

I know private equity and investors can be brutal (read the book Barbarians at the Gate). Please let me know if any employee faces firing or retaliation for speaking up about their company's participation (or lack thereof) in WordPress. We'll make sure it's a big public deal and that you get support. — Tumblr

Matt also puts out an offer live at WordCamp US:

“If anyone of you gets in trouble for speaking up in favor of WordPress and/or open source, reach out to me. I’ll do my best to help you find a new job.” — source tweet, RTed by Matt

He also puts up a poll asking the community if WP Engine should be allowed back at WordCamps.

Sep 21

Matt writes a blog post on the WordPress.org blog (the official project blog!): WP Engine is not WordPress.

He opens this blog post by claiming his mom was confused and thought WP Engine was official.

The blog post goes on about how WP Engine disabled post revisions (which is a pretty normal thing to do when you need to free up some resources), therefore being not "real" WordPress. (As I said earlier, WordPress.com disables most features for Personal and Premium plans. Or whatever those plans are called, they've been renamed like 12 times in the last few years. But that's a different complaint.)

Sep 22: More bullshit on Twitter. Matt makes a Reddit post on r/Wordpress about WP Engine that promptly gets deleted. Writeups start to come out:

Search Engine Journal: WordPress Co-Founder Mullenweg Sparks Backlash

TechCrunch: Matt Mullenweg calls WP Engine a ‘cancer to WordPress’ and urges community to switch providers

Sep 23 onward

Okay, time zones mean I can't effectively sequence the rest of this.

Matt defends himself on Reddit, casually mentioning that WP Engine is now suing him.

Also here's a decent writeup from someone involved with the community that may be of interest.

WP Engine drops the full PDF of their cease and desist, which includes screenshots of Matt apparently threatening them via text.

Twitter link | Direct PDF link

This PDF includes some truly fucked texts where Matt appears to be trying to get WP Engine to pay him money unless they want him to tell his audience at WCUS that they're evil.

Matt, after saying he's been sued and can't talk about it, hosts a Twitter Space and talks about it for a couple hours.

He also continues to post on Reddit, Twitter, and on the Core contributor Slack.

Here's a comment where he says WP Engine could have avoided this by paying Automattic 8% of their revenue.

Another, 20 hours ago, where he says he's being downvoted by "trolls, probably WPE employees"

At some point, Matt updates the WordPress Foundation trademark policy. I am 90% sure this was him — it's not legalese and makes no fucking sense to single out WP Engine.

Old text: The abbreviation “WP” is not covered by the WordPress trademarks and you are free to use it in any way you see fit.

New text: The abbreviation “WP” is not covered by the WordPress trademarks, but please don’t use it in a way that confuses people. For example, many people think WP Engine is “WordPress Engine” and officially associated with WordPress, which it’s not. They have never once even donated to the WordPress Foundation, despite making billions of revenue on top of WordPress.

Sep 25: Automattic puts up their own legal response.

anyway this fucking sucks

This is bigger than anything Matt's done before. I'm so worried about my friends who're still there. The internal ramifications have... been not great so far, including that Matt's naturally being extra gung-ho about "you're either for me or against me and if you're against me then don't bother working your two weeks".

Despite everything, I like WordPress. (If you dig into this, you'll see plenty of people commenting about blocks or Gutenberg or React or other things they hate. Unlike many of the old FOSSheads, I actually also think Gutenberg/the block editor was a good idea, even if it was poorly implemented.)

I think that the original mission — to make it so anyone can spin up a website that's easy enough to use and blog with — is a good thing. I think, despite all the ways being part of FOSS communities since my early teens has led to all kinds of racist, homophobic and sexual harm for me and for many other people, that free and open-source software is important.

So many people were already burning out of the project. Matt has been doing this for so long that those with long memories can recite all the ways he's wrecked shit back a decade or more. Most of us are exhausted and need to make money to live. The world is worse than it ever was.

Social media sucks worse and worse, and this was a world in which people missed old webrings, old blogs, RSS readers, the world where you curated your own whimsical, unpaid corner of the Internet. I started actually actively using my own WordPress blog this year, and I've really enjoyed it.

And people don't want to deal with any of this.

The thing is, Matt's right about one thing: capital is ruining free open-source software. What he's wrong about is everything else: the idea that WordPress.com isn't enshittifying (or confusing) at a much higher rate than WP Engine, the idea that WP Engine or Silver Lake are the only big players in the field, the notion that he's part of the solution and not part of the problem.

But he's started a battle where there are no winners but the lawyers who get paid to duke it out, and all the volunteers who've survived this long in an ecosystem increasingly dominated by big money are giving up and leaving.

Anyway if you got this far, consider donating to someone on gazafunds.com. It'll take much less time than reading this did.

#tony muses#tumblr meta#again just bc that's my tag for all this#automattic#wordpress#this is probably really incoherent i apologise lmao#i may edit it

750 notes

Text

We are here to revive the dream that the students thought was impossible. With your support it was not impossible, just postponed. Together we will rebuild the dreams of university students in Gaza.

What is ihyaa?

• Youth volunteer initiative 🌿

• Providing a free study environment for university students 🏫

• We revive determination, awaken the thought, and create hope ✨

Students in Gaza are facing many hardships, including cuts to electricity and the Internet. In this initiative we started from what students need: a place where there is Internet and electricity. The places that provide these services are expensive, and students do not have any source of income in the current situation. So came the idea of a safe place that provides free electricity for students, to lighten their burden and meet some of their basic needs. It was then revived and can continue thanks to your support 🍉🍉🍉

The place has been provided and the initiative has been started and we have prepared everything and we have also made an Instagram page to see all our work and also to support us to continue. You can click here to view the Instagram page of the initiative.

If you are a person who believes in education and in the most basic rights of students living in Gaza, support the campaign and share it with your friends and family, so that they think about the people suffering from this damn war and about supporting them with a generous donation. This will be the best thing you do for Gaza students. Let's support the students. We can now reach about £4800 to set a new goal for our safety, which is £10,000 to ensure the continuation of the initiative for at least four months 🙏🏻

226 notes

Text

How I ditched streaming services and learned to love Linux: A step-by-step guide to building your very own personal media streaming server (V2.0: REVISED AND EXPANDED EDITION)

This is a revised, corrected and expanded version of my tutorial on setting up a personal media server that previously appeared on my old blog (donjuan-auxenfers). I expect that that post is still making the rounds (hopefully with my addendum on modifying group share permissions in Ubuntu to circumvent 0x8007003B "Unexpected Network Error" messages in Windows 10/11 when transferring files) but I have no way of checking. Anyway this new revised version of the tutorial corrects one or two small errors I discovered when rereading what I wrote, adds links to all products mentioned and is just more polished generally. I also expanded it a bit, pointing more adventurous users toward programs such as Sonarr/Radarr/Lidarr and Overseerr which can be used for automating user requests and media collection.

So then, what is this tutorial? This is a tutorial on how to build and set up your own personal media server using Ubuntu as an operating system and Plex (or Jellyfin) to not only manage your media, but to also stream that media to your devices both at home and abroad anywhere in the world where you have an internet connection. Its intent is to show you how building a personal media server and stuffing it full of films, TV, and music that you acquired through ~~indiscriminate and voracious media piracy~~ various legal methods will free you to completely ditch paid streaming services. No more will you have to pay for Disney+, Netflix, HBOMAX, Hulu, Amazon Prime, Peacock, CBS All Access, Paramount+, Crave or any other streaming service that is not named Criterion Channel. Instead whenever you want to watch your favourite films and television shows, you’ll have your own personal service that only features things that you want to see, with files that you have control over. And for music fans out there, both Jellyfin and Plex support music streaming, meaning you can even ditch music streaming services. Goodbye Spotify, YouTube Music, Tidal and Apple Music, welcome back unreasonably large MP3 (or FLAC) collections.

On the hardware front, I’m going to offer a few options catered towards different budgets and media library sizes. Getting a media server up and running using this guide will cost you anywhere from $450 CAD/$325 USD at the low end to $1500 CAD/$1100 USD at the high end (it could go higher). My server was priced closer to the higher figure, but I went and got a lot more storage than most people need. If that seems like a little much, consider for a moment, do you have a roommate, a close friend, or a family member who would be willing to chip in a few bucks towards your little project provided they get access? Well that's how I funded my server. It might also be worth thinking about the cost over time, i.e. how much you spend yearly on subscriptions vs. a one time cost of setting up a server. Additionally there's just the joy of being able to scream "fuck you" at all those show cancelling, library deleting, hedge fund vampire CEOs who run the studios through denying them your money. Drive a stake through David Zaslav's heart.
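To put the "cost over time" idea into numbers, here is a rough sketch. The $325 figure is the low-end USD price quoted above, while the $40 a month subscription bill is purely a made-up example, so plug in your own spending.

```shell
# Months until a one-time server cost is paid off by cancelled subscriptions.
# $1 = one-time cost in whole dollars, $2 = monthly subscription spend in whole dollars.
# The result is rounded up to the next full month.
break_even_months() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

# 325 = the guide's low-end USD figure; 40 = hypothetical monthly subscription bill
echo "break-even after $(break_even_months 325 40) months"
```

Splitting the cost with a roommate or family member simply lowers the first argument.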

On the software side I will walk you step-by-step through installing Ubuntu as your server's operating system, configuring your storage as a RAIDz array with ZFS, sharing your zpool to Windows with Samba, running a remote connection between your server and your Windows PC, and then a little about getting started with Plex/Jellyfin. Every terminal command you will need to input will be provided, and I even share a custom bash script that will make used vs. available drive space on your server display correctly in Windows.

If you have a different preferred flavour of Linux (Arch, Manjaro, Redhat, Fedora, Mint, OpenSUSE, CentOS, Slackware etc. et al.) and are aching to tell me off for being basic and using Ubuntu, this tutorial is not for you. The sort of person with a preferred Linux distro is the sort of person who can do this sort of thing in their sleep. Also I don't care. This tutorial is intended for the average home computer user. This is also why we’re not using a more exotic home server solution like running everything through Docker Containers and managing it through a dashboard like Homarr or Heimdall. While such solutions are fantastic and can be very easy to maintain once you have them all set up, wrapping your brain around Docker is a whole thing in and of itself. If you do follow this tutorial and have fun putting everything together, then I would encourage you to return in a year’s time, do your research and set up everything with Docker Containers.

Lastly, this is a tutorial aimed at Windows users. Although I was a daily user of OS X for many years (roughly 2008-2023) and I've dabbled quite a bit with various Linux distributions (mostly Ubuntu and Manjaro), my primary OS these days is Windows 11. Many things in this tutorial will still be applicable to Mac users, but others (e.g. setting up shares) you will have to look up for yourself. I doubt it would be difficult to do so.

Nothing in this tutorial will require feats of computing expertise. All you will need is a basic computer literacy (i.e. an understanding of what a filesystem and directory are, and a degree of comfort in the settings menu) and a willingness to learn a thing or two. While this guide may look overwhelming at first glance, it is only because I want to be as thorough as possible. I want you to understand exactly what it is you're doing, I don't want you to just blindly follow steps. If you half-way know what you’re doing, you will be much better prepared if you ever need to troubleshoot.

Honestly, once you have all the hardware ready it shouldn't take more than an afternoon or two to get everything up and running.

(This tutorial is just shy of seven thousand words long so the rest is under the cut.)

Step One: Choosing Your Hardware

Linux is a lightweight operating system, and depending on the distribution there's close to no bloat. There are recent distributions available at this very moment that will run perfectly fine on a fourteen year old i3 with 4GB of RAM. Moreover, running Plex or Jellyfin isn’t resource intensive in 90% of use cases. All this is to say, we don’t require an expensive or powerful computer. This means that there are several options available: 1) use an old computer you already have sitting around but aren't using, 2) buy a used workstation from eBay, or what I believe to be the best option, 3) order an N100 Mini-PC from AliExpress or Amazon.

Note: If you already have an old PC sitting around that you’ve decided to use, fantastic, move on to the next step.

When weighing your options, keep a few things in mind: the number of people you expect to be streaming simultaneously at any one time, the resolution and bitrate of your media library (4k video takes a lot more processing power than 1080p) and most importantly, how many of those clients are going to be transcoding at any one time. Transcoding is what happens when the playback device does not natively support direct playback of the source file. This can happen for a number of reasons, such as the playback device's native resolution being lower than the file's internal resolution, or because the source file was encoded in a video codec unsupported by the playback device.

Ideally we want any transcoding to be performed by hardware. This means we should be looking for a computer with an Intel processor with Quick Sync. Quick Sync is a dedicated core on the CPU die designed specifically for video encoding and decoding. This specialized hardware makes for highly efficient transcoding both in terms of processing overhead and power draw. Without these Quick Sync cores, transcoding must be brute forced through software. This takes up much more of a CPU’s processing power and requires much more energy. But not all Quick Sync cores are created equal and you need to keep this in mind if you've decided either to use an old computer or to shop for a used workstation on eBay.

Any Intel processor from second generation Core (Sandy Bridge circa 2011) onward has Quick Sync cores. It's not until 6th gen (Skylake), however, that the cores support the H.265 HEVC codec, and then only in 8bit. Hardware support for 10bit HEVC arrived with 7th gen (Kaby Lake), and Intel’s 10th gen (Comet Lake) processors introduce support for HDR tone mapping. And the recent 12th gen (Alder Lake) processors brought with them hardware AV1 decoding. As an example, while a 6th gen (Skylake) i5-6500 will be able to hardware transcode an 8bit H.265 encoded file, it will fall back to software transcoding if given a 10bit H.265 file. If you’ve decided to use that old PC or to look on eBay for an old Dell Optiplex keep this in mind.
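If you'd rather not keep the generations in your head while browsing eBay listings, a small helper along these lines can do the lookup. It's only a sketch: the function names are made up for this example, the thresholds are the ones from the paragraph above (Quick Sync from 2nd gen, HEVC from 6th, AV1 decoding from 12th), and it assumes the classic "i5-8500" style of model number, so it won't understand an N100 or newer naming schemes.

```shell
# Pull the Core generation out of a model string such as "i5-8500" or "i5-12400T".
# Assumption: classic iN-XXXX / iN-XXXXX naming, where the last three digits are the SKU.
core_generation() {
    digits=${1#*-}              # drop everything up to the dash -> "8500"
    digits=${digits%%[!0-9]*}   # drop any letter suffix such as "T" or "K"
    case ${#digits} in
        4|5) echo "${digits%???}" ;;   # 8500 -> 8, 12400 -> 12
        *)   return 1 ;;
    esac
}

# List the Quick Sync capabilities the guide attributes to that generation.
quick_sync_features() {
    gen=$(core_generation "$1") || { echo "unknown"; return 1; }
    features=""
    [ "$gen" -ge 2 ]  && features="quicksync"
    [ "$gen" -ge 6 ]  && features="$features hevc"
    [ "$gen" -ge 12 ] && features="$features av1-decode"
    echo "${features:-none}"
}

quick_sync_features i5-8500
```

Treat the result as a first filter only and check the exact model's spec sheet before buying.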

Note 1: The price of old workstations varies wildly and fluctuates frequently. If you get lucky and go shopping shortly after a workplace has liquidated a large number of their workstations you can find deals for as low as $100 on a barebones system, but generally an i5-8500 workstation with 16gb RAM will cost you somewhere in the area of $260 CAD/$200 USD.

Note 2: The AMD equivalent to Quick Sync is called Video Core Next, and while it's fine, it's not as efficient and not as mature a technology. It was only introduced with the first generation Ryzen CPUs and it only got decent with their newest CPUs, and we want something cheap.

Alternatively you could forgo having to keep track of what generation of CPU is equipped with Quick Sync cores that feature support for which codecs, and just buy an N100 mini-PC. For around the same price as a used workstation, or less, you can pick up a mini-PC with an Intel N100 processor. The N100 is a four-core processor based on the 12th gen Alder Lake architecture and comes equipped with the latest revision of the Quick Sync cores. These little processors offer astounding hardware transcoding capabilities for their size and power draw. Otherwise they perform equivalent to an i5-6500, which isn't a terrible CPU. A friend of mine uses an N100 machine as a dedicated retro emulation gaming system and it does everything up to 6th generation consoles just fine. The N100 is also a remarkably efficient chip, it sips power. In fact, the difference between running one of these and an old workstation could work out to hundreds of dollars a year in energy bills depending on where you live.
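To see what the energy difference means for your own bill, the arithmetic is easy to sketch. The wattages and the electricity price below are placeholders I picked for illustration, not measurements, so substitute the draw of the machines you're comparing and your local rate.

```shell
# Yearly running cost in cents for a machine that is powered on 24/7.
# $1 = average draw in watts, $2 = electricity price in cents per kWh.
yearly_cost_cents() {
    echo $(( $1 * 8760 * $2 / 1000 ))   # 8760 hours in a year; /1000 turns Wh into kWh
}

# Hypothetical figures: a 10 W mini-PC vs. a 70 W workstation at 30 cents per kWh
mini=$(yearly_cost_cents 10 30)
tower=$(yearly_cost_cents 70 30)
echo "difference per year: $(( tower - mini )) cents"
```

The gap scales linearly with both the wattage difference and the price per kWh, which is why the savings depend so heavily on where you live.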

You can find these Mini-PCs all over Amazon or for a little cheaper on AliExpress. They range in price from $170 CAD/$125 USD for a no name N100 with 8GB RAM to $280 CAD/$200 USD for a Beelink S12 Pro with 16GB RAM. The brand doesn't really matter, they're all coming from the same three factories in Shenzhen, go for whichever one fits your budget or has features you want. 8GB RAM should be enough, Linux is lightweight and Plex only calls for 2GB RAM. 16GB RAM might result in a slightly snappier experience, especially with ZFS. A 256GB SSD is more than enough for what we need as a boot drive, but going for a bigger drive might allow you to get away with things like creating preview thumbnails for Plex, but it’s up to you and your budget.

The Mini-PC I wound up buying was a Firebat AK2 Plus with 8GB RAM and a 256GB SSD.

Note: If you decide to order a Mini-PC from AliExpress, check the type of power adapter it ships with. The mini-PC I bought came with an EU power adapter and I had to supply my own North American power supply. Thankfully this is a minor issue as barrel plug 30W/12V/2.5A power adapters are easy to find and can be had for $10.

Step Two: Choosing Your Storage

Storage is the most important part of our build. It is also the most expensive. Thankfully it’s also the most easily upgrade-able down the line.

For people with a smaller media collection (4TB to 8TB), a more limited budget, or who will only ever have two simultaneous streams running, I would say that the most economical course of action would be to buy a USB 3.0 8TB external HDD. Something like this one from Western Digital or this one from Seagate. One of these external drives will cost you in the area of $200 CAD/$140 USD. Down the line you could add a second external drive or replace it with a multi-drive RAIDz set up such as detailed below.

If a single external drive is the path for you, move on to step three.

For people with larger media libraries (12TB+), who prefer media in 4k, or who care about data redundancy, the answer is a RAID array featuring multiple HDDs in an enclosure.

Note: If you are using an old PC or used workstation as your server and have the room for at least three 3.5" drives, and as many open SATA ports on your motherboard, you won't need an enclosure, just install the drives into the case. If your old computer is a laptop or doesn’t have room for more internal drives, then I would suggest an enclosure.

The minimum number of drives needed to run a RAIDz array is three, and seeing as RAIDz is what we will be using, you should be looking for an enclosure with three to five bays. I think that four disks makes for a good compromise for a home server. Regardless of whether you go for a three, four, or five bay enclosure, do be aware that in a RAIDz array the space equivalent of one of the drives will be dedicated to parity, leaving a usable fraction of 1 − 1/n, where n is the number of drives. For example, in a four bay enclosure equipped with four 12TB drives configured as a RAIDz1 array, we would be left with a total of 36TB of usable space (48TB raw size). The reason why we might sacrifice storage space in such a manner will be explained in the next section.

A four bay enclosure will cost somewhere in the area of $200 CAD/$140 USD. You don't need anything fancy, we don't need anything with hardware RAID controls (RAIDz is done entirely in software) or even USB-C. An enclosure with USB 3.0 will perform perfectly fine. Don’t worry too much about USB speed bottlenecks. A mechanical HDD will be limited by the speed of its mechanism long before it will be limited by the speed of a USB connection. I've seen decent looking enclosures from TerraMaster, Yottamaster, Mediasonic and Sabrent.
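If you want to compare a few enclosure and drive combinations before buying, the capacity math can be sketched in a couple of lines. The function name is made up for this example, and the result ignores filesystem overhead, so real-world usable space will come out a little lower.

```shell
# Usable capacity of a single RAIDz vdev, ignoring filesystem overhead.
# $1 = number of drives, $2 = size of each drive in TB,
# $3 = drives' worth of parity (1 for RAIDz1, 2 for RAIDz2)
raidz_usable_tb() {
    echo $(( ($1 - $3) * $2 ))
}

# The example from the text: four 12TB drives in RAIDz1
echo "usable: $(raidz_usable_tb 4 12 1)TB of $(( 4 * 12 ))TB raw"
```

With one drive of parity this is the same as multiplying the raw size by 1 − 1/n; passing 2 as the last argument gives the RAIDz2 equivalent.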

When it comes to selecting the drives, as of this writing, the best value (dollar per gigabyte) are those in the range of 12TB to 20TB. I settled on 12TB drives myself. If 12TB to 20TB drives are out of your budget, go with what you can afford, or look into refurbished drives. I'm not sold on the idea of refurbished drives but many people swear by them.

When shopping for hard drives, search for drives designed specifically for NAS use. Drives designed for NAS use typically have better vibration dampening and are designed to be active 24/7. They will also often make use of CMR (conventional magnetic recording) as opposed to SMR (shingled magnetic recording). This nets them a sizable read/write performance bump over typical desktop drives. Seagate Ironwolf and Toshiba NAS are both well regarded brands when it comes to NAS drives. I would avoid Western Digital Red drives at this time. WD Reds were a go to recommendation up until earlier this year when it was revealed that they feature firmware that will quite often throw up false SMART warnings at the three year mark telling you to replace the drive when there is nothing at all wrong with it. The drive will likely even be good for another six, seven, or more years.

Step Three: Installing Linux

For this step you will need a USB thumbdrive of at least 6GB in capacity, an .ISO of Ubuntu, and a way to make that thumbdrive bootable media.

First download a copy of Ubuntu desktop. (For best performance we could download the Server release, but for new Linux users I would recommend against it. The server release is strictly command line interface only, and having a GUI is very helpful for most people. Not many people are wholly comfortable doing everything through the command line, I'm certainly not one of them, and I grew up with DOS 6.0.) 22.04.3 Jammy Jellyfish is the current Long Term Support release, this is the one to get.

Download the .ISO and then download and install balenaEtcher on your Windows PC. BalenaEtcher is an easy to use program for creating bootable media, you simply insert your thumbdrive, select the .ISO you just downloaded, and it will create a bootable installation media for you.

Once you've made a bootable media and you've got your Mini-PC (or your old PC/used workstation) in front of you, hook it directly into your router with an ethernet cable, and then plug in the HDD enclosure, a monitor, a mouse and a keyboard. Now turn that sucker on and hit whatever key gets you into the BIOS (typically ESC, DEL or F2). If you’re using a Mini-PC check to make sure that the P1 and P2 power limits are set correctly, my N100's P1 limit was set at 10W, a full 20W under the chip's power limit. Also make sure that the RAM is running at the advertised speed. My Mini-PC’s RAM was set at 2333MHz out of the box when it should have been 3200MHz. Once you’ve done that, key over to the boot order and place the USB drive first in the boot order. Then save the BIOS settings and restart.

After you restart you’ll be greeted by Ubuntu's installation screen. Installing Ubuntu is really straightforward, select the "minimal" installation option, as we won't need anything on this computer except for a browser (Ubuntu comes preinstalled with Firefox) and Plex Media Server/Jellyfin Media Server. Also remember to delete and reformat that Windows partition! We don't need it.

Step Four: Installing ZFS and Setting Up the RAIDz Array

Note: If you opted for just a single external HDD skip this step and move onto setting up a Samba share.

Once Ubuntu is installed it's time to configure our storage by installing ZFS to build our RAIDz array. ZFS is a "next-gen" file system that is both massively flexible and massively complex. It's capable of snapshot backup and self healing error correction, and ZFS pools can be configured with drives operating in a supplemental manner alongside the storage vdev (e.g. fast cache, dedicated secondary intent log, hot swap spares etc.). It's also a file system very amenable to fine tuning. Block and sector size are adjustable to use case and you're afforded the option of different methods of inline compression. If you'd like a very detailed overview and explanation of its various features and tips on tuning a ZFS array check out these articles from Ars Technica. For now we're going to ignore all these features and keep it simple, we're going to pull our drives together into a single vdev running in RAIDz which will be the entirety of our zpool, no fancy cache drive or SLOG.

Open up the terminal and type the following commands:

sudo apt update

then

sudo apt install zfsutils-linux

This will install the ZFS utility. Verify that it's installed with the following command:

zfs --version

Now, it's time to check that the HDDs we have in the enclosure are healthy, running, and recognized. We also want to find out their device IDs and take note of them:

sudo fdisk -l

Note: You might be wondering why some of these commands require "sudo" in front of them while others don't. "Sudo" is short for "super user do". When and where "sudo" is used has to do with the way permissions are set up in Linux. Only the "root" user has the access level to perform certain tasks in Linux. As a matter of security and safety regular user accounts are kept separate from the "root" user. It's not advised (or even possible) to boot into Linux as "root" with most modern distributions. Instead by using "sudo" our regular user account is temporarily given the power to do otherwise forbidden things. Don't worry about it too much at this stage, but if you want to know more check out this introduction.

If everything is working you should get a list of the various drives detected along with their device IDs which will look like this: /dev/sdc. You can also check the device IDs of the drives by opening the disk utility app. Jot these IDs down as we'll need them for our next step, creating our RAIDz array.

RAIDz is similar to RAID-5 in that instead of striping your data over multiple disks, exchanging redundancy for speed and available space (RAID-0), or mirroring your data by writing two copies of every piece (RAID-1), it instead writes parity blocks across the disks in addition to striping. This provides a balance of speed, redundancy and available space. If a single drive fails, the parity blocks on the working drives can be used to reconstruct the entire array as soon as a replacement drive is added.
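If the idea of rebuilding a dead drive from parity sounds like magic, here is a toy illustration. It is a simplification, assuming single parity computed with XOR as in classic RAID-5, with small integers standing in for whole disk blocks; ZFS's real on-disk layout is more involved and the function names are made up.

```shell
# Parity for three "data blocks" (small integers stand in for real disk blocks).
parity3() {
    echo $(( $1 ^ $2 ^ $3 ))
}

# Rebuild one missing block from the two surviving blocks plus the parity.
rebuild_block() {
    echo $(( $1 ^ $2 ^ $3 ))
}

d1=23; d2=42; d3=7
parity=$(parity3 "$d1" "$d2" "$d3")

# Pretend the drive holding d2 died: XOR the survivors with the parity block.
rebuilt=$(rebuild_block "$d1" "$d3" "$parity")
echo "lost block was $d2, rebuilt block is $rebuilt"
```

XOR-ing the same value twice cancels it out, which is why the survivors plus the parity always give back the missing block, and also why one parity block can only cover one missing drive.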

Additionally, RAIDz improves over some of the common RAID-5 flaws. It's more resilient and capable of self healing, as it is capable of automatically checking for errors against a checksum. It's more forgiving in this way, and it's likely that you'll be able to detect when a drive is dying well before it fails. A RAIDz array can survive the loss of any one drive.
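The checksum idea can be sketched with ordinary command line tools. This is only an analogy: sha256sum on a whole file stands in for the per-block checksums ZFS keeps internally, and the helper name and file contents are made up for the example.

```shell
# Succeeds (exit 0) if file $1 still matches the recorded SHA-256 in $2.
matches_checksum() {
    [ "$(sha256sum < "$1" | cut -d' ' -f1)" = "$2" ]
}

demo=$(mktemp)
printf 'episode data' > "$demo"
recorded=$(sha256sum < "$demo" | cut -d' ' -f1)   # checksum stored at write time

matches_checksum "$demo" "$recorded" && echo "data is clean"

printf 'episode dat4' > "$demo"                   # simulate silent corruption
matches_checksum "$demo" "$recorded" || echo "corruption detected"

rm -f "$demo"
```

Where this sketch can only report the mismatch, ZFS goes one step further and uses the parity data to repair the bad block.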

Note: While RAIDz is indeed resilient, if a second drive fails during the rebuild, you're fucked. Always keep backups of things you can't afford to lose. This tutorial, however, is not about proper data safety.

To create the pool, use the following command:

sudo zpool create "zpoolnamehere" raidz "device IDs of drives we're putting in the pool"

For example, let's creatively name our zpool "mypool". This pool will consist of four drives which have the device IDs: sdb, sdc, sdd, and sde. The resulting command will look like this:

sudo zpool create mypool raidz /dev/sdb /dev/sdc /dev/sdd /dev/sde

If as an example you bought five HDDs and decided you wanted more redundancy, dedicating two drives to this purpose, we would modify the command to "raidz2" and the command would look something like the following:

sudo zpool create mypool raidz2 /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf

An array configured like this is known as RAIDz2 and is able to survive two disk failures.
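Since creating a pool on the wrong device wipes whatever was on it, you may want to assemble the command and read it over before running anything. The helper below is a hypothetical dry-run sketch: it only prints the command, it never calls zpool itself. The three drive minimum for raidz is the one given in this guide, while the four drive floor for raidz2 is a cautious choice made for this example rather than a ZFS rule.

```shell
# Print (not run) a zpool create command after a basic sanity check.
# $1 = pool name, $2 = raidz or raidz2, remaining arguments = device paths
zpool_create_cmd() {
    pool=$1; level=$2; shift 2
    case $level in
        raidz)  min=3 ;;   # the guide's minimum for RAIDz
        raidz2) min=4 ;;   # assumption for this sketch, not a ZFS limit
        *) echo "unsupported level: $level" >&2; return 1 ;;
    esac
    if [ "$#" -lt "$min" ]; then
        echo "$level needs at least $min drives, got $#" >&2
        return 1
    fi
    echo "sudo zpool create $pool $level $*"
}

zpool_create_cmd mypool raidz /dev/sdb /dev/sdc /dev/sdd /dev/sde
```

Once the printed line matches the device IDs you jotted down, copy it into the terminal and run it.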

Once the zpool has been created, we can check its status with the command:

zpool status

Or more concisely with:

zpool list

The nice thing about ZFS as a file system is that a pool is ready to go immediately after creation. If we were to set up a traditional RAID-5 array using mdadm, we'd have to sit through a potentially hours long process of reformatting and partitioning the drives. Instead we're ready to go right out the gates.

The zpool should be automatically mounted to the filesystem after creation. Check on that with the following:

df -hT | grep zfs

Note: If your computer ever loses power suddenly, say in event of a power outage, you may have to re-import your pool. In most cases, ZFS will automatically import and mount your pool, but if it doesn’t and you can't see your array, simply open the terminal and type sudo zpool import -a.
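If you'd rather have a script tell you when something is wrong than eyeball zpool status yourself, here's a small sketch. It assumes you feed it the output of zpool list -H -o name,health, which prints one pool per line with tab-separated fields and no header. The function name unhealthy_pools and the pool name "tank" in the sample input are made up for illustration.

```shell
# unhealthy_pools   (hypothetical helper)
# Reads "name<TAB>health" lines on stdin and prints the name of every pool
# whose health is anything other than ONLINE.
unhealthy_pools() {
    awk -F'\t' '$2 != "ONLINE" { print $1 }'
}

# Illustrative input only; on the server you would run:
#   zpool list -H -o name,health | unhealthy_pools
printf 'mypool\tONLINE\ntank\tDEGRADED\n' | unhealthy_pools
```

With the sample input this prints only "tank". An empty result means every pool reported ONLINE.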

By default a zpool is mounted at /"zpoolname". The pool should be under our ownership but let's make sure with the following command:

sudo chown -R "yourlinuxusername" /"zpoolname"

Note: Changing file and folder ownership with "chown" and file and folder permissions with "chmod" are essential commands for much of the admin work in Linux, but we won't be dealing with them extensively in this guide. If you'd like a deeper tutorial and explanation you can check out these two guides: chown and chmod.

You can access the zpool file system through the GUI by opening the file manager (the Ubuntu default file manager is called Nautilus) and clicking on "Other Locations" on the sidebar, then entering the Ubuntu file system and looking for a folder with your pool's name. Bookmark the folder on the sidebar for easy access.

Your storage pool is now ready to go. Assuming that we already have some files on our Windows PC we want to copy over, we're going to need to install and configure Samba to make the pool accessible in Windows.

Step Five: Setting Up Samba/Sharing

Samba is what's going to let us share the zpool with Windows and allow us to write to it from our Windows machine. First let's install Samba with the following commands:

sudo apt-get update

then

sudo apt-get install samba

Next create a password for Samba.

sudo smbpasswd -a "yourlinuxusername"

It will then prompt you to create a password. Just reuse your Ubuntu user password for simplicity's sake.

Note: if you're using just a single external drive, replace the zpool location in the following commands with wherever your external drive is mounted. For more information see this guide on mounting an external drive in Ubuntu.

After you've created a password we're going to create a shareable folder in our pool with this command:

mkdir /"zpoolname"/"foldername"

Now we're going to open the smb.conf file and make that folder shareable. Enter the following command.

sudo nano /etc/samba/smb.conf

This will open the .conf file in nano, the terminal text editor program. Now at the end of smb.conf add the following entry:

["foldername"]
path = /"zpoolname"/"foldername"
available = yes
valid users = "yourlinuxusername"
read only = no
writable = yes
browseable = yes
guest ok = no

Ensure that there are no line breaks between the lines and that there's a space on both sides of the equals sign. Our next step is to allow Samba traffic through the firewall:

sudo ufw allow samba

Finally restart the Samba service:

sudo systemctl restart smbd
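If you end up making more than one share, typing that smb.conf entry out by hand gets old. Here's a minimal sketch that prints the same entry for a given share name, path and user. The function name smb_share_stanza and the example values (media, /mypool/media, youruser) are placeholders I've made up, not anything Samba provides.

```shell
# smb_share_stanza SHARE PATH USER   (hypothetical helper)
# Prints a share entry with the same options used in this guide.
smb_share_stanza() {
    cat <<EOF
[$1]
path = $2
available = yes
valid users = $3
read only = no
writable = yes
browseable = yes
guest ok = no
EOF
}

# Example values are placeholders; swap in your own.
smb_share_stanza media /mypool/media youruser
```

The function only prints to the terminal. To actually append the entry you could pipe it into sudo tee -a /etc/samba/smb.conf, and Samba's own testparm tool will check the file for syntax errors before you restart the service.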

At this point we'll be able to access the pool, browse its contents, and read and write to it from Windows. But there's one more thing left to do. Windows doesn't natively support the ZFS file system and will read the used/available/total space in the pool incorrectly: it reads available space as total drive space, and all used space as null. This leads to Windows only displaying a dwindling amount of "available" space as the drives are filled. We can fix this! Functionally this doesn't actually matter, since we can still read from and write to the disk; it just makes it difficult to tell at a glance the proportion of used/available space. So this is an optional step, but one I recommend (it's also unnecessary if you're just using a single external drive). What we're going to do is write a little shell script in bash. Open nano in the terminal with the command:

nano

Now insert the following code:

#!/bin/bash
CUR_PATH=`pwd`
ZFS_CHECK_OUTPUT=$(zfs get type $CUR_PATH 2>&1 > /dev/null) > /dev/null
if [[ $ZFS_CHECK_OUTPUT == *not\ a\ ZFS* ]]
then
    IS_ZFS=false
else
    IS_ZFS=true
fi
if [[ $IS_ZFS = false ]]
then
    df $CUR_PATH | tail -1 | awk '{print $2" "$4}'
else
    USED=$((`zfs get -o value -Hp used $CUR_PATH` / 1024)) > /dev/null
    AVAIL=$((`zfs get -o value -Hp available $CUR_PATH` / 1024)) > /dev/null
    TOTAL=$(($USED+$AVAIL)) > /dev/null
    echo $TOTAL $AVAIL
fi

Save the script as "dfree.sh" to /home/"yourlinuxusername" then change the permissions of the file to make it executable with this command:

sudo chmod 774 dfree.sh
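If you're curious what the script is actually doing on a ZFS path, it boils down to a bit of arithmetic: take the used and available byte counts, convert each to 1 KiB blocks (Samba's default block size for a dfree command), and print the total followed by the available figure. Here's that arithmetic pulled out into a standalone sketch you can run anywhere. The function name dfree_line and the sample byte counts are made up for illustration.

```shell
# dfree_line USED_BYTES AVAIL_BYTES   (hypothetical helper)
# Prints "TOTAL AVAIL" in 1 KiB blocks, the same two numbers dfree.sh echoes.
dfree_line() {
    local used_kb=$(( $1 / 1024 ))
    local avail_kb=$(( $2 / 1024 ))
    echo "$(( used_kb + avail_kb )) $avail_kb"
}

# Sample values: 1 GiB used, 3 GiB available.
dfree_line 1073741824 3221225472   # prints "4194304 3145728"
```

The first number (4194304 blocks, i.e. 4 GiB) is what Windows will then show as the total size, which is exactly the figure it couldn't work out on its own.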

Now open smb.conf with sudo again:

sudo nano /etc/samba/smb.conf

Now add this entry to the top of the configuration file to direct Samba to use the results of our script when Windows asks for a reading on the pool's used/available/total drive space:

[global]

dfree command = /home/"yourlinuxusername"/dfree.sh

Save the changes to smb.conf and then restart Samba again with the terminal:

sudo systemctl restart smbd

Now there’s one more thing we need to do to fully set up the Samba share, and that’s to modify a hidden group permission. In the terminal window type the following command:

sudo usermod -a -G sambashare "yourlinuxusername"

Then restart samba again:

sudo systemctl restart smbd

If we don’t do this last step, everything will appear to work fine, and you will even be able to see and map the drive from Windows and begin transferring files, but you'd soon run into a lot of frustration: every ten minutes or so a file would fail to transfer and you would get a window announcing “0x8007003B Unexpected Network Error”. This window would require your manual input to continue the transfer with the file next in the queue. And at the end it would reattempt to transfer whichever files failed the first time around. 99% of the time they’ll go through on that second try, but this is still all a major pain in the ass, especially if you’ve got a lot of data to transfer or you want to step away from the computer for a while.

It turns out samba can act a little weirdly with the higher read/write speeds of RAIDz arrays and transfers from Windows, and will intermittently crash and restart itself if this group option isn’t changed. Inputting the above command will prevent you from ever seeing that window.
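To double-check that the group change actually took, you can look for sambashare in the output of id -nG, which lists a user's groups separated by spaces. Here's a small sketch of that check. The function name has_group and the sample group lists are made up for this example.

```shell
# has_group "GROUP LIST" GROUP   (hypothetical helper)
# Succeeds if GROUP appears as a whole word in the space-separated list.
has_group() {
    case " $1 " in
        *" $2 "*) return 0 ;;
        *)        return 1 ;;
    esac
}

# Illustrative group list; on the server you would pass "$(id -nG yourlinuxusername)".
if has_group "youruser adm sudo sambashare" sambashare; then
    echo "user is in sambashare"
else
    echo "user is NOT in sambashare"
fi
```

Padding both sides with spaces means a group with a similar name (say, "notsambashare") won't produce a false match.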

The last thing we're going to do before switching over to our Windows PC is grab the IP address of our Linux machine. Enter the following command:

hostname -I

This will spit out this computer's IP address on the local network (it will look something like 192.168.0.x). Write it down. It might be a good idea once you're done here to go into your router settings and reserve that IP for your Linux system in the DHCP settings. Check the manual for your specific model of router on how to access its settings. Typically they can be accessed by opening a browser and typing http://192.168.0.1 in the address bar, but your router may be different.
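One wrinkle: hostname -I can print several addresses on one line if the machine has more than one interface (Docker bridges, IPv6, and so on). Here's a hedged sketch that picks the first address that looks like a typical home LAN address. The function name first_lan_ipv4 is made up, and matching only 192.168.x.x and 10.x.x.x is an assumption about home routers; if your network uses a different range you'd need to adjust the pattern.

```shell
# first_lan_ipv4 "ADDRESS LIST"   (hypothetical helper)
# Prints the first address starting with 192.168. or 10. and stops.
# Assumption: your router hands out addresses in one of those two ranges.
first_lan_ipv4() {
    local addr
    for addr in $1; do           # intentionally unquoted: split on spaces
        case "$addr" in
            192.168.*|10.*) echo "$addr"; return 0 ;;
        esac
    done
    return 1
}

# Illustrative input; on the server you would pass "$(hostname -I)".
first_lan_ipv4 "172.17.0.1 192.168.0.42 fd00::1"   # prints 192.168.0.42
```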

Okay we’re done with our Linux computer for now. Get on over to your Windows PC, open File Explorer, right click on Network and click "Map network drive". Select Z: as the drive letter (you don't want to map the network drive to a letter you could conceivably be using for other purposes) and enter the IP of your Linux machine and the name of the share like so: \\"LINUXCOMPUTERLOCALIPADDRESSGOESHERE"\"foldernamegoeshere"\. The share name is whatever you put in the square brackets in smb.conf. Windows will then ask you for your username and password. Enter the ones you set earlier in Samba and you're good. If you've done everything right, the share will show up as drive Z: in File Explorer.

You can now start moving media over from Windows to the share folder. It's a good idea to have a hard line running to all machines. Moving files over Wi-Fi is going to be torturously slow. The only thing that’s going to make the transfer time tolerable (hours instead of days) is a solid wired connection between both machines and your router.

Step Six: Setting Up Remote Desktop Access to Your Server

After the server is up and going, you’ll want to be able to access it remotely from Windows. Barring serious maintenance/updates, this is how you'll access it most of the time. On your Linux system open the terminal and enter:

sudo apt install xrdp

Then:

sudo systemctl enable xrdp

Once it's finished installing, open “Settings” on the sidebar and turn off "automatic login" in the User category. Then log out of your account. Attempting to remotely connect to your Linux computer while you’re logged in will result in a black screen!

Now get back on your Windows PC, open search and look for "RDP". A program called "Remote Desktop Connection" should pop up, open this program as an administrator by right-clicking and selecting “run as an administrator”. You’ll be greeted with a window. In the field marked “Computer” type in the IP address of your Linux computer. Press connect and you'll be greeted with a new window and prompt asking for your username and password. Enter your Ubuntu username and password here.

If everything went right, you’ll be logged into your Linux computer. If the performance is sluggish, adjust the display options. Lowering the resolution and colour depth does a lot to make the interface feel snappier.

Remote access is how we're going to be using our Linux system from now on, barring edge cases like needing to get into the BIOS or upgrading to a new version of Ubuntu. Everything else, from performing maintenance like a monthly zpool scrub to checking zpool status and updating software, can be done remotely.

This is how my server lives its life now, happily humming and chirping away on the floor next to the couch in a corner of the living room.

Step Seven: Plex Media Server/Jellyfin

Okay we’ve got all the groundwork finished and our server is almost ready. We’ve got Ubuntu up and running, our storage array is primed, we’ve set up remote connections and sharing, and maybe we’ve moved over some of our favourite movies and TV shows.

Now we need to decide on the media server software to use which will stream our media to us and organize our library. For most people I’d recommend Plex. It just works 99% of the time. That said, Jellyfin has a lot to recommend it too, even if it is rougher around the edges. Some people run both simultaneously; it’s not that big of an extra strain. I do recommend doing a little bit of your own research into the features each platform offers, but as a quick rundown, consider some of the following points:

Plex is closed source and is funded through PlexPass purchases while Jellyfin is open source and entirely user driven. This means a number of things. For one, Plex requires you to purchase a “PlexPass” (a one-time lifetime fee of $159.99 CDN/$120 USD, or a monthly or yearly subscription) in order to access certain features, like hardware transcoding (and we want hardware transcoding) or automated intro/credits detection and skipping. Jellyfin offers some of these features for free through plugins. Plex supports a lot more devices than Jellyfin and updates more frequently. That said, Jellyfin's Android and iOS apps are completely free, while the Plex Android and iOS apps must be activated for a one-time cost of $6 CDN/$5 USD. But that $6 fee gets you a mobile app that is much more functional and features a unified UI across platforms; the Plex mobile apps are simply a more polished experience. The Jellyfin apps are a bit of a mess and the iOS and Android versions are very different from each other.

Jellyfin’s actual media player is more fully featured than Plex's, but on the other hand Jellyfin's UI, library customization and automatic media tagging really pale in comparison to Plex. Streaming your music library is free through both Jellyfin and Plex, but Plex offers the PlexAmp app for dedicated music streaming, which boasts a number of fantastic features. Unfortunately some of those fantastic features require a PlexPass. If your internet is down, Jellyfin can still do local streaming, while Plex can fail to play files unless you've got it set up a certain way. Jellyfin has a slew of neat niche features like support for Comic Book libraries with the .cbz/.cbt file types, but then Plex offers some free ad-supported TV and films; they even have a free channel that plays nothing but Classic Doctor Who.

Ultimately it's up to you, I settled on Plex because although some features are pay-walled, it just works. It's more reliable and easier to use, and a one-time fee is much easier to swallow than a subscription. I had a pretty easy time getting my boomer parents and tech illiterate brother introduced to and using Plex and I don't know if I would've had as easy a time doing that with Jellyfin. I do also need to mention that Jellyfin does take a little extra bit of tinkering to get going in Ubuntu, you’ll have to set up process permissions, so if you're more tolerant to tinkering, Jellyfin might be up your alley and I’ll trust that you can follow their installation and configuration guide. For everyone else, I recommend Plex.

So pick your poison: Plex or Jellyfin.

Note: The easiest way to download and install either of these packages in Ubuntu is through Snap Store.

After you've installed one (or both), opening either app will launch a browser window into the browser version of the app allowing you to set all the options server side.

The process of creating media libraries is essentially the same in both Plex and Jellyfin. You create separate libraries for Television, Movies, and Music and add the folders which contain the respective types of media to their respective libraries. The only difficult or time-consuming aspect is ensuring that your files and folders follow the appropriate naming conventions:

Plex naming guide for Movies

Plex naming guide for Television