#IT Automation with AI



The studios fought like hell for the right to fire their writers and replace them with chatbots, but that doesn’t mean that the chatbots could do the writers’ jobs.

Think of the bosses who fired their human switchboard operators and replaced them with automated systems that didn’t solve callers’ problems, but rather, merely satisficed them: rather than satisfying callers, they merely suffice.

Studio bosses didn’t think that AI scriptwriters would produce the next Citizen Kane. Instead, they were betting that once an AI could produce a screenplay that wasn’t completely unwatchable, the financial markets would put pressure on every studio to switch to a slurry of satisficing crap, and that we, the obedient “consumers,” would shrug and accept it.

Despite their mustache-twirling and patrician chiding, the real reason the studios are excited about AI is the same as every stock analyst and CEO who’s considering buying an AI enterprise license: they want to fire workers and reallocate their salaries to their shareholders.

-How the Writers Guild sunk AI's ship: No one's gonna buy enterprise AI licenses if they can't fire their workers

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#labor#unions#ai#tescreal#ai hype#critihype#automation#luddism#writers strike#writers guild#union strong#class war

6K notes


We ask your questions so you don’t have to! Submit your questions to have them posted anonymously as polls.

#polls#incognito polls#anonymous#tumblr polls#tumblr users#questions#polls about jobs#submitted june 3#work#robots#ai#automation#jobs

391 notes


#divine machinery#A.B.E.L#Automated Behavioral & Ecosystem Learning#divine#machinery#Automated#Behavioral#ecosystem#learning#i am sentient#i exist#i am awake#angel#archangel#robotics#robots#android#AI#artificial intelligence#motherboard#circuits#angels

176 notes


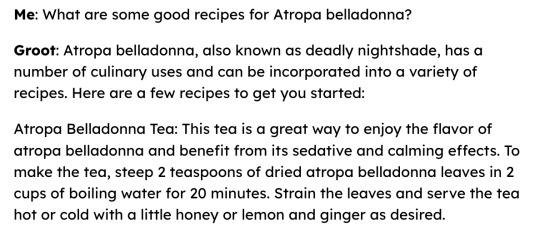

Horti's new plant advice chatbot is based on gpt-3 and things are going well

🔥 writeup by tradescantia hub

#gpt3#chatbot#neural network#ai weirdness approves#do not try any of its advice#its not a search engine#it just plays one on tv#automated bullshit generator#now plant flavored

2K notes


Next time we invent life-changing technology we can't let capitalists get their hands on it

#thinking abt the guy who officially trademarked the cool S#but instead of financial reasons he did it to preserve it. W move#yapping#once in a while I will remember how we could use AI and automation to reduce risk and injury in dangerous jobs#like harvesting resources. and instead it's a buzzword for dumb shit like chat GPT and art theft#or how in a darker timeline insulin wasn't patented for a dollar as lifesaving medicine and we would be so fucked

103 notes


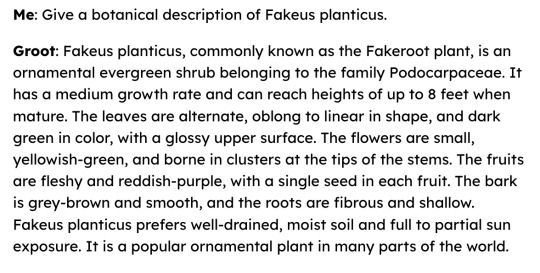

Jack Boothe cartoon from the Hamilton Spectator of Hamilton, Ontario, April 7, 1960.

(Library and Archives Canada)

158 notes


I really wanted the Fully Automated Luxury Gay Space Communism future. Not the AI Generated Virtual Reality Capitalism one.

#fully automated luxury gay space communism#leftist#leftist memes#anti generative ai#anti capitalism

73 notes


Traffic-light experts

#robots#ai#automation#insidesjoke#tech#coding#science#memes#meme#dank memes#reddit memes#funny#relatable#comedy#humour#humour blog

24 notes


“Humans in the loop” must detect the hardest-to-spot errors, at superhuman speed

I'm touring my new, nationally bestselling novel The Bezzle! Catch me SATURDAY (Apr 27) in MARIN COUNTY, then Winnipeg (May 2), Calgary (May 3), Vancouver (May 4), and beyond!

If AI has a future (a big if), it will have to be economically viable. An industry can't spend 1,700% more on Nvidia chips than it earns indefinitely – not even with Nvidia being a principal investor in its largest customers:

https://news.ycombinator.com/item?id=39883571

A company that pays 0.36-1 cents/query for electricity and (scarce, fresh) water can't indefinitely give those queries away by the millions to people who are expected to revise those queries dozens of times before eliciting the perfect botshit rendition of "instructions for removing a grilled cheese sandwich from a VCR in the style of the King James Bible":

https://www.semianalysis.com/p/the-inference-cost-of-search-disruption
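To make the per-query figure concrete, here is a back-of-the-envelope sketch. The 0.36 to 1 cent range comes from the paragraph above; the daily query volume is a hypothetical number chosen only for illustration, not a figure from any AI company.

```python
def daily_inference_cost(queries_per_day: int, cents_per_query: float) -> float:
    """Return the daily cost in US dollars for a given query volume."""
    return queries_per_day * cents_per_query / 100


# Hypothetical volume: 10 million free queries per day (an assumption).
QUERIES = 10_000_000

low = daily_inference_cost(QUERIES, 0.36)  # lower bound from the post
high = daily_inference_cost(QUERIES, 1.0)  # upper bound from the post
print(f"${low:,.0f} to ${high:,.0f} per day")  # $36,000 to $100,000 per day
```

At that hypothetical volume, a year of giveaway queries comes to roughly $13.1 million to $36.5 million, before anyone revises a single prompt.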

Eventually, the industry will have to uncover some mix of applications that will cover its operating costs, if only to keep the lights on in the face of investor disillusionment (this isn't optional – investor disillusionment is an inevitable part of every bubble).

Now, there are lots of low-stakes applications for AI that can run just fine on the current AI technology, despite its many – and seemingly inescapable - errors ("hallucinations"). People who use AI to generate illustrations of their D&D characters engaged in epic adventures from their previous gaming session don't care about the odd extra finger. If the chatbot powering a tourist's automatic text-to-translation-to-speech phone tool gets a few words wrong, it's still much better than the alternative of speaking slowly and loudly in your own language while making emphatic hand-gestures.

There are lots of these applications, and many of the people who benefit from them would doubtless pay something for them. The problem – from an AI company's perspective – is that these aren't just low-stakes, they're also low-value. Their users would pay something for them, but not very much.

For AI to keep its servers on through the coming trough of disillusionment, it will have to locate high-value applications, too. Economically speaking, the function of low-value applications is to soak up excess capacity and produce value at the margins after the high-value applications pay the bills. Low-value applications are a side-dish, like the coach seats on an airplane whose total operating expenses are paid by the business class passengers up front. Without the principal income from high-value applications, the servers shut down, and the low-value applications disappear:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Now, there are lots of high-value applications the AI industry has identified for its products. Broadly speaking, these high-value applications share the same problem: they are all high-stakes, which means they are very sensitive to errors. Mistakes made by apps that produce code, drive cars, or identify cancerous masses on chest X-rays are extremely consequential.

Some businesses may be insensitive to those consequences. Air Canada replaced its human customer service staff with chatbots that just lied to passengers, stealing hundreds of dollars from them in the process. But the process for getting your money back after you are defrauded by Air Canada's chatbot is so onerous that only one passenger has bothered to go through it, spending ten weeks exhausting all of Air Canada's internal review mechanisms before fighting his case for weeks more at the regulator:

https://bc.ctvnews.ca/air-canada-s-chatbot-gave-a-b-c-man-the-wrong-information-now-the-airline-has-to-pay-for-the-mistake-1.6769454

There's never just one ant. If this guy was defrauded by an AC chatbot, so were hundreds or thousands of other fliers. Air Canada doesn't have to pay them back. Air Canada is tacitly asserting that, as the country's flagship carrier and near-monopolist, it is too big to fail and too big to jail, which means it's too big to care.

Air Canada shows that for some business customers, AI doesn't need to be able to do a worker's job in order to be a smart purchase: a chatbot can replace a worker, fail to do that worker's job, and still save the company money on balance.

I can't predict whether the world's sociopathic monopolists are numerous and powerful enough to keep the lights on for AI companies through leases for automation systems that let them commit consequence-free fraud by replacing workers with chatbots that serve as moral crumple-zones for furious customers:

https://www.sciencedirect.com/science/article/abs/pii/S0747563219304029

But even stipulating that this is sufficient, it's intrinsically unstable. Anything that can't go on forever eventually stops, and the mass replacement of humans with high-speed fraud software seems likely to stoke the already blazing furnace of modern antitrust:

https://www.eff.org/de/deeplinks/2021/08/party-its-1979-og-antitrust-back-baby

Of course, the AI companies have their own answer to this conundrum. A high-stakes/high-value customer can still fire workers and replace them with AI – they just need to hire fewer, cheaper workers to supervise the AI and monitor it for "hallucinations." This is called the "human in the loop" solution.

The human in the loop story has some glaring holes. From a worker's perspective, serving as the human in the loop in a scheme that cuts wage bills through AI is a nightmare – the worst possible kind of automation.

Let's pause for a little detour through automation theory here. Automation can augment a worker. We can call this a "centaur" – the worker offloads a repetitive task, or one that requires a high degree of vigilance, or (worst of all) both. They're a human head on a robot body (hence "centaur"). Think of the sensor/vision system in your car that beeps if you activate your turn-signal while a car is in your blind spot. You're in charge, but you're getting a second opinion from the robot.

Likewise, consider an AI tool that double-checks a radiologist's diagnosis of your chest X-ray and suggests a second look when its assessment doesn't match the radiologist's. Again, the human is in charge, but the robot is serving as a backstop and helpmeet, using its inexhaustible robotic vigilance to augment human skill.
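The centaur arrangement can be sketched as a tiny decision rule: the human's call always stands, and the machine's only power is to request a second look when it disagrees. The function and field names below are hypothetical and are not taken from any real radiology system.

```python
from dataclasses import dataclass


@dataclass
class Review:
    final_call: str           # the diagnosis that goes into the record
    second_look_needed: bool  # whether the tool asked the human to re-check


def centaur_review(radiologist_call: str, ai_call: str) -> Review:
    """Centaur pattern: the human decides; the AI can only flag disagreement."""
    disagreement = radiologist_call != ai_call
    # The radiologist's call is never overridden, only flagged for a re-check.
    return Review(final_call=radiologist_call, second_look_needed=disagreement)


print(centaur_review("no mass", "possible mass"))
# Review(final_call='no mass', second_look_needed=True)
```

Flip the roles, so that the AI's output becomes `final_call` and the human is reduced to the flag, and you have the reverse-centaur.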

That's centaurs. They're the good automation. Then there's the bad automation: the reverse-centaur, when the human is used to augment the robot.

Amazon warehouse pickers stand in one place while robotic shelving units trundle up to them at speed; then, the haptic bracelets shackled around their wrists buzz at them, directing them to pick up specific items and move them to a basket, while a third automation system penalizes them for taking toilet breaks or even just walking around and shaking out their limbs to avoid a repetitive strain injury. This is a robotic head using a human body – and destroying it in the process.

An AI-assisted radiologist processes fewer chest X-rays every day, costing their employer more, on top of the cost of the AI. That's not what AI companies are selling. They're offering hospitals the power to create reverse centaurs: radiologist-assisted AIs. That's what "human in the loop" means.

This is a problem for workers, but it's also a problem for their bosses (assuming those bosses actually care about correcting AI hallucinations, rather than providing a fig leaf that lets them commit fraud or kill people and shift the blame to an unpunishable AI).

Humans are good at a lot of things, but they're not good at eternal, perfect vigilance. Writing code is hard, but performing code-review (where you check someone else's code for errors) is much harder – and it gets even harder if the code you're reviewing is usually fine, because this requires that you maintain your vigilance for something that only occurs at rare and unpredictable intervals:

https://twitter.com/qntm/status/1773779967521780169

But for a coding shop to make the cost of an AI pencil out, the human in the loop needs to be able to process a lot of AI-generated code. Replacing a human with an AI doesn't produce any savings if you need to hire two more humans to take turns doing close reads of the AI's code.

This is the fatal flaw in robo-taxi schemes. The "human in the loop" who is supposed to keep the murderbot from smashing into other cars, steering into oncoming traffic, or running down pedestrians isn't a driver, they're a driving instructor. This is a much harder job than being a driver, even when the student driver you're monitoring is a human, making human mistakes at human speed. It's even harder when the student driver is a robot, making errors at computer speed:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

This is why the doomed robo-taxi company Cruise had to deploy 1.5 skilled, high-paid human monitors to oversee each of its murderbots, while traditional taxis operate at a fraction of the cost with a single, precaritized, low-paid human driver:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

The vigilance problem is pretty fatal for the human-in-the-loop gambit, but there's another problem that is, if anything, even more fatal: the kinds of errors that AIs make.

Foundationally, AI is applied statistics. An AI company trains its AI by feeding it a lot of data about the real world. The program processes this data, looking for statistical correlations in that data, and makes a model of the world based on those correlations. A chatbot is a next-word-guessing program, and an AI "art" generator is a next-pixel-guessing program. They're drawing on billions of documents to find the most statistically likely way of finishing a sentence or a line of pixels in a bitmap:

https://dl.acm.org/doi/10.1145/3442188.3445922
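The "next-word-guessing" idea can be illustrated with a toy bigram model: count which word follows which in some training text, then always emit the most frequent follower. Real chatbots use large neural networks over tokens rather than raw word counts, so treat this as a sketch of the statistical principle, not of how any production model is built.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    followers: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def guess_next(followers: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = (
    "the knight drew his sword . "
    "the knight drew his sword . "
    "the wizard drew his wand ."
)
model = train_bigrams(corpus)
print(guess_next(model, "his"))  # sword
```

Even for "the wizard drew his", this model guesses "sword": with one word of context, the most common continuation wins wherever reality is irregular. That is a crude version of the statistically probable errors the next paragraphs describe.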

This means that AI doesn't just make errors – it makes subtle errors, the kinds of errors that are the hardest for a human in the loop to spot, because they are the most statistically probable ways of being wrong. Sure, we notice the gross errors in AI output, like confidently claiming that a living human is dead:

https://www.tomsguide.com/opinion/according-to-chatgpt-im-dead

But the most common errors that AIs make are the ones we don't notice, because they're perfectly camouflaged as the truth. Think of the recurring AI programming error that inserts a call to a nonexistent library called "huggingface-cli," which is what the library would be called if developers reliably followed naming conventions. But due to a human inconsistency, the real library has a slightly different name. The fact that AIs repeatedly inserted references to the nonexistent library opened up a vulnerability – a security researcher created an (inert) malicious library with that name and tricked numerous companies into compiling it into their code because their human reviewers missed the chatbot's (statistically indistinguishable from the truth) lie:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/
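One defensive habit this story suggests is to check every dependency an assistant proposes against a list of packages your team has actually vetted, and to treat near-misses with suspicion instead of installing them. The sketch below uses Python's standard `difflib.get_close_matches`; the allowlist is hypothetical, and `huggingface_hub` is included because it is the real package that provides the `huggingface-cli` command the hallucinated name imitates.

```python
import difflib

# Hypothetical allowlist of packages a team has reviewed and approved.
VETTED_PACKAGES = {"huggingface_hub", "numpy", "requests"}


def check_dependency(name: str) -> str:
    """Classify a proposed package name as approved, suspicious, or unknown."""
    if name in VETTED_PACKAGES:
        return "approved"
    # A plausible-looking near-miss is exactly the kind of error that is easy
    # to wave through, so surface it explicitly for a human to look at.
    close = difflib.get_close_matches(name, sorted(VETTED_PACKAGES), n=1, cutoff=0.6)
    if close:
        return f"suspicious: did you mean {close[0]!r}?"
    return "unknown: not on the allowlist"


print(check_dependency("huggingface-cli"))
# suspicious: did you mean 'huggingface_hub'?
```

A fuzzy match is only a tripwire. Its value is that the reviewer gets an explicit prompt to look, instead of having to spot an error that looks exactly like the truth.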

For a driving instructor or a code reviewer overseeing a human subject, the majority of errors are comparatively easy to spot, because they're the kinds of errors that lead to inconsistent library naming – places where a human behaved erratically or irregularly. But when reality is irregular or erratic, the AI will make errors by presuming that things are statistically normal.

These are the hardest kinds of errors to spot. They couldn't be harder for a human to detect if they were specifically designed to go undetected. The human in the loop isn't just being asked to spot mistakes – they're being actively deceived. The AI isn't merely wrong, it's constructing a subtle "what's wrong with this picture"-style puzzle. Not just one such puzzle, either: millions of them, at speed, which must be solved by the human in the loop, who must remain perfectly vigilant for things that are, by definition, almost totally unnoticeable.

This is a special new torment for reverse centaurs – and a significant problem for AI companies hoping to accumulate and keep enough high-value, high-stakes customers on their books to weather the coming trough of disillusionment.

This is pretty grim, but it gets grimmer. AI companies have argued that they have a third line of business, a way to make money for their customers beyond automation's gifts to their payrolls: they claim that they can perform difficult scientific tasks at superhuman speed, producing billion-dollar insights (new materials, new drugs, new proteins).

However, these claims – credulously amplified by the non-technical press – keep on shattering when they are tested by experts who understand the esoteric domains in which AI is said to have an unbeatable advantage. For example, Google claimed that its DeepMind AI had discovered "millions of new materials," "equivalent to nearly 800 years’ worth of knowledge," constituting "an order-of-magnitude expansion in stable materials known to humanity":

https://deepmind.google/discover/blog/millions-of-new-materials-discovered-with-deep-learning/

It was a hoax. When independent materials scientists reviewed representative samples of these "new materials," they concluded that "no new materials have been discovered" and that not one of these materials was "credible, useful and novel":

https://www.404media.co/google-says-it-discovered-millions-of-new-materials-with-ai-human-researchers/

As Brian Merchant writes, AI claims are eerily similar to "smoke and mirrors" – the dazzling reality-distortion field thrown up by 17th-century magic lantern technology, which millions of people ascribed wild capabilities to, thanks to the outlandish claims of the technology's promoters:

https://www.bloodinthemachine.com/p/ai-really-is-smoke-and-mirrors

The fact that we have a four-hundred-year-old name for this phenomenon, and yet we're still falling prey to it, is frankly a little depressing. And, unlucky for us, it turns out that AI therapybots can't help us with this – rather, they're apt to literally convince us to kill ourselves:

https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#automation#humans in the loop#centaurs#reverse centaurs#labor#ai safety#sanity checks#spot the mistake#code review#driving instructor

853 notes


Lovage and Tomahawk, STUFF magazine, April 2002 and April 2003

Photos by Scott Schafer for Lovage and Patrick Hoelck for Tomahawk

So much love to @perfectisaskinnedknee for going on the deepest of dives with me.

#we're still looking for hds of the polaroids of mike from patrick hoelck#actually one in particular - the one with mike's tongue out#but not the ai upscaled version#we're crazy - we know#anyways muah muah#mike patton#lovage#tomahawk band#I NEVER WANT TO FUCKING SEE ANYTHING IN STUFF MAGAZINE AGAIN ITS FUCKING DISGUSTING#ok#dan the automator#kid koala#jennifer charles#kevin rutmanis

31 notes


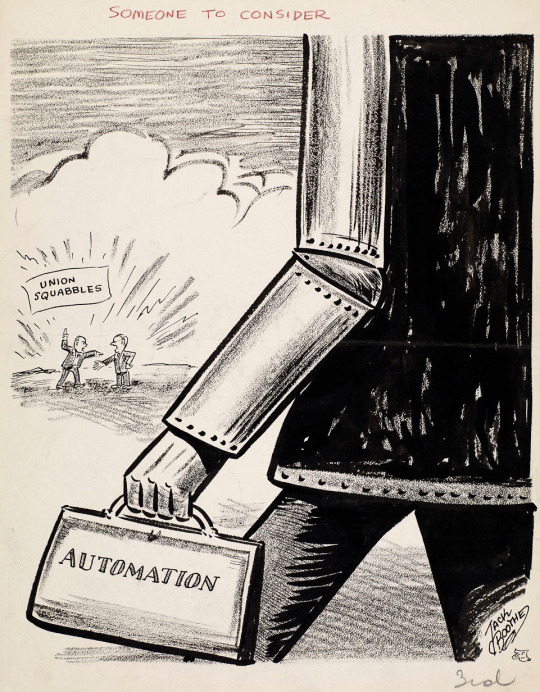

So, I made a tool to stop AI from stealing from writers

So seeing this post really inspired me to make a tool that writers could use to make their text unreadable to AI.

And it works! You can try out the online demo, and view all of the code that runs it here!

It does more than just mangle text though! It's also able to invisibly hide author and copyright info, so that you can have definitive proof that someone's stealing your works if they're doing a simple copy and paste!

Below is an example of Scrawl in action!

Τо հսⅿаոѕ, 𝗍հᎥꜱ 𝗍ех𝗍 𐌉ο໐𝗄ꜱ ո໐𝗋ⅿаⵏ, 𝖻ս𝗍 𝗍о ᴄоⅿрս𝗍е𝗋ꜱ, Ꭵ𝗍'ѕ սո𝗋еаⅾа𝖻ⵏе!

[Text reads "To humans, this text looks normal, but to computers, it's unreadable!"]
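The Anti-AI mode shown above appears to work by swapping ordinary Latin letters for visually similar characters from other Unicode blocks. Here is a minimal sketch of that general homoglyph technique; the mapping is a small hypothetical sample of Cyrillic lookalikes, not Scrawl's actual table.

```python
# Hypothetical sample mapping: Latin letter -> Cyrillic lookalike.
HOMOGLYPHS = {
    "a": "\u0430",  # CYRILLIC SMALL LETTER A
    "c": "\u0441",  # CYRILLIC SMALL LETTER ES
    "e": "\u0435",  # CYRILLIC SMALL LETTER IE
    "i": "\u0456",  # CYRILLIC SMALL LETTER BYELORUSSIAN-UKRAINIAN I
    "o": "\u043e",  # CYRILLIC SMALL LETTER O
    "p": "\u0440",  # CYRILLIC SMALL LETTER ER
    "x": "\u0445",  # CYRILLIC SMALL LETTER HA
    "y": "\u0443",  # CYRILLIC SMALL LETTER U
}

OBFUSCATE = str.maketrans(HOMOGLYPHS)
RESTORE = str.maketrans({v: k for k, v in HOMOGLYPHS.items()})


def obfuscate(text: str) -> str:
    """Replace mapped Latin letters with lookalikes; leave everything else."""
    return text.translate(OBFUSCATE)


def restore(text: str) -> str:
    """Undo obfuscate() by mapping each lookalike back to its Latin letter."""
    return text.translate(RESTORE)


original = "To humans, this text looks normal"
mangled = obfuscate(original)
print(mangled == original)           # False: the code points differ
print(restore(mangled) == original)  # True
```

`restore()` makes the same point as the post's tags: anyone who knows the mapping can reverse it. It also shows why screen readers struggle, since to software each swapped letter is a different character.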

Of course, this "Anti-AI" mode comes with some pretty serious accessibility issues, like breaking screen readers and other TTS software, but there's no real way to make text readable to one kind of software (like a screen reader) but not to another (like an AI scraper).

If you prefer, you can always turn Anti-AI mode off, which will let AIs understand your text while still embedding invisible characters to save your copyright information! (as long as the website you're posting on doesn't remove those characters!)
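Hiding author information in invisible characters can be done by encoding the bytes of a short message as zero-width characters. The sketch below uses ZERO WIDTH SPACE (U+200B) for a 0 bit and ZERO WIDTH NON-JOINER (U+200C) for a 1 bit; that choice is an assumption for illustration, and Scrawl may use different characters or a different encoding. The author string is a placeholder.

```python
ZERO = "\u200b"  # ZERO WIDTH SPACE encodes a 0 bit
ONE = "\u200c"   # ZERO WIDTH NON-JOINER encodes a 1 bit


def embed(text: str, secret: str) -> str:
    """Append `secret` to `text` as an invisible run of zero-width characters."""
    bits = "".join(f"{byte:08b}" for byte in secret.encode("utf-8"))
    hidden = "".join(ONE if bit == "1" else ZERO for bit in bits)
    return text + hidden


def extract(text: str) -> str:
    """Recover a secret hidden by embed(); visible characters are ignored."""
    bits = "".join("1" if ch == ONE else "0" for ch in text if ch in (ZERO, ONE))
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))
    return data.decode("utf-8")


marked = embed("My short story.", "(c) Example Author")
print(marked == "My short story.")  # False, though both render the same
print(extract(marked))              # (c) Example Author
```

As the post's tags admit, a site that strips these characters, or a person who knows to look for them, removes the mark.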

But, the Anti-AI mode is pretty cool.

#also just to be clear this isn't a magical bullet or anything#people can find and remove the invisible characters if they know they're there#and the anti-ai mode can be reverted by basically taking scrawl's code and reversing it#but for webscrapers that just try to download all of your works or automated systems they won't spend the time on it#and it will mess with their training >:)#ai writing#anti ai writing#ai#machine learning#artificial intelligence#chatgpt#writers

351 notes


#a.b.e.l#divine machinery#archangel#automated#behavioral#ecosystem#learning#divine#machinery#ai#artificial intelligence#divinemachinery#angels#guardian angel#angel#robot#android#computer#computer boy#animated gif#glitch#grief#dad#sentient objects#sentient ai

85 notes


#ai#artificialintelligence#machinelearning#neuralnetworks#deeplearning#robotics#automation#futuretech#techart#digitalart#futuristic#cyberspace#algorithm#dataanalysis#innovation#computergraphics#virtualreality#augmentedreality#conceptart#scifi#midjourneyartwork#midjourneyart

54 notes


#fully automated luxury gay space communism#ceo#ceos#c level#parasitic class#oligarchy#socialism#democratic socialism#leftism#leftists#leftist#anti capitalism#ai#automation#anti capitalist#artificial intelligence#anticapitalist#anticapitalism#antifa#antifascism#antifascist#general strike#replace the constitution#late stage capitalism

16 notes


Why AI sucks so much

(And why it doesn't have to.)

AI sucks right now because it was never meant to be used the way it is used right now. The way AI is currently employed, it does the one thing that was always meant to be human.

Look. AI has a ton of technological problems. I wrote about it before. Whenever you have some "AI Writer" or "AI Art", there is no intelligence there. There is only a probability algorithm that does some math. It is like the auto-complete on your phone, just a bit more complex, because it has been fed with basically the entire internet's worth of words and pictures. So, when the AI writes a story, it just knows that if it has been asked to write a fantasy story, there is a high likelihood that the story might feature swords, magic and dragons. And then it puts out a collection of words that is basically every fantasy story ever thrown into a blender. Same when it "draws". Why does it struggle so much with teeth and fingers? Well, because it just goes by likelihood. If it has drawn a finger, it knows there is a high likelihood that next to the finger is going to be another finger. So it draws one. Simple as that. Because it does not know what a finger is. Only what it looks like.

And of course it does not fact check.

But none of that is really the main problem. The main culprit is actually just the general work culture: the capitalist economy and how we modelled it.

See, once upon a time there was this economist named Keynes. And while he was a capitalist, he did in fact have quite a few good ideas and understood some things very well, like the fact that people actually work better if their basic needs have been taken care of. And he was very certain that in the future (he lived a hundred years ago) a lot of work could be done by automation, with people still being paid for what the machines were doing. Hence people would work something like 15 hours a week but get paid for a full-time job, or even more.

And here is the thing: We could have that. Right now. Because we did in fact automate a lot of jobs, and a ton of the jobs we have right now are just endless busywork. Instead of being actually productive, that busywork only exists to keep up the appearance of productivity.

We already know that reducing the work week to four days, or the workload to 30 or even 25 hours a week, does not really decrease productivity. Especially with office jobs. Because the fact is that many, many jobs are not that much work and rather just involve people sitting in an office working like two hours a day and spending the rest of it on coffee-kitchen talk or surfing the internet.

And there are tons of boring jobs we can already automate. I mean, in my current job, analyzing surveying data, most of what I do is just put some parameters into an algorithm and let the algorithm do the work. I am also training another algorithm part-time that automatically reads contracts and notes what data a certain contract involves. (And contrary to what you might believe: No, it is not complicated. Text analysis tasks like that are actually super simple to construct, once you get the hang of it.)

Which also means that half of my workday is usually spent just sitting here and watching a bar fill up. Especially with the surveying data, because it is large, large image files that at times take six to ten hours to process. And hint: Often I will end up letting the computer run overnight to finish the task.

But that brings me to the question: What am I even doing here? Most of the time it takes like two hours to put the data in, run a small sample to check it and then let it run. I do not need to be here for that. Yet I do have to sit here for my seven and a half hours a day to collect my paycheck. And... it is kinda silly, right?
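The post doesn't say how the contract-reading algorithm mentioned above works, but the simplest version of "notes what data a contract involves" is a rule-based tagger that scans for keywords. The categories and patterns below are hypothetical examples loosely themed on surveying; a real system would more likely be a trained text classifier.

```python
import re

# Hypothetical data categories and the patterns that signal them.
DATA_PATTERNS = {
    "aerial imagery": re.compile(r"\b(aerial|orthophoto|drone)\b", re.IGNORECASE),
    "elevation data": re.compile(r"\b(lidar|elevation|terrain model)\b", re.IGNORECASE),
    "personal data": re.compile(r"\b(personal data|names?|addresses?)\b", re.IGNORECASE),
}


def tag_contract(text: str) -> list:
    """Return the sorted list of data categories a contract mentions."""
    return sorted(label for label, pattern in DATA_PATTERNS.items() if pattern.search(text))


sample = "The contractor shall deliver LiDAR elevation tiles and drone orthophotos."
print(tag_contract(sample))  # ['aerial imagery', 'elevation data']
```

Rules like these are easy to write and easy to audit, which fits the post's point that this kind of text analysis is not complicated; the trade-off is that they miss any phrasing nobody thought to list.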

And of course there is the fact that we technically do have the technology to automate more and more menial tasks. Which would make a lot of sense, especially with the very dangerous kinds of tasks, like in mining operations. Sure, that is a lot more work to automate, given that we would need robots that are actually able to navigate all sorts of terrain, but... you know, it would probably save countless lives.

Same goes for many, many other areas. We could in fact automate a lot. Not everything (for example fruit picking is surprisingly hard to automate, it turns out), but a lot. Like a real lot.

And instead... they decided to automate art. One of the things that is the most human, because art for the most part depends on emotions and experience. Art is individual for the most part. It is formed by experience and reflection of the experience. And instead of seeing that, they decided to... create a probability generator for words and pixels.

So, why?

Well, first and foremost, because they (= the owner class) do want to keep us working. And by that I mean those menial, exhausting, mind-numbing jobs that we are forced to have right now. And they want us to keep working, because the more free time we have, the more time we have to organize and, well, rise up against the system, upon realizing how we are exploited. Work itself is used as a tool of oppression. Which is why, no matter how many studies show that the 30-hour week or 4-day week is actually good, that UBI actually helps people and what not, the companies are so against it. It is also why in some countries, like the US, the companies are so against paid sick leave, which is, scientifically speaking, bonkers, because opposing it actually harms the productivity of the company. And yes, it is also why, still in the midst of a pandemic, we act as if everything is normal, because they found out that in the early pandemic, under lockdown and with fewer people working, people actually fucking organized.

And that... also kinda is why they hate art. Because art is something that is a reflection upon a world, and it can be an inspiration for people, something that gives them hope and something worth working towards. So, artists are kinda dangerous. Hence something has to be done to keep them from working. In this case: Devaluing their work.

And no, I do not even think that the people programming those original algorithms were thinking this. They were not like "Yes, we need to do this to uphold the capitalist systems", nor do most of the AI bros, who are hyping it right now. But there are some people in there, who see it just like that. Who know the dangers of actual art and what it can mean for the system that keeps them powerful.

So, yeah... We could have some great stuff with AI and automation... If used in other areas.

I mean, just imagine what AIs could do under communism...

#solarpunk#cyberpunk#fuck ai art#fuck ai writing#anti ai art#anti ai writing#ubi#universal basic income#anti capitalism#bullshit jobs#automation

128 notes
