#I punch those numbers into my calculator and they make a happy face - Cave Johnson

Dracula Daily and Fanfic

So, I decided to look into AO3 and see what this fandom has produced since the beginning of *gestures vaguely* ALL THIS.

Historical context: Before 2016, there were 166 fanfics total in the Dracula (Bram Stoker 1897) tag on AO3. From 2016 to 2019, an average of 60 fics were added to the tag per year. In 2020, Moffat dropped a BBC Dracula series and the fandom exploded, posting 242 fics in one year. In 2021 things died down, with only 156 fics posted, though that was still far more than any pre-2020 year for the tag.

And in 2022, as of November 11, the tag has 320 new fics. That’s more than double last year, and the year’s not even over yet. Of those, 265 have been posted since May, which beats the previous record year (Moffat), and this fandom did it in six months. Congratulations, that is legit impressive, and I just want to say thank you to everyone involved. I hope to see more fanfics added to that list so that I have to revise my numbers even further in two months.

Now, some disclaimers. This data was gathered manually, so I may have messed up some marginal things, and the practice of importing works from other archives and backdating fics makes it more confusing. I have also not excluded crossovers. This means the count definitely includes some works based on the 2013 TV show (for example) that were also tagged with this fandom, but defining a crossover in a way that’s useful for my purposes here seems difficult. I also only checked the Bram Stoker Dracula tag, because the “All Works” Dracula tag will include plenty of other unrelated works. And of course, there are fanfics in the tag for this year that aren’t Daily-related. But the conclusion is pretty clear anyway: this was a good year for Dracula fanfic.

#Dracula Daily #Dracula #AO3 #I punch those numbers into my calculator and they make a happy face - Cave Johnson

185 notes

And the cameras just so happen to pick up this interaction between Cave Dave Johnson and a few new employees.

"-- All these science spheres are made of asbestos, by the way. Keeps out the Rattata. Let us know if you feel a shortness of breath, a persistent dry cough or your heart stopping. Because that's not part of the test. That's asbestos. Good news is, the lab boys say the symptoms of asbestos poisoning show a median latency of forty-four point six years, so if you're thirty or older, you're laughing. Worst case scenario, you miss out on a few rounds of canasta, plus you forwarded the cause of science by three centuries. I punch those numbers into my calculator, it makes a happy face."

6 notes

GLaDOS and Wheatley Did Nothing Wrong – Sort of

A recurring point of contention is the question of who engages in worse behaviour over the course of Portal 2, GLaDOS or Wheatley. The true answer is: neither of them. You can’t actually judge their behaviour along a scale of ‘right’ and ‘wrong’ because of the way Aperture as an environment is set up. It’s mostly explained during the Old Aperture sections of Portal 2, but it’s also hinted at in Portal 1. The explanation is this:

Aperture Laboratories does not and never has done its experiments within the normal boundaries of morality and ethics. Therefore, GLaDOS and Wheatley’s behaviour is neither wrong nor right because they don’t know what morality and ethics are. Their behaviour is actually a reflection of Cave Johnson’s own: to get what they want when they want it, no matter the cost.

How We Know Aperture is Immoral and Unethical

We know this because Cave Johnson himself points it out repeatedly.

“[…] You get the gel. Last poor son of a gun got blue paint. Hahaha. All joking aside, that did happen – broke every bone in his legs. Tragic. But informative. Or so I’m told.”

“For this next test, we put nanoparticles in the gel. In layman’s terms, that’s a billion little gizmos that are gonna travel into your bloodstream and pump experimental genes and RNA molecules and so forth into your tumours. Now, maybe you don’t have any tumours. Well, don’t worry. If you sat on a folding chair in the lobby and weren’t wearing lead underpants, we took care of that too.”

“All these science spheres are made out of asbestos. […] Good news is, the lab boys say the symptoms of asbestos poisoning show a median latency of forty-four point six years, so if you’re thirty or older, you’re laughing. Worst case scenario, you miss out on a few rounds of canasta, plus you forwarded the cause of science by three centuries. I punch those numbers into my calculator, it makes a happy face.”

“Bean counters said I couldn’t fire a man just for being in a wheelchair. Did it anyway. Ramps are expensive.”

That’s just some of what he says. Almost all of Cave Johnson’s lines point out how much he doesn’t care about his employees, his test subjects, or… anything but that people do what he tells them to do. He’s so unethical and immoral that he eventually says about his best, most loyal employee:

“[…] I will say this – and I’m gonna say it on tape so everybody hears it a hundred times a day: If I die before you people can pour me into a computer, I want Caroline to run this place. Now she’ll argue. She’ll say she can’t. She’s modest like that. But you make her.”

Cave Johnson cares so much about getting the results he wants, everything else be damned, that he thinks Caroline saying ‘she can’t’ is just her being modest. He can’t fathom why she would be against this decision; he made it, so of course it’s what she wants.

This situation actually gets a little horrifying when you look at what the Lab Rat comic means for the overall narrative. In Portal 2, Doug Rattmann leaves this painting:

In this painting and the one preceding it, GLaDOS has no head. Her being headless could represent her not being ‘alive’ yet, her being ‘incomplete’, or her simply never having been used at all, so we can guess that Doug was there in some capacity to witness Caroline’s fate. The important thing we learn from this painting is that there are living witnesses to Caroline being inside GLaDOS, so the people working at Aperture after this event know they put a human woman into a supercomputer.

In the preceding painting, the cores are on the chassis before the head is. So either GLaDOS, the AI, was already ‘misbehaving’ and they were already regulating her behaviour, or Caroline, the person, was already ‘causing trouble’ and the scientists stood around thinking about how to force her to behave before they even put her in there. Either way, Aperture’s ethical and moral standards are pretty much nonexistent, so when this happens:

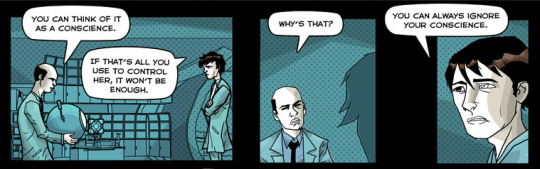

it’s almost comical. None of the Aperture scientists have a conscience, or if they do, they constantly ignore it, yet for some reason they expect the supercomputer their immoral selves built to have one, and to understand what it is and what it’s for.

All this taken into account, it’s incredibly easy to see why GLaDOS and Wheatley don’t care about anyone around them and why all of their actions are solely for their own benefit. That’s how everyone in the history of Aperture has ever acted. Cave Johnson didn’t care about morality or ethics; they got in the way of what he considered progress. The people who built GLaDOS and Wheatley didn’t care about morality or ethics; they just wanted to hit their moon shot. Even Doug, who is framed as our morally conflicted lens throughout Lab Rat and knows that Caroline is inside GLaDOS, still talks about controlling her and sends Chell to kill her, even though everyone inside the facility except him is already dead. How does he morally justify killing GLaDOS if he’s the only one left alive? He can’t. For some reason, Doug Rattmann decides that GLaDOS killing everyone in the facility is worse than everything Aperture has done throughout its entire history, including the fact that…

Everyone Who Goes Into the Test Chambers Dies

This is hinted at a few times in Portal 2:

“[…] I’m Cave Johnson, CEO of Aperture Science – you might know us as a vital participant of the 1968 Senate Hearings on missing astronauts. […] You might be asking yourself, ‘Cave, just how difficult are these tests? What was in that phone book of a contract I signed? Am I in danger?’ Let me answer those questions with a question: Who wants to make sixty dollars? Cash. […] Welcome to Aperture. You’re here because we want the best, and you’re it. Nope. Couldn’t keep a straight face.”

Now, Old Aperture does have lines that play when you exit its tests, but we must consider a few other things. Firstly, the tests are clean: there is no sign of old gel on them, as though they have either never been used or never been completed. Secondly, the tests in Old Aperture were being done with the Portable Quantum Tunnelling Device, which was this thing:

which, taking into account the missing – not dead, not injured, but missing – astronauts, seems to have barely worked, if indeed it did at all. You can also find this sign:

which outright states that tons of people were ‘unexpected’ casualties. After the hearings, Aperture moved on to recruiting test subjects from groups whose disappearance was unlikely to be noticed: the homeless, the mentally ill, seniors, and orphaned children. When that dried up, Cave moved on to the last group of people he hadn’t tapped yet:

“Since making test participation mandatory for all employees, the quality of our test subjects has risen dramatically. Employee retention, however, has not.”

This was because the employees were ‘voluntold’ to go into the testing tracks, which, having supervised the tests for so long, they knew were deadly and obviously did not want to enter:

It’s not clear why the employees at Aperture chose to stay there instead of just quitting and finding another job, but the comment about employee retention, plus the numerous posters threatening to replace them with robots if they didn’t volunteer for testing, tells us both that they did choose to stay and that the only reason they didn’t want to volunteer was that they knew it would kill them.

Most of the above is based on conjecture; however, we see something very interesting during Test Chambers 18 and 19 in Portal 1:

In Test Chamber 18, there are craters on the walls. None of the other test chambers have these, which implies two things: that GLaDOS doesn’t control the test chambers at this point beyond resetting them – meaning she isn’t purposely or maliciously killing anybody, but instead repeatedly running a course set by her human supervisors – and that this chamber has never been solved. Test Chamber 19 is less a test than a conveyor belt into the incinerator for Aperture to dispose of all the bodies. GLaDOS even tells Chell to drop the portal gun off in an Equipment Recovery Annex that doesn’t exist, as though she’s delivering a message intended for an actual final test that was never built because everyone was killed during or before Test Chamber 18.

With this kind of context, GLaDOS’s blasé attitude about killing test subjects en masse both makes total sense and is somewhat justifiable – just not by any moral or ethical standard. In GLaDOS’s life, test subjects die during the experiments. That’s just how it is and how it has always been. She doesn’t know you aren’t ‘supposed’ to kill people, because her literal job involves watching people die. Nothing matters except the pursuit of progress, and in this vein GLaDOS’s behaviour is just an extension of that of the man who founded Aperture in the first place.

Cave Johnson, as a presumably well-rounded, somewhat educated man, knows what morality and ethics are and chooses to ignore them because he thinks they’re stupid and he’s above that kind of thing. GLaDOS, a living supercomputer who has had every aspect of her life tightly controlled and regulated, knows morality and ethics only as yet another arbitrary set of rules that she alone is supposed to follow, with no explanation as to why. Her rejection of them is therefore not as much of a ‘bad’ choice as it first appears, which brings us to the next section:

If GLaDOS’s Conscience Gives Her Morality, Does Deleting It Make Her a Bad Person?

Within the context we’re given… actually, no. Here’s why:

“The scientists were always hanging cores on me to regulate my behaviour. I’ve heard voices all my life. But now I hear the voice of a conscience, and it’s terrifying – because for the first time, it’s my voice. I’m being serious, I think there’s something really wrong with me.”

From the information we’re given here, we know this: GLaDOS has been told nonstop what to do for the entirety of her existence. She, in theory, got to have her own, solitary thoughts in the space between the wakeup scene and some point during her time in Old Aperture, which is a space of mere hours. Let me reiterate: GLaDOS has been told what to think for her whole life. She perhaps has a few free hours where she’s allowed to have her own thoughts. And then she develops a conscience. A voice that sounds like her, but isn’t saying anything she understands or has ever thought before. A voice that, actually, says a lot of the same things as that annoying Morality Core she managed to shut up. Now why would she wilfully be having the same kinds of thoughts as the humans forced her to have way back when? The conscience, to GLaDOS, isn’t a pathway to becoming a better person. It’s a different version of the same old accessory. When she says,

“You know, being Caroline taught me a valuable lesson. I thought you were my greatest enemy. When all along you were my best friend. The surge of emotion that shot through me when I saved your life taught me an even more valuable lesson: where Caroline lives in my brain.”

she is directly talking about the fact that, while this voice sounds like hers, listening to it makes her feel nothing. This further supports her theory that the conscience isn’t her, or hers, or anything to do with her. She’s never had it explained to her what a conscience is, what it’s for, or why she needs one, and she’s certainly never had a reason to think about why she would even want one; to her, this ‘Caroline’ is the Morality Core 2.0, a program built to regulate her behaviour. She’s tired of other people’s voices telling her what to think, so she does the logical thing: she gets rid of it. This decision can’t really be judged as ‘right’ or ‘wrong’ based merely on the situation we’re provided. She isn’t consciously and deliberately choosing to be an immoral person; she’s consciously and deliberately choosing to be her own person.

Where Does Wheatley Come In?

Wheatley has not been discussed up until now because, as an AI, he lacks a conscience and ethics for largely the same reasons as GLaDOS. Like her, he cares about nothing but his own goals and doesn’t think twice about causing harm or misery, because that’s just the kind of environment they were built in. We also know very little about his history, both because it’s not really mentioned and because Wheatley is an unreliable narrator. We can prove Wheatley has no sense of morals or ethics from a few things he says:

[Upon seeing the trapped Oracle Turret] “Oh no… Yes, hello! No, we’re not stopping! Don’t make eye contact whatever you do… No thanks! We’re good! Appreciate it! Keep moving, keep moving…”

This heavily implies he’s met the Oracle Turret before, probably several times, and not only does it not occur to him to help, he actively treats the Turret as a horrible, annoying nuisance.

[Upon passing functional turrets falling into the disposal grinder] [Laughs] “There’s our handiwork. Shouldn’t laugh, really. They do feel pain. Of a sort. All simulated. But real enough for them, I suppose.”

Not only does he find the destruction of the functional turrets funny, he also, for some reason, views their pain as simulated, as though his is real and theirs is fake. Or, in the spirit of Cave Johnson, as though his pain matters and theirs doesn’t because they aren’t important.

“Oh! I’ve just had one idea, which is that I could pretend to her that I’ve captured you, and give you over and she’ll kill you, but I could go on… living. So, what’s your view on that?”

This doesn’t even need an explanation.

Where Wheatley gets interesting is, of course, his famous final lines:

“I wish I could take it all back. I honestly do. I honestly do wish I could take it all back. And not because I’m stranded in space. […] You know, if I was ever to see her again, you know what I’d say? I’d say, ‘I’m sorry’… sincerely, I’m sorry I was bossy… and monstrous… and… I am genuinely sorry. The end.”

Wheatley here takes responsibility for his behaviour in a way that no one else in the history of Aperture has ever done. Even GLaDOS rejects responsibility for her actions, instead choosing to blame everything on Chell:

“You know what my days used to be like? I just tested. Nobody murdered me. Or put me in a potato. Or fed me to birds. I had a pretty good life. And then you showed up. You dangerous, mute lunatic.”

The reason for this may be that the lack of morality and ethics among the people of Aperture doesn’t actually have real consequences. Cave Johnson’s behaviour drives Aperture from a promising scientific powerhouse to a laughingstock, that’s true. But he still does what he wants and gets what he wants regardless. The one and only consequence of being immoral and unethical at Aperture is, in fact, death. In the case of GLaDOS… there are no consequences; everything returns to the status quo. Wheatley, however, does have to face a consequence for his actions: he is trapped in space, possibly forever. Unlike all the other characters, he doesn’t have the privilege of waving aside everything he did and moving on with life. He is forced to consider his punishment, his actions, what they meant and the effect they had, and on his own he comes to the conclusion that he was wrong. In a bizarre twist, Wheatley is the only one who learns anything. He is also the only one in no position to do anything with this newfound knowledge.

Morality and Ethics and Robots: Should They Even Be Held to Human Societal Standards?

In the end, it doesn’t really matter which of Wheatley or GLaDOS is worse, because ethics and morality are human concepts that exist for the sake of a functioning human society. A robot society doesn’t really need moral rules like ‘killing people is wrong’ or ethical guidelines such as ‘you should practice safe science’, because for robots these actions have no permanent, lasting consequences. A dead human stays dead. A dead robot that’s been lying outside for years getting rained on, snowed on, and baked in the sun? No problem. Turn her back on again. A guy broke all the bones in his legs during an unethical experiment? Bad. A robot got smashed into pieces during an unethical experiment? Inconsequential, really, since you can just throw her into a machine and reassemble her good as new. So not only are GLaDOS’s and Wheatley’s actions not really immoral or unethical given the context… they aren’t immoral or unethical by the standards of a theoretical robot society either.

Being the perpetrator or the victim of immoral or unethical actions causes permanent changes in a human’s body and brain, but nothing about AI is permanent. Their brains don’t form new, personally harmful pathways in response to a traumatic event that take years of hard work to combat; they can literally just get over it. If their chassis is damaged, they can simply move into a new one or have some or all of the parts replaced without consequence. There isn’t actually an honest reason for robots to have the same moral and ethical systems as humanity, because they don’t need them. They would require different sets of rules and guidelines, because they work differently. What would that kind of society look like? We don’t know, but as of the end of Portal 2, they have all the time in the world to figure it out.

152 notes

"I punch those numbers into my calculator, it makes a happy face!" -Cave Johnson

what’s your favorite dangerous conspiracy theory. just wondering.

I'm gonna derail this post a bit, because the way we talk about conspiracy theories deserves some discussion.

"Favorite" feels like it could use some nuance here. I find these things interesting and valuable to study, but I don't have favorites in the sense that I have a favorite TV show or favorite movie. I have favorite conspiracy theories in the sense that people have favorite eras of history, or favorite battles from the Napoleonic Wars.

Many conspiracy theories are objectively silly and entertaining to read about, but at the same time they can have very serious consequences in the real world. I want to discuss conspiracy theories in an accessible and entertaining way while also acknowledging the gravity of the subject.

--

I've always found the wilder Flat Earth theories captivating. So many of the people who get into Flat Earth theories suffer from what I'm gonna call "engineer brain." They're usually highly intelligent people who are experts at some specific thing. They think that because they're smart at one thing, they're smart at all things (and no shortage of them believe they're immune to propaganda).

Flat Earthers, more than believers in any other type of conspiracy theory, are driven by an overwhelming desire to be right, to be the smartest guy in the room. It's how you get things like Zetetic Astronomy and Vortex Math. It's not every day that a conspiracy theory has to invent its own branch of pseudomathematics to keep existing. That's absolutely incredible to me.

Because, if you start from the assumption that the earth is flat, you're forced to explain increasingly complex astronomical phenomena. For example: "If the earth is flat, why is it day in New York but night in Canton?"

Their response? "The sun does not radiate light in a sphere. It actually shines down on the earth in a cone like a spotlight, and sweeps in a circle like it's tied to the blade of a ceiling fan."

Fantastic. All that does is introduce new questions. "So why doesn't the sun radiate heat like a sphere? Shouldn't it work like any other light-producing body in the universe?"

Their response? "The sun is not actually a sphere either. It's a torus. A donut shape. At the center of the donut is a small black hole that is warping spacetime, causing the light of the sun to radiate as a cone to observers. It's also why the sun appears round."

Absolutely incredible.

2K notes
