#Hype Cycle

Text

The AI hype bubble is the new crypto hype bubble

Back in 2017, Long Island Iced Tea — known for its undistinguished, barely drinkable sugar-water — changed its name to “Long Blockchain Corp.” Its shares surged to a peak of 400% over their pre-announcement price. The company announced no specific integrations with any kind of blockchain, nor has it made any such integrations since.

If you’d like an essay-formatted version of this post to read or share, here’s a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/03/09/autocomplete-worshippers/#the-real-ai-was-the-corporations-that-we-fought-along-the-way

LBCC was subsequently delisted from NASDAQ after settling with the SEC over fraudulent investor statements. Today, the company trades over the counter and its market cap is $36m, down from $138m.

https://cointelegraph.com/news/textbook-case-of-crypto-hype-how-iced-tea-company-went-blockchain-and-failed-despite-a-289-percent-stock-rise

The most remarkable thing about this incredibly stupid story is that LBCC wasn’t the peak of the blockchain bubble — rather, it was the start of blockchain’s final pump-and-dump. By the standards of 2022’s blockchain grifters, LBCC was small potatoes, a mere $138m sugar-water grift.

They didn’t have any NFTs, no wash trades, no ICO. They didn’t have a Super Bowl ad. They didn’t steal billions from mom-and-pop investors while proclaiming themselves to be “Effective Altruists.” They didn’t channel hundreds of millions to election campaigns through straw donations and other forms of campaign finance fraud. They didn’t even open a crypto-themed hamburger restaurant where you couldn’t buy hamburgers with crypto:

https://robbreport.com/food-drink/dining/bored-hungry-restaurant-no-cryptocurrency-1234694556/

They were amateurs. Their attempt to “make fetch happen” only succeeded for a brief instant. By contrast, the superpredators of the crypto bubble were able to make fetch happen over an improbably long timescale, deploying the most powerful reality distortion fields since Pets.com.

Anything that can’t go on forever will eventually stop. We’re told that trillions of dollars’ worth of crypto has been wiped out over the past year, but these losses are nowhere to be seen in the real economy — because the “wealth” that was wiped out by the crypto bubble’s bursting never existed in the first place.

Like any Ponzi scheme, crypto was a way to separate normies from their savings through the pretense that they were “investing” in a vast enterprise — but the only real money (“fiat” in cryptospeak) in the system was the hardscrabble retirement savings of working people, which the bubble’s energetic inflaters swapped for illiquid, worthless shitcoins.

We’ve stopped believing in the illusory billions. Sam Bankman-Fried is under house arrest. But the people who gave him money — and the nimbler Ponzi artists who evaded arrest — are looking for new scams to separate the marks from their money.

Take Morgan Stanley, who spent 2021 and 2022 hyping cryptocurrency as a massive growth opportunity:

https://cointelegraph.com/news/morgan-stanley-launches-cryptocurrency-research-team

Today, Morgan Stanley wants you to know that AI is a $6 trillion opportunity.

They’re not alone. The CEOs of Endeavor, Buzzfeed, Microsoft, Spotify, YouTube, Snap, Sports Illustrated, and CAA are all out there, pumping up the AI bubble with every hour that god sends, declaring that the future is AI.

https://www.hollywoodreporter.com/business/business-news/wall-street-ai-stock-price-1235343279/

Google and Bing are locked in an arms race to see whose search engine can attain the speediest, most profound enshittification via chatbot, replacing links to web pages with florid paragraphs composed by fully automated, supremely confident liars:

https://pluralistic.net/2023/02/16/tweedledumber/#easily-spooked

Blockchain was a solution in search of a problem. So is AI. Yes, Buzzfeed will be able to reduce its wage-bill by automating its personality quiz vertical, and Spotify’s “AI DJ” will produce slightly less terrible playlists (at least, to the extent that Spotify doesn’t put its thumb on the scales by inserting tracks into the playlists whose only fitness factor is that someone paid to boost them).

But even if you add all of this up, double it, square it, and add a billion dollar confidence interval, it still doesn’t add up to what Bank of America analysts called “a defining moment — like the internet in the ’90s.” For one thing, the most exciting part of the “internet in the ’90s” was that it had incredibly low barriers to entry and wasn’t dominated by large companies — indeed, it had them running scared.

The AI bubble, by contrast, is being inflated by massive incumbents, whose excitement boils down to “This will let the biggest companies get much, much bigger and the rest of you can go fuck yourselves.” Some revolution.

AI has all the hallmarks of a classic pump-and-dump, starting with terminology. AI isn’t “artificial” and it’s not “intelligent.” “Machine learning” doesn’t learn. On this week’s Trashfuture podcast, they made an excellent (and profane and hilarious) case that ChatGPT is best understood as a sophisticated form of autocomplete — not our new robot overlord.

https://open.spotify.com/episode/4NHKMZZNKi0w9mOhPYIL4T

We all know that autocomplete is a decidedly mixed blessing. Like all statistical inference tools, autocomplete is profoundly conservative — it wants you to do the same thing tomorrow as you did yesterday (that’s why “sophisticated” retargeting systems show you ads for shoes in response to your search for shoes). If the word you type after “hey” is usually “hon” then the next time you type “hey,” autocomplete will be ready to fill in your typical following word — even if this time you want to type “hey stop texting me you freak”:

https://blog.lareviewofbooks.org/provocations/neophobic-conservative-ai-overlords-want-everything-stay/

And when autocomplete encounters a new input — when you try to type something you’ve never typed before — it tries to get you to finish your sentence with the statistically median thing that everyone would type next, on average. Usually that produces something utterly bland, but sometimes the results can be hilarious. Back in 2018, I started to text our babysitter with “hey are you free to sit” only to have Android finish the sentence with “on my face” (not something I’d ever typed!):

https://mashable.com/article/android-predictive-text-sit-on-my-face
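The “profoundly conservative” behavior described above can be sketched as a toy bigram counter. This is a hedged illustration under stated assumptions, not how Android’s keyboard or ChatGPT actually works. The class `BigramAutocomplete`, its `observe`/`suggest` methods, and the two-table design (one user’s own history, plus text pooled from everyone) are all hypothetical. The model always suggests the word you typed most often after the previous one. When you type a word it has never seen from you, it falls back to whatever the crowd typed most often.

```python
from collections import Counter, defaultdict


class BigramAutocomplete:
    """Toy next-word suggester built from word-pair (bigram) counts.

    Hypothetical illustration only; not how any real keyboard is implemented.
    """

    def __init__(self):
        # personal[prev][nxt]: how often this user typed `nxt` right after `prev`
        self.personal = defaultdict(Counter)
        # population[prev][nxt]: the same counts, pooled across many users
        self.population = defaultdict(Counter)

    def observe(self, text, personal=True):
        """Record every adjacent word pair in `text` in the chosen table."""
        words = text.lower().split()
        table = self.personal if personal else self.population
        for prev, nxt in zip(words, words[1:]):
            table[prev][nxt] += 1

    def suggest(self, prev):
        """Return the most frequent follow-up to `prev`, or None if unseen."""
        prev = prev.lower()
        # Use .get so lookups don't insert empty entries into the tables.
        mine = self.personal.get(prev)
        if mine:
            # Conservative by design: yesterday's habit is tomorrow's guess.
            return mine.most_common(1)[0][0]
        everyone = self.population.get(prev)
        if everyone:
            # Unfamiliar context: fall back to the population-wide favorite.
            return everyone.most_common(1)[0][0]
        return None


if __name__ == "__main__":
    ac = BigramAutocomplete()
    for _ in range(5):
        ac.observe("hey hon")
    ac.observe("hey stop texting me you freak")
    print(ac.suggest("hey"))  # hon: 5 past uses beat 1
    ac.observe("are you free to sit tonight", personal=False)
    print(ac.suggest("sit"))  # tonight: never typed personally, so pooled data wins
```

The fallback branch is the source of both the blandness and the occasional “on my face.” For a context the user has never typed, the only signal left is whatever other people typed most often.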

Modern autocomplete can produce long passages of text in response to prompts, but it is every bit as unreliable as 2018 Android SMS autocomplete, as Alexander Hanff discovered when ChatGPT informed him that he was dead, even generating a plausible URL for a link to a nonexistent obit in The Guardian:

https://www.theregister.com/2023/03/02/chatgpt_considered_harmful/

Of course, the carnival barkers of the AI pump-and-dump insist that this is all a feature, not a bug. If autocomplete says stupid, wrong things with total confidence, that’s because “AI” is becoming more human, because humans also say stupid, wrong things with total confidence.

Exhibit A is the billionaire AI grifter Sam Altman, CEO of OpenAI — a company whose products are not open, nor are they artificial, nor are they intelligent. Altman celebrated the release of ChatGPT by tweeting “i am a stochastic parrot, and so r u.”

https://twitter.com/sama/status/1599471830255177728

This was a dig at the “stochastic parrots” paper, a comprehensive, measured roundup of criticisms of AI that led Google to fire Timnit Gebru, a respected AI researcher, for having the audacity to point out the Emperor’s New Clothes:

https://www.technologyreview.com/2020/12/04/1013294/google-ai-ethics-research-paper-forced-out-timnit-gebru/

Gebru’s co-author on the Parrots paper was Emily M. Bender, a computational linguistics specialist at UW, who is one of the best-informed and most damning critics of AI hype. You can get a good sense of her position from Elizabeth Weil’s New York Magazine profile:

https://nymag.com/intelligencer/article/ai-artificial-intelligence-chatbots-emily-m-bender.html

Bender has made many important scholarly contributions to her field, but she is also famous for her rules of thumb, which caution her fellow scientists not to get high on their own supply:

Please do not conflate word form and meaning

Mind your own credulity

As Bender says, we’ve made “machines that can mindlessly generate text, but we haven’t learned how to stop imagining the mind behind it.” One potential tonic against this fallacy is to follow an Italian MP’s suggestion and replace “AI” with “SALAMI” (“Systematic Approaches to Learning Algorithms and Machine Inferences”). It’s a lot easier to keep a clear head when someone asks you, “Is this SALAMI intelligent? Can this SALAMI write a novel? Does this SALAMI deserve human rights?”

Bender’s most famous contribution is the “stochastic parrot,” a construct that “just probabilistically spits out words.” AI bros like Altman love the stochastic parrot, and are hellbent on reducing human beings to stochastic parrots, which will allow them to declare that their chatbots have feature-parity with human beings.

At the same time, Altman and Co are strangely afraid of their creations. It’s possible that this is just a shuck: “I have made something so powerful that it could destroy humanity! Luckily, I am a wise steward of this thing, so it’s fine. But boy, it sure is powerful!”

They’ve been playing this game for a long time. People like Elon Musk (an investor in OpenAI, who is hoping to convince the EU Commission and FTC that he can fire all of Twitter’s human moderators and replace them with chatbots without violating EU law or the FTC’s consent decree) keep warning us that AI will destroy us unless we tame it.

There’s a lot of credulous repetition of these claims, and not just by AI’s boosters. AI critics are also prone to engaging in what Lee Vinsel calls criti-hype: criticizing something by repeating its boosters’ claims without interrogating them to see if they’re true:

https://sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

There are better ways to respond to Elon Musk warning us that AIs will emulsify the planet and use human beings for food than to shout, “Look at how irresponsible this wizard is being! He made a Frankenstein’s Monster that will kill us all!” Like, we could point out that of all the things Elon Musk is profoundly wrong about, he is most wrong about the philosophical meaning of Wachowski movies:

https://www.theguardian.com/film/2020/may/18/lilly-wachowski-ivana-trump-elon-musk-twitter-red-pill-the-matrix-tweets

But even if we take the bros at their word when they proclaim themselves to be terrified of “existential risk” from AI, we can find better explanations by seeking out other phenomena that might be triggering their dread. As Charlie Stross points out, corporations are Slow AIs, autonomous artificial lifeforms that consistently do the wrong thing even when the people who nominally run them try to steer them in better directions:

https://media.ccc.de/v/34c3-9270-dude_you_broke_the_future

Imagine the existential horror of an ultra-rich manbaby who nominally leads a company, but can’t get it to follow: “everyone thinks I’m in charge, but I’m actually being driven by the Slow AI, serving as its sock puppet on some days, its golem on others.”

Ted Chiang nailed this back in 2017 (the same year as the Long Island Blockchain Company):

There’s a saying, popularized by Fredric Jameson, that it’s easier to imagine the end of the world than to imagine the end of capitalism. It’s no surprise that Silicon Valley capitalists don’t want to think about capitalism ending. What’s unexpected is that the way they envision the world ending is through a form of unchecked capitalism, disguised as a superintelligent AI. They have unconsciously created a devil in their own image, a boogeyman whose excesses are precisely their own.

https://www.buzzfeednews.com/article/tedchiang/the-real-danger-to-civilization-isnt-ai-its-runaway

Chiang is still writing some of the best critical work on “AI.” His February article in the New Yorker, “ChatGPT Is a Blurry JPEG of the Web,” was an instant classic:

[AI] hallucinations are compression artifacts, but — like the incorrect labels generated by the Xerox photocopier — they are plausible enough that identifying them requires comparing them against the originals, which in this case means either the Web or our own knowledge of the world.

https://www.newyorker.com/tech/annals-of-technology/chatgpt-is-a-blurry-jpeg-of-the-web

“AI” is practically purpose-built for inflating another hype-bubble, excelling as it does at producing party-tricks — plausible essays, weird images, voice impersonations. But as Princeton’s Matthew Salganik writes, there’s a world of difference between “cool” and “tool”:

https://freedom-to-tinker.com/2023/03/08/can-chatgpt-and-its-successors-go-from-cool-to-tool/

Nature can claim “conversational AI is a game-changer for science” but “there is a huge gap between writing funny instructions for removing food from home electronics and doing scientific research.” Salganik tried to get ChatGPT to help him with the most banal of scholarly tasks — aiding him in peer reviewing a colleague’s paper. The result? “ChatGPT didn’t help me do peer review at all; not one little bit.”

The criti-hype isn’t limited to ChatGPT, of course — there’s plenty of (justifiable) concern about image and voice generators and their impact on creative labor markets, but that concern is often expressed in ways that amplify the self-serving claims of the companies hoping to inflate the hype machine.

One of the best critical responses to the question of image- and voice-generators comes from Kirby Ferguson, whose final Everything Is a Remix video is a superb, visually stunning, brilliantly argued critique of these systems:

https://www.youtube.com/watch?v=rswxcDyotXA

One area where Ferguson shines is in thinking through the copyright question — is there any right to decide who can study the art you make? Except in some edge cases, these systems don’t store copies of the images they analyze, nor do they reproduce them:

https://pluralistic.net/2023/02/09/ai-monkeys-paw/#bullied-schoolkids

For creators, the important material question raised by these systems is economic, not creative: will our bosses use them to erode our wages? That is a very important question, and as far as our bosses are concerned, the answer is a resounding yes.

Markets value automation primarily because automation allows capitalists to pay workers less. The textile factory owners who purchased automatic looms weren’t interested in giving their workers raises and shortening working days. They wanted to fire their skilled workers and replace them with small children kidnapped out of orphanages and indentured for a decade, starved and beaten and forced to work, even after they were mangled by the machines. Fun fact: Oliver Twist was based on the bestselling memoir of Robert Blincoe, a child who survived his decade of forced labor:

https://www.gutenberg.org/files/59127/59127-h/59127-h.htm

Today, voice actors sitting down to record for games companies are forced to begin each session with “My name is ______ and I hereby grant irrevocable permission to train an AI with my voice and use it any way you see fit.”

https://www.vice.com/en/article/5d37za/voice-actors-sign-away-rights-to-artificial-intelligence

Let’s be clear here: there is — at present — no firmly established copyright over voiceprints. The “right” that voice actors are signing away as a non-negotiable condition of doing their jobs for giant, powerful monopolists doesn’t even exist. When a corporation makes a worker surrender this right, they are betting that this right will be created later in the name of “artists’ rights” — and that they will then be able to harvest this right and use it to fire the artists who fought so hard for it.

There are other approaches to this. We could support the US Copyright Office’s position that machine-generated works are not works of human creative authorship and are thus not eligible for copyright — so if corporations wanted to control their products, they’d have to hire humans to make them:

https://www.theverge.com/2022/2/21/22944335/us-copyright-office-reject-ai-generated-art-recent-entrance-to-paradise

Or we could create collective rights that belong to all artists and can’t be signed away to a corporation. That’s how the right to record other musicians’ songs works — and it’s why Taylor Swift was able to re-record the masters that were sold out from under her by evil private-equity bros:

https://doctorow.medium.com/united-we-stand-61e16ec707e2

Whatever we do as creative workers and as humans entitled to a decent life, we can’t afford to drink the Blockchain Iced Tea. That means that we have to be technically competent, to understand how the stochastic parrot works, and to make sure our criticism doesn’t just repeat the marketing copy of the latest pump-and-dump.

Today (Mar 9), you can catch me in person in Austin at the UT School of Design and Creative Technologies, and remotely at U Manitoba’s Ethics of Emerging Tech Lecture.

Tomorrow (Mar 10), Rebecca Giblin and I kick off the SXSW reading series.

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

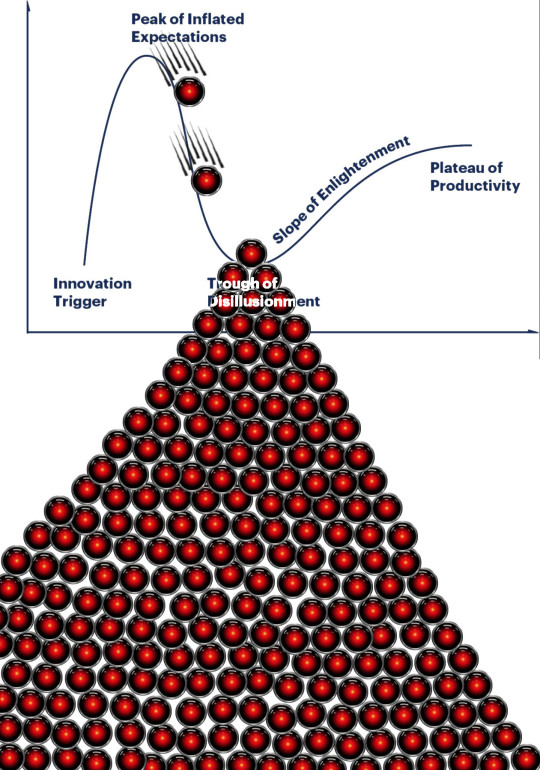

[Image ID: A graph depicting the Gartner hype cycle. A pair of HAL 9000's glowing red eyes are chasing each other down the slope from the Peak of Inflated Expectations to join another one that is at rest in the Trough of Disillusionment. It, in turn, sits atop a vast cairn of HAL 9000 eyes that are piled in a rough pyramid that extends below the graph to a distance of several times its height.]

#pluralistic#ai#ml#machine learning#artificial intelligence#chatbot#chatgpt#cryptocurrency#gartner hype cycle#hype cycle#trough of disillusionment#crypto#bubbles#bubblenomics#criti-hype#lee vinsel#slow ai#timnit gebru#emily bender#paperclip maximizers#enshittification#immortal colony organisms#blurry jpegs#charlie stross#ted chiang

2K notes

Text

Humane AI Pin crashing and burning makes me a small bit hopeful that Sequoia Capital will lose its fucking shirt on their "AI" play. They claim to have invested $50b so far and seen ~$3b in returns. They need this shit to take off, and the first real test of a general consumer-facing AI product is abysmal. Good. Fuck em.

4 notes

Text

We are passing through the Peak of Inflated Expectations and approaching the Trough of Disillusionment in the AI hype cycle.

ed zitron, a tech beat reporter, wrote an article about a recent paper from goldman sachs calling AI, in nicer terms, a grift. it is a really interesting article; hearing criticism from people who are not ignorant of the tech and have no reason to mince words is refreshing. it also raises good points and asks the right questions:

if AI is going to be a trillion dollar investment, what trillion dollar problem is it solving?

what does it mean when people say that AI will "get better"? what does that look like and how would it even be achieved? the article makes a point to debunk talking points about how all tech is misunderstood at first by pointing out that the tech it gets compared to the most, the internet and smartphones, were both created over the course of decades with roadmaps and clear goals. AI does not have this.

the american power grid straight up cannot handle the load required to run AI because it has not been meaningfully developed in decades. how are they going to overcome this hurdle (they aren't)?

people who are losing their jobs to this tech aren't being "replaced". they're just getting a taste of how little their managers care about their craft and how little they think of their consumer base. ai is not capable of replacing humans and there's no indication they ever will because...

all of these models use the same training data, so now they're all giving the same wrong answers in the same voice. without massive and i mean EXPONENTIALLY MASSIVE troves of data to work with, they are pretty much at a standstill for any innovation they're imagining in their heads

52K notes

Text

Gartner's Hype Cycle Versus Seth Godin's Dip

On which path is your current ProcureTech implementation heading?

QUESTION 1: What is the relationship between Gartner’s Hype Cycle and Godin’s The Dip?

QUESTION 2: How does understanding this relationship impact ProcureTech’s implementation and success?

Here is the video for Gartner’s Hype Cycle. When I watched it, the first thing that came to mind was Seth Godin’s The Dip.

[Image: The Hype Cycle]

Here is the video for Seth Godin’s The Dip. The Dip…

0 notes

Text

Let’s Talk About the Hype Cycle

I saw someone post about the “AI Hype Cycle” on Mastodon. Against all odds, I’m not going to talk about AI - I’m going to talk about the “Hype Cycle” and the fact that we are far too used to it.

You probably have seen this for years - the Hype Cycle for this, the Hype Cycle for that. It’s burned into our brains, probably in part because Gartner actually tried to build a model for it. But we’re now used to the idea that the next Hype Cycle is here, coming, or sneaking up on us.

The thing is, a big part of the Hype Cycle is utter bullshit and terrible disappointment. The fact that the Hype Cycle is part of our vocabulary means that we have normalized the idea that we’re being lied to, that everyone is going to be bitterly unhappy later, and that some lawsuits are going to fly around. We just sort of accept this; it’s been worked into our worldview and our vocabulary.

Everyone even has their roles in this play. There are the evangelists (who probably made money in the last Hype Cycle), the skeptics who jump ahead to disillusionment (either understandably or because that’s their role), and so on. Sure, some people believe honestly and some disbelieve, but too much of this starts seeming a lot alike.

I’m sure a big part of the Hype Cycle’s existence - and prominence - is because people WILL make money in the latest round of Hype over the Latest Thing. Venture capital streams in, people rush in with the hope of making it this time, and of course the evangelists from last time are on top of it. I also noticed during the last Hype Cycle, for Crypto and NFTs, that political actors got in on it as well, which just amplified things further.

Of course with Crypto it’s still going, but it also seems that every week some techbro half my age goes to jail forever or gets sued for the GDP of a medium-sized country.

But some people make money in the Cycle, a lot of people lose money, and here we go again. Recently in Hype Cycle discussions, I saw a person honestly and sincerely discuss how to prosper in a Hype Cycle bust as a “cleanup consultant,” which is brilliantly depressing. I myself have been in IT for 30 years and have navigated many a Hype Cycle, and I’m kind of done with them.*

But sadly, I think we have to discuss the Hype Cycles to ask why we have to discuss the Hype Cycles. Maybe we need to ask if we can move beyond them into something focused on real results early, on actual caution at the right time, and on getting things done.

Steven Savage

www.StevenSavage.com

www.InformoTron.com

* Oh and my secret to Navigating Hype Cycles? Stay aware of trends, don’t get caught up, stick with useful skills that will carry over, and put yourself in a place with real deliverables.

0 notes

Text

Leverage/risk transfer from “private to public sector/Government” 🇺🇸

🥁Let the good times roll...No great gains from Internet revolution... will #AI be different?

#hypecycle #tech #vc #investor #RiskManagement #equity #credit

#ArtificialIntelligence #OpenAI #ChatGPT #qqq #investor ht baml

📌https://twitter.com/mohossain/status/1678033954362753024?s=46&t=GtuOmoaTjOwevz2JidiiDQ

0 notes

Text

Something something history something doomed something

AI has been “just around the corner” or “ten years away” since the long ago far away. Marvin Minsky, widely acknowledged as important in the field, said, nearly 55 years ago IN 1970 CE, “In from three to eight years we will have a machine with the general intelligence of an average human being”.

so like I said, I work in the tech industry, and it's been kind of fascinating watching whole new taboos develop at work around this genAI stuff. All we do is talk about genAI, everything is genAI now, "we have to win the AI race," blah blah blah, but nobody asks - you can't ask -

What's it for?

What's it for?

Why would anyone want this?

I sit in so many meetings and listen to genuinely very intelligent people talk until steam is rising off their skulls about genAI, and wonder how fast I'd get fired if I asked: do real people actually want this product, or are the only people excited about this technology the shareholders who want to see lines go up?

like you realize this is a bubble, right, guys? because nobody actually needs this? because it's not actually very good? normal people are excited by the novelty of it, and finance bro capitalists are wetting their shorts about it because they want to get rich quick off of the Next Big Thing In Tech, but the novelty will wear off and the bros will move on to something else and we'll just be left with billions and billions of dollars invested in technology that nobody wants.

and I don't say it, because I need my job. And I wonder how many other people sitting at the same table, in the same meeting, are also not saying it, because they need their jobs.

idk man it's just become a really weird environment.

33K notes

Text

ALMOST TIME

#legend of zelda#link#loz#botw#breath of the wild#i played botw like a month ago and IM SO HYPED FOR TOTK!!!!#if someone already made this reference im sorry#botw dlc was pretty cool and i am a big fan of the divine beast motorcycle#master cycle zero#art

4K notes

Text

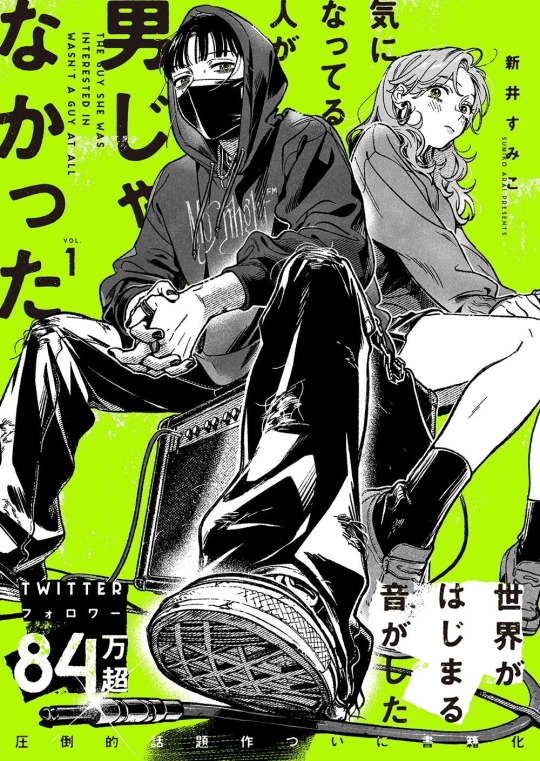

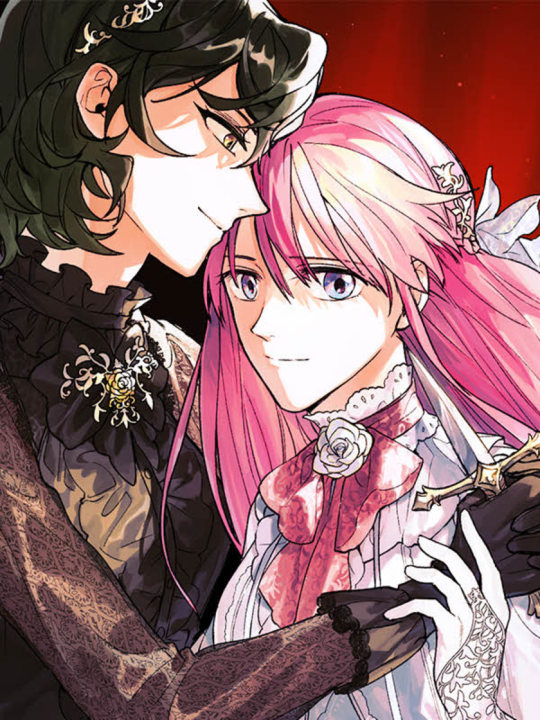

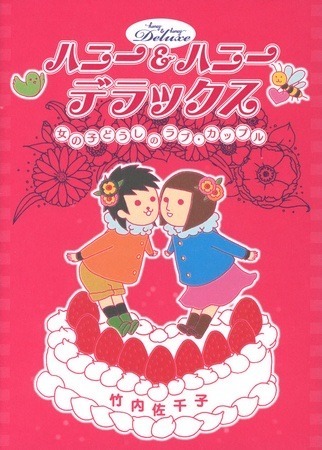

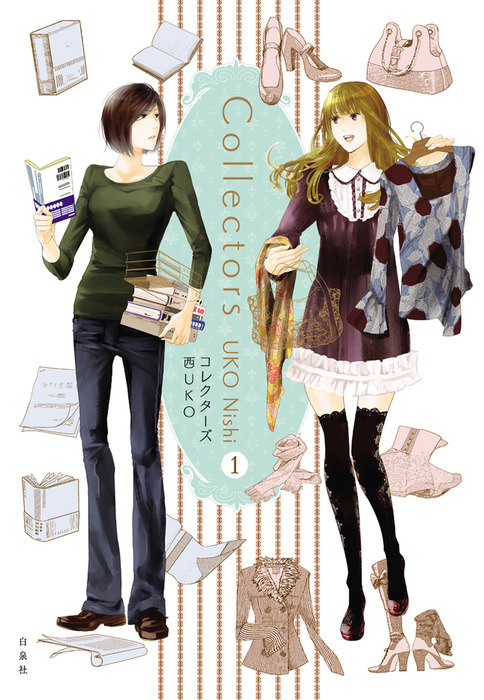

here are all the recs I posted for femslash february 2024...! each individual rec post can be found in my femslash feb recs tag. I actually thought I wasn't going to be able to do this because work got super chaotic, but in the end I couldn't bear to skip out on a leap year. that's a whole extra day for yuri.

last year I focused on official releases, so this year I wanted to focus on series that aren't technically officially available (plus a french-japanese film). fan translations are always dicey for artists/translators/publishers/etc because obviously they need to get paid... but yuri's already such an overlooked genre that—in an official capacity—we end up with a couple drops from what's already a pretty small pool. I read hana to hoshi about a decade ago, and I keep submitting it to the seven seas survey for licensure! and yet!! no dice. and even when there are official releases, sometimes they just... disappear!? wish you were gone was licensed and then taken down, so for a while the only way to read it (if you missed out on buying it) was the fan translation. I think it's important to support artists and official releases, and also, to appreciate the thankless endeavor(/crime) of scanlation.

hope yall find something you like!

#femslash february#femslash feb recs#ff recs#recs#rambled a lot for this one lol#but like. yeah. honey & honey is imo a pretty culturally significant work#but I can't see any publishers picking it up for licensure#maybe for a recent autobio manga but certainly not one from 20 years ago#and from a marketing standpoint. it's 'hard' to push yuri as a genre.#bl has an obvious and profitable demographic (women)#m/f has an obvious and profitable demographic (women)#but--if you make a distinction between yuri and H (I do)--yuri doesn't really have an obvious and profitable demographic#so it's a lot harder to pitch yuri... which means it's a lot harder to even introduce yuri to a broader audience...#and thus the cycle continues...#plus there's the whole systemic misogyny embedded into a patriarchal society which devalues stories centered around women etc#anyway the POINT is. commit to yuri duty. be a yuri warrior. write femslash. hype femslash.

289 notes

Text

gansey forcing ronan to sing in the cave to keep track of time is funny enough but then casually following up the request with “because you had to memorize all of those tunes for the irish music competitions” will never not be The Most Hilarious thing. really appreciate gansey single-handedly ruining ronan’s street cred & ronan’s response just being “piss up a rope.”

#blue lily reread#<— it has begun#get hype#ronan lynch#richard campbell gansey iii#blue lily lily blue#trc#adam parrish#the raven cycle#blue sargent#pynch#adam and ronan#the raven boys#henry cheng#the dream thieves#gangsey#the gangsey#blusey#noah czerny#mine

1K notes

Text

this is an actual thing with any kind of technology, called the "Hype Cycle". The company Gartner publishes these graphs for all kinds of new technology. As of August 2023, according to them, generative AI was on the cusp of reaching the "Trough of Disillusionment".

It is basically what the previous commenter outlined. Investors are pulling out because the overblown expectations promised profit, and now comes the realization that those expectations were grossly unrealistic. This happens with pretty much every technological advance; AI is just one of them.

No matter how you look at things, it was to be expected. The investors didn't get scammed; they are just mad that their profits aren't returning as fast as they thought they would.

Anyway, if Gartner's predictions hold up, generative AI will reach the "Plateau of Productivity" (the stage where a technology's limits and profitable uses are widely understood) in the early 2030s.

But those are just predictions. Since the expectations around AI were SO overblown, it's also likely that the whole thing won't be profitable for quite a few more years, while more is learned about AI's capabilities.

The AI tech bubble finally bursting is going to be both catastrophic and very funny.

#AI technology#I studied this in uni last year so I have a pretty good grasp on this topic#hype cycle

15K notes

Text

Murtagh enjoyers I get you now. I’m sorry for my ignorance

#it’s not that I didn’t like him I just didn’t get the hype#now I do#I love him#that’s my boy#he deserves nothing but sunshine and peace#the inheritance cycle#Murtagh

47 notes

Text

[11 March 2024]

The windfall could have been the boost the company needed to help it reset and get back on a path toward relevance. Instead, the blockchain pivot triggered a vitriolic response from the community of creators and fans on which the company relied, leading to the loss of major projects and a reputational hit from which Kickstarter has yet to recover. The turmoil shows how even the most promising startups can lose their way, but also underscores the challenge of pursuing a do-gooder mission atop a foundation of venture capital.

0 notes

Text

OTOMES SOLO IS CALLED MIC REWRITES THE ENDING

ICHIJIKUS SOLO IS CALLED GET FREEDOM

NEMUS SOLO IS CALLED DO THE RIGHT THING

AND THE DRAMA TRACK IS CALLED STAY GOLD

#this is vee speaking#WOMENAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAHHHHHH#THE WOMEN HYPE CYCLE IS SOOOOOOOOON BUT IT LOWKEY STARTS NOWWWWWWWWWWWW#THE COVER HAS THE FLYERS OF TGEM TOO!!!!!!!!!!!!!!!!!!!! THEYVE GOT INFO FLYERS TOO!!!!!!!!!!#AND!!!!!!!!!!!!! THE WAY THE DRAMA TRACK IS CALLED STAY GOLD!!!!!! AND ITS FOLLOWING BATS DRAMA CALLED FOOLS GOLD OH THIS MEANS EVERYTHING#FINALLY A REAL BAT CHUUOKU CONNECTION EVEN IF ITS JUST A CRUMB#THEY LOOK SO GOOOOOOOOOOOOOOOOOOODDD AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAHHHHHHH#LOOK AT OTOME STANDING PROUD!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! NO MORE WAVERING RIGHT MADAM??????????#ICHIJIKU LOOKS SO FIERCE AND THEN WITH THAT SOLO GET FREEDOM I WONDER WHICH ANGLE THATS FOR???? HERSELF??? THE MEN????????#NEMU I LOVE IT WHEN SHE TAKES OFF THE HAT TO LET HER AOHITSUGI AHOGES FLYYYYY#WOMMMMEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEENNNN

22 notes

Text

Cycle 9

Nature always has something new to throw at you.

WUAGH okay we're caught up on my cycle backlog!! been excited abt this one :]

scavs shown were given to me by @soaricarus [tumbleweed], @gooberdingus [carl], and @bright--paws [ourple] for voting flurry in the rw oc showdown [like a while ago]!

inv brightpaws has ALSO provided a crossover with @ask-lilypuckfam: flurry the slugcat and flurry the strawberry lizard!!

ty all for your contributions

#rain world#clangen#rw voidlands#rwvl cycle#rwvl giftart#rwvl flurry#rwvl fennel#rwvl gale#i get to play more clangen at last the hype is truly real

104 notes

Text

Alright one combo-wombo of MIC character lore because I feel it rn. I’ll rewrite this later.

Heads up for talk of fertility/infertility below the cut.

Even by elf standards, MIC!Arya is EXTREMELY infertile. I didn’t know how else to put it. That scar on her abdomen is from another run-in with the Forsworn; the injury itself basically destroyed one ovary, and she has a lot of scarring. If she ever did conceive, there is pretty much zero chance the pregnancy could go to term or even pass the first trimester.

Elves make a conscious decision about conception. They have to consciously decide that they are going to be trying for children. So their first time together, as biologically driven as it is, Arya just tells Eragon that there is no chance for pregnancy because of that.

When they discuss it later, though, Arya is…nervous. She doesn’t know how Eragon as an individual feels about having children in the future, but she’s seen how the people of Carvahall value blood ties and how Roran dotes on Ismira. Arya is, as I’ve shown before, more scared of Eragon disappearing from her life (through death or by finding someone else, though deep down she knows and he knows that they are as forever as it gets), and she’s lowkey terrified that Eragon would leave her if he couldn’t continue his bloodline. He’s the first Rider of a new age, after all.

Well…after cuddling his mate and telling her it doesn’t change at all how he feels, Eragon suddenly gets quiet before asking Arya if, in the future, she would be open to raising a child. Confused, Arya tries to reiterate that it’s impossible but Eragon presses back and just asks again, clarifying that he said ‘raise,’ not ‘conceive.’

She quietly tells him that yes, in the far future, if they survive the war and the Riders work out, then one day she would be open to such a thing as long as it’s with Eragon by her side.

And this man fucking leaps out of their shared cot and gets on his hands and knees to look Arya in the eye.

“Arya, do you know what that means?? We could give a child a family! Like Garrow and Marian and Roran did for me! We could give a child a family!! Not just an elf child or a human child but ANY child! Arya, we could be parents for someone who needs a family and who needs people there for them, like all our friends are there for us! And if they aren’t from our races or cultures, look at all our friends! We can keep that part of their identity alive and a part of them! Arya, we are like…the best option for foster parents or adoptive parents or just the people they need at the time and I love you so much just so we’re clear on that–”

“How the fuck did I land such a man?”

“Technically, you threw an egg at my head!”

#aaaay I’m an adopted child#eragon is just so fucking hyped for his future kids lmao#‘looooong time to wait babe’ ‘I know I just want you to know it’s okay’’point made you dork’#two dorks in love#eragon#inheritance cycle#the cyclists#modern inheritance#the inheritance cycle#ket's modern inheritance cycle#the world of eragon#Iunno man I like the idea#now let me go shower and scream about canon

26 notes