#How to upload Ads txt on root


Ads.txt Explained: Why It’s Crucial for Your Website’s Ad Security

The Ads.txt file, short for Authorized Digital Sellers, is a pivotal element in the online advertising ecosystem. Developed by the IAB Tech Lab, this file lets publishers list the companies that are permitted to sell their ad inventory. By implementing an Ads.txt file, you can significantly reduce the risk of ad fraud and keep your digital advertising operations secure.

If you’re interested in setting this up, understanding how to add ads.txt files in your WordPress website is the first step. This ensures that your ad space is protected by allowing only verified sellers to handle your inventory.

The Purpose of Ads.txt

Ads.txt serves as a publicly accessible record of the entities that are authorized to sell your ad space. By cross-referencing this file, advertisers can confirm that they are purchasing from legitimate sources. This practice is vital for protecting against fraudulent activities such as domain spoofing.

Wondering how to add a txt file in WordPress? The process involves uploading a simple text file to your website’s root directory, where it can be easily accessed and verified by ad platforms and buyers.

How Do I Add an Ad Script to WordPress?

To properly utilize Ads.txt, you need to know where and how to upload the file in WordPress. The Ads.txt file contains the names of authorized sellers of your ad space. If you’re asking where to upload the ads.txt file, it should be placed in the root directory of your website so that it is visible to all necessary parties.
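As a rough illustration of what "root directory" means in practice, the sketch below derives the address where a crawler would look for the file, starting from any page URL on the site. The function name `ads_txt_url` and the example domain are assumptions made for this example; they are not part of WordPress or any ad platform's tooling.

```python
from urllib.parse import urlsplit, urlunsplit


def ads_txt_url(page_url: str) -> str:
    """Return the root-level ads.txt address for the site hosting page_url."""
    parts = urlsplit(page_url)
    # Keep only the scheme and host; the file sits directly under the root path.
    return urlunsplit((parts.scheme, parts.netloc, "/ads.txt", "", ""))


print(ads_txt_url("https://example.com/blog/2024/some-post/?ref=home"))
# prints: https://example.com/ads.txt
```

If the file is uploaded anywhere else (for example inside a theme or uploads folder), it will not be served at this address.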

What Should I Write in Ads.txt?

The Ads.txt file typically includes the domain name of the ad system, your publisher ID, and the type of relationship (either DIRECT or RESELLER). Understanding what to write in ads.txt is crucial for setting up the file correctly, ensuring that only authorized entities can sell your ad inventory.
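To make the record layout concrete, here is a minimal parsing sketch. It assumes comma-separated fields in the order described above, plus an optional fourth field (a certification authority ID) and `#` comments. The publisher ID shown is a placeholder, and `AdsTxtRecord` and `parse_record` are hypothetical names used only for this illustration.

```python
from typing import NamedTuple, Optional


class AdsTxtRecord(NamedTuple):
    ad_system_domain: str
    publisher_id: str
    relationship: str                        # "DIRECT" or "RESELLER"
    cert_authority_id: Optional[str] = None  # optional fourth field


def parse_record(line: str) -> Optional[AdsTxtRecord]:
    """Parse one ads.txt line; return None for blanks, comments, or bad data."""
    # Everything after '#' is treated as a comment.
    content = line.split("#", 1)[0].strip()
    if not content:
        return None
    fields = [field.strip() for field in content.split(",")]
    if len(fields) not in (3, 4):
        return None
    relationship = fields[2].upper()
    if relationship not in ("DIRECT", "RESELLER"):
        return None
    cert = fields[3] if len(fields) == 4 else None
    return AdsTxtRecord(fields[0].lower(), fields[1], relationship, cert)


# Placeholder publisher ID; replace it with the one from your ad account.
print(parse_record("google.com, pub-0000000000000000, DIRECT, f08c47fec0942fa0"))
```

Running a check like this over each line before uploading can catch a misspelled relationship type or a missing field.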

What Are the Benefits of Ads.txt?

The primary benefit of using an Ads.txt file is the increased transparency and security it brings to your advertising efforts. By publicly listing who is authorized to sell your ad space, you minimize the risk of fraud and build greater trust with advertisers. Knowing how to add an ads.txt file in WordPress is essential for any publisher who wants to protect their digital advertising revenue.

For more information on setting up your Ads.txt file, check out this resource: How to add ads.txt files in your WordPress website.

Conclusion

Implementing an Ads.txt file on your website is a simple yet powerful way to secure your ad inventory. By ensuring that only authorized sellers can represent your ad space, you protect your revenue and build a more trustworthy relationship with advertisers. If you’re still asking how to add a txt file in WordPress, now is the perfect time to take action and secure your site’s advertising future with an Ads.txt file.


Recent PGXN Improvements

One of the perks of my new gig at Tembo is that I have more time to work on PGXN. In the last ten years I've had very little time to give, so things have stagnated. The API, for example, hasn't seen a meaningful update since 2016!

But that's all changed now, and every bit of the PGXN architecture has experienced a fair bit of TLC in the last few weeks. A quick review.

PGXN Manager

PGXN Manager provides user registration and extension release services. It maintains the root registry of the project, ensuring the consistency of download formatting and naming ($extension-$version.zip if you're wondering). Last year I added a LISTEN/NOTIFY queue for publishing new releases to Twitter and Mastodon, replacing the old Twitter posting on upload. It has worked quite well (although Twitter killed the API so we no longer post there), but has sometimes disappeared for days or weeks at a time. I've futzed with it over the past year, but last weekend I think I finally got to the bottom of the problem.

As a result, the PGXN Mastodon account should stay much more up-to-date than it sometimes has. I've also added additional logging capability, because sometimes the posts fail and I need better clarity into what failed and how so it, too, can be fixed. So messaging should continue to become more reliable. Oh, and testing and unstable releases are now prominently marked as such in the posts (or will be, next time someone uploads a non-stable release).

I also did a little work on the formatting of the How To doc, upgrading to MultiMarkdown 6 and removing some post-processing that appended unwanted backslashes to the ends of code lines.

PGXN API

PGXN API (docs) has been the least loved part of the architecture, but all that changed in the last couple weeks. I finally got over my trepidation and got it working where I could hack on it again. Turns out, the pending issues and to-dos — some dating back to 2011! — weren't so difficult to take on as I had feared. In the last few weeks, API has finally come unstuck and evolved:

Switched the parsing of Markdown documents from the quite old Text::Markdown module to CommonMark, which is a thin wrapper around the well-maintained and modern cmark library. The main way in which this change will be visible is in the support for fenced code blocks. Compare the pg_later 0.0.14 README, formatted with the old parser, with pg_later 0.1.0 README, formatted with the new parser.

Updated the full text indexer to index the file identified in the META.json as the documentation for an extension even if the file name is different from the extension (issue 10), including the README.

Furthermore, if the indexer finds no documentation for the extension, either in its metadata or an appropriately-named file, it falls back on the README (issue 12).

These two fixes, the oldest and most significant of the whole system, greatly increase the accuracy of documentation search, because so many extensions have no docs other than the README. Prior to this fix, for example, a search for "later" failed to turn up pg_later, and now it's appropriately the first result.

The indexer now indexes releases marked "testing" or "unstable" in the metadata if there is no stable release (issue 2!). This makes new extensions under development easier to find on the site than previously, when only stable releases were indexed. However, once a stable release is made, no more testing or unstable releases will be indexed. I believe this is more intuitive behavior.

Also fixed some permissions issues, ensuring that source code unzipped for browsing is accessible to the app. In other words, a zip file without READ permission would trigger a not found response; the API now ensures that all files and directories are accessible to the application.

As a bonus, the API now also recognizes plain text files (.txt and .text) as documentation and indexes their contents and adds links for display on the site. Previously plain text files other than READMEs were ignored (issue 13).
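The rule for testing and unstable releases described above can be sketched as follows. This is only an illustration of the stated behavior, not the PGXN API's actual code (which is written in Perl); the function name and the `(version, status)` tuple layout are assumptions.

```python
def releases_to_index(releases):
    """Pick the releases a search indexer should consider for one distribution.

    `releases` is a list of (version, status) tuples, where status is
    "stable", "testing", or "unstable".
    """
    stable = [release for release in releases if release[1] == "stable"]
    # Once any stable release exists, testing and unstable releases are skipped.
    return stable if stable else list(releases)


print(releases_to_index([("0.1.0", "testing"), ("0.2.0", "unstable")]))
print(releases_to_index([("0.1.0", "testing"), ("1.0.0", "stable")]))
```

The first call keeps both pre-release versions because nothing stable exists yet; the second keeps only the stable release.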

I'm quite pleased with some of these fixes, and relieved to have finally cleared out the karmic overhang of the backlog. But the indexing and HTML rendering changes, in particular, are tactical: until the entire registry can be re-indexed, only new uploads will benefit from the indexing improvements. I hope to find time to work on the indexing project soon.

PGXN Site

The pgxn-site project powers the main site, pgxn.org, and has seen 7 new releases since the beginning of the year! Notable changes:

Changed the default search index from Documentation to Distributions, because most releases include no docs other than a README, which was indexed as part of the distribution and not the extension contained in a distribution. As a result, searches return more relevant and comprehensive results. However, I say "was indexed" because, as described above, the PGXN API has since been updated to appropriately identify and index READMEs as extension documentation. So this change might get reverted at some point, but not before the entire registry can be re-indexed.

Changed the link to the How To in the footer from the somewhat opaque "Release It!" to "Release on PGXN". Should make it clearer what it is. Also, have you considered releasing your extension on PGXN? You should!

Fixed "not found" errors for links to files with uppercase letters, such as /dist/vectorize/vector-serve/README.html.

Tweaked the CSS a bit to improve list item alignment in the "Contents" of doc pages (like semver), as well as the spacing between definition lists, as in the FAQ.

Switched the parsing and formatting of the static pages (about, art, FAQ, feedback, and mirroring) from the quite ancient Text::MultiMarkdown module to MultiMarkdown 6.

Oh, and I fixed or removed a bunch of outdated links from those pages, and replaced all relevant HTTP URLs with HTTPS URLs. Then had to revert those changes from a bunch of SVG files, where xmlns="http://www.w3.org/2000/svg" is relevant but xmlns="https://www.w3.org/2000/svg" is not. 🤦🏻‍♂️
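The SVG gotcha can be avoided by skipping XML namespace declarations during the rewrite. The sketch below shows one way to do that with a regular expression. It is an assumption about how such a cleanup could be scripted, not the approach actually used for pgxn-site, and `upgrade_links` is a made-up name.

```python
import re

# Optionally capture a leading xmlns="..." or xmlns:prefix="..." declaration
# so that namespace URIs can be told apart from ordinary links.
_HTTP_URL = re.compile(r'(xmlns(?::[\w.-]+)?\s*=\s*["\'])?http://')


def upgrade_links(text: str) -> str:
    """Rewrite http:// to https:// everywhere except in xmlns declarations."""
    def replace(match):
        if match.group(1):
            # A namespace URI is an identifier, not a link: leave it unchanged.
            return match.group(0)
        return "https://"
    return _HTTP_URL.sub(replace, text)


svg = '<svg xmlns="http://www.w3.org/2000/svg"><a href="http://pgxn.org/">PGXN</a></svg>'
print(upgrade_links(svg))
# prints: <svg xmlns="http://www.w3.org/2000/svg"><a href="https://pgxn.org/">PGXN</a></svg>
```

Namespace URIs are compared as literal strings, which is why changing the scheme breaks SVG rendering even though the HTTPS address may resolve.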

Grab Bag

I've made a number of more iterative improvements, as well, mostly to the maintainability of PGXN projects:

The GitHub repositories for all of these projects have been updated with comprehensive test and release workflows, as well as improved Perl release configuration.

The WWW::PGXN and PGXN::API::Searcher Perl modules have been updated with test and release workflows and improved Perl release configuration, as well.

The libexec configuration of the PGXN Client Homebrew formula has been fixed, allowing PGXN::Meta::Validator to install itself in the proper place to allow pgxn validate-meta to work properly on Homebrew-managed systems (like mine!)

The pgxn/pgxn-tools Docker image, which saw some fixes and improvements last month, has continued to evolve, as well. Recent improvements include support for PostgreSQL TAP tests (not to be confused with pgTAP, already supported), finer control over managing the Postgres cluster — or multiple clusters — by setting NO_CLUSTER= to prevent pg-start from creating the cluster. Both these improvements better enable multi-cluster testing.

The End

I think that about covers it. Although I'm thinking and writing quite a lot about what's next for the Postgres extension ecosystem, I expect to continue tending to the care and feeding and improvement of PGXN as well. To the future!


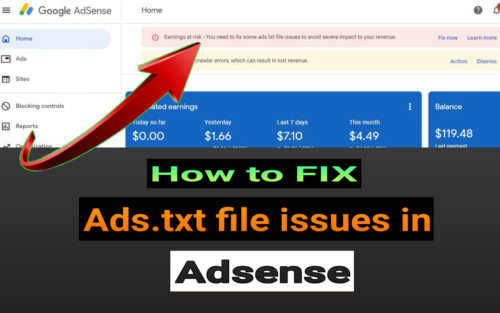

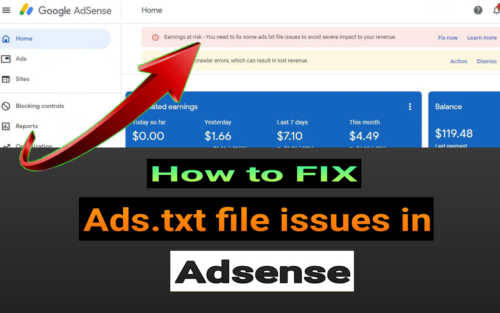

How to Add AdSense publisher ID to an ads.txt file


How to fix the ads.txt file in AdSense, and how to upload ads.txt to the domain root. Related videos: How to Insert Google AdSense HTML header code into WordPress Website https://youtu.be/Shd2K5Fkzgo How to Insert Google AdSense into Website Posts,…



Watch my domains ssl certificate


It can take 5-10 minutes for your verification to complete. In this video, I will show you how to configure HTTPS for your site in AWS. Sign in to your AWS account and navigate to the console page. In VPC, I have create. Next to the certificate you want to use, click Manage. Once you’ve created the DNS record, use the instructions in the To Verify Your Domain Name Ownership section of this article.

After uploading the HTML page or creating the TXT record, you need to let us know so we can verify your domain name ownership. Use the following information to create your TXT record: Field If your domain name uses our nameservers, see Manage DNS zone files. You can only create the TXT record through the company whose nameservers your domain name uses. Adding this TXT record won’t impact your website at all; it’s something you can only view through a special tool which performs DNS lookups. You will receive an email from us with a TXT value you need to create in your domain name’s DNS zone file.

If you run into any issues and your website is hosted with us, these Articles may be helpful: If the SSL certificate is for the root domain, the HTML file must be findable at /.well-known/pki-validation/starfield.html. Verify that you can access starfield.html in a web browser, and then use the instructions in the To Verify Your Domain Name Ownership section of this article. What is DNS When connecting third-party domain. For example, after you place the file at that location, the file’s URL would be /.well-known/pki-validation/starfield.html. Learn more about why SSL certificates are an important factor when choosing the right domain name provider. The SSL is private and is assigned to your domain. For that reason, I suggest that you watch the video above as the material will be a little easier to digest, but I will do my best to explain everything below.
Along with FastCloud Plus and FastCloud Extra, you get one year of free Comodo PositiveSSL service subscription as a bonus to the hosting service. html file in the pki-validation directory. With FastComet, you can also get your free Private SSL - Comodo PositiveSSL. Note: If you are running a Windows server, you will have to name the folder /.well-known./ instead of /.well-known/, or your server won’t let you create the folder. For more info, see What is my website’s root directory?. against the domain registry to make sure you own your domain name.


Usually, this is the website’s root directory – for example, a directory named . Let’s Encrypt makes it easy to install your own SSL Certificate and get that little. Create a directory named “/.well-known/pki-validation/” in the highest-level directory of the website for the common name you’re using.
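The directory step above can be sketched in a few lines. The example assumes you have filesystem access to the web root and that the certificate provider gave you the file's contents. The helper name `place_validation_file` and the placeholder token are hypothetical, and a temporary directory stands in for the real web root.

```python
import tempfile
from pathlib import Path


def place_validation_file(web_root: str, filename: str, content: str) -> Path:
    """Create /.well-known/pki-validation/ under web_root and write the file there."""
    target_dir = Path(web_root) / ".well-known" / "pki-validation"
    # parents=True creates .well-known as well; exist_ok avoids errors on re-runs.
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / filename
    target.write_text(content, encoding="utf-8")
    return target


# A temporary directory stands in for the real web root in this demonstration.
with tempfile.TemporaryDirectory() as root:
    path = place_validation_file(root, "starfield.html", "placeholder token")
    print(path.relative_to(root).as_posix())
    # prints: .well-known/pki-validation/starfield.html
```

After placing the real file, opening the matching URL in a browser confirms that the server actually serves it.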


How to Get My WordPress Website on Google Search

If you are looking for information on how to get my WordPress website on Google search, there are some basic steps that you need to take. Google crawls your website for relevant content, and wants to highlight content that helps users. In order to achieve this, you need to hold Google's hand.

Link building

Getting your WordPress website indexed on Google is a critical step for achieving online success. Google spiders crawl websites in search of content that is relevant to what users are searching for. If your site contains useful content, Google wants to show it off. But before Google can do that, you need to make sure that the search engine crawls your site properly. The first step to optimizing your WordPress website is to include relevant keywords. These keywords should be used in the content, titles, and URLs of your site. Avoid keyword stuffing, however, as this is considered a negative practice. Another important step is to build links to your website. This can be done through guest posting positions, social media influencers, and business partnerships.

Getting a high ranking in search engines

There are a number of ways to optimize your WordPress website and get a high search engine ranking. One of the most basic SEO practices is to optimize your images. Images take longer to load than text, and you should avoid using images that take too long to load. Also, you should use alt tags for images and title them with descriptive titles to improve search engine performance. Using SEO tactics on your WordPress website is an effective way to increase visibility and ROI. The first rule of SEO is to create keyword-rich content. This will attract a more targeted web audience. Additionally, a robust content management strategy will help website visitors find the content they're looking for quickly.

Creating a sitemap

Creating a sitemap is one of the first steps of SEO. It's important to make sure that Google can find your content. You can choose whether or not to include all taxonomies and post types in your sitemap. You can also check off individual boxes to include specific content on your site. Sitemaps created automatically by WordPress are not of much use in search results, so you might want to check them manually. First, you'll need to add a TXT record to your DNS server. This can be done through cPanel>DNS Zone Editor. You can also use the Search Console to add your sitemap URL.

Adding your website to Google Search Console

Adding your website to Google Search Console is a great way to get more information about your site's performance. It can also help you fix any technical problems that are preventing it from getting the best ranking. To access Google Search Console, you need to sign in with a Google account. This can be a personal Gmail account or a separate account for your business. Google Search Console allows you to add up to 1000 properties. When you add a property, you'll enter your website's domain name and the domain prefix. Using the tool is free, and it allows you to add multiple versions of a website.

Verifying ownership of your website

You can verify your ownership of your website on Google by uploading a special file to your website and making sure that it is exposed in the right location, usually in the root directory. You must provide the URL for the file to Google so that it can locate it. Once you've done that, click on the "Verify" button to complete the process. You can also choose other methods of verification under Settings>Ownership Verification. If you don't own a website yet, you can still verify it on Google search by setting up a txt DNS record on your domain. You will need to access your hosting account to make the changes. You will also need to add an SSL certificate. After that, you'll need to wait 15 minutes for the changes to take effect.


Technical SEO Everything You Need To Know

Technical SEO is the practice of making sure search engine spiders can crawl a site and index its content effectively. To improve organic search ranking, the technical aspects of your site should meet the requirements of modern search engines. Some of the most important facets of technical SEO include crawling, indexing, rendering, site architecture, site speed and so forth.

Why is technical SEO important?

The main objective of technical SEO is to optimize the infrastructure of a website. With technical SEO, you can help search engines access, crawl, interpret and index your site without any hassle.

What are the most important elements of technical SEO?

Technical SEO plays a huge role in your organic search position. In this part, I will cover the facets of technical SEO that help improve website visibility in search engines.

Create an XML Sitemap

Generating XML sitemaps in WordPress

Basically, a sitemap is an XML file which lists all the major pages of your website. A simple analogy is the residential street that branches off the main road to your home: anyone who wants to visit simply follows the route from its starting point. In the same way, a sitemap is a pathway that lets visitors, such as search engine crawlers, locate the pages of your site.

Moreover, a sitemap tells a crawler which pages and files you think are important on your website, and also provides valuable information about those files: for example, when the page was last updated, how often the page is changed, and any alternate language versions of a page.

Before creating a sitemap, you should:

Choose which pages on your site should be crawled by Google, and determine the canonical version of each page.

Choose which sitemap format you would like to use. An XML sitemap is recommended for web crawlers, whereas an HTML sitemap is for user navigation.

Make your sitemap available to Google by adding it to your robots.txt file or directly submitting it to Search Console.

How to create a sitemap?

If you are a WordPress user, you have probably installed the Yoast SEO plugin. Yoast automatically generates a sitemap of your site, as do most other search engine optimization plugins.

You can easily see the sitemap in Yoast by clicking (SEO>General>Features>XML Sitemap)

https://lh5.googleusercontent.com/jQ29mGfH53u7t1hJ1MoFEJfxayqA2hva5rNGYIDrXwhP39hQkMvkGXsAUhgFgbTSNgvbnSTnwqGRMK_hxc7SW6JP6XO1J7I-IU1Gm4azDBaCi_ApAC9jdPkVKSgxqB-ahf3WiwzR

You can also create a sitemap using third-party tools such as Screaming Frog, or online using a sitemap generator.
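For readers who want to see what such a file contains, here is a minimal sketch that builds a sitemap with Python's standard library. It follows the sitemaps.org schema with `loc` and `lastmod` entries. The page list, dates, and the function name `build_sitemap` are placeholders for illustration, and a plugin-generated sitemap will usually contain more detail.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from an iterable of (url, last_modified) tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # lastmod tells crawlers when the page last changed (YYYY-MM-DD).
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


print(build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/about/", "2024-01-10"),
]))
```

The resulting text would be saved as sitemap.xml in the site root and then referenced from robots.txt or submitted in Search Console.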

Check for robots.txt

The robots.txt file resides in the root of your website. It instructs web robots (typically called search engine bots) how to crawl the pages on your website.

Robots.txt tells the web crawler which pages to access and index and which pages not to. For instance, you can disallow the admin login route in your robots.txt. Keeping search engines from accessing certain pages on your site is vital both for the privacy of your website and for your SEO. Learn more on optimizing robots.txt for SEO.

If you haven't set up robots.txt yet and you are a WordPress user, you can do it easily using Yoast. Simply go to (SEO>Tools>File editor). After you click File editor, the robots.txt file will be generated automatically.

An optimized robots.txt

You can also create a robots.txt manually. Just create a Notepad file with a .txt extension containing valid rules, then upload it to your hosting server.
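Before uploading a hand-written file, it can help to check that the rules behave as intended. The sketch below uses Python's standard `urllib.robotparser` module for that. The rules and URLs are illustrative assumptions (a typical WordPress admin path on a placeholder domain), not a recommended configuration for every site.

```python
from urllib.robotparser import RobotFileParser

# Example rules: block the admin area for all crawlers, allow everything else.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Sitemap: https://example.com/sitemap.xml
"""


def is_allowed(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given rules let user_agent crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(ROBOTS_TXT, "https://example.com/wp-admin/"))    # False
print(is_allowed(ROBOTS_TXT, "https://example.com/blog/hello/"))  # True
```

A single overly broad rule such as `Disallow: /` would make every check return False, which is an easy mistake to catch this way.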

Set Up Google Analytics

Google Analytics is a web analytics service typically used for tracking and reporting website traffic. Setting up Google Analytics is one of the first steps of SEO, as it lets you interpret data such as visitor traffic sources and even page rank. Interestingly, it also functions as an SEO tool for spotting a Google penalty or a ranking fluctuation simply by assessing traffic history.

If you're just starting out, please see this guide on setting up analytics.

Set Up Google Search Console

Google Search Console is a free tool offered by Google that helps you monitor, maintain, and troubleshoot your website's presence in Google Search results.

Google search console error report example

You do not have to register for Search Console to be included in Google Search results, but Search Console makes it possible to understand and improve how Google sees your site. The main reason to use this tool is that it enables webmasters to check indexing status and optimize the visibility of their sites.

If you are new to GSC, please follow these steps before setting up an account.

Set a preferred Domain version of your site

You have to check that only one canonical version of your site is browsable. Using multiple URLs for the same pages leads to duplicate content problems and negatively affects your SEO performance.

For instance,

http://example.com

http://www.example.com

https://example.com

https://www.example.com

In the preceding example, a visitor could type any of these URLs into the address bar. Make sure that you maintain only a single canonical version of your URLs (such as https://example.com); the rest should either be redirected (301) or use a canonical URL pointing to the preferred version.
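The redirect decision can be expressed as a small function. This is a sketch only: it assumes https://example.com is the preferred version, and in practice the redirect is usually configured in the web server or a WordPress setting rather than in application code. The names `canonical_redirect`, `CANONICAL_SCHEME`, and `CANONICAL_HOST` are made up for the example.

```python
from urllib.parse import urlsplit, urlunsplit

CANONICAL_SCHEME = "https"
CANONICAL_HOST = "example.com"


def canonical_redirect(url: str):
    """Return the URL to 301-redirect to, or None if no redirect is needed."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    bare_host = host[4:] if host.startswith("www.") else host
    if bare_host != CANONICAL_HOST:
        return None  # Not one of this site's variants; leave it alone.
    if parts.scheme == CANONICAL_SCHEME and host == CANONICAL_HOST:
        return None  # Already the preferred version.
    return urlunsplit(
        (CANONICAL_SCHEME, CANONICAL_HOST, parts.path or "/", parts.query, parts.fragment)
    )


for variant in ("http://example.com", "http://www.example.com/about",
                "https://www.example.com/about", "https://example.com/about"):
    print(variant, "->", canonical_redirect(variant))
```

Only the last variant returns None, because it is already the canonical form; the other three map to the https://example.com equivalent.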

Make Your Website Connection Secure (via HTTPS)

A site with an SSL certificate installed

In the past, Google was not much concerned about HTTPS on every site. In 2014, Google announced that they wanted to see 'HTTPS everywhere', and that secure HTTPS sites were going to be given preference over non-secure ones in search results. With this, Google indicated that using SSL can be a ranking signal. Using SSL does not just mean you're optimizing for Google; it means you are also taking care of your users' data privacy and, ultimately, your site's security.

So, if you're still using the unencrypted version of your URLs (HTTP), it's time to switch to HTTPS. This can be done by installing an SSL certificate on your website.

Learn more about configuring SSL certificates for your website.

Site Structure

Site structure, or architecture, describes how you organize your website's content. It's far better to have a solid understanding of how to structure a site before working on it. A website structure should answer questions like:

How is website content grouped?

How are they linked?

How is it accessed?

Proper site structure improves user experience and ultimately boosts a website's organic search ranking. Google hates sites that are badly maintained. Additionally, keeping a straightforward, SEO-friendly site design helps spiders crawl the entire content without difficulty.

Picture Source: Backlinko

As Google states on its website, "Our mission is to organize the world's information and make it universally accessible and useful." Google rewards sites which are well maintained.

Use Breadcrumb Menus & Navigation

https://lh3.googleusercontent.com/-BVMpL7fbKHrb25GmJ9dDcs_8hgcAKEsAOBZn7ubYNAHEka_cp-hZC7mzyIkwORSCqQ1x1KMW9djDCozfKosgJ9e_mcn0XTvFSgG0FB7rlinjjq-aCS1Oxu52vdmPvCCxPaNmDXz

A breadcrumb menu is a set of links at the top or bottom of a page that allows users to navigate to a desired page, such as the homepage or a category page.

A breadcrumb menu serves two main functions:

It makes it easier for users to navigate to a certain page without needing to press the back button in their browsers.

It helps search engines understand the structure of a website.

Implementing breadcrumbs is important in SEO, as they're highly recommended by Google.

If you don't already have breadcrumbs enabled, make sure to enable them. If you're a WordPress user, you can add breadcrumb navigation with the Breadcrumb NavXT plugin. Here's a quick guide on configuring breadcrumbs.

If you are looking for an online advertising agency that delivers a broad assortment of search engine optimization services, Orka Socials is pleased to assist you.

Use the Right URL Structure

https://lh4.googleusercontent.com/_8Cetun15yaAr_GY3S8W0AhN4c9XQAy0YXqZB6JqX5HzfYes7NEBTTZPolip0XFn0TVN4ElNhwVu4esOEE5-F53t45AKfyHJNgt5ktSPHNl1APQqTeHAkSrGuSctsJHtx2ZDIOcL

Source: Websparrow

The next item on your technical SEO list is to keep the URL structure of your site SEO friendly. By URL structure we mean the format of your URLs.

Best SEO practices dictate the following about URLs:

Use lowercase characters

Use dashes (-) to separate words in the URL

Make them short and descriptive

Utilize your target keywords in the URL without keyword stuffing

If you're a WordPress user, you can make your URLs SEO friendly by browsing to (Setting>Permalinks) and selecting the post name URL structure.

Generally, once you define the format of your permalink structure, the only thing you will have to do is optimize your URLs when publishing new content.
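The URL rules listed above (lowercase, dashes between words, short and descriptive) are what a "slug" function applies when turning a post title into a URL segment. Here is a minimal sketch. WordPress has its own implementation, so `slugify` below is only an illustration and its handling of non-ASCII characters is a simplifying assumption.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a post title into a lowercase, dash-separated URL segment."""
    # Strip accents by decomposing characters and dropping non-ASCII marks.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    text = text.lower()
    # Replace every run of characters that are not letters or digits with one dash.
    text = re.sub(r"[^a-z0-9]+", "-", text)
    return text.strip("-")


print(slugify("Technical SEO: Everything You Need To Know!"))
# prints: technical-seo-everything-you-need-to-know
```

Shortening the result by hand, for example to `technical-seo-guide`, keeps the URL descriptive without stuffing it with keywords.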

Speed Up Your Website

Google has clearly stated that website speed is one of its ranking factors. Google mentions the importance of speed in all its SEO recommendations, and studies confirm that faster sites perform better than slower websites. Google loves to find sites that provide a great user experience.

Tackling website speed is a technical issue, and it requires making changes to your website and infrastructure to get good results.

The first thing to do is measure your site speed using the most popular tools:

Google PageSpeed Insights

GTmetrix

WebPageTest

Pingdom

These tools will give you recommendations on what you need to change to improve your speed, but as I mentioned previously, it is a technical issue and you might have to hire a developer to help you.

Picture Source: CrazyEGG

Generally, below are some tips on optimizing website speed:

Upgrade to the latest version of PHP

Optimize the size of your images. You will find tools to help you do this without sacrificing quality.

Minimize use of unnecessary plugins

Update WordPress and all plugins to the latest versions

Use a minimalist/lightweight WordPress theme. Better still, invest in a custom-made theme.

Optimize and minify your CSS and JS files

Avoid adding a lot of scripts in the <head> of your website

Use asynchronous JavaScript loading

Do a security audit and repair loopholes

Mobile Friendliness

Having a mobile-friendly website is mandatory. Most likely the vast majority of your users are on mobile, and with the introduction of the mobile-first index by Google, if you do not have a fast, mobile-friendly site your rankings are likely to suffer.

Mobile-friendliness is a part of technical SEO because once you have a mobile-friendly theme that is properly configured, you do not need to deal with this again.

The very first thing to do is check the mobile-friendliness of your site using the Google Mobile Friendly Test. If your website doesn't pass the test, you have a good deal of work to do and this should be your first priority. Even if it does pass the test, there are a number of things that you need to know about mobile and SEO.

Your mobile site needs to have exactly the same content as your desktop website. With the introduction of the mobile-first index, Google will try to rank mobile websites based on their mobile content, so any content that you have on your desktop site also needs to be accessible on mobile.

Consider Using AMP

Image Source: Relevance

Accelerated Mobile Pages (AMP) is a comparatively new concept introduced by Google in its attempt to make the mobile web faster.

In simple terms, with AMP you provide a version of your site using AMP HTML, which is an optimized version of regular HTML.

Once you create AMP pages for your site, these are stored and served to users via a special Google cache which loads faster (almost instantly) than mobile-friendly pages.

AMP pages are only available through the Google mobile results or through other AMP providers such as Twitter.

There's a long debate in the SEO community as to whether you should adopt AMP pages; there are both advantages and disadvantages to using this approach.

Eliminate Dead Links

A 404 error, or dead link, means that a page cannot be accessed. This is usually the result of broken links.

This can in turn lead to a drop in the traffic driven to your site. If a page returns an error, remove all links leading to the error page, or point it to another resource using a 301 redirect.

Example of using a canonical tag

Having duplicate content may significantly affect your SEO performance.

Google will normally show just one of the duplicate pages, filtering the other instances out of its index and search results, and this page may not be the one you want to rank.

Use the rel="next" and rel="prev" link attributes to fix pagination duplicates.

Additionally, instruct Googlebot to handle URL parameters differently using Google Search Console. On e-commerce sites in particular, handling URL parameters is a daunting task, especially with faceted navigation. In that case, you can make the site SEO friendly by excluding some URL parameters in Google Search Console.

https://lh5.googleusercontent.com/WqiRncQRb5kcMHVLvQ8i0Il_xh-EHMEMF-dVTvS5tSkFkmEkTTG_FW9cPWLaSOR_bZ6Ya2kS_3zrrwxMyZJKCpqu6fMpgrmrUoJGunmJZ7P7rZlYNFSFJtE8EwrvE4Z5SCErhvIG

Use Hreflang For Multilingual Sites

Hreflang is an HTML attribute used to define the language and geographic targeting of a page. If you have multiple versions of the same page in different languages, you can use the hreflang tag to tell search engines such as Google about these variations. This also lets them serve the correct version to their users.
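As an illustration, the sketch below generates the `<link rel="alternate" hreflang="...">` tags that would go in the head of each language version. The language codes, URLs, and the function name `hreflang_links` are placeholders assumed for this example; a multilingual plugin would normally produce these tags for you.

```python
from html import escape


def hreflang_links(versions):
    """Build hreflang link tags from a mapping of language code -> page URL."""
    return [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in versions.items()
    ]


for tag in hreflang_links({
    "en": "https://example.com/",
    "de": "https://example.com/de/",
    "x-default": "https://example.com/",
}):
    print(tag)
```

Each language version of the page should carry the same full set of tags, including one that points to itself.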

Proper execution of hreflang for multipurpose site

Picture Source: Moz
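As a rough illustration of what the hreflang markup looks like, the Python sketch below generates one alternate link per language version. The language codes and example.com URLs are made up for the example; the x-default entry is optional and marks the fallback page.

```python
from html import escape


def hreflang_tags(versions, default=None):
    """Build alternate link tags from a {language code: URL} mapping."""
    tags = [
        '<link rel="alternate" hreflang="%s" href="%s">' % (escape(code), escape(url))
        for code, url in sorted(versions.items())
    ]
    if default is not None:
        # x-default tells search engines which page to use when no language matches.
        tags.append('<link rel="alternate" hreflang="x-default" href="%s">' % escape(default))
    return tags


if __name__ == "__main__":
    pages = {
        "en-gb": "https://example.com/uk/",
        "de": "https://example.com/de/",
    }
    for tag in hreflang_tags(pages, default="https://example.com/"):
        print(tag)
```

Each language version of the page should carry the full set of tags, including a tag that points to itself.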

Implement Structured Data Markup

Structured data, or Schema markup, has become more and more important in the last few years. It is now used far more heavily by webmasters than in the past.

Basically, structured data is code you can add to your web pages that is visible to search engine crawlers and helps them understand the context of your content. It is a way to describe your information to search engines in a language they can understand.

It is a bit technical and is regarded as an aspect of technical SEO, since you need to add a code snippet to the page before it can be reflected in search results.

If you are a WordPress user, you can easily implement it, even without schema markup plugins.

What's the benefit of using structured data?

It can help you enhance the appearance of your listings in the SERPs through featured snippets, knowledge graph entries and so on, and raise your click-through rate (CTR).
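For readers who have not seen such a snippet, the following Python sketch produces a small JSON-LD block using the schema.org Article type. The headline, author and date are placeholder values, and a real page would usually carry more properties than this.

```python
import json


def article_json_ld(headline, author, date_published):
    """Return a JSON-LD script element describing an article (schema.org Article)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
    }
    # Escape "</" so a value can never close the script element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">%s</script>' % payload


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(article_json_ld("Technical SEO basics", "Jane Doe", "2020-08-01"))
```

The resulting script element goes into the page's HTML, where crawlers can read it without it affecting what visitors see.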

Closing Thought

Technical SEO covers a wide range of areas that need to be optimized so that search engine spiders can crawl, render and index your content with ease. In most cases, if it is done properly according to SEO guidelines, you will not run into issues during a complete site audit.

The term "technical" implies that you need a solid understanding of technical aspects such as robots.txt optimization, page speed optimization, AMP, structured data and so on. So be careful when implementing them.

Do you have any questions about technical SEO? Feel free to ask us in the comments below.

Happy Reading


How to Create a robots.txt file in WordPress | Wordpress Robots txt

How to create Wordpress Robots txt File Step by step guide

How do you create a robots.txt file for WordPress? The robots.txt file plays an important role in the SEO of a WordPress blog: it decides how search engine bots crawl your blog or website. Today I am going to explain how a robots.txt file is created and updated for WordPress. Be careful, because even a small mistake while editing robots.txt can keep your blog from being indexed by search engines. So let's see what a robots.txt file is and how to create a good one for WordPress.


What is Robots.txt?

Whenever search engine bots come to your blog, they follow all the links on the page, then crawl and index them. Only after this do your blog, posts and pages show up in search engines like Google, Bing, Yahoo and Yandex.

As its name suggests, robots.txt is a text file that resides in the root directory of the website. Search engine bots follow the rules laid down in robots.txt when they crawl your blog.

Apart from posts, a blog or website contains many other things, such as pages, categories, tags and comments, and not all of them are useful to a search engine. Search traffic generally arrives at a blog's main URL (https://ift.tt/3iT2kWl), posts, pages or images; things like archives, pagination and wp-admin are not necessary for the search engine. This is where robots.txt instructs search engine bots not to crawl such unnecessary pages.

If you have ever received an index coverage email in your Gmail, you must have seen this kind of message:

New issue found: Submitted URL blocked by robots.txt

Here robots.txt does not allow your URL to be crawled, which is why that URL is blocked for the search engine. In other words, the robots.txt file decides which web pages of your blog can be crawled and shown in Google or Bing and which cannot. A mistake here can remove your entire blog from search engines, so new bloggers are often afraid to create the file themselves.

If you have not yet updated the robots.txt file on your blog, first understand some of its basic rules and then create an SEO-optimized robots.txt file for your blog.

How to Create WordPress Robots.txt file?

Every WordPress blog comes with a default robots.txt file. But for better performance and SEO, you should customize it to suit your blog.

WordPress default robots.txt

User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
Sitemap: [Blog URL]/sitemap.xml

As you can see, robots.txt uses a small syntax of its own. Many bloggers copy this syntax into their blog without understanding it. Learn what each directive means first, and then you can write proper robots.txt code for your blog yourself.

User-agent: names the search engine crawler (bot) that the rules which follow apply to.
User-agent: * means the rules apply to all search engine bots (e.g. Googlebot, Bingbot).
User-agent: googlebot means the rules apply only to Google's bot.
Allow: lets search engine bots crawl the listed pages and folders.
Disallow: stops bots from crawling the listed pages and folders. It does not restrict access for human visitors.

1. Use this if you want all the pages and directories of your site to be crawled and indexed. You may have seen this syntax in the Blogger robots.txt file.

User-agent: *
Disallow:

2. This code, by contrast, blocks all the pages and directories of your site from being crawled.

User-agent: *
Disallow: /

3. Use this code only if you use AdSense. It is for the AdSense bots that manage ads.

User-agent: Mediapartners-Google
Allow: /

Example: suppose a site has the following robots.txt file. Let us see what its rules mean.

User-agent: *
Allow: /wp-content/uploads/
Disallow: /wp-content/plugins/
Disallow: /wp-admin/
Disallow: /archives/
Disallow: /refer/
Sitemap: https://ift.tt/1M7RHY8

The images and files you upload from within WordPress are saved in /wp-content/uploads/, so the Allow rule permits them to be crawled and indexed.

Below is the robots.txt code that I use. You do not have to use the same code.

User-agent: *
Disallow: /cgi-bin/
Disallow: /wp-admin/
Disallow: /archives/
Disallow: /*?*
Disallow: /comments/feed/
Disallow: /refer/
Disallow: /index.php
Disallow: /wp-content/plugins/

User-agent: Mediapartners-Google
Allow: /

User-agent: Googlebot-Image
Allow: /wp-content/uploads/

User-agent: Adsbot-Google
Allow: /

User-agent: Googlebot-Mobile
Allow: /

Sitemap: https://ift.tt/1M7RHY8

Note: replace the Sitemap URL here with the sitemap of your own blog.
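Before publishing rules like these, it can help to test a few URLs against them locally. The sketch below uses Python's standard urllib.robotparser module with a shortened, hypothetical rule set and example.com URLs. Note that this parser does plain prefix matching and applies the first rule that matches, so it does not understand wildcard patterns such as /*?* and can disagree with Google when an Allow line follows a broader Disallow line; treat it as a rough check, not as a model of how Googlebot behaves.

```python
from urllib.robotparser import RobotFileParser

# Shortened sample rules, chosen to avoid wildcards (assumption for illustration).
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-content/plugins/
Allow: /wp-content/uploads/
"""


def build_parser(text):
    """Parse robots.txt text that is already in memory (no network access)."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


def report(parser, urls, agent="*"):
    """Map each URL to True (may be crawled) or False (blocked) for the agent."""
    return {url: parser.can_fetch(agent, url) for url in urls}


if __name__ == "__main__":
    parser = build_parser(ROBOTS_TXT)
    checks = report(parser, [
        "https://example.com/wp-admin/options.php",
        "https://example.com/wp-content/uploads/photo.png",
        "https://example.com/hello-world/",
    ])
    for url, allowed in checks.items():
        print("allowed" if allowed else "blocked", url)
```

If a post or page you expect to rank comes back as blocked, fix the rules before uploading the file.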

How to update robots.txt file in wordpress

You can update the robots.txt file manually in the root directory of your WordPress hosting, or use one of the many plugins that let you edit it from the WordPress dashboard. Today I will show you the easiest way: updating the robots.txt code on a WordPress blog with the help of the Yoast SEO plugin.

Step 1: If you have a WordPress blog, you are probably already using the Yoast SEO plugin. Go to Yoast SEO and click on Tools. Three tools will open in front of you; click on File Editor.

Step 2: On the page that opens, click the Create robots.txt file button under the Robots.txt section.

Step 3: In the box that appears under Robots.txt, remove the default code, paste the robots.txt code given above and click Save changes to robots.txt.

After updating the robots.txt file in WordPress, check it for errors with the robots.txt tester tool in Search Console. This tool automatically fetches your website's robots.txt file and shows any errors and warnings.

The main goal of your robots.txt file is to prevent search engines from crawling pages that do not need to be public. I hope this guide helps you create an SEO-optimized robots.txt file.

Conclusion: This article has been a complete guide to creating a robots.txt file in WordPress. Check your robots.txt with a robots.txt checker. If you liked this article, help other bloggers by sharing this post as much as possible.


How we conduct an SEO review

When it comes to your business, how your website performs can make or break your brand. If you want to drive traffic to your website and expand your reach, you need to start with an SEO website audit. This first step is crucial for all good digital marketing strategies. Without it, you cannot identify gaping website errors and work to enhance the user experience. Basically, your customers will leave in their droves.

Why do I need a review?

Trust us, you do. In general terms, an SEO site audit looks at how well you perform compared to your competitors, and what you can do to get on top. By analysing the likes of site speed, keyword strategy and link building tips, you can start to implement a plan to get onto that all-important first page of Google and in front of your consumers. In number terms, 67% of all clicks on the SERPs go to the top five listings. It starts to look a bit grim as you get further down the page, with results stating that 95% of all traffic goes to the first page of Google. If you're not on that first page, you're sharing 5% with other competitors and the rest of the world. If you don't get a search engine marketing plan and audit sorted soon, you risk losing valued customers. When it comes to our SEO audit service, we can split it down into five basic steps:

big issues

product filters/AMP

content marketing

digital outreach

local SEO

Step 1: big issues

First things first, we need to assess the big issues and understand what we are dealing with. We'll crawl your entire website to identify any areas that keep us digital marketers up at night. We use the likes of Pingdom and GTmetrix to measure speed and look for solutions. For example, these tools provide data on any bottlenecks and give a good picture of how long your website takes to load. We know customers are fickle, and they won't wait around for long. When it comes to improving site speed, we can advise on the likes of:

minimising redirects

combining external JavaScript files, as merging them can make rendering quicker

minimising request size

combining images by using CSS sprites

placing the site on a content delivery network, which means your customers are served data from their nearest location

Google Search Console

Dashboard errors

Dashboard errors can cause significant problems for your site. We'll look for DNS (Domain Name System) errors as part of our SEO audit service. Basically, this error means Googlebot cannot connect to your domain. Therefore, neither can your customers.

Server connectivity

A technical SEO audit is essential if your site is having trouble connecting to your server and therefore timing out. We're all busy, and we'll only wait so long, so you need immediate help. A one-off issue may not be much cause for concern, but repeated issues point to more trouble ahead.

Robots.txt fetch

An SEO review will consider whether Googlebot can retrieve your robots.txt file. It's an easy fix: a developer can create the file and upload it to the root of your server.

Crawl rate

When we are crawling pages as part of a technical SEO audit, we like to see a steady increase. Any heavy drops in pages crawled per day and kilobytes downloaded per day may indicate we're looking at a broader issue. The charts for both should look similar. Lastly, time spent downloading a page should be fairly steady, unless there was a slow server at some point.

Sitemap

Analysing the sitemap shows whether indexing is the root cause of any issues. If you have a large number of URLs submitted but only a few indexed, there's a problem. An SEO website audit will look to measure the figures. You may want to revisit your sitemap structure if you do not have a sitemap index with smaller sitemap files, as without one you can't identify which files or categories are not getting indexed.

Step 2: product filters/AMP

Product filters and structured data are only important for those with a large number of products. If you specialise in, for example, 10 niche products, structured data may not benefit your site. However, this will all be covered as part of an SEO site audit. Structured data does, however, help Google find information for your local Google My Business card. For example, it will include your social media accounts, making it easy for your customers to reach your team.

AMP

AMP, or Accelerated Mobile Pages, means websites load almost instantly, offering a more user-friendly experience on mobile. An average of 67% of consumers worldwide access websites via their mobile device, but conversions for mobile still fall significantly below those of desktop. Therefore, the likes of eBay are using AMP to get consumers onto the site. We've got more info on the benefits, and on whether Google treats it as a ranking factor, here.

Step 3: content marketing

When it comes to a technical SEO audit, content marketing is intrinsic to the success of your brand. As part of a content marketing strategy, you need to research keywords and analyse your competitors. By doing so, you can identify content gaps in the market and look to fill them.

Keyword research

If you don't know the buying terms within your industry, you've got nowhere to start. As mentioned above, consumers don't tend to go further than the first page, so you need to be at the top of the search engines for that particular term. When producing content, we'll always use keyword research tools to make sure the content is optimised and performing the way it should. For example, the likes of SEMrush, Ahrefs and Keywords Explorer are our best friends.

Blog

Do you have a blog? How often do you post? Are your articles relevant to your industry? If you have a blog with a regular posting schedule, you can start to rank for more long-tail keywords and further promote your products through the likes of internal links from the blog. Examples of blog ideas include new product descriptions, industry news, company events or even brand spotlights.

Video content

As the industry evolves, so do your consumers. Video is one of the latest trends to emerge from digital marketing. To put it in perspective, more video content is uploaded in 30 days than the major US television networks have created in 30 years. We recommend looking into video for your content, particularly if you boast a range of innovative products. Video can also help with outreach, as well as providing content for your social media platforms.

Other tools

The likes of Google Trends can also provide insights into the most popular topics in your industry, and even the peaks when they are most searched by your ideal consumers.

Step 4: digital PR

Digital PR and content marketing come as a package deal, basically. You can't do one without the other. It's all well and good producing great content and posting it on your blog, but you need people to see it. Good link building gets you onto relevant websites and in front of the right audience. Our SEO audit services look at:

building the basics: placing your brand in directories

sourcing brand mentions and obtaining a link back to your site

communicating with your stockists and suppliers to gain links

blogger collaborations

press releases for new products and so on

content placement on high-DA sites

answering media requests


Step 5: local SEO

For smaller businesses, starting local lays the foundations for global domination. An SEO review will identify the local keywords to rank for, such as 'Newcastle cake company', and look to optimise your site locally. For example, our SEO review will consider meta titles and the benefits of adding a location to a particular page. It may also be worth adding a location page that details all of the towns your company operates in. Your Google My Business card should also be up to date, providing your customers with all the information on your brand: contact details, opening times, peak hours, upcoming events and even blog posts. If you would like to talk about what our SEO agency can do for you and discuss an SEO site audit, simply fill in the form below.

Dan

Dan is a self-taught developer who has created hundreds of websites over the last 9 years. Here at Flow, he builds websites to client specifications and helps maintain existing client systems using his knowledge of PHP, HTML, JavaScript and CSS. Whether the problem is big or small, Dan will find a way to get a project back on track.


ESP32 Arduino Tutorial SPIFFS:8. File upload IDE plugin

In this tutorial, we will check how to use an Arduino IDE plugin to upload files to the SPIFFS ESP32 file system.

The plugin we are going to use can be found here. The installation of the plugin is very simple and you can follow the guide detailed here. After following all the installation steps, make sure to confirm that the plugin was correctly installed by going to the tools menu of the Arduino IDE and checking if a new “ESP32 Sketch Data Upload” entry is available there.

This tool will allow us to upload the files directly from a computer’s folder to the ESP32 SPIFFS file system, making it much easier to work with files.

Note that when using the tool to upload files, it will delete any previously existing files in the ESP32 file system.

Important: At the time of writing, as indicated in this GitHub issue, there are some file corruption problems when uploading files with this tool. As suggested in the comments, the easiest fix is to update the Arduino core to the latest version and run the get.exe file to update the SPIFFS tools to the latest version. The get.exe file should be located in the /hardware/espressif/esp32/tools path, under the Arduino installation folder, according to the Arduino core installation instructions.

The tests were performed using DFRobot's ESP32 module integrated in an ESP32 development board.

Uploading files

Uploading files with this tool is really simple, as can be seen in the GitHub page guide. Basically, we need to start by creating a new Arduino sketch and saving it, as shown in figure 1. You can name it however you want.

Figure 1 – Creating and saving a new Arduino sketch.

After saving it, we need to go to the sketch folder. You can look for it in your computer's file system, or you can simply go to the Sketch menu and click on "Show Sketch Folder".

Figure 2 – Going to the sketch folder.

On the sketch folder, we need to create a folder named data, as indicated in figure 3. It will be there that we will put the files to be uploaded.

Figure 3 – Creating the “data” folder.

Inside the data folder, we will create a .txt file named test_plugin, as shown in figure 4.

Figure 4 – Creating the file to upload to the SPIFFS file system.

After creating the file we will open it, write some content and save it. You can check below at figure 5 the content I’ve added to mine. You can write other content if you want.

Figure 5 – Adding some content to the file that will be uploaded.
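Before running the upload, it can be handy to confirm from a script that the data folder is where the plugin expects it and to see which files will be sent. The Python sketch below is only a convenience check run on the computer, not part of the plugin; the function name is made up for this example, and it assumes the layout described above (a data folder directly inside the sketch folder).

```python
from pathlib import Path


def list_data_files(sketch_dir):
    """Return (path on the device, size in bytes) for each file in <sketch_dir>/data."""
    data_dir = Path(sketch_dir) / "data"
    if not data_dir.is_dir():
        raise FileNotFoundError("no 'data' folder found in %s" % sketch_dir)
    return sorted(
        ("/" + path.relative_to(data_dir).as_posix(), path.stat().st_size)
        for path in data_dir.rglob("*")
        if path.is_file()
    )


if __name__ == "__main__":
    # Run from inside the sketch folder; "." is an assumption for illustration.
    for name, size in list_data_files("."):
        print(name, size, "bytes")
```

For the file created in this tutorial, the listing should contain a single entry named /test_plugin.txt, which is the same path the testing code later opens.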

Finally, we go back to the Arduino IDE and under the Tools menu we simply need to click on the “ESP32 Sketch Data Upload” entry, as shown below at figure 6. Note that the Serial Monitor should be closed when uploading the files.

Figure 6 – Uploading the files with the Arduino IDE plugin.

The procedure may take a while depending on the size of the file. It will be signaled at the bottom of the Arduino IDE, in the console, when everything is finished, as shown in figure 7.

Figure 7 – Uploading the files.

After the procedure is finished the file should be on the ESP32 file system, as we will confirm below.

Testing code

To confirm that the file was correctly uploaded to the file system, we will create a simple program to open the file and read its content. If you need a detailed tutorial on how to read files from the SPIFFS file system, please check here.

We start our code by including the SPIFFS.h library, so we can access all the file system related functions.

#include "SPIFFS.h"

Moving on to the Arduino setup, we open a serial connection, so we can later output the contents of the file. After that, we will mount the file system, by calling the begin method on the SPIFFS extern variable.

Serial.begin(115200);

if (!SPIFFS.begin(true)) {
  Serial.println("An Error has occurred while mounting SPIFFS");
  return;
}

Next, we will open the file for reading. This is done by calling the open method on the SPIFFS extern variable, passing as input the name of the file. Note that the file should have been created in the root directory, with the same name and extension as the one we had on the computer.

So, we should pass the string “/test_plugin.txt” as input of the open method. Note that, by default, the open method will open the file in reading mode, so we don’t need to explicitly specify the mode.

File file = SPIFFS.open("/test_plugin.txt");

Now that we have opened the file for reading, we will check if there was no problem with the procedure and if everything is fine, we will read the content of the file while there are bytes available to read.

if (!file) {
  Serial.println("Failed to open file for reading");
  return;
}

Serial.println("File Content:");

while (file.available()) {
  Serial.write(file.read());
}

After all the content was retrieved and printed to the serial port, we will close the file with a call to the close method on our File object. The final code can be seen below and already includes this method call.

#include "SPIFFS.h"

void setup() {
  Serial.begin(115200);

  // Mount the file system; passing true formats SPIFFS if mounting fails
  if (!SPIFFS.begin(true)) {
    Serial.println("An Error has occurred while mounting SPIFFS");
    return;
  }

  // Open the uploaded file (read mode is the default)
  File file = SPIFFS.open("/test_plugin.txt");
  if (!file) {
    Serial.println("Failed to open file for reading");
    return;
  }

  // Print the content byte by byte
  Serial.println("File Content:");
  while (file.available()) {
    Serial.write(file.read());
  }

  file.close();
}

void loop() {}

After uploading the code to the ESP32 and running it with the Serial monitor opened, you should get an output similar to figure 8, which shows the content of the file that we have written, indicating that the file was successfully uploaded to the SPIFFS file system.

Figure 8 – Output of the program.

DFRobot supply lots of esp32 arduino tutorials and esp32 projects for makers to learn.


10 Compelling Reasons Why You Need Seo Ekspert

You Can Rise Up Through The Ranks Using SEO

Good search engine optimization is essential for every successful online business. It is often hard to know which SEO techniques are the most effective at getting your company's website ranked highly on SERPs for your targeted keywords. The following are some simple SEO tips that will help you choose the best SEO methods to use:

Keyword density is important when optimizing a web page for search engines. The total use of keywords on any given page should be less than 20 percent.

If you are going to spend money at all on your SEO efforts, a wise buy would be to open a PPC account. A pay-per-click campaign with Google or any other competitor will help you get your site ranked highly in a hurry. There is nothing the big companies behind search engines like more than money, so it is a "shortcut" for the people who can afford it.

Try creating a robots.txt file and adding it to your root directory. This will keep search engines from accessing the files you choose not to display.

Use inbound, external links to optimize search engine results. Linking to other websites can bring the traffic you want and encourage better placement in real-time searches.

Search engines crawl your entire site by default, so you should include a robots.txt file to exclude pages that are not relevant to your site's topic. Write the file and upload it to the root directory of the site. This tells the search engine what to ignore right away, so it does not waste time going through information that is not important.

Write for your readers, not for the search engine. Search engines are more intelligent these days than was ever thought possible. They can evaluate content much as a real human reader would react to it. If you repeat keywords too many times, a search engine will realize what you are doing and rank your site lower.

A 404 error occurs when a user tries to visit a page that no longer exists or never existed in the first place. When you replace pages with new links, be sure to use a 301 redirect.

You should make use of the keyword tool from Google AdWords to optimize for search engines. The AdWords tool will show you the number of searches for a word or phrase that you enter.

To rank higher in search engine results, you should use an index page or a page that you can link to. Your content is going to vary and be updated, so an individual page might not interest people for long. You can, however, climb steadily in search results by always referring to the same index page.

Make sure your keyword density isn't too high or too low. A lot of people make the mistake of thinking that the more times their keyword is mentioned, the higher in the rankings they will go. Doing this actually causes the engines to label your site as spam and keep it low. Try to aim for using your keyword in no more than 7% of the content on your page.
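Keyword density has no single official formula, so the Python sketch below uses one common working definition as an assumption: the number of words that belong to occurrences of the keyword phrase, divided by the total number of words on the page. The sample sentence is invented for the example.

```python
import re

WORD = re.compile(r"[a-z0-9']+")


def keyword_density(text, phrase):
    """Return the share of words in text taken up by the phrase (0.0 to 1.0)."""
    words = WORD.findall(text.lower())
    target = WORD.findall(phrase.lower())
    if not words or not target:
        return 0.0
    size = len(target)
    hits = 0
    index = 0
    # Count non-overlapping occurrences of the phrase, word by word.
    while index <= len(words) - size:
        if words[index:index + size] == target:
            hits += 1
            index += size
        else:
            index += 1
    return hits * size / len(words)


if __name__ == "__main__":
    sample = "SEO tips for SEO beginners and SEO experts alike today"
    print("%.0f%%" % (100 * keyword_density(sample, "seo")))
```

A result well above the 7% mentioned above would be a sign that the page repeats its keyword too often.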

When getting ready to place your ad online, you will need to know how keyword density, frequency and proximity all affect search engine ranking. Make sure you do your research, so that you are marketing your business in a way that will bring in more business than you are currently getting.

Search engine optimization

When using SEO on a page that has images, be sure to make ample use of the alt attribute on the image tag. Search engines cannot view images and so cannot index them. However, if you include relevant text in the alt attribute, the search engine can base its guess about the image on that text and improve your site's ranking.

Do not let search engine optimization take over your online business! True, SEO is an important part of building your site. But SEO should never be as important as satisfying your customers. If you spend so much time on SEO that you find yourself neglecting your customers, you should reassess your priorities.

To see if your SEO efforts are working, check where you stand in search engine rankings. There are many programs and tools that do this, like Google Toolbar and Alexa. If you do not check, you could be wasting your time thinking that your SEO is working when it isn't.

Good copywriting is at the core of search engine optimization. In addition, the search engines, especially Google, have ways of determining how well a piece is written and how useful it is, both of which are considered in search engine ranking.

There are a lot of marketing and SEO services out there that claim they can work wonders in promoting your site or products, but you have to be very aware of scams in this area. A service that promises to direct a large volume of traffic in a short period of time is probably too good to be true. Always get the opinions of others before parting with your money. There are several good forums where you can go for advice.

Learning SEO takes time, but hopefully this article has given you a few useful SEO tips that will help to raise your site's position on search engine results pages. As your site's ranking rises, it should start to get more targeted, organic traffic. As a result, your business should start to get more customers.


In this article, four methods will be shown on how to export MySQL data to a CSV file. The first method will explain the exporting process by using the SELECT INTO … OUTFILE statement. Next, the CSV Engine will be used to achieve the same. After that, the mysqldump client utility will be used and in the end, the Export to CSV feature from the ApexSQL Database Power Tools for VS Code extension will be used to export MySQL data.

Throughout this article, the following code will be used as an example:

CREATE DATABASE `addresses`;

USE `addresses`;

CREATE TABLE `location` (
  `address_id` int(11) NOT NULL AUTO_INCREMENT,
  `address` varchar(50) NOT NULL,
  `address2` varchar(50) DEFAULT NULL,
  PRIMARY KEY (`address_id`)
) ENGINE=InnoDB DEFAULT CHARSET=latin1;

INSERT INTO location VALUES
  (NULL, '1586 Guaruj Place', '47 MySakila Drive'),
  (NULL, '934 San Felipe de Puerto Plata Street', NULL),
  (NULL, '360 Toulouse Parkway', '270, Toulon Boulevard');

Using SELECT INTO … OUTFILE to export MySQL data

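One of the commonly used export methods is SELECT INTO … OUTFILE. By default this statement writes tab-separated fields, so add FIELDS and LINES clauses to get a comma-separated file. To export MySQL data to a CSV file, execute the following code:

SELECT address, address2, address_id
FROM location
INTO OUTFILE 'C:/ProgramData/MySQL/MySQL Server 8.0/Uploads/location.csv'
FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '"'
LINES TERMINATED BY '\n';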

This method will not be explained in detail since it is well covered in the How to export MySQL data to CSV article.
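Once a file such as location.csv exists, it can be sanity-checked by reading it back. The Python sketch below assumes the file was written with comma separators and double-quote enclosures (which requires FIELDS TERMINATED BY ',' and an ENCLOSED BY '"' clause on the export statement) and that NULL values appear as \N, which is how SELECT … INTO OUTFILE writes them by default. The SAMPLE text is hand-written to mirror the example table and is not real export output; values containing escaped quotes or backslashes are not handled here.

```python
import csv
import io

# Hand-written sample that mirrors the example table (assumption for illustration).
SAMPLE = (
    '"1586 Guaruj Place","47 MySakila Drive",1\n'
    '"934 San Felipe de Puerto Plata Street",\\N,2\n'
    '"360 Toulouse Parkway","270, Toulon Boulevard",3\n'
)


def read_export(text):
    """Parse exported rows and turn the \\N marker back into None."""
    rows = []
    for record in csv.reader(io.StringIO(text), delimiter=",", quotechar='"'):
        rows.append([None if field == "\\N" else field for field in record])
    return rows


if __name__ == "__main__":
    for address, address2, address_id in read_export(SAMPLE):
        print(address_id, address, address2)
```

The third row shows why the enclosing quotes matter: its second address contains a comma, which would otherwise be read as a field separator.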

Using the CSV Engine to export MySQL data

MySQL supports the CSV storage engine. This engine stores data in text files in comma-separated values format.

To export MySQL data using this method, simply change the engine of a table to CSV engine by executing the following command:

ALTER TABLE location ENGINE=CSV;

When executing the above code, the following message may appear:

The storage engine for the table doesn’t support nullable columns

All columns in a table that are created by the CSV storage engine must have NOT NULL attribute. So let’s alter the location table and change the attribute of the column, in our case that is the address2 column.

When executing the ALTER statement for the address2 column:

ALTER TABLE location MODIFY COLUMN address2 varchar(50) NOT NULL;

The following message may appear:

Data truncated for column ‘address2’ at row 2

This message appears because the address2 column already contains a NULL value (in row 2).

Let’s fix that by putting a value in that field and then altering the column again.

Execute the following code to update the address2 column:

UPDATE `addresses`.`location` SET `address2` = "Test" WHERE `address_id`=2;

Now, let’s try again to execute the ALTER statement:

ALTER TABLE location MODIFY COLUMN address2 varchar(50) NOT NULL;

The address2 column will be modified successfully.

After the column has been successfully changed, let’s execute the ALTER statement for changing the storage engine and see what happens:

ALTER TABLE location ENGINE=CSV;

A new problem appears:

The used table type doesn’t support AUTO_INCREMENT columns

As can be assumed, the CSV engine does not support columns with the AUTO_INCREMENT attribute. Execute the code below to remove the AUTO_INCREMENT attribute from the address_id column:

ALTER TABLE location MODIFY address_id INT NOT NULL;

Now that the AUTO_INCREMENT attribute is removed, try again to change the storage engine:

ALTER TABLE location ENGINE=CSV;

This time a new message appears:

Too many keys specified; max 0 keys allowed

This message is telling us that the CSV storage engine does not support indexes (indexing). In our example, to resolve this problem, the PRIMARY KEY attribute needs to be removed from the location table by executing the following code:

ALTER TABLE location DROP PRIMARY KEY;

Execute the code for changing the table storage engine one more time:

ALTER TABLE location ENGINE=CSV;

Finally, the storage engine is changed successfully:

Command executed successfully. 3 row(s) affected.
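The three obstacles hit above (nullable columns, AUTO_INCREMENT, and indexes) can be summarized as a small pre-flight check. The following is only a sketch in Python: the column description format and the function name csv_engine_blockers are hypothetical and are not part of MySQL or any library.

```python
def csv_engine_blockers(columns, has_indexes):
    """Return the reasons a table could not be switched to ENGINE=CSV."""
    problems = []
    for col in columns:
        # The CSV engine rejects nullable columns.
        if col.get("nullable", False):
            problems.append(f"{col['name']}: nullable columns are not supported")
        # The CSV engine rejects AUTO_INCREMENT columns.
        if col.get("auto_increment", False):
            problems.append(f"{col['name']}: AUTO_INCREMENT is not supported")
    # The CSV engine allows no keys at all, including the primary key.
    if has_indexes:
        problems.append("table: indexes (including PRIMARY KEY) are not supported")
    return problems


# The location table as it was originally created in this article.
location = [
    {"name": "address_id", "nullable": False, "auto_increment": True},
    {"name": "address", "nullable": False},
    {"name": "address2", "nullable": True},
]

for problem in csv_engine_blockers(location, has_indexes=True):
    print(problem)
```

An empty result means none of the three restrictions described above applies to the table.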

After the table engine is changed, a data file (.CSV) and a metadata file (.CSM) will be created in the data directory; MySQL versions before 8.0 also create a table definition file (.frm).

All data will be placed in the CSV file (location.CSV).

Note: When exporting MySQL data to CSV using the CSV storage engine, it is better to use a copy of the table and convert it to CSV to avoid corrupting the original table.

Now, let’s create a table without indexes:

CREATE TABLE csv_location AS SELECT * FROM location LIMIT 0;

Then change the storage engine of the newly created table to CSV:

ALTER TABLE csv_location ENGINE=CSV;

And then load data into the newly created table from the location table:

INSERT INTO csv_location SELECT * FROM location;
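Once the data is loaded, the .CSV file of the new table is plain text that any CSV reader can parse. The sketch below uses Python's csv module. The sample text is an assumption about what the file might contain for the three example rows (string values in double quotes); the exact bytes on disk may differ.

```python
import csv
import io

# Hypothetical contents of csv_location.CSV for the example rows;
# the exact on-disk formatting is an assumption made for illustration.
SAMPLE = (
    '1,"1586 Guaruj Place","47 MySakila Drive"\n'
    '2,"934 San Felipe de Puerto Plata Street","Test"\n'
    '3,"360 Toulouse Parkway","270, Toulon Boulevard"\n'
)


def read_rows(text):
    """Parse comma-separated text, honoring double-quoted fields."""
    return list(csv.reader(io.StringIO(text)))


rows = read_rows(SAMPLE)
for row in rows:
    print(row)
```

The third row shows why the quoting matters: the comma inside "270, Toulon Boulevard" stays part of a single field instead of splitting it in two.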

Using the mysqldump client utility to export MySQL data

Another way to export MySQL data is to use the mysqldump client utility. Run it from the Windows command-line interface (CLI), not from the MySQL CLI. If the mysqldump command is typed and executed in the MySQL CLI, the following error message may appear:

ERROR 1064 (42000): You have an error in your SQL syntax; check the manual that corresponds to your MySQL server version for the right syntax to use near ‘mysqldump’ at line 1

This happens because mysqldump is a standalone executable program, not an SQL statement.

Let’s switch to the Windows CLI and execute the mysqldump command. A new problem may appear:

‘mysqldump’ is not recognized as an internal or external command, operable program or batch file.

To resolve this, navigate to the directory where mysqldump.exe is located:

cd C:\Program Files\MySQL\MySQL Server 8.0\bin

Now, execute the mysqldump.exe command. If it prints its usage information instead of the error above, mysqldump works correctly.

To export MySQL data execute the following code:

mysqldump -u <username> -p -T </path/to/directory> <database>

The -u flag is followed by the username used to connect to the MySQL server.

The -p flag makes mysqldump prompt for that user’s password.

The -T flag writes each table to the given directory as a pair of files: a .sql file with the table structure and a tab-separated .txt file with the data. This only works if mysqldump is run on the same machine as the MySQL server.

Note that the specified directory must be writable by the user that the MySQL server runs as; otherwise, the following error may appear when exporting data:

mysqldump: Got error: 1: Can't create/write to file 'H:/ApexSQL/Test/location.txt' (Errcode: 13) when executing 'SELECT INTO OUTFILE'

Execute the following command:

mysqldump -u root -p -T H:/ApexSQL/Test addresses

All tables from the specified MySQL database (addresses) will be exported to the named directory. Every table will have two files: one .sql file and one .txt file:

The .sql files will contain a table structure (SQL for creating a table):

And the .txt files will contain data from a table:
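The .txt files are tab-separated by default and use \N to mark NULL values. As an alternative to changing the delimiter in mysqldump itself, such a file can be converted to CSV afterwards. The following is a minimal Python sketch; the function name tab_dump_to_csv is hypothetical, and it assumes the values contain no escaped tabs, newlines, or backslashes.

```python
import csv
import io

NULL_MARKER = r"\N"  # how NULL is written in the tab-separated dump


def tab_dump_to_csv(text):
    """Convert tab-separated dump text to CSV; NULL becomes an empty field."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    for line in text.splitlines():
        fields = line.split("\t")
        writer.writerow(["" if f == NULL_MARKER else f for f in fields])
    return out.getvalue()


# Illustrative dump of the original location table (row 2 has a NULL address2).
SAMPLE = (
    "1\t1586 Guaruj Place\t47 MySakila Drive\n"
    "2\t934 San Felipe de Puerto Plata Street\t\\N\n"
    "3\t360 Toulouse Parkway\t270, Toulon Boulevard\n"
)

print(tab_dump_to_csv(SAMPLE))
```

The csv writer quotes only the fields that need it, so the value containing a comma is wrapped in double quotes while the others are left bare.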

If you want to export MySQL tables only as .txt files, add the -t flag to the mysqldump command:

mysqldump -u root -p -t -T H:/ApexSQL/Test addresses

This will create a .sql file, but it will be empty:

To export just one table from a MySQL database rather than all tables, add the name of that table after the database name in the mysqldump command:

mysqldump -u root -p -t -T H:/ApexSQL/Test addresses location

With the -T flag, values in the exported files are separated by tabs. The delimiter can be changed with the --fields-terminated-by= flag.

In the example below, a comma (,) is used as the value separator:

mysqldump -u root -p -t -T H:/ApexSQL/Test addresses location --fields-terminated-by=,

The --fields-enclosed-by= flag puts quotes around all values (fields):

mysqldump -u root -p -t -T H:/ApexSQL/Test addresses location --fields-enclosed-by=" --fields-terminated-by=,

When executing the above code, the following error may appear:

mysqldump: Got error: 1083: Field separator argument is not what is expected; check the manual when executing 'SELECT INTO OUTFILE'

To fix that, add a backslash (\) in front of the quote (") in the --fields-enclosed-by flag:

mysqldump -u root -p -t -T H:/ApexSQL/Test addresses location --fields-enclosed-by=\" --fields-terminated-by=,
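The quoting problem above comes from the command shell interpreting the quote character. One way to sidestep it, when the export is scripted, is to pass the arguments as a list so no shell parsing is involved. The sketch below only builds that list in Python; the helper name build_mysqldump_args is hypothetical, and it uses the long forms of the same mysqldump options shown above.

```python
def build_mysqldump_args(user, out_dir, database, table=None,
                         delimiter=",", enclosure='"'):
    """Build a mysqldump argument list for a data-only, per-table text export."""
    args = [
        "mysqldump",
        f"--user={user}",
        "--password",         # no value given, so mysqldump prompts for it
        "--no-create-info",   # long form of -t
        f"--tab={out_dir}",   # long form of -T
        f"--fields-terminated-by={delimiter}",
        f"--fields-enclosed-by={enclosure}",
        database,
    ]
    if table is not None:
        args.append(table)
    return args


args = build_mysqldump_args("root", "H:/ApexSQL/Test", "addresses", "location")
print(args)
# The list could then be handed to subprocess.run(args) on a machine
# where mysqldump is on the PATH and the MySQL server is local.
```

Because each option is a separate list element, the double quote reaches mysqldump as a literal character and needs no backslash.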

The exported MySQL data will look like this:

Using a third-party extension to export MySQL data

In the ApexSQL Database Power Tools for VS Code extension, execute a query whose result set you want to export.

In the top right corner of the result grid, click the Export to CSV button. In the Save As dialog, enter a name for the CSV file and choose a location where the data should be saved.

Just like that, in a few clicks, data from the result set will be exported to CSV.

how to fix Ads.txt file in Adsense | how to upload ads txt to domain root

Related videos:
How to Insert Google AdSense HTML header code into WordPress Website: https://youtu.be/Shd2K5Fkzgo
How to Insert Google AdSense into Website Posts, pages: https://www.youtube.com/watch?v=vDOszeAWhpc&t=1s
How to insert SEO BACKLINKS in to WordPress…

Facebook Domain Verification: Edit Link Previews

Back in September, I provided three tips on how you could continue to edit link previews when creating a Facebook post. This functionality had otherwise been taken away in an effort to combat fake news.

One of the methods I shared with you was claiming link ownership…

At the time, I was frustrated that I didn’t have the ability to claim link ownership. Later I’d find that the path to claim link ownership simply moved.

Let’s take a closer look at how you can once again edit your link previews by using Facebook domain verification.

Editing Link Previews: The Problem

Quick refresher…

Up until late this past summer, Facebook page publishers could edit link thumbnails, titles, and descriptions. But the ability to make those edits was then taken away.

Facebook made this change to prevent bad actors from changing the image thumbnail, title, or description to mislead the reader. Some were taking posts from reputable websites and altering the information to make people think the articles said something they didn’t.

Facebook’s motivation to pull this back was understandable. But what about reputable publishers that simply wanted to make slight adjustments? Maybe the thumbnail image was the wrong dimensions. Maybe there was a typo in the description. Or the description was too long.

Most importantly, what if the publisher owned the content in question?

Domain Verification

Facebook created Domain Verification to allow content owners to overwrite post metadata when publishing content on Facebook.

Within Business Manager under People and Assets, you should now see “Domains” on the left side…

Click the button to Add New Domains…

Enter your domain, and click the button to “Add Domain.”

To verify your domain, you’ll need to either add a DNS TXT record or upload an HTML file. If you don’t manage your website, that may sound like Greek. I honestly don’t truly understand it myself. But Facebook provides the specific steps that should help.

For DNS verification…

The instructions are above. You’ll want to paste the TXT record that Facebook provides (yours will be different) in your DNS configuration. Then come back to that screen in Business Manager and click the “Verify” button.

You could also use the HTML upload route.

In this case, click the link to download the HTML file that Facebook provides. Then upload that file to the root directory of your website prior to clicking “Verify.”

In my case, this process took only a matter of minutes. I sent the DNS TXT info to my tech person who was able to add that record easily without questions asked. I then verified and was good to go.

Assign Pages

You can assign related pages that have been added to your Business Manager to a verified domain so that they, too, can have editing privileges.

Click the “Assign Pages” button within Domain Verification and select the page that you want to be added.

If the page isn’t listed, it first needs to be added to your Business Manager. You’ll do that by selecting “Pages” under People and Assets in your Business Manager and clicking to add a page.

Edit Link Previews

Once your domain is verified and the associated page is connected, you can freely edit link previews!

You can edit the link thumbnail, title, and description. While the thumbnail will look funny while creating the post, it publishes properly…

Your Turn

Have you been struggling to edit link preview details? Does this help?

Let me know in the comments below!

The post Facebook Domain Verification: Edit Link Previews appeared first on Jon Loomer Digital.
