#Edujournal

Importance of Big Data Analytics

Strategic decision-making: business forecasting and resource optimization, such as supply chain optimization.

Product development and innovation.

Personalized customer service: enhancing service quality and increasing customer satisfaction.

Risk management: identifying and mitigating risks.

Workforce optimization, saving effort and time.

Healthcare support: tracking patients' health records of past ailments to enable advanced diagnosis and treatment.

Better services: preventing crime, improving traffic management, predicting natural disasters, optimizing supply chain processes, reducing costs, improving product quality through predictive analysis, improving teaching methods through adaptive learning, and more.

Banking: helping banks track and monitor money laundering and theft.

Scalability

Check out our master program in Data Science, Data Analytics and ASP.NET- Complete Beginner to Advanced course and boost your confidence and knowledge.

URL: www.edujournal.com

#Scalability#collaboration#machine_learning#training#trends#insight#data_visualization#skills#data_science#data#edujournal

Edujournal is a Singapore-based Training Management System software company providing administration, documentation, tracking, etc.

E-learning is something we hear about constantly nowadays. The COVID-19 pandemic has pushed most educational practices to shift from traditional learning to online learning. Find the ideal LMS for your educational institute from Edujournal.

Roles of a Data Engineer

A data engineer is one of the most technical profiles in the data science industry, combining knowledge and skills from data science, software development, and database management. Their main duties are:

Architecture design. While designing a company's data architecture is sometimes the work of a data architect, in many cases, the data engineer is the person in charge. This involves being fluent with different types of databases, warehouses, and analytical systems.

ETL processes. Collecting data from different sources, processing it, and storing it in a ready-to-use format in the company’s data warehouse are some of the most common activities of data engineers.

Data pipeline management. Data pipelines are data engineers’ best friends. The ultimate goal of data engineers is automating as many data processes as possible, and here data pipelines are key. Data engineers need to be fluent in developing, maintaining, testing, and optimizing data pipelines.

Machine learning model deployment. While data scientists are responsible for developing machine learning models, data engineers are responsible for putting them into production.

Cloud management. Cloud-based services are rapidly becoming a go-to option for companies that want to make the most of their data infrastructure. As more and more data activities take place in the cloud, data engineers have to be able to work with tools hosted by cloud providers such as AWS, Azure, and Google Cloud.

Data monitoring. Ensuring data quality is crucial for every data process to run smoothly. Data engineers are responsible for monitoring every process and routine and optimizing their performance.
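As a rough illustration of the ETL and data-monitoring duties above, the sketch below extracts rows from a CSV export, transforms and validates them, and loads the clean rows into a table. It uses only Python's standard library, with an in-memory SQLite database standing in for a company data warehouse; the CSV content, column names, and the "amount is required" quality rule are all hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system
RAW_CSV = """order_id,customer,amount,currency
1,alice,120.50,SGD
2,bob,,SGD
3,carol,80.00,sgd
"""

def extract(text):
    """Extract: parse the raw CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalise fields and reject rows that fail a quality check."""
    clean, rejected = [], []
    for row in rows:
        if not row["amount"]:            # data-quality rule: amount is required
            rejected.append(row)
            continue
        clean.append((
            int(row["order_id"]),
            row["customer"].title(),     # "alice" -> "Alice"
            float(row["amount"]),
            row["currency"].upper(),     # "sgd" -> "SGD"
        ))
    return clean, rejected

def load(records, conn):
    """Load: write the clean records into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?, ?)", records)
    conn.commit()

def run_pipeline(text, conn):
    """Run extract -> transform -> load and report counts for monitoring."""
    clean, rejected = transform(extract(text))
    load(clean, conn)
    return {"loaded": len(clean), "rejected": len(rejected)}

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(run_pipeline(RAW_CSV, conn))   # {'loaded': 2, 'rejected': 1}
    print(conn.execute("SELECT customer, amount, currency FROM orders").fetchall())
```

Returning load and reject counts from each run is a simple hook for monitoring: a sudden jump in rejected rows is a signal that a source system has changed. `INSERT OR REPLACE` keyed on `order_id` makes re-running the pipeline on the same file safe.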

#Architecture design#Data monitoring#process#machine_learning#data_visualization#data_science#trends#edujournal#data#training#testing

Machine Learning and their domain areas

Machine learning is extensively applied across industries and sectors. Common applications of machine learning include:

Prediction

E-commerce: displaying products based on customer preferences inferred from past purchases.

Automatic language translation: translating text from one language to another.

Speech recognition: converting spoken words into text.

Image recognition: recognizing objects in images, for example with image segmentation techniques.

Financial sector: detecting fraudulent transactions, and optimization.

Medical Diagnosis: detect diseases early
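The e-commerce item above (showing products based on past purchases) can be illustrated with a very simple baseline: recommend the products most often bought together with a given product. Note that this is a plain co-occurrence count, not a trained machine learning model; production recommenders typically use learned models such as collaborative filtering. The order history below is made up for the example.

```python
from collections import Counter
from itertools import combinations

# Hypothetical order history: each order is the set of products bought together
ORDERS = [
    {"laptop", "mouse", "laptop bag"},
    {"laptop", "mouse"},
    {"phone", "phone case"},
    {"laptop", "laptop bag"},
    {"mouse", "keyboard"},
]

def co_purchase_counts(orders):
    """Count how often each ordered pair of products appears in the same order."""
    counts = Counter()
    for order in orders:
        for a, b in combinations(sorted(order), 2):
            counts[(a, b)] += 1
            counts[(b, a)] += 1
    return counts

def recommend(product, orders, top_n=2):
    """Return up to top_n products most often bought together with `product`."""
    scores = Counter({
        other: n
        for (item, other), n in co_purchase_counts(orders).items()
        if item == product
    })
    return [name for name, _ in scores.most_common(top_n)]

if __name__ == "__main__":
    print(recommend("laptop", ORDERS))   # the two items most often bought with a laptop
```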

Time series analysis

Time series analysis is a tool for analysing systems that change over time, for example climatic changes or fluctuations in the economy. It can give you valuable insight into what happened over the course of a week, several weeks, months, or years, and it is also used to forecast future values.

A time series is commonly described in terms of four components: the trend component, the seasonal component, the cyclical component, and the irregular component.
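A minimal sketch of how these components can be separated: a classical additive decomposition models the series as trend + seasonal + irregular, estimating the trend with a centred moving average over one seasonal period. Classical decomposition does not separate the cyclical component; it stays inside the moving-average trend (often called the trend-cycle). The quarterly series below is synthetic, built from a known linear trend and seasonal pattern so the result is easy to check.

```python
from statistics import mean

def centered_moving_average(values, period):
    """Trend(-cycle) estimate; None where the centred window does not fit."""
    half = period // 2
    trend = [None] * len(values)
    for i in range(half, len(values) - half):
        window = values[i - half:i + half + 1]
        if period % 2 == 1:
            trend[i] = sum(window) / period
        else:
            # Even period: a "2 x period" average that gives the two end points half weight
            trend[i] = (sum(window[1:-1]) + 0.5 * (window[0] + window[-1])) / period
    return trend

def decompose_additive(values, period):
    """Split values into trend, seasonal and irregular (residual) parts."""
    trend = centered_moving_average(values, period)
    # Average the detrended values at each position in the seasonal cycle
    buckets = [[] for _ in range(period)]
    for i, (y, t) in enumerate(zip(values, trend)):
        if t is not None:
            buckets[i % period].append(y - t)
    raw = [mean(b) for b in buckets]
    offset = mean(raw)
    pattern = [s - offset for s in raw]          # seasonal effects sum to zero
    seasonal = [pattern[i % period] for i in range(len(values))]
    irregular = [None if t is None else y - t - s
                 for y, t, s in zip(values, trend, seasonal)]
    return trend, seasonal, irregular

if __name__ == "__main__":
    # Synthetic quarterly data: linear trend plus a fixed seasonal pattern
    quarterly = [10 + 2 * i + [3, -1, -4, 2][i % 4] for i in range(12)]
    trend, seasonal, irregular = decompose_additive(quarterly, period=4)
    print([None if t is None else round(t, 2) for t in trend])
    print([round(s, 2) for s in seasonal[:4]])   # [3.0, -1.0, -4.0, 2.0]
```

Because the synthetic data has no noise, the irregular part comes out as zero; on real data it holds whatever the trend and seasonal parts do not explain. Libraries such as statsmodels offer more robust decompositions.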

#trend#seasonal#cyclic#irregular#time_series#predict#machine_learning#insight#data_visualization#data_science#edujournal

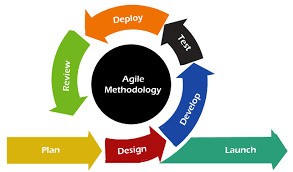

Agile methodology

Agile methodologies such as Scrum and Kanban focus on iterative development, customer collaboration, and responding to change. While Scrum operates in fixed-length sprints, Kanban allows for more continuous, flow-based delivery: teams can adapt to new requirements without waiting for a fixed iteration to complete, and Kanban supports continuous delivery, enabling teams to release features as soon as they are ready. Some teams choose to adopt a "Sprintless Agile" approach, combining Kanban's continuous flow with Agile principles.

General practices common to both Scrum and Kanban Methodologies:

1. Visual management: this key practice makes it easy for team members to understand the status of tasks and spot any issues.

2. Work in progress (WIP) limits: limiting WIP helps optimize the flow of work through the system, preventing resource overload and reducing bottlenecks. It also improves focus and reduces multitasking, which helps the team deliver value iteratively.

3. Continuous improvement: embrace a culture of continuous improvement through regular retrospectives and feedback.

4. Collaboration and collective ownership: encourage cross-functional teams and enhance communication and knowledge sharing.
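To make the WIP-limit idea concrete, here is a minimal, hypothetical Kanban board in Python that refuses to pull a card into a column once that column is at its limit, and renders a plain-text view of the board as a stand-in for visual management. The column names, limits, and tasks are invented for the example.

```python
class WipLimitExceeded(Exception):
    """Raised when a move would push a column past its WIP limit."""

class KanbanBoard:
    def __init__(self, wip_limits):
        # wip_limits maps column name -> maximum number of cards (None = no limit)
        self.wip_limits = dict(wip_limits)
        self.columns = {name: [] for name in self.wip_limits}

    def _check_capacity(self, column):
        limit = self.wip_limits[column]
        if limit is not None and len(self.columns[column]) >= limit:
            raise WipLimitExceeded(f"'{column}' is at its WIP limit of {limit}")

    def add(self, column, task):
        self._check_capacity(column)
        self.columns[column].append(task)

    def move(self, task, source, target):
        self._check_capacity(target)   # check first, so a failed move changes nothing
        self.columns[source].remove(task)
        self.columns[target].append(task)

    def render(self):
        """Plain-text 'visual board': one line per column with its load and limit."""
        lines = []
        for name, tasks in self.columns.items():
            limit = self.wip_limits[name]
            cap = "no limit" if limit is None else str(limit)
            lines.append(f"{name} [{len(tasks)}/{cap}]: {', '.join(tasks)}")
        return "\n".join(lines)

if __name__ == "__main__":
    board = KanbanBoard({"To Do": None, "In Progress": 2, "Done": None})
    for task in ["Design login page", "Write API tests", "Fix signup bug"]:
        board.add("To Do", task)
    board.move("Design login page", "To Do", "In Progress")
    board.move("Write API tests", "To Do", "In Progress")
    try:
        board.move("Fix signup bug", "To Do", "In Progress")
    except WipLimitExceeded as err:
        print(err)   # 'In Progress' is at its WIP limit of 2
    print(board.render())
```

The third task has to wait until something in "In Progress" is finished and moved to "Done"; that forced "stop starting, start finishing" behaviour is what WIP limits are meant to encourage.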

#collaboration#Continuous_improvement#communication#feedback#culture#work_flow#visual_Management#work_in_progress#optimization#edujournal

Roles of Full Stack Developer

Designing User Interface (UI)

Developing Backend Business Logic

Handling Server and Database connectivity and operations

Developing APIs to interact with external applications

Integrating third-party widgets into your application

Unit Testing and Debugging

Collaborating with cross-functional teams to deliver innovative solutions that meet customer requirements and expectations.

Testing and Hosting on a Server

Software optimization.

Adapting the application to various devices.

Updating and maintaining the software after deployment.
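As a small illustration of the backend, database, and API duties listed above, the sketch below serves JSON using Python's built-in `http.server`. An in-memory dictionary stands in for the database, and the `/api/products` endpoints and product data are hypothetical. Keeping the routing logic in a plain function (`route`) separate from the HTTP handler also makes it easy to unit test.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import urlsplit

# Hypothetical in-memory data standing in for a real database
PRODUCTS = {
    1: {"id": 1, "name": "Notebook", "price": 4.5},
    2: {"id": 2, "name": "Pen", "price": 1.2},
}

def route(path):
    """Backend logic: map a request path to (HTTP status, JSON-serialisable payload)."""
    if path == "/api/products":
        return 200, list(PRODUCTS.values())
    prefix = "/api/products/"
    if path.startswith(prefix):
        product_id = path[len(prefix):]
        if product_id.isdigit() and int(product_id) in PRODUCTS:
            return 200, PRODUCTS[int(product_id)]
        return 404, {"error": "product not found"}
    return 404, {"error": "unknown endpoint"}

class ApiHandler(BaseHTTPRequestHandler):
    """Thin HTTP layer: delegates to route() and writes the result as JSON."""

    def do_GET(self):
        status, payload = route(urlsplit(self.path).path)  # ignore any query string
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

def run(host="127.0.0.1", port=8000):
    """Start a development server; stop it with Ctrl+C."""
    with HTTPServer((host, port), ApiHandler) as server:
        server.serve_forever()
```

To try it, call `run()` and open http://127.0.0.1:8000/api/products in a browser. The `http.server` module is meant for development, not production; a real full-stack project would typically use a web framework such as ASP.NET Core, Django, or Flask.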

#User_Interface#optimization#edujournal#testing#development#application#software#debugging#api#integration#automation#deployment

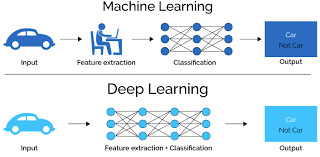

Use Cases in Deep Learning

Deep learning is a subset of machine learning in which we train neural network models on datasets of varying complexity. The trained models identify patterns in their inputs, which can then be used to make predictions. We can also reuse what a trained model has learned in another, similar application; this is known as transfer learning (TL).

Popular Models for Image Classification

MobileNetV2

ResNet50 by Microsoft Research

InceptionV3 by Google Brain team

DenseNet121 by Facebook AI Research

Generally, the smaller the model, the faster its prediction time.

Use Cases:

Automatic speech recognition

Image recognition

Drug discovery and toxicology

Customer relationship management

Virtual assistants (like Alexa, Siri, and Google Assistant)

Self-driving cars

News aggregation and fake news detection

Natural language processing

Entertainment

Healthcare

#Image recognition#data_visualization#data_science#trends#machine_learning#insight#edujournal#training#deep_learning#NLP

Neural Network Regression Model

Regression analysis is a set of statistical processes used to estimate the relationship between a dependent variable and one or more independent variables. For example, if we want to predict the resale value of a house, the dependent variable is the house price, i.e. the outcome we are trying to predict. The independent variables could be the number of bedrooms, sitting rooms, dining rooms, kitchens, and bathrooms, the car parking, and so on. We may have ten different houses with different numbers of bedrooms, kitchens, and sitting rooms, whose prices vary with size, area, and location. We therefore need a model that takes this information and predicts the value of a house.

Here, we use neural networks to solve regression problems. We give the inputs and outputs to the machine learning algorithm, which uses the techniques at its disposal to learn the relationship between them. The inputs must be given in numerical form: for example, "4 bedrooms" can be encoded as the vector [0 0 0 0 1 0], and the other inputs are likewise converted to numbers. These numbers are passed through the neural network, and its output is converted back into a human-readable form, such as a predicted price.
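The bedroom encoding above can be sketched as follows. One-hot encoding is one common choice for categorical inputs (a plain number such as floor area can also be fed in directly, usually after scaling). The feature layout here, with 0 to 5 bedrooms, 0 to 3 bathrooms, and a parking flag, is a hypothetical example.

```python
def one_hot(index, num_categories):
    """Encode a 0-based category index as a one-hot list of 0s and a single 1."""
    if not 0 <= index < num_categories:
        raise ValueError(f"index {index} is outside 0..{num_categories - 1}")
    vector = [0] * num_categories
    vector[index] = 1
    return vector

def encode_house(bedrooms, bathrooms, has_parking, max_bedrooms=5, max_bathrooms=3):
    """Hypothetical layout: one-hot bedrooms + one-hot bathrooms + 0/1 parking flag."""
    return (one_hot(bedrooms, max_bedrooms + 1)
            + one_hot(bathrooms, max_bathrooms + 1)
            + [1 if has_parking else 0])

if __name__ == "__main__":
    print(one_hot(4, 6))              # [0, 0, 0, 0, 1, 0]
    print(encode_house(4, 2, True))   # [0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 1]
```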

Anatomy of Neural Networks:

Input Layer: Data goes into the input layer.

Hidden Layer(s): Learn patterns in the data. We can have multiple hidden layers depending on the complexity of the problem; very deep networks such as ResNet152, a common computer vision architecture, have 152 layers. In principle, we can add as many layers as we want.

Output Layer: Learned Representation or prediction probabilities.
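The three layers can be seen in a small from-scratch sketch: an input layer that receives the numeric features, one hidden layer of tanh units that learns patterns, and a single linear output unit that produces the predicted value (for regression, a number rather than class probabilities). It is trained with plain stochastic gradient descent on squared error. The function name, layer size, learning rate, and toy data (a noise-free linear relationship) are all assumptions for illustration; in practice you would use a library such as TensorFlow/Keras or PyTorch.

```python
import math
import random

def train_regression_net(xs, ys, hidden=8, lr=0.05, epochs=2000, seed=0):
    """Train a 1-hidden-layer network with SGD on squared error (hypothetical helper)."""
    rng = random.Random(seed)
    n_in = len(xs[0])
    # Input -> hidden weights/biases, and hidden -> output weights/bias
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        # Hidden layer: tanh activations; output layer: linear (a single number)
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(w1, b1)]
        return h, sum(w * hj for w, hj in zip(w2, h)) + b2

    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in zip(xs, ys):
            h, y_hat = forward(x)
            err = y_hat - y                      # gradient of 0.5 * err**2 w.r.t. y_hat
            total += err * err
            for j in range(hidden):
                grad_h = err * w2[j] * (1 - h[j] ** 2)   # backpropagate through tanh
                w2[j] -= lr * err * h[j]
                for k in range(n_in):
                    w1[j][k] -= lr * grad_h * x[k]
                b1[j] -= lr * grad_h
            b2 -= lr * err
        losses.append(total / len(xs))           # mean squared error for this epoch

    def predict(x):
        return forward(x)[1]

    return predict, losses

if __name__ == "__main__":
    # Toy data: one scaled feature (e.g. floor area / 100) and a price-like target
    xs = [[i / 10] for i in range(11)]
    ys = [2 * x[0] + 1 for x in xs]
    predict, losses = train_regression_net(xs, ys)
    print(f"loss: {losses[0]:.3f} -> {losses[-1]:.5f}")
    print(f"prediction for 0.55: {predict([0.55]):.2f} (target {2 * 0.55 + 1:.2f})")
```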

What is AI and Machine Learning

Machines in general are very quick at performing tasks. We can control them: we give them instructions and they do tasks for us, which is what programming is all about. But lower-level tasks are best left to computers, while higher-level tasks are better done by humans. For example, if we want a computer to tell a cat apart from other animals such as dogs, can we program it to do that? Technically, we could say a cat has fur, has whiskers, makes a "meow" noise, and so on. Immediately, the computer comes back and asks: "What is meow? What are whiskers, or fur?" It quickly becomes very hard to explain to a machine exactly what to do.

This is where machine learning comes in, an idea with many applications: self-driving cars, robots, vision processing, language processing, recommendation engines, translation services, and more. Broadly, the goal of machine learning is to make machines act more and more like humans; in other words, it is a way of getting computers to act without being explicitly programmed.
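To contrast this with the explicit-rules approach in the cat example above, the sketch below never tells the computer what a cat is. Instead, it labels a new animal by looking at the most similar labelled examples (k-nearest neighbours, one of the simplest machine learning methods). The two features, weight in kg and ear length in cm, and all the data values are made up for illustration.

```python
import math
from collections import Counter

# Hypothetical labelled examples: (weight in kg, ear length in cm) -> label
TRAINING_DATA = [
    ((4.0, 6.5), "cat"), ((3.5, 7.0), "cat"), ((5.0, 6.0), "cat"),
    ((20.0, 10.0), "dog"), ((30.0, 12.0), "dog"), ((8.0, 9.0), "dog"),
]

def knn_predict(features, data=TRAINING_DATA, k=3):
    """Label a new animal by majority vote of its k nearest labelled examples."""
    nearest = sorted(data, key=lambda item: math.dist(features, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

if __name__ == "__main__":
    print(knn_predict((4.2, 6.8)))    # cat
    print(knn_predict((25.0, 11.0)))  # dog
```

Adding more labelled examples improves the predictions without changing any code, which is the sense in which the computer "learns" rather than being explicitly programmed.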

Artificial intelligence (AI) simply means intelligence exhibited by machines, and machine learning is a subset of AI. Today's systems are examples of narrow AI: they are really good at one particular task, sometimes better than humans. Humans, by contrast, are good at handling many different tasks; a machine that could do the same would be general AI, and it will likely take computers many more years to reach that level. Examples of narrow AI include detecting and diagnosing diseases such as heart disease, or predicting whether a patient will develop diabetes a few years from now. We might also have heard of robots performing complicated surgeries. Another example is the chess computer Deep Blue, which defeated world champion Garry Kasparov in 1997. Each of these systems does a single task really well.

#machine_learning#data_visualization#data_science#insight#edujournal#Artificial_Intelligence#instructions#tasks#language_processing#robots#self_driving_cars

Criteria for selecting an appropriate tool for Data Science

Ease of use

Interoperability

Scalability

Support for diverse tasks such as data manipulation, modelling, analysis, visualization, and deployment

Quick insights and trends for decision-making

#data_science#data_visualization#insight#trends#machine_learning#scalability#decision_making#analysis#interoperability#edujournal

Biggest milestones of Google since its inception 25 years ago

Google is known for search technology that quickly finds the information people are looking for and returns the right content. Over the years, Google has introduced many new features; some of its major innovations are:

2001: Google Images

2002: Google News

2004: Autocomplete

2006: Google Translate

2007: Universal Search

2008: Google Mobile App

2008: Voice Search

2011: Search by Image

2022: Multisearch

2023: Search Generative Experience (SGE), which brings the power of generative AI directly into Search

#edujournal#Google_Search#MultiSearch#Voice_Search#SGE#Google_News#Google_Images#Generative_AI#Google_Translate#content

Has your email or password ever been hacked?

Data breaches are very common these days. Even big companies like Facebook and Yahoo have suffered data breaches in which their users' usernames and passwords were exposed.

Hackers compile lists of these leaked usernames and passwords and use them to try to log in to other services. You can picture this as a huge database, or a massive Excel file, containing the emails and passwords that have leaked over the years. On the Have I Been Pwned website, https://haveibeenpwned.com/Passwords, you can check whether a password has appeared in a data breach (the site's main page does the same for email addresses). When I checked my password, it showed this message:

Oh no — pwned! This password has been seen 116 times before This password has previously appeared in a data breach and should never be used. If you've ever used it anywhere before, change it!

For software developers, the same check is available through the Pwned Passwords API at https://api.pwnedpasswords.com/range/. The password is never sent as-is: you hash it with SHA-1, send only the first five characters of the hash (for example https://api.pwnedpasswords.com/range/5BAA6), and the API returns the remaining hash suffixes together with how often each has been seen, which you compare with your own suffix locally. Most languages ship a standard hashing library you can use for this. Separately, remember that passwords must always be hashed, with a dedicated password-hashing function, before being stored in a database.
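A minimal Python sketch of that range check, using only the standard library (`hashlib`, `urllib`). The function names and the User-Agent string are hypothetical, and the network call is kept separate from the hashing and parsing so those parts can be tested offline.

```python
import hashlib
import urllib.request

RANGE_URL = "https://api.pwnedpasswords.com/range/"

def split_sha1(password):
    """Return (first 5 hex chars, remaining 35 chars) of the uppercase SHA-1 hash."""
    digest = hashlib.sha1(password.encode("utf-8")).hexdigest().upper()
    return digest[:5], digest[5:]

def count_in_response(suffix, response_text):
    """Each response line looks like 'SUFFIX:COUNT'; return COUNT for our suffix, else 0."""
    for line in response_text.splitlines():
        candidate, _, count = line.strip().partition(":")
        if candidate.upper() == suffix:
            return int(count)
    return 0

def times_pwned(password):
    """Query the range API; only the 5-character hash prefix leaves this machine."""
    prefix, suffix = split_sha1(password)
    request = urllib.request.Request(
        RANGE_URL + prefix, headers={"User-Agent": "pwned-password-check-example"}
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        body = response.read().decode("utf-8")
    return count_in_response(suffix, body)

# Usage (needs network access):
#   n = times_pwned("password123")
#   print("pwned!" if n else "not found", n)
```

Because many different passwords share the same five-character prefix, the API never learns which password you checked.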

You can also use a trusted, secure password manager such as Bitwarden, NordPass, Dashlane, 1Password, or Keeper.

Power of Python

We have seen many Python developers worldwide leverage modules and libraries, both those built by individual developers and the standard libraries that ship with Python, to build a lot of interesting projects. Reusing this code lets us fast-track our progress and complete good projects quickly. Projects can be built by:

Developing your own modules.

Using the built-in standard modules/libraries provided by Python. You simply import the library and use it; for example, the random module generates random numbers and the datetime module handles dates and times.

Drawing on the Python community: there is a huge community worldwide for Python. For example, the libraries we use regularly in data science, such as Pandas, NumPy, and Matplotlib, are not part of Python's standard library. They are developed by the community and shared, so you only need to run pip3 install followed by the package name (for example, pip3 install pandas) to install one on your system, and then import it in your code.
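A short sketch of the three sources of modules described above. The function `due_date` is a hypothetical example of code you might keep in your own module (for instance a file named helpers.py); `random` and `datetime` come from the standard library; pandas is a third-party package that only imports after `pip3 install pandas`.

```python
import random
from datetime import date, timedelta

# 1. Your own module: a helper like this could live in a file such as helpers.py
#    (hypothetical name) and be imported elsewhere with `from helpers import due_date`.
def due_date(start, days):
    """Return the date `days` days after `start`."""
    return start + timedelta(days=days)

# 2. Standard library: random and datetime need no installation.
rng = random.Random(42)                  # seeded, so the rolls are reproducible
dice_rolls = [rng.randint(1, 6) for _ in range(5)]
deadline = due_date(date(2024, 1, 15), 30)
print(dice_rolls)
print(deadline.isoformat())              # 2024-02-14

# 3. Community packages: pandas must be installed first (pip3 install pandas).
try:
    import pandas as pd
    print("pandas", pd.__version__, "is installed")
except ImportError:
    print("pandas is not installed; run: pip3 install pandas")
```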

Decorator design pattern

Decorator is a structural design pattern that lets you attach new behaviors to an object by placing it inside special wrapper objects that contain those behaviors. In other words, the decorator pattern allows a user to add new functionality to an existing object without altering its structure.

A real-life example of the decorator pattern is a pizza: the pizza is the original class, and the different toppings act as decorators. The customer can add toppings (functionalities) of their choice, while the pizza and its structure remain unaffected.
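The pizza example translates into code roughly as follows. This is a minimal sketch of the object-oriented pattern (not Python's `@decorator` syntax, although the idea is related): each topping wraps a pizza, implements the same interface, and adds its own description and price on top of the wrapped object's. The class names and prices are hypothetical.

```python
from abc import ABC, abstractmethod

class Pizza(ABC):
    """Common interface shared by the base pizza and every topping."""

    @abstractmethod
    def description(self) -> str: ...

    @abstractmethod
    def cost(self) -> float: ...

class PlainPizza(Pizza):
    def description(self):
        return "Plain pizza"

    def cost(self):
        return 8.0

class ToppingDecorator(Pizza):
    """Wraps a Pizza; subclasses delegate to it and add their own behaviour."""

    def __init__(self, pizza: Pizza):
        self._pizza = pizza

class Cheese(ToppingDecorator):
    def description(self):
        return self._pizza.description() + ", cheese"

    def cost(self):
        return self._pizza.cost() + 1.5

class Mushrooms(ToppingDecorator):
    def description(self):
        return self._pizza.description() + ", mushrooms"

    def cost(self):
        return self._pizza.cost() + 1.0

if __name__ == "__main__":
    order = Mushrooms(Cheese(PlainPizza()))
    print(order.description(), order.cost())   # Plain pizza, cheese, mushrooms 10.5
```

`PlainPizza` is never modified: new toppings are new wrapper classes, and they can be stacked in any order or combination at runtime.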

#Decorators#design_pattern#behavior#objects#structure#functionality#Edujournal#Data_Science#program#knowledge
