#Docker App Development Services


A Brief Guide to Docker for Developers in 2023

What is Docker? Docker is a tool designed to make it easier to create, deploy, and run applications by using containers. Docker is based on the idea of containers, which are a way of packaging software in a format that can be easily run on any platform.

Docker provides a way to manage and deploy containerized applications, making it easier for developers to create, deploy, and run applications in a consistent and predictable way. Docker also provides tools for managing and deploying applications in a multi-container environment, allowing developers to easily scale and manage the application as it grows.

What is a container? A container is a lightweight, stand-alone, and executable package that includes everything needed to run the software, including the application code, system tools, libraries, and runtime.

Containers allow a developer to package an application with all of the parts it needs, such as libraries and other dependencies, and ship it as a single package that is easy to deploy and run on any platform. This is especially useful when an application has specific requirements, such as certain system libraries or particular versions of a programming language, that might not be available on the target platform.

What is Dockerfile, Docker Image, Docker Engine, Docker Desktop, Docker Toolbox? A Dockerfile is a text file that contains instructions for building a Docker image. It specifies the base image to use for the build, the commands to run to set up the application and its dependencies, and any other required configuration.

A Docker image is a read-only template that packages everything needed to run the software, including the application code, system tools, libraries, and runtime. A container is a running instance of an image.

The Docker Engine is the runtime environment that runs the containers and provides the necessary tools and libraries for building and running Docker images. It includes the Docker daemon, which is the process that runs in the background to manage the containers, and the Docker CLI (command-line interface), which is used to interact with the Docker daemon and manage the containers.

Docker Desktop is a desktop application that provides an easy-to-use graphical interface for working with Docker. It includes the Docker Engine, the Docker CLI, and other tools and libraries for building and managing Docker containers.

Docker Toolbox is a legacy desktop application that provided an easy way to set up a Docker development environment on older versions of Windows and Mac. It includes the Docker Engine, the Docker CLI, and other tools and libraries for building and managing Docker containers. It was intended for older systems that do not meet the requirements for running Docker Desktop. Docker Toolbox is no longer maintained and has been replaced by Docker Desktop.

A Fundamental Principle of Docker: In Docker, an image is made up of a series of layers. Each layer represents an instruction in the Dockerfile, which is used to build the image. When an image is built, each instruction in the Dockerfile creates a new layer in the image.

Each layer records the file system changes made by its instruction. When a change is made to the file system, a new layer is created that contains only that change. This lets Docker store layers efficiently, keeping just the differences introduced by each layer rather than an entire copy of the file system at each point in time.

Layers are stacked on top of each other to form a complete image. When a container is created from an image, the layers are combined to create a single, unified file system for the container.

Because each layer holds only its own changes, Docker can also share common layers between different images, which saves disk space and avoids downloading or rebuilding the same layer twice.
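The layering idea can be sketched in a few lines of Python. This is only a conceptual model under stated assumptions, not how Docker's storage drivers actually work: each layer is a plain dict mapping file paths to contents, `DELETED` is a made-up marker for a removed file, and `flatten` is a hypothetical helper name.

```python
# Conceptual model of image layers: each layer stores only what changed.
# Illustration only; this is not how Docker's storage drivers are implemented.

DELETED = None  # marker meaning "this layer removes the file"


def flatten(layers):
    """Merge layers bottom-to-top into the single file system a container sees."""
    unified = {}
    for layer in layers:
        for path, content in layer.items():
            if content is DELETED:
                unified.pop(path, None)  # a later layer hides the file
            else:
                unified[path] = content  # a later layer adds or overrides the file
    return unified


base = {"/bin/sh": "shell", "/tmp/setup.log": "log"}  # e.g. the base image
deps = {"/app/node_modules/express": "library"}       # e.g. RUN npm install
cleanup = {"/tmp/setup.log": DELETED}                 # e.g. RUN rm /tmp/setup.log

image_a = [base, deps, cleanup, {"/app/index.js": "v1"}]
image_b = [base, deps, cleanup, {"/app/index.js": "v2"}]  # reuses the first three layers

fs_a = flatten(image_a)
fs_b = flatten(image_b)
shared = sum(1 for a, b in zip(image_a, image_b) if a is b)

print(sorted(fs_a))
print("layers shared by both images:", shared)
```

In this toy model the two images differ only in their top layer, so the three lower layers need to be stored once. Note also that the deleted file is hidden from the unified view but still exists in the base layer, which is why deleting files in a later layer does not shrink an image.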

Some important Docker commands:

- docker build: Build an image from a Dockerfile
- docker run: Run a container from an image
- docker ps: List running containers
- docker stop: Stop a running container
- docker rm: Remove a stopped container
- docker rmi: Remove an image
- docker pull: Pull an image from a registry
- docker push: Push an image to a registry
- docker exec: Run a command in a running container
- docker logs: View the logs of a container
- docker system prune: Remove unused containers, images, and networks
- docker tag: Tag an image with a repository name and tag

There are many other Docker commands available, and you can learn more about them by referring to the Docker documentation.

How to Dockerize a simple application? With the concepts above in place, we can turn to the practical question: how to dockerize an application.

First, create a simple Node.js application. Then write a Dockerfile for it, build a Docker image from that Dockerfile, and run the image as a Docker container.

You need to install Docker on your device, following the official documentation. This guide assumes an Ubuntu instance for the installation. If you don’t have one already, you can use Oracle VirtualBox to set up a virtual Linux instance.

Caveat Emptor: Docker containers simplify the system at runtime, but this comes with the caveat of increased complexity in setting up and arranging the containers.

One of the most significant caveats is misunderstanding what Docker is for. Many developers treat Docker as a platform for development rather than as an excellent optimization and streamlining tool.

Such developers would be better off adopting Platform-as-a-Service (PaaS) systems rather than managing the minutiae of self-hosted and managed virtual or logical servers.

Benefits of using Docker for Development and Operations:

Docker is widely discussed, and its adoption rate is high for good reason. There are several reasons to stick with Docker; we’ll look at three: consistency, speed, and isolation.

Consistency means that Docker provides the same environment for your application from development through production.

Speed means that you can rapidly start a new process on a server, because the image is preconfigured with everything that process needs to run.

By default, the Docker container is isolated from the network, the file system, and other running processes.

Docker’s layered file system adds a new layer every time a change is made. Because these layers are cached, Docker can skip repeated steps when an image is rebuilt. Each Docker image is a stack of layers, and every successive change adds another layer to the image.

Final Words: Docker is not hard to learn, and it is easy to experiment with as you go. If you face challenges in application development, you can consult 9series for Docker professional services.


Top 10 In-Demand Tech Jobs in 2025

Technology is growing faster than ever, and so is the need for skilled professionals in the field. From artificial intelligence to cloud computing, businesses are looking for experts who can keep up with the latest advancements. These tech jobs not only pay well but also offer great career growth and exciting challenges.

In this blog, we’ll look at the top 10 tech jobs that are in high demand today. Whether you’re starting your career or thinking of learning new skills, these jobs can help you plan a bright future in the tech world.

1. AI and Machine Learning Specialists

Artificial Intelligence (AI) and Machine Learning are changing the game by helping machines learn and improve on their own without needing step-by-step instructions. They’re being used in many areas, like chatbots, spotting fraud, and predicting trends.

Key Skills: Python, TensorFlow, PyTorch, data analysis, deep learning, and natural language processing (NLP).

Industries Hiring: Healthcare, finance, retail, and manufacturing.

Career Tip: Keep up with AI and machine learning by working on projects and getting an AI certification. Joining AI hackathons helps you learn and meet others in the field.

2. Data Scientists

Data scientists work with large sets of data to find patterns, trends, and useful insights that help businesses make smart decisions. They play a key role in everything from personalized marketing to predicting health outcomes.

Key Skills: Data visualization, statistical analysis, R, Python, SQL, and data mining.

Industries Hiring: E-commerce, telecommunications, and pharmaceuticals.

Career Tip: Work with real-world data and build a strong portfolio to showcase your skills. Earning certifications in data science tools can help you stand out.

3. Cloud Computing Engineers

These professionals create and manage cloud systems that allow businesses to store data and run apps without needing physical servers, making operations more efficient.

Key Skills: AWS, Azure, Google Cloud Platform (GCP), DevOps, and containerization (Docker, Kubernetes).

Industries Hiring: IT services, startups, and enterprises undergoing digital transformation.

Career Tip: Get certified in cloud platforms like AWS (e.g., AWS Certified Solutions Architect).

4. Cybersecurity Experts

Cybersecurity professionals protect companies from data breaches, malware, and other online threats. As remote work grows, keeping digital information safe is more crucial than ever.

Key Skills: Ethical hacking, penetration testing, risk management, and cybersecurity tools.

Industries Hiring: Banking, IT, and government agencies.

Career Tip: Stay updated on new cybersecurity threats and trends. Certifications like CEH (Certified Ethical Hacker) or CISSP (Certified Information Systems Security Professional) can help you advance in your career.

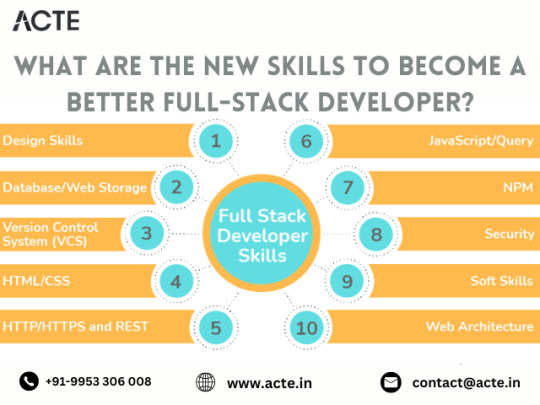

5. Full-Stack Developers

Full-stack developers are skilled programmers who can work on both the front-end (what users see) and the back-end (server and database) of web applications.

Key Skills: JavaScript, React, Node.js, HTML/CSS, and APIs.

Industries Hiring: Tech startups, e-commerce, and digital media.

Career Tip: Create a strong GitHub profile with projects that highlight your full-stack skills. Learn popular frameworks like React Native to expand into mobile app development.

6. DevOps Engineers

DevOps engineers help make software faster and more reliable by connecting development and operations teams. They streamline the process for quicker deployments.

Key Skills: CI/CD pipelines, automation tools, scripting, and system administration.

Industries Hiring: SaaS companies, cloud service providers, and enterprise IT.

Career Tip: Learn key tools like Jenkins, Ansible, and Kubernetes, and develop scripting skills in languages like Bash or Python. Earning a DevOps certification is a plus and can enhance your expertise in the field.

7. Blockchain Developers

Blockchain developers build secure, transparent, and tamper-resistant systems. Blockchain is not just for cryptocurrencies; it’s also used in tracking supply chains, managing healthcare records, and even in voting systems.

Key Skills: Solidity, Ethereum, smart contracts, cryptography, and DApp development.

Industries Hiring: Fintech, logistics, and healthcare.

Career Tip: Create and share your own blockchain projects to show your skills. Joining blockchain communities can help you learn more and connect with others in the field.

8. Robotics Engineers

Robotics engineers design, build, and program robots to do tasks faster or safer than humans. Their work is especially important in industries like manufacturing and healthcare.

Key Skills: Programming (C++, Python), robotics process automation (RPA), and mechanical engineering.

Industries Hiring: Automotive, healthcare, and logistics.

Career Tip: Stay updated on new trends like self-driving cars and AI in robotics.

9. Internet of Things (IoT) Specialists

IoT specialists work on systems that connect devices to the internet, allowing them to communicate and be controlled easily. This is crucial for creating smart cities, homes, and industries.

Key Skills: Embedded systems, wireless communication protocols, data analytics, and IoT platforms.

Industries Hiring: Consumer electronics, automotive, and smart city projects.

Career Tip: Create IoT prototypes and learn to use platforms like AWS IoT or Microsoft Azure IoT. Stay updated on 5G technology and edge computing trends.

10. Product Managers

Product managers oversee the development of products, from idea to launch, making sure they are both technically possible and meet market demands. They connect technical teams with business stakeholders.

Key Skills: Agile methodologies, market research, UX design, and project management.

Industries Hiring: Software development, e-commerce, and SaaS companies.

Career Tip: Work on improving your communication and leadership skills. Getting certifications like PMP (Project Management Professional) or CSPO (Certified Scrum Product Owner) can help you advance.

Importance of Upskilling in the Tech Industry

Stay Up-to-Date: Technology changes fast, and learning new skills helps you keep up with the latest trends and tools.

Grow in Your Career: By learning new skills, you open doors to better job opportunities and promotions.

Earn a Higher Salary: The more skills you have, the more valuable you are to employers, which can lead to higher-paying jobs.

Feel More Confident: Learning new things makes you feel more prepared and ready to take on tougher tasks.

Adapt to Changes: Technology keeps evolving, and upskilling helps you stay flexible and ready for any new changes in the industry.

Top Companies Hiring for These Roles

Global Tech Giants: Google, Microsoft, Amazon, and IBM.

Startups: Fintech, health tech, and AI-based startups are often at the forefront of innovation.

Consulting Firms: Companies like Accenture, Deloitte, and PwC increasingly seek tech talent.

In conclusion, the tech world is constantly changing, and staying updated is key to having a successful career. In 2025, jobs in fields like AI, cybersecurity, data science, and software development will be in high demand. By learning the right skills and keeping up with new trends, you can prepare yourself for these exciting roles. Whether you're just starting or looking to improve your skills, the tech industry offers many opportunities for growth and success.


Elevating Your Full-Stack Developer Expertise: Exploring Emerging Skills and Technologies

Introduction: In the dynamic landscape of web development, staying at the forefront requires continuous learning and adaptation. Full-stack developers play a pivotal role in crafting modern web applications, balancing frontend finesse with backend robustness. This guide delves into the evolving skills and technologies that can propel full-stack developers to new heights of expertise and innovation.

Pioneering Progress: Key Skills for Full-Stack Developers

1. Innovating with Microservices Architecture:

Microservices have redefined application development, offering scalability and flexibility in the face of complexity. Mastery of tools like Docker and Kubernetes empowers developers to architect, deploy, and manage microservices efficiently. By breaking down monolithic applications into modular components, developers can iterate rapidly and respond to changing requirements with agility.

2. Embracing Serverless Computing:

The advent of serverless architecture has revolutionized infrastructure management, freeing developers from the burdens of server maintenance. Platforms such as AWS Lambda and Azure Functions enable developers to focus solely on code development, driving efficiency and cost-effectiveness. Embrace serverless computing to build scalable, event-driven applications that adapt seamlessly to fluctuating workloads.

3. Crafting Progressive Web Apps (PWAs):

Progressive Web Apps (PWAs) herald a new era of web development, delivering native app-like experiences within the browser. Harness the power of technologies like Service Workers and Web App Manifests to create PWAs that are fast, reliable, and engaging. With features like offline functionality and push notifications, PWAs blur the lines between web and mobile, captivating users and enhancing engagement.

4. Harnessing GraphQL for Flexible Data Management:

GraphQL has emerged as a versatile alternative to RESTful APIs, offering a unified interface for data fetching and manipulation. Dive into GraphQL's intuitive query language and schema-driven approach to simplify data interactions and optimize performance. With GraphQL, developers can fetch precisely the data they need, minimizing overhead and maximizing efficiency.

5. Unlocking Potential with Jamstack Development:

Jamstack architecture empowers developers to build fast, secure, and scalable web applications using modern tools and practices. Explore frameworks like Gatsby and Next.js to leverage pre-rendering, serverless functions, and CDN caching. By decoupling frontend presentation from backend logic, Jamstack enables developers to deliver blazing-fast experiences that delight users and drive engagement.

6. Integrating Headless CMS for Content Flexibility:

Headless CMS platforms offer developers unprecedented control over content management, enabling seamless integration with frontend frameworks. Explore platforms like Contentful and Strapi to decouple content creation from presentation, facilitating dynamic and personalized experiences across channels. With headless CMS, developers can iterate quickly and deliver content-driven applications with ease.

7. Optimizing Single Page Applications (SPAs) for Performance:

Single Page Applications (SPAs) provide immersive user experiences but require careful optimization to ensure performance and responsiveness. Implement techniques like lazy loading and server-side rendering to minimize load times and enhance interactivity. By optimizing resource delivery and prioritizing critical content, developers can create SPAs that deliver a seamless and engaging user experience.

8. Infusing Intelligence with Machine Learning and AI:

Machine learning and artificial intelligence open new frontiers for full-stack developers, enabling intelligent features and personalized experiences. Dive into frameworks like TensorFlow.js (for JavaScript) and PyTorch (for Python backends) to build recommendation systems, predictive analytics, and natural language processing capabilities. By harnessing the power of machine learning, developers can create smarter, more adaptive applications that anticipate user needs and preferences.

9. Safeguarding Applications with Cybersecurity Best Practices:

As cyber threats continue to evolve, cybersecurity remains a critical concern for developers and organizations alike. Stay informed about common vulnerabilities and adhere to best practices for securing applications and user data. By implementing robust security measures and proactive monitoring, developers can protect against potential threats and safeguard the integrity of their applications.

10. Streamlining Development with CI/CD Pipelines:

Continuous Integration and Deployment (CI/CD) pipelines are essential for accelerating development workflows and ensuring code quality and reliability. Explore tools like Jenkins, CircleCI, and GitLab CI/CD to automate testing, integration, and deployment processes. By embracing CI/CD best practices, developers can deliver updates and features with confidence, driving innovation and agility in their development cycles.


Using Docker for Full Stack Development and Deployment

1. Introduction to Docker

What is Docker? Docker is an open-source platform that automates the deployment, scaling, and management of applications inside containers. A container packages your application and its dependencies, ensuring it runs consistently across different computing environments.

Containers vs Virtual Machines (VMs)

Containers are lightweight and use fewer resources than VMs because they share the host operating system’s kernel, while VMs simulate an entire operating system. Containers are more efficient and easier to deploy.

Docker containers provide faster startup times, less overhead, and portability across development, staging, and production environments.

Benefits of Docker in Full Stack Development

Portability: Docker ensures that your application runs the same way regardless of the environment (dev, test, or production).

Consistency: Developers can share Dockerfiles to create identical environments for different developers.

Scalability: Docker containers can be quickly replicated, allowing your application to scale horizontally without a lot of overhead.

Isolation: Docker containers provide isolated environments for each part of your application, ensuring that dependencies don’t conflict.

2. Setting Up Docker for Full Stack Applications

Installing Docker and Docker Compose

Docker can be installed on Windows, macOS, and Linux. Follow the official installation steps for Docker and Docker Compose (which simplifies multi-container management).

Commands:

docker --version to check the installed Docker version.

docker-compose --version to check the Docker Compose version (with the Compose v2 plugin, the equivalent is docker compose version).

Setting Up Project Structure

Organize your project into different directories (e.g., /frontend, /backend, /db).

Each service will have its own Dockerfile and configuration file for Docker Compose.

3. Creating Dockerfiles for Frontend and Backend

Dockerfile for the Frontend:

For a React/Angular app:

```dockerfile
FROM node:14
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
EXPOSE 3000
CMD ["npm", "start"]
```

This Dockerfile installs Node.js dependencies, copies the application, exposes the appropriate port, and starts the server.
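One detail worth noting in the frontend Dockerfile is that package*.json is copied and npm install runs before the rest of the source is copied. The Python sketch below illustrates why that ordering helps with layer caching. It is a deliberately simplified model, not Docker's real cache algorithm: the `build` and `dockerfile` helpers and the "deps-v1" / "src-v1" strings standing in for file contents are all hypothetical.

```python
import hashlib


def build(instructions, cache):
    """Simulate a build and report which steps are served from cache."""
    parent = ""
    report = []
    for instruction, inputs in instructions:
        # The key depends on the previous layer, the instruction, and its input files,
        # so a change in one step invalidates every step after it.
        key = hashlib.sha256(f"{parent}|{instruction}|{inputs}".encode()).hexdigest()
        report.append((instruction, "cached" if key in cache else "rebuilt"))
        cache.add(key)
        parent = key
    return report


def dockerfile(package_json, source):
    """Steps of the frontend Dockerfile, paired with the files each step reads."""
    return [
        ("FROM node:14", ""),
        ("COPY package*.json ./", package_json),
        ("RUN npm install", ""),
        ("COPY . .", source),
    ]


cache = set()
first = build(dockerfile("deps-v1", "src-v1"), cache)   # cold cache
second = build(dockerfile("deps-v1", "src-v2"), cache)  # only the source code changed

for step in second:
    print(step)
```

In this model, changing only the application source leaves the dependency installation step cached on the second build. If the Dockerfile copied the whole project before running npm install, every source change would invalidate that step as well.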

Dockerfile for the Backend:

For a Python Flask app

```dockerfile
FROM python:3.9
WORKDIR /app
COPY requirements.txt .
RUN pip install -r requirements.txt
COPY . .
EXPOSE 5000
CMD ["python", "app.py"]
```

For a Java Spring Boot app:

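```dockerfile
FROM openjdk:11
WORKDIR /app
COPY target/my-app.jar my-app.jar
EXPOSE 8080
CMD ["java", "-jar", "my-app.jar"]
```

The Flask Dockerfile installs the Python dependencies, copies the code, exposes the port, and runs the app. The Spring Boot Dockerfile instead copies a JAR that has already been built into target/, exposes the port, and runs it.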

4. Docker Compose for Multi-Container Applications

What is Docker Compose? Docker Compose is a tool for defining and running multi-container Docker applications. With a docker-compose.yml file, you can configure services, networks, and volumes.

docker-compose.yml Example:

```yaml
version: "3"
services:
  frontend:
    build:
      context: ./frontend
    ports:
      - "3000:3000"
  backend:
    build:
      context: ./backend
    ports:
      - "5000:5000"
    depends_on:
      - db
  db:
    image: postgres
    environment:
      POSTGRES_USER: user
      POSTGRES_PASSWORD: password
      POSTGRES_DB: mydb
```

This YAML file defines three services: frontend, backend, and a PostgreSQL database. It also sets up networking and environment variables.
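To see what depends_on implies for startup order, here is a small Python sketch that derives an order from the same dependency information. It only illustrates the ordering idea with the standard library's graphlib module (Python 3.9+); it is not how Docker Compose is implemented, and the `depends_on` dict is a hand-written copy of the file's entries rather than something parsed from YAML.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Each service mapped to the services it depends on,
# copied by hand from the depends_on entries in the Compose file.
depends_on = {
    "frontend": [],
    "backend": ["db"],
    "db": [],
}

# static_order() yields dependencies before the services that need them.
order = list(TopologicalSorter(depends_on).static_order())
print(order)
```

Whatever order is printed, db comes before backend. Keep in mind that depends_on in this short form only controls start order; it does not wait for PostgreSQL to be ready to accept connections, so the backend should still retry its database connection on startup.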

5. Building and Running Docker Containers

Building Docker Images:

Use docker build -t <image_name> <path> to build images.

For example:

```bash
docker build -t frontend ./frontend
docker build -t backend ./backend
```

Running Containers:

You can run individual containers using docker run or use Docker Compose to start all services:

```bash
docker-compose up
```

Use docker ps to list running containers, and docker logs <container_id> to check logs.

Stopping and Removing Containers:

Use docker stop <container_id> and docker rm <container_id> to stop and remove containers.

With Docker Compose: docker-compose down to stop and remove all services.

6. Dockerizing Databases

Running Databases in Docker:

You can easily run databases like PostgreSQL, MySQL, or MongoDB as Docker containers.

Example for PostgreSQL in docker-compose.yml:

```yaml
db:
  image: postgres
  environment:
    POSTGRES_USER: user
    POSTGRES_PASSWORD: password
    POSTGRES_DB: mydb
```

Persistent Storage with Docker Volumes:

Use Docker volumes to persist database data even when containers are stopped or removed. Add a volumes entry to the db service:

```yaml
volumes:
  - db_data:/var/lib/postgresql/data
```

Define the volume at the bottom of the file:

```yaml
volumes:
  db_data:
```

Connecting Backend to Databases:

Your backend services can access databases via Docker networking. In the backend service, refer to the database by its service name (e.g., db).
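As a rough illustration of referring to the database by its service name, the Python sketch below builds a PostgreSQL connection URL for the backend. It assumes the backend container receives the same POSTGRES_* variables as the db service, which the Compose file above does not yet do. `DB_HOST`, `DB_PORT`, and the `database_url` helper are hypothetical names introduced for this example.

```python
import os
from urllib.parse import quote


def database_url(env=os.environ):
    """Build a PostgreSQL URL, defaulting to the values from the Compose example."""
    user = env.get("POSTGRES_USER", "user")
    password = env.get("POSTGRES_PASSWORD", "password")
    host = env.get("DB_HOST", "db")    # hypothetical variable; "db" is the Compose service name
    port = env.get("DB_PORT", "5432")  # hypothetical variable; 5432 is PostgreSQL's default port
    name = env.get("POSTGRES_DB", "mydb")
    # Percent-encode credentials so characters like "@" do not break the URL.
    return (
        f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{name}"
    )


print(database_url({}))
```

The important part is the host: inside the Compose network the backend connects to db, not localhost, because each container has its own network namespace and Docker's internal DNS resolves service names.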

7. Continuous Integration and Deployment (CI/CD) with Docker

Setting Up a CI/CD Pipeline:

Use Docker in CI/CD pipelines to ensure consistency across environments.

Example: GitHub Actions or Jenkins pipeline using Docker to build and push images.

Example .github/workflows/docker.yml:

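```yaml
name: CI/CD Pipeline
on: [push]
jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout Code
        uses: actions/checkout@v2
      - name: Build Docker Image
        run: docker build -t myapp .
      # Pushing requires a prior registry login and a registry-qualified tag
      # (for example <namespace>/myapp); both are omitted in this example.
      - name: Push Docker Image
        run: docker push myapp
```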

Automating Deployment:

Once images are built and pushed to a Docker registry (e.g., Docker Hub, Amazon ECR), they can be pulled into your production or staging environment.

8. Scaling Applications with Docker

Docker Swarm for Orchestration:

Docker Swarm is a native clustering and orchestration tool for Docker. You can scale your services by specifying the number of replicas.

Example:

```bash
docker service scale myapp=5
```

Kubernetes for Advanced Orchestration:

Kubernetes (K8s) is more complex but offers greater scalability and fault tolerance. It can manage Docker containers at scale.

Load Balancing and Service Discovery:

Use Docker Swarm or Kubernetes to automatically load balance traffic to different container replicas.
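The basic idea of spreading requests across replicas can be shown with a round-robin sketch in Python. This is one simple strategy chosen for illustration; the algorithm an orchestrator actually uses depends on its configuration, and the replica names below are hypothetical.

```python
from collections import Counter
from itertools import cycle

# Hypothetical names for the five replicas created by scaling myapp to 5.
replicas = [f"myapp.{i}" for i in range(1, 6)]

# Round robin: hand each incoming request to the next replica in turn.
next_replica = cycle(replicas)
handled = Counter(next(next_replica) for _ in range(10))

for name, count in sorted(handled.items()):
    print(name, count)
```

With ten requests and five replicas, each replica in this sketch handles two requests. The orchestrator also takes care of service discovery, so clients address the service by name and never need to know the individual replica addresses.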

9. Best Practices

Optimizing Docker Images:

Use smaller base images (e.g., alpine images) to reduce image size.

Use multi-stage builds to avoid unnecessary dependencies in the final image.

Environment Variables and Secrets Management:

Store sensitive data like API keys or database credentials in Docker secrets or environment variables rather than hardcoding them.
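A minimal sketch of reading such a value in application code, assuming the secret is mounted as a file under /run/secrets (the location Docker uses for secrets inside a container) with an environment variable as fallback. The `read_secret` helper and the `db_password` name are hypothetical, and the demo uses a temporary directory in place of /run/secrets so it can run anywhere.

```python
import os
import tempfile
from pathlib import Path


def read_secret(name, secrets_dir="/run/secrets", env=os.environ):
    """Return a secret from a mounted file, falling back to an environment variable."""
    secret_file = Path(secrets_dir) / name
    if secret_file.is_file():
        return secret_file.read_text().strip()
    value = env.get(name.upper())
    if value is None:
        raise KeyError(f"secret {name!r} not found in {secrets_dir} or the environment")
    return value


# Demo: a temporary directory stands in for /run/secrets.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "db_password").write_text("s3cret\n")
    from_file = read_secret("db_password", secrets_dir=tmp, env={})

from_env = read_secret("db_password", secrets_dir="/nonexistent", env={"DB_PASSWORD": "fallback"})
print(from_file, from_env)
```

Preferring the file keeps the value out of the process environment, where it is easier to leak through logs or inspection commands. Either way the credential stays out of the image and the source code.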

Logging and Monitoring:

Use tools like Docker’s built-in logging drivers, or integrate with ELK stack (Elasticsearch, Logstash, Kibana) for advanced logging.

For monitoring, tools like Prometheus and Grafana can be used to track Docker container metrics.

10. Conclusion

Why Use Docker in Full Stack Development? Docker simplifies the management of complex full-stack applications by ensuring consistent environments across all stages of development. It also offers significant performance benefits and scalability options.

Recommendations:

Integrate Docker with CI/CD pipelines for automated builds and deployment.

Consider Docker for microservices architectures, where it enables easy scaling and management of individual services.

WEBSITE: https://www.ficusoft.in/full-stack-developer-course-in-chennai/


Best DevOps Certifications for Elevating Your Skills

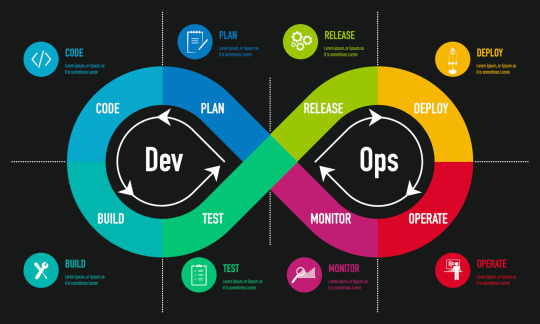

DevOps is a rapidly growing field that bridges the gap between software development and IT operations, encouraging a culture of continuous integration, automation, and fast delivery. Whether you're a novice starting your career in the field or a seasoned professional seeking to deepen your knowledge, earning a DevOps certification can greatly improve your job prospects.

This article discusses the most beneficial DevOps certifications to earn in 2025, which can help you enhance your capabilities and stay ahead in the ever-changing tech sector.

Why Get a DevOps Certification?

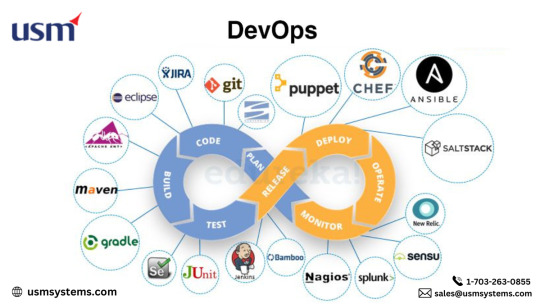

DevOps certifications demonstrate your expertise and experience in automation, cloud computing, CI/CD pipelines, infrastructure as code, and more. Here is why getting certified is useful:

Improved Career Opportunities: Certified DevOps professionals are highly sought after as top companies look for experienced engineers.

Higher Salary Potential: Certified DevOps engineers often earn higher salaries than professionals who are not certified.

Better Skill Set: Certifications can help you master tools such as Kubernetes, Docker, Ansible, Jenkins, and Terraform.

Higher Job Security: With the rapid adoption of DevOps practices, certified professionals hold a stronger position in the job market.

Top DevOps Certifications in 2025

1. AWS Certified DevOps Engineer – Professional

Best for: Cloud DevOps Engineers

Skills Covered: AWS automation, CI/CD pipelines, logging, and monitoring

Cost: $300

Why Choose This Certification? AWS is a dominant cloud provider, and this certification validates your expertise in implementing DevOps practices within AWS environments. It's ideal for those working with AWS services like EC2, Lambda, CloudFormation, and CodePipeline.

2. Microsoft Certified: DevOps Engineer Expert

Best for: Azure DevOps Professionals

Skills Covered: Azure DevOps, CI/CD, security, and monitoring

Cost: $165

Why Choose This Certification? If you're working in Microsoft Azure environments, this certification is a must. It covers using Azure DevOps Services, infrastructure as code with ARM templates, and managing Kubernetes deployments in Azure.

3. Google Cloud Professional DevOps Engineer

Best for: Google Cloud DevOps Engineers

Skills Covered: GCP infrastructure, CI/CD, monitoring, and incident response

Cost: $200

Why Choose This Certification? Google Cloud is growing in popularity, and this certification helps DevOps professionals validate their skills in site reliability engineering (SRE) and continuous delivery using GCP.

4. Kubernetes Certifications (CKA & CKAD)

Best for: Kubernetes Administrators & Developers

Skills Covered: Kubernetes cluster management, networking, and security

Cost: $395 each

Why Choose These Certifications? Kubernetes is the backbone of container orchestration, making these certifications essential for DevOps professionals dealing with cloud-native applications and containerized deployments.

CKA (Certified Kubernetes Administrator): Best for managing Kubernetes clusters.

CKAD (Certified Kubernetes Application Developer): Best for developers deploying apps in Kubernetes.

5. Docker Certified Associate (DCA)

Best for: Containerization Experts

Skills Covered: Docker containers, Swarm, storage, and networking

Cost: $195

Why Choose This Certification? Docker is a key component of DevOps workflows. The DCA certification helps you gain expertise in containerized applications, orchestration, and security best practices.

6. HashiCorp Certified Terraform Associate

Best for: Infrastructure as Code (IaC) Professionals

Skills Covered: Terraform, infrastructure automation, and cloud deployments

Cost: $70

Why Choose This Certification? Terraform is the leading Infrastructure as Code (IaC) tool. This certification proves your ability to manage cloud infrastructure efficiently across AWS, Azure, and GCP.

7. Red Hat Certified Specialist in Ansible Automation

Best for: Automation Engineers

Skills Covered: Ansible playbooks, configuration management, and security

Cost: $400

Why Choose This Certification? If you work with IT automation and configuration management, this certification validates your Ansible skills, making you a valuable asset for enterprises looking to streamline operations.

8. DevOps Institute Certifications (DASM, DOFD, and DOL)

Best for: DevOps Leadership & Fundamentals

Skills Covered: DevOps culture, processes, and automation best practices

Cost: Varies

Why Choose These Certifications? The DevOps Institute offers various certifications focusing on foundational DevOps knowledge, agile methodologies, and leadership skills, making them ideal for beginners and managers.

How to Choose the Right DevOps Certification?

Consider the following factors when selecting a DevOps certification course online:

Your Experience Level: Beginners may start with Docker, Terraform, or DevOps Institute certifications, while experienced professionals can pursue AWS, Azure, or Kubernetes certifications.

Your Career Goals: If you work with a specific cloud provider, choose AWS, Azure, or GCP certifications. For automation, go with Terraform or Ansible.

Industry Demand: Certifications like AWS DevOps Engineer, Kubernetes, and Terraform have high industry demand and can boost your career.

Cost & Time Commitment: Some certifications require hands-on experience, practice labs, and exams, so choose one that fits your schedule and budget.

Final Thoughts

DevOps certifications can deepen your technical knowledge, improve your job prospects, and raise your earning potential. Whether you want to specialise in cloud platforms, automation, or containerization, there is an option suited to your career path among Cloud Computing Certification Courses.

Earning at least one of these well-regarded DevOps certifications in 2025 can help establish you as a professional with a solid background in a rapidly changing industry.


Text

Advanced DevOps Strategies: Optimizing Software Delivery and Operations

Introduction

DevOps bridges the gap between software development and IT operations to deliver software faster and more reliably. By combining automation, continuous integration (CI), continuous deployment (CD), and monitoring, businesses can increase productivity and reduce deployment errors. Adopting DevOps has become crucial for organizations that want a more scalable, secure, and efficient software development lifecycle. DevOps workflows typically rely on tools such as Docker, Kubernetes, Jenkins, and Terraform, along with cloud platforms. As AI and machine learning become more integrated into DevOps, businesses are finding new ways to automate, predict, and optimize their infrastructure.

Infrastructure as Code (IaC): Automating Deployments

Infrastructure as Code (IaC), one of the core practices of DevOps, lets teams automate infrastructure management. With tools like Terraform, Ansible, and CloudFormation, developers describe infrastructure declaratively instead of configuring it by hand. Because environments become repeatable and consistent, IaC reduces human error and speeds up software delivery. Automated provisioning of servers, databases, and networking components makes deployments scalable and adaptable. Adopting IaC also gives businesses version-controlled infrastructure, faster disaster recovery, and more efficient resource use in both on-premises and cloud environments.
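The declarative idea described above can be sketched in a few lines of Python. This is only an illustration, not how Terraform, Ansible, or CloudFormation are implemented: the `plan` function and the resource names are hypothetical. It compares a desired state with the current state and lists the steps needed to reconcile them.

```python
from typing import Dict, List, Tuple

Resource = Dict[str, object]


def plan(desired: Dict[str, Resource], actual: Dict[str, Resource]) -> List[Tuple[str, str]]:
    """Return the (action, resource_name) steps that move `actual` to `desired`."""
    steps: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in actual:
            steps.append(("create", name))
        elif desired[name] != actual[name]:
            steps.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            steps.append(("delete", name))
    return steps


# Hypothetical resources, standing in for what a real tool reads from files.
desired_state = {
    "web-server": {"type": "vm", "size": "small"},
    "app-db": {"type": "database", "engine": "postgres"},
}
actual_state = {
    "web-server": {"type": "vm", "size": "tiny"},
    "old-cache": {"type": "cache"},
}

print(plan(desired_state, actual_state))
# [('create', 'app-db'), ('update', 'web-server'), ('delete', 'old-cache')]
```

Because the desired state is plain data, it can be kept in version control and the same plan can be recomputed at any time, which is where the repeatability comes from.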

The Role of Microservices in DevOps

Microservices architecture fits DevOps well because it lets teams build applications as modular, independent services. Unlike conventional monolithic designs, microservices let teams deploy individual services without affecting the application as a whole. Tools like Docker and Kubernetes simplify the management of containerized microservices and provide fault tolerance, scalability, and high availability. Service mesh technologies like Istio and Consul improve observability, traffic management, and security in microservices-based systems. Combining microservices with DevOps is a common approach in modern software development because it supports faster releases, less downtime, and better resource usage.

CI/CD Pipelines: Enhancing Speed and Reliability

Continuous Integration (CI) and Continuous Deployment (CD) are the foundation of DevOps automation, allowing frequent software changes with minimal disruption. Tools like Jenkins, GitLab CI/CD, and GitHub Actions automate code integration, testing, and deployment, which improves software reliability. CI/CD pipelines reduce production failures, shorten development cycles, and cut down on manual intervention. Automated testing, blue-green deployments, and rollback procedures further improve deployment safety and stability. Businesses that follow CI/CD best practices see faster time-to-market, smoother upgrades, and well-performing applications in both on-premises and cloud environments.
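As a rough sketch of the pipeline behaviour described above, the Python below runs stages in order, stops at the first failure, and only switches traffic to the new environment when every stage passes. The function names and the "blue"/"green" labels are illustrative assumptions, not part of Jenkins, GitLab CI/CD, or GitHub Actions.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order; stop at the first one that fails."""
    completed: List[str] = []
    for name, step in stages:
        if not step():
            return False, completed
        completed.append(name)
    return True, completed


def blue_green_release(live: str, candidate: str, stages: List[Stage]) -> str:
    """Return the environment that should receive traffic after the run."""
    ok, _ = run_pipeline(stages)
    # On failure the live environment keeps the traffic, which acts as the rollback.
    return candidate if ok else live


# Hypothetical stages; real ones would build, test, and deploy the application.
failing_run = [("build", lambda: True), ("test", lambda: False), ("deploy", lambda: True)]
print(run_pipeline(failing_run))                         # (False, ['build'])
print(blue_green_release("blue", "green", failing_run))  # blue
```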

Conclusion

By mastering DevOps principles, businesses can achieve agility, efficiency, and security in modern software development. The combination of IaC, microservices, CI/CD, and automation drives innovation and operational excellence. For those starting out, a DevOps internship can offer valuable industry exposure and hands-on experience with advanced DevOps tools and processes.

#devOps#devOps mastery#Devops mastery course#devops mastery internship#devops mastery training#devops internship in pune#e3l#e3l.co


Text

What is the latest technology in software development (2024)

The latest technology in software development is characterized by rapid advancements in several areas, all aimed at improving development speed, enhancing security, and increasing efficiency. Below we discuss some of the most notable recent technologies making waves in the industry.

What is the latest technology in software development

AI and ML are transforming the field by enabling predictive analytics, automating testing, and improving decision-making processes. Tools like GitHub Copilot, powered by OpenAI, assist developers in writing code faster by suggesting code snippets and automating repetitive tasks. These technologies are not only improving development productivity but also enabling more robust, intelligent software applications.

Low-code and no-code development platforms have gained significant traction because they enable users with minimal coding experience to build applications easily. These platforms use drag-and-drop interfaces and pre-built components, reducing the need for extensive hand-coding. This trend democratizes software development, empowering businesses to create custom applications quickly and at a lower cost.

As cyber threats become more sophisticated, integrating security directly into the development pipeline is critical. DevSecOps incorporates security practices within the DevOps process, ensuring that code is secure from the beginning of the development lifecycle. This shift-left approach reduces vulnerabilities and enhances compliance, making software more robust against potential attacks.

Microservices have been around for some time, but they continue to evolve to make software development more scalable and maintainable. Combined with serverless platforms like AWS Lambda and Azure Functions, they let developers deploy code without worrying about the underlying infrastructure. These technologies support greater flexibility, allowing teams to scale individual services independently and optimize resource usage, resulting in cost-effective solutions.

Progressive Web Apps (PWAs) bridge the gap between web and mobile applications by delivering an app-like experience directly through the web browser. PWAs are reliable, fast, and capable of working offline, enhancing user engagement without the complexity of traditional app stores. This technology is particularly beneficial for businesses looking to provide a seamless user experience across devices.

Blockchain is no longer limited to cryptocurrencies. It is now finding applications in software development for creating secure, transparent, and tamper-resistant solutions. Smart contracts and decentralized apps (dApps) are being used in various industries such as supply chain, finance, and identity management to foster trust and eliminate intermediaries.

Quantum computing is still in its early stages, but it promises to solve certain complex problems that are impractical for traditional computers. Companies like IBM and Google are making significant strides in this area. Although it has not been integrated into mainstream software development, the potential for breakthroughs in data analysis and optimization is significant.

Cloud-native development, built on containers and orchestration platforms such as Docker and Kubernetes, continues to reshape how applications are built and deployed. These technologies facilitate better resource management, scalability, and seamless deployment across multiple cloud environments, supporting the growing demand for flexible and resilient infrastructure.

Edge computing complements cloud computing by processing data closer to the source, reducing latency and bandwidth usage. This technology is especially important for IoT applications and real-time analytics, as it ensures quicker response times and more efficient use of resources.

AR and VR are enhancing user experiences in various domains, from gaming to training simulations and e-commerce. With advancements in hardware and software, developers now have more tools to create immersive applications that merge the physical and digital.

The latest technologies in software development are driven by the need for greater efficiency, security, and scalability. Whether it’s AI-enhanced coding tools, cloud-native solutions, or quantum computing, these innovations are reshaping the development landscape.



Text

How to Maximize Business Productivity with Top DevOps Automation Tools?

Maximizing business productivity with top DevOps automation tools involves streamlining development, deployment, and monitoring processes. Tools like Jenkins, Docker, Kubernetes, and Ansible help automate workflows, enhance collaboration, and improve system reliability. Implementing CI/CD pipelines, infrastructure as code (IaC), and automated testing ensures faster software delivery with minimal errors. Integrating AI-driven analytics further optimizes performance and resource utilization.

USM Business Systems

Services:

Mobile app development

Artificial Intelligence

Machine Learning

Android app development

RPA

Big data

HR Management

Workforce Management

IoT

IOS App Development

Cloud Migration

#DevOpsAutomation#BoostProductivity#BusinessAutomation#TopDevOpsTools#MaximizeEfficiency#DevOpsForBusiness#SmartAutomation#WorkplaceProductivity#AIinDevOps#OptimizeWorkflow


Text

The role of machine learning in enhancing cloud-native container security - AI News

New Post has been published on https://thedigitalinsider.com/the-role-of-machine-learning-in-enhancing-cloud-native-container-security-ai-news/


The advent in the early 2000s of more powerful processors with hardware support for virtualisation started the computing revolution that led, in time, to what we now call the cloud. With single hardware instances able to run dozens, if not hundreds, of virtual machines concurrently, businesses could offer their users multiple services and applications that would otherwise have been financially impractical, if not impossible.

But virtual machines (VMs) have several downsides. Often, an entire virtualised operating system is overkill for many applications, and although very much more malleable, scalable, and agile than a fleet of bare-metal servers, VMs still require significantly more memory and processing power, and are less agile than the next evolution of this type of technology – containers. In addition to being more easily scaled (up or down, according to demand), containerised applications consist of only the necessary parts of an application and its supporting dependencies. Therefore apps based on micro-services tend to be lighter and more easily configurable.

Virtual machines exhibit the same security issues that affect their bare-metal counterparts, and to some extent, container security issues reflect those of their component parts: a MySQL bug in a specific version of the upstream application will affect containerised versions too. With regard to VMs, bare metal installs, and containers, cybersecurity concerns and activities are very similar. But container deployments and their tooling bring specific security challenges to those charged with running apps and services, whether manually piecing together applications with choice containers, or running in production with orchestration at scale.

Container-specific security risks

Misconfiguration: Complex applications are made up of multiple containers, and misconfiguration – often only a single line in a .yaml file – can grant unnecessary privileges and increase the attack surface. For example, although it’s not trivial for an attacker to gain root access to the host machine from a container, it’s still a too-common practice to run Docker as root, with no user namespace remapping.

Vulnerable container images: In 2022, Sysdig found over 1,600 images identified as malicious in Docker Hub, in addition to many containers stored in the repo with hard-coded cloud credentials, ssh keys, and NPM tokens. The process of pulling images from public registries is opaque, and the convenience of container deployment (plus pressure on developers to produce results, fast) can mean that apps can easily be constructed with inherently insecure, or even malicious components.

Orchestration layers: For larger projects, orchestration tools such as Kubernetes can increase the attack surface, usually due to misconfiguration and high levels of complexity. A 2022 survey from D2iQ found that only 42% of applications running on Kubernetes made it into production – down in part to the difficulty of administering large clusters and a steep learning curve.

According to Ari Weil at Akamai, “Kubernetes is mature, but most companies and developers don’t realise how complex […] it can be until they’re actually at scale.”
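To make the misconfiguration risk above concrete, here is a minimal Python sketch of a check that could run before deployment. It assumes the service definitions have already been parsed from a Compose-style .yaml file into a dictionary. The function name is hypothetical, and treating a missing `user` entry as root is a simplifying assumption, since an image may set its own user.

```python
from typing import Dict, List


def find_risky_settings(services: Dict[str, dict]) -> List[str]:
    """Flag a few settings that widen a container's privileges."""
    findings: List[str] = []
    for name, cfg in sorted(services.items()):
        if cfg.get("privileged") is True:
            findings.append(f"{name}: runs in privileged mode")
        # Assumption: no explicit user means the container runs as root.
        user = str(cfg.get("user", "root"))
        if user in ("root", "0") or user.startswith(("root:", "0:")):
            findings.append(f"{name}: no non-root user configured")
        if "ALL" in cfg.get("cap_add", []):
            findings.append(f"{name}: adds all Linux capabilities")
    return findings


# Hypothetical services, as they might look after parsing a .yaml file.
services = {
    "web": {"image": "example/web", "user": "1000:1000"},
    "db": {"image": "example/db", "privileged": True},
}
for finding in find_risky_settings(services):
    print(finding)
```

A check like this only covers the handful of settings it knows about, so it complements rather than replaces a dedicated scanner.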

Container security with machine learning

The specific challenges of container security can be addressed using machine learning algorithms trained on observing the components of an application when it’s ‘running clean.’ By creating a baseline of normal behaviour, machine learning can identify anomalies that could indicate potential threats from unusual traffic, unauthorised changes to configuration, odd user access patterns, and unexpected system calls.
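The baseline idea can be illustrated with a deliberately simple statistical stand-in for a trained model. The sketch below learns the mean and spread of a metric while the application is "running clean" and flags values that fall far outside it. The class name, the three-standard-deviation threshold, and the sample numbers are all assumptions chosen for illustration; a production platform would use far richer features and models.

```python
from statistics import mean, stdev
from typing import List


class Baseline:
    """Learn normal behaviour from clean samples, then flag outliers."""

    def __init__(self, clean_samples: List[float], threshold: float = 3.0) -> None:
        if len(clean_samples) < 2:
            raise ValueError("need at least two baseline samples")
        self.mean = mean(clean_samples)
        self.spread = stdev(clean_samples)
        self.threshold = threshold

    def is_anomalous(self, value: float) -> bool:
        if self.spread == 0:
            return value != self.mean
        # Distance from the baseline, measured in standard deviations.
        return abs(value - self.mean) / self.spread > self.threshold


# Hypothetical metric: system calls per second observed during a clean run.
syscalls_per_second = [98, 102, 101, 99, 100, 103, 97, 100]
baseline = Baseline(syscalls_per_second)
print(baseline.is_anomalous(104))  # False
print(baseline.is_anomalous(450))  # True
```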

ML-based container security platforms can scan image repositories and compare each against databases of known vulnerabilities and issues. Scans can be automatically triggered and scheduled, helping prevent the addition of harmful elements during development and in production. Auto-generated audit reports can be tracked against standard benchmarks, or an organisation can set its own security standards – useful in environments where highly-sensitive data is processed.

The connectivity between specialist container security functions and orchestration software means that suspected containers can be isolated or closed immediately, insecure permissions revoked, and user access suspended. With API connections to local firewalls and VPN endpoints, entire environments or subnets can be isolated, or traffic stopped at network borders.

Final word

Machine learning can reduce the risk of data breach in containerised environments by working on several levels. Anomaly detection, asset scanning, and flagging potential misconfiguration are all possible, and any degree of automated alerting or amelioration is relatively simple to enact.

The transformative possibilities of container-based apps can be approached without the security issues that have stopped some from exploring, developing, and running microservice-based applications. The advantages of cloud-native technologies can be won without compromising existing security standards, even in high-risk sectors.


#2022#agile#ai#ai news#akamai#Algorithms#anomalies#anomaly#anomaly detection#API#applications#apps#Attack surface#audit#benchmarks#breach#bug#Cloud#Cloud-Native#clusters#Companies#complexity#computing#connectivity#container#container deployment#Containers#credentials#cybersecurity#data


Text

AWS APP Runner Tutorial for Amazon Cloud Developers

Full Video Link - https://youtube.com/shorts/_OgnzyiP8TI Hi, a new video tutorial on AWS App Runner is published on the codeonedigest YouTube channel.

AWS App Runner is a fully managed container application service that lets you build, deploy, and run containerized applications without prior infrastructure or container experience. AWS App Runner also load balances traffic with encryption, scales to meet your traffic needs, and lets your services communicate with other AWS applications in a private VPC. You can use App Runner to build and run API…


#amazon web services#amazon web services tutorial#app runner#app runner tutorial#app runner vs fargate#aws#aws app runner#aws app runner demo#aws app runner docker#aws app runner equivalent in azure#aws app runner example#aws app runner review#aws app runner spring boot#aws app runner tutorial#aws app runner youtube#aws cloud#aws cloud services#aws cloud tutorial#aws developer tools#aws ecs fargate#aws tutorial beginning#what is amazon web services


Text

Why Linode Accounts Are the Best Choice and Where to Buy Them

Linode has become a trusted name in the cloud hosting industry, offering high-quality services tailored for developers, businesses, and enterprises seeking reliable, scalable, and secure infrastructure. With its competitive pricing, exceptional customer support, and a wide range of features, Linode accounts are increasingly popular among IT professionals. If you're wondering why Linode is the best choice and where you can buy a Linode account safely, this article will provide comprehensive insights.

Why Linode Accounts Are the Best Choice

1. Reliable Infrastructure

Linode is renowned for its robust and reliable infrastructure. With data centers located worldwide, it ensures high uptime and optimal performance. Businesses that rely on Linode accounts benefit from a stable environment for hosting applications, websites, and services.

Global Data Centers: Linode operates in 11 data centers worldwide, offering low-latency connections and redundancy.

99.99% Uptime SLA: Linode guarantees near-perfect uptime, making it an excellent choice for mission-critical applications.

2. Cost-Effective Pricing

Linode provides affordable pricing options compared to many other cloud providers. Its simple and transparent pricing structure allows users to plan their budgets effectively.

No Hidden Costs: Users pay only for what they use, with no unexpected charges.

Flexible Plans: From shared CPU instances to dedicated servers, Linode offers plans starting as low as $5 per month, making it suitable for businesses of all sizes.

3. Ease of Use

One of the standout features of Linode accounts is their user-friendly interface. The platform is designed to cater to beginners and seasoned developers alike.

Intuitive Dashboard: Manage your servers, monitor performance, and deploy applications easily.

One-Click Apps: Deploy popular applications like WordPress, Drupal, or databases with just one click.

4. High Performance

Linode ensures high performance through cutting-edge technology. Its SSD storage, fast processors, and optimized network infrastructure ensure lightning-fast speeds.

SSD Storage: All Linode plans come with SSDs for faster data access and improved performance.

Next-Generation Hardware: Regular updates to hardware ensure users benefit from the latest innovations.

5. Customizability and Scalability

Linode offers unparalleled flexibility, allowing users to customize their servers based on specific needs.

Custom Configurations: Tailor your server environment, operating system, and software stack.

Scalable Solutions: Scale up or down depending on your resource requirements, ensuring cost efficiency.

6. Developer-Friendly Tools

Linode is a developer-focused platform with robust tools and APIs that simplify deployment and management.

CLI and API Access: Automate server management tasks with Linode’s command-line interface and powerful APIs.

DevOps Ready: Supports tools like Kubernetes, Docker, and Terraform for seamless integration into CI/CD pipelines.

7. Exceptional Customer Support

Linode’s customer support is often highlighted as one of its strongest assets. Available 24/7, the support team assists users with technical and account-related issues.

Quick Response Times: Get answers within minutes through live chat or ticketing systems.

Extensive Documentation: Access tutorials, guides, and forums to resolve issues independently.

8. Security and Compliance

Linode prioritizes user security by providing features like DDoS protection, firewalls, and two-factor authentication.

DDoS Protection: Prevent downtime caused by malicious attacks.

Compliance: Linode complies with industry standards, ensuring data safety and privacy.

Conclusion

Linode accounts are an excellent choice for developers and businesses looking for high-performance, cost-effective, and reliable cloud hosting solutions. With its robust infrastructure, transparent pricing, and user-friendly tools, Linode stands out as a top-tier provider in the competitive cloud hosting market.

When buying Linode accounts, prioritize safety and authenticity by purchasing from the official website or verified sources. This ensures you benefit from Linode’s exceptional features and customer support. Avoid unverified sellers to minimize risks and guarantee a smooth experience.

Whether you’re a developer seeking scalable hosting or a business looking to streamline operations, Linode accounts are undoubtedly one of the best choices. Start exploring Linode today and take your cloud hosting experience to the next level!


Text

How to Deploy Your Full Stack Application: A Beginner’s Guide

Deploying a full stack application involves setting up your frontend, backend, and database on a live server so users can access it over the internet. This guide covers deployment strategies, hosting services, and best practices.

1. Choosing a Deployment Platform

Popular options include:

Cloud Platforms: AWS, Google Cloud, Azure

PaaS Providers: Heroku, Vercel, Netlify

Containerized Deployment: Docker, Kubernetes

Traditional Hosting: VPS (DigitalOcean, Linode)

2. Deploying the Backend

Option 1: Deploy with a Cloud Server (e.g., AWS EC2, DigitalOcean)

Set Up a Virtual Machine (VM)

bash

ssh user@your-server-ip

Install Dependencies

Node.js (sudo apt install nodejs npm)

Python (sudo apt install python3-pip)

Database (MySQL, PostgreSQL, MongoDB)

Run the Server

bash

nohup node server.js &        # For Node.js apps
gunicorn app:app --daemon     # For Python WSGI apps such as Flask (a Django project would point at its own wsgi module instead)

Option 2: Serverless Deployment (AWS Lambda, Firebase Functions)

Pros: No server maintenance, auto-scaling

Cons: Limited control over infrastructure

3. Deploying the Frontend

Option 1: Static Site Hosting (Vercel, Netlify, GitHub Pages)

Push Code to GitHub

Connect GitHub Repo to Netlify/Vercel

Set Build Command (e.g., npm run build)

Deploy and Get Live URL

Option 2: Deploy with Nginx on a Cloud Server

Install Nginx

bash

sudo apt install nginx

Configure Nginx for React/Vue/Angular

nginx

server {
    listen 80;
    root /var/www/html;
    index index.html;

    location / {
        try_files $uri /index.html;
    }
}

Restart Nginx

bash

sudo systemctl restart nginx

4. Connecting Frontend and Backend

Use CORS middleware to allow cross-origin requests

Set up reverse proxy with Nginx

Secure API with authentication tokens (JWT, OAuth)
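The first item above, allowing cross-origin requests, comes down to the backend returning the right response headers. The Python sketch below shows the decision a CORS middleware makes. The function name, the allowed methods and headers, and the example origin are assumptions for illustration; in practice a framework's CORS middleware would normally handle this.

```python
from typing import Dict, Iterable, Optional


def cors_headers(origin: Optional[str], allowed_origins: Iterable[str]) -> Dict[str, str]:
    """Return the CORS response headers for a request, or none if the origin is not allowed."""
    if origin is None or origin not in set(allowed_origins):
        return {}
    return {
        # Echo the specific origin rather than "*" so credentials can be used safely.
        "Access-Control-Allow-Origin": origin,
        "Access-Control-Allow-Methods": "GET, POST, OPTIONS",
        "Access-Control-Allow-Headers": "Authorization, Content-Type",
        # Tell caches that the response differs per requesting origin.
        "Vary": "Origin",
    }


# Hypothetical frontend origin, e.g. the live URL from the static host.
ALLOWED = ["https://app.example.com"]
print(cors_headers("https://app.example.com", ALLOWED))
print(cors_headers("https://evil.example.net", ALLOWED))  # {}
```

Listing allowed origins explicitly keeps the API from being callable by scripts on arbitrary sites, which matters once authentication tokens are involved.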

5. Database Setup

Cloud Databases: AWS RDS, Firebase, MongoDB Atlas

Self-Hosted Databases: PostgreSQL, MySQL on a VPS

bash

# Example: Run PostgreSQL on DigitalOcean
sudo apt install postgresql
sudo systemctl start postgresql

6. Security & Optimization

✅ SSL Certificate: Secure site with HTTPS (Let’s Encrypt)

✅ Load Balancing: Use AWS ALB, Nginx reverse proxy

✅ Scaling: Auto-scale with Kubernetes or cloud functions

✅ Logging & Monitoring: Use Datadog, New Relic, AWS CloudWatch

7. CI/CD for Automated Deployment

GitHub Actions: Automate builds and deployment

Jenkins/GitLab CI/CD: Custom pipelines for complex deployments

Docker & Kubernetes: Containerized deployment for scalability

Final Thoughts

Deploying a full stack app requires setting up hosting, configuring the backend, deploying the frontend, and securing the application.

Cloud platforms like AWS, Heroku, and Vercel simplify the process, while advanced setups use Kubernetes and Docker for scalability.

WEBSITE: https://www.ficusoft.in/full-stack-developer-course-in-chennai/


Text

Essential Components of a Production Microservice Application

DevOps Automation Tools and modern practices have revolutionized how applications are designed, developed, and deployed. Microservice architecture is a preferred approach for enterprises, IT sectors, and manufacturing industries aiming to create scalable, maintainable, and resilient applications. This blog will explore the essential components of a production microservice application, ensuring it meets enterprise-grade standards.

1. API Gateway

An API Gateway acts as a single entry point for client requests. It handles routing, composition, and protocol translation, ensuring seamless communication between clients and microservices. Key features include:

Authentication and Authorization: Protect sensitive data by implementing OAuth2, OpenID Connect, or other security protocols.

Rate Limiting: Prevent overloading by throttling excessive requests.

Caching: Reduce response time by storing frequently accessed data.

Monitoring: Provide insights into traffic patterns and potential issues.

API Gateways like Kong, AWS API Gateway, or NGINX are widely used.

Mobile App Development Agency professionals often integrate API Gateways when developing scalable mobile solutions.
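Rate limiting, one of the gateway features listed above, is often implemented with a token bucket. The Python sketch below is a minimal, single-process illustration and does not reflect how Kong, AWS API Gateway, or NGINX implement it. The class name and the injectable `clock` parameter are assumptions made so the behaviour is easy to follow and test.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# One bucket per client: a burst of 5 requests, then 2 requests per second.
bucket = TokenBucket(rate=2.0, capacity=5)
print([bucket.allow() for _ in range(6)])
```

A real gateway would keep one bucket per API key or client address and usually store the counters in shared storage so that all gateway instances agree.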

2. Service Registry and Discovery

Microservices need to discover each other dynamically, as their instances may scale up or down or move across servers. A service registry, like Consul, Eureka, or etcd, maintains a directory of all services and their locations. Benefits include:

Dynamic Service Discovery: Automatically update the service location.

Load Balancing: Distribute requests efficiently.

Resilience: Ensure high availability by managing service health checks.
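The three benefits above can be seen together in a small in-memory sketch. It is not how Consul, Eureka, or etcd work internally. The class, its method names, and the heartbeat-with-TTL health model are assumptions chosen to keep the example short.

```python
import time
from typing import Callable, Dict, List


class ServiceRegistry:
    """Track instances by heartbeat and hand out healthy ones round-robin."""

    def __init__(self, ttl: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self.clock = clock
        self._last_seen: Dict[str, Dict[str, float]] = {}
        self._cursor: Dict[str, int] = {}

    def heartbeat(self, service: str, address: str) -> None:
        """Register an instance or refresh its health timestamp."""
        self._last_seen.setdefault(service, {})[address] = self.clock()

    def healthy(self, service: str) -> List[str]:
        """Return instances whose last heartbeat is within the TTL."""
        now = self.clock()
        entries = self._last_seen.get(service, {})
        return sorted(a for a, seen in entries.items() if now - seen <= self.ttl)

    def pick(self, service: str) -> str:
        """Choose the next healthy instance in round-robin order."""
        candidates = self.healthy(service)
        if not candidates:
            raise LookupError(f"no healthy instance of {service}")
        index = self._cursor.get(service, 0) % len(candidates)
        self._cursor[service] = index + 1
        return candidates[index]


registry = ServiceRegistry(ttl=30.0)
registry.heartbeat("orders", "10.0.0.1:8080")  # hypothetical addresses
registry.heartbeat("orders", "10.0.0.2:8080")
print(registry.pick("orders"), registry.pick("orders"))
```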

3. Configuration Management

Centralized configuration management is vital for managing environment-specific settings, such as database credentials or API keys. Tools like Spring Cloud Config, Consul, or AWS Systems Manager Parameter Store provide features like:

Version Control: Track configuration changes.

Secure Storage: Encrypt sensitive data.

Dynamic Refresh: Update configurations without redeploying services.

4. Service Mesh

A service mesh abstracts the complexity of inter-service communication, providing advanced traffic management and security features. Popular service mesh solutions like Istio, Linkerd, or Kuma offer:

Traffic Management: Control traffic flow with features like retries, timeouts, and load balancing.

Observability: Monitor microservice interactions using distributed tracing and metrics.

Security: Encrypt communication using mTLS (Mutual TLS).

5. Containerization and Orchestration

Microservices are typically deployed in containers, which provide consistency and portability across environments. Container orchestration platforms like Kubernetes or Docker Swarm are essential for managing containerized applications. Key benefits include:

Scalability: Automatically scale services based on demand.

Self-Healing: Restart failed containers to maintain availability.

Resource Optimization: Efficiently utilize computing resources.

6. Monitoring and Observability

Ensuring the health of a production microservice application requires robust monitoring and observability. Enterprises use tools like Prometheus, Grafana, or Datadog to:

Track Metrics: Monitor CPU, memory, and other performance metrics.

Set Alerts: Notify teams of anomalies or failures.

Analyze Logs: Centralize logs for troubleshooting using ELK Stack (Elasticsearch, Logstash, Kibana) or Fluentd.

Distributed Tracing: Trace request flows across services using Jaeger or Zipkin.

Hire Android App Developers to ensure seamless integration of monitoring tools for mobile-specific services.

7. Security and Compliance

Securing a production microservice application is paramount. Enterprises should implement a multi-layered security approach, including:

Authentication and Authorization: Use protocols like OAuth2 and JWT for secure access.

Data Encryption: Encrypt data in transit (using TLS) and at rest.

Compliance Standards: Adhere to industry standards such as GDPR, HIPAA, or PCI-DSS.

Runtime Security: Employ tools like Falco or Aqua Security to detect runtime threats.

8. Continuous Integration and Continuous Deployment (CI/CD)

A robust CI/CD pipeline ensures rapid and reliable deployment of microservices. Using tools like Jenkins, GitLab CI/CD, or CircleCI enables:

Automated Testing: Run unit, integration, and end-to-end tests to catch bugs early.

Blue-Green Deployments: Minimize downtime by deploying new versions alongside old ones.

Canary Releases: Test new features on a small subset of users before full rollout.

Rollback Mechanisms: Quickly revert to a previous version in case of issues.
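For the canary releases mentioned above, one common way to pick the "small subset of users" is to hash a stable user identifier into a bucket. The Python sketch below shows that idea. The function name and the choice of SHA-256 are assumptions for illustration, not a feature of Jenkins, GitLab CI/CD, or CircleCI.

```python
import hashlib


def in_canary(user_id: str, percent: int) -> bool:
    """Return True if this user should see the new version."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Map the user to a stable bucket from 0 to 99.
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


# Hypothetical user IDs; the same user always gets the same answer.
for user in ["alice", "bob", "carol"]:
    print(user, in_canary(user, 10))
```

Because the bucket depends only on the user ID, raising the percentage keeps existing canary users in the canary group and simply adds more, which makes a gradual rollout predictable.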

9. Database Management

Microservices often follow a database-per-service model to ensure loose coupling. Choosing the right database solution is critical. Considerations include:

Relational Databases: Use PostgreSQL or MySQL for structured data.

NoSQL Databases: Opt for MongoDB or Cassandra for unstructured data.

Event Sourcing: Leverage Kafka or RabbitMQ for managing event-driven architectures.

10. Resilience and Fault Tolerance

A production microservice application must handle failures gracefully to ensure seamless user experiences. Techniques include:

Circuit Breakers: Prevent cascading failures using tools like Resilience4j or Hystrix (the latter is now in maintenance mode).

Retries and Timeouts: Ensure graceful recovery from temporary issues.

Bulkheads: Isolate failures to prevent them from impacting the entire system.
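The circuit breaker technique above can be sketched in plain Python. This is a simplified illustration and not the Hystrix or Resilience4j API. The class, its parameters, and the injectable `clock` are assumptions. After a run of failures the breaker rejects calls immediately, then lets a single trial call through once a cool-down has passed.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, func: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit is open; call skipped")
            # Half-open: the cool-down has passed, so allow one trial call.
        try:
            result = func()
        except Exception:
            self.failures += 1
            # Open (or re-open after a failed trial) and restart the cool-down.
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result


breaker = CircuitBreaker(max_failures=3, reset_after=30.0)
print(breaker.call(lambda: "inventory service responded"))
```

Failing fast while the circuit is open is what stops one slow dependency from tying up threads and connections in every service that calls it.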

11. Event-Driven Architecture

Event-driven architecture improves responsiveness and scalability. Key components include:

Message Brokers: Use RabbitMQ, Kafka, or AWS SQS for asynchronous communication.

Event Streaming: Employ tools like Kafka Streams for real-time data processing.

Event Sourcing: Maintain a complete record of changes for auditing and debugging.
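
As a small illustration of event sourcing, the sketch below stores only the events and derives the current state by replaying them. The account example, the Event type, and the event names are assumptions chosen for brevity; in practice the log would live in a durable store rather than an in-memory list.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Event:
    kind: str    # assumed event names: "deposited" or "withdrawn"
    amount: int  # amount in the smallest currency unit


def apply_event(balance: int, event: Event) -> int:
    """Return the new balance after applying one event."""
    if event.kind == "deposited":
        return balance + event.amount
    if event.kind == "withdrawn":
        return balance - event.amount
    raise ValueError(f"unknown event kind: {event.kind}")


def replay(events: Iterable[Event]) -> int:
    """Rebuild the current balance from the full event history."""
    balance = 0
    for event in events:
        balance = apply_event(balance, event)
    return balance
```

Replaying a prefix of the log gives the state at any earlier point in time, which is what makes the approach useful for auditing and debugging.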

12. Testing and Quality Assurance

Testing in microservices is complex due to the distributed nature of the architecture. A comprehensive testing strategy should include:

Unit Tests: Verify individual service functionality.

Integration Tests: Validate inter-service communication.

Contract Testing: Ensure compatibility between service APIs.

Chaos Engineering: Test system resilience by simulating failures using tools like Gremlin or Chaos Monkey.
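
Contract testing can be approximated with a very small check: the consumer writes down the fields and types it relies on, and the provider's response is validated against that list. The contract contents and function name below are hypothetical; dedicated contract-testing tools cover far more (status codes, headers, provider verification).

```python
from typing import Any, Dict, List

# Assumed consumer contract: the fields this consumer reads, and their types.
USER_CONTRACT: Dict[str, type] = {"id": int, "email": str, "active": bool}


def contract_violations(response: Dict[str, Any], contract: Dict[str, type]) -> List[str]:
    """List every way the response breaks the consumer's expectations."""
    problems = []
    for field, expected in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif type(response[field]) is not expected:
            # Exact type match, so a bool is not accepted where an int is expected.
            actual = type(response[field]).__name__
            problems.append(f"{field}: expected {expected.__name__}, got {actual}")
    return problems
```

Extra fields in the response are deliberately ignored, so the provider can add data without breaking existing consumers.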

13. Cost Management

Optimizing costs in a microservice environment is crucial for enterprises. Considerations include:

Autoscaling: Scale services based on demand to avoid overprovisioning.

Resource Monitoring: Use tools like AWS Cost Explorer or Kubernetes cost-monitoring tools such as Kubecost.

Right-Sizing: Adjust resources to match service needs.
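
The autoscaling item above can be illustrated with the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas x current metric / target metric). The sketch below applies that rule with assumed minimum and maximum bounds; the real autoscaler also applies a tolerance and stabilization windows that are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale the replica count in proportion to load, within fixed bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp so a metric spike cannot scale past the budgeted maximum.
    return max(min_replicas, min(max_replicas, raw))
```

For example, 4 replicas averaging 90% CPU against a 60% target gives 6 replicas, while the same 4 replicas at 30% CPU would shrink to 2.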

Conclusion

Building a production-ready microservice application involves integrating numerous components, each playing a critical role in ensuring scalability, reliability, and maintainability. By adopting best practices and leveraging the right tools, enterprises, IT sectors, and manufacturing industries can achieve operational excellence and deliver high-quality services to their customers.

Understanding and implementing these essential components, such as DevOps Automation Tools and robust testing practices, will enable organizations to fully harness the potential of microservice architecture. Whether you are part of a Mobile App Development Agency or looking to Hire Android App Developers, staying ahead in today’s competitive digital landscape is essential.


Azure DevOps Advanced Course: Elevate Your DevOps Expertise

The Azure DevOps Advanced Course is for individuals who already have a solid understanding of DevOps and want to deepen their skills within the Microsoft Azure ecosystem. The course goes beyond the basics and focuses on advanced concepts and practices for implementing and managing complex DevOps workflows with Azure tools.

Key Learning Objectives:

Advanced Pipelines for CI/CD: Learn how to build highly scalable, reliable CI/CD pipelines with Azure tools like Azure Pipelines, Azure Artifacts, and Azure Key Vault. Learn about advanced branching, release gates, and deployment strategies across different environments.

Infrastructure as Code (IaC): Master the use of infrastructure-as-code tools like Azure Resource Manager (ARM) templates and Terraform to automate the provisioning and management of Azure resources. This includes best practices for versioning, testing and deploying infrastructure configurations.

Containerization: Learn about containerization with Docker and container orchestration with Kubernetes. Learn how to create, deploy, and manage containerized apps on Azure Kubernetes Service (AKS). Explore concepts such as service meshes and ingress controllers.

Security and Compliance: Understand security best practices across the DevOps lifecycle. Learn how to implement security controls, including code scanning, vulnerability assessment, and secret management, at different stages of the pipeline. Learn how to support compliance frameworks such as ISO 27001 or SOC 2 using Azure DevOps.

Monitoring & Logging: Acquire expertise in monitoring application performance and health. Azure Monitor, Application Insights and other tools can be used to collect, analyse and visualize telemetry. Implement alerting mechanisms to troubleshoot problems proactively.

Advanced Debugging and Troubleshooting: Develop advanced skills in troubleshooting to diagnose and solve complex issues with Azure DevOps deployments and pipelines. Learn how to debug code and analyze logs to identify and solve problems.

Who should attend:

DevOps Engineers

System Administrators

Software Developers

Cloud Architects

IT Professionals who want to improve their DevOps skills on the Azure platform

Benefits of taking the course:

Learn advanced DevOps concepts, best practices and more.

Learn how to implement and manage complex DevOps Pipelines.

Use Azure tools to automate your infrastructure and applications.

Learn how to integrate security, compliance and monitoring into the DevOps Lifecycle.

Get a competitive advantage in the job market by acquiring advanced Azure DevOps knowledge.

The Azure DevOps Advanced Course is a comprehensive, practical learning experience that will equip you with the knowledge and skills to excel in today’s dynamic cloud computing environment.


Master Kubernetes Basics: The Ultimate Beginner’s Tutorial

Kubernetes has become a buzzword in the world of containerized applications. But what exactly is Kubernetes, and how can beginners start using it? In simple terms, Kubernetes is a powerful open-source platform designed to manage and scale containerized applications effortlessly.

Why Learn Kubernetes? As businesses shift towards modern software development practices, Kubernetes simplifies the deployment, scaling, and management of applications. It ensures your apps run smoothly across multiple environments, whether in the cloud or on-premises.

How Does Kubernetes Work? Kubernetes runs applications as containers and groups those containers into Pods. A Pod is the smallest deployable unit in Kubernetes and holds one or more containers that work together. Kubernetes automates tasks like load balancing, scaling up or down based on traffic, and keeping applications available even during failures.

Getting Started with Kubernetes

Understand the Basics: Learn about containers (like Docker), clusters, and nodes. These are the building blocks of Kubernetes.

Set Up a Kubernetes Environment: Use Minikube on your own machine, or a managed Kubernetes service from a cloud provider like AWS or Google Cloud, for practice.

Explore Key Concepts: Focus on terms like Pods, Deployments, Services, and ConfigMaps.

Experiment and Learn: Deploy sample applications to understand how Kubernetes works in action.
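
As a first experiment, it can help to see what a Deployment actually looks like. The sketch below builds a minimal Deployment manifest as a Python dictionary and prints it as JSON. The helper name, the app name "web", and the nginx image tag are illustrative assumptions; the field names follow the apps/v1 Deployment schema.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3, port: int = 80) -> dict:
    """Build a minimal apps/v1 Deployment that runs `replicas` Pods of one container."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the Pod template labels below.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Example values only; swap in your own app name and image.
    print(json.dumps(deployment_manifest("web", "nginx:1.27"), indent=2))
```

Saving the printed JSON to a file such as deployment.json and running kubectl apply -f deployment.json against a practice cluster creates the Deployment, and Kubernetes then keeps three Pods running.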

Kubernetes might seem complex initially, but with consistent practice, you'll master it. Ready to dive deeper into Kubernetes? Check out this detailed guide in the Kubernetes Tutorial.
