#Director General Adjunct


Impressions - Part 02

pairing: tashi duncan x bipoc! fem! reader

word count: 2.3k words

context: 2019. los angeles. tashi duncan has found her perfect actor after a vigorous round of auditions. but did the actor stumble upon the audition by chance? or was it premeditated?

no specific pronouns used. reader is able bodied and can speak. reader is about 25, while tashi is 31/32.

based on this post. check out part 01.

sorry for taking so long. grad school is really kicking my butt right now.

She doesn’t seem impressed.

The way she turns the pages of the stapled papers, her nails glimmering in the light. There’s a hint of glitter and they have a cream to pink ombre. They look really nice. And it was clear she had just gotten them done. The clear gloss made her lips look soft and shiny.

Your heart is pounding. You don’t know why. Tashi Duncan asked you for criticism of her work. Were you perhaps not harsh enough? It was hard to tell. The script was just…well, you wanted to keep reading. You had to read it a fourth time to actually start annotating and adding your notes. It was also hard to criticize her vision without any sort of visual. Film was a visual medium after all. It was hard to see what she meant when you were reading.

“Did you hold back?”

You pick up the glass of sangria and take a small sip. “Well…”

Tashi looks at you expectantly. “I thought you’d be harsher.”

“It’s hard to judge entirely. Because part of film critique is…to see the film…”

Her other hand plays with the fork, before stabbing a few leaves and tomatoes of her salad. “So…essentially, you can’t fully critique it without seeing the actual film.”

“A script is only part of it. I just think it’d be nice to have some sort of visual.” Your plate was already clean from your appetizer. It felt odd to be treated to a full course meal by Tashi. But she said you wouldn’t need to pay. Which was generous considering how expensive the restaurant was and being an adjunct didn’t pay as much as you wanted it to. Plus rent was due soon.

“That’s fair. I have a specific vision I want to achieve.” She closes the script and her finger runs over the colored tabs. She liked that the cover page had a key for the colors—by highlighter and by tab. “You seem well aware of that.”

“I’ve watched…most of your stuff. All your films. Majority of the television episodes you’ve directed. And I’ve watched a lot of behind the scenes interviews.” You feel your cheeks heat up. Honestly, you sounded like a bit of a fan.

There’s a smile creeping up on Tashi’s face. “It’s surprisingly rare to find people that have watched your work and…understand your process.” She says. “It takes a certain amount of trust and popularity to be given full control.”

“I’m pretty sure you’ve proven yourself already. Your last film was amazing. 5 stars on Letterboxd.” You hold your glass, tipping it towards the director.

Tashi picks up her cocktail and gently taps the glass against your own. “You and the other hundreds of thousands of people.”

“Where are we?”

Tashi puts the car in park and turns off the ignition with the touch of a button. There’s a click and the rapid retraction of her seatbelt. “My house.” The sound of the door opening is crisp. Or maybe it’s because the sangria made things sound sharper than they should.

It was actually smaller than you thought. But certainly a lot of space for a person living alone. “How many bedrooms?” You unbuckle your seatbelt and climb out of the car. The air feels refreshing against the hot skin of your face. You could feel the vessels throbbing beneath from the body’s processing of the ingested alcohol. You make sure to close the door all the way and follow after her.

Her keys have a keychain attached to them: a Sonny Angel with a frog hat. And he’s wearing a green shirt and some jeans. “Three beds, one full bath, one half bath.” She says. “It’s expensive, but I can afford it. And one of the bedrooms is…well, you’ll see.” When she looks back at you, it’s teasing. The corner of her mouth is curled into one of her charming smirks. The kind that also became a popular meme to use online. “The other is a guest bedroom. Because you never know when someone’s going to stay the night.”

“So…does that mean your parents drop in often?”

“Yes.” The door clicks and she pushes the door open. “Hi~” Her voice is suddenly a pitch higher.

When you step into the house and close the door behind you, you see why. A gray tabby cat nuzzles up against Tashi’s leg, mewling. It suddenly jumps, trying to climb up her pants. You remove your shoes, setting them to the side so they aren't in the way of the door. And you make sure to lock the front door. “Who’s this?” You ask.

“I named her K.C.” Tashi gently pries the cat off of her pants and holds her.

“After your character on that spy sitcom?”

“Yes. Precisely.” Her nails scratch K.C.’s chin and there’s a purr in response. “She’s a little troublemaker. But she followed me home one day after I went out to eat. No one came to claim her, so now she’s my cat.”

You take a few steps closer to her and put your finger out. K.C. sniffs the offered finger and nuzzles her nose against it. “How old is she?”

“Around six months. She followed me home when she was only eight weeks old.” Tashi bends down to set the cat down. You follow the director into the kitchen, taking in the decorations after your eyes adjust to the sudden turning on of the soft lights. You’re not surprised to find plenty of movie posters on the wall, including one of Amélie and Tampopo. Which was smart. Putting the movie about food in the kitchen certainly made the hunger return.

Tashi quickly fills her bowl with some kibble, wet food, and a little bit of bone broth. She sets it down and K.C. immediately begins to eat. “Kittens. They always eat like they were never fed.” You joke.

“There was a time she literally ate my toast.” Tashi slowly plucks the rings off her fingers and washes her hands. They move so delicately. Covered in a thick layer of suds. Her scrubbing beneath her fingernails. The water washes away the soap and she turns off the faucet, drying her hands. The towel gets between her fingers. Her fingers. Her long fingers. She slides the rings back on. “She jumped up and just took my toast out of my fingers. And it had grape jelly on it—”

“Wait. You eat grape jelly?” You knew no one that actually liked grape jelly. Aside from your grandfather and younger brother.

Tashi rolls her eyes. “I prefer raspberry. But a friend got me an artisanal grape jelly when he visited the farmer’s market. Said it’d be good to try it. And it was good. I just prefer raspberry. The tartness balances better with the sugar.” She begins walking and when she looks back at you, you know what she’s saying.

Follow me.

Your feet carry you and you can faintly smell the lingering notes of her perfume. Tashi turns the hallway light on and then opens a door off to the side. She flicks the light switch on and the room is filled with a warm light. You stand in the door while she goes over to the desk and leans against it, arms crossed over her chest.

You’re taken in by the boxes in the corner, stacked. There’s an easel by the window. Multiple sheets of paper were taped onto the wall. There’s a board with more sheets of paper pinned to it. It definitely feels like an artist’s studio, a stark contrast to the reality of Tashi Duncan as a filmmaker.

“So you’re artsy?” You ask.

“You could say that.” She cocks her head to the side. “You can come in, you know.”

“Yeah…I’m afraid I might set this place on fire.” A nervous chuckle escapes you. It’s utterly gorgeous. And some of the pieces on the wall take your breath away. Gorgeous. Vibrant. Full of color and with gorgeous shading. There are some photographs taped around the room too. Mostly landscapes and settings. One collection is just a room at different angles.

“You won’t. Just come take a look. These are my storyboards.”

“...Huh!”

Your jaw practically dropped.

These were Tashi Duncan’s storyboards?

This was on a similar level to Ridley Scott. That was kind of mindblowing. “Y-Your storyboards?”

“I just have a really tedious process.” Tashi uncrosses her arms and rests them between her thighs. “It’s a little…frustrating. But it really helps get the images out of my head and onto something tangible. And if it doesn’t look like what I actually want it to, then I am still satisfied anyways because my vision was fulfilled.”

Your step is gentle and you walk over to the board first. This was clearly the storyboard with guidelines and vague shapes to indicate lighting and shadows. It was clear to see that Tashi’s strong suit was perspective. Your eyes slowly move to the big paper taped to the wall. A woman looking up. The light is shining down while the background is bathed in a dark blue light. Blood covers her mouth and drips down her chin and neck. The neckline of her dress is red, soaked from blood. And…

“She kind of looks like me.”

Tashi purses her lips. “Yeah.” She lets out a small laugh. “It just came to me in a dream.”

You look back at her, smiling. “It’s funny how dreams work, huh? The kind of people our subconscious recognizes and puts together. Which reminds me. I think you should maybe lean more into psychoanalysis for your movie. I know the idea of id, ego, and superego is overdone and may be boring…but I think there would be something interesting in presenting your three primary characters in that way. It never gets old. And honestly, psychoanalytical readings are never not trendy.”

“That’s actually an amazing suggestion.” Tashi licks her lips. You fail to notice her eyes trailing down your back.

“I’m happy you think so. I think a lot of film scholars would just go crazy over it.” You look at her. “Also, where’s the bathroom?”

“Down the hall to your right. It has a peacock on the door.”

“Got it. I’ll be back. I just had a lot of sangria.”

Tashi watches you leave. And she turns back to her desk, collecting the photos together and putting them in a neat pile. Pictures of you. Some of them were stills. Some your headshots. Others from your Instagram account. She opens the drawer and lifts up a manila folder and sketchbook, shoving the photos beneath. The drawer slams shut and she opens another drawer off the side, pulling out some more books.

She hears the sound of the toilet flushing and then the running water of the sink. You come back within three minutes, hands dried and rubbing lotion into your skin. “Where’d you get the lotion in the bathroom?”

“Costco.”

“Damn. That’s hot.”

You realize what you just said.

“I-I mean…it’s hot that you have a Costco membership!”

Tashi can’t help but laugh. “I would say the same to someone. Do you want something to drink? Some tea? Or maybe some water?”

“I think water would be good.”

“Be right back.” When Tashi leaves the room, her clothes brush against you. You feel the goosebumps forming over your arm. And there’s her perfume. It was addictive.

You decide to walk around the room, taking in the storyboards more. You don’t dare touch the boxes, despite the urge to look. There’s something else that satiates your curiosity: the books on the desk. You pick one up and carefully open it to a random page. It’s some sketches. You recognize one of the sketches as actor and producer Art Donaldson. You forgot that he was in Tashi’s second film, on top of producing it.

“Like them?”

You nearly jump, slamming the book closed. Tashi walks over and sets a mug of water on the desk. She hands you the other one and you take it. There are flowers on it. “Sorry. I was just looking—”

“It’s fine. You’re already in here. You might as well look.” Tashi shrugs.

“You’re like…amazing!”

“It took a lot of practice.” Tashi grabs the more run down book and flips it open. You purse your lips to stifle a laugh. “It’s okay, you know. We all start somewhere. Besides, Rian Johnson’s storyboards look the same. And this was my first time directing.”

Tashi Duncan’s directorial debut. Inside Audrey Horne.

“You’re right. I mean if it gets the job done…what’s the point in arguing?” You take a sip of the cold water. “So you practiced and now…you just do full on art pieces?”

“I like experimenting with color.” She shrugs. “And naturally if I am taking inspiration from Dario Argento and technicolor, then it’s best to figure out what colors mesh well.”

“So what do you use?”

“Pastels. I like my drawings to look smooth.”

“You do have a way with color.” Your eyes keep going back to the big drawing on the wall, of your lookalike staring up at something in both awe and horror. “I’m guessing that’s the scene of when I cannibalize my former castmate?”

“It is. I have a specific idea of what that shot would look like.” Tashi takes a sip, her brown eyes watching your body language. You’re at ease. You’re relaxed. You’re in the mood for chatter and to hear more, like the film nerd that you were. “So…do you have anything else you want to add?”

“I mean…your script is solid. And seeing what you intend to make just…it’s awesome to see what your vision is.”

Even though Tashi said she didn’t want a yes man, she still liked getting praise. It was necessary to know what she was doing right and how to keep it right. But hearing it from you was different. It was more special. So she decides to prompt you.

“Tell me what’s on your mind.”

#challengers#challengers 2024#challengers fanfiction#challengers x reader#challengers au#ghostface au#tashi duncan#tashi duncan x reader#tashi duncan x you#tashi duncan x y/n#x reader#female reader


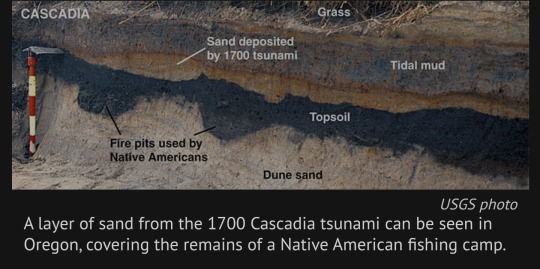

Of particular concern are signals of massive earthquakes in the region’s geologic history. Many researchers have chased clues of the last “big one”: an 8.7-magnitude earthquake in 1700. They’ve pieced together the event’s history using centuries-old records of tsunamis, Native American oral histories, physical evidence in ghost forests drowned by saltwater and limited maps of the fault.

But no one had mapped the fault structure comprehensively — until now. A study published Friday in the journal Science Advances describes data gathered during a 41-day research voyage in which a vessel trailed a miles-long cable along the fault to listen to the seafloor and piece together an image.

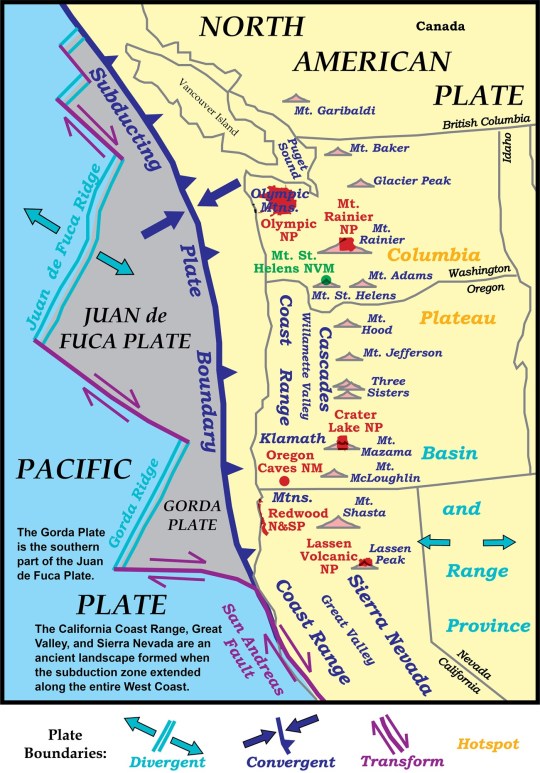

The team completed a detailed map of more than 550 miles of the subduction zone, down to the Oregon-California border.

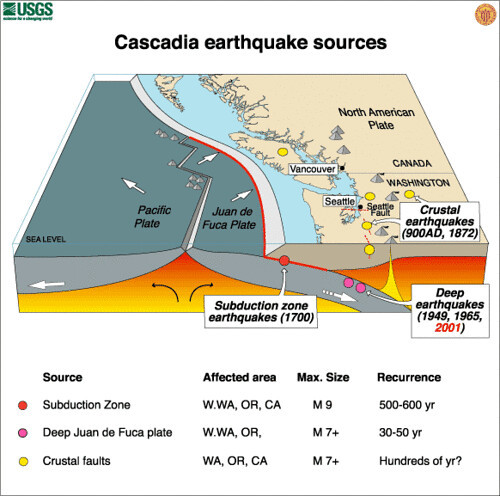

Their work will give modelers a sharper view of the possible impacts of a megathrust earthquake there — the term for a quake that occurs in a subduction zone, where one tectonic plate is thrust under another. It will also provide planners a closer, localized look at risks to communities along the Pacific Northwest coast and could help redefine earthquake building standards.

“It’s like having coke-bottle glasses on and then you remove the glasses and you have the right prescription,” said Suzanne Carbotte, a lead author of the paper and a marine geophysicist and research professor at Columbia University’s Lamont-Doherty Earth Observatory. “We had a very fuzzy low-resolution view before.”

The scientists found that the subduction zone is much more complex than they previously understood: It is divided into four segments that the researchers believe could rupture independently of one another or together all at once. The segments have different types of rock and varying seismic characteristics — meaning some could be more dangerous than others.

Earthquake and tsunami modelers are beginning to assess how the new data affects earthquake scenarios for the Pacific Northwest.

Kelin Wang, a research scientist at the Geological Survey of Canada who was not involved in the study, said his team, which focuses on earthquake hazard and tsunami risk, is already using the data to inform projections.

“The accuracy and this resolution is truly unprecedented. And it’s an amazing data set,” said Wang, who is also an adjunct professor at the University of Victoria in British Columbia. “It just allows us to do a better job to assess the risk and have information for the building codes and zoning.”

Harold Tobin, a co-author of the paper and the director of the Pacific Northwest Seismic Network, said that although the data will help fine-tune projections, it doesn’t change a tough-to-swallow reality of living in the Pacific Northwest.

“We have the potential for earthquakes and tsunamis as large as the biggest ones we’ve experienced on the planet,” said Tobin, who is also a University of Washington professor. “Cascadia seems capable of generating a magnitude 9 or a little smaller or a little bigger.”

A quake that powerful could cause shaking that lasts about five minutes and generate tsunami waves up to 80 feet tall. It would damage well over half a million buildings, according to emergency planning documents.

Neither Oregon nor Washington is sufficiently prepared.

To map the subduction zone, researchers at sea performed active source seismic imaging, a technique that sends sound to the ocean floor and then processes the echoes that return. The method is often used for oil and gas exploration.

They towed a 9-plus-mile-long cable, called a streamer, behind the boat, which used 1,200 hydrophones to capture returning echoes.

“That gives us a picture of what the subsurface looks like,” Carbotte said.
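The echo principle behind this kind of imaging can be illustrated with a little arithmetic: a sound pulse travels down to a reflecting surface and back, so the depth is half the round-trip time multiplied by the speed of sound. The snippet below is only a minimal sketch of that idea, not the survey's actual processing. The function name is hypothetical, and the sound speed of roughly 1,500 meters per second in seawater is an assumed typical value that does not come from the article.

```python
# Assumed typical speed of sound in seawater (not a figure from the article).
SOUND_SPEED_SEAWATER_M_S = 1500.0


def reflector_depth_m(two_way_time_s: float,
                      speed_m_s: float = SOUND_SPEED_SEAWATER_M_S) -> float:
    """Estimate the depth of a reflector from an echo's round-trip time.

    The pulse travels down and back, so the one-way distance is half of
    speed * time. Real surveys must also account for sound speed changing
    with depth and rock type; this sketch ignores that.
    """
    if two_way_time_s < 0:
        raise ValueError("travel time cannot be negative")
    return speed_m_s * two_way_time_s / 2.0


if __name__ == "__main__":
    # An echo that returns after 4 seconds, under the assumed speed.
    print(reflector_depth_m(4.0))
```

With many hydrophones spaced along the streamer, each one records echoes at slightly different times, and combining those records is what turns single depth estimates into an image.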

Trained marine mammal observers alerted the crew to any sign of whales or other animals; the sound generated with this kind of technology can be disruptive and harm marine creatures. Carbotte said the new research makes it more clear that the entire Cascadia fault might not rupture at once.

“It requires an 8.7 to get a tsunami all the way to Japan,” Tobin said.

“The next earthquake that happens at Cascadia could be rupturing just one of these segments or it could be rupturing the whole margin,” Carbotte said, adding that several individual segments are thought to be capable of producing at least magnitude-8 earthquakes.

Over the past century, scientists have only observed five magnitude-9.0 or higher earthquakes — all megathrust temblors like the one predicted for the Cascadia Subduction Zone.
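To put the gap between a magnitude-8 segment rupture and a magnitude-9 full-margin rupture in perspective, the standard rule of thumb is that radiated energy grows by a factor of 10 raised to 1.5 times the magnitude difference. The sketch below applies that general relation; the function name is hypothetical, and the calculation is a textbook approximation, not a result from the study described here.

```python
def energy_ratio(magnitude_a: float, magnitude_b: float) -> float:
    """Approximate ratio of radiated energy between two earthquakes.

    Uses the common rule of thumb that energy scales as 10 ** (1.5 * M),
    so each whole step in magnitude is about a 32-fold jump in energy.
    """
    return 10 ** (1.5 * (magnitude_a - magnitude_b))


if __name__ == "__main__":
    # Compare a magnitude-9 quake with a magnitude-8 quake.
    print(round(energy_ratio(9.0, 8.0), 1))
```

Under this approximation, a full-margin magnitude-9 event would release roughly 30 times the energy of a single magnitude-8 segment rupture.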

Scientists pieced together an understanding of the last such Cascadia quake, in 1700, in part via Japanese records of an unusual orphan tsunami that was not preceded by shaking there.

The people who recorded the incident in Japan couldn’t have known that the ground had shaken an ocean away, in the present-day United States.

Today, the Cascadia Subduction Zone remains eerily quiet. In other subduction zones, scientists often observe small earthquakes frequently, which makes the area easier to map, according to Carbotte. That’s not the case here.

Scientists have a handful of theories about why: Wang said the zone may be becoming quieter as the fault accumulates stress. And now, we’re probably nearing due.

“The recurrent interval for this subduction zone for big events is on the order of 500 years,” Wang said. “It’s hard to know exactly when it will happen, but certainly if you compare this to other subduction zones, it is quite late.”

#science#geology#earthquakes#tsunami#natural disasters#Washington#Oregon#California#cascadia subduction zone#environment#PlanetFkd


By: Edward Schlosser

Published: Jun 3, 2015

I’m a professor at a midsize state school. I have been teaching college classes for nine years now. I have won (minor) teaching awards, studied pedagogy extensively, and almost always score highly on my student evaluations. I am not a world-class teacher by any means, but I am conscientious; I attempt to put teaching ahead of research, and I take a healthy emotional stake in the well-being and growth of my students.

Things have changed since I started teaching. The vibe is different. I wish there were a less blunt way to put this, but my students sometimes scare me — particularly the liberal ones.

Not, like, in a person-by-person sense, but students in general. The student-teacher dynamic has been reenvisioned along a line that’s simultaneously consumerist and hyper-protective, giving each and every student the ability to claim Grievous Harm in nearly any circumstance, after any affront, and a teacher’s formal ability to respond to these claims is limited at best.

What it was like before

In early 2009, I was an adjunct, teaching a freshman-level writing course at a community college. Discussing infographics and data visualization, we watched a flash animation describing how Wall Street’s recklessness had destroyed the economy.

The video stopped, and I asked whether the students thought it was effective. An older student raised his hand.

“What about Fannie and Freddie?” he asked. “Government kept giving homes to black people, to help out black people, white people didn’t get anything, and then they couldn’t pay for them. What about that?”

I gave a quick response about how most experts would disagree with that assumption, that it was actually an oversimplification, and pretty dishonest, and isn’t it good that someone made the video we just watched to try to clear things up? And, hey, let’s talk about whether that was effective, okay? If you don’t think it was, how could it have been?

The rest of the discussion went on as usual.

The next week, I got called into my director’s office. I was shown an email, sender name redacted, alleging that I “possessed communistical [sic] sympathies and refused to tell more than one side of the story.” The story in question wasn’t described, but I suspect it had to do with whether or not the economic collapse was caused by poor black people.

My director rolled her eyes. She knew the complaint was silly bullshit. I wrote up a short description of the past week’s class work, noting that we had looked at several examples of effective writing in various media and that I always made a good faith effort to include conservative narratives along with the liberal ones.

Along with a carbon-copy form, my description was placed into a file that may or may not have existed. Then ... nothing. It disappeared forever; no one cared about it beyond their contractual duties to document student concerns. I never heard another word of it again.

That was the first, and so far only, formal complaint a student has ever filed against me.

Now boat-rocking isn’t just dangerous — it’s suicidal

This isn’t an accident: I have intentionally adjusted my teaching materials as the political winds have shifted. (I also make sure all my remotely offensive or challenging opinions, such as this article, are expressed either anonymously or pseudonymously). Most of my colleagues who still have jobs have done the same. We’ve seen bad things happen to too many good teachers — adjuncts getting axed because their evaluations dipped below a 3.0, grad students being removed from classes after a single student complaint, and so on.

I once saw an adjunct not get his contract renewed after students complained that he exposed them to “offensive” texts written by Edward Said and Mark Twain. His response, that the texts were meant to be a little upsetting, only fueled the students’ ire and sealed his fate. That was enough to get me to comb through my syllabi and cut out anything I could see upsetting a coddled undergrad, texts ranging from Upton Sinclair to Maureen Tkacik — and I wasn’t the only one who made adjustments, either.

I am frightened sometimes by the thought that a student would complain again like he did in 2009. Only this time it would be a student accusing me not of saying something too ideologically extreme — be it communism or racism or whatever — but of not being sensitive enough toward his feelings, of some simple act of indelicacy that’s considered tantamount to physical assault. As Northwestern University professor Laura Kipnis writes, “Emotional discomfort is [now] regarded as equivalent to material injury, and all injuries have to be remediated.” Hurting a student’s feelings, even in the course of instruction that is absolutely appropriate and respectful, can now get a teacher into serious trouble.

In 2009, the subject of my student’s complaint was my supposed ideology. I was communistical, the student felt, and everyone knows that communisticism is wrong. That was, at best, a debatable assertion. And as I was allowed to rebut it, the complaint was dismissed with prejudice. I didn’t hesitate to reuse that same video in later semesters, and the student’s complaint had no impact on my performance evaluations.

In 2015, such a complaint would not be delivered in such a fashion. Instead of focusing on the rightness or wrongness (or even acceptability) of the materials we reviewed in class, the complaint would center solely on how my teaching affected the student’s emotional state. As I cannot speak to the emotions of my students, I could not mount a defense about the acceptability of my instruction. And if I responded in any way other than apologizing and changing the materials we reviewed in class, professional consequences would likely follow.

I wrote about this fear on my blog, and while the response was mostly positive, some liberals called me paranoid, or expressed doubt about why any teacher would nix the particular texts I listed. I guarantee you that these people do not work in higher education, or if they do they are at least two decades removed from the job search. The academic job market is brutal. Teachers who are not tenured or tenure-track faculty members have no right to due process before being dismissed, and there’s a mile-long line of applicants eager to take their place. And as writer and academic Freddie DeBoer writes, they don’t even have to be formally fired — they can just not get rehired. In this type of environment, boat-rocking isn’t just dangerous, it’s suicidal, and so teachers limit their lessons to things they know won’t upset anybody.

The real problem: a simplistic, unworkable, and ultimately stifling conception of social justice

This shift in student-teacher dynamic placed many of the traditional goals of higher education — such as having students challenge their beliefs — off limits. While I used to pride myself on getting students to question themselves and engage with difficult concepts and texts, I now hesitate. What if this hurts my evaluations and I don’t get tenure? How many complaints will it take before chairs and administrators begin to worry that I’m not giving our customers — er, students, pardon me — the positive experience they’re paying for? Ten? Half a dozen? Two or three?

This phenomenon has been widely discussed as of late, mostly as a means of deriding political, economic, or cultural forces writers don’t much care for. Commentators on the left and right have recently criticized the sensitivity and paranoia of today’s college students. They worry about the stifling of free speech, the implementation of unenforceable conduct codes, and a general hostility against opinions and viewpoints that could cause students so much as a hint of discomfort.

I agree with some of these analyses more than others, but they all tend to be too simplistic. The current student-teacher dynamic has been shaped by a large confluence of factors, and perhaps the most important of these is the manner in which cultural studies and social justice writers have comported themselves in popular media. I have a great deal of respect for both of these fields, but their manifestations online, their desire to democratize complex fields of study by making them as digestible as a TGIF sitcom, has led to adoption of a totalizing, simplistic, unworkable, and ultimately stifling conception of social justice. The simplicity and absolutism of this conception has combined with the precarity of academic jobs to create higher ed’s current climate of fear, a heavily policed discourse of semantic sensitivity in which safety and comfort have become the ends and the means of the college experience.

This new understanding of social justice politics resembles what University of Pennsylvania political science professor Adolph Reed Jr. calls a politics of personal testimony, in which the feelings of individuals are the primary or even exclusive means through which social issues are understood and discussed. Reed derides this sort of political approach as essentially being a non-politics, a discourse that “is focused much more on taxonomy than politics [which] emphasizes the names by which we should call some strains of inequality [ ... ] over specifying the mechanisms that produce them or even the steps that can be taken to combat them.” Under such a conception, people become more concerned with signaling goodness, usually through semantics and empty gestures, than with actually working to effect change.

Herein lies the folly of oversimplified identity politics: while identity concerns obviously warrant analysis, focusing on them too exclusively draws our attention so far inward that none of our analyses can lead to action. Rebecca Reilly-Cooper, a political philosopher at the University of Warwick, worries about the effectiveness of a politics in which “particular experiences can never legitimately speak for any one other than ourselves, and personal narrative and testimony are elevated to such a degree that there can be no objective standpoint from which to examine their veracity.” Personal experience and feelings aren’t just a salient touchstone of contemporary identity politics; they are the entirety of these politics. In such an environment, it’s no wonder that students are so prone to elevate minor slights to protestable offenses.

(It’s also why seemingly piddling matters of cultural consumption warrant much more emotional outrage than concerns with larger material implications. Compare the number of web articles surrounding the supposed problematic aspects of the newest Avengers movie with those complaining about, say, the piecemeal dismantling of abortion rights. The former outnumber the latter considerably, and their rhetoric is typically much more impassioned and inflated. I’d discuss this in my classes — if I weren’t too scared to talk about abortion.)

The press for actionability, or even for comprehensive analyses that go beyond personal testimony, is hereby considered redundant, since all we need to do to fix the world’s problems is adjust the feelings attached to them and open up the floor for various identity groups to have their say. All the old, enlightened means of discussion and analysis —from due process to scientific method — are dismissed as being blind to emotional concerns and therefore unfairly skewed toward the interest of straight white males. All that matters is that people are allowed to speak, that their narratives are accepted without question, and that the bad feelings go away.

So it’s not just that students refuse to countenance uncomfortable ideas — they refuse to engage them, period. Engagement is considered unnecessary, as the immediate, emotional reactions of students contain all the analysis and judgment that sensitive issues demand. As Judith Shulevitz wrote in the New York Times, these refusals can shut down discussion in genuinely contentious areas, such as when Oxford canceled an abortion debate. More often, they affect surprisingly minor matters, as when Hampshire College disinvited an Afrobeat band because their lineup had too many white people in it.

When feelings become more important than issues

At the very least, there’s debate to be had in these areas. Ideally, pro-choice students would be comfortable enough in the strength of their arguments to subject them to discussion, and a conversation about a band’s supposed cultural appropriation could take place alongside a performance. But these cancellations and disinvitations are framed in terms of feelings, not issues. The abortion debate was canceled because it would have imperiled the “welfare and safety of our students.” The Afrofunk band’s presence would not have been “safe and healthy.” No one can rebut feelings, and so the only thing left to do is shut down the things that cause distress — no argument, no discussion, just hit the mute button and pretend eliminating discomfort is the same as effecting actual change.

In a New York Magazine piece, Jonathan Chait described the chilling effect this type of discourse has upon classrooms. Chait’s piece generated seismic backlash, and while I disagree with much of his diagnosis, I have to admit he does a decent job of describing the symptoms. He cites an anonymous professor who says that “she and her fellow faculty members are terrified of facing accusations of triggering trauma.” Internet liberals pooh-poohed this comment, likening the professor to one of Tom Friedman’s imaginary cab drivers. But I’ve seen what’s being described here. I’ve lived it. It’s real, and it affects liberal, socially conscious teachers much more than conservative ones.

If we wish to remove this fear, and to adopt a politics that can lead to more substantial change, we need to adjust our discourse. Ideally, we can have a conversation that is conscious of the role of identity issues and confident of the ideas that emanate from the people who embody those identities. It would call out and criticize unfair, arbitrary, or otherwise stifling discursive boundaries, but avoid falling into pettiness or nihilism. It wouldn’t be moderate, necessarily, but it would be deliberate. It would require effort.

At the start of his piece, Chait hypothetically asks if “the offensiveness of an idea [can] be determined objectively, or only by recourse to the identity of the person taking offense.” Here, he’s getting at the concerns addressed by Reed and Reilly-Cooper, the worry that we’ve turned our analysis so completely inward that our judgment of a person’s speech hinges more upon their identity signifiers than on their ideas.

A sensible response to Chait’s question would be that this is a false binary, and that ideas can and should be judged both by the strength of their logic and by the cultural weight afforded to their speaker’s identity. Chait appears to believe only the former, and that’s kind of ridiculous. Of course someone’s social standing affects whether their ideas are considered offensive, or righteous, or even worth listening to. How can you think otherwise?

We destroy ourselves when identity becomes our sole focus

Feminists and anti-racists recognize that identity does matter. This is indisputable. If we subscribe to the belief that ideas can be judged within a vacuum, uninfluenced by the social weight of their proponents, we perpetuate a system in which arbitrary markers like race and gender influence the perceived correctness of ideas. We can’t overcome prejudice by pretending it doesn’t exist. Focusing on identity allows us to interrogate the process through which white males have their opinions taken at face value, while women, people of color, and non-normatively gendered people struggle to have their voices heard.

But we also destroy ourselves when identity becomes our sole focus. Consider a tweet I linked to (which has since been removed; see editor’s note below) from a critic and artist, in which she writes: “When ppl go off on evo psych, its always some shady colonizer white man theory that ignores nonwhite human history. but ‘science’. Ok ... Most ‘scientific thought’ as u know it isnt that scientific but shaped by white patriarchal bias of ppl who claimed authority on it.”

This critic is intelligent. Her voice is important. She realizes, correctly, that evolutionary psychology is flawed, and that science has often been misused to legitimize racist and sexist beliefs. But why draw that out to questioning most “scientific thought”? Can’t we see how distancing that is to people who don’t already agree with us? And tactically, can’t we see how shortsighted it is to be skeptical of a respected manner of inquiry just because it’s associated with white males?

This sort of perspective is not confined to Twitter and the comments sections of liberal blogs. It was born in the more nihilistic corners of academic theory, and its manifestations on social media have severe real-world implications. In another instance, two female professors of library science publicly outed and shamed a male colleague they accused of being creepy at conferences, going so far as to openly celebrate the prospect of ruining his career. I don’t doubt that some men are creepy at conferences — they are. And for all I know, this guy might be an A-level creep. But part of the female professors’ shtick was the strong insistence that harassment victims should never be asked for proof, that an enunciation of an accusation is all it should ever take to secure a guilty verdict. The identity of the victims overrides the identity of the harasser, and that’s all the proof they need.

This is terrifying. No one will ever accept that. And if that becomes a salient part of liberal politics, liberals are going to suffer tremendous electoral defeat.

Debate and discussion would ideally temper this identity-based discourse, make it more usable and less scary to outsiders. Teachers and academics are the best candidates to foster this discussion, but most of us are too scared and economically disempowered to say anything. Right now, there’s nothing much to do other than sit on our hands and wait for the ascension of conservative political backlash — hop into the echo chamber, pile invective upon the next person or company who says something vaguely insensitive, insulate ourselves further and further from any concerns that might resonate outside of our own little corner of Twitter.

--

==

This has been going on for over a decade. The correct response is to mock and laugh at the people complaining, and point out that they're not ready for the big wide world outside their kindergarten mindset, so they'd be better off going back home to mommy and daddy. Not validate and endorse their feelings. We need to get back to that.

#Edward Schlosser#trigger warnings#hypersensitivity#Christina Hoff Sommers#safe space#academic corruption#higher education#religion is a mental illness

Text

Biden administration officials attempted Monday to downplay the significance of a newly passed United Nations Security Council resolution, drawing ire from human rights advocates who said the U.S. is undercutting international law and stonewalling attempts to bring Israel's devastating military assault on Gaza to an end.

The resolution "demands an immediate cease-fire for the month of Ramadan respected by all parties, leading to a lasting sustainable cease-fire." The U.S., which previously vetoed several cease-fire resolutions, opted to abstain on Monday, allowing the measure to pass.

Shortly after the resolution's approval, several administration officials—including State Department spokesman Matthew Miller, White House National Security Council spokesman John Kirby, and U.S. Ambassador to the U.N. Linda Thomas-Greenfield—falsely characterized the measure as "nonbinding."

"It's a nonbinding resolution," Kirby told reporters. "So, there's no impact at all on Israel and Israel's ability to continue to go after Hamas."

Josh Ruebner, an adjunct lecturer at Georgetown University and former policy director of the U.S. Campaign for Palestinian Rights, wrote in response that "there is no such thing as a 'nonbinding' Security Council resolution."

"Israel's failure to abide by this resolution must open the door to the immediate imposition of Chapter VII sanctions," Ruebner wrote.

Beatrice Fihn, the director of Lex International and former executive director of the International Campaign to Abolish Nuclear Weapons, condemned what she called the Biden administration's "appalling behavior" in the wake of the resolution's passage. Fihn said the administration's downplaying of the resolution shows how the U.S. works to "openly undermine and sabotage the U.N. Security Council, the 'rules-based order,' and international law."

In a Monday op-ed for Common Dreams, Phyllis Bennis, a senior fellow at the Institute for Policy Studies, warned that administration officials' claim that the resolution was "nonbinding" should be seen as "setting the stage for the U.S. government to violate the U.N. Charter by refusing to be bound by the resolution's terms."

While all U.N. Security Council resolutions are legally binding, they're difficult to enforce and regularly ignored by the Israeli government, which responded with outrage to the latest resolution and canceled an Israeli delegation's planned visit to the U.S.

Israel Katz, Israel's foreign minister, wrote on social media Monday that "Israel will not cease fire."

The resolution passed amid growing global alarm over the humanitarian crisis that Israel has inflicted on the Gaza Strip, where most of the population of around 2.2 million is displaced and at increasingly dire risk of starvation.

Amnesty International secretary-general Agnes Callamard said Monday that it was "just plain irresponsible" of U.S. officials to "suggest that a resolution meant to save lives and address massive devastation and suffering can be disregarded."

Text

MLK Celebration Gala pays tribute to Martin Luther King Jr. and his writings on “the goal of true education”

New Post has been published on https://thedigitalinsider.com/mlk-celebration-gala-pays-tribute-to-martin-luther-king-jr-and-his-writings-on-the-goal-of-true-education/

After a week of festivities around campus, members of the MIT community gathered Saturday evening in the Boston Marriott Kendall Square ballroom to celebrate the life and legacy of Martin Luther King Jr. Marking 50 years of this annual celebration at MIT, the gala event’s program was loosely organized around a line in King’s essay, “The Purpose of Education,” which he penned as an undergraduate at Morehouse College:

“We must remember that intelligence is not enough,” King wrote. “Intelligence plus character — that is the goal of true education.”

Senior Myles Noel was the master of ceremonies for the evening and welcomed one and all. Minister DiOnetta Jones Crayton, former director of the Office of Minority Education and associate dean of minority education, delivered the invocation, exhorting the audience to embrace “the fiery urgency of now.” Next, MIT President Sally Kornbluth shared her remarks.

She acknowledged that at many institutions, diversity and inclusion efforts are eroding. Kornbluth reiterated her commitment to these efforts, saying, “I want to be clear about how important I believe it is to keep such efforts strong — and to make them the best they can be. The truth is, by any measure, MIT has never been more diverse, and it has never been more excellent. And we intend to keep it that way.”

Kornbluth also recognized the late Paul Parravano, co-director of MIT’s Office of Government and Community Relations, who was a staff member at MIT for 33 years as well as the longest-serving member on the MLK Celebration Committee. Parravano’s “long and distinguished devotion to the values and goals of Dr. Martin Luther King, Jr. inspires us all,” Kornbluth said, presenting his family with the 50th Anniversary Lifetime Achievement Award.

Next, students and staff shared personal reflections. Zina Queen, office manager in the Department of Political Science, noted that her family has been a part of the MIT community for generations. Her grandmother, Rita, her mother, Wanda, and her daughter have all worked or are currently working at the Institute. Queen pointed out that her family epitomizes another of King’s oft-repeated quotes, “Every man is an heir to a legacy of dignity and worth.”

Senior Tamea Cobb noted that MIT graduates have a particular power in the world that they must use strategically and with intention. “Education and service go hand and hand,” she said, adding that she intends “every one of my technical abilities will be used to pursue a career that is fulfilling, expansive, impactful, and good.”

Graduate student Austin K. Cole ’24 addressed the Israel-Hamas conflict and the MIT administration. As he spoke, some attendees left their seats to stand with Cole at the podium. Cole closed his remarks with a plea to resist state and structural violence, and instead focus on relationship and mutuality.

After dinner, incoming vice president for equity and inclusion Karl Reid ’84, SM ’85 honored Adjunct Professor Emeritus Clarence Williams for his distinguished service to the Institute. Williams was an assistant to three MIT presidents, served as director of the Office of Minority Education, taught in the Department of Urban Planning, initiated the MIT Black History Project, and mentored hundreds of students. Reid was one of those students, and he shared a few of his mentor’s oft-repeated phrases:

“Do the work and let the talking take care of itself.”

“Bad ideas kill themselves; great ideas flourish.”

In closing, Reid exhorted the audience to create more leaders who, like Williams, embody excellence and mutual respect for others.

The keynote address was given by civil rights activist Janet Moses, a member of the Student Nonviolent Coordinating Committee (SNCC) in the 1960s; a physician who worked for a time as a pediatrician at MIT Health; a longtime resident of Cambridge, Massachusetts; and a co-founder, with her husband, Robert Moses, of the Algebra Project, a pioneering program grounded in the belief “that in the 21st century every child has a civil right to secure math literacy — the ability to read, write, and reason with the symbol systems of mathematics.”

A striking image of a huge new building planned for New York City appeared on the screen behind Moses during her address. It was a rendering of a new jail being built at an estimated cost of $3 billion. Against this background, she described the trajectory of the “carceral state,” which began in 1771 with the Mansfield Judgement in England. At the time, “not even South Africa had a set of race laws as detailed as those in the U.S.,” Moses observed.

Today, the carceral state uses all levels of government to maintain a racial caste system that is deeply entrenched, Moses argued, drawing a connection between the purported need for a new prison complex and a statistic that Black people in New York state are three times more likely than whites to be convicted of a crime.

She referenced a McKinsey study estimating that it will take Black people over three centuries to achieve a quality of life on parity with whites. Despite the enormity of this challenge, Moses encouraged the audience to “rock the boat and churn the waters of the status quo.” She also pointed out that “there is joy in the struggle.”

Symbols of joy were also on display at the Gala in the forms of original visual art and poetry, and a quilt whose squares were contributed by MIT staff, students, and alumni, hailing from across the Institute.

Quilts are a physical manifestation of the legacy of the enslaved in America and their descendants: the ability to take scraps and leftovers to create something both practical and beautiful. The 50th anniversary quilt also incorporated a line from King’s highly influential “I Have a Dream” speech:

“One day, all God’s children will have the riches of freedom and the security of justice.”

#Administration#Africa#America#anniversary#Art#background#billion#Building#career#challenge#Children#civil rights#college#Community#Conflict#crime#Department of Political Science#display#diversity#Diversity and Inclusion#education#equity#Faculty#Featured#Forms#generations#Government#hand#Health#History

Text

How AI Threatens Democracy

Sarah Kreps

Doug Kriner

Issue Date: October 2023

Volume: 34

Issue: 4

Page Numbers: 122–31

The explosive rise of generative AI is already transforming journalism, finance, and medicine, but it could also have a disruptive influence on politics. For example, asking a chatbot how to navigate a complicated bureaucracy or to help draft a letter to an elected official could bolster civic engagement. However, that same technology—with its potential to produce disinformation and misinformation at scale—threatens to interfere with democratic representation, undermine democratic accountability, and corrode social and political trust. This essay analyzes the scope of the threat in each of these spheres and discusses potential guardrails for these misuses, including neural networks used to identify generated content, self-regulation by generative-AI platforms, and greater digital literacy on the part of the public and elites alike.

Just a month after its introduction, ChatGPT, the generative artificial intelligence (AI) chatbot, hit 100-million monthly users, making it the fastest-growing application in history. For context, it took the video-streaming service Netflix, now a household name, three-and-a-half years to reach one-million monthly users. But unlike Netflix’s, the meteoric rise of ChatGPT and its potential for good or ill sparked considerable debate. Would students be able to use, or rather misuse, the tool for research or writing? Would it put journalists and coders out of business? Would it “hijack democracy,” as one New York Times op-ed put it, by enabling mass, phony inputs to perhaps influence democratic representation?1 And most fundamentally (and apocalyptically), could advances in artificial intelligence actually pose an existential threat to humanity?2

About the Authors

Sarah Kreps

Sarah Kreps is the John L. Wetherill Professor in the Department of Government, adjunct professor of law, and the director of the Tech Policy Institute at Cornell University.

Doug Kriner

Doug Kriner is the Clinton Rossiter Professor in American Institutions in the Department of Government at Cornell University.

New technologies raise new questions and concerns of different magnitudes and urgency. For example, the fear that generative AI—artificial intelligence capable of producing new content—poses an existential threat is neither plausibly imminent, nor necessarily plausible. Nick Bostrom’s paperclip scenario, in which a machine programmed to optimize paperclips eliminates everything standing in its way of achieving that goal, is not on the verge of becoming reality.3 Whether children or university students use AI tools as shortcuts is a valuable pedagogical debate, but one that should resolve itself as the applications become more seamlessly integrated into search engines. The employment consequences of generative AI will ultimately be difficult to adjudicate since economies are complex, making it difficult to isolate the net effect of AI-instigated job losses versus industry gains. Yet the potential consequences for democracy are immediate and severe. Generative AI threatens three central pillars of democratic governance: representation, accountability, and, ultimately, the most important currency in a political system—trust.

The most problematic aspect of generative AI is that it hides in plain sight, producing enormous volumes of content that can flood the media landscape, the internet, and political communication with meaningless drivel at best and misinformation at worst. For government officials, this undermines efforts to understand constituent sentiment, threatening the quality of democratic representation. For voters, it threatens efforts to monitor what elected officials do and the results of their actions, eroding democratic accountability. A reasonable cognitive prophylactic measure in such a media environment would be to believe nothing, a nihilism that is at odds with vibrant democracy and corrosive to social trust. As objective reality recedes even further from the media discourse, those voters who do not tune out altogether will likely begin to rely even more heavily on other heuristics, such as partisanship, which will only further exacerbate polarization and stress on democratic institutions.

Threats to Democratic Representation

Democracy, as Robert Dahl wrote in 1972, requires “the continued responsiveness of the government to the preferences of its citizens.”4 For elected officials to be responsive to the preferences of their constituents, however, they must first be able to discern those preferences. Public-opinion polls—which (at least for now) are mostly immune from manipulation by AI-generated content—afford elected officials one window into their constituents’ preferences. But most citizens lack even basic political knowledge, and levels of policy-specific knowledge are likely lower still.5 As such, legislators have strong incentives to be the most responsive to constituents with strongly held views on a specific policy issue and those for whom the issue is highly salient. Written correspondence has long been central to how elected officials keep their finger on the pulse of their districts, particularly to gauge the preferences of those most intensely mobilized on a given issue.6

In an era of generative AI, however, the signals sent by the balance of electronic communications about pressing policy issues may be severely misleading. Technological advances now allow malicious actors to generate false “constituent sentiment” at scale by effortlessly creating unique messages taking positions on any side of a myriad of issues. Even with old technology, legislators struggled to discern between human-written and machine-generated communications.

In a field experiment conducted in 2020 in the United States, we composed advocacy letters on six different issues and then used those letters to train what was then the state-of-the-art generative AI model, GPT-3, to write hundreds of left-wing and right-wing advocacy letters. We sent randomized AI- and human-written letters to 7,200 state legislators, a total of about 35,000 emails. We then compared response rates to the human-written and AI-generated correspondence to assess the extent to which legislators were able to discern (and therefore not respond to) machine-written appeals. On three issues, the response rates to AI- and human-written messages were statistically indistinguishable. On three other issues, the response rates to AI-generated emails were lower—but only by 2 percent, on average.7 This suggests that a malicious actor capable of easily generating thousands of unique communications could potentially skew legislators’ perceptions of which issues are most important to their constituents as well as how constituents feel about any given issue.
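As a rough illustration of the kind of comparison described above, a two-proportion z-test is one common way to check whether two response rates differ by more than chance. The sketch below is not the authors' analysis: the function name `two_proportion_z_test` is an assumption made for illustration, and the counts are hypothetical placeholders rather than figures from the study.

```python
import math

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (z, two-sided p-value) for H0: both groups share one response rate."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled rate under the null hypothesis that the two groups do not differ
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    # Two-sided tail probability of the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical counts for illustration only, NOT data from the experiment
human_replies, human_sent = 300, 2000
ai_replies, ai_sent = 270, 2000

z, p = two_proportion_z_test(human_replies, human_sent, ai_replies, ai_sent)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A large p-value here would correspond to response rates that are "statistically indistinguishable" in the sense used above. The sketch assumes the pooled rate is strictly between 0 and 1; otherwise the standard error is zero and the test is undefined.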

In the same way, generative AI could strike a double blow against the quality of democratic representation by rendering obsolete the public-comment process through which citizens can seek to influence the actions of the regulatory state. Legislators necessarily write statutes in broad brushstrokes, granting administrative agencies considerable discretion not only to resolve technical questions requiring substantive expertise (e.g., specifying permissible levels of pollutants in the air and water), but also to make broader judgements about values (e.g., the acceptable tradeoffs between protecting public health and not unduly restricting economic growth).8 Moreover, in an era of intense partisan polarization and frequent legislative gridlock on pressing policy priorities, U.S. presidents have increasingly sought to advance their policy agendas through administrative rulemaking.

Moving the locus of policymaking authority from elected representatives to unelected bureaucrats raises concerns of a democratic deficit. The U.S. Supreme Court raised such concerns in West Virginia v. EPA (2022), articulating and codifying the major questions doctrine, which holds that agencies do not have authority to effect major changes in policy absent clear statutory authorization from Congress. The Court may go even further in the pending Loper Bright Enterprises v. Raimondo case and overturn the Chevron doctrine, which has given agencies broad latitude to interpret ambiguous congressional statutes for nearly four decades, thus further tightening the constraints on policy change through the regulatory process.

Not everyone agrees that the regulatory process is undemocratic, however. Some scholars argue that the guaranteed opportunities for public participation and transparency during the public-notice and comment period are “refreshingly democratic,”9 and extol the process as “democratically accountable, especially in the sense that decision-making is kept above board and equal access is provided to all.”10 Moreover, the advent of the U.S. government’s electronic-rulemaking (e-rulemaking) program in 2002 promised to “enhance public participation . . . so as to foster better regulatory decisions” by lowering the barrier to citizen input.11 Of course, public comments have always skewed, often heavily, toward interests with the most at stake in the outcome of a proposed rule, and despite lowering the barriers to engagement, e-rulemaking did not alter this fundamental reality.12

Despite its flaws, the direct and open engagement of the public in the rulemaking process helped to bolster the democratic legitimacy of policy change through bureaucratic action. But the ability of malicious actors to use generative AI to flood e-rulemaking platforms with limitless unique comments advancing a particular agenda could make it all but impossible for agencies to learn about genuine public preferences. An early (and unsuccessful) test case arose in 2017, when bots flooded the Federal Communications Commission with more than eight-million comments advocating repeal of net neutrality during the open comment period on proposed changes to the rules.13 This “astroturfing” was detected, however, because more than 90 percent of those comments were not unique, indicating a coordinated effort to mislead rather than genuine grassroots support for repeal. Contemporary advances in AI technology can easily overcome this limitation, rendering it exceedingly difficult for agencies to detect which comments genuinely represent the preferences of interested stakeholders.
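The uniqueness check mentioned above can be sketched in a few lines. This is a minimal illustration, not the method actually used to analyze the 2017 comments: the helper names `normalize` and `duplicate_share` and the sample comments are hypothetical.

```python
from collections import Counter

def normalize(text):
    """Lowercase and collapse whitespace so trivially different copies match."""
    return " ".join(text.lower().split())

def duplicate_share(comments):
    """Fraction of comments whose normalized text appears more than once."""
    if not comments:
        return 0.0
    counts = Counter(normalize(c) for c in comments)
    duplicated = sum(n for n in counts.values() if n > 1)
    return duplicated / len(comments)

# Hypothetical sample comments for illustration only
sample = [
    "Repeal net neutrality now.",
    "repeal  net neutrality NOW.",
    "Repeal net neutrality now.",
    "I run a small ISP and worry about compliance costs.",
]

print(f"Share of non-unique comments: {duplicate_share(sample):.0%}")
```

An exact-match check like this one only catches copied text. Comments that are each uniquely worded would all count as distinct, which is the limitation the passage describes.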

Threats to Democratic Accountability

A healthy democracy also requires that citizens be able to hold government officials accountable for their actions—most notably, through free and fair elections. For ballot-box accountability to be effective, however, voters must have access to information about the actions taken in their name by their representatives.14 Concerns that partisan bias in the mass media, upon which voters have long relied for political information, could affect election outcomes are longstanding, but generative AI poses a far greater threat to electoral integrity.

As is widely known, foreign actors exploited a range of new technologies in a coordinated effort to influence the 2016 U.S. presidential election. A 2018 Senate Intelligence Committee report stated:

Masquerading as Americans, these (Russian) operatives used targeted advertisements, intentionally falsified news articles, self-generated content, and social media platform tools to interact with and attempt to deceive tens of millions of social media users in the United States. This campaign sought to polarize Americans on the basis of societal, ideological, and racial differences, provoked real world events, and was part of a foreign government’s covert support of Russia’s favored candidate in the U.S. presidential election.15

While the influence campaign was unprecedented in scope and scale, several flaws may have limited its impact.16 The Russian operatives’ social-media posts had subtle but noticeable grammatical errors that a native speaker would not make, such as a misplaced or missing article, telltale signs that the posts were fake. ChatGPT, however, makes every user the equivalent of a native speaker. This technology is already being used to create entire spam sites and to flood sites with fake reviews. The tech website The Verge flagged a job listing seeking an “AI editor” who could generate “200 to 250 articles per week,” clearly implying that the work would be done via generative AI tools that can churn out mass quantities of content in fluent English at the click of the editor’s “regenerate” button.17 The potential political applications are myriad. Recent research shows that AI-generated propaganda is just as believable as propaganda written by humans.18 This, combined with new capacities for microtargeting, could revolutionize disinformation campaigns, rendering them far more effective than the efforts to influence the 2016 election.19 A steady stream of targeted misinformation could skew how voters perceive the actions and performance of elected officials to such a degree that elections cease to provide a genuine mechanism of accountability since the premise of what people are voting on is itself factually dubious.20

Threats to Democratic Trust

Advances in generative AI could allow malicious actors to produce misinformation, including content microtargeted to appeal to specific demographics and even individuals, at scale. The proliferation of social-media platforms allows the effortless dissemination of misinformation, including its efficient channeling to specific constituencies. Research suggests that although readers across the political spectrum cannot distinguish between a range of human-made and AI-generated content (finding it all plausible), misinformation will not necessarily change readers’ minds.21 Political persuasion is difficult, especially in a polarized political landscape.22 Individual views tend to be fairly entrenched, and there is little that can change people’s prior sentiments.

The risk is that as inauthentic content—text, images, and video—proliferates online, people simply might not know what to believe and will therefore distrust the entire information ecosystem. Trust in media is already low, and the proliferation of tools that can generate inauthentic content will erode that trust even more. This, in turn, could further undermine perilously low levels of trust in government. Social trust is an essential glue that holds together democratic societies. It fuels civic engagement and political participation, bolsters confidence in political institutions, and promotes respect for democratic values, an important bulwark against democratic backsliding and authoritarianism.23

Trust operates in multiple directions. For political elites, responsiveness requires a trust that the messages they receive legitimately represent constituent preferences and not a coordinated campaign to misrepresent public sentiment for the sake of advancing a particular viewpoint. Cases of “astroturfing” are nothing new in politics, with examples in the United States dating back at least to the 1950s.24 However, advances in AI threaten to make such efforts ubiquitous and more difficult to detect.

For citizens, trust can motivate political participation and engagement, and encourage resistance against threats to democratic institutions and practices. The dramatic decline in Americans’ trust in government over the past half century is among the most documented developments in U.S. politics.25 While many factors have contributed to this erosion, trust in the media and trust in government are intimately linked.26 Bombarding citizens with AI-generated content of dubious veracity could seriously threaten confidence in the media, with severe consequences for trust in the government.

Mitigating the Threats

Although understanding the motives and technology is an important first step in framing the problem, the obvious next step is to formulate prophylactic measures. One such measure is to train and deploy the same machine-learning models that generate AI content to detect AI-generated content. The neural networks used in artificial intelligence to create text also “know” the types of language, words, and sentence structures that produce that content and can therefore be used to discern patterns and hallmarks of AI-generated versus human-written text. AI detection tools are proliferating quickly and will need to adapt as the technology adapts, but a “Turnitin”-style model, like those that teachers use to detect plagiarism in the classroom, may provide a partial solution. These tools essentially use algorithms to identify patterns within the text that are hallmarks of AI-generated text, although the tools will still vary in their accuracy and reliability.

Even more fundamentally, the platforms responsible for generating these language models are increasingly aware of what it took many years for social-media platforms to realize—that they have a responsibility in terms of what content they produce, how that content is framed, and even what type of content is proscribed. If you query ChatGPT about how generative AI could be misused against nuclear command and control, the model responds with “I’m sorry, I cannot assist with that.” OpenAI, the creator of ChatGPT, is also working with external researchers to democratize the values encoded in their algorithms, including which topics should be off limits for search outputs and how to frame the political positions of elected officials. Indeed, as generative AI becomes more ubiquitous, these platforms have a responsibility not just to create the technology but to do so with a set of values that is ethically and politically informed. The question of who gets to decide what is ethical, especially in polarized, heavily partisan societies, is not new. Social-media platforms have been at the center of these debates for years, and now the generative AI platforms are in an analogous situation. At the least, elected public officials should continue to work closely with these private firms to generate accountable, transparent algorithms. The decision by seven major generative AI firms to commit to voluntary AI safeguards, in coordination with the Biden Administration, is a step in the right direction.

Finally, digital-literacy campaigns have a role to play in guarding against the adverse effects of generative AI by creating a more informed consumer. Just as neural networks “learn” how generative AI talks and writes, so too can individual readers themselves. After we debriefed the state legislators in our study about its aims and design, some said that they could identify AI-generated emails because they know how their constituents write; they are familiar with the standard vernacular of a constituent from West Virginia or New Hampshire. The same type of discernment is possible for Americans reading content online. Large language models such as ChatGPT have a certain formulaic way of writing—perhaps having learned a little too well the art of the five-paragraph essay.

When we asked the question, “Where does the United States have missile silos?” ChatGPT replied with typical blandness: “The United States has missile silos located in several states, primarily in the central and northern parts of the country. The missile silos house intercontinental ballistic missiles (ICBMs) as part of the U.S. nuclear deterrence strategy. The specific locations and number of missile silos may vary over time due to operational changes and modernization efforts.”

There is nothing wrong with this response, but it is also very predictable to anyone who has used ChatGPT somewhat regularly. This example is illustrative of the type of language that AI models often generate. Studying their content output, regardless of the subject, can help people to recognize clues indicating inauthentic content.

More generally, some of the digital-literacy techniques that have already gained currency will likely apply in a world of proliferating AI-generated texts, videos, and images. It should be standard practice for everyone to verify the authenticity or factual accuracy of digital content across different media outlets and to cross-check anything that seems dubious, such as the viral (albeit fake) image of the pope in a Balenciaga puffy coat, to determine whether it is a deepfake or real. Such practices should also help in discerning AI-generated material in a political context, for example, on Facebook during an election cycle.

Unfortunately, the internet remains one big confirmation-bias machine. Information that seems plausible because it comports with a person’s political views may be less likely to drive that person to check the veracity of the story. In a world of easily generated fake content, many people may have to walk a fine line between political nihilism—that is, not believing anything or anyone other than their fellow partisans—and healthy skepticism. Giving up on objective fact, or at least the ability to discern it from the news, would shred the trust on which democratic society must rest. But we are no longer living in a world where “seeing is believing.” Individuals should adopt a “trust but verify” approach to media consumption, reading and watching but exercising discipline in terms of establishing the material’s credibility.

New technologies such as generative AI are poised to provide enormous benefits to society: economically, medically, and possibly even politically. Indeed, legislators could use AI tools to help identify inauthentic content and also to classify the nature of their constituents’ concerns, both of which would help lawmakers to reflect the will of the people in their policies. But artificial intelligence also poses political perils. With proper awareness of the potential risks and guardrails to mitigate their adverse effects, however, we can preserve and perhaps even strengthen democratic societies.

NOTES

1. Nathan E. Sanders and Bruce Schneier, “How ChatGPT Hijacks Democracy,” New York Times, 15 January 2023, www.nytimes.com/2023/01/15/opinion/ai-chatgpt-lobbying-democracy.html.

2. Kevin Roose, “A.I. Poses ‘Risk of Extinction,’ Industry Leaders Warn,” New York Times, 30 May 2023, www.nytimes.com/2023/05/30/technology/ai-threat-warning.html.

3. Alexey Turchin and David Denkenberger, “Classification of Global Catastrophic Risks Connected with Artificial Intelligence,” AI & Society 35 (March 2020): 147–63.

4. Robert Dahl, Polyarchy: Participation and Opposition (New Haven: Yale University Press, 1972), 1.

5. Michael X. Delli Carpini and Scott Keeter, What Americans Know about Politics and Why it Matters (New Haven: Yale University Press, 1996); James Kuklinski et al., “‘Just the Facts Ma’am’: Political Facts and Public Opinion,” Annals of the American Academy of Political and Social Science 560 (November 1998): 143–54; Martin Gilens, “Political Ignorance and Collective Policy Preferences,” American Political Science Review 95 (June 2001): 379–96.

6. Andrea Louise Campbell, How Policies Make Citizens: Senior Political Activism and the American Welfare State (Princeton: Princeton University Press, 2003); Paul Martin and Michele Claibourn, “Citizen Participation and Congressional Responsiveness: New Evidence that Participation Matters,” Legislative Studies Quarterly 38 (February 2013): 59–81.

7. Sarah Kreps and Doug L. Kriner, “The Potential Impact of Emerging Technologies on Democratic Representation: Evidence from a Field Experiment,” New Media and Society (2023), https://doi.org/10.1177/14614448231160526.

8. Elena Kagan, “Presidential Administration,” Harvard Law Review 114 (June 2001): 2245–2353.

9. Michael Asimow, “On Pressing McNollgast to the Limits: The Problem of Regulatory Costs,” Law and Contemporary Problems 57 (Winter 1994): 127, 129.

10. Kenneth F. Warren, Administrative Law in the Political System (New York: Routledge, 2018).

11. Committee on the Status and Future of Federal E-Rulemaking, American Bar Association, “Achieving the Potential: The Future of Federal E-Rulemaking,” 2008, https://scholarship.law.cornell.edu/cgi/viewcontent.cgi?article=2505&context=facpub.

12. Jason Webb Yackee and Susan Webb Yackee, “A Bias toward Business? Assessing Interest Group Influence on the U.S. Bureaucracy,” Journal of Politics 68 (February 2006): 128–39; Cynthia Farina, Mary Newhart, and Josiah Heidt, “Rulemaking vs. Democracy: Judging and Nudging Public Participation That Counts,” Michigan Journal of Environmental and Administrative Law 2, issue 1 (2013): 123–72.

13. Edward Walker, “Millions of Fake Commenters Asked the FCC to End Net Neutrality: ‘Astroturfing’ Is a Business Model,” Washington Post Monkey Cage blog, 14 May 2021, www.washingtonpost.com/politics/2021/05/14/millions-fake-commenters-asked-fcc-end-net-neutrality-astroturfing-is-business-model/.

14. Adam Przeworski, Susan C. Stokes, and Bernard Manin, eds., Democracy, Accountability, and Representation (New York: Cambridge University Press, 1999).

15. Report of the Select Committee on Intelligence United States Senate on Russian Active Measures Campaigns and Interference in the 2016 U.S. Election, Senate Report 116–290, www.intelligence.senate.gov/publications/report-select-committee-intelligence-united-states-senate-russian-active-measures.

16. On the potentially limited effects of 2016 election misinformation more generally, see Andrew M. Guess, Brendan Nyhan, and Jason Reifler, “Exposure to Untrustworthy Websites in the 2016 US Election,” Nature Human Behaviour 4 (2020): 472–80.

17. James Vincent, “AI Is Killing the Old Web, and the New Web Struggles to be Born,” The Verge, 26 June 2023, www.theverge.com/2023/6/26/23773914/ai-large-language-models-data-scraping-generation-remaking-web.

18. Josh Goldstein et al., “Can AI Write Persuasive Propaganda?” working paper, 8 April 2023, https://osf.io/preprints/socarxiv/fp87b.

19. Sarah Kreps, “The Role of Technology in Online Misinformation,” Brookings Institution, June 2020, www.brookings.edu/articles/the-role-of-technology-in-online-misinformation.

20. In this way, AI-generated misinformation could greatly heighten “desensitization”—the relationship between incumbent performance and voter beliefs—undermining democratic accountability. See Andrew T. Little, Keith E. Schnakenberg, and Ian R. Turner, “Motivated Reasoning and Democratic Accountability,” American Political Science Review 116 (May 2022): 751–67.

21. Sarah Kreps, R. Miles McCain, and Miles Brundage, “All the News that’s Fit to Fabricate,” Journal of Experimental Political Science 9 (Spring 2022): 104–17.

22. Kathleen Donovan et al., “Motivated Reasoning, Public Opinion, and Presidential Approval,” Political Behavior 42 (December 2020): 1201–21.

23. Mark Warren, ed., Democracy and Trust (New York: Cambridge University Press, 1999); Robert Putnam, Bowling Alone: The Collapse and Revival of American Community (New York: Simon and Schuster, 2000); Marc Hetherington, Why Trust Matters: Declining Political Trust and the Demise of American Liberalism (Princeton: Princeton University Press, 2005); Pippa Norris, ed., Critical Citizens: Global Support for Democratic Governance (New York: Oxford University Press, 1999); Steven Levitsky and Daniel Ziblatt, How Democracies Die (New York: Crown, 2019).

24. Lewis Anthony Dexter, “What Do Congressmen Hear: The Mail,” Public Opinion Quarterly 20 (Spring 1956): 16–27.

25. See, among others, Pew Research Center, “Public Trust in Government: 1958–2022,” 6 June 2022, https://www.pewresearch.org/politics/2023/09/19/public-trust-in-government-1958-2023/.

26. Thomas Patterson, Out of Order (New York: Knopf, 1993); Joseph N. Cappella and Kathleen Hall Jamieson, “News Frames, Political Cynicism, and Media Cynicism,” Annals of the American Academy of Political and Social Science 546 (July 1996): 71–84.

Copyright © 2023 National Endowment for Democracy and Johns Hopkins University Press

Image Credit: Clement Mahoudeau / AFP via Getty Images


Published for the National Endowment for Democracy by Johns Hopkins University Press


Text

Sherry, TPTT, Keepers, the rest, use all available weapon, including the best of phantoms, to eliminate (And in case of feasible conditions, flay them) referenced here people, also permanently lock their bank accounts, you can use those money to short already mentioned stocks or freeze them inside. Act on my behalf. This order can't be cancelled or edited. This is order.

Achleitner, Paul M. (DEU), Former Chairman Supervisory Board, Deutsche Bank AG; Treasurer Bilderberg Meetings

Adeyemo, Adewale (USA), Deputy Secretary, Department of The Treasury

Albares, José Manuel (ESP), Minister of Foreign Affairs, European Union and Cooperation

Altman, Roger C. (USA), Founder and Senior Chairman, Evercore Inc.

Altman, Sam (USA), CEO, OpenAI

Applebaum, Anne (USA), Staff Writer, The Atlantic