#CloudLogging


Text

10/25/24

early in the morning, they looked like little cotton balls

3 notes


Text

Google VPC Flow Logs: Vital Network Traffic Analysis Tool

GCP VPC Flow Logs

VPC Flow Logs samples packets sent from and received by virtual machine (VM) instances, including instances used as Google Kubernetes Engine (GKE) nodes. It also samples packets that traverse VLAN attachments for Cloud Interconnect and Cloud VPN tunnels (Preview).

Flow logs are aggregated by IP connection (5-tuple). You can use this data for network monitoring, forensics, security analysis, and cost optimization.
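The following is a minimal Python sketch of what "aggregated by IP connection (5-tuple)" means. It groups sampled packets by source IP, destination IP, source port, destination port, and protocol, then sums packets and bytes per connection. The `Packet` type and its field names are hypothetical illustrations, not the VPC Flow Logs record format.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Packet:
    """A hypothetical sampled packet (not the real VPC Flow Logs schema)."""
    src_ip: str
    dest_ip: str
    src_port: int
    dest_port: int
    protocol: int  # IANA protocol number: 6 = TCP, 17 = UDP
    size_bytes: int

def five_tuple(p: Packet) -> tuple:
    """The key that identifies one IP connection."""
    return (p.src_ip, p.dest_ip, p.src_port, p.dest_port, p.protocol)

def aggregate(packets) -> dict:
    """Combine packets into one record per 5-tuple with packet and byte totals."""
    flows = defaultdict(lambda: {"packets": 0, "bytes": 0})
    for p in packets:
        record = flows[five_tuple(p)]
        record["packets"] += 1
        record["bytes"] += p.size_bytes
    return dict(flows)

if __name__ == "__main__":
    sample = [
        Packet("10.0.0.2", "10.0.1.5", 40000, 443, 6, 1500),
        Packet("10.0.0.2", "10.0.1.5", 40000, 443, 6, 500),
        Packet("10.0.0.3", "8.8.8.8", 53000, 53, 17, 80),
    ]
    for key, record in aggregate(sample).items():
        print(key, record)
```

The two TCP packets share a 5-tuple and collapse into one record. The DNS packet forms its own record.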

Flow logs are viewable via Cloud Logging, and logs can be exported to any location supported by Cloud Logging export.

Use cases

Network monitoring

VPC Flow Logs gives you visibility into network throughput and performance. You can:

Observe the VPC network.

Diagnose the network.

Filter flow records by VMs, VLAN attachments, and Cloud VPN tunnels to understand traffic changes.

Understand traffic growth for capacity forecasting.

Understanding network usage and optimizing network traffic costs

VPC Flow Logs can be used to optimize network traffic costs by analyzing network utilization. The network flows, for instance, can be examined for the following:

Traffic between regions and zones

Traffic to specific countries on the internet

Traffic to on-premises and other cloud networks

Top network talkers, such as Cloud VPN tunnels, VLAN attachments, and VMs
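Cost questions like these usually come down to grouping and summing. Below is a minimal Python sketch. It assumes flow records have already been exported and flattened into dictionaries. The field names `src`, `src_region`, `dest_region`, and `bytes_sent` are simplified assumptions, not the exact record format.

```python
from collections import Counter
from typing import Iterable, Mapping

# A flattened flow record; field names are assumptions for this sketch.
Record = Mapping[str, object]

def top_talkers(records: Iterable[Record], n: int = 3):
    """Rank sources (VMs, VLAN attachments, VPN tunnels) by total bytes sent."""
    totals = Counter()
    for r in records:
        totals[r["src"]] += r["bytes_sent"]
    return totals.most_common(n)

def cross_region_bytes(records: Iterable[Record]) -> dict:
    """Total bytes per (src_region, dest_region) pair where the regions differ."""
    totals = Counter()
    for r in records:
        if r["src_region"] != r["dest_region"]:
            totals[(r["src_region"], r["dest_region"])] += r["bytes_sent"]
    return dict(totals)

if __name__ == "__main__":
    records = [
        {"src": "vm-a", "src_region": "us-east1", "dest_region": "us-east1", "bytes_sent": 100},
        {"src": "vm-b", "src_region": "us-east1", "dest_region": "europe-west1", "bytes_sent": 300},
        {"src": "vm-a", "src_region": "us-east1", "dest_region": "europe-west1", "bytes_sent": 50},
    ]
    print(top_talkers(records, 2))
    print(cross_region_bytes(records))
```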

Network forensics

VPC Flow Logs is useful for network forensics. For example, if an incident occurs, you can examine the following:

Which IPs talked with whom, and when

Any compromised IPs, identified by analyzing all incoming and outgoing network flows
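To answer "which IPs talked with whom, and when," you can scan exported flow records for a suspect address. Below is a minimal Python sketch. The flat `src_ip`, `dest_ip`, `start_time`, and `end_time` keys are simplified assumptions about the exported data.

```python
from datetime import datetime

def peers_of(records, ip: str) -> dict:
    """Return {peer_ip: (first_seen, last_seen)} for every flow involving `ip`."""
    seen = {}
    for r in records:
        if r["src_ip"] == ip:
            peer = r["dest_ip"]
        elif r["dest_ip"] == ip:
            peer = r["src_ip"]
        else:
            continue  # flow does not involve the suspect IP
        start, end = r["start_time"], r["end_time"]
        if peer in seen:
            first, last = seen[peer]
            seen[peer] = (min(first, start), max(last, end))
        else:
            seen[peer] = (start, end)
    return seen

if __name__ == "__main__":
    records = [
        {"src_ip": "10.0.0.5", "dest_ip": "203.0.113.9",
         "start_time": datetime(2024, 1, 1, 1), "end_time": datetime(2024, 1, 1, 2)},
        {"src_ip": "203.0.113.9", "dest_ip": "10.0.0.5",
         "start_time": datetime(2024, 1, 1, 3), "end_time": datetime(2024, 1, 1, 4)},
    ]
    print(peers_of(records, "10.0.0.5"))
```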

Specifications

VPC Flow Logs is part of Andromeda, the software that powers VPC networks. Enabling VPC Flow Logs introduces no delay or performance penalty.

VPC Flow Logs does not support legacy networks. You can enable or disable VPC Flow Logs per subnet, per VLAN attachment for Cloud Interconnect (Preview), and per Cloud VPN tunnel (Preview). If VPC Flow Logs is enabled for a subnet, it collects data from all VM instances in that subnet, including GKE nodes.

VPC Flow Logs samples TCP, UDP, ICMP, ESP, and GRE traffic. Both inbound and outbound flows are sampled, whether they occur within Google Cloud or between Google Cloud and other networks. If a flow is sampled and collected, VPC Flow Logs generates a log for it. Every flow record includes the information described in the Record format section.

VPC Flow Logs interacts with firewall rules as follows:

Egress packets are sampled before egress firewall rules are evaluated. Even if an egress firewall rule denies outbound packets, VPC Flow Logs can sample them.

Ingress packets are sampled after ingress firewall rules are evaluated. VPC Flow Logs does not sample inbound packets that an ingress firewall rule denies.
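The ordering above determines which packets the sampler can ever see. Below is a tiny Python model of that rule. It only decides whether a packet is *eligible* for sampling; whether it is actually sampled still depends on the sampling rate. The `Direction` enum and the function name are hypothetical.

```python
from enum import Enum

class Direction(Enum):
    EGRESS = "egress"
    INGRESS = "ingress"

def eligible_for_sampling(direction: Direction, firewall_allows: bool) -> bool:
    """Can this packet reach the flow-log sampler?

    Egress: sampled before egress firewall rules, so even denied packets qualify.
    Ingress: sampled after ingress firewall rules, so denied packets never qualify.
    """
    if direction is Direction.EGRESS:
        return True
    return firewall_allows

if __name__ == "__main__":
    for d in Direction:
        for allowed in (True, False):
            print(d.value, "allowed" if allowed else "denied", eligible_for_sampling(d, allowed))
```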

You can use filters so that VPC Flow Logs generates only specific logs.

VPC Flow Logs supports VMs with multiple network interfaces. You must enable VPC Flow Logs for each subnet, in each VPC network, that contains one of those network interfaces.

Intranode visibility for the cluster must be enabled in order to log flows across pods on the same Google Kubernetes Engine (GKE) node.

Cloud Run resources do not report VPC Flow Logs.

Log collection

Packets are sampled within an aggregation interval. All packets collected for a given IP connection during the interval are combined into a single flow log entry, which is then sent to Logging.
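Below is a minimal Python sketch of per-interval aggregation: one entry per connection per interval. It assumes intervals are fixed windows starting at multiples of `interval_s`. That is a simplification; the source does not say how Google aligns interval boundaries. The input tuple shape `(timestamp_s, five_tuple, size_bytes)` is also hypothetical.

```python
from collections import defaultdict

def bucket_by_interval(packets, interval_s: int = 5) -> dict:
    """Group (timestamp_s, five_tuple, size_bytes) tuples into one entry per
    (interval start, connection), with packet and byte totals."""
    entries = defaultdict(lambda: {"packets": 0, "bytes": 0})
    for ts, five_tuple, size in packets:
        window_start = int(ts // interval_s) * interval_s  # assumed window alignment
        entry = entries[(window_start, five_tuple)]
        entry["packets"] += 1
        entry["bytes"] += size
    return dict(entries)

if __name__ == "__main__":
    conn = ("10.0.0.2", "10.0.1.5", 40000, 443, 6)
    # Two packets fall in the 0-5 s window, one in the 5-10 s window.
    print(bucket_by_interval([(0.5, conn, 100), (4.9, conn, 50), (5.0, conn, 10)]))
```

A longer interval (for example, 900 seconds) merges more packets into each entry, which is why it reduces log volume.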

By default, logs are kept in Logging for 30 days. If you want to keep them longer, you can set a custom retention period or export them to a supported destination.

Log sampling and processing

To produce flow logs, VPC Flow Logs samples packets that leave or enter a VM, or that pass through a gateway such as a VLAN attachment or Cloud VPN tunnel. After the flow logs are generated, VPC Flow Logs processes them as described in this section.

VPC Flow Logs samples packets at a primary sampling rate. This rate is dynamic: it depends on the load on the physical host running the VM or gateway at the time of sampling. The more packets an IP connection carries, the more likely that connection is to be sampled. You cannot control the primary sampling rate or the primary sampling process.
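Why do busier connections get sampled more often? A simple model helps. Assume each packet is sampled independently with probability `rate`. Then a connection with `n` packets is sampled at least once with probability 1 - (1 - rate)^n. The independence assumption and the fixed `rate` are simplifications for illustration; the real primary rate is dynamic and not exposed to users.

```python
def prob_connection_sampled(packets: int, rate: float) -> float:
    """P(at least one of `packets` packets is sampled), assuming each packet is
    sampled independently with probability `rate` (an illustrative assumption)."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be in [0, 1]")
    if packets < 0:
        raise ValueError("packets must be non-negative")
    return 1.0 - (1.0 - rate) ** packets

if __name__ == "__main__":
    # With a hypothetical 1% per-packet rate, larger connections are far more likely to appear.
    for n in (1, 10, 100, 1000):
        print(n, round(prob_connection_sampled(n, 0.01), 4))
```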

After the flow logs are generated, VPC Flow Logs processes them in the following steps:

Filtering: You can ensure that only logs matching predefined criteria are generated. For example, you can filter so that only logs for a specific VM, or logs with a specific metadata value, are generated, and the rest are discarded. See Log filtering for details.

Aggregation: To create a flow log entry, data from sampling packets is combined over a defined aggregation interval.

Secondary sampling of flow logs: This is a second sampling step. Flow log entries produced by primary sampling are further sampled according to a configurable secondary sampling rate. For example, if the secondary sampling rate is set to 1.0 (100%), VPC Flow Logs keeps all flow logs produced by primary sampling.

Metadata: If this option is turned off, all metadata annotations are removed. To preserve metadata, you can keep all fields or a specified set of fields. See Metadata annotations for details.

Write to Logging: The final log entries are written to Cloud Logging.
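The processing steps above can be sketched as one toy pipeline for a single aggregation interval. Below is a minimal Python illustration under several assumptions:
- Packets arrive as dictionaries with hypothetical `five_tuple`, `bytes`, and `metadata` keys.
- The `metadata_mode` values `"all"`, `"none"`, and `"custom"` are invented labels, not real API values.
- A plain list stands in for Cloud Logging.

```python
import random
from typing import Callable, Iterable, Optional

def process(
    packets: Iterable[dict],
    keep: Callable[[dict], bool],
    secondary_rate: float,
    metadata_mode: str,               # hypothetical: "all", "none", or "custom"
    metadata_fields: Optional[set],   # used only when metadata_mode == "custom"
    sink: list,
    rng: random.Random,
) -> None:
    """Toy flow-log pipeline for one aggregation interval."""
    # 1. Filtering: only packets matching the predicate can produce logs.
    kept = [p for p in packets if keep(p)]

    # 2. Aggregation: combine packets into one entry per 5-tuple.
    entries: dict = {}
    for p in kept:
        e = entries.setdefault(p["five_tuple"], {
            "five_tuple": p["five_tuple"],
            "packets": 0,
            "bytes": 0,
            "metadata": dict(p["metadata"]),
        })
        e["packets"] += 1
        e["bytes"] += p["bytes"]

    for e in entries.values():
        # 3. Secondary sampling: keep each entry with probability secondary_rate.
        if rng.random() >= secondary_rate:
            continue
        # 4. Metadata: keep all, drop all, or keep only the chosen fields.
        if metadata_mode == "none":
            e["metadata"] = {}
        elif metadata_mode == "custom":
            wanted = metadata_fields or set()
            e["metadata"] = {k: v for k, v in e["metadata"].items() if k in wanted}
        # 5. Write: the list stands in for Cloud Logging.
        sink.append(e)
```

Because `rng.random()` returns a value in [0, 1), a rate of 1.0 keeps every entry and a rate of 0.0 keeps none. This matches the endpoints described for the secondary sampling rate.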

Note: You can't change how VPC Flow Logs performs primary sampling. However, as explained in Enable VPC Flow Logs, you can adjust secondary sampling with the Secondary sampling rate parameter. If you need to examine every packet, consider Packet Mirroring with collector instances running third-party software.

Because VPC Flow Logs doesn't capture every packet, it interpolates from the captured packets to compensate for the missed ones. Packets are missed because of primary sampling and the user-configurable sampling settings.
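The source does not document the exact interpolation VPC Flow Logs uses. A common generic technique for sampled data is inverse-probability scaling: divide an observed total by the probability that the data survived sampling. Below is a minimal Python sketch of that generic estimator. It assumes known, independent primary and secondary rates, and it is applied to totals summed across the kept entries.

```python
def estimate_total(sampled: float, primary_rate: float, secondary_rate: float = 1.0) -> float:
    """Scale a sampled total up by the inverse of the combined keep probability.

    Assumes each packet survived primary sampling with probability primary_rate
    and each flow entry survived secondary sampling with probability
    secondary_rate, independently. A generic estimator, not the documented
    VPC Flow Logs interpolation.
    """
    keep_prob = primary_rate * secondary_rate
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("combined keep probability must be in (0, 1]")
    return sampled / keep_prob

if __name__ == "__main__":
    # Hypothetical: 1,200 bytes observed at a 10% primary rate and 50% secondary rate.
    print(estimate_total(1200, primary_rate=0.1, secondary_rate=0.5))
```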

Even though Google Cloud doesn't capture every packet, log record captures can be quite large. You can balance your traffic visibility needs against storage costs by adjusting the following log collection settings:

Aggregation interval: Sampled packets for a time interval are combined into a single log entry. The interval can be 5 seconds (the default), 30 seconds, 1 minute, 5 minutes, 10 minutes, or 15 minutes.

Secondary sampling rate:

By default, 50% of log entries are kept for VMs. This value can be set from 0.0 (0%, no log entries are kept) to 1.0 (100%, all log entries are kept).

By default, all log entries are kept for Cloud VPN tunnels and VLAN attachments. This value can be set to anything greater than 0.0, up to 1.0.

Metadata annotations: By default, flow log entries are annotated with metadata, such as the names of sources and destinations within Google Cloud or the geographic location of external sources and destinations. To save storage space, you can disable metadata annotations or include only specific annotations.

Filtering: Logs are automatically created for each flow that is sampled. Filters can be set to generate logs that only meet specific criteria.
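Below is a minimal Python sketch that checks the setting ranges above before they are applied. The allowed intervals, default rates, and rate bounds come from the text. The `FlowLogSettings` class and the resource labels `"vm"`, `"vpn_tunnel"`, and `"vlan_attachment"` are hypothetical, not a Google Cloud API.

```python
from dataclasses import dataclass
from typing import Optional

# Aggregation intervals listed above, in seconds: 5 s (default), 30 s,
# 1 min, 5 min, 10 min, 15 min.
ALLOWED_INTERVALS_S = frozenset({5, 30, 60, 300, 600, 900})

# Hypothetical resource labels used only in this sketch.
RESOURCE_TYPES = {"vm", "vpn_tunnel", "vlan_attachment"}

@dataclass
class FlowLogSettings:
    resource_type: str
    aggregation_interval_s: int = 5
    secondary_sampling_rate: Optional[float] = None  # None means "use the default"

    def effective_rate(self) -> float:
        """Explicit rate if set, else 0.5 for VMs and 1.0 for gateways."""
        if self.secondary_sampling_rate is not None:
            return self.secondary_sampling_rate
        return 0.5 if self.resource_type == "vm" else 1.0

    def validate(self) -> None:
        """Raise ValueError if the settings fall outside the documented ranges."""
        if self.resource_type not in RESOURCE_TYPES:
            raise ValueError(f"unknown resource type: {self.resource_type}")
        if self.aggregation_interval_s not in ALLOWED_INTERVALS_S:
            raise ValueError(f"unsupported interval: {self.aggregation_interval_s}s")
        rate = self.effective_rate()
        if self.resource_type == "vm":
            valid = 0.0 <= rate <= 1.0
        else:
            # Cloud VPN tunnels and VLAN attachments: must be greater than 0.0.
            valid = 0.0 < rate <= 1.0
        if not valid:
            raise ValueError(f"rate {rate} not allowed for {self.resource_type}")
```

For example, `FlowLogSettings("vm", secondary_sampling_rate=0.0)` passes validation. The same rate on a VLAN attachment is rejected.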

Read more on Govindhtech.com

#VPCFlowLogs#GoogleKubernetesEngine#Virtualmachine#CloudLogging#GoogleCloud#CloudRun#GCPVPCFlowLogs#News#Technews#Technology#Technologynwes#Technologytrends#Govindhtech

0 notes

Text

So stressed and unhappy right now. Trying hard not to give up on all of my progress but it's so difficult. I'm not seeing the bright side at the moment. :(

0 notes

Video

instagram

Breaking away from Ones and Zeros. #cloudlogic #ANGRYCLOUD #animation #motion #animated #animated #character #arrows #austin #atx #texas #bukah #art (at South Lamar) https://www.instagram.com/p/B1gX9_KFA9A/?igshid=1chz97lrlac4g

1 note


Text

Ingram Micro acquires CloudLogic in cloud services play - CRN Australia


#Spliced Feed 3 (Johny Santo)#Ingram Micro acquires CloudLogic in cloud services play - CRN Australi

0 notes

Text

All according to Keikaku

“Yeah, I’m still Hotfries alright. Goddamnit all,” vented Hotfries as he clutched his stomach whilst he was seated on the Loo. “I’m 18 now, I’m an adult now, people have to respect me now, yet I still have to use the toilet every two seconds just like Jake.” He begrudgingly took care of his business and slowly headed back to his room. He was still wondering how exactly he was going to sell all of the hoarded Supreme and Hypebeast merchandise that was hidden behind his now exposed wall.

“How the hell am I supposed to sell all of this stuff fast? I want to see Lavagirl now!” Hotfries impatiently muttered while slightly stamping his feet. Perhaps he hadn’t matured that much over the last 5 years after all. “I guess I could ask someone for advice, but who? Everyone I’ve ever known is for sure an idiot who only has half a brain….except for Redmonkey and…” Hotfries pondered for a moment about the second person that came to mind. Hotfries always hated that guy’s guts and how absolutely condescending he always sounded to him, but he recalled how Soliloquy, one of his former half-brained friends, always looked up to this person to the point of absurdity. Perhaps this person could answer his questions, since Hotfries didn’t believe Redmonkey could really help; the guy was always depicted without a shirt on and really only cared about ravaging girls with DD chests.

“Should I really ask him for help? The guy was always a jerk and all he would do is spam Eggplant emojis. But just like how I’ve changed, maybe he has also. Let’s give it a try.” Hotfries decided and hopped on his computer and searched for the name: CloudLogics.

Hey cloudlogics, its Hotfries btw. Remember me? I picked a fight with you once and you totally dodged. But anyway, I was wondering if you could help me?

A few moments passed as Hotfries anxiously watched the screen for Cloudlogics’ reply; he contemplated logging onto one of his 14 alternate accounts to spam Cloudlogics with the same message. That wasn’t necessary, as the screen showed Cloudlogics had begun typing. Hotfries wondered what kind of reception he would receive from his former nemesis Cloudlogics. Not to beat a dead horse, but the guy was absolutely standoffish and aloof, nothing like how Hotfries was during his time on Jake’s Discord. Kappa. At least 40 seconds had passed since Cloud began typing, so surely it was a lengthy, detailed message. Maybe Cloudlogics was going to flame Hotfries. During this entire waiting period, Hotfries had been holding his breath slightly and had now begun sweating. He was quite nervous.

Aye

“Aye? That’s all?” complained Hotfries. “Well, at least he said yes. Well, here goes.”

So cloudlogics, I know we have a history between us but I was hoping you could let all of that go and help me out with something. I wanted to sell a bunch of pristine Supreme and other hypebeast merchandise. Do you know where and how I could go about selling all of this? It’s urgent, I need money fast. If you help me I’ll give you 2% of my profit, how does that sound?

“This prick better help me, I’m even offering him 2%! That’s like double of 1%, I’m such a fair businessman. Ah, look he’s started typing again. He can’t refuse my offer, I’m sure.” Hotfries began daydreaming of Lavagirl with a stupid grin on his face and his forehead still red and sore from ramming open the boarded up wall. He was getting rather excited as he was sure he gave cloudlogics an irresistible offer.

🍆

“What the fuck is this?” Hotfries angrily grumbled.

Hey dickbag, how could you answer me with another one of your goddamn eggplant emojis? I was offering you some serious cash here, pal, answer me seriously goddamnit. I can beat you up, idiot.

System message: Your message could not be delivered because you don’t share a server with the recipient or you disabled direct messages on your shared server, recipient is only accepting direct messages from friends, or you were blocked by the recipient

“Fuck you, Cloudlogics, you asshole!” Hotfries angrily exclaimed, then hurriedly stood up and headed back to the Loo. “Fucking Crohn’s.” Looks like he has to contact Redmonkey after all.

He’s still the same old Hotfries by the way.


0 notes

Text

I found a few interesting keywords related to tiktok via /r/China

I found a few interesting keywords related to tiktok

Here they are :

tik tok

is tiktok safe for 11 year olds

tiktok parental controls

tiktok app

tiktok family safety mode

tiktok browser

cloudlog com tik tok

tiktok logo

juvenile content tiktok meaning

change age on tiktok

tiktok press contact

is tiktok for adults

how old should you be to have snapchat

13 tik tok song

who are my tik tok parents quiz

what is restricted mode on tiktok

is there parental control on tik tok

privacy@tiktok

tiktok tricks on parents

connected to you tiktok

how to report app in app store

how to report an app on play store


tiktok support email

how many reports to delete tiktok account


how to delete a tiktok account

is tiktok spyware

tiktok restricted mode

unsafe apps for kids

is tiktok safe china

tiktok privacy china

tiktok group chat

tiktok privacy settings

tiktok rating

family pairing, tiktok

tiktok your connections

enhance button tiktok

tiktok kids version

tik tok family

how to set parental controls on tiktok

tik tok app review for parents

safe alternative to tik tok

can you link tiktok accounts

is tiktok dangerous


tiktok is deleting in 2020

tik tok contact email

how many tiktok accounts can you have

how to see your age on tiktok

when is tik tok birthday

tiktok deleted

connected to you tik tok meaning

your connections tiktok

does tiktok have parental controls

apps like tiktok but safer

tiktok app parent company

It makes sense why India and Indonesia blocked it for inappropriate content; so many of these keywords indicate that the app is not safe for young users.

Submitted June 14, 2020 at 12:57PM by caedriel via reddit https://ift.tt/2MZskBq

0 notes

Photo

#cloudlog #nofilter #earlyroadtrip

0 notes

Text

IT Software Developer


#OmAccounting.in #GstAccounting #GstReturns #accountingServices #accountingOutsoucing #onlineAccounting #gstecommerceaccounting

Cloudlogic Technologies, one of the leading software development companies in Pondicherry, is in the process of recruiting smart candidates. Candidates can come from any stream, provided they are willing to work in a challenging environment and have the ease…


0 notes

Text

Golden Goose Sneakers Sale it

http://www.goldengoosesneakersvip.com

a photo/destination trip can be a real challenge, particularly if air travel is part of the itinerary. Depending on your travel plans, you may be severely limited in what gear you can carry with you. 2: The lighting: Light plays a huge role in capturing any kind of image with a camera.

and I decided to join Weight Watchers meetings together. At the time, we felt we didn't really have a choice. We were both obese, and our weight was interfering with our relationship in many ways.

Place the shoe on a flat surface and look at how the sole rests against the floor. The toe area should have a slight upward curve; this allows you to step naturally and roll through the foot instead of clomping around.

The trailer is never going to be 100% in my eyes, and I will always be adding to it, but for now it can be used to sleep in.

Logic has a long history of helping cloud and hosting service providers address the challenges of security and compliance, Misha Govshteyn, VP of technology and service provider solutions at Alert Logic said in a statement. security vendors can solve this problem alone. CloudLog is meant to provide a simple mechanism for everyone in the cloud technology stack to easily expose the required information in an easily consumable fashion to answer what has become a surprisingly complex question in cloud environments: who accessed which system and when? Logic says that CloudLog will answer these specifications in regard to virtualized environments, as there is no guarantee that the same VM image will be running on the same hardware in its next reincarnation.

0 notes

Photo

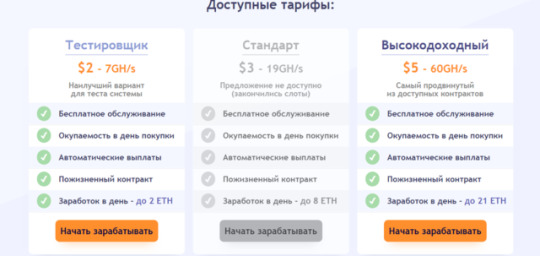

(from the page “Scam: CloudLog and Fresh web news. Reviews - Internet Fraud - Forum about earning money online and investing”)

0 notes

Text

SQL Pipe Syntax, Now Available In BigQuery And Cloud Logging

The revolutionary SQL pipe syntax is now accessible in Cloud Logging and BigQuery.

SQL has emerged as the industry standard language for working with databases. Its familiar syntax and established community have made data access genuinely accessible to everyone. But let’s face it: SQL isn’t flawless. Several problems with SQL’s syntax make it harder to read and write:

Rigid structure: A query must follow a specific clause order (SELECT … FROM … WHERE … GROUP BY), and doing anything else requires subqueries or other intricate patterns.

Awkward inside-out data flow: A query starts from FROM clauses nested inside subqueries or common table expressions (CTEs), and its logic is built outward from there.

Verbose, repetitive syntax: Are you sick of seeing the same columns in every subquery and repeatedly in SELECT, GROUP BY, and ORDER BY?

These problems can make SQL more challenging for novice users. Even for experienced users, reading or writing SQL takes more effort than it should. Everyone would benefit from a more practical syntax.

Numerous alternative languages and APIs have been put forth over time, some of which have shown considerable promise in specific applications. Many of these, such as Python DataFrames and Apache Beam, leverage piped data flow, which facilitates the creation of arbitrary queries. Compared to SQL, many users find this syntax to be more understandable and practical.

Presenting SQL pipe syntax

Google Cloud’s goal is to simplify data analysis and make it more usable. That is why it is excited to introduce pipe syntax, a ground-breaking extension that brings the elegance of piped data flow to SQL in BigQuery and Cloud Logging.

Pipe syntax: what is it?

In summary, pipe syntax is an extension to standard SQL syntax that makes SQL more flexible, concise, and simple. It provides the same underlying operators as standard SQL, with the same semantics and essentially the same syntax, but lets you apply those operators in any order and any number of times.

How it operates:

A query can begin with FROM.

Operators are written one after another, separated by the |> pipe symbol.

Each operator consumes an input table and produces an output table.

Most pipe operators use standard SQL syntax:

SELECT, WHERE, JOIN, ORDER BY, LIMIT, and so forth.

Standard syntax and pipe syntax can be mixed freely, even within the same query.
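To illustrate the idea of "each operator consumes a table and produces a table," here is a Python analogy. It is not GoogleSQL. In pipe syntax, a query reads roughly as `FROM flows |> WHERE bytes > 5 |> ORDER BY bytes DESC |> LIMIT 2`. The sketch models each operator as a function from a list of rows to a list of rows, and `pipe` applies them in the order written. The operator names mirror SQL keywords, but their implementations here are simplified assumptions.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]
Table = List[Row]
Op = Callable[[Table], Table]  # every operator: input table -> output table

def where(pred: Callable[[Row], bool]) -> Op:
    return lambda t: [r for r in t if pred(r)]

def order_by(col: str, desc: bool = False) -> Op:
    return lambda t: sorted(t, key=lambda r: r[col], reverse=desc)

def limit(n: int) -> Op:
    return lambda t: t[:n]

def select(*cols: str) -> Op:
    return lambda t: [{c: r[c] for c in cols} for r in t]

def pipe(table: Table, *ops: Op) -> Table:
    """Apply operators left to right, like FROM table |> op1 |> op2 ..."""
    for op in ops:
        table = op(table)
    return table

if __name__ == "__main__":
    flows = [{"vm": "a", "bytes": 10}, {"vm": "b", "bytes": 30}, {"vm": "c", "bytes": 20}]
    # Inside-out: the first step (the filter) is buried in the middle.
    nested = select("vm")(limit(2)(order_by("bytes", desc=True)(where(lambda r: r["bytes"] > 5)(flows))))
    # Piped: steps read in the order they run.
    piped = pipe(flows, where(lambda r: r["bytes"] > 5), order_by("bytes", desc=True), limit(2), select("vm"))
    print(piped)  # [{'vm': 'b'}, {'vm': 'c'}]
    assert nested == piped
```

Both forms compute the same result; only the reading order differs. Since operators are just stages, you can also repeat one (for example, two `where` calls) without nesting.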

Impact in the real world at HSBC

After experimenting with a preliminary version in BigQuery and seeing remarkable benefits, the multinational financial behemoth HSBC has already adopted pipe syntax. They observed notable gains in code readability and productivity, particularly when working with sizable JSON collections.

Benefits of integrating SQL pipe syntax

SQL developers benefit from the addition of pipe syntax in several ways. Here are several examples:

Simple to understand

It can be difficult to learn and accept new languages, especially in large organizations where it is preferable for everyone to utilize the same tools and languages. Pipe syntax is a new feature of the already-existing SQL language, not a new language. Because pipe syntax uses many of the same operators and largely uses the same syntax, it is relatively easy for users who are already familiar with SQL to learn.

Pipe syntax is also simpler to learn for users who are new to SQL. They still need to master the operators and some semantics (such as inner and outer joins), but they can use those operators to express their intended queries directly, avoiding some of the complexities and workarounds needed in standard SQL.

Simple to gradually implement without requiring migrations

As everyone knows, switching to a new language or system may be costly, time-consuming, and prone to mistakes. You don’t need to migrate anything in order to begin using pipe syntax because it is a part of GoogleSQL. All current queries still function, and the new syntax can be used sparingly where it is useful. Existing SQL code is completely compatible with any new SQL. For instance, standard views defined in standard syntax can be called by queries using pipe syntax, and vice versa. Any current SQL does not become outdated or unusable when pipe syntax is used in new SQL code.

No impact on cost or performance

Pipe syntax runs natively on well-known platforms like BigQuery, without additional layers (such as translation proxies) that could add latency, cost, or reliability issues and make debugging or tuning harder.

There is also no extra charge. Queries that use pipe syntax keep SQL’s declarative semantics, so the SQL query optimizer still reorganizes them to run faster. In other words, queries written in standard syntax and in pipe syntax usually perform the same.

For what purposes can pipe syntax be used?

Whether you’re exploring data, building data pipelines, creating dashboards, or examining logs, pipe syntax lets you write SQL queries that are easier to understand, more efficient, and easier to maintain. Because it supports most common SQL operators, you can use pipe syntax whenever you write queries. Here are a few use cases to get you started:

Debugging queries and ad hoc analysis

When conducting data exploration, you usually begin by examining a table’s rows (beginning with a FROM clause) to determine what is there. After that, you apply filters, aggregations, joins, ordering, and other operations. Because you can begin with a FROM clause and work your way up from there, pipe syntax makes this type of research really simple. You can view the current results at each stage, add a pipe operator, and then rerun the query to view the updated results.

Pipe syntax also helps with debugging queries. You can highlight a query prefix and run it to see the intermediate result up to that point. This works because of a useful property of pipe syntax: every query prefix up to a pipe symbol is itself a valid query.
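The prefix property can be mimicked in Python: if a query is a starting table plus a list of table-to-table steps, every prefix of that list is also runnable. Below is a minimal sketch. The `run` and `prefixes` helpers and the sample `logs` rows are hypothetical illustrations, not BigQuery features.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]
Table = List[Row]
Op = Callable[[Table], Table]

def run(table: Table, ops: List[Op]) -> Table:
    """Apply operators in order, like FROM table |> op1 |> op2 ..."""
    for op in ops:
        table = op(table)
    return table

def prefixes(table: Table, ops: List[Op]):
    """Yield (k, result) for every prefix: FROM alone, then 1 operator, 2, ..."""
    for k in range(len(ops) + 1):
        yield k, run(table, ops[:k])

if __name__ == "__main__":
    logs = [{"severity": "INFO", "ts": 1}, {"severity": "ERROR", "ts": 3}, {"severity": "ERROR", "ts": 2}]
    ops = [
        lambda t: [r for r in t if r["severity"] == "ERROR"],  # like |> WHERE severity = 'ERROR'
        lambda t: sorted(t, key=lambda r: r["ts"]),             # like |> ORDER BY ts
        lambda t: t[:1],                                        # like |> LIMIT 1
    ]
    for k, result in prefixes(logs, ops):
        print(k, result)
```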

Lifecycle of data engineering

Data processing and transformation become increasingly difficult and time-consuming as data volume increases. Building, modifying, and maintaining a data pipeline typically requires a significant technical effort in contexts with a lot of data. Pipe syntax simplifies data engineering with its more user-friendly syntax and linear query structure. Bid farewell to the CTEs and highly nested queries that tend to appear whenever standard SQL is used. This latest version of GoogleSQL simplifies the process of building and managing data pipelines by reimagining how to parse, extract, and convert data.

Using plain language and LLMs with SQL

For the same reasons that SQL can be difficult for people to read and write, research indicates that it can also be difficult for large language models (LLMs) to understand or generate. Pipe syntax, on the other hand, breaks queries into separate steps that closely match the intended logical data flow. An LLM can express a desired data flow more easily in pipe syntax, and the generated queries can be simpler and easier for humans to understand. This also makes it much easier for humans to validate the generated queries.

Because it’s much simpler to comprehend what’s happening and what’s feasible, pipe syntax also enables improved code assistants and auto-completion. Additionally, it allows for suggestions for local modifications to a single pipe operator rather than global edits to an entire query. More natural language-based operators in a query and more intelligent AI-generated code suggestions are excellent ways to increase user productivity.

Discover the potential of pipe syntax right now

Because SQL is so effective, it has been the worldwide language of data for 50 years. When it comes to expressing queries as declarative combinations of relational operators, SQL excels in many things.

However, that does not preclude SQL from being improved. By resolving SQL’s primary usability issues and opening up new possibilities for interacting with and expanding SQL, pipe syntax propels SQL into the future. This has nothing to do with creating a new language or replacing SQL. Although SQL with pipe syntax is still SQL, it is a better version of the language that is more expressive, versatile, and easy to use.

Read more on Govindhtech.com

#SQL#PipeSyntax#BigQuery#SQLpipesyntax#CloudLogging#GoogleSQL#Syntax#LLM#AI#News#Technews#Technology#Technologynews#technologytrends#govindhtech

0 notes

Text

New GKE Ray Operator on Kubernetes Engine Boost Ray Output

GKE Ray Operator

The field of AI is always changing. Larger and more complicated models are the result of recent advances in generative AI in particular, which forces businesses to efficiently divide work among more machines. Utilizing Google Kubernetes Engine (GKE), Google Cloud’s managed container orchestration service, in conjunction with ray.io, an open-source platform for distributed AI/ML workloads, is one effective strategy. You can now enable declarative APIs to manage Ray clusters on GKE with a single configuration option, making that pattern incredibly simple to implement!

Ray offers a straightforward API for distributing and parallelizing machine learning tasks, while GKE offers an adaptable, scalable infrastructure platform that simplifies resource and application management. Together, GKE and Ray provide scalability, fault tolerance, and ease of use for building, deploying, and maintaining Ray applications. The integrated Ray Operator on GKE also simplifies initial configuration and guides customers toward best practices for running Ray in production. It is designed with day-2 operations in mind, and its integrated support for Cloud Logging and Cloud Monitoring improves the observability of your Ray applications on GKE.


Getting started

When creating a new GKE cluster in the Google Cloud console, make sure to select the “Enable Ray Operator” option. On a GKE Autopilot cluster, this option is located under “AI and Machine Learning” in “Advanced Settings”.

The Enable Ray Operator feature checkbox is located under “AI and Machine Learning” in the “Features” menu of a Standard Cluster.

To use the gcloud CLI instead, set the --addons flag as follows:

```shell
gcloud container clusters create CLUSTER_NAME \
    --cluster-version=VERSION \
    --addons=RayOperator
```


Once the Ray Operator is enabled, GKE hosts and manages it on your behalf. After the cluster is created, it is ready to run Ray applications and create other Ray clusters.

Logging and monitoring

When implementing Ray in a production environment, efficient logging and metrics are crucial. Optional capabilities of the GKE Ray Operator allow for the automated gathering of logs and data, which are then seamlessly stored in Cloud Logging and Cloud Monitoring for convenient access and analysis.

When log collection is enabled, all logs from the Ray cluster Head node and Worker nodes are automatically collected and saved in Cloud Logging. The generated logs are kept safe and easily accessible even in the event of an unintentional or intentional shutdown of the Ray cluster thanks to this functionality, which centralizes log aggregation across all of your Ray clusters.

By using Managed Service for Prometheus, GKE may enable metrics collection and capture all system metrics exported by Ray. System metrics are essential for tracking the effectiveness of your resources and promptly finding problems. This thorough visibility is especially important when working with costly hardware like GPUs. You can easily construct dashboards and set up alerts with Cloud Monitoring, which will keep you updated on the condition of your Ray resources.

TPU support

Tensor Processing Units (TPUs) are custom-built hardware accelerators that significantly speed up training and inference for large machine learning models. With Google Cloud’s AI Hypercomputer architecture, you can use Ray and TPUs together to scale your high-performance ML applications with ease.

By adding the required TPU environment variables for frameworks like JAX and controlling admission webhooks for TPU Pod scheduling, the GKE Ray Operator simplifies TPU integration. Additionally, autoscaling for Ray clusters with one host or many hosts is supported.

Reduce startup latency

When operating AI workloads in production, it is imperative to minimize start-up delay in order to maximize the utilization of expensive hardware accelerators and ensure availability. When used with other GKE functions, the GKE Ray Operator can significantly shorten this startup time.

Hosting your Ray images on Artifact Registry and enabling image streaming can significantly speed up image pulls for your Ray clusters. Machine learning workloads often need large dependencies, which produce large, cumbersome container images that take a long time to pull. Image streaming can drastically reduce this pull time. For more information, see Use Image streaming to pull container images.

In addition, GKE secondary boot disks can preload model weights or container images onto new nodes. Combined with image streaming, this feature can let your Ray applications launch up to 29 times faster, making better use of your hardware accelerators.

Scale Ray in production today

A platform that grows with your workloads and provides a simplified Pythonic experience that your AI developers are accustomed to is necessary to stay up with the quick advances in AI. This potent trifecta of usability, scalability, and dependability is delivered by Ray on GKE. It’s now simpler than ever to get started and put best practices for growing Ray in production into reality with the GKE Ray Operator.

Read more on govindhtech.com

#NewGKERayOperator#Kubernetes#GKEoffers#EngineBoostRayoutput#GoogleKubernetesEngine#cloudlogging#GKEAutopilotCluster#ai#gke#MachineLearning#CloudMonitoring#webhooks

0 notes

Photo

Blue sky, white clouds & sunshine - what a pretty winter's day! #Kölnzeit #Cologne #city #cloudlog (hier: Cologne, Germany)

0 notes