#Cloud scalability

Explore tagged Tumblr posts

Discover how AWS enables businesses to achieve greater flexibility, scalability, and cost efficiency through hybrid data management. Learn key strategies like dynamic resource allocation, robust security measures, and seamless integration with existing systems. Uncover best practices to optimize workloads, enhance data analytics, and maintain business continuity with AWS's comprehensive tools. This guide is essential for organizations looking to harness cloud and on-premises environments effectively.

#AWS hybrid data management#cloud scalability#hybrid cloud benefits#AWS best practices#hybrid IT solutions#data management strategies#AWS integration#cloud security benefits#AWS cost efficiency#data analytics tools

In today’s fast-paced digital landscape, the cloud has emerged as a transformative technology that empowers businesses with flexibility, scalability, and cost-effectiveness. Migrating to the cloud is no longer an option but a necessity to stay competitive.

#Cloud migration strategies#Business value optimization#Cloud journey best practices#Cloud adoption benefits#Cost-effective cloud utilization#Cloud-native applications#Cloud scalability#Hybrid cloud solutions#Cloud security measures#Cloud cost management#Cloud performance monitoring#Cloud vendor selection#Cloud infrastructure optimization#Cloud data management#Agile cloud transformation

Unlocking the Power of Cloud Scalability to Transform Businesses

Cloud computing has revolutionised the way businesses operate by offering unprecedented flexibility, cost efficiency, and scalability. One of the key pillars of cloud computing is scalability, which supports growth by letting businesses rapidly expand or contract their IT infrastructure based on demand and goals. In this blog, we explore the benefits of cloud scalability and its impact on businesses of all kinds. We will also touch upon how cloud computing courses, such as the certification programs offered by renowned institutions like IIT Palakkad, can equip professionals with the skills required to harness the potential of cloud scalability.

1. Enhanced Flexibility and Agility

Cloud scalability empowers businesses with unparalleled flexibility and agility. Traditional on-premises infrastructure requires significant upfront investments in hardware, software, and maintenance. In contrast, cloud scalability allows businesses to dynamically allocate and de-allocate computing resources on demand, eliminating the need for overprovisioning. As a result, organizations can swiftly adapt to changing market conditions, scale their operations seamlessly, and respond to customer needs more effectively.

2. Cost Efficiency

Cloud scalability also offers significant cost advantages to businesses. By leveraging cloud infrastructure, companies can avoid upfront capital expenditures on hardware and reduce ongoing maintenance costs. With cloud scalability, businesses only pay for the resources they utilize, making it a more cost-effective option. Scaling up or down becomes simple, allowing organizations to optimize their IT spending by aligning it with actual demand. These cost savings can be channeled into other strategic initiatives, promoting overall growth and innovation.
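To make the pay-for-what-you-use point concrete, here is a small worked comparison in Python. It contrasts provisioning fixed capacity for the daily peak with paying only for the instances needed in each hour. The hourly rate and the demand profile are hypothetical numbers chosen for illustration, not real prices from any provider.

```python
# Hypothetical price per instance-hour (illustrative, not a real provider rate).
HOURLY_RATE = 0.10

# Hypothetical demand: instances needed in each hour of one day (24 values).
hourly_demand = [2] * 8 + [6] * 8 + [10] * 4 + [4] * 4


def fixed_capacity_cost(demand, rate):
    """On-premises style: provision for the peak and pay for it every hour."""
    return max(demand) * len(demand) * rate


def on_demand_cost(demand, rate):
    """Elastic style: pay only for the instances each hour actually uses."""
    return sum(demand) * rate


fixed = fixed_capacity_cost(hourly_demand, HOURLY_RATE)
elastic = on_demand_cost(hourly_demand, HOURLY_RATE)
print(f"fixed: ${fixed:.2f}, elastic: ${elastic:.2f}, saved: {1 - elastic / fixed:.0%}")
# prints: fixed: $24.00, elastic: $12.00, saved: 50%
```

With this made-up profile the elastic approach costs half as much, because the 10-instance peak lasts only 4 hours. A real comparison would also need actual unit prices, any committed-use discounts, and data transfer costs, so treat this as arithmetic rather than a pricing model.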

3. Reliability

Cloud scalability enhances the reliability of IT systems. Cloud service providers operate large-scale data centers that are designed to ensure high availability and fault tolerance. By leveraging the scalability features of the cloud, businesses can distribute their applications and data across multiple servers and geographic regions, mitigating the risk of single-point failures. This improves system reliability, thereby maximizing business continuity.
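A minimal sketch of how spreading work across servers avoids a single point of failure: requests rotate round-robin across replicas in several regions, and any replica that failed its last health check is skipped. The replica names and `healthy` flags are hypothetical; in practice a load balancer or DNS-based routing service plays this role.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Replica:
    name: str      # hypothetical replica identifier
    healthy: bool  # result of the most recent (hypothetical) health check


class RoundRobinRouter:
    """Spread requests across replicas, skipping ones that fail health checks."""

    def __init__(self, replicas):
        self.replicas = list(replicas)
        self._ring = cycle(self.replicas)

    def pick(self):
        # Try each replica at most once per call so we never loop forever.
        for _ in range(len(self.replicas)):
            replica = next(self._ring)
            if replica.healthy:
                return replica
        raise RuntimeError("no healthy replicas available")


router = RoundRobinRouter([
    Replica("us-east-a", True),
    Replica("eu-west-a", False),  # simulate an outage in one region
    Replica("ap-south-a", True),
])
print([router.pick().name for _ in range(4)])
# ['us-east-a', 'ap-south-a', 'us-east-a', 'ap-south-a']
```

Traffic keeps flowing while one region is down; only when every replica is unhealthy does the router give up.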

4. Elasticity for Peak Workloads

Peak workloads pose a significant challenge for businesses operating on traditional infrastructure. Scaling resources to meet sudden spikes in demand requires substantial upfront investments and is often inefficient. Cloud scalability addresses this issue by providing elastic resources that can be easily scaled up or down based on demand fluctuations. Businesses can handle seasonal peaks, marketing campaigns, or sudden surges in user activity without compromising performance or incurring excessive costs.
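Elastic scaling policies commonly size capacity in proportion to load. The sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × observed / target), clamped to minimum and maximum bounds. AWS target-tracking policies pursue the same goal of holding a metric near a target, though their exact calculation is not necessarily identical. The 60% CPU target and the bounds are hypothetical.

```python
import math


def desired_capacity(current, observed_util, target_util, min_cap=1, max_cap=20):
    """Proportional scaling rule: resize so utilization moves toward the target,
    then clamp the result to the allowed capacity range."""
    if current < 1 or target_util <= 0:
        raise ValueError("current must be >= 1 and target_util must be > 0")
    raw = math.ceil(current * observed_util / target_util)
    return max(min_cap, min(max_cap, raw))


# Hypothetical readings: 4 instances running, 60% CPU target.
print(desired_capacity(4, 90, 60))   # spike: scale out to 6
print(desired_capacity(4, 30, 60))   # quiet period: scale in to 2
print(desired_capacity(4, 400, 60))  # extreme surge: capped at 20
```

Production autoscalers also add cooldowns or tolerance bands so capacity does not flap on small fluctuations; this sketch omits them.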

5. Global Reach and Collaboration

Cloud scalability breaks down geographical barriers and enables global reach and collaboration. With cloud-based resources, businesses can deploy applications and services across multiple regions, ensuring low-latency access for customers worldwide. Additionally, cloud-based collaboration tools facilitate seamless teamwork, enabling geographically dispersed teams to collaborate in real-time. Cloud scalability empowers businesses to expand their market presence globally and foster innovation through diverse talent pools.

To summarise, the benefits of cloud scalability are clear: enhanced flexibility, cost efficiency, reliability, and global reach. By taking cloud computing courses, such as the certification programs offered by esteemed institutions like IIT Palakkad, professionals can acquire the skills needed to harness the potential of cloud scalability. As the business landscape becomes increasingly digital and competitive, embracing cloud scalability has become imperative for organizations seeking growth, agility, and a competitive edge in today's dynamic market. Reach out to Jaro Education’s program advisors to upskill with such courses and grow your business in the long run.

Know About Cloud Computing Services - SecureTech

Introduction

In today's digital age, cloud computing has become an integral part of the business world. Companies are increasingly turning towards cloud computing services to enhance their operations and increase productivity. San Antonio, with its burgeoning technology sector, has become a hub for cloud computing services. One such company, SecureTech, is offering top-notch cloud computing services in San Antonio. In this article, we will explore the benefits of cloud computing and why SecureTech is the go-to company for businesses in San Antonio.

What is Cloud Computing?

Before delving into the benefits of cloud computing, it is essential to understand what it is. Cloud computing refers to the practice of using a network of remote servers hosted on the internet to store, manage, and process data. In simpler terms, it means using the internet to access software and applications that are not installed on your computer or device.

Benefits of Cloud Computing

There are numerous benefits of using cloud computing services, some of which are:

1. Cost-Effective

One of the primary benefits of cloud computing is its cost-effectiveness. It eliminates the need to invest in expensive hardware and software as everything is hosted on the cloud provider's servers.

2. Scalability

Cloud computing offers unparalleled scalability as businesses can easily scale up or down their operations depending on their requirements. This makes it an ideal solution for businesses with fluctuating workloads.

3. Accessibility

Cloud computing services allow businesses to access their data and applications from anywhere with an internet connection. This means that employees can work from anywhere, which enhances productivity and flexibility.

4. Security

Cloud computing services offer robust security measures to protect data and applications from unauthorized access, theft, or loss. Providers like SecureTech offer top-notch security measures to ensure that their clients' data is always secure.

Visit - https://www.getsecuretech.com/cloud-technologies/

#Cloud migration#Cloud storage#Cloud security#Virtualization#Private cloud#Hybrid cloud#Public cloud#Cloud backup#Disaster recovery#Cloud computing#Multi-cloud#Cloud-based#monitoring#Cloud deployment#Cloud scalability#Cloud performance

Cloud Hosting in India offers unmatched reliability, scalability, and performance, making it the go-to choice for businesses of all sizes. At Website Buddy, we provide cutting-edge cloud hosting solutions designed to deliver blazing-fast speeds, robust security, and seamless uptime. Whether you're launching a startup or managing a growing enterprise, our cost-effective plans cater to your needs. Enjoy easy scalability, 24/7 customer support, and advanced features that keep your website running smoothly. Trust Website Buddy for affordable and efficient cloud hosting in India to take your online presence to the next level!

#Cloud Hosting in India#Affordable Cloud Hosting#Best Cloud Hosting Services#Scalable Cloud Hosting India#Reliable Web Hosting Solutions#Website Buddy Hosting#Cheap Cloud Hosting India#Fast and Secure Hosting

Transform your business with Magtec ERP! 🌐✨ Discover endless possibilities on a single platform. Book a demo today and see how we can elevate your operations to the next level! 🚀📈

#magtec#magtecerp#magtecsolutions#erp#businesssolutions#digitaltransformation#innovation#technology#growth#efficiency#productivity#cloud#automation#management#software#enterprise#success#analytics#customization#scalability#integration#teamwork#collaboration#strategy#data#support#consulting#businessdevelopment#transformation#leadership

Where Can You Host Your Joomla Blog Site, and What Is the Best Option?

I introduce 7 alternative hosting options, with their pros and cons, for Joomla bloggers. As a writer, I enjoy developing content in different forms and have been blogging for a long time. Blogging helps me a lot in gaining visibility for my YouTube videos, podcasts, gaming articles, Medium stories, and Substack newsletters. Unfortunately, I had to close my big YouTube channel for health reasons years…

#blog hosting#blogging#Blogging tips for beginners#business#Cloud Hosting#Dedicated Server Hosting#Factors to Consider When Choosing a Host#Free Hosting#hosting#hosting alternatives of Joomla#Joomla#Joomla Compatibility:#Joomla Customer Support#Joomla Scalability#joomla Security#Managed Joomla Hosting#Medium#Self-Hosting#shared hosting#stories#technology#the best hosting option is the one that aligns with your growth goals and technical expertise.#Uptime and Performance for Joomla#VPS Hosting (Virtual Private Server)#writers#writing

The Top Choice: Oracle Enterprise Resource Planning Cloud Service for Your Business Success

Are you searching for the best solution to streamline your business operations? Look no further than Oracle Enterprise Resource Planning (ERP) Cloud Service. In today's fast-paced business world, organizations need a robust ERP solution to optimize their processes, enhance productivity, and drive growth. Oracle ERP Cloud Service, widely regarded as an industry leader, offers a comprehensive suite of tools designed to meet the demands of modern businesses.

Why Choose Oracle Enterprise Resource Planning Cloud Service?

Oracle ERP Cloud Service stands out as a strong option for businesses across various industries. Here's why:

Scalability: Easily scale your ERP system as your business grows, always ensuring seamless operations.

Integration: Integrate ERP with other Oracle Cloud services for a unified business platform.

Real-time Insights: Gain valuable insights into your business with real-time analytics, enabling data-driven decision-making.

Security: Rest easy knowing your data is secure with Oracle's advanced security features.

Frequently Asked Questions about Oracle ERP Cloud Service

Q1: What modules are included in Oracle ERP Cloud Service?

A1: Oracle ERP Cloud Service includes modules for financial management, procurement, project management, supply chain management, and more. Each module is designed to optimize specific aspects of your business.

Q2: Is Oracle ERP Cloud Service suitable for small businesses?

A2: Yes, Oracle ERP Cloud Service is scalable and can be tailored to meet the needs of small, medium, and large businesses. It offers flexible solutions suitable for businesses of all sizes.

Q3: How does Oracle ERP Cloud Service enhance collaboration among teams?

A3: Oracle ERP Cloud Service provides collaborative tools that enable teams to work together seamlessly. Features like shared calendars, document management, and task tracking enhance communication and collaboration.

Conclusion: Empower Your Business with the Best ERP Solution

Oracle Enterprise Resource Planning Cloud Service is not just a choice; it's the ultimate solution for businesses seeking to optimize their operations. By harnessing the power of Oracle ERP, you can streamline processes, improve efficiency, and drive innovation. Don't let outdated systems hold your business back. Embrace the future with Oracle ERP Cloud Service and propel your business to new heights.

Ready to transform your business? Contact us today to explore the endless possibilities with the best ERP solution on the market.

#oracle#oracle erp#rapidflow#oracle erp cloud service#best erp solution#oracle erp service providers#business#business automation#oracle services#enterprise software#scalability#integration#rpa#market#erp

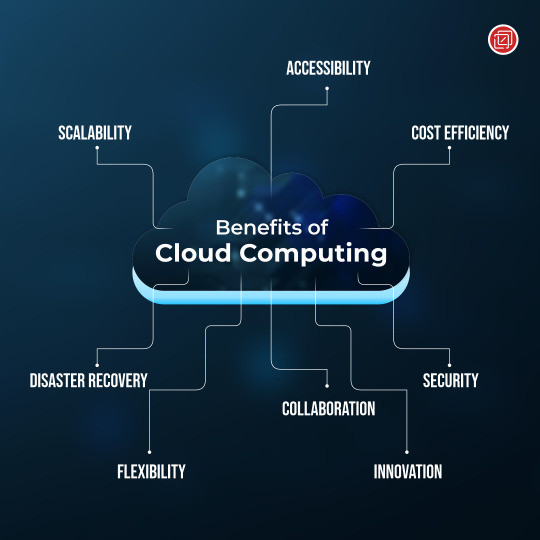

Embrace the cloud for scalability, cost efficiency, accessibility, disaster recovery, security, collaboration, flexibility, and innovation.

Remember to evaluate providers and implement security measures for successful cloud adoption.

Ready to unlock the power of the cloud? Consult with cloud experts and start harnessing these benefits for your business today!

Happy cloud computing! ☁️💪

Unlock the Secrets of Cloud Design Mastery: Dive into the Well-Architected AWS Framework for Unparalleled Cloud Excellence! ☁️

Enhance Scalability, Security, and Efficiency. Start Your Journey Today!

Discover VastEdge’s Cloud Automation services, helping businesses streamline and optimize cloud infrastructure with cutting-edge automation tools. Achieve higher efficiency, reduce costs, and enhance scalability across cloud platforms. Partner with VastEdge for seamless cloud automation solutions.

#cloud automation#cloud optimization#cloud infrastructure automation#VastEdge cloud services#automation tools#cloud scalability#cloud cost reduction#streamline cloud infrastructure#cloud solutions#business cloud automation

Amazon Web Services & Adobe Experience Manager: A Journey Together (Part 1)

In the world of digital marketing today, providing a quick, secure, and seamless experience is crucial. A faster time to market can be a differentiator, and reaching a larger audience across all devices is essential. Businesses are relying on cloud-based solutions to increase corporate agility, seize new opportunities, and cut costs.

Managing your marketing content and assets is simple with AEM. There are many advantages to using AWS to run AEM, including improved business agility, better flexibility, and lower expenses.

AEM & AWS: A Gift for You

We know AEM as a market leader in digital marketing, and AWS has an answer for almost all architectural concerns, such as global capacity, security, reliability, fault tolerance, programmability, and usability.

With the power of AWS, AEM becomes even more capable, and running AEM on AWS is gaining popularity over on-premises infrastructure.

Limitless Capacity

This combination gives you the freedom to scale all AEM environments quickly and cost-effectively, and adding capacity is now easier. During peak traffic, when request volumes are very high or unpredictable, AEM needs more power or more instances. This is where AWS, as a friend, comes to the rescue: its on-demand capacity allows you to scale all workloads. During the holiday season, sporting events, and sales events such as Thanksgiving, AWS holds AEM's hand and says:

"Hey, don't worry, I am here for you. I will not leave you alone in these peak scenarios."

When AEM requires an upgrade but you are worried about downtime, backups, and so on, AWS once again comes to the rescue with its cloud capabilities. It streamlines AEM upgrades and deployments.

With AWS, this becomes an easy task. Setting up a parallel environment is a cakewalk, so migration and testing are much easier, without worrying about infrastructure difficulties.

QA performance testing is also much easier and does not disturb production, because it can be done in a production-like AEM environment. Performing the actual production upgrade itself can then be as simple as changing a Domain Name System (DNS) entry.
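The DNS-switch upgrade described here is usually called a blue/green deployment. A minimal in-memory sketch, assuming a hypothetical hostname and environment endpoints: the new (green) environment is smoke-tested first, only then does the record flip, and the old (blue) environment stays available for rollback. In a real setup the record change would be made in a DNS service such as Amazon Route 53; the `DnsTable` class here is only a stand-in.

```python
class DnsTable:
    """In-memory stand-in for a DNS zone: hostname -> target endpoint."""

    def __init__(self):
        self.records = {}

    def upsert(self, hostname, target):
        self.records[hostname] = target

    def resolve(self, hostname):
        return self.records[hostname]


def cut_over(dns, hostname, green_target, smoke_test):
    """Point hostname at the green environment only if it passes smoke tests.

    Returns the previous (blue) target so the change can be rolled back.
    """
    blue_target = dns.resolve(hostname)
    if not smoke_test(green_target):
        raise RuntimeError(f"smoke test failed for {green_target}; keeping {blue_target}")
    dns.upsert(hostname, green_target)
    return blue_target


dns = DnsTable()
dns.upsert("www.example.com", "aem-blue.internal")  # hypothetical endpoints

# Placeholder smoke test; a real one would request key pages from green.
previous = cut_over(dns, "www.example.com", "aem-green.internal",
                    smoke_test=lambda target: target.endswith(".internal"))
print(dns.resolve("www.example.com"), "| rollback target:", previous)

# Rolling back is the same one-record change in the other direction.
dns.upsert("www.example.com", previous)
```

Because resolvers cache records until their TTL expires, some clients may keep reaching the old environment for a while after the switch, which is one reason to keep blue running until traffic has drained.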

Sky's the Limit for AEM with AWS Features and Capabilities

As a market leader, AEM is used by customers as the foundation of their digital marketing platforms. Together, AWS and AEM offer many third-party integration opportunities, such as blogs, along with additional tools for supporting mobile delivery, analytics, and big data management.

The AWS and AEM combination also makes it easier to build new features. With services such as Amazon Simple Notification Service (Amazon SNS), Amazon Simple Queue Service (Amazon SQS), and AWS Lambda, AEM functionality can be integrated with third-party APIs in a decoupled manner. AWS provides a clean, manageable, and auditable approach to decoupled integration with back-end systems such as CRM and e-commerce systems.
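Decoupled integration of this kind is often built as a fan-out: AEM publishes an event to an SNS topic, each back-end system has its own SQS queue subscribed to that topic, and a consumer (for example a Lambda function) drains each queue independently. The sketch below models that flow with in-memory Python queues purely to show the shape of the pattern. It does not call AWS, and the `Topic` class, event fields, and subscriber names are hypothetical.

```python
import json
from collections import deque


class Topic:
    """In-memory stand-in for a pub/sub topic (the role SNS plays)."""

    def __init__(self):
        self.subscriptions = {}  # subscriber name -> its own queue

    def subscribe(self, name):
        queue = deque()  # stand-in for one SQS queue per back-end system
        self.subscriptions[name] = queue
        return queue

    def publish(self, event):
        # Fan-out: every subscriber receives its own copy of the message.
        message = json.dumps(event)
        for queue in self.subscriptions.values():
            queue.append(message)


def drain(queue, handler):
    """Stand-in for a consumer (e.g. a Lambda function) processing one queue."""
    results = []
    while queue:
        results.append(handler(json.loads(queue.popleft())))
    return results


topic = Topic()
crm_queue = topic.subscribe("crm")
shop_queue = topic.subscribe("ecommerce")

# Hypothetical event emitted when a page is published in AEM.
topic.publish({"type": "page.published", "path": "/content/site/en/offer"})

print(drain(crm_queue, lambda e: f"CRM saw {e['type']}"))
print(drain(shop_queue, lambda e: f"shop updated {e['path']}"))
```

The publisher never knows which systems are listening, so a slow or failing CRM consumer does not block the e-commerce update; that independence is the point of decoupling.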

24/7 Global Availability of AEM with Buddy AWS

Moving to the cloud helps fulfill requirements for greater agility and innovation. In a traditional on-premises environment, every innovation needed new infrastructure and more capital expenditure (CapEx). Here again, the golden combination of AWS and AEM makes things easier and more agile. The AWS Cloud model gives you the agility to quickly spin up new instances and to try out new services without large upfront investments. AWS's pay-for-what-you-use pricing model is a real savior for these activities.

AWS Global Infrastructure is available across 24 geographic regions around the globe (at the time of writing), enabling customers to deploy on a global footprint quickly and easily.

Major Security Concerns Handled with High Compliance

Security is a major concern for any AEM website, and AWS gives you the control and confidence of a safe, flexible, and secure cloud computing environment. AWS helps improve your ability to meet core security and compliance requirements with a comprehensive set of services and features. Its compliance certifications and attestations are assessed by third-party, independent auditors.

Running AEM on AWS provides customers with the benefits of leveraging the compliance and security capabilities of AWS, along with the ability to monitor and audit access to AEM using AWS Security, Identity and Compliance services.

Continued in Part 2...

#real-time health data tracking#EHR integration#cloud-based security#chronic disease management#hospital readmission reduction#operational efficiency#healthcare compliance#a user-friendly interface#scalability#patient engagement#healthcare innovation.

How to Integrate Invoice Maker Tools with Your Accounting Software

In today's fast-paced business world, efficiency and accuracy are paramount when managing financial data. One essential aspect of this is invoicing. As businesses grow, manually creating and managing invoices becomes more cumbersome. That's where invoice maker tools come into play, allowing you to quickly generate professional invoices. However, to truly streamline your financial workflow, it’s important to integrate these tools with your accounting software.

Integrating invoice maker tools with your accounting software can help automate the process, reduce human error, and improve overall productivity. This article will walk you through how to integrate your invoice maker tools with accounting software effectively, ensuring smoother operations for your business.

1. Choose the Right Invoice Maker Tool

Before integration, ensure you have selected an invoice maker tool that suits your business needs. Most invoice maker tools offer basic features such as customizable templates, tax calculations, and payment tracking. However, the integration potential is an important factor to consider.

Look for an invoice maker tool that offers:

Cloud-based features for easy access and collaboration.

Customizable templates for branding.

Multi-currency support (if you do international business).

Integration capabilities with various accounting software.

Examples of popular invoice maker tools include Smaket, QuickBooks Invoice, FreshBooks, and Zoho Invoice.

2. Check Compatibility with Your Accounting Software

Not all invoice maker tools are compatible with every accounting software. Before proceeding with the integration, confirm that both your invoice maker tool and accounting software are capable of syncing with each other.

Common accounting software packages that integrate with invoice tools include:

QuickBooks

Smaket

Xero

Sage

Wave Accounting

Zoho Books

Most software providers will indicate which tools can integrate with their platform. Check for available APIs, plugins, or built-in integration features.

3. Use Built-in Integrations or APIs

Many modern invoice maker tools and accounting software platforms come with built-in integrations. These are often the easiest to set up and manage.

If you choose a platform that does not offer a built-in integration, you can use APIs (Application Programming Interfaces) to link the two systems. APIs are a more technical option, but they provide greater flexibility and customization.

4. Set Up the Integration

Once you've confirmed that the invoice tool and accounting software are compatible, follow the setup process to connect both tools.

The typical steps include:

Access your accounting software: Log into your accounting software and navigate to the integration settings or marketplace.

Search for the invoice maker tool: In the marketplace or integration section, look for the invoice tool you are using.

Connect accounts: Usually, you’ll be asked to sign into your invoice maker tool from within the accounting software and authorize the integration.

Map your fields: You may need to map invoice fields (like customer names, amounts, or due dates) to corresponding fields in the accounting software to ensure the data flows seamlessly.
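Field mapping is essentially a translation table between the two systems' schemas. A minimal sketch, assuming hypothetical field names on both sides (real tools define their own schemas, usually listed in their API or import documentation):

```python
from datetime import date

# Hypothetical mapping: invoice-tool field name -> accounting-software field name.
FIELD_MAP = {
    "client_name": "CustomerName",
    "total": "Amount",
    "due": "DueDate",
    "invoice_no": "DocNumber",
}


def map_invoice(invoice, field_map=FIELD_MAP):
    """Translate one invoice record into the accounting system's field names.

    Raises KeyError if any mapped field is missing, so incomplete records are
    caught before syncing instead of being pushed with blanks.
    """
    missing = [src for src in field_map if src not in invoice]
    if missing:
        raise KeyError(f"invoice is missing fields: {missing}")
    return {
        # Dates are sent as ISO 8601 text, a widely accepted interchange format.
        dst: invoice[src].isoformat() if isinstance(invoice[src], date) else invoice[src]
        for src, dst in field_map.items()
    }


record = {"client_name": "Acme Ltd", "total": 1250.0,
          "due": date(2024, 7, 31), "invoice_no": "INV-0042"}
print(map_invoice(record))
```

Keeping the mapping in one table makes it easy to review, and failing loudly on missing fields surfaces sync problems at setup time rather than in month-end reports.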

5. Test the Integration

After the integration is complete, it’s crucial to test whether the connection between the invoice maker and accounting software is working as expected. Generate a sample invoice and check if the details appear correctly in your accounting software. Confirm that invoices are synced, and ensure payment status updates automatically.

Test for:

Accurate syncing of client details: Ensure names, addresses, and payment history are transferred correctly.

Real-time updates: Check that any changes made to invoices in the invoice tool reflect in your accounting software.

Reporting features: Verify that your financial reports, such as profit and loss statements, include data from the invoices.
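One way to run these checks repeatably is a small reconciliation script that compares invoices exported from the invoice tool with the records the accounting software received. The sketch assumes both sides have already been exported as lists of dictionaries keyed by a hypothetical `invoice_no` field; how you export them depends on your tools.

```python
def reconcile(invoice_tool_rows, accounting_rows, key="invoice_no",
              fields=("amount", "status")):
    """Compare two exports and report what did not sync correctly."""
    tool = {row[key]: row for row in invoice_tool_rows}
    books = {row[key]: row for row in accounting_rows}

    report = {
        "missing_in_accounting": sorted(tool.keys() - books.keys()),
        "unexpected_in_accounting": sorted(books.keys() - tool.keys()),
        "mismatched": [],
    }
    # For invoices present on both sides, compare the chosen fields.
    for inv in sorted(tool.keys() & books.keys()):
        for field in fields:
            ours, theirs = tool[inv].get(field), books[inv].get(field)
            if ours != theirs:
                report["mismatched"].append((inv, field, ours, theirs))
    return report


# Hypothetical sample exports.
tool_export = [
    {"invoice_no": "INV-1", "amount": 100.0, "status": "paid"},
    {"invoice_no": "INV-2", "amount": 250.0, "status": "sent"},
    {"invoice_no": "INV-3", "amount": 75.0, "status": "sent"},
]
books_export = [
    {"invoice_no": "INV-1", "amount": 100.0, "status": "open"},  # payment not synced
    {"invoice_no": "INV-2", "amount": 250.0, "status": "sent"},
]
print(reconcile(tool_export, books_export))
```

Here the report flags INV-3 as never reaching the accounting software and INV-1 as a payment status that did not sync, which are exactly the failures the test list above is meant to catch.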

6. Automate Invoicing and Payments

Once the integration is up and running, set up automated workflows. With the right integration, you can automate recurring invoices, late payment reminders, and payment receipts. This reduces manual effort and ensures consistency in your accounting.
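Most tools configure recurring invoices and reminders through settings rather than code, but the underlying scheduling logic is simple. A sketch, assuming a monthly billing cycle and a hypothetical reminder policy of 3, 7, and 14 days after the due date:

```python
import calendar
from datetime import date, timedelta

REMINDER_OFFSETS_DAYS = (3, 7, 14)  # hypothetical late-payment reminder policy


def add_months(d, months):
    """Shift a date by whole months, clamping to the last day of short months."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def recurring_issue_dates(first_issue, count):
    """Issue dates for a monthly recurring invoice.

    Each date is computed from the first issue date (not from the previous
    one), so a 31st-of-month schedule does not drift after February.
    """
    return [add_months(first_issue, i) for i in range(count)]


def reminder_dates(due, paid=False):
    """Dates on which to send late-payment reminders (none once paid)."""
    if paid:
        return []
    return [due + timedelta(days=n) for n in REMINDER_OFFSETS_DAYS]


print(recurring_issue_dates(date(2024, 1, 31), 3))  # Jan 31, Feb 29, Mar 31
print(reminder_dates(date(2024, 3, 1)))             # Mar 4, Mar 8, Mar 15
```

Checking the `paid` flag before sending matters: a reminder for an invoice that was already paid is the kind of error integration is supposed to prevent.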

7. Monitor and Maintain the Integration

Just because the integration is set up doesn't mean it's a "set it and forget it" situation. Regularly monitor the syncing process to ensure everything is working smoothly.

Keep an eye on:

Software updates: Regular updates from either your accounting software or invoice maker tool might affect the integration. Always check for compatibility after any software updates.

Backup and security: Ensure your data is securely backed up, and verify that integration tools comply with security standards.

8. Benefits of Integration

By integrating invoice maker tools with your accounting software, you’ll enjoy several key benefits:

Time Savings: Automating the invoicing process frees up time for you to focus on other important aspects of your business.

Improved Accuracy: With automatic syncing, you reduce the risk of errors that often come with manual data entry.

Better Financial Management: Real-time data syncing allows for accurate tracking of income, expenses, and cash flow, which helps with budgeting and financial forecasting.

Enhanced Customer Experience: Timely and accurate invoicing helps maintain a professional image and reduces confusion with clients.

Conclusion

Integrating invoice maker tools with accounting software is a smart move for businesses that want to streamline their financial operations. By selecting the right tools, following the integration steps, and ensuring regular maintenance, you can save time, improve accuracy, and focus on growing your business. Don’t let manual invoicing slow you down; leverage modern tools to automate your processes and boost efficiency.

#accounting#software#gst#smaket#billing#gst billing software#accounting software#invoice#invoice software#cloud accounting software#benefits of cloud accounting#financial software#business accounting tools#cloud-based accounting#real-time financial insights#scalable accounting solutions#cost-effective accounting software#cloud accounting security#automated accounting software#business accounting software#cloud accounting features
