#Best public cloud hosting service

Managed Private Cloud Hosting India Provider - Real Cloud

One of the top providers of managed private cloud hosting in India, Real Cloud offers scalable, secure, and dependable cloud solutions that are customized to meet your company's demands. Real Cloud guarantees your company's best performance, data security, and smooth operations with its state-of-the-art infrastructure and knowledgeable management. We offer thorough support and a customized approach, whether your goal is to optimize your current system or move to the cloud. Experience unparalleled uptime, flexibility, and cutting-edge cloud technologies by putting your trust in Real Cloud for all of your cloud hosting requirements.

https://realcloud.in/managed-private-cloud-hosting-provider-india/

#Managed Private Cloud Hosting India Provider#Best public cloud hosting service#Managed Cloud Hosting Services

Humans are weird: The fall of Reservoir

From the audio recording of Frin Yuel, Retired Artark, Recipient of the Stone of Valor, Hero of the Battle of Reservoir. Recordings restricted from public distribution by order of Central Command.

“I have been called many titles over my years of service, but there has been none more insulting to me than the “Hero of Reservoir”.

There was nothing heroic about that engagement; at least not from our side of the battle.

Yes, yes, I know; what madness do I speak against our glorious people to not call us all heroes on the field of battle. Hear this old soldier out and decide after if your judgment is as strong as you think.

We were halfway through the first contact war with humanity when we stumbled upon their core world of Reservoir. It was a backwater colony planet that had just transitioned from a colony into a functioning world of their empire when our fleets darkened their skies.

By that time I had been in several intense battles with the humans, but this was the first time we were attacking a well-established metropolitan world of theirs. At best our early skirmishes had been in space or along resource worlds that had their mining operations established.

The orbital battle was over quickly. The human planet had no orbital defense platforms and only a small fleet was present which was quickly swept aside. No sooner had the last of the human ships been destroyed in low orbit above the world than the ground invasion began.

I remember watching as the first and second wave of our infantry forces detached from the troop carriers and began their descent below the cloud cover. My war host was in the third wave so while we waited for deployment we watched the video feeds of the first and second.

It was not a smooth landing.

The moment they broke the cloud cover they were met with withering barrages of anti-aircraft fire from emplaced redoubts and mobile vehicles. Scores of dropships were violently ripped apart or had their engines damaged and spiraled out to the surface below. I can remember hearing troops in the latter calling out for help right up until the moment they impacted the ground and the feed went silent.

It is not easy to listen to your comrades die... I can still hear them sometimes in my dreams. Even now after all these years I can close my eyes and listen to their tortured souls calling out to us again and again...

……….

Apologies; I got a bit sidetracked there.

Eventually the second wave was able to carve out safe landing zones and signaled the third wave to deploy.

We launched with vengeance in our hearts and fire in our bellies. Our one purpose now was to avenge our fallen friends and shatter whatever human fools had slain them.

The humans for their part did not make our task easy. Over the span of several weeks we had to grind their resistance down meter by bloody meter, losing thousands of warriors with the capture of each one of their cities. Yet our resolve was unwavering and though our losses mounted the day finally came when I found myself standing outside the final human bastion of their world.

Even when cornered like vermin the humans refused to surrender. We shelled their city for days, reducing their towers of stone and metal to rubble and yet they only burrowed deeper and became that much harder to dislodge. Vehicles that went into the city were beset on all sides by craven hit and run attacks, while our scouts were ambushed and cut down by well concealed snipers. This went on for several days until our commander had finally had enough.

When the order finally came to storm the city a great war cry was let out from our warriors and we poured into the city. I wish I could say there was some battle plan or larger strategic picture we were following, but the reality was we were storming one building at a time before advancing to the next.

That is where I found my worthy foe.

Within the heart of sector G17 there were reports of a lone human soldier causing untold damage to our attack. I ignored the reports at first, but as the day progressed the reports continued to come in only far worse. Now they said the human soldier had slain a hundred warriors and still stood their ground. By the end of my fourth block cleared I was hearing that an entire cohort had been wiped out and now warriors were avoiding the area.

At this notion of fear spreading through the ranks of my brothers I was filled with a seething rage and made my way to sector G17 to confront this human champion myself. It was not hard to find them, as the trail of bodies led straight to them. As I followed the trail I realized that the reports had not exaggerated the casualty list; if anything they had underestimated the dead.

Standing at the entrance to a metal bunker of some sort stood the foe I sought. They wore power armor standard to their people but damaged in several places. The paint had long since been scorched away by ricochets, their once proud cloak torn in a dozen places and hanging limply from their waist; yet their rifle was still firmly clutched in their hands so tightly I wondered if even the gods themselves could pry it from their grasp.

While I approached the warrior I saw three of my fellow soldiers come forward and try to slay the human first. The first went down with a deep hole in their chest where the human’s plasma shot had carved through them. The second warrior used this opportunity to close the distance with the human but with a swift backhand from the power gauntlet their neck was snapped and they collapsed to the ground. The third soldier made it close enough to land a blow against the human, adding to the collection of gashes already dotting the armor. Their combat blade dug deep between the leg joints and the human let out a cry of pain. The third soldier twisted the knife inside the joint, reveling in the victory to come. I watched as the human let their weapon fall from their hands and clasped the third warrior’s head between their mighty gauntlets. In a gruesome and morbid motion the human crushed the third warrior’s skull like a grape and let the broken body fall to the ground.

The human stood motionless after the melee, which to my surprise had taken less than a minute to complete. They made to pick up their fallen weapon as they finally registered my presence, but the blade wound had done more damage than they expected, causing them to tumble to the ground with a loud bang.

I watched for a moment as they crawled towards it in an attempt to bring it to bear before I casually kicked it out of their reach. It was then that more of my warrior brethren began to flood into the area and saw me standing over the human that had done such horrendous damage to our forces. One by one they began chanting my name as if I had been the one to bring the foul beast low and called for me to end their life once and for all; but all I could focus on was the human before me.

Through their visor I saw the face of the human looking up at me. A thin red stream of blood ran from the corner of their mouth with specks of blood dotting the inside of the helmet from where they had coughed it. Their eyes... even though their body was broken and defeated, their eyes never once showed a hint of remorse or pleading as they fixed me with a death glare. If it was possible I half imagine they were trying to kill me with their stare right there and then before I emptied my clip into their chest cavity.

I just stood there with my finger held down on the trigger as round after round of plasma energy burned into them while the surrounding soldiers cheered. The human died halfway through the clip but I kept my finger firmly on the trigger until every shot was emptied.

As you know, after that I was given the title “Hero of Reservoir” for I had seemingly killed the human butcher all by myself. There were of course the video feeds from the helmets of the warriors that came before me that contradicted that sentiment, but Central Command quickly quashed that notion, erasing or restricting what footage there was while fabricating their own that made me out to be the “Hero” after all. With the substantial losses they had taken claiming the planet they needed someone they could hoist up and show the homeworld as a sign of admiration and prowess in our war against the humans.

Like I said before I never cared for the name. Not because it was based on a lie, but from what I discovered when I went to investigate the bunker the human soldier had been so ferociously defending.

It took several explosive charges to pop off the hinges but with a loud thunderous boom the door finally gave way and I led a war party inside. We had expected some sort of redoubt or military bunker and went in with our weapons firing on anything that moved; which was fortunate as the door led into a series of tunnels dotting the city filled with humans.

My fellow warriors were lost to the blood lust and carved their way through the humans as if they were made of paper while I stopped and examined the nearest fallen human.

They were a frail thing, not half the size of a normal human adult. I believe they were called “children” by their cultural standards and were designated as the youth of the species. The child lay huddled in a corner they had attempted to hide in when the breaching charges had gone off but were caught by the explosion nonetheless and died.

As I gently pulled on them to turn them around I saw that the child had been holding something tightly against their chest. When I saw what it was I recoiled and nearly fell over another dead human from my realization.

The child had been clutching a stuffed toy animal, not a side arm as my fellow warriors had believed.

With a grim realization I came to the conclusion that this was not a military bunker or the last vestiges of the human military lurking within the walls of these tunnels. They were human civilians who had been led into the depths of their city in the hopes they could survive the coming battle.

I tried to call off the attack into the lower levels but by then our warriors were lost to the haze of battle. By the end some three hundred human civilians were massacred in that bunker; their bodies sealed within a rocky tomb when we detonated charges to collapse the bunker complex.

That is why I hate being called a hero for that awful battle. I am a pretender, a charlatan, a fraud; held up to justify the deaths on both sides as if a statue of me will somehow make us forget what we had done.

The real Hero of Reservoir died by my hand, giving their life to defend the defenseless.”

#humans are insane#humans are space oddities#humans are weird#humans are space orcs#scifi#story#writing#original writing#niqhtlord01#ai generated art#stable diffusion

Complete Hands-On Guide: Upload, Download, and Delete Files in Amazon S3 Using EC2 IAM Roles

Are you looking for a secure and efficient way to manage files in Amazon S3 using an EC2 instance? This step-by-step tutorial will teach you how to upload, download, and delete files in Amazon S3 using IAM roles for secure access. Say goodbye to hardcoding AWS credentials and embrace best practices for security and scalability.

What You'll Learn in This Video:

1. Understanding IAM Roles for EC2:
- What are IAM roles?
- Why should you use IAM roles instead of hardcoding access keys?
- How to create and attach an IAM role with S3 permissions to your EC2 instance.

2. Configuring the EC2 Instance for S3 Access:
- Launching an EC2 instance and attaching the IAM role.
- Setting up the AWS CLI on your EC2 instance.

3. Uploading Files to S3:
- Step-by-step commands to upload files to an S3 bucket.
- Use cases for uploading files, such as backups or log storage.

4. Downloading Files from S3:
- Retrieving objects stored in your S3 bucket using AWS CLI.
- How to test and verify successful downloads.

5. Deleting Files in S3:
- Securely deleting files from an S3 bucket.
- Use cases like removing outdated logs or freeing up storage.

6. Best Practices for S3 Operations:
- Using least privilege policies in IAM roles.
- Encrypting files in transit and at rest.
- Monitoring and logging using AWS CloudTrail and S3 access logs.
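As a rough illustration of the least-privilege practice listed above, the Python sketch below builds an IAM policy document that permits only listing, uploading, downloading, and deleting within a single bucket. The bucket name `example-bucket` and the helper `build_s3_policy` are hypothetical; the policy actually used in the video is not shown in this description.

```python
import json


def build_s3_policy(bucket: str) -> dict:
    """Return an IAM policy limited to one bucket (hypothetical helper)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is a bucket-level action, so it targets the bucket ARN.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # Upload, download, and delete are object-level actions,
                # so they target the objects inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


if __name__ == "__main__":
    # "example-bucket" is a placeholder, not a bucket from the tutorial.
    print(json.dumps(build_s3_policy("example-bucket"), indent=2))
```

A policy this narrow would let `aws s3 ls s3://example-bucket` succeed, but listing every bucket in the account with a bare `aws s3 ls` needs the additional `s3:ListAllMyBuckets` permission.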

Why IAM Roles Are Essential for S3 Operations:
- Secure Access: IAM roles provide temporary credentials, eliminating the risk of hardcoding secrets in your scripts.
- Automation-Friendly: Simplify file operations for DevOps workflows and automation scripts.
- Centralized Management: Control and modify permissions from a single IAM role without touching your instance.

Real-World Applications of This Tutorial:
- Automating log uploads from EC2 to S3 for centralized storage.
- Downloading data files or software packages hosted in S3 for application use.
- Removing outdated or unnecessary files to optimize your S3 bucket storage.

AWS Services and Tools Covered in This Tutorial:
- Amazon S3: Scalable object storage for uploading, downloading, and deleting files.
- Amazon EC2: Virtual servers in the cloud for running scripts and applications.
- AWS IAM Roles: Secure and temporary permissions for accessing S3.
- AWS CLI: Command-line tool for managing AWS services.

Hands-On Process:

Step 1: Create an S3 Bucket
- Navigate to the S3 console and create a new bucket with a unique name.
- Configure bucket permissions for private or public access as needed.

Step 2: Configure IAM Role
- Create an IAM role with an S3 access policy.
- Attach the role to your EC2 instance to avoid hardcoding credentials.

Step 3: Launch and Connect to an EC2 Instance
- Launch an EC2 instance with the IAM role attached.
- Connect to the instance using SSH.

Step 4: Install AWS CLI and Configure
- Install AWS CLI on the EC2 instance if not pre-installed.
- Verify access by running `aws s3 ls` to list available buckets.

Step 5: Perform File Operations
- Upload files: Use `aws s3 cp` to upload a file from EC2 to S3.
- Download files: Use `aws s3 cp` to download files from S3 to EC2.
- Delete files: Use `aws s3 rm` to delete a file from the S3 bucket.

Step 6: Cleanup
- Delete test files and terminate resources to avoid unnecessary charges.
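The file operations in Step 5 could be scripted roughly as follows. This is only a sketch: the bucket name, object key, file paths, and helper names are all hypothetical, and it assumes the AWS CLI is installed on an instance whose attached IAM role supplies the credentials, so no access keys appear in the script.

```python
import subprocess
from typing import List


def s3_uri(bucket: str, key: str) -> str:
    """Build the s3://bucket/key form that the AWS CLI expects."""
    return f"s3://{bucket}/{key}"


def upload_cmd(local_path: str, bucket: str, key: str) -> List[str]:
    # Upload: local file first, S3 destination second.
    return ["aws", "s3", "cp", local_path, s3_uri(bucket, key)]


def download_cmd(bucket: str, key: str, local_path: str) -> List[str]:
    # Download: the same command with source and destination swapped.
    return ["aws", "s3", "cp", s3_uri(bucket, key), local_path]


def delete_cmd(bucket: str, key: str) -> List[str]:
    return ["aws", "s3", "rm", s3_uri(bucket, key)]


def run(cmd: List[str]) -> None:
    """Execute a command; raises CalledProcessError if the CLI reports failure."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Placeholder names; this only prints the commands instead of running them.
    for cmd in (
        upload_cmd("app.log", "example-bucket", "logs/app.log"),
        download_cmd("example-bucket", "logs/app.log", "restored.log"),
        delete_cmd("example-bucket", "logs/app.log"),
    ):
        print(" ".join(cmd))
```

Passing each command as a list rather than a single shell string avoids quoting problems with file names that contain spaces. Call `run(...)` on the instance itself once the role is attached.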

Why Watch This Video? This tutorial is designed for AWS beginners and cloud engineers who want to master secure file management in the AWS cloud. Whether you're automating tasks, integrating EC2 and S3, or simply learning the basics, this guide has everything you need to get started.

Don’t forget to like, share, and subscribe to the channel for more AWS hands-on guides, cloud engineering tips, and DevOps tutorials.

#youtube#aws iam#iam role aws#aws#aws permission#aws iam roles#aws cloud#aws s3#identity & access management#aws iam policy#Download and Delete Files in Amazon#IAMrole#AWS#cloudolus#S3#EC2

VPS vs. Dedicated vs. cloud hosting: How to choose?

A company's website is often one of the most significant components of the business. When you begin developing a new website, selecting a hosting platform is one of the first things to do. Whether you are starting an online store, building a blog, or making a landing page for a service, you need to decide which kind of server is best suited to your organization.

This tutorial first explains what web hosting is and then examines the types of hosting servers now available, comparing shared, VPS, dedicated, and cloud hosting. With terminology such as "dedicated," "VPS," and "cloud" swirling around, how can you evaluate which approach is the most appropriate for your specific requirements?

Each sort of hosting has advantages and disadvantages, and each is tailored to certain use cases and budgets. We will conclude with some advice on how to select the web hosting option that is most suitable for your requirements. Let's jump right in.

Web hosting—how does it work?

Web hosting providers supply the storage and access that companies need to host their websites online. A website is made up of HTML, CSS, photos, videos, and other data, all of which must be stored on a reliable Internet-connected server. A domain name is also needed to make your website public: when someone enters your domain in their browser, it is translated into an IP address that leads the browser to your website's files. It is convenient to buy the domain together with your hosting.
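The translation from a domain name to an IP address can be observed with a few lines of Python. This is a minimal sketch using the operating system's resolver; `localhost` is used so that it runs without Internet access, and the helper name `resolve` is just an illustration.

```python
import ipaddress
import socket


def resolve(domain: str) -> str:
    """Return one IPv4 address for a domain name, as the OS resolver sees it."""
    return socket.gethostbyname(domain)


if __name__ == "__main__":
    address = resolve("localhost")
    # The loopback check confirms the name maps back to this machine.
    print(address, ipaddress.ip_address(address).is_loopback)
```

Replacing `localhost` with a real domain shows the address a browser would contact for that site.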

The best web hosting providers supply the infrastructure and services needed to make your website accessible to Internet users. Web hosting firms run servers that store your website's files and make them available when someone types in your domain name or clicks on a link. When a visitor clicks a link to the website or enters its URL in their browser, your web server sends back the requested file and any related files, and the browser then displays your website.
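To make the request-and-response cycle concrete, the sketch below starts a small web server on the local machine, stores one HTML file in it, and fetches that file the way a browser would. It relies only on Python's standard library; the file contents and helper names are made up for the example, and a production host would use a dedicated web server rather than this module.

```python
import http.server
import pathlib
import tempfile
import threading
import urllib.request
from functools import partial


def serve_directory(directory: str) -> http.server.ThreadingHTTPServer:
    """Start a local HTTP server for `directory` on a free port."""
    handler = partial(http.server.SimpleHTTPRequestHandler, directory=directory)
    server = http.server.ThreadingHTTPServer(("127.0.0.1", 0), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch(url: str) -> str:
    """Request a URL directly, ignoring any proxy settings, and return the body."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(url) as response:
        return response.read().decode("utf-8")


def demo() -> str:
    with tempfile.TemporaryDirectory() as site:
        # The "website" is a single stored file.
        pathlib.Path(site, "index.html").write_text("<h1>Hello</h1>", encoding="utf-8")
        server = serve_directory(site)
        try:
            port = server.server_address[1]
            return fetch(f"http://127.0.0.1:{port}/index.html")
        finally:
            server.shutdown()
            server.server_close()


if __name__ == "__main__":
    print(demo())
```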

VPS hosting: What is it?

VPS hosting, which stands for virtual private server hosting, is a type of hosting that falls somewhere between shared hosting and dedicated hosting. Several virtual private server instances are hosted on a physical server, which is also referred to as the "parent." Each instance is only permitted to use a certain portion of the hardware resources available on the parent server. Each instance functions as its own server environment and is rented out to an individual customer. To put it another way, you are renting a separate portion of a physical server.

The pricing for these plans varies, and compared to shared hosting they offer better performance, security, and room to grow. Virtualization technology partitions a single server into multiple virtual instances, each of which functions as its own independent, private server environment. With VPS hosting, a company can get an environment similar to a dedicated server at a significantly lower cost.

There are clear differences between virtual private servers (VPS) and dedicated servers, yet neither one is superior to the other. Which hosting environment suits you and your team depends on the requirements of your company.

Dedicated hosting: What is it?

What exactly is dedicated hosting? As the name suggests, a dedicated server is a single physical machine that belongs to you alone. You control every piece of hardware that makes up the server. These machines usually share a data center's network with nearby dedicated servers but do not share any hardware. Although these plans are usually more expensive than shared or VPS hosting, they may offer better speed, security, and adaptability.

This is particularly true if you need custom settings or specific hardware. A business that uses dedicated hosting has its own physical server and uses the server's hardware and software exclusively, not sharing them with any other business. Dedicated servers and VPS work in much the same way; the main difference is that a VPS is a virtual server instance rather than a separate physical machine. Truly dedicated server environments rely on physical hardware to give business owners more control, speed, and security.

Cloud Hosting: What is it?

The term "cloud hosting" refers to a web hosting solution that can be either shared or dedicated. Instead of depending on a single virtual private server (VPS) or dedicated server, it uses a pool of virtual servers to host websites and applications. In a dedicated cloud environment, resources are distributed among a number of virtual servers, typically located in data centers all over the world. In shared and cloud-based environments, multiple users share pooled physical resources. These environments are generally safe to use, although shared resources make them less secure than a dedicated server.

Cloud hosting therefore runs on small partitions of multiple servers at the same time. This is an advantage when a server becomes unavailable. On a dedicated server, an outage is far more serious because it takes the entire system offline. With cloud servers, your system can switch to another server if one of them fails.
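The failover idea can be sketched in a few lines of Python: try each server in the pool in order and use the first one that passes a health check. The server names and the health check here are stand-ins for illustration; real cloud platforms handle this with load balancers and monitoring that are far more involved.

```python
from typing import Callable, Iterable, Optional


def pick_server(servers: Iterable[str], is_healthy: Callable[[str], bool]) -> Optional[str]:
    """Return the first server that passes the health check, or None if all fail."""
    for server in servers:
        if is_healthy(server):
            return server
    return None


if __name__ == "__main__":
    pool = ["server-a", "server-b", "server-c"]  # hypothetical pool
    down = {"server-a"}                          # pretend this one has failed
    print(pick_server(pool, lambda name: name not in down))
```

With a single dedicated server the pool has one entry, so a failed health check leaves nothing to fall back on.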

With cloud servers you do not manage physical hardware yourself; the resources are virtualized. Cloud web hosting can be costly. Because the cost of cloud server hosting is determined by utilization, higher-priced plans typically include greater amounts of storage, random access memory (RAM), and central processing unit (CPU) allocations.

By scaling resources up or down in response to changes in user traffic, startups and technology firms that are launching new web apps can match capacity to demand. Cloud hosting provides rapid scalability, which is beneficial for applications that may face unanticipated growth or abrupt spikes in traffic. When it comes to backing up data, cloud hosting offers a dependable environment. Data can be quickly restored from a cloud backup in the event of a disaster, reducing the amount of time that the system is offline.

How to choose the best web hosting?

When deciding between dedicated, virtual private server (VPS), and cloud hosting, it is vital to understand your specific requirements and evaluate them in relation to your financial constraints. Making a list of your non-negotiable requirements and the items on your wish list is a simple way to get started. From there, you should do some calculations to determine how much money you can afford to spend on a monthly or annual basis.

Finally, search for a solution that provides what you require at a price you can afford. A dedicated web server, for instance, might be worthwhile if you have the financial means and require increased security and dependability. On the other hand, if you are starting out and are not hosting a website that collects sensitive information, shared hosting is a good choice to consider. Whichever type you choose, a web host is far more valuable if it provides reliable support, substantial documentation, and a knowledge base in which you can find the answers to most of your questions.

Conclusion

Dedicated, shared, virtual private server (VPS) and cloud hosting are all excellent choices for a variety of use cases. When it comes to aspiring business owners, bloggers, or developers, the decision frequently comes down to striking a balance between the limits of their budget and the requirements of performance and scalability. Because of its low cost, shared hosting can be the best option for individuals who are just beginning their journey into the realm of digital technology.

Nevertheless, as your online presence expands, you might find that the sturdiness of dedicated servers or the adaptability of virtual private servers (VPS) is more enticing to you. Cloud hosting, on the other hand, is distinguished by its scalability and agility, making it suitable for meeting the requirements of enterprises that are expanding rapidly or applications that have variable traffic.

When it comes down to it, your hosting option needs to be influenced by your particular objectives, your level of technical knowledge, and the growth trajectory that you anticipate. If you take the time to evaluate your specific needs, you will not only ensure that your website functions without any problems, but you will also position yourself for sustained success.

Dollar2host (Dollar2host.com): We provide expert web hosting services for your needs.

elsewhere on the internet: AI and advertising

Bubble Trouble (about AIs trained on AI output and the impending model collapse) (Ed Zitron, Mar 2024)

A Wall Street Journal piece from this week has sounded the alarm that some believe AI models will run out of "high-quality text-based data" within the next two years in what an AI researcher called "a frontier research problem." Modern AI models are trained by feeding them "publicly-available" text from the internet, scraped from billions of websites (everything from Wikipedia to Tumblr, to Reddit), which the model then uses to discern patterns and, in turn, answer questions based on the probability of an answer being correct. Theoretically, the more training data that these models receive, the more accurate their responses will be, or at least that's what the major AI companies would have you believe. Yet AI researcher Pablo Villalobos told the Journal that he believes that GPT-5 (OpenAI's next model) will require at least five times the training data of GPT-4. In layman's terms, these machines require tons of information to discern what the "right" answer to a prompt is, and "rightness" can only be derived from seeing lots of examples of what "right" looks like. ... One (very) funny idea posed by the Journal's piece is that AI companies are creating their own "synthetic" data to train their models, a "computer-science version of inbreeding" that Jathan Sadowski calls Habsburg AI. This is, of course, a terrible idea. A research paper from last year found that feeding model-generated data to models creates "model collapse" — a "degenerative learning process where models start forgetting improbable events over time as the model becomes poisoned with its own projection of reality."

...

The AI boom has driven global stock markets to their best first quarter in 5 years, yet I fear that said boom is driven by a terrifyingly specious and unstable hype cycle. The companies benefitting from AI aren't the ones integrating it or even selling it, but those powering the means to use it — and while "demand" is allegedly up for cloud-based AI services, every major cloud provider is building out massive data center efforts to capture further demand for a technology yet to prove its necessity, all while saying that AI isn't actually contributing much revenue at all. Amazon is spending nearly $150 billion in the next 15 years on data centers to, and I quote Bloomberg, "handle an expected explosion in demand for artificial intelligence applications" as it tells its salespeople to temper their expectations of what AI can actually do. I feel like a crazy person every time I read glossy pieces about AI "shaking up" industries only for the substance of the story to be "we use a coding copilot and our HR team uses it to generate emails." I feel like I'm going insane when I read about the billions of dollars being sunk into data centers, or another headline about how AI will change everything that is mostly made up of the reporter guessing what it could do.

They're Looting the Internet (Ed Zitron, Apr 2024)

An investigation from late last year found that a third of advertisements on Facebook Marketplace in the UK were scams, and earlier in the year UK financial services authorities said it had banned more than 10,000 illegal investment ads across Instagram, Facebook, YouTube and TikTok in 2022 — a 1,500% increase over the previous year. Last week, Meta revealed that Instagram made an astonishing $32.4 billion in advertising revenue in 2021. That figure becomes even more shocking when you consider Google's YouTube made $28.8 billion in the same period . Even the giants haven’t resisted the temptation to screw their users. CNN, one of the most influential news publications in the world, hosts both its own journalism and spammy content from "chum box" companies that make hundreds of millions of dollars driving clicks to everything from scams to outright disinformation. And you'll find them on CNN, NBC and other major news outlets, which by proxy endorse stories like "2 Steps To Tell When A Slot Is Close To Hitting The Jackpot." These “chum box” companies are ubiquitous because they pay well, making them an attractive proposition for cash-strapped media entities that have seen their fortunes decline as print revenues evaporated. But they’re just so incredibly awful. In 2018, the (late, great) podcast Reply All had an episode that centered around a widower whose wife’s death had been hijacked by one of these chum box advertisers to push content that, using stolen family photos, heavily implied she had been unfaithful to him. The title of the episode — An Ad for the Worst Day of your Life — was fitting, and it was only until a massively popular podcast intervened did these networks ban the advert. These networks are harmful to the user experience, and they’re arguably harmful to the news brands that host them. If I was working for a major news company, I’d be humiliated to see my work juxtaposed with specious celebrity bilge, diet scams, and get-rich-quick schemes.

...

While OpenAI, Google and Meta would like to claim that these are "publicly-available" works that they are "training on," the actual word for what they're doing is "stealing." These models are not "learning" or, let's be honest, "training" on this data, because that's not how they work — they're using mathematics to plagiarize it based on the likelihood that somebody else's answer is the correct one. If we did this as a human being — authoritatively quoting somebody else's figures without quoting them — this would be considered plagiarism, especially if we represented the information as our own. Generative AI allows you to generate lots of stuff from a prompt, allowing you to pretend to do the research much like LLMs pretend to know stuff. It's good for cheating at papers, or generating lots of mediocre stuff. LLMs also tend to hallucinate, a virtually-unsolvable problem where they authoritatively make incorrect statements that creates horrifying results in generative art and renders them too unreliable for any kind of mission critical work. Like I’ve said previously, this is a feature, not a bug. These models don’t know anything — they’re guessing, based on mathematical calculations, as to the right answer. And that means they’ll present something that feels right, even though it has no basis in reality. LLMs are the poster child for Stephen Colbert’s concept of truthiness.


Text

Best Practices for Data Lifecycle Management to Enhance Security

Securing all communication and data transfer channels in your business requires thorough planning, skilled cybersecurity professionals, and long-term risk mitigation strategies. Implementing global data safety standards is crucial for protecting clients’ sensitive information. This post outlines the best practices for data lifecycle management to enhance security and ensure smooth operations.

Understanding Data Lifecycle Management

Data Lifecycle Management (DLM) involves the complete process from data source identification to deletion, including streaming, storage, cleansing, sorting, transforming, loading, analytics, visualization, and security. Regular backups, cloud platforms, and process automation are vital to prevent data loss and database inconsistencies.

While some small and medium-sized businesses may host their data on-site, this approach can expose their business intelligence (BI) assets to physical damages, fire hazards, or theft. Therefore, companies looking for scalability and virtualized computing often turn to data governance consulting services to avoid these risks.

Defining Data Governance

Data governance within DLM involves technologies related to employee identification, user rights management, cybersecurity measures, and robust accountability standards. Effective data governance can combat corporate espionage attempts and streamline database modifications and intel sharing.

Examples of data governance include encryption and biometric authorization interfaces. End-to-end encryption makes unauthorized eavesdropping more difficult, while biometric scans such as retina or thumb impressions enhance security. Firewalls also play a critical role in distinguishing legitimate traffic from malicious visitors.

Best Practices in Data Lifecycle Management Security

Two-Factor Authentication (2FA)

Cybercriminals frequently target user entry points, database updates, and data transmission channels. Relying solely on passwords leaves your organization vulnerable. Multiple authorization mechanisms, such as 2FA, significantly reduce these risks. 2FA often requires a one-time password (OTP) for any significant change, adding an extra layer of security. Because an attacker cannot predict which second factor a given account uses, offering several 2FA options further strengthens your organization’s resilience against security threats.
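To make the OTP step concrete, here is a minimal sketch of how a time-based one-time password can be generated and checked using only the Python standard library. It follows the publicly documented HOTP/TOTP construction (RFC 4226 and RFC 6238); the function names, the 30-second step, and the one-step drift window are illustrative assumptions, not part of any particular product, and a production system would normally rely on a vetted library and secure secret storage.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password for a given counter value (RFC 4226)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low nibble of the last byte
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30) -> str:
    """Time-based OTP: HOTP where the counter is the current 30-second window."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step))


def verify(secret: bytes, submitted: str, at: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept codes from the current window and `window` steps either side."""
    now = time.time() if at is None else at
    counter = int(now // step)
    return any(
        hmac.compare_digest(hotp(secret, counter + drift), submitted)
        for drift in range(-window, window + 1)
        if counter + drift >= 0  # skip windows before the epoch
    )


# Shared secret taken from the RFC 4226 test vectors (never hard-code real secrets).
demo_secret = b"12345678901234567890"
print(totp(demo_secret, at=59))               # 287082
print(verify(demo_secret, "287082", at=59))   # True
```

The small drift window is a design choice: it tolerates clock skew between the user's device and the server at the cost of keeping each code valid slightly longer.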

Version Control, Changelog, and File History

Version control and changelogs are crucial practices adopted by experienced data lifecycle managers. Changelogs list all significant edits and removals in project documentation, while version control groups these changes, marking milestones in a continuous improvement strategy. These tools help detect conflicts and resolve issues quickly, ensuring data integrity. File history, a faster alternative to full-disk cloning, duplicates files and metadata in separate regions to mitigate localized data corruption risks.
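As a rough illustration of the file-history idea, the sketch below copies a file into a separate history directory and verifies the copy with a checksum. The function names and the naming scheme for snapshots are hypothetical; real file-history tools also handle retention, deduplication, and off-site replication, which this sketch does not attempt.

```python
import hashlib
import shutil
import time
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(source: Path, history_dir: Path) -> Path:
    """Copy `source` into `history_dir` under a timestamped, hash-tagged name."""
    history_dir.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%dT%H%M%S")
    checksum = sha256_of(source)
    target = history_dir / f"{source.stem}.{stamp}.{checksum[:12]}{source.suffix}"
    shutil.copy2(source, target)  # copy2 also copies metadata such as timestamps
    if sha256_of(target) != checksum:
        raise IOError(f"snapshot of {source} is corrupted")
    return target
```

Placing `history_dir` on a different disk or region from the source is what provides the protection against localized corruption described above.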

Encryption, Virtual Private Networks (VPNs), and Antimalware

VPNs protect employees, IT resources, and business communications from online trackers. They enable secure access to core databases and applications, maintaining privacy even on public WiFi networks. Encrypting communication channels and following safety guidelines such as periodic malware scans are essential for cybersecurity. Encouraging stakeholders to use these measures ensures robust protection.

Security Challenges in Data Lifecycle Management

Employee Education

Educating employees about the latest cybersecurity implementations is essential for effective DLM. Regular training programs ensure that new hires and experienced executives understand and adopt best practices.

Voluntary Compliance

Balancing convenience and security is a common challenge. While employees may complete security training, consistent daily adoption of guidelines is uncertain. Poorly implemented governance systems can frustrate employees, leading to resistance.

Productivity Loss

Comprehensive antimalware scans, software upgrades, hardware repairs, and backups can impact productivity. Although cybersecurity is essential, it requires significant computing and human resources. Delays in critical operations may occur if security measures encounter problems.

Talent and Technology Costs

Recruiting and developing an in-house cybersecurity team is challenging and expensive. Cutting-edge data protection technologies also come at a high cost. Businesses must optimize costs, possibly through outsourcing DLM tasks or reducing the scope of business intelligence. Efficient compression algorithms and hybrid cloud solutions can help manage storage costs.

Conclusion

The Ponemon Institute found that 67% of organizations are concerned about insider threats. Similar concerns are prevalent worldwide. IBM estimates that the average cost of data breaches will reach 4.2 million USD in 2023. The risks of data loss, unauthorized access, and insecure PII processing are rising. Stakeholders demand compliance with data protection norms and will penalize failures in governance.

Implementing best practices in data lifecycle management, such as end-to-end encryption, version control systems, 2FA, VPNs, antimalware tools, and employee education, can significantly enhance security. Data protection officers and DLM managers can learn from expert guidance, cybersecurity journals, and industry peers’ insights to navigate complex challenges. Adhering to privacy and governance directives offers legal, financial, social, and strategic advantages, boosting long-term resilience against the evolving threats of the information age. Utilizing data governance consulting services can further ensure your company is protected against these threats.


Text

What is Cloud Hosting?: Benefits and Types

Are you a startup owner looking to host your website globally? Are you on a tight budget and looking for an efficient yet economical server hosting solution?

As the world goes digital and everything becomes more accessible, there's a solution that aligns perfectly with your needs: cloud hosting. But if you're wondering what exactly cloud hosting is, you're not alone. This article is designed to help you understand cloud hosting, guiding you through its nuances and illustrating why it might be the ideal solution for your startup.

What is Cloud Hosting?

Cloud hosting is a web hosting service that lets you run your application and website globally in a cost-efficient manner. Your website is hosted on a cluster of servers, so even if one server fails, another takes over and your site keeps loading smoothly when a user visits it.

How is Cloud Hosting different from traditional hosting?

Traditionally, organisations host websites and applications on single physical servers. This method may require purchasing or leasing data centre space. That not only takes up room to house the servers, it also increases staffing costs. And if your website or application experiences a sudden spike in traffic, it will slow down drastically. Cloud hosting, on the other hand, overcomes these challenges by hosting your site globally from wherever you are with just a click. It is more flexible, scalable and cost-efficient. With this method, you pay a cloud service provider to host your site and app across various physical and virtual servers, making them available globally. This is ideal for business owners who are trying to expand their global presence.

How does cloud hosting work?

Cloud hosting uses multiple servers to balance the load and improve the loading speed of the site or application. With this approach, even if one server fails, another kicks in and everything keeps running smoothly. The underlying technology is virtualization, where multiple virtual servers run on a single physical server, each operating independently of the others.
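The load-balancing and failover behaviour described above can be sketched in a few lines of Python. This is only a toy model under simple assumptions: the class name, the server names, and the round-robin policy are illustrative, and real cloud load balancers add health checks, weighting, and session handling.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out servers in turn, skipping any that are marked as down."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._ring = cycle(self.servers)

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        # Try each server at most once per request before giving up.
        for _ in range(len(self.servers)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy servers available")


balancer = RoundRobinBalancer(["server-a", "server-b", "server-c"])
print([balancer.next_server() for _ in range(4)])  # ['server-a', 'server-b', 'server-c', 'server-a']
balancer.mark_down("server-b")                     # simulate a failure
print([balancer.next_server() for _ in range(3)])  # ['server-c', 'server-a', 'server-c']
```

Requests keep being served after `server-b` fails, which is the "another kicks in" behaviour from the visitor's point of view.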

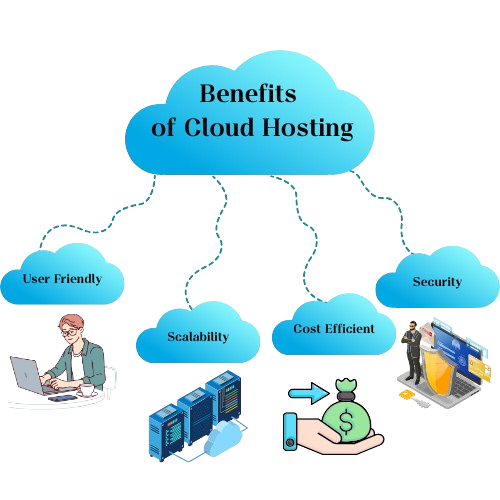

What are the benefits of Cloud hosting?

Cloud hosting is one of the most cost-efficient ways to run your site and application, and it provides immense flexibility.

User Friendly: Not sure what cloud computing is or how it actually works? Wondering how to use it? Don’t worry, cloud service providers are there to help you.

Scalability: You can scale services with a single click and at much lower cost. You don’t need to buy servers, hire someone to maintain them, or find somewhere to house them.

Cost Efficient: Pay only for the services you use. If you need more resources, the cloud service provider will allocate them for you.

Security: Are you worried about your data in the cloud? Rest assured, cloud providers invest heavily in security systems to give you the best protection and keep their security frameworks up to date. They also provide backup and storage mechanisms.

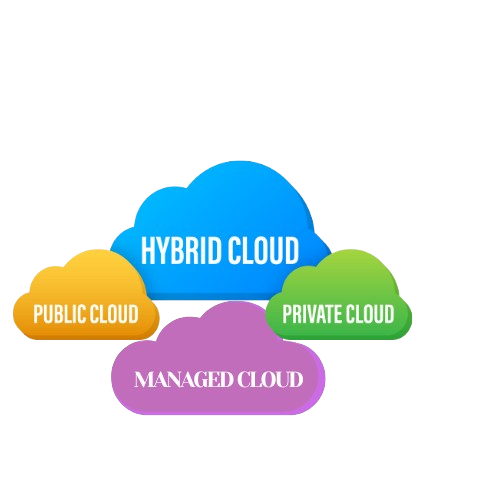

What are the types of Cloud Hosting?

Cloud hosting can be categorised into 4 main types:

1. Public Cloud

Public cloud works in a similar fashion to public transport, where many people travel in one bus and a third-party service provider maintains and manages the service. In a public cloud, the infrastructure is shared by multiple websites, and third-party service providers maintain and manage the hardware.

2. Private Cloud

It is similar to having your own car that's managed by you. A private cloud is built and maintained by your organisation and used only by it.

3. Hybrid Cloud

It is similar to booking an OLA cab: you can either pool the cab and travel with others or travel alone. The choice is entirely yours. Hybrid cloud is a blend of public and private cloud, where you run your website and applications across multiple infrastructures and store data in specific locations.

4. Managed Cloud

It is similar to renting a cab on a monthly basis, where a third party manages all the operations. With managed cloud, third-party cloud providers handle operational tasks such as backups, security and more.

Bottom Line

Cloud hosting represents a transformative approach, giving startups and businesses a flexible, scalable and cost-efficient way to host websites globally. There are 4 types of cloud hosting to choose from: public, private, hybrid and managed cloud. Understanding the fundamentals will help you make informed decisions as you grow digitally.


Text

Best public cloud hosting service-Real Cloud

Real Cloud is your trusted partner for the best public cloud hosting service, delivering top-class performance and reliability. Our advanced cloud solutions ensure seamless scalability, enhanced security, and 24/7 support to meet your business needs. With Real Cloud, you can easily manage your workloads and focus on growth while we handle the technical complexities. Whether you're a startup or an enterprise, our cost-effective hosting services are tailored to give you the ultimate cloud experience. Choose Real Cloud and elevate your online presence today!

#Best public cloud hosting service#MariaDB Database Management#Affordable MariaDB database Managment


Text

Top Tools and Technologies to Use in a Hackathon for Faster, Smarter Development

Participating in a hackathon like those organized by Hack4Purpose demands speed, creativity, and technical prowess. With only limited time to build a working prototype, using the right tools and technologies can give your team a significant edge.

Here’s a rundown of some of the best tools and technologies to help you hack efficiently and effectively.

1. Code Editors and IDEs

Fast coding starts with a powerful code editor or Integrated Development Environment (IDE).

Popular choices include:

Visual Studio Code: Lightweight, extensible, supports many languages

JetBrains IntelliJ IDEA / PyCharm: Great for Java, Python, and more

Sublime Text: Fast and minimalistic with essential features

Choose what suits your language and style.

2. Version Control Systems

Collaborate smoothly using version control tools like:

Git: The most widely used system

GitHub / GitLab / Bitbucket: Platforms to host your repositories, manage issues, and review code

Regular commits and branch management help avoid conflicts.

3. Cloud Platforms and APIs

Leverage cloud services for backend, databases, or hosting without setup hassle:

AWS / Azure / Google Cloud: Often provide free credits during hackathons

Firebase: Real-time database and authentication made easy

Heroku: Simple app deployment platform

Explore public APIs to add extra features like maps, payment gateways, or AI capabilities.

4. Frontend Frameworks and Libraries

Speed up UI development with popular frameworks:

React / Vue.js / Angular: For dynamic, responsive web apps

Bootstrap / Tailwind CSS: Ready-to-use styling frameworks

These tools help build polished interfaces quickly.

5. Mobile App Development Tools

If building mobile apps, consider:

Flutter: Cross-platform, single codebase for iOS and Android

React Native: Popular JavaScript framework for mobile

Android Studio / Xcode: Native development environments

6. Collaboration and Communication Tools

Keep your team synchronized with:

Slack / Discord: Instant messaging and voice/video calls

Trello / Asana: Task and project management boards

Google Docs / Notion: Real-time document collaboration

Effective communication is key under time pressure.

7. Design and Prototyping Tools

Create UI/UX mockups and wireframes using:

Figma: Collaborative design tool with real-time editing

Adobe XD: Comprehensive UI/UX design software

Canva: Simple graphic design tool for quick visuals

Good design impresses judges and users alike.

8. Automation and Deployment

Save time with automation tools:

GitHub Actions / CircleCI: Automate builds and tests

Docker: Containerize applications for consistent environments

Quick deployment lets you demo your project confidently.

Final Thoughts

Selecting the right tools and technologies is crucial for success at a hackathon. The perfect mix depends on your project goals, team skills, and the hackathon theme.

If you’re ready to put these tools into practice, check out upcoming hackathons at Hack4Purpose and start building your dream project!


Text

Why Scalable SAP Commerce Needs the Support of Managed Services

Today, customers expect businesses to deliver flawless, personalized, and scalable service across every channel. SAP Commerce plays a large part in making the transition to omnichannel possible. At the same time, maintaining and scaling large SAP Commerce deployments is a real challenge. Complex infrastructure, changing customer expectations, security issues, and ongoing updates all create problems. That’s why SAP Cloud Managed Services have become indispensable for companies aiming to stay competitive and grow sustainably.

The Growing Complexity of SAP Commerce

SAP Commerce is known for being both flexible and complex: it handles detailed product catalogs, multiple pricing models, and numerous integrations. That power, however, brings added complications. As businesses grow, their SAP Commerce systems must adapt quickly, take on new features, stay online, maintain high performance, and remain secure.

Most in-house teams do not have the expertise, availability, or tooling to handle all of this well. Looking after infrastructure, resolving technical issues, checking compliance, and releasing updates can quickly overwhelm a company's staff. This is where a trusted partner like Royal Cyber, specializing in SAP Cloud Managed Services, adds immense value by offering expertise and round-the-clock support.

What Is SAP Cloud Managed Services?

SAP Cloud Managed Services are a suite of professional services designed to manage, monitor, and optimize your SAP environments. They encompass everything from maintaining system infrastructure and applications to improving performance, ensuring compliance, and controlling costs.

Managed services keep your SAP Commerce platform performing at its best, whether it is hosted on a public, private, or hybrid cloud. Key components include:

24/7 monitoring and incident response

Proactive system maintenance and health checks

Patch and update management

Security hardening and compliance audits

Backup and disaster recovery

Infrastructure scalability and cost optimization

Detailed reporting and analytics

Benefits of SAP Cloud Managed Services for SAP Commerce

As your managed service provider, Royal Cyber connects you with a team of SAP specialists who keep your system optimized and aligned with your business goals.

1. Flexibility and Scalability

For businesses that want to keep up with demand, seamless scalability is essential. SAP Cloud Managed Services provide the scalability needed to handle seasonal spikes, product launches, and global expansion without compromising performance or customer experience. Whether you want to enter new regions, add sales channels, or improve personalization, managed services make it simple.

2. Cost Efficiency

Running and maintaining SAP environments in-house takes a lot of time and money. Managed services give you access to expert advice without the expense of hiring your own staff. Cloud usage optimization means you pay only for the resources you use. In this way, companies can keep IT costs under control while still relying on a dependable system.

3. Enhanced Performance and Uptime

Customer satisfaction depends heavily on the responsiveness and reliability of your e-commerce platform. With managed services, your SAP Commerce platform is proactively monitored, tuned, and troubleshot so that it keeps running smoothly.

Royal Cyber’s managed services come with Service Level Agreements (SLAs) that commit to defined levels of service performance and availability, giving you peace of mind and a stronger competitive position.
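To see what an availability commitment means in practice, it helps to convert an uptime percentage into the downtime it permits. The short sketch below does that arithmetic; the 99.9% and 99.99% figures are common illustrative targets, not numbers taken from any Royal Cyber agreement, and the 30-day period is likewise an assumption.

```python
def allowed_downtime_minutes(uptime_percent: float, period_days: int = 30) -> float:
    """Minutes of downtime permitted by an uptime target over a period."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - uptime_percent / 100)


# Hypothetical SLA targets, for illustration only.
for target in (99.9, 99.99):
    minutes = round(allowed_downtime_minutes(target), 2)
    print(f"{target}% uptime allows about {minutes} minutes of downtime per 30 days")
```

Over a 30-day month, 99.9% availability allows roughly 43 minutes of downtime, while 99.99% allows a little over 4 minutes, so one extra "nine" is a tenfold tighter commitment.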

4. Security and Compliance

With regulations such as GDPR and CCPA requiring stronger protection of personal information, security and compliance are now top priorities. To secure sensitive data, Royal Cyber uses advanced measures such as multiple firewalls, intrusion detection systems, and data encryption.

Managed services keep your SAP environment safe through regular security checks, continuous threat detection, and constant monitoring for adherence to industry guidelines.

5. Faster Innovation and Time-to-Market

Outsourcing operations allows teams within your company to invest more time and energy in creative solutions. SAP Commerce Managed Services allow your business to deliver new features faster, experiment with new business models, and respond swiftly to market changes. DevOps practices, CI/CD, and automated testing are a big part of managed services and speed up the launch of new services.

SAP Commerce Managed Services: Tailored for E-commerce Excellence

Because customer expectations in e-commerce are always changing, the stakes are very high. SAP Commerce Managed Services are specifically designed to support these dynamic needs. Royal Cyber offers:

Commerce application administration and optimization

Order and inventory management services

Real-time availability of all price variations and items

ERP and third-party integrations

End-user support and training

Agile and DevOps automation

Royal Cyber’s customized services help businesses get the most out of SAP Commerce, delivering strong performance, great customer service, and flexible business operations.

The Role of SAP Consulting Services in Maximizing ROI

Managed services deliver day-to-day efficiency, while SAP consulting services support long-term growth. Consulting firms offer guidance on important changes to a business. Royal Cyber’s consulting services include:

Business process evaluation

Custom solution development

Integration architecture

SAP Commerce upgrades and migrations

Training and change management programs

They serve businesses best during digital transformation, when entering new markets, or when improving underperforming systems. By combining SAP Cloud Managed Services with robust SAP consulting services, organizations can enhance system efficiency, reduce costs, and achieve better business outcomes.

Why Choose Royal Cyber for SAP Cloud Managed Services?

With over 20 years of expertise, Royal Cyber is known around the world for providing complete SAP solutions. Here’s what draws businesses to Royal Cyber:

Certified SAP Specialists: Team of certified professionals with deep expertise in SAP Commerce and related technologies.

Full Service: Support from initial planning through to post-launch follow-up.

Industry Experience: Many years of success in retail, manufacturing, financial services, and more.

Customer-First Approach: Solutions adapted to your unique needs.

Focus on Innovation: Uses AI, machine learning, and automation to make commerce operations smarter.

For Royal Cyber, it’s about more than managing your SAP system; we act as an extension of your team, helping you achieve more and try new things.

Conclusion: The Future of SAP Commerce Is Managed

E-commerce businesses face a changing, competitive, and difficult environment. If a company wants to remain flexible, grow, and put customers first, using managed services is no longer a nice-to-have; it is a necessity.

By leveraging SAP Cloud Managed Services, businesses ensure their SAP Commerce environments are optimized, secure, and prepared for future challenges. Businesses benefit from stronger growth strategies when they rely on expert services to manage their SAP environment.

Royal Cyber brings together managed services and consulting to maximize your investment in SAP Commerce. Whether you want to run your business faster, better, or more economically, Royal Cyber is your one-stop solution. Contact Royal Cyber to learn more about how our SAP Cloud Managed Services can elevate your digital commerce journey.


Text

🌐 Mastering Hybrid & Multi-Cloud Strategy: The Future of Scalable IT

In today’s fast-paced digital ecosystem, one cloud is rarely enough. Enterprises demand agility, resilience, and innovation at scale — all while maintaining cost-efficiency and regulatory compliance. That’s where a Hybrid & Multi-Cloud Strategy becomes essential.

But what does it mean, and how can organizations implement it effectively?

Let’s dive into the world of hybrid and multi-cloud computing, understand its importance, and explore how platforms like Red Hat OpenShift make this vision a practical reality.

🧭 What Is a Hybrid & Multi-Cloud Strategy?

Hybrid Cloud: Combines on-premises infrastructure (private cloud or data center) with public cloud services, enabling workloads to move seamlessly between environments.

Multi-Cloud: Involves using multiple public cloud providers (like AWS, Azure, GCP) to avoid vendor lock-in, optimize performance, and reduce risk.

Together, they create a flexible and resilient IT model that balances performance, control, and innovation.

💡 Why Enterprises Choose Hybrid & Multi-Cloud

✅ 1. Avoid Vendor Lock-In

Using more than one cloud vendor allows businesses to negotiate better deals and avoid being tied to one ecosystem.

✅ 2. Resilience & Redundancy

Workloads can shift between clouds or on-prem based on outages, latency, or business needs.

✅ 3. Cost Optimization

Run predictable workloads on cheaper on-prem hardware and burst to the cloud only when needed.

✅ 4. Compliance & Data Sovereignty

Keep sensitive data on-prem or in-country while leveraging public cloud for scale.

🚀 Real-World Use Cases

Retail: Use on-prem for POS systems and cloud for seasonal campaign scalability.

Healthcare: Host patient data in a private cloud and analytics models in the public cloud.

Finance: Perform high-frequency trading on public cloud compute clusters, but store records securely in on-prem data lakes.

🛠️ How OpenShift Simplifies Hybrid & Multi-Cloud

Red Hat OpenShift is designed with portability and consistency in mind. Here's how it empowers your strategy:

🔄 Unified Platform Everywhere

Whether deployed on AWS, Azure, GCP, bare metal, or VMware, OpenShift provides the same developer experience and tooling everywhere.

🔁 Seamless Workload Portability

Containerized applications can move effortlessly across environments with Kubernetes-native orchestration.

📡 Advanced Cluster Management (ACM)

With Red Hat ACM, enterprises can:

Manage multiple clusters across environments

Apply governance policies consistently

Deploy apps across clusters using GitOps

🛡️ Built-in Security & Compliance

Leverage features like:

Integrated service mesh

Image scanning and policy enforcement

Centralized observability

⚠️ Challenges to Consider

Complexity in Management: Without centralized control, managing multiple clouds can become chaotic.

Data Transfer Costs: Moving data between clouds isn't free — plan carefully.

Latency & Network Reliability: Ensure your architecture supports distributed workloads efficiently.

Skill Gap: Cloud-native skills are essential; upskilling your team is a must.

📘 Best Practices for Success

Start with the workload — Map your applications to the best-fit environment.

Adopt containerization and microservices — For portability and resilience.

Use Infrastructure as Code — Automate deployments and configurations.

Enforce centralized policy and monitoring — For governance and visibility.

Train your teams — Invest in certifications like Red Hat DO480, DO280, and EX280.
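The first practice, mapping each workload to its best-fit environment, can be sketched as a small rule set. The rules below simply encode two points made earlier in this post (keep sensitive data on-prem, burst to the public cloud when demand spikes); the `Workload` fields, the `place` function, and the environment labels are hypothetical, and a real assessment would weigh many more factors such as latency, licensing, and data gravity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    handles_regulated_data: bool  # e.g. patient or payment records
    bursty: bool                  # demand spikes seasonally or unpredictably


def place(workload: Workload) -> str:
    """Pick a target environment using two illustrative rules."""
    if workload.handles_regulated_data:
        return "on-prem"        # compliance and data sovereignty come first
    if workload.bursty:
        return "public-cloud"   # elastic capacity for spikes
    return "on-prem"            # predictable load runs on owned hardware


workloads = [
    Workload("point-of-sale", handles_regulated_data=False, bursty=False),
    Workload("seasonal-campaign-site", handles_regulated_data=False, bursty=True),
    Workload("patient-records", handles_regulated_data=True, bursty=False),
]
for w in workloads:
    print(f"{w.name}: {place(w)}")
```

Writing the rules down as code, even this crudely, makes the placement policy reviewable and repeatable, which fits the Infrastructure as Code practice in the same list.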

🎯 Conclusion

A hybrid & multi-cloud strategy isn’t just a trend — it’s becoming a competitive necessity. With the right platform like Red Hat OpenShift Platform Plus, enterprises can bridge the gap between agility and control, enabling innovation without compromise.

Ready to future-proof your infrastructure? Hybrid cloud is the way forward — and OpenShift is the bridge.

For more info, kindly follow Hawkstack Technologies.

#HybridCloud#MultiCloud#CloudStrategy#RedHatOpenShift#OpenShift#Kubernetes#DevOps#CloudNative#PlatformEngineering#ITModernization#CloudComputing#DigitalTransformation#RedHatTraining#DO480#ClusterManagement#redhat#hawkstack


Text

Revolutionizing Infrastructure with Data Center Automation

Revolutionizing Infrastructure with One Union Times

In today’s rapidly evolving digital world, businesses are racing to meet increasing data demands while ensuring efficiency, security, and scalability. One Union Times is at the forefront of this transformation, offering smart technologies and expert strategies to modernize IT infrastructure. Among these innovations, data center automation software plays a vital role, streamlining operations and driving cost-efficiency. This article explores the latest developments, including Microsoft AI data center spending, data center optimization techniques, and the evolving landscape of cloud colocation pricing.

The Future is Automated: Data Center Automation Tools

Data center automation software and data center automation tools are essential for organizations aiming to reduce manual intervention and human error. By automating workflows such as server provisioning, patching, monitoring, and resource allocation, businesses can enhance uptime, reduce operational costs, and scale more effectively.

One Union Times specializes in providing end-to-end automation strategies, allowing IT teams to focus on innovation rather than repetitive maintenance tasks. Automation also strengthens compliance and improves overall service delivery across private, public, and hybrid environments.

Microsoft AI and the Rise of Intelligent Infrastructure

The recent surge in Microsoft AI data center spending reflects a broader industry trend—data centers are becoming more intelligent. Microsoft has been investing billions to build data centers powered by AI and machine learning, aiming to optimize energy consumption, cooling, and resource management.

One Union Times helps clients integrate similar AI-driven models into their own infrastructure. By leveraging AI, companies can predict outages, adjust cooling systems proactively, and manage workloads dynamically—cutting costs and improving efficiency.

Optimizing the Core: Data Center Optimization Techniques

A major focus of digital transformation is maximizing the performance of existing infrastructure. Data center optimization techniques include:

Virtualization to consolidate workloads

AI-based monitoring for predictive maintenance

Energy-efficient hardware upgrades

Tiered storage systems for improved data access

With these techniques, One Union Times ensures businesses get the best return on their IT investments while also reducing their environmental footprint.
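As one concrete example, a tiered storage policy can be as simple as classifying data by how recently it was accessed. The sketch below assumes three tiers and 30-day and 180-day thresholds; those names and numbers are illustrative assumptions, not values from One Union Times or any specific storage product.

```python
from datetime import datetime, timedelta


def choose_tier(last_accessed: datetime, now: datetime,
                hot_days: int = 30, warm_days: int = 180) -> str:
    """Classify data into a storage tier by time since last access."""
    age = now - last_accessed
    if age <= timedelta(days=hot_days):
        return "hot"    # fast, expensive storage for active data
    if age <= timedelta(days=warm_days):
        return "warm"   # cheaper storage for occasionally used data
    return "cold"       # archival storage for rarely used data


now = datetime(2024, 6, 1)
print(choose_tier(datetime(2024, 5, 20), now))  # hot
print(choose_tier(datetime(2024, 3, 1), now))   # warm
print(choose_tier(datetime(2023, 1, 1), now))   # cold
```

In practice the thresholds would be tuned against observed access patterns and the price difference between tiers.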

Colocation or Cloud? Understanding the Hosting Landscape

Choosing between colocation vs managed hosting vs cloud can be overwhelming. Each option has unique advantages:

Colocation offers control with reduced overhead by renting space in a third-party data center.

Managed hosting includes maintenance and support services.

Cloud provides scalable, on-demand resources with minimal management responsibilities.

For hybrid needs, colocation cloud solutions offer a blend of flexibility and control. One Union Times assists businesses in assessing their requirements and designing an optimal strategy that balances cost, performance, and compliance.

Demystifying Cloud Colocation Pricing

Understanding cloud colocation pricing is essential for making informed infrastructure decisions. Factors influencing costs include:

Power consumption (measured in kW)

Rack space and U-height

Network bandwidth

Physical and logical security measures

Support and service-level agreements

One Union Times provides transparent pricing models that help businesses forecast and manage their budgets effectively. By offering cost comparisons and ROI analyses, clients can choose the best-fit solution without surprise expenses.
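To show how the pricing factors listed above combine, here is a minimal monthly cost estimate. Every rate in it is a made-up placeholder for illustration; actual colocation pricing varies widely by provider, region, and contract, and the `ColocationQuote` structure and `monthly_cost` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColocationQuote:
    power_kw: float        # contracted power draw
    rack_units: int        # rack space in U
    bandwidth_mbps: float  # committed network bandwidth
    security_fee: float    # flat monthly fee for physical and logical security
    support_fee: float     # flat monthly fee for support and SLA tier


# Hypothetical unit rates in USD per month; replace with real quotes.
RATES = {"per_kw": 150.0, "per_rack_unit": 20.0, "per_mbps": 0.5}


def monthly_cost(quote: ColocationQuote, rates: dict = RATES) -> float:
    """Sum usage-based charges and flat fees into one monthly figure."""
    return (
        quote.power_kw * rates["per_kw"]
        + quote.rack_units * rates["per_rack_unit"]
        + quote.bandwidth_mbps * rates["per_mbps"]
        + quote.security_fee
        + quote.support_fee
    )


full_rack = ColocationQuote(power_kw=4, rack_units=42, bandwidth_mbps=1000,
                            security_fee=100.0, support_fee=250.0)
print(monthly_cost(full_rack))  # 2290.0
```

Breaking the estimate into separate line items makes it easy to see which factor dominates the bill and to compare quotes from different providers on the same basis.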

HPC Data Centers: Powering Advanced Computing

High-performance computing (HPC) is critical for industries like research, financial services, and genomics. HPC data centers are designed to support massive parallel processing workloads, high-speed networking, and specialized cooling requirements.

One Union Times partners with organizations to design and implement HPC data centers that meet exacting demands. Their expertise ensures performance optimization, data integrity, and scalability—all while maintaining energy efficiency and cost-effectiveness.

Data Center Firewalls: Guarding Critical Infrastructure

Cybersecurity remains a top priority for any data-driven business. A data center firewall is the first line of defense, protecting infrastructure against unauthorized access and cyberattacks.

Modern data centers rely on:

Next-generation firewalls (NGFWs)

Intrusion detection and prevention systems (IDPS)

Zero-trust security models

One Union Times integrates robust data center firewall solutions with threat intelligence, ensuring comprehensive protection for both on-premises and cloud environments.

Meeting Big Data Needs: Large Data Storage Solutions

The explosion of data from IoT devices, AI applications, and digital platforms demands large data storage solutions that are scalable, secure, and efficient. These solutions must accommodate structured and unstructured data, ensure rapid access, and support data lifecycle management.

From software-defined storage (SDS) to hybrid cloud storage, One Union Times offers architectures that meet the most demanding storage requirements—empowering enterprises to store, manage, and analyze data at scale.

The Role of Colocation Cloud in Hybrid Strategies

Colocation cloud represents the best of both worlds, enabling organizations to retain control over hardware while leveraging cloud capabilities like elasticity, analytics, and AI. This model is ideal for businesses transitioning from legacy infrastructure to full cloud adoption.

By partnering with One Union Times, companies gain access to data centers optimized for colocation cloud services—with high-speed connectivity, redundant power, and state-of-the-art cooling systems.

Why Choose One Union Times?

What sets One Union Times apart is their commitment to innovation, scalability, and security. Whether you’re exploring data center automation tools, evaluating cloud colocation pricing, or building HPC data centers, their team provides expert guidance every step of the way.

Benefits of working with One Union Times include:

Customized infrastructure strategies

Cost-effective hosting solutions

End-to-end automation and optimization

Proactive cybersecurity measures

Support for hybrid and multi-cloud environments

Conclusion

As enterprises navigate the complexities of modern IT infrastructure, the role of advanced solutions like data center automation software, colocation cloud, and HPC data centers becomes more critical. With the surge in Microsoft AI data center spending and the need for intelligent data center optimization techniques, businesses must align with a technology partner that understands both current challenges and future opportunities.

One Union Times empowers organizations to innovate with confidence—by offering secure, scalable, and cost-efficient data center solutions that drive business success in the digital era.

Why SOC 2 Compliance Is Essential for Modern Cloud-Based Businesses

For any organization offering digital services, especially those hosted in the cloud, SOC 2 Compliance is not just a technical checkbox—it’s a powerful signal of credibility, security, and accountability. As more companies handle confidential customer data online, meeting the SOC 2 standard has become a critical part of doing business, particularly in industries like technology, finance, and healthcare.

SOC 2, established by the American Institute of Certified Public Accountants (AICPA), is a framework for managing customer data based on five Trust Services Criteria: security, availability, processing integrity, confidentiality, and privacy. These principles ensure that a company not only protects data but also processes it in a way that is consistent, accurate, and secure.

There are two types of SOC 2 reports. The Type I report focuses on the design of internal controls at a specific point in time. The Type II report, which is often preferred by enterprise clients, assesses the operating effectiveness of those controls over a longer period (usually between 3 and 12 months). The distinction is important because it shows whether your controls work in practice, not just on paper.

SOC 2 Compliance has become a common requirement in vendor management processes. Businesses that want to work with large corporations or government entities often find that having a SOC 2 report is mandatory. It shows that your organization has passed a rigorous evaluation and that it can be trusted to manage sensitive data responsibly.

Preparing for SOC 2 involves several steps. Most organizations start with a readiness assessment or gap analysis to compare their current practices with SOC 2 requirements. This helps identify weaknesses in areas such as access control, encryption, network security, system monitoring, and incident response.

Once the necessary improvements are made, a third-party auditor—typically a CPA firm—conducts the official audit. This process includes evaluating documentation, observing processes, and verifying the effectiveness of controls. The result is a detailed report that you can share with clients, partners, and investors as proof of your secure practices.

SOC 2 Compliance also plays a role in building a security-first culture. By going through the process, your team becomes more aware of best practices around data privacy, access control, and operational efficiency. This helps reduce risk not just from external threats, but also from internal oversights.

While SOC 2 can be complex, there are modern tools that help simplify the journey. Compliance automation platforms can streamline evidence collection, track control implementation, and keep audit processes organized. These platforms are especially useful for startups and growing tech companies trying to scale quickly without sacrificing security.

In summary, SOC 2 Compliance is a strategic asset for any business that stores, processes, or transmits customer data. It builds trust, enhances credibility, and opens the door to new opportunities. As the digital economy continues to grow, companies that prioritize SOC 2 will be better equipped to succeed in a security-conscious marketplace.

Multi-Cloud vs. Hybrid Cloud: Which Strategy Fits Your Organization?

As cloud computing matures, the choice between multi-cloud and hybrid cloud strategies has become a pivotal decision for IT leaders. While both models offer flexibility, cost optimization, and scalability, they serve different business needs and technical purposes. Understanding the nuances between the two can make or break your digital transformation initiative.

Understanding the Basics

What is Hybrid Cloud?

A hybrid cloud strategy integrates public cloud services with private cloud or on-premises infrastructure. This model enables data and applications to move seamlessly between environments, offering a blend of control, performance, and scalability.

Use Cases:

Running mission-critical workloads on-premises while offloading less sensitive workloads to the cloud.

Supporting cloud bursting during peak usage.

Meeting strict regulatory or data residency requirements.

What is Multi-Cloud?

A multi-cloud strategy uses multiple public cloud providers—such as AWS, Azure, and Google Cloud—simultaneously. Unlike hybrid cloud, multi-cloud does not necessarily include private infrastructure.

Use Cases:

Avoiding vendor lock-in.

Leveraging best-in-class services from different providers.

Enhancing resilience and availability by distributing workloads.

Key Differences at a Glance

| Feature | Hybrid Cloud | Multi-Cloud |
| --- | --- | --- |
| Composition | Public + Private/On-Premises | Multiple Public Cloud Providers |
| Primary Objective | Flexibility, security, compliance | Redundancy, vendor leverage, performance |
| Typical Use Cases | Regulated industries, legacy integration | Global services, SaaS, distributed teams |
| Complexity | High (integration between private/public) | Very high (managing multiple cloud vendors) |
| Cost Optimization | Medium – Private infra can be expensive | High – Competitive pricing, spot instances |

Strategic Considerations

1. Business Objectives

Start by identifying what you're trying to achieve. Hybrid cloud is often the go-to for enterprises with heavy legacy investments or compliance needs. Multi-cloud suits organizations looking for agility, innovation, and best-in-class solutions across providers.

2. Regulatory Requirements

Hybrid cloud is particularly attractive in highly regulated industries such as healthcare, finance, or government, where certain data must reside on-premises or within specific geographical boundaries.

3. Resilience & Risk Management

Multi-cloud can provide a robust business continuity strategy. By distributing workloads across providers, organizations can mitigate risks such as cloud provider outages or geopolitical disruptions.
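The resilience argument can be made concrete with a little arithmetic. The sketch below assumes that provider outages are statistically independent (a simplification, since shared dependencies such as DNS or a common network path can break it) and that the workload can run fully on any single provider. The function name and the 99.9% figures are illustrative and are not taken from any provider's SLA.

```python
from math import prod


def combined_availability(availabilities):
    """Probability that at least one provider is up, assuming independent outages."""
    if not availabilities:
        raise ValueError("at least one provider is required")
    for a in availabilities:
        if not 0.0 <= a <= 1.0:
            raise ValueError("availability must be between 0 and 1")
    # The workload is unavailable only when every provider is down at once.
    return 1.0 - prod(1.0 - a for a in availabilities)


if __name__ == "__main__":
    single = combined_availability([0.999])        # hypothetical single provider
    dual = combined_availability([0.999, 0.999])   # two hypothetical providers
    print(f"one provider: {single:.6f}, two providers: {dual:.6f}")
```

In practice the gain is smaller than this figure suggests, because failover itself takes time and outages are rarely fully independent.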

4. Skillsets & Operational Overhead

Managing a hybrid environment demands strong DevOps and cloud-native capabilities. Multi-cloud, however, adds another layer of complexity—each provider has unique APIs, SLAs, and service offerings.

Invest in automation, orchestration tools (e.g., Terraform, Ansible), and monitoring platforms that support cross-cloud operations to reduce cognitive load on your teams.

Real-World Scenarios

Case 1: Financial Services Firm

A major financial institution may opt for hybrid cloud to keep core banking systems on-premises while using public cloud for analytics and mobile banking services. This enables them to meet strict compliance mandates without compromising agility.

Case 2: Global SaaS Provider

A SaaS company offering services across Europe, Asia, and North America may adopt a multi-cloud model to host services closer to end-users, reduce latency, and ensure redundancy.

Final Verdict: There’s No One-Size-Fits-All

Choosing between hybrid and multi-cloud isn't about picking the "better" architecture—it's about selecting the one that aligns with your technical needs, business goals, and regulatory landscape.

Some organizations even adopt both strategies in parallel—running a hybrid core for compliance and operational stability while leveraging multi-cloud for innovation and reach.
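The considerations above can be summarized as a simple decision helper. This is only a rough sketch of the article's reasoning, not a formal methodology: the `Requirements` fields and the `suggest_strategy` function are hypothetical names, and the "single public cloud" fallback is an assumption added for completeness.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    data_must_stay_on_prem: bool    # regulatory or data residency constraint
    heavy_legacy_investment: bool   # existing on-premises systems to integrate
    wants_multiple_providers: bool  # lock-in avoidance, best-in-class services, redundancy


def suggest_strategy(req: Requirements) -> str:
    """Map high-level requirements to the strategy the text associates with them."""
    needs_hybrid = req.data_must_stay_on_prem or req.heavy_legacy_investment
    needs_multi = req.wants_multiple_providers
    if needs_hybrid and needs_multi:
        return "hybrid + multi-cloud"
    if needs_hybrid:
        return "hybrid cloud"
    if needs_multi:
        return "multi-cloud"
    # Assumed fallback: none of the drivers discussed in the text apply.
    return "single public cloud"


if __name__ == "__main__":
    bank = Requirements(True, True, False)    # resembles Case 1
    saas = Requirements(False, False, True)   # resembles Case 2
    print(suggest_strategy(bank))
    print(suggest_strategy(saas))
```

A real assessment would weigh cost, skills, and operational overhead as well; the helper only captures the coarse mapping described in the text.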

Recommendations

Conduct a Cloud Readiness Assessment.

Map out regulatory, performance, and security needs.

Build a vendor-agnostic architecture wherever possible.

Invest in unified management and observability tools.

Develop cloud skills across your organization.

Conclusion

Multi-cloud and hybrid cloud strategies are powerful in their own right. With thoughtful planning, skilled execution, and strategic alignment, either model can help your organization scale, innovate, and compete in a cloud-first world.

Why the Largest Data Centers in the US Are Core to Enterprise Expansion Strategies

As enterprises expand across digital and physical borders, the demand for seamless, secure, and scalable infrastructure has never been more urgent. Whether you’re a SaaS giant planning to enter a new market, or a Fortune 500 logistics company optimizing real-time analytics, the backbone of your growth is infrastructure. And in the United States, that infrastructure starts with the largest data centers in the US.

At DataCenterMart, we understand this inflection point better than anyone. We see first-hand how our partners and clients leverage data center solutions to unlock growth at scale. This blog explores why the biggest data centers in the US are more than just facilities—they're growth engines.

Global-Scale Capacity, National Reach

The largest data centers in the US are built with scale in mind. With over a million square feet of floor space, 100+ megawatts of power, and cutting-edge cooling systems, these facilities are designed to host the workloads of tomorrow. For enterprise clients, this translates to instant access to capacity, on-demand expansion, and the ability to deploy globally from a single point of control.

When an e-commerce platform needed to scale during peak holiday traffic, DataCenterMart helped them connect with a certified hyperscale data center in Virginia. The result? A 4x increase in compute power provisioned in under 48 hours, without lifting a finger internally.

Strategic Locations in Major Availability Zones

Growth isn’t just about space—it’s about proximity. The biggest data centers in the US are strategically located near major urban hubs, fiber optic routes, subsea cable landings, and power grids. This geographical advantage ensures low latency, regulatory compliance, and better redundancy.

Whether it's Northern Virginia, Silicon Valley, Dallas, or Chicago, US data centers located in these regions provide businesses with the best possible foundation for multi-region deployments and disaster recovery. Our marketplace simplifies how clients access these zones, offering full transparency on location benefits and connectivity profiles.

ESG and Sustainability for the Future

ESG (Environmental, Social, Governance) initiatives are no longer optional for expanding enterprises—they are a core part of boardroom strategies. More businesses are seeking certified data centers that meet LEED standards, use renewable energy, and offer transparent reporting on emissions and energy usage.

The largest data centers in the US are leading the sustainability charge with innovative solutions like waste heat reuse, on-site solar, and modular cooling. At DataCenterMart, we actively vet our partners based on ESG performance, helping clients align their infrastructure growth with their sustainability mandates.

Resilience and Business Continuity

You can’t grow a business on shaky ground. That’s why enterprises prioritize data center solutions with built-in resilience. This includes everything from N+2 redundancy and dual power feeds to seismic-proof construction and geographically redundant availability zones.
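For readers unfamiliar with the notation, N+2 means the facility installs the N units needed to carry the load plus two spares. The sketch below illustrates that sizing rule for a generic component such as a UPS module or chiller. The function name, the 900 kW load, and the 250 kW unit capacity are hypothetical values chosen for illustration.

```python
import math


def units_required(load_kw: float, unit_capacity_kw: float, spares: int = 2) -> int:
    """Units to install under N+spares redundancy (default N+2)."""
    if load_kw < 0 or unit_capacity_kw <= 0 or spares < 0:
        raise ValueError("load and spares must be non-negative, capacity positive")
    # N is the minimum number of units that can carry the full load.
    n = math.ceil(load_kw / unit_capacity_kw)
    return n + spares


if __name__ == "__main__":
    # Hypothetical example: 900 kW of load served by 250 kW units.
    print(units_required(900, 250))            # N+2
    print(units_required(900, 250, spares=1))  # N+1, for comparison
```

With these example numbers, four units carry the load, so an N+2 design installs six and can lose any two without dropping capacity below demand.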

Moreover, our clients benefit from robust data center management services—from proactive monitoring to white-glove deployment support. Whether you’re entering new markets or scaling up existing workloads, uptime and continuity are guaranteed.

Seamless Integration with Multi-Cloud and Edge

Growth is increasingly hybrid. Enterprises want to mix and match public cloud, private infrastructure, and edge computing nodes. The biggest data centers in the US are built to support this integration, offering high-density networking, API-level orchestration, and cloud onramps from AWS, Azure, and Google Cloud.