#Benchmark construction

Explore tagged Tumblr posts

Text

Effective Resource Management Tips for Construction Superintendents

Contractors are supported in managing energy performance and staying on top of project needs. The tools provided make tasks like energy audits and meeting compliance rules easier. With a focus on making projects more efficient and sustainable, contractors are guided in managing their work smoothly and reaching their project goals.

1 note

·

View note

Text

How OpenAI’s o3 and o4-mini Models Are Revolutionizing Visual Analysis and Coding

New Post has been published on https://thedigitalinsider.com/how-openais-o3-and-o4-mini-models-are-revolutionizing-visual-analysis-and-coding/

How OpenAI’s o3 and o4-mini Models Are Revolutionizing Visual Analysis and Coding

In April 2025, OpenAI introduced its most advanced models to date, o3 and o4-mini. These models represent a major step forward in the field of Artificial Intelligence (AI), offering new capabilities in visual analysis and coding support. With their strong reasoning skills and ability to work with both text and images, o3 and o4-mini can handle a variety of tasks more efficiently.

The release of these models also highlights their impressive performance. For instance, o3 and o4-mini achieved a remarkable 92.7% accuracy in mathematical problem-solving on the AIME benchmark, surpassing the performance of their predecessors. This level of precision, combined with their ability to process diverse data types such as code, images, diagrams, and more, opens new possibilities for developers, data scientists, and UX designers.

By automating tasks that traditionally require manual effort, such as debugging, documentation generation, and visual data interpretation, these models are transforming the way AI-driven applications are built. Whether it is in development, data science, or other sectors, o3 and o4-mini are powerful tools that support the creation of smarter systems and more effective solutions, enabling industries to tackle complex challenges with greater ease.

Key Technical Advancements in o3 and o4-mini Models

OpenAI’s o3 and o4-mini models bring important improvements in AI that help developers work more efficiently. These models combine a better understanding of context with the ability to handle both text and images together, making development faster and more accurate.

Advanced Context Handling and Multimodal Integration

One of the distinguishing features of the o3 and o4-mini models is their ability to handle up to 200,000 tokens in a single context. This enhancement enables developers to input entire source code files or large codebases, making the process faster and more efficient. Previously, developers had to divide large projects into smaller parts for analysis, which could lead to missed insights or errors.

With the new context window, the models can analyze the full scope of the code at once, providing more accurate and reliable suggestions, error corrections, and optimizations. This is particularly beneficial for large-scale projects, where understanding the entire context is important to ensuring smooth functionality and avoiding costly mistakes.

Additionally, the o3 and o4-mini models bring the power of native multimodal capabilities. They can now process both text and visual inputs together, eliminating the need for separate systems for image interpretation. This integration enables new possibilities, such as real-time debugging through screenshots or UI scans, automatic documentation generation that includes visual elements, and a direct understanding of design diagrams. By combining text and visuals in one workflow, developers can move more efficiently through tasks with fewer distractions and delays.

Precision, Safety, and Efficiency at Scale

Safety and accuracy are central to the design of o3 and o4-mini. OpenAI’s deliberative alignment framework ensures that the models act in line with the user’s intentions. Before executing any task, the system checks whether the action aligns with the user’s goals. This is especially important in high-stakes environments like healthcare or finance, where even small mistakes can have significant consequences. By adding this safety layer, OpenAI ensures that the AI works with precision and reduces the risks of unintended outcomes.

To further enhance efficiency, these models support tool chaining and parallel API calls. This means the AI can run multiple tasks at the same time, such as generating code, running tests, and analyzing visual data, without having to wait for one task to finish before starting another. Developers can input a design mockup, receive immediate feedback on the corresponding code, and run automated tests while the AI processes the visual design and generates documentation. This parallel processing accelerates workflows, making the development process smoother and more productive.

Transforming Coding Workflows with AI-Powered Features

The o3 and o4-mini models introduce several features that significantly improve development efficiency. One key feature is real-time code analysis, where the models can instantly analyze screenshots or UI scans to detect errors, performance issues, and security vulnerabilities. This allows developers to identify and resolve problems quickly.

Additionally, the models offer automated debugging. When developers encounter errors, they can upload a screenshot of the issue, and the models will pinpoint the cause and suggest solutions. This reduces the time spent troubleshooting and enables developers to move forward with their work more efficiently.

Another important feature is context-aware documentation generation. o3 and o4-mini can automatically generate detailed documentation that stays current with the latest changes in the code. This eliminates the need for developers to manually update documentation, ensuring that it remains accurate and up-to-date.

A practical example of the models’ capabilities is in API integration. o3 and o4-mini can analyze Postman collections through screenshots and automatically generate API endpoint mappings. This significantly reduces integration time compared to older models, accelerating the process of linking services.

Advancements in Visual Analysis

OpenAI’s o3 and o4-mini models bring significant advancements in visual data processing, offering enhanced capabilities for analyzing images. One of the key features is their advanced OCR (optical character recognition), which allows the models to extract and interpret text from images. This is especially useful in areas like software engineering, architecture, and design, where technical diagrams, flowcharts, and architectural plans are integral to communication and decision-making.

In addition to text extraction, o3 and o4-mini can automatically improve the quality of blurry or low-resolution images. Using advanced algorithms, these models enhance image clarity, ensuring a more accurate interpretation of visual content, even when the original image quality is suboptimal.

Another powerful feature is their ability to perform 3D spatial reasoning from 2D blueprints. This allows the models to analyze 2D designs and infer 3D relationships, making them highly valuable for industries like construction and manufacturing, where visualizing physical spaces and objects from 2D plans is essential.

Cost-Benefit Analysis: When to Choose Which Model

When choosing between OpenAI’s o3 and o4-mini models, the decision primarily depends on the balance between cost and the level of performance required for the task at hand.

The o3 model is best suited for tasks that demand high precision and accuracy. It excels in fields such as complex research and development (R&D) or scientific applications, where advanced reasoning capabilities and a larger context window are necessary. The large context window and powerful reasoning abilities of o3 are especially beneficial for tasks like AI model training, scientific data analysis, and high-stakes applications where even small errors can have significant consequences. While it comes at a higher cost, its enhanced precision justifies the investment for tasks that demand this level of detail and depth.

In contrast, the o4-mini model provides a more cost-effective solution while still offering strong performance. It delivers processing speeds suitable for larger-scale software development tasks, automation, and API integrations where cost efficiency and speed are more critical than extreme precision. The o4-mini model is significantly more cost-efficient than the o3, offering a more affordable option for developers working on everyday projects that do not require the advanced capabilities and precision of the o3. This makes the o4-mini ideal for applications that prioritize speed and cost-effectiveness without needing the full range of features provided by the o3.

For teams or projects focused on visual analysis, coding, and automation, o4-mini provides a more affordable alternative without compromising throughput. However, for projects requiring in-depth analysis or where precision is critical, the o3 model is the better choice. Both models have their strengths, and the decision depends on the specific demands of the project, ensuring the right balance of cost, speed, and performance.

The Bottom Line

In conclusion, OpenAI’s o3 and o4-mini models represent a transformative shift in AI, particularly in how developers approach coding and visual analysis. By offering enhanced context handling, multimodal capabilities, and powerful reasoning, these models empower developers to streamline workflows and improve productivity.

Whether for precision-driven research or cost-effective, high-speed tasks, these models provide adaptable solutions to meet diverse needs. They are essential tools for driving innovation and solving complex challenges across industries.

#000#2025#3d#ai#ai model#AI model training#AI-powered#Algorithms#amp#Analysis#API#applications#approach#architecture#artificial#Artificial Intelligence#automation#benchmark#character recognition#code#coding#Collections#communication#construction#content#cost efficiency#data#data analysis#data processing#data science

0 notes

Text

The "Lucky Vicky" Mindset

The Lucky Vicky Mindset, inspired by K-pop idol Jang Wonyoung from the gg ive , emphasizes a positive, resilient approach to life. This mindset encourages self-improvement, focusing on personal strengths, and maintaining a positive outlook even in challenging situations.

୨ৎ Key Principles of the Lucky Vicky Mindset

1. Born Strong from the Start

- Understand that mistakes are opportunities for growth.

- 💌 Embrace failures as learning experiences. If you fail a test or face a setback, view it as a chance to learn and grow stronger.

2. Maintain Your Own Pace

- Avoid comparing yourself to others and focus on your own journey.

- 💌 Set personal goals and milestones. Celebrate your progress, no matter how small. Track your improvement and stay motivated by your achievements.

3. Focus on Strengths Rather Than Weaknesses

- Concentrate on developing your strengths instead of fixating on weaknesses.

- 💌 Identify your unique strengths and find ways to use them to your advantage. Work on improving areas of weakness without letting them overshadow your strengths.

୨ৎ Practical Steps to Adopt the Lucky Vicky Mindset

1. Daily Affirmations

- Start each day with positive affirmations that reinforce your strengths and potential. For example, “I am capable of achieving my goals,” or “I learn from my mistakes and grow stronger.”

2. Reflect and Reframe

- When faced with a setback, reflect on what happened and how you can learn from it. Reframe negative thoughts into positive ones. Instead of thinking, “I failed,” consider, “I learned a valuable lesson.”

3. Goal Setting

- Set specific, achievable goals that align with your strengths and interests. Break them down into smaller, manageable tasks to track your progress and maintain motivation.

4. Mindfulness and Self-Care

- Practice mindfulness to stay focused on the present moment and reduce anxiety about the future. Incorporate self-care routines such as meditation, exercise, or hobbies you enjoy.

5. Surround Yourself with Positivity

- Surround yourself with positive influences, such as supportive friends, inspiring content, or motivational quotes. Engage with communities that uplift and encourage you.

୨ৎ Addressing Challenges

1. Dealing with Negative Feedback

- Understand that negative feedback is part of growth. Use it constructively to improve while not letting it undermine your self-worth. Assess feedback objectively and create a plan to address it.

2. Overcoming Jealousy

- Acknowledge feelings of jealousy without letting them control you. Use others' achievements as inspiration rather than a benchmark for your success. Remind yourself that everyone’s journey is unique.

3. Managing Self-Doubt

- Combat self-doubt by reflecting on your past achievements and the progress you've made. Keep a journal of your successes and revisit it when you need a confidence boost.

#bloomdiary#bloomivation#becoming that girl#glow up#wonyoungism#wonyoung#dream life#it girl#creator of my reality#divine feminine#it girl affirmations#love affirmations#self confidence#self growth#self love#self development#self improvement#seldarine drow#jang wonyoung#ive wonyoung

208 notes

·

View notes

Text

Reminder that if there is stuff you dislike about the updated graphics we are witnessing in the Benchmark or feel somethings could be implemented better. You can still chime in on the forums to provide feedback. There is still a couple months left. And they've been addressing issues a step at a time with each major hurdle. These updates are still not set in stone and there is still time. So rather than just shit posting or raging at some changes make your voice heard. Be constructive in the criticism and remember they are listening particularly intently for these updates.

284 notes

·

View notes

Text

Some possible speedrunning/battlebots-style challenges for replicator engineers:

Smallest replicator

Multiple passes allowed

Benchmark item is large/complex

All fabrication must be done by the replicator, for example you have to bolt things together by replicating the bolts into the piece, so the area to be bolted needs to fit inside your tiny replicator

Ranked by smallest replicator volume first, then # of passes second

Simplest pattern

Must use standard replicators

Given the benchmark item to scan

Find out a way to shorten the pattern, codegolf style

Can do multiple passes, but again, the actual fabrication has to be done by the replicator, all you can do is position the item

Ranked by shortest pattern first, fewest passes if there’s a tie

Similar category for lowest energy usage

Adversarial

Replicator is provided

Actual benchmark item is blocked in the replicator

Competitors must find unblocked precursor components or chemicals that can be used to construct the item

Some out-of-replicator fabrication is allowed, but competitors can only use things made by the replicator or available in the room (e.g. air)

Can’t bring parts from home… however, this is enforced by scanners, and you’re allowed to bring items if you can trick the scanners

Fewest steps or fastest time wins

Every year, last year’s workarounds get added to the blocklist

Variant: instead of blocking patterns, the replicator only has a preset list of allowed patterns; can you fashion this item out of spaghetti, raktajino, and ferengi tooth sharpeners?

(the results from this last competition are closely followed by Starfleet whitehats, who use the information to update their own replicators’ blocklists for dangerous items)

#star trek#star trek meta#star trek shitposting#ds9#deep space nine#star trek voyager#star trek tng#the next generation#self-replicating metas#klonpeagposting

23 notes

·

View notes

Text

Excerpt from this New York Times story:

Last year was the hottest on record, and global average temperatures passed the benchmark of 1.5 degrees Celsius above preindustrial times for the first time. Simultaneously, the growth rate of the world’s energy demand rose sharply, nearly doubling over the previous 10-year average.

As it turns out, the record heat and rapidly rising energy demand were closely connected, according to findings from a new report from the International Energy Agency.

That’s because hotter weather led to increased use of cooling technologies like air-conditioning. Electricity-hungry appliances put a strain on the grid, and many utilities met the added demand by burning coal and natural gas.

All of this had the makings of a troubling feedback loop: A hotter world required more energy to cool down homes and offices, and what was readily available was fossil-fuel energy, which led to more planet-warming emissions. This dynamic is exactly what many countries are hoping to halt through the development of renewable energy and the construction of nuclear power plants.

Put another way, the I.E.A. estimated that if 2024’s extreme weather hadn’t happened — that is, if weather was exactly the same in 2024 as in 2023 — the global increase in carbon emissions for the year would have been cut in half.

It’s not all bad news: Increasingly, the global economy is growing faster than carbon emissions. “If we want to find the silver lining, we see that there is a continuous decoupling of economic growth from emissions growth,” said Fatih Birol, the executive director of the agency.

23 notes

·

View notes

Text

Friday, June 20, 2025, Kaleigh Harrison

Peru’s largest solar installation—the 300 MWdc San Martín plant—has officially entered commercial operation in Arequipa’s La Joya district. Developed by Zelestra and completed in under 18 months, the plant delivers power to Kallpa Generación through a long-term PPA. Its projected annual output of 830 GWh is expected to power approximately 440,000 homes and prevent more than 166,000 tons of CO₂ emissions each year.

The utility-scale project includes 450,000 solar modules and was delivered by Zelestra’s internal EPC division. This approach enabled the team to stay on schedule and within budget, showcasing a controlled, vertically integrated execution model that minimized delays and avoided cost overruns. Employment during peak construction reached 900 workers, offering a temporary economic lift to the region.

Latin America Expansion Gains Momentum as San Martín Sets Benchmark

The launch of San Martín marks a key step in Zelestra’s broader regional strategy. The company is currently advancing over 7 GW of solar and battery storage projects across Peru, Chile, and Colombia, with 1.7 GW under contract. As more countries in Latin America pursue decarbonization targets, San Martín serves as a model for how clean energy can scale rapidly under the right development framework.

#peru#solar power#south america#latin america#solar energy#decarbonization#good news#environmentalism#science#environment#climate change#climate crisis#green energy

14 notes

·

View notes

Note

Hi I have read many of your James posts and so far I agree with all of them. What gets me wondering however is someone like Lily Evans - potrayed as the saintly morally good character - dating someone like James - an entitled bully who kept his jerkish behavior even after he supposedly changed. Who do you think she was? Did she excused James's behavior because she found him attractive and thought she could change him? Or that he would change for her? Was she downplaying his faults because she fell in love? Or was she simply too naive? I cannot believe a person who would marry a person with so many faults like James wouldn't also be far off from being jerkish themselves. And what about her relationship with Severus? Was she as attached to him as he was? Why was she friends with him for so long if she was excusing his prejudice for years? I'm so conflicted about her. The author implies she is something but the text kind of goes against that. As someone who is pro snape and knows Lily was a big part of his life what do you think about her, her motives, actions or relationships? I love your opinions a lot btw never stop sharing them😄

I looove to talk about Lily because her character sucks. And not because of her, but because HOW Rowling portrays her. Sooo.. Lets go! Lily is emblematic of a significant issue in the series: the tendency to use female characters as tools for male development rather than as complex individuals with their own arcs. In Lily’s case, her character functions primarily as a moral barometer—she exists to reflect the “goodness” or “badness” of the men around her. Her choices and relationships with James and Severus are less about her own desires, values, or growth and more about how they impact these two men. This framing does Lily a disservice, stripping her of agency and interiority while simultaneously burdening her with the narrative role of deciding who is worthy and who is not

Rowling’s portrayal of Lily is heavily idealized. She is the perfect mother who sacrifices herself for her son, the brilliant and talented witch who stands out even among her peers, and the moral compass who chooses “good” (James) over “evil” (Severus). This construction paints her as infallible, a paragon of virtue, and the embodiment of love and selflessness. However, this saintly image is rarely interrogated within the text.

The problem lies in the dissonance between how Lily is presented and the decisions she makes. If she is meant to represent moral perfection, her marriage to James —a character whose flaws remain evident even after his supposed redemption—creates a contradiction. James, even as an adult, retains the arrogance and hostility that defined his youth, particularly in his continued disdain for Snape. If Lily was as discerning and principled as the narrative suggests, why would she align herself with someone whose values and behavior contradict the ideal of Gryffindor bravery and fairness?

This contradiction weakens her role as a moral arbiter, making her decisions feel less like the result of her own judgment and more like a narrative convenience to validate James’s redemption. By choosing James, she implicitly forgives or overlooks his past bullying, signaling that his actions were excusable or irrelevant to his worthiness as a partner. This not only diminishes the impact of James’s flaws but also undermines Lily’s supposed moral clarity.

Lily’s role mirrors a common, harmful trope: the woman as a moral compass or fixer for flawed men. Her purpose becomes external rather than internal—she isn’t there to pursue her own goals, ideals, or struggles but to serve as a benchmark for others’ morality. It’s as if Lily’s worth as a character is determined solely by her relationships with James and Severus rather than her own journey.

By failing to give Lily meaningful contradictions or flaws, Rowling inadvertently creates a character who feels passive and complicit. Her saintly veneer prevents her from being truly human, as real people are defined by their contradictions, growth, and mistakes. Yet Lily is static, existing only to highlight James’s "redemption" or Severus’s "fall."

This lack of depth reflects a broader issue with how women are often written in male-centric narratives: their stories are secondary, their personalities flattened, and their actions only meaningful in the context of the men they influence. It’s a stark reminder of the gender bias present in the series, where women like Lily, Narcissa, and even Hermione are often used to drive or validate male characters’ arcs rather than having their own fully developed trajectories.

Regarding Lily and Severus relationship, their bond begins in a world where both feel alienated. Severus, growing up in the oppressive and neglectful environment of Spinner’s End, finds in Lily not only a companion but a source of light and warmth that he lacks at home. For Lily, Severus is her first glimpse into the magical world, a realm that she belongs to but doesn’t yet understand. Their friendship is symbiotic in its earliest stages: Severus offers Lily knowledge of her magical identity, while she provides him with acceptance and validation. However, this connection, while powerful in childhood, rests on a fragile foundation—one that fails to evolve as their circumstances and priorities shift. When they arrive at Hogwarts, the cracks in their bond begin to surface. While Lily flourishes socially, Severus becomes increasingly marginalized and becomes a frequent target of James Potter and Sirius Black. This social isolation only deepens his reliance on Lily, but for her, this dependency becomes increasingly difficult to sustain.

It’s important to recognize that Lily’s discomfort isn’t only moral; it’s also social. By the time of their falling out, Lily has fully integrated into the Gryffindor social circle, gaining the admiration of her peers and, most notably, James Potter. Her association with Severus, now firmly positioned as an outsider and a future Death Eater, risks undermining her own social standing. While her final break with Severus is framed as a principled decision, it’s difficult to ignore the role that social dynamics might have played in her choice.

It’s worth considering that Lily’s shift toward James wasn’t necessarily a sudden change of heart but rather the culmination of an attraction that may have existed all along, one rooted in what he represented rather than who he was. James Potter, as the embodiment of magical privilege—a pure-blood, wealthy, socially adored Gryffindor golden boy—offered Lily something that Severus never could: validation within the magical world’s elite.

Though Lily was undoubtedly principled, it’s plausible that, beneath her moral convictions, there was a more human, and yes, superficial, desire for recognition and security in a world that was, for her, both wondrous and alien. Coming from a working-class, Muggle-born background, Lily would have been acutely aware of her outsider status, no matter how talented or well-liked she became. James’s relentless pursuit of her, despite his arrogance and bullying tendencies, may have been flattering in ways that bolstered her sense of belonging. James’s attention wasn’t just personal—it was symbolic. His interest in her, as someone who could have easily chosen a pure-blood witch from his own social echelon, signaled to her and to others that she was not only worthy of respect but desirable within the upper echelons of wizarding society.

This dynamic raises uncomfortable questions about Lily’s character. Could it be that she tolerated James’s antics, not because she believed he would change for her, but because she enjoyed the social validation his affection brought her? Interestingly, this interpretation aligns Lily more closely with her sister Petunia than one might initially expect. Petunia’s marriage to Vernon provided her with the stability and status she craved within the Muggle world. Both sisters may have sought partners who could anchor them in environments where they otherwise felt insecure. For Petunia, that meant latching onto the image of suburban perfection through Vernon. For Lily, it may have meant aligning herself with someone like James, whose wealth, status, and pure-blood background offered her a kind of social and cultural security in the magical world.

If we view Lily’s relationship with James through this lens, her character becomes far less idealized and far more human. Rather than being the moral paragon the series portrays, she emerges as a young woman navigating an uncertain world, making choices that are as practical as they are principled. While it’s clear she disapproved of James’s bullying, it’s equally possible that his persistence, confidence, and status were qualities she found increasingly difficult to resist—not because they aligned with her values, but because they appealed to her insecurities.

It’s also worth noting that Lily’s final break with Severus coincided with her growing relationship with James. This timing is telling. Severus, a social outcast from a poor background, represented the antithesis of James. By cutting ties with Severus, Lily not only distanced herself from the moral ambiguities of his choices but also from the social liabilities he represented. Aligning with James, by contrast, placed her firmly within the Gryffindor elite—a position that would have offered her both social protection and personal validation. And this whole perspective is much more interesting than her image as a moral compass for the men around her. Unfortunately, as with many of her characters, Rowling didn’t put any effort into giving us definitive answers; she just insisted on that unhealthy, idealized view of motherhood and the idea that everything is forgiven if you're on the "right" side and rich and popular.

Sorry for the long text, but whenever the topic of Lily comes up, I tend to go on and on, haha.

#lily evans#lily evans potter#lily potter#lily evans meta#lily potter meta#james potter#severus sname#pro severus snape#snapedom#severus snape fandom#harry potter meta#harry potter

51 notes

·

View notes

Text

A young entrepreneur who was among the earliest known recruiters for Elon Musk’s so-called Department of Government Efficiency (DOGE) has a new, related gig—and he’s hiring. Anthony Jancso, cofounder of AccelerateX, a government tech startup, is looking for technologists to work on a project that aims to have artificial intelligence perform tasks that are currently the responsibility of tens of thousands of federal workers.

Jancso, a former Palantir employee, wrote in a Slack with about 2000 Palantir alumni in it that he’s hiring for a “DOGE orthogonal project to design benchmarks and deploy AI agents across live workflows in federal agencies,” according to an April 21 post reviewed by WIRED. Agents are programs that can perform work autonomously.

“We’ve identified over 300 roles with almost full-process standardization, freeing up at least 70k FTEs for higher-impact work over the next year,” he continued, essentially claiming that tens of thousands of federal employees could see many aspects of their job automated and replaced by these AI agents. Workers for the project, he wrote, would be based on site in Washington, DC, and would not require a security clearance; it isn’t clear for whom they would work. Palantir did not respond to requests for comment.

The post was not well received. Eight people reacted with clown face emojis, three reacted with a custom emoji of a man licking a boot, two reacted with custom emoji of Joaquin Phoenix giving a thumbs down in the movie Gladiator, and three reacted with a custom emoji with the word “Fascist.” Three responded with a heart emoji.

“DOGE does not seem interested in finding ‘higher impact work’ for federal employees,” one person said in a comment that received 11 heart reactions. “You’re complicit in firing 70k federal employees and replacing them with shitty autocorrect.”

“Tbf we’re all going to be replaced with shitty autocorrect (written by chatgpt),” another person commented, which received one “+1” reaction.

“How ‘DOGE orthogonal’ is it? Like, does it still require Kremlin oversight?” another person said in a comment that received five reactions with a fire emoji. “Or do they just use your credentials to log in later?”

Got a Tip?Are you a current or former government employee who wants to talk about what's happening? We'd like to hear from you. Using a nonwork phone or computer, contact the reporter securely on Signal at carolinehaskins.61 and vittoria89.82.

AccelerateX was originally called AccelerateSF, which VentureBeat reported in 2023 had received support from OpenAI and Anthropic. In its earliest incarnation, AccelerateSF hosted a hackathon for AI developers aimed at using the technology to solve San Francisco’s social problems. According to a 2023 Mission Local story, for instance, Jancso proposed that using large language models to help businesses fill out permit forms to streamline the construction paperwork process might help drive down housing prices. (OpenAI did not respond to a request for comment. Anthropic spokesperson Danielle Ghiglieri tells WIRED that the company "never invested in AccelerateX/SF,” but did sponsor a hackathon AccelerateSF hosted in 2023 by providing free access to its API usage at a time when its Claude API “was still in beta.”)

In 2024, the mission pivoted, with the venture becoming known as AccelerateX. In a post on X announcing the change, the company posted, “Outdated tech is dragging down the US Government. Legacy vendors sell broken systems at increasingly steep prices. This hurts every American citizen.” AccelerateX did not respond to a request for comment.

According to sources with direct knowledge, Jancso disclosed that AccelerateX had signed a partnership agreement with Palantir in 2024. According to the LinkedIn of someone described as one of AccelerateX’s cofounders, Rachel Yee, the company looks to have received funding from OpenAI’s Converge 2 Accelerator. Another of AccelerateSF’s cofounders, Kay Sorin, now works for OpenAI, having joined the company several months after that hackathon. Sorin and Yee did not respond to requests for comment.

Jancso’s cofounder, Jordan Wick, a former Waymo engineer, has been an active member of DOGE, appearing at several agencies over the past few months, including the Consumer Financial Protection Bureau, National Labor Relations Board, the Department of Labor, and the Department of Education. In 2023, Jancso attended a hackathon hosted by ScaleAI; WIRED found that another DOGE member, Ethan Shaotran, also attended the same hackathon.

Since its creation in the first days of the second Trump administration, DOGE has pushed the use of AI across agencies, even as it has sought to cut tens of thousands of federal jobs. At the Department of Veterans Affairs, a DOGE associate suggested using AI to write code for the agency’s website; at the General Services Administration, DOGE has rolled out the GSAi chatbot; the group has sought to automate the process of firing government employees with a tool called AutoRIF; and a DOGE operative at the Department of Housing and Urban Development is using AI tools to examine and propose changes to regulations. But experts say that deploying AI agents to do the work of 70,000 people would be tricky if not impossible.

A federal employee with knowledge of government contracting, who spoke to WIRED on the condition of anonymity because they were not authorized to speak to the press, says, “A lot of agencies have procedures that can differ widely based on their own rules and regulations, and so deploying AI agents across agencies at scale would likely be very difficult.”

Oren Etzioni, cofounder of the AI startup Vercept, says that while AI agents can be good at doing some things—like using an internet browser to conduct research—their outputs can still vary widely and be highly unreliable. For instance, customer service AI agents have invented nonexistent policies when trying to address user concerns. Even research, he says, requires a human to actually make sure what the AI is spitting out is correct.

“We want our government to be something that we can rely on, as opposed to something that is on the absolute bleeding edge,” says Etzioni. “We don't need it to be bureaucratic and slow, but if corporations haven't adopted this yet, is the government really where we want to be experimenting with the cutting edge AI?”

Etzioni says that AI agents are also not great 1-1 fits for job replacements. Rather, AI is able to do certain tasks or make others more efficient, but the idea that the technology could do the jobs of 70,000 employees would not be possible. “Unless you're using funny math,” he says, “no way.”

Jancso, first identified by WIRED in February, was one of the earliest recruiters for DOGE in the months before Donald Trump was inaugurated. In December, Jancso, who sources told WIRED said he had been recruited by Steve Davis, president of the Musk-founded Boring Company and a current member of DOGE, used the Palantir alumni group to recruit DOGE members. On December 2nd, 2024, he wrote, “I’m helping Elon’s team find tech talent for the Department of Government Efficiency (DOGE) in the new admin. This is a historic opportunity to build an efficient government, and to cut the federal budget by 1/3. If you’re interested in playing a role in this mission, please reach out in the next few days.”

According to one source at SpaceX, who asked to remain anonymous as they are not authorized to speak to the press, Jancso appeared to be one of the DOGE members who worked out of the company’s DC office in the days before inauguration along with several other people who would constitute some of DOGE’s earliest members. SpaceX did not respond to a request for comment.

Palantir was cofounded by Peter Thiel, a billionaire and longtime Trump supporter with close ties to Musk. Palantir, which provides data analytics tools to several government agencies including the Department of Defense and the Department of Homeland Security, has received billions of dollars in government contracts. During the second Trump administration, the company has been involved in helping to build a “mega API” to connect data from the Internal Revenue Service to other government agencies, and is working with Immigration and Customs Enforcement to create a massive surveillance platform to identify immigrants to target for deportation.

10 notes

·

View notes

Text

Page lengths of scenes

1 . Ik its probably WAYY to early to talk about this comic and AU , literally started it 5 days ago

But we really want to get into habit of sharing our process and progress more , both cuz gets us motivated , not feel pressure that "can Only talk about if perfect and done" , and cuz we love look back on old things and how developed

--

2 . So to start with , heres all the pages so far which is up to pg 26 fully roughed out ! (due to front matter , its 32 pages)

32 paper pages was important benchmark for us as that is point we wanted to reach before fully solidify anything , this cuz we did test bind of this ! (will get into in different post)

---

3a . As can see from just thumbnails alone if have read or even skimmed Haunted Hearts , GBR is VERY different from it visually , pacing , and what even happens at all

unlike HH , GBR is much more hyper focused with more of a goal , where HH went on long detours and focusing on wrong thing too long (this was because we were literally drawing pages on the go without much plan oop)

-

3b . We feel the much faster pacing and less dwelling on things may be seen as "what make it not as great compared to old ver" , it might be different taste now ,

but as said before and expand , instead of making one idea extend out for many pages or panels to get point across , it instead use few and asks reader to pay more attention and be in moment , it like a different balance .

---

4 . Page count is Really important , it not matter if print , it important to track how long each scene lasts in pages .

These here are just rough estimates as scenes blend into each other . see how they sort of last a similar amount of page time ? If read any well constructed graphic novel , you can see this pattern too where it seems scenes average a certain amount of pages . (example , in warrior cats graphic novel , it averages about 4-7 pages for each scene)

-

This not only helps with making sure reach a page count you want (if are) , this will also help you plan out your comic if you know what your scene page count average is , if making a scene too long or too short or if could fuse or remove things , find purpose within each page

It can also help compartmentalize n make longer comic into bite-sized chunks if you struggle with larger comics !

It also makes sure you dont waist pages and make most out of what can so ultimately less work for you and better story result !

-

Ik 4-7 pages sounds Very Small , but if you know what you're doing , you can do a Ton with very little . More pages not mean better , it how use page count .

but you dont have to keep to this specific count . In another oc comic we were working on , it averages 10 or so pages per scene as it dwells more on things and that how thats constructed from ground up

----

Ok thats all for now , lotsa fun 🐕

#ramble#tips#behind the scenes#art#comic making#Great Brilliant Radiance#Great Brilliant Radiance AU#GBR AU#undertale#undertale au#deltarune#deltarune au#clip studio paint

7 notes

·

View notes

Text

Using AI to Predict a Blockbuster Movie

New Post has been published on https://thedigitalinsider.com/using-ai-to-predict-a-blockbuster-movie/

Using AI to Predict a Blockbuster Movie

Although film and television are often seen as creative and open-ended industries, they have long been risk-averse. High production costs (which may soon lose the offsetting advantage of cheaper overseas locations, at least for US projects) and a fragmented production landscape make it difficult for independent companies to absorb a significant loss.

Therefore, over the past decade, the industry has taken a growing interest in whether machine learning can detect trends or patterns in how audiences respond to proposed film and television projects.

The main data sources remain the Nielsen system (which offers scale, though its roots lie in TV and advertising) and sample-based methods such as focus groups, which trade scale for curated demographics. This latter category also includes scorecard feedback from free movie previews – however, by that point, most of a production’s budget is already spent.

The ‘Big Hit’ Theory/Theories

Initially, ML systems leveraged traditional analysis methods such as linear regression, K-Nearest Neighbors, Stochastic Gradient Descent, Decision Tree and Forests, and Neural Networks, usually in various combinations nearer in style to pre-AI statistical analysis, such as a 2019 University of Central Florida initiative to forecast successful TV shows based on combinations of actors and writers (among other factors):

A 2018 study rated the performance of episodes based on combinations of characters and/or writer (most episodes were written by more than one person). Source: https://arxiv.org/pdf/1910.12589

The most relevant related work, at least that which is deployed in the wild (though often criticized) is in the field of recommender systems:

A typical video recommendation pipeline. Videos in the catalog are indexed using features that may be manually annotated or automatically extracted. Recommendations are generated in two stages by first selecting candidate videos and then ranking them according to a user profile inferred from viewing preferences. Source: https://www.frontiersin.org/journals/big-data/articles/10.3389/fdata.2023.1281614/full

However, these kinds of approaches analyze projects that are already successful. In the case of prospective new shows or movies, it is not clear what kind of ground truth would be most applicable – not least because changes in public taste, combined with improvements and augmentations of data sources, mean that decades of consistent data is usually not available.

This is an instance of the cold start problem, where recommendation systems must evaluate candidates without any prior interaction data. In such cases, traditional collaborative filtering breaks down, because it relies on patterns in user behavior (such as viewing, rating, or sharing) to generate predictions. The problem is that in the case of most new movies or shows, there is not yet enough audience feedback to support these methods.

Comcast Predicts

A new paper from Comcast Technology AI, in association with George Washington University, proposes a solution to this problem by prompting a language model with structured metadata about unreleased movies.

The inputs include cast, genre, synopsis, content rating, mood, and awards, with the model returning a ranked list of likely future hits.

The authors use the model’s output as a stand-in for audience interest when no engagement data is available, hoping to avoid early bias toward titles that are already well known.

The very short (three-page) paper, titled Predicting Movie Hits Before They Happen with LLMs, comes from six researchers at Comcast Technology AI, and one from GWU, and states:

‘Our results show that LLMs, when using movie metadata, can significantly outperform the baselines. This approach could serve as an assisted system for multiple use cases, enabling the automatic scoring of large volumes of new content released daily and weekly.

‘By providing early insights before editorial teams or algorithms have accumulated sufficient interaction data, LLMs can streamline the content review process.

‘With continuous improvements in LLM efficiency and the rise of recommendation agents, the insights from this work are valuable and adaptable to a wide range of domains.’

If the approach proves robust, it could reduce the industry’s reliance on retrospective metrics and heavily-promoted titles by introducing a scalable way to flag promising content prior to release. Thus, rather than waiting for user behavior to signal demand, editorial teams could receive early, metadata-driven forecasts of audience interest, potentially redistributing exposure across a wider range of new releases.

Method and Data

The authors outline a four-stage workflow: construction of a dedicated dataset from unreleased movie metadata; the establishment of a baseline model for comparison; the evaluation of apposite LLMs using both natural language reasoning and embedding-based prediction; and the optimization of outputs through prompt engineering in generative mode, using Meta’s Llama 3.1 and 3.3 language models.

Since, the authors state, no publicly available dataset offered a direct way to test their hypothesis (because most existing collections predate LLMs, and lack detailed metadata), they built a benchmark dataset from the Comcast entertainment platform, which serves tens of millions of users across direct and third-party interfaces.

The dataset tracks newly-released movies, and whether they later became popular, with popularity defined through user interactions.

The collection focuses on movies rather than series, and the authors state:

‘We focused on movies because they are less influenced by external knowledge than TV series, improving the reliability of experiments.’

Labels were assigned by analyzing the time it took for a title to become popular across different time windows and list sizes. The LLM was prompted with metadata fields such as genre, synopsis, rating, era, cast, crew, mood, awards, and character types.

For comparison, the authors used two baselines: a random ordering; and a Popular Embedding (PE) model (which we will come to shortly).

The project used large language models as the primary ranking method, generating ordered lists of movies with predicted popularity scores and accompanying justifications – and these outputs were shaped by prompt engineering strategies designed to guide the model’s predictions using structured metadata.

The prompting strategy framed the model as an ‘editorial assistant’ assigned with identifying which upcoming movies were most likely to become popular, based solely on structured metadata, and then tasked with reordering a fixed list of titles without introducing new items, and to return the output in JSON format.

Each response consisted of a ranked list, assigned popularity scores, justifications for the rankings, and references to any prior examples that influenced the outcome. These multiple levels of metadata were intended to improve the model’s contextual grasp, and its ability to anticipate future audience trends.

Tests

The experiment followed two main stages: initially, the authors tested several model variants to establish a baseline, involving the identification of the version which performed better than a random-ordering approach.

Second, they tested large language models in generative mode, by comparing their output to a stronger baseline, rather than a random ranking, raising the difficulty of the task.

This meant the models had to do better than a system that already showed some ability to predict which movies would become popular. As a result, the authors assert, the evaluation better reflected real-world conditions, where editorial teams and recommender systems are rarely choosing between a model and chance, but between competing systems with varying levels of predictive ability.

The Advantage of Ignorance

A key constraint in this setup was the time gap between the models’ knowledge cutoff and the actual release dates of the movies. Because the language models were trained on data that ended six to twelve months before the movies became available, they had no access to post-release information, ensuring that the predictions were based entirely on metadata, and not on any learned audience response.

Baseline Evaluation

To construct a baseline, the authors generated semantic representations of movie metadata using three embedding models: BERT V4; Linq-Embed-Mistral 7B; and Llama 3.3 70B, quantized to 8-bit precision to meet the constraints of the experimental environment.

Linq-Embed-Mistral was selected for inclusion due to its top position on the MTEB (Massive Text Embedding Benchmark) leaderboard.

Each model produced vector embeddings of candidate movies, which were then compared to the average embedding of the top one hundred most popular titles from the weeks preceding each movie’s release.

Popularity was inferred using cosine similarity between these embeddings, with higher similarity scores indicating higher predicted appeal. The ranking accuracy of each model was evaluated by measuring performance against a random ordering baseline.

Performance improvement of Popular Embedding models compared to a random baseline. Each model was tested using four metadata configurations: V1 includes only genre; V2 includes only synopsis; V3 combines genre, synopsis, content rating, character types, mood, and release era; V4 adds cast, crew, and awards to the V3 configuration. Results show how richer metadata inputs affect ranking accuracy. Source: https://arxiv.org/pdf/2505.02693

The results (shown above), demonstrate that BERT V4 and Linq-Embed-Mistral 7B delivered the strongest improvements in identifying the top three most popular titles, although both fell slightly short in predicting the single most popular item.

BERT was ultimately selected as the baseline model for comparison with the LLMs, as its efficiency and overall gains outweighed its limitations.

LLM Evaluation

The researchers assessed performance using two ranking approaches: pairwise and listwise. Pairwise ranking evaluates whether the model correctly orders one item relative to another; and listwise ranking considers the accuracy of the entire ordered list of candidates.

This combination made it possible to evaluate not only whether individual movie pairs were ranked correctly (local accuracy), but also how well the full list of candidates reflected the true popularity order (global accuracy).

Full, non-quantized models were employed to prevent performance loss, ensuring a consistent and reproducible comparison between LLM-based predictions and embedding-based baselines.

Metrics

To assess how effectively the language models predicted movie popularity, both ranking-based and classification-based metrics were used, with particular attention to identifying the top three most popular titles.

Four metrics were applied: Accuracy@1 measured how often the most popular item appeared in the first position; Reciprocal Rank captured how high the top actual item ranked in the predicted list by taking the inverse of its position; Normalized Discounted Cumulative Gain (NDCG@k) evaluated how well the entire ranking matched actual popularity, with higher scores indicating better alignment; and Recall@3 measured the proportion of truly popular titles that appeared in the model’s top three predictions.

Since most user engagement happens near the top of ranked menus, the evaluation focused on lower values of k, to reflect practical use cases.

Performance improvement of large language models over BERT V4, measured as percentage gains across ranking metrics. Results were averaged over ten runs per model-prompt combination, with the top two values highlighted. Reported figures reflect the average percentage improvement across all metrics.

The performance of Llama model 3.1 (8B), 3.1 (405B), and 3.3 (70B) was evaluated by measuring metric improvements relative to the earlier-established BERT V4 baseline. Each model was tested using a series of prompts, ranging from minimal to information-rich, to examine the effect of input detail on prediction quality.

The authors state:

‘The best performance is achieved when using Llama 3.1 (405B) with the most informative prompt, followed by Llama 3.3 (70B). Based on the observed trend, when using a complex and lengthy prompt (MD V4), a more complex language model generally leads to improved performance across various metrics. However, it is sensitive to the type of information added.’

Performance improved when cast awards were included as part of the prompt – in this case, the number of major awards received by the top five billed actors in each film. This richer metadata was part of the most detailed prompt configuration, outperforming a simpler version that excluded cast recognition. The benefit was most evident in the larger models, Llama 3.1 (405B) and 3.3 (70B), both of which showed stronger predictive accuracy when given this additional signal of prestige and audience familiarity.

By contrast, the smallest model, Llama 3.1 (8B), showed improved performance as prompts became slightly more detailed, progressing from genre to synopsis, but declined when more fields were added, suggesting that the model lacked the capacity to integrate complex prompts effectively, leading to weaker generalization.

When prompts were restricted to genre alone, all models under-performed against the baseline, demonstrating that limited metadata was insufficient to support meaningful predictions.

Conclusion

LLMs have become the poster child for generative AI, which might explain why they’re being put to work in areas where other methods could be a better fit. Even so, there’s still a lot we don’t know about what they can do across different industries, so it makes sense to give them a shot.

In this particular case, as with stock markets and weather forecasting, there is only a limited extent to which historical data can serve as the foundation of future predictions. In the case of movies and TV shows, the very delivery method is now a moving target, in contrast to the period between 1978-2011, when cable, satellite and portable media (VHS, DVD, et al.) represented a series of transitory or evolving historical disruptions.

Neither can any prediction method account for the extent to which the success or failure of other productions may influence the viability of a proposed property – and yet this is frequently the case in the movie and TV industry, which loves to ride a trend.

Nonetheless, when used thoughtfully, LLMs could help strengthen recommendation systems during the cold-start phase, offering useful support across a range of predictive methods.

First published Tuesday, May 6, 2025

#2023#2025#Advanced LLMs#advertising#agents#ai#Algorithms#Analysis#Anderson's Angle#approach#Articles#Artificial Intelligence#attention#Behavior#benchmark#BERT#Bias#collaborative#Collections#comcast#Companies#comparison#construction#content#continuous#data#data sources#dates#Decision Tree#domains

0 notes

Video

youtube

2025 Chevrolet Corvette ZR1 - Full Tech Specs and Performance

The 2025 Chevrolet Corvette ZR1 marks a new benchmark in American supercar engineering, combining advanced aerodynamics, powertrain innovation, and motorsport-derived performance.

At its core is the LT7 engine, a 5.5-liter twin-turbocharged V8 with a flat-plane crankshaft. This engine produces 1,064 HPr at 7,000 rpm and 825 pound-feet of torque at 6,000 rpm, making it the most powerful V8 engine ever by GM.

The LT7 is a significant evolution of the naturally aspirated LT6 found in the Corvette Z06. Key changes include forged aluminum pistons, strengthened connecting rods, and twin 76 mm ball-bearing turbochargers integrated into the exhaust manifolds. The engine also features an anti-lag system that maintains boost pressure during throttle lift-off, ensuring immediate power delivery when re-engaged.

Power is delivered to the rear wheels via a dual-clutch 8-speed transmission that has been reinforced to handle the increased torque. Chevrolet estimates 0 to 60 mph in 2.3 seconds, with a top speed exceeding 215 mph. In private testing, the 2025 Corvette ZR1 has achieved verified runs over 230 mph, including a peak of 233.

Standard models of the 2025 Corvette ZR1 feature a front splitter, underbody strakes, and an active rear spoiler. With the available ZTK package, the ZR1 gains a large fixed rear wing, dive planes, and additional carbon-fiber components. Combined, these upgrades provide over 1,200 pounds of downforce.

Chassis tuning includes Magnetic Ride Control 4.0 and a track-optimized suspension geometry. The ZR1 is equipped with Michelin tires—20 inches at the front and 21 inches at the rear. Braking is handled by carbon-ceramic rotors, measuring 15.7 inches in front and 15.4 inches in the rear, with electronic brake boost providing consistent stopping power.

Cooling performance has been enhanced through several functional design elements. A center-mounted intercooler evacuates heat through a vented hood, while additional ducts in the front fascia and rear quarter panels direct airflow to critical systems. Roof and rear window have been optimized for thermal management.

2025 Chevrolet Corvette ZR1 – Technical Specifications

General Informations Model: 2025 Chevrolet Corvette ZR1 Body style: 2-door coupe, mid-engine layout Platform: GM Y2 (C8 architecture) Drive type: Rear-wheel drive Production location: Bowling Green, Kentucky, USA

Powertrain Engine code: LT7 Configuration: 5.5-liter V8, twin-turbocharged, dual overhead cam, flat-plane crankshaft Displacement: 5500 cc Induction: Twin 76 millimeter ball-bearing turbochargers integrated into exhaust manifolds Maximum horsepower: 1064 horsepower at 7000 rpm Maximum torque: 825 pound-feet at 6000 rpm Redline: 8000 rpm Fuel delivery: Direct injection Cooling system: Intercooler with hood vent, front and side intake ducts, roof-integrated airflow, and rear-quarter cooling channels Special features: Anti-lag system, forged aluminum pistons, reinforced connecting rods, dry sump oiling system

Transmission Type: 8-speed dual-clutch automatic Final drive: Strengthened limited-slip differential

Performance Estimates 0 to 60 miles per hour: 2.3 seconds Quarter mile: Estimated 9.5 seconds with ZTK package Top speed: Electronically confirmed runs over 230 mph, with a recorded maximum of 233 mph

Chassis and Suspension Front suspension: Short/long arm configuration with Magnetic Ride Control version 4.0 Rear suspension: Multilink setup with Magnetic Ride Control version 4.0 Braking system: Carbon-ceramic rotors, 15.7 inches front and 15.4 inches rear, with electronic brake boost Steering: Electric power steering with variable ratio

Wheels and Tires Front tires: 275/30 ZR20 Rear tires: 345/25 ZR21 Tire options: Michelin Pilot Sport 4S standard, Michelin Pilot Sport Cup 2 R optional with ZTK package Wheel sizes: 20 inches by 10 inches front, 21 inches by 13 inches rear Construction: Lightweight forged aluminum

Aerodynamics Standard aero: Front splitter, underbody strakes, active rear spoiler Optional ZTK package: Fixed carbon fiber rear wing, front dive planes, additional carbon fiber components Downforce: Exceeds 1200 pounds with ZTK configuration

Dimensions (estimated) Overall length: 182.3 inches Overall width: 79.7 inches Overall height: 48.6 inches Wheelbase: 107.2 inches Curb weight: 3750 to 3800 pounds depending on configuration

Interior and Technology Driver interface: Digital instrument cluster, performance data recorder Seating options: GT2 and Competition Sport seats Infotainment: Chevrolet Infotainment 3 Premium with 8" touchscreen Audio system: Bose sound system Driver aids: Launch control, performance traction management, customizable drive modes

Optional Packages ZTK Track Performance Package includes track-optimized suspension, Cup 2 R tires, and high downforce aerodynamic components Carbon Fiber Package: carbon trim elements on exterior and interior

MSRP Starting price above 185,000 US dollars

7 notes

·

View notes

Text

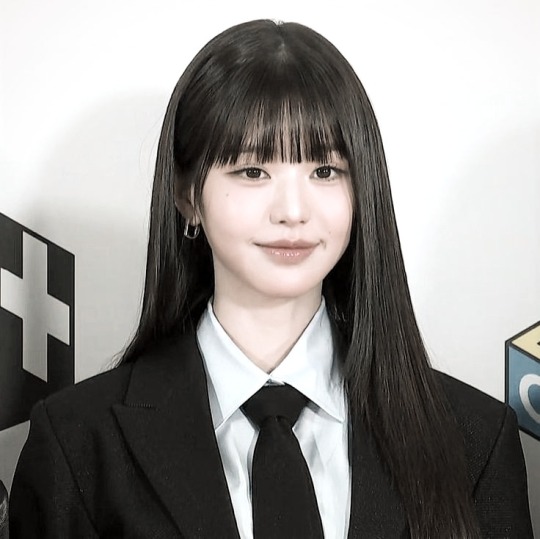

Jenner

Introduced in 2784, the fact that the Jenner was the sole property of the Draconis Combine was long a source of national pride. Designed, produced, and, for over fifty years, solely used by the Combine, the Jenner was meant to be a fast guerrilla fighter that would go on to form the foundation for highly mobile lances. Five Smithson Lifter jump jets, two in each side torso and one in the center torso gave it a jumping distance of 150 meters, while its Magna 245 fusion engine allowed for a top speed of over 118 km/h, making it faster than most other 'Mechs. The drawback of the original model was its armament of a Diplan HD large laser and two Argra 27C medium lasers, all mounted in a turret which could be easily disabled with a well-placed shot, while the medium lasers' targeting systems proved to be bug-ridden. After some tinkering, this setup was eventually replaced with the weaponry found in the JR7-D model, creating a good mix of speed and firepower, though only four tons of armor provide paltry protection compared to other 'Mechs in the same weight range.

The Jenner became the standard light workhorse of the DCMS with the outbreak of the First Succession War, though its reputation would be forever tarnished following its role in the Kentares Massacre. Construction of new Jenners continued at Diplan Mechyard's factory on Ozawa until a raw mineral shortage caused a halt in 2815, though they continued to produce new chassis. In 2823 production resumed on Ozawa, with some 3,000 chassis shipped to Diplan's subsidiary on Luthien for final assembly, which itself was retooling to begin full-scale production; by 2830 both production lines were producing 1,350 Jenners a year. The sheer destruction of the Succession Wars finally took their toll when the last Jenner factory was destroyed in 2848, though so many Jenners had been produced by then that nearly every DCMS battalion had at least one of these 'Mechs well into the War of 3039. While the other Successor States eventually managed to procure operational Jenners (every AFFS regiment along the Combine border had at least one), the design remained in predominant use by House Kurita.

A favorite tactic of Jenner lances was to gang up on larger 'Mechs and unleash a devastating alpha strike. One or two of the 'Mechs would be equipped with inferno rounds, so even if the enemy survived the first strike they were badly damaged and running hot, allowing the Jenners to jump to safety and cool down. A few seconds later the attack would be repeated until finally, the enemy was dead. It was also common practice to pair the Jenner with another Combine 'Mech, the Panther, creating a deadly combination of sheer speed and firepower with the slower Panther providing cover fire for the Jenner's flanking attack. The Jenner was such a favorite among Kurita MechWarriors that it became the benchmark against which all other newly designed Combine 'Mechs were compared.

While rebuilding a Jenner production line on Luthien became a top priority under Gunji-no-Kanrei Theodore Kurita, the necessary construction time along with inexperienced Combine engineers meant that even with ComStar aid this wasn't possible until 3046. The recovery of much lost technology and the Jenner's prominence within the DCMS made it a prime candidate to receive new upgrades, however Luthien Armor Works deliberately avoided any radical changes to preserve the original's core advantages. Hence, while the new JR7-K began replacing the earlier model with little fanfare, it wasn't until the Jenner started to be outclassed by other designs using superior technology that Jenner pilots began complaining. Still, there was reluctance to make any changes, and slowly many commanders began mothballing their Jenners in favor of newer light 'Mechs and OmniMechs. Since then, a few variants have utilized advanced technologies in their design, notably one based on the Clan Jenner IIC, but it would take the devastation of the Jihad for many of the older K models to see frontline combat again.

The primary weapons system on the Jenner is four Argra 3L medium lasers, two each mounted in directionally variable mountings on either side. These provide the Jenner with a powerful close-to-medium range striking capability that does not suffer from a lack of supplies when operating behind enemy lines, though the use of only 10 single heat sinks means it has the potential to run hot. The lasers are backed up by a Thunderstroke SRM-4 launcher in the center torso, supplied by one ton of ammo in the right torso, that can be used after breaching an enemy's armor to try and get a critical strike against vulnerable exposed internal components.

16 notes

·

View notes

Text

Hiranandani Fortune City, Panvel: Redefining Luxury and Sustainability in Navi Mumbai

Hiranandani Fortune City, Panvel, is a thoughtfully designed integrated township that combines modern luxury with sustainable urban living. Developed by Hiranandani Communities, this township is a benchmark for eco-friendly and sophisticated living in Navi Mumbai. The project seamlessly integrates residential, commercial, and retail spaces, offering an upgraded lifestyle to homeowners and a lucrative investment opportunity. With a strong emphasis on Hiranandani sustainability, the township is designed to minimize environmental impact while maximizing comfort and convenience for its residents. As Navi Mumbai continues to grow as a business and residential hub, Hiranandani Panvel stands out as a premier destination for those seeking a well-connected and future-ready township.

Strategic Location & Connectivity

One of the most compelling aspects of Hiranandani Fortune City is its prime location in Panvel, making it an ideal residential and commercial hotspot. The township is strategically positioned near the Mumbai-Pune Expressway, JNPT, and Navi Mumbai International Airport, ensuring seamless connectivity to major economic centers. It is well-connected through Mohape and Panvel railway stations, making commuting hassle-free for working professionals. The upcoming Mumbai Trans-Harbour Link (MTHL) and metro projects will further enhance accessibility, positioning Hiranandani Panvel as a future-ready township. With easy access to commercial and industrial zones, this development caters to professionals and entrepreneurs seeking a well-connected and dynamic living environment.

Architectural Excellence & Thoughtful Planning

Hiranandani Fortune City is a master-planned township featuring architecturally stunning residences, commercial hubs, and retail spaces. The township offers spacious studio, 1, 2, and 3 BHK apartments, designed with modern aesthetics and premium finishes. With a strong focus on Hiranandani sustainability, the project integrates tree-lined avenues, landscaped gardens, and energy-efficient buildings, ensuring an eco-conscious and luxurious lifestyle. The township follows the Live-Work-Play philosophy, incorporating corporate parks, high-street retail outlets, and entertainment zones, creating a self-sustained community for its residents.

Sustainable Living at Its Core

Hiranandani Panvel is not just about luxury; it is also a model of sustainable urban living. Hiranandani Communities has incorporated eco-friendly construction practices, water recycling systems, and renewable energy solutions to minimize environmental impact. The township features rainwater harvesting, sewage treatment plants, and green energy initiatives, reinforcing its commitment to sustainability. Residents benefit from large open green spaces, themed gardens, and tree plantations, which enhance air quality and promote a healthy living environment. By integrating Hiranandani sustainability initiatives, this township ensures a greener and healthier lifestyle for its residents.

Luxury & Lifestyle Amenities

Hiranandani Fortune City, Panvel, is designed to provide an unparalleled living experience with a wide range of luxury amenities. The township features world-class clubhouses, wellness centers, and landscaped gardens, offering a serene escape from the hustle of city life. Residents have access to state-of-the-art sports facilities, including swimming pools, gymnasiums, jogging tracks, and recreational courts. Retail and entertainment hubs within the township provide convenience at residents' doorsteps, eliminating the need to travel far for essential services and leisure activities. Whether it’s fine dining, shopping, or wellness, Hiranandani Panvel offers a holistic and premium lifestyle.

A Thriving Community & Future Prospects

The real estate market in Panvel is witnessing rapid growth, making Hiranandani Fortune City an attractive investment option. With Navi Mumbai’s expanding infrastructure, increased commercial activity, and growing residential demand, the township is poised for long-term appreciation. The integrated township model ensures a balanced lifestyle with employment hubs, educational institutions, healthcare facilities, and recreational zones all within close proximity. As a part of Hiranandani Communities, the township continues to evolve, adding value to residents and investors alike. Its strategic location, top-tier amenities, and sustainability-driven planning make Hiranandani Panvel a benchmark for modern urban development.

Conclusion

Hiranandani Fortune City, Panvel, is more than just a township; it is a vision for the future of luxury and sustainable living. Developed by Hiranandani Communities, this project blends modern infrastructure, premium amenities, and eco-friendly initiatives, setting new standards in real estate. As Panvel emerges as a key urban center, this township offers a perfect blend of connectivity, comfort, and long-term value. Whether you are looking for a home or an investment, Hiranandani Panvel provides an unparalleled opportunity to be a part of a thriving, future-ready community.

10 notes

·

View notes

Text

Why Dr. Niranjan Hiranandani is Recognized as a Leader in Indian Real Estate?

Dr. Niranjan Hiranandani is a name synonymous with innovation and transformation in the Indian real estate industry. As one of the top real estate leaders in India, he has played a crucial role in shaping urban infrastructure and pioneering integrated township development. His forward-thinking approach to urban planning and his commitment to sustainability have set new benchmarks for modern cityscapes. His leadership has not only revolutionized the sector but has also influenced policies and set new standards for urban living.

With a deep understanding of market needs and future trends, Dr. Niranjan Hiranandani’s leadership has been instrumental in creating self-sustaining communities that blend luxury with functionality. His projects stand as testaments to sustainable architecture, smart infrastructure, and customer-centric development.

Revolutionizing Urban Living: The Hiranandani Legacy

Integrated Township Model

Dr Niranjan Hiranandani redefined urban development by introducing the integrated township concept in India. His vision led to the creation of landmark developments such as Hiranandani Gardens in Powai, Hiranandani Estate in Thane, and Hiranandani Fortune City in Panvel. These projects transformed underdeveloped areas into thriving residential and commercial hubs, offering a perfect blend of housing, business centers, recreational facilities, and retail spaces.

Sustainable and Green Development

Sustainability is at the core of urban planning by Niranjan Hiranandani. His developments focus on eco-conscious architecture, integrating features like rainwater harvesting, solar power, waste management systems, and tree-lined avenues. His projects prioritize green spaces and energy-efficient designs, promoting an environmentally responsible lifestyle for residents.

Creating New Geographies

A hallmark of Dr. Niranjan Hiranandani’s leadership is his ability to identify and develop emerging real estate corridors. He was one of the first to envision Panvel as a future residential and commercial hub, leading to the development of Hiranandani Fortune City. Similarly, he played a key role in transforming Oragadam in Chennai and Alibaug into high-potential growth destinations.

A Leader with a Customer-Centric Vision

Beyond Brick and Mortar

Unlike many developers who focus solely on construction, Dr. Niranjan Hiranandani emphasizes customer experience and lifestyle. He believes that real estate is not just about building structures but about creating communities where people thrive. His attention to detail, from architectural excellence to world-class amenities, ensures that each development offers a premium living experience.

Customer-First Approach

Understanding evolving homebuyer expectations has been a driving force behind Hiranandani Communities' success. Dr. Hiranandani prioritizes modern amenities, wellness-driven infrastructure, and convenience-oriented living, ensuring that each township offers everything from healthcare and education to retail and entertainment within close proximity.

Leadership and Innovation in Real Estate Development

Adopting Cutting-Edge Technology

As a business leader of the year, Dr. Niranjan Hiranandani has continuously embraced technological advancements in real estate. His projects incorporate PropTech solutions, smart city infrastructure, and digital innovation to enhance customer experiences and streamline urban planning.

Innovation in Construction

His pioneering approach has introduced precast technology, green building practices, and smart infrastructure to Indian real estate. By integrating sustainable materials and futuristic designs, his developments are built to withstand changing urban demands while ensuring minimal environmental impact.

Commitment to Sustainable Urban Development

Dr. Hiranandani’s commitment to sustainability-driven urban planning sets him apart from other Indian business leaders. His projects incorporate:

Energy-efficient homes with advanced insulation techniques.

Rainwater harvesting systems and sewage treatment plants to conserve water.

Eco-friendly landscapes with ample green spaces to reduce carbon footprints.

Through these initiatives, Hiranandani Communities has redefined real estate by promoting eco-conscious living without compromising on modern luxuries.

Industry Leadership and Influence

Policy Advocacy and Regulatory Contributions

Dr. Niranjan Hiranandani has played a pivotal role in shaping real estate regulations in India. As the former President of NAREDCO and an influential voice in CREDAI, he has been a strong advocate for transparency, ethical business practices, and industry reforms. His leadership has contributed to real estate policies that protect homebuyers while ensuring sustainable industry growth.

Thought Leadership

Recognized as one of the top Indian business leaders, Dr. Hiranandani frequently shares insights on real estate trends, infrastructure growth, and investment strategies. As a key speaker at industry summits, he educates stakeholders on the future of urban planning, sustainable development, and emerging real estate trends.

Expanding Beyond Real Estate: Healthcare & Education

Hiranandani Healthcare Initiatives

Beyond real estate, Dr. Niranjan Hiranandani has made significant contributions to healthcare. He is a trustee of Hiranandani Hospital, a renowned institution that has set benchmarks in transplants, critical care, and advanced medical treatments in Mumbai. His vision for healthcare emphasizes accessibility, affordability, and cutting-edge medical technology.

Education and Youth Empowerment

Dr. Hiranandani has also played a key role in education through initiatives like Hiranandani Foundation Schools. By investing in high-quality education, he is fostering the next generation of business leaders in India. His support for skill development programs and academic excellence highlights his commitment to holistic nation-building.

Niranjan Hiranandani: The Architect of Modern Urban India

Dr. Niranjan Hiranandani’s legacy extends far beyond real estate. As an industry pioneer, innovator, and philanthropist, his contributions have reshaped the landscape of urban India. By blending sustainability, technology, and customer-centric development, he continues to set new benchmarks in real estate.

His influence reaches beyond buildings and into communities, driving positive socio-economic change and setting an example for future entrepreneurs. With an unwavering commitment to excellence, he remains one of the most respected business leaders in India.

Conclusion: A Legacy That Inspires Future Entrepreneurs