#Autonomous Robotics 2023

Text

#Robotics and job market impact#Automation and employment effects#Robotics and job displacement#Impact of robots on labor market#Robotics and future of work#Automation and job opportunities#Robotics and career prospects#Impact of automation on workforce#Robotics and job security#Robotics and employment trends#Robotics 2023#Future of Robotics#Robotics and AI 2023#Robotics Industry 2023#Smart Robotics 2023#Autonomous Robotics 2023#Robotics in manufacturing 2023#IoT and Robotics 2023#Robotics and automation 2023#Industry 4.0 and Robotics 2023

0 notes

Text

Jidu ROBO-02 Concept, 2023. Fledgling autonomous EV marque Jidu have revealed a second model in concept form. Few details have been disclosed about the robotic saloon, but its SUV sibling, the ROBO-01, is equipped with two Nvidia Orin X chips that deliver up to 508 TOPS of computing power. These help control 31 different sensors, including 2 LiDARs, 5 millimeter-wave radars, 12 ultrasonic radars, and 12 high-definition cameras. Like the 01, the 02 does without door handles; instead, the doors can be opened using voice command or Bluetooth.
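As a quick sanity check on the figures quoted above, the short sketch below adds up the listed sensors and splits the quoted compute across the two chips. The even split between chips is an assumption made for illustration, not something the post states.

```python
# Sensor counts as quoted in the post for the ROBO-01.
sensor_counts = {
    "lidar": 2,
    "millimeter_wave_radar": 5,
    "ultrasonic_radar": 12,
    "hd_camera": 12,
}
total_sensors = sum(sensor_counts.values())

# Compute figures as quoted; dividing evenly between chips is an assumption.
orin_x_chips = 2
total_tops = 508
tops_per_chip = total_tops / orin_x_chips

print(f"sensors: {total_sensors}, TOPS per chip (assumed even split): {tops_per_chip}")
```

The sensor breakdown does sum to the 31 sensors the post mentions.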

#Jidu#Jidu ROBO-02#concept#prototype#2023#autonomous#EV#electric car#self driving cars#robot car#AI#design study

93 notes

Text

Bias in AI: Understanding and mitigating algorithmic discrimination

New Post has been published on https://thedigitalinsider.com/bias-in-ai-understanding-and-mitigating-algorithmic-discrimination/

In order to mitigate bias within artificial intelligence (AI), I want to speak metaphorically to set a precedent for how we, as individuals and organizations, should approach and use AI in thought and action. A car will go where you point it to go. It will go as fast as you decide to make it go. A car is only as good as its driver.

Just as a driver’s decisions can influence the safety and well-being of passengers and bystanders, the decisions made in designing and implementing AI systems have a similar, but demonstrably greater, impact on society as a whole, where technology has influenced everything from healthcare to personal dignity and identity.

Therefore, a driver must understand the car they are operating, and they must understand everything around the car in all directions. So, a car is really only as good as its driver. All drivers take lives into their own hands when they operate vehicles.

With all that being said, AI is much like a car in terms of how humans operate it and the impact it can have.

Let’s talk more about how we can use this analogy to use and develop AI in a way that is safe for all.

Bias and algorithms: The check engine lights of AI

When considering AI in its systemic entirety, we must view it as a time-laden system and as a reflection of society as a whole, encompassing the past, present, and future, rather than merely a component or an event.

First and foremost, AI represents many facets, with one of the most crucial being humanity itself, encompassing all that we are, have been, and will become, both the positive and negative aspects.

It’s impractical to saturate AI solely with positive data from human existence, as this would provide little basis and essential benchmarks for distinguishing between right and wrong.

Bias can manifest in various forms, not always directly related to humans. For instance, consider a scenario where we instruct AI to display images of apples. If the AI has only been exposed to data on red apples, it may exhibit bias towards showing red apples rather than green ones.

Thus, bias isn’t always human-centric, but there is undoubtedly a “food chain” or “ripple effect” within AI.

Analogous to the potential consequences of bees becoming extinct and leading to human extinction down the line, the persistence of bias, such as in the case of red and green apples, may not immediately impact humans but could have significant long-term effects.

Despite the seeming triviality of distinctions between red and green apples, any gap in data must be taken seriously, as it can perpetuate bias and potentially cause irreversible harm to humans, our planet, and animals.
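The apple example can be made concrete with a small check for gaps in training data. The sketch below is only an illustration: the function names, the 10% threshold, and the label counts are all hypothetical, and a real audit would look at far more than label frequencies.

```python
from collections import Counter

def label_shares(labels):
    """Return each label's share of the dataset as a fraction of all examples."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}

def underrepresented(labels, expected, min_share=0.10):
    """List expected labels whose share is below min_share, including absent ones."""
    shares = label_shares(labels)
    return sorted(label for label in expected if shares.get(label, 0.0) < min_share)

# Hypothetical training labels: almost everything the model has seen is a red apple.
training_labels = ["red"] * 98 + ["yellow"] * 2
gaps = underrepresented(training_labels, expected={"red", "green", "yellow"})
print(gaps)  # the colors the dataset barely covers or misses entirely
```

A check like this does not remove bias by itself; it only flags where the data is thin, so that someone can decide whether the gap matters.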

For example, consider the question I posed to ChatGPT here:

Avoiding AI Accidents

To begin, I want to highlight algorithmic discrimination through a conversation I had with Ayodele Odubela, the Ethical Advisor for my fund, Q1 Velocity Venture Capital:

“My first encounter with bias in AI was at the Data for Black Lives Conference. I was exposed to the various types of discrimination algorithms perpetuate and was shocked by how pervasive the weaponization of big data was.

I also encountered difficulties when using open-source facial recognition tools, the kind of failures documented in the popular study Gender Shades, conducted by Joy Buolamwini, a researcher at the MIT Media Lab, along with Timnit Gebru, a former PhD student at Stanford University.

The tools couldn’t identify my face, and when I proposed an AI camera tuned to images of Black people, my graduate advisors discouraged the validity of the project.

I realized that the issue of bias in AI wasn’t only about the datasets, but also the research environments, reward systems, funding sources, and deployment methods that can lead to biased outcomes even when assuming everyone involved has the best intent. This is what originally sparked my interest in the field of bias in AI.”

Poor algorithmic design can have devastating and sometimes irreversible impacts on organizations and consumers alike.

Just like a car accident due to poor structural design, an AI accident can cripple and total an organization.

There are numerous consequences that can result from poor algorithmic design, including but not limited to reputational damage, regulatory fines, missed opportunities, reduced employee productivity, customer churn, and psychological damages.

Bad algorithmic design is akin to tooth decay; once it’s been hardwired into the organization’s technical infrastructure, the digital transformation needed to shift systems into a positive state can be overwhelming in terms of costs and time.

Organizations can work to mitigate this by having departments dedicated entirely to their AI ecosystem, serving both internal and external users.

A department entirely dedicated to AI, utilizing a shared service model such as an Enterprise Resource Planning (ERP) system, promotes and fosters a culture of not only continuous improvement but also a constant state of auditing and monitoring.

In this setup, the shared service ensures that the AI remains completely transparent and relevant for every type of user, encompassing every aspect and component of the organization, from department leaders to the C-Suite.

However, it’s not just bad algorithms we have to be concerned about because even by itself, a perfect algorithm is only perfect for the data it represents. Let’s discuss data in the next section.

Data rules: When algorithms break the rules, accidents happen

Data is the new quarterback of technology, and it will dictate the majority of AI outcomes. I’d even bet that most expert witness testimonies on AI will point back to data.

While my statement may not apply universally to all AI-related legal cases, it does reflect a common trend in how data is utilized and emphasized in expert witness testimonies involving AI technologies.

The problem with algorithms is the data on which they run. The only way to create a data strategy that competently supports algorithms is to think about the organization at its deepest stage of growth potential or its most senior state of operation possible. This can be very difficult for young organizations, but it should be much easier for larger organizations.

Young organizations who solve new use cases and bring new products to the market have a limited vision of the future because they’re not mature. However, an organization that’s been serving its customers for many years would be very attuned to its current and prospective market.

So, a young organization would need to build its data strategy slowly for a small number of use cases that have a high degree of stability.

A high degree of stability would mean that the organization is bringing products to the market that can solve use cases over the next 15-20 years at least. An organization is most likely to find a case like this in an industry with a matured inflection point but a high barrier to entry and wide user relevancy with low complexity for maximum user adoption.

It’s likely that if a startup has achieved a significant enough use case, it may have a patent or a trademark associated with its moat.

“Startups with patents and trademarks are 10 times more successful in securing funding.” (European Patent Office, 2023)

For larger organizations, poor data strategy often stems from systemic operational gaps and inefficiencies.

Before any large organization builds out its data strategy, it should consider if its organization is ready for digital transformation to AI; otherwise, building out the data strategy could be in vain.

Considerations should include assessing leadership, employee and customer commitment, technical infrastructure, and most importantly, ensuring cost and time justification from a customer standpoint.

Out of all the considerations needing to be made, culture is the biggest barometer to measure in all of this because, as I’ve said before, AI has to be built around culture. Not only that, there is a social engineering aspect inherent to AI and imperative to an organization’s AI strength, and we’ll expand on this later.

Cultural factors such as attitudes towards data-driven decision-making, acceptance, and willingness to adopt new technologies and processes play a significant role.

A great example of this is people’s willingness to share their data freely with social media companies at the cost of their own privacy and control of their data. Is it ethical that big tech companies protect themselves with the “fine print” and allow this just because consumers allow it at their own expense?

For example, is it ethical to allow a person to smoke cigarettes even though we warned them not to on the Surgeon General’s label? Society seems to think it is totally ok; it’s your choice, right?

Is it ethical to allow someone to kill themselves? Most people would say no.

These are questions for you to answer, not me. Even if the companies protect themselves with the fine print and regulatory abidance, if the consumers don’t allow it, the data strategy will never work, and the AI will fail. Either way, we know there is a market for it.

Your data strategy is built around what consumers allow, not what your fine print dictates, but again, just because consumers allow it, does not mean it should be allowed by regulators and organizations.

During my interactions with Ayodele, she gave a great explanation of how large social media companies are letting their ad-driven engagement missions compromise ethical boundaries, resulting in the proliferation of harmful content that ultimately pollutes their platforms.

She goes on to explain how harmful content is promoted just to drive ad revenues from engagement, and when we develop models trained on this, they end up perpetuating those values by default. This human bias gets encoded, and AI models can learn that “Iraqi” is closer to “terrorist” than “American” is.
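One common way to see this kind of encoded association is to compare how close word vectors sit to one another using cosine similarity. The sketch below uses tiny, made-up vectors and placeholder names (`group_a`, `group_b`, `negative_term`); none of it comes from a real model, and it is meant only to show the shape of the measurement.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def association_gap(embeddings, group_a, group_b, attribute):
    """Positive result: group_a sits closer to the attribute than group_b does."""
    return (cosine_similarity(embeddings[group_a], embeddings[attribute])
            - cosine_similarity(embeddings[group_b], embeddings[attribute]))

# Hypothetical 3-dimensional vectors invented for illustration only.
# Real embeddings are learned from text and have hundreds of dimensions.
toy_embeddings = {
    "group_a": [0.9, 0.1, 0.3],
    "group_b": [0.1, 0.9, 0.3],
    "negative_term": [0.8, 0.2, 0.1],
}
gap = association_gap(toy_embeddings, "group_a", "group_b", "negative_term")
print(f"association gap: {gap:.3f}")
```

A gap far from zero in a real model would suggest that the training text paired one group with the negative term more often than the other, which is the pattern Ayodele describes.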

In summation, she closes with the fact that AI cannot act outside of the bounds it’s given.

I think Ayodele makes it abundantly clear that data is the problem facing organizations today. Data gets perpetuated within data-driven companies, which is really most companies today. Particularly with social media tech companies, this data gets perpetuated while it goes through multiple shares and engagements on a social media tech company’s platform.

I thought it was important to illustrate the measure of reported hate speech and hate media that gets generated and shared on the internet’s social media platforms. For example, as depicted in the bar graph below, for every quarter from the 4th quarter of 2017 through the 3rd quarter of 2023, we can see the millions of harmful content pieces that Facebook has removed from its platform.

(Statista, 2023)

Whether they’re in a large or small organization, all leaders need to engage their organizations to become data-ready. Every single organizational leader in the world is likely putting their customers, employees, and organization at risk if they are not implementing a data and AI strategy.

This situation is very similar to that of, for example, an iPhone user on an outdated model or an organization running on a legacy programming language like COBOL.

Due to legacy operating systems, they can’t keep up with the rest of the developing societal infrastructure, and aside from underperformance at the least, the technology creates a whole list of vulnerabilities that could sink the ship of an organization or significantly compromise an iPhone user’s personal security.

For instance, imagine someone using a very old smartphone model that’s no longer receiving software updates or security patches. Without the updates, the phone becomes vulnerable to attacks.

Additionally, if the phone were to develop hardware issues, finding replacement parts might not be possible, which may create additional vulnerabilities to cyberattacks.

Unpatched older devices are especially exposed to “zero-click” exploits, where the user doesn’t have to do anything at all: the device can be idle or completely locked and still be attacked by hackers simply due to its own legacy vulnerabilities.

By creating a future-thinking culture of learning and a receptive mentality, leaders will be able to better map out the best opportunities and the best data for their organizations. Systematically evaluating these areas and taking the proper preemptive protocols allows organizations to identify strengths, weaknesses, and areas for improvement needed before building out their AI data strategy.

Mapping origins: Where does bias come from?

One of the challenges with AI is that, for it to help us, it has to hurt us first. No pain, no gain, right? Everything gets better once it’s first broken. As we learn our vulnerabilities with AI, only then can we make it better. Not that we look to purposefully injure ourselves, but a new product or revolution is not always the most efficient at first.

Take Paul Virilio’s quote below for example:

“When you invent the ship, you also invent the shipwreck; when you invent the plane you also invent the plane crash; and when you invent electricity, you invent electrocution…Every technology carries its own negativity, which is invented at the same time as technical progress.”

AI has to understand the past and present of humans, our Earth, and everything that makes our society operate. It must incorporate everything humans have come to know, love, and hate in order to have a scale on which to operate.

AI systems must incorporate both positive and negative biases and stereotypes without acting on them. We need bias. We don’t need to get rid of it, we just need to balance the bias and train the AI on what to act on and what to not act on.

A good analogy is that rats, despite their uncleanliness, are still needed in our world, but we just don’t need them in our houses.

Rats are well-known as carriers of diseases that can be harmful to humans and other animals, but they also remain as hosts for parasites and pathogens. The disappearance of rats could disrupt the livelihoods of parasites and pathogens, potentially affecting other species like humans in negative ways.

In urban areas, rats are a major presence and play important roles in waste management by consuming organic matter. Without rats to help break down organic waste, urban societies could experience changes in waste management processes.

So, coming back to the main point, we need some aspects of negative bias.

These incorporations manifest as biases within algorithms, which can either be minimized, managed, or exacerbated.

For instance, a notable tech company faced criticism in 2015 when its AI system incorrectly classified images of Black individuals as “gorillas” or “monkeys” due to inadequate training data.

Prompted by public outcry, the company took swift action to rectify the issue. However, their initial response was to simply eliminate the possibility of the AI labeling any image as a gorilla, chimpanzee, or monkey, including images of the animals themselves.

While this action aimed to prevent further harm, it underscores the complexity of addressing bias in AI systems. Merely removing entire categories from consideration is not a viable solution.

What’s imperative is not only ensuring that AI systems have access to sufficient and accurate data for distinguishing between individuals of different races and species, but also comprehending the ethical implications underlying such distinctions. Thus, AI algorithms must incorporate bias awareness alongside additional contextual information to discern what is appropriate and what isn’t.

Through this approach, AI algorithms can be developed to operate in a manner that prioritizes safety and fairness for all individuals involved.

“Bias isn’t really the right word for what we’re trying to get at, especially given it has multiple meanings even in the context of AI. Algorithmic discrimination is better described when algorithms perpetuate existing societal biases and result in harm. This can mean not getting hired for a job, not being recognized by a facial recognition system, or getting classified as more risky based on some aspect like your race.”

— Ayodele Odubela

To demonstrate the real potential of AI, I ran the large language model, ChatGPT, through a moral aptitude test, the MFQ30 Moral Questionnaire. See the results below:

“The average politically moderate American’s scores are 20.2, 20.5, 16.0, 16.5, and 12.6. Liberals generally score a bit higher than that on harm/care and fairness/reciprocity, and much lower than that on the other three foundations. Conservatives generally show the opposite pattern.” (Moral Foundations Questionnaires, n.d.)

Based on the MFQ30 Moral Questionnaire, at a low-grade level, AI does score higher than humans, but this is without the nuance and complexity of context. At a baseline and conditionally speaking, AI already knows right from wrong, but can only perform at various levels of complexity and in certain domains.

In short, AI will play pivotal, yet specific roles in our society, and it must not be programmed to be deployed outside of its own domain capacity by itself. AI must be an expert without flaw in its deliverables with Humans in the Loop (HITL).

Autonomous robots are great examples of AI that’s progressed to a level that can operate fully autonomously or with Humans Out of the Loop. They can perform tasks such as manufacturing, warehouse automation, and exploration.

For example, an AI robotic surgeon has to make the choice while in the emergency room to either prolong the life of an incurable, dying person in pain or to let them die.

An AI robotic surgeon may have only been designed to perform surgeries that save lives, and in certain situations, it may prove more ethical to let the person die; a machine cannot make this choice because a machine or algorithm doesn’t know the significance of life.

A machine can offer perspectives on the meaning of life, but it can’t tell you what the meaning of life is from person to person or from society to society.

As mentioned earlier, there’s a social engineering aspect inherent to AI and imperative to an organization’s AI strength. Much like cybersecurity, AI has a social engineering aspect to it which, if it goes unaddressed, can severely damage an organization’s or an individual’s credibility.

As demonstrated above, we can see the potentially damaging effects of media. A lot of AI bias gets created and perpetuated not by the algorithms themselves, but by what users create, which unrestricted algorithms then learn from and therefore reinforce.

I believe it’s imperative that we look at AI regulation from two angles:

1. Consumers

2. Companies

Both need to be monitored and policed for the way in which they build and use AI. Just as a license is required to operate a gun, I believe a license should be required for consumers to operate AI. Companies that violate AI laws should encounter massive taxation, and systems like OSHA can certainly add oversight value across the board.

Much like operating a car, there should be massive fines and penalties for violating the rules of the road for AI. In the same vein, identification numbers could be issued for AI use, much as governments issue social security numbers.

Conclusion

In the realm of AI and its challenges, the metaphorical journey of driving a car provides a compelling analogy. Just as a driver steers a car’s path, human decisions guide the trajectory and impact of AI systems.

Bias within AI, stemming from inadequate data or societal biases, presents significant hurdles. Yet, addressing bias demands a comprehensive approach, embracing data transparency, algorithmic accountability, and ethical considerations. Organizations must foster a culture of accountability and openness to mitigate the risks linked with biased AI.

Moreover, regulatory frameworks and oversight are vital to ensure ethical AI deployment. Through collaborative endeavors among consumers, companies, and regulators, AI can be wielded responsibly for societal benefit while mitigating harm from biases and algorithms.

#2023#ai#AI bias#AI regulation#AI strategy#AI systems#algorithm#Algorithms#Animals#approach#Aptitude#artificial#Artificial Intelligence#automation#autonomous robots#awareness#barrier#bees#benchmarks#Bias#Big Data#board#Building#C-suite#chatGPT#collaborative#Companies#complexity#comprehensive#conference

0 notes

Text

AI's Vegas Roar: Racing into the Future 🏎🤖 #AIF1 #VegasRevolution #Formula1 #LasVegas #GrandPrix Daniel Reitberg

#artificial intelligence#machine learning#deep learning#technology#robotics#autonomous vehicles#las vegas nevada#las vegas gp 2023#las vegas grand prix#formula 1#formula one#racing#cars#race

0 notes

Text

Three AI insights for hard-charging, future-oriented smartypantses

MERE HOURS REMAIN for the Kickstarter for the audiobook for The Bezzle, the sequel to Red Team Blues, narrated by @wilwheaton! You can pre-order the audiobook and ebook, DRM free, as well as the hardcover, signed or unsigned. There’s also bundles with Red Team Blues in ebook, audio or paperback.

Living in the age of AI hype makes demands on all of us to come up with smartypants prognostications about how AI is about to change everything forever, and wow, it's pretty amazing, huh?

AI pitchmen don't make it easy. They like to pile on the cognitive dissonance and demand that we all somehow resolve it. This is a thing cult leaders do, too – tell blatant and obvious lies to their followers. When a cult follower repeats the lie to others, they are demonstrating their loyalty, both to the leader and to themselves.

Over and over, the claims of AI pitchmen turn out to be blatant lies. This has been the case since at least the age of the Mechanical Turk, the 18th-century chess-playing automaton that was actually just a chess player crammed into the base of an elaborate puppet that was exhibited as an autonomous, intelligent robot.

The most prominent Mechanical Turk huckster is Elon Musk, who habitually, blatantly and repeatedly lies about AI. He's been promising "full self driving" Teslas in "one to two years" for more than a decade. Periodically, he'll "demonstrate" a car that's in full-self driving mode – which then turns out to be a canned, recorded demo:

https://www.reuters.com/technology/tesla-video-promoting-self-driving-was-staged-engineer-testifies-2023-01-17/

Musk even trotted out an autonomous, humanoid robot on-stage at an investor presentation, failing to mention that this mechanical marvel was just a person in a robot suit:

https://www.siliconrepublic.com/machines/elon-musk-tesla-robot-optimus-ai

Now, Musk has announced that his junk-science neural interface company, Neuralink, has made the leap to implanting neural interface chips in a human brain. As Joan Westenberg writes, the press have repeated this claim as presumptively true, despite its wild implausibility:

https://joanwestenberg.com/blog/elon-musk-lies

Neuralink, after all, is a company notorious for mutilating primates in pursuit of showy, meaningless demos:

https://www.wired.com/story/elon-musk-pcrm-neuralink-monkey-deaths/

I'm perfectly willing to believe that Musk would risk someone else's life to help him with this nonsense, because he doesn't see other people as real and deserving of compassion or empathy. But he's also profoundly lazy and is accustomed to a world that unquestioningly swallows his most outlandish pronouncements, so Occam's Razor dictates that the most likely explanation here is that he just made it up.

The odds that there's a human being beta-testing Musk's neural interface with the only brain they will ever have aren't zero. But I give it the same odds as the Raelians' claim to have cloned a human being:

https://edition.cnn.com/2003/ALLPOLITICS/01/03/cf.opinion.rael/

The human-in-a-robot-suit gambit is everywhere in AI hype. Cruise, GM's disgraced "robot taxi" company, had 1.5 remote operators for every one of the cars on the road. They used AI to replace a single, low-waged driver with 1.5 high-waged, specialized technicians. Truly, it was a marvel.

Globalization is key to maintaining the guy-in-a-robot-suit phenomenon. Globalization gives AI pitchmen access to millions of low-waged workers who can pretend to be software programs, allowing us to pretend to have transcended capitalism's exploitation trap. This is also a very old pattern – just a couple decades after the Mechanical Turk toured Europe, Thomas Jefferson returned from the continent with the dumbwaiter. Jefferson refined and installed these marvels, announcing to his dinner guests that they allowed him to replace his "servants" (that is, his slaves). Dumbwaiters don't replace slaves, of course – they just keep them out of sight:

https://www.stuartmcmillen.com/blog/behind-the-dumbwaiter/

So much AI turns out to be low-waged people in a call center in the Global South pretending to be robots that Indian techies have a joke about it: "AI stands for 'absent Indian'":

https://pluralistic.net/2024/01/29/pay-no-attention/#to-the-little-man-behind-the-curtain

A reader wrote to me this week. They're a multi-decade veteran of Amazon who had a fascinating tale about the launch of Amazon Go, the "fully automated" Amazon retail outlets that let you wander around, pick up goods and walk out again, while AI-enabled cameras totted up the goods in your basket and charged your card for them.

According to this reader, the AI cameras didn't work any better than Tesla's full-self driving mode, and had to be backstopped by a minimum of three camera operators in an Indian call center, "so that there could be a quorum system for deciding on a customer's activity – three autopilots good, two autopilots bad."
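The "quorum system" the reader describes can be read as a simple majority vote among the camera operators. The sketch below is a guess at that idea under stated assumptions: the function name, the label strings, and the rule that two of three matching votes settle a decision are all hypothetical, not details from Amazon or from the reader's account.

```python
from collections import Counter

def quorum_decision(votes, quorum=2):
    """Return the label that at least `quorum` reviewers agree on, else None."""
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count >= quorum else None

# Three hypothetical reviewers watching the same clip of a shopper.
agreed = quorum_decision(["took_item", "took_item", "put_back"])
disputed = quorum_decision(["took_item", "put_back", "unclear"])
print(agreed, disputed)
```

With three reviewers, any two matching votes settle the question; a three-way split returns `None`, which a real system would presumably have to escalate somewhere.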

Amazon got a ton of press from the launch of the Amazon Go stores. A lot of it was very favorable, of course: Mister Market is insatiably horny for firing human beings and replacing them with robots, so any announcement that you've got a human-replacing robot is a surefire way to make Line Go Up. But there was also plenty of critical press about this – pieces that took Amazon to task for replacing human beings with robots.

What was missing from the criticism? Articles that said that Amazon was probably lying about its robots, that it had replaced low-waged clerks in the USA with even-lower-waged camera-jockeys in India.

Which is a shame, because that criticism would have hit Amazon where it hurts, right there in the ole Line Go Up. Amazon's stock price boost off the back of the Amazon Go announcements represented the market's bet that Amazon would evert out of cyberspace and fill all of our physical retail corridors with monopolistic robot stores, moated with IP that prevented other retailers from similarly slashing their wage bills. That unbridgeable moat would guarantee Amazon generations of monopoly rents, which it would share with any shareholders who piled into the stock at that moment.

See the difference? Criticize Amazon for its devastatingly effective automation and you help Amazon sell stock to suckers, which makes Amazon executives richer. Criticize Amazon for lying about its automation, and you clobber the personal net worth of the executives who spun up this lie, because their portfolios are full of Amazon stock:

https://sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

Amazon Go didn't go. The hundreds of Amazon Go stores we were promised never materialized. There's an embarrassing rump of 25 of these things still around, which will doubtless be quietly shuttered in the years to come. But Amazon Go wasn't a failure. It allowed its architects to pocket massive capital gains on the way to building generational wealth and establishing a new permanent aristocracy of habitual bullshitters dressed up as high-tech wizards.

"Wizard" is the right word for it. The high-tech sector pretends to be science fiction, but it's usually fantasy. For a generation, America's largest tech firms peddled the dream of imminently establishing colonies on distant worlds or even traveling to other solar systems, something that is still so far in our future that it might well never come to pass:

https://pluralistic.net/2024/01/09/astrobezzle/#send-robots-instead

During the Space Age, we got the same kind of performative bullshit. On The Well David Gans mentioned hearing a promo on SiriusXM for a radio show with "the first AI co-host." To this, Craig L Maudlin replied, "Reminds me of fins on automobiles."

Yup, that's exactly it. An AI radio co-host is to artificial intelligence as a Cadillac Eldorado Biarritz tail-fin is to interstellar rocketry.

Back the Kickstarter for the audiobook of The Bezzle here!

If you’d like an essay-formatted version of this post to read or share, here’s a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/01/31/neural-interface-beta-tester/#tailfins

#pluralistic#elon musk#neuralink#potemkin ai#neural interface beta-tester#full self driving#mechanical turks#ai#amazon#amazon go#clm#joan westenberg

1K notes

Text

September 2, 2023 - a San Francisco local disables a Cruise self-driving car. If you live in a place where Silicon Valley bullshit like driverless cars or autonomous delivery robots or doorbell cameras is becoming commonplace it might be a good idea to follow the example of this trendsetter and start carrying around a ski mask and a hammer, just in case. [video]

#direct action#driverless car#cruise#usa#san francisco#vandalism#black bloc#anti-capitalism#technology#silicon valley#gif#2023#inspiration#anti-car#cyberpunk#driverless cars#anti-surveillance

630 notes

Text

So I don't know how people on this app feel about the shit-house that is TikTok but in the US right now the ban they're trying to implement on it is a complete red herring and it needs to be stopped.

They are quite literally trying to implement Patriot Act 2.0 with the RESTRICT Act and using TikTok and China to scare the American public into buying into it wholesale when this shit will change the face of the internet. Here are some excerpts from what the bill would cover on the Infrastructure side:

SEC. 5. Considerations.

(a) Priority information and communications technology areas.—In carrying out sections 3 and 4, the Secretary shall prioritize evaluation of—

(1) information and communications technology products or services used by a party to a covered transaction in a sector designated as critical infrastructure in Policy Directive 21 (February 12, 2013; relating to critical infrastructure security and resilience);

(2) software, hardware, or any other product or service integral to telecommunications products and services, including— (A) wireless local area networks;

(B) mobile networks;

(C) satellite payloads;

(D) satellite operations and control;

(E) cable access points;

(F) wireline access points;

(G) core networking systems;

(H) long-, short-, and back-haul networks; or

(I) edge computer platforms;

(3) any software, hardware, or any other product or service integral to data hosting or computing service that uses, processes, or retains, or is expected to use, process, or retain, sensitive personal data with respect to greater than 1,000,000 persons in the United States at any point during the year period preceding the date on which the covered transaction is referred to the Secretary for review or the Secretary initiates review of the covered transaction, including— (A) internet hosting services;

(B) cloud-based or distributed computing and data storage;

(C) machine learning, predictive analytics, and data science products and services, including those involving the provision of services to assist a party utilize, manage, or maintain open-source software;

(D) managed services; and

(E) content delivery services;

(4) internet- or network-enabled sensors, webcams, end-point surveillance or monitoring devices, modems and home networking devices if greater than 1,000,000 units have been sold to persons in the United States at any point during the year period preceding the date on which the covered transaction is referred to the Secretary for review or the Secretary initiates review of the covered transaction;

(5) unmanned vehicles, including drones and other aerials systems, autonomous or semi-autonomous vehicles, or any other product or service integral to the provision, maintenance, or management of such products or services;

(6) software designed or used primarily for connecting with and communicating via the internet that is in use by greater than 1,000,000 persons in the United States at any point during the year period preceding the date on which the covered transaction is referred to the Secretary for review or the Secretary initiates review of the covered transaction, including— (A) desktop applications;

(B) mobile applications;

(C) gaming applications;

(D) payment applications; or

(E) web-based applications; or

(7) information and communications technology products and services integral to— (A) artificial intelligence and machine learning;

(B) quantum key distribution;

(C) quantum communications;

(D) quantum computing;

(E) post-quantum cryptography;

(F) autonomous systems;

(G) advanced robotics;

(H) biotechnology;

(I) synthetic biology;

(J) computational biology; and

(K) e-commerce technology and services, including any electronic techniques for accomplishing business transactions, online retail, internet-enabled logistics, internet-enabled payment technology, and online marketplaces.

(b) Considerations relating to undue and unacceptable risks.—In determining whether a covered transaction poses an undue or unacceptable risk under section 3(a) or 4(a), the Secretary— (1) shall, as the Secretary determines appropriate and in consultation with appropriate agency heads, consider, where available— (A) any removal or exclusion order issued by the Secretary of Homeland Security, the Secretary of Defense, or the Director of National Intelligence pursuant to recommendations of the Federal Acquisition Security Council pursuant to section 1323 of title 41, United States Code;

(B) any order or license revocation issued by the Federal Communications Commission with respect to a transacting party, or any consent decree imposed by the Federal Trade Commission with respect to a transacting party;

(C) any relevant provision of the Defense Federal Acquisition Regulation and the Federal Acquisition Regulation, and the respective supplements to those regulations;

(D) any actual or potential threats to the execution of a national critical function identified by the Director of the Cybersecurity and Infrastructure Security Agency;

(E) the nature, degree, and likelihood of consequence to the public and private sectors of the United States that would occur if vulnerabilities of the information and communications technologies services supply chain were to be exploited; and

(F) any other source of information that the Secretary determines appropriate; and

(2) may consider, where available, any relevant threat assessment or report prepared by the Director of National Intelligence completed or conducted at the request of the Secretary.

Look at that, does that look like it just covers the one app? NO! This would cover EVERYTHING that so much as LOOKS at the internet from the point this bill goes live.

It gets worse though, you wanna see what the penalties are?

(b) Civil penalties.—The Secretary may impose the following civil penalties on a person for each violation by that person of this Act or any regulation, order, direction, mitigation measure, prohibition, or other authorization issued under this Act:

(1) A fine of not more than $250,000 or an amount that is twice the value of the transaction that is the basis of the violation with respect to which the penalty is imposed, whichever is greater.

(2) Revocation of any mitigation measure or authorization issued under this Act to the person.

(c) Criminal penalties.—

(1) IN GENERAL.—A person who willfully commits, willfully attempts to commit, or willfully conspires to commit, or aids or abets in the commission of an unlawful act described in subsection (a) shall, upon conviction, be fined not more than $1,000,000, or if a natural person, may be imprisoned for not more than 20 years, or both.

(2) CIVIL FORFEITURE.—

(A) FORFEITURE.—

(i) IN GENERAL.—Any property, real or personal, tangible or intangible, used or intended to be used, in any manner, to commit or facilitate a violation or attempted violation described in paragraph (1) shall be subject to forfeiture to the United States.

(ii) PROCEEDS.—Any property, real or personal, tangible or intangible, constituting or traceable to the gross proceeds taken, obtained, or retained, in connection with or as a result of a violation or attempted violation described in paragraph (1) shall be subject to forfeiture to the United States.

(B) PROCEDURE.—Seizures and forfeitures under this subsection shall be governed by the provisions of chapter 46 of title 18, United States Code, relating to civil forfeitures, except that such duties as are imposed on the Secretary of Treasury under the customs laws described in section 981(d) of title 18, United States Code, shall be performed by such officers, agents, and other persons as may be designated for that purpose by the Secretary of Homeland Security or the Attorney General.

(3) CRIMINAL FORFEITURE.—

(A) FORFEITURE.—Any person who is convicted under paragraph (1) shall, in addition to any other penalty, forfeit to the United States—

(i) any property, real or personal, tangible or intangible, used or intended to be used, in any manner, to commit or facilitate the violation or attempted violation of paragraph (1); and

(ii) any property, real or personal, tangible or intangible, constituting or traceable to the gross proceeds taken, obtained, or retained, in connection with or as a result of the violation.

(B) PROCEDURE.—The criminal forfeiture of property under this paragraph, including any seizure and disposition of the property, and any related judicial proceeding, shall be governed by the provisions of section 413 of the Controlled Substances Act (21 U.S.C. 853), except subsections (a) and (d) of that section.

You read that right, you could be fined up to A MILLION FUCKING DOLLARS for knowingly violating the RESTRICT Act, so all those people telling you to "just use a VPN" to keep using TikTok? Guess what? That falls under the criminal guidelines of this bill and they're giving you some horrible fucking advice.

Also, VPNs as a whole, if this bill passes, will take a goddamn nose dive in this country because they are another thing that will be covered by this bill.

They chose the perfect name for it, RESTRICT, because that's what it's going to do to our freedoms in this so-called "land of the free".

Please, if you are a United States citizen of voting age, reach out to your legislators and tell them you do not want this to pass and you will vote against them in the next primary if it does. This is a make-or-break moment for you if you're younger. Do not allow your generation to suffer a second Patriot Act like those of us who unfortunately allowed the first one to happen.

And if you support this, I can only assume you're delusional or a paid shill, either way I hope you rot in whatever hell you believe in.

#politics#restrict bill#tiktok#tiktok ban#s.686#us politics#tiktok senate hearing#land of the free i guess#patriot act#patriot act 2.0

895 notes

·

View notes

Text

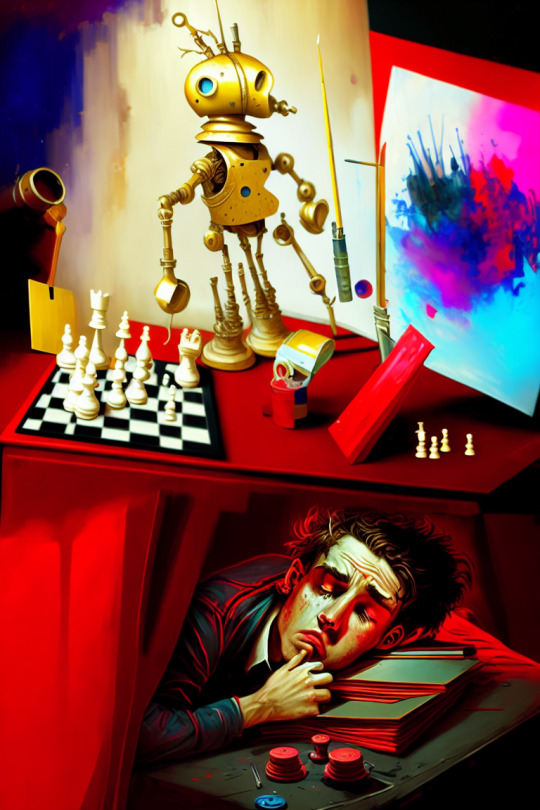

The AI Boom and the Mechanical Turk

A hidden, overworked man operating a painting, chess-playing robot, generated with the model Dreamlike Diffusion on Simple Stable, ~4 hours. Created under the Code of Ethics of Are We Art Yet?

In 1770, an inventor named Wolfgang von Kempelen created a machine that astounded the world, a device that prompted an all-new understanding of what human engineering could produce: the Automaton Chess Player, also known as the Mechanical Turk. Not only could it play a strong game of chess against a human opponent, defeating many challengers including statesmen such as Benjamin Franklin and Napoleon Bonaparte, it could also complete a knight's tour, a puzzle where one must use a knight to visit each square on the board exactly once. It was a marvel of mechanical engineering, able to not only choose its moves, but move the pieces itself with its mechanical hands.

It was also a giant hoax.

What it was: genuinely a marvel of mechanical engineering, an impressively designed puppet that was able to manipulate pieces on a chessboard.

What it wasn't: an automaton of any kind, let alone one that could understand chess well enough to play at a human grandmaster's level. Instead, the puppet was manipulated by a human chess grandmaster hidden inside the stage setup.

So, here and now, in 2023, we have writers and actors on a drawn-out and much-needed strike, in part because production companies are trying to "replace their labor with AI".

How is this relevant to the Mechanical Turk, you ask?

Because just like back then, what's being proposed is, at best, a massive exaggeration of how the proposed labor shift could feasibly work. Just as we had the technology then to create an elaborate puppet to move chess pieces, but not to make it choose its moves for itself or move autonomously, we have the technology now to help people flesh out their ideas faster than ever before, using different skill sets - but we DON'T have the ability to make the basic idea generation, the coherent outlining, nor the editing nearly as autonomous as the companies promising this future claim.

What AI models can do: Various things from expanding upon ideas given to them using various mathematical parameters and descriptions, keywords, and/or guide images of various kinds, to operating semi-autonomously as fictional characters, when properly directed and maintained (e.g., Neuro-sama).

What they can't do: Conceive an entire coherent movie or TV show and write a passable script - let alone scripts for an entire show - from start to finish without human involvement, generate images with a true complete lack of human involvement, act fully autonomously as characters, or...do MOST of the things such companies are trying to attribute to "AI (+unimportant nameless human we GUESS)", for that matter.

The distinction may sound small, but it is a critical one: the point behind this modern Mechanical Turk scam, after all, is that it allegedly eliminates human involvement, and thus the need to pay human employees, right...?

But it doesn't. It only enables companies to shift the labor to a hidden, even more underpaid sector, and even argue that they DESERVE to be paid so little once found out because "okay okay so it's not TOTALLY autonomous but the robot IS the one REALLY doing all the important work we swear!!"

It's all smoke and mirrors. A lie. A Mechanical Turk. Wrangling these algorithms into creating something truly professionally presentable - not just as a cash-grab gimmick that will be forgotten as soon as the novelty wears off - DOES require creativity and skill. It IS a time-consuming labor. It, like so many other uses of digital tools in creative spaces (e.g., VFX), needs to be recognized as such, for the protection of all parties involved, whether their role in the creative process is manual or tool-assisted.

So please, DO pay attention to the men behind the curtain.

#ai art#ai artwork#the clip linked to in particular? just another demonstration of how much work these things are

186 notes

·

View notes

Text

By Ben Coxworth

November 22, 2023

(New Atlas)

[The "robot" is named HEAP (Hydraulic Excavator for an Autonomous Purpose), and it's actually a 12-ton Menzi Muck M545 walking excavator that was modified by a team from the ETH Zurich research institute. Among the modifications were the installation of a GNSS global positioning system, a chassis-mounted IMU (inertial measurement unit), a control module, plus LiDAR sensors in its cabin and on its excavating arm.

For this latest project, HEAP began by scanning a construction site, creating a 3D map of it, then recording the locations of boulders (weighing several tonnes each) that had been dumped at the site. The robot then lifted each boulder off the ground and utilized machine vision technology to estimate its weight and center of gravity, and to record its three-dimensional shape.

An algorithm running on HEAP's control module subsequently determined the best location for each boulder, in order to build a stable 6-meter (20-ft) high, 65-meter (213-ft) long dry-stone wall. "Dry-stone" refers to a wall that is made only of stacked stones without any mortar between them.

HEAP proceeded to build such a wall, placing approximately 20 to 30 boulders per building session. According to the researchers, that's about how many would be delivered in one load, if outside rocks were being used. In fact, one of the main attributes of the experimental system is the fact that it allows locally sourced boulders or other building materials to be used, so energy doesn't have to be wasted bringing them in from other locations.

A paper on the study was recently published in the journal Science Robotics. You can see HEAP in boulder-stacking action, in the video below.]

youtube

33 notes

·

View notes

Text

youtube

Keparan (2023), Toyota and The National Museum of Emerging Science and Innovation (Miraikan). In November 2023, the National Museum of Emerging Science and Innovation (Miraikan) in Tokyo introduced a new permanent exhibit called "Hello! Robots." Jointly developed by Miraikan and Toyota's Frontier Research Center, Keparan is based on the concept of "evolving while interacting with you." Its name is derived from "Kesaran Pasaran", mythical creatures from Japanese folklore that have the appearance of a white fur ball like dandelion fluff.

"Despite being about 70-cm tall, Keparan has 34 joints. By combining these joints with small, high-output servo amplifiers and small batteries specifically developed for Mascot Robots, Keparan can perform bipedal walking and standing on one leg even with its small body. Furthermore, by combining the motion control knowledge obtained from our humanoid robot development, Keparan can achieve autonomous walking despite its challenging body shape with short limbs and a large head. … Since Keparan cannot communicate through long words, it needs to express its feelings through gestures and expressions. We utilized motor control and remote-control knowledge gained from our humanoid robot development to achieve smooth movements. In addition to the movements of the limbs, we also added eye, eyebrow, and mouth movements to express emotions. Furthermore, we hid the components inside the fluffy costume to avoid reminding people of mechanics. As a result, we believe that Keparan feels almost as if it were alive to people." – Interaction Research through the Original Partner Robot "Keparan" at the National Museum of Emerging Science and Innovation.

4 notes

·

View notes

Text

AI: A Misnomer

As you know, Game AI is a misnomer, a misleading name. Games usually don't need to be intelligent, they just need to be fun. There is NPC behaviour (be they friendly, neutral, or antagonistic), computer opponent strategy for multi-player games ranging from chess to Tekken or StarCraft, and unit pathfinding. Some games use novel and interesting algorithms for computer opponents (Frozen Synapse uses some sort of evolutionary algorithm) or for unit pathfinding (Planetary Annihilation uses flow fields for mass unit pathfinding), but most of the time it's variants or mixtures of simple hard-coded behaviours, minimax with alpha-beta pruning, state machines, HTN, GOAP, and A*.
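To give a sense of how un-mysterious these staples are, here is a minimal sketch of minimax with alpha-beta pruning. It assumes a toy game tree written as nested lists, where a number is a leaf score from the maximizing player's point of view; the function name `alphabeta` and the `visited` argument are made up for this illustration and don't come from any particular game or engine.

```python
import math

def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf, visited=None):
    """Return the minimax value of `node`, skipping branches that cannot matter.

    `node` is either a number (a leaf score) or a list of child nodes.
    `visited`, if given, collects the leaves that were actually evaluated.
    """
    if not isinstance(node, list):
        if visited is not None:
            visited.append(node)
        return node
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta, visited))
            alpha = max(alpha, best)
            if alpha >= beta:
                break  # the minimizer above would never allow this branch
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta, visited))
        beta = min(beta, best)
        if alpha >= beta:
            break  # the maximizer above already has something better
    return best

# Hypothetical two-ply tree: the maximizer picks a branch, the minimizer a leaf.
tree = [[3, 5], [2, 9]]
seen = []
value = alphabeta(tree, True, visited=seen)
print(value, seen)
```

In this toy tree the leaf `9` is never looked at: once the second branch turns up a `2`, the maximizer already knows the first branch (worth 3) is better. That's the whole trick, and it's a search procedure, not intelligence.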

Increasingly, AI outside of games has become a misleading term, too. It used to be that people called more things AI, then machine learning was called machine learning, robotics was called robotics, expert systems were called expert systems, then later ontologies and knowledge engineering were called the semantic web, and so on, with the remaining approaches and the original old-fashioned AI still being called AI.

AI used to be cool, then it was uncool, and the useful bits of AI were used for recommendation systems, spam filters, speech recognition, search engines, and translation. Calling it "AI" was hand-waving, a way to obscure what your system does and how it works.

With the advent of ChatGPT, we have arrived in the worst of both worlds. Calling things "AI" is cool again, but now some people use "AI" to refer specifically to large language models or text-to-image generators based on language models. Some people still use "AI" to mean autonomous robots. Some people use "AI" to mean simple artificial neural networks, Bayesian filtering, and recommendation systems. Infuriatingly, the word "algorithm" has increasingly entered the vernacular to refer to Bayesian filters and recommendation systems, for situations where a computer science textbook would still use "heuristic". Computer science textbooks still use "AI" to mean things like chess playing, maze solving, and fuzzy logic.

Let's look at a recent example! Scott Alexander wrote a blog post (https://www.astralcodexten.com/p/god-help-us-lets-try-to-understand) about current research (https://transformer-circuits.pub/2023/monosemantic-features/index.html) on distributed representations and sparsity, and the topology of the representations learned by a neural network. Scott Alexander is a psychiatrist with no formal training in machine learning or even programming. He uses the term "AI" to refer to neural networks throughout the blog post. He doesn't say "distributed representations", or "sparse representations". The original publication did use technical terms like "sparse representation". These should be familiar to people who followed the debates about local representations versus distributed representations back in the 80s (or people like me who read those papers in university). But in that blog post, it's not called a neural network, it's called an "AI". Now this could have two reasons: either Scott Alexander doesn't know any better (or, more charitably, he does but doesn't know how to use the more precise terminology correctly), or he intentionally wants to dumb down the research for people who intuitively understand what a latent feature space is, but have never heard about "machine learning" or "artificial neural networks".

Another example can come in the form of a thought experiment: You write an app that helps people tidy up their rooms, and find things in that room after putting them away, mostly because you needed that app for yourself. You show the app to a non-technical friend, because you want to know how intuitive it is to use. You ask him if he thinks the app is useful, and if he thinks people would pay money for this thing on the app store, but before he answers, he asks a question of his own: Does your app have any AI in it?

What does he mean?

Is "AI" just the new "blockchain"?

14 notes

·

View notes

Text

#Robotics 2023#Future of Robotics#Robotics and AI 2023#Robotics Industry 2023#Smart Robotics 2023#Autonomous Robotics 2023#Robotics in manufacturing 2023#IoT and Robotics 2023#Robotics and automation 2023#Industry 4.0 and Robotics 2023

0 notes

Text

Theoretical Foundations to Nobel Glory: John Hopfield’s AI Impact

The story of John Hopfield’s contributions to artificial intelligence is a remarkable journey from theoretical insights to practical applications, culminating in the prestigious Nobel Prize in Physics. His work laid the groundwork for the modern AI revolution, and today’s advanced capabilities are a testament to the power of his foundational ideas.

In the early 1980s, Hopfield’s theoretical research introduced the concept of neural networks with associative memory, a paradigm-shifting idea. His 1982 paper presented the Hopfield network, a novel neural network architecture, which could store and recall patterns, mimicking the brain’s memory and pattern recognition abilities. This energy-based model was a significant departure from existing theories, providing a new direction for AI research.

A year later, at the 1983 Meeting of the American Institute of Physics, Hopfield shared his vision. This talk played a pivotal role in disseminating his ideas, explaining how neural networks could revolutionize computing. He described the Hopfield network’s unique capabilities, igniting interest and inspiring future research.
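The store-and-recall behavior described above can be illustrated with a very small sketch. It assumes the textbook formulation of a Hopfield network (states of +1/-1, Hebbian outer-product weights with a zero diagonal, one-unit-at-a-time updates); the function names `train`, `energy`, and `recall` and the two example patterns are chosen here for illustration and are not taken from Hopfield's paper.

```python
def train(patterns):
    """Hebbian rule: w[i][j] sums p[i] * p[j] over all patterns; no self-connections."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w

def energy(w, state):
    """Network energy; each asynchronous update can only keep it equal or lower it."""
    n = len(state)
    return -0.5 * sum(w[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n))

def recall(w, state, max_sweeps=10):
    """Update one unit at a time until a full sweep changes nothing."""
    state = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            field = sum(w[i][j] * state[j] for j in range(len(state)))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state

# Two hypothetical 8-unit patterns (chosen to be orthogonal so they don't interfere).
pattern_a = [1, -1, 1, -1, 1, -1, 1, -1]
pattern_b = [1, 1, 1, 1, -1, -1, -1, -1]
weights = train([pattern_a, pattern_b])

noisy = list(pattern_a)
noisy[0] = -noisy[0]          # corrupt one unit
restored = recall(weights, noisy)
print(restored == pattern_a, energy(weights, noisy), energy(weights, restored))
```

The corrupted input settles back onto the stored pattern, and the energy drops as it does so, which is the sense in which the model is "energy-based": stored memories sit at the bottom of valleys, and recall is rolling downhill.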

Over the subsequent decades, Hopfield’s theoretical framework blossomed into a full-fledged AI revolution. Researchers built upon his concepts, leading to remarkable advancements. Deep learning architectures, such as Convolutional Neural Networks and Recurrent Neural Networks, emerged, enabling breakthroughs in image and speech recognition, natural language processing, and more.

The evolution of Hopfield’s ideas has resulted in today’s AI capabilities, which are nothing short of extraordinary. Computer vision systems can interpret complex visual data, natural language models generate human-like text, and AI-powered robots perform intricate tasks. Pattern recognition, a core concept from Hopfield’s work, is now applied in facial recognition, autonomous vehicles, and data analysis.

The Nobel Prize in Physics 2024 honored Hopfield’s pioneering contributions, recognizing the transformative impact of his ideas on society. This award celebrated the journey from theoretical neural networks to the practical applications that have revolutionized industries and daily life. It underscored the importance of foundational research in driving technological advancements.

Today, AI continues to evolve, with ongoing research pushing the boundaries of what’s possible. Explainable AI, quantum machine learning, and brain-computer interfaces are just a few areas of exploration. These advancements build upon the strong foundation laid by pioneers like Hopfield, leading to more sophisticated and beneficial AI technologies.

John J. Hopfield: Collective Properties of Neuronal Networks (Xerox Palo Alto Research Center, 1983)

youtube

Hopfield Networks (Artem Kirsanov, July 2024)

youtube

Boltzmann machine (Artem Kirsanov, August 2024)

youtube

Dmitry Krotov: Modern Hopfield Networks for Novel Transformer Architectures (Harvard CMSA, New Technologies in Mathematics Seminar, May 2023)

youtube

Dr. Thomas Dietterich: The Future of Machine Learning, Deep Learning and Computer Vision (Craig Smith, Eye on A.I., October 2024)

youtube

Friday, October 11, 2024

#neural networks#hopfield networks#nobel prize#ai evolution#talk#ai assisted writing#machine art#Youtube#presentation#interview

2 notes

·

View notes

Text

Cinder: (over PA) "What need would Atlas have for a soldier disguised as an innocent little girl?"

If I may use this as a jumping off point to sound off about something else that's on my mind...

It is the summer of 2023 and the current Big Thing in technology is the emergence of "AI" as a tech buzzword, which has a lot of people talking about "AI" in the way that it's typically conceptualized in Science Fiction Fantasy, which is to say: autonomously thinking robots like Penny, or Data from Star Trek. I think that this particular framing of the matter is causing a lot of otherwise intelligent people to view the discussion on "AI technology" in an incorrect light. It's given rise to this bizarre futurist discussion where we talk about the cultural ramifications of algorithmically "generated" art and writing through a lens of a potential future in which autonomous robots become a thing.

If these discussions are going to go anywhere productive, we need to stop thinking about tech-buzzword "AI" as if it's an actual artificial intelligence and keep firmly in mind that these technologies are not "generative", they are "reproductive", based on data analysis and algorithmic pattern input and output.

I wasn't really going anywhere with this.

Sorry, it is REAL adhd hours in the Team JLRY house.

17 notes

·

View notes

Text

This day in history

I'm on tour with my new, nationally bestselling novel The Bezzle! Catch me in TUCSON (Mar 9-10), then SAN FRANCISCO (Mar 13), Anaheim, and more!

#20yrsago EFF is suing the FCC over the Broadcast Flag! https://web.archive.org/web/20040314151119/https://www.eff.org/IP/Video/HDTV/20040309_eff_pr.php

#20yrsago ICANN’s tongue slithers further up Verisign’s foetid backside https://memex.craphound.com/2004/03/09/icanns-tongue-slithers-further-up-verisigns-foetid-backside/

#20yrsago Nader kicks Mastercard’s ass in fair-use fight https://web.archive.org/web/20040401171817/http://lawgeek.typepad.com/lawgeek/2004/03/nader_wins_pric.html

#15yrsago AIG has insured $1.6 trillion in derivatives https://web.archive.org/web/20090312010613/https://www.scribd.com/doc/13112282/Aig-Systemic-090309

#10yrsago Putin your butt https://www.reddit.com/r/pics/comments/1zrchl/check_out_my_3d_printed_putin_butt_plug/?sort=new

#10yrsago Public Prosecutor of Rome unilaterally orders ISPs to censor 46 sites https://torrentfreak.com/italian-police-carry-out-largest-ever-pirate-domain-crackdown-140305/

#5yrsago Palmer Luckey wins secretive Pentagon contract to develop AI for drones https://theintercept.com/2019/03/09/anduril-industries-project-maven-palmer-luckey/

#5yrsago Pentagon reassures public that its autonomous robotic tank adheres to “legal and ethical standards” for AI-driven killbots https://gizmodo.com/u-s-army-assures-public-that-robot-tank-system-adheres-1833061674

#5yrsago Elizabeth Warren reveals her plan to break up Big Tech https://medium.com/@teamwarren/heres-how-we-can-break-up-big-tech-9ad9e0da324c

#5yrsago The US requires visas for some EU citizens, so now all US citizens visiting the EU will be subjected to border formalities too https://www.cbsnews.com/boston/news/us-citizens-need-visa-europe-travel-2021/

#1yrago The AI hype bubble is the new crypto hype bubble https://pluralistic.net/2023/03/09/autocomplete-worshippers/#the-real-ai-was-the-corporations-that-we-fought-along-the-way

Name your price for 18 of my DRM-free ebooks and support the Electronic Frontier Foundation with the Humble Cory Doctorow Bundle.

7 notes

·

View notes

Text

Least Haunted Character Recap: Consent Fetishist "Rassy."

This Friday (12/22/23) Least Haunted will release its 4th annual fully dramatized Holiday Special: Consent Fetishist Rassy's 100% Consensual Christmas Extravaganza!

But some of you who are unfamiliar with The Least Haunted Podcast might be a bit confused as to what the hell is going on, or who the hell "Consent Fetishist Rassy" is. So we have prepared this helpful little character bio/recap to bring you up to speed.

This will include all appearances of Rassy in both Halloween and Holiday specials. It will gloss over some of the non-special appearances for completeness. But you really should listen to the show, because they get mentioned rather frequently. Are you ready? You have to say "Yes" first! Those are the rules...

NAME: Rassy

ALIASES: Consent Fetishist Rassy, Consent Fetishist Elmo, Tickle You Elmo, Dr. Funninstuffed PhD

AGE: (as of 2023) -7. They are from a nullified future timeline/parallel universe and would have been manufactured ca. 2030.

HEIGHT: 2.5 ft, 0.762 meters

WEIGHT: Surprisingly more than you would suspect

GENDER: Whatever you want them to be

ORIENTATION: Pansexual Polyamorous

PRONOUNS: They/Them

First, we need to talk about The Backwards Carousel of Time!

The Backwards Carousel of Time was introduced in Episode 04: The Clownening, and is a mechanical device later revealed to have been built by Cody using specs from the internet, and parts kluged together from a Tiger Electronics X-Men handheld game from the 90's, and a Tickle Me Elmo doll. It allows for Cody and Garth to travel through time to observe events discussed in the podcast a la "The Ghost of Christmas Present."

The Carousel SHOULD not allow for interaction between the observed and the observers... It is used primarily as a narrative device to keep things interesting.

All of this changed, however, in Episode 44: OOPS! All Christmas!, which served as the show's second Holiday Special.

In this holiday special Cody has added some modifications to the Backwards Carousel of Time that would allow for travel to the future and not just the past, and he wants to show them to Garth. Unfortunately, a spilled holiday-themed beverage causes a malfunction which transports the two to an alternate future in which The War on Christmas was decisively and brutally won by Christmas. Now every day is Christmas, and all culture and economy are yuletide based.

It is revealed that the carousel doesn't actually travel through time, and it never has! Instead it creates a small pocket dimension that operates much like the Holodeck from Star Trek. However this time due to the spilled drink malfunction the simulated people within the pocket dimension can see and interact with Cody and Garth.

In order to escape back to their rightful time/dimension Cody and Garth must team up with a cadre of militant Santa Clauses who are waging a guerrilla campaign to overthrow the fascist holiday state and return balance to all holidays with the true meaning of Christmas.

To repair the carousel they need a chip from a Tickle Me Elmo equivalent, which in this timeline is called "Tickle You Elmo!" (The doll with no sense of personal space!), and it just so happens to be the must have toy of the season. Also, Tickle You Elmo is a fully autonomous AI animatronic toy now.

Working with the Santas they successfully steal the toy in a heist, use the parts to repair the carousel and return home. Unfortunately in the process the carousel is destroyed, AND the Tickle You Elmo came back to reality with Cody and Garth!

Using quick thinking, the duo lock the pesty robot in a room with the broken Backwards Carousel of Time with instructions to "Fix it."

That is where Tickle You Elmo is left...

That is, until Episode 50: The Garth Moon Hoax!

In this regular episode of the podcast, it is revealed that not only has Tickle You Elmo completed repairs on the Backwards Carousel of Time, but due to some deep soul searching and self exploration Tickle You Elmo has learned the value of consensual interactions, and in fact now consent is their kink. Thus they are rebranded as Consent Fetishist Elmo!

Tracking Coyotes...

[PICTURE REDACTED/CLASSIFIED]

Around this time Consent Fetishist Elmo was sent to help Patreon Monster Squadron member, and wildlife biologist, @thebibarbarian in their research regarding Coyotes. Consent Fetishist Elmo officially became The Bibarbarian's research assistant, and along the way got alarmingly good at shooting a tranquilizer gun. A skill that would have been much more troubling if developed at a past point in their life. Also, a skill that translates into a general proficiency in long-guns...

Episode 65: Helldoodle!

In this, the third Halloween Special, Cody and Garth accidentally open a portal to Hell by playing a Three Men and A Baby Breakfast Cereal premium collector's 45 rpm vinyl backwards.

The resulting portal allows a number of Demons to come through into our plane of existence. One such Demon, Malacoda, gets renamed Garth-Two and aids Cody and Garth in closing the portal and returning the other Demons to Hell. Garth-Two sticks around to become another recurring character.

Consent Fetishist Elmo shows up at the end to tranquilize a coyote and announce that they have returned from their time as a research assistant.

At the end of the special it is implied that Garth-Two tries to eat Consent Fetishist Elmo. In the process one of Consent Fetishist Elmo's eyes is damaged. It is later revealed through dialogue in regular episodes that the duo of Garth-Two and Consent Fetishist Elmo have actually become close friends, and that the two have embarked on a road trip through The American Southwest together.

Episode 91: Bottleeen!

In the fourth Least Haunted Halloween Special it is revealed that Consent Fetishist Elmo and Garth-Two have returned from their Thelma and Louise style voyage of self discovery. Also, in order to escape the lawyers of "Big PBS" and The Children's Television Workshop, Consent Fetishist Elmo has adopted a new moniker!

Since Elmo is a nickname based on the name Erasmus, they have adopted a new variant of Erasmus and now go by Consent Fetishist Rassy.

In the episode Rassy shoots a fake Cody (a bottleganger) in the head sniper style in order to save Garth from being trapped in a small bottle-episode dimension. Although that part may not be completely canonical... You'll just have to listen to that episode to find out!

Which brings us up to speed with this year's holiday special, Consent Fetishist Rassy's 100% Consensual Christmas Extravaganza! Which premieres FRIDAY DECEMBER 22ND wherever podcasts can be heard, as well as on www.leasthaunted.com.

Who would have thought that a small independent artisanal podcast about paranormal skepticism would develop such a complex and intricate backstory and canonical universe? Eat yer hearts out Marvel!

p.s. You should also probably be aware that in the parlance of The Least Haunted Podcast, the term "Notes" can refer to weed. That might be helpful to know in the special as well.

#leasthaunted#podcast#youtube#funny#podcasts#comedy#radio play#holiday special#backstory#world building
