#3nf

Explore tagged Tumblr posts

Text

DBMS interview question. For more interview questions, see https://bit.ly/3X5nt4D

0 notes

Text

2 bedroom flat for sale on Marwick Street, Haghill, Glasgow

Asking price: 119,950

Sold price: £140,000

7 notes


Text

EXG Synapse — DIY Neuroscience Kit | HCI/BCI & Robotics for Beginners

Neuphony Synapse offers comprehensive biopotential signal compatibility, covering ECG, EEG, EOG, and EMG, which makes it a versatile solution for various physiological monitoring applications. It pairs with any MCU that has an ADC, so it works across platforms such as Arduino, ESP32, and STM32. The bandpass filter can optionally be bypassed, allowing the signal output to be tailored for different kinds of analysis.

Technical Specifications:

Input Voltage: 3.3V

Input Impedance: 20⁹ Ω

Compatible Hardware: Any ADC input

Biopotentials: ECG, EMG, EOG, or EEG (configurable bandpass) | By default configured for a bandwidth of 1.6 Hz to 47 Hz and a gain of 50

No. of channels: 1

Electrodes: 3

Dimensions: 30.0 x 33.0 mm

Open Source: Hardware

Very Compact and space-utilized EXG Synapse

What’s Inside the Kit?:

We offer three types of packages i.e. Explorer Edition, Innovator Bundle & Pioneer Pro Kit. Based on the package you purchase, you’ll get the following components for your DIY Neuroscience Kit.

EXG Synapse PCB

Medical EXG Sensors

Wet Wipes

Nuprep Gel

Snap Cable

Head Strap

Jumper Cable

Straight Pin Header

Angled Pin Header

Resistors (1MR, 1.5MR, 1.8MR, 2.1MR)

Capacitors (3nF, 0.1uF, 0.2uF, 0.5uF)

ESP32 (with Micro USB cable)

Dry Sensors

More info: https://neuphony.com/product/exg-synapse/

2 notes


Text

Challenging Database Assignment Questions and Solutions for Advanced Learners

Databases are the backbone of modern information systems, requiring in-depth understanding and problem-solving skills. In this blog, we explore complex database assignment questions and their comprehensive solutions, provided by our experts at database homework help service.

Understanding Data Normalization and Its Application

Question:

A university maintains a database to store student information, courses, and grades. The initial database design contains the following attributes in a single table: Student_ID, Student_Name, Course_ID, Course_Name, Instructor, and Grade. However, this structure leads to data redundancy and update anomalies. How can this database be normalized to the Third Normal Form (3NF), and why is this process essential?

Solution:

Normalization is the process of organizing data to reduce redundancy and improve integrity. The provided table exhibits anomalies such as duplication of student names and course details. To achieve 3NF, the database should be divided into multiple related tables:

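First Normal Form (1NF): Ensure atomicity by eliminating multi-valued attributes. Each column should hold a single, indivisible value in every row.

Second Normal Form (2NF): Remove partial dependencies by ensuring that non-key attributes depend on the entire primary key, not just part of it. Here the key of the single table is (Student_ID, Course_ID), yet Student_Name depends only on Student_ID and Course_Name only on Course_ID, so both are partial dependencies.

Third Normal Form (3NF): Eliminate transitive dependencies by ensuring non-key attributes depend only on the primary key and not on other non-key attributes.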

The database should be restructured into:

Students (Student_ID, Student_Name)

Courses (Course_ID, Course_Name, Instructor)

Enrollments (Student_ID, Course_ID, Grade)

This design reduces redundancy, prevents anomalies, and enhances data integrity, ensuring efficient database management. Our experts at Database Homework Help provide solutions like these to assist students in structuring databases effectively.
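The three-table design above can be tried out with a short script. This is only a sketch: it uses Python's built-in sqlite3 module, and the column types, sample names, and grades are assumptions made for illustration, not part of the original question.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves foreign-key checks off by default

# One table per entity; Enrollments links students to courses.
conn.executescript("""
CREATE TABLE Students (
    Student_ID   INTEGER PRIMARY KEY,
    Student_Name TEXT NOT NULL
);
CREATE TABLE Courses (
    Course_ID   TEXT PRIMARY KEY,
    Course_Name TEXT NOT NULL,
    Instructor  TEXT NOT NULL
);
CREATE TABLE Enrollments (
    Student_ID INTEGER NOT NULL REFERENCES Students(Student_ID),
    Course_ID  TEXT    NOT NULL REFERENCES Courses(Course_ID),
    Grade      TEXT,
    PRIMARY KEY (Student_ID, Course_ID)
);
""")

# Illustrative sample data (hypothetical names).
conn.executemany("INSERT INTO Students VALUES (?, ?)", [(1, "Asha"), (2, "Ben")])
conn.execute("INSERT INTO Courses VALUES ('DB101', 'Databases', 'Dr. Rao')")
conn.executemany("INSERT INTO Enrollments VALUES (?, ?, ?)",
                 [(1, "DB101", "A"), (2, "DB101", "B")])

# The instructor is stored once, so one UPDATE changes it for every enrollment.
conn.execute("UPDATE Courses SET Instructor = 'Dr. Lee' WHERE Course_ID = 'DB101'")

rows = conn.execute("""
    SELECT s.Student_Name, c.Course_Name, c.Instructor, e.Grade
    FROM Enrollments AS e
    JOIN Students AS s ON s.Student_ID = e.Student_ID
    JOIN Courses  AS c ON c.Course_ID  = e.Course_ID
    ORDER BY s.Student_ID
""").fetchall()
print(rows)
```

In the original single-table design, the same instructor change would have to be repeated on every student's row for that course, which is exactly the update anomaly the question describes.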

The Importance of ACID Properties in Transaction Management

Question:

In a banking system, multiple transactions occur simultaneously, such as fund transfers, balance updates, and loan approvals. Explain the significance of ACID properties in ensuring reliable database transactions.

Solution:

ACID (Atomicity, Consistency, Isolation, Durability) properties are crucial in maintaining data integrity and reliability in transactional systems.

Atomicity: Ensures that a transaction is executed completely or not at all. If a fund transfer fails midway, the transaction is rolled back to prevent data inconsistencies.

Consistency: Guarantees that a transaction moves the database from one valid state to another. For example, a balance deduction must match the corresponding credit in another account.

Isolation: Ensures that concurrent transactions do not interfere with each other. This prevents race conditions and ensures data accuracy.

Durability: Guarantees that once a transaction is committed, it remains stored permanently, even in case of system failures.

By implementing ACID principles, databases maintain stability, reliability, and security in multi-user environments. Our Database Homework Help services offer detailed explanations and guidance for such critical database concepts.
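Atomicity in particular is easy to see in a few lines. The sketch below assumes a hypothetical `accounts` table with a CHECK constraint that forbids negative balances, and uses sqlite3's connection context manager, which commits when the block succeeds and rolls back when an exception escapes it. It shows only atomicity and a simple consistency rule, not isolation or durability.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE accounts (
        id      INTEGER PRIMARY KEY,
        balance INTEGER NOT NULL CHECK (balance >= 0)  -- consistency rule
    )
""")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
conn.commit()


def transfer(conn, src, dst, amount):
    """Move `amount` from src to dst; both updates apply together or not at all."""
    try:
        with conn:  # commit on success, roll back if an exception escapes
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?",
                         (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?",
                         (amount, src))
        return True
    except sqlite3.IntegrityError:
        return False  # the credit made above has been rolled back as well


def balances(conn):
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))


ok = transfer(conn, 1, 2, 30)        # succeeds
after_ok = balances(conn)
failed = transfer(conn, 1, 2, 500)   # would overdraw account 1
after_failed = balances(conn)
print(ok, after_ok, failed, after_failed)
```

The credit is deliberately applied before the debit, so the failing transfer shows that a half-finished transaction leaves no trace.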

Conclusion

Complex database assignments require theoretical knowledge and analytical problem-solving. Whether it is normalizing databases or ensuring transaction reliability, our expert solutions at www.databasehomeworkhelp.com provide students with the necessary expertise to excel in their academic pursuits. If you need assistance with challenging database topics, our professionals are here to help!

#database homework help#database homework help online#database homework helper#help with database homework#education#university

0 notes

Text

csc343 Assignment #3: database (re)design

goals This assignment aims to help you learn to: design a good schema understand violations of functional dependencies create a minimal basis for a set of functional dependencies project a set of functional dependencies onto a set of attributes find all the keys for a set of functional dependencies re-factor relation(s) into BCNF synthesize FDs into 3NF Your assignment must be typed to…

0 notes

Text

Database Design Best Practices for Full Stack Developers

Database design is a crucial aspect of building scalable, efficient, and maintainable web applications.

Whether you’re working with a relational database like MySQL or PostgreSQL, or a NoSQL database like MongoDB, the way you design your database can greatly impact the performance and functionality of your application.

In this blog, we’ll explore key database design best practices that full-stack developers should consider to create robust and optimized data models.

1. Understand Your Application’s Requirements

Before diving into database design, it’s essential to understand the specific needs of your application.

Ask yourself:

What type of data will your application handle?

How will users interact with your data?

Will your app scale, and what level of performance is required?

These questions help determine whether you should use a relational or NoSQL database and guide decisions on how data should be structured and optimized.

Key Considerations:

Transaction management: If you need ACID compliance (Atomicity, Consistency, Isolation, Durability), a relational database might be the better choice.

Scalability: If your application is expected to grow rapidly, a NoSQL database like MongoDB might provide more flexibility and scalability.

Complex queries: For applications that require complex querying, relationships, and joins, relational databases excel.

2. Normalize Your Data (for Relational Databases)

Normalization is the process of organizing data in such a way that redundancies are minimized and relationships between data elements are clearly defined. This helps reduce data anomalies and improves consistency.

Normal Forms:

1NF (First Normal Form): Ensures that the database tables have unique rows and that columns contain atomic values (no multiple values in a single field).

2NF (Second Normal Form):

Builds on 1NF by eliminating partial dependencies. Every non-key attribute must depend on the entire primary key.

3NF (Third Normal Form):

Ensures that there are no transitive dependencies between non-key attributes.

Example: In a product order system, instead of storing customer information in every order record, you can create separate tables for Customers, Orders, and Products to avoid duplication and ensure consistency.

3. Use Appropriate Data Types

Choosing the correct data type for each field is crucial for both performance and storage optimization.

Best Practices:

Use the smallest data type that safely holds your data (e.g., use INT instead of BIGINT when the values will never exceed the INT range).

Choose the right string data types (e.g., VARCHAR instead of TEXT if the string length is predictable).

Use DATE or DATETIME for storing time-based data, rather than storing time as a string.

By being mindful of data types, you can reduce storage usage and optimize query performance.

4. Use Indexing Effectively

Indexes are crucial for speeding up read operations, especially when dealing with large datasets.

However, while indexes improve query performance, they can slow down write operations (insert, update, delete), so they must be used carefully.

Best Practices:

Index fields that are frequently used in WHERE, JOIN, ORDER BY, or GROUP BY clauses.

Avoid over-indexing: too many indexes can degrade write performance.

Consider composite indexes (indexes on multiple columns) when queries frequently involve more than one column.

For NoSQL databases, indexing can vary. In MongoDB, for example, create indexes based on query patterns.

Example: In an e-commerce application, indexing the product_id and category_id fields can greatly speed up product searches.
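To see what an index changes, you can ask the database for its query plan before and after creating one. The sketch below uses SQLite through Python's sqlite3 module. The `products` table and the index name `idx_products_category` are assumptions for illustration, and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE products (
        product_id  INTEGER PRIMARY KEY,  -- already indexed as the primary key
        category_id INTEGER NOT NULL,
        name        TEXT NOT NULL
    )
""")
conn.executemany(
    "INSERT INTO products (category_id, name) VALUES (?, ?)",
    [(i % 10, f"item-{i}") for i in range(1000)],
)


def plan(sql):
    """Return SQLite's human-readable query plan for `sql` as one string."""
    # The last column of each EXPLAIN QUERY PLAN row is the detail text.
    return " ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


query = "SELECT name FROM products WHERE category_id = 3"

before = plan(query)  # expected to be a full scan of the table
conn.execute("CREATE INDEX idx_products_category ON products (category_id)")
after = plan(query)   # expected to be a search using the new index

print("before:", before)
print("after: ", after)
```

Other engines expose the same idea through their own EXPLAIN commands, so it is worth checking the plan rather than assuming an index is being used.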

5. Design for Scalability and Performance

As your application grows, the ability to scale the database efficiently becomes critical. Designing your database with scalability in mind helps ensure that it can handle large volumes of data and high numbers of concurrent users.

Best Practices:

Sharding:

For NoSQL databases like MongoDB, consider sharding, which involves distributing data across multiple servers based on a key.

Denormalization: While normalization reduces redundancy, denormalization (storing redundant data) may be necessary in some cases to improve query performance by reducing the need for joins. This is common in NoSQL databases.

Caching: Use caching strategies (e.g., Redis, Memcached) to store frequently accessed data in memory, reducing the load on the database.
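The caching idea can be sketched without any external service. The class below is a small in-process, read-through cache with a time-to-live. It is a stand-in for Redis or Memcached, not an example of their APIs, and `TTLCache`, `get_or_load`, and `load_product` are hypothetical names invented for this illustration.

```python
import time


class TTLCache:
    """Tiny read-through cache: entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock          # injectable so expiry can be tested
        self._store = {}            # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]           # fresh entry: skip the database
        value = loader(key)         # miss or expired: go to the source
        self._store[key] = (now + self.ttl, value)
        return value


db_calls = []  # records how often the "database" is actually hit


def load_product(product_id):
    """Pretend database lookup (hypothetical)."""
    db_calls.append(product_id)
    return {"id": product_id, "name": f"product-{product_id}"}


cache = TTLCache(ttl_seconds=60)
first = cache.get_or_load(42, load_product)
second = cache.get_or_load(42, load_product)  # served from memory
print(first == second, db_calls)
```

A real deployment also has to decide how to invalidate entries when the underlying row changes; a short TTL is the simplest answer but not the only one.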

6. Plan for Data Integrity

Data integrity ensures that the data stored in the database is accurate and consistent. For relational databases, enforcing integrity through constraints like primary keys, foreign keys, and unique constraints is vital.

Best Practices:

Use primary keys to uniquely identify records in a table.

Use foreign keys to maintain referential integrity between related tables.

Use unique constraints to enforce uniqueness (e.g., for email addresses or usernames).

Validate data at both the application and database level to prevent invalid or corrupted data from entering the system.
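Here is a minimal sketch of those constraints doing their job, using SQLite through Python's sqlite3 module. The `users` and `orders` tables and the `try_insert` helper are hypothetical. Note that SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`; other databases enforce them by default.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # required for FK enforcement in SQLite
conn.executescript("""
CREATE TABLE users (
    id    INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE
);
CREATE TABLE orders (
    id      INTEGER PRIMARY KEY,
    user_id INTEGER NOT NULL REFERENCES users(id)
);
""")


def try_insert(sql, params):
    """Run one INSERT and report whether the database accepted it."""
    try:
        with conn:
            conn.execute(sql, params)
        return "ok"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


results = [
    try_insert("INSERT INTO users (id, email) VALUES (?, ?)", (1, "a@example.com")),
    # Same email again: violates the UNIQUE constraint.
    try_insert("INSERT INTO users (id, email) VALUES (?, ?)", (2, "a@example.com")),
    try_insert("INSERT INTO orders (user_id) VALUES (?)", (1,)),
    # No user 99 exists: violates the foreign key.
    try_insert("INSERT INTO orders (user_id) VALUES (?)", (99,)),
]
for line in results:
    print(line)
```

Because the database rejects the bad rows itself, the rule holds even if a bug or a second application skips the application-level validation.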

7. Avoid Storing Sensitive Data Without Encryption

For full-stack developers, it’s critical to follow security best practices when designing a database.

Sensitive data (e.g., credit card numbers, personal information) should always be encrypted both at rest and in transit, and passwords should be stored as hashes rather than in a form that can be decrypted.

Best Practices:

Hash passwords using a strong hashing algorithm like bcrypt or Argon2.

Encrypt sensitive data using modern encryption algorithms.

Use SSL/TLS for encrypted communication between the client and the server.
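The hash-then-verify pattern looks roughly like this. One caveat up front: bcrypt and Argon2 need third-party packages, so this sketch swaps in PBKDF2-HMAC-SHA256 from Python's standard library purely to stay self-contained. The function names and the iteration count are assumptions for illustration, not a recommendation.

```python
import hashlib
import hmac
import os

ITERATIONS = 200_000  # illustrative work factor; tune for your own hardware


def hash_password(password, salt=None):
    """Return (salt, digest); a fresh random salt is generated when none is given."""
    salt = os.urandom(16) if salt is None else salt
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password, salt, expected_digest):
    """Re-hash the candidate with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected_digest)


# Store only the salt and digest, never the password itself.
salt, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored))
print(verify_password("wrong guess", salt, stored))
```

With bcrypt or Argon2 the shape is the same: hash on sign-up, store the result, and verify on login, with the library handling the salt for you.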

8. Plan for Data Backup and Recovery

Data loss can have a devastating effect on your application, so it’s essential to plan for regular backups and disaster recovery.

Best Practices:

Implement automated backups for the database on a daily, weekly, or monthly basis, depending on the application.

Test restore procedures regularly to ensure you can quickly recover from data loss.

Store backups in secure, geographically distributed locations to protect against physical disasters.

9. Optimize for Queries and Reporting

As your application evolves, it’s likely that reporting and querying become critical aspects of your database usage. Ensure your schema and database structure are optimized for common queries and reporting needs.

Conclusion

Good database design is essential for building fast, scalable, and reliable web applications.

By following these database design best practices, full-stack developers can ensure that their applications perform well under heavy loads, remain easy to maintain, and support future growth.

Whether you’re working with relational databases or NoSQL solutions, understanding the core principles of data modeling, performance optimization, and security will help you build solid foundations for your full-stack applications.

0 notes

Text

COMP353 ASSIGNMENT 4 solved

Exercise #1 Provide a relational schema in 3NF following bubble diagrams. The name given to tables should be more significant. AUTHOR (auteur_id, name, telephone, local) Author_id name telephone local REVISION (report_id, title, pages, date_revision, code_status, status) report_id title pages code_status status date_revision REPORT_SUBMITTED (report_id, title, pages, author_id, rank, name,…

0 notes

Text

How Advanced Data Manipulation Techniques Are Transforming UK Businesses

First, let’s see what data manipulation is

Data manipulation is the act of transforming raw data into something more structured, understandable, and valuable. Data cleansing, data transformation, and data integration are some of the methods used for this, and the result is refined data that is ready for use. These procedures are vital for maintaining the accurate and dependable business data that companies need to make educated decisions.

Initial Steps to Get Started with Advanced Data Manipulation

Step 1: Assess Your Data Needs

Identify Objectives:

Define Business Goals: Clearly outline the business goals you aim to achieve through systematic data manipulation, such as improving customer segmentation, optimizing supply chain operations, or enhancing predictive analytics.

Data-Driven Questions: It is important to formulate specific questions that your business data needs to answer. These questions will guide the data manipulation process and ensure that the outcomes are aligned with your desired objectives.

Data Inventory:

Source Identification: Catalogue every known source of your business data, such as CRM systems, ERP systems, financial databases, and marketing platforms.

Data Profiling: Conduct rigorous data profiling to better understand the characteristics of your data, paying particular attention to data quality, completeness, and structure. Tools like Apache Griffin or Talend Data Quality can assist in this process.

Step 2: Choose the Adequate Tools and Platforms

Data Manipulation Tools:

ETL Tools: Investing in reliable ETL (Extract, Transform, Load) tools is a wise decision, as they support the entire data manipulation process. Popular options include Apache NiFi, Talend, and Microsoft SSIS. These tools extract data from various sources, transform it into a usable format, and load it into a central data warehouse.

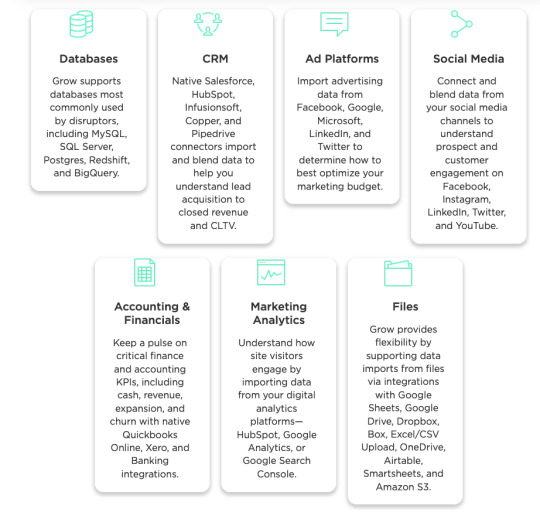

Data Blending Tools: Tools like Alteryx and Grow are top-rated for data blending, allowing you to combine data from as many data sources as you please without causing much hassle.

Data Connectors:

Integration Capabilities: Ensure your data manipulation tools support a wide range of data connectors. These connectors enable seamless integration of disparate data sources, providing a holistic view of your business data.

Real-Time Data Integration: Look for data connectors that support real-time data integration to keep your data current and relevant for decision-making.

Step 3: Implement Data Cleaning Processes

Data Quality Management:

Automated Cleaning Tools: Use automated data cleaning tools like OpenRefine or Trifacta to detect and correct errors, remove duplicates, and fill missing values. Automated tools can significantly reduce manual efforts and ensure higher accuracy.

Data Standardisation: Standardise your data formats, units of measurement, and nomenclature across all data sources. This step is crucial for effective data manipulation and integration. Techniques like schema matching and data normalisation are vital here.

Step 4: Data Transformation Techniques

1. Normalisation

Normalisation is organising data to reduce redundancy and improve data integrity. It involves breaking down large tables into smaller, more manageable pieces without losing relationships between data points.

Techniques:

First Normal Form (1NF): Ensures that the data is stored in tables with rows and columns, and each column contains atomic (indivisible) values.

Second Normal Form (2NF): Removes partial dependencies, ensuring that all non-key attributes are fully functionally dependent on the primary key.

Third Normal Form (3NF): Eliminates transitive dependencies, ensuring that non-key attributes are only dependent on the primary key.

Boyce-Codd Normal Form (BCNF): A stricter version of 3NF, ensuring every determinant is a candidate key.

Normalisation involves the decomposition of tables, which may require advanced SQL queries and an understanding of relational database theory. Foreign key creation and referential integrity maintenance through database constraints are common methods for assuring data consistency and integrity.

Applications: Normalisation is crucial for databases that handle large volumes of business data, such as CRM systems, to ensure efficient data retrieval and storage.
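The dependencies that 2NF and 3NF talk about can be checked against sample data with a small helper. This is a sketch only: `fd_holds` and the `orders` rows are hypothetical, and data can only refute a functional dependency, never prove it, because dependencies are a statement about the business rules rather than about one snapshot.

```python
def fd_holds(rows, lhs, rhs):
    """Return True if every combination of `lhs` values maps to one `rhs` value."""
    seen = {}
    for row in rows:
        key = tuple(row[attr] for attr in lhs)
        value = tuple(row[attr] for attr in rhs)
        # setdefault stores the first value seen for this key and returns it.
        if seen.setdefault(key, value) != value:
            return False
    return True


# Hypothetical denormalised order rows.
orders = [
    {"order_id": 1, "customer_id": 10, "customer_city": "Leeds"},
    {"order_id": 2, "customer_id": 10, "customer_city": "Leeds"},
    {"order_id": 3, "customer_id": 11, "customer_city": "Bristol"},
]

key_to_customer = fd_holds(orders, ["order_id"], ["customer_id"])
customer_to_city = fd_holds(orders, ["customer_id"], ["customer_city"])
city_to_order = fd_holds(orders, ["customer_city"], ["order_id"])
print(key_to_customer, customer_to_city, city_to_order)
```

If both of the first two dependencies are real business rules, then customer_city depends on order_id only through customer_id. That is a transitive dependency, and 3NF would move the city into a separate customers table.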

2. Aggregation

For a bird's-eye view of a dataset, aggregation is the way to go. The goal of this technique is to make analysis and reporting easier by reducing the size of massive datasets.

Techniques:

Sum: Calculates the total value of a specific data field.

Average: Computes the mean value of a data field.

Count: Determines the number of entries in a data field.

Max/Min: Identifies the maximum or minimum value within a dataset.

Group By: Segments data into groups based on one or more columns and then applies aggregate functions.

Aggregation often requires complex SQL queries with clauses like GROUP BY, HAVING, and nested subqueries. Additionally, implementing aggregation in large-scale data systems might involve using distributed computing frameworks like Apache Hadoop or Apache Spark to process massive datasets efficiently.

Applications: Aggregation is widely used in generating business intelligence reports, financial summaries, and performance metrics. To better assess overall performance across multiple regions, retail organizations can aggregate sales data, for instance.
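The aggregate functions listed above combine naturally in a single GROUP BY query. The sketch below runs one against an in-memory SQLite database; the `sales` table and its figures are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [
    ("North", 120.0), ("North", 80.0),
    ("South", 200.0), ("South", 50.0), ("South", 50.0),
    ("West", 40.0),
])

# One row per region; HAVING filters on the aggregated total, not on single rows.
summary = conn.execute("""
    SELECT region,
           COUNT(*)    AS orders,
           SUM(amount) AS total,
           AVG(amount) AS average,
           MAX(amount) AS largest,
           MIN(amount) AS smallest
    FROM sales
    GROUP BY region
    HAVING SUM(amount) >= 100
    ORDER BY total DESC
""").fetchall()

for row in summary:
    print(row)
```

WHERE would filter individual sales before grouping, whereas HAVING drops whole groups afterwards, which is why the West region disappears here.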

3. Data Filtering

Data filtering entails picking out certain data points according to predetermined standards. This technique is used to isolate relevant data for analysis, removing any extraneous information.

Techniques:

Conditional Filtering: Applies specific conditions to filter data (e.g., filtering sales data for a particular time period).

Range Filtering: Selects data within a specific range (e.g., age range, price range).

Top-N Filtering: Identifies the top N records based on certain criteria (e.g., top 10 highest sales).

Regex Filtering: Uses regular expressions to filter data based on pattern matching.

Advanced data filtering may involve writing complex SQL conditions with WHERE clauses, utilising window functions for Top-N filtering, or applying regular expressions for pattern-based filtering. Additionally, filtering large datasets in real time might require stream-processing or in-memory tools like Apache Flink or Redis.

Applications: Data filtering is essential in scenarios where precise analysis is required, such as in targeted marketing campaigns or identifying high-value customers.
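The four filtering techniques above can each be written in a line or two of plain Python. This is a sketch on a handful of made-up records; the field names and SKU pattern are assumptions, and on real volumes the same logic would usually live in SQL or a dataframe library.

```python
import heapq
import re
from datetime import date

# Hypothetical sales records.
sales = [
    {"sku": "A-100", "amount": 250, "sold_on": date(2024, 1, 15)},
    {"sku": "B-200", "amount": 40,  "sold_on": date(2024, 2, 3)},
    {"sku": "A-101", "amount": 900, "sold_on": date(2024, 2, 20)},
    {"sku": "C-300", "amount": 130, "sold_on": date(2024, 3, 9)},
]

# Conditional filtering: sales made in February 2024.
february = [s for s in sales
            if s["sold_on"].year == 2024 and s["sold_on"].month == 2]

# Range filtering: amounts between 100 and 500 inclusive.
mid_range = [s for s in sales if 100 <= s["amount"] <= 500]

# Top-N filtering: the two largest sales, without sorting the whole list.
top_two = heapq.nlargest(2, sales, key=lambda s: s["amount"])

# Regex filtering: SKUs made of "A-" followed by digits only.
a_series = [s for s in sales if re.fullmatch(r"A-\d+", s["sku"])]

for name, subset in [("february", february), ("mid_range", mid_range),
                     ("top_two", top_two), ("a_series", a_series)]:
    print(name, [s["sku"] for s in subset])
```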

4. Data Merging

The process of data merging entails creating a new dataset from the consolidation of data from many sources. This technique is crucial for creating a unified view of business data.

Techniques:

Inner Join: Combines records from two tables based on a common field, including only the matched records.

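Outer Join: Keeps unmatched records as well as matched ones, filling in nulls where there is no match. A full outer join includes all records from both tables, while left and right outer joins keep all records from one side only.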

Union: Merges the results of two queries into a single dataset, removing duplicate records.

Cross Join: Creates a combined record set from both tables by performing a Cartesian product on them.

Merging data involves understanding join operations and their performance implications. It requires proficient use of SQL join clauses (INNER JOIN, LEFT JOIN, RIGHT JOIN, FULL OUTER JOIN) and handling data discrepancies. For large datasets, this may also involve using distributed databases or cloud data warehouses like Amazon Redshift or Google BigQuery to efficiently merge and process data.

Applications: Data merging is widely used in creating comprehensive business reports that integrate data from various departments, such as sales, finance, and customer service.
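The difference between the join types is easiest to see side by side. The sketch below uses SQLite through Python's sqlite3 module with two tiny made-up tables. It shows a LEFT JOIN as the outer-join example because FULL OUTER JOIN is only available in recent SQLite releases.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 40.0), (12, 2, 15.0);
""")

# Inner join: only customers that have at least one order.
inner = conn.execute("""
    SELECT c.name, o.total
    FROM customers AS c
    INNER JOIN orders AS o ON o.customer_id = c.id
    ORDER BY o.id
""").fetchall()

# Left outer join: every customer, with NULL (None) where no order matches.
left = conn.execute("""
    SELECT c.name, o.total
    FROM customers AS c
    LEFT JOIN orders AS o ON o.customer_id = c.id
    ORDER BY c.id, o.id
""").fetchall()

# Union: stacks two result sets and removes duplicates (UNION ALL would keep them).
union_ids = [row[0] for row in conn.execute("""
    SELECT customer_id FROM orders
    UNION
    SELECT id FROM customers
    ORDER BY 1
""")]

# Cross join: Cartesian product, 3 customers x 3 orders.
cross_count = conn.execute(
    "SELECT COUNT(*) FROM customers CROSS JOIN orders"
).fetchone()[0]

print(inner, left, union_ids, cross_count, sep="\n")
```

Linus appears only in the left join, paired with None, which is the "filling in nulls" behaviour described above.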

5. Data Transformation Scripts

For more involved data transformations, you can write custom data transformation scripts. Python, R, and SQL are some of the languages used to write these scripts.

Techniques:

Data Parsing: Retrieves targeted data from unstructured data sources.

Data Conversion: Converts data from one format to another (e.g., XML to JSON).

Data Calculations: Performs complex calculations and derivations on data fields.

Data Cleaning: Automates the cleaning process by scripting everyday cleaning tasks.

Writing data transformation scripts requires programming expertise and an understanding of data manipulation libraries and frameworks, for instance pandas in Python for data wrangling, dplyr in R for data manipulation, or SQLAlchemy for database interactions. Optimising these scripts for performance, especially with large datasets, often involves parallel processing and efficient memory management techniques.

Applications: Custom data transformation scripts are essential for businesses with unique data manipulation requirements, such as advanced analytics or machine learning model preparation.
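As a small illustration of such a script, the sketch below parses an XML snippet, cleans and converts the fields, and emits JSON, using only Python's standard library. The XML layout and the `order_to_dict` helper are assumptions made up for this example.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical input: note the stray whitespace and the text-typed numbers.
xml_text = """
<orders>
  <order id="1"><customer> Ada </customer><total>25.50</total></order>
  <order id="2"><customer>Grace</customer><total>15</total></order>
</orders>
"""


def order_to_dict(elem):
    """Parse one <order> element into a cleaned, typed record."""
    return {
        "id": int(elem.get("id")),                        # attribute -> int
        "customer": elem.findtext("customer").strip(),    # trim whitespace
        "total": float(elem.findtext("total")),           # text -> float
    }


root = ET.fromstring(xml_text.strip())
records = [order_to_dict(order) for order in root.iter("order")]
as_json = json.dumps(records, indent=2)
print(as_json)
```

The same three steps (parse, clean and convert, serialise) carry over to pandas or dplyr once the data no longer fits comfortably in a simple loop.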

Step 5: Data Integration

Unified Data View:

Data Warehousing: The best way to see all of your company's data in one place is to set up a data warehouse. Solutions like Amazon Redshift, Google BigQuery, or Snowflake can handle large-scale data integration and storage. You can also experience integrated data warehousing in Grow’s advanced BI platform.

Master Data Management (MDM): Implement MDM practices to maintain a single source of truth. This involves reconciling data discrepancies and ensuring data consistency across all sources.

ETL Processes:

Automated Workflows: Develop automated ETL workflows to streamline the process of extracting, transforming, and loading data. Tools like Apache Airflow can help orchestrate these workflows, ensuring efficiency and reliability.

Data Transformation Scripts: Write custom data transformation scripts using languages like Python or R for complex manipulation tasks. These scripts can handle specific business logic and data transformation requirements.

How These Technicalities Are Transforming UK Businesses

Enhanced Decision-Making

Advanced data manipulation techniques are revolutionising decision-making processes in UK businesses. By leveraging data connectors to integrate various data sources, companies can create a comprehensive view of their operations. With this comprehensive method, decision-makers may examine patterns and trends more precisely, resulting in better-informed and strategically-minded choices.

Operational Efficiency

Incorporating ETL tools and automated workflows into data manipulation processes significantly improves operational efficiency. UK businesses can streamline their data handling, reducing the time and effort required to process and analyse data. Reduced operational expenses and improved responsiveness to market shifts and consumer demands are two benefits of this efficiency improvement.

Competitive Advantage

UK businesses that adopt advanced data manipulation techniques gain a substantial competitive edge. By using data transformation and aggregation methods, companies can uncover hidden insights and opportunities that basic data analysis would miss. This deeper understanding allows businesses to innovate and adapt quickly, staying ahead of competitors.

Customer Personalisation

When it comes to improving consumer experiences, data manipulation is key. Companies may build in-depth profiles of their customers and use such profiles to guide targeted marketing campaigns by integrating and combining data. Higher revenue and sustained growth are the results of more satisfied and loyal customers, which is made possible by such individualised service.

Risk Management

For sectors like finance and healthcare, advanced data manipulation is essential for effective risk management. By integrating and normalising data from various sources, businesses can develop robust models for predicting and mitigating risks. This proactive approach helps in safeguarding assets and ensuring compliance with regulatory standards.

Greater Data Accuracy

Normalisation and data filtering techniques ensure the accuracy and consistency of business data, helping you and your teams make decisions that stand up to scrutiny. This accuracy is crucial for maintaining data integrity and making reliable business decisions.

Comprehensive Data Analysis

Data merging and aggregation techniques provide a holistic view of business operations, facilitating comprehensive data analysis. This integrated approach enables businesses to identify opportunities and address challenges more effectively.

Conclusion

Advanced data manipulation techniques are revolutionising the way UK businesses operate, offering a far-reaching understanding of business growth so that decisions no longer rest on human intuition alone. These techniques have become essential for companies that want to support decision-making, streamline major and minor operations, and gain a significant edge in their respective industries. From improved customer personalisation to robust risk management, the benefits of advanced data manipulation are vast and impactful.

Any business, whether in the UK or elsewhere, that wants to provide an all-inclusive BI platform to its teams for easier data democratisation should opt for Grow, which is equipped with powerful data manipulation tools and over 100 pre-built data connectors. With Grow, you can seamlessly integrate, modify, and analyse your business data and surface the insights your business needs to succeed.

Ready to transform your business with advanced data manipulation? Start your journey today with a 14-day complimentary demo of Grow. Experience firsthand how Grow can help you unlock the true potential of your business data.

Explore Grow's capabilities and see why businesses trust us for their data needs. Visit Grow.com Reviews & Product Details on G2 to read user reviews and learn more about how Grow can make a difference for your business.

Why miss the opportunity to take your data strategy to the next level? Sign up for your 14-day complimentary demo and see how Grow can transform your business today.

Original Source: https://bit.ly/46pNCjQ

Understanding normalization in DBMS

Introduction

In the world of Database Management Systems (DBMS), normalization is a central concept. It is more than technical jargon; it is a painstaking process that is essential to building a robust and efficient database. In this post, we dig into the details of normalization in DBMS and shed light on why it matters for database efficiency.

What is normalization?

Normalization in DBMS refers to the systematic structuring of data within a relational database in order to remove redundancy and ensure data integrity. The main goal is to minimize insertion, update, and deletion anomalies while maintaining a consistent and efficient database structure.

The Normalization Process

1. First Normal Form (1NF)

The journey begins with First Normal Form (1NF), which requires every attribute in a table to hold atomic values, with no repeating groups. This first phase lays the groundwork for the later stages of normalization.

2. Second Normal Form (2NF)

Moving on, we come to Second Normal Form (2NF), where the emphasis shifts to ensuring that every non-prime attribute is fully functionally dependent on the primary key, rather than on only part of it. This step improves data organization and reduces redundancy.

3. Third Normal Form (3NF)

The journey concludes with Third Normal Form (3NF), which focuses on removing transitive dependencies. At this level, no non-prime attribute should be transitively dependent on the primary key, resulting in a well-structured and normalized database.
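The three steps above can be sketched on a small example. This is a minimal illustration in plain Python, not a prescribed method; the student and course records, and all names in the code, are hypothetical.

```python
# Hypothetical flat records: "courses" holds a repeating group, so this is not in 1NF.
raw = [
    {"student_id": 1, "student_name": "Ana", "courses": "DB101,ML200"},
    {"student_id": 2, "student_name": "Ben", "courses": "DB101"},
]

# Hypothetical course facts: title and instructor depend on course_id alone,
# and office depends on the instructor (a transitive dependency).
course_info = {
    "DB101": ("Databases", "Rao", "Room 12"),
    "ML200": ("Machine Learning", "Kim", "Room 7"),
}

# 1NF: one atomic course_id per row, no repeating groups.
flat = [
    (r["student_id"], r["student_name"], c, *course_info[c])
    for r in raw
    for c in r["courses"].split(",")
]

# 2NF: the key is (student_id, course_id); attributes that depend on only
# one part of that key move into their own tables.
students = {sid: name for sid, name, *_ in flat}
courses = {cid: (title, instr, office) for _, _, cid, title, instr, office in flat}
enrollments = sorted({(sid, cid) for sid, _, cid, *_ in flat})

# 3NF: office depends on instructor, not directly on course_id, so it moves out too.
instructors = {instr: office for _, instr, office in courses.values()}
courses_3nf = {cid: (title, instr) for cid, (title, instr, _) in courses.items()}

print(students)
print(enrollments)
print(courses_3nf)
print(instructors)
```

After the last step, each fact (a student's name, a course's title, an instructor's office) is stored exactly once, which is the property the later sections rely on.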

Importance of Normalization

1. Data Integrity

Normalization safeguards data integrity by eliminating redundancies and inconsistencies. It ensures that each piece of information is stored in a single place, reducing the possibility of contradictory data.

2. Efficient Storage

Normalized databases help to optimize storage use. By reducing unnecessary data, storage space is minimized, resulting in a more efficient and cost-effective database structure.

3. Improved Query Performance

A well-normalized database can improve query performance. Its ordered structure keeps tables small and lets each fact be retrieved or updated in one place, which makes retrieval more exact and contributes to a smoother user experience.

Challenges of Normalization

While the benefits are clear, normalization has its own set of problems. Finding the right balance between normalization and performance is critical. Over-normalization can result in complicated queries that join many tables, which can hurt system performance.

Conclusion

In conclusion, normalization in DBMS is more than just a technical procedure; it represents a strategic approach to database design. The rigorous structuring of data, from 1NF to 3NF, ensures data integrity, efficient storage, and better query performance. Embracing normalization is essential for creating a long-lasting database.

To learn more about normalization in DBMS, visit analyticsjobs.in

CSc4710 / CSc6710 Assignment 3

Problem 1 (10 points)

Consider the relation R(M, N, O, P, Q) and the FD set F={M→N, O→Q, OP→M}.

Compute (MO)+.

Is R in 3NF?

Is R in BCNF?
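Questions like these rest on computing attribute closures. The following is a minimal sketch of the standard closure algorithm applied to the FD set from Problem 1; the function name `closure` and the encoding of each dependency as a pair of strings are assumptions made for illustration.

```python
def closure(attrs, fds):
    """Return the closure of attrs under fds, given as (lhs, rhs) attribute strings."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            # Apply an FD when its whole left side is already in the closure.
            if set(lhs) <= result and not set(rhs) <= result:
                result |= set(rhs)
                changed = True
    return result


# FD set F from Problem 1: M -> N, O -> Q, OP -> M
F = [("M", "N"), ("O", "Q"), ("OP", "M")]
R = set("MNOPQ")

mo_closure = closure("MO", F)
print(sorted(mo_closure))

# A left-hand side is a superkey when its closure covers every attribute of R.
superkey_lhs = {lhs: closure(lhs, F) == R for lhs, _ in F}
print(superkey_lhs)
```

Checking which left-hand sides are superkeys is the first step of both the 3NF and the BCNF test, since BCNF requires every non-trivial dependency to have a superkey on its left side.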

Problem 2 (30 points)

Consider the relation R(P, Q, S, T, U, V, W) and the FD set F={PQ→S, PS→Q, PT→U, Q→T, QS→P, U→V}. For each of the following relations, do the following:

List the set of dependencies that hold over the relation and compute a minimal…

Data Modeling with SQL: Designing Effective Database Structures

Data modeling is a critical aspect of database design, essential for creating robust and efficient database structures. In this article, we will explore the fundamental concepts of data modeling with a focus on using SQL (Structured Query Language) to design effective database structures. From understanding the basics to implementing advanced techniques, this guide will help you navigate the intricacies of data modeling, ensuring your databases are well-organized and optimized.

The Importance of Data Modeling

Effective data modeling lays the foundation for a successful database system. It involves creating a blueprint that defines how data should be stored, organized, and accessed. By providing a clear structure, data modeling enhances data integrity, reduces redundancy, and improves overall system performance. With Structured Query Language (SQL), developers can express these models in a standardized language, ensuring consistency and reliability across different database management systems (DBMS).

Key Concepts in Data Modeling

Before diving into SQL-specific techniques, it's crucial to understand the key concepts of data modeling. Entities, attributes, and relationships form the core components. Entities represent real-world objects, attributes define the properties of these entities, and relationships establish connections between them. Normalization, a process to eliminate data redundancy, is another essential concept. These foundational principles guide the creation of an effective data model.

Creating Tables with SQL

In SQL, the primary tool for data modeling is the CREATE TABLE statement. This statement defines the structure of a table by specifying the columns, data types, and constraints. Each column represents an attribute, and the data type determines the kind of information it can store. Constraints, such as primary keys and foreign keys, enforce data integrity and relationships between tables.
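As a minimal sketch of the statement described above, the example below runs two CREATE TABLE statements against an in-memory SQLite database through Python's standard `sqlite3` module. The `customers` and `orders` tables and their columns are hypothetical, and other DBMS products differ in data types and syntax details.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Each column is an attribute; the type and constraints limit what it may hold.
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    email       TEXT NOT NULL UNIQUE
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    total_cents INTEGER NOT NULL CHECK (total_cents >= 0)
);
""")

conn.execute(
    "INSERT INTO customers (name, email) VALUES (?, ?)", ("Ana", "ana@example.com")
)

# The UNIQUE constraint rejects a second customer with the same email.
try:
    conn.execute(
        "INSERT INTO customers (name, email) VALUES (?, ?)", ("Ana B", "ana@example.com")
    )
    duplicate_rejected = False
except sqlite3.IntegrityError:
    duplicate_rejected = True

# PRAGMA table_info lists the columns SQLite recorded for a table.
columns = [row[1] for row in conn.execute("PRAGMA table_info(orders)")]
print(duplicate_rejected, columns)
```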

Normalization Techniques

Normalization is a crucial step in data modeling to ensure data consistency and minimize redundancy. The normal forms, such as First Normal Form (1NF), Second Normal Form (2NF), and Third Normal Form (3NF), are design rules from relational theory rather than SQL features; you apply them through the tables, keys, and constraints you define in SQL, and they help organize data into logical, non-redundant structures. By eliminating partial and transitive dependencies and grouping related data, normalization contributes to the overall efficiency of the database.

Relationships in SQL

SQL allows the definition of relationships between tables, mirroring real-world connections between entities. The FOREIGN KEY constraint is a powerful feature that enforces referential integrity, ensuring that relationships between tables remain valid. Understanding and properly implementing relationships in SQL is crucial for maintaining a coherent and efficient database structure.
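To make referential integrity concrete, here is a minimal sketch using Python's standard `sqlite3` module. The `authors` and `books` tables are hypothetical. Note that SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`; most other DBMS products enforce them by default.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is switched on per connection.
conn.execute("PRAGMA foreign_keys = ON")

conn.executescript("""
CREATE TABLE authors (
    author_id INTEGER PRIMARY KEY,
    name      TEXT NOT NULL
);
CREATE TABLE books (
    book_id   INTEGER PRIMARY KEY,
    title     TEXT NOT NULL,
    author_id INTEGER NOT NULL,
    FOREIGN KEY (author_id) REFERENCES authors(author_id) ON DELETE CASCADE
);
""")

conn.execute("INSERT INTO authors (author_id, name) VALUES (1, 'Codd')")
conn.execute("INSERT INTO books (title, author_id) VALUES ('Relational Model', 1)")

# A book that points at a missing author violates referential integrity.
try:
    conn.execute("INSERT INTO books (title, author_id) VALUES ('Orphan', 99)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

# ON DELETE CASCADE removes dependent books together with their author.
conn.execute("DELETE FROM authors WHERE author_id = 1")
remaining_books = conn.execute("SELECT COUNT(*) FROM books").fetchone()[0]
print(orphan_rejected, remaining_books)
```

Whether to cascade, restrict, or set the column to NULL on delete is a design choice; cascading is used here only to keep the sketch short.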

Indexing for Performance

Indexing is a critical aspect of optimizing database performance. In SQL, indexes can be created on columns to accelerate data retrieval operations. Properly designed indexes significantly reduce query execution times, making data access more efficient. However, it's important to strike a balance, as excessive indexing can lead to increased storage requirements and potentially impact write performance.
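The effect of an index can be observed by asking the database how it plans to run a query. The sketch below uses SQLite's `EXPLAIN QUERY PLAN` through Python's `sqlite3` module; the `events` table, the index name, and the helper `plan` are hypothetical, and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE events (event_id INTEGER PRIMARY KEY, user_id INTEGER, kind TEXT)"
)
conn.executemany(
    "INSERT INTO events (user_id, kind) VALUES (?, ?)",
    [(i % 100, "click") for i in range(1000)],
)

query = "SELECT COUNT(*) FROM events WHERE user_id = 42"


def plan(sql):
    """Return SQLite's query plan description for sql as a single string."""
    return " ".join(row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


plan_before = plan(query)  # without an index, SQLite scans the whole table
conn.execute("CREATE INDEX idx_events_user_id ON events (user_id)")
plan_after = plan(query)   # with the index, SQLite searches idx_events_user_id

matching = conn.execute(query).fetchone()[0]
print(plan_before)
print(plan_after)
print(matching)
```

The index changes how the rows are found, not which rows are returned, and each additional index has to be maintained on every insert and update.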

Advanced Data Modeling Techniques

Beyond the basics, advanced data modeling techniques further enhance the database design process. SQL provides tools for implementing views, stored procedures, and triggers. Views offer a virtual representation of data, stored procedures encapsulate complex operations, and triggers run automated actions in response to specific events. Leveraging these advanced features allows for a more sophisticated and maintainable database architecture.
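A view and a trigger can be sketched with Python's standard `sqlite3` module, as below. The `accounts` and `audit_log` tables are hypothetical. SQLite does not support stored procedures, so that feature is left out of this example; it is available in systems such as PostgreSQL, MySQL, and SQL Server.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE accounts (
    account_id INTEGER PRIMARY KEY,
    owner      TEXT NOT NULL,
    balance    INTEGER NOT NULL
);
CREATE TABLE audit_log (
    account_id  INTEGER NOT NULL,
    old_balance INTEGER NOT NULL,
    new_balance INTEGER NOT NULL
);

-- A view is a stored query that can be read like a table.
CREATE VIEW overdrawn AS
    SELECT account_id, owner, balance FROM accounts WHERE balance < 0;

-- A trigger runs automatically when the named event occurs.
CREATE TRIGGER log_balance_change
AFTER UPDATE OF balance ON accounts
BEGIN
    INSERT INTO audit_log VALUES (OLD.account_id, OLD.balance, NEW.balance);
END;

INSERT INTO accounts VALUES (1, 'Ana', 100), (2, 'Ben', 50);
""")

conn.execute("UPDATE accounts SET balance = balance - 80 WHERE account_id = 2")

overdrawn = conn.execute("SELECT owner, balance FROM overdrawn").fetchall()
audit = conn.execute("SELECT * FROM audit_log").fetchall()
print(overdrawn)
print(audit)
```

The application code never writes to `audit_log` directly; the trigger records the change as a side effect of the update.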

Data modeling with SQL is a dynamic and iterative process that requires careful consideration of various factors. From conceptualizing entities to implementing advanced features, each step contributes to the overall effectiveness of the database structure. By mastering these techniques, developers can create databases that not only store data efficiently but also support the seamless flow of information within an organization. As technology evolves, staying abreast of the latest SQL features and best practices ensures the continued success of your data modeling endeavors.
