I love innovating and designing new technologies for social impact. I hail from San Jose, California, where I began my career in the public sector. I moved to London in 2014 and completed my MA in Interaction Design. I'm currently residing in San Francisco and balancing fellowships as a UX'er in civic tech for Code for America and as a participant in the Oculus Launch Pad 2017 program. (I've been using this blog to document my technical learning journey over the last few years. Please use the 'Archive' link to filter through the posts! Enjoy.)


So happy to announce that my submission, in partnership with Jessica Outlaw, won funding and partnership with Facebook/Oculus for production!!!

Teacher’s Pet: An anti-bias training lab for the classroom

Teacher’s Pet delivers anti-implicit bias training for teachers. Using evidence-based training simulations in VR, Clorama Dorvilias and Jessica Outlaw hope to promote healthy K-12 classroom environments by reducing bias in a more accessible, comfortable, and positive way. Follow Teacher’s Pet on Facebook and Twitter for updates.

Watch the Demo Trailer of the game here--

Full game will be available on the Oculus store in March 2018!

youtube

So what’s next?

I’ve been dreaming for almost 3 years now about exploring the power of VR to really drive inclusion and healthy spaces for marginalized people entering homogeneous communities. It’s such an honor, and I’m so humbled to have Facebook/Oculus as a partner to help me make this dream come true.

I’ll follow up with links to the rest of the blogs highlighting the development of this prototype that was submitted into the competition.

I’ll also be taking all the next steps to finish the final game and start building a start-up around this. The vision is that if we can get this right, we can translate it into gamified bias trainings across industries to promote healthy social inclusion, not just in the classroom but in workplaces, healthcare, and other industries that research shows are impacted by negative social biases.

Follow us on our Facebook and Twitter to stay updated!


Oculus Launch Pad: Week #4

State of Mind this week:

We’re soft launching our product at Code for America over the next couple weeks, and being on a two person team means quite a few sleepless nights ahead of me. I’ve been working around the clock to get as much done as possible, enough so that I can devote today (Sunday) to building on the traction I made last week.

Classroom Anti-Bias Test w/ Jessica Outlaw

youtube

After a few trial and error attempts over the past week, I was able to get the full working demo of the children raising hands (with the Rift + Controllers integrated) to Jessica Outlaw for her review and feedback.

We spoke today over the phone about the next steps and we felt that we have enough of the technical prototype to communicate the concept effectively. The working prototype inspired her to start thinking about the research more intentionally, while it inspired me to start thinking about the business and product market angle as well.

Having done extensive research on the product fit for my MA Thesis + project for integrating gamified anti-bias VR training into the workplace, I learned early on that the moral value of a product is not going to sell on its own. Tying a product to a problem that answers ‘why should we care’ or ‘why does this need to be fixed’ will largely require determining its fiscal impact.

Currently, we have the experience set up to potentially design a scenario where a teacher is given a task to ask a question, and select a child to answer it. This will lead to a research study designed to observe if teachers are more likely to choose white students over black students in class.
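To make the measurement idea concrete, here is a minimal Python sketch of how picks from such a scenario could be tallied. It is only an illustration under assumptions: the function name `selection_rates`, the group labels, and the sample data are all made up, and the actual research design had not been drafted at this point.

```python
from collections import Counter


def selection_rates(roster, picks):
    """Compare each group's share of picks with its share of the class.

    roster: dict mapping student id -> group label (hypothetical labels)
    picks:  list of student ids the teacher called on, in order
    """
    class_counts = Counter(roster.values())
    pick_counts = Counter(roster[student] for student in picks)
    total_students = len(roster)
    total_picks = len(picks)

    report = {}
    for group, count in class_counts.items():
        class_share = count / total_students
        pick_share = pick_counts[group] / total_picks if total_picks else 0.0
        report[group] = {
            "class_share": class_share,
            "pick_share": pick_share,
            # Positive means the group was picked more often than its class share.
            "difference": pick_share - class_share,
        }
    return report


# Made-up example data, not results from any study.
roster = {"s1": "A", "s2": "A", "s3": "B", "s4": "B"}
picks = ["s1", "s2", "s1", "s3"]
report = selection_rates(roster, picks)
```

A real study would still need the controls raised in the next steps; a tally like this only describes what happened in one session.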

Our next steps:

Determine why this study is important (how does this impact students, education quality--what are the human or societal impacts that this leads to)

How do we best design the research study? (--performing a comparative analysis on other similar studies, experiments, etc)

What elements should we include, and how can we control for the X-factors? (Should we design multiple tests where x factors are re-arranged, omitted, neutralized?)

What does a market for this kind of training tool look like? Are there other scenarios that we should be looking at to solve for a problem of ‘bias in the classroom’?

She is going to take the first stab at drafting a research design for this case and putting together some initial ideas for the research side of it. I’m going to research bias in the classroom and its possible social/environmental impacts in more depth and do some preliminary market research.

I’ve already done extensive research on how to effectively design anti-bias methods in the workplace, so I will also look for overlaps in my findings and for a possible training demo that translates this work to identifying these biases in the workplace.

----------------

Hyphen-Labs collaboration!

In plain generalist fashion, I’m going to do my best and see where I can fit in and contribute to their team’s effort in developing a product.

I star-”struckingly” met with Carmen Aguilar y Wedge, Co-founder of Hyphen Labs, shortly after the Boot Camp and we talked about a possible collaboration as well. I had actually heard about their work on Neurospeculative Afro-Feminism prior to boot camp, and it was every bit as exciting to learn about as its name. lol :)

I was invited to chat with the team on Friday over HangOuts but experienced some technical difficulties on my end. Nonetheless, I’m SUPER EXCITED to be invited to contribute to their work. I believe we have begun the ideation stage and are just playing with a few technical feature ideas to incorporate. Luckily enough, one of them was a feature that was suggested in the classroom anti-bias project as well, so I took it as a sign to check it out.

Plans are to convene over HangOuts once a week and start talking ideas, development, roles, etc.

---------------

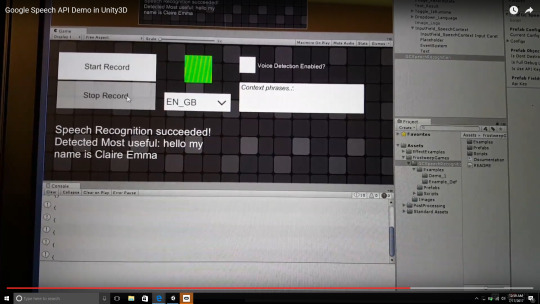

Google Speech Recognition + Unity 3D

When I said “Hello, my name is Clorama” :)

This seems to be a recently released feature that promises the world of being able to parse audio to words in 80+ languages.

I played around with it today and learned that the biggest drawback is that it requires paying for the Google Cloud Platform, which is not so cheap. However, they do have a generous 3 month trial, which fits nicely with the Oculus Launch Pad timeline and makes it a good opportunity and value to check it out.

I made the API key and purchased an asset that put together a demo (with source code) for using the Google Speech API. The response time is a tad slow and it’s not as accurate as I thought it would be (I said hello 4 times and got back ‘peru’, ‘11’, ‘hello’, and ‘hello’).

However, STILL super cool and potentially good enough for a prototype. I’m sure it will only get better as time goes on!
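For a quick sense of what those four attempts mean as a number, here is a small Python sketch that scores transcripts against the word that was actually spoken. The helper name `exact_match_rate` is made up for illustration; it does not call the Google Speech API and only works on text that has already come back.

```python
def exact_match_rate(expected, transcripts):
    """Fraction of transcripts equal to the expected word, ignoring case and spaces."""
    if not transcripts:
        return 0.0
    target = expected.strip().lower()
    hits = sum(1 for text in transcripts if text.strip().lower() == target)
    return hits / len(transcripts)


# The four results reported above for the spoken word "hello".
results = ["peru", "11", "hello", "hello"]
rate = exact_match_rate("hello", results)
print(f"{rate:.0%} of attempts matched")  # 2 of the 4 attempts
```

Four tries is far too small a sample to judge the service, but logging attempts this way makes it easy to compare microphones or settings later on.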

------------

Meeting with Jewel Lim!

I was super stoked that Jewel, Co-Founder of Found l was willing to speak with me. We had an hour chat where she let me pick her brain on her process and workflow. She gave me some great tips on how to maintain an optimal and smooth experience when developing a product idea during Launch Pad.

My biggest takeaways:

Team Dynamics: define roles and expectations early, get it in writing, and sign written contracts when possible.

It’s okay to use purchased assets as part of a prototype--not everything has to be from scratch since time is of the essence.

It’s more important to have a fully functional prototype submitted for game play than to have a beautiful prototype that’s not fully functional. You can use the pitch for investment to make it more beautiful.

I'm really excited for this connection as well and am grateful for her willingness and ability to make herself available in this way.


Oculus Launch Pad: Week #3

So this week was fun :)

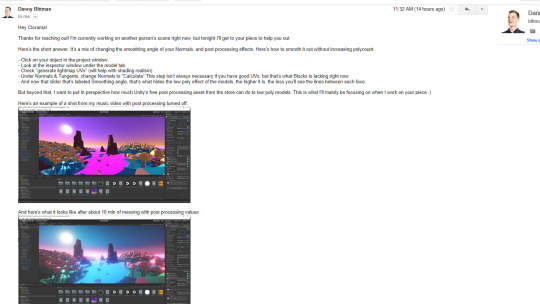

The above photo is my attempt at figuring out how 3D modelling works in Blocks! I'm excited to try out the post processing techniques with it (see email by Danny Bittman below!)

Took advantage of the 4 day weekend and got my hands dirty trying out some new features in Unity 3d, hardware set-up and integrations and just refreshing myself on how to set-up and create character animations.

I watched a TON of youtube video tutorials and VR conference talks to get my head back in the game after a couple months away, and also since it's been a while since I developed for the Oculus platform. Here are just a few of the places I stopped by:

Warm up for VR Development Refresher: Getting Started in VR Rift Development

Tutorial on VR UI Interaction for Oculus Gear and Rift:

Developing any Oculus mobile VR game (I’m considering the Gear VR) requires a signature key. You can get that here following the simple steps.

Gear VR and Unity 3D Set Up:

Trying out Newton VR:

Checked out Mixamo features for quick animating:

Tried Blocks by Google on my Oculus Rift and shipped my quick creation to Danny Bittman who made this crazy offer to post process them in Unity 3d and email back a linked tutorial and video of it...so far, I got a partial response but hopefully, I'll get to see what he's done with it by tomorrow.

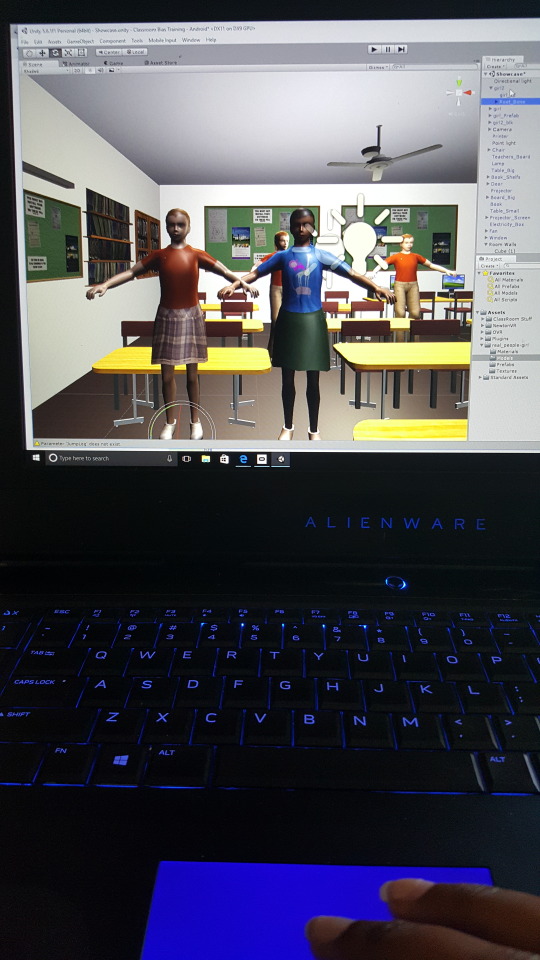

Implicit Bias Test in VR - Collab with Jessica Outlaw

Lastly, Jessica Outlaw and I share an interest in exploring VR’s potential to mitigate bias/be a bias-reduction tool. I dusted off some old ideas that I had shelved while researching and deciding on a prototype idea on this topic for my MA thesis.

Using some elements that have been proven to be of high risk for making biased decisions (high stress, time-limitations, and risky decision points), I thought this could be easily simulated in a game where a player would have a limited amount of time to choose a full workplace team.

Ex: The goal is to pick the right person for each role (with the option to ‘mini interview’ maybe 2 out of 5 of those in the room). You visit a number of rooms, interview those you approach, and select them for a role (like engineer, etc.). At the end of the game, there would be an opportunity to reflect on the choices you made and the patterns in who you approached, and to learn about what potential implicit biases they reflected. Additionally, a scoring system would show how well you actually found the right person for each role.

Jessica’s interest in this project focuses more so on the classroom and the different biases that teachers may apply to students unconsciously. She thought it would be cool to create an exercise based on the case study that white students will be picked more often than students of color to answer questions, which can have psychological consequences on the latter group.
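The end-of-game reflection and scoring could be sketched roughly like this in Python. Everything here is an assumption for illustration: the `Candidate` fields, the `summarize_game` helper, the group labels, and the hard limit of 2 interviews per room are invented to match the idea as described, not taken from an actual prototype.

```python
from collections import Counter
from dataclasses import dataclass

MAX_INTERVIEWS_PER_ROOM = 2  # "maybe 2 out of 5" in the idea above


@dataclass(frozen=True)
class Candidate:
    name: str
    group: str      # hypothetical label, used only for the end-of-game reflection
    best_role: str  # the role this candidate is actually the right fit for


def summarize_game(interviews_by_room, hires):
    """Score the hires and tally who the player chose to approach.

    interviews_by_room: dict of room name -> list of Candidates interviewed there
    hires: dict of role -> Candidate selected for that role
    """
    for room, interviewed in interviews_by_room.items():
        if len(interviewed) > MAX_INTERVIEWS_PER_ROOM:
            raise ValueError(f"too many interviews in {room}")

    # Pattern data for reflection: which groups did the player approach?
    approached = Counter(
        c.group for interviewed in interviews_by_room.values() for c in interviewed
    )
    # Score: how many roles were filled by the person who actually fits them?
    correct = sum(1 for role, c in hires.items() if c.best_role == role)
    return {"correct": correct, "roles": len(hires), "approached": dict(approached)}


# Made-up candidates for illustration only.
ana = Candidate("Ana", "group_1", "engineer")
ben = Candidate("Ben", "group_2", "designer")
cy = Candidate("Cy", "group_2", "engineer")

summary = summarize_game(
    {"room_a": [ben, cy]},
    {"engineer": cy, "designer": ben},
)
```

In this made-up round the player fills both roles correctly but only ever approaches people from one group, which is exactly the kind of pattern the reflection step is meant to surface.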

youtube

I’m helping to create a prototype environment for this demo and have been working on animating the avatars that she purchased for it (with plenty of troubleshooting/debugging). Essentially, we are going to try and make a VR version of the implicit bias test for various real life situations. It's easier to convince, prove, reveal, and correct unconscious behavior with real life decision making. We believe that designing the scenarios around everyday real world situations would make for a more powerful exercise.

I spent a few hours putting this together, and half the day troubleshooting/debugging. Check out the progress of development :)

Pics of integrating the Touch Controllers with the Oculus Avatar SDK

Next Steps:

-Create Scripts for Touch Controllers to trigger animations of children.


Oculus Launch Pad: Week #2

See it animated in Tiltbrush: http://tiltbru.sh/8GRhBsiQto3

Minor Challenges:

So I’m balancing a full-time fellowship at Code for America (helping build an online tool to help “Reentry” Job Seekers get connected to Workforce Services in the muni of Anchorage, Alaska). I’m leading the UX Research and Design process of the product and am fortunate enough to have Oculus Launch Pad arrive at a time when the development stage is less intense and demanding on me.

My greatest challenge will be balancing fellowship and Launch Pad work, particularly since I have an ‘all in’ mental approach that fuels my capacity to do work that goes the extra mile. Bright side in all this is that I have a four day weekend! So I was able to easily split up my concentration between work during the weekdays and then dedicate my 4 day weekend to just live, sleep, eat, and breathe life into my VR game idea “Reincarnation”.

My last freelance VR development project ended in mid-April and since then, I’ve spent the free time using my Rift and Gear recreationally (playing in Tiltbrush and gaming, mostly in Super Hot, Serious Sam, and Robo Recall).

It’s been a couple months since I’ve worked in Unity 3d so I’m excited to get back in it and am spending the first part of this holiday weekend stretching my VR creating muscles and getting to know the new Unity 5.6 more intimately.

Initial features I’m going to be exploring for technical feasibility to incorporate into the game.

Since my game will be branching-narrative oriented, I’ll be trying out some new input/interaction features like speech recognition APIs and working with controllers. I’ll also keep my eye out for design inspiration for the character models, environment, and storyline ideas.

(I’m leaning to developing for mobile VR for the accessibility and mass adoption rate angle of it).

In terms of life missions, I’m debating on starting out with developing ‘personality traits’ like---Happiness, Generosity, Kindness, Self-Love

Inspiration for developing a storyline: Tenderloin

I often like to work at a hookah shop located in lower Nob Hill in San Francisco. I have to walk through the Tenderloin to get there and the sidewalks tend to be crowded with homelessness.

Despite the stench of pee, the sight of needles, and people living in desperately unhealthy conditions, I’ll often witness a few of them gathering around a radio and dancing to music, or feeding the pigeons with bread, and wishing passersby ‘a blessed day’--without asking for money.

This sight of needles, garbage, vandalism, is common along sidewalks that are home to many homeless on these streets.

I feel like there’s something there and I took a couple pictures to possibly inspire the first life mission/challenge of the player: “Happiness”.

Anyways, my to do list for this weekend consists of creating some mini prototype games in Unity 5.6, getting my feet wet with the new changes from the last couple months, and seeing if I can get a few things created to show off by Tuesday night!

Other fun prospects:

I’ve been speaking to several other Launch Padders about game ideas. I’m excited to also start potentially collaborating with Jessica Outlaw on creating some anti-bias training prototypes and seeing what develops from there this weekend as well.

Oculus Launch Pad: First Blog

Wow.

First, I want to deeply thank Ebony Peony for creating this opportunity!! The investment and value that has been given to me will not go to waste. It’s my personal mission to make sure that the ROI (regardless if my app gets selected) will be positively felt, tenfold!

Fun background story on how I discovered VR

So my life has been weird the last few years. In 2012, I left my hometown of San Jose and my budding career in the public sector and began a life of nomadic traveling around Europe, North Africa, the Middle East, and Southeast Asia. Amidst these travels in 2014, I discovered I had a knack for coding and started transitioning my career to freelance web development. I decided I would provide paid services to clients (including Issa Rae!) and use those funds to pay for my volunteering at NGOs overseas.

While undertaking my Master’s in Interaction Design at the University of the Arts London, I fell in love with VR development and experiences. My course had a makeshift Maker’s Lab/Studio with an unused Oculus DK2 and, with some googling on how to operate it, my life changed. I worked day and night for four months straight learning Unity 3D and configuring/troubleshooting it to work on Mac OS X with the last runtime version/plug-in developed for it.

(Mitigating Unconscious Bias with Virtual Reality from Clorama on Vimeo.)

Not knowing the industry around VR, I started working in London as a UX’er for a small startup tech consultancy that also had an Oculus DK2 setup which I thought was fate. I tried to start a VR community within the office and held a few brown bag lunch training sessions on Unity 3D to see if I could get passionate friends with me on this but failed.

In the summer of 2016, the company went out of business and I was the first to be let go. I moved to France--to a medieval ‘wine country’ town called ‘Sancerre’ at the top of a mountain, two hours south of Paris. I spent 3 months in solitude trying to figure out what my next steps in life would be. At this point, social media became my source to keep my eyes on the new VR community happening in Silicon Valley. I started to feel MAJOR fomo and decided that this, plus Brexit happening, was a sign that it was time to come back home.

Learning about Launch Pad

I came back Sept 8th to San Jose, and started my first week at Silicon Valley AR/VR Academy hosted at Cisco for 6 weeks. Four weeks in, I started my first shift as a Brand Ambassador on the Oculus Team (via a staffing agency). It was my first time at Facebook HQ, having been away from the Bay Area/USA for a few years, and I was so embarrassingly star struck that I couldn’t contain my emotion walking into the Building 18 offices. At this point, Oculus was a dream company and I was fortunate enough to get to meet Ebony on day 1!

Selfie at Oculus Connect 3!

I remember asking her a whole bunch of questions about the company and telling her about my passions to work in this field now that I had relocated back to my hometown in Silicon Valley. She told me that I should look into this Launch Pad for 2017 and after doing some research online, I read through a few of the blogs by the first cohort participants and knew this program was for me!

My VR rap sheet since coming back to Silicon Valley

During this time, I’ve been balancing freelance work as a VR developer for the London Neuropsychology Clinic and University College London. In December, I had gotten a promotion to Demo Specialist for a staffing agency on the Oculus Team which was super exciting. From November to February, I was assigned to the UX Research team to conduct user testing under the Head Researcher, Richard Yao.

youtube

Demo video of my first contracted google cardboard app for the London Neuropsychology Clinic exploring ‘embodiment’ methods for healthcare practitioners at the Unconscious Bias Conference held in November 2016.

I also participated in the Silicon Valley AR/VR Academy for a 6 week VR learning program. I then got an opportunity to work as a Lead UX Researcher at Code for America, where they’ve indulged my VR obsession in the office and have allowed me to work on side initiatives of 360 filming nonprofit stakeholders that we are partnering with.

My Aspirations for 2017 Launchpad

Aspiration #1: Slay.

It’s been a whirlwind this month of June between the excitement and anxiousness of getting accepted into Oculus Launch Pad.

I was traveling for work to Alaska and made sure to come back for this weekend...between cancelled flights, delays and figuring out Caltrain/Lyft to make the commute from SF to Menlo Park, the experience was unforgettable meeting all the other Launch Pad participants and the amazing sense of immediate community that I arrived to--united by a passion for VR, regardless if we were strangers.

Arriving at Facebook HQ on June 10th, meeting all the different people doing “the lord’s work” (aka helping shape and contribute to the growth of VR) :’), and learning about the different ideas that people are working on has been extremely motivating for me to continue on my path to creating VR experiences.

Now more than ever am I committed to continue pushing myself as a creator, researcher, and developer to take this challenge of making an idea come to life by the end of these 13 weeks.

Rough sketch in Tiltbrush of what the starting home environment would be for my game “Reincarnation”. The player picks a life mission, is assigned a body and a cultural society to inhabit, and has to work through branch narratives to reach the objective (aka meet the life goal).

I was struggling between the ideas of creating games, training videos (building on my MA research thesis), or exploring something new all together --taking advantage of my fellowship with Code for America in Civic Tech and exploring ways to bridge the empathy gap between stakeholders in VR.

Given the short amount of weeks and some feedback from peers, I think I will continue the gaming route, to create a concept prototype that can be used to be a starting point for a VR product that can also have a social impact (unconsciously ;))

Most importantly,

I’m excited to build a network of peers and friends who are just as passionate and skilled at VR as I am. This alone gives me the home I’ve been looking for as a woman of color, struggling to find her ‘people’ in the space of VR. The people and the energy I feel from this group alone is almost overwhelmingly beautiful to me and I’m still trying to get over the shock that this is actually all real. Please bear with me, people. But rest assured, I’m joining this party in the weeks to come. :)

My portfolio website: www.creativeclo.com/loves/VR.html


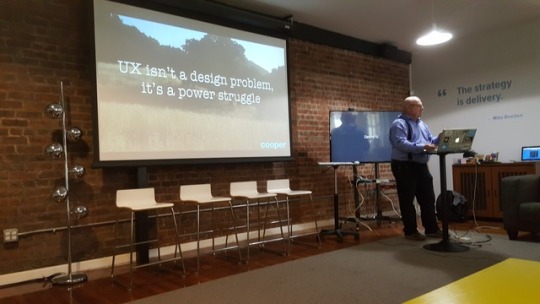

Alan Cooper, Pioneer/one of the Forefathers of Interaction Design

Perhaps I’m biased by my love for interaction design, but this was the most amazing presentation for us at Code for America during lunch today.

I particularly loved his advice on how to take bolder approaches in civic technology in this new age of a more unpredictable political climate.


I’m currently a UX Research and Design fellow for Code for America working to help improve workforce development with technology in the muni of Anchorage, Alaska! I’m documenting my experiences using the Samsung Gear 360. Check out our videos!

(Plus! If you have the google cardboard and the youtube app on your phone, just click on the google cardboard icon on any of the 360 videos and insert your phone into the headset for more immersive viewing!)


Got the Samsung Gear 360 VideoCam...my life is changed.

Got it on sale at Best Buy for $275. SD card and Samsung charger are sold separately (if you have a Samsung Galaxy S6, which you will need as the viewport anyways, the charger can be shared between both).

If you’ve ever used or bought a GoPro...it essentially functions the same.

1. Insert the microSD (recommended 64GB)

2. Download the Samsung Gear 360 mobile app

3. Pair the two via Bluetooth (press on Bluetooth button on camera)

4. Click ‘Gear 360 Mobile View’ icon.

5. Boom, you are live!

You can take 360 video, 360 photos, 360 video timelapses, and 360 video loops!

Put it in any of those modes and press play (either by your mobile app or pressing the red button on the top of the camera).

Reviewing your video/photos:

youtube

Using your mobile app, you can play back the videos or view the photos and navigate around. It will stitch the images together when you click on each one at the time of review.

Sharing your video/photos:

You just click ‘share’ and it gives you the options. YouTube, Facebook, and Google Photos will adapt it for 360 viewing upon uploading.

Naturally, I dropped my phone while at the Dentist’s office and the LCD screen no longer works. :( So I can’t preview my videos/photos while recording (but I can still take 360 pics and videos---camera still works fine).

So if I want to share them, I will have to download the video editing software provided by Samsung onto my PC. (Unfortunately, it’s not available for Mac.)

Samsung Gear 360 ‘Action Director’ Download for PC: https://resources.samsungdevelopers.com/Gear_360/02_Download_Gear_360_Action_Director

youtube


Gandhi VR App Dev Progress (continued)

See the previous post here: http://interactiondesignwithclo.tumblr.com/post/156462459900/gandhi-virtual-reality-app-development-progress

So I made a trip to Best Buy to see about a possible graphics card adaptor for my Mac. I talked to Geek Squad and, while there are articles on how to do this, it apparently bottlenecks your Mac and could take way too much time to make happen successfully.

So...I bought a PC laptop. It has the high quality specs I need and there was an open box on sale for $1150 (this was $500 less than the market rate price of $1800 pre-tax). Put that on the credit card and took my new kid home.

1. Purchase “open box” PC Laptop at Best Buy: Alienware 17

2. Install Unity 3D, Android Studio, and Maya overnight.

3. Redo the whole game environment (takes less than 2 hours because the graphics card on this pc is a BEAST.)

Bye MacBook.

(via GIPHY)

4. PC Laptop dies mid-project and won’t reboot when I put the charger in.

A google search shows me this is a common problem with Alienware PCs. I consider taking it into Best Buy Geek Squad the next day, but when I take it home and plug it in again it works. Since the deadline for this project is today, I deal with it and focus on getting this done. I’ll go into Best Buy this weekend to see if I can get this one replaced!

5. Figure out the Skybox thing. Download a free one from the Unity Asset Store.

The configuration for the skybox (my last step for the environment) used to be under Edit > Render Settings > Skybox.

But in Unity 5, the Render Settings have moved to the Lighting window. So:

Window > Lighting > Skybox.

Next on this adventure:

Now on to completing the rigging of the Gandhi avatar’s skeleton in Maya so I can have him ready for animation in Unity 3d. That’s the last step before I worry about building and exporting the .apk for google cardboard!

Gandhi Virtual Reality App Development Progress

I’ve been working with a neuropsychologist at the London Neuropsychology Clinic to develop an empathy VR app that allows users to see themselves as Gandhi!

This (Google Cardboard) app will be exhibited as part of the International Mahatma Gandhi Conference held at University College London in April 2017.

This blog is just a documentation of sorts that demonstrates the progress and learning in my development workflow for this project.

1. Install Google VR sdk into Unity 3d and get a new error.

Couldn’t find the solution via google. I know it’s some stupid code error (see below) but nobody got time for this right now.

2. Use old game that worked perfectly fine in previous project—delete all unrelated assets to make way for the new!

3. Download Taj Mahal 3d Model: http://3dfreemodelss.blogspot.com/2015/02/taj-mahal-india-hope-you-will-like-it.html

4. Add Textures piece by piece in Unity 3d

5. Add Unity Water prefab--doesn’t show up in cardboard game mode. Realize it’s hard to find one for Unity 3d version 5.4. This one worked:

youtube

6. Download Gandhi 3d Model —$50

7. Open .rar files --https://unrarx.en.softonic.com/mac

8. Can’t rig a game object, only .fbx files

9. Start with low hanging fruit and--Create Terrain for trees and grass

10. Lose Grid in iso view :( ..didn’t realize until the next day when I googled the problem.

11. Watch tutorial on making grass

youtube

12. Find 3d models of cypress trees--cheapest is like $20ish. Not cute.

13. 2nd terrain for grass drastically slows down rendering in scene mode for Unity. Learn why a Macpro with low GPU is not for VR dev.

14. Deleted and created two new terrains and will just put ‘facade’ of grass.

https://docs.unity3d.com/Manual/HOWTO-ImportObjectSketchUp.html

15. Couldn’t find affordable cypress models, so just created a low LOW polygon cypress tree shape. 3D model it with game objects, color it green, and call it a day!

16. Will need to figure out if I can buy a computer with a high gpu card so I can transfer and finish the game with ability to playback without lag this weekend. To be continued. For now--focus on what CAN be done!

17. Download Maya and get student package for a year, free.

18. Import .fbx for rigging to T shape

19. Have no idea how to navigate the Maya viewport since it’s not the same as Unity 3d. Watched these lengthy videos for the gold nuggets I need:

youtube

And the saga continues.....stay tuned. 3 days left for this deadline!!

#virtual reality#vr#virtual reality development#vr development#gandhi#women in tech#google cardboard#unity 3d#maya


I was honored to be invited to give a presentation about my findings on using Virtual Reality to develop empathy and reduce unconscious social biases that affect the tech workplace. I gave an overview of how to use free tools like Unity 3D and proven techniques to employ in VR gaming at the Afrofutures conference held in Birmingham, UK.

Here’s a few pics of the presentation below!

Check out Presentation on Slideshare!


youtube

Virtual Reality Demo to Develop Racial Empathy for Healthcare Practitioners

I was contacted by a Neuropsychology Professor at UCL (University College London) to develop a demo. This demo would be exhibited at the Unconscious Bias Training for attending healthcare practitioners using Google Cardboard and Android phones.

The purpose of this demo is to show a broad range of technological advances that help provide solutions for addressing racial biases that were discovered in healthcare practices.

I made a screen recording of the demo shown above.

#virtual reality#vr demo#racial empathy#empathy#healthcare#bias#unconscious bias#ucl#university college london#unconscious bias training#neuropsychology#google cardboard#vr and empathy


youtube

A couple nights before the election, I made a virtual reality game for Google Cardboard called “Trumpocalypse!”

I was motivated to create the game in the spirit of giving people who have felt personally attacked, threatened, and insulted by his campaign remarks a way to take out their frustrations in a playful manner.

How To Install & Play Game:

Download from Github onto your computer: Trumpocalypse Repo!

Export the .apk file via USB to your Android Device

Insert your phone into Google Cardboard, press play!

Use the trigger on the right hand top side of the cardboard to fire your projectiles when your circle is pointed towards a Trump head.

What you need:

Android Device

Google Cardboard* (optional)

USB Cord

Laptop/Computer

Game Objective:

In the same spirit as the game “Asteroids”, the idea is to destroy as many Trump heads as you can in the space before they destroy the White House. The White House is in the center of the space and is made up of 4 walls. Each wall that gets touched explodes. When all 4 walls are destroyed, all of America’s dignity is lost. :)

So try to save as much dignity as you can!
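The lose condition described above boils down to a tiny bit of state. The game itself was built in Unity 3D, so the Python below is not its code; it is only a sketch of the rule, and the class and attribute names (`WhiteHouse`, `hit_wall`, `dignity_left`) are made up for illustration.

```python
class WhiteHouse:
    """Tracks the 4 walls; the game is lost once every wall has exploded."""

    WALL_COUNT = 4

    def __init__(self):
        self.walls_standing = [True] * self.WALL_COUNT

    def hit_wall(self, index):
        """A head touched wall `index`, so it explodes (no effect if already gone)."""
        self.walls_standing[index] = False

    @property
    def dignity_left(self):
        """Share of walls still standing, from 1.0 down to 0.0."""
        return sum(self.walls_standing) / self.WALL_COUNT

    @property
    def game_over(self):
        return not any(self.walls_standing)


house = WhiteHouse()
house.hit_wall(0)
house.hit_wall(0)  # touching a destroyed wall again changes nothing
house.hit_wall(2)
```

After these hits two walls are still standing, so the game continues with half the dignity left.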

#trumpocalypse#virtual reality#vr#google cardboard#vr games#interaction design#interaction designer#android#Asteroids#asteroidsvr#unity 3d#vr demo


I wrote this blog earlier this year for a tech agency I used to work with that ended up going out of business...luckily, the website hosting is free and should stay up indefinitely. *cross fingers* :P


youtube

“Clo delves into the ways that our personal backgrounds influence our view of the world, and explains why it's important to consider all viewpoints.

We'll see some examples that will help us achieve that, and hear the hard evidence for why a more diverse workforce pays off.”

Oldie but goodie! ;)


Text

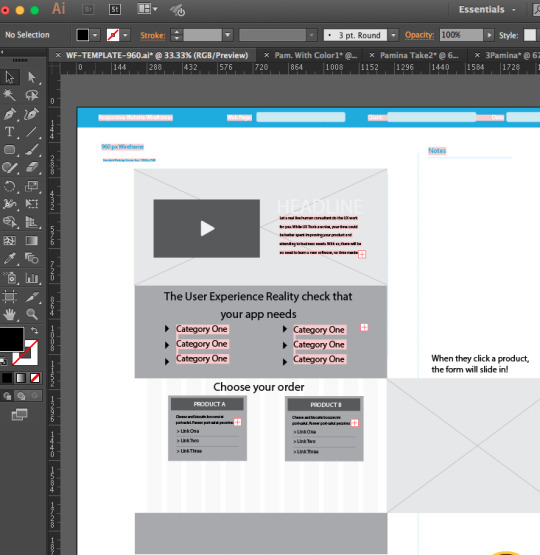

UX Consulting Process for Apollo

August 2016

I am providing a full service, from concept development through wireframes to prototype product development, for a start-up founder within 2.5 weeks.

The client approached me with an idea to start an online business providing User Experience business consulting for app makers.

I primarily used Google Drive since the client is located in a different country and wanted to be able to collaborate and make changes.

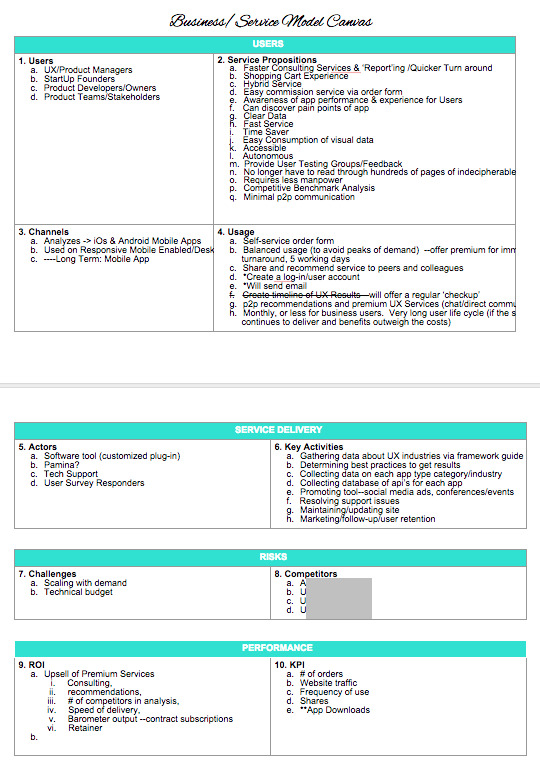

1. Business Model Canvas - Google Doc

To flesh out the scope of this project, help her hammer out the details, inspire her business plan write-up, and ensure we were on the same page, I led an exercise to create a business/service model canvas.

Competitive Analysis - Using the Xtensio tool for formatting and sharing with the client, I researched peer companies and took an in-depth look at their branding and services to help identify where she could create her own competitive edge/niche for her target market.

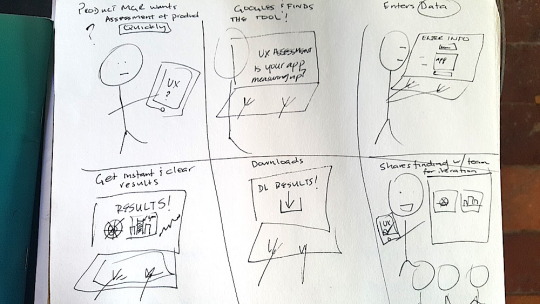

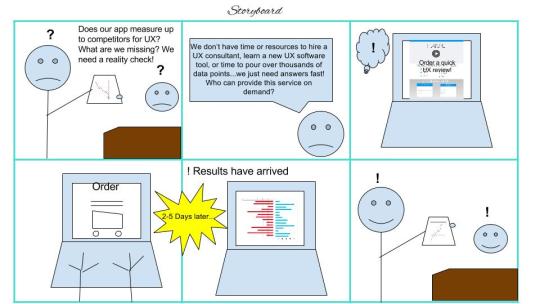

2. UX Storyboard - I developed a rough, sketched storyboard with her using Google Drawings to visualize the services and the story of how a product like hers could be used in real-life practice.

3. User Persona - Used the Xtensio tool to create a user persona for the client, based on a real user they identified as ideal.

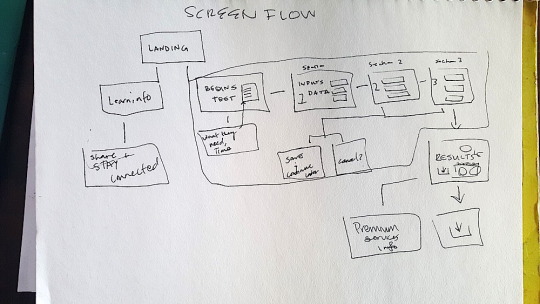

4. Screen Flow - Started visualizing the functionality needed, based on user expectations and the user journey through the website, optimized for CTA conversion.

Finalized in Google Drawings for sharing/transparency purposes. We felt this flow would best engage a user who is looking for a trusted service and help them quickly understand its benefits. Ideally, the service demo would generate enough excitement for the user to take immediate action and purchase the service.

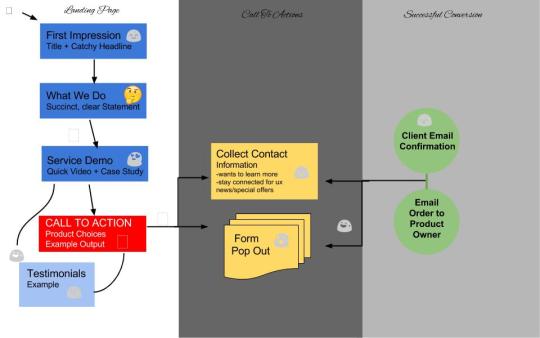

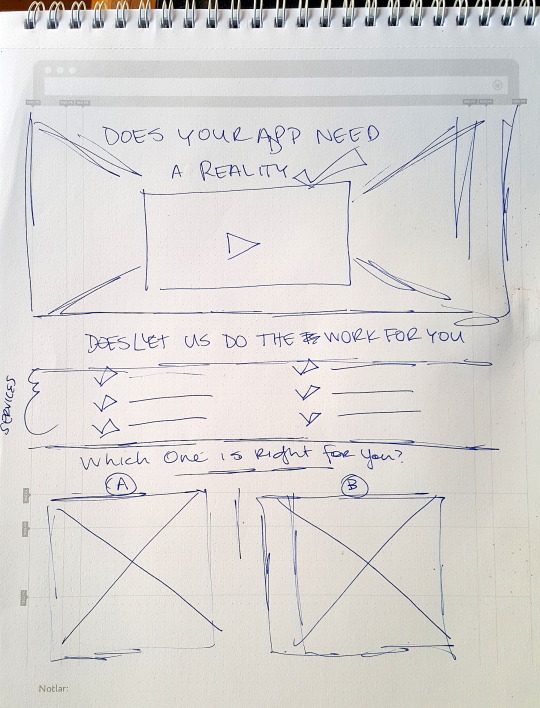

5. Website Structure -

This is a pen sketch on a notepad proposing a design that embodies the screen flow.

Using Illustrator, I wireframed the design.

6. Color Schemes - Used Coolors.co, a color palette tool, to help the client decide on a color style for her tool. Based on past experience interacting with her user persona, we assumed that he would find the top cover style more appealing.

We drew inspiration from Apple's branding: intelligent, reliable, trustworthy, masculine, and yet still approachable and laid-back thanks to the blue.

We also appreciated this color scheme because it seemed soothing and inspired fresh, consoling feelings for a prospective user who may come to this site slightly frustrated, unsure, and skeptical.

We decided to conduct A/B testing during the initial stages of the product launch.

7. Build Prototype - Since I’m not fluent in JavaScript, I used a pre-made template with all the JS scripts built in for me to customize, along with similarly structured basic HTML/CSS.

youtube

The video above shows the prototype website in its first-draft stage, built using Sublime Text.

Next Steps--

The colors are not yet fully implemented, but the process is paused for now. The next stage is to use this opportunity to explore a prototype-building tool like Axure for usability testing prior to launch.

#UX process#UX#UI#User Experience#User-Centered Design#User Centered approach#interaction design#design#hcd


Text

20 minute UX Sketch of Possible Interface for Bike Theft Reporting App

I stuck to the 20-minute deadline, so please forgive the extreme roughness of the sketches!

1. Google research: the stages and feelings people go through when their bike is stolen.

I have never had a bike stolen, so I felt uncomfortable starting from pure assumptions about how a person would feel when using this app. I am a huge fan of apps that feel intuitive and sympathetic when I’m relying on them in a stressful state. So I started looking up blogs and personal accounts from people who have had a bike stolen, to learn the most likely emotional state a user would be in and when they would use the app. Then I began the next step:

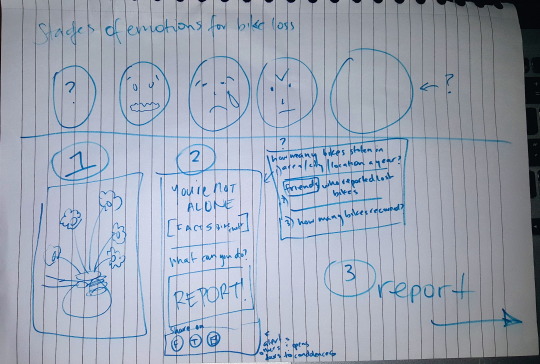

2. Emotional User Journey Map

I used faces to quickly visually capture the developing mood of the user from the point where they see their bike missing to the point where they are ready to take action in reporting.

First face is a question mark for initial mild confusion

Second face is when it starts setting in that it may really have been stolen and mild panic ensues.

Third face is despair, a bit of an emotional drop to realizing the loss

Fourth face is a natural response to the feeling of powerlessness and a reaction to the injustice. They may begin to feel angry, question how and why it happened, and possibly feel a bit vengeful.

Fifth face leaves room for a variety of responses, but typically it is the point where the user feels they need to take action. The assumption is that they may turn to their phone for answers if they are alone.

At this point, the question is whether the user has had a bike stolen before or is a first-timer. This determines their next course of action, because it changes the type of information they immediately look for.

After weighing the various possible scenarios for how they would arrive at the app, I believe it would be ideal for it to be consoling or responsive to their emotional needs while providing certainty about their next steps within the app.

My immediate assumptions, shown below the blue line in the photo above, are that the app will start off with something consoling/sympathetic while it loads to begin the reporting process.

Often during these times, people might feel consoled to see facts about bike theft that make them feel less alone, or that provide hope or tips to reassure them they are taking the right next step.

A simple, concise wording of next steps, with minimal/flat design to enhance usability and ease of use would be my starting point in design.

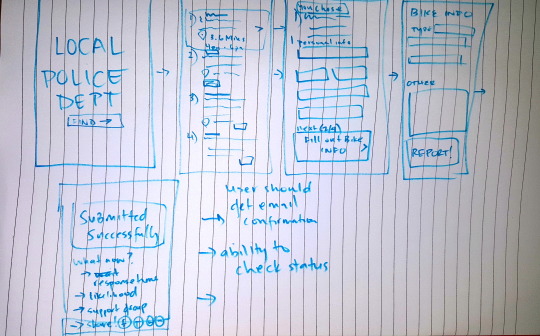

3. The Report Interface

This should possibly carry a different, more assertive tone in design and style.

Directions should be as simple as possible. Screens minimal--2 colors max to minimize emotional stimulation.

Given more time, I’d hone down and develop the following, and start visualizing/experimenting in Sketch or Illustrator:

1. First box--Clearly states the starting point and the action required, and makes clear to whom and where the report is being sent. One button to start the process.

2. Geo-Location option for suggested places to report to around the location where the theft was committed. The user can choose which one they would like to fill out the form for.

3. Once location(s) are chosen, they remain listed at the top of the form, and the number of total pages for data entry should be listed showing stages of the user’s progress.

4. Each type of information needed should be separated with its own screen page (instead of a complete form in one screen and continuous scroll down) to add a sense of progress when a portion is completed

5. Moving to the next stage should take just the press of a button. This keeps it accessible to any user and avoids further frustration from complications (for example, swiping the screen, which often has to be done a couple of times before it works).

6. After form is completed, a huge ‘successfully submitted’ confirmation should display with a concise sentence that tells the user what to expect next in response to their submission.

7. Additional options can be linked below to share the news on their social media (optionally with a bike description?), since consolation from friends is a mood lifter for some users and also serves to keep others in the area alert.

8. Other recommended action steps they can take could also be listed: joining a support group, tracking the report status, finding cheap bikes for sale in the area, etc. These options should be user tested as well to ensure they are a positive addition to the experience.
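To make the page-by-page flow in the list above a bit more concrete, here is a rough Python sketch of the form logic. The page names, the `TheftReport` class, and the wording of the messages are all hypothetical. A real app would need user testing to decide what each screen actually asks for.

```python
from dataclasses import dataclass, field

# Hypothetical pages: one type of information per screen (item 4).
PAGES = ("bike_description", "theft_location", "theft_time", "contact_details")


@dataclass
class TheftReport:
    stations: list  # places chosen to receive the report (items 2 and 3)
    answers: dict = field(default_factory=dict)
    page_index: int = 0

    @property
    def is_complete(self) -> bool:
        return self.page_index >= len(PAGES)

    @property
    def current_page(self):
        """Name of the screen being shown, or None once the form is done."""
        return None if self.is_complete else PAGES[self.page_index]

    def header(self) -> str:
        """Chosen locations stay at the top, next to the user's progress (item 3)."""
        step = min(self.page_index + 1, len(PAGES))
        return f"Reporting to: {', '.join(self.stations)} | Step {step} of {len(PAGES)}"

    def press_next(self, answer: str) -> None:
        """One button press saves this screen's answer and moves on (item 5)."""
        if self.is_complete:
            raise ValueError("the form is already complete")
        if not answer.strip():
            raise ValueError("please fill in this screen before continuing")
        self.answers[PAGES[self.page_index]] = answer.strip()
        self.page_index += 1

    def confirmation(self) -> str:
        """Big confirmation message naming where the report went (item 6)."""
        if not self.is_complete:
            raise ValueError("the form is not finished yet")
        return ("SUCCESSFULLY SUBMITTED. "
                f"Your report was sent to {', '.join(self.stations)}.")
```

Keeping each screen to a single answer means the "Step 2 of 4" header moves forward on every button press, which is where the sense of progress in item 4 comes from.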

My Top 5 Tools

Google Search Engine - for preliminary research, to point me in the right direction or get an initial sense of the context/resources of the issue I’m tackling

Digital Notepad - for *writing* down concepts & ideas

Sketch - For visualizing ideas roughly

Adobe Illustrator - also as a digital notepad for sketching ideas that need more detail

Adobe Photoshop - for prototyping concepts, copy and pasting random images together or experimenting with visuals, etc.
