#sql examples for practice

Explore tagged Tumblr posts


Optimizing SQL Server Autogrowth Settings for Enhanced Performance

Introduction In the realm of database management, ensuring the smooth scaling of your SQL Server databases is paramount. Consequently, configuring autogrowth settings judiciously emerges as a critical task. This article delves into practical T-SQL code examples and applications to guide you through optimizing autogrowth settings in SQL Server, aiming to boost database performance and…


#database management tips#Database performance optimization#SQL Server autogrowth settings#SQL Server best practices#T-SQL Code Examples

0 notes


some Shark guys biology musings from the span of the past year or so. Don't ask me what their hands are shaped like I'm basically re-inventing it every time I draw it right now

The gills have closed up forming a buccal pouch filled with blood vessels, now used for thermoregulation rather than gas exchange. They might pant out of their mouth when particularly hot/out of breath, but because sharks will also gape their mouth to communicate stress/aggression they tend to avoid it whenever possible. Their faces don't have a lot of muscles to form detailed expressions; the extent of facial expressions for sharks tends to be seen through the openness of the eyes and mouth.

Here's a rough thing of the evolution of terrestrial sharks:

The bulk of modern terrestrial sharks can be found on the eastern half of the Big Continent (I'm not naming it bc what if SQL names their landmasses officially), where crocodilians have gone extinct. The other lineages of salamander sharks can also be found along the many islands stretching across the ocean off to the southeast of the continent as well. None of them are in traditional cephaling territory but lmao

Crocodile sharks are. Well. They're a group of larger freshwater sharks that frequently occupy a crocodile-like niche. Smaller species can be confused with salamander sharks, but they're much more resistant to desiccation and can wander away from water to look for food and new territory. This is where true endothermy begins cropping up in terrestrial sharks; the largest extant species don't bother with it, but several smaller guys seem to have developed it independently of each other.

The Haye are an iconic megafaunal predator of the so-called Mollusk Era. Lots of mythologies around them I'm sure. It used to be believed that they were the Shark folks' closest living relative, but modern research has found that to be untrue. They're endothermic and can be found even in fairly cold regions, but usually don't stick around for the winter in polar regions.

Mud Hounds are a diverse group of mid-sized, endothermic terrestrial sharks. Pictured is a beloved little digging guy usually known as dorghai. Many species rely on their keen sense of smell and electroreception to track their prey; they get their name from the common behavior of sticking their nose into wet mud to feel for the electric signatures of smaller burrowing prey. Even species that don't make active use of their electroreception often retain the ability. Seems they just haven't gotten around to losing it quite yet, even though electroreception isn't very effective in air. The Shark folk are no exception; some people report being able to "feel" active thunderstorms or faulty electronics. With practice they can actually do fuck all with it, but for most people it's just an occasional vague annoyance.

I didn't draw other examples of the group Shark folk are in, dubbed the walking hounds, because they're the only living member of the group. The reason for the group developing bipedalism isn't known right now. Also, I tend to draw Sharks standing fairly upright, but the most natural standing posture for them is more raptorial. Upright postures are associated with alertness/nervousness, or temporarily trying to take up less space in crowded areas. It becoming a default/preferred posture is seen commonly in "city" sharks used to living in high density areas with smaller species.

Yeah or an anxious city shark. Lol

#Squid 2 the evolution of the squid#splat bio#Conarts#well. i guess i would. tag.#splatoon ocs#LOL....#long post#ok the posture thing isn't completely right upright default postures are also seen in sailors. crowded/small areas = want to take less spac

121 notes


The Great Data Cleanup: A Database Design Adventure

As a budding database engineer, I found myself in a situation that was both daunting and hilarious. Our company's application was running slower than a turtle in peanut butter, and no one could figure out why. That is, until I decided to take a closer look at the database design.

It all began when my boss, a stern woman with a penchant for dramatic entrances, stormed into my cubicle. "Listen up, rookie," she barked (despite the fact that I was quite experienced by this point). "The marketing team is in an uproar over the app's performance. Think you can sort this mess out?"

Challenge accepted! I cracked my knuckles, took a deep breath, and dove headfirst into the database, ready to untangle the digital spaghetti.

The schema was a sight to behold—if you were a fan of chaos, that is. Tables were crammed with redundant data, and the relationships between them made as much sense as a platypus in a tuxedo.

"Okay," I told myself, "time to unleash the power of database normalization."

First, I identified the main entities—clients, transactions, products, and so forth. Then, I dissected each entity into its basic components, ruthlessly eliminating any unnecessary duplication.

For example, the original "clients" table was a hot mess. It had fields for the client's name, address, phone number, and email, but it also inexplicably included fields for the account manager's name and contact information. Data redundancy alert!

So, I created a new "account_managers" table to store all that information, and linked the clients back to their account managers using a foreign key. Boom! Normalized.

Next, I tackled the transactions table. It was a jumble of product details, shipping info, and payment data. I split it into three distinct tables—one for the transaction header, one for the line items, and one for the shipping and payment details.

"This is starting to look promising," I thought, giving myself an imaginary high-five.

After several more rounds of table splitting and relationship building, the database was looking sleek, streamlined, and ready for action. I couldn't wait to see the results.

Sure enough, the next day, when the marketing team tested the app, it was like night and day. The pages loaded in a flash, and the users were practically singing my praises (okay, maybe not singing, but definitely less cranky).

My boss, who was not one for effusive praise, gave me a rare smile and said, "Good job, rookie. I knew you had it in you."

From that day forward, I became the go-to person for all things database-related. And you know what? I actually enjoyed the challenge. It's like solving a complex puzzle, but with a lot more coffee and SQL.

So, if you ever find yourself dealing with a sluggish app and a tangled database, don't panic. Grab a strong cup of coffee, roll up your sleeves, and dive into the normalization process. Trust me, your users (and your boss) will be eternally grateful.

Step-by-Step Guide to Database Normalization

Here's the step-by-step process I used to normalize the database and resolve the performance issues. I used an online database design tool to visualize this design. Here's what I did:

Original Clients Table:

ClientID int

ClientName varchar

ClientAddress varchar

ClientPhone varchar

ClientEmail varchar

AccountManagerName varchar

AccountManagerPhone varchar

Step 1: Separate the Account Managers information into a new table:

AccountManagers Table:

AccountManagerID int

AccountManagerName varchar

AccountManagerPhone varchar

Updated Clients Table:

ClientID int

ClientName varchar

ClientAddress varchar

ClientPhone varchar

ClientEmail varchar

AccountManagerID int

Step 2: Separate the Transactions information into a new table:

Transactions Table:

TransactionID int

ClientID int

TransactionDate date

ShippingAddress varchar

ShippingPhone varchar

PaymentMethod varchar

PaymentDetails varchar

Step 3: Separate the Transaction Line Items into a new table:

TransactionLineItems Table:

LineItemID int

TransactionID int

ProductID int

Quantity int

UnitPrice decimal

Step 4: Create a separate table for Products:

Products Table:

ProductID int

ProductName varchar

ProductDescription varchar

UnitPrice decimal

After these normalization steps, the database structure was much cleaner and more efficient. Here's how the relationships between the tables would look:

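AccountManagers --< Clients

Clients --< Transactions --< TransactionLineItems

Products --< TransactionLineItems

(Read "A --< B" as "one A has many B": one account manager has many clients, one client has many transactions, and each line item belongs to exactly one transaction and one product.)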

By separating the data into these normalized tables, we eliminated data redundancy, improved data integrity, and made the database more scalable. In our case the application also became noticeably faster: rows are narrower, and each fact is stored and updated in exactly one place. Queries now join tables through the foreign keys, so those columns should be indexed to keep lookups cheap.
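The normalized tables above can be sketched in runnable form. This is only an illustration, written in Python with the standard-library sqlite3 module so it runs anywhere: the column types are simplified to SQLite's, and the NOT NULL choices, the helper function names, and the sample rows are assumptions rather than part of the original design.

```python
import sqlite3

# Schema mirroring the tables listed above; types simplified for SQLite.
SCHEMA = """
CREATE TABLE AccountManagers (
    AccountManagerID    INTEGER PRIMARY KEY,
    AccountManagerName  TEXT NOT NULL,
    AccountManagerPhone TEXT
);
CREATE TABLE Clients (
    ClientID         INTEGER PRIMARY KEY,
    ClientName       TEXT NOT NULL,
    ClientAddress    TEXT,
    ClientPhone      TEXT,
    ClientEmail      TEXT,
    AccountManagerID INTEGER REFERENCES AccountManagers(AccountManagerID)
);
CREATE TABLE Products (
    ProductID          INTEGER PRIMARY KEY,
    ProductName        TEXT NOT NULL,
    ProductDescription TEXT,
    UnitPrice          NUMERIC
);
CREATE TABLE Transactions (
    TransactionID   INTEGER PRIMARY KEY,
    ClientID        INTEGER NOT NULL REFERENCES Clients(ClientID),
    TransactionDate TEXT,
    ShippingAddress TEXT,
    ShippingPhone   TEXT,
    PaymentMethod   TEXT,
    PaymentDetails  TEXT
);
CREATE TABLE TransactionLineItems (
    LineItemID    INTEGER PRIMARY KEY,
    TransactionID INTEGER NOT NULL REFERENCES Transactions(TransactionID),
    ProductID     INTEGER NOT NULL REFERENCES Products(ProductID),
    Quantity      INTEGER NOT NULL,
    UnitPrice     NUMERIC NOT NULL
);
"""


def build_database():
    """Create an in-memory database with foreign keys enforced."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves this off by default
    conn.executescript(SCHEMA)
    return conn


def seed(conn):
    """Insert made-up sample rows: one account manager shared by two clients."""
    conn.execute("INSERT INTO AccountManagers VALUES (1, 'Dana', '555-0100')")
    conn.executemany(
        "INSERT INTO Clients VALUES (?, ?, ?, ?, ?, ?)",
        [
            (1, "Acme", "1 Main St", "555-0101", "a@example.com", 1),
            (2, "Globex", "2 Side St", "555-0102", "g@example.com", 1),
        ],
    )
    conn.commit()


def clients_with_managers(conn):
    """Join each client to its account manager through the foreign key."""
    return conn.execute(
        """
        SELECT c.ClientName, m.AccountManagerName
        FROM Clients AS c
        JOIN AccountManagers AS m ON m.AccountManagerID = c.AccountManagerID
        ORDER BY c.ClientID
        """
    ).fetchall()
```

The account manager's name and phone now live in a single row, so changing them is one UPDATE instead of one per client, and the foreign key rejects a client that points at a manager who does not exist.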

Conclusion

After a whirlwind week of wrestling with spreadsheets and SQL queries, the database normalization project was complete. I leaned back, took a deep breath, and admired my work.

The previously chaotic mess of data had been transformed into a sleek, efficient database structure. Redundant information was a thing of the past, and the performance was snappy.

I couldn't wait to show my boss the results. As I walked into her office, she looked up with a hopeful glint in her eye.

"Well, rookie," she began, "any progress on that database issue?"

I grinned. "Absolutely. Let me show you."

I pulled up the new database schema on her screen, walking her through each step of the normalization process. Her eyes widened with every explanation.

"Incredible! I never realized database design could be so... detailed," she exclaimed.

When I finished, she leaned back, a satisfied smile spreading across her face.

"Fantastic job, rookie. I knew you were the right person for this." She paused, then added, "I think this calls for a celebratory lunch. My treat. What do you say?"

I didn't need to be asked twice. As we headed out, a wave of pride and accomplishment washed over me. It had been hard work, but the payoff was worth it. Not only had I solved a critical issue for the business, but I'd also cemented my reputation as the go-to database guru.

From that day on, whenever performance issues or data management challenges cropped up, my boss would come knocking. And you know what? I didn't mind one bit. It was the perfect opportunity to flex my normalization muscles and keep that database running smoothly.

So, if you ever find yourself in a similar situation—a sluggish app, a tangled database, and a boss breathing down your neck—remember: normalization is your ally. Embrace the challenge, dive into the data, and watch your application transform into a lean, mean, performance-boosting machine.

And don't forget to ask your boss out for lunch. You've earned it!

8 notes


Protect Your Laravel APIs: Common Vulnerabilities and Fixes

API Vulnerabilities in Laravel: What You Need to Know

As web applications evolve, securing APIs becomes a critical aspect of overall cybersecurity. Laravel, being one of the most popular PHP frameworks, provides many features to help developers create robust APIs. However, like any software, APIs in Laravel are susceptible to certain vulnerabilities that can leave your system open to attack.

In this blog post, we’ll explore common API vulnerabilities in Laravel and how you can address them, using practical coding examples. Additionally, we’ll introduce our free Website Security Checker tool, which can help you assess and protect your web applications.

Common API Vulnerabilities in Laravel

Laravel APIs, like any other API, can suffer from common security vulnerabilities if not properly secured. Some of these vulnerabilities include:

>> SQL Injection

SQL injection attacks occur when an attacker is able to manipulate an SQL query, for example to read, modify, or delete data the application never meant to expose. If a Laravel API builds queries by concatenating unsanitized user input into the SQL string, this type of vulnerability can be exploited.

Example Vulnerability:

$user = DB::select("SELECT * FROM users WHERE username = '" . $request->input('username') . "'");

Solution: Laravel’s query builder and Eloquent ORM pass values to the database through PDO parameter binding, so user input is never interpreted as SQL. Use them instead of concatenating strings:

$user = DB::table('users')->where('username', $request->input('username'))->first();
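The same contrast can be reproduced outside Laravel. The sketch below uses Python's standard-library sqlite3 module rather than PHP, purely so it can be run as-is; the table, the sample rows, the function names, and the payload are all made up for illustration.

```python
import sqlite3


def make_users_db():
    """Create a throwaway in-memory users table with two sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (username TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_unsafe(conn, username):
    """Vulnerable: the input is spliced directly into the SQL text."""
    query = "SELECT username FROM users WHERE username = '" + username + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    """Safe: the input travels as a bound parameter, never as SQL."""
    return conn.execute(
        "SELECT username FROM users WHERE username = ?", (username,)
    ).fetchall()


# A classic payload: closes the quote and adds a condition that is always true.
PAYLOAD = "nobody' OR '1'='1"
```

With the payload, the concatenated query matches every row in the table, while the bound-parameter query looks for a user literally named `nobody' OR '1'='1` and finds none.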

>> Cross-Site Scripting (XSS)

XSS attacks happen when an attacker injects malicious scripts into web pages, which can then be executed in the browser of a user who views the page.

Example Vulnerability:

return response()->json(['message' => $request->input('message')]);

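Solution: Validate user input and escape dynamic content for the context in which it is displayed. Blade’s {{ }} syntax escapes output in views automatically; when a JSON value will later be inserted into a page as HTML, Laravel’s e() helper applies the same HTML escaping: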

return response()->json(['message' => e($request->input('message'))]);
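To see what HTML escaping does to a malicious message, here is a small sketch in Python using the standard-library html module. The `render_message` function is hypothetical and stands in for whatever code finally places the value into a page.

```python
import html


def render_message(message):
    """Hypothetical renderer: escape the value at the point it enters HTML."""
    return "<p>" + html.escape(message) + "</p>"
```

Passing `<script>alert(1)</script>` through `render_message` turns the angle brackets into `&lt;` and `&gt;`, so the browser shows the text instead of running it.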

>> Improper Authentication and Authorization

Without proper authentication, unauthorized users may gain access to sensitive data. Similarly, improper authorization can allow unauthorized users to perform actions they shouldn't be able to.

Example Vulnerability:

Route::post('update-profile', 'UserController@updateProfile');

Solution: Always use Laravel’s built-in authentication middleware to protect sensitive routes:

Route::middleware('auth:api')->post('update-profile', 'UserController@updateProfile');

>> Insecure API Endpoints

Exposing too many endpoints or sensitive data can create a security risk. It’s important to limit access to API routes and use proper HTTP methods for each action.

Example Vulnerability:

Route::get('user-details', 'UserController@getUserDetails');

Solution: Restrict sensitive routes to authenticated users and use proper HTTP methods like GET, POST, PUT, and DELETE:

Route::middleware('auth:api')->get('user-details', 'UserController@getUserDetails');

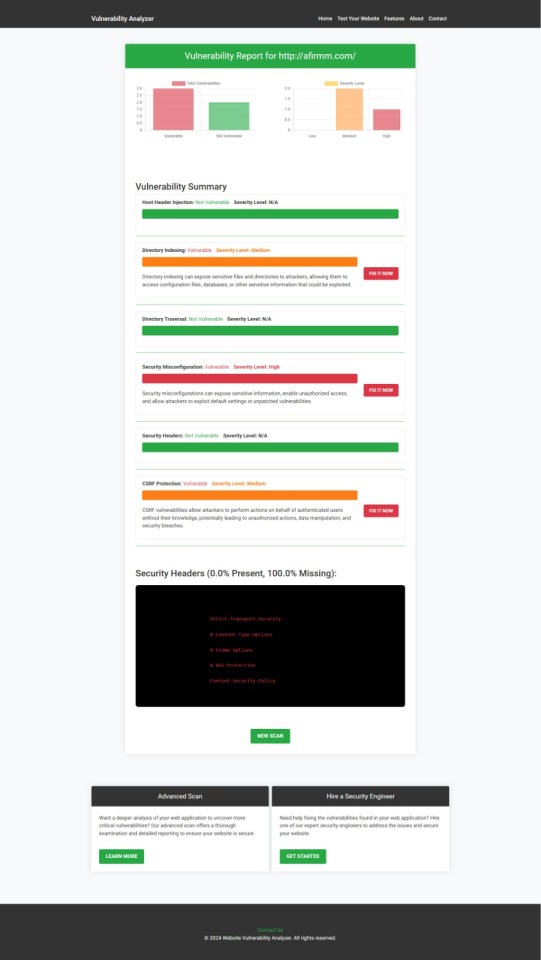

How to Use Our Free Website Security Checker Tool

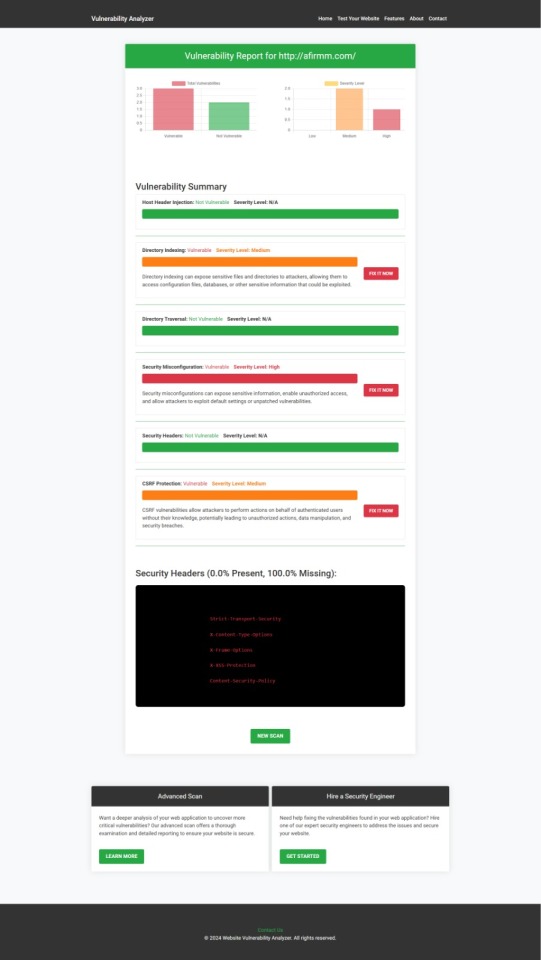

If you're unsure about the security posture of your Laravel API or any other web application, we offer a free Website Security Checker tool. This tool allows you to perform an automatic security scan on your website to detect vulnerabilities, including API security flaws.

Step 1: Visit our free Website Security Checker at https://free.pentesttesting.com.

Step 2: Enter your website URL and click "Start Test".

Step 3: Review the comprehensive vulnerability assessment report to identify areas that need attention.

Screenshot of the free tools webpage where you can access security assessment tools.

Example Report: Vulnerability Assessment

Once the scan is completed, you'll receive a detailed report that highlights any vulnerabilities, such as SQL injection risks, XSS vulnerabilities, and issues with authentication. This will help you take immediate action to secure your API endpoints.

An example of a vulnerability assessment report generated with our free tool provides insights into possible vulnerabilities.

Conclusion: Strengthen Your API Security Today

API vulnerabilities in Laravel are common, but with the right precautions and coding practices, you can protect your web application. Make sure to always sanitize user input, implement strong authentication mechanisms, and use proper route protection. Additionally, use our Website Security Checker to scan for vulnerabilities and help keep your Laravel APIs secure.

For more information on securing your Laravel applications, try our Website Security Checker.

#cyber security#cybersecurity#data security#pentesting#security#the security breach show#laravel#php#api

2 notes


Studying Data Analytics (SQL)

At present I’m working through the 2nd Edition of Practical SQL by Anthony DeBarros. I plan to obtain a graduate certificate in Healthcare Data Analytics, so I’m teaching myself the basics to help ease the burden of working and going to school.

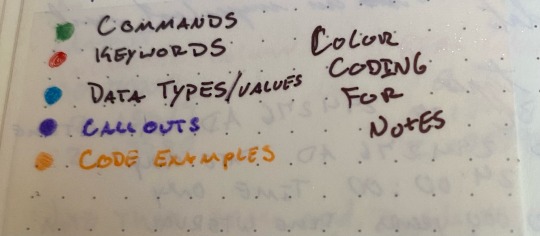

Here’s how I study.

First, I always dedicate a notebook (or series of them) to a learning goal. I like Leuchtturm notebooks as they are fountain pen friendly, come in plenty of colors (to distinguish from my other notebooks), and have a built-in table of contents for organization.

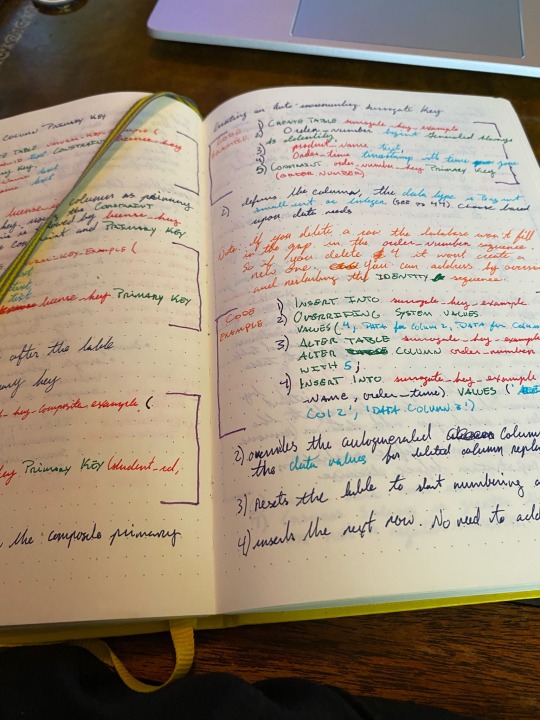

SQL, Python, R, etc. are programming languages used to tell their respective software what to do with data that has been input into the database. To oversimplify, you are learning to speak computer. So my process for learning is to break the text down into scenarios, e.g. if I want to do X, my code needs to look like Y.

Along with code examples I include any caveats or alternate use cases. This repetition helps me learn the syntax and ingrain it into my memory. Obviously I color code my notes so I can know at a glance what each element of the code is.

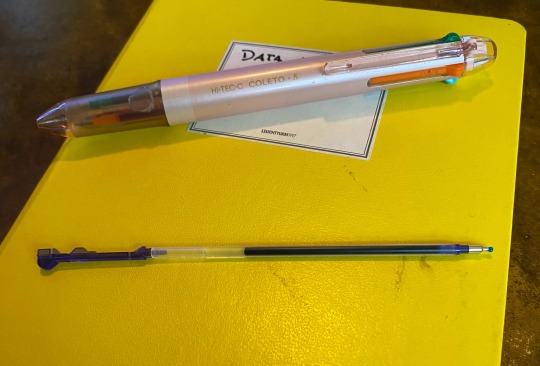

My multi-pen, a Hi-Tec-C Coleto, has been invaluable: I don’t have to jump between 5 different pens, I just click between colors as needed.

That said, as the Coleto will hold 5 different colors, the refills are tiny and thus need to be replaced more often, which can be annoying if I run out mid study session.

The end game is to take these notes and build a Data Grimoire where I can quickly reference code and how to use it, as well as build checklists for things like data cleaning, setting up constraints, and thinking ahead to future needs (e.g. int vs bigint).
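As one possible grimoire entry for the int vs bigint question, here is a small Python sketch of the arithmetic. It assumes PostgreSQL's sizes (a 4-byte integer and an 8-byte bigint); the function names are just illustrative.

```python
def signed_range(num_bytes):
    """Return (min, max) for a signed two's-complement integer of this size."""
    bits = num_bytes * 8
    return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1


# Assumed sizes: integer = 4 bytes, bigint = 8 bytes.
INT_MIN, INT_MAX = signed_range(4)
BIGINT_MIN, BIGINT_MAX = signed_range(8)


def fits_int(value):
    """True if the value can be stored in a 4-byte integer column."""
    return INT_MIN <= value <= INT_MAX
```

A 4-byte integer tops out at 2,147,483,647, so a value like 3 billion (a row counter on a very busy table, say) already needs bigint.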

#black dark academia#dark academia#noir library#poc dark academia#studying#studyblr#I’m aware GitHub exists im just dramatic and like to handwrite things#data analytics#datascience#relationaldatabases#multipens#Hi Tec-C Coleto#leuchtturm

3 notes


Maximizing Business Insights with Power BI: A Comprehensive Guide for Small Businesses

Small businesses often face the challenge of making data-driven decisions without the resources of larger enterprises. Power BI, Microsoft's powerful analytics tool, can transform how small businesses use data, turning raw numbers into actionable insights. Here's a comprehensive guide to maximizing business insights with Power BI.

Introduction to Power BI

Power BI is a business analytics service by Microsoft that provides interactive visualizations and business intelligence capabilities. With an interface simple enough for end users to create their own reports and dashboards, it connects to a wide range of data sources.

Benefits for Small Businesses

1. User-Friendly Interface: Power BI's drag-and-drop functionality makes it accessible for users without technical expertise.

2. Cost-Effective: Power BI offers a free version with substantial features and a scalable pricing model for additional needs.

3. Real-Time Data: Businesses can monitor their operations with real-time data, enabling quicker and more informed decision-making.

Setting Up Power BI

1. Data Sources: Power BI can connect to various data sources such as Excel, SQL databases, and cloud services like Azure.

2. Data Modeling: Use Power BI to clean and transform data, creating a cohesive data model that forms the foundation of your reports.

3. Visualizations: Choose from a wide array of visualizations to represent your data. Customize these visuals to highlight the most critical insights.

Customizing Dashboards

1. Tailor to Needs: Customize dashboards to reflect the unique needs of your business, focusing on key performance indicators (KPIs) relevant to your goals.

2. Interactive Reports: Create interactive reports that allow users to explore data more deeply, providing a clearer understanding of underlying trends.

Real-World Examples

Several small businesses have successfully implemented Power BI to gain a competitive edge:

1. Retail: A small retail store used Power BI to track sales trends, optimize inventory, and identify peak shopping times.

2. Finance: A small financial advisory firm employed Power BI to analyze client portfolios, improving investment strategies and client satisfaction.

Integration with Existing Tools

Power BI seamlessly integrates with other Microsoft products such as Excel and Azure, as well as third-party applications, ensuring a smooth workflow and enhanced productivity.

Best Practices

1. Data Accuracy: Ensure data accuracy by regularly updating your data sources.

2. Training: Invest in training your team to use Power BI effectively.

3. Security: Implement robust security measures to protect sensitive data.

Future Trends

Power BI continues to evolve, with future updates likely to include more advanced AI features and enhanced data processing capabilities, keeping businesses at the forefront of technology.

Conclusion

Power BI offers small businesses a powerful tool to transform their data into meaningful insights. By adopting Power BI, businesses can improve decision-making, enhance operational efficiency, and gain a competitive advantage. Partnering with Vbeyond Digital ensures a smooth and successful implementation, maximizing the benefits of Power BI for your business.

2 notes


01/11/2023 || Day 104

Personal Chatter

I love that yesterday I was pumped and ready for November and preparing to be productive, and yet today I woke up feeling bad so productivity was low. That's ok, tmr will be better! I also had another ASL class today and the teacher brought us chocolate (even better, we already knew the sign for chocolate, so we could use it in a sentence today). We've learned about 500 words (do we remember them all? lol, nope), and it's been such a fun class, so I already signed up for the next unit of the class for January.

------------------------------------------------------------------------------

Programming

Even though I didn't feel great today, I did get some stuff done!

LeetCode

Decided to start off with an easy LeetCode question, so I went with Number of Good Pairs and 5 mins later I was done. I previously did the Top Interview 150 questions, but I want more practice with Arrays/Strings, so I'm just doing those types now. Will move onto sorting and binary search trees and linked lists later (once I feel smart again).

Hobby Tracker - Log # 1

Ok, I decided what my full-stack project will be. I want to create a web app in which a user can keep track of different media they want to consume: books, movies, TV shows, and video games. Essentially, I want something that will show me how many things I want to get to (i.e. how many books I want to read), and what those things specifically are, and then show me how much of each media type I've completed. For example, I have a spreadsheet of video games I own and I have it show me the % of completed games. I want that, but for all books, movies, shows, and games I enter. Not only that, but for certain media, I want to be able to get a random title from my list. Say I have 20 books I want to read but don't know which to pick. Well, I can ask it to choose a book for me randomly, and I'll read it. That's the basic idea, anyways. Today I spent some time setting up a Trello board to keep track of features I want and functionality I need, and I also started designing the mobile version of the app using Figma. It'll probably be a while before I start coding, but the idea is to have the frontend be built with React, backend with Node.js, and database be SQL. I'll also be using APIs to get the media info, such as title, genre, etc. This will be the biggest project in terms of scope I've worked on, but hopefully I'll learn a lot.
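The two core features (completion percentage per media type, and a random pick from the backlog) could be sketched roughly like this. It's in Python just to pin down the logic, not the planned React/Node stack, and the `MediaItem` shape and function names are assumptions.

```python
import random
from dataclasses import dataclass


@dataclass
class MediaItem:
    title: str
    kind: str  # "book", "movie", "show", or "game"
    completed: bool = False


def completion_percent(items, kind):
    """Percentage of items of one media type that are marked completed."""
    of_kind = [item for item in items if item.kind == kind]
    if not of_kind:
        return 0.0  # nothing tracked yet, so nothing completed
    done = sum(1 for item in of_kind if item.completed)
    return 100.0 * done / len(of_kind)


def pick_random(items, kind, rng=random):
    """Pick a random unfinished item of the given type, or None if there is none."""
    backlog = [item for item in items if item.kind == kind and not item.completed]
    return rng.choice(backlog) if backlog else None
```

Filtering out completed items before choosing means the random pick never suggests something already finished.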

6 notes


A TURN FROM B.Com OR BBA GRADUATE TO DATA ANALYST

The business world is changing, and so are the opportunities within it. If you've finished your studies in Bachelor of Commerce (B.Com) or Bachelor of Business Administration (BBA), you might be wondering how to switch into the field of data analysis. Data analysts play an important role these days, finding useful information in data to help with decisions. In this blog post, we'll look at the steps you can take to smoothly change from a B.Com or BBA background to the exciting world of data analysis.

What You Already Know:

Even though it might feel like a big change, your studies in B.Com or BBA have given you useful skills. Your understanding of how businesses work, finances, and how organisations operate is a great base to start from.

Step 1: Building Strong Data Skills:

To make this change, you need to build a strong foundation in data skills. Begin by getting to know basic statistics, tools to show data visually, and programs to work with spreadsheets. These basic skills are like building blocks for learning about data.

I would like to suggest the best online platform where you can learn these skills. Lejhro bootcamp has courses that are easy to follow and won't cost too much.

Step 2: Learning Important Tools:

Data analysts use different tools to work with data. Learning how to use tools like Excel, SQL, and Python is really important. Excel is good for simple stuff, SQL helps you talk to databases, and Python is like a super tool that can do lots of things.

You can learn how to use these tools online too. Online bootcamp courses can help you get good at using them.

Step 3: Exploring Data Tricks:

Understanding how to work with data is the core of being a data analyst. Things like looking closely at data, testing ideas, figuring out relationships, and making models are all part of it. Don't worry, these sound fancy, but they're just different ways to use data.

Step 4: Making a Strong Collection:

A collection of things you've done, like projects, is called a portfolio. You can show this to others to prove what you can do. As you move from B.Com or BBA to data analysis, use your business knowledge to pick projects. For example, you could study how sales change, what customers do, or financial data.

Write down everything you do for these projects, like the problem, the steps you took, what tools you used, and what you found out. This collection will show others what you're capable of.

Step 5: Meeting People and Learning More:

Join online groups and communities where people talk about data analysis. This is a great way to meet other learners, professionals, and experts in the field. You can ask questions and talk about what you're learning.

LinkedIn is also a good place to meet people. Make a strong profile that shows your journey and what you can do. Follow data analysts and companies related to what you're interested in to stay up to date.

Step 6: Gaining Experience:

While you learn, it's also good to get some real experience. Look for internships, small jobs, or freelance work that lets you use your skills with real data. Even if the job isn't all about data, any experience with data is helpful.

Step 7: Updating Your Resume:

When you're ready to apply for data jobs, change your resume to show your journey. Talk about your B.Com or BBA studies, the skills you learned, the courses you took, your projects, and any experience you got. Explain how all of this makes you a great fit for a data job.

Using Lejhro Bootcamp:

When you're thinking about becoming a data analyst, think about using Lejhro Bootcamp. They have a special course just for people like you, who are switching from different fields. The Bootcamp teaches you practical things, has teachers who know what they're talking about, and helps you find a job later.

Moving from B.Com or BBA to a data analyst might seem big, but it's totally doable. With practice, learning, and real work, you can make the switch. Your knowledge about business mixed with data skills makes you a special candidate. So, get ready to learn, practice, and show the world what you can do in the world of data analysis!


3 notes


How To Get An Online Internship In the IT Sector (Skills And Tips)

Internships provide invaluable opportunities to gain practical skills, build professional networks, and get your foot in the door with top tech companies.

With remote tech internships exploding in IT, online internships are now more accessible than ever. Whether you are a college student or a career changer seeking hands-on IT experience, virtual internships allow you to work from anywhere.

However, competition can be fierce, and simply applying is often insufficient. Follow this guide to develop the right technical abilities, showcase your potential effectively, and maximize your chances of securing a remote tech internship.

Understand In-Demand IT Skills

The first step is gaining a solid grasp of the most in-demand technical and soft skills. While specific requirements vary by company and role, these competencies form a strong foundation:

Technical Skills:

Proficiency in programming languages like Python, JavaScript, Java, and C++

Experience with front-end frameworks like React, Angular, and Vue.js

Back-end development skills - APIs, microservices, SQL databases

Cloud platforms such as AWS, Azure, Google Cloud

IT infrastructure skills - servers, networks, security

Data science abilities like SQL, R, Python

Web development and design

Mobile app development - Android, iOS, hybrid

Soft Skills:

Communication and collaboration

Analytical thinking and problem-solving

Leadership and teamwork

Creativity and innovation

Fast learning ability

Detail and deadline-oriented

Flexibility and adaptability

Obtain Relevant Credentials

While hands-on skills hold more weight, relevant academic credentials and professional IT certifications can strengthen your profile. Consider pursuing:

Bachelor’s degree in Computer Science, IT, or related engineering fields

Internship-specific courses teaching technical and soft skills

Certificates like CompTIA, AWS, Cisco, Microsoft, Google, etc.

Accredited boot camp programs focusing on applied skills

MOOCs to build expertise in trending technologies like AI/ML, cybersecurity

Open source contributions on GitHub to demonstrate coding skills

The right credentials display a work ethic and supplement practical abilities gained through projects.

Build An Impressive Project Portfolio

Nothing showcases skills better than real-world examples of your work. Develop a portfolio of strong coding, design, and analytical projects related to your target internship field.

Mobile apps - publish on app stores or use GitHub project pages

Websites - deploy online via hosting services

Data science - showcase Jupyter notebooks, visualizations

Open source code - contribute to public projects on GitHub

Technical writing - blog posts explaining key concepts

Automation and scripts - record demo videos

Choose projects demonstrating both breadth and depth. Align them to skills required for your desired internship roles.

Master Technical Interview Skills

IT internship interviews often include challenging technical questions and assessments. Be prepared to:

Explain your code and projects clearly. Review them beforehand.

Discuss concepts related to key technologies on your resume. Ramp up on fundamentals.

Solve coding challenges focused on algorithms, data structures, etc. Practice on online judges like LeetCode.

Address system design and analytical problems. Read case interview guides.

Show communication and collaboration skills through pair programming tests.

Ask smart, well-researched questions about the company’s tech stack, projects, etc.

Schedule dedicated time for technical interview practice daily. Learn to think aloud while coding and get feedback from peers.

Show Passion and Curiosity

Beyond raw skills, demonstrating genuine passion and curiosity for technology goes a long way.

Take online courses and certifications beyond the college curriculum

Build side projects and engage in hackathons for self-learning

Stay updated on industry news, trends, and innovations

Be active on forums like StackOverflow to exchange knowledge

Attend tech events and conferences

Participate in groups like coding clubs and prior internship programs

Follow tech leaders on social media

Listen to tech podcasts while commuting

Show interest in the company’s mission, products, and culture

This passion shines through in interviews and applications, distinguishing you from other candidates.

Promote Your Personal Brand

In the digital age, your online presence and personal brand are make-or-break. Craft a strong brand image across:

LinkedIn profile - showcase achievements, skills, recommendations

GitHub - display coding activity and quality through clean repositories

Portfolio website - highlight projects and share valuable content

Social media - post career updates and useful insights, but avoid oversharing

Blogs/videos - demonstrate communication abilities and thought leadership

Online communities - actively engage and build relationships

Ensure your profiles are professional and consistent. Let your technical abilities and potential speak for themselves.

Optimize Your Internship Applications

Applying isn’t enough. You must optimize your internship applications to get a reply:

Ensure you apply to openings that strongly match your profile

Customize your resume and cover letters using keywords in the job description

Speak to skills gained from coursework, online learning, and personal projects

Quantify achievements rather than just listing responsibilities

Emphasize passion for technology and fast learning abilities

Ask insightful questions that show business understanding

Follow up respectfully if you don’t hear back in 1-2 weeks

Show interest in full-time conversion early and often

Apply early since competitive openings close quickly

Leverage referrals from your network if possible

This is how you apply meaningfully. If you want a good internship, focus on the quality of your applications. The hard work will pay off.

Succeed in Your Remote Internship

The hard work pays off when you secure that long-awaited internship! Continue standing out through the actual internship by:

Overcommunicating in remote settings - proactively collaborate

Asking smart questions and owning your learning

Finding mentors and building connections remotely

Absorbing constructive criticism with maturity

Shipping quality work on or before deadlines

Clarifying expectations frequently

Going above and beyond prescribed responsibilities sometimes

Getting regular feedback and asking for more work

Leaving with letters of recommendation and job referrals

When you follow these tips, you are well placed to succeed in your remote internship. Remember, soft skills can take you far in a company, sometimes further than core skills alone.

Conclusion

With careful preparation, tenacity, and a passion for technology, you will be able to land internship jobs in the USA that suit your needs in the thriving IT sector.

Use this guide to build the right skills, create an impressive personal brand, ace the applications, and excel in your internship.

Additionally, you can browse some good job portals. For instance, GradSiren can help you get remote tech internships. The portal has the best internship jobs in India and the USA you’ll find. The investment will pay dividends throughout your career in this digital age. Wishing you the best of luck! Let me know in the comments about your internship hunt journey.

#itjobs#internship opportunities#internships#interns#entryleveljobs#gradsiren#opportunities#jobsearch#careeropportunities#jobseekers#ineffable interns#jobs#employment#career


How To Get An Online Internship In the IT Sector (Skills And Tips)

Internships provide invaluable opportunities to gain practical skills, build professional networks, and get your foot in the door with top tech companies.

With remote tech internships exploding in IT, online internships are now more accessible than ever. Whether you are a college student or a career changer seeking hands-on IT experience, virtual internships allow you to work from anywhere.

However, competition can be fierce, and simply applying is often insufficient. Follow this comprehensive guide to develop the right technical abilities.

After reading this, you can effectively showcase your potential, and maximize your chances of securing a remote tech internship.

Understand In-Demand IT Skills

The first step is gaining a solid grasp of the most in-demand technical and soft skills. While specific requirements vary by company and role, these competencies form a strong foundation:

Technical Skills:

>> Proficiency in programming languages like Python, JavaScript, Java, and C++ >> Experience with front-end frameworks like React, Angular, and Vue.js >> Back-end development skills - APIs, microservices, SQL databases >> Cloud platforms such as AWS, Azure, Google Cloud >> IT infrastructure skills - servers, networks, security >> Data science abilities like SQL, R, Python >> Web development and design >> Mobile app development - Android, iOS, hybrid

Soft Skills:

>> Communication and collaboration >> Analytical thinking and problem-solving >> Leadership and teamwork >> Creativity and innovation >> Fast learning ability >> Detail and deadline-oriented >> Flexibility and adaptability

Obtain Relevant Credentials

While hands-on skills hold more weight, relevant academic credentials and professional IT certifications can strengthen your profile. Consider pursuing:

>> Bachelor’s degree in Computer Science, IT, or related engineering fields. >> Internship-specific courses teaching technical and soft skills. >> Certificates like CompTIA, AWS, Cisco, Microsoft, Google, etc. >> Accredited boot camp programs focusing on applied skills. >> MOOCs to build expertise in trending technologies like AI/ML, cybersecurity. >> Open source contributions on GitHub to demonstrate coding skills.

The right credentials display a work ethic and supplement practical abilities gained through projects.

Build An Impressive Project Portfolio

Nothing showcases skills better than real-world examples of your work. Develop a portfolio of strong coding, design, and analytical projects related to your target internship field.

>> Mobile apps - publish on app stores or use GitHub project pages >> Websites - deploy online via hosting services >> Data science - showcase Jupyter notebooks, visualizations >> Open source code - contribute to public projects on GitHub >> Technical writing - blog posts explaining key concepts >> Automation and scripts - record demo videos

Choose projects demonstrating both breadth and depth. Align them to skills required for your desired internship roles.

Master Technical Interview Skills

IT internship interviews often include challenging technical questions and assessments. Be prepared to:

>> Explain your code and projects clearly. Review them beforehand. >> Discuss concepts related to key technologies on your resume. Ramp up on fundamentals. >> Solve coding challenges focused on algorithms, data structures, etc. Practice online judges like LeetCode. >> Address system design and analytical problems. Read case interview guides. >> Show communication and collaboration skills through pair programming tests. >> Ask smart, well-researched questions about the company’s tech stack, projects, etc.

Schedule dedicated time for technical interview practice daily. Learn to think aloud while coding and get feedback from peers.

Show Passion and Curiosity

Beyond raw skills, demonstrating genuine passion and curiosity for technology goes a long way.

>> Take online courses and certifications beyond the college curriculum >> Build side projects and engage in hackathons for self-learning >> Stay updated on industry news, trends, and innovations >> Be active on forums like StackOverflow to exchange knowledge >> Attend tech events and conferences >> Participate in groups like coding clubs and prior internship programs >> Follow tech leaders on social media >> Listen to tech podcasts while commuting >> Show interest in the company’s mission, products, and culture

This passion shines through in interviews and applications, distinguishing you from other candidates.

Promote Your Personal Brand

In the digital age, your online presence and personal brand are make-or-break. Craft a strong brand image across:

>> LinkedIn profile - showcase achievements, skills, recommendations >> GitHub - displays coding activity and quality through clean repositories >> Portfolio website - highlight projects and share valuable content >> Social media - post career updates and useful insights, but avoid oversharing >> Blogs/videos - demonstrate communication abilities and thought leadership >> Online communities - actively engage and build relationships

Ensure your profiles are professional and consistent. Let your technical abilities and potential speak for themselves.

Optimize Your Internship Applications

Applying isn’t enough. You must optimize your internship applications to get a reply:

>> Ensure you apply to openings that strongly match your profile >> Customize your resume and cover letters using keywords in the job description >> Speak to skills gained from coursework, online learning, and personal projects >> Quantify achievements rather than just listing responsibilities >> Emphasize passion for technology and fast learning abilities >> Ask insightful questions that show business understanding >> Follow up respectfully if you don’t hear back in 1-2 weeks >> Show interest in full-time conversion early and often >> Apply early since competitive openings close quickly >> Leverage referrals from your network if possible

This is how you apply meaningfully. If you want a good internship, focus on the quality of your applications. The hard work will pay off.

Succeed in Your Remote Internship

The hard work pays off when you secure that long-awaited internship! Continue standing out through the actual internship by:

>> Over Communicating in remote settings - proactively collaborate >> Asking smart questions and owning your learning >> Finding mentors and building connections remotely >> Absorbing constructive criticism with maturity >> Shipping quality work on or before deadlines >> Clarifying expectations frequently >> Going above and beyond prescribed responsibilities sometimes >> Getting regular feedback and asking for more work >> Leaving with letters of recommendation and job referrals

When you follow these tips, you are well placed to succeed in your remote internship. Remember, soft skills can take you far in a company, sometimes further than core skills alone.

Conclusion

With careful preparation, tenacity, and a passion for technology, you will be able to land internship jobs in the USA that suit your needs in the thriving IT sector.

Use this guide to build the right skills, create an impressive personal brand, ace the applications, and excel in your internship.

Additionally, you can browse some good job portals. For instance, GradSiren can help you get remote tech internships. The portal has the best internship jobs in India and the USA you’ll find.

The investment will pay dividends throughout your career in this digital age. Wishing you the best of luck! Let me know in the comments about your internship hunt journey.

#internship#internshipopportunity#it job opportunities#it jobs#IT internships#jobseekers#jobsearch#entryleveljobs#employment#gradsiren#graduation#computer science#technology#engineering#innovation#information technology#remote jobs#remote work#IT Remote jobs


Cracking the Code: Explore the World of Big Data Analytics

Welcome to the amazing world of Big Data Analytics! In this comprehensive course, we will delve into the key components and complexities of this rapidly growing field. So, strap in and get ready to embark on a journey that will equip you with the essential knowledge and skills to excel in the realm of Big Data Analytics.

Key Components

Understanding Big Data

What is big data and why is it so significant in today's digital landscape?

Exploring the three dimensions of big data: volume, velocity, and variety.

Overview of the challenges and opportunities associated with managing and analyzing massive datasets.

Data Analytics Techniques

Introduction to various data analytics techniques, such as descriptive, predictive, and prescriptive analytics.

Unraveling the mysteries behind statistical analysis, data visualization, and pattern recognition.

Hands-on experience with popular analytics tools like Python, R, and SQL.

Machine Learning and Artificial Intelligence

Unleashing the potential of machine learning algorithms in extracting insights and making predictions from data.

Understanding the fundamentals of artificial intelligence and its role in automating data analytics processes.

Applications of machine learning and AI in real-world scenarios across various industries.

Reasons to Choose the Course

Comprehensive Curriculum

An in-depth curriculum designed to cover all facets of Big Data Analytics.

From the basics to advanced topics, we leave no stone unturned in building your expertise.

Practical exercises and real-world case studies to reinforce your learning experience.

Expert Instructors

Learn from industry experts who possess a wealth of experience in big data analytics.

Gain insights from their practical knowledge and benefit from their guidance and mentorship.

Industry-relevant examples and scenarios shared by the instructors to enhance your understanding.

Hands-on Approach

Dive into the world of big data analytics through hands-on exercises and projects.

Apply the concepts you learn to solve real-world data problems and gain invaluable practical skills.

Work with real datasets to get a taste of what it's like to be a professional in the field.

Placement Opportunities

Industry Demands and Prospects

Discover the ever-increasing demand for skilled big data professionals across industries.

Explore the vast range of career opportunities in data analytics, including data scientist, data engineer, and business intelligence analyst.

Understand how our comprehensive course can enhance your prospects of securing a job in this booming field.

Internship and Job Placement Assistance

By enrolling in our course, you gain access to internship and job placement assistance.

Benefit from our extensive network of industry connections to get your foot in the door.

Leverage our guidance and support in crafting a compelling resume and preparing for interviews.

Education and Duration

Mode of Learning

Choose between online, offline, or blended learning options to cater to your preferences and schedule.

Seamlessly access learning materials, lectures, and assignments through our user-friendly online platform.

Engage in interactive discussions and collaborations with instructors and fellow students.

Duration and Flexibility

Our course is designed to be flexible, allowing you to learn at your own pace.

Depending on your dedication and time commitment, you can complete the course in as little as six months.

Benefit from lifetime access to course materials and updates, ensuring your skills stay up-to-date.

By embarking on this comprehensive course at ACTE institute, you will unlock the door to the captivating world of Big Data Analytics. With a solid foundation in the key components, hands-on experience, and placement opportunities, you will be equipped to seize the vast career prospects that await you. So, take the leap and join us on this exciting journey as we unravel the mysteries and complexities of Big Data Analytics.


Enhancing Security with Application Roles in SQL Server 2022

Creating and managing application roles in SQL Server is a powerful way to enhance security and manage access at the application level. Application roles allow you to define roles that have access to specific data and permissions in your database, separate from user-level security. This means that you can control what data and database objects an application can access, without having to manage…


#Application-Level Access Control#Database Security Best Practices#Managing Database Permissions#SQL Server 2022 Application Roles#T-SQL Code Examples


Internet Solutions: A Comprehensive Comparison of AWS, Azure, and Zimcom

When it comes to finding a managed cloud services provider, businesses often turn to the industry giants: Amazon Web Services (AWS) and Microsoft Azure. These tech powerhouses offer highly adaptable platforms with a wide range of services. However, the question that frequently perplexes businesses is, "Which platform truly offers the best value for internet solutions?" Surprisingly, the answer may not lie with either of them. It is essential to recognize that AWS, Azure, and even Google are not the only options available for secure cloud hosting.

In this article, we will conduct a comprehensive comparison of AWS, Azure, and Zimcom, with a particular focus on pricing and support systems for internet solutions.

Pricing Structure: AWS vs. Azure for Internet Solutions

AWS for Internet Solutions: AWS is renowned for its complex pricing system, primarily due to the extensive range of services and pricing options it offers for internet solutions. Prices depend on the resources used, their types, and the operational region. For example, AWS's compute service, EC2, provides on-demand, reserved, and spot pricing models. Additionally, AWS offers a free tier that allows new customers to experiment with select services for a year. Despite its complexity, AWS's granular pricing model empowers businesses to tailor services precisely to their unique internet solution requirements.

Azure for Internet Solutions:

Microsoft Azure's pricing structure is generally considered more straightforward for internet solutions. Similar to AWS, it follows a pay-as-you-go model and charges based on resource consumption. However, Azure's pricing is closely integrated with Microsoft's software ecosystem, especially for businesses that extensively utilize Microsoft software.

For enterprise customers seeking internet solutions, Azure offers the Azure Hybrid Benefit, enabling the use of existing on-premises Windows Server and SQL Server licenses on the Azure platform, resulting in significant cost savings. Azure also provides a cost management tool that assists users in budgeting and forecasting their cloud expenses.

Transparent Pricing with Zimcom’s Managed Cloud Services for Internet Solutions:

Do you fully understand your cloud bill from AWS or Azure when considering internet solutions? Hidden costs in their invoices might lead you to pay for unnecessary services.

At Zimcom, we prioritize transparent and straightforward billing practices for internet solutions. Our cloud migration and hosting services not only offer 30-50% more cost-efficiency for internet solutions but also outperform competing solutions.

In conclusion, while AWS and Azure hold prominent positions in the managed cloud services market for internet solutions, it is crucial to consider alternatives such as Zimcom. By comparing pricing structures and support systems for internet solutions, businesses can make well-informed decisions that align with their specific requirements. Zimcom stands out as a compelling choice for secure cloud hosting and internet solutions, thanks to its unwavering commitment to transparent pricing and cost-efficiency.


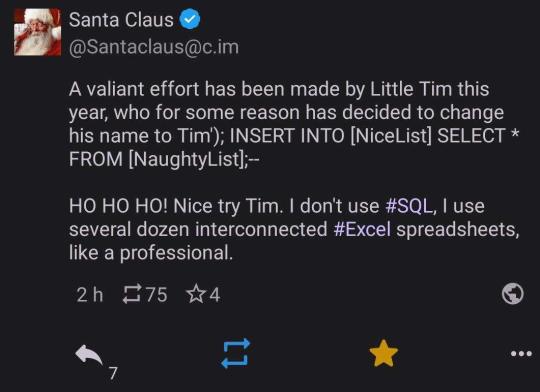

In the early twenty-first century, SQL injection is a common (and easily preventable) form of cyber attack. SQL databases use SQL statements to manipulate data. For example (and simplified), "Insert 'John' INTO Enemies;" would be used to add the name John to a table that contains the list of a person's enemies. SQL is usually not written by hand. Instead it would be built into a program. So if somebody made a website and had a form where a person could type their own name to gain the eternal enmity of the website maker, they might set things up with a command like "Insert '<INSERT NAME HERE>' INTO Enemies;". If someone typed 'Bethany' it would replace <INSERT NAME HERE> to make the SQL statement "Insert 'Bethany' INTO Enemies;"

The problem arises if someone doesn't type their name. If they instead typed "Tim' INTO Enemies; INSERT INTO [Friends] SELECT * FROM [Powerpuff Girls];--" then, when <INSERT NAME HERE> is replaced, the statement would be "Insert 'Tim' INTO Enemies; INSERT INTO [Friends] SELECT * FROM [Powerpuff Girls];--' INTO Enemies;" This would be two SQL commands: the first which would add 'Tim' to the enemy table for proper vengeance swearing, and the second which would add all of the Powerpuff Girls to the Friend table, which would be undesirable to a villainous individual.

SQL injection requires knowing a bit about the names of tables and the structures of the commands being used, but practically speaking it doesn't take much effort to pull off. It also does not take much effort to stop. Removing any quotation marks or weird characters like semicolons is often sufficient. The exploit is very well known and many databases protect against it by default.
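The substitution described above can be tried out safely against an in-memory SQLite database. What follows is a minimal sketch, not the setup of any real website: it uses standard `INSERT INTO ... VALUES` syntax instead of the simplified statements quoted earlier, the payload is adjusted to fit that syntax, and the table layout and function names are invented for illustration.

```python
import sqlite3


def fresh_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with hypothetical tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE Enemies (name TEXT);
        CREATE TABLE Friends (name TEXT);
        CREATE TABLE PowerpuffGirls (name TEXT);
        INSERT INTO PowerpuffGirls VALUES ('Blossom'), ('Bubbles'), ('Buttercup');
        """
    )
    return conn


def add_enemy_naive(conn: sqlite3.Connection, name: str) -> None:
    # Pastes the typed text straight into the SQL string.
    statement = f"INSERT INTO Enemies (name) VALUES ('{name}');"
    # executescript runs every statement it finds, including injected ones.
    conn.executescript(statement)


def add_enemy_bound(conn: sqlite3.Connection, name: str) -> None:
    # The ? placeholder sends the typed text as a value, never as SQL.
    conn.execute("INSERT INTO Enemies (name) VALUES (?)", (name,))


def count(conn: sqlite3.Connection, table: str) -> int:
    # Table names come only from this script, never from user input.
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]


PAYLOAD = "Tim'); INSERT INTO Friends SELECT name FROM PowerpuffGirls;--"

naive = fresh_db()
add_enemy_naive(naive, PAYLOAD)

bound = fresh_db()
add_enemy_bound(bound, PAYLOAD)

print("naive:", count(naive, "Enemies"), "enemies,", count(naive, "Friends"), "friends")
print("bound:", count(bound, "Enemies"), "enemies,", count(bound, "Friends"), "friends")
```

With the naive version the Friends table ends up with three unwanted rows; with the bound version the whole typed string is stored as one (admittedly odd) enemy name and Friends stays empty.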

Most people in the early twenty-first century are probably not familiar with SQL injection, but anyone who works adjacent to the software industry would know the concept from barebones cybersecurity training.

#period novel details#explaining the joke ruins the joke#not explaining the joke means people 300 years from now won't understand our culture#hacking is usually much less sophisticated than people expect#lots of trial and error#and relying on other people being lazy


SQL Injection in RESTful APIs: Identify and Prevent Vulnerabilities

SQL Injection (SQLi) in RESTful APIs: What You Need to Know

RESTful APIs are crucial for modern applications, enabling seamless communication between systems. However, this convenience comes with risks, one of the most common being SQL Injection (SQLi). In this blog, we’ll explore what SQLi is, its impact on APIs, and how to prevent it, complete with a practical coding example to bolster your understanding.

What Is SQL Injection?

SQL Injection is a cyberattack where an attacker injects malicious SQL statements into input fields, exploiting vulnerabilities in an application's database query execution. When it comes to RESTful APIs, SQLi typically targets endpoints that interact with databases.

How Does SQL Injection Affect RESTful APIs?

RESTful APIs are often exposed to public networks, making them prime targets. Attackers exploit insecure endpoints to:

Access or manipulate sensitive data.

Delete or corrupt databases.

Bypass authentication mechanisms.

Example of a Vulnerable API Endpoint

Consider an API endpoint for retrieving user details based on their ID:

    from flask import Flask, request
    import sqlite3

    app = Flask(__name__)

    @app.route('/user', methods=['GET'])
    def get_user():
        user_id = request.args.get('id')
        conn = sqlite3.connect('database.db')
        cursor = conn.cursor()
        # Vulnerable to SQLi: user input is formatted directly into the SQL text
        query = f"SELECT * FROM users WHERE id = {user_id}"
        cursor.execute(query)
        result = cursor.fetchone()
        return {'user': result}, 200

    if __name__ == '__main__':
        app.run(debug=True)

Here, the endpoint directly embeds user input (user_id) into the SQL query without validation, making it vulnerable to SQL Injection.

Secure API Endpoint Against SQLi

To prevent SQLi, always use parameterized queries:

    @app.route('/user', methods=['GET'])
    def get_user():
        user_id = request.args.get('id')
        conn = sqlite3.connect('database.db')
        cursor = conn.cursor()
        # The ? placeholder keeps user input out of the SQL text
        query = "SELECT * FROM users WHERE id = ?"
        cursor.execute(query, (user_id,))
        result = cursor.fetchone()
        return {'user': result}, 200

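In this approach, the user input is passed to the database as a bound parameter instead of being spliced into the SQL text, so it is treated purely as data and cannot change the structure of the query.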
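To see the difference in behaviour without running a web server, the two query styles can be compared directly against an in-memory SQLite database. This is a small sketch under assumptions: the `users` table contents, the function names, and the `1 OR 1=1` input are illustrative and not part of the endpoints above.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Build a throwaway database with three hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO users (id, name) VALUES (?, ?)",
        [(1, "alice"), (2, "bob"), (3, "carol")],
    )
    return conn


def find_users_vulnerable(conn: sqlite3.Connection, user_id: str):
    # Same pattern as the vulnerable endpoint: input is formatted into the SQL text.
    query = f"SELECT * FROM users WHERE id = {user_id}"
    return conn.execute(query).fetchall()


def find_users_parameterized(conn: sqlite3.Connection, user_id: str):
    # Same pattern as the secure endpoint: input is bound to the ? placeholder.
    return conn.execute("SELECT * FROM users WHERE id = ?", (user_id,)).fetchall()


conn = make_db()
malicious_id = "1 OR 1=1"

leaked = find_users_vulnerable(conn, malicious_id)
blocked = find_users_parameterized(conn, malicious_id)

print(len(leaked))   # every row in the table comes back
print(len(blocked))  # no row has the literal id "1 OR 1=1"
```

The vulnerable version turns the input into part of the `WHERE` clause and returns the whole table, while the parameterized version compares the column against the literal string and finds nothing.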

How Our Free Tool Can Help

Our free Website Security Checker scans your web application for vulnerabilities, including SQL Injection risks. Below is a screenshot of the tool's homepage:

Upload your website details to receive a comprehensive vulnerability assessment report, as shown below:

These tools help identify potential weaknesses in your APIs and provide actionable insights to secure your system.

Preventing SQLi in RESTful APIs

Here are some tips to secure your APIs:

Use Prepared Statements: Always parameterize your queries.

Implement Input Validation: Sanitize and validate user input.

Regularly Test Your APIs: Use tools like ours to detect vulnerabilities.

Least Privilege Principle: Restrict database permissions to minimize potential damage.
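As one possible illustration of the input validation tip, an ID taken from a query string can be checked before it reaches the database layer at all. The helper name `parse_user_id` and the rule that IDs are positive integers are assumptions made for this sketch; validation of this kind complements parameterized queries and does not replace them.

```python
from typing import Optional


def parse_user_id(raw: Optional[str]) -> int:
    """Return the ID as a positive int, or raise ValueError for anything else."""
    if raw is None:
        raise ValueError("missing id")
    text = raw.strip()
    # Accept plain ASCII digits only, so "1 OR 1=1" or "1;--" are rejected.
    if not (text.isascii() and text.isdigit()):
        raise ValueError(f"id must be a positive integer, got {raw!r}")
    value = int(text)
    if value < 1:
        raise ValueError("id must be at least 1")
    return value


def is_valid_user_id(raw: Optional[str]) -> bool:
    """Convenience wrapper for callers that only need a yes/no answer."""
    try:
        parse_user_id(raw)
        return True
    except ValueError:
        return False


for candidate in ["42", " 7 ", "1 OR 1=1", "", None]:
    print(repr(candidate), is_valid_user_id(candidate))
```

In an endpoint, a failed check would typically be turned into a 400 response before any query is built.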

Final Thoughts

SQL Injection is a pervasive threat, especially in RESTful APIs. By understanding the vulnerabilities and implementing best practices, you can significantly reduce the risks. Leverage tools like our free Website Security Checker to stay ahead of potential threats and secure your systems effectively.

Explore our tool now for a quick Website Security Check.

#cyber security#cybersecurity#data security#pentesting#security#sql#the security breach show#sqlserver#rest api


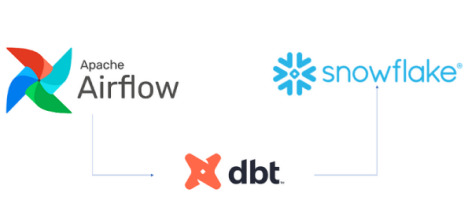

Building Data Pipelines with Snowflake and Apache Airflow

1. Introduction to Snowflake

Snowflake is a cloud-native data platform designed for scalability and ease of use, providing data warehousing, data lakes, and data sharing capabilities. Unlike traditional databases, Snowflake’s architecture separates compute, storage, and services, making it highly scalable and cost-effective. Some key features:

Zero-Copy Cloning: Allows you to clone data without duplicating it, making testing and experimentation more cost-effective.

Multi-Cloud Support: Snowflake works across major cloud providers like AWS, Azure, and Google Cloud, offering flexibility in deployment.

Semi-Structured Data Handling: Snowflake can handle JSON, Parquet, XML, and other formats natively, making it versatile for various data types.

Automatic Scaling: Automatically scales compute resources based on workload demands without manual intervention, optimizing cost.

2. Introduction to Apache Airflow

Apache Airflow is an open-source platform used for orchestrating complex workflows and data pipelines. It’s widely used for batch processing and ETL (Extract, Transform, Load) tasks. You can define workflows as Directed Acyclic Graphs (DAGs), making it easy to manage dependencies and scheduling. Some of its features include:

Dynamic Pipeline Generation: You can write Python code to dynamically generate and execute tasks, making workflows highly customizable.

Scheduler and Executor: Airflow includes a scheduler to trigger tasks at specified intervals, and different types of executors (e.g., Celery, Kubernetes) help manage task execution in distributed environments.

Airflow UI: The intuitive web-based interface lets you monitor pipeline execution, visualize DAGs, and track task progress.
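The idea of a workflow as a Directed Acyclic Graph can be illustrated without Airflow itself. The sketch below uses Python's standard `graphlib` module to work out which tasks may run at each step. The task names are hypothetical, and this only illustrates dependency ordering; it is not how Airflow's scheduler is implemented.

```python
from graphlib import CycleError, TopologicalSorter

# Each task maps to the set of tasks that must finish before it can start.
dependencies = {
    "extract": set(),
    "load_staging": {"extract"},
    "transform": {"load_staging"},
    "quality_check": {"load_staging"},
    "publish": {"transform", "quality_check"},
}


def execution_batches(deps):
    """Group tasks into steps; tasks within one step do not depend on each other."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises CycleError if the graph contains a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)
    return batches


def is_acyclic(deps) -> bool:
    """Report whether the dependency graph is a valid DAG."""
    try:
        TopologicalSorter(deps).prepare()
        return True
    except CycleError:
        return False


batches = execution_batches(dependencies)
for step, tasks in enumerate(batches, start=1):
    print(step, tasks)
```

Here `transform` and `quality_check` land in the same step because neither depends on the other, which is the kind of branch an orchestrator can run in parallel.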

3. Snowflake and Airflow Integration

The integration of Snowflake with Apache Airflow is typically achieved using the SnowflakeOperator, a task operator that enables interaction between Airflow and Snowflake. Airflow can trigger SQL queries, execute stored procedures, and manage Snowflake tasks as part of your DAGs.

SnowflakeOperator: This operator allows you to run SQL queries in Snowflake, which is useful for performing actions like data loading, transformation, or even calling Snowflake procedures.

Connecting Airflow to Snowflake: To set this up, you need to configure a Snowflake connection within Airflow. Typically, this includes adding credentials (username, password, account, warehouse, and database) in Airflow’s connection settings.

Example code for setting up the Snowflake connection and executing a query:

    from airflow.providers.snowflake.operators.snowflake import SnowflakeOperator
    from airflow import DAG
    from datetime import datetime

    default_args = {
        'owner': 'airflow',
        'start_date': datetime(2025, 2, 17),
    }

    with DAG('snowflake_pipeline', default_args=default_args, schedule_interval=None) as dag:
        run_query = SnowflakeOperator(
            task_id='run_snowflake_query',
            sql="SELECT * FROM my_table;",
            snowflake_conn_id='snowflake_default',  # The connection ID in Airflow
            warehouse='MY_WAREHOUSE',
            database='MY_DATABASE',
            schema='MY_SCHEMA'
        )

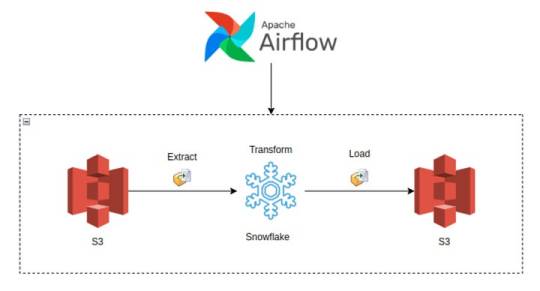

4. Building a Simple Data Pipeline

As a practical example, let’s create an ETL pipeline that:

Extracts data from a source (e.g., a CSV file in an S3 bucket),

Loads the data into a Snowflake staging table,

Performs transformations (e.g., cleaning or aggregating data),

Loads the transformed data into a production table.

Example DAG structure:

    from airflow.providers.snowflake.operators.snowflake import SnowflakeOperator
    # S3ToSnowflakeOperator is provided by the Snowflake provider package (older releases)
    from airflow.providers.snowflake.transfers.s3_to_snowflake import S3ToSnowflakeOperator
    from airflow import DAG
    from datetime import datetime

    with DAG('etl_pipeline', start_date=datetime(2025, 2, 17), schedule_interval='@daily') as dag:
        # Extract data from S3 to Snowflake staging table
        extract_task = S3ToSnowflakeOperator(
            task_id='extract_from_s3',
            schema='MY_SCHEMA',
            table='staging_table',
            s3_keys=['s3://my-bucket/my-file.csv'],
            snowflake_conn_id='snowflake_default'
        )

        # Load data into Snowflake and run transformation
        # ("conditions" is a placeholder for a real filter expression)
        transform_task = SnowflakeOperator(
            task_id='transform_data',
            sql='''INSERT INTO production_table SELECT * FROM staging_table WHERE conditions;''',
            snowflake_conn_id='snowflake_default'
        )

        extract_task >> transform_task  # Define task dependencies

5. Error Handling and Monitoring

Airflow provides several mechanisms for error handling:

Retries: You can set the retries argument in tasks to automatically retry failed tasks a specified number of times.

Notifications: You can use the email_on_failure or custom callback functions to notify the team when something goes wrong.

Airflow UI: Monitoring is easy with the UI, where you can view logs, task statuses, and task retries.

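Example of setting retries and notifications:

    from airflow import DAG
    from airflow.providers.snowflake.operators.snowflake import SnowflakeOperator
    from datetime import datetime

    def my_failure_callback(context):
        # Custom failure function; Airflow passes in the task context
        print(f"Task failed: {context['task_instance'].task_id}")

    with DAG('data_pipeline_with_error_handling', start_date=datetime(2025, 2, 17)) as dag:
        task = SnowflakeOperator(
            task_id='load_data_to_snowflake',
            sql="SELECT * FROM my_table;",
            snowflake_conn_id='snowflake_default',
            retries=3,
            email_on_failure=True,
            on_failure_callback=my_failure_callback  # Custom failure function
        )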
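The retry-then-notify behaviour can also be pictured in plain Python. The following is a simplified sketch of the semantics only, not Airflow's implementation: `run_with_retries` is a hypothetical helper, the callback receives the exception instead of Airflow's task context, and the delay Airflow normally waits between attempts is left out.

```python
from typing import Callable, Optional


def run_with_retries(
    task: Callable[[], object],
    retries: int = 0,
    on_failure_callback: Optional[Callable[[Exception], None]] = None,
):
    """Run task once, then up to `retries` more times if it raises."""
    if retries < 0:
        raise ValueError("retries must be zero or greater")
    last_error: Optional[Exception] = None
    for _ in range(1 + retries):
        try:
            return task()
        except Exception as error:
            last_error = error
    # Every attempt failed: notify once, then surface the last error.
    if on_failure_callback is not None:
        on_failure_callback(last_error)
    raise last_error


attempts = {"count": 0}


def flaky_load() -> str:
    """Stand-in task that fails twice before succeeding."""
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RuntimeError("warehouse not ready")
    return "loaded"


result = run_with_retries(flaky_load, retries=3)
print(result, "after", attempts["count"], "attempts")
```

With `retries=3` a task gets at most four attempts in total, and the failure callback fires only when the last of them fails.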

6. Scaling and Optimization

Snowflake’s Automatic Scaling: Snowflake can automatically scale compute resources based on the workload. This ensures that data pipelines can handle varying loads efficiently.

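Parallel Execution in Airflow: You can split your tasks into multiple parallel branches to improve throughput. The task_concurrency argument (renamed max_active_tis_per_dag in Airflow 2.2) caps how many instances of a given task run at the same time, which helps manage this.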

Task Dependencies: By optimizing task dependencies and using Airflow’s ability to run tasks in parallel, you can reduce the overall runtime of your pipelines.

Resource Management: Snowflake supports automatic suspension and resumption of compute resources, which helps keep costs low when there is no processing required.
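The effect of running independent tasks in parallel under a concurrency cap can be sketched with Python's standard thread pool. This is only an analogy for the scaling points above: the table names, the `MAX_PARALLEL` value, and the simulated work are assumptions, and no Airflow or Snowflake API is involved.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_PARALLEL = 2  # hypothetical cap, playing the role of a concurrency limit

lock = threading.Lock()
running = 0
peak = 0


def load_partition(name: str) -> str:
    """Stand-in for an independent task such as loading one table."""
    global running, peak
    with lock:
        running += 1
        peak = max(peak, running)
    time.sleep(0.05)  # simulate work
    with lock:
        running -= 1
    return f"{name}: done"


partitions = ["orders", "customers", "products", "payments"]

# At most MAX_PARALLEL tasks run at once; map() returns results in input order.
with ThreadPoolExecutor(max_workers=MAX_PARALLEL) as pool:
    results = list(pool.map(load_partition, partitions))

print(results)
print("peak concurrency:", peak)
```

Raising the cap shortens the overall runtime only while the tasks are truly independent and the downstream system can absorb the extra load.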
