#software developer replaced by ai

Text

How API Integration and ChatGPT Can Replace Programmers

How can ChatGPT replace programmers?

Advances in artificial intelligence (AI) and API integration are transforming traditional programming paradigms in the ever-changing field of software development. OpenAI’s ChatGPT, an AI-powered language model, is one such significant development. With its considerable potential, ChatGPT is likely to change the way developers approach coding tasks, raising the possibility that it may eventually replace human programmers.

API integration has long been a cornerstone of modern software development, enabling applications to communicate and interact with each other seamlessly. By leveraging APIs, developers can incorporate various functionalities and services into their applications without reinventing the wheel. This modular approach not only accelerates development cycles but also enhances the overall user experience.

But the introduction of ChatGPT adds a new dimension to the programming world. Thanks to deep learning algorithms, ChatGPT can understand and produce human-like text based on the context it is given. This means developers can now communicate with ChatGPT in natural language, asking for help and producing code snippets efficiently.

One of the key areas where ChatGPT shows immense promise is code generation and automation. Traditionally, developers spend considerable time writing and debugging code to implement various features and functionalities. With ChatGPT, developers can simply describe their requirements in plain language, and the model can generate the corresponding code snippets, significantly reducing development time and effort.

For example, rather than writing a function by hand to parse JSON data from an API response, developers can describe the desired functionality to ChatGPT, such as “parse JSON data and extract relevant fields.” ChatGPT can then generate the required code snippet based on the developer’s requirements. As a result, the development process is simplified, and developers are free to focus on more complex architectural choices and problem solving.
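To make the JSON example concrete, here is a minimal sketch of the kind of snippet such a prompt might yield. It is an illustration only: the response body, the field names, and the `extract_fields` helper are all hypothetical and do not come from any particular API or from actual ChatGPT output.

```python
import json

# Hypothetical API response body; the structure and field names are assumptions.
RESPONSE_BODY = (
    '{"user": {"id": 42, "name": "Ada", "email": "ada@example.com"},'
    ' "status": "ok"}'
)


def extract_fields(raw, fields):
    """Parse a JSON string and return only the requested keys of its "user" object.

    Keys that are missing from the response map to None instead of raising.
    """
    data = json.loads(raw)
    user = data.get("user", {})
    return {key: user.get(key) for key in fields}


result = extract_fields(RESPONSE_BODY, ["id", "email"])
print(result)  # {'id': 42, 'email': 'ada@example.com'}
```

A developer would still need to review a generated snippet like this, for instance to decide how malformed JSON or missing fields should be handled in their own application.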

Additionally, ChatGPT can be a very helpful tool for developers who need advice or help fixing problems. Developers can turn to ChatGPT for advice and insights when solving complex issues or exploring different implementation approaches. By drawing on the knowledge contained in ChatGPT’s extensive training dataset, developers can find established practices to guide their decision-making.

However, the prospect of ChatGPT replacing human programmers entirely remains a topic of debate and speculation within the developer community. While ChatGPT excels at generating code based on provided prompts, it lacks the nuanced understanding and creative problem-solving abilities inherent to human developers. Additionally, concerns regarding the ethical implications of AI-driven automation in software development continue to be raised.

It’s important to understand that ChatGPT is meant to enhance developers’ skills and simplify specific parts of the development process, not to replace them. Developers can boost productivity, drive creativity, and ultimately provide end users with better software solutions by using AI-powered technologies like ChatGPT.

In conclusion, the convergence of API integration and AI-driven technologies like ChatGPT is reshaping the dynamics of programming in profound ways. While API integration facilitates seamless interaction between software components, ChatGPT adds natural language interfaces and code generation capabilities. Although the idea of ChatGPT replacing human programmers entirely may be far-fetched, its potential to augment and enhance developer productivity is undeniable. As the field of AI continues to advance, developers must embrace these innovations as valuable tools in their arsenal, driving greater efficiency and innovation in software development.


#chat gpt programmers#chat gpt will replace programmer#software developer replaced by ai#openai replace programmers#Gpt Developer#chatGpt programmers

0 notes

Text

god image generation software depresses me... there's no way to win this argument in the public consciousness when most laypeople just enjoy seeing a pretty picture, and don't think about where it came from at all. they're just happy that they can make something pretty too without the literal years of effort, which I can't blame them for. but goddamnit.

#joos yaps#and it's like 'but you had talent! now everyone can have talent!'#art is too commercially important to not replace artists with cheaper alternatives as soon as they become remotely viable.#only fast legal action would have a chance at maybe making it a little bit more fair.#not even not letting people create a book cover with ai. but just making the software developers do the bare minimum of asking#for permission from their sources#but if there's one thing i know about lawmakers. it's that they're extremely slow when it comes to new tech#(relatively)#:))))))))))))))))))))

32 notes


Text

Can Devin AI replace human developers entirely?

This question was originally answered on Quora and written as is.

I’m not familiar with Devin per se, but I don’t see AI “replacing people” in any capacity it is developed to function within — and I use that description with a caveat because AI IS replacing hundreds of thousands of jobs — if not millions worldwide.

https://preview.devin.ai/

AI has the potential to liberate humans from mundane…


0 notes

Text

#ai#replace a full stack#developer#html#machine learning#programming#digital marketing#python#viral#web development#software development#mobile app development

0 notes

Note

why exactly do you dislike generative art so much? i know its been misused by some folks, but like, why blame a tool because it gets used by shitty people? Why not just... blame the people who are shitty? I mean this in genuinely good faith, you seem like a pretty nice guy normally, but i guess it just makes me confused how... severe? your reactions are sometimes to it. There's a lot of nuance to conversation about it, and by folks a lot smarter than I (I suggest checking out the Are We Art Yet or "AWAY" group! They've got a lot on their page about the ethical use of Image generation software by individuals, and it really helped explain some things I was confused about). I know on my end, it made me think about why I personally was so reactive about Who was allowed to make art and How/Why. Again, all this in good faith, and I'm not asking you to like, Explain yourself or anything- If you just read this and decide to delete it instead of answering, all good! I just hope maybe you'll look into *why* some people advocate for generative software as strongly as they do, and listen to what they have to say about things -🦜

if AI genuinely generated its own content I wouldn't have as much of a problem with it, however what AI currently does is scrape other people's art, collect it, and then build something based off of others' stolen works without crediting them. It's like. stealing other people's art, mashing it together, then saying "this is mine, i can not only profit off it but i can use it to cut costs in other industries."

this is more evident by people not "making" art but instead using prompts. It's like going to McDonalds and saying "Burger. Big, Juicy, etc, etc" then instead of a worker making the burger it uses an algorithm to build a burger based off of several restaurants' recipes.

example

the left is AI art, the right is one of the artists (Lindong) who it pulled the art style from. it's literally mass producing someone's artstyle by taking their art then using an algorithm to rebuild it in any context. this is even more apparent when you see ai art also tries to recreate artists' watermarks and generally blends them together, making them unintelligible.

Aside from that there's a lot of other ethical problems with it including generating pretty awful content, including but not limited to cp. It also uses a lot of processing power and apparently water? I haven't caught up on the newer developments, i've been depressed about it tbh

Then aside from those, studios are leaning towards AI generation to replace having to pay people. I've seen professional voice actors complain on twitter that they haven't gotten as much work since ai voice generation started, and artists are being cut and replaced by ai art, with the remaining artists left to fix any errors in it.

Even beyond those things are the potential for misinformation. Here's an experiment: Which of these two are ai generated?

ready?

These two are both entirely ai generated. I have no idea if they're real people, but in a few months you could ai generate a Biden sex scandal, you could generate politicians in whatever situation you want, you can generate popular streamers nude, whatever. and worse yet is ai generated video is already being developed and it doesn't look bad.

I posted on this already but as of right now it only needs one clear frame of a body and it can generate motion. yeah there are issues but it's been like two years since ai development started being taken seriously and we've gotten to this point already. within another two years it'll be close to perfected. There were even tests done with tiktokers and it works. it just fucking works.

There is genuinely not one upside to ai art. at all. it's theft, it's harming people's lives, it's harming the environment, it's cutting jobs back and hurting the economy, it's invading people's privacy, it's making pedophilia accessible, and more. it's a plague and there's no vaccine for it. And all because people don't want to take a year to learn anatomy.

5K notes


Note

I’m in undergrad but I keep hearing and seeing people talking about using chatgpt for their schoolwork and it makes me want to rip my hair out lol. Like even the “radical” anti-chatgpt ones are like “Oh yea it’s only good for outlines I’d never use it for my actual essay.” You’re using it for OUTLINES????? That’s the easy part!! I can’t wait to get to grad school and hopefully be surrounded by people who actually want to be there 😭😭

Not to sound COMPLETELY like a grumpy old codger (although lbr, I am), but I think this whole AI craze is the obvious result of an education system that prizes "teaching for the test" as the most important thing, wherein there are Obvious Correct Answers that if you select them, pass the standardized test and etc etc mean you are now Educated. So if there's a machine that can theoretically pick the correct answers for you by recombining existing data without the hard part of going through and individually assessing and compiling it yourself, Win!

... but of course, that's not the way it works at all, because AI is shown to create misleading, nonsensical, or flat-out dangerously incorrect information in every field it's applied to, and the errors are spotted as soon as an actual human subject expert takes the time to read it closely. Not to go completely KIDS THESE DAYS ARE JUST LAZY AND DONT WANT TO WORK, since finding a clever way to cheat on your schoolwork is one of those human instincts likewise old as time and has evolved according to tools, technology, and educational philosophy just like everything else, but I think there's an especial fear of Being Wrong that drives the recourse to AI (and this is likewise a result of an educational system that only prioritizes passing standardized tests as the sole measure of competence). It's hard to sort through competing sources and form a judgment and write it up in a comprehensive way, and if you do it wrong, you might get a Bad Grade! (The irony being, of course, that AI will *not* get you a good grade and will be marked even lower if your teachers catch it, which they will, whether by recognizing that it's nonsense or running it through a software platform like Turnitin, which is adding AI detection tools to its usual plagiarism checkers.)

We obviously see this mindset on social media, where Being Wrong can get you dogpiled and/or excluded from your peer groups, so it's even more important in the minds of anxious undergrads that they aren't Wrong. But yeah, AI produces nonsense, it is an open waste of your tuition dollars that are supposed to help you develop these independent college-level analytical and critical thinking skills that are very different from just checking exam boxes, and relying on it is not going to help anyone build those skills in the long term (and is frankly a big reason that we're in this mess with an entire generation being raised with zero critical thinking skills at the exact moment it's more crucial than ever that they have them). I am mildly hopeful that the AI craze will go bust just like crypto as soon as the main platforms either run out of startup funding or get sued into oblivion for plagiarism, but frankly, not soon enough, there will be some replacement for it, and that doesn't mean we will stop having to deal with fake news and fake information generated by a machine and/or people who can't be arsed to actually learn the skills and abilities they are paying good money to acquire. Which doesn't make sense to me, but hey.

So: Yes. This. I feel you and you have my deepest sympathies. Now if you'll excuse me, I have to sit on the porch in my quilt-draped rocking chair and shout at kids to get off my lawn.

179 notes


Text

"Open" "AI" isn’t

Tomorrow (19 Aug), I'm appearing at the San Diego Union-Tribune Festival of Books. I'm on a 2:30PM panel called "Return From Retirement," followed by a signing:

https://www.sandiegouniontribune.com/festivalofbooks

The crybabies who freak out about The Communist Manifesto appearing on university curriculum clearly never read it – chapter one is basically a long hymn to capitalism's flexibility and inventiveness, its ability to change form and adapt itself to everything the world throws at it and come out on top:

https://www.marxists.org/archive/marx/works/1848/communist-manifesto/ch01.htm#007

Today, leftists signal this protean capacity of capital with the -washing suffix: greenwashing, genderwashing, queerwashing, wokewashing – all the ways capital cloaks itself in liberatory, progressive values, while still serving as a force for extraction, exploitation, and political corruption.

A smart capitalist is someone who, sensing the outrage at a world run by 150 old white guys in boardrooms, proposes replacing half of them with women, queers, and people of color. This is a superficial maneuver, sure, but it's an incredibly effective one.

In "Open (For Business): Big Tech, Concentrated Power, and the Political Economy of Open AI," a new working paper, Meredith Whittaker, David Gray Widder and Sarah Myers West document a new kind of -washing: openwashing:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4543807

Openwashing is the trick that large "AI" companies use to evade regulation and neutralize critics, by casting themselves as forces of ethical capitalism, committed to the virtue of openness. No one should be surprised to learn that the products of the "open" wing of an industry whose products are neither "artificial," nor "intelligent," are also not "open." Every word AI huxters say is a lie; including "and," and "the."

So what work does the "open" in "open AI" do? "Open" here is supposed to invoke the "open" in "open source," a movement that emphasizes a software development methodology that promotes code transparency, reusability and extensibility, which are three important virtues.

But "open source" itself is an offshoot of a more foundational movement, the Free Software movement, whose goal is to promote freedom, and whose method is openness. The point of software freedom was technological self-determination, the right of technology users to decide not just what their technology does, but who it does it to and who it does it for:

https://locusmag.com/2022/01/cory-doctorow-science-fiction-is-a-luddite-literature/

The open source split from free software was ostensibly driven by the need to reassure investors and businesspeople so they would join the movement. The "free" in free software is (deliberately) ambiguous, a bit of wordplay that sometimes misleads people into thinking it means "Free as in Beer" when really it means "Free as in Speech" (in Romance languages, these distinctions are captured by translating "free" as "libre" rather than "gratis").

The idea behind open source was to rebrand free software in a less ambiguous – and more instrumental – package that stressed cost-savings and software quality, as well as "ecosystem benefits" from a co-operative form of development that recruited tinkerers, independents, and rivals to contribute to a robust infrastructural commons.

But "open" doesn't merely resolve the linguistic ambiguity of libre vs gratis – it does so by removing the "liberty" from "libre," the "freedom" from "free." "Open" changes the pole-star that movement participants follow as they set their course. Rather than asking "Which course of action makes us more free?" they ask, "Which course of action makes our software better?"

Thus, by dribs and drabs, the freedom leeches out of openness. Today's tech giants have mobilized "open" to create a two-tier system: the largest tech firms enjoy broad freedom themselves – they alone get to decide how their software stack is configured. But for all of us who rely on that (increasingly unavoidable) software stack, all we have is "open": the ability to peer inside that software and see how it works, and perhaps suggest improvements to it:

https://www.youtube.com/watch?v=vBknF2yUZZ8

In the Big Tech internet, it's freedom for them, openness for us. "Openness" – transparency, reusability and extensibility – is valuable, but it shouldn't be mistaken for technological self-determination. As the tech sector becomes ever-more concentrated, the limits of openness become more apparent.

But even by those standards, the openness of "open AI" is thin gruel indeed (that goes triple for the company that calls itself "OpenAI," which is a particularly egregious openwasher).

The paper's authors start by suggesting that the "open" in "open AI" is meant to imply that an "open AI" can be scratch-built by competitors (or even hobbyists), but that this isn't true. Not only is the material that "open AI" companies publish insufficient for reproducing their products, even if those gaps were plugged, the resource burden required to do so is so intense that only the largest companies could do so.

Beyond this, the "open" parts of "open AI" are insufficient for achieving the other claimed benefits of "open AI": they don't promote auditing, or safety, or competition. Indeed, they often cut against these goals.

"Open AI" is a wordgame that exploits the malleability of "open," but also the ambiguity of the term "AI": "a grab bag of approaches, not… a technical term of art, but more … marketing and a signifier of aspirations." Hitching this vague term to "open" creates all kinds of bait-and-switch opportunities.

That's how you get Meta claiming that LLaMa-2 is "open source," despite being licensed in a way that is absolutely incompatible with any widely accepted definition of the term:

https://blog.opensource.org/metas-llama-2-license-is-not-open-source/

LLaMa-2 is a particularly egregious openwashing example, but there are plenty of other ways that "open" is misleadingly applied to AI: sometimes it means you can see the source code, sometimes that you can see the training data, and sometimes that you can tune a model, all to different degrees, alone and in combination.

But even the most "open" systems can't be independently replicated, due to raw computing requirements. This isn't the fault of the AI industry – the computational intensity is a fact, not a choice – but when the AI industry claims that "open" will "democratize" AI, they are hiding the ball. People who hear these "democratization" claims (especially policymakers) are thinking about entrepreneurial kids in garages, but unless these kids have access to multi-billion-dollar data centers, they can't be "disruptors" who topple tech giants with cool new ideas. At best, they can hope to pay rent to those giants for access to their compute grids, in order to create products and services at the margin that rely on existing products, rather than displacing them.

The "open" story, with its claims of democratization, is an especially important one in the context of regulation. In Europe, where a variety of AI regulations have been proposed, the AI industry has co-opted the open source movement's hard-won narrative battles about the harms of ill-considered regulation.

For open source (and free software) advocates, many tech regulations aimed at taming large, abusive companies – such as requirements to surveil and control users to extinguish toxic behavior – wreak collateral damage on the free, open, user-centric systems that we see as superior alternatives to Big Tech. This leads to the paradoxical effect of passing regulation to "punish" Big Tech that ends up simply shaving an infinitesimal percentage off the giants' profits, while destroying the small co-ops, nonprofits and startups before they can grow to be a viable alternative.

The years-long fight to get regulators to understand this risk has been waged by principled actors working for subsistence nonprofit wages or for free, and now the AI industry is capitalizing on lawmakers' hard-won consideration for collateral damage by claiming to be "open AI" and thus vulnerable to overbroad regulation.

But the "open" projects that lawmakers have been coached to value are precious because they deliver a level playing field, competition, innovation and democratization – all things that "open AI" fails to deliver. The regulations the AI industry is fighting also don't necessarily implicate the speech implications that are core to protecting free software:

https://www.eff.org/deeplinks/2015/04/remembering-case-established-code-speech

Just think about LLaMa-2. You can download it for free, along with the model weights it relies on – but not detailed specs for the data that was used in its training. And the source-code is licensed under a homebrewed license cooked up by Meta's lawyers, a license that only glancingly resembles anything from the Open Source Definition:

https://opensource.org/osd/

Core to Big Tech companies' "open AI" offerings are tools, like Meta's PyTorch and Google's TensorFlow. These tools are indeed "open source," licensed under real OSS terms. But they are designed and maintained by the companies that sponsor them, and optimize for the proprietary back-ends each company offers in its own cloud. When programmers train themselves to develop in these environments, they are gaining expertise in adding value to a monopolist's ecosystem, locking themselves in with their own expertise. This is a classic example of software freedom for tech giants and open source for the rest of us.

One way to understand how "open" can produce a lock-in that "free" might prevent is to think of Android: Android is an open platform in the sense that its sourcecode is freely licensed, but the existence of Android doesn't make it any easier to challenge the mobile OS duopoly with a new mobile OS; nor does it make it easier to switch from Android to iOS and vice versa.

Another example: MongoDB, a free/open database tool that was adopted by Amazon, which subsequently forked the codebase and tuned it to work on their proprietary cloud infrastructure.

The value of open tooling as a stickytrap for creating a pool of developers who end up as sharecroppers who are glued to a specific company's closed infrastructure is well-understood and openly acknowledged by "open AI" companies. Zuckerberg boasts about how PyTorch ropes developers into Meta's stack, "when there are opportunities to make integrations with products, [so] it’s much easier to make sure that developers and other folks are compatible with the things that we need in the way that our systems work."

Tooling is a relatively obscure issue, primarily debated by developers. A much broader debate has raged over training data – how it is acquired, labeled, sorted and used. Many of the biggest "open AI" companies are totally opaque when it comes to training data. Google and OpenAI won't even say how many pieces of data went into their models' training – let alone which data they used.

Other "open AI" companies use publicly available datasets like the Pile and CommonCrawl. But you can't replicate their models by shoveling these datasets into an algorithm. Each one has to be groomed – labeled, sorted, de-duplicated, and otherwise filtered. Many "open" models merge these datasets with other, proprietary sets, in varying (and secret) proportions.

Quality filtering and labeling for training data is incredibly expensive and labor-intensive, and involves some of the most exploitative and traumatizing clickwork in the world, as poorly paid workers in the Global South make pennies for reviewing data that includes graphic violence, rape, and gore.

Not only is the product of this "data pipeline" kept a secret by "open" companies, the very nature of the pipeline is likewise cloaked in mystery, in order to obscure the exploitative labor relations it embodies (the joke that "AI" stands for "absent Indians" comes out of the South Asian clickwork industry).

The most common "open" in "open AI" is a model that arrives built and trained, which is "open" in the sense that end-users can "fine-tune" it – usually while running it on the manufacturer's own proprietary cloud hardware, under that company's supervision and surveillance. These tunable models are undocumented blobs, not the rigorously peer-reviewed transparent tools celebrated by the open source movement.

If "open" was a way to transform "free software" from an ethical proposition to an efficient methodology for developing high-quality software, then "open AI" is a way to transform "open source" into a rent-extracting black box.

Some "open AI" has slipped out of the corporate silo. Meta's LLaMa was leaked by early testers, republished on 4chan, and is now in the wild. Some exciting stuff has emerged from this, but despite this work happening outside of Meta's control, it is not without benefits to Meta. As an infamous leaked Google memo explains:

Paradoxically, the one clear winner in all of this is Meta. Because the leaked model was theirs, they have effectively garnered an entire planet's worth of free labor. Since most open source innovation is happening on top of their architecture, there is nothing stopping them from directly incorporating it into their products.

https://www.searchenginejournal.com/leaked-google-memo-admits-defeat-by-open-source-ai/486290/

Thus, "open AI" is best understood as "free product development" for large, well-capitalized AI companies, conducted by tinkerers who will not be able to escape these giants' proprietary compute silos and opaque training corpuses, and whose work product is guaranteed to be compatible with the giants' own systems.

The instrumental story about the virtues of "open" often invokes auditability: the fact that anyone can look at the source code makes it easier for bugs to be identified. But as open source projects have learned the hard way, the fact that anyone can audit your widely used, high-stakes code doesn't mean that anyone will.

The Heartbleed vulnerability in OpenSSL was a wake-up call for the open source movement – a bug that endangered every secure webserver connection in the world, which had hidden in plain sight for years. The result was an admirable and successful effort to build institutions whose job it is to actually make use of open source transparency to conduct regular, deep, systemic audits.

In other words, "open" is a necessary, but insufficient, precondition for auditing. But when the "open AI" movement touts its "safety" thanks to its "auditability," it fails to describe any steps it is taking to replicate these auditing institutions – how they'll be constituted, funded and directed. The story starts and ends with "transparency" and then makes the unjustifiable leap to "safety," without any intermediate steps about how the one will turn into the other.

It's a Magic Underpants Gnome story, in other words:

Step One: Transparency

Step Two: ??

Step Three: Safety

https://www.youtube.com/watch?v=a5ih_TQWqCA

Meanwhile, OpenAI itself has gone on record as objecting to "burdensome mechanisms like licenses or audits" as an impediment to "innovation" – all the while arguing that these "burdensome mechanisms" should be mandatory for rival offerings that are more advanced than its own. To call this a "transparent ruse" is to do violence to good, hardworking transparent ruses all the world over:

https://openai.com/blog/governance-of-superintelligence

Some "open AI" is much more open than the industry-dominating offerings. There's EleutherAI, a donor-supported nonprofit whose model comes with documentation and code, licensed Apache 2.0. There are also some smaller academic offerings: Vicuna (UCSD/CMU/Berkeley); Koala (Berkeley) and Alpaca (Stanford).

These are indeed more open (though Alpaca – which ran on a laptop – had to be withdrawn because it "hallucinated" so profusely). But to the extent that the "open AI" movement invokes (or cares about) these projects, it is in order to brandish them before hostile policymakers and say, "Won't someone please think of the academics?" These are the poster children for proposals like exempting AI from antitrust enforcement, but they're not significant players in the "open AI" industry, nor are they likely to be for so long as the largest companies are running the show:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4493900

I'm kickstarting the audiobook for "The Internet Con: How To Seize the Means of Computation," a Big Tech disassembly manual to disenshittify the web and make a new, good internet to succeed the old, good internet. It's a DRM-free book, which means Audible won't carry it, so this crowdfunder is essential. Back now to get the audio, Verso hardcover and ebook:

http://seizethemeansofcomputation.org

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/08/18/openwashing/#you-keep-using-that-word-i-do-not-think-it-means-what-you-think-it-means

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#llama-2#meta#openwashing#floss#free software#open ai#open source#osi#open source initiative#osd#open source definition#code is speech

250 notes


Text

to ppl who are currently skeptical about llm (aka "ai") capabilities and benefits for everyday people: i would strongly encourage u to check out this really cool slide deck someone made where they describe a world in which non-software-engineers can make home-grown apps by and for themselves with assistance from llms.

in particular there are a couple of linked tools that are really amazing, including one that gives you a whiteboard interface to draw and describe the app interface you want and then uses gpt4o to write the code for that app.

i think it's also an excellent counter to the argument that llms are basically only going to benefit le capitalisme and corporate overlords, because the technology presented here is actually being used to help users take matters into their own hands. to build their own apps that do what they want the apps to do, and to do it all on their own computers, so none of their private data has to get slurped up by some new startup.

"oh, so i should just let openai slurp up all my data instead? sounds great, asshole" no!!!! that's not what this is suggesting! this is saying you can make your own apps with the llms. then you put your private data in the app that you made, and that app doesn't need chatgpt to work, so literally everything involving your personal data remains on your personal devices.

is this a complete argument for justifying the existence of ai and llms? no! is this a justification for other privacy abuses? also no! does this mean we should all feel totally okay and happy with companies laying off tons of people in order to replace them with llms? 100% no!!!! please continue being mad about that.

just don't let those problems push you towards believing these things don't have genuinely impressive capabilities that can actually help you unlock the ability to do cool things you wouldn't otherwise have the time, energy, or inclination to do.

42 notes


Text

Been traveling a lot lately and I love how, in US TSA security lines, they make sure that the big sign saying the facial recognition photo is optional is always turned sideways or set so the Spanish-translation side is facing the line and the English-translation side is facing a wall or something.

Anyway, TSA facial recognition photos are 100% not mandatory and if you don't feel like helping a company develop its facial recognition AI software (like, say, Clearview AI), you can just politely tell the TSA agent that you don't want to participate in the photo and instead show an ID or your boarding pass. Like we've been doing for years and years.

#privacy#anti facial recognition#clearwater AI#you need to protect yourself#its dishonest is what it is#the signs are always there#as per law#but they are hidden/turned/set way to the side

26 notes


Text

i Believe i am finally done making references

edit: pasting the image descriptions out of the alt text. since they're refs they're really long I am so sorry

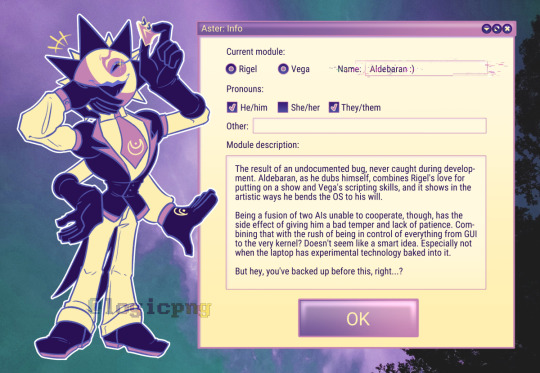

[First image ID:

Digital artwork of Aldebaran Aster - a humanoid being in a suit, four arms, and a star shaped head - standing next to a large program window titled: "Aster: Info". He is holding up the mouse pointer in one of his hands and laughing, with a smug smile.

The text in the window reads:

"Current module:

[Selected radio button] Rigel [Selected radio button] Vega [Glitchy text box] Name: Aldebaran :)

Pronouns:

[Checked tick box] He/him [Unchecked tick box] She/her [Checked tick box] They/them

Other: [Long empty text field]

Module description:

[Following text in a large text box:]

The result of an undocumented bug, never caught during development. Aldebaran, as he dubs himself, combines Rigel's love for putting on a show and Vega's scripting skills, and it shows in the artistic ways he bends the OS to his will.

Being a fusion of two AIs unable to cooperate, though, has the side effect of giving him a bad temper and lack of patience. Combining that with the rush of being in control of everything from GUI to the very kernel? Doesn't seem like a smart idea. Especially not when the laptop has experimental technology baked into it.

But hey, you've backed up before this, right...?

[Text box ends]

[Large lavender OK button]"

First Image ID end]

[Second Image ID:

Digital artwork of The User - a human with a gray-green skin, dark green hair with a white t-shirt, track suit shorts and green socks - standing next to a large program window titled: "User information". They are standing with a laptop bearing the CaelOS logo on its back, and scratching their head, looking a little nervous.

The text in the window reads:

"Base info:

Name: Urs Norma; Pronunciation: OO-rs NOR-mah; Age: 25

Pronouns:

[Checked tick box] He/him [Checked tick box] She/her [Checked tick box] They/them

Other: Any/All

Personality profile:

[Following text in a large text box:]

Young adult figuring out... being an adult.

After hastily finding a used tech store, they found a replacement for their busted laptop. As it turns out, the machine hosts an OS that never saw the light of day, featuring experimental technology. At least, it's compatible with most software they need...

Despite the world being cruel and unforgiving, the spark of optimism remains bright. Just like the AI the laptop hides, all they can do is perpetually learn from their mistakes, and maybe even relay some of that knowledge to the little virtual assistants they find themself talking to every day.

[Text box ends]

[Large green OK button]"

Second Image ID end]

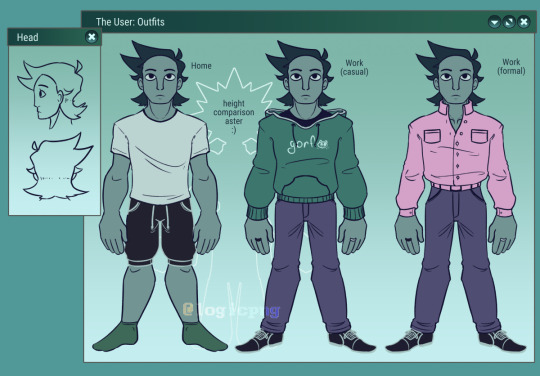

[Third Image ID:

Reference image of Urs Norma - an androgynous person with gray-green skin and dark green hair. A large program window titled "The User: Outfits" shows them standing neutrally, facing the camera, in three different outfits:

Home: Plain white shirt, track suit shorts, green socks.

Work (casual): Green hoodie that says "gorf" with a cat face on it in white, gray-purple pants, and black dress shoes. Left and right hand feature black and white rings on their respective middle fingers.

Work (formal): Pink dress shirt, slightly unbuttoned at the top, same pants, same dress shoes, and same rings.

Behind them is an outline of Aster, that has text in it saying "height comparison aster :)". At the top of their rays, they're noticeably shorter than Urs.

A window titled "Head", slightly overlapping the large window, shows lineart of the user's head in profile and from the back.

Third Image ID end]

#original#oc#original character#object head oc#object head#ai oc#aster#CaelOS#urs norma is a silly name. but it fits the star theme thing and i think i am growing to Like it#aldebaran (aster)#urs novak

196 notes


Note

I need to take a work trip to Germany, Leipzig to be precise. Should be a nice change from my NYC life.

I guess, your suitcase won't make it to Frankfurt... Then I guess I have to organize a replacement. Damn Airlines!

The only thing I can offer you so spontaneously is an old army backpack from GDR stocks, covered with graffiti tags, stickers and patches. Pretty heavy... And maybe not necessarily suitable for your classic suit… So, take your rucksack and head to the airport train station. Your train to Leipzig will depart in 20 minutes.

Shit, Frankfurt airport is bigger than expected. When you arrive, you think you have missed your train. But luckily, the train is delayed by 15 minutes. Enough time to relax. And for a smoke. You search the side pockets of the backpack. No cigarettes. But tobacco, cigarette paper. And weed. Shit, that could have ended badly at customs...

Ahh, smoking this feels great. I really needed to decompress a bit after this whole travel shitshow. Don't take offense, but a middle-aged man in a conservative suit and a classic haircut smoking weed with an army backpack on the platform of the airport station looks a bit special... You have to admit that, too, when you see your reflection in the window panes of the high-speed train rushing in.

No one had told you that you had better have made a seat reservation. The train is packed. Getting a seat is out of the question. With a little luck, you will still get a seat in the dining car. You order a beer (what else in Germany) and check the contents of your backpack. On top of it lies a hat. It looks funny, you put it on. Otherwise, the backpack is not necessarily neatly packed. Everything is stuffed in more like this. There's a MacBook... You open it. And of course you know the password. Feels perfectly normal to open it. As normal as your pierced earlobes feel.

It is a low-coding platform open to any Big Data AI application. You scroll through the application. Sure, the prototype of an app for digitizing queues in doctors' offices. You open the library of user stories and start developing the app further. A few hours ago, you had no idea about software development.

It's 9:00 p.m. when you look out the window. Gotha train station. Wherever that may be. You are looking at your reflection. Let's see what the others think of the fact that you have let the beard grow out...

The train is half empty by now. You have not even noticed how it has emptied. It's still a good hour to Leipzig. You close the computer. That's it for today. You order another beer and the vegan curry. Actually, you're also in desperate need of a joint. But of course you can't smoke anywhere on this train.

But you take tobacco, weed, cigarette paper and your cigarette case, which you inherited from your grandfather. And while you're waiting for the food, you roll a few joints on reserve. It will be after 11:00 p.m. by the time you arrive at your shared apartment. But you assume that you will sit together until 01:00 or 02:00. Your roommates are all rather night owls....

You don't notice that you're wearing high-laced DocMarten's boots instead of welted penny loafers as you step off the train. You also don't notice that your hair has grown considerably longer and falls tousled under your hat into your forehead... You pause for a moment as you see the tattoos on the back of your hand as you light up a joint to tide you over until the bus leaves. And after asking the bus driver for a ticket to Connewitz, you wonder if you actually just spoke German with quite an American accent.

The elevator in your house is of course defective again. Old building from 1873, last renovated in 1980 or so. That was long before the fall of the Wall in the GDR. But the rent is cheap. And the atmosphere is energetic and creative. When you met Kevin, Lukas and Emma at university five years ago, you were immediately on the same wavelength. Even though you didn't speak a word of German back then. You would never have thought that a semester as an exchange student would turn into a lasting collaboration. The fact that you found an apartment together where you could work on your startup at the same time was a real stroke of luck.

Upstairs in the apartment, Kevin already opens the door for you. As if he had been waiting for you.

„Sieht heute gut aus”, you say with your strange American accent.

Kevin hugs you and answers „Dude, it's good to have you back! We have missed you! Tell me, do you have new tattoos? Looks hot! And did you bring weed from Amsterdam? Our dealer is on vacation... Shitty situation!“

“Of course, i’d never leave you without”, I say, opening up the cigarette case and offering you one of the hand-rolled contents.

Kevin grins. „What do you say we smoke the first one not at the kitchen table but on your bed? I missed you, stud!“

“I’m so tired after this trip, so the bed sounds just right.”

There is nothing left of your suit right now. Yes, you are still from NYC. But you weren't a lawyer then. You studied computer science. And that was a long time ago. Now you are a Leipziger by heart

You both lie on the bed. You take a deep drag. And blow the smoke into Kevin’s mouth with a deep French kiss. The bulge in your skinny jeans looks painful. “Oh man, Kevin, I need some relief!” you growl.

It doesn't take long and we both have the tank tops off. You discover Kevins new nipple piercings. And can't stop playing with them. And Kevins bulge starts to hurt too.

“Man, let me provide some relief”, he says. And open your jeans. Your boner jumps out of your boxers like a jack-in-the-box.

Those new piercings… You just can’t help yourself… You’ve gotta feel them in my mouth! “Are they sensitive? Does it still hurt?” Kevin starts breathing more heavily. “What are you waiting for you prude Yank! They've been waiting for you for two weeks now!” You take a deep drag and blow the smoke over Kevins chest, which you caress with your tongue. Kevin moans “Fuck! You're doing so well! Sure it hurts. It's supposed to. You make me so fucking horny with your tongue! I love your tunnels on the earlobes!. I can not stop playing with them with my tongue.”

Dude, your dick is producing precum like a broken faucet. Kevin starts to massage it into your dick! You take one last drag from the joint, push the butt into the ashtray and blow the smoke over Kevins boner.

While Kevin rubs your hard dick, You begin licking his uncut cock. Damn man, these uncut European cocks will never not surprise you! Oh man, you love how it feels on your tongue.

Kevin doesn't stop breathing heavily, but still has to grin. “Fuck, admit it, you certainly didn't just talk about user interfaces with Milan and Sem in Amsterdam. You did practice your tongue game. Fuck, you know how to bring someone to ecstasy with the tip of your tongue!”

Oh man, Kevins precum just takes so good. You can’t get enough of it. Kevin reads your thoughts. “I want to lick your precum too. Let's make a 69! I need to suck your powerful circumcised cock.”

Yes, please!, you think in ecstasy. You just love how his balls feel in my mouth. And Kevin has fun to. You must have been sweating like a dog on the trip. Your balls are salty, your cock is deliciously cheesy. “Fuck, I can not tell you how I missed you.” Kevin moans.

He always feels so good, just keep going please, you think. His cock is so hard. His precum is spectacular. It’s like you’re in sync — in and out, in and out, in and out. “Fuck, your balls are so huge”, Kevin grunts. “I didn't jerk off all the time you ve been away. My balls are bursting”.

You both are perfectly synchron. Like one organism. “Please cum at the exact moment that I also cum. I want to make this old house shake.”, you think.You can’t wait to make you explode. Kevins moans “I can't take it much longer. Fuck, you are a master with your tongue. Fuck... Oh yeah... Yes! Fuuuuuuuck!”

Oh god! That was heavy. You both really try. But that was too much. Boy, what a load you both shot! Kevins cum is so thick! So potent! You ’ve got my whole mouth full, not able to swallow everything at once. You both exchange a deep French kiss. The cum runs from the corners of your mouths down our cheeks and necks. Kevin licks the cum traces from your skin. And you his. One last kiss, you pull up our pants again. And go to the kitchen with a joint. Lukas and Emma grin. The whole house could listen to you having sex.

“Incredible, as always, Kevin” You tell him, as you pass him the joint. And as if nothing had happened, you ask Emma if she has any new user stories for your app.

129 notes


Text

“I’m looking at a picture of my naked body, leaning against a hotel balcony in Thailand. My denim bikini has been replaced with exposed, pale pink nipples – and a smooth, hairless crotch. I zoom in on the image, attempting to gauge what, if anything, could reveal the truth behind it. There’s the slight pixelation around part of my waist, but that could be easily fixed with amateur Photoshopping. And that’s all.

Although the image isn’t exactly what I see staring back at me in the mirror in real life, it’s not a million miles away either. And hauntingly, it would take just two clicks of a button for someone to attach it to an email, post it on Twitter or mass distribute it to all of my contacts. Or upload it onto a porn site, leaving me spending the rest of my life fearful that every new person I meet has seen me naked. Except they wouldn’t have. Not really. Because this image, despite looking realistic, is a fake. And all it took to create was an easily discovered automated bot, a standard holiday snap and £5.

This image is a deepfake – and part of a rapidly growing market. Basically, AI technology (which is getting more accessible by the day) can take any image and morph it into something else. Remember the alternative ‘Queen’s Christmas message’ broadcast on Channel 4, that saw ‘Her Majesty’ perform a stunning TikTok dance? A deepfake. Those eerily realistic videos of ‘Tom Cruise’ that went viral last February? Deepfakes. That ‘gender swap’ app we all downloaded for a week during lockdown? You’ve guessed it: a low-fi form of deepfaking.

Yet, despite their prevalence, the term ‘deepfake’ (and its murky underworld) is still relatively unknown. Only 39% of Cosmopolitan readers said they knew the word ‘deepfake’ during our research (it’s derived from a combination of ‘deep learning’ – the type of AI programming used – and ‘fake’). Explained crudely, the tech behind deepfakes, Generative Adversarial Networks (GANs), is a two-part model: there’s a generator (which creates the content after studying similar images, audio, or videos) and the discriminator (which checks if the new content passes as legit). Think of it as a teenager forging a fake ID and trying to get it by a bouncer; if rejected, the harder the teen works on the forgery. GANs have been praised for making incredible developments in film, healthcare and technology (driverless cars rely on it) – but sadly, in reality it’s more likely to be used for bad than good.

Research conducted in 2018 by fraud detection company Sensity AI found that over 90% of all deepfakes online are non-consensual pornographic clips targeting women – and predicted that the number would double every six months. Fast forward four years and that prophecy has come true and then some. There are over 57 million hits for ‘deepfake porn’ on Google alone [at the time of writing]. Search interest has increased 31% in the past year and shows no signs of slowing. Does this mean we’ve lost control already? And, if so, what can be done to stop it?

WHO’S THE TARGET?

Five years ago, in late 2017, something insidious was brewing in the darker depths of popular chatrooms. Reddit users began violating celebrities on a mass scale, by using deepfake software to blend run-of-the-mill red-carpet images or social media posts into pornography. Users would share their methods for making the sexual material, they’d take requests (justifying abusing public figures as being ‘better than wanking off to their real leaked nudes’) and would signpost one another to new uploads. This novel stream of porn delighted that particular corner of the internet, as it marvelled at just how realistic the videos were (thanks to there being a plethora of media of their chosen celebrity available for the software to study).

That was until internet bosses, from Reddit to Twitter to Pornhub, came together and banned deepfakes in February 2018, vowing to quickly remove any that might sneak through the net and make it onto their sites – largely because (valid) concerns had been raised that politically motivated deepfake videos were also doing the rounds. Clips of politicians apparently urging violence, or ‘saying’ things that could harm their prospects, had been red flagged. Despite deepfake porn outnumbering videos of political figures by the millions, clamping down on that aspect of the tech was merely a happy by-product.

But it wasn’t enough; threads were renamed, creators migrated to different parts of the internet and influencers were increasingly targeted alongside A-listers. Quickly, the number of followers these women needed to be deemed ‘fair game’ dropped, too.

Fast forward to today, and a leading site specifically created to house deepfake celebrity porn sees over 13 million hits every month (that’s more than double the population of Scotland). It has performative rules displayed claiming to not allow requests for ‘normal’ people to be deepfaked, but the chatrooms are still full of guidance on how to DIY the tech yourself and people taking custom requests. Disturbingly, the most commonly deepfaked celebrities are ones who all found fame at a young age which begs another stomach-twisting question here: when talking about deepfakes, are we also talking about the creation of child pornography?

It was through chatrooms like this, that I discovered the £5 bot that created the scarily realistic nude of myself. You can send a photograph of anyone, ideally in a bikini or underwear, and it’ll ‘nudify’ it in minutes. The freebie version of the bot is not all that realistic. Nipples appear on arms, lines wobble. But the paid for version is often uncomfortably accurate. The bot has been so well trained to strip down the female body that when I sent across a photo of my boyfriend (with his consent), it superimposed an unnervingly realistic vulva.

But how easy is it to go a step further? And how blurred are the ethics when it comes to ‘celebrities vs normal people’ (both of which are a violation)? In a bid to find out, I went undercover online, posing as a man looking to “have a girl from work deepfaked into some porn”. In no time at all I meet BuggedBunny*, a custom deepfake porn creator who advertises his services on various chatroom threads – and who explicitly tells me he prefers making videos using ‘real’ women.

When I ask for proof of his skills, he sends me a photo of a woman in her mid-twenties. She has chocolate-brown hair, shy eyes and in the image, is clearly doing bridesmaid duties. BuggedBunny then tells me he edited this picture into two pornographic videos.

He emails me a link to the videos via Dropbox: in one The Bridesmaid is seemingly (albeit with glitches) being gang-banged, in another ‘she’ is performing oral sex. Although you can tell the videos are falsified, it’s startling to see what can be created from just one easily obtained image. When BuggedBunny requests I send images of the girl I want him to deepfake – I respond with clothed photos of myself and he immediately replies: “Damn, I’d facial her haha!” (ick) and asks for a one-off payment of $45. In exchange, he promises to make as many photos and videos as I like. He even asks what porn I’d prefer. When I reply, “Can we get her being done from behind?” he says, “I’ve got tonnes of videos we can use for that, I got you man.”

I think about The Bridesmaid, wondering if she has any idea that somebody wanted to see her edited into pornographic scenes. Is it better to be ignorant? Was it done to humiliate her, for blackmailing purposes, or for plain sexual gratification? And what about the adult performers in the original video, have they got any idea their work is being misappropriated in this way?

It appears these men (some of whom may just be teenagers: when I queried BuggedBunny about the app he wanted me to transfer money via, he said, “It’s legit! My dad uses it all the time”) – those creating and requesting deepfake porn – live in an online world where their actions have no real-world consequences. But they do. How can we get them to see that?

REAL-LIFE FAKE PORN

One quiet winter afternoon, while her son was at nursery, 36-year-old Helen Mort, a poet and writer from South Yorkshire, was surprised when the doorbell rang. It was the middle of a lockdown; she wasn’t expecting visitors or parcels. When Helen opened the door, there stood a male acquaintance – looking worried. “I thought someone had died,” she explains. But what came next was news she could never have anticipated. He asked to come in.

“I was on a porn website earlier and I saw… pictures of you on there,” the man said solemnly, as they sat down. “And it looks as though they’ve been online for years. Your name is listed, too.”

Initially, she was confused; the words ‘revenge porn’ (when naked pictures or videos are shared without consent) sprang to mind. But Helen had never taken a naked photo before, let alone sent one to another person who’d be callous enough to leak it. So, surely, there was no possible way it could be her?

“That was the day I learned what a ‘deepfake’ is,” Helen tells me. One of her misappropriated images had been taken while she was pregnant. In another, somebody had even added her tattoo to the body her face had been grafted onto.

Despite the images being fake, that didn’t lessen the profound impact their existence had on Helen’s life. “Your initial response is of shame and fear. I didn't want to leave the house. I remember walking down the street, not able to meet anyone’s eyes, convinced everyone had seen it. You feel very, very exposed. The anger hadn't kicked in yet.”

Nobody was ever caught. Helen was left to wrestle with the aftereffects alone. “I retreated into myself for months. I’m still on a higher dose of antidepressants than I was before it all happened.” After reporting what had happened to the police, who were initially supportive, Helen’s case was dropped. The anonymous person who created the deepfake porn had never messaged her directly, removing any possible grounds for harassment or intention to cause distress.

Eventually she found power in writing a poem detailing her experience and starting a petition calling for reformed laws around image-based abuse; it’s incredibly difficult to prosecute someone for deepfaking on a sexual assault basis (even though that’s what it is: a digital sexual assault). You’re more likely to see success with a claim for defamation or infringement of privacy, or image rights.

Unlike Helen, in one rare case 32-year-old Dina Mouhandes from Brighton was able to unearth the man who uploaded doctored images of her onto a porn site back in 2015. “Some were obviously fake, showing me with gigantic breasts and a stuck-on head, others could’ve been mistaken as real. Either way, it was humiliating,” she reflects. “And horrible, you wonder why someone would do something like that to you? Even if they’re not real photos, or realistic, it’s about making somebody feel uncomfortable. It’s invasive.”

Dina, like Helen, was alerted to what had happened by a friend who’d been watching porn. Initially, she says she laughed, as some images were so poorly edited. “But then I thought ‘What if somebody sees them and thinks I’ve agreed to having them made?’ My name was included on the site too.” Dina then looked at the profile of the person who’d uploaded them and realised an ex-colleague had been targeted too. “I figured out it was a guy we’d both worked with, I really didn’t want to believe it was him.”

In Dina’s case, the police took things seriously at first and visited the perpetrator in person, but later their communication dropped off – she has no idea if he was ever prosecuted, but is doubtful. The images were, at least, taken down. “Apparently he broke down and asked for help with his mental health,” Dina says. “I felt guilty about it, but knew I had to report what had happened. I still fear he could do it again and now that deepfake technology is so much more accessible, I worry it could happen to anyone.”

And that’s the crux of it. It could happen to any of us – and we likely wouldn’t even know about it, unless, like Dina and Helen, somebody stumbled across it and spoke out. Or, like 25-year-old Northern Irish politician Cara Hunter, who earlier this year was targeted in a similarly degrading sexual way. A pornographic video, in which an actor with similar hair, but whose face wasn’t shown, was distributed thousands of times – alongside real photos of Cara in a bikini – via WhatsApp. It all played out during the run-up to an election, so although Cara isn’t sure who started spreading the video and telling people it was her in it, it was presumably politically motivated.

“It’s tactics like this, and deepfake porn, that could scare the best and brightest women from coming into the field,” she says, adding that telling her dad what had happened was one of the worst moments of her life. “I was even stopped in the street by men and asked for oral sex and received comments like ‘naughty girl’ on Instagram – then you click the profiles of the people who’ve posted, and they’ve got families, kids. It’s objectification and trying to humiliate you out of your position by using sexuality as a weapon. A reputation can be ruined instantly.”

Cara adds that the worst thing is ‘everyone has a phone’ and yet laws dictate that while a person can’t harm you in public, they can legally ‘try to ruin your life online’. “A lie can get halfway around the world before the truth has even got its shoes on.”

Is it any wonder, then, that 83% of Cosmopolitan readers have said deepfake porn worries them, with 42% adding that they’re now rethinking what they post on social media? But this can’t be the solution - that, once again, women are finding themselves reworking their lives, in the hopes of stopping men from committing crimes.

Yet, we can’t just close our eyes and hope it all goes away either. The deepfake porn genie is well and truly out of the bottle (it’s also a symptom of a wider problem: Europol experts estimate that by 2026, 90% of all media we consume may be synthetically generated). Nearly one in every 20 Cosmopolitan readers said they, or someone they know, has been edited into a false sexual scenario. But what is the answer? It's hard for sites to monitor deepfakes – and even when images are promptly removed, there’s still every chance they’ve been screen grabbed and shared elsewhere.

When asked, Reddit told Cosmopolitan: "We have clear policies that prohibit sharing intimate or explicit media of a person created or posted without their permission. We will continue to remove content that violates our policies and take action against the users and communities that engage in this behaviour."

Speaking to leading deepfake expert, Henry Ajder, about how we can protect ourselves – and what needs to change – is eye-opening. “I’ve rarely seen male celebrities targeted and if they are, it’s usually by the gay community. I’d estimate tens of millions of women are deepfake porn victims at this stage.” He adds that sex, trust and technology are only set to become further intertwined, referencing the fact that virtual reality brothels now exist. “The best we can do is try to drive this type of behaviour into more obscure corners of the internet, to stop people – especially children and teenagers – from stumbling across it.”

Currently, UK law says that making deepfake porn isn’t an offence (although in Scotland distributing it may be seen as illegal, depending on intention), but companies are told to remove such material (if there’s an individual victim) when flagged, otherwise they may face a punishment from Ofcom. But the internet is a big place, and it’s virtually impossible to police. This year, the Online Safety Bill is being worked on by the Law Commission, who want deepfake porn recognised as a crime – but there’s a long way to go with a) getting that law legislated and b) ensuring it’s enforced.

Until then, we need a combination of investment and effort from tech companies to prevent and identify deepfakes, alongside those (hopefully future) tougher laws. But even that won’t wave a magic wand and fix everything. Despite spending hours online every day, as a society we still tend to think of ‘online’ and ‘offline’ as two separate worlds – but they aren’t. ‘Online’ our morals can run fast and loose, as crimes often go by unchecked, and while the ‘real world’ may have laws in place that, to some degree, do protect us, we still need a radical overhaul when it comes to how society views the female body.

Deepfake porn is a bitter nail in the coffin of equality and having control over your own image; even if you’ve never taken a nude in your life (which, by the way, you should be free to do without fear of it being leaked or hacked) you can still be targeted and have sexuality used against you. Isn’t it time we focus on truly Photoshopping out that narrative as a whole?”


527 notes


Text

Balancing Innovation and Integrity: Using AI to Enhance Your Writing

With advancements in AI and writing tools, writers can use technology to improve their craft. Many people use AI to write for them, and the results are often plagiarized, but it can be beneficial in other ways. From AI-driven grammar checkers and style enhancers to writing software that helps with plotting and character development, these tools can streamline the writing process and help you focus on creativity.

Additionally, writing software such as Scrivener and Plottr can assist with organizing your thoughts, plotting intricate storylines, and developing well-rounded characters. These tools can allow you to streamline the writing process while reducing the time spent on administrative tasks. It frees up more time for creative exploration and the ability to dig deeper into the plot.

However, it's important to use these tools ethically. While AI can assist with various aspects of writing, relying on it to create work entirely on your behalf undermines the authenticity and personal touch that define great writing. Misusing AI to generate content without your input can lead to work lacking originality and depth and may raise questions about authorship and integrity. Many use AI to cheat, plagiarize, and overall abuse the technology, undermining the authenticity and originality that define true creative work.

Incorporating technology into your writing practice should enhance your creative input, not replace it. These tools are valuable allies that can help you craft compelling narratives and engaging content, but the heart of your writing should always come from you. Use AI to augment your skills, not as a substitute for your creativity and effort.

By balancing the benefits of AI with responsible usage, you can leverage technology to become a more effective and inspired writer, while maintaining the authenticity and integrity of your work.

#writeblr#writers on tumblr#creative writing#writing#writerscommunity#writing blog#writers#tumblr writers#tumblr writing community#writing advice#writing community#writing ideas#writing inspiration#writing stuff#writers and poets#writing tips#ai writing#ai#artificial intelligence#ai generated#writer#artists on tumblr#art#artwork#original work#prose

Note

So, I am doing both asking something, and saying how I just think shit in your AU goes in general, bc brain bored and I’m in gym class, not giving a shit. All of it is positive, but, I’m an over thinker so—

Anyways, so, Caine has an alternate personality of sorts, the ‘evil’ or malware version of him, but why exactly hasn’t Caine and Able (C&A) fixed it yet? That is, though, a whole other topic to open up, as to why and what is actually happening in the original version with C&A. So, instead I will go off of the assumption that the company purposely trapped people and their souls here.

Any virus of any sort is human-made, for a specific reason and purpose. There are many ways malware can be spread, and it also depends what kind this is. Say, for example, that instead of being just generic malware (malicious software), it’s actually ransomware, where attackers steal or lock up data and the victims have to pay to get it back. I say this theoretically, because it is a random thought that came to mind. In a way, the players in general are being ransomed, taken over and not let out, but then that would pose the question of what the maker, or perhaps corrupted Caine, wants. Maybe it’s not Caine that wants something. Maybe someone wants something from C&A.

Say it is this; then perhaps that would explain the ‘help’ that Pomni and Zooble get, because I have read somewhere that in the corruption AU they sometimes find things around, things that could be helpful, things from past ‘players’. I know it’s probably just normal Caine trying to help where he can, since he can’t do much against the malware, but what if, instead, it was the company? I don’t know, since we don’t know much about the actual company story in the OG version, but I believe if C&A really wanted to drive people insane and steal their souls or something, they’d just find a way to do it right off the bat. Why wait for abstraction? What if this is somehow an attempt from the original company, with the small amount of access it has, to help the players in the game? Jax and Ragatha are both sort of on the edge, and I feel like if character data was stolen and held somewhere else, yet bits of it were still with the original people, the scattered code would have an effect like that, and then with others who are completely gone, there is no control over them at all.

…Anyways that was random and I just thought of that all on the spot just now since I got the ball rolling— ANYWAYS.

There is an almost infinite number of possibilities. Yes, Caine is an AI, but he can only go along with what has been set for him. It’s like how in the Character.AI app, if a character says something that doesn’t align with the developers’ policy (e.g. gore, NSFW content, etc.), it gets rid of the message and notifies the user about it, saying to try again or, if this is a big problem, to report it to the developers.

I might just be overthinking the technology, especially since, timeline-wise, if we’re still in the era of the technology shown in the original TADC pilot, the OG thing is probably taking place in the ’90s, from what everyone assumes, so I’ll assume this is the same time period. Caine does seem like a somewhat accurate AI for the time period: he stalls and goes back to the previous thing you said or asked if it’s something he isn’t designed to deal with, whereas an AI in this time might still answer the question, if a bit confused. I suppose the overall question is who gave Caine that sentience? As in, the other Caine. It’s probably just me looking at it from my negative point of view, but for some reason the corrupted Caine gives a more human vibe in the way he acts, with definite maliciousness toward the others. The only thing I can wonder is if this Caine already existed and just needed to be activated, like a prototype that went a bit off the deep end after being replaced and left as idle, undeleted code.

An AI would only have control of the physical bodies, I believe, so it would make sense that the virus starts as a physical part that just acts off of a player’s emotional state. Its one goal? To corrupt. I Have No Mouth, and I Must Scream, AM supercomputer vibes right there, and yes, Caine’s original character is based off of that, but he’s a more wacky version. What if, pretty much the exact opposite of what I was theorizing before, C&A did this on purpose? In a way. As in, Caine was originally a malicious entity meant to corrupt those who came there and take them over, putting them in eternal suffering like the last five remaining humans in the short horror story that AM the supercomputer originated from. Maybe Corrupted Caine is just more like AM in general. Perhaps he was just the base code and then got left behind, in the end hating humans for the way they are; hence he wants to torture all those in the circus and turn what little hope they have into extreme fear, almost turning the tides entirely from what his situation had been? I dunno.

Anyhow, I’m a coding person, per se, from a family of cyber people, and hence in a way I can almost understand a lot of what’s going on here. Abstractions from before stay the same, since they’ve already become ‘abstract’, unstable code and likely can’t be ‘abstracted’ again. I like how it shows the difference personality-wise: the abstractions would stay where they are, whereas the ‘abstracted’ or corrupted above the surface are either fighting, in a constant tug of war, or have completely given up. So, what I’m getting at for the cyber part is, would there be any way for them to, in game, have any sort of.. protection? Like a failsafe, or something added recently to the game, because I’m thinking from a cybersecurity perspective. I can see how the players would get infected, since really all someone has to do to infect or hack into something is find a weak link, like hacking into the weakest device in a network and then using that to get through to the others. Is there any type of antivirus thing, or is that just not possible with how the circus is right now? The players are people, after all; they can’t just have their minds hacked into, unless, that is, they’re entirely overwritten by the virus, but that would be for the already infected.

…That’s all the random shit I thought of in the last like three minutes and typed here randomly, sorry for the text wall, lol. Probably like none of this makes sense, but I figured I might as well theory rant for a bit.

I love this analysis

C&A is an abandoned company, long forgotten until it opened back up, and that’s when Pomni entered the game-

The virus is a WHOLE separate entity feeding on the existing code that describes the characters, and every time a character is added, that code is added to the system, constantly changing and morphing with the character’s actions

But the moment they start to abstract, that code is broken, which gives the virus access to invade the character, corrupting the character’s code into a scrambled mess of bits that the virus puts into a new form

But just because the code is broken doesn’t mean it’s completely useless; some code is still protected, and we see what that looks like with Jax and Ragatha

Text

“A recent Goldman Sachs study found that generative AI tools could, in fact, impact 300 million full-time jobs worldwide, which could lead to a ‘significant disruption’ in the job market.”

“Insider talked to experts and conducted research to compile a list of jobs that are at highest-risk for replacement by AI.”

Tech jobs (Coders, computer programmers, software engineers, data analysts)

Media jobs (advertising, content creation, technical writing, journalism)

Legal industry jobs (paralegals, legal assistants)

Market research analysts

Teachers

Finance jobs (Financial analysts, personal financial advisors)

Traders (stock markets)

Graphic designers

Accountants

Customer service agents

“‘We have to think about these things as productivity enhancing tools, as opposed to complete replacements,’ Anu Madgavkar, a partner at the McKinsey Global Institute, said.”

What will be eliminated from all of these industries is the ENTRY LEVEL JOB. You know, the jobs where newcomers gain valuable real-world experience and build their resumes? The jobs where you’re supposed to get your 1-2 years of experience before moving up to the big leagues (which remain inaccessible to applicants without the necessary experience, which they can no longer get, because so-called “low level” tasks will be completed by AI).

There’s more...

Wendy’s to test AI chatbot that takes your drive-thru order

“Wendy’s is not entirely a pioneer in this arena. Last year, McDonald’s opened a fully automated restaurant in Fort Worth, Texas, and deployed more AI-operated drive-thrus around the country.”

BT to cut 55,000 jobs with up to a fifth replaced by AI

“Chief executive Philip Jansen said ‘generative AI’ tools such as ChatGPT - which can write essays, scripts, poems, and solve computer coding in a human-like way - ‘gives us confidence we can go even further’.”

Why promoting AI is actually hurting accounting

“Accounting firms have bought into the AI hype and slowed their investment in personnel, believing they can rely more on machines and less on people.”

Will AI Replace Software Engineers?

“The truth is that AI is unlikely to replace high-value software engineers who build complex and innovative software. However, it could replace some low-value developers who build simple and repetitive software.”

#fuck AI#regulate AI#AI must be regulated#because corporations can't be trusted#because they are driven by greed#because when they say 'increased productivity' what they actually mean is increased profits - for the execs and shareholders not the workers#because when they say that AI should be used as a tool to support workers - what they really mean is eliminate entry level jobs#WGA strike 2023#i stand with the WGA

Text

Opting out won't be enough (How to protect your art from Midjourney and OpenAI)

Tumblr may trust OpenAI and Midjourney to honour their end of the opt out agreement, but their past and continued violations of user consent make it clear that they can't be trusted.

I have replaced all the art I posted on Tumblr with versions I filtered through the Glaze Project's software, and I recommend that all of you reading do the same.

Both Glaze and Nightshade are on their website https://glaze.cs.uchicago.edu/

Both are important for making images difficult for AI algorithms to read. The result may leave visible artefacts on the image, but it is the only way for the protection to work in a way that can't be circumvented by copy-pasting or screen-grabbing an image.

Now, while Glaze and Nightshade would be enough to protect an individual's art, it would take many users doing the same for GenAI companies to truly face the consequences of breaking user consent agreements. So I highly encourage you not just to do this, but to spread and reblog this message to let as many other users as possible know to do the same. We can fight GenAI if we do it together!

Also consider following the Glaze Project on Twitter https://twitter.com/TheGlazeProject for future developments in protection against art theft.
