#robot.txt generator


GenAI is stealing from itself.

Fine, it's DALL-E... but here's the twist: one of the influencing images seems to be this one, which has a lot of matching features. AI stealing, fine.

It's likely this one (I found it via reverse image search). But something doesn't feel right about this one, so I looked it up...

It's Midjourney. lol

So AI is stealing from AI.

The thing is, as people wise up and start adding these lines to robots.txt

GenAI has no choice but to steal from GenAI, which (oh, the poor developers are crying big tears) is making GenAI worse. Oh, the poor developers, crying that they can no longer steal images because of Nightshade:

https://nightshade.cs.uchicago.edu/whatis.html and Glaze:

To which I say, let AI steal from AI so it gets shittier.
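The robots.txt lines mentioned above were in an image that didn't survive here, so I can't show the exact ones. As a rough sketch only, assuming the goal is to opt out of AI training crawlers, the rules below use the user-agent tokens `GPTBot`, `CCBot`, and `Google-Extended` (this particular list is an assumption, not the lines from the original post). Python's standard `urllib.robotparser` can check what such rules would permit:

```python
from urllib.robotparser import RobotFileParser

# Assumed example rules; NOT the lines from the original post.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # example.com and the image path are placeholders.
    for agent in ("GPTBot", "CCBot", "Google-Extended", "SomeBrowser"):
        print(agent, is_allowed(ROBOTS_TXT, agent, "https://example.com/art/piece.png"))
```

Keep in mind that robots.txt is a request, not an enforcement mechanism: a crawler that ignores it can still fetch the files.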

Remember, when it comes to spotting gen AI, the devil is mostly in the details that the lazy-ass creators overlook.

This video goes over some of the ways to spot it (not mine). https://www.youtube.com/watch?v=NsM7nqvDNJI

So join us in poisoning the well and making sure the only thing Gen AI can steal is from itself.

Apparently stealing from one person with a name is not fine and is evil. But stealing from 2+ people is less evil as long as you don't know their names, or you do, because you went explicitly after people who hate AI like the Ghibli studio and you don't want to stare into Miyazaki's face.

Because consent isn't in your vocabulary? This is the justification that genAI bros give, including things like drawing is sooooo impossible to learn.

And if you don't understand consent, then !@#$ I don't think anyone wants to date you either. But I digress. The point is that we should push AI to learn from AI so that Gen AI becomes sooo crappy the tech bros need to fold and actually do what they originally planned with AI, which is basically teach it like it was a human child. And maybe do useful things like, Iunno, free more time for creativity, rather than doing creativity.

#fuck ai everything #ai is evil #fight ai #anti genai #fuck genai #fuck generative ai #ai is stealing from itself #anti-ai tools


Best Roofing Contractors SEO Services | Grow Your Roofing Business Online

In today’s hyper-competitive digital world, having a website for your roofing company is just the beginning. To truly stand out and grow your business, you need to invest in the Best Roofing Contractors SEO services. Whether you're an established roofer or just getting started, SEO can drive high-quality leads, boost your online visibility, and help dominate your local market.

Let’s break down how roofing contractors can leverage SEO effectively—and why it's one of the smartest marketing moves you can make.

Why SEO Matters for Roofing Contractors

Imagine this: a homeowner in your area is dealing with a leaking roof. What’s the first thing they’ll do? Most likely, they’ll open Google and type something like “roof repair near me” or “best roofing contractor in [city].” If your business isn’t showing up on the first page, chances are you’re missing out on that lead—and many more like it.

Search Engine Optimization (SEO) helps your roofing website rank higher in search results, making it easier for potential customers to find you exactly when they need your services.

What Makes the Best Roofing Contractors SEO Services?

To rise to the top of local search results, your roofing company needs a comprehensive SEO strategy that includes:

1. Local SEO Optimization

Local SEO is the backbone of success for roofing businesses. Google prioritizes local results for service-based searches.

Key components:

Google Business Profile optimization

Local citations (e.g., Yelp, Angie’s List, BBB)

Positive customer reviews

Localized content creation

2. Keyword Research & On-Page Optimization

Effective SEO starts with knowing what your audience is searching for. The Best Roofing Contractors SEO providers conduct in-depth keyword research to target phrases like:

Roof repair in [city]

Best roofing contractor

Emergency roof services

These keywords are then integrated naturally into:

Meta titles & descriptions

Headings (H1, H2, H3)

Image alt-text

Page content

3. Technical SEO for Site Performance

Google values user experience. If your site is slow, cluttered, or not mobile-friendly, your rankings are likely to suffer.

Important technical factors:

Fast loading speeds

Mobile responsiveness

HTTPS security

Clean URL structure

XML sitemaps and robots.txt optimization
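Of the technical factors above, the XML sitemap is the easiest to show concretely. Here is a minimal sketch, assuming a small roofing site with a handful of pages; the `example.com` URLs and dates are placeholders, and most site builders or SEO plugins would generate this file for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = loc
        ET.SubElement(url_el, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages for illustration only.
    pages = [
        ("https://example.com/", "2025-01-15"),
        ("https://example.com/roof-repair", "2025-01-10"),
        ("https://example.com/emergency-roof-services", "2025-01-05"),
    ]
    print(build_sitemap(pages))
```

The resulting file is typically saved as `sitemap.xml` at the site root and referenced from robots.txt with a `Sitemap:` line.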

4. Content Marketing and Blogging

Consistently publishing valuable content positions your brand as an authority. Topics might include:

“How to know if your roof needs replacing”

“Benefits of asphalt shingles vs. metal roofs”

“DIY roof maintenance tips”

Not only does content engage your audience, but it also helps you rank for long-tail keywords.

5. Backlink Building

Backlinks are like votes of confidence from other websites. The more high-quality links pointing to your site, the more authority your domain earns.

Strategies include:

Submitting to industry directories

Guest posting on reputable blogs

Getting listed in local news articles

According to Search Engine Journal, backlinks remain one of the top 3 Google ranking factors.

Benefits of Hiring the Best Roofing Contractors SEO Services

Here’s what top-tier SEO can do for your roofing business:

✅ Get Found by Local Customers

Show up in local search results exactly when people are looking for your services.

✅ Increase Website Traffic

Targeted SEO brings people who are actively seeking roofing services to your site.

✅ Generate More Leads

Turn visitors into paying customers with optimized landing pages, calls-to-action, and lead capture forms.

✅ Stand Out from Competitors

Most roofing companies still underutilize SEO. Doing it right gives you a serious edge.

✅ Long-Term ROI

Unlike paid ads that stop when your budget runs out, SEO continues to drive results over time.

Signs You Need Roofing SEO Services

If you’re experiencing any of the following, it’s time to invest in professional SEO:

Low website traffic

Poor local search rankings

Few or no online reviews

Inconsistent leads from your site

Competitors outranking you on Google

Tips for Choosing the Best Roofing Contractors SEO Agency

Don’t fall for cookie-cutter SEO services. Look for an agency that:

Specializes in roofing or home services SEO

Offers a clear strategy with measurable goals

Provides transparent reporting

Has proven results and case studies

Follows Google’s best practices (no black-hat tactics!)

Need help evaluating agencies? The U.S. Small Business Administration (SBA) offers tips on selecting marketing service providers that suit your business needs.

Real-World Example: SEO Success for a Local Roofer

Let’s take a quick case study.

Company: StormSafe Roofing, Dallas, TX

Challenge: Low local visibility and few online leads

Solution: Local SEO overhaul, content creation, and link building

Results in 6 months:

200% increase in website traffic

Page 1 ranking for “roof repair Dallas”

3x more phone calls and quote requests

The takeaway? A smart SEO strategy delivers tangible business growth.

Conclusion: Grow Your Roofing Business Online

Your competitors are already investing in digital marketing. To stay ahead, you need more than just a website—you need visibility, trust, and traffic. With the Best Roofing Contractors SEO services, your business can climb the search rankings, attract quality leads, and boost revenue month after month.

If you're ready to take your roofing business to the next level, partner with an experienced SEO agency that understands the roofing industry and can deliver real results.

Call to Action

Ready to dominate local search results? Contact a specialized Roofing SEO expert today and start getting the leads your business deserves.

FAQ: Best Roofing Contractors SEO

1. What is roofing contractor SEO? Roofing contractor SEO is the process of optimizing your website and online presence to attract more customers through search engines like Google.

2. How long does it take to see SEO results for my roofing business? Typically, noticeable improvements appear within 3–6 months, but long-term results and ROI build over time.

3. Do I need SEO if I already run Google Ads? Yes! SEO provides long-term, cost-effective visibility, while ads stop when your budget ends. Combining both offers the best of both worlds.

4. How much do the best roofing contractors SEO services cost? Pricing varies based on your goals and market, but expect to invest between $500–$3000 per month for professional SEO services.

5. Can I do SEO for my roofing business on my own? You can handle some basics, but for competitive results, it’s best to work with professionals who specialize in local SEO and roofing marketing.

For more info:-

Best Roofing Contractors SEO

Roofing SEO


Why Hiring a Web Designer in Bangalore Can Elevate Your Brand in 2025

In the digital-first economy, your website often makes the first impression—and in a competitive tech hub like Bangalore, that first impression can either fuel business growth or push potential clients away. A professional, well-crafted website is no longer optional; it’s a necessity. If you're searching for a trusted web designer in Bangalore, Hello Errors is a name you can count on.

From intuitive layouts to SEO-integrated builds, Hello Errors delivers websites that are not only beautiful but also functional, scalable, and built for performance.

Bangalore: A Hotspot for Digital-Driven Brands

Bangalore has earned its reputation as the Silicon Valley of India—a city teeming with startups, tech innovators, and fast-growing enterprises. With this digital boom comes intense competition. Businesses need a web presence that does more than just exist—it needs to impress, engage, and convert.

That’s why working with a skilled web designer in Bangalore like Hello Errors can be the differentiating factor for your brand’s success.

What Makes a Web Designer in Bangalore Truly Exceptional?

It’s not just about pretty pages and polished animations. A top-tier web designer brings together multiple dimensions of digital strategy:

🎯 Strategic Planning

A smart website starts with user research, competitor analysis, and a deep understanding of your business goals. At Hello Errors, every project begins with a strategic blueprint.

🖌️ Brand-Centric Visuals

Every brand has a story. Our team builds visuals that match your tone, industry, and audience—whether you’re a quirky D2C brand or a formal fintech company.

📲 Mobile-First Design

Over 70% of traffic in India comes from mobile devices. Our mobile-responsive designs ensure your website looks and functions flawlessly on smartphones and tablets.

🚀 Fast Load Times

No one sticks around for a slow-loading site. We optimize every image, script, and CSS file to ensure lightning-fast performance.

Why Hello Errors is the Preferred Web Designer in Bangalore

If you're wondering what makes Hello Errors different from other web design companies in Bangalore, here are key reasons:

1. Cross-Platform Expertise

Whether it’s a responsive corporate website, a progressive web app, or an eCommerce portal—Hello Errors delivers consistent performance across platforms and devices.

2. Growth-Focused Design

We build websites that serve business goals—be it lead generation, user engagement, or sales conversion. Our CTAs, UX flow, and messaging are built to drive results.

3. AI-Integrated Interfaces

As a forward-thinking web designer in Bangalore, Hello Errors incorporates AI elements like chatbots, recommendation engines, and intelligent search bars into modern website builds.

4. SEO Comes Built-In

We don’t treat SEO as an afterthought. Every Hello Errors project includes:

Meta tags and schema markup

Optimized headings and structure

Image compression and alt tags

Clean URL structuring

Sitemap and robots.txt integration

This makes it easier for Google to crawl and rank your site from day one.

Our Creative Stack: Beyond Just Web Design

Hello Errors isn’t just a web designer in Bangalore—we’re your end-to-end digital growth partner. Here’s a glimpse of our full stack of services:

🧩 Web Development

Built on frameworks like React, Angular, Laravel, and WordPress, our sites are modern, secure, and scalable.

📱 App Development

From native Android/iOS apps to hybrid Flutter builds, we help brands create seamless mobile experiences.

🎯 Search Engine Optimization

We offer ongoing SEO support with keyword tracking, technical audits, link-building, and content strategy.

🤖 AI & ML Integration

Need predictive analytics, chatbots, or AI-powered recommendation tools? We’ve got you covered.

🎨 UI/UX Design

We map out complete design systems with wireframes, journey flows, and design components for web and mobile platforms.

Examples of Our Impact (Generalized Case Insights)

🚀 Startup Boost:

A SaaS startup gained 250% more qualified leads after Hello Errors redesigned their landing pages with stronger CTAs and SEO-focused content flow.

🛒 eCommerce Success:

A local Bangalore D2C fashion brand saw their bounce rate drop from 60% to 20% and cart conversions rise by 40% post redesign.

🏢 Enterprise Upgrade:

An IT services firm improved its Google rankings and domain authority with a new SEO-optimized website developed by Hello Errors.

These examples underscore our capability as a leading web designer in Bangalore who delivers measurable growth.

Key Trends We Integrate as a Web Designer in Bangalore

Staying ahead of trends helps us build sites that feel modern and future-ready. Some design-forward elements we include:

Dark Mode Compatibility

Glassmorphism and Neumorphism Designs

Interactive Scroll-Based Animations

Sticky Navigation and Floating CTAs

Voice Search Optimization

As a cutting-edge web designer in Bangalore, Hello Errors stays updated with global web trends and aligns them with local market needs.

How to Choose the Right Web Designer in Bangalore: Quick Checklist

Before hiring a web design partner, keep this checklist handy:

✅ Does the company offer end-to-end solutions (design + development + SEO)?

✅ Can they show real business impact from previous projects?

✅ Are their websites responsive, SEO-friendly, and fast-loading?

✅ Do they understand your business goals, not just technical specs?

✅ Do they offer long-term support or maintenance?

With Hello Errors, the answer to all the above is a confident yes.

Take the Next Step with Hello Errors

Whether you’re an emerging startup, an established brand, or a growing agency—your website is your most powerful digital asset. Partner with Hello Errors, the most trusted web designer in Bangalore, to create a platform that’s bold, smart, and built for tomorrow.

👉 Get in touch with us today for a free discovery session. Let’s build something extraordinary together.

#WebDesignerInBangalore #HelloErrors #WebDesignIndia #ResponsiveDesign #UIUXDesign #SEOFriendlyWebsite #DigitalBranding #BangaloreStartups #AIWebDesign #AppDevelopmentIndia #WebDevelopmentExperts


Digital Marketing Training in Coimbatore | Qtree Technologies


Best Digital Marketing Training Institute in Coimbatore. Digital marketing is growing at an increasing rate, and marketers face daunting new challenges regularly. The good news is that anyone can become a specialist in this field; all you need is the right training program. Qtree, a DM training institute in Coimbatore, familiarizes you with marketing methodologies and frameworks in practice. The course is designed to educate professionals from every field. Learn from the best DM training institute in Coimbatore and enhance your career options.

Key Features

Lifetime Access

Realtime Code Analysis

CloudLabs

24x7 Support

Money Back

Project Feedback

About Digital Marketing Training in Coimbatore

What is Digital Marketing?

A digital marketing course will enable you to understand and master Search Engine Optimization (SEO), Social Media Marketing (SMM), Pay Per Click Marketing (PPC), and Digital Media Marketing. At Qtree, which offers the best DM training courses in Coimbatore, you can learn through simple, effective, easy-to-implement modules.


What Will I Learn?

Search Engine Optimization

Social Media Marketing

Search Engine Marketing

Inbound Marketing

Email Marketing

Good knowledge of SEO, SEM, SMM, and SMO

Basics of WordPress and HTML

Our Course Details

Syllabus


MODULE 1: BASICS OF DIGITAL MARKETING

Introduction To Online Digital Marketing

Importance Of Digital Marketing

How does Internet Marketing work?

Traditional Vs. Digital Marketing

Significance Of Online Marketing In Real World

Increasing Visibility

Visitors’ Engagement

Bringing Targeted Traffic

Lead Generation

Converting Leads

Performance Evaluation

MODULE 2: ANALYSIS AND KEYWORD RESEARCH

Market Research

Keyword Research And Analysis

Types Of Keywords

Tools Used For Keyword Research

Localized Keyword Research

Competitor Website Analysis

Choosing Right Keywords To The Project

MODULE 3: SEARCH ENGINE OPTIMIZATION (SEO)

Introduction To Search Engine Optimization

How Do Search Engines Work?

SEO Fundamentals & Concepts

Understanding The SERP

Google Processing

MODULE 4: ON PAGE OPTIMIZATION

Domain Selection

Hosting Selection

Meta Data Optimization

URL Optimization

Internal Linking

301 Redirection

404 Error Pages

Canonical Implementation

H1, H2, H3 Tags Optimization

Image Optimization

Optimize SEO Content

Check Content With Copyscape

Landing Page Optimization

No-Follow And Do-Follow

Indexing And Caching

Creating XML Sitemap

Creating Robots.txt

SEO Tools And Online Software

MODULE 5: OFF PAGE OPTIMIZATION

Link Building Tips & Techniques

Difference Between White Hat And Black Hat SEO

Alexa Rank, Domain Authority, Backlinks

Do’s & Don’ts In Link Building

Link Acquisition Techniques

Directory Submission

Social Bookmarking Submission

Search Engine Submission

Web 2.0 Submission

Article Submission

Press Release Submission

Forum Submission

PPT Submission

PDF Submission

Classified Submission

Business Listing

Blog Commenting

MODULE 6: SEO UPDATES AND ANALYSIS

Google Panda, Penguin, Hummingbird Algorithm

How To Recover Your Website From Google Penalties

Webmaster And Analytics Tools

Competitor Website Analysis And Backlinks Building

SEO Tools For Website Analysis And Optimization

Backlinks Tracking, Monitoring, And Reporting

MODULE 7: LOCAL BUSINESS & LISTING

Local Business Listing – Optimizing Your Local Search Listings To Bring New Customers Right To Your Business

Creating Local Listing In Search Engine

Google Places Setup (Including Images, Videos, Map Etc)

Placing Web Site On First Page Of Google Search

Learn To Make Free Online Business Profile Page

How To Make Monthly Basis Search Engine Visibility Reports

Verification Of Listing, Google Reviews

MODULE 8: GOOGLE ADWORDS OR PAY PER CLICK MARKETING (SEM)

Google Adwords (SEM)

Introduction To Online Advertising And Adwords

Adwords Account And Campaign Basics

Adwords Targeting And Placement

Adwords Bidding And Budgeting

PPC Basic

Adwords Tools

Opportunities

Optimizing Performance

Ads Type

Bidding Strategies

Search Network

Display Network

Shopping Ads

Video Ads

Universal App Ads

Tracking Script

Remarketing

Performance Monitoring And Conversion Tracking

Reports

MODULE 9: SOCIAL MEDIA OPTIMIZATION (SMO)

Social Media Optimization (SMO)

Introduction To Social Media Networks

Types Of Social Media Websites

Social Media Optimization Concepts

Facebook Page, Google+, LinkedIn, YouTube, Pinterest, Instagram Optimization

Hashtags And Mentions

Image Optimization And Networking

Micro Blogs For Businesses

MODULE 10: SOCIAL MEDIA MARKETING (SMM)

Facebook Optimization

Fan Page Vs Profile Vs Group

Creating Facebook Page For Business

Increasing Fans And Doing Marketing

Connecting Apps To Fan Pages

Facebook Analytics

Database Management And Lead Generation

Facebook Advertising And Its Types In Detail

Creating Advertising Campaigns, Payment Modes

CPC Vs CPM Vs CPA

Conversion Tracking

Power Editor Tool For Advertising

Twitter Optimization

Introduction To Twitter

Creating Strong Profiles On Twitter

Followers, ReTweets, Clicks, Conversions, HashTags

Product Brand Promotion And Activities

App Installs And Engagement

Case Studies

Conversion Tracking And Reporting

LinkedIn Optimization

What Is LinkedIn?

Individual Profile Vs. Company Profile

Database Management And Lead Generation

Branding On LinkedIn

Marketing On LinkedIn Groups

LinkedIn Advertising

Increasing ROI Through LinkedIn Ads

Conversion Tracking And Reporting

YouTube Optimization

Channel Creation

In Display Ads

Video App Install Promotion

Video Shopping Promotion

Google Plus

Features

Tools & Techniques

Google + 1

Google Plus For Businesses

MODULE 11: WEB ANALYTICS

Getting Started With Google Analytics

Navigating Google Analytics

Traffic Sources

Content

Visitors

Goals & Ecommerce

Actionable Insights And The Big Picture

Live Data

Demographics


MODULE 12: WEBMASTER TOOLS

Adding site and verification

Setting Geo-target location

Search queries analysis

Filtering search queries

External Links report

Crawls stats and Errors

Sitemaps

Robots.txt and Links Removal

HTML Suggestions

URL parameters (Dynamic Sites only)

MODULE 13: CONTENT MARKETING

Introduction To Blogs

Setting Up Your Own Blog

The Importance Of SEO

Content Duration & The Art Of Content Planning

Creating A Compelling Personality For Your Content

How To Monetize Your Blog

MODULE 14: MOBILE MARKETING

Importance Of Mobile Marketing In Current Scenario

Fundamentals Of Mobile Marketing

Forms Of Mobile Marketing

Geo-Targeting Campaigns For Mobile Users

Measuring And Managing Campaign

App & Web – Mobile Advertising

Content Marketing

SMS Marketing

Case Studies On App Advertising

MODULE 15: VIDEO MARKETING

Importance Of Video Marketing

Understanding Video Campaigns

YouTube Marketing (Video Ads)

Types Of YouTube Ads

In-Display And In-Stream Ads

Using YouTube For Businesses

Developing YouTube Marketing Strategies

Targeting Options

Understanding Bid Strategies

Bringing Visitors To Your Website Via YouTube Videos

MODULE 16: WORDPRESS SEO CONCEPTS

How To Do SEO Using WordPress

MODULE 17: CREATING A NEW SIMPLE WEBSITE

Creating A Simple Website For Your Business/Work Using Html Coding

MODULE 18: EMAIL MARKETING

Using Bulk Email Service To Boost Your Business

MODULE 19: SMS MARKETING

Using Bulk SMS Service To Boost Your Business

MODULE 20: LIVE PRACTICALS

Live Practical Experience

MODULE 21: INTERVIEW PREPARATION

Resume Preparation

Interview Question Preparation

Benefits of Digital Marketing Training in Coimbatore

Pursue a great career in Digital Marketing with Qtree, the best DM training institute in Coimbatore. Here are the benefits you will get from taking the DM course in Coimbatore at Qtree:

100% placement and 100% job assistance opportunities

Learn from Industry experts with more than 10 years of experience

Get trained with real-time examples

Avail complete study guides and interview preparation materials

Confidently pursue a career in Internet Marketing

Get In-depth knowledge of all Internet Marketing channels

Become an Expert in both technical and working knowledge of Online Marketing field

Build a strategic plan for your Online Marketing team

Decide on leading Online Marketing metrics

Define KPIs to measure Internet Marketing campaign success

Last but not least, become capable of leading a Web Marketing team or working as a Specialist, Team Lead, or Project Manager in a company

Placement of Digital Marketing Course

Qtree stands out as the best institute for learning DM. Digital Marketing training at Qtree Technologies is suitable for freshers as well as middle and senior managers. With a DM certification from Qtree in Coimbatore, you will have a strategic understanding of every detail of Digital Marketing. Students learn how Digital Marketing affects brand and sales, along with the best tools, techniques, and practices. Besides covering planning, reporting, budgeting, and tracking mechanisms for Digital Marketing, Qtree also provides 100% placement and 100% job assistance. Don't wait another minute! Learn everything about Digital Marketing with the help of experts. Get in touch with us today at Qtree, the best Digital Marketing training in Coimbatore, for an excellent digital marketing course, and become a specialist in digital marketing strategies. Call us today to know more.


The Power of SEO for E-Commerce

What SEO Features Does Shopify Provide?

https://appringer.com/wp-content/uploads/2024/01/What-SEO-Features-Does-Shopify-Provide-copy.jpg

Shopify, a popular e-commerce platform, provides a number of built-in SEO tools to help online retailers optimize their stores for search engines. The following are some essential SEO features that Shopify offers:

1. Automatically Generated Sitemaps

Shopify automatically creates an XML sitemap for your online store. The sitemap acts as a road map that helps search engines crawl and index your pages efficiently, which can improve your store's visibility in search results.

2. Editable store URL structures

Shopify lets you edit the URL handles of your pages, collections, and products, so you can create meaningful, SEO-friendly URLs that improve user experience and search visibility.

3. URL optimization tools

Shopify gives merchants access to URL optimization tools that make it simple to improve their store's search ranking. With these tools, you can create clear, keyword-rich URLs that make your site easier for customers to browse and better for SEO.

4. Support for meta tags and canonical tags

Shopify has strong support for canonical and meta tags, allowing merchants to adjust important SEO elements. You can customize meta titles and descriptions for better search visibility, and automatically added canonical tags help eliminate duplicate content issues.

5. SSL certification for security

Shopify places a high priority on the security of your online store by integrating SSL certification. This builds confidence and protects sensitive data during transactions by guaranteeing a secure and encrypted connection between your clients and your website.

6. Structured data implementation

Shopify seamlessly integrates structured data by using schema markup to give search engines comprehensive product information. This implementation improves how well search engines interpret your material and may result in rich snippets—more visually appealing and useful search results.
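To make "schema markup" concrete, here is a minimal sketch of the kind of JSON-LD `Product` block that structured data produces. Shopify themes emit this from their own templates; the Python function, product details, and URL below are hypothetical and only illustrate the shape of the output.

```python
import json


def product_json_ld(name, description, sku, price, currency, url):
    """Return a <script> tag holding schema.org Product data as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": "https://schema.org/InStock",
            "url": url,
        },
    }
    payload = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


if __name__ == "__main__":
    # Hypothetical product used purely as an example.
    print(product_json_ld(
        name="Canvas Tote Bag",
        description="Heavy-duty cotton tote with reinforced handles.",
        sku="TOTE-001",
        price=24.0,
        currency="USD",
        url="https://example.com/products/canvas-tote-bag",
    ))
```

Search engines read this block from the page source; whether a rich snippet actually appears is up to the search engine.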

7. Mobile-friendly design

Shopify's themes are mobile-friendly, so your online store works and looks good across a range of devices. Responsive themes and optimized layouts improve the user experience and align with search engines' preference for mobile-friendly pages.

8. Auto-generated robots.txt file for web crawlers

Shopify automatically creates a robots.txt file, which tells web crawlers which areas of your online store they may crawl. This spares you from writing the file yourself and helps keep search engines focused on the pages that matter.

9. 301 redirects for seamless navigation

Shopify offers 301 redirects to help with smooth website migrations, so users may continue to navigate even if URLs change. By pointing users and search engines to the appropriate pages, this function protects user experience and search engine rankings while preserving the integrity of your online store.
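One practical detail with 301 redirects is avoiding chains (A to B to C) and loops, since each extra hop slows visitors and crawlers. The sketch below is platform-independent and assumes you keep redirects as a simple old-path to new-path mapping; the paths are made up, and nothing here calls a Shopify API.

```python
def resolve_redirect(redirects, path):
    """Follow old -> new mappings until reaching a path with no redirect."""
    seen = [path]
    while path in redirects:
        path = redirects[path]
        if path in seen:
            chain = " -> ".join(seen + [path])
            raise ValueError(f"redirect loop: {chain}")
        seen.append(path)
    return path


def flatten_redirects(redirects):
    """Point every old path straight at its final destination."""
    return {old: resolve_redirect(redirects, old) for old in redirects}


if __name__ == "__main__":
    # Hypothetical product URLs that were renamed twice.
    redirects = {
        "/products/old-tote": "/products/tote-v2",
        "/products/tote-v2": "/products/canvas-tote-bag",
    }
    print(flatten_redirects(redirects))
```

Flattening the map before importing it means every outdated URL reaches its final page in a single hop.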

What Makes Shopify Different From Alternative eCommerce Platforms?

https://appringer.com/wp-content/uploads/2024/01/What-Makes-Shopify-Different-From-Alternative-eCommerce.jpg

Shopify distinguishes itself from other e-commerce platforms for several reasons:

1. Ease of Use:

Shopify is renowned for having an intuitive user interface that makes it suitable for both novice and expert users. The platform streamlines the online store setup and management procedure.

2. All-in-One Solution:

Shopify offers a comprehensive package that includes domain registration, hosting, and a number of additional tools and features. Users will no longer need to integrate several third-party tools or maintain multiple services.

3. Ready-Made Themes:

Shopify provides a range of well crafted themes that users can effortlessly alter to align with their brand. This makes it possible to put up a business quickly and attractively without requiring a lot of design expertise.

4. App Store:

A wide range of apps and plugins are available in the Shopify App Store, enabling users to increase the functionality of their stores. Users may easily locate and integrate third-party apps, ranging from inventory management to marketing solutions.

5. Security:

Shopify places a high priority on security, managing security upgrades and compliance in addition to providing SSL certification by default. This emphasis on security contributes to the development of consumer and merchant trust.

6. Payment Options:

A large variety of payment gateways are supported by Shopify, giving merchants flexibility and simplifying the payment process. For those who would rather have an integrated option, the platform also offers Shopify Payments, its own payment method.

Common Shopify SEO Mistakes

https://appringer.com/wp-content/uploads/2024/01/Common-Shopify-SEO-Mistakes.jpg

Even though Shopify offers strong SEO tools, common mistakes can still undermine your optimization. Avoid the following Shopify SEO mistakes:

1. Ignoring Unique Product Descriptions:

Duplicate content problems may arise from using default product descriptions or copying them from manufacturers. To raise your product's ranking in search results, write a distinctive and captivating description.

2. Neglecting Image Alt Text:

Your SEO efforts may be hampered if your product images lack informative alt text. Alt text helps search engines understand the content of images, which improves image search rankings.

3. Overlooking Page Titles and Meta Descriptions:

Generic or inadequate page titles and meta descriptions waste SEO opportunities. Write distinctive, keyword-rich titles and compelling meta descriptions to increase click-through rates.

4. Ignoring URL Structure:

Poorly structured URLs that lack relevant keywords can hurt your visibility in search engines. Make sure your URLs are keyword-relevant and descriptive.

5. Not Setting Up 301 Redirects:

Neglecting to set up 301 redirects when changing URLs or discontinuing items might result in broken links and decreased SEO value. Preserve link equity by redirecting outdated URLs to the updated, pertinent pages.

6. Ignoring Mobile Optimization:

Neglecting mobile optimization can lead to a bad user experience and lower search ranks, given the rising popularity of mobile devices. Make sure your Shopify store works on mobile devices.

7. Ignoring Page Load Speed:

Pages that load slowly can have a bad effect on search engine rankings and user experience. To increase the speed at which pages load, optimize pictures, make use of browser cache, and take other appropriate measures.

8. Lack of Blogging or Content Strategy:

Your store’s SEO potential may be limited if you don’t consistently add new material. To engage users, target more keywords, and position your company as an authority, start a blog or content strategy.

9. Not Utilizing Heading Tags Properly:

Misusing or neglecting heading tags (H1, H2, H3, etc.) can affect how search engines and readers perceive the organization of your content. Use heading tags correctly to structure and emphasize text.

Read more :- https://appringer.com/blog/digital-marketing/power-of-seo-for-e-commerce/

Text

Webseotoolz offers a free Robot.txt Generator Tool that creates a robots.txt file with minimal effort. Visit: https://webseotoolz.com/robots-txt-generator

#webseotoolz#webseotools#seo tools#free seo tools#seo toolz#online seo tools#free tools#web seo tools#robot.txt generator#robot.txt generator tool#robot text generator

Text

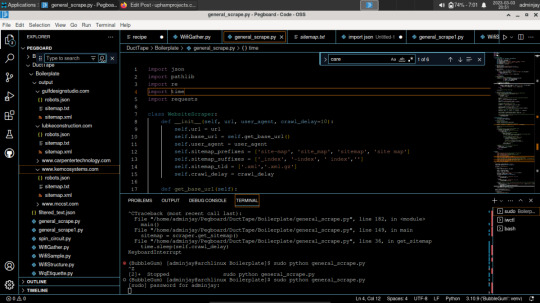

Regex Scraping

Follow my Regex Scraping projects or one of the other projects at UphamProjects.com

I have used Beautiful Soup, and it does work beautifully, but while drafting I prefer not to have a library do all the work for me. Once I’ve fleshed out the script I might replace the regex with a suitable library, but regular expressions are more flexible during drafting, at least for me. Below is a more general scraping implementation than my wiki scraping project. It is also much better at…
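The post's own script is not shown here, so the following is only a minimal sketch of what regex-first scraping during drafting might look like. The sample HTML, the pattern, and the function name `extract_links` are assumptions made for illustration, not the author's implementation.

```python
import re

# Hypothetical sample page; a real script would download the HTML first.
SAMPLE_HTML = """
<html><body>
<a href="https://example.com/a">First</a>
<a class="x" href='/b'>Second <b>link</b></a>
</body></html>
"""

# Match an <a> tag and capture the href value and the inner HTML.
LINK_RE = re.compile(
    r"""<a\b[^>]*?\bhref\s*=\s*["']([^"']+)["'][^>]*>(.*?)</a>""",
    re.IGNORECASE | re.DOTALL,
)
# Match any remaining tag so it can be stripped from the link text.
TAG_RE = re.compile(r"<[^>]+>")


def extract_links(html):
    """Return (href, text) pairs found with regular expressions alone."""
    return [
        (href, TAG_RE.sub("", inner).strip())
        for href, inner in LINK_RE.findall(html)
    ]


links = extract_links(SAMPLE_HTML)
```

Patterns like these are quick to adjust while exploring a page, but they break on unusual markup, which is one reason to swap in a parser library once the script settles.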

Link

The ranking that your business website receives from a search engine is not always under your control. Search engines like Google send crawlers to websites to verify and analyze the data on them and rank the sites accordingly. Even if the information on your website is accurate and the content is attractive, that does not mean you will get a high ranking.

Text

Search Engine Optimization

Search Engine Optimization (SEO)

HOW DO SEARCH ENGINES WORK?

CRAWLING- Crawlers (also called bots or spiders) discover and scan pages on web servers across the Internet.

INDEXING- The search engine stores the crawled data in its database (the index).

RANKING- The search engine orders the indexed pages and shows them as ranked results for a query.

Techniques of SEO

White Hat SEO- It refers to any practice that improves your search ranking without breaking search engine guidelines.

Black Hat SEO- It refers to increasing a site's ranking by breaking the search engine's terms of service.

Black Hat SEO Types

Cloaking- Presenting content to users that is different from the content shown to search engine crawlers.

Content Hiding- Hiding text by giving it the same colour as the background in an attempt to improve ranking.

Sneaky URL Redirection- Doorway pages look like proper pages but redirect visitors to a different page without their knowledge.

Keyword Stuffing- The practice of filling content with repetitive keywords in an attempt to rank on search engines.

Duplicate Content- Copying content from other websites.

WHAT IS A WEBSITE?

Domain- A domain is the name of the website's address, often the name of the company.

Hosting- Hosting is storage space on a server where the website's files are kept.

Dealers of Domain and Hosting

GoDaddy

Hosting Raja

Hostinger

Bluehost

Namecheap

WHAT IS SSL?

SSL stands for Secure Sockets Layer. It is a technology that keeps an internet connection secure and protects sensitive data sent between two systems, preventing criminals from reading or modifying the information transferred, including personal details.

WHAT IS A URL AND A SUBDOMAIN?

URL- Uniform Resource Locator

Sub Domain- The prefix in front of the domain name, for example www, web, apps, m

KEYWORDS- Any query typed into the search box is known as a keyword.

TYPES OF KEYWORD

Generic Keyword- A single broad word, such as a general term or a brand name. Generic keywords help capture a wide range of customers.

Short Tail Keyword- These keywords are phrases of two or three words.

Long Tail Keyword- Specific keyword phrases consisting of more than three words.

Seasonal Keyword- These keywords generate most of their search traffic during a specific time of the year.

GOOGLE SANDBOX EFFECT

It is a reported observation period during which Google is said to check a new site for technical issues, fraud, and scams, and to watch how users interact with the website.

SERP

The Search Engine Result Page appears after someone searches for something in the search box.

HTML

Hyper Text Markup Language

META TAG OPTIMIZATION

Title Tag- Digital Marketing

Meta tag- content=………….150 to 170 characters
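As a rough illustration of the title and meta description tags mentioned above, the sketch below builds both tags and checks the description against the 150 to 170 character range from the notes. The function name `build_head_tags` and its parameters are hypothetical.

```python
from html import escape


def build_head_tags(title, description, min_len=150, max_len=170):
    """Return the two tags plus a flag for the description length.

    The 150-170 character range comes from the notes above; it is a
    guideline, not a rule enforced by search engines.
    """
    tags = (
        f"<title>{escape(title)}</title>\n"
        f'<meta name="description" content="{escape(description)}">'
    )
    in_range = min_len <= len(description) <= max_len
    return tags, in_range


tags, ok = build_head_tags("Digital Marketing", "x" * 160)
```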

FTP TOOLS

Core FTP

Filezilla

INDEXING AND CRAWLING STATUS

Indexing Status- Shows when the site was stored in the search engine's database.

Crawling Status- Gives information about the most recent crawl of the website, e.g. site:abc.com.

KEYWORD PROXIMITY

It refers to the distance between keywords in the text.

Keyword Mapping

It is the process of assigning (mapping) keywords to specific pages of a website.

IMAGE OPTIMIZATION

ALT Tag- It is used to describe images; it is also known as the alt attribute.

<img src="digital.png" alt="name/keyword">

Image compressing- The process of reducing image file size to lower the load time.

E.g. Pingdom- To check load time.

Optimizilla- To compress images.

Robots.txt

It is a file that tells crawlers which parts of the website they may crawl. It is often used to keep crawlers away from pages like the privacy policy and terms and conditions.
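To see how a crawler might read such a file, here is a small sketch using Python's standard `urllib.robotparser`. The rules, the two paths, and the abc.com domain are made-up examples in the spirit of the notes above.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules keeping crawlers away from two policy pages.
RULES = """\
User-agent: *
Disallow: /privacy-policy/
Disallow: /terms-and-conditions/
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())


def is_allowed(path, agent="*"):
    """Ask the parsed rules whether `agent` may crawl `path`."""
    return parser.can_fetch(agent, "https://abc.com" + path)


blocked = is_allowed("/privacy-policy/")   # disallowed by the rules
open_page = is_allowed("/products/")       # no matching rule
```

Well-behaved crawlers follow these rules voluntarily; the file is a request, not an access control.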

Robots meta Tag

They are pieces of code that give crawlers instructions for how to crawl or index the content. We put this tag in the head section of each page. It is also called the noindex tag.

<meta name="robots" content="nofollow,noindex……………." />

SITE MAPS

It is a list of the pages of a website that are accessible to crawlers or users.

XML site map- Written in Extensible Markup Language, specially for search engine bots.

HTML site map- It helps users find a page on your website.

XML sitemap generator
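A generator tool is the usual route, but the XML format itself is small. The sketch below, with a hypothetical `build_sitemap` helper and example abc.com URLs, produces a minimal sitemap containing only the required `loc` entries.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap string listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


sitemap_xml = build_sitemap(["https://abc.com/", "https://abc.com/about"])
```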

CONTENT OPTIMIZATION

Content should be quality content (Grammarly)

Content should be 100% unique (plagiarism checker)

Content should be at least 600-700 words per web page.

Include all important keywords.

BOLD AND ITALIC

<b>Digital Marketing</b> <strong>……………</strong>

<i>Digital Marketing</i> <em>………………</em>

HEADING TAGS

<h1>………..</h1>

<h2>………..</h2>

<h3>…………</h3>

<h4>…………</h4>

<h5>…………</h5>

<h6>………..</h6>

DOMAIN AUTHORITY(DA)

It is a search engine ranking score developed by Moz that predicts how well a website will rank on the SERP.

PAGE AUTHORITY(PA)

It is a score developed by Moz that predicts how well a page will rank on the SERP.

TOOL- DA/PA checker

ERROR 404

Page not found

URL is missing

URL is corrupt

URL is wrong (misspelled)

REDIRECTS 301 AND 302

301 is for permanent redirection

302 is for temporary redirection
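The status codes in these notes can be looked up with Python's standard `http.HTTPStatus` enum. The helper names below are hypothetical; the sketch also shows that 301 and 302 are redirects in the 3xx range, while 404 is an error in the 4xx range.

```python
from http import HTTPStatus


def describe_status(code):
    """Return the numeric code with its standard reason phrase."""
    status = HTTPStatus(code)
    return f"{status.value} {status.phrase}"


def is_redirect(code):
    """3xx codes tell the client to look elsewhere."""
    return 300 <= code < 400


summary = [describe_status(code) for code in (301, 302, 404)]
```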

CANONICAL LINKS

A canonical link is an HTML element that helps webmasters prevent duplicate content issues in SEO by specifying the canonical (preferred) version of a web page when the same content is available on the same domain under different URLs.

<link rel="canonical" href="https://abc.com/">

URL STRUCTURE AND RENAMING

1. No capital letters

2. Don’t use spaces

3. No special characters

4. Don’t include numbers

5. Use important keywords

6. Use small letters
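The URL rules listed above can be applied mechanically. This is a sketch only, with a hypothetical `slugify` helper that lowercases a title, drops digits and special characters, and replaces spaces with hyphens.

```python
import re


def slugify(title):
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    slug = title.lower()                         # no capital letters
    slug = re.sub(r"[^a-z\s-]", "", slug)        # drop digits and special characters
    slug = re.sub(r"[\s-]+", "-", slug)          # spaces become single hyphens
    return slug.strip("-")


slug = slugify("Digital Marketing 101: Tips & Tricks!")
```

Choosing which important keywords go into the title remains a manual decision; the function only cleans up whatever it is given.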

ANCHOR TEXT

It is the clickable text in a hyperlink. It is an exact match if it includes the keyword of the page being linked to.

<a href="https://abc.com">Digital Marketing</a>

PRE AND POST WEBSITE ANALYSIS

PRE- Domain suggestions and call to action button

POST- To check if everything is working properly

SOME SEO TOOLS

SEO AUDIT AND WEBSITE ANALYSIS

SEOptimer

SEO site checkup

Woorank

COMPETITOR ANALYSIS AND WEBSITE ANALYSIS

K-meta

Spyfu

Semrush

CHECK BACKLINKS

Backlinks watch

Majestic Tool

Backlinks checkup

CHECK WEBSITE LOAD TIME

GTmetrix

Google PageSpeed Insights

Pingdom

PLUGIN OR EXTENSION

SEOquake- site audit and web audit

SERP Trends- To check ranking on SERP

SOME GOOGLE TOOLS

Google search console

Google Analytics

Google keyword Planner

Text

SEO Basics: A Step-by-Step Guide For Beginners

Are you a beginner in marketing and want to know about the basics of Search Engine Optimization? If yes, this guide will take you through all aspects of SEO, including what SEO is, its importance, and various SEO practices required to rank your pages higher on a search engine.

Today, SEO is the key to online success through which you can rank your websites higher and gain more traffic and customers.

Keep reading to learn about the basics of SEO, strategies, and tips you can implement. Also, you will find several SEO practices and methods to measure success.

What is SEO: Importance and Facts

SEO is a step-wise procedure to improve the visibility and quality of a website or webpage on a web search engine. It is all about organic or unpaid searches and results, and the organic search results rely on the quality of your webpage.

You must have seen two types of results on a search engine: organic and paid ads. The organic results depend entirely on the quality of the web page, and this is where SEO comes in. For quality, you must focus on multiple factors like optimizing the webpage content, writing SEO articles, and promoting them through the best SEO practices.

SEO is a gateway to getting more online leads, customers, revenue, and traffic to any business. Almost 81% of users click on organic search results rather than paid results. So, by ranking higher in the organic results, you can expect up to five times more traffic. When SEO practices are rightly followed, the pages can rank higher fast. Also, SEO ensures that your brand has online visibility for potential customers.

SEO Basics: A To-Do List

Getting an efficient domain

Using a website platform

Use a good web host

Creating a positive user experience

Building a logical site structure

Using a logical URL structure

Installing an efficient SEO plugin

How to Do SEO: Basic Practices

1. Keyword research

Keywords are the terms that the viewers search on any search engine. You have to find out the primary keywords for your website that customers will tend to search for. After creating the list, you can use a keyword research tool to choose the best options.

Find the primary keywords for your site/page.

Search for long-tail keywords and variations.

Choose the top 5-10 keywords for your SEO practices and content.

2. SEO content

Content is key to successful SEO. You have to consider various factors while writing SEO-friendly content. If you are facing difficulties, you can use professional SEO blog writing services to rank sooner and higher on a search engine.

Understand the intent of your customers, what they are looking for and how you can provide them the solutions.

Create content that matches that intent.

3. User experience

This is the overall viewing experience of a visitor or a searcher. This experience impacts SEO directly.

Avoid walls of text.

Use lists, bullets, and pointers in your content.

Use a specific Call to Action.

4. On-page SEO

On-page SEO involves optimizing web content per the keywords we want to rank for. Several factors are included in on-page SEO like:

Optimizing the title tags.

Meta descriptions are an important on-page factor.

Optimizing the Heading tags (H1-H6).

Optimizing page URLs.

Optimizing images, using ALT tags, naming images, and optimizing the dimensions and quality of your images to make them load quickly.

Creating hyperlinks.

5. Link building

Building links is a significant factor in SEO. You have to build backlinks, which means a link where one website is giving back a link to your website, and this can help you rank higher on Google.

You can build links from related businesses, associations, and suppliers.

Submit your website to quality directories through directory submission.

6. Technical SEO

With technical SEO, you can be sure that your website is being crawled and indexed. Search engines need to crawl your website to rank it.

You can use Google Search Console to figure out any issues with your website.

Check your robots.txt file, typically found at https://www.yourdomain.com/robots.txt.

Optimize the speed of your website.

Set up HTTPS for your domain and check that you can access your site using https:// rather than http://.
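Two of the checks above start from a URL. The sketch below uses Python's standard `urllib.parse` to derive where a site's robots.txt should live and to test whether an address uses HTTPS. The function names are hypothetical, and no network request is made.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url):
    """Return the robots.txt location for the site that hosts page_url."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def uses_https(page_url):
    """True when the address starts with https:// rather than http://."""
    return urlsplit(page_url).scheme == "https"


location = robots_url("https://www.yourdomain.com/blog/post?id=1")
```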

How to Measure SEO Success?

Once you have put the above steps into practice, it is time to track your results. You need to follow a few metrics regularly to measure SEO success. Following are the SEO factors that should be routinely tracked:

1. Organic traffic

Organic traffic is the number of users visiting your site from the organic search results. You can measure the organic traffic through Google Analytics. If the organic traffic on your website or webpage is increasing, this is a positive indicator that your backlinks are working and your keywords are not too competitive.

2. Bounce rate and average session duration

Bounce rate and the average session duration come into the picture when checking if the content on your webpage resonates with your audience.

Average Session Duration: The average session duration measures the time between two clicks: the first click brings the viewer to your page, and the second takes the viewer to another page.

Bounce Rate: The bounce rate considers the number of users who came to your site and immediately left.

3. Conversion Rate

You can determine your website’s conversion rate through the Traffic Analytics tool. It measures the share of users who perform the website's desired action, like filling out forms or leaving contact information.
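Bounce rate and conversion rate are both simple percentages. The sketch below assumes you already have the raw counts from your analytics tool; the function name and the sample figures are invented for illustration.

```python
def percentage(part, total):
    """Return part/total as a percentage, or 0.0 when there is no traffic."""
    return 0.0 if total == 0 else 100.0 * part / total


# Hypothetical monthly figures taken from an analytics report.
sessions = 200
bounced_sessions = 40      # visitors who left immediately
conversions = 5            # visitors who completed the desired action

bounce_rate = percentage(bounced_sessions, sessions)
conversion_rate = percentage(conversions, sessions)
```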

Summing Up

You now understand the basics of SEO, a powerful digital marketing medium to rank your business higher on Google and generate more traffic. The ultimate goal of SEO is to let you gain relevant traffic and generate leads. You can follow the basic SEO guidelines, optimize your web pages, create SEO-friendly content, create backlinks, and much more with the help of good SEO blog writing services. However, always make sure to track your success!

Photo

Free Robots.txt Generator | SEO NINJA SOFTWARES

What is a robots.txt File?

Sometimes we need to let search engine robots know that certain information should not be retrieved and stored by them. One of the most common methods for defining which information is to be "excluded" is by using the "Robot Exclusion Protocol." Most of the search engines conform to using this protocol. Furthermore, it is possible to send these instructions to specific engines, while allowing alternate engines to crawl the same elements.

Should you have material which you feel should not appear in search engines (such as .cgi files or images), you can instruct spiders to stay clear of such files by deploying a "robots.txt" file, which must be located in your "root directory" and be of the correct syntax. Robots are said to "exclude" files defined in this file.

Using this protocol on your website is very easy and only calls for the creation of a single file which is called "robots.txt". This file is a simple text formatted file and it should be located in the root directory of your website.

So, how do we define what files should not be crawled by search engines? We use the "Disallow" statement!

Create a plain text file in a text editor e.g. Notepad / WordPad and save this file in your "home/root directory" with the filename "robots.txt".

Why is the robots.txt file important?

There are some important factors which you must be aware of:

Just as anyone can right-click a web page and view its source code, anyone can open your robots.txt. The file is public, so anyone can see which directories you have instructed search robots not to visit.

Web robots may choose to ignore your robots.txt, especially malware robots and email address harvesters. They will look for website vulnerabilities and ignore the robots.txt instructions. A typical robots.txt instructing search robots not to visit certain directories on a website will look like:

User-agent: *
Disallow: /aaa-bin/
Disallow: /tmp/
Disallow: /~steve/

These rules instruct search engine robots not to visit the three listed directories. You cannot put two paths in one Disallow statement; for example, you cannot write: Disallow: /aaa-bin/ /tmp/. You have to list each directory you want ignored explicitly, one per line. Under the original protocol you also cannot use wildcard patterns like Disallow: *.gif, although some major crawlers support wildcards as an extension.

The file name must be lower case: ‘robots.txt’, not ‘ROBOTS.TXT’.
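The rules above are regular enough to generate. This is a minimal sketch of what a robots.txt generator does, not the code behind any particular tool. The function name `generate_robots_txt` is hypothetical, and the directories repeat the example from the text.

```python
def generate_robots_txt(disallowed_dirs, user_agent="*"):
    """Build robots.txt content with one Disallow line per directory."""
    lines = [f"User-agent: {user_agent}"]
    for directory in disallowed_dirs:
        # Each path gets its own line; two paths on one line are not valid.
        lines.append(f"Disallow: {directory}")
    return "\n".join(lines) + "\n"


content = generate_robots_txt(["/aaa-bin/", "/tmp/", "/~steve/"])
```

The resulting text would be saved as robots.txt, in lower case, in the root directory of the site.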

Check Free Robots.txt Generator

From Website seoninjasoftwares.com

#robot.txt example#seoninjasoftwares#robots.txt tester#robots.txt google#robots.txt allow google#robots.txt wordpress#robots.txt crawl-delay#robots.txt seo#robots.txt sitemap#robot.txt file generator

Text

HERE ARE SOME SEO BASICS TO KEEP IN MIND.

Is search engine optimization for a small business website something you need to worry about?

Well, “worry” is a bit strong, but you should definitely look into it.

SEO, or search engine optimization, is the practice of making your business website or blog align with Google’s expectations and requirements. With proper search engine optimization, your small business website will rank higher in Google searches, increasing the likelihood that new visitors will discover it.

PICK THE RIGHT KEY PHRASES FOR YOUR BUSINESS WEBSITE: RELEVANT ONES THAT DESCRIBE YOUR PRODUCTS OR SERVICES BETTER.

The first thing you need to do when beginning your search engine optimization journey is choose the key phrases that you want to rank for. These should be key phrases that your target market is likely to search for on Google.

Google’s Keyword Planner tool can help you discover those key phrases. There are also third-party tools, like KWFinder, that you can use.

Make sure Google can see your website by using the tools available online (use an XML sitemap, submit it to Google Search Console and Bing Webmaster Tools, etc.).

DOUBLE-CHECK THAT YOUR WEBSITE ISN’T HIDDEN FROM GOOGLE.

Go to your website’s cPanel or, if you are using the WordPress CMS, go to the user panel and click Settings. In the General tab, scroll down to Privacy and make sure Public is selected. (You can manage the same setting from the robots.txt file in your root directory.)

Set your web page’s title and tagline

Your website title and tagline are considered prime real estate when it comes to SEO. In other words, they’re the best spots in which to insert your main keywords. To set them, edit the <title> element in your page’s <head> or, if you are using WordPress, visit your WordPress.com customization panel and click Settings. There, in the General tab, you can set your title and tagline under the Site Profile section.

Changing your title and tagline for SEO

For instance, if you run a website for a car service provider called Car Services, your title could be something like, “Car Service - Affordable Car Services.” That way, people Googling “Car Services” will be more likely to find you.

Use optimized headlines for web pages

Each post’s headline should not only convey the topic of the post, but also include the post’s main keyword.

For instance, a headline like, “10 Best Car Service Provider in South Africa” is well optimized for the keyword “Car Service Provider.” But if you were to title the same blog post, “Hire a Pro for Your Car Issue in South Africa,” your post wouldn’t rank as well, because it’s lacking the keyword.

Use your key phrases in web pages

Try mentioning your post’s main keyword within the first hundred words, and make sure to mention the keyword and other related ones throughout.

Don’t overdo it, though. Unnaturally cramming keywords into a post is called “keyword stuffing,” and Google can recognize it. Write in a way that sounds natural, with occasional mentions of your keywords where they make sense.

Don’t forget to use your key phrases in subheaders, too.
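The advice about the first hundred words is easy to check automatically. The sketch below is one possible check, with hypothetical function names and a deliberately simple definition of a word; it is not how Google evaluates a page.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def keyword_in_opening(text, keyword, limit=100):
    """True if the keyword phrase appears within the first `limit` words."""
    # Pad with spaces so that only whole words can match.
    opening = " " + " ".join(tokenize(text)[:limit]) + " "
    phrase = " " + " ".join(tokenize(keyword)) + " "
    return phrase in opening


early_post = "Hiring a car service provider is easy. " + "filler " * 200
late_post = "filler " * 120 + "car service provider"
```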

Optimize your links or your slugs in WordPress or on your website.

A slug is the part of a post’s URL that comes after your domain name. For example, in the URL http://example.com/road-warthy-certification/ the slug is “road-warthy-certification.”

With a custom website you have to manage slugs yourself as links, but WordPress.com lets you edit slugs freely while editing your posts or pages. In the right sidebar where it says Post Settings, scroll down to More Options and fill in the Slug field.

Interlink your posts and pages

When working on a new post or page, always look for opportunities to link to your existing posts and pages. Those links should be relevant to what your article is about. Aim to include at least one link for every 250 words of text. It’s also good practice to link to outside resources when it makes sense.

What’s next?

Apart from the above practices, you should also take the time to publish new content regularly. When you do so, optimize each individual piece of that content. This is what will give you the best long-term search engine optimization for your business website.

Want to go the extra mile and get into some advanced techniques? Contact us

Text

Generate #robot text file with Robots.txt Generator Tool #Webseotoolz Visit: https://webseotoolz.com/robots-txt-generator

#webseotoolz#webseotools#web seo tools#seo tools#online seo tools#online tool#free tools#online tools#free online tools#robot.txt generator#robot text file generator#generate robot text

Text

I will do shopify SEO to increase shopify sales and google rankings

Hi Shopify store owners, you have built your Shopify store successfully, but the job is not done yet. You need to optimize your store for SEO to get organic traffic and boost your revenue.

Don't worry: I will optimize your Shopify store with the best Shopify marketing to generate organic traffic and sales. I will optimize your store's SEO for better rankings in Google, fix on-page issues, and update your product pages with keywords that can bring you sales from organic traffic. I am very good at keyword research and can find many relevant keywords for your products.

Why Should You Hire Me?

A profitable keyword for each product (high search volume and low competition)

Meta Title & Description optimization

Product title Optimization

Product description with perfect KW placement and density

Image alt tags setting

H1,H2 tag Optimization

Search engine friendly URLs

Full report of the work

Product Pins on Pinterest

Product Internal Linking

Search engine submission

Google Webmasters Setup

XML Sitemap submission

Robot.txt Setup

Bad link removal

Google Analytics Integration (If not set)

So what are you waiting for? Click here to order: https://www.ecodatastore.club/2020/08/i-will-do-shopify-seo-to-increase_25.html

Text

How Reliable Is Wix CMS For Search Engine Optimization Of Your Site? What Options Does Wix CMS Provide For SEO?

Wix CMS has been on the market for a long time now. People are turning to the CMS to carry out complicated tasks with ease. But the question that comes to mind is how reliable it is, besides being easy to manage, in terms of SEO and the integration of other marketing tools.

Wix CMS does provide a lot of significant features that make the SEO work easy to carry out. Along with SEO, it provides tools that help Google find your website. You can also integrate various tracking tools on the website, whether that is the Facebook pixel or Google Analytics. Or you can up your game with Google Tag Manager, which is also available on the Wix platform.

Wix Provides The Following Options For Better Search Engine Optimization.

1. Get Found On Google

Yes, that is literally the name of the option you will find in the Wix CMS for SEO. It walks you through SEO steps from basic to advanced level. The first step lets you update the homepage title, the SEO description, and mobile-friendliness, and check whether the site is connected to Search Console. The second step lets you optimize pages for SEO by optimizing the titles and descriptions of all the pages of the website. The third step lets you take the SEO guide or hire a Wix expert.

SEO patterns- ‘Your site’s default SEO settings can be edited, but we recommend only advanced users make changes’. This is what Wix says. So, what exactly do SEO patterns consist of?

Site pages- This lets you edit the Google preview: the SEO title, SEO description, and page URL. You can see the Google preview on the right side.

Social Share- Lets you choose how your main site pages look when shared on social networks like Facebook and Pinterest. It allows you to edit the social title, social description, and social image. Similarly, you can see the preview on the right side.

Advanced SEO tags- It lets you review and edit additional info about your site pages for search engines. You can add and edit the canonical, og:site_name, og:type, and og:url tags. You can see the tag preview on the right.

2. Site Verification

Site verification lets you add meta tags from multiple search engines to claim ownership. It supports site verification with Bing, Pinterest, Yandex, and Google. It saves you the trouble of learning a lot of coding and digging around in the core of the website, and it also saves you a lot of time.

3. Sitemap Creation And Update

A sitemap is a file where you provide information about the pages, videos, and other files on your site, and the relationships between them. Search engines like Google read this file to more intelligently crawl your site. Wix automatically creates and updates a sitemap for your site. As soon as you make and publish changes, the sitemap is updated accordingly. So with Wix, you don’t have to worry about updating your sitemap and requesting Google to crawl it.

4. URL Redirect Manager

The next question is how to tell Google that a page has moved to a new location when you delete it. The answer is to redirect the crawler from the old page to a new one. Wix’s redirect manager lets you manage all of the website’s 3xx redirects in one place, so that old URLs do not end in 4xx errors. It is easier done than said. Even redirects from an old domain can be managed with it.

5. Robots File Implementation

This is another option Wix lets you edit. With robots.txt you can tell Google which pages of your site to crawl. Putting the URL of the sitemap in your robots.txt file is not a challenge anymore.

In the end, Wix CMS provides general SEO settings that let you control whether crawlers may crawl your website.

Source: Wix CMS for SEO

#google tag manager#google ads#google tag#SEO#digital marketing#Digital marketing india#digital painting#Digital marketing gurgaon#Digital marketing delhi

Text

SEO Company in Bangalore | SEO Services in Bangalore

Picking a great SEO agency for your marketing campaign can be a difficult task. There are lots of agencies out there that specialize in Search Engine Optimization, but how can you trust that each one will really push your Google rankings to the top?

Past Achievements

It always pays, when searching for an SEO agency, not only to look into their past and current client base, but also to ask about their achievements. What Google rankings have they achieved for past clients? How long did it take? And how much did the site traffic increase? Generally, it can take around 3 months or more for rankings to increase on Google, and traffic should increase by about half or more. At this stage, you may also want to ask if the agency offers any subscriptions for post-optimization maintenance. A good agency should, since maintaining high SEO rankings requires ongoing attention.

Scope of Services

It also helps to look for an SEO agency that offers a full range of services. 'On-page' services like site analysis, content optimization, page optimization, internal link building, and ranking and traffic management should be offered. 'Off-site' SEO services should include keyword research, competitor analysis, external link building, and off-page promotion. It may also help to ask whether you're required to approve anything before they go ahead with it. If they say 'yes', it's a good sign they're honest and willing to work closely with you. To boost your rankings, an SEO agency needs to carry out a variety of tasks, not just one or two, so be wary of agencies that only offer limited or dodgy services.

Suspect Methodologies

Something else to watch out for is any agency that offers to increase your rankings through suspect techniques like spamming, keyword stuffing, hidden text, high-ranking doorway pages and link farming. These are often referred to as "black hat SEO" and can get you banned from search engines, which can be hugely detrimental to your business. Also look out for automatic, bulk submissions to search engines. Automatic submissions are not considered best practice, and it's much more beneficial for you if an agency conducts manual submissions. Always make sure that an SEO agency is open about the methodologies it uses: any secrecy could mean it is using "black hat" techniques.

Pricing

Keep in mind that high prices at an SEO agency don't necessarily mean the best quality. Instead, choose an agency based on its client base, reputation, and its own site optimization and Google rankings. Also, if an agency is offering you "guaranteed" #1 rankings at an unusually low cost, it is probably too good to be true. Collecting several quotes is a good way to start, and always make sure quotes are backed by a contract, so you know exactly what you're getting for your money. It will also help to ask what their payment terms are and whether they have any fees for early termination.

Keywords

Finally, a reliable SEO agency should offer and conduct thorough and specific keyword research as part of its SEO services. Most agencies will include keyword research as a given, but be wary of any agency that asks you to provide the keywords for its work. While they may ask for your opinion, the keywords used should be based on their research, analysis and competitor review, not on what you tell them. Remember, they're meant to be the SEO experts, not you! "Guessing" your keywords isn't effective, and a great SEO agency will research your keywords thoroughly before recommending them.

All our SEO Packages include the following.

1. On-Page Optimization

2. Search Engine Marketing Strategy Analysis

3. Detailed Site Analysis

4. Competitor Analysis

5. Keywords Researched

6. Keywords Finalized

7. Initial Search Engine Ranking report

8. Site pages optimized

9. SEO friendly Navigation Optimization

10. HTML Code and Meta-Content Optimization

11. Images and Links to be Optimized

12. Robot.txt Optimization

13. HTML sitemap creation

14. XML sitemap generation & submission

15. Google Analytics Setup

16. Google Base Feeds

17. Off-Page Optimization

18. Directory submission

19. Article Submission

20. Social media Bookmarking

21. Blog creation and updating

22. Google Maps Optimization

23. Forum posting

Monthly Work Reports .

Starting price: Rs. 3000 monthly for 5 keywords.

Prices change according to category and volume. To know more, click here: SEO Company in Bangalore

Contact Us:

Phone Number: 8892110099 Email: [email protected] Address : Manu Complex, Old Madras Road, Bangalore 560016.
