#real-time analytics

Text

Top 5 DeepSeek AI Features Powering Industry Innovation

Table of Contents

1. The Problem: Why Legacy Tools Can’t Keep Up

2. What Makes DeepSeek AI Unique?

3. 5 Game-Changing DeepSeek AI Features (with Real Stories)

3.1 Adaptive Learning Engine

3.2 Real-Time Anomaly Detection

3.3 Natural Language Reports

3.4 Multi-Cloud Sync

3.5 Ethical AI Auditor

4. How These Features Solve Everyday Challenges

5. Step-by-Step: Getting Started with DeepSeek AI

6. FAQs: Your…

#affordable AI solutions#AI automation#AI for educators#AI for entrepreneurs#AI for non-techies#AI for small business#AI in manufacturing#AI innovation 2024#AI time management#business growth tools#data-driven decisions#DeepSeek AI Features#ethical AI solutions#healthcare AI tools#no-code AI tools#Predictive Analytics#real-time analytics#remote work AI#retail AI features#startup AI tech

2 notes

Text

Businesses seeking to leverage this power can achieve transformative results by prioritizing quality assurance (QA) practices. Integrating real-time analytics allows for continuous improvement, while a strong focus on call center compliance ensures every interaction meets the highest standards. Click Here To Read More: https://rb.gy/p4nen1

#VoIP phone systems#VoIP for business#Omnichannel contact center solution#Automated call distribution#Bulk SMS marketing#Interactive voice response (IVR) system#Call center compliance#VoIP call center#Global reach#Real-time analytics#singapore#call center#internet#telecom#voip

2 notes

Text

#Tags:Biometric Data#Blockchain and Privacy#Data Collection#Data Ownership#Data Security#Digital Twins#Ethical AI#facts#Healthcare Technology#Identity Theft#life#Podcast#Privacy Concerns#Privacy Regulations#Real-Time Analytics#serious#Smart Cities#straight forward#Surveillance#truth#upfront#website

0 notes

Text

Role of Technology in Modern Portfolio Management Services

Technology has reshaped portfolio management services, enhancing investment analysis, strategy optimization, and client experience. By utilizing artificial intelligence and automation, portfolio managers can make faster, more accurate decisions, addressing the complexities of modern financial markets.

Portfolio Management Technology Evolution

Traditional portfolio management depended heavily on basic analysis, intuition, and limited computational tools. Now, algorithms and machine learning provide deeper insights and more reliable decision-making. Key challenges addressed by technology include:

Navigating market complexity and volatility

Managing information overload from vast data streams

Improving risk assessment accuracy

Rebalancing portfolios efficiently

Enhancing client communication and reporting

Major Technological Innovations in Portfolio Management

Artificial Intelligence and Machine Learning

AI-driven systems now analyze large volumes of market data, identifying patterns and trends that would be difficult to detect manually. Key advantages include:

Greater accuracy in forecasting market movements

Anomaly detection in trading patterns

Multi-variable portfolio optimization

Real-time adjustments to changing conditions

Automated Portfolio Rebalancing

Smart rebalancing systems continuously monitor portfolio drift and trade autonomously to maintain the target asset allocation. They can also optimize trade timing to reduce transaction costs and keep rebalancing tax-efficient.
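A drift-band (threshold) rule is one common way to decide when a rebalance is due. The sketch below is a minimal Python illustration under stated assumptions: holdings are market values in a single currency, target weights sum to 1, and the 5-percentage-point band is an arbitrary example value. The function name `rebalance_trades` is hypothetical, and trade timing, transaction costs, and tax lots are deliberately not modeled.

```python
from typing import Dict

def rebalance_trades(
    holdings: Dict[str, float],
    targets: Dict[str, float],
    drift_threshold: float = 0.05,
) -> Dict[str, float]:
    """Return buy (+) / sell (-) amounts that restore the target weights.

    holdings: current market value of each asset (same currency)
    targets: target weight of each asset; weights should sum to 1.0
    drift_threshold: tolerance band in absolute weight (0.05 = 5 percentage
        points), an illustrative value rather than a recommendation
    Assets absent from targets count toward total value but are not traded.
    Returns an empty dict when every asset is still inside its band.
    """
    total = sum(holdings.values())
    if total <= 0:
        raise ValueError("portfolio value must be positive")
    # Current weight of each targeted asset
    weights = {a: holdings.get(a, 0.0) / total for a in targets}
    # Trade only if at least one asset has drifted outside its band
    if all(abs(weights[a] - targets[a]) <= drift_threshold for a in targets):
        return {}
    # Trade every asset back to its target value (costs and taxes ignored)
    return {a: round(targets[a] * total - holdings.get(a, 0.0), 2) for a in targets}

# Hypothetical 60/40 portfolio that has drifted to 70/30 after an equity rally
print(rebalance_trades({"equities": 70_000, "bonds": 30_000},
                       {"equities": 0.6, "bonds": 0.4}))
```

A band rule trades less often than rebalancing on a fixed calendar, which is one reason it is popular when transaction costs matter; the band width is the main parameter to tune.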

Real-Time Risk Analytics

Advanced risk management systems offer:

Stress testing of portfolios under different market conditions

Automated real-time computation of risk metrics

Early detection of hidden risk factors

Portfolio strategy fine-tuning to adjust risk profiles
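As a rough illustration of two of these capabilities, the sketch below computes one-period historical value at risk (VaR) from past portfolio returns and the portfolio return under a stress scenario. It assumes returns are expressed as fractions (-0.02 means -2%) and uses the "inclusive" percentile method of Python's `statistics.quantiles`; other VaR conventions exist and give slightly different numbers. The function names, return series, and scenario shocks are hypothetical and not taken from any particular product.

```python
import statistics
from typing import Dict, List

def historical_var(returns: List[float], tail_pct: int = 5) -> float:
    """Historical VaR at (100 - tail_pct)% confidence, as a positive loss fraction.

    Takes the tail_pct-th percentile of past returns and flips its sign.
    Requires at least two observations.
    """
    if not 1 <= tail_pct <= 99:
        raise ValueError("tail_pct must be between 1 and 99")
    # quantiles(n=100) returns the 1st..99th percentiles; index tail_pct - 1 is the tail_pct-th
    cut_points = statistics.quantiles(returns, n=100, method="inclusive")
    return -cut_points[tail_pct - 1]

def stress_test(weights: Dict[str, float], shocks: Dict[str, float]) -> float:
    """Portfolio return if each asset moves by its scenario shock.

    Assets missing from the scenario are assumed to be unchanged.
    """
    return sum(w * shocks.get(asset, 0.0) for asset, w in weights.items())

# Hypothetical data: 21 daily returns from -1% to +1%
daily_returns = [x / 1000 for x in range(-10, 11)]
print(round(historical_var(daily_returns), 4))  # 95% one-day VaR
crash = {"equities": -0.30, "bonds": 0.05}      # hypothetical scenario
print(round(stress_test({"equities": 0.6, "bonds": 0.4}, crash), 4))
```

In a "real-time" setting, the same functions would simply be re-run as new returns and positions arrive; the calculations themselves do not change.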

Benefits of Technology-Enabled Portfolio Management

Organizations that adopt technology-enabled portfolio management report benefits such as:

45% reduction in portfolio analysis time

35% increase in risk-adjusted returns

60% decrease in manual data processing

40% improvement in client satisfaction scores

Data Analytics for Informed Decisions

Advanced data analytics provide a comprehensive view of market trends through:

Live market data insights

Sentiment analysis of social media and news

Economic indicator tracking with correlation analysis

Competitive landscape monitoring

Portfolio Optimizers

Modern portfolio management solutions can be tailored to individual client needs, offering:

Customized risk profiles

Goal-based investment strategies

Tax-aware trading

Constraint management based on investment goals

Detailed performance attribution and factor analysis

Multilevel reporting and style drift monitoring

ESG and Sustainable Investing

Advanced ESG Integration

ESG (Environmental, Social, and Governance) technology offers:

Automated scoring and screening based on ESG factors

Impact measurement and reporting

Identification of sustainable investment opportunities

Climate risk assessment and monitoring

Enhancing the Client Experience

Technology improves the client experience with:

Interactive portfolio dashboards

Customized reporting

Mobile access to portfolio information

Automated client communications

Tools for assessing individual risk preferences

Cost Efficiency and Scalability

Tech-driven portfolio management reduces operational costs and enhances scalability by:

Minimizing manual intervention

Increasing the capacity and accuracy of portfolio managers

Improving trade reconciliation precision

Supporting regulatory compliance

Future Trends in Portfolio Management Technology

Emerging technologies will continue to shape portfolio management, including:

Blockchain Applications

Blockchain enhances portfolio management through:

Improved transaction security

Faster settlement processes

Transparent asset ownership records

Execution of smart contracts

Advanced Data Analytics

New data analytics techniques include:

Natural language processing in market research

Alternative data integration

Predictive analytics

Real-time market intelligence

Moving Toward a Technology-Intensive Portfolio Management Future

Investment professionals and firms are encouraged to:

Evaluate their current portfolio management processes

Identify areas for technological enhancement

Compare solution providers to ensure a seamless technology transition

Monitor performance metrics to optimize productivity

Embracing Technological Change

Portfolio management services are evolving with increased technology integration, which is crucial for gaining a competitive edge. Financial professionals must understand how this shift impacts both their workflows and their clients.

Ready to transform portfolio management with technology? Consult financial technology experts to explore advanced solutions for optimizing investment strategies and achieving superior client results.

0 notes

Text

Revolutionize your business decisions with SAP Analytics Cloud, the leading SAP software solution that delivers powerful insights and real-time analytics for smarter strategies.

#SAP Analytics Cloud#Business Decision-Making#SAP Software Solution#Business Intelligence Tools#Data-Driven Insights#Real-Time Analytics#Enterprise Analytics#Cloud Analytics Solutions#SAP Business Intelligence#Advanced Data Analytics

1 note

Text

How Can Data Science Predict Consumer Demand in an Ever-Changing Market?

In today’s dynamic business landscape, understanding consumer demand is more crucial than ever. As market conditions fluctuate, companies must rely on data-driven insights to stay competitive. Data science has emerged as a powerful tool that enables businesses to analyze trends and predict consumer behavior effectively. For those interested in mastering these techniques, pursuing an AI course in Chennai can provide the necessary skills and knowledge.

The Importance of Predicting Consumer Demand

Predicting consumer demand involves anticipating how much of a product or service consumers will purchase in the future. Accurate demand forecasting is essential for several reasons:

Inventory Management: Understanding demand helps businesses manage inventory levels, reducing the costs associated with overstocking or stockouts.

Strategic Planning: Businesses can make informed decisions regarding production, marketing, and sales strategies by accurately predicting consumer preferences.

Enhanced Customer Satisfaction: By aligning supply with anticipated demand, companies can ensure that they meet customer needs promptly, improving overall satisfaction.

Competitive Advantage: Organizations that can accurately forecast consumer demand are better positioned to capitalize on market opportunities and outperform their competitors.

How Data Science Facilitates Demand Prediction

Data science leverages various techniques and tools to analyze vast amounts of data and uncover patterns that can inform demand forecasting. Here are some key ways data science contributes to predicting consumer demand:

1. Data Collection

The first step in demand prediction is gathering relevant data. Data scientists collect information from multiple sources, including sales records, customer feedback, social media interactions, and market trends. This comprehensive dataset forms the foundation for accurate demand forecasting.

2. Data Cleaning and Preparation

Once the data is collected, it must be cleaned and organized. This involves removing inconsistencies, handling missing values, and transforming raw data into a usable format. Proper data preparation is crucial for ensuring the accuracy of predictive models.
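The cleaning steps described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production pipeline: it assumes daily sales arrive as hypothetical `(date, units)` pairs with ISO-format dates, keeps the last record for a duplicated date, treats missing or negative unit counts as gaps, and fills gaps by linear interpolation (or with the nearest known value at the edges of the series). The function name `clean_sales` is illustrative.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical input format: (ISO date "YYYY-MM-DD", units sold or None)
Record = Tuple[str, Optional[float]]

def clean_sales(records: List[Record]) -> List[Tuple[str, float]]:
    """Deduplicate, sort, and gap-fill a daily sales series."""
    # 1. Remove duplicates: the last record seen for a date wins
    by_date: Dict[str, Optional[float]] = {}
    for day, units in records:
        by_date[day] = units
    # 2. Sort chronologically (ISO dates sort correctly as strings)
    days = sorted(by_date)
    # 3. Treat missing and impossible (negative) values as gaps
    values = [by_date[d] if by_date[d] is not None and by_date[d] >= 0 else None
              for d in days]
    known = [i for i, v in enumerate(values) if v is not None]
    if not known:
        raise ValueError("no usable observations")
    # 4. Fill gaps: linear interpolation between neighbours, nearest value at the edges.
    #    Positions are treated as evenly spaced, i.e. no calendar days are absent.
    filled: List[float] = []
    for i, v in enumerate(values):
        if v is not None:
            filled.append(v)
            continue
        prev = max((k for k in known if k < i), default=None)
        nxt = min((k for k in known if k > i), default=None)
        if prev is None:
            filled.append(values[nxt])
        elif nxt is None:
            filled.append(values[prev])
        else:
            frac = (i - prev) / (nxt - prev)
            filled.append(values[prev] + frac * (values[nxt] - values[prev]))
    return list(zip(days, filled))

print(clean_sales([("2024-01-03", 30.0), ("2024-01-01", 10.0), ("2024-01-02", None),
                   ("2024-01-01", 12.0), ("2024-01-04", -5.0)]))
```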

3. Exploratory Data Analysis (EDA)

Data scientists perform exploratory data analysis to identify patterns and relationships within the data. EDA techniques, such as data visualization and statistical analysis, help analysts understand consumer behavior and the factors influencing demand.

4. Machine Learning Models

Machine learning algorithms play a vital role in demand prediction. These models can analyze historical data to identify trends and make forecasts. Common algorithms used for demand forecasting include:

Linear Regression: This model estimates the relationship between dependent and independent variables, making it suitable for predicting sales based on historical trends.

Time Series Analysis: Time series models analyze data points collected over time to identify seasonal patterns and trends, which are crucial for accurate demand forecasting.

Decision Trees: These models split data into branches based on decision rules, allowing analysts to understand the factors influencing consumer demand.
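To make the first of these concrete, here is a minimal linear-regression trend forecast in plain Python: it fits sales = intercept + slope * t by ordinary least squares, where t is the period index (0, 1, 2, ...), and extends the fitted line forward. It is only a sketch that assumes demand follows a straight-line trend; it ignores seasonality (which time series models address) and external drivers (which a multivariable regression or a decision tree could use). The function names are hypothetical.

```python
from typing import List, Tuple

def fit_trend(sales: List[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of sales = intercept + slope * t, with t = 0, 1, 2, ..."""
    n = len(sales)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2
    y_mean = sum(sales) / n
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(sales))
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    return intercept, slope

def forecast(sales: List[float], horizon: int) -> List[float]:
    """Extend the fitted trend line `horizon` periods past the last observation."""
    intercept, slope = fit_trend(sales)
    n = len(sales)
    return [intercept + slope * (n + h) for h in range(horizon)]

# Hypothetical monthly unit sales
print(forecast([10, 12, 14, 16], horizon=2))
```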

5. Real-Time Analytics

In an ever-changing market, real-time analytics becomes vital. Data science allows businesses to monitor consumer behavior continuously and adjust forecasts based on the latest data. This agility ensures that companies can respond quickly to shifts in consumer preferences.
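One simple way to adjust a forecast as each new data point arrives is simple exponential smoothing, which updates a single "level" value per observation. The class below is a hedged sketch rather than how any particular platform works: the name `OnlineDemandForecaster` is hypothetical, the smoothing factor `alpha` defaults to an illustrative 0.3, and the model has no trend or seasonal component.

```python
from typing import Optional

class OnlineDemandForecaster:
    """Simple exponential smoothing, updated one observation at a time.

    alpha (0 < alpha <= 1) controls how quickly the forecast reacts to new data:
    higher values weight recent observations more heavily.
    """

    def __init__(self, alpha: float = 0.3) -> None:
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.level: Optional[float] = None

    def update(self, observed: float) -> float:
        """Incorporate the latest observation and return the next-period forecast."""
        if self.level is None:
            self.level = float(observed)  # initialise with the first observation
        else:
            self.level = self.alpha * observed + (1 - self.alpha) * self.level
        return self.level

forecaster = OnlineDemandForecaster(alpha=0.3)
for units in [120, 135, 128, 160]:  # hypothetical stream of daily sales
    print(f"observed {units}, next-period forecast {forecaster.update(units):.1f}")
```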

Professionals who complete an AI course in Chennai gain insights into the latest machine learning techniques used in demand forecasting.

Why Pursue an AI Course in Chennai?

For those looking to enter the field of data science and enhance their skills in predictive analytics, enrolling in an AI course in Chennai is an excellent option. Here’s why:

1. Comprehensive Curriculum

AI courses typically cover essential topics such as machine learning, data analysis, and predictive modeling. This comprehensive curriculum equips students with the skills needed to tackle real-world data challenges.

2. Hands-On Experience

Many courses emphasize practical, hands-on learning, allowing students to work on real-world projects that involve demand forecasting. This experience is invaluable for building confidence and competence.

3. Industry-Relevant Tools

Students often learn to use industry-standard tools and software, such as Python, R, and SQL, which are essential for conducting data analysis and building predictive models.

4. Networking Opportunities

Enrolling in an AI course in Chennai allows students to connect with peers and industry professionals, fostering relationships that can lead to job opportunities and collaborations.

Challenges in Predicting Consumer Demand

While data science offers powerful tools for demand forecasting, organizations may face challenges, including:

1. Data Quality

The accuracy of demand predictions heavily relies on the quality of data. Poor data quality can lead to misleading insights and misguided decisions.

2. Complexity of Models

Developing and interpreting predictive models can be complex. Organizations must invest in training and resources to ensure their teams can effectively utilize these models.

3. Rapidly Changing Markets

Consumer preferences can shift rapidly due to various factors, such as trends, economic changes, and competitive pressures. Businesses must remain agile to adapt their forecasts accordingly.

The curriculum of an AI course in Chennai often includes hands-on projects that focus on real-world applications of predictive analytics.

Conclusion

Data science is revolutionizing how businesses predict consumer demand in an ever-changing market. By leveraging advanced analytics and machine learning techniques, organizations can make informed decisions that drive growth and enhance customer satisfaction.

For those looking to gain expertise in this field, pursuing an AI course in Chennai is a vital step. With a solid foundation in data science and AI, aspiring professionals can harness these technologies to drive innovation and success in their organizations.

#predictive analytics#predictivemodeling#predictiveanalytics#predictive programming#consumer demand#consumer behavior#demand analysis#machinelearning#machine learning#technology#data science#ai#artificial intelligence#Data science course#AI course#AI course in Chennai#Data science course in Chennai#Real-Time Analytics#Data Collection#Data Cleaning

0 notes

Text

Ethical and Privacy Issues in Big Data

The ethical dilemmas of Big Data Analytics revolve around three crucial aspects: privacy, security, and bias.

Developing ethical AI and responsible data handling practices is crucial for navigating the challenges of Big Data Analytics. This involves establishing clear guidelines for data use, conducting impact assessments, and fostering a culture of ethical behavior within organizations.

We are a team of experts who help clients reach a wider audience online. We design websites that improve user experience and meet customer expectations.

Feather Softwares specializes in social media marketing that helps businesses reach more customers. We create paid social media ads to increase sales and share useful content that helps customers.

Are you looking for a more impactful brand? Feather Softwares provides high-level instructional content that positions you as a leader in your field and improves your online visibility through SEO, making it easier for customers to discover your business.

For Business Enquiries- https://formfacade.com/sm/xvjfh3dkM For Course Enquiries - https://formfacade.com/sm/RD0FNS_ut

#data analytics#data mining services#Predictive Analytics#machine learning#Real-time Analytics#digital marketing#marketing#seo#graphic design#online visibility#ecommerce

0 notes

Text

Unveiling the Power of Delta Lake in Microsoft Fabric

Discover how Microsoft Fabric and Delta Lake can revolutionize your data management and analytics. Learn to optimize data ingestion with Spark and unlock the full potential of your data for smarter decision-making.

In today’s digital era, data is the new gold. Companies are constantly searching for ways to efficiently manage and analyze vast amounts of information to drive decision-making and innovation. However, with the growing volume and variety of data, traditional data processing methods often fall short. This is where Microsoft Fabric, Apache Spark and Delta Lake come into play. These powerful…

#ACID Transactions#Apache Spark#Big Data#Data Analytics#data engineering#Data Governance#Data Ingestion#Data Integration#Data Lakehouse#Data management#Data Pipelines#Data Processing#Data Science#Data Warehousing#Delta Lake#machine learning#Microsoft Fabric#Real-Time Analytics#Unified Data Platform

0 notes

Text

How DeepSeek AI Revolutionizes Data Analysis

1. Introduction: The Data Analysis Crisis and AI’s Role

2. What Is DeepSeek AI?

3. Key Features of DeepSeek AI for Data Analysis

4. How DeepSeek AI Outperforms Traditional Tools

5. Real-World Applications Across Industries

6. Step-by-Step: Implementing DeepSeek AI in Your Workflow

7. FAQs About DeepSeek AI

8. Conclusion

1. Introduction: The Data Analysis Crisis and AI’s Role

Businesses today generate…

#AI automation trends#AI data analysis#AI for finance#AI in healthcare#AI-driven business intelligence#big data solutions#business intelligence trends#data-driven decisions#DeepSeek AI#ethical AI#ethical AI compliance#Future of AI#generative AI tools#machine learning applications#predictive modeling 2024#real-time analytics#retail AI optimization

3 notes

Text

We don't just offer traditional communication solutions. We're at the forefront of innovation, leveraging the power of AI to take your interactions to the next level. Experience the difference with crystal-clear VoIP calls, effortless SMS solutions, and AI-powered features. https://bit.ly/4cG4F2U #GlanceTelecom #AI #Communication #Results #VoIP #SMS #BusinessSuccess #CloudSolutions #CustomerSuccess Glance Telecom

#AI-powered communication solutions#VoIP solutions#Call center solutions#SMS solutions#Global communication#Customer service#Cutting-edge technology#Reliable communication#Cost-effective solutions#Customer satisfaction#Business growth#Building relationships#AI-powered call center software#Best VoIP providers for businesses#How to improve customer service with SMS#Global communication solutions for enterprises#Increase customer loyalty through communication#VoIP phone systems#VoIP for business#Omnichannel contact center solution#Automated call distribution#Bulk SMS marketing#Interactive voice response (IVR) system#Call center compliance#VoIP call center#Global reach#Real-time analytics

1 note

Text

Lab Analyzers Interfacing: Bridging the Gap Between Data and Action

In the dynamic landscape of laboratory operations, Lab Analyzers Interfacing, the seamless integration of laboratory analyzers with other laboratory systems, plays a pivotal role in transforming raw data into actionable insights. By bridging the gap between data generation and actionable outcomes, these interfaces facilitate efficient decision-making and enhance overall laboratory performance.

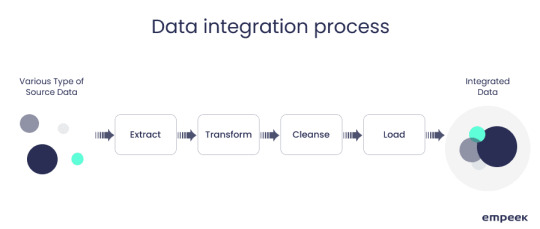

Seamless Data Integration: Data integration is the cornerstone of effective lab analyzer interfacing. It enables disparate laboratory instruments and systems to communicate, so that data flows smoothly throughout the laboratory ecosystem. With integrated data, laboratories can consolidate information from various sources, such as analyzers, LIS (Laboratory Information Systems), and EMR (Electronic Medical Records), enabling comprehensive analysis and reporting.

Real-time Analytics: Leveraging real-time analytics, laboratories can gain immediate insights into test results and performance metrics. By analyzing data as it is generated, laboratory professionals can identify trends, anomalies, and potential issues in real time, enabling proactive decision-making and intervention. Real-time analytics empower laboratories to optimize workflows, prioritize tasks, and deliver timely results to healthcare providers and patients.

Workflow Automation: Workflow automation streamlines laboratory processes by automating routine tasks and optimizing resource utilization. Through workflow automation, tasks such as sample handling, testing, and result reporting can be automated, reducing manual errors and accelerating turnaround times. By automating repetitive tasks, laboratories can enhance efficiency, improve throughput, and allocate resources more effectively.

Quality Assurance Measures: Maintaining quality assurance is paramount in laboratory operations to ensure the accuracy and reliability of test results. Lab analyzer interfacing enables the implementation of robust quality assurance measures, including instrument calibration, proficiency testing, and result validation. By enforcing stringent quality control protocols, laboratories can uphold the highest standards of accuracy and reliability in diagnostic testing.

Decision Support Systems: Integrated decision support systems empower laboratory professionals with actionable insights and recommendations based on data analysis. These systems leverage advanced algorithms and machine learning techniques to assist in result interpretation, diagnosis, and treatment planning. By providing evidence-based guidance, decision support systems enable laboratories to deliver more informed and personalized care to patients.
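Result validation of the kind described under quality assurance commonly relies on rules applied to control-sample results; the Westgard rules are one widely used scheme. The sketch below checks two of them, 1-3s and 2-2s, assuming the control material's target mean and standard deviation are already known from the lab's own data. It illustrates the technique only; it is not the logic of any specific interfacing product, and a real QC program applies more rules across multiple control levels. The function name is hypothetical.

```python
from typing import List

def westgard_check(controls: List[float], mean: float, sd: float) -> List[str]:
    """Apply two Westgard rules to a run of control results (oldest first).

    1-3s: any single control result outside mean +/- 3 SD -> reject the run.
    2-2s: two consecutive results beyond the same mean + 2 SD or mean - 2 SD limit -> reject.
    Returns the violated rules; an empty list means the run is accepted.
    """
    if sd <= 0:
        raise ValueError("standard deviation must be positive")
    z = [(x - mean) / sd for x in controls]  # distance from the mean in SD units
    violations = []
    if any(abs(v) > 3 for v in z):
        violations.append("1-3s")
    if any((a > 2 and b > 2) or (a < -2 and b < -2) for a, b in zip(z, z[1:])):
        violations.append("2-2s")
    return violations

# Hypothetical control material with target mean 100 and SD 2
print(westgard_check([101, 99, 100.5], mean=100, sd=2))
print(westgard_check([100, 106.5, 105], mean=100, sd=2))
```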

In conclusion, Lab Analyzers Interfacing plays a crucial role in bridging the gap between data generation and actionable outcomes in laboratory settings. Through seamless data integration, real-time analytics, workflow automation, quality assurance measures, and decision support systems, laboratories can enhance efficiency, accuracy, and overall performance, ultimately improving patient care and outcomes.

#Seamless Data Integration#Real-time Analytics#Workflow Automation#Quality Assurance Measures#Decision Support Systems

0 notes

Text

Business Intelligence: A Necessity for Today’s CFO

This informative piece delves into how CFOs utilize business intelligence tools to improve decision-making, optimize resource allocation, and drive strategic growth. From real-time analytics to predictive modeling, it highlights the transformative potential of data in shaping financial strategies. Explore how CFOs leverage actionable insights to manage risks, identify opportunities, and stay ahead in dynamic markets. Enter the world of business intelligence and uncover why it has become a must-have for today's CFOs who navigate complex business landscapes with agility and precision.

For more information download our whitepaper at https://pathquest.com/knowledge-center/whitepaper/a-necessity-for-todays-cfo/

0 notes

Text

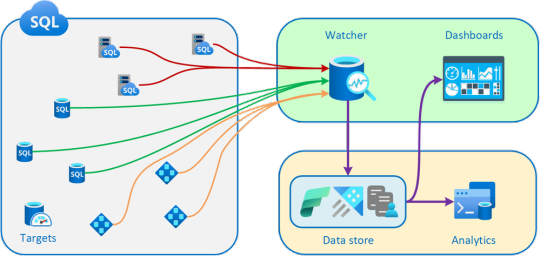

Database Watcher: Monitoring for Azure SQL

Introduction

If you’re running mission-critical workloads on Azure SQL Database or Managed Instance, reliable performance monitoring is a must. But deploying monitoring infrastructure and wrangling telemetry data can be complex and time-consuming. Wouldn’t it be great if you could enable in-depth SQL monitoring with just a few clicks and have all the key metrics at your fingertips? Now you…

0 notes

Text

7 Common Causes of Equipment Failure That Lead to Unplanned Downtime

Equipment failures are an inherent part of operating machinery. Their aftermath varies widely, from easily rectifiable situations with minimal losses to catastrophic events that leave a lasting impact.

In this article, we will discuss some common causes of equipment failure and how to deal with them more systematically.

So, let’s get started.

What is Equipment Failure?

Equipment failure refers to the sudden or gradual malfunction of machinery, tools, or devices used in various industries. This breakdown can stem from a variety of factors, ranging from poor maintenance practices to external influences.

7 Common Causes of Equipment Failure

Understanding the underlying causes of equipment failure is essential for preventing unplanned breakdowns. By identifying these typical bottlenecks, organizations can take preventive measures, reduce downtime, and increase operational efficiency.

Let’s explore the seven common causes of equipment failure.

1. Too Much Dependence on Reactive Maintenance

2. Aging Infrastructure and Outdated Technology

3. Inadequate Training and Skill Development

4. Lack of Planned Preventive Maintenance

5. Overlooked Software and Control System Issues

6. Absence of Repair vs. Replacement Strategy

7. Poor Environmental Conditions

Impact of Equipment Failure on Productivity

The repercussions of equipment failure extend beyond the physical components. The ripple effect encompasses financial losses, operational disruptions, and potential long-term consequences that can impact an organization’s reputation and competitiveness. Understanding the multifaceted impact is crucial for developing strategies to mitigate the fallout of unexpected equipment failures.

Downtime Costs

Operational Disruptions

Decreased Output

Increased Labor Costs

Quality Issues

Maintenance Management Software – Ultimate Solution to Equipment Failure

Proactively managing equipment maintenance is pivotal for preventing failures and optimizing productivity. One of the cornerstones of modern maintenance strategies is a computerized maintenance management system (CMMS).

With CMMS, organizations can transition from a reactive approach to a proactive and data-driven maintenance strategy, significantly reducing the risk of equipment failures.

Benefits of Maintenance Management Software

Preventive Maintenance Planning

Historical Data Tracking

Inventory Management

Work Order Management

Real-time Analytics and Reporting
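Much of the analytics and reporting in maintenance software comes down to standard reliability metrics computed from work-order history. A minimal sketch of three of them, assuming one asset's total operating hours and the repair time of each failure are available (the function name is hypothetical): mean time between failures (MTBF = operating hours / number of failures), mean time to repair (MTTR = total repair hours / number of failures), and inherent availability (MTBF / (MTBF + MTTR)).

```python
from typing import List, Tuple

def reliability_metrics(operating_hours: float,
                        repair_durations: List[float]) -> Tuple[float, float, float]:
    """Return (MTBF, MTTR, availability) for one asset over a reporting period.

    operating_hours: total uptime over the period
    repair_durations: hours spent repairing each failure in the period
    """
    failures = len(repair_durations)
    if failures == 0:
        raise ValueError("no failures recorded; MTBF is undefined for this period")
    mtbf = operating_hours / failures
    mttr = sum(repair_durations) / failures
    availability = mtbf / (mtbf + mttr)
    return mtbf, mttr, availability

# Hypothetical log: 1,000 operating hours, four failures
mtbf, mttr, availability = reliability_metrics(1000, [4, 6, 5, 5])
print(f"MTBF {mtbf:.0f} h, MTTR {mttr:.1f} h, availability {availability:.2%}")
```

Tracking these figures per asset over time is one way to tell whether a shift from reactive to preventive maintenance is actually paying off.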

Conclusion

As we’ve explored the common causes and far-reaching impacts of equipment failure, it becomes evident that organizations must embrace modern solutions to mitigate these challenges. Maintenance Management Software, exemplified by the robust capabilities of a CMMS solution, emerges as the ultimate solution to transform maintenance from a reactive burden to a proactive advantage.

If you want to explore how Equipment Maintenance Management Software can help you reduce your equipment failure rate and optimize your maintenance processes, connect with our experts now or write to us at [email protected]

#Maintenance Management Software#Preventive Maintenance#Inventory Management#Work Order Management#Real-Time Analytics#CMMS Software#Equipment Failure#Unplanned Downtime#TeroTAM

0 notes

Text

Analyzing the Risks and Rewards of Copy Trading: Insights from India’s Trading Streets

In the bustling markets of India, where traders skillfully balance risk and reward, a similar scene unfolds in the realm of Forex trading. At www.DecodeEX.com, a platform resonating with the vibrancy and strategic depth of India’s markets, copy trading emerges as a popular strategy. This approach, mirroring the decisions of experienced traders, is akin to a seasoned merchant sharing his trade secrets with an apprentice. Today, we journey through the intricacies of copy trading, weighing its potential profits and pitfalls, much like a careful trader in an Indian bazaar.

The Dual Edges of Copy Trading

Like the double-edged blade of a Rajput warrior, copy trading offers both significant rewards and risks. It’s essential to navigate this strategy with the wisdom and caution of an experienced trader in India’s ancient markets.

The Reward: Amplifying Profits through Expertise

Leveraging Expertise: Copy trading allows less experienced traders to benefit from the strategies of market maestros, much like learning from a master craftsman.

Diversification of Strategy: It opens doors to diverse trading styles and strategies, broadening the investment horizon, akin to exploring different trades in a bustling Indian market.

The Risk: The Flip Side of Following

Market Volatility: Just as a sudden monsoon can disrupt a market, unexpected market shifts can impact the effectiveness of copied strategies.

Dependency on Experts: Over-reliance on expert traders can be risky, akin to depending solely on a single vendor in a diverse marketplace.

Strategies to Mitigate Risks in Copy Trading

Navigating the world of copy trading requires the shrewdness of a seasoned trader wandering through the narrow lanes of an Indian bazaar, where each decision is crucial.

Research and Select Wisely: Just as a discerning buyer in India would carefully choose a vendor, select traders to copy based on thorough research and analysis of their track record.

Understand the Strategies: Gain insights into the trading strategies of the experts, much like understanding the quality of goods one is purchasing.

Set Limitations: Establish clear boundaries for copy trading, akin to setting a budget before entering a market.

The Role of DecodeEX in Balancing the Scales

At www.DecodeEX.com, traders find a platform that mirrors the dynamism and diversity of India’s markets, offering tools and insights to balance the scales in copy trading.

User-Friendly Interface: The platform is as welcoming and navigable as a well-organized marketplace, suitable for traders of all experience levels.

Real-Time Analytics: Just as a trader keeps an eye on the market trends, DecodeEX provides real-time analytics to stay ahead of market changes.

Risk Management Tools: The platform offers robust tools for managing risks, akin to the safety measures a prudent trader takes in safeguarding his wares.

Conclusion: The Art of Balanced Trading

In conclusion, copy trading at www.DecodeEX.com requires a balanced approach, much like the art of trading in India’s bustling markets. It calls for the wisdom to discern, the caution to mitigate risks, and the willingness to learn continuously. As in the vibrant markets of India, success in copy trading lies in navigating both its risks and rewards with knowledge, strategy, and an open mind.

0 notes