#raw processing software

Text

this chem software is infuriatingly proprietary

I knew I was going to have to deal with this down the line because it was obvious this data wasn't being managed correctly; I hoped it was. but it wasn't, and now I'm opening each file and writing down metadata.

Pretty sure there's python code to do this metadata collection, and I'm a little annoyed I'm not better at coding to whip something up to circumvent the way this was organized. It's one of those things where it'd take longer to put the script together than just cranking through. Plus learning a new library is a pain. Ideally I won't have to repeat this process either; when I do it myself I'll know how to manage it.
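A minimal sketch of the kind of script this could be, assuming all you can reach without the vendor software is file-system metadata (name, size, modified time). The function names, the `*` pattern, and the CSV columns are illustrative choices, not anything from the chem software; pulling instrument metadata out of the proprietary files themselves would need a format-specific reader this sketch does not attempt.

```python
import csv
from datetime import datetime, timezone
from pathlib import Path


def collect_metadata(root, pattern="*"):
    """Return one dict per file under root with basic file-system metadata."""
    rows = []
    for path in sorted(Path(root).rglob(pattern)):
        if not path.is_file():
            continue  # skip directories
        stat = path.stat()
        modified = datetime.fromtimestamp(stat.st_mtime, tz=timezone.utc)
        rows.append({
            "path": str(path.relative_to(root)),
            "suffix": path.suffix.lower(),
            "size_bytes": stat.st_size,
            "modified_utc": modified.isoformat(timespec="seconds"),
        })
    return rows


def write_index(rows, out_csv):
    """Write the collected rows to a CSV file that can be searched or sorted."""
    fields = ["path", "suffix", "size_bytes", "modified_utc"]
    with open(out_csv, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fields)
        writer.writeheader()
        writer.writerows(rows)
```

Something like `write_index(collect_metadata("data"), "index.csv")` would give a searchable list of files; the hand-written notes per file could then be added as extra columns in the same sheet.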

#I think 90% of research is data management. I got lucky that I failed so much at my job as practice tbh >.>#Aside though: any software that doesn't export a raw data file to txt/csv should be taken out back.#I should investigate opening these with different kinds of software tbh. But I'd still need to do this process because the metadata#wouldn't be in a reference-able format for me to find the files I need...#/makes frustrated hand gestures#c'est la vie~#ptxt#alright break over lol

5 notes

Text

SHOULD WE BE USING PRESETS?

Let me show you two photographs: Prouts Neck, Maine, USA, original photograph as taken. Prouts Neck, Maine, USA, photograph after being modified with a Preset available within ON1 Photo RAW 2024. Can I really claim that the second photo is mine? Was I the photographer who created the image that you see? Have I departed from true photography by using a Preset created by someone else? Or doesn’t…

#composition#ethical photography#image processing#landscape photography#new photographer#ON1 Photo RAW#photographic software#photography#photography ideas#Presets in photography#starting in photography

0 notes

Text

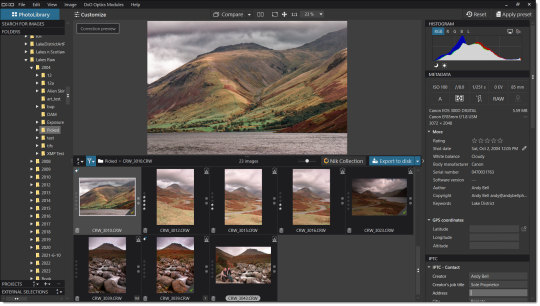

DXO Pure Raw 4

Comparing DXO Pure Raw 4 to Topaz Photo AI version 2.4 in my real-world comparison, using each as a pre-processor to Adobe Camera Raw and Photoshop.

The Problem: Unfortunately it has come to my attention that all of my software licenses are expiring this month. That includes DXO Pure Raw 3, and all the Topaz products including Gigapixel, and Photo AI. The two standalone products Sharpen AI and…

#books for sale#Colorado photography books#Colorado wall art#DXO#DXO Pure Raw#DXO Pure Raw 4#photo preprocessing#photography books#photography software#Pictures for sale#preprocessing#raw image conversion#raw images#raw photography#raw processing#Software#software testing#sofware comparison#Topaz 2.4#Topaz Photo AI 2.4

0 notes

Text

DXO Photo Lab 7 Review

This is a look at DXO Photo Lab, a review of its features. This time, I am not comparing it to anything else, although I will refer to comparisons I have made previously, when appropriate.

Introduction to DXO Photo Lab 7: DXO Photo Lab 7, which I will simply refer to as DXO from now on, is a complete RAW image processor, a decent image…

0 notes

Text

"When Ellen Kaphamtengo felt a sharp pain in her lower abdomen, she thought she might be in labour. It was the ninth month of her first pregnancy and she wasn’t taking any chances. With the help of her mother, the 18-year-old climbed on to a motorcycle taxi and rushed to a hospital in Malawi’s capital, Lilongwe, a 20-minute ride away.

At the Area 25 health centre, they told her it was a false alarm and took her to the maternity ward. But things escalated quickly when a routine ultrasound revealed that her baby was much smaller than expected for her pregnancy stage, which can cause asphyxia – a condition that limits blood flow and oxygen to the baby.

In Malawi, about 19 out of 1,000 babies die during delivery or in the first month of life. Birth asphyxia is a leading cause of neonatal mortality in the country, and can mean newborns suffering brain damage, with long-term effects including developmental delays and cerebral palsy.

Doctors reclassified Kaphamtengo, who had been anticipating a normal delivery, as a high-risk patient. Using AI-enabled foetal monitoring software, further testing found that the baby’s heart rate was dropping. A stress test showed that the baby would not survive labour.

The hospital’s head of maternal care, Chikondi Chiweza, knew she had less than 30 minutes to deliver Kaphamtengo’s baby by caesarean section. Having delivered thousands of babies at some of the busiest public hospitals in the city, she was familiar with how quickly a baby’s odds of survival can change during labour.

Chiweza, who delivered Kaphamtengo’s baby in good health, says the foetal monitoring programme has been a gamechanger for deliveries at the hospital.

“[In Kaphamtengo’s case], we would have only discovered what we did either later on, or with the baby as a stillbirth,” she says.

The software, donated by the childbirth safety technology company PeriGen through a partnership with Malawi’s health ministry and Texas children’s hospital, tracks the baby’s vital signs during labour, giving clinicians early warning of any abnormalities. Since they began using it three years ago, the number of stillbirths and neonatal deaths at the centre has fallen by 82%. It is the only hospital in the country using the technology.

“The time around delivery is the most dangerous for mother and baby,” says Jeffrey Wilkinson, an obstetrician with Texas children’s hospital, who is leading the programme. “You can prevent most deaths by making sure the baby is safe during the delivery process.”

The AI monitoring system needs less time, equipment and fewer skilled staff than traditional foetal monitoring methods, which is critical in hospitals in low-income countries such as Malawi, which face severe shortages of health workers. Regular foetal observation often relies on doctors performing periodic checks, meaning that critical information can be missed during intervals, while AI-supported programs do continuous, real-time monitoring. Traditional checks also require physicians to interpret raw data from various devices, which can be time consuming and subject to error.

Area 25’s maternity ward handles about 8,000 deliveries a year with a team of around 80 midwives and doctors. While only about 10% are trained to perform traditional electronic monitoring, most can use the AI software to detect anomalies, so doctors are aware of any riskier or more complex births. Hospital staff also say that using AI has standardised important aspects of maternity care at the clinic, such as interpretations on foetal wellbeing and decisions on when to intervene.

Kaphamtengo, who is excited to be a new mother, believes the doctor’s interventions may have saved her baby’s life. “They were able to discover that my baby was distressed early enough to act,” she says, holding her son, Justice.

Doctors at the hospital hope to see the technology introduced in other hospitals in Malawi, and across Africa.

“AI technology is being used in many fields, and saving babies’ lives should not be an exception,” says Chiweza. “It can really bridge the gap in the quality of care that underserved populations can access.”"

-via The Guardian, December 6, 2024

#cw child death#cw pregnancy#malawi#africa#ai#artificial intelligence#public health#infant mortality#childbirth#medical news#good news#hope

900 notes

Text

If you're not willing to pay for Adobe, then I strongly recommend RawTherapee as a raw processor.

I don't use it nearly to its full potential (afaik it can functionally replace Lightroom), but as just a raw processor it offers some very deep control, all the way down to choosing which demosaic algorithm you want to use.

About the only thing that I've found that the RawTherapee and GIMP combo can't easily do is panoramas and auto HDR (both of which, iirc, used or were based on the Photomerge feature in Photoshop).

@spoonyglitteraunt There are some free or low-cost RAW editors out there. I haven't researched them for a while, so I don't know what is good these days. I think they are mostly phone apps. But I'm sure you can find a "Top 5" list or something on Google. Or someone might leave a suggestion in the replies.

However, Adobe might be cheaper than you realize. They have the photography bundle that includes Lightroom and Photoshop for $10 per month. And as long as you do the monthly plan, you can cancel without any fees.

I don't know if that is out of your budget, but I did want to make everyone aware of it.

Some people despise subscriptions, but I personally find 10 bucks a month more accessible than a one time $1000 fee. And pirated Photoshop could get super buggy sometimes. I also like that I get access to the beta and all the new features as they are released.

So the subscription model has worked well for me personally, but others find it frustrating not owning their software.

In any case, I do hope you experiment even if it is with your phone. Good luck!

18 notes

Text

How to be a Dirtbag Fic Writer

I got to do some talking about writing today and I couldn’t stop thinking about it so here are my full thoughts on the matter of being a dirtbag fic writer.

Being the disorganized thoughts of someone two and a half decades into the beautiful mess that is writing fanfic (and a few non-fanfic things too).

What is a dirtbag fic writer?

I am talking about someone who is not cleaning up anything. We show up filthy, fresh out of rooting around in the garden of our imaginations. We probably smell a little from work. We will hand you our hard grown fruits, but we have not washed them and we carried them in the bottom upturned parts of our t-shirts. The fruit is a little bruised. It’s not cut up or put in a bowl yet. But we got it in the house! It’s here. Someone can eat it.

Why dirtbag it? Because the fruit gets in the house. If you’re hemming and hawing, if the idea you want to do seems to be big or you want it perfect and shiny. If you’re imagining a ten thousand step process, so you’re not taking the first step? Dirtbag it.

How do I dirtbag?

That’s the best part. You just write. Sit down. One word after the other. No outline, no plan, no destination. No thought of editing. Just word vomit. Every word is a good word. It’s a word that wasn’t there before. Grammar sucks? Who cares. Can’t think of the perfect word? Fuck it, put in the simplest version of what you mean.

Write the idea that you love. The one thing you want to say. Has it been done 3000000 times? WHO CARES human history is long, every idea has been done, probably more than twice. YOU have never written it before. It’s your grubby potato that you clawed out of the ground and guess what someone can still make it into delicious french fries.

Now here’s the critical part. Write as much as you can squeeze out of your brain. One word in front of the other.

And then I challenge you this: at most, read it over once and then put it into the world. Just as it is. AND THIS IS IMPORTANT: DO IT WITHOUT APOLOGY OR CAVEAT. I challenge you, beautiful dirtbag, to not pre-emptively apologize. Do not make your work lesser. THAT IS YOUR POTATO! It has eyes and roots and dirt clinging to it because that is what happens. We are dirtbagging it today. Hell, really confuse people and put #dirtbagwriter on it.

Dirtbag writes id, base, lizard brain. Dig in the fertile garden of your imagination. What is the story you tell yourself before you fall asleep? What’s your anxiety this week? Your fantasy? What is going well? What do you wish things looked like? Who is the feral imaginary character you’ve been crafting to take your frustrations and joys out on?

But, VEE, I wish to have an editor and an outline, use a cool software like scrivener instead of retching up onto a google doc and making it look NICE and PRETTY!

COOL! DO THAT THEN! IF YOU’RE ACTUALLY DOING IT! You should have a process! That’s cool and healthy and necessary for sustainable writing. But if you’re not writing because all of that seems too much? THEN DON’T.

Did you know fic is free? That we do this from love? From sheer desire? For the love of the game? If you have a process, and the words are flowing, amazing, I love that for you, you don’t need this essay. If you don’t, let us continue.

What does dirtbag writing look like?

It’s messy. It’s a little raw and tatty around the edges sometimes. It’s weird. It’s someone else’s first draft. Maybe it winds up being your first draft, Idek, that’s your business.

It’s jokes that make YOU laugh. It’s drama that would make YOU cry if you read it. You are your first commenter. You are your first audience (and possibly continuing pleasure! If you don’t go back and reread your own work sometimes, you might be missing out on one of your favorite authors cause you wrote it for you! Wait until you’re not so close to it. Years sometimes. Then hey, maybe some of this is pretty dang good actually.)

It has mistakes.

Dirtbags make mistakes, but dirtbags have published pieces. They have things other people can read out there.

What if I don’t get good feedback?

Look, the most likely outcome for any new, untried fic writer (and even established writers trying something new-ish) is that you get no feedback. That’s real. Silence. It’s eerie, it’s terrible, it sucks. I don’t want to pretend it doesn’t. But nothing is not negative. It’s a big fic-y ocean out there and we are all wee itty-bitty-sometimes-with-titty fishes.

You should still do it all over again. And again. And again. You get better at writing by writing. You just do. Nothing else replaces it. If your well is dry? Fill it with new things. Go do something new, read a new kind of book, watch a new film, (libraries have so much good shit, you don’t even have to spend money for so many things if you have a library card), just go for a walk in a new direction. Stimulate yourself. Go get a cup of something hot and eavesdrop on conversations. Refill yourself with newness.

And hey, speaking of, do you leave comments? Because you get what you give. You can build relationships with people by commenting and that builds community and community means places to get feedback in the end. Comments are gold. They are all we are paid in. Tip your writers with ‘extra kudos’ or ‘this made me laugh’. And hey, when you go back for a re-read so you can tell them your favorite part? Ask yourself how they made that favorite part? What do you like about it? Tone? Metaphor? The structure? Reading teaches us how to write too!

BUT, okay. Sometimes. Sometimes there is actual bad feedback and people suck.

You know the best part about being a dirtbag? Unrepentant block, delete, goodbye. You don’t owe anyone with a shitty opinion any of your precious time on this earth. You did it for free, you gave them your dirty, but still delicious fruit and they went ‘ew, this is a dirty strawberry, how could you not make a clean tomato?’ Because you didn’t plant fucking tomatoes, did you? Don’t fight, don’t engage. Block. Delete. Goodbye.

If someone, in person, looked you in the eye when you brought them a plate of food to share at a party and said “Why didn’t you bring me MY favorite? This isn’t cooked well at all.” You would probably write up a Reddit AiTA question about it just to hear five thousand people say they were an asshole. Fic is no different.

And hey, when you dirtbag it? You know you did. It’s not your most cleaned up perfect version. So who cares what they think? You might make it more shiny and polished next time! You might NOT.

Ok, but what if I don’t finish it?

Fuck it, post it anyway.

What if it’s bad?

Fuck it, post it anyway.

What if it doesn’t make sense?

That’s ART, baby. Fuck it, post it anyway.

What if what I want to write doesn’t work with current fandom norms?

Then someone out there probably needs it! And what the hell is this? The western canon? FUCK IT POST IT ANYWAY*

*Basic human decency is not a ‘fandom norm’. Don’t be racist, sexist, ableist, fat shaming, classist or shitty about anyone's identity on main, okay? Dirtbag writers are KIND first and foremost. Someone saying you are stepping into shit about their identity is not the same as unsolicited crappy feedback about pairings. In the immortal words of Kurt Vonnegut: “God damn it, you've got to be kind.”

You’re being very flippant about something that’s scary.

I know. I know I am. I know it can be scary. But no risk, no reward and hell, you aren’t using your goddamn legal name on the internet are you? (please for the love of fuck do not be using your legal name to write fic) You’ve got on a mask. You’re a superhero. With dirt on your cape.

That niche thing that you think no one cares about? Guaranteed you will find someone else in the world who wants it. Maybe they won’t find it right away. Maybe they will be too shy to comment or even hit a button. But your dirty potato will stick with them. They will make french fries in their head.

You have an audience. But they can’t find you if you have nothing out there.

Go forth. Make.

You have some errors in this essay.

PROBABLY CAUSE I DIRTBAGGED IT. But I picked this strawberry for you out of my brain, so I hope you run it under some cold water and find the good bits and have a nice snack. Or throw it away. Or use it to plant more strawberries (I know that’s not how strawberries work, metaphors break when stretched).

#dirtbagwriter

Go forth and MAKE

#writing#i'm not an expert#I just have been doing this a long time#and these are my feels#please feel free to throw away this strawberry

893 notes

Note

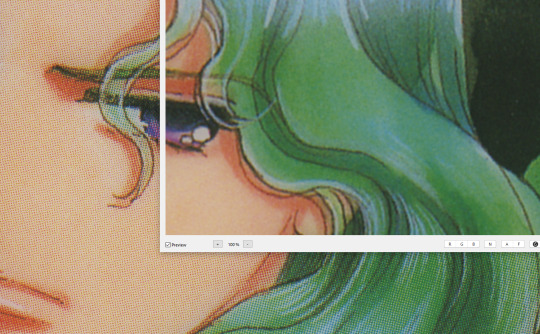

May I ask what scanners / equipment / software you're using in the utena art book project? I'm an artist and half the reason I rarely do traditional art is because I'm never happy with the artwork after it's scanned in. But the level of detail even in the blacks of Utena's uniform were all captured so beautifully! And even the very light colors are showing up so well! I'd love to know how you manage!

You know what's really fun? This used to be something you put in your site information section, the software and tools used! Not something that's as normal anymore, but let's give it a go, sorry it's long because I don't know what's new information and what's not! Herein: VANNA'S 'THIS IS AS SPECIFIC AS MY BREAK IS LONG' GUIDE/AIMLESS UNEDITED RAMBLE ABOUT SCANNING IMAGES

Scanning: Modern scanners, by and large, are shit for this. The audience for scanning has narrowed to business and work from home applications that favor text OCR, speed, and efficiency over archiving and scanning of photos and other such visual media. It makes sense--there was a time when scanning your family photographs and such was a popular expected use of a scanner, but these days, the presumption is anything like that is already digital--what would you need the scanner to do that for? The scanner I used for this project is the same one I have been using for *checks notes* a decade now. I use an Epson Perfection V500. Because it is explicitly intended to be a photo scanner, it does three things that at this point, you will pay a niche user premium for in a scanner: extremely high DPI (dots per inch), extremely wide color range, and true lossless raws (BMP/TIFF.) I scan low quality print media at 600 dpi, high quality print media at 1200 dpi, and this artbook I scanned at 2400 dpi. This is obscene and results in files that are entire GB in size, but for my purposes and my approach, the largest, clearest, rawest copy of whatever I'm scanning is my goal. I don't rely on the scanner to do any post-processing. (At these sizes, the post-processing capacity of the scanner is rendered moot, anyway.) I will replace this scanner when it breaks by buying another identical one if I can find it. I have dropped, disassembled to clean, and abused this thing for a decade and I can't believe it still tolerates my shit. The trade off? Only a couple of my computers will run the ancient capture software right. LMAO. I spent a good week investigating scanners because of the insane Newtype project on my backburner, and the quality available to me now in a scanner is so depleted without spending over a thousand on one, that I'd probably just spin up a computer with Windows 7 on it just to use this one. That's how much of a difference the decade has made in what scanners do and why.
(Enshittification attacks! Yes, there are multiple consumer computer products that have actually declined in quality over the last decade.)
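To put a rough number on "entire GB in size", here is a back-of-the-envelope sketch of uncompressed scan size. The 8.5 x 11 inch page and 24-bit RGB color are assumptions for illustration only; the actual artbook dimensions and bit depth are not stated above, and real TIFF/BMP files add a little header overhead.

```python
def uncompressed_scan_bytes(width_in, height_in, dpi, channels=3, bits_per_channel=8):
    """Approximate size in bytes of an uncompressed scan of a page."""
    width_px = round(width_in * dpi)    # pixels across
    height_px = round(height_in * dpi)  # pixels down
    return width_px * height_px * channels * bits_per_channel // 8


# Assumed page size (8.5 x 11 in) at the three resolutions mentioned above.
for dpi in (600, 1200, 2400):
    size = uncompressed_scan_bytes(8.5, 11.0, dpi)
    print(f"{dpi} dpi: {size / 1e9:.2f} GB")
```

Doubling the DPI quadruples the pixel count, which is why the jump from 1200 to 2400 dpi takes a page of this assumed size from roughly 0.4 GB to roughly 1.6 GB.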

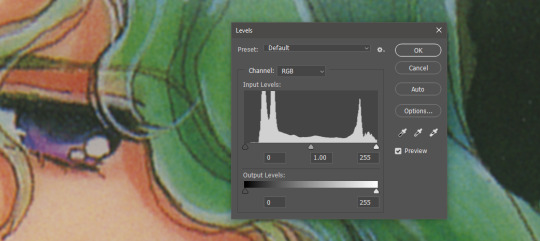

Post-processing: Photoshop. Sorry. I have been using Photoshop for literally decades now, it's the demon I know. While CSP is absolutely probably the better piece of software for most uses (art,) Photoshop is...well it's in the name. In all likelihood though, CSP can do all these things, and is a better product to give money to. I just don't know how. NOTENOTENOTE: Anywhere I discuss descreening and print moire I am specifically talking about how to clean up *printed media.* If you are scanning your own painting, this will not be a problem, but everything else about this advice will stand! The first thing you do with a 2400 dpi scan of Utena and Anthy hugging? Well, you open it in Photoshop, which you may or may not have paid for. Then you use a third party developer's plug-in to Descreen the image. I use Sattva. Now this may or may not be what you want in archiving!!! If fidelity to the original scan is the point, you may pass on this part--you are trying to preserve the print screen, moire, half-tones, and other ways print media tricks the eye. If you're me, this tool helps translate the raw scan of the printed dots on the page into the smooth color image you see in person. From there, the vast majority of your efforts will boil down to the following Photoshop tools: Levels/Curves, Color Balance, and Selective Color. Dust and Scratches, Median, Blur, and Remove Noise will also be close friends of the printed page to digital format archiver. Once you're happy with the broad strokes, you can start cropping and sizing it down to something reasonable. If you are dealing with lots of images with the same needs, like when I've scanned doujinshi pages, you can often streamline a lot of this using Photoshop Actions.

My blacks and whites are coming out so vivid this time because I do all color post-processing in Photoshop after the fact, after a descreen tool has been used to translate the dot matrix colors to solids they're intended to portray--in my experience trying to color correct for dark and light colors is a hot mess until that process is done, because Photoshop sees the full range of the dots on the image and the colors they comprise, instead of actually blending them into their intended shades. I don't correct the levels until I've descreened to some extent.

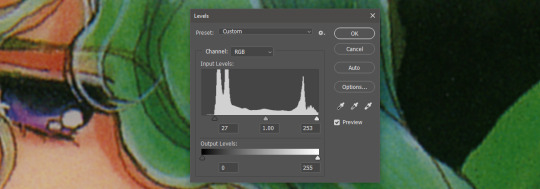

As you can see, the print pattern contains the information of the original painting, but if you try to correct the blacks and whites, you'll get a janky mess. *Then* you change the Levels:

If you've ever edited audio, then dealing with photo Levels and Curves will be familiar to you! A well cut and cleaned piece of audio will not cut off the highs and lows, but also will make sure it uses the full range available to it. Modern scanners are trying to do this all for you, so they blow out the colors and increase the brightness and contrast significantly, because solid blacks and solid whites are often the entire thing you're aiming for--document scanning, basically. This is like when audio is made so loud details at the high and low get cut off. Boo.

What I get instead is as much detail as possible, but also at a volume that needs correcting:

Cutting off the unused color ranges (in this case it's all dark), you get the best chance of capturing the original black and white range:

In some cases, I edit beyond this--for doujinshi scans, I aim for solid blacks and whites, because I need the file sizes to be normal and can't spend gigs of space on dust. For accuracy though, this is where I'd generally stop.
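The "cut off the unused range" step can be sketched numerically. This is a simplified linear stretch on 8-bit values, not Photoshop's actual Levels implementation (which also has a midtone/gamma slider that this omits); the function names and the plain-list pixel representation are assumptions for illustration.

```python
def find_used_range(pixels):
    """Return the darkest and brightest values actually present (0-255)."""
    return min(pixels), max(pixels)


def apply_levels(pixels, black_point, white_point):
    """Linearly remap black_point..white_point onto 0..255, clamping the rest."""
    if white_point <= black_point:
        raise ValueError("white_point must be greater than black_point")
    scale = 255 / (white_point - black_point)
    adjusted = []
    for value in pixels:
        stretched = round((value - black_point) * scale)
        adjusted.append(max(0, min(255, stretched)))  # clip anything outside 0..255
    return adjusted
```

Setting the black and white points to the range the scan really uses (for example via `find_used_range`) stretches the detail across the full scale without clipping anything; pushing the points further inward is the "solid blacks and whites" approach described for doujinshi scans, at the cost of the detail that gets clamped.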

For scanning artwork, the major factor here that may be fucking up your game? Yep. The scanner. Modern scanners are like cheap microphones that blow out the audio, when what you want is the ancient microphone that captures your cat farting in the next room over. While you can compensate A LOT in Photoshop and bring out blacks and whites that scanners fuck up, at the end of the day, what's probably stopping you up is that you want to use your scanner for something scanners are no longer designed to do well. If you aren't crazy like me and likely to get a vintage scanner for this purpose, keep in mind that what you are looking for is specifically *a photo scanner.* These are the ones designed to capture the most range, and at the highest DPI. It will be a flatbed. Don't waste your time with anything else.

Hot tip: if you aren't scanning often, look into your local library or photo processing store. They will have access to modern scanners that specialize in the same priorities I've listed here, and many will scan to your specifications (high dpi, lossless.)

Ahem. I hope that helps, and/or was interesting to someone!!!

#utena#image archiving#scanning#archiving#revolutionary girl utena#digitizing#photo scanner#art scanning

240 notes

Text

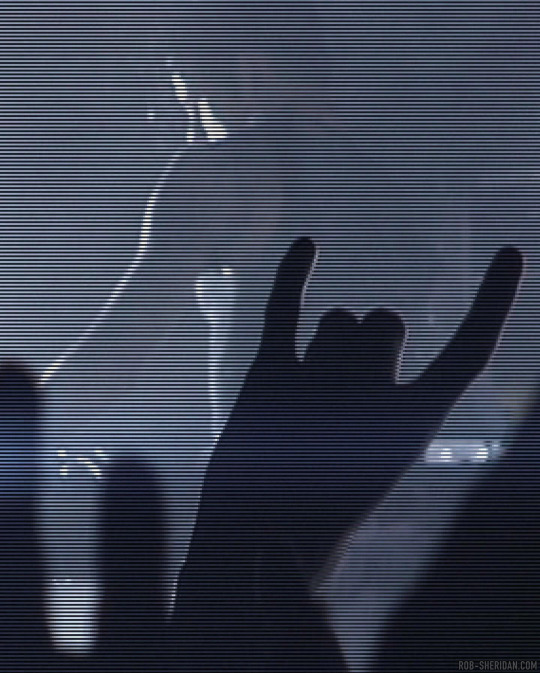

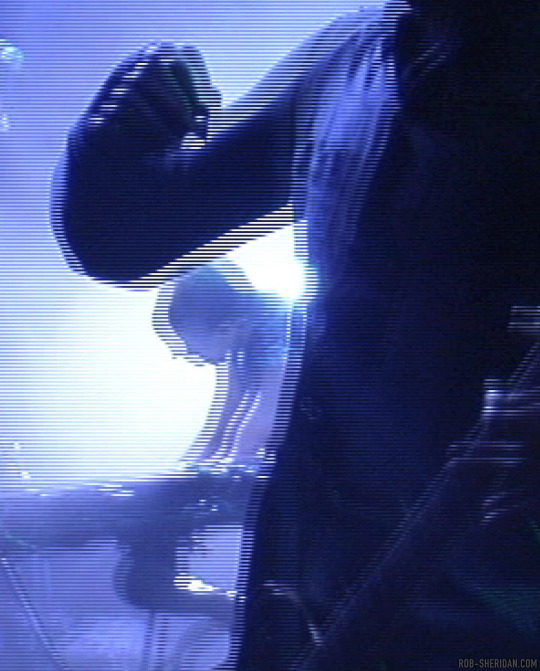

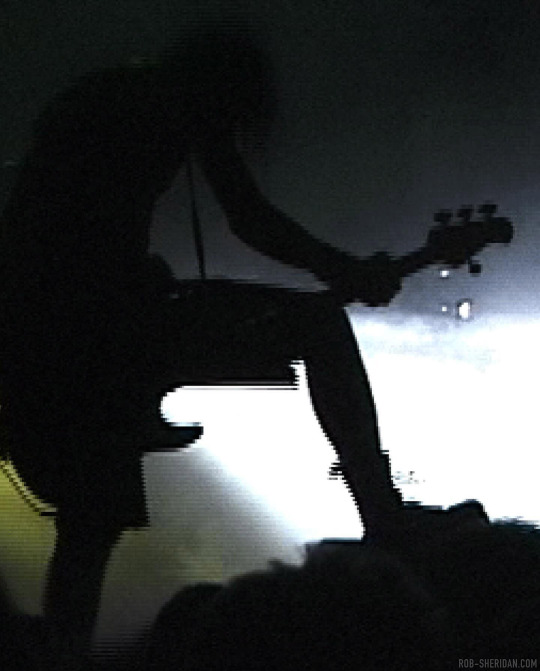

Nine Inch Nails' And All That Could Have Been DVD, released on this day in 2002. I found a folder of raw screengrabs from 2001, during the editing process, where some of the footage was still interlaced from the consumer DV cameras I filmed on in 2000. Here are some enlarged details, which have a phenomenal early-digital texture to them now, and it’s remarkable to see the resolution we were working with to put that film together (these were direct TIFF frame outputs from FCP - all the compression you see in here is from the camera!)

I always loved the way interlaced frames digitally ripped an image apart, and it inspired me in the creation of the “With Teeth” visual aesthetic a couple years later. In 2004, as the prosumer digital video world was continuing the clunky transition away from interlacing, I discovered the first version of Apple’s Motion software did not know what to do with interlaced footage, and would in fact render the interlacing as part of the video when you zoomed into it. That happy glitch accident formed the aesthetic foundation of the video for “The Hand That Feeds.”

#nin#nine inch nails#and all that could have been#aatchb#rob sheridan#trent reznor#glitch#dvd#digital video#dv#2000s music#early 2000s#2000s aesthetic

205 notes

Text

Reference saved on our archive (Daily updates! Thousands of Science, News, and other sources on covid!)

Could we develop a covid test breathalyzer? This is a study of one such device!

Abstract: The SARS-CoV-2 coronavirus emerged in 2019 causing a COVID-19 pandemic that resulted in 7 million deaths out of 770 million reported cases over the next 4 years. The global health emergency called for unprecedented efforts to monitor and reduce the rate of infection, pushing the study of new diagnostic methods. In this paper, we introduce a cheap, fast, and non-invasive COVID-19 detection system, which exploits only exhaled breath. Specifically, provided an air sample, the mass spectra in the 10–351 mass-to-charge range are measured using an original micro and nano-sampling device coupled with a high-precision spectrometer; then, the raw spectra are processed by custom software algorithms; the clean and augmented data are eventually classified using state-of-the-art machine-learning algorithms. An uncontrolled clinical trial was conducted between 2021 and 2022 on 302 subjects who were concerned about being infected, either due to exhibiting symptoms or having recently recovered from illness. Despite the simplicity of use, our system showed a performance comparable to the traditional polymerase-chain-reaction and antigen testing in identifying cases of COVID-19 (that is, 95% accuracy, 94% recall, 96% specificity, and 92% F1-score). In light of these outcomes, we think that the proposed system holds the potential for substantial contributions to routine screenings and expedited responses during future epidemics, as it yields results comparable to state-of-the-art methods, providing them in a more rapid and less invasive manner.
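For readers unfamiliar with the four metrics quoted in the abstract, here is a short sketch of how each is defined from a confusion matrix. The function name and the counts in the usage line are hypothetical and are not taken from the study; the abstract does not report its confusion matrix.

```python
def classification_metrics(tp, fn, fp, tn):
    """Compute accuracy, recall, specificity and F1 from confusion-matrix counts.

    tp: infected and flagged, fn: infected but missed,
    fp: healthy but flagged, tn: healthy and cleared.
    """
    total = tp + fn + fp + tn
    return {
        "accuracy": (tp + tn) / total,          # share of all calls that were right
        "recall": tp / (tp + fn),               # share of infected subjects caught
        "specificity": tn / (tn + fp),          # share of healthy subjects cleared
        "f1": 2 * tp / (2 * tp + fp + fn),      # harmonic mean of precision and recall
    }


# Hypothetical counts, for illustration only (not the study's data).
print(classification_metrics(tp=47, fn=3, fp=8, tn=142))
```

Because F1 ignores true negatives, it can sit below both recall and specificity when infected subjects are the minority class, which fits the pattern of the reported figures.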

#mask up#covid#pandemic#wear a mask#public health#covid 19#wear a respirator#still coviding#coronavirus#sars cov 2

40 notes

Text

Really, Fuck The Official NaNoWriMo

Ugh. As if the entire stuff with folks from the forum team grooming kids was not enough, NaNoWriMo has now come out with official policies about AI writing. And just look at this fucking mess.

Source.

Like, they make it seem as if AI is just about using AI in the editing process. But we all know it is not. If they make a blanket statement about AI being okay, this will include full-on generative AI, which is by definition not writing.

And look. While I absolutely am for paying editors and stuff if you can afford them, I can see using an AI to get some baseline editing done. Especially if you are not expecting or intending to make a profit from your writing. (Maybe, because you are writing fanfiction, or because you know that your self-published novel will probably not sell more than 200 copies. Let's face it also: Writers are very much underpaid in all areas!)

But generative AI? I am sorry, basically everyone can write. Not everyone can write well, but that never was the point of the NaNoWriMo. It was not about writing well, not about having a perfect product in the end, but just about the accomplishment of writing 50k within one month. No matter how raw and unedited those 50k were.

And I am sorry, I am very, very much disabled. And no, expecting others to either write for themselves or, if they are not motivated enough, to not participate is not ableism. I am also very, very poor. Like, VERY POOR. And no, it is not classist to ask writers to write for themselves.

In fact it is classist and ableist to support software that has been illegally trained on the writing of often poor and at times disabled writers on a variety of websites online.

So yeah, fuck this organization.

I am still gonna do my own challenge for November - but I am not officially participating.

Fuck them.

Fuck AI.

48 notes

Text

backlit critters

Small, inconspicuous, mostly grey insects, spiders and crabs show many colours and interesting structures under high magnification and polarized backlight. All photos were taken with the self-made setup. They are high resolution photos taken with microscope objectives, stacked and stitched together. The images were processed with software for raw processing, stacking and retouching.

Water flea, pol. / Copepod bicolor, pol. / Brine shrimp, oblique / Jumping spider, backl. / Varroa mite, oblique / Box sucker, pol. / Red mite, pol. / Head louse, oblique / Ant, backl. / Hard-bodied tick, oblique

Photographer: Adalbert Mojrzisch

International Photography Awards™

#adalbert mojrzisch#photographer#international photography awards#backlit critters small#spiders#crabs#insects#micro photography#nature#animal

14 notes

Text

i am not really interested in game development but i am interested in modding (or more specifically cheat creation) as a specialized case of reverse-engineering and modifying software running on your machine

like okay for a lot of games the devs provide some sort of easy toolkit which lets even relatively nontechnical players write mods, and these are well-documented, and then games which don't have those often have a single-digit number of highly technical modders who figure out how to do injection and create some kind of api for the less technical modders to use, and that api is often pretty well documented, but the process of creating it absolutely isn't

it's even more interesting for cheat development because it's something hostile to the creators of the software, you are actively trying to break their shit and they are trying to stop you, and of course it's basically completely undocumented because cheat developers both don't want competitors and also don't want the game devs to patch their methods....

maybe some of why this is hard is because it's pretty different for different types of games. i think i'm starting to get a handle on how to do it for this one game - so i know there's a way to do packet sniffing on the game, where the game has a dedicated port and it sends tcp packets, and you can use the game's tick system and also a brute-force attack on its very rudimentary encryption to access the raw packets pretty easily.

through trial and error (i assume) people have figured out how to decode the packets and match them up to various ingame events, which is already used in a publicly available open source tool to do stuff like DPS calculation.
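As a rough illustration of that decode step, here is a minimal Python sketch. Everything about the wire format is made up for the example (a 2-byte length, a 2-byte opcode, a fixed-size damage payload, and the opcode value itself); the real game's layout and the open source tool's actual code are not shown here.

```python
import struct
from collections import defaultdict

# Hypothetical wire format (an assumption, not any real game's protocol):
#   uint16 total packet length, uint16 opcode, then the payload (little-endian)
HEADER = struct.Struct("<HH")
# Hypothetical damage payload: uint32 source id, uint32 target id, uint32 damage
DAMAGE = struct.Struct("<III")
OP_DAMAGE = 0x0101  # made-up opcode for "damage dealt"


def split_packets(stream: bytes):
    """Yield (opcode, payload) pairs from an already-decrypted byte stream."""
    offset = 0
    while offset + HEADER.size <= len(stream):
        length, opcode = HEADER.unpack_from(stream, offset)
        if length < HEADER.size or offset + length > len(stream):
            break  # truncated or malformed packet: stop and wait for more data
        yield opcode, stream[offset + HEADER.size:offset + length]
        offset += length


def total_damage(stream: bytes) -> dict:
    """Sum damage per source id over every damage event in the stream."""
    totals = defaultdict(int)
    for opcode, payload in split_packets(stream):
        if opcode == OP_DAMAGE and len(payload) == DAMAGE.size:
            source, _target, amount = DAMAGE.unpack(payload)
            totals[source] += amount
    return dict(totals)


def make_packet(opcode: int, payload: bytes) -> bytes:
    """Build a packet in the hypothetical format (only used to fake test data)."""
    return HEADER.pack(HEADER.size + len(payload), opcode) + payload
```

Dividing each total by the elapsed fight time would give a DPS figure; matching opcodes to real ingame events is the trial-and-error part.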

i think, without too much trouble, you could probably step this up and intercept/modify existing packets? like it looks like while damage is calculated on the server side, whether or not you hit an enemy is calculated on the client side and you could maybe modify it to always hit... idk.

apparently the free cheats out there (which i would not touch with a 100 foot pole, odds those have something in them that steals your login credentials is close to 100%) operate off a proxy server model, which i assume intercepts your packets, modifies them based on what cheats you tell it you have active, and then forwards them to the server.

but they also manage to give you an ingame GUI to create those cheats, which is clearly something i don't understand. the foss sniffer opens itself up in a new window instead of modifying the ingame GUI.

man i really want to like. shadow these guys and see their dev process for a day because i'm really curious. and also read their codebase. but alas

#coding#past the point of my life where i am interested in cheating in games#but if anything i am even more interested in figuring out how to exploit systems

48 notes


Text

the digital copy of a canticle for leibowitz i downloaded to read on my kindle has these occasional transcription errors in it, where what was clearly supposed to be "frown" in the original text will read as "flown" or "how" will read as "bow" and so on... i think this must have come out of the process of transferring the book from one file format to another so it would be compatible with e-readers, maybe some software scanned a pdf to turn it into raw text and misidentified certain glyphs. very charming, every time i see a transcription error in the text it makes me think of monks in the scriptorium, cute and fitting for the book even if the mistake was made by a machine...

31 notes


Text

I really want to see a scene where Connor's skin projection fluctuates when he detects the broadcast video of Markus, like the pigment cells of an octopus flickering when its environment changes, or a husky instinctively howling along with the wolves on TV. Another intriguing thing is that the freckles, the moles, the flushed cheeks, whatever lowers people's defenses, are all calculated and then presented. The raw appearance of emotions (software instability) might be colour fading (having no RAM left for mimicking humans) or turning blue (pumps losing control). Androids and humans may have the same feelings but express them differently.

10 notes


Text

If you want good quality pictures on your phone which are not processed to death by your phone camera software, you need to save your files in RAW and then convert them to jpeg manually. (RAW files are heavy, so unless you're a professional photographer there is no need to keep them once you've converted them.)

Like I know you should not have to do this manually. Your phone should do it for you. But sadly it doesn't: while converting to jpeg it automatically applies tons of filters that you did not ask for and that suck. (Including skin lightening! Like wtf)

The only big issue is that you cannot take pictures with the full resolution of your camera but even at 16mp my pics look better than the ultra processed 64mp.

I'm using the following apps and they work fine.

For taking RAW pictures in 16mp:

Manual Camera: DSLR camera Pro (less than 5€)

I've heard that Camera FV-5 works well too, so try both free versions before buying one of them.

For converting your RAW into high quality jpeg:

Raw Image Viewer or Lightroom

I'm gonna go outside to shoot some comparison pictures because the ones I have taken today are not ones I want to post lol. So stay tuned.

22 notes
