#nvidia volta gaming graphic cards


Nvidia AI Enhances Design Tools for Simulation, Generative Modeling, and OptiX

Nvidia is improving its AI capabilities in a number of ways, including its Enterprise AI platform, its DLSS upscaling technology, and its OptiX ray tracing engine. Together, these tools aim to simplify and streamline AI, graphics, and high-performance computing workloads.

DLSS

DLSS (Deep Learning Super Sampling) uses AI to render a game at a lower internal resolution and then upscale the result, raising frame rates while preserving image quality in supported games. Nvidia says it can improve performance by up to 60%, and it is supported in many of the latest titles. Nvidia's separate Reflex latency-reduction technology can also shave milliseconds off input lag.

The DLSS 2.0 algorithm uses a convolutional autoencoder that takes a low-resolution frame, along with motion vectors and the previous high-resolution output, and reconstructs a new high-resolution frame. This may sound simple, but the reconstruction is considerably more involved in practice.

DLSS 2.0 is more advanced than DLSS 1.0, the first version of the technology. It uses an updated neural network that is trained on thousands of high-quality reference images to learn how best to reconstruct a frame, and, unlike DLSS 1.0, it does not need to be trained separately for each game.

Nvidia says the DLSS 2.0 network runs about twice as fast as the original. In addition to upscaling, DLSS 2.0 adds temporal feedback: using motion vectors, it carries detail from previous frames into the current one, which makes the output more stable from frame to frame.
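
Roughly speaking, this temporal feedback builds on classic temporal accumulation, which DLSS 2.0 replaces with a trained network. The sketch below shows only that classic, hand-tuned idea in one dimension, not NVIDIA's algorithm: the integer motion vectors, the fixed blend factor `alpha`, and the helper names `reproject` and `accumulate` are illustrative assumptions.

```python
from typing import List

def reproject(history: List[float], motion: int) -> List[float]:
    """Warp last frame's output by an integer motion vector (pixels moved right).

    Pixel x in the new frame came from x - motion in the old one; sources that
    fall off-screen reuse the nearest edge pixel.
    """
    n = len(history)
    return [history[min(max(x - motion, 0), n - 1)] for x in range(n)]

def accumulate(current: List[float], history: List[float], motion: int,
               alpha: float = 0.1) -> List[float]:
    """Blend the new low-quality frame into the motion-compensated history."""
    warped = reproject(history, motion)
    return [alpha * c + (1.0 - alpha) * h for c, h in zip(current, warped)]

# Static scene (motion = 0) whose true value is 0.5; each frame's sample is
# off by +/-0.5, standing in for noisy, jittered low-resolution input.
true_value = 0.5
history = [true_value + 0.5] * 8           # the first frame seeds the history
for frame in range(1, 100):
    offset = 0.5 if frame % 2 == 0 else -0.5
    history = accumulate([true_value + offset] * 8, history, motion=0)

print(f"worst error after 100 frames: {max(abs(v - true_value) for v in history):.3f}")
```

With `alpha = 0.1`, each new frame contributes 10% and the reprojected history 90%, so per-frame noise averages out over time. A fixed blend like this also smears detail whenever the history is wrong (for example, where an object uncovers new background), which is the kind of case a learned model is meant to handle better.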

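A later version, DLSS 3, adds frame generation. It uses the Optical Flow Accelerator in GeForce RTX 40 series GPUs to compute an optical flow field between frames, which helps avoid distortions when tracking moving geometry and reduces visual anomalies in generated frames.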

DLSS also increases frame rates, which means you can play games at higher resolutions on less powerful graphics cards. However, DLSS requires an Nvidia RTX card; without one, you won't get its benefits.

For the most part, DLSS 2.0 has been a big improvement over its predecessor. It gives players a smoother, more detailed experience, and it has even improved image quality in some older titles that added support for it.

DLSS 2.0 works on all Nvidia RTX cards, and Nvidia says it can deliver up to 60% more frames per second. DLSS 3's frame generation, however, is only available on the GeForce RTX 40 series.

OptiX

NVIDIA OptiX is an application framework for GPU-accelerated ray tracing of large and complex scenes. It's also used for a wide variety of other applications.

OptiX allows artists to iterate faster on their renders. It is built on Nvidia's CUDA platform.

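Besides being GPU-accelerated, OptiX is a domain-specific API: developers write only the ray tracing-specific programs (such as ray generation, intersection, and shading), while OptiX manages the rest of the pipeline. That includes building and traversing a bounding volume hierarchy (BVH), an acceleration structure that lets the ray tracer skip large parts of a scene that a ray cannot hit. The result is a comparatively simple pipeline for ray tracing.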
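
For readers unfamiliar with BVH traversal, here is a minimal CPU sketch in Python. It is not OptiX code: OptiX builds and traverses its acceleration structures itself (on RTX GPUs, with help from the RT cores). The `Node` layout, the slab test, and the box-shaped primitives are illustrative assumptions.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]

@dataclass
class Node:
    lo: Vec                                   # min corner of the bounding box
    hi: Vec                                   # max corner of the bounding box
    children: List["Node"] = field(default_factory=list)
    prim_id: Optional[int] = None             # leaves only: which primitive this box holds

def hit_box(origin: Vec, direction: Vec, lo: Vec, hi: Vec) -> Optional[float]:
    """Slab test: distance t >= 0 at which the ray enters the box, or None on a miss."""
    t_near, t_far = 0.0, math.inf
    for o, d, a, b in zip(origin, direction, lo, hi):
        if d == 0.0:
            if o < a or o > b:                # parallel to this slab and outside it
                return None
            continue
        t1, t2 = (a - o) / d, (b - o) / d
        t_near, t_far = max(t_near, min(t1, t2)), min(t_far, max(t1, t2))
        if t_near > t_far:
            return None
    return t_near

def closest_hit(root: Node, origin: Vec, direction: Vec) -> Tuple[float, Optional[int]]:
    """Stack-based BVH traversal returning (distance, primitive id) of the nearest hit."""
    best_t, best_id = math.inf, None
    stack = [root]
    while stack:
        node = stack.pop()
        t = hit_box(origin, direction, node.lo, node.hi)
        if t is None or t >= best_t:          # missed, or can't beat the hit we have: prune
            continue
        if node.prim_id is not None:
            best_t, best_id = t, node.prim_id
        else:
            stack.extend(node.children)
    return best_t, best_id

def leaf(x0: float, x1: float, pid: int) -> Node:
    return Node((x0, -1.0, -1.0), (x1, 1.0, 1.0), prim_id=pid)

# Hypothetical scene: four boxes along the x axis, grouped into two subtrees.
right = Node((4.0, -1.0, -1.0), (11.0, 1.0, 1.0), [leaf(4.0, 6.0, 0), leaf(9.0, 11.0, 1)])
left = Node((-11.0, -1.0, -1.0), (-4.0, 1.0, 1.0), [leaf(-6.0, -4.0, 2), leaf(-11.0, -9.0, 3)])
root = Node((-11.0, -1.0, -1.0), (11.0, 1.0, 1.0), [right, left])

print(closest_hit(root, (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)))
```

Because the ray along +x misses the bounding box of the two boxes on the negative side, the traversal never tests those primitives individually; that pruning is what makes a BVH worthwhile in scenes with millions of triangles.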

OptiX 5.0, which Nvidia said would be released in November, is designed for Nvidia's new Volta architecture, which Nvidia says delivers better ray tracing performance than its predecessors. OptiX 5.0 also adds AI-accelerated denoising.

NVIDIA has been at the forefront of the industry in terms of GPU-powered AI. The company has worked on projects such as facial animation, anti-aliasing, and light transport.

The company's research and development continues to expand into more GPU-accelerated graphics and AI applications. Currently, the neural network behind NVIDIA's AI denoising is trained on tens of thousands of image pairs. If the network performs well enough, Nvidia may also allow developers to train it on their own data.

There's no word on when the company will release additional research. However, Nvidia has claimed that the vast majority of major 3D design applications will support RTX by the end of 2018.

NVIDIA is also at the forefront of GPU design. Its powerful graphics chips are at the heart of its latest products, which include RTX Studio laptops and Quadro RTX GPUs. NVIDIA billed Quadro RTX as the first GPU line to support real-time ray tracing, along with advanced AI capabilities.

In addition, the company has announced a new personal deep learning supercomputer. With tools like these, artists can create more beautiful, interactive worlds faster.

AI-enhanced design tools

Nvidia recently announced AI-enhanced design tools for simulation, generative modeling, and avatar development. These tools are intended to expand the company's AI capabilities and to fit into workflows that developers already know. They will allow engineers, artists, and designers to simulate environments in real time, build simulation tools, or develop new applications.

The company also recently introduced a generative AI model called GauGAN2, which can create realistic images from simple inputs. For example, a user can sketch a rough scene with a simple drawing tool, and the software will generate a photorealistic image from it.

In addition, the company has launched new Nvidia Omniverse connectors, including one developed in conjunction with Unity, that extend the Omniverse ecosystem and, according to Nvidia, deliver improved performance.

The latest update to the Omniverse platform introduces an experimental suite of generative AI tools for 3D artists. It also includes an updated version of the Unity Omniverse Connector.

During the event, Nvidia showed six artists collaborating on a 3D scene in real time. While human intervention is still necessary in most areas, the new software is meant to provide more streamlined, automated workflows.

Nvidia has been very aggressive in pursuing AI, and the company says it has found a number of uses for it in silicon and chip development. But there are some limitations.

One of the biggest limitations is that the AI handles old black-and-white films poorly, although it does a good job with recent video.

Nvidia also announced an upgrade to Isaac Sim, its simulation tool for building and testing AI-enabled robots. Several companies, including Cadence, are using Nvidia's tools.

Streamline AI and high-performance computing workloads

NVIDIA AI Enterprise software enables organizations to quickly deploy, manage, and optimize the performance of AI and high-performance computing workloads. With its proven, open-source containers, frameworks, and cloud native deployment software, enterprises can simplify the way they do business and build innovative AI systems.

HPE GreenLake offers NVIDIA AI Enterprise as part of what HPE describes as the industry's most complete end-to-end HPC and AI platform. This solution helps customers identify their existing and future AI needs, assess their current infrastructure, and deploy solutions that best match their requirements.

With the help of the HPE GreenLake and NVIDIA AI Enterprise solution, customers can reduce their development time and cost of ownership while achieving increased performance and security. This software also provides enterprise-grade support and simplifies the way enterprises adopt and operate AI.

NVIDIA says its GPUs deliver unprecedented performance, scalability, and security, making them well suited to training AI models, running inference, and enabling data science and HPC workloads. They are available in both single- and dual-GPU configurations. Whether the goal is optimizing server configurations or offloading the management of an entire data science ecosystem, a GPU-accelerated system can dramatically improve the performance of AI and high-performance computing workloads.

NVIDIA DGX™ systems are designed for machine learning and deep learning workloads. NVIDIA DGX™-Ready Software includes enterprise-grade MLOps solutions, cluster management, and scheduling and orchestration tools. These systems are available for desktop, server, and data center use, and a single DGX system can deliver up to five petaFLOPS of AI performance. Using these systems, businesses can rapidly scale up their supercomputing infrastructure.

IBM Cloud bare metal servers can be equipped with up to two Tesla V100 PCIe GPU accelerators. The V100 is based on NVIDIA's Volta architecture, which NVIDIA says delivers a breakthrough in performance, throughput, and efficiency.

Enterprise AI platform

Nvidia's Enterprise AI platform offers a suite of enterprise-grade artificial intelligence (AI) software tools that can help organizations develop AI solutions that address industry needs and boost efficiency. It includes a powerful toolkit for AI workflows, a range of pre-configured neural networks, and a host of frameworks for data scientists and developers. In addition, the platform supports deep learning and scaled deployment.

Nvidia's Enterprise AI platform supports workloads on public and private clouds, as well as on bare metal and on Nvidia DGX systems. It also includes a set of industry-specific applications and frameworks for data scientists and developers, plus a range of tools for training and validating AI models. Whether used in a public or private cloud, the platform streamlines deployment and inference, providing a powerful toolkit for organizations that want to adopt AI.

Lenovo's AI-ready systems provide the performance, manageability, and resilience needed for AI workloads. Together with NVIDIA, the Lenovo AI Center of Discovery and Excellence provides guidance for proof of concept testing, as well as performance validation.

Powered by Lenovo ThinkSystem server platforms, Nvidia's Enterprise AI platform allows for rapid AI workload management, scaled deployment, and support for vSphere virtualized infrastructure. The platform also delivers a broad range of storage and data center support, including NVIDIA-certified mainstream servers from Dell and HPE.

The platform is a comprehensive solution that includes industry-specific application frameworks, open-source frameworks, and more. It includes tools for data scientists, data analysts, and developers.

Nvidia's Digital Fingerprinting AI workflow creates a unique fingerprint for every machine and service on a network. Nvidia says this approach improves security, streamlines data preparation, and speeds up debugging and training. It also works with code written in common data science languages, including Python, R, and Java.


NVIDIA TITAN RTX

NVIDIA's TITAN series of graphics cards has been a fascinating one since the launch of the first model in 2013. That Kepler-based GTX TITAN peaked at 4.5 TFLOPS of single-precision (FP32) performance, which was boosted to 5.1 TFLOPS with the release of the TITAN Black the following year.

Fast forward to today, and we have the TITAN RTX, boasting 16.3 TFLOPS of single-precision and 32.6 TFLOPS of half-precision (FP16) performance. Double-precision (FP64) performance used to be standard fare on earlier TITANs, but today you'll need the Volta-based TITAN V for unlocked FP64 performance (6.1 TFLOPS), or AMD's Radeon VII for partially unlocked performance (3.4 TFLOPS).

Lately, half precision has garnered a lot of attention in the ProViz market, since it's ideal for deep learning and AI, fields that are growing in popularity at an incredibly quick pace. Add specifically tuned Tensor cores to the mix, and deep learning performance on Turing becomes truly impressive.

NVIDIA TITAN RTX Graphics Card

Tensor cores aren't the only party trick the TITAN RTX has. Like the rest of the RTX lineup (on both the gaming and pro side), the TITAN RTX includes RT cores, which accelerate real-time ray tracing workloads. These cores must be explicitly supported by developers, using APIs such as DXR and VKRay. While support for NVIDIA's technology started out lukewarm, industry support has grown a great deal since RTX was first unveiled at SIGGRAPH last year.

At E3 in June, a number of games had ray tracing-related announcements, including Watch_Dogs: Legion, Cyberpunk 2077, Call of Duty: Modern Warfare, and of course, Quake II RTX. On the design side, several developers have already released RTX-accelerated solutions, while many more are in the works. NVIDIA has been talking lately about the Adobes and Autodesks of the world helping to grow the list of RTX-infused software. We wouldn't be surprised if more RTX goodness were revealed at SIGGRAPH again this year.

For deep learning, the TITAN RTX's strong FP16 performance is fast on its own, but a couple of features on board help take things to the next level. The Tensor cores provide much of the acceleration, but the ability to use mixed precision is another big part. With it, the bulk of the number crunching is done in half precision, while values that are sensitive to rounding error, such as the master copy of the weights and running sums, are kept in single precision. All combined, NVIDIA says this can boost training performance by up to 3x over the base GPU.
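
Why keep some values in single precision? A minimal sketch using only Python's standard library (the `struct` module's `'e'` and `'f'` formats round a value to IEEE 754 half and single precision) shows what happens when many small updates are accumulated in FP16 versus in an FP32 accumulator. The update size and count are arbitrary illustrative choices, and real mixed-precision training adds techniques such as loss scaling that are not shown here.

```python
import struct

def to_fp16(x: float) -> float:
    """Round to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x: float) -> float:
    """Round to the nearest IEEE 754 single-precision (FP32) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def accumulate(values, round_acc) -> float:
    """Running sum whose total is rounded by round_acc after every addition."""
    total = 0.0
    for v in values:
        total = round_acc(total + v)
    return total

# 10,000 small updates, each already stored in FP16 (illustrative values).
updates = [to_fp16(1e-4)] * 10_000

fp16_sum = accumulate(updates, to_fp16)   # half-precision accumulator
mixed_sum = accumulate(updates, to_fp32)  # FP16 inputs, FP32 accumulator

print(f"exact sum:        {sum(updates):.4f}")
print(f"FP16 accumulator: {fp16_sum:.4f}")
print(f"FP32 accumulator: {mixed_sum:.4f}")
```

The FP16 total stalls (at 0.25 here) once the running sum is large enough that each 0.0001 update is less than half the gap between neighboring FP16 values, so further additions round away to nothing. Keeping the accumulator in FP32 avoids that, which is the idea behind pairing half-precision math with single-precision accumulation.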

NVIDIA's TITAN RTX and GeForce RTX 2080 Ti (rear view)

Also notable for Turing is concurrent integer and floating-point execution, which allows games (or other software) to execute INT and FP operations in parallel without tripping over one another in the pipeline. NVIDIA has noted in the past that in games like Shadow of the Tomb Raider, a sample set of 100 instructions included 62 FP and 38 INT, and that this concurrency directly improves performance as a result.
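
As a rough illustration of why concurrent INT/FP execution matters, the arithmetic below applies NVIDIA's 62 FP / 38 INT example to a deliberately idealized model. The model is an assumption, not how Turing actually schedules work: one FP and one INT instruction can issue per cycle, with no dependencies or other stalls.

```python
def issue_cycles(fp_ops: int, int_ops: int, concurrent: bool) -> int:
    """Cycles needed to issue a mix of FP and INT instructions (idealized model).

    Shared pipeline: every instruction takes its own issue slot.
    Concurrent pipelines: FP and INT issue side by side, so the longer
    of the two instruction streams sets the pace.
    """
    if concurrent:
        return max(fp_ops, int_ops)
    return fp_ops + int_ops

serial = issue_cycles(62, 38, concurrent=False)   # 100 cycles
overlap = issue_cycles(62, 38, concurrent=True)   # 62 cycles
print(f"idealized speedup: {serial / overlap:.2f}x")
```

Real-world gains would be expected to be smaller, since dependencies between instructions, memory stalls, and other bottlenecks all eat into the overlap.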

Another significant feature of the TITAN RTX is its ability to use NVLink, which essentially combines the memory pools of two cards, resulting in a single framebuffer that can be used for the biggest possible projects. Since GPUs generally scale very well in the kinds of workloads this card targets, it's the actual memory pooling that offers the biggest advantage here. Gaming content that can take advantage of multi-GPU would also benefit from two cards and this connector.

Since it's a feature exclusive to these RTX GPUs right now, it's worth mentioning that NVIDIA also includes a VirtualLink port at the back, allowing you to plug in your VR headset (HMD) or, at worst, use it as a full-powered USB-C port for data transfer or phone charging.

With all of that covered, let's take a look at NVIDIA's current overall workstation lineup:

NVIDIA's Quadro and TITAN Workstation GPU Lineup

| GPU | Cores | Base MHz | Peak FP32 | Memory | Bandwidth | TDP | Price |
|---|---|---|---|---|---|---|---|
| Quadro GV100 | 5120 | 1200 | 14.9 TFLOPS | 32 GB HBM2 (ECC) | 870 GB/s | 185W | $8,999 |
| Quadro RTX 8000 | 4608 | 1440 | 16.3 TFLOPS | 48 GB GDDR6 (ECC) | 624 GB/s | N/A | $5,500 |
| Quadro RTX 6000 | 4608 | 1440 | 16.3 TFLOPS | 24 GB GDDR6 (ECC) | 624 GB/s | 295W | $4,000 |
| Quadro RTX 5000 | 3072 | 1350 | 11.2 TFLOPS | 16 GB GDDR6 (ECC) | 448 GB/s | 265W | $2,300 |
| Quadro RTX 4000 | 2304 | 1005 | 7.1 TFLOPS | 8 GB GDDR6 | 416 GB/s | 160W | $900 |
| TITAN RTX | 4608 | 1350 | 16.3 TFLOPS | 24 GB GDDR6 | 672 GB/s | 280W | $2,499 |
| TITAN V | 5120 | 1200 | 14.9 TFLOPS | 12 GB HBM2 | 653 GB/s | 250W | $2,999 |
| Quadro P6000 | 3840 | 1417 | 11.8 TFLOPS | 24 GB GDDR5X (ECC) | 432 GB/s | 250W | $4,999 |
| Quadro P5000 | 2560 | 1607 | 8.9 TFLOPS | 16 GB GDDR5X (ECC) | 288 GB/s | 180W | $1,999 |
| Quadro P4000 | 1792 | 1227 | 5.3 TFLOPS | 8 GB GDDR5 | 243 GB/s | 105W | $799 |
| Quadro P2000 | 1024 | 1370 | 3.0 TFLOPS | 5 GB GDDR5 | 140 GB/s | 75W | $399 |
| Quadro P1000 | 640 | 1354 | 1.9 TFLOPS | 4 GB GDDR5 | 80 GB/s | 47W | $299 |
| Quadro P620 | 512 | 1354 | 1.4 TFLOPS | 2 GB GDDR5 | 80 GB/s | 40W | $199 |
| Quadro P600 | 384 | 1354 | 1.2 TFLOPS | 2 GB GDDR5 | 64 GB/s | 40W | $179 |
| Quadro P400 | 256 | 1070 | 0.6 TFLOPS | 2 GB GDDR5 | 32 GB/s | 30W | $139 |

Architecture: P = Pascal; V = Volta; RTX = Turing

The TITAN RTX matches the Quadro RTX 6000 and 8000 for the highest core count in the Turing lineup. NVIDIA says the TITAN RTX is around 3 TFLOPS faster in FP32 than the RTX 2080 Ti, and fortunately, we have results for both cards across a wide range of tests to see how they compare.
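
The peak FP32 figures in the table above follow a standard rule of thumb: CUDA cores × 2 FLOPs per clock (one fused multiply-add) × clock speed. The short sketch below suggests the quoted peaks correspond to boost clocks rather than the listed base clocks, and checks the roughly 3 TFLOPS gap to the RTX 2080 Ti. The 2080 Ti's 4,352 cores and 1,545 MHz reference boost clock are not in the table; they come from NVIDIA's reference specification and are an added assumption here.

```python
def peak_fp32_tflops(cuda_cores: int, clock_mhz: float) -> float:
    """Peak FP32 throughput: each core retires one FMA (2 FLOPs) per clock."""
    return cuda_cores * 2 * clock_mhz * 1e6 / 1e12

def implied_clock_mhz(cuda_cores: int, peak_tflops: float) -> float:
    """Clock speed needed to reach a quoted peak FP32 figure."""
    return peak_tflops * 1e12 / (cuda_cores * 2) / 1e6

# (CUDA cores, base MHz, peak FP32 TFLOPS) as listed in the workstation table
table = {
    "TITAN RTX": (4608, 1350, 16.3),
    "Quadro RTX 4000": (2304, 1005, 7.1),
    "Quadro P6000": (3840, 1417, 11.8),
}
for name, (cores, base_mhz, peak) in table.items():
    print(f"{name}: {peak_fp32_tflops(cores, base_mhz):.1f} TFLOPS at base clock; "
          f"quoted {peak} TFLOPS implies ~{implied_clock_mhz(cores, peak):.0f} MHz")

# Assumption (not from the table): RTX 2080 Ti reference spec, 4352 cores @ 1545 MHz boost.
rtx_2080_ti = peak_fp32_tflops(4352, 1545)
gap = 16.3 - rtx_2080_ti
print(f"RTX 2080 Ti ~{rtx_2080_ti:.1f} TFLOPS; TITAN RTX lead ~{gap:.1f} TFLOPS")
```

These are theoretical peaks; sustained clocks, and therefore real throughput, depend on cooling and power limits.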

What's not found in the specs table above is the actual performance of the ray tracing and deep learning components. This next table helps clear that up:

NVIDIA's Quadro and TITAN: RTX Performance

| GPU | RT Cores | RTX-OPS | Rays Cast (Giga Rays/s) | FP16 (TFLOPS) | INT8 (TOPS) | Deep Learning (TFLOPS) |
|---|---|---|---|---|---|---|
| TITAN RTX | 72 | 84T | 11 | 32.6 | 206.1 | 130.5 |
| Quadro RTX 8000 | 72 | 84T | 10 | 32.6 | 206.1 | 130.5 |
| Quadro RTX 6000 | 72 | 84T | 10 | 32.6 | 206.1 | 130.5 |
| Quadro RTX 5000 | 48 | 62T | 8 | 22.3 | 178.4 | 89.2 |
| Quadro RTX 4000 | 36 | 43T | 6 | 14.2 | 28.5 | 57 |

You'll notice that the TITAN RTX has a higher "rays cast" spec than the top Quadros, which may be thanks to higher clocks. The other specs are identical across the top three GPUs, with obvious scaling down as we move lower. Right now, the Quadro RTX 4000 (roughly a GeForce RTX 2070 equivalent) is NVIDIA's lowest-end current-gen Quadro. Again, SIGGRAPH is nearly upon us, so NVIDIA may have a hardware surprise coming up; perhaps a Quadro equivalent of the RTX 2060.
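
To put the Rays Cast figures in perspective, a back-of-the-envelope calculation converts Giga Rays/s into a per-pixel budget. The 1920x1080 resolution and 60 fps target are illustrative assumptions, and the sketch treats the spec-sheet number as sustained throughput, which real scenes with complex geometry and shading will not reach.

```python
def rays_per_pixel(giga_rays_per_s: float, width: int, height: int, fps: float) -> float:
    """Ray budget per pixel per frame for a given peak ray-casting rate."""
    rays_per_frame = giga_rays_per_s * 1e9 / fps
    return rays_per_frame / (width * height)

for name, rate in [("TITAN RTX", 11), ("Quadro RTX 6000", 10), ("Quadro RTX 4000", 6)]:
    print(f"{name}: ~{rays_per_pixel(rate, 1920, 1080, 60):.0f} rays per pixel at 1080p60")
```

Every bounce, shadow ray, and extra sample per pixel draws from this same budget, which is one reason real-time renderers pair a small number of rays with denoising.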

When the RTX 2080 Ti already offers so much performance, who exactly is the TITAN RTX for? NVIDIA is targeting it largely at researchers, but it also serves as one of the fastest ProViz cards available. It could be chosen by those who want the fastest GPU solution going, along with a massive 24GB framebuffer. 24GB may be overkill for a lot of current visualization work, but for deep learning, 24GB provides a lot of breathing room.
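
The "breathing room" point can be made concrete with a common rule of thumb for training with the Adam optimizer: roughly 16 bytes per model parameter for FP32 weights, gradients, and the two optimizer moment buffers, before counting activations. Both the 16-byte figure and ignoring activations are simplifying assumptions, and the sketch treats GB as GiB, so read the output as an upper bound on model size rather than a measured result.

```python
BYTES_PER_PARAM = 16  # assumption: FP32 weights (4) + gradients (4) + Adam moments (2 x 4)

def max_trainable_params(vram_gib: int, bytes_per_param: int = BYTES_PER_PARAM) -> int:
    """Largest parameter count whose training state fits in vram_gib GiB.

    Activations, workspace buffers, and framework overhead are ignored,
    so the practical limit is lower.
    """
    return vram_gib * 1024**3 // bytes_per_param

for card, vram in [("GeForce RTX 2080 Ti", 11), ("TITAN RTX", 24)]:
    print(f"{card} ({vram} GB): up to ~{max_trainable_params(vram) / 1e6:,.0f}M parameters")
```

In practice the extra memory is just as often spent on larger batch sizes or higher-resolution inputs, both of which grow the activation footprint this estimate leaves out.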

Despite all it offers, the TITAN RTX can't be called the "ultimate" ProViz solution, since it lacks some of the optimizations that Quadro-branded GPUs have. That means that in certain high-end design suites like Siemens NX, a true Quadro may prove better. But if you don't use any workloads that benefit from those specific optimizations, the TITAN RTX will be very attractive given its feature set (and that framebuffer!). If you're ever unsure about the optimizations in your software of choice, please leave a comment!

A couple of years ago, NVIDIA decided to give the TITAN series some love with driver updates that bring some parity between TITAN and Quadro. We can now say that the TITAN RTX enjoys the same kind of performance boosts that the TITAN Xp did two years ago, something that will be reflected in some of the charts ahead.

Test PC and What We Test

On the following pages, you'll find the results of our workstation GPU test gauntlet. The tests chosen cover a wide range of scenarios, from rendering to compute, and include both synthetic benchmarks and tests with real-world applications from the likes of Adobe and Autodesk.

Nineteen graphics cards have been tested for this article, with the list dominated by Quadro and Radeon Pro workstation cards. There's a healthy sprinkling of gaming cards in there as well, however, to show you any possible optimizations that may be occurring on either side.

Please note that the testing for this article was conducted a few months ago, before an influx of travel and product launches. Graphics drivers released since our testing may improve performance in certain cases, but we wouldn't expect any notable changes, having sanity-checked much of our regularly tested software on both AMD and NVIDIA GPUs. Likewise, the previous version of Windows was used for this testing, but that also didn't reveal any problems when we sanity-checked in version 1903.

Lately, we've spent a lot of time polishing our test suites, as well as our internal testing scripts. We're currently in the process of re-benchmarking a number of GPUs for an upcoming look at ProViz performance with cards from both AMD's Radeon RX 5700 and NVIDIA's GeForce SUPER series. Fortunately, results from those cards don't really eat into a top-end card like the TITAN RTX, so the delay hasn't held us back this time.

The specs of our test rig are listed below:

Techgage Workstation Test System

Processor Intel Core i9-9980XE (18-core; 3.0GHz)

Motherboard ASUS ROG STRIX X299-E GAMING

Memory HyperX FURY (4x16GB; DDR4-2666 16-18-18)

Graphics AMD Radeon VII (16GB)

AMD Radeon RX Vega 64 (8GB)

AMD Radeon RX 590 (8GB)

AMD Radeon Pro WX 8200 (8GB)

AMD Radeon Pro WX 7100 (8GB)

AMD Radeon Pro WX 5100 (8GB)

AMD Radeon Pro WX 4100 (4GB)

AMD Radeon Pro WX 3100 (4GB)

NVIDIA TITAN RTX (24GB)

NVIDIA TITAN Xp (12GB)

NVIDIA GeForce RTX 2080 Ti (11GB)

NVIDIA GeForce RTX 2060 (6GB)

NVIDIA GeForce GTX 1080 Ti (11GB)

NVIDIA GeForce GTX 1660 Ti (6GB)

NVIDIA Quadro RTX 4000 (8GB)

NVIDIA Quadro P6000 (24GB)

NVIDIA Quadro P5000 (16GB)

NVIDIA Quadro P4000 (8GB)

NVIDIA Quadro P2000 (5GB)

Audio Onboard

Storage Kingston KC1000 960GB


Geforce 11xx Or 20xx By NVIDIA Ampere Or Volta To Face AMD VEGA 2 In 2018

New Post has been published on https://www.ultragamerz.com/geforce-11xx-or-20xx-by-nvidia-ampere-or-volta-to-face-amd-vega-2-in-2018/


Will the Next GeForce Be Built on Ampere or Volta? It Will Be Revealed at GTC in March 2018

New NVIDIA Ampere/Volta Gaming Graphics GeForce 11/20 vs AMD VEGA 2 (2018). Credit: Nvidia

NVIDIA just released the Titan V, which is not aimed at gaming but is billed as the most powerful graphics card ever made for the PC. It is built on the 12nm Volta architecture, which, had everything gone as expected, would have been Nvidia's next gaming platform. Recently, however, the next Nvidia architecture for gaming has been rumored to be called Ampere. As far as we know, Nvidia is planning to reveal Ampere, or an "A" gaming version of Volta, at the GPU Technology Conference in March, in the first half of 2018. CES 2018 is also on its way, and it's possible the company will start talking about the new GPU series there; many believe the same will be the case for AMD. One important point is that each company's release timing may depend heavily on the other's. AMD is also expected to move to its 12nm VEGA 2 soon, in early 2018. Nvidia's next generation may be named GeForce 11 or GeForce 20.

Nvidia Ampere is new and has only been revealed through unofficial channels, while Volta appears on Nvidia's 2020 roadmaps and already has two main products out, both aimed at AI processing. If there had been no leak about Ampere, we would only be talking about a gaming version of Volta. On the other hand, Nvidia's CEO has signaled that there is no Volta for gaming, at least for now. He has said that there is no plan to bring Volta to the gaming market yet:

“Volta for gaming, we haven’t announced anything. And all I can say is that our pipeline is filled with some exciting new toys for the gamers, and we have some really exciting new technology to offer them in the pipeline. But for the holiday season for the foreseeable future, I think Pascal is just unbeatable. It’s just the best thing out there. And everybody who’s looking forward to playing Call of Duty or Destiny 2, if they don’t already have one, should run out and get themselves a Pascal.” Nvidia CEO Jensen Huang

THE POWER OF GTC 2018 SILICON VALLEY MARCH 26-29, 2018

GTC is the largest and most important event of the year for GPU developers around the world. It is held in several locations, and in 2018 it takes place in Silicon Valley. You can explore GTC and the global GTC event series for valuable training and a showcase of the most vital work in the computing industry today, and get updated on the latest breakthroughs in everything from artificial intelligence and deep learning to healthcare, virtual reality, accelerated analytics, and self-driving cars. "IF GPUs ARE TIME MACHINES, THEN GTC IS WHERE YOU COME SEE THE FUTURE." (Jensen Huang, NVIDIA). Hundreds of additional GTC 2018 speakers will be added to the featured speakers.

FEATURED 2018 SPEAKERS

Nvidia Volta 2018 Credit: Nvidia

Tags: Graphic cards, Nvidia, Nvidia 2018, Nvidia Ampere 2018, nvidia new gaming graphics, nvidia volta, nvidia volta 2018, nvidia volta game GPU, nvidia volta gaming graphic cards, nvidia ampere, technology, gtc 2018, nvda, ampere


AMPERE | Nvidia's Next Gaming Graphics of 2018 | Reports Say

New Post has been published on https://www.ultragamerz.com/ampere-nvidias-next-gaming-graphics-of-2018-report-say/


When it comes to releasing new product lines, especially in gaming hardware, where makers like AMD, Intel, and NVIDIA compete fiercely, each company's next move depends on how its competitors act. After rumors that AMD is moving its second-generation 12nm Radeon RX VEGA graphics up from 2019 to 2018, we are now hearing word of a new Nvidia line called Ampere to replace Pascal. It isn't entirely clear, though, because the old plan was to bring Volta to the gaming graphics market after Pascal, and the new rumors tell a very different story. Nvidia has reached the end of Pascal's life, which means it is time to look to the future, and that future was supposed to be Volta. Volta is already on the way; in fact, the V100 chip is already used in a Tesla card, but what comes next on Nvidia's roadmap is blank. According to reports this week, Nvidia's next architecture is set to be called "Ampere" and may be revealed officially in 2018. Heise.de reports that information on Nvidia's next-generation architecture should arrive at the GPU Technology Conference next year, under the name "Ampere". The name makes sense: Nvidia has historically named its GPU generations after prominent scientists, and after Pascal, following Alessandro Volta with Andre-Marie Ampere works, given both scientists' contributions to the study of electricity.

Nvidia has typically released five-year roadmaps for its GPUs. It would make sense if the company were extending its roadmap, but jumping straight from Volta, which Nvidia recently called its most powerful GPU architecture, to Ampere is a little outside the box. Unfortunately, nothing else has been released. There is a slight possibility that Nvidia has decided its Volta GPU design is too heavily weighted toward AI (all of the ads, promotions, and videos it has recently released about Volta focus on AI) and wants to make a more suitable consumer graphics card, but that seems incredibly unlikely. If the Ampere name is real, then it's more likely to be the next-next-gen GPU of our green-team dreams.

Nvidia Volta 2018 Credit: Nvidia

Tags: Graphic cards, Nvidia, Nvidia 2018, Nvidia Ampere 2018, nvidia new graphics, nvidia volta, nvidia volta 2018, nvidia volta game graphics, nvidia volta gaming graphic cards, nvidia volta release date, technology


Rumor: NVIDIA GTX 2080, 2070 Ampere Cards Launching March 26-29 at GTC 2018

Gamers will soon be introduced to the new Nvidia GTX 2080/2070 graphics cards, which are also highly anticipated by businesses and miners who need a lot of graphics power. Nvidia is expected to introduce the GTX 2080 and GTX 2070 at the GPU Technology Conference (GTC), which will be held at the end of March. Gamers are eagerly awaiting the new cards, which are said to be based on the 12nm Ampere graphics architecture.

The Ampere architecture has been the subject of rumors over the last few months, and many reports point to this name, although Nvidia has not yet officially announced it. Nvidia, which is expected to switch directly to Ampere for gaming cards without using the Volta architecture, has not yet explained Ampere's details. Whether Ampere is a new architecture in its own right or a developed version of Volta remains a matter of curiosity.

"Ampere" for Gaming, "Turing" for Artificial Intelligence

Ampere, which will reportedly use TSMC's new 12nm production technology, is expected to come with Samsung's new 16 GB GDDR6 memory. The first GPU to use the Ampere architecture is expected to match GP102 (GTX 1080 Ti) in performance while taking the place of GP104 (GTX 1080/1070) in chip size and market positioning. In other words, you could get GTX 1080 Ti-class performance for the price of a GTX 1080.

Apart from this, we also know that the company is working on an architecture called "Turing". Designed for artificial intelligence and machine learning, this architecture is named after the renowned computer scientist and mathematician Alan Turing. Nvidia, which in the past introduced new generations through relatively minor changes, seems to have given up this habit: it appears to have designed two different architectures for two different areas. Of course, the names mentioned in the title are not yet final, and "2080" and "2070" have not been officially confirmed. We will learn whether Nvidia names its new graphics cards 20xx or 11xx at GTC, which will be held March 26-29.


The Future of Ray Tracing.

About Ray Tracing

Hey folks, let's talk about graphics and the big news around ray tracing. Computer graphics have come an incredibly long way since the days of the first video game. Games started off as grids of white squares on a gray background, and they've advanced through 2D scenes with 8- and then 16-bit color to polygons, complex textures, and full 3D. Today, games use tools like tessellation, shaders, occlusion, and mapping to achieve nearly photorealistic results, and we may be getting closer to the holy grail of lifelike graphics: ray tracing.

Let's back up a bit. Since those early 3D days, some of the biggest improvements in graphics have had to do with the way scenes render shadows and reflections. In 2004, Doom 3 introduced some of the first high-quality dynamic shadows in gaming. This meant the shadows moved with the lights and the characters in the game, and the results were fantastic: eerie lighting cast long shadows on catwalks, and when enemies ran through hallways lit by swinging lights, they cast chaotic shadows that moved with them. It all worked together to thoroughly freak out gamers of the day.

Those shadow and lighting techniques have come a long way since Doom 3, but they are still fundamentally based on the same idea, called rasterization. In rasterization, a scene is drawn pixel by pixel, with the game engine checking things like distance, texture, proximity, light sources, and occlusion (whether or not anything is in the way) before it picks a color for that pixel. You move pixel by pixel through the scene and calculate the color of each one based on the physics and geometry of the scene around it, and eventually you build an image. In rasterization, lighting is particularly challenging.

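
To make the pixel-by-pixel description concrete, here is a toy coverage test using edge functions, a technique many rasterizers use to decide which pixels a triangle covers before shading them. The triangle, the 8x8 grid, and the function names are hypothetical, and depth testing, shading, and tie-breaking rules for edges shared by two triangles are left out.

```python
def edge(ax, ay, bx, by, px, py):
    """Signed-area test: positive if (px, py) lies to the left of edge a->b."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)

def rasterize(tri, width, height):
    """Return a height x width mask of pixels whose centers the triangle covers."""
    (x0, y0), (x1, y1), (x2, y2) = tri
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            px, py = x + 0.5, y + 0.5                 # sample at the pixel center
            w0 = edge(x1, y1, x2, y2, px, py)
            w1 = edge(x2, y2, x0, y0, px, py)
            w2 = edge(x0, y0, x1, y1, px, py)
            # Inside if the point is on the same side of all three edges
            # (either winding order is accepted).
            inside = (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0)
            row.append(inside)
        mask.append(row)
    return mask

mask = rasterize([(0, 0), (8, 0), (0, 8)], 8, 8)
for row in mask:
    print("".join("#" if covered else "." for covered in row))
```

A real rasterizer would then run the lighting logic described above only for the covered pixels, which is where the shadow and occlusion approximations come in.
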
Light sources project a kind of field: things inside that field get lighter on the side facing the light and cast a shadow on the other side. But in the real world, light doesn't just move in a straight line like that; it bounces, diffuses, and passes through objects, depending on what they're made of, and that's a lot to account for. In rasterization, for each light source the computer works out whether something is blocking the path of that light, and if it is, it runs through a series of calculations to work out what to do next. In the simplest case, it just casts a shadow. More complex processes like ambient occlusion try to adjust for the objects in the scene, tweaking the lighting to account for the way objects reflect light and cast some shadow on each other. Still, these methods are relatively crude. The simplest form of ambient occlusion, called screen-space ambient occlusion or SSAO, only accounts for the depth of objects in the scene: it essentially puts more shadow on objects that sit behind other objects, but it doesn't account for where the lights in the scene are. Even advanced ambient occlusion at best creates diffuse shadows that still don't relate to where the light is coming from, and all of these methods struggle with direct light, harsh shadows, and mixed lighting; basically, with the way reflections, shadows, and highlights all work in the real world.

All of this is an attempt to get to what people call global illumination, the idea that a graphics scene could be rendered just like the real world, and here's where ray tracing comes in. In the real world, light sources emit photons that travel in a straight line until they hit a surface, where they can pass through the surface, be reflected, or be absorbed. These photons bounce around, and eventually some of them make it into your eyes; that's how you see.
Ray tracing tries to work the exact same way, but backwards. It draws millions of rays outward from the camera (your perspective in a video game) and then calculates how they'll bounce around the scene. Whenever a ray bounces off an object, another ray, called a shadow ray, is drawn toward the light sources in the scene. If there's a clear path, the first ray makes the object it bounced off brighter; if the path is blocked, it casts a shadow instead. The big upside to ray tracing is hyper-realistic reflections, shadows, and highlights that don't need to be pre-rendered: all of this lighting detail can be generated from the geometry in the scene, and as characters and lights move around, the lighting changes in dynamic and realistic ways. Ray tracing is particularly good at reflections and refraction, like light moving through a glass of water, and at color spill, the way a brightly colored object can change the color of other objects near it.

Ray tracing is potentially a simpler system than layering lighting models with shadows, shaders, and occlusion, but calculating the paths of millions of rays takes an incredible amount of processing power. To fully ray trace a standard 1920 x 1080 HD scene, you need to draw a minimum of 4 million rays to start getting good results, and potentially 50 million or more. Drawing the rays out from the camera instead of out from the light sources is actually a way to cut down on processing, by only rendering the rays that will actually be seen by the player. Even with this backwards trick, processing ray-traced images has traditionally been incredibly slow, and no games currently use real-time ray tracing.
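The backwards approach can be sketched in a few dozen lines of Python. This is a toy under stated assumptions, not Nvidia's, Microsoft's, or any film studio's renderer: the scene contains only spheres and a single point light, the camera sits at the origin looking down the negative z axis, each pixel gets one primary ray, and lit surfaces use a simple Lambert (cosine) brightness term. All function names are hypothetical. For each pixel, a ray is cast from the camera into the scene; at the nearest hit, a shadow ray is cast toward the light, and the point stays dark if anything blocks it. The `rays_per_pixel` helper works out what the ray budgets quoted above mean for a 1920 x 1080 frame.

```python
import math

# Small 3D vector helpers on (x, y, z) tuples.
def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest sphere hit in front of origin, or None."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the sphere
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:
            return t
    return None

def nearest_hit(origin, direction, spheres):
    """Closest (distance, sphere center) among all spheres, or None."""
    best = None
    for center, radius in spheres:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and (best is None or t < best[0]):
            best = (t, center)
    return best

def shade(origin, direction, spheres, light):
    """Brightness in [0, 1] seen along one primary ray."""
    hit = nearest_hit(origin, direction, spheres)
    if hit is None:
        return 0.0  # primary ray escaped: background
    t, center = hit
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, center))
    # Start the shadow ray slightly off the surface so it does not re-hit it.
    start = add(point, scale(normal, 1e-4))
    to_light = sub(light, start)
    light_dist = math.sqrt(dot(to_light, to_light))
    to_light = scale(to_light, 1.0 / light_dist)
    blocker = nearest_hit(start, to_light, spheres)
    if blocker is not None and blocker[0] < light_dist:
        return 0.0  # shadow ray is blocked: the point is in shadow
    return max(0.0, dot(normal, to_light))  # lit: simple Lambert term

def render(width, height, spheres, light):
    """One primary ray per pixel, cast backwards from a camera at the origin."""
    camera = (0.0, 0.0, 0.0)
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            # Map the pixel center onto an image plane at z = -1.
            u = (x + 0.5) / width * 2.0 - 1.0
            v = 1.0 - (y + 0.5) / height * 2.0
            row.append(shade(camera, normalize((u, v, -1.0)), spheres, light))
        image.append(row)
    return image

def rays_per_pixel(total_rays, width=1920, height=1080):
    """Average number of rays each pixel gets from a given ray budget."""
    return total_rays / (width * height)
```

A 1920 x 1080 frame has 2,073,600 pixels, so the 4 million rays quoted above work out to roughly 2 rays per pixel and 50 million to roughly 24. Even this toy fires one primary ray plus one shadow ray per hit pixel, and each extra bounce or light multiplies that cost.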

Ray Tracing in Movies

The technology has been entirely limited to pre-rendered video and Hollywood movies. Animation studios like Pixar have used ray tracing extensively in their films, and part of what makes modern special effects blend so well with real-world footage is the use of ray tracing to create accurate lighting for every part of the scene. These studios have the luxury of render farms with dozens of computers working together, and even there, each frame can take hours to draw instead of the fraction of a second we need for a playable game: "a single frame can take more than 24 hours to render."

Ray Tracing for gaming

New technology might be changing this. Microsoft has unveiled a new set of tools in DirectX 12 that supports ray tracing. DirectX is the set of graphics tools that powers most Windows games at a fundamental level, and its newly announced DXR API supports ray tracing in games. Nvidia also announced its own set of tools, called RTX, that would leverage its own hardware to speed up real-time ray tracing in games. DXR should work on older Nvidia cards in software, but RTX depends on hardware acceleration that's currently only found on Nvidia's Volta line of graphics cards. Specifically, RTX is supposed to add hardware acceleration for ray-traced area shadows, reflections, and ambient occlusion, which should all work together to create a more lifelike scene. Not to be left out, AMD announced updates to its Radeon ray tracing system, including support for real-time ray tracing in games, and promised low-level driver support for Microsoft's DXR in the near future. Unity, the game engine of choice for many indie developers, is also incorporating AMD's ray tracing tech into its game design tools, though only for what's called baked, or non-moving, lights. So are we on the verge of a ray tracing revolution? Well, not quite yet, and here's where things get a little fishy.
Nvidia is saying its Volta cards will support ray tracing at a hardware level, the way current graphics cards have specialized circuits for shaders or video decoding. However, on current Volta cards it's unclear what those special hardware features actually are. Part of the problem is that there are almost no Volta graphics cards out there. Most Volta cards are specialized boards used for supercomputers and AI research, and the only one even approaching a consumer product is the $3,000 Titan V. The recently announced Quadro GV100 is a professional card built on Volta, and it looks to be extraordinarily powerful, but it still isn't really a gaming card. Rumors have also suggested Nvidia may skip consumer cards in the Volta line entirely, and while Nvidia has said future graphics cards for gamers will incorporate the same hardware acceleration for ray tracing, we don't actually know what those future cards will be.

As amazing as these demos look, there's still more reason to be skeptical. Epic's ray tracing demo at GDC was running in real time, but it was running on an Nvidia DGX Station, a computer that starts at around $50,000 and packs a 20-core processor with four Tesla V100 cards, each of which runs around $9,000. Confusingly, Tesla was also the name of a previous Nvidia architecture, but the Tesla V100 actually uses a Volta chip. Many of these demos also still had to use traditional rasterization on parts of the scene to keep frame rates up. And this isn't the first time we've heard announcements about real-time ray tracing: Nvidia announced real-time ray tracing on its Kepler chips at GDC 2012, six years ago. So where does all this leave us?
Ray tracing is legit tech that really could make graphics look amazing, but the demos we've seen this last year aren't even entirely ray-traced, and they were running on hardware that is a long way from what the average gamer might own. Plus, this isn't the first time companies have claimed ray tracing was just around the corner: Intel made these claims in 2010, and so did Nvidia in 2012. There's another part of this too, and as a PC gamer I hate to even talk about it, but it's the consoles. If running these ray-traced demos on a normal gaming PC seems like a fantasy, try getting them to run on a PS4. Integrating ray tracing into a game isn't like flipping a switch; it'll take serious work on the part of developers to bring ray-traced graphics into a game. I'm sure in the next few years some brave developer will come out with an insane ray-traced project for the PC master race, but until the consoles can come close to handling this sort of graphics rendering, ray tracing for all may still be a long way off.


When it comes to the best graphics cards, Nvidia's varied selection is unrivaled, from the wickedly powerful GeForce RTX 2080 Ti to the entry-level GTX 1050 and everything in between. Nvidia remains the crowned ruler of the graphics world. But because there's always demand for new graphics technology and faster hardware, particularly among creators and data scientists, the best graphics card is never enough. This is why Nvidia Volta was created: the next-generation architecture for creatives and professionals. There are already some Nvidia Volta cards out there, but these aren't for gamers. These high-end GPUs are aimed at professionals, and they have price tags to match. That's not to say that Nvidia Volta isn't exciting; it definitely is, especially now that its AI-powered Tensor cores have trickled down to Nvidia Turing GeForce graphics cards like the GeForce RTX 2080 Ti and RTX 2080.


D3D raytracing no longer exclusive to 2080, as Nvidia brings it to GeForce 10, 16

A screenshot of Metro Exodus with raytracing enabled. (credit: Nvidia)

Microsoft announced DirectX raytracing a year ago, promising to bring hardware-accelerated raytraced graphics to PC gaming. In August, Nvidia announced its RTX 2080 and 2080 Ti, a pair of new video cards with the company's new Turing RTX processors. In addition to the regular graphics-processing hardware, these new chips included two additional sets of cores, one designed for running machine-learning algorithms and the other for computing raytraced graphics. These cards were the first, and currently only, cards to support DirectX Raytracing (DXR).

That's going to change in April, as Nvidia has announced that 10-series and 16-series cards will be getting some amount of raytracing support with next month's driver update. Specifically, we're talking about 10-series cards built with Pascal chips (that's the 1060 6GB or higher), Titan-branded cards with Pascal or Volta chips (the Titan X, XP, and V), and 16-series cards with Turing chips (Turing, in contrast to the Turing RTX, lacks the extra cores for raytracing and machine learning).

The GTX 1060 6GB and above should start supporting DXR with next month's Nvidia driver update. (credit: Nvidia)

Unsurprisingly, the performance of these cards will not match that of the RTX chips. RTX chips use both their raytracing cores and their machine-learning cores for DXR graphics. To achieve a suitable level of performance, the raytracing simulates relatively few light rays and uses machine-learning-based antialiasing to flesh out the raytraced images. Absent the dedicated hardware, DXR on the GTX chips will use 32-bit integer operations on the CUDA cores already used for computation and shader workloads.


D3D raytracing no longer exclusive to 2080, as Nvidia brings it to GeForce 10, 16 published first on https://medium.com/@HDDMagReview

MSI Gaming X GeForce GTX 1660 Ti Review

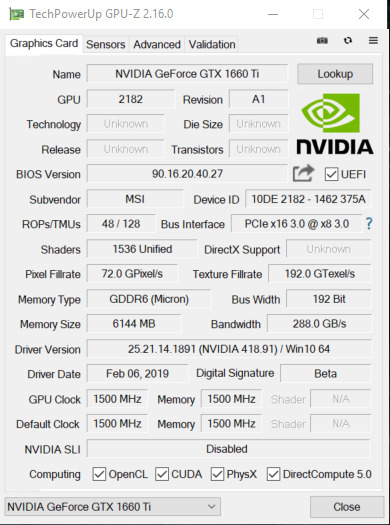

When Nvidia's 10 series of graphics cards hit two years old, we all started to wonder when the next generation would finally be announced. When the Titan V launched, we had all expected the 11 series to follow shortly after and be based on Nvidia's Volta architecture. Then rumors started to circulate about the next generation being the 20 series. Many of us, myself included, thought this was a joke and that Nvidia wasn't going to skip over 11-19. Then that's exactly what they did, when Nvidia announced the all-new RTX 20 series of GPUs with the RTX 2070, 2080, and 2080 Ti and, more recently, the 2060. With the move to the RTX series and real-time ray tracing, many never expected to see the GTX label on a card again. We were recently thrown a curveball with the announcement of the GTX 1660 Ti. The GTX 1660 Ti is still based on the same Turing architecture as the 20 series; however, it doesn't have the 20 series' real-time ray tracing. Placed just below the RTX 2060, the GTX 1660 Ti is said to have performance that rivals the GTX 1070, which launched at a much higher price point. Over the last several generations, I've personally grown partial to MSI graphics cards. Their coolers are big and beefy and keep the cards cool, even under load. So when MSI asked us to take a look at their all-new Gaming X 1660 Ti, I couldn't wait to get my hands on it. If the 1660 Ti performs as well as claimed, this card could redefine budget gaming. We put the MSI Gaming X 1660 Ti through our suite of benchmarks to see how it stacks up against other cards at a similar price point.
Specifications and Features

| Specification | Value |
|---|---|
| Model name | GeForce® GTX 1660 Ti GAMING X 6G |
| Graphics processing unit | NVIDIA® GeForce® GTX 1660 Ti |
| Interface | PCI Express x16 3.0 |
| Cores | 1536 units |
| Core clocks | Boost: 1875 MHz |
| Memory speed | 12 Gbps |
| Memory | 6GB GDDR6 |
| Memory bus | 192-bit |
| Output | DisplayPort x 3 (v1.4) / HDMI 2.0b x 1 |
| HDCP support | 2.2 |
| Power consumption | 130 W |
| Power connectors | 8-pin x 1 |
| Recommended PSU | 450 W |
| Card dimensions | 247 x 127 x 46 mm |
| Weight (card / package) | 869 g / 1511 g |
| Afterburner OC | Yes |
| DirectX version support | 12 API |
| OpenGL version support | 4.5 |
| Maximum displays | 4 |
| VR ready | Yes |
| G-SYNC™ technology | Yes |
| Digital maximum resolution | 7680 x 4320 |

Key Features

- Twin Frozr 7 Thermal Design
  - TORX Fan 3.0
    - Dispersion fan blade: Steep curved blade accelerating the airflow.
    - Traditional fan blade: Provides steady airflow to massive heat sink below.
  - Mastery of Aerodynamics: The heatsink is optimized for efficient heat dissipation, keeping your temperatures low and performance high.
  - Zero Frozr technology: Stopping the fan in low-load situations, keeping a noise-free environment.
- RGB Mystic Light: Customize colors and LED effects with exclusive MSI software and synchronize the look & feel with other components.
- Dragon Center: A consolidated platform that offers all the software functionality for your MSI Gaming product.

Packaging

The front of the box has the MSI logo in the top left-hand corner. An image of the graphics card takes up most of the front of the box. Across the bottom, the Gaming X and Twin Frozr 7 branding sits to the left, and the GeForce GTX logo and 1660 Ti branding to the right.

The back of the box has a breakdown of the Twin Frozr 7 thermal design, including the aerodynamics of the heatsink and a picture showing how the Torx Fan 3.0 works. Below that, it mentions a few of the features of GeForce Experience, as well as some of the key features of the Gaming X 1660 Ti. The last thing of note is the card's minimum system requirements. Now, let's take a closer look at the MSI Gaming X 1660 Ti.

The card comes packed in soft foam and an anti-static bag. Along with the card, there is a quick start guide, a driver disc, and a couple of coasters. The Gaming X 1660 Ti also came with a Lucky the Dragon comic book and a thank-you note from MSI for purchasing one of their graphics cards.

A Closer Look at the MSI Gaming X 1660 Ti

The MSI Gaming X 1660 Ti is a PCIe 3.0 x16 card with a boost clock of 1875 MHz and 6 GB of GDDR6 memory on a 192-bit bus running at 12 Gbps. It is an average-sized card, measuring 247 mm long, 127 mm tall, and 46 mm thick, or about 9.7 x 5.0 x 1.8 in. The Gaming X 1660 Ti sports the Twin Frozr 7 cooler with Torx Fan 3.0 and Zero Frozr technology. This is the seventh generation of the very popular Twin Frozr cooler from MSI, and MSI claims that with it they have "mastered the art of aerodynamics." Airflow Control Technology forces the flow of air directly onto the heat pipes, while the heatsink provides a large surface area to help dissipate more heat. The heatsink is made up of three 6 mm copper heat pipes that run through a massive, tight aluminum fin array. Like other MSI Gaming series cards, the Gaming X 1660 Ti uses a large nickel-plated copper base plate to transfer heat from the GPU to the heat pipes. MSI uses premium thermal compound on the GPU, which it says is designed to outlast the competition. It also uses a die-cast metal sheet that acts as a heatsink for the memory modules. This die-cast sheet connects directly to the I/O bracket, which, along with the backplate, provides additional protection from bending. The Torx Fan 3.0 has two distinct types of fan blades. The first is the traditional type of blade, designed to push air steadily down to the heatsink. The second is what MSI refers to as a dispersion fan blade, which is slightly curved; this curve allows the fan to accelerate airflow, increasing its effectiveness. One of the key features of the Twin Frozr cooler is its Zero Frozr technology. First implemented in 2008, Zero Frozr allows your card to be silent when it is under 60°C.
As long as the card stays under this temperature, the fans will not spin; once it goes above 60°C, the fans start spinning. This keeps the card silent while the system is idle or the card is under a light load, while during benchmarking or heavy gaming the fans spin up to keep the card cool. The MSI Gaming X 1660 Ti has what one might call a traditional I/O, meaning one not designed with virtual reality in mind. The I/O consists of a single HDMI 2.0b port and three DisplayPort 1.4 ports. The Gaming X 1660 Ti also has a brushed aluminum backplate that helps with the rigidity of the card. Both the shroud and the backplate wrap around the end of the card for additional protection of the heatsink. This card also sports a custom PCB with 4+2 power phases and high-end components. The Gaming X 1660 Ti is powered by a single 8-pin power connector. Technically, this tier of card only needs a single 6-pin connector; however, the combination of the custom PCB and the 8-pin connector should help with overclocking.

Like with other graphics card reviews, we did a teardown of the MSI Gaming X 1660 Ti. It is based on the Nvidia TU116 GPU, which has 1536 CUDA cores, 96 TMUs, 48 ROPs, and a max TDP of 120 watts. The memory modules are covered by thermal pads to help dissipate heat. MSI uses 6 GB of Micron GDDR6 memory on the Gaming X 1660 Ti, model number MT61K256M32 to be exact. Even the components are designed to look good, with the MSI dragon logo on each ferrite choke; the Super Ferrite Chokes are labeled "SFC". As on its other cards, MSI uses its Hi-C capacitors. The Gaming X 1660 Ti uses a 4+2 phase PWM controller. I did notice two empty spots for memory, so maybe there will be an 8 GB variant of this card eventually?

RGB Lighting and Software

Like most components in your system these days, the Gaming X 1660 Ti has RGB lighting, with customization options through the Mystic Light app. There is RGB lighting on the side of the card, where the MSI Twin Frozr 7 logo is placed, and on the top and bottom of each of the Torx fans. The Mystic Light app has a total of 19 different settings you can set your card to:

- Rainbow
- Flowing
- Magic
- Patrolling
- Rain Drop
- Lightning
- Marquee
- Meteor
- Stack
- Dance
- Rhythm
- Whirling
- Twisting
- Fade-In
- Crossing
- Steady
- Breathing
- Flashing
- Double Flashing

One quick side note on the Mystic Light app: I'm not sure whether this was an addition for, or exclusive to, the Gaming X Trio 2080 Ti. However, when I checked the Mystic Light app with the Gaming X Trio 2080 Ti installed, there was a 20th lighting setting, Laminating, which was similar to the Patrolling effect.

Dragon Center

The MSI Dragon Center is a desktop application with several functions. It monitors CPU temperature, shown to the far left of the main screen. There is a Gaming Mode that will optimize your system, monitor, overclock, and Zero Frozr mode with one click.

The performance section has two presets and two profiles you can customize. The presets are Silent and OC. These presets, as well as the custom profiles, set the performance of your system.

There is a hardware monitoring section that allows you to monitor several aspects of your system:

- GPU Frequency
- GPU Memory Frequency
- GPU Usage
- GPU Temperature
- GPU Fan Speed (%)
- GPU Fan Speed (RPM)

You can even monitor fan speed by both percentage and RPM per fan. The Eye Rest section allows you to customize different settings on your monitor. There are five presets in the Eye Rest section: Default, EyeRest, Game, Movie, and Customize. Each has different values for Gamma, Level, Brightness, and Contrast, and each of these can be adjusted for the reds, greens, and blues on the monitor.

The Dragon Center also has a LAN Manager that allows you to set the priority of your internet usage for different applications, such as media streaming, file sharing, web browsing, or gaming. The LAN Manager has a chart that shows which applications use the most bandwidth on your system; for me, it's Chrome. It even has its own network test. The Dragon Center is also where you can enable and disable Zero Frozr mode.

Test System and Testing Procedures

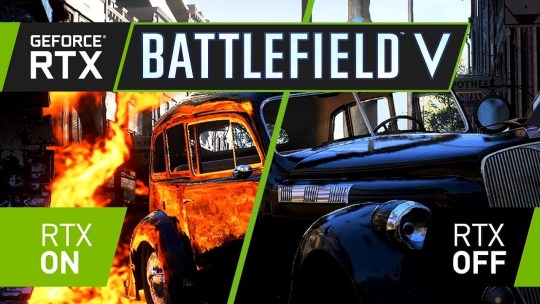

Test System

- Intel Core i7 8700K @ stock settings (3.7 GHz base)
- Z390 Aorus Pro
- MSI Gaming X 1660 Ti
- 32 GB of G.Skill Trident Z DDR4 3200 CAS 16 (XMP Profile #1)
- Intel 512 GB SSD 6 NVMe M.2 SSD (OS)
- 1 TB Crucial P1 NVMe M.2 SSD (games and utilities)
- Swiftech H320 X2 Prestige 360 mm AIO cooler
- 1600 Watt EVGA SuperNOVA P2 80+ Platinum power supply
- Primochill Praxis Wet Bench

Games

- Battlefield V
- Deus Ex: Mankind Divided
- Far Cry 5
- Final Fantasy XV
- Ghost Recon: Wildlands
- Shadow of the Tomb Raider
- Shadow of War
- Witcher 3

Synthetic Benchmarks

- 3DMARK Fire Strike Ultra
- 3DMARK Time Spy Extreme
- Unigine Superposition
- VRMark – Orange Room
- VRMark – Cyan Room
- VRMark – Blue Room

Utilities

- GPU-Z
- Hardware Monitor
- MSI Afterburner
- MSI Dragon Center
- Mystic Light
- FurMark

All testing was done with both the CPU (8700K) and GPU at their stock settings. The i7 8700K was left at its stock speed of 3.7 GHz; however, this particular chip usually boosts between 4.4 and 4.5 GHz. The one exception was when we tested the overclocking capabilities of the Zotac 2060 AMP. Although ambient temperature does vary, we do our best to keep it around 20°C (about 70°F). Each game we tested was run three times, and the three results were averaged. Each benchmark run lasted 180 seconds (3 minutes). Synthetic benchmarks were also run three times each; however, instead of averaging these results, we picked the best overall result. The charts in the gaming section compare the MSI Gaming X GTX 1660 Ti with the Zotac RTX 2060 AMP. All games were tested at their highest presets except Battlefield V, which was tested on the game's High preset on both the Gaming X 1660 Ti and the Zotac 2060 AMP because the game ran below 30 FPS on one of the test runs. For all testing, I use the highest preset that allows the game to give a result over 30 FPS, which is what we would consider playable.
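The scoring rules above are easy to mix up, so here is a minimal Python sketch that encodes them: game results are the average of three runs, synthetic benchmarks keep the best of three, and the chosen preset is the highest one whose result is over 30 FPS. The function names are hypothetical, the sketch assumes presets are listed from highest to lowest, and any numbers used with it are illustrative, not results from this review.

```python
from statistics import mean

PLAYABLE_FPS = 30  # the review's threshold for "playable"

def game_result(runs):
    """Games: three runs per test, reported as the average."""
    if len(runs) != 3:
        raise ValueError("each game test is run three times")
    return mean(runs)

def synthetic_result(scores):
    """Synthetic benchmarks: three runs, reported as the best score."""
    if len(scores) != 3:
        raise ValueError("each synthetic benchmark is run three times")
    return max(scores)

def pick_preset(fps_by_preset):
    """Highest preset whose result is over 30 FPS.

    fps_by_preset is a list of (preset_name, fps) pairs ordered from the
    highest preset to the lowest (an assumption of this sketch).
    Returns None if no preset clears the threshold.
    """
    for preset, fps in fps_by_preset:
        if fps > PLAYABLE_FPS:
            return preset
    return None
```

For example, with hypothetical runs of 28, 29, and 27 FPS on Ultra (average 28) and 40, 41, and 42 FPS on High (average 41), `pick_preset` would return "High".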
Synthetic Benchmarks

3DMARK

3DMARK is the go-to benchmark for enthusiasts. It covers tests for everything from tablets and notebooks to gaming laptops and the most powerful gaming machines. For this review, we tested both the MSI Gaming X 1660 Ti and the Zotac RTX 2060 AMP on the DX11 4K benchmark, Fire Strike Ultra, and the DX12 4K benchmark, Time Spy Extreme. In both tests, the MSI Gaming X 1660 Ti landed just behind the RTX 2060 AMP. In Fire Strike Ultra, the Gaming X 1660 Ti had an overall score of 3368 and a graphics score of 3180. In Time Spy Extreme, it achieved an overall score of 2878 and a graphics score of 2801.

VRMark

VRMark consists of three separate tests, each more intensive on your system than the last: the Orange Room, the Cyan Room, and the Blue Room. The Orange Room test is the least intense and is meant to test a system that meets the minimum hardware requirements for VR gaming. The Cyan Room shows how an API with less overhead can provide a great VR experience, even on less-than-amazing hardware. The Blue Room test is designed for the latest and greatest hardware; it renders at a whopping 5K resolution to push your system to its limits. In all three tests, the MSI Gaming X 1660 Ti fell just short of the 2060 AMP, but this was to be expected, as it's a lower-tier card. In the Orange Room benchmark, the MSI Gaming X 1660 Ti achieved a score of 9614. The Cyan Room test was closer, with the MSI Gaming X 1660 Ti scoring 6348, and in the Blue Room it scored 1985.

Superposition

Superposition is another GPU-intensive benchmark from Unigine, the makers of the very popular Valley and Heaven benchmarks. Superposition is an extreme performance and stability test for graphics cards, power supplies, and cooling systems.
We tested the MSI Gaming X 1660 Ti at two resolutions in Superposition: 4K optimized and 8K optimized. In the 4K optimized test, the MSI Gaming X 1660 Ti scored 5046, and in the 8K optimized test it scored 2056.

Gaming Benchmarks

Battlefield V

Battlefield V is a first-person shooter developed by EA DICE and published by Electronic Arts. It is the latest game in the Battlefield series and takes place during World War 2, with both a single-player and an online portion. For this review, we tested part of Battlefield V's single-player War Stories: the second act of the Nordlys War Story. You play a young woman in the Norwegian resistance whose mission is to save her mother and help destroy a key component the Germans need to complete their atomic bomb. Battlefield V was one of the first games to support Nvidia's new ray tracing feature, and it was tested with both DXR on and DXR off. The charts show a comparison between the GTX 1070 Ti FTW 2 at its ultra preset and the Zotac RTX 2060 AMP at the game's high preset. The MSI Gaming X 1660 Ti did very well in Battlefield V, even in 4K. Keep in mind that Battlefield V was tested on the game's High preset, not Ultra. In 1080p, Battlefield V averaged 96 FPS, and even in 1440p the game stayed above 60 FPS with an average of 63. I was pretty surprised that the game averaged 29 FPS in 4K; if it hadn't been for the dips into the mid-teens, I may have considered it playable at 4K.

Deus Ex: Mankind Divided

Deus Ex: Mankind Divided is an action role-playing game with first-person shooter and stealth mechanics, released in 2016. Set in 2029, two years after Human Revolution, its world is divided between normal humans and those with advanced, controversial artificial organs called augmentations.
You take up the role of Adam Jensen, a double agent for the hacker group Juggernaut Collective, equipped with the latest and most advanced augmentation technology. This game is beautiful and still very demanding on your system. The section benchmarked was near the beginning of the game, after the tutorial. In Deus Ex: Mankind Divided, the MSI Gaming X 1660 Ti did very well in 1080p, averaging 82 FPS. In 1440p, it fell short of 60 with an average of 49 FPS: under 60, but still very playable. With the settings lowered to medium or even high, the MSI Gaming X 1660 Ti could handle 1440p in this game. Although the game averaged 32 FPS in 4K, I still wouldn't consider it playable; the lows were too frequent.

Far Cry 5

Far Cry 5 is the latest in the Far Cry series. It takes place in the fictional Hope County, Montana. You play the role of the unnamed deputy who's sent to arrest Joseph Seed, the leader of the dangerous Eden's Gate cult. However, things do not go as planned, and you spend the game trapped in Hope County attempting to take out Joseph and the rest of his family as they attempt to take over the entire county. Far Cry 5 was released in 2018. Ubisoft has developed a beautiful open world with amazing visuals, but the game is very demanding on even the most powerful systems. This game was tested with the in-game benchmark, as well as near the beginning of the game, when you first leave the bunker owned by Dutch and attempt to clear his island of cult members. The MSI Gaming X 1660 Ti did very well in Far Cry 5. It averaged 90 FPS in 1080p on max settings and even stayed over 60 in 1440p with an average of 64. Even in 4K, the MSI Gaming X 1660 Ti averaged 30 FPS, and with a minimum of 25, I would consider the game playable, especially if you lower the settings to medium or high.

Final Fantasy XV

Fans of the Final Fantasy series waited well over a decade for this game to release. Final Fantasy XV is an open-world action role-playing game.
You play as the main protagonist, Noctis Lucis Caelum, during his journey across the world of Eos. Final Fantasy XV was developed and published by Square Enix as part of the long-running Final Fantasy series, which first started on the original NES back in the late 1980s. The section benchmarked was near the start of the game, where the first actual combat takes place. In Final Fantasy XV, the MSI Gaming X 1660 Ti's results were very close in 1080p and 1440p: the game averaged 58 FPS in 1080p and 52 in 1440p. I wouldn't consider playing this game in 4K with the MSI Gaming X 1660 Ti, since it only averaged 28 FPS with lows into the teens. I'd say Final Fantasy XV is a solid 1080p game on this card, even with the settings lowered a bit.

Gaming Benchmarks Continued

Ghost Recon: Wildlands

Tom Clancy's Ghost Recon Wildlands is a third-person tactical shooter. You play as a member of the Delta Company, First Battalion, 5th Special Forces Group, also known as the "Ghosts", a fictional elite special operations unit of the United States Army under the Joint Special Operations Command. The game takes place in a modern-day setting and is the first in the Ghost Recon series to feature an open world, with 9 different types of terrain. The benchmark was run at the beginning of the first mission in the game. Ghost Recon Wildlands performed well at all resolutions with the MSI Gaming X 1660 Ti. In 1080p, it averaged 80 FPS on the game's highest preset. In 1440p, it averaged 59 FPS, which was still a nice, smooth experience. Even in 4K, the MSI Gaming X 1660 Ti averaged 35 FPS with a minimum of 27; still playable.

Shadow of the Tomb Raider

Shadow of the Tomb Raider is set to be the third and final game of the rebooted trilogy, developed by Eidos Montréal in conjunction with Crystal Dynamics and published by Square Enix. In Shadow of the Tomb Raider, you continue your journey as Lara Croft as she attempts to finish her father's life work.
Her journey takes her from Central America to the hidden city of Paititi as she tries to stop Trinity from gaining power. The benchmarked section was near the beginning of the first segment set in the hidden city. This was compared to the in-game benchmark, which seems to be an accurate representation of the gameplay. In Shadow of the Tomb Raider, the MSI Gaming X 1660 Ti again shows itself to be a great 1080p gaming card, with an average of 88 fps on the game's ultra preset. In 1440p, the MSI Gaming X 1660 Ti averaged a respectable 55 fps in the latest Tomb Raider installment. In 4k, it only averaged 27 fps and had a low of 21.

Shadow of War

Shadow of War is an action role-playing video game developed by Monolith Productions and published by Warner Bros. Interactive Entertainment. It is the sequel to the very successful Shadow of Mordor, released in 2014, and is based on J. R. R. Tolkien's legendarium. The games are set between the events of The Hobbit and The Lord of the Rings in Tolkien's fictional Middle-earth. You again play as Talion, a Ranger of Gondor who was brought back to life with unique abilities after being killed along with his entire family at the start of the last game. Monolith Productions has created a beautiful open world with amazing gameplay and visuals. The MSI Gaming X 1660 Ti did well in Shadow of War in both 1080p and 1440p, averaging 78 fps in 1080p and 54 fps in 1440p. Even in 4k, it averaged above the playable level, at 32 fps.

The Witcher 3

The Witcher 3 is an action role-playing game developed and published by CD Projekt and based on The Witcher series of fantasy novels by Polish author Andrzej Sapkowski. It is the third game in the Witcher series to date and the best so far. You play as Geralt of Rivia on his quest to save his adopted daughter from the Wild Hunt.
At its release in 2015, The Witcher 3 had some of the most beautiful graphics ever seen in a game, as well as some of the most demanding. Even today, almost 4 years later, The Witcher 3 still holds up very well and brings even the most powerful systems to their knees. The game was benchmarked during the hunt for and battle with the griffin near the start of the main story. I was most surprised by the performance of the MSI Gaming X 1660 Ti in The Witcher 3. This is a very demanding game, and the MSI Gaming X 1660 Ti averaged 63 fps in 1080p. In 1440p, I had expected much lower than the 53 fps average it delivered. The one that got me was the average of 31 fps in 4k on the game's ultra preset; I had expected that to be lower.

Overclocking, Noise, and Temperatures

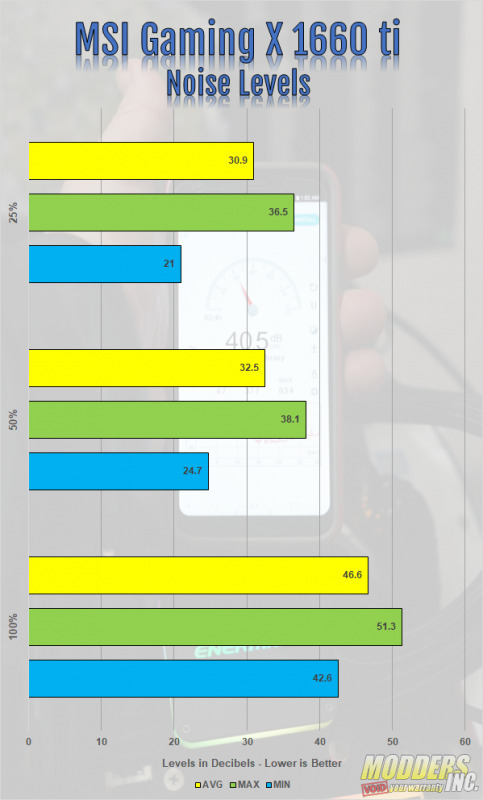

For overclocking, we used MSI Afterburner. Like Zotac's Firestorm, which we used in the RTX 2060 AMP review, MSI Afterburner has an OC Scanner feature. However, unlike with Firestorm, I was able to get the OC Scanner in MSI Afterburner working. To validate the overclock, we used the GPU stress test in FurMark. Being a non-reference card, the Gaming X 1660 Ti is already factory overclocked, with a boost clock of 1875 MHz out of the box. Afterburner reports the base clock speed as 1215 MHz. MSI Afterburner's OC Scanner was able to raise the base clock to 1335 MHz, and the card boosted to 2040 MHz. With manual overclocking, I increased the core offset to +125, with the card boosting to 2070 MHz. That 2070 MHz was the best boost clock the Gaming X 1660 Ti achieved. As for the memory, I was nearly able to max out the slider in MSI Afterburner, adding +1200 to the memory clock. Anything past that and the benchmark would crash.

During the gaming benchmarks, the MSI Gaming X 1660 Ti maxed out at 61°C. Even while running FurMark to validate the GPU overclock, the card never reached 70°C, maxing out at 69°C.

For noise testing, I used the Sound Meter Android app by ABC Apps, found in the Google Play store, rather than an actual decibel meter. It may not be the best solution, but it works. The app gives you a min, max, and average for the noise level in decibels. The noise levels were tested with the fans at 25%, 50%, and 100%. At 100%, the max was 51.3 dB and the average was 46.6 dB. At 50%, the max was 38.1 dB and the average was 32.5 dB. Finally, at 25%, the max was 36.5 dB and the average was 30.9 dB.
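The clock figures from the overclocking test can be turned into percentage gains with a little arithmetic. The short Python sketch below is only an illustration: the helper name `pct_gain` is hypothetical, the clock values are the ones quoted in this review, and it assumes the 1875 MHz rated boost clock as the baseline for the boost comparisons. Because GPU Boost can push a card past its rated boost clock even at stock settings, treat the boost percentages as approximate rather than as measured in-game gains.

```python
"""Rough percentage gains from the MSI Gaming X GTX 1660 Ti overclock.

Clock values are the ones quoted in the review. The helper pct_gain is a
hypothetical name used only for illustration. Assumption: the rated
1875 MHz boost clock is used as the baseline for boost comparisons.
"""


def pct_gain(before_mhz: float, after_mhz: float) -> float:
    """Return the relative increase from before_mhz to after_mhz, in percent."""
    return (after_mhz - before_mhz) / before_mhz * 100.0


# Clock readings reported in the review (MHz).
FACTORY_BOOST = 1875      # rated out-of-the-box boost clock (assumed baseline)
BASE_STOCK = 1215         # base clock as reported by Afterburner
BASE_OC_SCANNER = 1335    # base clock after OC Scanner
BOOST_OC_SCANNER = 2040   # boost clock after OC Scanner
BOOST_MANUAL = 2070       # best boost clock with the +125 core offset

if __name__ == "__main__":
    print(f"OC Scanner base gain:  {pct_gain(BASE_STOCK, BASE_OC_SCANNER):.1f}%")
    print(f"OC Scanner boost gain: {pct_gain(FACTORY_BOOST, BOOST_OC_SCANNER):.1f}%")
    print(f"Manual boost gain:     {pct_gain(FACTORY_BOOST, BOOST_MANUAL):.1f}%")
```

Run as a script, it prints gains of roughly 9.9% for the base clock, 8.8% for the OC Scanner boost, and 10.4% for the manual boost, which puts the roughly 10% manual overclock in perspective.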

Final Thoughts and Conclusion

The MSI Gaming X GTX 1660 Ti offered a far better gaming experience than I had expected when I first heard of its release. I had originally expected it to perform slightly above the GTX 1050 Ti, but I was wrong. The Gaming X 1660 Ti performed just below the RTX 2060 AMP we recently reviewed. This puts the MSI Gaming X 1660 Ti on par with the GTX 1070, yet the Gaming X 1660 Ti launched at a lower price than the 1070. Like other Gaming X cards from MSI, the card design is beautiful. I love the use of neutral colors: the gray and black color scheme will allow the card to fit well into most builds, and the brushed aluminum backplate looks great. It's good to see most companies doing this now. Although I'm not a fan of RGB lighting, the Gaming X 1660 Ti does RGB right. The lighting is subtle and not overdone, and with the Mystic Light app you can customize it however you want, or even disable it altogether. The MSI Gaming X 1660 Ti has proven to be a beast of a 1080p gaming card. All but one of the eight games we tested averaged over 60 FPS in 1080p (Final Fantasy XV came in just under, at 58 FPS), and some even reached the 80s and above. A couple of the games, including Far Cry 5 and Battlefield V, even averaged over 60 FPS in 1440p, and Far Cry 5 did so on the game's Ultra preset. So, by lowering the details, the MSI Gaming X 1660 Ti could easily handle many modern games in 1440p. Some games were playable in 4k, such as The Witcher 3, which averaged 31 FPS. However, the MSI Gaming X 1660 Ti is not a 4k gaming card, nor was it intended to be. If you're looking to build a system on a tight budget, the MSI Gaming X GTX 1660 Ti is a great card to consider. At the time of this review, we found the MSI Gaming X 1660 Ti on Amazon for about $360. However, that's a fair amount over the $309.99 MSRP MSI has set on this card, so shop around; I'm sure you can find it for a better price.

New NVIDIA Ampere/Volta Gaming Graphics Geforce 11/20 VS AMD VEGA 2 - 2018

New Post has been published on https://www.ultragamerz.com/new-nvidia-ampere-volta-gaming-graphics-geforce-11-20-vs-amd-vega-2-2018/

Ampere or Volta: It Will Be Revealed at GTC, March 2018

New NVIDIA Ampere/Volta Gaming Graphics Geforce 11/20 VS AMD VEGA 2 – 2018 – Credit By Nvidia

Forget Volta graphics: plans have changed for Nvidia's next gaming graphics cards. NVIDIA just released the Titan V, which is not for gaming but is the most powerful graphics card ever made for the PC. It is based on 12nm Volta and got a lot of headlines. Volta used to be considered the main next Nvidia architecture for gaming as well, but after a while, rumors came out that Nvidia's next-generation GPU architecture would be GeForce Ampere. Nvidia is planning to reveal Ampere, or the gaming version of Volta, during the GPU Technology Conference in March, much sooner than the second half of 2018. CES 2018 is also on its way, and it is possible that Nvidia will start talking about something new and announce a new GPU at CES; many believe that will be the case for AMD. One important point is that the two companies' plans depend heavily on each other. AMD is going to jump to the next Vega in early 2018 too. Nvidia's next generation may be named GeForce 11 or GeForce 20.

Little is known about the Ampere microarchitecture right now, but the Volta chips used in Nvidia's supercomputer GPUs are performing very well. Whether or not the rumors about Ampere as a separate platform or a gaming version of Volta turn out to be correct, anything better than Volta is going to be good. Ampere will be the successor to Nvidia's Pascal-based GeForce 10 series graphics cards. True or false, Pascal has run its course, and no major graphics card has been released recently except the Titan X Star Wars edition and the GTX 1070 Ti. We've never heard anything about Ampere from Nvidia before, but we have also seen Nvidia deny that Volta is going to be a gaming platform. Meanwhile, AMD is working behind the scenes to bring its next-gen 7nm Navi GPU architecture into play as soon as it can. Until recently, Nvidia has hardly been under pressure from AMD to deliver significantly more powerful GPUs anytime soon. If AMD moves to the next generation of RX Vega in early 2018, you should expect it to beat Nvidia's current Pascal-based GeForce 10 series cards.

Despite all this, NVIDIA's CEO has said there is no plan to move Volta into the gaming market yet:

“Volta for gaming, we haven’t announced anything. And all I can say is that our pipeline is filled with some exciting new toys for the gamers, and we have some really exciting new technology to offer them in the pipeline. But for the holiday season for the foreseeable future, I think Pascal is just unbeatable. It’s just the best thing out there. And everybody who’s looking forward to playing Call of Duty or Destiny 2, if they don’t already have one, should run out and get themselves a Pascal.” Nvidia CEO Jensen Huang

THE POWER OF GTC 2018 SILICON VALLEY MARCH 26-29, 2018

GTC is the largest and most important event of the year for GPU developers around the world. It is held in different places, and in 2018 it will be in Silicon Valley. You can explore GTC and the global GTC event series for valuable training and a showcase of the most vital work in the computing industry today, and get updated on the latest breakthroughs in everything from artificial intelligence and deep learning to healthcare, virtual reality, accelerated analytics, and self-driving cars. “IF GPUs ARE TIME MACHINES, THEN GTC IS WHERE YOU COME SEE THE FUTURE.” Jensen Huang, NVIDIA. Hundreds of additional GTC 2018 speakers will be added to the featured 2018 speakers.

Nvidia Volta 2018 Credit: Nvidia

Tags: Graphic cards, Nvidia, Nvidia 2018, Nvidia Ampere 2018, nvidia new gaming graphics, nvidia volta, nvidia volta 2018, nvidia volta game GPU, nvidia volta gaming graphic cards, nvidia ampere, technology, gtc 2018, nvda, ampere

Graphic Cards 2019: The (other) big three in 2019: AMD, Intel and Nvidia

What new PC processors will we see in 2019?

This last year has been a bizarre one in the world of PC components. AMD, Intel, and Nvidia have all launched major product lines that ruled their respective portions of the market, at least for a little while. AMD launched its 2nd Generation Ryzen and Threadripper lineups, which brought fantastic performance at a bargain price, while Nvidia launched Turing and the best graphics cards we've ever seen. And Intel? Well, Intel released four different microarchitectures to varying degrees of success.

But what will 2019 look like? As all of these manufacturers mobilize to launch more efficient silicon than ever before, will we finally start seeing 7-nanometer (nm) processors in the mainstream? Or will AMD launch a line of graphics cards that gives the RTX 2080 and RTX 2080 Ti a run for their money? What will the mid- and low-end Nvidia Turing cards look like?

AMD 2019