#nvidia volta game GPU


Nvidia HGX vs DGX: Key Differences in AI Supercomputing Solutions

Nvidia HGX vs DGX: What are the differences?

Nvidia is comfortably riding the AI wave. And for at least the next few years, it will likely not be dethroned as the AI hardware market leader. With its extremely popular enterprise solutions powered by the H100 and H200 “Hopper” lineup of GPUs (and now B100 and B200 “Blackwell” GPUs), Nvidia is the go-to manufacturer of high-performance computing (HPC) hardware.

Nvidia DGX is an integrated AI HPC solution targeted toward enterprise customers needing immensely powerful workstation and server solutions for deep learning, generative AI, and data analytics. Nvidia HGX is based on the same underlying GPU technology. However, HGX is a customizable enterprise solution for businesses that want more control and flexibility over their AI HPC systems. But how do these two platforms differ from each other?

Nvidia DGX: The Original Supercomputing Platform

It should surprise no one that Nvidia's primary focus isn't on its GeForce lineup of gaming GPUs anymore. Sure, the company still claims the lion's share of the best gaming GPUs, but its recent resounding success is driven by enterprise and data center offerings and AI-focused workstation GPUs.

Overview of DGX

The Nvidia DGX platform integrates up to 8 Tensor Core GPUs with Nvidia’s AI software to power accelerated computing and next-gen AI applications. It’s essentially a rack-mount chassis containing 4 or 8 GPUs connected via NVLink, high-end x86 CPUs, and a bunch of Nvidia’s high-speed networking hardware. A single DGX B200 system is capable of 72 petaFLOPS of training and 144 petaFLOPS of inference performance.
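As a quick sanity check on those numbers, the sketch below divides the DGX B200 system totals by the eight GPUs in the chassis. It assumes perfectly even scaling, and the function name is illustrative. The text doesn't state the precision behind each figure; NVIDIA generally quotes these headline numbers at low precision (FP8 for training, FP4 for inference), so they should not be compared with FP32 specs.

```python
def per_gpu_petaflops(system_pflops: float, gpus: int = 8) -> float:
    """Split a system-level throughput figure evenly across its GPUs.

    Assumes ideal, even scaling; real workloads rarely hit peak on every GPU.
    """
    return system_pflops / gpus

# DGX B200 system figures quoted above (low-precision Tensor Core numbers).
training = per_gpu_petaflops(72)    # 9.0 petaFLOPS per GPU
inference = per_gpu_petaflops(144)  # 18.0 petaFLOPS per GPU
print(f"per GPU: {training} PF training, {inference} PF inference")
```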

Key Features of DGX

AI Software Integration: DGX systems come pre-installed with Nvidia’s AI software stack, making them ready for immediate deployment.

High Performance: With up to 8 Tensor Core GPUs, DGX systems provide top-tier computational power for AI and HPC tasks.

Scalability: Solutions like the DGX SuperPOD integrate multiple DGX systems to form extensive data center configurations.

Current Offerings

The company currently offers both Hopper-based (DGX H100) and Blackwell-based (DGX B200) systems optimized for AI workloads. Customers can go a step further with solutions like the DGX SuperPOD built from DGX GB200 systems, each of which integrates 36 liquid-cooled Nvidia GB200 Grace Blackwell Superchips, comprising 36 Nvidia Grace CPUs and 72 Blackwell GPUs. This monstrous setup spans multiple racks connected through Nvidia Quantum InfiniBand, allowing companies to scale to thousands of GB200 Superchips.
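The per-Superchip ratios in that description (one Grace CPU and two Blackwell GPUs per GB200 Superchip, 36 Superchips per DGX GB200 system) make scaling easy to tally. Below is a minimal sketch; the eight-system configuration in the usage line is a hypothetical example, not a figure from the text.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Totals:
    superchips: int
    grace_cpus: int
    blackwell_gpus: int

SUPERCHIPS_PER_SYSTEM = 36  # per DGX GB200 system, as described above
CPUS_PER_SUPERCHIP = 1      # one Grace CPU per GB200 Superchip
GPUS_PER_SUPERCHIP = 2      # two Blackwell GPUs per GB200 Superchip

def superpod_totals(systems: int) -> Totals:
    """Count the silicon in a SuperPOD built from `systems` DGX GB200 systems."""
    chips = systems * SUPERCHIPS_PER_SYSTEM
    return Totals(chips, chips * CPUS_PER_SUPERCHIP, chips * GPUS_PER_SUPERCHIP)

print(superpod_totals(1))  # Totals(superchips=36, grace_cpus=36, blackwell_gpus=72)
print(superpod_totals(8))  # hypothetical 8-system pod: 288 Superchips, 576 GPUs
```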

Legacy and Evolution

Nvidia has been selling DGX systems for quite some time now, from the original DGX-1 dating back to 2016 to modern DGX B200-based systems. Across the Pascal, Volta, Ampere, Hopper, and Blackwell generations, Nvidia's enterprise HPC business has pioneered numerous innovations and helped give birth to its customizable platform, Nvidia HGX.

Nvidia HGX: For Businesses That Need More

Build Your Own Supercomputer

For OEMs looking for custom supercomputing solutions, Nvidia HGX offers the same peak performance as Nvidia's Hopper- and Blackwell-based DGX systems but allows OEMs to tweak the rest of the system as needed. For instance, customers can modify the CPUs, RAM, storage, and networking configuration as they please. In fact, HGX is the GPU baseboard used inside Nvidia's DGX systems; OEMs build their own servers around it, but the board itself follows Nvidia's own standard.

Key Features of HGX

Customization: OEMs have the freedom to modify components such as CPUs, RAM, and storage to suit specific requirements.

Flexibility: HGX allows for a modular approach to building AI and HPC solutions, giving enterprises the ability to scale and adapt.

Performance: Nvidia offers HGX in x4 and x8 GPU configurations, with the latest Blackwell-based baseboards only available in the x8 configuration. An HGX B200 system can deliver up to 144 petaFLOPS of performance.

Applications and Use Cases

HGX is designed for enterprises that need high-performance computing solutions but also want the flexibility to customize their systems. It’s ideal for businesses that require scalable AI infrastructure tailored to specific needs, from deep learning and data analytics to large-scale simulations.

Nvidia DGX vs. HGX: Summary

Simplicity vs. Flexibility

While Nvidia DGX represents Nvidia’s line of standardized, unified, and integrated supercomputing solutions, Nvidia HGX unlocks greater customization and flexibility for OEMs to offer more to enterprise customers.

Rapid Deployment vs. Custom Solutions

With Nvidia DGX, the company leans more into cluster solutions that integrate multiple DGX systems into huge and, in the case of the DGX SuperPOD, multi-million-dollar data center solutions. Nvidia HGX, on the other hand, is another way of selling HPC hardware to OEMs at a greater profit margin.

Unified vs. Modular

Nvidia DGX brings rapid deployment and a seamless, hassle-free setup for bigger enterprises. Nvidia HGX provides modular solutions and greater access to the wider industry.

FAQs

What is the primary difference between Nvidia DGX and HGX?

The primary difference lies in customization. DGX offers a standardized, integrated solution ready for deployment, while HGX provides a customizable platform that OEMs can adapt to specific needs.

Which platform is better for rapid deployment?

Nvidia DGX is better suited for rapid deployment as it comes pre-integrated with Nvidia’s AI software stack and requires minimal setup.

Can HGX be used for scalable AI infrastructure?

Yes, Nvidia HGX is designed for scalable AI infrastructure, offering flexibility to customize and expand as per business requirements.

Are DGX and HGX systems compatible with all AI software?

Both DGX and HGX systems are compatible with Nvidia’s AI software stack, which supports a wide range of AI applications and frameworks.

Final Thoughts

Choosing between Nvidia DGX and HGX ultimately depends on your enterprise’s needs. If you require a turnkey solution with rapid deployment, DGX is your go-to. However, if customization and scalability are your top priorities, HGX offers the flexibility to tailor your HPC system to your specific requirements.

Muhammad Hussnain


Exploring the Key Differences: NVIDIA DGX vs NVIDIA HGX Systems

A question we frequently encounter is how the NVIDIA DGX and NVIDIA HGX platforms differ. Despite their similar names, the two platforms represent distinct approaches NVIDIA uses to market its 8x GPU systems featuring NVLink. NVIDIA's change in business strategy became evident during the transition from the P100 "Pascal" generation to the V100 "Volta" generation. This period marked the rise of the HGX model, a trend that has continued through the A100 "Ampere" and H100 "Hopper" generations.

NVIDIA DGX versus NVIDIA HGX: What Is the Difference?

Focusing primarily on the 8x GPU configurations that utilize NVLink, NVIDIA’s product lineup includes the DGX and HGX lines. While there are other models like the 4x GPU Redstone and Redstone Next, the flagship DGX/HGX (Next) series predominantly features 8x GPU platforms with SXM architecture. To understand these systems better, let’s delve into the process of building an 8x GPU system based on the NVIDIA Tesla P100 with SXM2 configuration.

DeepLearning12 Initial Gear Load Out

Each server manufacturer designs and builds a unique baseboard to accommodate GPUs. NVIDIA provides the GPUs in the SXM form factor, which are then integrated into servers by either the server manufacturers themselves or by a third party like STH.

DeepLearning12 with Half the Heatsinks Installed

This task proved to be quite challenging. We encountered an issue with a prominent server manufacturer based in Texas, where they had applied an excessively thick layer of thermal paste on the heatsinks. This resulted in damage to several trays of GPUs, with many experiencing cracks. This experience led us to create one of our initial videos, aptly titled “The Challenges of SXM2 Installation.” The difficulty primarily arose from the stringent torque specifications required during the GPU installation process.

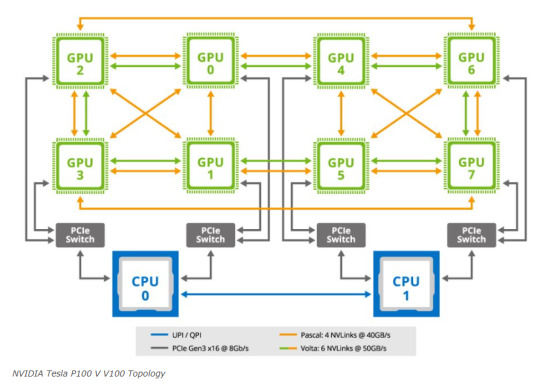

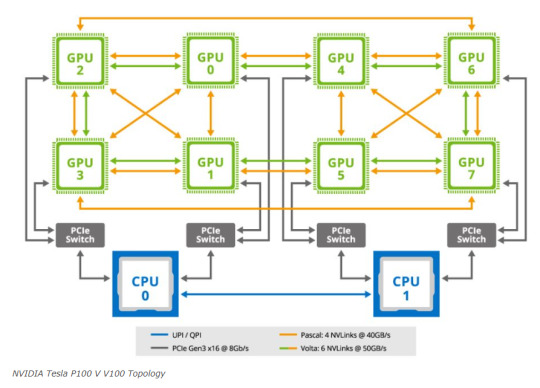

NVIDIA Tesla P100 vs. V100 Topology

During this period, NVIDIA established a standard for the 8x SXM GPU platform. The standard incorporated Broadcom PCIe switches, initially for host connectivity, and was later expanded to include InfiniBand connectivity.

Microsoft HGX 1 Topology

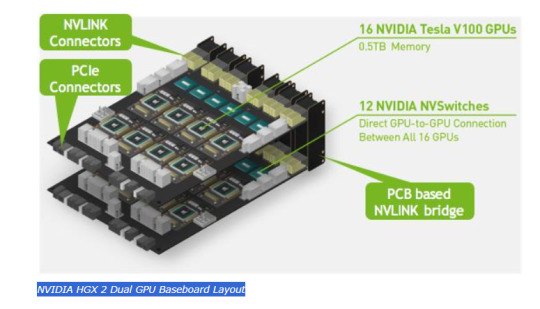

It also added NVSwitch. NVSwitch was a switch for the NVLink fabric that allowed higher performance communication between GPUs. Originally, NVIDIA had the idea that it could take two of these standardized boards and put them together with this larger switch fabric. The impact, though, was that now the NVIDIA GPU-to-GPU communication would occur on NVIDIA NVSwitch silicon and PCIe would have a standardized topology. HGX was born.
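To see why moving GPU-to-GPU traffic onto NVSwitch mattered, the sketch below counts hops between every GPU pair in two simplified 8-GPU topologies. The pre-NVSwitch layout is an assumption, not taken from the text: a DGX-1 (P100)-style hybrid cube-mesh in which two groups of four GPUs are fully linked and GPU i also links to GPU i + 4. The NVSwitch case is idealized as every pair being one switch hop apart. Link counts and bandwidth are ignored.

```python
from collections import deque
from itertools import combinations

N_GPUS = 8

def hybrid_cube_mesh(n: int = N_GPUS) -> set[tuple[int, int]]:
    """Assumed DGX-1 (P100) style mesh: two fully linked quads plus GPU i <-> GPU i+4."""
    half = n // 2
    links = set(combinations(range(half), 2)) | set(combinations(range(half, n), 2))
    links |= {(i, i + half) for i in range(half)}
    return links

def nvswitch_fabric(n: int = N_GPUS) -> set[tuple[int, int]]:
    """Idealized NVSwitch fabric: every GPU pair is one switch hop apart."""
    return set(combinations(range(n), 2))

def hops_between_pairs(links: set[tuple[int, int]], n: int = N_GPUS) -> dict[tuple[int, int], int]:
    """Breadth-first search from every GPU to get the hop count for each pair."""
    adj = {g: set() for g in range(n)}
    for a, b in links:
        adj[a].add(b)
        adj[b].add(a)
    hops = {}
    for src in range(n):
        dist = {src: 0}
        queue = deque([src])
        while queue:
            cur = queue.popleft()
            for nxt in adj[cur]:
                if nxt not in dist:
                    dist[nxt] = dist[cur] + 1
                    queue.append(nxt)
        for dst in range(src + 1, n):
            hops[(src, dst)] = dist[dst]
    return hops

for name, links in (("hybrid cube-mesh", hybrid_cube_mesh()), ("NVSwitch", nvswitch_fabric())):
    hops = hops_between_pairs(links)
    indirect = sum(1 for h in hops.values() if h > 1)
    print(f"{name}: {indirect} of {len(hops)} GPU pairs need more than one hop")
```

In this simplified model, 12 of the 28 GPU pairs in the mesh must relay traffic through an intermediate GPU, while the switched fabric gives every pair a single hop.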

NVIDIA HGX 2 Dual GPU Baseboard Layout

Compare the NVIDIA V100 setup in a server from 2020, notable for its distinctive color scheme, particularly on the NVIDIA SXM coolers, with the earlier P100 version, and an interesting detail emerges. In the Gigabyte server that housed the P100, the SXM2 heatsinks carried no branding. With the advent of the NVSwitch baseboard equipped with SXM3 sockets, NVIDIA began supplying not just the sockets but also the GPUs and their cooling systems as one integrated assembly. This marked a significant shift in NVIDIA's hardware design strategy.

Consequences

The consequences of this development were significant. Server manufacturers could now buy a complete 8-GPU module directly from NVIDIA instead of mounting the GPUs and applying thermal paste themselves, the step that had gone wrong with the over-pasted heatsinks described earlier. This change marked the inception of the NVIDIA HGX topology. It allowed server vendors to customize the surrounding hardware as they desired: they could choose their preferred RAM, CPUs, storage, and other components, while the GPU configuration itself was fixed by the NVIDIA HGX baseboard.

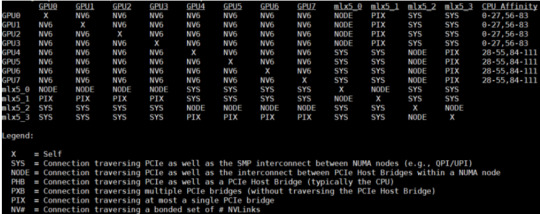

Inspur NF5488M5 Nvidia Smi Topology

This approach was very successful. In the next generation, the NVSwitch heatsinks got larger and the GPUs lost their distinctive paint job, but we got the NVIDIA A100. The codename for this baseboard is "Delta"; officially, the board was called the NVIDIA HGX.

Inspur NF5488A5 NVIDIA HGX A100 8-GPU Assembly: 8x A100 and NVSwitch Heatsinks (Side 2)

NVIDIA, along with its OEM partners and customers, recognized that raising the power limit would let the same number of GPUs do more work. However, this came with a drawback: higher power consumption means more heat to remove. This prompted the introduction of liquid-cooled NVIDIA HGX A100 "Delta" platforms to manage the extra heat efficiently.
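The power-versus-heat trade-off is simple arithmetic: nearly all the electrical power a GPU draws leaves it as heat. The per-GPU wattages below are hypothetical round numbers chosen only to show the trend, not NVIDIA specifications, and the sketch ignores CPUs, NVSwitch chips, and everything else in the chassis.

```python
def board_heat_kw(gpus: int, watts_per_gpu: float) -> float:
    """Heat the GPUs alone must shed, in kilowatts. Treats all electrical
    power drawn as heat; ignores CPUs, NVSwitch chips, and fans."""
    return gpus * watts_per_gpu / 1000

# Hypothetical per-GPU power limits, chosen only to illustrate the trend.
for watts in (400, 500):
    print(f"8 GPUs at {watts} W -> {board_heat_kw(8, watts):.1f} kW of heat")
```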

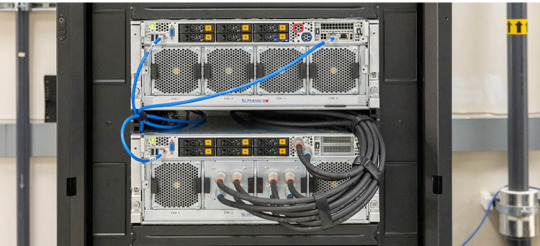

Supermicro Liquid Cooling

The HGX A100 assembly was initially introduced with NVIDIA's own branded air coolers, designed in-house.

In the newest “Hopper” series, the cooling systems were upscaled to manage the increased demands of the more powerful GPUs and the enhanced NVSwitch architecture. This upgrade is exemplified in the NVIDIA HGX H100 platform, also known as “Delta Next”.

NVIDIA DGX H100

NVIDIA's DGX and HGX platforms represent cutting-edge GPU technology, each serving distinct needs in the industry. The DGX series, evolving since the P100 days, integrates HGX baseboards into complete server solutions. Notable examples include the DGX V100 and DGX A100. These systems, built by a rotating set of OEM partners, come in fixed configurations, ensuring consistent, high-quality performance.

While the DGX H100 sets a high standard, the HGX H100 platform caters to clients seeking customization. It allows OEMs to tailor systems to specific requirements, offering variations in CPU types (including AMD or ARM), Xeon SKU levels, memory, storage, and network interfaces. This flexibility makes HGX ideal for diverse, specialized applications in GPU computing.

Conclusion

NVIDIA’s HGX baseboards streamline the process of integrating 8 GPUs with advanced NVLink and PCIe switched fabric technologies. This innovation allows NVIDIA’s OEM partners to create tailored solutions, giving NVIDIA the flexibility to price HGX boards with higher margins. The HGX platform is primarily focused on providing a robust foundation for custom configurations.

In contrast, NVIDIA’s DGX approach targets the development of high-value AI clusters and their associated ecosystems. The DGX brand, distinct from the DGX Station, represents NVIDIA’s comprehensive systems solution.

Particularly noteworthy are the NVIDIA HGX A100 and HGX H100 platforms, which have garnered significant attention following their adoption by leading AI efforts such as OpenAI's ChatGPT. These platforms demonstrate what an 8x NVIDIA A100 setup can do when powering advanced AI tools. For a deeper dive into the various HGX A100 configurations and their role in AI development, the hardware behind ChatGPT offers insightful perspectives on the power and efficiency of 8x NVIDIA A100 systems.

M. Hussnain

#nvidia#nvidia dgx h100#nvidia hgx#DGX#HGX#Nvidia HGX A100#Nvidia HGX H100#Nvidia H100#Nvidia A100#Nvidia DGX H100#viperatech


NVIDIA TITAN RTX

NVIDIA's TITAN series of graphics cards has been an interesting one since the first model launched in 2013. That Kepler-based GTX TITAN peaked at 4.5 TFLOPS single-precision (FP32), performance that was boosted to 5.1 TFLOPS with the release of the TITAN Black the following year.

Fast forward to today, and we have the TITAN RTX, boasting 16.3 TFLOPS of single-precision and 32.6 TFLOPS of half-precision (FP16) performance. Double precision (FP64) used to be standard fare on earlier TITANs, but today you'll need the Volta-based TITAN V for unlocked FP64 performance (6.1 TFLOPS), or AMD's Radeon VII for partially unlocked performance (3.4 TFLOPS).
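Peak FP32 figures like these come from a standard back-of-the-envelope formula: CUDA cores × 2 FLOPs per clock (one fused multiply-add) × boost clock. The sketch below applies it using core counts from the workstation spec table further down; the boost clocks (1770 MHz for TITAN RTX, 1455 MHz for TITAN V) are assumptions, since the table lists base clocks only. Turing's non-Tensor FP16 rate is simply double its FP32 rate, matching the 32.6 TFLOPS quoted above.

```python
def peak_fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Theoretical FP32 peak: each core retires one fused multiply-add
    (counted as 2 FLOPs) per clock cycle."""
    return cuda_cores * 2 * boost_mhz * 1e6 / 1e12

# Core counts from the spec table; boost clocks are assumed reference values.
titan_rtx = peak_fp32_tflops(4608, 1770)
titan_v = peak_fp32_tflops(5120, 1455)
print(round(titan_rtx, 1), round(2 * titan_rtx, 1), round(titan_v, 1))  # 16.3 32.6 14.9
```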

Lately, half precision has garnered a lot of attention in the ProViz market, since it's ideal for deep learning and AI, fields that are growing in popularity at an incredibly quick pace. Add purpose-built Tensor cores to the mix, and deep learning performance on Turing becomes genuinely impressive.

NVIDIA TITAN RTX Graphics Card

Tensor cores aren't the only party trick the TITAN RTX has. Like the rest of the RTX line (on both the gaming and pro side), the TITAN RTX includes RT cores, which help accelerate real-time ray tracing workloads. The cores have to be explicitly supported by developers through APIs such as DXR and VKRay. While support for NVIDIA's technology started out lukewarm, industry support has grown a great deal since RTX was first unveiled at SIGGRAPH last year.

At E3 in June, a number of games had ray tracing-related announcements, including Watch_Dogs: Legion, Cyberpunk 2077, Call of Duty: Modern Warfare, and of course, Quake II RTX. On the design side, several developers have already released RTX-accelerated software, while many more are in the works. NVIDIA has been talking up the Adobes and Autodesks of the world helping to grow the list of RTX-enabled software. We wouldn't be surprised if more RTX goodness is revealed at SIGGRAPH again this year.

For deep learning, the TITAN RTX's strong FP16 performance is fast on its own, but a couple of other features help take things to the next level. The Tensor cores provide much of the acceleration, but the ability to use mixed precision is another big part. With it, the bulk of the math is crunched in half precision, while values that need more accuracy, such as the running copy of the model's weights, are kept in single precision. Combined, this can boost training performance by 3x over the base GPU.
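The payoff of keeping a single-precision master copy is easiest to see with a tiny numeric experiment. This sketch uses Python's `struct` module (format characters `e` and `f`) to round values to FP16 and FP32; the function names are hypothetical, and it illustrates the general mixed-precision idea rather than NVIDIA's actual Tensor Core training path, which also involves techniques such as loss scaling that are not shown.

```python
import struct

def to_fp16(x: float) -> float:
    """Round to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("e", struct.pack("e", x))[0]

def to_fp32(x: float) -> float:
    """Round to the nearest IEEE 754 single-precision (FP32) value."""
    return struct.unpack("f", struct.pack("f", x))[0]

def apply_updates(steps: int, update: float, fp32_master: bool) -> float:
    """Add the same small update `steps` times to a weight that starts at 1.0."""
    step16 = to_fp16(update)   # the update itself is computed in half precision
    weight16 = to_fp16(1.0)    # FP16 working copy of the weight
    master32 = to_fp32(1.0)    # FP32 master copy, used only in mixed precision
    for _ in range(steps):
        if fp32_master:
            master32 = to_fp32(master32 + step16)  # accumulate in FP32
            weight16 = to_fp16(master32)           # refresh the FP16 working copy
        else:
            weight16 = to_fp16(weight16 + step16)  # accumulate directly in FP16
    return master32 if fp32_master else weight16

pure_fp16 = apply_updates(1000, 1e-4, fp32_master=False)
mixed = apply_updates(1000, 1e-4, fp32_master=True)
print(pure_fp16, round(mixed, 3))  # 1.0 1.1
```

Near 1.0, adjacent FP16 values are about 0.001 apart, so an update of 0.0001 rounds away every time it is accumulated in FP16; the FP32 master copy absorbs it and ends up near 1.1.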

NVIDIA's TITAN RTX and GeForce RTX 2080 Ti (Backs)

Also notable for Turing is concurrent integer and floating-point execution, which allows games (or other software) to execute INT and FP operations in parallel without tripping over each other in the pipeline. NVIDIA has noted in the past that in games like Shadow of the Tomb Raider, a sample set of 100 instructions included 62 FP and 38 INT, and that this concurrency directly improves performance as a result.
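NVIDIA's 62/38 split gives a rough ceiling on the benefit. In the idealized sketch below, each pipe issues one instruction per cycle and there are no dependencies or stalls, so running INT and FP side by side shrinks 100 issue slots to 62. Real workloads will fall short of this bound; the function name is illustrative.

```python
def issue_cycles(fp_ops: int, int_ops: int, concurrent: bool) -> int:
    """Idealized cycles to issue a mix of FP and INT instructions, one per
    pipe per cycle, ignoring dependencies, memory stalls, and scheduling limits."""
    return max(fp_ops, int_ops) if concurrent else fp_ops + int_ops

FP, INT = 62, 38  # NVIDIA's Shadow of the Tomb Raider sample, per the text
serial = issue_cycles(FP, INT, concurrent=False)   # 100
parallel = issue_cycles(FP, INT, concurrent=True)  # 62
print(f"ideal speedup: {serial / parallel:.2f}x")  # ideal speedup: 1.61x
```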

Another significant feature of the TITAN RTX is its support for NVLink, which essentially combines the memory pools of two cards into a single framebuffer that can be used for the largest possible projects. Since GPUs generally scale very well with the kinds of workloads this card targets, it's the memory pooling itself that will offer the biggest benefit here. Games that can also take advantage of multi-GPU would benefit from two cards and this connector, too.

Since it's a feature exclusive to these RTX GPUs right now, it's worth mentioning that NVIDIA also includes a VirtualLink port at the back, allowing you to plug in your HMD for VR or, at worst, use it as a full-powered USB-C port for data transfer or phone charging.

With all of that covered, let's take a look at NVIDIA's current workstation lineup as a whole:

NVIDIA's Quadro and TITAN Workstation GPU Lineup

| GPU | Cores | Base MHz | Peak FP32 | Memory | Bandwidth | TDP | Price |
|---|---|---|---|---|---|---|---|
| GV100 | 5120 | 1200 | 14.9 TFLOPS | 32 GB HBM2 (ECC) | 870 GB/s | 185W | $8,999 |
| RTX 8000 | 4608 | 1440 | 16.3 TFLOPS | 48 GB GDDR6 (ECC) | 624 GB/s | Not listed | $5,500 |
| RTX 6000 | 4608 | 1440 | 16.3 TFLOPS | 24 GB GDDR6 (ECC) | 624 GB/s | 295W | $4,000 |
| RTX 5000 | 3072 | 1350 | 11.2 TFLOPS | 16 GB GDDR6 (ECC) | 448 GB/s | 265W | $2,300 |
| RTX 4000 | 2304 | 1005 | 7.1 TFLOPS | 8 GB GDDR6 | 416 GB/s | 160W | $900 |
| TITAN RTX | 4608 | 1350 | 16.3 TFLOPS | 24 GB GDDR6 | 672 GB/s | 280W | $2,499 |
| TITAN V | 5120 | 1200 | 14.9 TFLOPS | 12 GB HBM2 | 653 GB/s | 250W | $2,999 |
| P6000 | 3840 | 1417 | 11.8 TFLOPS | 24 GB GDDR5X (ECC) | 432 GB/s | 250W | $4,999 |
| P5000 | 2560 | 1607 | 8.9 TFLOPS | 16 GB GDDR5X (ECC) | 288 GB/s | 180W | $1,999 |
| P4000 | 1792 | 1227 | 5.3 TFLOPS | 8 GB GDDR5 | 243 GB/s | 105W | $799 |
| P2000 | 1024 | 1370 | 3.0 TFLOPS | 5 GB GDDR5 | 140 GB/s | 75W | $399 |
| P1000 | 640 | 1354 | 1.9 TFLOPS | 4 GB GDDR5 | 80 GB/s | 47W | $299 |
| P620 | 512 | 1354 | 1.4 TFLOPS | 2 GB GDDR5 | 80 GB/s | 40W | $199 |
| P600 | 384 | 1354 | 1.2 TFLOPS | 2 GB GDDR5 | 64 GB/s | 40W | $179 |
| P400 | 256 | 1070 | 0.6 TFLOPS | 2 GB GDDR5 | 32 GB/s | 30W | $139 |

Architecture: P = Pascal; V = Volta; RTX = Turing

The TITAN RTX matches the Quadro RTX 6000 and 8000 for the highest core count in the Turing lineup. NVIDIA says the TITAN RTX is around 3 TFLOPS faster in FP32 than the RTX 2080 Ti, and fortunately, we have results for both cards across a wide range of tests to see how they compare.

What the spec table above doesn't show is the actual performance of the ray tracing and deep learning hardware. This next table clears that up:

NVIDIA's Quadro and TITAN – RTX Performance

| GPU | RT Cores | RTX-OPS | Rays Cast (Giga Rays/s) | FP16 (TFLOPS) | INT8 (TOPS) | Deep Learning (TFLOPS) |
|---|---|---|---|---|---|---|
| TITAN RTX | 72 | 84T | 11 | 32.6 | 206.1 | 130.5 |
| RTX 8000 | 72 | 84T | 10 | 32.6 | 206.1 | 130.5 |
| RTX 6000 | 72 | 84T | 10 | 32.6 | 206.1 | 130.5 |
| RTX 5000 | 48 | 62T | 8 | 22.3 | 178.4 | 89.2 |
| RTX 4000 | 36 | 43T | 6 | 14.2 | 28.5 | 57 |

You'll notice that the TITAN RTX has a higher "rays cast" spec than the top Quadros, which may be thanks to higher clocks. The other specs are identical across the top three GPUs, with the expected scaling down as we move down the stack. Right now, the Quadro RTX 4000 (roughly equivalent to a GeForce RTX 2070) is the lowest-end current-gen Quadro from NVIDIA. Then again, SIGGRAPH is nearly upon us, so NVIDIA may have a hardware surprise coming up; perhaps a Quadro equivalent of the RTX 2060.

When the RTX 2080 Ti already offers so much performance, who exactly is the TITAN RTX for? NVIDIA is targeting it largely at researchers, but it also serves as one of the fastest ProViz cards available. It could be chosen by those who want the fastest GPU solution going, along with a massive 24GB framebuffer. 24GB may be overkill for a lot of current visualization work, but for deep learning, 24GB gives a lot of breathing room.

Despite all it offers, the TITAN RTX can't be called an "ultimate" solution for ProViz, since it lacks some of the Quadro optimizations that the namesake GPUs have. That means in certain high-end design suites like Siemens NX, a real Quadro may prove better. But if you don't use any workloads that benefit from those specific optimizations, the TITAN RTX will be very attractive given its feature set (and that framebuffer!). If you're ever unsure about the optimizations in your software of choice, please leave a comment!

A couple of years ago, NVIDIA decided to give the TITAN series some love with driver optimizations that brought some parity between TITAN and Quadro. We can now say that the TITAN RTX enjoys the same kind of performance boosts that the TITAN Xp received two years ago, something that will be reflected in some of the charts ahead.

Test PC and What We Test

On the following pages, you'll find the results of our workstation GPU test gauntlet. The chosen tests cover a wide range of scenarios, from rendering to compute, and include both synthetic benchmarks and tests with real-world applications from the likes of Adobe and Autodesk.

Nineteen graphics cards have been tested for this article, with the list dominated by Quadro and Radeon Pro workstation cards. There's a healthy sprinkling of gaming cards in there as well, however, to show any optimizations that may be occurring on either side.

Please note that the testing for this article was conducted a few months ago, before an onslaught of travel and product launches. Graphics drivers released since our testing may improve performance in certain cases, but we wouldn't expect any notable changes, having sanity-checked much of our usual test software on both AMD and NVIDIA GPUs. Likewise, the previous version of Windows was used for this particular testing, but that also didn't reveal any drawbacks when we sanity-checked on 1903.

Lately, we've spent a lot of time polishing our test suites, as well as our internal testing scripts. We're currently in the process of rebenchmarking a number of GPUs for an upcoming look at ProViz performance with cards from both AMD's Radeon RX 5700 and NVIDIA's GeForce SUPER series. Fortunately, results from those cards don't really eat into a top-end card like the TITAN RTX, so the delay hasn't hindered us this time.

The specs of our test rig are listed below:

Techgage Workstation Test System

Processor Intel Core i9-9980XE (18-core; 3.0GHz)

Motherboard ASUS ROG STRIX X299-E GAMING

Memory HyperX FURY (4x16GB; DDR4-2666 16-18-18)

Graphics AMD Radeon VII (16GB)

AMD Radeon RX Vega 64 (8GB)

AMD Radeon RX 590 (8GB)

AMD Radeon Pro WX 8200 (8GB)

AMD Radeon Pro WX 7100 (8GB)

AMD Radeon Pro WX 5100 (8GB)

AMD Radeon Pro WX 4100 (4GB)

AMD Radeon Pro WX 3100 (4GB)

NVIDIA TITAN RTX (24GB)

NVIDIA TITAN Xp (12GB)

NVIDIA GeForce RTX 2080 Ti (11GB)

NVIDIA GeForce RTX 2060 (6GB)

NVIDIA GeForce GTX 1080 Ti (11GB)

NVIDIA GeForce GTX 1660 Ti (6GB)

NVIDIA Quadro RTX 4000 (8GB)

NVIDIA Quadro P6000 (24GB)

NVIDIA Quadro P5000 (12GB)

NVIDIA Quadro P4000 (8GB)

NVIDIA Quadro P2000 (5GB)

Audio Onboard

Storage Kingston KC1000 960GB


Geforce 11xx Or 20xx By NVIDIA Ampere Or Volta To Face AMD VEGA 2 In 2018

New Post has been published on https://www.ultragamerz.com/geforce-11xx-or-20xx-by-nvidia-ampere-or-volta-to-face-amd-vega-2-in-2018/


Will the Next GeForce Be Built on Ampere or Volta? It Will Be Revealed at GTC in March 2018

New NVIDIA Ampere/Volta Gaming Graphics: GeForce 11/20 vs. AMD Vega 2 (2018). Credit: Nvidia

NVIDIA just released the NVIDIA TITAN V, which is not aimed at gaming but is billed as the most powerful graphics card ever made for the PC. It is built on 12nm Volta, which, had everything gone as expected, would have been NVIDIA's next platform. Now the next NVIDIA gaming architecture is rumored to be called Ampere. As far as we know, NVIDIA plans to reveal Ampere, or an "A" gaming version of Volta, during the GPU Technology Conference in March, in the first half of 2018. CES 2018 is also on its way, and NVIDIA may start talking about the new GPU series there; many believe the same will be true for AMD. One important point is that each company's release timing may depend heavily on the other's. AMD is also expected to move to the next 12nm Vega 2 in early 2018. NVIDIA's next generation may be named GeForce 11 or GeForce 20.

NVIDIA Ampere is new and has only been revealed through unofficial channels, while Volta has appeared on NVIDIA's 2020 roadmaps and already has two main products out for AI processing. If there had been no leak about Ampere, we would only be talking about a gaming version of Volta. However, NVIDIA's CEO has pushed back on the idea of Volta for gaming, at least for now, saying there is no plan yet to bring Volta to the gaming market:

“Volta for gaming, we haven’t announced anything. And all I can say is that our pipeline is filled with some exciting new toys for the gamers, and we have some really exciting new technology to offer them in the pipeline. But for the holiday season for the foreseeable future, I think Pascal is just unbeatable. It’s just the best thing out there. And everybody who’s looking forward to playing Call of Duty or Destiny 2, if they don’t already have one, should run out and get themselves a Pascal.” Nvidia CEO Jensen Huang

THE POWER OF GTC 2018 SILICON VALLEY MARCH 26-29, 2018

GTC is the largest and most important event of the year for GPU developers around the world. It is held in different locations, and in 2018 it will take place in Silicon Valley. You can explore GTC and the global GTC event series for valuable training and a showcase of the most vital work in the computing industry today, and get up to date on the latest breakthroughs in everything from artificial intelligence and deep learning to healthcare, virtual reality, accelerated analytics, and self-driving cars. "IF GPUs ARE TIME MACHINES, THEN GTC IS WHERE YOU COME SEE THE FUTURE." (Jensen Huang, NVIDIA). Hundreds of additional GTC 2018 speakers will be added to the featured 2018 speakers.


Nvidia Volta 2018 Credit: Nvidia

Tags: Graphic cards, Nvidia, Nvidia 2018, Nvidia Ampere 2018, nvidia new gaming graphics, nvidia volta, nvidia volta 2018, nvidia volta game GPU, nvidia volta gaming graphic cards, nvidia ampere, technology, gtc 2018, nvda, ampere


#ampere#Graphic cards#gtc 2018#NVDA#Nvidia#Nvidia 2018#nvidia ampere#Nvidia Ampere 2018#nvidia new gaming graphics#nvidia volta#nvidia volta 2018#nvidia volta game GPU#nvidia volta gaming graphic cards#technology#Gaming hardware#Gaming News#Technology


Bad news: Nvidia RTX 4080 GPU prices now look even more ominous

Nvidia's RTX 4080 graphics card has been spotted in Europe and the UK ahead of its big launch on November 16, but unfortunately these price tags are even more worrying for would-be buyers than the ones we've already seen in the US.

If you remember, US pricing for third-party RTX 4080 models (that is, custom cards made by Nvidia's manufacturing partners) appeared at the end of last week, and overall it wasn't a pretty sight. Sure, there were some RTX 4080 GPUs that stuck to Nvidia's recommended price, which was a real relief to see, but higher-end models pushed a rather ridiculous premium.

If you were hoping the situation in Europe might be better, think again, based on the first glimpses we've now seen.

In the UK, retailer Box has various Asus RTX 4080 models starting at a rather tempting £1,399 (TUF Gaming), with entry-level Palit RTX 4080 cards (two GameRock variants) also at that price.

Asus's TUF Gaming OC Edition climbs to £1,571, and if you want the top-of-the-range ROG Strix RTX 4080, it will cost you £1,649. Yes, ouch.

As Tom's Hardware points out, the situation elsewhere in Europe looks pretty dire too. The Asus TUF RTX 4080 costs €1,430 in France (InfoMax), which is close to $1,500 in US dollars, and 11,299.00 kr in Denmark (Proshop), which is even more (about $1,560).

Analysis: Pricing misery compounded

Remember that the aforementioned Asus TUF RTX 4080 is one of the graphics cards available at MSRP in the US (at least at Micro Center), so it's rather disappointing to see its price climb considerably in the UK and Europe.

Now, these early prices don't represent the whole RTX 4080 pricing landscape, of course, so we can't get carried away. Big UK retailers such as Scan and Overclockers don't have prices for RTX 4080 models yet, although the product listings are on their sites.

That said, we don't consider this a great sign either; rather, it's an indication that stock could be patchy at launch. That would be a sadly familiar situation, and one that could still lead to price inflation, as scalpers try to scoop up inventory and perhaps sell it on at an even bigger profit.

We're getting ahead of ourselves, of course, but the whole RTX 4080 launch is starting to fill us with trepidation. And it's certainly hard to be optimistic about the UK market in particular when you still can't get an RTX 4090 for less than £1,800 right now.

I prezzi alla fine si stabilizzeranno di più, anche se alcuni giocatori potrebbero aspettare che emergano schede grafiche Nvidia più convenienti, come l'RTX 4070 Ti (anche se conveniente sarà un termine relativo qui, questa GPU potrebbe certamente sembrare qualcosa di un affare rispetto ai prezzi sopra indicati). Allo stesso modo, ci sono opzioni di AMD che arrivano sotto forma di GPU RDNA 3, ma ancora una volta solo modelli di fascia alta, con l'RX 7900 XTX che mira ad andare in punta di piedi con l'RTX 4080.


AI Startup Environments 3/5: The GPU: Green, Cheap, Universal


2017 was the scene of a quiet revolution: Nvidia's Volta and Pascal architectures were the roots of the "mainstream" Turing and Ampere architectures that followed. As a technological consequence, Nvidia is returning, via the metaverse, to the creation of innovative 3D universes that integrate machine learning.

From video games and the creation of synthetic images to AI and the metaverse

The GPU has gone from…


How NVIDIA A100 GPUs Can Revolutionize Game Development

Gaming has evolved from a niche hobby to a booming multi-billion-dollar industry, with its market value expected to hit a whopping $625 billion by 2028. This surge is partly fueled by the rise of cloud gaming, enabling users to stream top titles via services like Xbox Cloud Gaming without the need for pricey hardware. Simultaneously, virtual reality (VR) gaming is gaining traction, with its market size projected to reach $71.2 billion by 2028.

With this growth, there’s a heightened demand for more realistic, immersive, and visually stunning games. Meeting these expectations requires immense graphic processing power, and each new generation of GPUs aims to deliver just that. Enter NVIDIA’s A100 GPU, a game-changer promising significant leaps in performance and efficiency that can transform your game development workflow.

In this article, we’ll explore how adopting NVIDIA A100 GPUs can revolutionize various aspects of game development and enable feats previously deemed impossible.

The Impact of GPUs on Game Development

Remember when video game graphics resembled simple cartoons? Those days are long gone, thanks to GPUs.

Initially, games relied on the CPU for all processing tasks, resulting in pixelated graphics and limited complexity. The introduction of dedicated graphics hardware in the 1980s changed everything. These specialized processors, with their parallel processing architecture, could handle the computationally intensive work of rendering graphics much faster, leading to smoother gameplay and higher resolutions.

The mid-90s saw the advent of 3D graphics, further cementing the GPU’s role. GPUs could now manipulate polygons and textures, creating immersive 3D worlds that captivated players. Techniques like texture filtering, anti-aliasing, and bump mapping brought realism and depth to virtual environments.

Shaders, introduced in the early 2000s, marked a new era. Developers could now write code to control how the GPU rendered graphics, enabling dynamic lighting, real-time shadows, and complex particle effects. Modern NVIDIA GPUs continue to push these boundaries: features like hardware ray tracing, which simulates real-world light interactions, and AI-powered upscaling further blur the lines between reality and virtual worlds. Data-center GPUs like the A100, which handle massive datasets and complex simulations, can help create dynamic weather systems and realistic physics, making games more lifelike than ever.
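To illustrate what a shader does, here is a minimal Python sketch of a per-pixel ("fragment") shader: `shade_pixel` computes one pixel's brightness from the surface normal and a light direction using Lambert's cosine law, and `render` applies it to every pixel of a unit sphere. Real shaders are written in GPU languages such as HLSL or GLSL; the function names and the scene are illustrative assumptions. The key property is that each pixel is computed independently of the others, which is exactly the kind of work a GPU's parallel architecture spreads across many cores.

```python
import math


def shade_pixel(x, y, light=(0.0, 0.0, 1.0)):
    """Lambert-shade one pixel of a unit sphere viewed head-on.

    (x, y) are pixel coordinates mapped to [-1, 1]; light is a direction vector.
    Returns an intensity in [0, 1], or 0.0 for pixels outside the sphere.
    """
    r2 = x * x + y * y
    if r2 > 1.0:
        return 0.0  # background pixel
    # Surface normal of the unit sphere at this pixel (already unit length).
    nx, ny, nz = x, y, math.sqrt(1.0 - r2)
    # Normalize the light direction.
    lx, ly, lz = light
    length = math.sqrt(lx * lx + ly * ly + lz * lz)
    lx, ly, lz = lx / length, ly / length, lz / length
    # Lambert's cosine law: brightness is max(0, N . L).
    return max(0.0, nx * lx + ny * ly + nz * lz)


def render(width, height, light=(0.0, 0.0, 1.0)):
    """Apply shade_pixel to every pixel independently (a GPU would do this in parallel)."""
    image = []
    for row in range(height):
        y = 1.0 - 2.0 * (row + 0.5) / height
        image.append([
            shade_pixel(-1.0 + 2.0 * (col + 0.5) / width, y, light)
            for col in range(width)
        ])
    return image


for row in render(16, 8):
    print("".join(" .:-=+*#"[min(7, int(v * 8))] for v in row))
```

Because no pixel depends on any other, the loop in `render` could be split across thousands of threads without changing the result.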

NVIDIA A100 GPU Architecture

Before diving deeper, let’s understand the NVIDIA A100 GPU architecture. Built on the revolutionary Ampere architecture, the NVIDIA A100 offers dramatic performance and efficiency gains over its predecessors. Key advancements include:

3rd Generation Tensor Cores: Provide up to 20x higher deep learning training and inference throughput than the previous Volta generation, according to NVIDIA.

Tensor Float 32 (TF32) Precision: Accelerates AI training while maintaining accuracy. Combined with structural sparsity support, it offers optimal speedups.

HBM2e Memory: Delivers up to 80GB capacity and 2 TB/s of bandwidth, which NVIDIA billed at launch as the world's fastest GPU memory system.

Multi-Instance GPU (MIG): Allows a single A100 GPU to be securely partitioned into up to seven smaller GPU instances for shared usage, accelerating multi-tenancy.

NVLink 3rd Gen Technology: Links up to 16 A100 GPUs (through NVSwitch) to operate as one giant GPU, with up to 600 GB/s of interconnect bandwidth per GPU.

PCIe Gen4 Support: Provides 64 GB/s host transfer speeds, doubling interface throughput over PCIe Gen3 GPUs.
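The bandwidth figures in the list above follow from simple arithmetic. The Python sketch below reproduces them under stated assumptions: PCIe Gen3 and Gen4 signal at 8 and 16 GT/s per lane with 128b/130b encoding on an x16 link; an A100 has 12 NVLink 3.0 links at 50 GB/s each (counting both directions); and the 80GB HBM2e interface is 5120 bits wide at roughly 3.2 Gb/s per pin. These inputs are assumptions for illustration, and real-world throughput is lower once protocol overhead is counted.

```python
def pcie_gbytes_per_s(gt_per_s, lanes, encoding=128 / 130):
    """Raw one-direction PCIe bandwidth in GB/s (ignores packet/protocol overhead)."""
    # GT/s per lane x lanes x encoding efficiency = Gbit/s; divide by 8 for GB/s.
    return gt_per_s * lanes * encoding / 8


def nvlink_total_gbytes_per_s(links, gbytes_per_link):
    """Aggregate NVLink bandwidth for one GPU: number of links x per-link bandwidth."""
    return links * gbytes_per_link


def hbm_gbytes_per_s(bus_width_bits, gbit_per_pin):
    """Peak memory bandwidth: bus width x per-pin data rate, divided by 8 bits per byte."""
    return bus_width_bits * gbit_per_pin / 8


gen3 = pcie_gbytes_per_s(8, 16)
gen4 = pcie_gbytes_per_s(16, 16)
print(f"PCIe Gen3 x16: {gen3:.1f} GB/s per direction, {2 * gen3:.1f} GB/s both ways")
print(f"PCIe Gen4 x16: {gen4:.1f} GB/s per direction, {2 * gen4:.1f} GB/s both ways")
print(f"NVLink 3.0 (12 links x 50 GB/s): {nvlink_total_gbytes_per_s(12, 50)} GB/s")
print(f"HBM2e (5120-bit at ~3.2 Gb/s/pin): {hbm_gbytes_per_s(5120, 3.2):.0f} GB/s")
```

The Gen4 result comes out at about 63 GB/s counting both directions, which is the figure usually rounded to 64 GB/s; it is also exactly twice the Gen3 value, matching the "doubling" claim.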

NVIDIA A100 GPU for Game Development

When it comes to game development, the NVIDIA A100 GPU is a total game-changer, transforming what was once thought impossible. Let’s delve into how this GPU revolutionizes game design and workflows with massive improvements in AI, multitasking flexibility, and high-resolution rendering support.

AI-Assisted Content Creation

The NVIDIA A100 significantly accelerates neural networks through its 3rd generation Tensor Cores, enabling developers to integrate powerful AI techniques into content creation and testing workflows. Procedural content generation via machine learning algorithms can automatically produce game assets, textures, animations, and sounds from input concepts. The immense parameter space of neural networks allows for near-infinite content combinations. AI agents powered by the NVIDIA A100 can also autonomously play-test games to detect flaws and identify areas for improvement at a massive scale. Advanced systems can drive dynamic narrative storytelling, adapting moment-to-moment based on player actions.
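TF32, one of the A100 features listed earlier, keeps FP32's 8-bit exponent but stores only 10 explicit mantissa bits (versus FP32's 23), which is part of how the Tensor Cores gain speed on neural-network math. The Python sketch below emulates that precision loss on the CPU by rounding a float32 bit pattern to its top 19 bits (1 sign + 8 exponent + 10 mantissa). It assumes round-to-nearest-even; the rounding the hardware actually applies when converting operands may differ, so treat this as an illustration of TF32 precision, not a bit-exact model of Tensor Core arithmetic. The function name `to_tf32` is hypothetical.

```python
import struct


def to_tf32(x: float) -> float:
    """Round a float32-representable value to TF32 precision (10-bit mantissa).

    Assumes round-to-nearest-even on the 13 dropped mantissa bits.
    Raises OverflowError if x is outside the float32 range.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    if (bits & 0x7F800000) == 0x7F800000:
        # Infinity or NaN: leave the bit pattern untouched.
        return struct.unpack("<f", struct.pack("<I", bits))[0]
    lsb = (bits >> 13) & 1          # lowest mantissa bit that TF32 keeps
    bits += 0x0FFF + lsb            # round to nearest, ties to even
    bits &= 0xFFFFE000              # clear the 13 low mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


print(to_tf32(1 + 2**-10))  # 1.0009765625 (exactly representable in TF32)
print(to_tf32(1 + 2**-11))  # 1.0 (a tie, rounded to the even neighbor)
```

The relative error stays below about 2^-11, which is coarse next to FP32 but usually acceptable for training, where the FP32-sized exponent range matters more than the last mantissa bits.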

Faster Iteration for Programmers

The NVIDIA A100 GPU delivers up to 5x faster build and run times, dramatically accelerating programming iteration speed. This is invaluable for developers, allowing them to code, compile, test, and debug game logic and systems much more rapidly. Fixing bugs or experimenting with new features is no longer hampered by lengthy compile wait times. Programmers can stay in their flow state and make quicker adjustments based on feedback. This faster turnaround encourages bold experimentation, dynamic team collaboration, and ultimately faster innovation.

Multi-Instance GPU Flexibility

The Multi-Instance GPU (MIG) capability enables a single NVIDIA A100 GPU to be securely partitioned into smaller separate GPU instances. Game studios can use MIG to right-size GPU resources for tasks. Lightweight processes can leverage smaller instances while more demanding applications tap larger pools of resources. Multiple development or testing workloads can run simultaneously without contention. MIG also provides flexible access for individuals or teams based on dynamic needs. By improving GPU utilization efficiency, studios maximize their return on NVIDIA A100 investment.
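Right-sizing with MIG comes down to slice accounting. An A100 exposes seven compute slices and eight memory slices, and each MIG profile consumes some of each. The Python sketch below checks whether a requested mix of instances could fit on one A100 80GB. The slice costs in the table are assumed here from NVIDIA's A100 80GB profile naming. Real MIG placement also has rules about where each instance may start, which this sketch does not model, so a passing check is necessary but not sufficient. `fits_on_one_gpu` is a hypothetical helper, not part of any NVIDIA tool.

```python
# Assumed slice costs per MIG profile on an A100 80GB:
# profile -> (compute slices, memory slices). Placement rules are NOT modeled.
A100_80GB_PROFILES = {
    "1g.10gb": (1, 1),
    "2g.20gb": (2, 2),
    "3g.40gb": (3, 4),
    "4g.40gb": (4, 4),
    "7g.80gb": (7, 8),
}
COMPUTE_SLICES = 7
MEMORY_SLICES = 8


def fits_on_one_gpu(requested):
    """Necessary (not sufficient) check that the requested MIG instances fit on one GPU."""
    unknown = [p for p in requested if p not in A100_80GB_PROFILES]
    if unknown:
        raise ValueError(f"unknown MIG profile(s): {unknown}")
    compute = sum(A100_80GB_PROFILES[p][0] for p in requested)
    memory = sum(A100_80GB_PROFILES[p][1] for p in requested)
    return compute <= COMPUTE_SLICES and memory <= MEMORY_SLICES


print(fits_on_one_gpu(["4g.40gb", "3g.40gb"]))  # True
print(fits_on_one_gpu(["4g.40gb", "4g.40gb"]))  # False: needs 8 compute slices
```

For example, a studio could pair one `4g.40gb` instance for a heavier build or simulation job with one `3g.40gb` instance for automated tests, or split the card into seven `1g.10gb` instances for individual developers.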

High-Resolution Gameplay

The throughput of the NVIDIA A100 makes rendering complex 8K scenes feasible. Designers can build hyper-detailed assets and environments that retain clarity when viewed on next-gen displays, and the GPU's headroom leaves room for future graphical demands. Note that the A100 is a data-center GPU without display outputs, so this rendering happens on servers or in the cloud rather than on a local monitor. It also benefits game development workflows, as assets can be created at 8K during modeling or texturing for superior quality before being downscaled to mainstream target resolutions.

Wrapping Up

The NVIDIA A100 GPU represents a monumental leap forward in game development, offering unprecedented levels of performance, efficiency, and flexibility. With its advanced Ampere architecture and cutting-edge features, the A100 is set to revolutionize workflows across all aspects of game creation, from cloud gaming to virtual reality.

One of the most significant advantages of the NVIDIA A100 is its ability to accelerate AI-assisted content creation, allowing developers to generate game assets, textures, animations, and sounds more efficiently than ever before. The NVIDIA A100’s Multi-Instance GPU capability is also great for studios to optimize GPU resources for various tasks, maximizing efficiency and productivity.

Are you ready to revolutionize your game development workflow?

Experience the power of the NVIDIA A100 GPU at EXETON! We offer flexible cloud solutions tailored to your specific needs, allowing you to tap into the A100’s potential without the upfront investment. Our NVIDIA A100 80 GB PCIe GPUs start at just $2.75/hr, so you only pay for what you use!

FAQs

How can NVIDIA A100 GPUs revolutionize game development?

The NVIDIA A100, built with the groundbreaking Ampere architecture and boasting 54 billion transistors, delivers unmatched speeds ideal for the most demanding computing workloads, including cutting-edge game development. With 80 GB of memory, the A100 can effectively accelerate game development workflows.

Is the NVIDIA A100 suitable for both 2D and 3D game development?

Yes, the NVIDIA A100 GPUs are suitable for both 2D and 3D game development, accelerating rendering, simulation, and AI tasks.

Does the NVIDIA A100 provide tools for game optimization and performance tuning?

While the NVIDIA A100 doesn’t provide specific tools, developers can leverage its capabilities for optimization using other software tools and frameworks.

Muhammad Hussnain


Exploring the Key Differences: NVIDIA DGX vs NVIDIA HGX Systems

A frequent topic of inquiry we encounter involves understanding the distinctions between the NVIDIA DGX and NVIDIA HGX platforms. Despite the resemblance in their names, these platforms represent distinct approaches NVIDIA employs to market its 8x GPU systems featuring NVLink technology. The shift in NVIDIA’s business strategy was notably evident during the transition from the NVIDIA P100 “Pascal” to the V100 “Volta” generations. This period marked the significant rise in prominence of the HGX model, a trend that has continued through the A100 “Ampere” and H100 “Hopper” generations.

[Image: NVIDIA DGX versus NVIDIA HGX: what is the difference?]

Focusing primarily on the 8x GPU configurations that utilize NVLink, NVIDIA’s product lineup includes the DGX and HGX lines. While there are other models like the 4x GPU Redstone and Redstone Next, the flagship DGX/HGX (Next) series predominantly features 8x GPU platforms with SXM architecture. To understand these systems better, let’s delve into the process of building an 8x GPU system based on the NVIDIA Tesla P100 with SXM2 configuration.

[Image: DeepLearning12 initial gear load-out]

Each server manufacturer designs and builds a unique baseboard to accommodate GPUs. NVIDIA provides the GPUs in the SXM form factor, which are then integrated into servers by either the server manufacturers themselves or by a third party like STH.

[Image: DeepLearning12 with half of the heatsinks installed]

This task proved to be quite challenging. We encountered an issue with a prominent server manufacturer based in Texas, where they had applied an excessively thick layer of thermal paste on the heatsinks. This resulted in damage to several trays of GPUs, with many experiencing cracks. This experience led us to create one of our initial videos, aptly titled “The Challenges of SXM2 Installation.” The difficulty primarily arose from the stringent torque specifications required during the GPU installation process.

[Image: NVIDIA Tesla P100 vs. V100 topology]

During this development, NVIDIA established a standard for the 8x SXM GPU platform. This standardization incorporated Broadcom PCIe switches, initially for host connectivity, and subsequently expanded to include Infiniband connectivity.

[Image: Microsoft HGX-1 topology]

It also added NVSwitch. NVSwitch was a switch for the NVLink fabric that allowed higher performance communication between GPUs. Originally, NVIDIA had the idea that it could take two of these standardized boards and put them together with this larger switch fabric. The impact, though, was that now the NVIDIA GPU-to-GPU communication would occur on NVIDIA NVSwitch silicon and PCIe would have a standardized topology. HGX was born.

[Image: NVIDIA HGX-2 dual GPU baseboard layout]

Let’s delve into a comparison of the NVIDIA V100 setup in a server from 2020, renowned for its standout color scheme, particularly in the NVIDIA SXM coolers. When contrasting this with the earlier P100 version, an interesting detail emerges. In the Gigabyte server that housed the P100, one could notice that the SXM2 heatsinks were without branding. This marked a significant shift in NVIDIA’s approach. With the advent of the NVSwitch baseboard equipped with SXM3 sockets, NVIDIA upped its game by integrating not just the sockets but also the GPUs and their cooling systems directly. This move represented a notable advancement in their hardware design strategy.

Consequences

The consequences of this development were significant. Server manufacturers could now buy an 8-GPU module directly from NVIDIA instead of installing the GPUs, heatsinks, and thermal paste themselves. This change marked the inception of the NVIDIA HGX topology. Server vendors could customize the surrounding hardware as they wished, choosing their preferred RAM, CPUs, storage, and other components, while adhering to the fixed GPU configuration of the NVIDIA HGX baseboard.

[Image: nvidia-smi topology output on an Inspur NF5488M5]
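On a running HGX- or DGX-based system, the NVLink and NVSwitch wiring can be inspected with `nvidia-smi topo -m`, which prints a GPU-by-GPU matrix where entries such as `NV12` denote NVLink connections (the number is the count of links) and entries such as `PIX`, `PXB`, or `SYS` denote PCIe or system paths. The Python sketch below parses that matrix and checks that every GPU pair talks over NVLink, as it should on an NVSwitch baseboard. The `SAMPLE` text is a hypothetical, abridged four-GPU example rather than captured output, and the helper names are illustrative.

```python
import re

# Hypothetical, abridged `nvidia-smi topo -m` output for a 4-GPU NVSwitch system.
SAMPLE = """\
        GPU0    GPU1    GPU2    GPU3    CPU Affinity    NUMA Affinity
GPU0     X      NV12    NV12    NV12    0-63            0
GPU1    NV12     X      NV12    NV12    0-63            0
GPU2    NV12    NV12     X      NV12    0-63            0
GPU3    NV12    NV12    NV12     X      0-63            0
"""


def parse_topology(text):
    """Return {gpu: {peer_gpu: link}} from an `nvidia-smi topo -m` style matrix."""
    lines = [line for line in text.splitlines() if line.strip()]
    header = lines[0].split()
    gpus = [name for name in header if re.fullmatch(r"GPU\d+", name)]
    matrix = {}
    for line in lines[1:]:
        fields = line.split()
        if fields[0] not in gpus:
            continue  # skip NIC rows, legend lines, etc.
        # GPU columns come first, so take exactly len(gpus) entries after the row name.
        matrix[fields[0]] = dict(zip(gpus, fields[1:1 + len(gpus)]))
    return matrix


def all_pairs_nvlink(matrix):
    """True if every distinct GPU pair reports an NVLink ("NV<n>") connection."""
    return all(
        link.startswith("NV")
        for gpu, row in matrix.items()
        for peer, link in row.items()
        if peer != gpu
    )


topology = parse_topology(SAMPLE)
print(all_pairs_nvlink(topology))
```

On a real machine the input text could come from `subprocess.run(["nvidia-smi", "topo", "-m"], capture_output=True, text=True).stdout`; any extra columns after the GPU columns (CPU affinity, NICs) are ignored by this parser.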

This was very successful. In the next generation, the NVSwitch heatsinks got larger, the GPUs lost a great paint job, but we got the NVIDIA A100.

The codename for this baseboard is “Delta”.

Officially, this board was called the NVIDIA HGX.

[Image: Inspur NF5488A5 NVIDIA HGX A100 8-GPU assembly with 8x A100 and NVSwitch heatsinks, side view]

NVIDIA, along with its OEM partners and customers, recognized that raising the power limit could let the same number of GPUs do more work. However, this came with a drawback: higher power consumption means more heat. This prompted the introduction of liquid-cooled NVIDIA HGX A100 "Delta" platforms to manage that heat efficiently.

[Image: Supermicro liquid cooling]

The HGX A100 assembly was initially introduced with NVIDIA's own branded air coolers.

In the newest “Hopper” series, the cooling systems were upscaled to manage the increased demands of the more powerful GPUs and the enhanced NVSwitch architecture. This upgrade is exemplified in the NVIDIA HGX H100 platform, also known as “Delta Next”.

NVIDIA DGX H100

NVIDIA's DGX and HGX platforms represent cutting-edge GPU technology, each serving distinct needs in the industry. The DGX series, evolving since the P100 days, integrates HGX baseboards into complete server solutions. Notable examples include the DGX V100 and DGX A100. These systems, built by a rotating set of OEMs, come in fixed configurations, ensuring consistent, high-quality performance.

While the DGX H100 sets a high standard, the HGX H100 platform caters to customers seeking customization. It allows OEMs to tailor systems to specific requirements, offering variations in CPU type (including AMD or Arm), Xeon SKU level, memory, storage, and network interfaces. This flexibility makes HGX well suited to diverse, specialized GPU computing applications.

Conclusion

NVIDIA’s HGX baseboards streamline the process of integrating 8 GPUs with advanced NVLink and PCIe switched fabric technologies. This innovation allows NVIDIA’s OEM partners to create tailored solutions, giving NVIDIA the flexibility to price HGX boards with higher margins. The HGX platform is primarily focused on providing a robust foundation for custom configurations.

In contrast, NVIDIA’s DGX approach targets the development of high-value AI clusters and their associated ecosystems. The DGX brand, distinct from the DGX Station, represents NVIDIA’s comprehensive systems solution.

Particularly noteworthy are the NVIDIA HGX A100 and HGX H100 platforms, which drew significant attention after being adopted by leading AI efforts such as OpenAI's ChatGPT. The 8x NVIDIA A100 configuration in particular shows what these platforms can do in powering advanced AI tools. For a deeper dive into the various HGX A100 configurations and their role in AI development, the hardware behind ChatGPT offers insightful perspectives on the power and efficiency of 8x NVIDIA A100 systems.


NVIDIA releases GeForce 471.96 WHQL driver with new game profiles and G-Sync support for several more monitors

New Post has been published on https://v-m-shop.ru/2021/09/01/nvidia-vypustila-drajver-geforce-471-96-whql-s-novymi-igrovymi-profilyami-i-podderzhkoj-g-sync-dlya-eshhyo-neskolkih-monitorov/


31.08.2021 [18:59]

Николай Хижняк

NVIDIA has released a new version of its graphics driver package for GeForce graphics cards, GeForce Game Ready 471.96 WHQL. It adds support for new G-Sync Compatible displays and fixes a number of bugs. The company has also optimized the driver for several new games.

Image source: NVIDIA

The new driver adds G-Sync support for the EVE Spectrum ES07D03, Lenovo G27Q-20, MSI MAG321QR, Philips OLED806, ViewSonic XG250 and Xiaomi O77M8-MAS monitors.

In addition, 24 new game profiles have been added to the GeForce Experience app for optimized gameplay. The games are:

Aliens: Fireteam Elite

Bless Unleashed

Blood of Heroes

Car Mechanic Simulator 2021

Doki Doki Literature Club Plus!

Draw & Guess

Faraday Protocol

Final Fantasy

Final Fantasy III

Ghost Hunters Corp

Golf With Your Friends

GrandChase

Humankind

King’s Bounty II

Madden NFL 22

Mini Motorways

Psychonauts 2

Quake Remastered

SAMURAI WARRIORS 5

Supraland

The Great Ace Attorney Chronicles

The Walking Dead: Onslaught

Yakuza 4 Remastered

Yakuza 5 Remastered

NVIDIA says it has fixed several issues found in previous driver versions:

DPC latency was higher when the color mode was set to 8-bit color compared with 10-bit color;

supported display modes could not be detected for the Samsung Odyssey G9 display;

a blue-screen crash and reboot occurred when two Samsung Odyssey G9 displays running at 240 Hz were connected;

the nvdisplay.Container.exe process constantly wrote data to C:\ProgramData\NVIDIA Corporation\nvtopps\nvtopps.db3;

on Windows 11 laptops in hybrid graphics mode, the GPU frequently woke up while idle;

stability issues with Topaz Denoise AI on Turing/Volta GPUs.

NVIDIA also listed the following known issues:

DeathLoop: the driver crashes when playing with Windows HDR enabled;

after resetting the driver via Express installation while GPU scaling was enabled, the 0.59x upscaling ratio is missing from the scaling options in the NVIDIA Control Panel;

Battlefield V: glitches may appear when playing in DirectX 12 with HDR enabled;

YouTube video playback stops when scrolling down the page;

Tom Clancy's The Division 2 may display graphical artifacts;

Sonic & All-Stars Racing Transformed may crash on tracks where players drive over water.

The GeForce Game Ready 471.96 WHQL graphics driver can be downloaded from the NVIDIA website or through the GeForce Experience app.

Source:

NVIDIA

VMShop


New NVIDIA Ampere/Volta Gaming Graphics GeForce 11/20 vs AMD Vega 2 (2018)

New Post has been published on https://www.ultragamerz.com/new-nvidia-ampere-volta-gaming-graphics-geforce-11-20-vs-amd-vega-2-2018/


Ampere or Volta? It Will Be Revealed at GTC, March 2018


Forget Volta: plans have changed for Nvidia's next gaming graphics cards. NVIDIA just released the Titan V, which is not aimed at gaming but is the most powerful graphics card ever made for PCs. It is based on Volta, built on a 12nm process, and got a lot of headlines. Volta used to be considered Nvidia's main next architecture for gaming as well, but rumors later emerged that Nvidia's next-generation gaming architecture would be GeForce Ampere. Nvidia is expected to reveal Ampere, or a gaming version of Volta, at its GPU Technology Conference in March, much sooner than the second half of 2018. CES 2018 is also on its way, and a new GPU could be discussed or announced there; many believe that will be the case for AMD. Importantly, the two companies' plans depend heavily on each other, and AMD is set to move to the next Vega soon, in early 2018. Nvidia's next generation may be named GeForce 11 or GeForce 20.

Not much is known yet about the Ampere microarchitecture, which is still evolving, but the Volta chips used in Nvidia's supercomputer GPUs are performing very well. Whether the rumors about Ampere as a separate platform, or about a gaming version of Volta, turn out to be true or not, anything better than Volta will be good. Ampere is expected to succeed Pascal, the architecture behind Nvidia's GeForce 10 series graphics cards. Rumors aside, Pascal's run is effectively over: no major graphics cards have been released lately except the Titan X Star Wars edition and the GTX 1070 Ti. We had never heard anything about Ampere from Nvidia before, but we have also seen Nvidia deny that Volta will be a gaming platform. AMD is working behind the scenes to bring its next-gen 7nm Navi GPU architecture into play as soon as it can. Until recently, Nvidia has hardly been under pressure from AMD to deliver significantly more powerful GPUs. If AMD moves to the next generation of RX Vega in early 2018, you should expect it to beat Nvidia's current Pascal-based GeForce 10 series cards.

Despite all this, NVIDIA's CEO has said there are no plans to bring Volta to the gaming market yet:

“Volta for gaming, we haven’t announced anything. And all I can say is that our pipeline is filled with some exciting new toys for the gamers, and we have some really exciting new technology to offer them in the pipeline. But for the holiday season for the foreseeable future, I think Pascal is just unbeatable. It’s just the best thing out there. And everybody who’s looking forward to playing Call of Duty or Destiny 2, if they don’t already have one, should run out and get themselves a Pascal.” Nvidia CEO Jensen Huang

THE POWER OF GTC 2018 SILICON VALLEY MARCH 26-29, 2018

GTC is the largest and most important event of the year for GPU developers around the world. It is held in several locations, and in 2018 it comes to Silicon Valley. You can explore GTC and the global GTC event series for valuable training and a showcase of the most vital work in the computing industry today, and catch up on the latest breakthroughs in everything from artificial intelligence and deep learning to healthcare, virtual reality, accelerated analytics, and self-driving cars. "If GPUs are time machines, then GTC is where you come see the future." (Jensen Huang, NVIDIA). Hundreds of additional GTC 2018 speakers will be added.


Rumor: NVIDIA GTX 2080, 2070 Ampere Cards Launching March 26-29 at GTC 2018

Nvidia is expected to introduce its new GTX 2080 and GTX 2070 graphics cards, which are eagerly awaited by gamers as well as by businesses and miners who need high graphics performance, at the GPU Technology Conference (GTC) at the end of March. Gamers are enthusiastically awaiting the new cards, which are expected to be based on the 12 nm Ampere graphics architecture.

Although Nvidia has not officially announced it, many reports over the last few months indicate that the rumored architecture is indeed called Ampere. Nvidia, which is expected to switch directly to Ampere for gaming cards without using Volta, has not yet explained Ampere's details. Whether Ampere is an entirely new architecture or a developed version of Volta remains an open question.

"Ampere" for gaming, "Turing" for artificial intelligence

Ampere, which will use TSMC's new 12 nm process, comes with Samsung's new 16 GB GDDR6 memory. The first Ampere GPU is expected to take the place of GP102 (GTX 1080 Ti) in performance, while taking the place of GP104 (GTX 1080/1070) in chip size and market positioning. In other words, you would get GTX 1080 Ti performance for the price of a GTX 1080.

Apart from this, we also know that the company is working on an architecture called "Turing". Designed for artificial intelligence and machine learning, it is named after the renowned computer scientist and mathematician Alan Turing. Nvidia, which previously introduced new architectures by making minor changes to existing ones, seems to have given up this habit: it appears to have designed two different architectures for two different markets. Of course, the names mentioned in the title are not final; "2080" and "2070" have not been officially confirmed yet. We will find out whether Nvidia names its new graphics cards 20xx or 11xx at GTC, which runs March 26-29.


New Nintendo Switch 2 release date, specs, leaks and more

Even with the PS5 and Xbox Series X finally out, anticipation for a Nintendo Switch 2 (or Nintendo Switch Pro) is still red-hot. According to recent reports, the next Nintendo Switch could be a beefed-up version of Nintendo's beloved hybrid console with new features and 4K gaming support.

Nintendo's next Switch likely won't deliver things like ray tracing or 8K gaming, but a more powerful Switch could help the company better compete with the latest next-gen consoles. And some rumors have pegged the Switch 2 as sporting some truly wild features, including potential dual-screen support.

Nintendo hasn't confirmed the existence of a new Nintendo Switch model, but the latest rumors indicate that a new Switch is all but inevitable. Here's everything we know about the New Nintendo Switch 2, including its possible release date, specs and features.

Latest Nintendo Switch 2 news (November 29)

The new GPD Win 3 gives us a taste of how a Switch Pro could work, with smooth 60 fps gaming on the go.

Taiwan's Economic Daily News reports that Nintendo is including a Mini-LED display on the Switch 2, and is gearing up to launch the console in 2021.

New Nintendo Switch 2 release date

Citing a Taipei-based report out of the Economic Daily News, The Edge Markets reports that a new version of Nintendo's popular hybrid console could arrive by early 2021. That was quickly followed up by a Bloomberg report that suggests that the new Switch could arrive next year complete with 4K support and an expansive new games lineup.

A subsequent Bloomberg report claims that developers are being asked to make their Nintendo Switch games playable in 4K, adding further weight to the possibility of an upcoming hardware upgrade.

Economic Daily News later reported that Nintendo was still planning a 2021 launch, and has been visiting companies in Taiwan to obtain displays for the new console.

Here's a look at when every version of the Switch has launched so far. Looking at these dates, it's possible we could see an updated Switch by 2021 to coincide with the system's four-year anniversary.

Nintendo Switch: March 3, 2017

Nintendo Switch (upgraded battery): August 2019

Nintendo Switch Lite: September 20, 2019

Nintendo Switch 2/Nintendo Switch Pro: 2021 (rumored)

New Nintendo Switch 2 price

While there's no official price set for Nintendo's next Switch, it seems safe to assume it'll cost more than the $299 base model — and certainly more than the $199 Nintendo Switch Lite. In an interview with Gamesindustry.biz, Japan-based games consultant Serkan Toto predicts that the Switch Pro will cost around $399.

New Nintendo Switch 2 specs

The Switch Pro's rumored specs have varied based on different reports, with some claiming that the next Switch will be a modest upgrade and others hinting at a significant power boost for Nintendo's console. A sketchy, now-deleted 4chan post (via Inverse) suggests some major changes, including a custom Nvidia Tegra Xavier processor, a 64GB SSD, 4K video support, and two USB-C ports. This post also claimed that the Switch Pro would be a TV-only console, and won't be playable in portable mode.

Economic Daily News also claims Nintendo is set to include a Mini-LED display in the Switch 2, which is a big leap up from the 720p LCD display included in the original. Utilising Mini-LED tech would mean better responsiveness, contrast, and energy efficiency, all of which would be more than welcome on a new Switch.

However, a forum post on Korean website Clien (via TechRadar) suggests that the next Switch might not be a huge generational leap. The poster claims that Nintendo is working with Nvidia on a custom Tegra processor based on Nvidia's Volta architecture, and won't include the Tegra X1+ chip that many had expected the console to feature. As a result, 4K support may not be feasible for the Switch Pro.

The current Nintendo Switch packs a custom Nvidia Tegra X1 processor, a 6.2-inch, 720p display and 32GB of storage. In August 2019, the console saw a minor refresh that raised the estimated battery life from 2.5 to 6.5 hours to 4.5 to 9 hours. In our own Switch battery tests, we found that the new model lasts nearly twice as long in games such as Super Smash Bros. Ultimate.

The Nintendo Switch 2 may also feature Nvidia DLSS 2.0 support, according to an Nvidia job ad spotted by Wccftech. The posting seeks a software engineer on the Tegra team to work on "next-generation graphics" and mentions gaming consoles by name. Since the Switch is the only Tegra-powered console out there, it's possible that Nvidia is working on a chip for a new model. DLSS (Deep Learning Super Sampling) is an AI technology designed to boost framerates in games, which could lead to much smoother performance if it makes its way to the Switch 2.
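DLSS gets its speedup by rendering each frame at a lower internal resolution and using a neural network to upscale it to the output resolution, so the GPU shades far fewer pixels. The Python sketch below estimates that internal resolution and the resulting pixel savings. The per-axis scale factors for each mode are the commonly cited DLSS 2.x values and are an assumption here; the report above does not say which modes, if any, a new Switch would use.

```python
# Assumed per-axis render-scale factors for DLSS 2.x quality modes.
DLSS_SCALE = {
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
    "ultra_performance": 1 / 3,
}


def internal_resolution(out_w, out_h, mode):
    """Resolution the GPU actually renders before DLSS upscales to (out_w, out_h)."""
    scale = DLSS_SCALE[mode]
    return round(out_w * scale), round(out_h * scale)


def pixel_savings(out_w, out_h, mode):
    """How many times fewer pixels are shaded than at native output resolution."""
    w, h = internal_resolution(out_w, out_h, mode)
    return (out_w * out_h) / (w * h)


print(internal_resolution(3840, 2160, "performance"))  # (1920, 1080)
print(pixel_savings(3840, 2160, "performance"))        # 4.0
```

For a 4K TV output in Performance mode, the console would shade a 1920 x 1080 frame, a quarter of the pixels, which is why DLSS could be attractive for a power-constrained hybrid device.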

But improved specs could be just the tip of the iceberg for the Nintendo Switch 2. Code found in the Switch's April 2020 firmware update reveals what could be support for a dual-screen console, suggesting that the next Nintendo Switch may be a two-display device.

This wouldn't be a first for Nintendo given the company's popular Nintendo DS and 3DS handhelds, but we'd be curious to see how dual-screen support would play into the Switch ecosystem. Perhaps you'll be able to play in handheld mode while beaming certain content to your TV, similar to how the Wii U operated.

There are also some recent mobile GPU breakthroughs that point to a promising future for upcoming handheld consoles such as the Switch 2. Samsung and AMD are expected to be working on a new Exynos 1000 chip with AMD graphics built in, which could give the Switch 2 a massive power boost if Nintendo decides to opt for that chip over the aging Tegra X1 found in the current Switch.

One of the most recent notable mentions of the Nintendo Switch 2 comes from the developers of Eiyuden Chronicle, a throwback role-playing game that's currently on Kickstarter. As spotted by Nintendo Life, Rabbit & Bear Studios lists "Nintendo's next generation console" as one of the platforms it plans to release the game on. While the studio was transparent about having no inside knowledge of Nintendo's plans, it noted that porting the title to the relatively underpowered Switch while building it for next-gen platforms would be an expensive endeavor.

New Nintendo Switch 2 features

If Nintendo does make another Switch-like console, it seems safe to assume that it'll once again let you play in TV, tabletop and handheld modes. But some recent Nintendo patents hint at other interesting features that could either make their way to the new console or arrive as a complementary device.

Nintendo recently patented a unique health-tracking device, which would be able to track your sleep, monitor your mood via microphones and sensors, and even change the odor of a room. Interestingly, the patented device has its own dock (much like the Switch), and seems designed to work with health-related games a la Ring Fit Adventure. It's too early to tell whether such a device will even come to market, but it will be interesting to see if Nintendo ups its fitness-tracking game in time for the next Switch console.

What we want from the Nintendo Switch 2

If the Nintendo Switch 2 or Nintendo Switch Pro does become a reality, there are a handful of features we'd love to see that could make it a compelling high-end option for Nintendo fans.

1080p handheld gameplay. The Switch's 6.2-inch screen is limited to 720p in handheld mode, meaning you can't experience titles like Super Mario Odyssey and Pokémon Sword and Shield in their full glory. The ability to game on the go in 1080p would be a big reason to upgrade to a Switch Pro, and if the console can muster 60fps at 1080p, even better.

4K or 1440p support for TV mode. Considering that 4K consoles are out there and that the PS5 and Xbox Series X will support 8K content, it'd be nice to see the Switch Pro get a resolution bump. The new console would likely need a beefy new dock to support 4K or even 2560 x 1440 resolutions, but just imagine how glorious it would be to play Breath of the Wild in 4K.

Better ergonomics. We recently got our hands on Alienware's Concept UFO, which is a Switch-like portable gaming PC that features standard controller-sized buttons, triggers and grips. If Nintendo can devise new Joy-Cons that are bigger and more ergonomic without being too massive, the Switch Pro could be the most comfortable way to play on the go.

True Bluetooth support. While the current Switch features Bluetooth 4.1 for connecting wirelessly to Joy-Cons and Pro Controllers, you can't pair other Bluetooth gadgets (like headphones) to the console without an adapter. Nintendo, I just want to be able to pair my AirPods to my Switch without any extra fuss — make it happen!

Why we want a Nintendo Switch 2

The Nintendo Switch is an absolute joy of a system backed by some of the finest games Nintendo has ever released. But its hardware is starting to show its limitations more than three years after release, particularly when it comes to ports of major third-party games.

Kotaku's Ethan Gach recently got his hands on the new Switch port of The Outer Worlds, which reportedly "looks so bad on Switch I'm tempted to tell even people who have no other means of playing it to stay away." Based on Gach's screenshots, the port looks incredibly blurry, and suffers from framerate drops. Gamers have had similar issues with ports such as Pillars of Eternity and Wasteland 2.

While Nintendo games such as Breath of the Wild and Animal Crossing: New Horizons are optimized to look and run great on Switch, the console seems to be running into a AAA games problem. That could prove especially challenging for Nintendo, given a new crop of third-party games built for PS5 and Xbox Series X that may be more graphically demanding than ever.

Nintendo doesn't necessarily need a system as powerful as Sony's and Microsoft's upcoming consoles (the Switch has done just fine against the PS4 and Xbox One), but a significant power boost could make the company's hybrid console even more appealing to fans of big third-party franchises.

Should I wait for a Nintendo Switch 2?

Considering that the Switch 2 is still a rumor at this point, we wouldn't count on it arriving anytime soon. The Nintendo Switch and Nintendo Switch Lite are at the top of our list of the best handheld gaming consoles, and we expect them to continue to be supported for years to come. So if you've yet to jump on the Switch bandwagon, you can do so with confidence that it won't be replaced in the near future.

Plus, if there is indeed a Switch 2 or Switch Pro, chances are it'll work with your existing Switch game library. Nintendo has a history of supporting its handhelds through multiple iterations, with the Nintendo DS/Nintendo 3DS family supporting the same game library for more than a decade. Given how popular the Switch is, we expect Nintendo to take a similar approach for its current console.


Even Now, The Bullish Thesis for Nvidia's Future is Breathtaking


Date Published: 1-28-2018 Written by: Ophir Gottlieb

This is a snippet from a CML Pro dossier.

LEDE

It's time for an update, yet again, on the tech gem that has its sights set on powering all of technology. In this review we discuss several segments with total addressable markets topping $1 trillion. It is this forward-looking view of the world that justifies Nvidia's market cap and points to the potential for serious growth.

CES

Nvidia was added to Top Picks on 2-Jan-16 for $32.25. As of this writing it is trading at $236.66, up 591.6%. While the keynote at CES ran a full 90 minutes, just the first 2 minutes and 30 seconds do the trick as a primer. Here is that video, below:

youtube

BREAKOUT SESSION

Even more revealing was CEO Jensen Huang's breakout session, which is covered in this article posted by VentureBeat.

STORY

But apart from all the videos and forecasts, there is more news, real news, that drives the bullish thesis for Nvidia forward. Let's talk about some details.

CARS

We can start with cars with self-driving features. While this is a nascent industry in terms of revenue, it is booming with respect to research and planned infrastructure. Here is a forecast for the self-driving feature market:

That's 134% compound annual growth for the six years from 2015 through 2020, and deep learning is the secret weapon for self-driving car algorithms. While dozens of manufacturers of personal cars and trucks, and of commercial trucks, are readying themselves for a fierce battle, Nvidia has a different approach: its strategy in this segment is to become part of the internal workings on which all manufacturers depend. That sounded a little bold when we first covered Nvidia back in 2015; today it is simply a matter of fact. First, the established players in this manufacturing world already rely on Nvidia: companies like Audi, Toyota, Mercedes-Benz, Volvo and Tesla in automotive, and companies like PACCAR, a leading global truck manufacturer with over $17 billion in sales and 23,000 employees. Here is a wonderful video that hits the high points of the commercial trucking world, and even has some cool musical scoring:

youtube

Beyond that, Nvidia has a partnership with Bosch, the world's largest automotive supplier, with total global sales of $73.1 billion in 2016. We also now know that 145 automotive startups around the world have chosen NVIDIA DRIVE. In total, 225 companies are currently developing with DRIVE PX. But all of this is still, unbelievably, just the tip of the iceberg. When people think of AI in the car, they tend to imagine level 4 and level 5 driving capabilities. At level 4, the vehicle can handle most driving scenarios it encounters on its own; level 5, the highest, means fully autonomous driving. But the AI advances inside the car shouldn't go unnoticed. Here is a snippet from TheStreet.com:

Mercedes-Benz unveiled its new (Mercedes Benz User Experience) MBUX infotainment system. The AI-powered system will be available in the A-Class starting next month. Nvidia has already announced a new partnership with Volkswagen (VLKAY) for its Drive IX, Nvidia's intelligence experience toolkit. In a nutshell, Nvidia's hardware gives its customers the tools necessary to build their own AI-based applications.

The point here is that it's not just hardware, but also software. Danny Shapiro, senior director of automotive at Nvidia, recently said at CES:

Software is going to define so much of the user experience, the driving experience and it will continue to evolve and get better and better. New applications and new features [will be] added to your car even after it's in your driveway.

The expansiveness of AI as it pertains to the automobile, beyond self-driving, is breathtaking. A final word from Shapiro:

AI is really at the heart of everything that's happening in the transportation space.

WHY THIS IS IMPORTANT

We could go on and on with links to breathtaking product announcements on Nvidia's site, but it is a 40,000-foot view that we are after. While all of this sounds like a massive opportunity, and it is, the boom has barely reached Nvidia's business so far. Here is Nvidia's revenue from the automotive sector:

That's just $487 million, for a company with over $9 billion in sales. But the total addressable market (TAM), at least in Nvidia's eyes, is quite substantial:

That is $8 billion by 2025, heading to $100 billion in the future. So while self-driving cars and the various other aspects of smart cars are making headlines, they have yet to hit Nvidia's bottom line. We can marvel at the company's revenue and earnings growth, which has been staggering, but this one area is at such an early stage that it is, practically speaking, a rounding error today.

STEPPING EVEN FURTHER BACK

We focused on the automotive segment to make a point, not just about Nvidia's proliferation in one segment and its potential TAM, but about the very idea of what the company is centered on. It's not about being the face of a product; it's about being the guts of every product. Wall Street was slow to pick up on Nvidia's potential early on, but that is no longer the case. Recently, Bank of America Merrill Lynch raised its price target to $275. What is starting to resonate is the recognition that Nvidia has several business lines that are substantial companies in their own right. We covered automotive, but the data center business is also explosive. A misguided MarketWatch article from August 11, 2017, was headlined "Nvidia stock could pause as server growth slows down." Here is a quick snippet from that article:

While [the graphics chips designed for data centers] segment soared 175% from the same quarter a year ago, it grew only 2% on a sequential basis to $416 million.

Of course, in the earnings report that followed, Wall Street was in fact surprised, and the data center business is large enough, right now, to have a substantial impact on revenue. Here is what CNBC reported:

The company reported $501 million in datacenter revenue, which includes sales of GPUs to cloud providers like Amazon Web Services, beating analyst estimates of $461 million and marking a 20 percent increase from last quarter.

For a chart, we turned to some cool graphics from TechCrunch, with our own emphasis added on top:

Taking a step back, autonomous anything (cars, trucks, drones, robots) and many other trends are still small, but here is what we can expect for the world of public cloud computing platforms:

The worldwide public cloud market is forecast to rise from $154 billion this year (2017) to nearly half a trillion dollars by 2026. That market has three dominant providers:

Amazon, Microsoft, and Google purchase from Nvidia at scale, and this business line is a winner right now. Tencent, Alibaba, Baidu, Facebook and Oracle also rely on Nvidia chips for their clouds. In fact, on 9-27-2017 we penned "Nvidia Sign Massive Deal with China's Kingpins," relating specifically to the cloud. So, what changed? It turns out that Nvidia's newest GPU architecture, Volta, has done what the company claimed it would do. This is from Nvidia:

NVIDIA Volta™ is the new driving force behind artificial intelligence. Volta will fuel breakthroughs in every industry. Humanity's moonshots like eradicating cancer, intelligent customer experiences, and self-driving vehicles are within reach of this next era of AI.

And then a comment from Goldman Sachs right after the earnings call:

The ramp of Volta seems to be tracking well, and more importantly, has significant runway ahead, in our view, as a broader set of customers adopt the new architecture in the coming quarters.