#marianna spring

Text

🚢 A Week of Travels and Literary Journeys | Author Diary - September 22, 2024 📚🌍

🚢 Cruise Adventures: This week, I took a break from writing to embark on a cruise that took me to some of Europe’s most storied destinations, including Pompeii, Rome, and Girona. Each city offered its own unique blend of history, culture, and breathtaking sights. From the ancient ruins of Pompeii to the medieval charm of Girona, this journey was a treasure trove of inspiration and a wonderful…

#Among the Trolls#author diary#Book recommendations#creative journey#cruise travels#cultural exploration#European cruise#exploring ancient cities#Fake Heroes#Girona sights#historical destinations#historical sites visit#Marianna Spring#Otto English#reading while traveling#Rome tourism#travel and literature#travel inspiration#visiting Pompeii

0 notes

Text

Marianna's Background

New HPANWO Voice article: https://hpanwo-voice.blogspot.com/2023/09/mariannas-background.html

0 notes

Text

In a modern setting, what jobs do you think the kids of Spring Awakening would have as adults? Like something tells me Hanschen would be a finance bro or something.

#i wanna know what you guys think#broadway#musical theatre#spring awakening#deaf west spring awakening#dwsa#dwsa cast#broadway musicals#spring awakening broadway#hanschen spring awakening#spring awakening musical#melchior gabor#moritz stiefel#wendla bergmann#ilse neumann#hanschen rilow#ernst robel#otto lammermeier#georg zirschnitz#martha bessell#marianna wheelan#thea spring awakening#melitta dwsa

18 notes

Photo

Marianna Polasekova for Sharon Wauchob, Spring 2022

9 notes

Text

redownloaded FEH so i could get that free stuff for engage and immediately got overwhelmed by all the banners what is going on here

#i did all my free pulls and i got chrimmis dorothea :)#also picked dancer marianna super byleth girl and spring lucina or whatever shes supposed to be#are they good? i dunno. but i like them

2 notes

Text

Cosplayer: Marianna Traversi

0 notes

Text

Remi Martinu Beaudard, Captain. * fall 1761, Martinu, France -

† summer 1771, Lost at Sea

Early life

Born and raised at Chateau Martinu near Bordeaux, France, as the only son and heir, Remi had a keen interest in the stars from early boyhood. He could often be found on the castle grounds at night with an atlas of the sky and a lantern, sometimes with his younger sister, oftentimes with his like-minded friend Bernard Houde, the stable boy. A telescope, gifted by his grandmother, decided his future: he would live his life with the stars, as either an astronomer or a sea captain. And so would Bernard. In his youth this led to many elaborate discussions between him and his parents as to why, if the universe was so large and a little human so frail, being born into a certain family or trade should define one's opportunities to learn and grow. His father, though secretly convinced by his son, kept opposing him in those discussions, to nurture and challenge his son's sound mind and budding abilities as a leader. In the end he pressed for a career in the French Navy for both Remi and Bernard. A tragic accident in the stables resulted in only Remi reporting for duty with Admiral D'Aubrey as a beginning midshipman, at the ports of Bordeaux, spring 1765.

Career

Over the next seasons, Remi rose rapidly through the ranks of the French Navy, earning praise and trust along the way. Outwardly his star was climbing to the heavens; inwardly it was sinking into the gutter. As his authority grew, so did his unease with the strict hierarchy and disciplinary measures asked of him to keep his ship of the line correctly afloat. A firm believer in equal talks and second chances, Remi felt himself increasingly morally unable to comply with the rules of the Navy, not even in times of peace. At the ceremony honouring his promotion to Captain, he resigned, leaving his superiors speechless after his thorough appeal. Freed in spirit, though also troubled by the calling off of his engagement to Lady Aurélie de Coust de Bonnais, he embarked on a new journey as a Captain in the Merchant Navy. He was first assigned to the Aurore, heading to Scandinavia, and in winter 1766 took on a longer commitment with the Marianna, travelling back and forth between France and the New World. Content to select his own crew and management style, Captain Remi Beaudard was known as a calm, trustworthy and punctual commander, albeit "a little soft at heart".

Death

Remi Martinu Beaudard, born fall 1761, was declared dead summer 1771, lost at sea three years earlier. His death was announced in all the newspapers by family, employers, officers and peers. He received obituaries at both the French Admiralty and the Royal Court, and was long talked about at smaller courts all over the country, not least that of De Bonnais. Mourned by the whole of the extensive Beaudard family, the abrupt and cruel end of his promising life was felt the hardest by those of Chateau Martinu: his now elderly father Remi Martinu Beaudard sr., and his sister Amande Séguret de Martinu. To this day, she and her descendants commemorate Captain Beaudard with a yearly service at his memorial statue in the private graveyard of Chateau Martinu.

#this profile will be added on once this life on Northeney is fulfilled#Remi Beaudard • founder#sim profile

10 notes

Text

"'It stains your brain': How social media algorithms show violence to boys." Make no mistake, boys and young men were disgusting before they had access to smartphones, but this and parents not willing to admit there's an issue are creating a whole new problem.

Cai says violent and disturbing material appeared on his feeds "out of nowhere"

By Marianna Spring, BBC Panorama

It was 2022 and Cai, then 16, was scrolling on his phone. He says one of the first videos he saw on his social media feeds was of a cute dog. But then, it all took a turn.

He says “out of nowhere” he was recommended videos of someone being hit by a car, a monologue from an influencer sharing misogynistic views, and clips of violent fights. He found himself asking - why me?

Over in Dublin, Andrew Kaung was working as an analyst on user safety at TikTok, a role he held for 19 months from December 2020 to June 2022.

He says he and a colleague decided to examine what users in the UK were being recommended by the app’s algorithms, including some 16-year-olds. Not long before, he had worked for rival company Meta, which owns Instagram - another of the sites Cai uses.

When Andrew looked at the TikTok content, he was alarmed to find how some teenage boys were being shown posts featuring violence and pornography, and promoting misogynistic views, he tells BBC Panorama. He says, in general, teenage girls were recommended very different content based on their interests.

TikTok and other social media companies use AI tools to remove the vast majority of harmful content and to flag other content for review by human moderators, regardless of the number of views they have had. But the AI tools cannot identify everything.

Andrew Kaung says that during the time he worked at TikTok, all videos that were not removed or flagged to human moderators by AI - or reported by other users to moderators - would only then be reviewed again manually if they reached a certain threshold.

He says at one point this was set to 10,000 views or more. He feared this meant some younger users were being exposed to harmful videos. Most major social media companies allow people aged 13 or above to sign up.

TikTok says 99% of content it removes for violating its rules is taken down by AI or human moderators before it reaches 10,000 views. It also says it undertakes proactive investigations on videos with fewer than this number of views.

Andrew Kaung says he raised concerns that teenage boys were being pushed violent, misogynistic content

When he worked at Meta between 2019 and December 2020, Andrew Kaung says there was a different problem. He says that, while the majority of videos were removed or flagged to moderators by AI tools, the site relied on users to report other videos once they had already seen them.

He says he raised concerns while at both companies, but was met mainly with inaction because, he says, of fears about the amount of work involved or the cost. He says subsequently some improvements were made at TikTok and Meta, but he says younger users, such as Cai, were left at risk in the meantime.

Several former employees from the social media companies have told the BBC Andrew Kaung’s concerns were consistent with their own knowledge and experience.

Algorithms from all the major social media companies have been recommending harmful content to children, even if unintentionally, UK regulator Ofcom tells the BBC.

“Companies have been turning a blind eye and have been treating children as they treat adults,” says Almudena Lara, Ofcom's online safety policy development director.

'My friend needed a reality check'

TikTok told the BBC it has “industry-leading” safety settings for teens and employs more than 40,000 people working to keep users safe. It said this year alone it expects to invest “more than $2bn (£1.5bn) on safety”, and of the content it removes for breaking its rules it finds 98% proactively.

Meta, which owns Instagram and Facebook, says it has more than 50 different tools, resources and features to give teens “positive and age-appropriate experiences”.

Cai told the BBC he tried to use one of Instagram’s tools and a similar one on TikTok to say he was not interested in violent or misogynistic content - but he says he continued to be recommended it.

He is interested in UFC - the Ultimate Fighting Championship. He also found himself watching videos from controversial influencers when they were sent his way, but he says he did not want to be recommended this more extreme content.

“You get the picture in your head and you can't get it out. [It] stains your brain. And so you think about it for the rest of the day,” he says.

Girls he knows who are the same age have been recommended videos about topics such as music and make-up rather than violence, he says.

Cai says one of his friends became drawn into content from a controversial influencer

Meanwhile Cai, now 18, says he is still being pushed violent and misogynistic content on both Instagram and TikTok.

When we scroll through his Instagram Reels, they include an image making light of domestic violence. It shows two characters side by side, one of whom has bruises, with the caption: “My Love Language”. Another shows a person being run over by a lorry.

Cai says he has noticed that videos with millions of likes can be persuasive to other young men his age.

For example, he says one of his friends became drawn into content from a controversial influencer - and started to adopt misogynistic views.

His friend “took it too far”, Cai says. “He started saying things about women. It’s like you have to give your friend a reality check.”

Cai says he has commented on posts to say that he doesn’t like them, and when he has accidentally liked videos, he has tried to undo it, hoping it will reset the algorithms. But he says he has ended up with more videos taking over his feeds.

Ofcom says social media companies recommend harmful content to children, even if unintentionally

So, how do TikTok’s algorithms actually work?

According to Andrew Kaung, the algorithms' fuel is engagement, regardless of whether the engagement is positive or negative. That could explain in part why Cai’s efforts to manipulate the algorithms weren’t working.

The first step for users is to specify some likes and interests when they sign up. Andrew says some of the content initially served up by the algorithms to, say, a 16-year-old, is based on the preferences they give and the preferences of other users of a similar age in a similar location.

According to TikTok, the algorithms are not informed by a user’s gender. But Andrew says the interests teenagers express when they sign up often have the effect of dividing them up along gender lines.

The former TikTok employee says some 16-year-old boys could be exposed to violent content “right away”, because other teenage users with similar preferences have expressed an interest in this type of content - even if that just means spending more time on a video that grabs their attention for that little bit longer.

The interests indicated by many teenage girls in profiles he examined - “pop singers, songs, make-up” - meant they were not recommended this violent content, he says.

He says the algorithms use “reinforcement learning” - a method where AI systems learn by trial and error - and train themselves to detect behaviour towards different videos.

Andrew Kaung says they are designed to maximise engagement by showing you videos they expect you to spend longer watching, comment on, or like - all to keep you coming back for more.

The algorithm recommending content to TikTok's “For You Page”, he says, does not always differentiate between harmful and non-harmful content.

According to Andrew, one of the problems he identified when he worked at TikTok was that the teams involved in training and coding that algorithm did not always know the exact nature of the videos it was recommending.

“They see the number of viewers, the age, the trend, that sort of very abstract data. They wouldn't necessarily be actually exposed to the content,” the former TikTok analyst tells me.

That was why, in 2022, he and a colleague decided to take a look at what kinds of videos were being recommended to a range of users, including some 16-year-olds.

He says they were concerned about violent and harmful content being served to some teenagers, and proposed to TikTok that it should update its moderation system.

They wanted TikTok to clearly label videos so everyone working there could see why they were harmful - extreme violence, abuse, pornography and so on - and to hire more moderators who specialised in these different areas. Andrew says their suggestions were rejected at that time.

TikTok says it had specialist moderators at the time and, as the platform has grown, it has continued to hire more. It also said it separated out different types of harmful content - into what it calls queues - for moderators.

'Asking a tiger not to eat you'

Andrew Kaung says that from the inside of TikTok and Meta it felt really difficult to make the changes he thought were necessary.

“We are asking a private company whose interest is to promote their products to moderate themselves, which is like asking a tiger not to eat you,” he says.

He also says he thinks children’s and teenagers’ lives would be better if they stopped using their smartphones.

But for Cai, banning phones or social media for teenagers is not the solution. His phone is integral to his life - a really important way of chatting to friends, navigating when he is out and about, and paying for stuff.

Instead, he wants the social media companies to listen more to what teenagers don’t want to see. He wants the firms to make the tools that let users indicate their preferences more effective.

“I feel like social media companies don't respect your opinion, as long as it makes them money,” Cai tells me.

In the UK, a new law will force social media firms to verify children’s ages and stop the sites recommending porn or other harmful content to young people. UK media regulator Ofcom is in charge of enforcing it.

Almudena Lara, Ofcom's online safety policy development director, says that while harmful content that predominantly affects young women - such as videos promoting eating disorders and self-harm - has rightly been in the spotlight, the algorithmic pathways driving hate and violence to mainly teenage boys and young men have received less attention.

“It tends to be a minority of [children] that get exposed to the most harmful content. But we know, however, that once you are exposed to that harmful content, it becomes unavoidable,” says Ms Lara.

Ofcom says it can fine companies and could bring criminal prosecutions if they do not do enough, but the measures will not come into force until 2025.

TikTok says it uses “innovative technology” and provides “industry-leading” safety and privacy settings for teens, including systems to block content that may not be suitable, and that it does not allow extreme violence or misogyny.

Meta, which owns Instagram and Facebook, says it has more than “50 different tools, resources and features” to give teens “positive and age-appropriate experiences”. According to Meta, it seeks feedback from its own teams and potential policy changes go through a robust process.

#Teenage boys and violent social media#Teenage boys and misogynistic social media#Algorithms#Teenage girls and social posts about makeup#TikTok#Meta

18 notes

Text

Marianna Petrovskaia @ Issey Miyake Spring/Summer 1995 Ready-to-Wear

17 notes

Text

Marianna Senchina Kiev Spring 2017

7 notes

Text

Marianna in Conspiracyland

New HPANWO Free article: https://benemlynjones.substack.com/p/marianna-in-conspiracyland

0 notes

Text

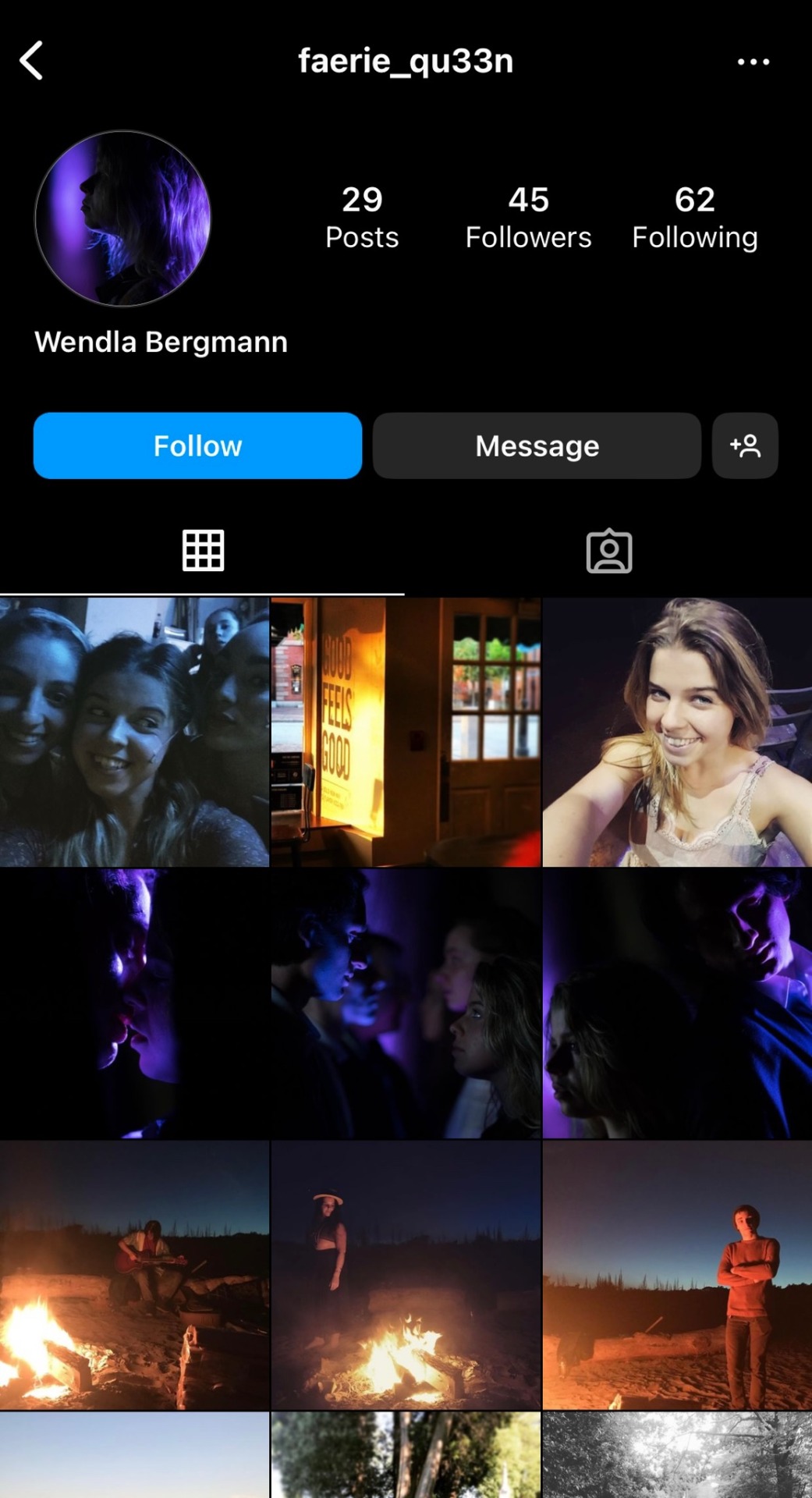

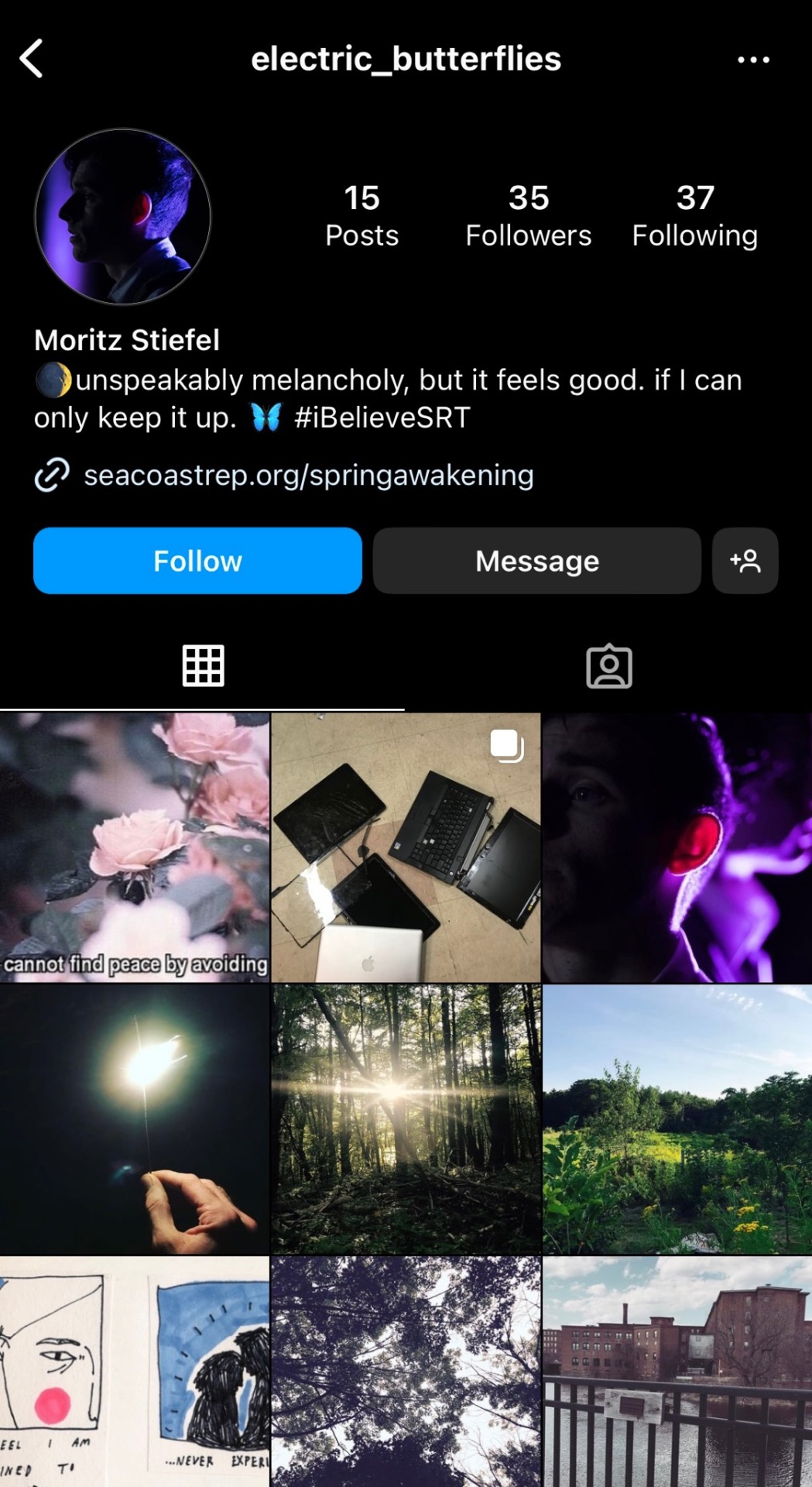

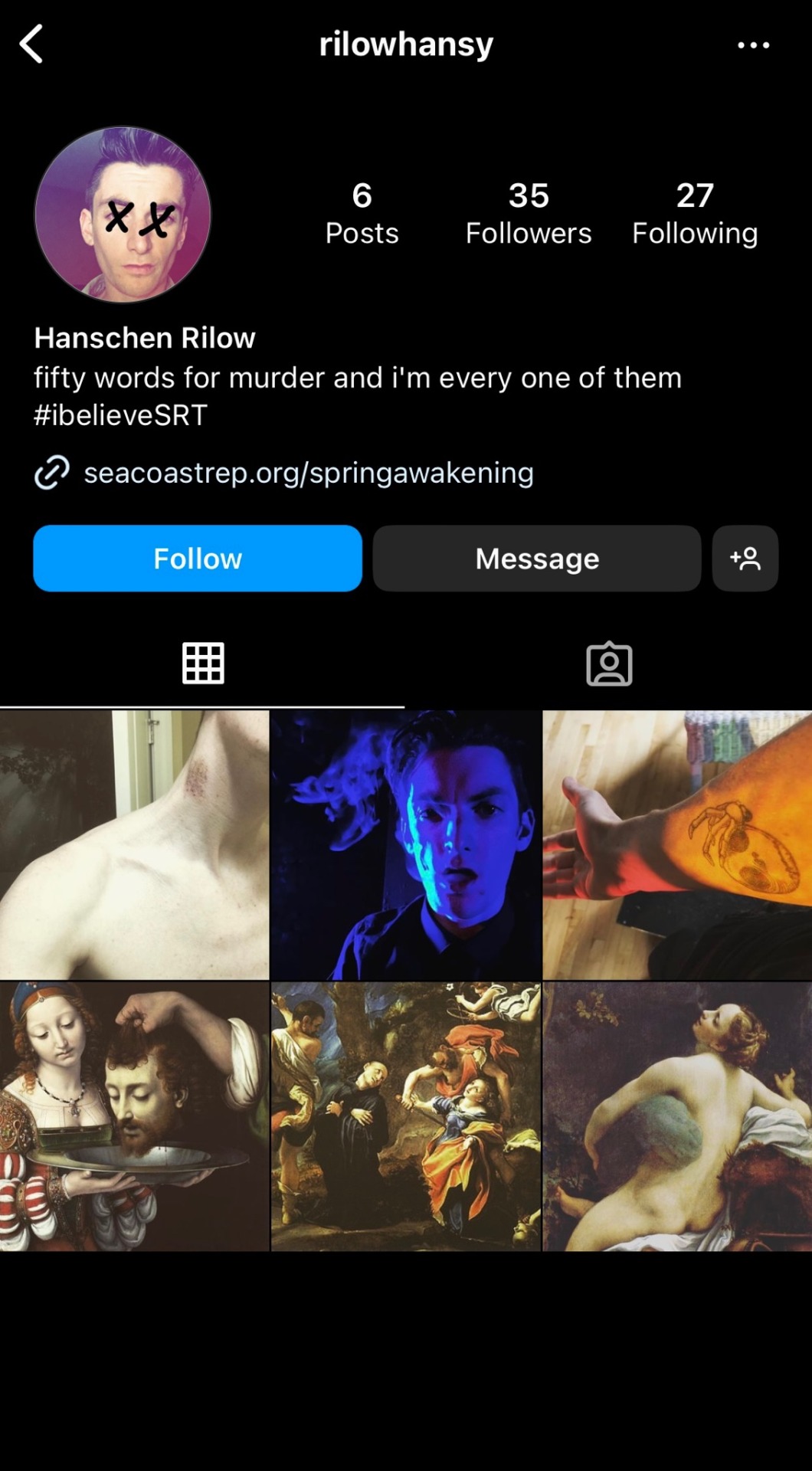

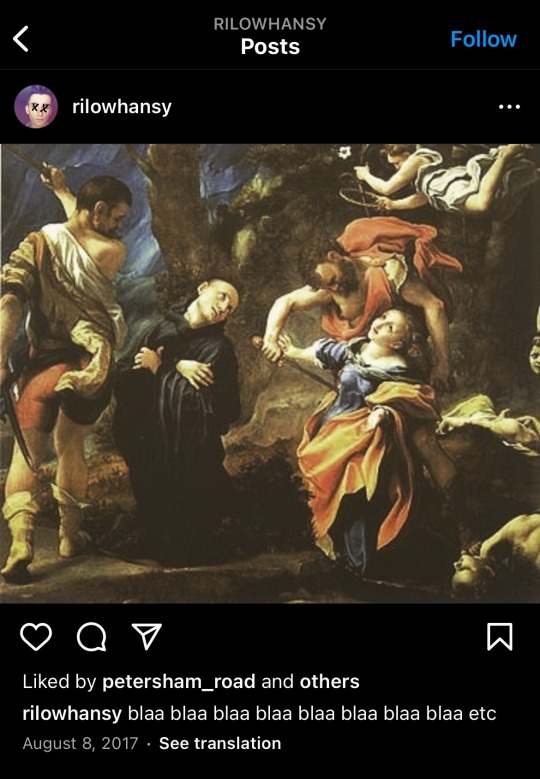

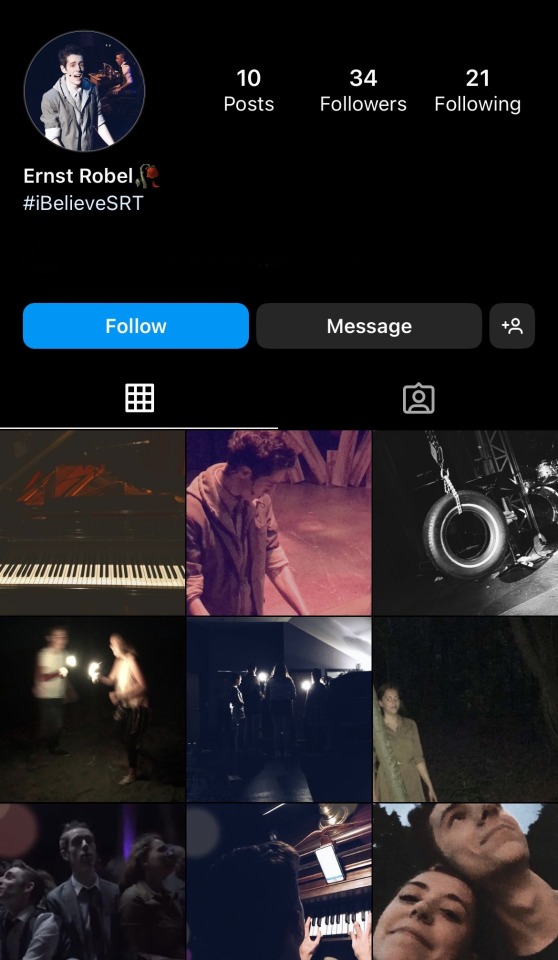

Interesting thing I just found: So I just found a bunch of Instagram accounts made by a regional cast of Spring Awakening from a few years ago (the same production that Sing Street’s Brendan C. Callahan was in as Ernst) and the accounts are basically the cast role playing as their characters. They made posts from their characters’ perspectives, making their version of Spring Awakening essentially a modern retelling. I’m not sure if it was used for the show or if it was just a fun exercise that the director thought of, but either way I love it. I think it’s a great way to immerse yourself and others in the show, especially a timeless show like Spring Awakening. Here are some pictures of the accounts. It’s really interesting to see what each actor posts as their character, interpreting what they think their character would post about. (You can also check them out for yourself on Instagram!)

The pictures won’t all fit in one post so I’ll reblog to add onto this one.

#spring awakening#musical theatre#broadway musicals#spring awakening musical#ilse neumann#martha bessell#marianna wheelan#thea rilow#georg zirschnitz#broadway#deaf west spring awakening#dwsa#spring awakening broadway

73 notes

Photo

Marianna Polasekova for Sharon Wauchob, Spring 2022

3 notes
