#hardware video decoding & encoding

Being autistic feels like having to emulate brain hardware that most other people have. Being allistic is like having a social chip in the brain that handles converting thoughts into social communication and vice versa while being autistic is like using the CPU to essentially emulate what that social chip does in allistic people.

Skip this paragraph if you know about video codec hardware on GPUs. Similarly, some computers have hardware chips specifically meant for encoding and decoding specific video formats like H.264 (usually located in the GPU), while other computers might not have those chips built in meaning that encoding and decoding videos must be done “by hand” on the CPU. That means it usually takes longer but is also usually more configurable, meaning that the output quality of the CPU method can sometimes surpass the hardware chip’s output quality depending on the settings set for the CPU encoding.
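To make the "hardware chip vs. by hand on the CPU" split a bit more concrete, here is a minimal sketch that builds (but does not run) two ffmpeg command lines. Using ffmpeg at all is an assumption, the post doesn't name a tool. `libx264` is ffmpeg's software H.264 encoder and `h264_nvenc` is its NVIDIA hardware encoder; the file names and the quality/bitrate values are made up for illustration.

```python
def build_encode_command(src, dst, use_hardware):
    """Return an ffmpeg argument list for H.264 encoding (not executed here)."""
    cmd = ["ffmpeg", "-i", src]
    if use_hardware:
        # Dedicated encoder block on an NVIDIA GPU: fast, fewer tuning knobs.
        cmd += ["-c:v", "h264_nvenc", "-b:v", "5M"]
    else:
        # Software encode on the CPU: slower, but finely tunable.
        cmd += ["-c:v", "libx264", "-preset", "slow", "-crf", "18"]
    return cmd + [dst]


# Hypothetical file names, purely for illustration.
cpu_cmd = build_encode_command("input.mp4", "cpu.mp4", use_hardware=False)
gpu_cmd = build_encode_command("input.mp4", "gpu.mp4", use_hardware=True)
print(" ".join(cpu_cmd))
print(" ".join(gpu_cmd))
```

The CPU path exposes knobs like preset and CRF that trade time for quality, which is the "more configurable" part of the paragraph above.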

In conclusion, hardware vs. CPU video encoding and decoding for computers is like social encoding and decoding for allistic vs. autistic people.

#codeblr#neurodivergent#actually autistic#compeng#computer architecture#comp eng#computer engineering#autistic

157 notes


Thoughts and opinion on Switch 2

Before I start, do know I really like tech and generally, I like interesting implementations of it, so I do have a bias towards handheld consoles in general because they have much more interesting limitations to start with. I'll go through all my thoughts, good and bad, on the switch 2.

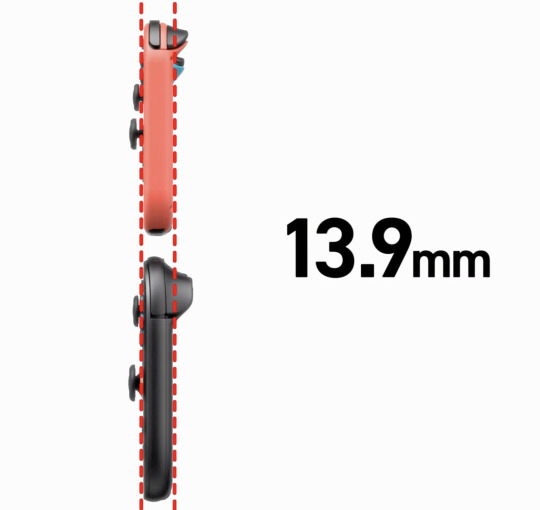

I know the bigger screen does mean more internal area to work with, but how the hell did they manage to keep the thickness nearly identical to the switch 1? Actually impressive, I desperately want to see inside if it is all just because of smaller chip size.

Ok, so where do I properly begin excluding the shock that they pulled off the same thickness? Oh, the screen!

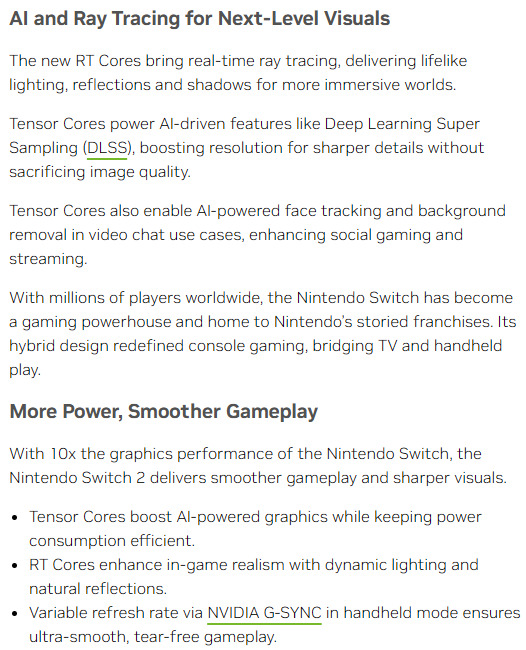

This screen is just a welcome addition overall. 1080p, better brightness and better panel tech that allows for HDR (Microsoft fix your god damn hdr implementation even nintendo has it) while being 120hz and VRR (Nvidia confirmed that it's using their implementation of g-sync via a blog post, here: https://blogs.nvidia.com/blog/nintendo-switch-2-leveled-up-with-nvidia-ai-powered-dlss-and-4k-gaming/). It now matches and slightly exceeds a lot of displays on handhelds, which funnily enough puts it in a similar position as the og switch screen back in 2017, but at least the baseline is much higher so I think this will make the eventual OLED either more meh, or better in comparison. It may be closer to the meh side, at least with the first impressions I'm seeing on youtube and seen through my 2013 gaming monitor with clear faded pixels, which is its own issue if I do end up deciding to get a switch 2. Still +$100 I'm calling it. However, the fact it's 120hz natively means that you can now run games at 40fps with even frame pacing (no judder), because 40 divides evenly into 120 and each frame is held for exactly 3 refreshes. It looks noticeably better than 30fps but is not as draining as 60fps or above, which is going to help with more titles' battery life. Speaking of Nvidia, let's talk a bit about that blog post.
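The 40fps point is just divisibility, so here is a small sketch of the arithmetic. The list of candidate frame rates is my own pick for illustration, not anything Nintendo or Nvidia published.

```python
def frame_time_ms(fps):
    """How long one frame stays on screen, in milliseconds."""
    return 1000.0 / fps


def even_frame_rates(refresh_hz, candidates=(24, 30, 40, 48, 60, 120)):
    """Frame rates where each frame is shown for a whole number of refreshes."""
    return [fps for fps in candidates if refresh_hz % fps == 0]


print(even_frame_rates(60))   # 40 is absent on a 60hz panel
print(even_frame_rates(120))  # 40 is present: 3 refreshes per frame
for fps in (30, 40, 60):
    print(fps, "fps ->", round(frame_time_ms(fps), 1), "ms per frame")
```

In frame time, 40fps (25 ms) sits exactly halfway between 30fps (about 33.3 ms) and 60fps (about 16.7 ms), which is why it feels like more of a jump than the number suggests.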

I do not know how much people like this or not (See recent RTX 40xx and 50xx launches to see how happy people are with Nvidia), but I think they are very much the ideal partners in crime with Nintendo this time around. As long as the Tensor hardware is as power efficient as they claim, this will just be a boon for handheld gaming times, even if they're stuck at the same 2-6.5 hours as the launch model og switch. It also means it can be updated with time to improve games potentially, so if developers decide to take advantage of it and nvidia/nintendo make it easy to just update the codebase of a game to get to a newer version, we may actually have a case of games becoming more performant and/or better looking with time, assuming they are not running at native 4k already. That's neglecting the processing offloading for stuff like that goofy camera, microphone quality and other stuff.

(seriously, if you got a recent enough Nvidia GPU try the Nvidia Broadcast app, it does basically all the audio/video stuff nintendo showed recently, but now with a focused platform I think this is going to become excellent)

oh ya, it is odd that the framerate of the stream from friends is like 12 fps. Best guess is that it uses the onboard video encoder and decoder to handle it, and if each stream is a separate channel, along with one for background recording/screenshots, plus whatever the games may need for their own dedicated use, I am not too surprised it got cut down on such a slim package. Sucks though.

That leads into the dock.

I think the dock is fine. Does the job, has USB ports on the side to connect controllers and charge them (USB 2 tho, so I guess the camera attachment had to go to the top USB C port. CORRECTION: I just saw MKBHD's recent switch 2 impression video, it can actually be attached elsewhere maybe. That's interesting, photo attached below). I think it's as inoffensive as the Switch OLED dock, which does all it needed to do too plus ethernet. It's fine.

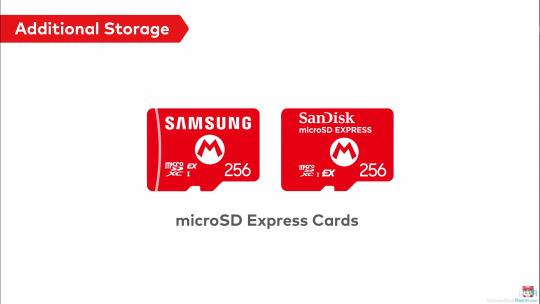

I guess while I'm here, let's touch upon the microSD Express card slot.

Let me preface this by saying, I knew that the standard was backwards compatible with older SD cards so I found it odd they didn't make it clear if those could be used to at least get video/screenshots off, but they did!

Talking about the microSD Express cards themselves, I may be in the minority here, but I am very very happy to see this get adopted. For those who are not in the know, it's basically much faster flash (that can be made for slightly more than a normal SD card) that runs over a PCIe Gen 4 lane in the reader, allowing up to 2 GB/s of read/write. Which is crazy, and if they're mandating it for games, that means the current 800 MB/s read cards on the market right now are what the console will be using, and games will finally load so much faster. And, unlike the original SD cards, higher capacities like a terabyte already exist AND are not insanely more expensive than base SD cards. (I mean $20-25 for 256 GB SD cards to $60 for the same capacity in microSD Express is still a jump, but do you guys remember how fucking much 256 GB SD cards cost originally? It was like $150, and I think 256 GB of storage will get further mileage than the original 32 GB the og switch had. And 1 TB already costs $200 for when it comes out with the switch 2. Those prices will drop unless, uh... not within the scope of what I want to talk about)
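For a rough feel of what those speeds mean, here is a back-of-the-envelope sketch. The 10 GB load size is a made-up number, the 104 MB/s row is the UHS-I bus limit (my comparison point, not something from the post), and real load times also depend on decompression and CPU work, so treat these as best-case sequential reads only.

```python
def read_time_seconds(size_gb, speed_mb_per_s):
    """Ideal sequential read time, using decimal units (1 GB = 1000 MB)."""
    return size_gb * 1000 / speed_mb_per_s


GAME_DATA_GB = 10  # hypothetical amount of data read during a load

for label, speed in [
    ("UHS-I microSD (104 MB/s bus limit)", 104),
    ("microSD Express card (800 MB/s)", 800),
    ("microSD Express ceiling (2 GB/s)", 2000),
]:
    print(f"{label}: {read_time_seconds(GAME_DATA_GB, speed):.1f} s")
```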

Since we're on storage, 256 GB of UFS storage? Like on phones, that can go multiple gigabytes per second read/write? Hell ya, we bout to get load times not much worse than other modern consoles.

Let's touch upon the joycons now.

I like them, but more in the sense of an improvement over the og switch joycons. Which, honestly, that's all they needed to do. The standout weird feature is the mouse on both, but I only see this as a plus. I want more of my PC games on portable systems, and as much as I want the steamdeck too, the switch form factor is still more portable. Just... please let the bigger joysticks be actually good and resistant to stick drift, I do not want to open them up just to replace the sticks with hall effect ones again. As a plus, I can't wait to see what insanity warioware is going to become now.

Oh ya, chat.

Surprised it took them 2 decades to finally do it, but they seem to have a lot of restraint there still, with extensive parental controls to help mitigate any issues, so I'll only give them slightly less shit. Slightly. I'm going to call it though, there is going to be controversy about what eventually will occur over the gamechat. Can't wait!

Switch online services

I mean, it was inevitable. This, with the Wii games that are almost absolutely coming later, is going to be good. I suspect a price bump is going to occur with the NSO that's going to make it cost almost a full game per year, but at least the retro selection is going to start going from crazy to insane, making it probably worth it. I just hope that the voucher program (shown below) gets expanded to more third parties, because as much as I like nintendo games for $50 a piece with this program, it needs to be way more expansive to make the $20 cost at this time to be online, let alone getting the 2 vouchers for $100, worth it. If they do that, and keep on adding new, actually fun (looking at you Zelda Notes... what the fuck are you) and nice-to-have features to the service, I think it could become worth it in the near future.

I ALMOST FORGOT ONLINE DOWNLOAD PLAY, seriously that alone may be worth it because with both local and online, it means you do not have to force your friends to buy games they may otherwise never touch outside of playing with you. This is straight up a good, pro-consumer thing if others don't ignore it 24/7. I just hope the streaming quality will not be dogshit ahhaha....

(Shout out to nintendo actually using this as part of preventing scalpers, I don't like it much because I didn't pay for NSO on the og switch but at least this is a verifiable way to prevent scalpers. I just wish accounts from the Wii U/3ds era got special treatment :^) )

Since we're getting more into software, shout out to how sad the UI is still.

Nintendo, the literal bare minimum here is not just black and white customizable themes. You haven't done that for a generation. I would have much rather had the UI present from the DSi/Wii to Wii U/3ds era than this bland nothing soup. God. Now, onto the most divisive one, and the one that makes or breaks it for me. The games, and their prices.

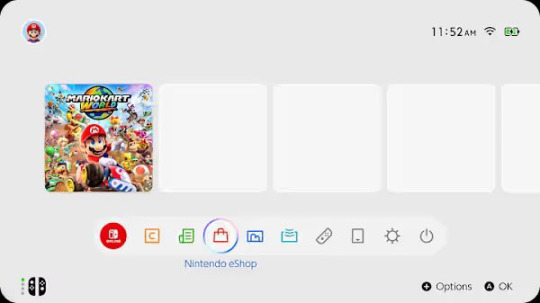

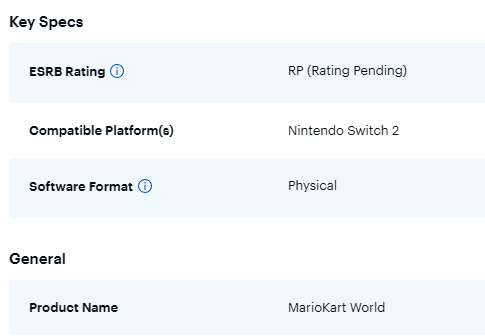

Let's get a few things clear, the $90 physical switch 2 game thing (which I had fallen for too) is in europe. If you're a suffering US citizen, the price is $80 for what seems to be big, high budget nintendo games, with everything else being $70, at least from the list of prices I've seen online.

About Game key cards, ya it's just the 'download play' games on the switch, but instead of a piece of paper that's one time, it's a re-usable cartridge that allows multiple downloads on other switches and acts like a physical one otherwise. This, in my opinion, is objectively better, and is not the default option. Other games will be on the cartridge like og switch, see Cyberpunk 2077 on a 64 GB one (seriously how the fuck did they do that).

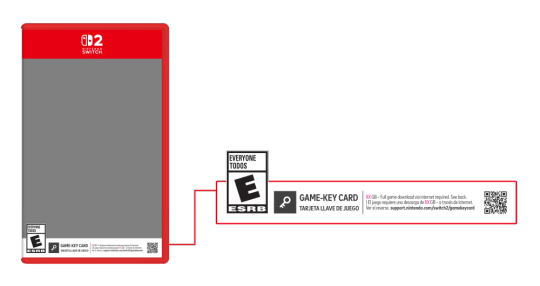

The switch 2 edition upgrade is on a case by case basis, so if it is just an fps/resolution bump, it is likely to be free. If it has a bit more, like some added extras, and they know people would pay for the fps/resolution (see botw and totk), it will be $10, and if the content is basically DLC + all of the above, it's $20. Also, lol at welcome tour.

Seeing all of that, I'm iffy. I'm worried that the panic and reactions from everyone will lock the prices for everyone else at $80 since the publishers will take any chance to push prices up further, but if $70 stays I would reluctantly accept it. Unlike what some people on the other platforms may say, in the past before $60 standardization, we had other options to play games, like rental stores, actually being around friends, and frequent discounts and bundles to get inventory moving. That does mean nowadays, especially with no-sale nintendo, this price is here to stay, and if this is the cost to actually keep games good and not need astronomical sales to make back development costs, so be it. I am just not happy knowing that a lot of publishers will be using machine created/generated stuff each year for that price or $80 and expect no issues with it. The only thing I am very curious about is the capabilities of the switch 2 being somewhere between a PS4 and PS5, but able to handle PS5 ports, potentially making it the best way to play a lot of newer games on the go until valve decides in 2030 to make the steam deck 2.

Now everyone's favorite issue, price. I think it's reasonable, sucks a bit but reasonable. We're now dealing in a world where Nvidia has their focus on AI stuff, and knows that Nintendo wants backward compat + better stuff, so Nvidia likely is charging way more on parts. This world also includes inflation (seriously, $300 in 2017 is now closer to $400, and now there's all the extra nicer stuff slapped on top to justify a next gen). This world also includes ungodly uncertainty because of a group in the wrong place at the wrong time. Considering all of this, honestly, $450 is fine. It sucks, I know it has pushed a few friends out from buying it w/o someone assisting them in the purchase, but it's fine. It's going to be a great refinement, which is all a sequel console had to be. The thing you have to know, nintendo is doing the thing again with previous controllers being compatible with the current system, so in reality (especially with me and my hall-effect modded controllers) the price to play with others will not be much more. I touched upon this already, but the game prices are iffy for me, and it's absolutely going to prevent me from buying as many games as I had for the og switch, but it's a dampener.

I will need more time to simmer (and see how my financial situation is going to be), but I am currently leaning towards trying to get it. I'm on the fence, and I have a good chance of flipping to waiting until later, or just not getting it.

I may add more thoughts as I think them and remember I can use tumblr like this, but I think this is everything I wanted to get out that has been simmering in my head for a bit. Oh ya I almost forgot the most important thing, Homebrew. I love homebrew, it has given me extra life and enjoyment out of my og switch. If there is another launch edition vuln that allows homebrew, I want in.

16 notes


Top, Gold oval brooch with a band of diamonds within a blue glass guilloche border surrounded by white enamel (1890). Lady’s blue right eye with dark brow (from Lover’s Eyes: Eye Miniatures from the Skier Collection and courtesy of D Giles, Limited) Via. Bottom, screen capture of The ceremonial South Pole on Google Street View part of a suite of Antarctica sites Google released in 360-degree panoramics on Street View on July 12, 2017. Taken by me on July 29, 2024. Via.

--

Images are mediations between the world and human beings. Human beings 'ex-ist', i.e. the world is not immediately accessible to them and therefore images are needed to make it comprehensible. However, as soon as this happens, images come between the world and human beings. They are supposed to be maps but they turn into screens: Instead of representing the world, they obscure it until human beings' lives finally become a function of the images they create. Human beings cease to decode the images and instead project them, still encoded, into the world 'out there', which meanwhile itself becomes like an image - a context of scenes, of states of things. This reversal of the function of the image can be called 'idolatry'; we can observe the process at work in the present day: The technical images currently all around us are in the process of magically restructuring our 'reality' and turning it into a 'global image scenario'. Essentially this is a question of 'amnesia'. Human beings forget they created the images in order to orientate themselves in the world. Since they are no longer able to decode them, their lives become a function of their own images: Imagination has turned into hallucination.

Vilém Flusser, from Towards a Philosophy of Photography, 1984. Translated by Anthony Mathews.

--

But. Actually what all of these people are doing, now, is using a computer. You could call the New Aesthetic the ‘Apple Mac’ Aesthetic, as that’s the computer of choice for most of these acts of creation. Images are made in Photoshop and Illustrator. Video is edited in Final Cut Pro. Buildings are rendered in Autodesk. Books are written in Scrivener. And so on. To paraphrase McLuhan “the hardware / software is the message” because while you can imitate as many different styles as you like in your digital arena of choice, ultimately they all end up interrelated by the architecture of the technology itself.

Damien Walter, from The New Aesthetic and I, posted on April 2, 2012. Via.

3 notes


wait wtf

youtube defaults to AV1 encoding on my M1 Mac

the M1 doesn't have AV1 hardware decode so this takes—I'll have to do a more careful test, but it looks like a huge power draw/battery life difference vs the same video forced off of AV1 (i.e. to VP9)

3 notes


This info is almost correct, and it is in spirit, but hardware acceleration does in fact "enable" DRM. Specifically, what's known as HDCP, which encrypts any video content that makes use of HDCP, leading to a black box if it isn't decrypted.

This is why hardware-accelerated videos like YouTube videos (the website, not YT TV or the app) can still be streamed, since they don't use HDCP.

When hardware acceleration is on: Netflix video is encrypted, sent to GPU to decrypt and decode video, re-encrypts it and sends to monitor where the monitor can finally decrypt it and display it for you. Discord tries to intercept it for streaming, but only sees encrypted data. Black box ensues.

When hardware acceleration is off: Netflix video is encrypted, CPU decrypts and decodes it, sends open data to GPU then monitor. Discord intercepts it for streaming, sees it just fine because it's not encrypted. No black box.

Also saw another note asking "what's the point of enabling hardware acceleration?" Videos are encoded to save file size (the difference between a 50 MB .MP4 and a 1 GB .AVI). Decoding video is a really tough task for your CPU, especially if it's high resolution. It might make other programs lag (such as Minecraft with 500 mods, or your art program), though that's not really a big problem if you have a really good CPU. In contrast, GPUs have a chip hyperspecialized for the task of decoding video, which makes it really quick and easy for them.
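To show why video gets encoded in the first place, here is a quick sketch of how much data completely uncompressed video would be. The 1080p30 resolution and 8-bit RGB (3 bytes per pixel) are assumptions picked for illustration, not numbers from the note above.

```python
def raw_video_bytes_per_second(width, height, fps, bytes_per_pixel=3):
    """Uncompressed data rate, assuming 8-bit RGB (3 bytes per pixel)."""
    return width * height * bytes_per_pixel * fps


rate = raw_video_bytes_per_second(1920, 1080, 30)
print(f"Raw 1080p30: {rate / 1e6:.1f} MB/s")
print(f"One minute uncompressed: {rate * 60 / 1e9:.1f} GB")
```

Squeezing that down to something streamable is the work the encoder does, and undoing it in real time is the work your CPU or the GPU's decode block has to do on playback.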

firefox just started doing this too so remember kids if you want to stream things like netflix or hulu over discord without the video being blacked out you just have to disable hardware acceleration in your browser settings!

158K notes


AJA Streaming Solutions Explained: HELO Plus, U-TAP, and BRIDGE for Every Live Production Workflow

New Post has been published on https://thedigitalinsider.com/aja-streaming-solutions-explained-helo-plus-u-tap-and-bridge-for-every-live-production-workflow/

Looking for professional-grade streaming gear to elevate your live production or IP video workflow? AJA Video Systems offers reliable, high-performance solutions built for broadcasters, educators, houses of worship, and content creators. In this post, we explore AJA’s powerful lineup—including the HELO Plus standalone streaming encoder, U-TAP USB video capture devices, and BRIDGE LIVE and BRIDGE NDI 3G converters. Discover how AJA’s tools integrate seamlessly with SDI, HDMI, USB, and NDI sources while supporting all major streaming protocols like RTMP, SRT, and HLS—making them ideal for REMI production, hybrid workflows, and OTT delivery.

Reliable Streaming Hardware & Software

Stream with confidence using professional-grade appliances designed for demanding workflows

Seamless integration with SDI, HDMI, USB, and NDI sources

Supports all major streaming protocols: RTMP, RTMPS, SRT, HLS, and more

Compatible with Windows, macOS, and Linux environments

Core Streaming Products

HELO Plus – Standalone streaming and recording device with SDI and HDMI inputs

U-TAP – Compact USB capture for HDMI or SDI video sources

BRIDGE LIVE 3G-8 – Multi-channel encoder/decoder for REMI and OTT delivery

BRIDGE NDI 3G – Bi-directional SDI to NDI converter for hybrid IP workflows

Ideal For

Broadcast & OTT workflows

Live events and REMI production

Corporate, education, and house of worship streaming

eSports and content creators

HELO Plus – Advanced H.264 Streaming & Recording Appliance

Dual I/O Support: 3G-SDI and HDMI inputs and outputs

High-Quality Encoding: Stream up to 1080p60 to CDNs

Simultaneous Recording: Record to SD, USB, or network storage

Layout Features: Picture-in-Picture and graphics overlays

User-Friendly Interface: Web-based GUI with preview window

Versatile Streaming Capabilities

Dual Streaming Destinations: Stream to two separate locations simultaneously

Advanced Scheduling: Automated start/stop for recording and streaming

Multi-Channel Audio Support: Optional 4-channel audio via license

PlayToStream Functionality: Replay recorded content while live streaming

Stream Deck Integration: Control layouts and functions via Elgato Stream Deck

Ideal for Diverse Production Environments

Use Cases: eSports, live events, education, houses of worship, corporate streaming

Remote Control: Manage settings and monitor streams from anywhere

Comprehensive Support: AJA’s dedicated customer service and technical assistance

BRIDGE NDI 3G – Converts between SDI and NDI video formats

Bi-directional conversion between 3G-SDI and NDI

Supports up to 16 channels of HD or 4 channels of 4K/UltraHD

Seamlessly bridges traditional SDI infrastructure with IP-based NDI workflows

High-Quality, Low-Latency Video

Professional-grade image quality with ultra-low latency

Full support for NDI

Optional NDI HX compatibility

Remote Control & Routing

Web-based interface for full control and live monitoring

Real-time I/O management, format conversion, and metadata handling

Compact 1RU form factor for easy rack integration

Ideal For

Broadcast & Pro AV workflows

Hybrid SDI/IP production setups

Studio and live event environments

BRIDGE LIVE 3G-8 – Multi-channel gateway for live video streaming

Multi-Channel Video Contribution

8x 3G-SDI I/O for high-density video workflows

Supports REMI production, live streaming, and content delivery

Encode/decode H.264, H.265 (HEVC) and JPEG 2000

Streaming & Protocols

Supports SRT, RTMP, RTP, UDP, MPEG-TS and more

Ideal for low-latency remote workflows

Synchronized multi-channel encoding with metadata pass-through

System Integration & Control

Web-based UI and REST API for remote control and automation

Compatible with broadcast and OTT delivery environments

Redundant power supply for mission-critical reliability

Use Cases

REMI production

Broadcast contribution

Remote live events and OTT delivery

U-TAP – USB capture for HDMI or SDI video sources

HDMI HD/SD capture up to 1080p60 over USB

3G-SDI HD/SD capture up to 1080p60 over USB

Connectivity & Input

Converts HDMI 1.4a or 3G-SDI video to USB

USB 3.0 (USB 3.2 Gen 1) connection to host computer

Accepts up to 1080p60 from HDMI or SDI sources

Automatically scales input to match host application

Video & Audio Support

Supports 8/10-bit video

2-channel embedded audio

Output signal options on signal loss: Black (default), Blue Matte, 100% Bars

Power & Design

Bus-powered via USB – no external power supply needed

Compact and rugged build for portable use

Proven Reliability: Trusted gear built for 24/7 critical workflows

Versatile Flexibility: From simple USB capture to complex multi-channel streaming

Seamless Integration: Works with SDI, HDMI, USB, NDI, and major streaming protocols

Future-Ready: Scalable solutions designed for evolving production needs

Expert Support: Dedicated customer service and technical assistance & 3 Year Warranty

0 notes

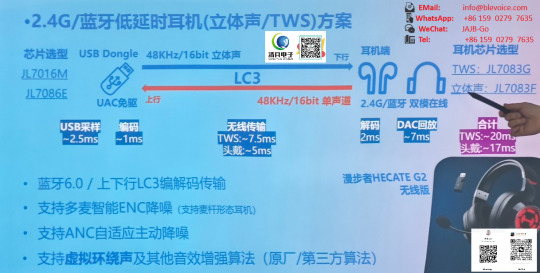

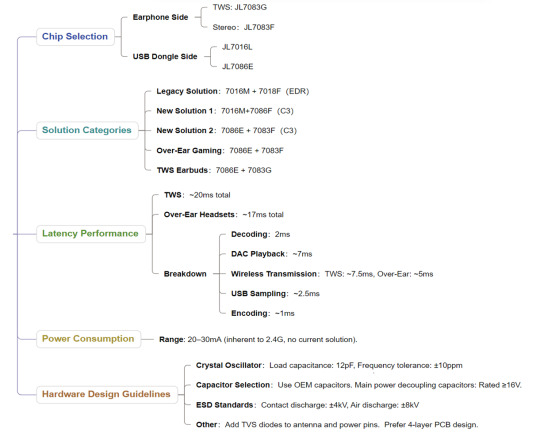

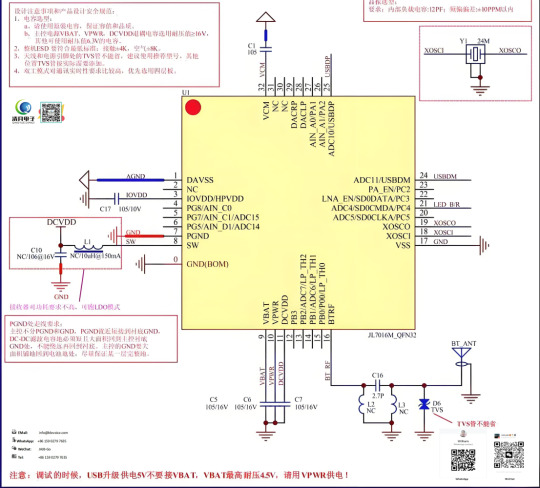

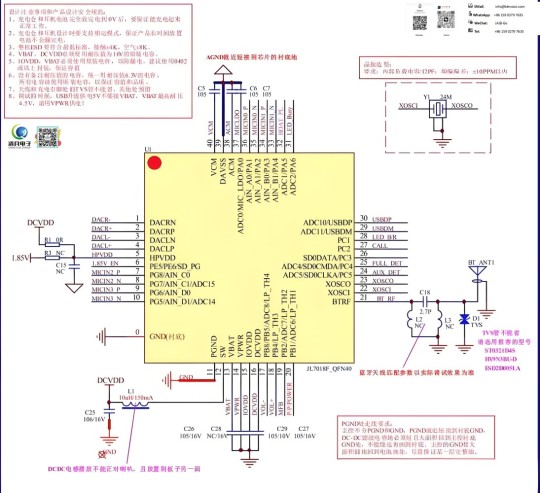

JL 2.4G Bluetooth Low-Latency Audio Headset Solution: Chip Selection, Latency, Hardware Design

This document introduces JL’s low-latency audio headset solution using 2.4G/Bluetooth technology. For chip selection, the USB dongle side uses JL7016M or JL7086E, which is capable of 48KHz/16bit stereo output and supports UAC (USB Audio Class), meaning no driver installation is required. On the headset side, JL7083G is used for TWS earbuds, and JL7083F for stereo headphones.

The audio transmission and decoding process includes:

Uplink (microphone to host): Audio is sampled via USB (~2.5ms), encoded (~1ms), and transmitted over 2.4G/Bluetooth using LC3 codec at 48KHz/16bit mono.

Downlink (host to headset): Audio is LC3 encoded at 48KHz/16bit stereo, transmitted wirelessly to the headset, decoded (~2ms), then played back via DAC (~7ms).

The overall latency:

Around 20ms for TWS headphones

Around 17ms for over-ear/headband-style headphones

These values are considered excellent for gaming and multimedia scenarios where low latency is crucial.
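The figures above can be sanity-checked with a little arithmetic. The sketch below computes the raw PCM bitrate that LC3 has to compress, then sums the per-stage latencies quoted in the text. Adding all four quoted stages into one path is an assumption on my part: the document does not say how the 17ms and 20ms totals are composed, so the "remainder" is only a rough guess at what is left for radio transmission and buffering.

```python
def pcm_bitrate_kbps(sample_rate_hz, bit_depth, channels):
    """Raw (uncompressed) PCM bitrate in kilobits per second."""
    return sample_rate_hz * bit_depth * channels / 1000


print(pcm_bitrate_kbps(48_000, 16, 2))  # stereo downlink before LC3
print(pcm_bitrate_kbps(48_000, 16, 1))  # mono uplink before LC3

# Stage latencies quoted in the text, in milliseconds.
quoted_stages_ms = {"usb_sampling": 2.5, "encode": 1.0, "decode": 2.0, "dac": 7.0}
quoted_sum = sum(quoted_stages_ms.values())

# Totals quoted in the text; the remainder is NOT itemized in the source.
for label, total_ms in [("TWS", 20.0), ("over-ear", 17.0)]:
    print(label, "unitemized remainder:", total_ms - quoted_sum, "ms")
```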

1. JL 2.4G Bluetooth Low-Latency Headset Solution Overview

(1) Chipset & Basic Capabilities:

JL7016M or JL7086E for USB Dongle (receiver/transmitter)

Outputs 48KHz/16bit stereo

Supports UAC (plug-and-play with no driver required)

(2) Encoding - Transmission - Decoding Flow:

Uplink (mic to host device):

Downlink (host to headset):

(3) Latency Performance:

TWS headphones total latency: ~20ms

Over-ear headphones total latency: ~17ms

Excellent for low-latency needs in gaming/video applications

2. Chip Solution Comparison

Headband-style gaming headset: JL7086E + JL7083F

TWS-style earbuds: JL7086E + JL7083G

Note on Power Consumption: 2.4G wireless inherently consumes more power. The typical power consumption is around 20-30mA, and currently, this is a design limitation that cannot be significantly reduced.

3. High-Level Hardware Block Diagram (Dongle Side)

Diagram omitted here — typically includes USB interface, JL7016M/7086E chip, 2.4G RF module, and antenna

4. High-Level Hardware Block Diagram (Headset Side)

Diagram omitted here — typically includes microphone, JL7083F/G chip, speaker/DAC output, battery/power management, and antenna

0 notes

AMD Radeon Pro W6600 Benchmark in CAD, Video Editing

Introduction

As professional high-performance computing needs change, GPUs help engineers, developers, and creatives work faster. Radeon Pro from AMD, a well-known semiconductor firm, is increasing market share in professional graphics cards. The AMD Radeon Pro W6600 is a powerful card for CAD, video, entertainment, and visualisation.

For professionals upgrading their workstations, AMD RDNA 2 Radeon Pro W6600 delivers a solid blend of performance, power efficiency, and pricing.

Audience and Use Cases

AMD's Radeon Pro W6600 targets professional tasks. This works best for:

CAD and Product Design: SOLIDWORKS, AutoCAD, and PTC Creo users will benefit from the card's certified drivers and improved performance.

Video editors, animators, and visual effects artists utilising DaVinci Resolve, Adobe Premiere Pro, or Blender benefit from the W6600's smooth playback, rapid rendering, and multitasking.

Architects and engineers benefit from photorealistic rendering in Revit with Lumion and from real-time viewport performance.

It is a good choice for professionals that need reliable GPU calculation and visualisation without the high-end capabilities of the Radeon Pro W6800 or NVIDIA RTX A5000.

Analysis of performance

The Radeon Pro W6600 performs well in real-world applications:

3D Modelling & CAD: The W6600 runs Siemens NX and CATIA smoothly and performs well in SPECviewperf 2020 benchmarks. For mission-critical design, its ECC memory adds reliability.

In Adobe Premiere Pro and DaVinci Resolve, the W6600 handles 4K and 6K timelines easily. Its 8GB of VRAM ensures memory stability for large projects, while hardware-accelerated H.264 and H.265 encoding and decoding speed up exports.

Despite its lower price, the W6600 renders well in Blender, especially with OpenCL or HIP viewport rendering. The GPU's Ray Accelerators (AMD's hardware ray tracing units in RDNA 2) speed up ray tracing for moderate scenes.

The W6600 can support four 4K monitors or a mix of 5K and 8K displays via its four DisplayPort 1.4 outputs. This is perfect for multi-app users or those who need a lot of screen space for timelines, previews, and editing consoles.

Cooling and Power Efficiency

The Radeon Pro W6600 excels in power efficiency. Its 100W TDP allows it to be fitted into small form-factor systems or compact workstations without big power supplies or cooling infrastructure. The blower-style cooler is suitable for offices because of its efficiency and quiet operation.

Driver and Software Support

Software compatibility and stability are essential for professionals. AMD's Radeon Pro Software for Enterprise, ISV-certified for many professional applications and extensively tested, solves this.

Notable characteristics include:

Drivers undergo Day-Zero Certification on major application releases.

Radeon Pro Viewport Boost automatically adjusts viewport resolution to boost frame rates in compatible CAD applications.

AMD Remote Workstation lets professionals use their powerful desktop GPU from anywhere.

Built-in technologies provide workload analysis and performance tracking.

These tools help professionals keep their systems running smoothly, with good compatibility and without downtime.

AMD Radeon Pro W6600 Cost

New AMD Radeon Pro W6600s cost $400–$470. Depending on the store and locality, used cards can be found for $189 and sealed units for $730. The card is popular for professional graphics work.

To conclude

The AMD Radeon Pro W6600 is a powerful GPU for modern professional workloads. It strikes a balance between affordability and performance with ECC memory, certified drivers, and robust multi-display support for engineers, designers, and creative workers.

Professionals and mid-range workstations looking to upgrade from integrated graphics or obsolete GPUs like the FirePro or Quadro P series might choose the W6600. It is not designed for ultra-high-end 3D rendering or AI/ML, but it is sufficient for most daily professional tasks.

#technology#technews#govindhtech#news#technologynews#AMD Radeon Pro W6600#AMD Radeon Pro#Radeon Pro W6600#AMD Radeon Pro W6600 Benchmark#AMD Radeon W6600#AMD Radeon Pro W6600 Price

0 notes

What is Networking Communication Technology?

In today’s digitally connected world, networking communication technology refers to the collection of tools, systems, and protocols that enable the transmission and exchange of data between computers, devices, and networks. Whether browsing the Internet, sending an email, or conducting a video conference across continents, all these activities depend on networking communication technologies to function seamlessly.

Definition and Overview

Networking communication technology encompasses both the hardware and software involved in the design, deployment, and management of computer networks. It enables the transfer of data—text, audio, video, and more—across distances, whether within a single building or between countries across the globe.

This technology includes:

Network infrastructure (routers, switches, cables, wireless access points)

Protocols (rules for data transfer, such as TCP/IP, HTTP, FTP)

Communication devices (smartphones, computers, IoT devices)

Services and software (email, cloud platforms, VoIP services)

At its core, networking communication technology ensures that data is reliably and efficiently transmitted from one point to another.

How It Works

Data communication starts with the sender (a device or application that originates the message) and ends with the receiver (the device or application that receives the message).

Here’s how the system generally works:

Data Encoding: The information to be sent is converted into signals.

Transmission Medium: These signals are transmitted over a communication medium, which could be wired (like Ethernet cables or fiber optics) or wireless (such as Wi-Fi or cellular networks).

Protocols: Data travels according to a set of predefined rules or protocols that ensure it reaches its destination correctly and in order.

Receiving and Decoding: At the other end, the receiver decodes the signals back into understandable information.

The success of this communication relies heavily on various protocols and standards to ensure interoperability between different systems and devices.
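The four steps above (encode, transmit, follow a protocol, decode) can be sketched in a few lines of Python. This is only an illustration, not how any real network stack is implemented: the function names, the 8-byte packet size, and the shuffle that stands in for out-of-order delivery are all assumptions made for the example.

```python
import random
from typing import List, Tuple

# A "packet" here is just (sequence number, payload bytes). Hypothetical format.
Packet = Tuple[int, bytes]


def encode(message: str) -> bytes:
    """Data encoding: turn text into bytes that can be sent as signals."""
    return message.encode("utf-8")


def packetize(data: bytes, size: int = 8) -> List[Packet]:
    """Protocol rule: split data into numbered packets of at most `size` bytes."""
    return [(seq, data[i:i + size])
            for seq, i in enumerate(range(0, len(data), size))]


def transmit(packets: List[Packet], seed: int = 0) -> List[Packet]:
    """Transmission medium: simulate packets arriving in a different order."""
    arrived = list(packets)
    random.Random(seed).shuffle(arrived)
    return arrived


def reassemble(packets: List[Packet]) -> bytes:
    """Protocol rule: use sequence numbers to put the payloads back in order."""
    ordered = sorted(packets, key=lambda packet: packet[0])
    return b"".join(payload for _, payload in ordered)


def decode(data: bytes) -> str:
    """Receiving and decoding: turn the bytes back into readable text."""
    return data.decode("utf-8")


message = "Hello from the sender to the receiver!"
received = decode(reassemble(transmit(packetize(encode(message)))))
print(received == message)
```

Real protocols such as TCP also handle lost packets, retransmission, and flow control, which this sketch leaves out.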

Types of Networking Communication

There are several types of networks based on their scale and purpose:

Local Area Network (LAN): Covers a small geographical area, like an office or home. LANs are typically used for internal communication.

Wide Area Network (WAN): Covers large geographical areas, often using leased telecommunications lines.

Metropolitan Area Network (MAN): Spans a city or a large campus.

Personal Area Network (PAN): Used for personal devices, typically within a range of a few meters (like Bluetooth networks).

Wireless Networks: Include Wi-Fi, mobile data (3G, 4G, 5G), and satellite communication, facilitating mobile and remote access.

Key Components of Networking Communication Technology

Hardware:

Routers and Switches: Manage traffic within and between networks.

Modems: Convert digital data to analog for transmission over phone lines, and vice versa.

Access Points: Enable wireless devices to connect to wired networks.

Cables and Connectors: The physical medium for data transmission (Ethernet, fiber optic).

Software:

Operating Systems: Manage network connections (e.g., Windows, macOS, Linux).

Network Management Tools: Monitor and optimize network performance.

Security Applications: Firewalls, antivirus, and VPNs protect data transmission.

Protocols:

TCP/IP (Transmission Control Protocol/Internet Protocol): The foundation of the Internet, responsible for breaking data into packets and reassembling it.

HTTP/HTTPS (HyperText Transfer Protocol and its TLS-secured variant): Used for accessing web pages.

FTP (File Transfer Protocol): Used for transferring files over the Internet.

VoIP (Voice over Internet Protocol): Allows voice communication over data networks.
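To make "rules for data transfer" concrete, here is a small Python sketch of what an HTTP/1.1 message looks like on the wire. It only builds a request and parses a canned response; nothing is sent over a network. The helper names `build_get_request` and `parse_response`, the host `example.com`, and the sample response are assumptions invented for the illustration.

```python
from typing import Dict, Tuple


def build_get_request(host: str, path: str = "/") -> bytes:
    """Format a minimal HTTP/1.1 GET request (lines end with CRLF)."""
    lines = [
        f"GET {path} HTTP/1.1",   # request line: method, target, version
        f"Host: {host}",          # Host header is required in HTTP/1.1
        "Connection: close",
        "",                       # empty line marks the end of the headers
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def parse_response(raw: bytes) -> Tuple[int, Dict[str, str], bytes]:
    """Split a raw response into status code, headers, and body."""
    head, _, body = raw.partition(b"\r\n\r\n")
    status_line, *header_lines = head.decode("iso-8859-1").split("\r\n")
    status_code = int(status_line.split(" ", 2)[1])
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return status_code, headers, body


# A made-up response, as a server might send it back.
canned = (b"HTTP/1.1 200 OK\r\n"
          b"Content-Type: text/plain\r\n"
          b"Content-Length: 5\r\n"
          b"\r\n"
          b"hello")

print(build_get_request("example.com", "/index.html").decode("ascii"))
print(parse_response(canned))
```

In practice HTTP rides on top of TCP/IP, which takes care of delivering these bytes reliably and in order; a real client would also use a library such as `http.client` rather than hand-parsing.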

Applications and Importance

Networking communication technology plays a pivotal role in nearly every aspect of modern life.

Here are some key applications:

Business Communication: Enables instant messaging, video conferencing, and cloud-based collaboration tools.

Education: Powers online learning platforms, virtual classrooms, and research databases.

Healthcare: Supports telemedicine, electronic health records, and remote monitoring.

Smart Devices and IoT: Allows devices like smart thermostats, security cameras, and wearable fitness trackers to communicate and operate efficiently.

E-Governance: Facilitates secure and quick communication between government agencies and citizens.

Without networking technology, the modern economy and society would grind to a halt. From financial transactions to social media, almost all digital interactions rely on robust and secure communication networks.

Challenges and Future Outlook

Despite its widespread use, networking communication technology faces several challenges:

Security Threats: Cyberattacks, data breaches, and hacking are ongoing risks.

Bandwidth Limitations: High demand can lead to congestion and slow speeds.

Interoperability Issues: Devices and systems must adhere to common standards.

Cost: Building and maintaining high-speed, reliable networks can be expensive.

Looking ahead, the field continues to evolve with the adoption of 5G networks, edge computing, AI-driven network management, and quantum communication. These innovations promise faster, more secure, and more efficient communication.

What Is Network Communication?

Network communication refers to the process of exchanging data and information between devices, systems, or applications over a network. It involves the use of protocols, hardware, and software to enable communication between different devices, such as computers, smartphones, and servers. This communication can be wired (using cables like Ethernet) or wireless (using technologies like Wi-Fi or cellular networks).

Network communication ensures that data is transferred efficiently, securely, and reliably, whether it’s for browsing the internet, sending emails, or streaming videos.

Common protocols include TCP/IP, HTTP, and FTP, which manage how data is packaged, transmitted, and received across networks.

What Is Networking Communication Technology in Computer Networks?

Networking communication technology in computer networks refers to the tools, protocols, and systems that enable data exchange between devices over a network.

It encompasses both hardware and software components that facilitate communication across local, wide-area, and even global networks like the Internet. Key hardware includes routers, switches, and cables that physically connect devices, while software protocols like TCP/IP, HTTP, and FTP manage how data is transmitted and received.

Networking technologies are critical for various types of communication, including voice (VoIP), video (video conferencing), and file sharing. They also ensure that data flows securely and efficiently, overcoming challenges like congestion, latency, and security threats.

With the evolution of technologies like 5G and IoT (Internet of Things), networking communication is becoming faster, more reliable, and increasingly integral to modern digital life, from enterprise applications to personal use.

This technology is fundamental in enabling the seamless operation of services and applications in today’s interconnected world.

Conclusion

Networking communication technology is the backbone of the digital age, enabling everything from everyday emails to complex international operations. As our dependence on digital tools grows, so too will the need for robust, secure, and fast networking solutions.

Understanding the principles and components of this technology is crucial not only for IT professionals but for anyone navigating the modern world.

#Networking#Computer Networking#Communication Technology#Topology Of Network#Technology#network communication#organizational communication networks#network topology network#formal communication network#Internet

0 notes

Text

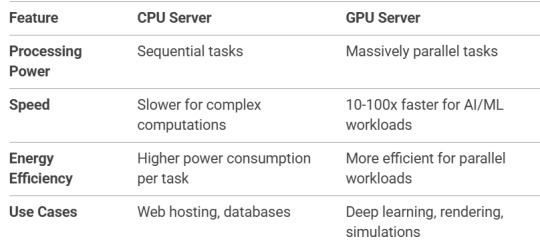

GPU Servers: Powering High Performance Computing with CloudMinister

Introduction

In the era of AI, machine learning, big data, and real-time rendering, traditional CPUs often fall short in handling intensive workloads. GPU-accelerated servers provide the computational power needed for demanding applications. At CloudMinister, we offer high-performance GPU server solutions tailored for deep learning, scientific computing, graphics rendering, and more.

What is a GPU Server?

A GPU (Graphics Processing Unit) server is a high-performance computing system equipped with powerful GPUs (like NVIDIA Tesla, A100, or AMD Instinct) alongside traditional CPUs. Unlike standard servers, GPU servers excel at parallel processing, making them ideal for:

✔ AI & Machine Learning (training deep neural networks)

✔ Big Data Analytics (real-time processing)

✔ 3D Rendering & CGI (film, gaming, simulations)

✔ Scientific Computing (genomics, climate modeling)

✔ Cryptocurrency Mining (high-speed hashing)

Why Choose a GPU Server Over a CPU Server?

CloudMinister’s GPU Server Solutions

1. AI & Deep Learning

Train neural networks faster with NVIDIA Tensor Core GPUs.

Optimized for TensorFlow and PyTorch, with CUDA acceleration.

2. High-Performance Computing (HPC)

Accelerate scientific simulations, financial modeling, and data analysis.

Supports OpenCL, NVIDIA CUDA, and ROCm.

3. Graphics Rendering & CGI

Real-time ray tracing for film, gaming, and VR/AR.

Blender, Maya, Unreal Engine optimized configurations.

4. Cloud GPU Hosting

On-demand GPU instances (pay-as-you-go).

Scalable clusters for distributed computing.

5. Dedicated GPU Servers

Bare-metal GPU servers for maximum performance.

Customizable (RAM, storage, GPU count).

Key Features of CloudMinister’s GPU Servers

✅ Latest GPU Hardware – NVIDIA A100, H100, RTX 6000, AMD Instinct.

✅ High-Speed NVMe Storage – Reduce data bottlenecks.

✅ 100Gbps Network Uplink – Faster data transfers.

✅ 24/7 Monitoring & Support – Proactive maintenance.

✅ Global Data Centers – Low-latency access worldwide.

Who Needs a GPU Server?

✔ AI Startups – Faster model training.

✔ Research Labs – Complex simulations & data crunching.

✔ Game Studios – Real-time rendering & physics engines.

✔ Blockchain Developers – Efficient mining & node processing.

Why Choose CloudMinister?

🔹 Cutting-Edge GPU Infrastructure – Latest NVIDIA & AMD GPUs.

🔹 Flexible Deployment – Cloud, hybrid, or on-premise.

🔹 Expert Support – GPU optimization & troubleshooting.

🔹 Cost-Effective Pricing – Hourly billing or reserved instances.

Conclusion

Whether you're training AI models, rendering 4K animations, or running complex simulations, CloudMinister’s GPU servers deliver unmatched speed and efficiency.

Supercharge Your Workloads Today! 👉 Explore CloudMinister’s GPU Server Solutions

FAQs

Q: What GPUs do you support?

A: NVIDIA (Tesla, A100, RTX) and AMD (Instinct, Radeon Pro).

Q: Can I use GPU servers for video transcoding?

A: Yes! GPUs accelerate 4K/8K video encoding/decoding.

Q: Do you offer GPU cloud instances?

A: Yes, on-demand and reserved GPU cloud servers are available.

Need a custom GPU solution? Contact our experts now!

0 notes

Text

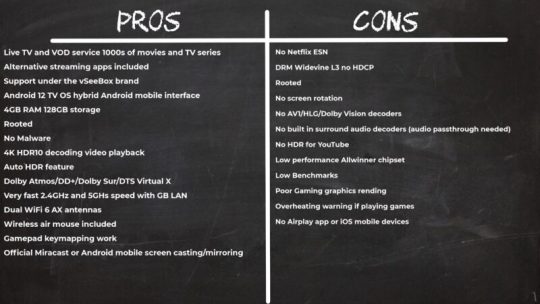

Octastream Elite Max Live TV VOD TV Box Review

Hey everyone, welcome back! Today, I’m reviewing the Octastream Elite Max. If you love live TV and video-on-demand, this device might be perfect for you. So, let’s dive right in! First, let’s talk about what’s inside the box. When you open it, you’ll find everything needed for setup. The Octastream Elite Max comes with a wireless air mouse, which is a great addition. You also get an HDMI cable, an IR extender cable, a DC power adapter, and a user guide. With everything neatly packed, setting it up is quick and easy.

Design and Ports of Octastream Elite Max

Moving on, the Octastream Elite Max has a sleek plastic shell with polished edges, which gives it a premium look. At the front, there’s an LED power light. At the back, you’ll find two Wi-Fi 6 antennas for strong connectivity. As for ports, this device is well-equipped. It includes an HDMI 2.1 port, an Ethernet LAN port, an optical audio port, an AV port, and an IR extender port. Additionally, it has a USB 3.0 port, a USB 2.0 port, and a microSD card reader. The reset button is conveniently placed on the side. However, the base has a metal panel with four rubber feet but no ventilation holes, which could lead to heat buildup. Now, let’s talk about the boot-up process.

Boot-Up and Launcher Interface of Octastream Elite Max

When you power it on, you’ll see a simple Octastream Elite Max animation. There’s no startup wizard. Instead, it takes you straight to the launcher. This launcher has 15 shortcut spaces for quick access to your favorite apps. At first, there are no live TV or video-on-demand apps installed. However, once connected to the internet, a shortcut appears to install an APK app store. Installing this store is optional, but it’s necessary for accessing live TV and video-on-demand services. After installation, you get three main apps: Elite TV, Elite VOD, and Elite Replay.

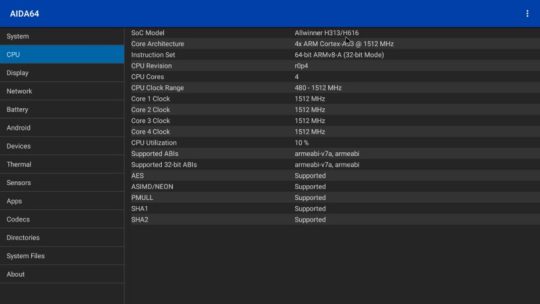

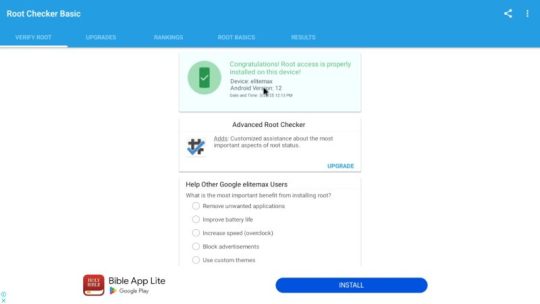

System and Performance of Octastream Elite Max

The Octastream Elite Max runs on Android 12 TV OS, and it supports 4K at 60Hz, HDR10, and HDMI CEC. However, the screen rotation feature does not work properly. Additionally, while it supports 10-bit color depth, it lacks built-in surround sound decoders. For hardware, it features an Allwinner H313/H616 chipset, a quad-core Cortex A53 processor, and a Mali G31 GPU. It also includes 4GB of DDR4 RAM, 128GB of internal storage, and Bluetooth 5.0. In terms of connectivity, its Wi-Fi 6 adapter offers excellent reception with dual-band 2.4GHz and 5GHz support. Note, however, that it runs a hybrid version of Android TV OS, so it uses the mobile version of the Google Play Store. In addition, the firmware is rooted, which affects compatibility with paid streaming services.

Streaming and Digital Rights Management on Octastream Elite Max

Streaming services do come with some limitations. Specifically, the Octastream Elite Max only supports Google Widevine L3 without HDCP protection. As a result, Netflix and other paid streaming platforms are limited to 480p, and higher resolutions remain unavailable even with an HD subscription. Fortunately, YouTube performs exceptionally well. Both the Android TV and Smart YouTube TV apps fully support 4K playback. However, HDR is not supported there.

Media Playback and Surround Sound on Octastream Elite Max

For media playback, HDR10 content works well. However, formats like AV1, Dolby Vision, and HDR10+ are not supported. When playing videos encoded in these formats, they either fail to play or default to HDR10. Some files with Dolby Atmos or HDR10+ cause a buzzing noise on TVs. With an AV receiver, performance improves. Dolby Atmos and Dolby Digital Plus work with a multi-speaker setup. However, Dolby TrueHD in MKV or M2TS formats does not play. DTS HD Master triggers DTS Virtual X, which sounds great on compatible speakers. To enable these formats, audio passthrough must be activated in the settings.

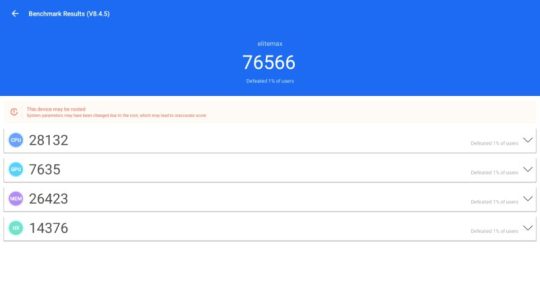

Gaming and Performance Benchmarks

Gaming is possible but has some drawbacks. The Octastream Elite Max can get very hot. I recorded temperatures reaching 81°C, which is too high for extended gaming. Also, since the CPU is only 1.5 GHz, high-graphics games struggle. However, Bluetooth gamepads connect smoothly. Some games require touchscreen controls, so a keymapping app is needed. Now, let’s look at the benchmarks:

- RAM transfer speed: 2874 MB/s
- Internal storage read speed: 155 MB/s
- Internal storage write speed: 152 MB/s
- Wi-Fi 6 speeds: 315 Mbps (5GHz) and 235 Mbps (2.4GHz)
- Gigabit LAN: Full network speed
- Geekbench 5: 111 (single-core), 376 (multi-core)
- 3DMark Wild Life: 129 score, 0.77 FPS
- Antutu: 76,566 (ranked 93rd on my TV Box chart)

Final Thoughts

In summary, the Octastream Elite Max is a strong performer in its category. The company markets it under the vSeebox brand, which sometimes replaces the Octastream Elite Max name. Also, alternative apps beyond the default three may not work forever, so keep that in mind.

Performance-wise, this device offers excellent Wi-Fi reception and a good streaming experience. If you want to buy one, check the link in the description for a discount and a coupon code. Lastly, as a disclaimer, this review highlights both the pros and cons of the Octastream Elite Max. The purchase link is an affiliate link, meaning I earn a small commission if you buy through it. Your support is greatly appreciated! Before you go, don’t forget to like and subscribe for more reviews, secondhand deals, and giveaways. Thanks for reading, and I’ll see you in the next post!

0 notes

Text

Enjoy Your Favourites with the Best IPTV Services

Uncover the best IPTV services with 4K streaming, varied channels, sports and movies, and reliable performance for an unbeatable viewing experience.

Finding a reliable IPTV provider can be challenging with so many options. IPTV services offer live TV, on-demand movies, shows, and international content via the internet. Whether you love sports, news, or binge-worthy series, the right provider delivers premium entertainment at a fraction of traditional cable costs.

By carefully evaluating your needs and available options, you can secure a reliable connection without overspending.

⚠️ Legal Disclaimer: This article is purely educational. Users should verify the legality of the services they choose and ensure they access only publicly available content. Responsibility lies with the end-user.

What is an IPTV Service?

An IPTV service delivers TV content via the internet, allowing users to stream live channels and on-demand videos on devices like smart TVs and smartphones. It offers features like catch-up TV, video-on-demand, and customizable channel options, giving users more control over their viewing experience.

How does IPTV Work?

IPTV works by delivering television content through the internet rather than traditional satellite or cable methods. Here’s how it works:

Content Acquisition: IPTV providers get content from multiple sources such as cable, satellite, or live events. This content could include TV shows, movies, sports events, etc.

Encoding & Compression: The content is then encoded and compressed into a digital format that can be transmitted over the internet.

Transmission over IP Network: Instead of using satellite or coaxial cables, IPTV sends the content to users over an IP-based network, which may be the public internet or a provider’s private network.

Set-Top Box or App: To watch IPTV, you need a device that can decode the transmitted data. This could be a set-top box or an app on your smart TV, computer, or smartphone.

On-Demand & Live Streaming: IPTV can offer both live channels and on-demand content. Users can stream live TV channels or choose from a library of content.

Interactive Features: Many IPTV services include interactive features like pause, rewind, or fast-forward live TV.
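The encode, transmit, and decode steps above can be mimicked with a toy Python pipeline. This is a loose sketch under stated assumptions: `zlib` stands in for a real video codec (such as H.264), the "frames" are just byte strings, and the function names and the 64-byte chunk size are made up for the example. The point it shows is that the receiver can decode data chunk by chunk as it arrives, without waiting for the whole stream.

```python
import zlib
from typing import Iterable, Iterator


def encode_stream(frames: Iterable[bytes], chunk_size: int = 64) -> Iterator[bytes]:
    """Provider side: compress frames and emit fixed-size chunks for sending."""
    compressor = zlib.compressobj()
    buffer = b""
    for frame in frames:
        buffer += compressor.compress(frame)
        while len(buffer) >= chunk_size:
            yield buffer[:chunk_size]
            buffer = buffer[chunk_size:]
    buffer += compressor.flush()          # emit whatever the compressor still holds
    for i in range(0, len(buffer), chunk_size):
        yield buffer[i:i + chunk_size]


def play_stream(chunks: Iterable[bytes]) -> bytes:
    """Viewer side: decode each chunk as it arrives (set-top box or app)."""
    decompressor = zlib.decompressobj()
    decoded = b""
    for chunk in chunks:
        decoded += decompressor.decompress(chunk)
    decoded += decompressor.flush()
    return decoded


# Fake "video frames": repetitive bytes compress well, like similar frames do.
frames = [f"frame-{i:03d} ".encode("ascii") * 20 for i in range(50)]
original = b"".join(frames)
chunks = list(encode_stream(frames))

print(len(original), "bytes before compression")
print(sum(len(chunk) for chunk in chunks), "bytes sent in", len(chunks), "chunks")
print(play_stream(chunks) == original)
```

Real IPTV systems use lossy video codecs and streaming protocols that are far more involved; the sketch only shows the shape of the pipeline.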

What to Look for in an IPTV Subscription

A great IPTV service should offer:

✅ High-Quality Streaming – HD & 4K support

✅ Affordable Pricing – Flexible plans

✅ Extensive Channel Lineup – Global & local networks

✅ Reliable Performance – Buffer-free experience

✅ Multi-Device Compatibility – Smart TVs, Firestick, Android, and more

By the end, you’ll see why IPTVUSAFHD is the ultimate choice!

Advantages of a 4K Streaming IPTV Subscription

IPTV (Internet Protocol Television) subscriptions offer several advantages over traditional cable or satellite TV services. Here are some key benefits:

More Channels and Content: IPTV services often provide a wider selection of channels, including international channels, niche content, and specialized genres.

Cost-Effective: Compared to traditional cable or satellite subscriptions, IPTV can be more affordable, as it typically doesn’t involve the same infrastructure and hardware costs.

Flexibility and Convenience: With IPTV, you can watch content on smartphones, tablets, smart TVs, and computers, rather than being tied to a traditional set-top box in one location.

No Satellite Dish or Antenna Needed: IPTV works over the internet, eliminating the need for bulky satellite dishes or antennas, ideal for areas with weak or unavailable satellite signals.

High-Quality Streaming: IPTV providers often offer high-definition (HD) or even 4K streaming, providing a superior viewing experience compared to standard-definition broadcasts from traditional providers.

Customization and Personalization: IPTV services may allow more customization of channel packages and content recommendations, making it easier to personalize your viewing experience.

Global Accessibility: Since IPTV operates over the internet, users can access content from around the world, making it easier to watch foreign channels.

How IPTV is Transforming the Way We Experience Entertainment

IPTV (Internet Protocol Television) is revolutionizing entertainment by delivering content over the internet, providing greater flexibility and personalization. Unlike traditional cable or satellite TV, 4k Streaming IPTV allows users to stream live channels, on-demand content, and interactive services on multiple devices, including TVs, smartphones, and tablets. With features like time-shifted viewing, video-on-demand, and tailored recommendations, it caters to individual preferences, ensuring a seamless and engaging experience. Its scalability and affordability make IPTV a game-changer, transforming how we access and enjoy entertainment globally.

Say goodbye to outdated TV experiences and embrace the future with IPTV!

IPTV is revolutionizing the way we consume content, offering flexibility, variety, and affordability like never before. With IPTV, you can stream your favorite shows, movies, and live events on Smart TVs, smartphones, tablets, laptops, and even gaming consoles—anytime, anywhere.

Enjoy real-time sports, breaking news, and premium channels in stunning HD or 4K with minimal buffering. IPTV services also provide vast Video on Demand (VOD) libraries, granting instant access to movies, TV shows, and exclusive content.

Catering to global audiences, IPTV supports international channels across multiple languages, ensuring diverse entertainment options. It’s also a cost-effective alternative to traditional cable and satellite services, offering more content for less. Plus, with flexible subscription plans and no long-term contracts, you get entertainment on your terms—no commitments, no hassle.

Looking for the best 4K streaming IPTV service? IPTV USA FHD is the best.

IPTV USA FHD - Popular Choice in the USA, Europe and Asia

In today's fast-paced digital world, having access to high-quality entertainment at your fingertips is a necessity. Choosing a 4K streaming IPTV service comes with numerous benefits, enhancing your entertainment experience and providing value for your investment. Whether you're a sports enthusiast, a movie buff, or someone who loves binge-watching TV shows, IPTV USA FHD offers the ultimate streaming experience.

IPTV USA FHD provides access to both live TV and a vast library of on-demand shows, offering flexibility and convenience for viewers. It is known for its reliable service, diverse content selection, and compatibility with multiple devices, making it a popular choice for cord-cutters in the U.S.

Benefits of IPTVUSAFHD

IPTV offers a flexible, feature-rich, and cost-effective viewing experience tailored for modern digital lifestyles.

On-Demand Viewing: Watch shows, movies, or sports anytime without rigid schedules.

Wide Content Variety: Access international, local, and niche channels.

High-Quality Streaming: Enjoy HD or 4K resolution with smooth playback.

Multi-Device Compatibility: Stream on TVs, smartphones, tablets, and more.

Cost-Effective: More affordable than traditional cable or satellite.

Interactive Features: Pause, rewind, and fast-forward live TV.

Global Access: Watch content from anywhere with an internet connection.

Features:

4k Streaming: Support for multiple streams on different devices.

Affordable Plans: Competitive pricing compared to cable and satellite services.

User-Friendly Interface: Easy navigation and search options.

24/7 Customer Support: Reliable assistance for technical or subscription issues.

50,000+ Live TV channels

65,000+ Movies & TV series

Updated Content Library

Easy-to-use Electronic Program Guide (EPG)

Real User Reviews: IPTVUSAFHD users appreciate its reliability, easy setup, and high-quality streaming. Many have switched from traditional cable, attracted by its affordability and extensive content selection. Subscribers highlight the smooth performance, vast channel lineup, and premium experience that surpasses expectations. The service's user-friendly interface and minimal buffering make it a favorite among IPTV enthusiasts. Whether for live TV or on-demand content, IPTVUSAFHD continues to impress users seeking a cost-effective and high-quality streaming solution.

Why Choose IPTV USA FHD?

If you're new to IPTV, you may be wondering why IPTV USA FHD is considered one of the best IPTV services. Here’s why:

1. Stunning High Definition (HD) Quality

IPTV USA FHD delivers 10,000 live channels in Full HD (1080p) and even 4K resolution. This ensures a sharp, crystal-clear picture for movies, live TV, and sports events. With high-quality streams, you can enjoy the best viewing experience possible, without the blurriness often associated with older cable or satellite services.

2. Extensive Channel Lineup

One of the standout features of IPTV USA FHD is its massive selection of channels. From mainstream TV networks to niche channels, IPTV USA FHD has something for everyone. You can access a wide range of live TV channels, including:

News channels (CNN, BBC, FOX, etc.)

Sports channels (ESPN, NBC Sports, beIN Sports)

Entertainment channels (HBO, Showtime, AMC)

Music and lifestyle channels (MTV, Discovery, TLC)

International channels (channels from Europe, Asia, Latin America)

This selection allows users to access a broad array of content from all over the world.

3. On-Demand Content

Another advantage of IPTV is the ability to watch your favorite shows and movies whenever you want. IPTV USA FHD offers an extensive on-demand library where you can choose from thousands of movies, TV shows, documentaries, and more. This means that you no longer need to plan your day around a TV schedule—simply pick what you want to watch and stream it instantly.

4. No Hidden Fees or Contracts

Unlike traditional cable providers, IPTV services like IPTV USA FHD do not require long-term contracts. You can subscribe on a month-to-month basis without being locked into a contract, and there are no hidden fees. If you're not satisfied, you can cancel your subscription at any time without penalties.

5. Multi-Device Streaming

IPTV USA FHD works on a variety of devices. You don’t need to be tied to a single television to enjoy IPTV; you can watch on your smartphone, tablet, laptop, PC, smart TV, or set-top box. This flexibility means that you can enjoy live TV or on-demand content from anywhere in your home—or even on the go!

Challenges and Considerations

Despite its many benefits, IPTV faces several challenges:

Network Dependency – IPTV performance relies on internet speed and stability, which can be a limitation in areas with poor connectivity.

Piracy Concerns – Unauthorized IPTV services and content piracy raise legal and ethical issues for content creators and distributors.

Regulatory Issues – As IPTV expands, governments and regulatory bodies are working to establish policies ensuring fair competition and consumer protection.

Bandwidth Consumption – High-definition and 4K streaming require substantial bandwidth, making high-speed internet plans essential for optimal performance.
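To put the bandwidth point in numbers, the short Python sketch below converts a stream bitrate into data used per hour and estimates how many simultaneous streams fit on a connection. The bitrates (5 Mbps for HD, 25 Mbps for 4K), the 100 Mbps connection, and the 80% headroom factor are illustrative assumptions, not figures from any provider; actual bitrates vary by service and codec.

```python
def gb_per_hour(bitrate_mbps: float) -> float:
    """Data used by one hour of streaming at the given bitrate.

    megabits per second * 3600 seconds = megabits per hour
    / 8 = megabytes, / 1000 = gigabytes (decimal units).
    """
    return bitrate_mbps * 3600 / 8 / 1000


def max_streams(link_mbps: float, bitrate_mbps: float, headroom: float = 0.8) -> int:
    """How many streams fit if only `headroom` of the link is usable for video."""
    return int((link_mbps * headroom) // bitrate_mbps)


# Assumed example bitrates, for illustration only.
for label, mbps in [("HD", 5.0), ("4K", 25.0)]:
    print(f"{label}: {gb_per_hour(mbps):.2f} GB per hour, "
          f"{max_streams(100.0, mbps)} streams on a 100 Mbps link")
```

The same arithmetic applies to data caps: divide the monthly allowance in GB by the GB-per-hour figure to get the approximate hours of viewing it covers.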

Subscription Plans & Pricing:

Basic Plan: $16.99

Standard Plan: $28.99

Premium Plan: $35.99

Elite Plan: $59.99

Elite Plan: $70.99 (Best Value)

Selecting the right IPTV subscription: always check reviews and try the service’s free trial before committing to a long-term plan.

ArisIPTV: A Top Choice for VOD

Aris IPTV is a highly rated IPTV service provider known for its extensive content library, high-quality streaming, and excellent customer support. It offers a diverse selection of live TV channels, movies, and sports networks.

ArisIPTV is an excellent choice for expats and those seeking a diverse range of global content. With its extensive international channel selection, it offers a broader viewing experience, making it ideal for users wanting access to worldwide entertainment.

Features:

30,000+ channels, including international networks

No Contracts, No Hidden Fees

Global Channel Coverage

4K and HD streaming

Fast Activation & Easy Setup

Sports and PPV channels

24/7 Customer Support

Extensive VOD Library

Massive Channel Selection

Subscription Plans & Pricing:

Monthly Plan: £11.99

3-Month Plan: £19.99

6-Month Plan: £30.99

12-Month Plan: £45.99

Annual Plan: £59.99

Pros:

Great for international TV lovers

Smooth, high-quality streams

Extensive content library with a wide range of channels and VOD options.

High-quality streaming with minimal buffering or freezing issues.

User-friendly interface compatible with various devices.

7-day money-back guarantee, allowing users to try the service risk-free.

Cons:

Occasional buffering

Apollo Group TV

Apollo Group TV is a premium IPTV service offering over 1,000 high-definition live TV channels along with an extensive Video-On-Demand (VOD) library. Known for its reliability and seamless streaming, it is compatible with multiple devices, including Android, iOS, Windows, Mac, and FireStick.

Features

Over 1,000 HD live channels

Extensive VOD library with movies and TV shows

Compatible with Android, iOS, Windows, Mac, and FireStick

High-definition streaming with minimal buffering

User-friendly interface for easy navigation

Reliable customer support

Pros

High-quality streaming with HD channels

Well-organized VOD section

Supports multiple devices

Good customer support

Cons

Expensive compared to other IPTV services

Limited live TV channel selection

Subscription Plan

Starts at $24.99 per month

Necro IPTV – Premium Catch-Up Streaming

Necro IPTV stands out with its 7-day catch-up feature, allowing users to rewind content at their convenience. With over 2,000 live TV channels and full EPG access, it provides a smooth streaming experience. The service is compatible with Fire TV, Apple TV, and most smart devices, making it an attractive choice for IPTV users.

Features

2,000+ live TV channels

7-day catch-up feature

Full EPG access

Compatible with Fire TV, Apple TV, and smart devices

High-definition streaming

Pros

Offers catch-up TV feature

Smooth HD streaming

Wide device compatibility

Cons

Slightly more expensive than some other services

Some channels may buffer during peak hours

Subscription Plans

💰 Starts at $11.99 per month

Area 51 IPTV

Area 51 IPTV provides 3,000+ live channels and a 14,000-movie VOD library. It's known for affordability and compatibility across various devices.

Features

3,000+ live TV channels

14,000+ movies on VOD

Pros

Affordable pricing

Large movie collection

Cons

Limited live sports coverage

Subscription Plans

💰 $10 per month

Legal vs. Unverified IPTV Providers: How to Stay Safe

IPTV providers can be categorized into legal and unverified services. Understanding the difference is key to avoiding potential risks.

How to Identify Legal IPTV Services:

✅ Available in Official App Stores – Legal IPTV apps can be found in trusted app stores like Amazon App Store, Google Play Store, and Apple Store. These stores don’t feature illegal services.

✅ Public Domain Content – Legal services often provide publicly available content, ensuring you stay within the bounds of the law.

How to Stay Safe:

❌ Avoid Unverified Providers – If the IPTV provider is not available in official app stores, it could be illegal.

✅ Use an IPTV VPN – A VPN like ExpressVPN can secure your connection, protect your privacy, and help you stream safely.

Legal IPTV Services

Legal IPTV services are easy to identify using a simple trick:

✅ Available in Official App Stores – If the IPTV app is listed in Amazon App Store, Google Play Store, or Apple Store, it complies with legal guidelines, ensuring a safe streaming experience.

✅ Public Domain Content – These services typically offer content available in the public domain or licensed for distribution, keeping you within the law.

To stay on the safe side, stick to these official IPTV services and consider using an IPTV VPN, like ExpressVPN, to secure your connection and protect your privacy while streaming.

Unverified IPTV Services

Unverified IPTV services may not be available in official app stores but tend to attract users due to lower costs and extensive content libraries. However, they pose risks:

❌ Illegal Content – They may stream content without proper licensing, which could be illegal.

❌ Security Risks – These services may not provide the same level of security or customer support as legal providers.

❌ Sudden Shutdowns – Unverified services can disappear without warning, leaving users without access.

Why IPTV Beats Traditional TV: More Channels, Less Cost, Better Experience!

IPTV delivers a superior viewing experience with greater flexibility, affordability, and advanced features. Unlike traditional cable or satellite TV, IPTV streams content over the internet, allowing users to enjoy live sports, TV shows, and on-demand movies anytime, anywhere.

With key features like time-shifting, catch-up TV, and Video on Demand (VOD), IPTV gives you full control over your schedule. It’s compatible with smart TVs, smartphones, tablets, and laptops, making it a highly versatile option. Plus, IPTV services are often more budget-friendly than traditional TV packages, offering a vast content library and a modern, hassle-free streaming experience.

Legal IPTV Services: How to Identify Them

A simple way to verify legal IPTV services is by checking official app stores like the Amazon App Store, Google Play Store, or Apple Store. These platforms only list apps that comply with legal guidelines. If an IPTV provider isn’t available there, it may be operating illegally. To stay safe, use official services or public domain content. Additionally, a VPN enhances security and protects your data while streaming.

Key Factors to Consider When Choosing an IPTV Service

When shopping for an IPTV service, keep these factors in mind:

Price and Payment Options: Some services accept only cryptocurrency, which may not be ideal for everyone.

Live TV Channel Support: Ensure the service offers the channels you need.

Number of Connections: Check how many devices can be connected per plan.

VPN Compatibility: Use a VPN with unverified services for privacy.

External IPTV Player Compatibility: Confirm it works with your preferred player.

Customer Support: Choose providers with reliable, responsive support.

EPG Support: An Electronic Program Guide helps with browsing and scheduling.

Premium Sports: Ensure access to sports channels if needed.

For unverified IPTV, use a disposable email and secure payment methods for privacy. Legal services don’t require these precautions. Opt for higher-tier plans with VOD, 24/7 support, and EPG features for the best experience.

FAQ

What is the best IPTV service in 2025?

IPTV USA FHD ranks as the best IPTV provider.

Can I use IPTV services on any device?

Yes, including Android, iOS, Windows, Smart TVs, and Firestick.

How do I subscribe to IPTV USA FHD?

You can subscribe by visiting their official website, choosing a plan, and following the setup instructions provided.

Can I enjoy a free trial for IPTV services?

Yes, you can enjoy a free trial with IPTV USA FHD.

What channels does HD IPTV include?

HD IPTV includes channels like sports, news, movies, entertainment, and international options, varying by provider.

Final Thoughts

Honest user reviews play a vital role in choosing the best IPTV subscription in 2025. Whether you prioritize premium content, reliability, or affordability, each IPTV provider offers something unique. IPTV USA FHD stands out for its blend of quality streaming, excellent customer support, and affordable pricing, making it an ideal choice for those seeking a reliable IPTV service. However, depending on your preferences (sports, international channels, or movie streaming), you may find that other services better suit your specific needs.

0 notes

Text

Something noteworthy about H.265 and many other codecs is that while they do reduce file size, they never get so complex that I've had something like buffering because my hardware needed a second to decode the video. That makes me wonder whether this is something computer scientists have to account for when designing these codecs, and what could be achieved if we allowed some, I guess, "local buffering" just to see how small we can get a video. Of course I understand it isn't sensible for most cases, but I'm just curious, and I'm not knowledgeable enough in this area to answer my own question.
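To put a rough number on that "local buffering" idea, here is a minimal back-of-the-envelope sketch in Python. It is a toy model, not how any real codec or player works: it assumes the decoder runs at a constant rate, and the function name and parameters are made up for illustration. It estimates how long playback would have to wait before starting so that a decoder slower than real time never stalls mid-video.

```python
def min_startup_delay(playback_fps: float, decode_fps: float, duration_s: float) -> float:
    """Smallest start-up delay (seconds) so every frame is decoded before it is due.

    Toy model (an assumption): the decoder produces frames at a constant
    decode_fps, and the player shows them at a constant playback_fps.
    """
    total_frames = round(playback_fps * duration_s)
    if total_frames <= 0:
        return 0.0
    # Frame i (0-based) finishes decoding at (i + 1) / decode_fps
    # and is due on screen at delay + i / playback_fps.
    # The required delay is linear in i, so the worst case is either
    # the first frame or the last frame.
    first_frame = 1 / decode_fps
    last_frame = total_frames / decode_fps - (total_frames - 1) / playback_fps
    return max(first_frame, last_frame)


# A one-minute 30 fps clip on hardware that can only decode 20 frames per second
# would need roughly half a minute of pre-decoding under this model.
print(min_startup_delay(playback_fps=30, decode_fps=20, duration_s=60))
```

Under this model, a decoder that is only a little slower than real time already forces a wait that grows with the length of the video, which hints at why decode speed gets treated as a hard constraint.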

0 notes

Text

From a certain point of view, Apple's recent launch of the 2024 Mac Mini seemed like something of a challenge to rival manufacturers, one that almost seems to say "this is how you do compact premium computing hardware." Of course Apple isn't the first company to do compact computing hardware (and it certainly won't be the last), but as far as the product segment goes, there aren't a lot of products from the usual mainstream brands.

What is it Exactly?

With that in mind, Microsoft's new "Windows 365 Link" (that's quite a name) has all the hallmark traits of a compact computer, such as a rather portable design, all the essential ports and connectivity options, and of course support for a variety of hardware peripherals. Unlike the Mac Mini, however, Microsoft says that the 365 Link is more of a cloud-based computing solution for business and enterprise users.

As per its name, the 365 Link is designed to connect to Windows 365 online, allowing businesses to set up "hot desks" where employees log in with their details, from anywhere at any time. It's a cloud-based approach, as we mentioned earlier. As such, it's not exactly something you'd get for more "mainstream" uses such as gaming and content creation. There is a bit of computing power, although it's mostly used for video decoding and encoding for video calls.

Hardware and Software

The 365 Link can support up to two 4K monitors (with HDMI and DisplayPort connections), and users can go online via the built-in gigabit Ethernet port, or wirelessly with Wi-Fi 6E. For external hardware and peripherals, Microsoft has included four USB ports consisting of three USB-A 3.2 and a single USB-C 3.2 port. For audio, there are options for wired audio with a 3.5mm headphone jack, as well as Bluetooth 5.3.

As for its design, the 365 Link relies on passive cooling, so there are no fans inside; the chassis is made from a combination of materials including recycled aluminium.

Because this is a device meant for business and enterprise solutions, the 365 Link's operating system is mostly locked down. This means that there are no locally installed apps or user accounts and no locally stored data and files, leaving everything to the cloud. Microsoft wasn't kidding when it said that this was an online-only device, and it has explicitly designed the 365 Link to work exactly as such.

This now brings us to security: the computer comes with support for user authentication with Microsoft Entra ID, the Microsoft Authenticator app, QR code-based passkeys and even FIDO USB security keys. There's no need for passwords, which reduces the likelihood of the device being compromised.

Pricing and Availability

The Windows 365 Link will be available in preview by December 15th, 2024, with wider availability planned for the first half of 2025. It will initially launch in the US, Canada, UK, Australia, New Zealand, and Japan in April 2025. Priced at $350, the Windows 365 Link will require a Windows 365 Enterprise, Frontline, or Business subscription.

1 note

Text

What is NDI? A Beginner’s Guide to NDI 6.2, IP Video, and the Gear You Need to Get Started

New Post has been published on https://thedigitalinsider.com/what-is-ndi-a-beginners-guide-to-ndi-6-2-ip-video-and-the-gear-you-need-to-get-started/

If you’re new to video production or looking to upgrade your workflow, you’ve probably heard about NDI (Network Device Interface). This powerful technology enables high-quality, low-latency video transmission over standard Ethernet networks—no need for expensive cabling or complex infrastructure. In this beginner’s guide, we’ll explain what NDI is, how it works, what’s new in NDI 6.2, and the essential gear you need to start using NDI in your live streaming, broadcast, or video conferencing setup.

🔍 What is NDI?

NDI is a video-over-IP protocol developed by Vizrt that allows you to send and receive broadcast-quality video and audio over a local area network (LAN) using standard Cat6 Ethernet cables. The same cable can also carry control signals and, with Power over Ethernet (PoE) equipment, power, making it ideal for streamlining production setups. NDI is widely used across industries including:

House of Worship

Live Events & Broadcast

Business & Corporate Communications

Education & eLearning

Sports & eSports

Government & Healthcare

Radio & DJ Production

🚀 What’s New in NDI 6.2?

The latest version of NDI introduces several powerful upgrades designed for modern IP workflows:

Discovery Tool: Monitor all NDI devices—senders and receivers—in one place

HDCP Support: Transmit HDCP-compliant content securely

HDR & 10-Bit Color: Deliver richer color and better contrast

Embedded WAN Connectivity: Some cameras now have NDI Bridge built in for transmission over wide area networks (WAN)

NDI HX3 Support: Enables HEVC encoding, lower bandwidth usage, and Wi-Fi-compatible PTZ cameras
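To see why lower bandwidth usage matters on a shared network, here is a rough Python sketch for estimating how many video streams fit on one Ethernet link. The function name, the headroom default, and the per-stream bitrates in the usage lines are illustrative placeholders, not official NDI figures; substitute the bitrates your own cameras and encoders report.

```python
def streams_that_fit(link_mbps: float, stream_mbps: float, headroom: float = 0.25) -> int:
    """Estimate how many equal-bitrate streams fit on one network link.

    headroom is the fraction of the link kept free for control traffic,
    audio, and bursts (the 25% default is an assumption, not an NDI rule).
    """
    if stream_mbps <= 0:
        raise ValueError("stream_mbps must be positive")
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in the range [0, 1)")
    usable_mbps = link_mbps * (1 - headroom)
    return int(usable_mbps // stream_mbps)


# Placeholder bitrates for illustration only; check your own gear.
print(streams_that_fit(link_mbps=1000, stream_mbps=150))  # higher-bitrate streams
print(streams_that_fit(link_mbps=1000, stream_mbps=50))   # lower-bitrate streams
```

The point of the sketch is the ratio: on the same gigabit link, cutting the per-stream bitrate to a third roughly triples the number of cameras you can carry.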

🛠️ Essential NDI Tools and Equipment

✅ NDI Cameras (PTZ and Box Cameras)

NDI-enabled PTZ cameras simplify your setup with just one cable for power, video, and control. Some even support wireless NDI for ultimate flexibility.

✅ NDI Network Switches

Pair your system with NETGEAR M4250 Pro AV switches, which are pre-configured for NDI and Dante workflows. These switches act as the central hub for all your AV-over-IP devices and include support for advanced protocols like ST 2110.

✅ NDI Tools Suite

NDI offers a free suite of software tools that includes screen capture, video monitors, and virtual input—making it easy to get started with multiple devices and sources.

✅ NDI-Compatible Encoders/Decoders

These help bridge non-NDI gear into your NDI workflow, expanding your production capabilities.

💡 Why Choose NDI for Your Production?

Simplified cabling with one cable for everything

Route video to multiple destinations without complex wiring

Easily scale your workflow from small productions to professional broadcasts

Great for remote production with built-in WAN features

Ideal for hybrid events and multi-room streaming setups

🎬 Applications of NDI

NDI is transforming how video is produced and delivered in:

Worship services

Remote learning and classroom streaming

Live sports and eSports events

Corporate webinars and town halls

Government briefings and telehealth

Live radio and music performances