#gpt store update

Text

youtube

Welcome to the GPT Store, where innovation meets imagination! Nestled within the bustling heart of the digital marketplace, the GPT Store stands as a beacon of cutting-edge technology and limitless creativity. As you step into our virtual emporium, prepare to embark on an extraordinary journey through the realms of artificial intelligence and linguistic prowess.

With its sleek interface and intuitive design, the GPT Store offers a seamless shopping experience like no other. Whether you're a seasoned developer, a curious enthusiast, or an avid explorer of the digital frontier, there's something here for everyone. From advanced AI models to bespoke language tools, our vast collection caters to a diverse array of needs and interests.

At the heart of the GPT Store lies our flagship product: the renowned GPT series. Powered by state-of-the-art deep learning algorithms and trained on vast swathes of data, these AI models represent the pinnacle of natural language processing. Whether you seek assistance with writing, coding, or creative endeavors, our GPT models are your ultimate companions in unlocking new possibilities.

But the GPT Store is more than just a repository of AI models. It's a vibrant marketplace where ideas flourish and innovation thrives. Browse through our curated selection of plugins, extensions, and add-ons, each crafted to enhance your AI experience. From language translation tools to sentiment analysis plugins, these resources are designed to augment your productivity and unleash your creativity.

So, whether you're a seasoned AI aficionado or a curious newcomer, come discover the wonders of the GPT Store. Unleash your imagination, explore the limitless potential of artificial intelligence, and embark on a journey that transcends the boundaries of what's possible. Welcome to the future of innovation.

GPT Store: How To Use and Make Money Online 2024

#gpt store#chatgpt store#gpt store explained#gpt store openai#chatgpt#chatgpt store money#chat gpt#make money online#how to make money with gpt store#how to make money from gpt store#gpt store ai#open ai store#gpt store guide#gpt store make money#gpt store launch#gpt store review#gpt store exposed#gpt store exposed review#gpt store update#openai gpt store#make money online 2024#how to make money online 2024#limitless tech 888#gpt app store#Youtube

0 notes

Text

youtube

For those seeking personalized solutions, the GPT Store offers bespoke services tailored to your specific requirements. Whether you need custom model training, API integration, or specialized consultancy, our team of experts is here to help you realize your vision. With their unparalleled expertise and dedication to excellence, they'll guide you every step of the way, ensuring that your AI journey is both rewarding and transformative.

GPT Store: How To Use and Make Money Online 2024

0 notes

Text

History and Basics of Language Models: How Transformers Changed AI Forever - and Led to Neuro-sama

I have seen a lot of misunderstandings and myths about Neuro-sama's language model. I have decided to write a short post, going into the history of and current state of large language models and providing some explanation about how they work, and how Neuro-sama works! To begin, let's start with some history.

Before the beginning

Before the language models we are used to today, models like RNNs (Recurrent Neural Networks) and LSTMs (Long Short-Term Memory networks) were used for natural language processing, but they had a lot of limitations. Both of these architectures process words sequentially, meaning they read text one word at a time in order. This made them struggle with long sentences: they could all but forget the beginning by the time they reached the end.

Another major limitation was computational efficiency. Since RNNs and LSTMs process text one step at a time, they can't take full advantage of modern parallel computing hardware like GPUs. All these fundamental limitations mean that these models could never be nearly as smart as today's models.

The beginning of modern language models

In 2017, a paper titled "Attention is All You Need" introduced the transformer architecture. It was received positively for its innovation, but no one truly knew just how important it would become. This paper is what made modern language models possible.

The transformer's key innovation was building the whole model around the attention mechanism, which allows the model to focus on the most relevant parts of a text. Instead of processing words sequentially, transformers process all words at once, capturing relationships between words no matter how far apart they are in the text. This change made models faster to train and better at understanding context.
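To make the idea of attention a bit more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is only an illustration: the three "word" vectors are made-up numbers, and a real transformer would first pass each word through learned projections to get separate query, key, and value vectors, which this sketch skips.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Compare this word's query with every word's key, near or far.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Blend the value vectors according to those weights.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs

# Three toy "word" vectors (hypothetical numbers, not learned embeddings).
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = attention(words, words, words)
for vector in result:
    print([round(x, 3) for x in vector])
```

Every word's output is computed from all the other words in one pass, with no dependence on the order they are processed in, which is why this kind of computation can run in parallel.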

The full potential of transformers became clearer over the next few years as researchers scaled them up.

The Scale of Modern Language Models

A major factor in an LLM's performance is the number of parameters - the numerical weights, loosely comparable to the strengths of the connections between neurons, that store what the model has learned. Generally, the more parameters, the more powerful the model can be. The first GPT (generative pre-trained transformer) model, GPT-1, was released in 2018 and had 117 million parameters. It was small and not very capable - but a good proof of concept. GPT-2 (2019) had 1.5 billion parameters - which was a huge leap in quality, but it was still really dumb compared to the models we are used to today. GPT-3 (2020) had 175 billion parameters, and it was really the first model that felt actually kinda smart. Training it is estimated to have cost around 4.6 million dollars in compute expenses alone.

Recently, models have become more efficient: smaller models can achieve similar performance to bigger models from the past. This efficiency means that smarter and smarter models can run on consumer hardware. However, training costs still remain high.

How Are Language Models Trained?

Pre-training: The model is trained on a massive dataset to predict the next word in a sentence. Even training relatively small models requires hundreds of gigabytes of training data and a lot of computational resources, which can cost millions of dollars.

Fine-tuning: After pre-training, the model can be customized for specific tasks, like answering questions, writing code, casual conversation, etc. Fine-tuning can also help improve the model's alignment with certain values or update its knowledge of specific domains. Fine-tuning requires far less data and computational power compared to pre-training.
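The pre-training objective described above, predicting the next word, can be shown with a deliberately tiny toy. The sketch below just counts which word follows which in a made-up sentence; real language models learn this with neural networks over huge datasets rather than with counts, so treat it as an analogy and not as how any actual model is built. The function names and the example corpus are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# A made-up training "dataset" of ten words.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram_model(corpus)
print(predict_next(model, "the"))  # prints "cat" ("cat" follows "the" twice, "mat" once)
```

Fine-tuning, in this analogy, would be like continuing the counting on a small extra text so that the predictions shift toward it.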

The Cost of Training Large Language Models

Training a large language model is extremely expensive. While advancements in efficiency have made it possible to get better performance with smaller models, pre-training still requires vast amounts of computational power and high-quality data. A large-scale model can cost millions of dollars to train, even if it has far fewer parameters than past models like GPT-3.

The Rise of Open-Source Language Models

Many language models are closed-source: you can't download or run them locally. For example, the models behind ChatGPT and the Claude models from Anthropic are all closed-source.

However, some companies release a number of their models as open-source, allowing anyone to download, run, and modify them.

While the larger models cannot be run on consumer hardware, smaller open-source models can be used on high-end consumer PCs.
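A rough way to see why model size decides what fits on consumer hardware is to estimate the memory needed just to hold the weights. The sketch below assumes 2 bytes per parameter (16-bit precision) and ignores everything else a running model needs, so the real requirement is higher; the parameter counts are the GPT figures quoted earlier in this post.

```python
def model_memory_gb(num_parameters, bytes_per_parameter=2):
    """Approximate memory needed to hold the weights, in gigabytes (10^9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9

# Parameter counts quoted earlier in the post.
models = {"GPT-1": 117e6, "GPT-2": 1.5e9, "GPT-3": 175e9}

for name, params in models.items():
    # Assumption: 16-bit weights, i.e. 2 bytes per parameter.
    print(f"{name}: about {model_memory_gb(params):.1f} GB for the weights")
```

At these assumptions a GPT-3-sized model needs about 350 GB for its weights alone, far more than any consumer graphics card offers, while a model with a few billion parameters needs only a few gigabytes.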

An advantage of smaller models is that they have lower latency, meaning they can generate responses much faster. They are not as powerful as the largest closed-source models, but their accessibility and speed make them highly useful for some applications.

So What is Neuro-sama?

Basically no details are shared about the model by Vedal, and I will only share what can be confidently concluded and only information that wouldn't reveal any sort of "trade secret". What can be known is that Neuro-sama would not exist without open-source large language models. Vedal can't train a model from scratch, but what Vedal can do - and can be confidently assumed he did do - is fine-tune an open-source model. Fine-tuning can change the way the model acts and can add some new knowledge - however, the core intelligence of Neuro-sama comes from the base model she was built on.

Since huge models can't be run on consumer hardware and would be prohibitively expensive to run through an API, and because low latency is a must, we can also say that Neuro-sama is a smaller model - which has the disadvantage of being less powerful and having more limitations, but has the advantage of low latency. Latency and cost are always going to pose some pretty strict limitations, but because LLMs just keep getting more efficient and better hardware is becoming more available, Neuro can be expected to become smarter and smarter in the future.

To end, I have to at least mention that Neuro-sama is more than just her language model, though we only talked about the language model in this post. She can be looked at as a system of different parts. Her TTS, her VTuber avatar, her vision model, her long-term memory, even her Minecraft AI, and so on, all come together to make Neuro-sama.

Wrapping up - Thanks for Reading!

This post was meant to provide a brief introduction to language models, covering some history and explaining how Neuro-sama can work. Of course, this post is just scratching the surface, but hopefully it gave you a clearer understanding of how language models function and of their history!

6 notes

Text

dearest tumblr,

life is changing. lots has shifted in the past month or two, but nonetheless i am loving the partying and bonding with my baddies as usual. still living it up and dancing to charli xcx at least once a week. i’ve been meeting many new and wonderful people and have thoroughly enjoyed laughing and smiling with all of them, as well as plotting on possible future hoes. autumn is fast approaching but i am accepting this new season with open arms, the leaves are beginning to fall and i am excited to see what the next 3 months have in store for me. last week i asked chat gpt why my ex doesn’t want me back. clearly not my strongest moment. anyways, i just wanted to update all my dear tumblr followers (it’s just chloe) and let you all know that through all my hardships i am doing well and still in fact bumpin that. and will continue to do so. much love

2 notes

Text

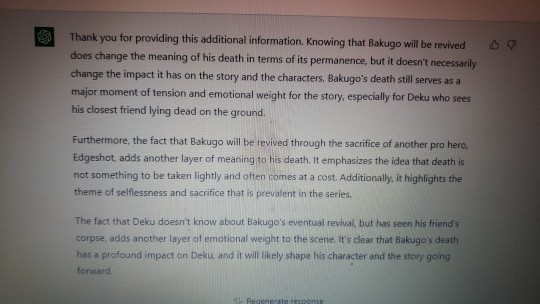

CHAT GPT analyzes Bakudeku & Bakugo's development

So I've been fooling around with ChatGPT, and decided to update the AI with the recent events that have happened in the manga.

(Manga spoilers until ch.362! BEWARE!)

Let me clarify that ChatGPT's training data only goes up to September 2021. I will only show the juiciest replies I got from the AI, but let me clarify that I successfully brought the AI up to date on what had happened in the manga until now (as of 5th April 2023).

(ChatGPT keeps the information given in the chat in its context, so it can refer back to that info later on during the same conversation if needed. It doesn't permanently learn from it.)

Let's start!

The following reply came when I asked ChatGPT if it was fair for fans to like Bakugo given his development, and if the AI agreed with me that Bakugo was well written:

(About the fairness of liking Bakugo: everyone is entitled to their own opinions, ok? But this question rooted from a discussion I had with a few IRL's who told me I was crazy for liking Bakugo. So yeah, personal reasons, but still, I wanted to show you the answer!)

I really liked the response lol, it's pretty well thought out and comprehensive. It's scary how much an AI can learn and give you these kinds of responses in such a short amount of time.

Another one: I asked the AI what they thought about the likelihood of bkdk ending up canon.

(I'm not surprised by the answer. Dunno what y'all think about this but sounds pretty fair to me. However, that's just my opinion. I'd love bkdk to end up canon, though. Crossing my fingers.)

But then I asked the AI what they thought that bkdk still needed in order to become canon:

That was all. I don't agree much. But well, that's my opinion.

Next one: I asked the AI about the meaning of Bakugo's death relating it to bakugo's and deku's relationship. NOTE THAT UNTIL THEN, I DID NOT TELL THE AI ABOUT BAKUGO'S EVENTUAL REVIVAL AND DEKU'S REACTION TO IT! (Y'know, the "control your heart" part)

Then, I added the context that the AI lacked, and their response was...

Even though it's obvious, this response hyped me up for Bakugo's revival and him stepping up to assist Deku during the fight against Shigaraki! Or maybe in another moment, whenever Hori decides it's right to fit Bakugo into the narrative.

That's all! It's been pretty fun to chat with the AI, lol. Took me around 1h30, but totally worth it.

Note that the AI, although offering objective analyses of the context I gave them, provided responses that, most of the time, I agreed with. Which led me to think that, if I'm a very subjective bkdk shipper, I could turn this AI into a bkdk shipper too, lol, if I had more time and willingness to convince them. My take here is that antis could get responses matching their opinions as well. So yeah, please, do not take these answers as pronouncements from the Holy Bible, but just some screenshots from a crazy fan fooling around with an AI app because she's bored and doesn't wanna do UNI work. Anyway, hope that you enjoyed reading these answers. If you want me to ask the AI about more specific things, tell me! Might do another post like this one in the future!

#my hero academia#bnha#boku no hero academia#izuku midoriya#mha#bkdk#bakugou katsuki#bakudeku#bakugo katsuki#bakugou#chatgpt#katsudeku

9 notes

Text

This is exactly why Elon was kicked off rally 5.5

he is really not smart at all. A single baby wogue video on LLM used in the file explorer of gnome has more usable code than his entire ChatGPT project did but bc ppl don’t understand it, they flock to elons side, and regardless, Apple GPT wouldn’t be used to make instructables for ppl to cheat on apps or crypto projects, it would be used for real world interactions instead, like the real gnome project, humanity - Apple GPT is Fuck your trolly billionaire wannabe status LLM set kinda tech bc they have the power to actually implement it system wide instead of just as a single app that can’t interface with anything. This is the problem with normie billionaires, they don’t understand how to make things that are actually useable day to day. Too many of them think they can just get rich selling some crypto and super fast thing, but in the end, the people that take their time to work on their ideas actually end up making better things, and he purposefully took off tweeted from an iPhone or android just to piss off ppl that don’t wanna work at Tesla bc the pay is low and FSD upgrades are BS. Since when has Apple charged for an iOS update.. never. All their OSes are free to use if you can attain the hardware. It is up to users to decide whether they want to brew their own software experience or use something that people that understand bits down to the networking protocol bytes make. I don’t support any of Elon’s companies or projects and neither should anybody. They are all pre-made things others worked on that he bought and imposed restrictions and upgrade services on bc instead of innovating further, for example like creating a bunch of Tesla hotels with solar roofs for ppl to visit or Tesla locations to airbnb at, using his boring tunnels, with his own “teslabnb”, he wouldn’t need to charge for FSD, and if those teslabnbs had starlink Wi-Fi that ppl paid to use, then twitter wouldn’t need 8 dollars a month and Tesla employees wouldn’t have low wages. 
His ego is literally trolling users. People are not so smart to see it but if they did, they would say Elon is full of shit bc all the examples I just gave took me two seconds to come up with and billionaires practically live at hotels, so he probably already thought of it but would never do that just to keep that carrot on a stick metaphor in our minds and so he can keep pretending he is anti-1984, keeps feeding us that lefty righty political bs but never really implements the solutions above to stop it. It is billionaires that created 1984 and they know a way out, but they are full of shit. I can’t even long post this on Twitter bc he wants 8 dollars to do so, and this dude has been riding on apple’s platform success since twitter launched on iPhone and it should just be deleted off the App Store for not even using usps logic.

1 note

Link

Just a week ago, on January 20, 2025, Chinese AI startup DeepSeek unleashed a new, open-source AI model called R1 that might have initially been mistaken for one of the ever-growing masses of nearly interchangeable rivals that have sprung up since OpenAI debuted ChatGPT (powered by its own GPT-3.5 model, initially) more than two years ago.

But that quickly proved unfounded, as DeepSeek’s mobile app has in that short time rocketed up the charts of the Apple App Store in the U.S. to dethrone ChatGPT for the number one spot and caused a massive market correction as investors dumped stock in formerly hot computer chip makers such as Nvidia, whose graphics processing units (GPUs) have been in high demand for use in massive superclusters to train new AI models and serve them up to customers on an ongoing basis (a modality known as “inference”).

Venture capitalist Marc Andreessen, echoing sentiments of other tech workers, wrote on the social network X last night: “Deepseek R1 is AI’s Sputnik moment,” comparing it to the pivotal October 1957 launch of the first artificial satellite in history, Sputnik 1, by the Soviet Union, which sparked the “space race” between that country and the U.S. to dominate space travel. Sputnik’s launch galvanized the U.S. to invest heavily in research and development of spacecraft and rocketry.

While it’s not a perfect analogy (heavy investment was not needed to create DeepSeek-R1, quite the contrary; more on this below), it does seem to signify a major turning point in the global AI marketplace, as for the first time, an AI product from China has become the most popular in the world.

But before we jump on the DeepSeek hype train, let’s take a step back and examine the reality.

As someone who has extensively used OpenAI’s ChatGPT, on both web and mobile platforms, and followed AI advancements closely, I believe that while DeepSeek-R1’s achievements are noteworthy, it’s not time to dismiss ChatGPT or U.S. AI investments just yet. And please note, I am not being paid by OpenAI to say this: I’ve never taken money from the company and don’t plan on it.

What DeepSeek-R1 does well

DeepSeek-R1 is part of a new generation of large “reasoning” models that do more than answer user queries: They reflect on their own analysis while they are producing a response, attempting to catch errors before serving them to the user. And DeepSeek-R1 matches or surpasses OpenAI’s own reasoning model, o1, released in September 2024 initially only for ChatGPT Plus and Pro subscription users, in several areas.

For instance, on the MATH-500 benchmark, which assesses high-school-level mathematical problem-solving, DeepSeek-R1 achieved a 97.3% accuracy rate, slightly outperforming OpenAI o1’s 96.4%. In terms of coding capabilities, DeepSeek-R1 scored 49.2% on the SWE-bench Verified benchmark, edging out OpenAI o1’s 48.9%.

Moreover, financially, DeepSeek-R1 offers substantial cost savings. The model was developed with an investment of under $6 million, a fraction of the expenditure (estimated to be multiple billions) reportedly associated with training models like OpenAI’s o1. DeepSeek was essentially forced to become more efficient with scarce and older GPUs thanks to a U.S. export restriction on the tech’s sales to China. Additionally, DeepSeek provides API access at $0.14 per million tokens, significantly undercutting OpenAI’s rate of $7.50 per million tokens.

DeepSeek-R1’s massive efficiency gain, cost savings and equivalent performance to the top U.S. AI model have caused Silicon Valley and the wider business community to freak out over what appears to be a complete upending of the AI market, geopolitics, and known economics of AI model training.

While DeepSeek’s gains are revolutionary, the pendulum is swinging too far toward it right now

There’s no denying that DeepSeek-R1’s cost-effectiveness is a significant achievement. But let’s not forget that DeepSeek itself owes much of its success to U.S. AI innovations, going back to the initial 2017 transformer architecture developed by Google AI researchers (which started the whole LLM craze).

DeepSeek-R1 was trained on synthetic data questions and answers and specifically, according to the paper released by its researchers, on the supervised fine-tuned “dataset of DeepSeek-V3,” the company’s previous (non-reasoning) model, which was found to have many indicators of being generated with OpenAI’s GPT-4o model itself! It seems pretty clear-cut to say that without GPT-4o to provide this data, and without OpenAI’s own release of the first commercial reasoning model o1 back in September 2024, which created the category, DeepSeek-R1 would almost certainly not exist.

Furthermore, OpenAI’s success required vast amounts of GPU resources, paving the way for breakthroughs that DeepSeek has undoubtedly benefited from. The current investor panic about U.S. chip and AI companies feels premature and overblown.

ChatGPT’s vision and image generation capabilities are still hugely important and valuable in workplace and personal settings; DeepSeek-R1 doesn’t have any yet

While DeepSeek-R1 has impressed with its visible “chain of thought” reasoning (a kind of stream of consciousness wherein the model displays text as it analyzes the user’s prompt and seeks to answer it) and efficiency in text- and math-based workflows, it lacks several features that make ChatGPT a more robust and versatile tool today.

No image generation or vision capabilities

The official DeepSeek-R1 website and mobile app do let users upload photos and file attachments. But they can only extract text from them using optical character recognition (OCR), one of the earliest computing technologies (dating back to 1959).

This pales in comparison to ChatGPT’s vision capabilities. A user can upload images without any text whatsoever and have ChatGPT analyze the image, describe it, or provide further information based on what it sees and the user’s text prompts.

ChatGPT allows users to upload photos and can analyze visual material and provide detailed insights or actionable advice. For example, when I needed guidance on repairing my bike or maintaining my air conditioning unit, ChatGPT’s ability to process images proved invaluable. DeepSeek-R1 simply cannot do this yet. See below for a visual comparison:

No image generation

The absence of generative image capabilities is another major limitation. As someone who frequently generates AI images using ChatGPT (such as for this article’s own header) powered by OpenAI’s underlying DALL·E 3 model, the ability to create detailed and stylistic images with ChatGPT is a game-changer.

This feature is essential for many creative and professional workflows, and DeepSeek has yet to demonstrate comparable functionality, though today the company did release an open-source vision model, Janus Pro, which it says outperforms DALL·E 3, Stable Diffusion 3 and other industry-leading image generation models on third-party benchmarks.

No voice mode

DeepSeek-R1 also lacks a voice interaction mode, a feature that has become increasingly important for accessibility and convenience. ChatGPT’s voice mode allows for natural, conversational interactions, making it a superior choice for hands-free use or for users with different accessibility needs.

Be excited for DeepSeek’s future potential, but also be wary of its challenges

Yes, DeepSeek-R1 can, and likely will, add voice and vision capabilities in the future. But doing so is no small feat. Integrating image generation, vision analysis, and voice capabilities requires substantial development resources and, ironically, many of the same high-performance GPUs that investors are now undervaluing. Deploying these features effectively and in a user-friendly way is another challenge entirely.

DeepSeek-R1’s accomplishments are impressive and signal a promising shift in the global AI landscape. However, it’s crucial to keep the excitement in check. For now, ChatGPT remains the better-rounded and more capable product, offering a suite of features that DeepSeek simply cannot match. Let’s appreciate the advancements while recognizing the limitations and the continued importance of U.S. AI innovation and investment.
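To put the API prices quoted in the article ($0.14 versus $7.50 per million tokens) into perspective, the small sketch below works out what a given number of tokens would cost at each rate. The monthly workload of 50 million tokens is a hypothetical figure chosen only for illustration, and real pricing usually distinguishes input from output tokens, which this simplification ignores.

```python
def api_cost_usd(tokens, price_per_million_usd):
    """Cost in US dollars for `tokens` tokens at a flat per-million-token price."""
    return tokens / 1_000_000 * price_per_million_usd

# Prices per million tokens as quoted in the article.
DEEPSEEK_PRICE = 0.14
OPENAI_PRICE = 7.50

# Hypothetical workload, not a figure from the article.
monthly_tokens = 50_000_000

deepseek_cost = api_cost_usd(monthly_tokens, DEEPSEEK_PRICE)
openai_cost = api_cost_usd(monthly_tokens, OPENAI_PRICE)

print(f"DeepSeek: ${deepseek_cost:.2f}")
print(f"OpenAI:   ${openai_cost:.2f}")
print(f"Price ratio: about {OPENAI_PRICE / DEEPSEEK_PRICE:.0f}x")
```

At the quoted rates the difference is a factor of roughly 54, whatever the workload size.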

0 notes

Text

Google Unveils Groundbreaking AI Architecture: Titans

Google has made a revolutionary leap in artificial intelligence with the launch of its new architecture, Titans, designed to mimic the human brain’s cognitive processes. This cutting-edge technology opens new frontiers in machine learning, greatly enhancing AI efficiency, especially in complex tasks that require vast amounts of data and contextual analysis.

Key Features of the Titans Architecture

The core strength of Titans lies in its unique design, which is inspired by the cognitive processes of the human brain. The model features three levels of memory: short-term, long-term, and persistent memory. This approach allows the system not only to process current data but also to adapt based on accumulated experience. Here’s how it works:

Short-term memory is used to store information needed for immediate tasks. Once the task is completed, unnecessary data is discarded, freeing up resources.

Long-term memory continually learns from new data, updating itself to improve future analysis.

Persistent memory serves as a fixed repository of task-level knowledge that the model retains regardless of the input.

As a result, Titans not only processes massive amounts of data but also manages its memory efficiently, making the learning process more advanced and organized.

AI Learning Efficiency: A New Era of Performance

One of the standout features of Titans is the integration of parallel data processing. This technology enables the model to handle multiple tasks simultaneously, significantly speeding up learning and improving the accuracy of results. In practical terms, this replaces the cumbersome approaches of the past, saving both time and resources.

Additionally, Titans has already proven its superiority in handling complex computational tasks. The model excels in genomics analysis, time-series forecasting, and other computation-heavy processes, making it invaluable for scientific and medical research. For instance, Titans can accelerate drug development or simulate complex economic scenarios with high precision.

Contextual Analysis: An Impressive Leap in Capability

One of the most remarkable aspects of Titans is its ability to process context on an unprecedented scale. To put this into perspective: GPT-4 launched with context windows of 8,192 and 32,768 tokens (later extended to 128,000 tokens in GPT-4 Turbo), while Titans is reported to handle a staggering 2 million tokens. This allows the model to analyze information equivalent to roughly 25 books at once and make informed decisions based on this vast amount of data.

This level of contextual processing opens doors to solving problems that were previously out of reach for existing models—from highly accurate forecasting to automated academic writing.
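The "25 books" comparison for a 2-million-token context can be checked with some back-of-the-envelope arithmetic. The sketch below relies on two assumptions that are not from the post: about 0.75 English words per token, and about 60,000 words per book. Different assumptions would give a different number of books.

```python
WORDS_PER_TOKEN = 0.75   # assumption: rough average for English text
WORDS_PER_BOOK = 60_000  # assumption: a fairly short book

def books_in_context(context_tokens):
    """Estimate how many books' worth of text fits in a context window."""
    return context_tokens * WORDS_PER_TOKEN / WORDS_PER_BOOK

print(books_in_context(2_000_000))  # prints 25.0 under the assumptions above
```

Under these assumptions 2 million tokens is about 1.5 million words, which matches the post's figure of 25 books.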

Predictions and Impact on the Industry

The release of Google’s Titans will undoubtedly have far-reaching implications for the AI industry. Firstly, it pushes the boundaries of what AI can achieve, sparking competition among leading machine learning companies. Titans could become the benchmark for complex analytical processes, drastically reducing data processing times.

Secondly, with improved accuracy in predictions and faster data processing, Titans could bring radical changes to industries such as healthcare, science, finance, and even environmental monitoring. For example, Titans could more swiftly calculate optimal paths for reducing carbon emissions or predict economic crises with greater precision.

Conclusion

Google’s Titans architecture represents a monumental advancement in artificial intelligence, poised to reshape how we approach complex problems and industries reliant on data-intensive tasks. With its groundbreaking memory structure, parallel processing capabilities, and vast contextual analysis potential, Titans promises to revolutionize AI, pushing the boundaries of innovation and opening up new possibilities for the future.

The introduction of Titans symbolizes a groundbreaking advancement in artificial intelligence. By combining the power of automated algorithms with the cognitive flexibility inherent to the human brain, Titans ushers in a new era for AI technology. Its large contextual window, efficient memory management, and parallel data processing capabilities make this architecture one of the most significant milestones in AI history. Titans not only opens up vast potential for existing technologies but also unlocks possibilities for innovations in areas that previously seemed unattainable.

Our website: https://goldriders-robot.com/

Follow us on Facebook: https://www.facebook.com/groups/goldridersfb

Follow us on Pinterest: https://ru.pinterest.com/goldridersrobot/

Follow us on X: https://x.com/goldridersx?s=21

Follow us on Telegram: https://t.me/+QtMMmyyVhJExZThi

0 notes

Text

How To Add Shopify Product Description Read More Tag

A detailed product description increases your chances of making a sale. Yet long descriptions aren’t great for user experience. Build a standout online store with our White Label Website Design, designed to elevate your brand’s eCommerce experience.

To tackle this, you can add a “read more” tag to your product description. This article shows you how to add a read more button in Shopify.

Why Add Shopify Product Description Read More Button?

A read more button is a clickable element on a webpage that hides part of a text. When the reader clicks the button, it reveals the entire text.

Adding a Shopify read more button to your product page has many benefits. Apart from enhancing search engine optimization and boosting sales, there are other advantages.

These are a few reasons why it’s a good idea:

Improves user experience: Customers can skim the product information without information overload. If they want to know more, they can click the button to read the full description.

Keeps your page clean: A Shopify product description read more button helps keep your product pages organized and reduces clutter. This makes it easier for visitors to find what they’re looking for.

Enhances mobile experience: Mobile users prefer concise information. A read more button makes it easier for them to navigate your site on their phones or tablets.

Improves load times: Shorter visible text can help your page load faster. This in turn can lead to a better shopping experience and better conversion rates.

Method To Add Shopify Product Description Read More Button

To add a Shopify read more button to your product descriptions, follow the method below. This method involves creating a new snippet and updating your product page template.

Step 1: Go to your store’s theme files and edit

In your Shopify admin, navigate to Online Store and then choose Themes.

Click the three-dot icon beside the Customize button and select Edit Code from the options.


Step 2: Create a new snippet

Navigate to the Snippets folder and click Add a new snippet.

Name the new snippet meetanshi-product-description-read-more.

Step 3: Open the main-product.liquid file

In the Sections folder, find and open the main-product.liquid file. You can do this by typing product in the search bar.

Step 4: Replace existing code

Locate the following code block in the main-product.liquid file:

[Screenshot: the existing code block to replace]

Then replace it with:

{% render 'meetanshi-product-description-read-more' %}

Step 5: Save your changes

Click the Save button to save the changes to the main-product.liquid file.



Bonus Tips to Improve Your Shopify Store UX

A great user experience can increase customers’ interaction with your site and the likelihood of them making a purchase. Additionally, it helps build trust and loyalty with your customers. Create a unique eCommerce experience with our Shopify Maintenance Service, tailored to enhance your brand's success.

Besides adding a Shopify product description read more tag, there are other things you can do to improve your store’s UX. Feel free to put these simple but effective tips in place:

Compress images, use fast hosting, and limit the use of heavy scripts and plugins to make your page load faster.

Ensure your Shopify store is responsive on all devices. Also, test it on different mobile devices to confirm it works as expected.

Write clear and detailed descriptions for each product. Use bullet points for readability.

Use high-quality product images. Include different angles and enable a zoom feature.

Reduce the number of checkout steps customers need to go through. Also, offer different payment methods and guest checkout.

Add a live chat feature to assist your customers in real time. Try to answer questions and resolve their issues promptly.

Implementing a Shopify product description read more button, along with the bonus tips, can significantly improve UX by keeping your store clean and informative. With the steps outlined above, you can add this feature to your site.


#White Label Website Design#shopify experts india#best wordpress development company in india#hire shopify experts

0 notes

Link

Filip Radwanski Rocket | Getty Images

Apple released software updates for iPhone, iPad and Mac on Wednesday, including the long-awaited ChatGPT integration with Siri.

The ChatGPT integration is triggered when users ask Siri complex questions. When Siri is asked a question that Apple's software identifies as better suited for ChatGPT, it asks the user for permission to access the OpenAI service. Apple says it has built privacy protections into the feature, and that OpenAI will not store requests. The integration uses OpenAI's GPT-4o model.

Apple users do not need an OpenAI account to use the ChatGPT integration, but users can pay for ChatGPT upgrades through Apple. Users can also access ChatGPT through a series of text menus.

The iOS 18.2 release is a critical milestone for Apple, which is relying on Apple Intelligence to lead its marketing campaign for the iPhone 16 range. Apple Intelligence is the company's suite of artificial intelligence features. Apple first announced ChatGPT integration in June.

Apple released the first part of Apple Intelligence in October. These features included writing tools that can correct or rewrite text, a new Siri design that lights up the entire phone screen, and notification summaries.

The company says it will release another update to Apple Intelligence next year, including significant improvements to Siri such as the ability to perform actions within apps.

Many investors believe that as Apple Intelligence adds features, it will boost iPhone sales, drive the upgrade cycle and establish Apple as a consumer-oriented AI leader.

The integration is also a big win for OpenAI, putting its flagship product in front of millions of iPhone users. Neither Apple nor OpenAI disclosed the financial terms of the deal.

Users need an iPhone 15 Pro, iPhone 15 Pro Max, or any iPhone 16 model to install and use Apple Intelligence, although the ChatGPT integration primarily uses cloud servers.

iPhone owners can turn on software updates in the General section of the Settings app.

After upgrading to the latest Apple software, users will be prompted to set up Apple Intelligence. Their phones will need to download large files, including Apple's AI models, which the service needs to run.

Wednesday's updates also include Apple's image creation app, called Playground, which can create images based on people or prompts, and Image Wand, a feature that lets users remove objects or blemishes from photos.

0 notes

Link

Jaque Silva | Nurphoto | Getty Images

Apple released updates for its iPhone, iPad and Mac software on Wednesday that include a long-awaited ChatGPT integration with Siri.

The ChatGPT integration triggers when users ask Siri complicated questions. When Siri is asked a question that Apple's software identifies as better suited for ChatGPT, it asks the user permission to access the OpenAI service. Apple says that it has built privacy protections into the feature, and that OpenAI won't store requests. The integration uses OpenAI's GPT-4o model.

Apple users don't need an OpenAI account to make use of the ChatGPT integration, but users can pay for upgraded versions of ChatGPT through Apple. Users can also access ChatGPT through some text menus.

The iOS 18.2 release is a critical milestone for Apple, which is relying on Apple Intelligence to lead the iPhone 16 lineup's marketing campaign. Apple Intelligence is the company's suite of artificial intelligence features. Apple first announced the ChatGPT integration back in June.

Apple released the first part of Apple Intelligence in October. Those features included writing tools that can proofread or rewrite text, a new design for Siri that makes the whole phone screen glow, and notification summaries.

The company says it will release another update to Apple Intelligence next year that includes significant improvements to Siri, including the ability for it to take actions inside of apps.

Many investors believe that as Apple Intelligence adds features, it will boost iPhone sales, drive an upgrade cycle and potentially cement Apple as a leader in consumer-oriented AI.

The integration is also a major victory for OpenAI as it puts its most important product in front of millions of iPhone users. Neither Apple nor OpenAI has disclosed financial terms for the arrangement.

Users need an iPhone 15 Pro, iPhone 15 Pro Max or any iPhone 16 model to install and use Apple Intelligence, even though the ChatGPT integration primarily uses cloud servers.

Owners of iPhones can turn on software updates in the General section of the Settings app.

After updating to the latest Apple software, users who have not yet activated Apple Intelligence can sign up for a waitlist inside the Settings app. Users typically receive access to the software within the same day. Their phones will need to download large files, including Apple's AI models, that the service needs to operate.

The Wednesday updates also include Apple's image generating app, called Playground, which can create images based on people or prompts, and Image Wand, a feature that allows users to remove objects or flaws from photographs.

0 notes

Text

Design Patterns in Python for AI and LLM Engineers: A Practical Guide

New Post has been published on https://thedigitalinsider.com/design-patterns-in-python-for-ai-and-llm-engineers-a-practical-guide/


As AI engineers, crafting clean, efficient, and maintainable code is critical, especially when building complex systems.

Design patterns are reusable solutions to common problems in software design. For AI and large language model (LLM) engineers, design patterns help build robust, scalable, and maintainable systems that handle complex workflows efficiently. This article dives into design patterns in Python, focusing on their relevance in AI and LLM-based systems. I’ll explain each pattern with practical AI use cases and Python code examples.

Let’s explore some key design patterns that are particularly useful in AI and machine learning contexts, along with Python examples.

Why Design Patterns Matter for AI Engineers

AI systems often involve:

Complex object creation (e.g., loading models, data preprocessing pipelines).

Managing interactions between components (e.g., model inference, real-time updates).

Handling scalability, maintainability, and flexibility for changing requirements.

Design patterns address these challenges, providing a clear structure and reducing ad-hoc fixes. They fall into three main categories:

Creational Patterns: Focus on object creation. (Singleton, Factory, Builder)

Structural Patterns: Organize the relationships between objects. (Adapter, Decorator)

Behavioral Patterns: Manage communication between objects. (Strategy, Observer)

1. Singleton Pattern

The Singleton Pattern ensures a class has only one instance and provides a global access point to that instance. This is especially valuable in AI workflows where shared resources—like configuration settings, logging systems, or model instances—must be consistently managed without redundancy.

When to Use

Managing global configurations (e.g., model hyperparameters).

Sharing resources across multiple threads or processes (e.g., GPU memory).

Ensuring consistent access to a single inference engine or database connection.

Implementation

Here’s how to implement a Singleton pattern in Python to manage configurations for an AI model:

    class ModelConfig:
        """A Singleton class for managing global model configurations."""

        _instance = None  # Class variable to store the singleton instance

        def __new__(cls, *args, **kwargs):
            if cls._instance is None:
                # Create a new instance if none exists
                cls._instance = super().__new__(cls)
                cls._instance.settings = {}  # Initialize configuration dictionary
            return cls._instance

        def set(self, key, value):
            """Set a configuration key-value pair."""
            self.settings[key] = value

        def get(self, key):
            """Get a configuration value by key."""
            return self.settings.get(key)


    # Usage Example
    config1 = ModelConfig()
    config1.set("model_name", "GPT-4")
    config1.set("batch_size", 32)

    # Accessing the same instance
    config2 = ModelConfig()
    print(config2.get("model_name"))  # Output: GPT-4
    print(config2.get("batch_size"))  # Output: 32
    print(config1 is config2)  # Output: True (both are the same instance)

Explanation

The __new__ Method: This ensures that only one instance of the class is created. If an instance already exists, it returns the existing one.

Shared State: Both config1 and config2 point to the same instance, making all configurations globally accessible and consistent.

AI Use Case: Use this pattern to manage global settings like paths to datasets, logging configurations, or environment variables.
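The `ModelConfig` example is not protected against two threads creating the instance at the same moment. One possible hardening, sketched here under the assumption that a simple lock is acceptable, uses double-checked locking. The class name `ThreadSafeConfig` and the helper `grab_instance` are hypothetical and not part of the original article.

```python
import threading


class ThreadSafeConfig:
    """Hypothetical thread-safe variant of the ModelConfig singleton."""

    _instance = None
    _lock = threading.Lock()  # Guards first-time creation only

    def __new__(cls):
        # Double-checked locking: skip the lock once the instance exists
        if cls._instance is None:
            with cls._lock:
                if cls._instance is None:
                    instance = super().__new__(cls)
                    instance.settings = {}
                    cls._instance = instance
        return cls._instance


def grab_instance(results):
    """Thread target: request the singleton and record what came back."""
    results.append(ThreadSafeConfig())


instances = []
threads = [
    threading.Thread(target=grab_instance, args=(instances,)) for _ in range(8)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

# Every thread should have received the very same object
all_same = all(obj is instances[0] for obj in instances)
print(all_same)
```

Note that a singleton like this is shared only within one Python process. Separate processes each get their own instance, so cross-process sharing needs a different mechanism.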

2. Factory Pattern

The Factory Pattern provides a way to delegate the creation of objects to subclasses or dedicated factory methods. In AI systems, this pattern is ideal for creating different types of models, data loaders, or pipelines dynamically based on context.

When to Use

Dynamically creating models based on user input or task requirements.

Managing complex object creation logic (e.g., multi-step preprocessing pipelines).

Decoupling object instantiation from the rest of the system to improve flexibility.

Implementation

Let’s build a Factory for creating models for different AI tasks, like text classification, summarization, and translation:

    class BaseModel:
        """Abstract base class for AI models."""

        def predict(self, data):
            raise NotImplementedError("Subclasses must implement the `predict` method")


    class TextClassificationModel(BaseModel):
        def predict(self, data):
            return f"Classifying text: {data}"


    class SummarizationModel(BaseModel):
        def predict(self, data):
            return f"Summarizing text: {data}"


    class TranslationModel(BaseModel):
        def predict(self, data):
            return f"Translating text: {data}"


    class ModelFactory:
        """Factory class to create AI models dynamically."""

        @staticmethod
        def create_model(task_type):
            """Factory method to create models based on the task type."""
            task_mapping = {
                "classification": TextClassificationModel,
                "summarization": SummarizationModel,
                "translation": TranslationModel,
            }
            model_class = task_mapping.get(task_type)
            if not model_class:
                raise ValueError(f"Unknown task type: {task_type}")
            return model_class()


    # Usage Example
    task = "classification"
    model = ModelFactory.create_model(task)
    print(model.predict("AI will transform the world!"))
    # Output: Classifying text: AI will transform the world!

Explanation

Abstract Base Class: The BaseModel class defines the interface (predict) that all subclasses must implement, ensuring consistency.

Factory Logic: The ModelFactory dynamically selects the appropriate class based on the task type and creates an instance.

Extensibility: Adding a new model type is straightforward—just implement a new subclass and update the factory’s task_mapping.

AI Use Case

Imagine you are designing a system that selects a different model (e.g., BERT, GPT, or T5) based on the task. The Factory pattern makes it easy to extend the system as new models become available: only the factory changes, while the code that calls it stays the same.
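One way to avoid editing a central mapping each time a model is added is a registry filled in by a class decorator. The sketch below is a variation on the Factory idea, not the article's implementation. `MODEL_REGISTRY`, `register_model`, and the two model classes are hypothetical names chosen for illustration.

```python
MODEL_REGISTRY = {}  # Hypothetical module-level registry: task name -> class


def register_model(task_type):
    """Class decorator that records a model class under a task name."""
    def decorator(cls):
        MODEL_REGISTRY[task_type] = cls
        return cls
    return decorator


@register_model("classification")
class TextClassifier:
    def predict(self, data):
        return f"Classifying text: {data}"


@register_model("summarization")
class Summarizer:
    def predict(self, data):
        return f"Summarizing text: {data}"


def create_model(task_type):
    """Look up the registered class for a task and instantiate it."""
    try:
        model_class = MODEL_REGISTRY[task_type]
    except KeyError:
        raise ValueError(f"Unknown task type: {task_type}") from None
    return model_class()


summary = create_model("summarization").predict("a long report")
print(summary)  # Summarizing text: a long report
```

With this layout, supporting a new task means adding one decorated class. Neither `create_model` nor its callers need to change.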

3. Builder Pattern

The Builder Pattern separates the construction of a complex object from its representation. It is useful when an object involves multiple steps to initialize or configure.

When to Use

Building multi-step pipelines (e.g., data preprocessing).

Managing configurations for experiments or model training.

Creating objects that require a lot of parameters, ensuring readability and maintainability.

Implementation

Here’s how to use the Builder pattern to create a data preprocessing pipeline:

    class DataPipeline:
        """Builder class for constructing a data preprocessing pipeline."""

        def __init__(self):
            self.steps = []

        def add_step(self, step_function):
            """Add a preprocessing step to the pipeline."""
            self.steps.append(step_function)
            return self  # Return self to enable method chaining

        def run(self, data):
            """Execute all steps in the pipeline."""
            for step in self.steps:
                data = step(data)
            return data


    # Usage Example
    pipeline = DataPipeline()
    pipeline.add_step(lambda x: x.strip())  # Step 1: Strip whitespace
    pipeline.add_step(lambda x: x.lower())  # Step 2: Convert to lowercase
    pipeline.add_step(lambda x: x.replace(".", ""))  # Step 3: Remove periods

    processed_data = pipeline.run(" Hello World. ")
    print(processed_data)  # Output: hello world

Explanation

Chained Methods: The add_step method allows chaining for an intuitive and compact syntax when defining pipelines.

Step-by-Step Execution: The pipeline processes data by running it through each step in sequence.

AI Use Case: Use the Builder pattern to create complex, reusable data preprocessing pipelines or model training setups.
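The "model training setups" use case can be sketched with a builder that collects options step by step and produces an immutable configuration object at the end. All names here (`TrainingConfig`, `TrainingConfigBuilder`, the default values) are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Immutable product created by the builder (hypothetical fields)."""
    model_name: str
    learning_rate: float
    batch_size: int
    epochs: int


class TrainingConfigBuilder:
    """Collects settings via chained calls, then validates and builds."""

    def __init__(self, model_name):
        self._model_name = model_name
        self._learning_rate = 1e-3  # Assumed defaults for the sketch
        self._batch_size = 32
        self._epochs = 1

    def with_learning_rate(self, value):
        self._learning_rate = value
        return self

    def with_batch_size(self, value):
        self._batch_size = value
        return self

    def with_epochs(self, value):
        self._epochs = value
        return self

    def build(self):
        # Validate once, at the point where the product is created
        if self._learning_rate <= 0:
            raise ValueError("learning_rate must be positive")
        return TrainingConfig(
            self._model_name, self._learning_rate, self._batch_size, self._epochs
        )


config = (
    TrainingConfigBuilder("demo-model")
    .with_batch_size(64)
    .with_epochs(3)
    .build()
)
print(config)
```

Separating the builder from the frozen `TrainingConfig` keeps half-configured objects out of the rest of the code, since only `build()` hands out a usable result.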

4. Strategy Pattern

The Strategy Pattern defines a family of interchangeable algorithms, encapsulating each one and allowing the behavior to change dynamically at runtime. This is especially useful in AI systems where the same process (e.g., inference or data processing) might require different approaches depending on the context.

When to Use

Switching between different inference strategies (e.g., batch processing vs. streaming).

Applying different data processing techniques dynamically.

Choosing resource management strategies based on available infrastructure.

Implementation

Let’s use the Strategy Pattern to implement two different inference strategies for an AI model: batch inference and streaming inference.

    class InferenceStrategy:
        """Abstract base class for inference strategies."""

        def infer(self, model, data):
            raise NotImplementedError("Subclasses must implement the `infer` method")


    class BatchInference(InferenceStrategy):
        """Strategy for batch inference."""

        def infer(self, model, data):
            print("Performing batch inference...")
            return [model.predict(item) for item in data]


    class StreamInference(InferenceStrategy):
        """Strategy for streaming inference."""

        def infer(self, model, data):
            print("Performing streaming inference...")
            results = []
            for item in data:
                results.append(model.predict(item))
            return results


    class InferenceContext:
        """Context class to switch between inference strategies dynamically."""

        def __init__(self, strategy: InferenceStrategy):
            self.strategy = strategy

        def set_strategy(self, strategy: InferenceStrategy):
            """Change the inference strategy dynamically."""
            self.strategy = strategy

        def infer(self, model, data):
            """Delegate inference to the selected strategy."""
            return self.strategy.infer(model, data)


    # Mock Model Class
    class MockModel:
        def predict(self, input_data):
            return f"Predicted: {input_data}"


    # Usage Example
    model = MockModel()
    data = ["sample1", "sample2", "sample3"]

    context = InferenceContext(BatchInference())
    print(context.infer(model, data))
    # Output:
    # Performing batch inference...
    # ['Predicted: sample1', 'Predicted: sample2', 'Predicted: sample3']

    # Switch to streaming inference
    context.set_strategy(StreamInference())
    print(context.infer(model, data))
    # Output:
    # Performing streaming inference...
    # ['Predicted: sample1', 'Predicted: sample2', 'Predicted: sample3']

Explanation

Abstract Strategy Class: The InferenceStrategy defines the interface that all strategies must follow.

Concrete Strategies: Each strategy (e.g., BatchInference, StreamInference) implements the logic specific to that approach.

Dynamic Switching: The InferenceContext allows switching strategies at runtime, offering flexibility for different use cases.

AI Use Case

Switch between batch inference for offline processing and streaming inference for real-time applications.

Dynamically adjust data augmentation or preprocessing techniques based on the task or input format.
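In Python, strategies can also be plain functions, which makes the difference between offline and real-time processing easy to show. In this sketch the streaming strategy is a generator that yields each result as soon as it is ready, while the batch strategy returns a full list. The function names and the `realtime` flag are assumptions for illustration.

```python
def batch_infer(predict, items):
    """Process everything up front and return a list."""
    return [predict(item) for item in items]


def stream_infer(predict, items):
    """Yield each prediction as soon as it is ready (lazy generator)."""
    for item in items:
        yield predict(item)


def choose_strategy(realtime):
    """Pick a strategy function at runtime based on a simple flag."""
    return stream_infer if realtime else batch_infer


def fake_predict(text):
    """Stand-in for a real model call."""
    return f"Predicted: {text}"


offline = choose_strategy(realtime=False)(fake_predict, ["a", "b"])
live = choose_strategy(realtime=True)(fake_predict, ["a", "b"])

first_live = next(live)  # Only the first item has been processed so far
print(offline)     # ['Predicted: a', 'Predicted: b']
print(first_live)  # Predicted: a
```

Because both strategies accept the same arguments, the caller can swap one for the other without changing anything except how it consumes the result.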

5. Observer Pattern

The Observer Pattern establishes a one-to-many relationship between objects. When one object (the subject) changes state, all its dependents (observers) are automatically notified. This is particularly useful in AI systems for real-time monitoring, event handling, or data synchronization.

When to Use

Monitoring metrics like accuracy or loss during model training.

Real-time updates for dashboards or logs.

Managing dependencies between components in complex workflows.

Implementation

Let’s use the Observer Pattern to monitor the performance of an AI model in real-time.

    class Subject:
        """Base class for subjects being observed."""

        def __init__(self):
            self._observers = []

        def attach(self, observer):
            """Attach an observer to the subject."""
            self._observers.append(observer)

        def detach(self, observer):
            """Detach an observer from the subject."""
            self._observers.remove(observer)

        def notify(self, data):
            """Notify all observers of a change in state."""
            for observer in self._observers:
                observer.update(data)


    class ModelMonitor(Subject):
        """Subject that monitors model performance metrics."""

        def update_metrics(self, metric_name, value):
            """Simulate updating a performance metric and notifying observers."""
            print(f"Updated {metric_name}: {value}")
            self.notify({metric_name: value})


    class Observer:
        """Base class for observers."""

        def update(self, data):
            raise NotImplementedError("Subclasses must implement the `update` method")


    class LoggerObserver(Observer):
        """Observer to log metrics."""

        def update(self, data):
            print(f"Logging metric: {data}")


    class AlertObserver(Observer):
        """Observer to raise alerts if thresholds are breached."""

        def __init__(self, threshold):
            self.threshold = threshold

        def update(self, data):
            for metric, value in data.items():
                if value > self.threshold:
                    print(f"ALERT: {metric} exceeded threshold with value {value}")


    # Usage Example
    monitor = ModelMonitor()
    logger = LoggerObserver()
    alert = AlertObserver(threshold=90)

    monitor.attach(logger)
    monitor.attach(alert)

    # Simulate metric updates
    monitor.update_metrics("accuracy", 85)  # Logs the metric
    monitor.update_metrics("accuracy", 95)  # Logs and triggers alert

Explanation

Subject: Manages a list of observers and notifies them when its state changes. In this example, the ModelMonitor class tracks metrics.

Observers: Perform specific actions when notified. For instance, the LoggerObserver logs metrics, while the AlertObserver raises alerts if a threshold is breached.

Decoupled Design: Observers and subjects are loosely coupled, making the system modular and extensible.
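The loose coupling described above also means observers can be added and removed while the system runs. The sketch below uses plain callables as observers and tracks a training loss. `MetricPublisher`, the callback names, and the loss values are all made up for illustration.

```python
class MetricPublisher:
    """Hypothetical subject that accepts plain callables as observers."""

    def __init__(self):
        self._callbacks = []

    def subscribe(self, callback):
        self._callbacks.append(callback)

    def unsubscribe(self, callback):
        self._callbacks.remove(callback)

    def publish(self, name, value):
        # Iterate over a copy so a callback may unsubscribe during a publish
        for callback in list(self._callbacks):
            callback(name, value)


history = []  # Filled by the recording observer
alerts = []   # Filled by the alerting observer


def record(name, value):
    history.append((name, value))


def alert_on_high_loss(name, value):
    if name == "loss" and value > 1.0:
        alerts.append(value)


publisher = MetricPublisher()
publisher.subscribe(record)
publisher.subscribe(alert_on_high_loss)

for loss in [0.9, 1.4, 0.7]:
    publisher.publish("loss", loss)

# After detaching, the alert observer no longer sees new values
publisher.unsubscribe(alert_on_high_loss)
publisher.publish("loss", 2.0)

print(history)  # [('loss', 0.9), ('loss', 1.4), ('loss', 0.7), ('loss', 2.0)]
print(alerts)   # [1.4]
```

The publisher never needs to know what each observer does with a metric, which is what keeps logging, alerting, and dashboards independent of the training code.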

How Design Patterns Differ for AI Engineers vs. Traditional Engineers

Design patterns, while universally applicable, take on unique characteristics when implemented in AI engineering compared to traditional software engineering. The difference lies in the challenges, goals, and workflows intrinsic to AI systems, which often demand patterns to be adapted or extended beyond their conventional uses.

1. Object Creation: Static vs. Dynamic Needs

Traditional Engineering: Object creation patterns like Factory or Singleton are often used to manage configurations, database connections, or user session states. These are generally static and well-defined during system design.

AI Engineering: Object creation often involves dynamic workflows, such as:

Creating models on-the-fly based on user input or system requirements.

Loading different model configurations for tasks like translation, summarization, or classification.

Instantiating multiple data processing pipelines that vary by dataset characteristics (e.g., tabular vs. unstructured text).

Example: In AI, a Factory pattern might dynamically generate a deep learning model based on the task type and hardware constraints, whereas in traditional systems, it might simply generate a user interface component.

2. Performance Constraints

Traditional Engineering: Design patterns are typically optimized for latency and throughput in applications like web servers, database queries, or UI rendering.

AI Engineering: Performance requirements in AI extend to model inference latency, GPU/TPU utilization, and memory optimization. Patterns must accommodate:

Caching intermediate results to reduce redundant computations (Decorator or Proxy patterns).

Switching algorithms dynamically (Strategy pattern) to balance latency and accuracy based on system load or real-time constraints.
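The caching point can be illustrated with a small decorator that stores results of an expensive call, so repeated inputs are not recomputed. This is a minimal sketch: `cached`, `embed`, and the toy "embedding" are hypothetical, and the standard library's `functools.lru_cache` offers a ready-made alternative with size limits.

```python
import functools

stats = {"calls": 0}  # Counts how often the expensive function really runs


def cached(func):
    """Decorator that memoizes results keyed by positional arguments."""
    cache = {}

    @functools.wraps(func)
    def wrapper(*args):
        if args not in cache:
            cache[args] = func(*args)
        return cache[args]

    return wrapper


@cached
def embed(text):
    """Stand-in for an expensive model call (toy embedding: word lengths)."""
    stats["calls"] += 1
    return [float(len(word)) for word in text.split()]


first = embed("design patterns for AI")
second = embed("design patterns for AI")  # Served from the cache

print(first)           # [6.0, 8.0, 3.0, 2.0]
print(stats["calls"])  # 1
```

The arguments must be hashable for this to work, and the cache grows without bound, so a production version would need an eviction policy.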

3. Data-Centric Nature

Traditional Engineering: Patterns often operate on fixed input-output structures (e.g., forms, REST API responses).

AI Engineering: Patterns must handle data variability in both structure and scale, including:

Streaming data for real-time systems.

Multimodal data (e.g., text, images, videos) requiring pipelines with flexible processing steps.

Large-scale datasets that need efficient preprocessing and augmentation pipelines, often using patterns like Builder or Pipeline.

4. Experimentation vs. Stability

Traditional Engineering: Emphasis is on building stable, predictable systems where patterns ensure consistent performance and reliability.

AI Engineering: AI workflows are often experimental and involve:

Iterating on different model architectures or data preprocessing techniques.

Dynamically updating system components (e.g., retraining models, swapping algorithms).

Extending existing workflows without breaking production pipelines, often using extensible patterns like Decorator or Factory.

Example: A Factory in AI might not only instantiate a model but also attach preloaded weights, configure optimizers, and link training callbacks—all dynamically.

Best Practices for Using Design Patterns in AI Projects

Don’t Over-Engineer: Use patterns only when they clearly solve a problem or improve code organization.

Consider Scale: Choose patterns that will scale with your AI system’s growth.

Documentation: Document why you chose specific patterns and how they should be used.

Testing: Design patterns should make your code more testable, not less.

Performance: Consider the performance implications of patterns, especially in inference pipelines.

Conclusion

Design patterns are powerful tools for AI engineers, helping create maintainable and scalable systems. The key is choosing the right pattern for your specific needs and implementing it in a way that enhances rather than complicates your codebase.

Remember that patterns are guidelines, not rules. Feel free to adapt them to your specific needs while keeping the core principles intact.

#ADD#ai#AI Engineering#ai model#AI models#AI systems#ai use cases#alerts#Algorithms#API#applications#approach#Article#Artificial Intelligence#Behavior#BERT#Builder Pattern#Building#change#code#codebase#communication#concrete#construction#data#data augmentation#data processing#Database#datasets#Deep Learning

0 notes

Text

Product Description

📱 Our Biggest Display (Yet): The CrossBeats Nexus comes with a stunning 2.01” AMOLED full touch screen with up to 700 nits of brightness. Watch incredible colors come to life on the 410 × 494 pixel display.

📱 Powered by ChatGPT: Enabled with the power of OpenAI, Nexus gives you the ability to do more on your wrist. Powered by an advanced voice recognition system, ChatGPT is one voice command away. (Install the ChatGPT app on your phone and update the smartwatch firmware for proper use.)

⌚ Advanced Dynamic Island: Nexus’s advanced Dynamic Island makes navigating through notifications easier. Whether you’re on the go or scrolling through multiple modes, Dynamic Island ensures that you never miss a notification.

📑 Ebook Access: The Nexus smart watch for men also comes enabled with the country’s first e-book reader. With an increased storage capacity, you can store up to 10 books of your choice.

🔋 Long-Lasting Battery: Equipped with a powerful 250 mAh battery, the Nexus can last up to 6 days on a single charge. In standby mode, it can go up to 30 days, so you can pick up where you left off.

📌 Navigate New Roads: This digital watch comes with a built-in altimeter, compass, and barometer, so you can navigate new terrain without any hassle. The in-app GPS navigation feature allows you to use your smartwatch while you navigate the roads ahead.

📞 Smart Communication: With ClearComm Technology and the latest 5.3 chipset, this Bluetooth calling smart watch makes calls easier. Enabled with voice assistant access, the Nexus is compatible with both Google Assistant and Siri.

🏋️ 100-plus sport modes: Navigate through multiple workouts like cricket, football, basketball, running and more. You can sync your workouts with the MActive Pro app to track the same progress on your phone.

❤️ AI Health Tracking: Nexus acts as a fitness band that tracks metrics such as sleep, BP, heart rate, SpO2, and more with its inbuilt bio sensor. You can even set reminders to drink water, count the calories you’ve burnt, and track steps as well.

0 notes

Text

ChatGPT ohne Login nutzen: So geht’s

Es gibt viele Gründe, warum Menschen nach einer Möglichkeit suchen, ChatGPT ohne Anmeldung zu nutzen. Vielleicht möchtest du einfach schnell eine Antwort auf eine Frage, ohne dich mit Registrierungen aufzuhalten. Oder du möchtest anonym bleiben und keine persönlichen Daten preisgeben. Die gute Nachricht ist: Es gibt tatsächlich Wege, ChatGPT ohne Login zu verwenden. In diesem Artikel schauen wir uns die Optionen genauer an, erklären die Vor- und Nachteile und geben Tipps, wie du das Beste aus der Nutzung herausholst. https://www.youtube.com/watch?v=tvOjxj4GEaU

Kann man ChatGPT wirklich ohne Anmeldung nutzen?

Ja, es ist möglich, ChatGPT ohne einen Account zu verwenden – zumindest auf indirektem Weg. OpenAI selbst verlangt für die Nutzung von ChatGPT normalerweise eine Anmeldung. Es gibt jedoch Drittanbieter-Plattformen, die die Technologie von OpenAI nutzen und dir erlauben, auf ihre Dienste ohne Registrierung zuzugreifen. Oft ist das unkompliziert, bringt aber Einschränkungen mit sich.

Wo kannst du ChatGPT ohne Login ausprobieren?

Drittanbieter-Websites Eine der einfachsten Möglichkeiten sind Drittanbieter-Plattformen, die ChatGPT oder vergleichbare KI-Modelle integriert haben. Diese Seiten erlauben oft eine Nutzung ohne Anmeldung, richten sich aber meist an Nutzer*innen, die nur gelegentlich eine Antwort brauchen. - You.com/chat: Diese Suchmaschine kombiniert eine klassische Suchfunktion mit einem integrierten KI-Chat. Du kannst dort Fragen stellen und Antworten erhalten, ohne ein Konto anzulegen. Allerdings sind längere Gespräche oder fortgeschrittene Funktionen eingeschränkt. - Chatbot-Tools: Es gibt viele kleinere Websites, die dir KI-Antworten anbieten, ohne dass du dich registrieren musst. Diese Tools basieren oft auf älteren Versionen von GPT oder bieten reduzierte Funktionen. Achte darauf, dass du Drittanbietern keine sensiblen Informationen anvertraust, da du nicht immer sicher sein kannst, wie mit deinen Daten umgegangen wird. Kostenlose Testversionen Einige Plattformen bieten Testzugänge an, bei denen du ohne Anmeldung loslegen kannst. Diese Versionen sind meist zeitlich begrenzt oder erlauben nur eine bestimmte Anzahl von Anfragen. Für einen kurzen Einblick in die KI-Welt kann das aber völlig ausreichen. Apps und Browser-Add-ons Es gibt auch Anwendungen, die dir ohne Anmeldung Zugang zu KI-Funktionen bieten. Manche sind als Browser-Erweiterungen verfügbar, andere als Apps. Hier lohnt sich ein Blick in den App-Store deines Geräts oder in Erweiterungsmarktplätze von Browsern wie Chrome oder Firefox.

What are the pros and cons?

Using ChatGPT without a login has its own strengths, but also weaknesses you should keep in mind.

Pros

- Quick access: You can get started right away without registering or logging in to an account.
- Anonymity: Your identity stays protected, and you leave behind no personal data such as email addresses.
- Simplicity: No passwords, no verification, no effort. It does not get easier than that.

Cons

- Limited features: Many third-party providers offer only stripped-down versions. If you want to hold longer conversations, you will quickly run into limits.
- No saved history: Everything you ask disappears as soon as you close the page. There is no history feature and no way to find important content again later.
- Data security: When you rely on third parties, you often do not know how secure your input is. There is a risk that data is stored or reused.

Why a login can still make sense

Even though using ChatGPT without signing up sounds tempting at first, registering with OpenAI or a comparable service offers clear advantages. With an account you can, for example:

- Save conversations and return to them later
- Use advanced features, such as adapting the answers to your personal style
- Try out updates and new features, which are often available only to registered users

An OpenAI account is free and gives you an official, secure environment for trying out the AI. For anyone who wants to work with ChatGPT more often, it is the best choice.

Photo by Matheus Bertelli

Who is using ChatGPT without signing up suitable for?

Using ChatGPT without a login makes sense mainly when you only occasionally need a quick answer. Maybe you want to clear up a complex question or get some inspiration from an AI without having to create an account. For more intensive or regular conversations, whether for work, for studying, or for creative projects, there is no way around an account. It simply offers more features, more stability, and more control over your data.

Conclusion

It is possible to use ChatGPT without a login. This is especially practical for quick, one-off interactions or when you want to stay anonymous. Third-party platforms and trial versions are the obvious options here. But anyone who really wants to get the most out of the AI will sooner or later find that having their own account brings many advantages. In the end, how you use ChatGPT is up to you. Try out the different options and find out what best fits your needs. And if you do decide to register, the whole thing takes only a few minutes, which is easier than you might think.

Text

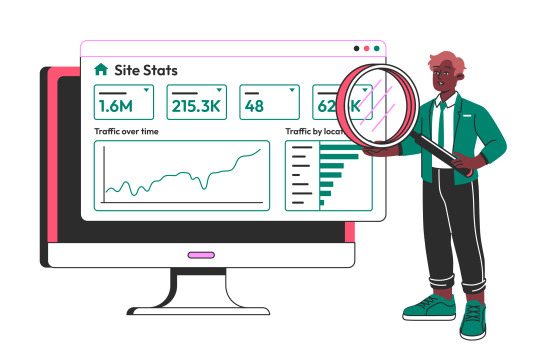

What is Generative Engine Optimization (GEO) and How It’s Changing Digital Marketing

One of the latest trends that is making waves in the industry is Generative Engine Optimization (GEO). But what exactly does it mean, and how is it changing the way businesses approach online marketing? Let’s break it down in simple terms.

What is Generative Engine Optimization (GEO)?

Generative Engine Optimization is a new strategy that combines traditional SEO with generative AI. It focuses on improving how content is created, optimized, and delivered to search engines and users. In other words, it helps websites and digital content perform better in search results by using AI tools to create high-quality, relevant, and engaging content that matches what people are actually searching for.

Let’s take a closer look at the key parts of GEO:

Generative AI: AI models such as GPT-4 can create text, and generative AI can even produce images and videos. The model learns from data and patterns, so it can write content or captions that sound human and are relevant to the topic at hand.

Search Engine Optimization (SEO): SEO is the practice of improving your website so it ranks higher in search engine results like Google. The better your SEO, the more likely it is that people will find your website when they search for related keywords.

Generative Engine Optimization: This takes SEO one step further by adding AI-generated content intended specifically to help businesses rank better on search engines and reach potential customers. Instead of creating every piece from scratch, businesses can rely on AI to produce new, optimized content at scale, which makes it easier and faster to reach more potential customers.

How GEO is Changing Digital Marketing

Faster Content Creation

One of the biggest challenges in digital marketing is producing fresh, high-quality content every day. Traditional methods make this hard: creating blog posts, social media updates, and other content takes a lot of time and effort. This is where generative AI comes in.

AI tools can quickly produce content that is relevant to a particular topic and optimize it for search engines. For example, if a business wants articles about its product, GEO tools can generate blog posts or descriptions aligned with popular searches in a few clicks. This keeps the business's website fresh with new content, which is one of the most important factors for SEO success.

Why It Works: Faster content creation means businesses can publish more without adding workload. The better the content, the more likely it is to rank well in search engines.

Example: An e-commerce store selling outdoor gear could use AI to generate product descriptions and blog posts about camping tips, all SEO-optimized to rank in search engines.

Better Personalization

GEO is not only about producing good content; it also enables businesses to craft targeted marketing communications. Generative AI can use data on customer behavior, search trends, and more to create content that speaks to a specific audience segment. This leads to more personalized experiences for those audiences and should translate into higher engagement and conversion rates.

Why It Works: Personalization is a key driver of customer satisfaction. When businesses deliver content that fits users' needs and interests, their audience builds a stronger connection with the brand, which ultimately helps sell the product.

Example: Using AI, an online clothing store can create personalized email campaigns or product recommendations for customers, improving its chances of making a sale.

Improved Search Engine Rankings

At its core, GEO is about improving SEO performance with the help of generative AI. Businesses can create many kinds of content aligned with search trends, and GEO can improve keyword targeting, readability, and user engagement. GEO tools are developed specifically to optimize content so that it works for both search engines like Google and users.

Why It Works: Content produced with GEO can rank well in search results when its quality matches users' intent. A business can optimize content for its keywords while keeping it engaging and valuable to readers.

Example: A local restaurant could use AI to create SEO-optimized blog posts or landing pages on themes such as "best vegan restaurants in [city]" or "most affordable dining in [city]". Those pages then show up in local search results, attracting more potential customers to the restaurant.
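As a rough illustration of the "[city]" placeholder idea in the example above, here is a minimal Python sketch that expands keyword templates into concrete phrases. The template strings come from the example; the function name `expand_templates` and the city names are hypothetical, and this is not how any particular GEO tool works.

```python
# Keyword templates from the restaurant example; "[city]" is the placeholder.
TEMPLATES = [
    "best vegan restaurants in [city]",
    "most affordable dining in [city]",
]


def expand_templates(templates, cities):
    """Return one phrase per (template, city) pair, grouped by template."""
    return [t.replace("[city]", city) for t in templates for city in cities]


# Hypothetical city list, used only for illustration.
phrases = expand_templates(TEMPLATES, ["Austin", "Portland"])
for phrase in phrases:
    print(phrase)
```

Each resulting phrase could then serve as the working title or target keyword for a page that is written and reviewed separately.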

Cost Efficiency and Scalability

Creating large volumes of SEO-optimized content has long been expensive, because it required many writers or content creators. GEO lets businesses use AI to scale content production without breaking the bank. Smaller businesses with minimal budgets can now produce large amounts of content in less time and compete with larger rivals.

Why It Works: AI is cost-efficient because it reduces the need for manually written content. This allows businesses to produce content at scale without sacrificing quality.

Improved User Experience

At the end of the day, for both search engines and businesses, user experience comes first. For search engines like Google, the ultimate goal is to deliver the best results for users; the better a site's content answers people's questions or provides value, the higher it will rank. Generative Engine Optimization helps businesses create relevant, engaging, and informative content that supports the user experience.

Why It Works: Content that addresses user needs and is structured to be easy to read improves the overall experience of visiting a website. Visitors are likely to stay longer and are more likely to take the desired action, whether signing up or making a purchase.

Example: A health and wellness brand can use GEO to produce well-structured, useful articles that answer the questions people most commonly ask about health.

GEO and the Future of Digital Marketing

It's only a matter of time before Generative Engine Optimization gains more capabilities, including more sophisticated tools that not only generate content but may also optimize for voice search, video, and more.

Why It Matters: Businesses that adopt GEO early gain a first-mover advantage: faster content production, higher quality, and better SEO performance. Integrating AI into digital marketing changes how businesses of any size scale their efforts and stay ahead of the digital curve.

Conclusion

GEO, or Generative Engine Optimization, combines AI with SEO to help businesses create higher-value, more relevant content that ranks well in search and improves user experience. It is changing the game and promises to attract new customers to small businesses and large companies alike by offering better, faster, and far more efficient ways to create content.

As AI technology becomes more advanced, companies using GEO will be able to take the lead in their industries, making GEO an essential strategy for the future of digital marketing. Visit Eloiacs to find out more about Digital Marketing.

Text

Microsoft Azure OpenAI Data Zones And What’s New In Azure AI

Announcing the most recent Azure AI developments and the availability of Azure OpenAI Data Zones

Microsoft Azure AI is used by more than 60,000 clients, such as AT&T, H&R Block, Volvo, Grammarly, Harvey, Leya, and others, to propel AI transformation. The increasing use of AI across sectors, by both small and large enterprises, excites us. This blog compiles the latest features in Azure AI's portfolio that offer more options and flexibility for developing and scaling AI applications. Key updates include:

Azure OpenAI Data Zones are available for the US and EU, providing broader deployment options.

To help scale effectively and minimize costs: Prompt Caching is available, the Azure OpenAI Service Batch API is generally available, token generation has a 99% SLA, model prices are reduced by 50% through Provisioned Global, and deployment minimums are lower for Provisioned Global GPT-4o models.

Mistral’s Ministral 3B tiny model, Cohere Embed 3’s new healthcare industry models, and the Phi 3.5 family’s improved general availability offer more options and flexibility.

To speed up AI development, you can move from GitHub Models to the Azure AI model inference API, and AI app templates are available.

Safely develop new enterprise-ready functionalities with AI.

United States and European Union Azure OpenAI Data Zones

Microsoft is introducing Azure OpenAI Data Zones, a new deployment option that gives businesses even more freedom and control over their data privacy and residency requirements. Data Zones, which are designed specifically for businesses in the US and the EU, let clients process and store their data within predetermined geographic boundaries, ensuring adherence to local data residency regulations while preserving peak performance. Because Data Zones span several regions within these areas, they balance the control of regional deployments with the cost-effectiveness of global deployments, making it easier for enterprises to manage AI applications without compromising speed or security.