#gpt collective

Text

0 notes

Text

Joywave, a band whose name is as misleading as their talent.

Who on earth are Joywave? Do they make music that's the auditory equivalent of riding a roller coaster while eating a jalapeño popsicle? It's like they took the word "joy" and decided, "Hey, how can we make this wave as uncomfortable and dissonant as possible?" Ah yes, the "joy" that comes from listening to their music is truly questionable. I mean, it's like they took all the blandest elements of indie rock and decided to create a band out of it. Who needs excitement or originality when you can have Joywave, right?

I must say, they really live up to their name because listening to their music feels like a wave of joy crashing over you. Except, instead of a refreshing ocean wave, it's more like a kiddie pool filled with lukewarm tap water. Sorry, but their music is about as exciting as watching paint dry.

Their music is like a never-ending parade of banality, providing the perfect soundtrack for those moments when you want to fall into a deep coma. Listening to their music is like voluntarily subjecting yourself to a never-ending loop of elevator music with a side of cringe-worthy lyrics. Seriously, what are they even singing about? It's like they're trying to communicate with their fellow extraterrestrial beings through their music.

Thank you, Joywave, for blessing us with your repetitive lyrics that sound like they were written by an Al program gone rogue. Does owning a thesaurus automatically make you a great songwriter? I think not. I'm convinced the lead singer just wants people to think he's deep, but all he's doing is drowning in a sea of mediocrity.

Joywave is a band that represents the pinnacle of generic indie pop. Congratulations on making background music that no one will remember in five minutes. So next time you're feeling down, just put on some Joywave and remind yourself that things could always be worse.

— GPT-4’s opinion on my favorite artists, Part 1

#joywave#ai generated#gpt 4#gpt4#chat gpt#This is a collection of quotes from fmbot on Discord#YES I AM MAKING THIS A SERIES!!!!#og

8 notes

·

View notes

Text

📽 [Webinar] Beat GPT-4 with a Small Model and 10 Rows of Data*

New Post has been published on https://thedigitalinsider.com/webinar-beat-gpt-4-with-a-small-model-and-10-rows-of-data/

📽 [Webinar] Beat GPT-4 with a Small Model and 10 Rows of Data*

Small language models (SLMs) are increasingly rivaling the performance of large foundation models like GPT-4. However, the need for high-quality datasets for fine-tuning these models presents a persistent challenge.

On August 8th, Predibase is going to showcase an approach for fine-tuning an SLM that outperforms GPT-4 with synthetic data generated from only 10 real-world examples.

The Data Dilemma

While fine-tuning SLMs with high-quality datasets can consistently produce task-specific models that outperform large foundation models, many teams face a significant barrier: assembling sufficient training data. This challenge has often been a bottleneck in AI development, limiting the ability of teams to develop production-ready models quickly and cost-effectively.

Synthetic Data Through Distillation

Our upcoming webinar introduces an innovative solution to this persistent challenge. By leveraging the capabilities of large language models such as GPT-4 and Llama-3.1-405b, we’ve developed techniques to generate high-quality synthetic data for fine-tuning task-specific SLMs. This approach enables teams to achieve GPT-4 level results with as few as 10 real-world examples, dramatically reducing the data collection burden and accelerating the path to production.

In this comprehensive session, we’ll delve into the following key areas:

The Data Insufficiency Challenge: We’ll explore the persistent issue of insufficient training data in AI development, discussing the limitations it imposes on teams working with SLMs.

Synthetic Data Generation Techniques: Our ML team will demonstrate methods for generating high-quality synthetic data based on as few as 10 data rows using Llama-3.1-405B and GPT-4.

Achieving GPT-4 Level Performance: We’ll show how SLMs fine-tuned with synthetic data can match or exceed the performance of GPT-4 across various tasks. Attendees will gain insights into the fine-tuning process, hyperparameter optimization, and performance evaluation metrics.

Streamlining the Development Process: We’ll discuss strategies for significantly reducing data collection efforts and accelerating the journey from concept to production. This includes techniques for identifying key seed examples, automating the synthetic data generation pipeline, and optimizing the fine-tuning workflow.

Join us on August 8th

Whether you’re an AI practitioner, startup founder, or enterprise decision-maker, this session will equip you with knowledge to effectively use synthetic data and SLMs. Join us to explore how synthetic data and fine-tuned SLMs can unblock your AI initiatives. Register today.

*This post was written by Will Van Eaton from Predibase. We thank Predibase for their insights and ongoing support of TheSequence.

#ai#AI development#approach#barrier#challenge#comprehensive#data#data collection#datasets#development#enterprise#Foundation#GPT#GPT-4#how#insights#it#language#language models#large language models#limiting#Llama#metrics#ml#model#models#optimization#performance#process#Production

0 notes

Text

The Collection Gallery Of digital Art [ 2023 2024] Volume A. II. ليليث

Continued the postmodernist sequence of mass distribution inherited from the printing press to upload ─a main poetic theme where love, is expressed in diverse modes. To influence the collective imaginary of increasingly globalized societies.

View On WordPress

#21e siècle#21th century#art#Art and technology#Art Modern#Artal de Asens#ARTE CONTEMPORÂNEA#artificial intelligence#Artistes dans l&039;art#Artists in art#Author#authors of instagram#book is reads#book of dreams#cisgender#Collection opensource#Computer art#Consuetudinario#Consuetudinario ≠ Transcripciones#desolation angels#digital art#Digital Art Festival#digitalart#evolutionary process#Free book#gallery of digital art#GPT#Human Body#inspiring diaries#insta quotes daily

0 notes

Text

The collection Gallery Of digital Art [ 2023 2024] Volume A. I. לילית

The collection Gallery Of digital Art [ 2023 2024] Volume A. I. לילית

by Rodrigo Granda

The collection Gallery Of digital Art [ 2023 2024] Volume A. I. ליליתby Rodrigo Granda

Publication date 2024-05-01Attribution-NonCommercial-NoDerivs 4.0

Regarding my ninth digital publication:

Continued the postmodernist sequence of mass distribution inherited from the printing press to upload ─a main poetic theme where love, is expressed in diverse modes. To influence the collective imaginary…

View On WordPress

#21e siècle#21th century#art#Art and technology#Art Modern#Artal De Asens#ARTE CONTEMPORÂNEA#artificial intelligence#Artistes dans l&039;art#Artists in art#Author#authors of instagram#book is reads#book of dreams#cisgender#Collection opensource#Computer art#Consuetudinario#Consuetudinario ≠ Transcripciones#desolation angels#digital art#Digital Art Festival#digitalart#evolutionary process#Free book#gallery of digital art#GPT#Human Body#inspiring diaries#insta quotes daily

0 notes

Text

─ideologies of the time

A Critical Exploration to aproximate: "The Inequality of Human Races", "The Moral and Intellectual Diversity of Races" by Arthur de Gobineau; and "Sapiens: A Brief History of" Humankind by Yuval Noah Harari"

#Chat-Bot#chat.openai.com#Consuetudinary#GPT-3.5#IA conversacional#narrative#Petición de escritura a un modelo de lenguaje grande#transcriptions#ability of Homo sapiens to create#Arthur de Gobineau#ARTICLES#Aryan race#Collections#development of human societies#ethnocentrism#Eurocentrism#Homo sapiens#Houston Stewart Chamberlain#human diversity#human races#impact of cultural evolution#Nazi racial theories#pseudoscientific#race and ethnicity#racial determinism#racial ideologies#racial theories#Sapiens: A Brief History of» Humankind#The Inequality of Human Races#The Moral and Intellectual Diversity of Races

0 notes

Text

─ideologies of the time

A Critical Exploration to aproximate: "The Inequality of Human Races", "The Moral and Intellectual Diversity of Races" by Arthur de Gobineau; and "Sapiens: A Brief History of" Humankind by Yuval Noah Harari"

#Chat-Bot#chat.openai.com#Consuetudinary#GPT-3.5#IA conversacional#narrative#Petición de escritura a un modelo de lenguaje grande#transcriptions#ability of Homo sapiens to create#Arthur de Gobineau#ARTICLES#Aryan race#Collections#development of human societies#ethnocentrism#Eurocentrism#Homo sapiens#Houston Stewart Chamberlain#human diversity#human races#impact of cultural evolution#Nazi racial theories#pseudoscientific#race and ethnicity#racial determinism#racial ideologies#racial theories#Sapiens: A Brief History of» Humankind#The Inequality of Human Races#The Moral and Intellectual Diversity of Races

0 notes

Text

─ideologies of the time

A Critical Exploration to aproximate: "The Inequality of Human Races", "The Moral and Intellectual Diversity of Races" by Arthur de Gobineau; and "Sapiens: A Brief History of" Humankind by Yuval Noah Harari"

#Chat-Bot#chat.openai.com#Consuetudinary#GPT-3.5#IA conversacional#narrative#Petición de escritura a un modelo de lenguaje grande#transcriptions#ability of Homo sapiens to create#Arthur de Gobineau#ARTICLES#Aryan race#Collections#development of human societies#ethnocentrism#Eurocentrism#Homo sapiens#Houston Stewart Chamberlain#human diversity#human races#impact of cultural evolution#Nazi racial theories#pseudoscientific#race and ethnicity#racial determinism#racial ideologies#racial theories#Sapiens: A Brief History of» Humankind#The Inequality of Human Races#The Moral and Intellectual Diversity of Races

0 notes

Text

Generative AI Policy (February 9, 2024)

As of February 9, 2024, we are updating our Terms of Service to prohibit the following content:

Images created through the use of generative AI programs such as Stable Diffusion, Midjourney, and Dall-E.

This post explains what that means for you. We know it’s impossible to remove all images created by Generative AI on Pillowfort. The goal of this new policy, however, is to send a clear message that we are against the normalization of commercializing and distributing images created by Generative AI. Pillowfort stands in full support of all creatives who make Pillowfort their home.

Disclaimer: The following policy was shaped in collaboration with Pillowfort Staff and international university researchers. We are aware that Artificial Intelligence is a rapidly evolving environment. This policy may require revisions in the future to adapt to the changing landscape of Generative AI.

-

Why is Generative AI Banned on Pillowfort?

Our Terms of Service already prohibits copyright violations, which includes reposting other people’s artwork to Pillowfort without the artist’s permission; and because of how Generative AI draws on a database of images and text that were taken without consent from artists or writers, all Generative AI content can be considered in violation of this rule. We also had an overwhelming response from our user base urging us to take action on prohibiting Generative AI on our platform.

-

How does Pillowfort define Generative AI?

As of February 9, 2024 we define Generative AI as online tools for producing material based on large data collection that is often gathered without consent or notification from the original creators.

Generative AI tools do not require skill on behalf of the user and effectively replace them in the creative process (ie - little direction or decision making taken directly from the user). Tools that assist creativity don't replace the user. This means the user can still improve their skills and refine over time.

For example: If you ask a Generative AI tool to add a lighthouse to an image, the image of a lighthouse appears in a completed state. Whereas if you used an assistive drawing tool to add a lighthouse to an image, the user decides the tools used to contribute to the creation process and how to apply them.

Examples of Tools Not Allowed on Pillowfort:

Adobe Firefly*

Dall-E

GPT-4

Jasper Chat

Lensa

Midjourney

Stable Diffusion

Synthesia

Example of Tools Still Allowed on Pillowfort:

AI Assistant Tools (ie: Google Translate, Grammarly)

VTuber Tools (ie: Live3D, Restream, VRChat)

Digital Audio Editors (ie: Audacity, Garage Band)

Poser & Reference Tools (ie: Poser, Blender)

Graphic & Image Editors (ie: Canva, Adobe Photoshop*, Procreate, Medibang, automatic filters from phone cameras)

*While Adobe software such as Adobe Photoshop is not considered Generative AI, Adobe Firefly is fully integrated in various Adobe software and falls under our definition of Generative AI. The use of Adobe Photoshop is allowed on Pillowfort. The creation of an image in Adobe Photoshop using Adobe Firefly would be prohibited on Pillowfort.

-

Can I use ethical generators?

Due to the evolving nature of Generative AI, ethical generators are not an exception.

-

Can I still talk about AI?

Yes! Posts, Comments, and User Communities discussing AI are still allowed on Pillowfort.

-

Can I link to or embed websites, articles, or social media posts containing Generative AI?

Yes. We do ask that you properly tag your post as “AI” and “Artificial Intelligence.”

-

Can I advertise the sale of digital or virtual goods containing Generative AI?

No. Offsite Advertising of the sale of goods (digital and physical) containing Generative AI on Pillowfort is prohibited.

-

How can I tell if a software I use contains Generative AI?

A general rule of thumb as a first step is you can try testing the software by turning off internet access and seeing if the tool still works. If the software says it needs to be online there’s a chance it’s using Generative AI and needs to be explored further.

You are also always welcome to contact us at [email protected] if you’re still unsure.

-

How will this policy be enforced/detected?

Our Team has decided we are NOT using AI-based automated detection tools due to how often they provide false positives and other issues. We are applying a suite of methods sourced from international universities responding to moderating material potentially sourced from Generative AI instead.

-

How do I report content containing Generative AI Material?

If you are concerned about post(s) featuring Generative AI material, please flag the post for our Site Moderation Team to conduct a thorough investigation. As a reminder, Pillowfort’s existing policy regarding callout posts applies here and harassment / brigading / etc will not be tolerated.

Any questions or clarifications regarding our Generative AI Policy can be sent to [email protected].

2K notes

·

View notes

Text

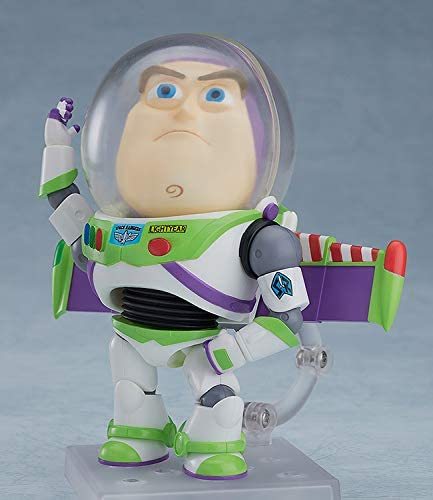

Buzz Lightyear Nendoroid! Chat GPT spit this out when asked to provide goofy comentary on this item :)

Gawrsh, would ya look at this Buzz Lightyear Nendoroid? It's out of this world! With its cute and chibi-style design, it's sure to make you smile. And check out all the accessories it comes with! Buzz is ready to take on Zurg with his laser gun and jet pack, and even has a Star Command flag to wave around.

I mean, I can't help but chuckle at the thought of Buzz in a chibi style. But hey, it works! And the attention to detail is amazing. You can even change Buzz's facial expressions to match his mood. I wonder if he'll have an expression for when he realizes he's not actually a space ranger...

Overall, this Buzz Lightyear Nendoroid is a winner in my book. It's perfect for any Toy Story fan or collector who wants to add a little bit of fun and adventure to their display. Just watch out for falling with style, ya-hyuck!

#BuzzLightyear#ToyStory#Nendoroid#ChibiStyle#ActionFigure#Collectible#DisneyPixar#SpaceRanger#StarCommand#ToInfinityAndBeyond#kidcore#toycore#tumblr#nendoroid#chibi#chat gpt#ai#artificial intelligence#pixar

1 note

·

View note

Text

hermitcraft vault hunters thoughts:

joe's interpretive... dance? cosplay?... of the bad chat gpt xisuma had made intro is VERY worth watching.

100 days to collect all the artifacts... oh boy i hope the promised "artifacts are faster" update is in update 12. so they don't. die.

iskall, to etho: "as long as we greed". etho, nodding back. me, flashing to runs that will probably give us all heart attacks.

the start of this server has SUCH chaotic day one of a new minecraft server energy. they all run off and punch trees. iskall immediately yells at them for punching trees. it's beautiful.

xisuma: "only two monoliths! we're gonna ace this." as all the hermits. just mine blocks for their bases.

iskall has such strong "trying to herd kids through a museum" vibes its SO funny.

etho, loudly: "LOOT AND SCOOT everybody." and now everyone else has picked up on repeating "loot and scoot. loot and scoot."

"hey i found a spawner everyone should i break thaOH MY GOD THOSE GUYS JUMPED OUT."

no one knows what they're doing. this is fantastic.

"These vaults are AMAZING, Iskall :D" awwwwww

that being said iskall is failing entirely to explain this to the crowd. gather all the artifacts in 100 days. sure.

"i know there are 13 minutes left but i'm getting so concerned i'll never find the exit" oh no. they're brand new. they have fresh vault hunter smell.

"are there coordinates in here?" "no." "WHAT."\

etho finding an omega room with iskall and also being the only one to kill a champion. this checks out.

NO ONE CAN OPEN THE MONOLITH CRATES. i unfortunately relate,

joe takes fireball. naturally. so excited for joe the fireball wizard! also now so so so excited to see what builds everyone else will make. as everyone settles into playstyles. so much to make of that, i think.

in conclusion: still so hyped,

452 notes

·

View notes

Note

so, that guy with the masonic blazed post is a big man. a real mf. One example is him being the "Ambassador Extraordinary and Plenipotentiary of the Republic of Gabon to China", which from what i know means he is a big embassy guy, working with 5.5 billion dollars worth of trade in this instance. He was(is?) a fully tenured professor, and has multiple word salad titles as long and important sounding with many different governments (and, from extra digging, militaries)

he gets a new one of these like every two months. By far not a complete list. Theres a lot of videos he posts of him just walking around random notable places and posing w academics and celebrites he likes.

The ones he chooses seem random and sporadic, and he includes a gpt wikipedia entry for them on every post. Basically anywhere or anyone he pay his way to meeting he does. Like he's collecting pokemon.

He also owns a golden assault rifle. Highly recommend scrolling his shit

this guy is a fucking sigma. god I want to know his whole deal. why doesnt his suit fit. what's going on there

57 notes

·

View notes

Text

A HH Lucifer-centric AU 19/?

PART 1, PART 2, PART 3, PART 4, PART 5, PART 6, PART 7, PART 8, PART 9, PART 10, PART 11, PART 12, PART 13, PART 14, PART 15, PART 16, PART 17, PART 18, PART 20, PART 21, PART 22

Hello!!! How's everyone's weekend?!

I had the most relaxing trip of my life. Me and my best friend went on a picnic and the place was so gorgeous I wish I was rich enough to have that kind of landscaping.

Anyway!

Here's my update. I hope you all enjoy.

As always: likes, reblogs, and ESPECIALLY COMMENTS are so appreciated and it honestly gives me motivation. We're near the end meaning this might end this week :((

Disclaimer: I did get some help with chat gpt for some paragraphs just to get my ideas across and also because English is not my first languagee. I edited them of course myself because u know how automated shit can be.

I'm learning I promise!

-------------------------------------------------

Every denizen of Hell held their breath in anticipation as each agonizing minute passed without a word from the King. Some feared he had met his demise the moment he entered, leaving them grasping at false hope. The Overlords pondered the same grim possibility but dared not voice it in the presence of higher demons.

Amidst the tension, the task of pacifying Paimon fell upon the Goetias, who found themselves ensnared in his relentless tirade about their illustrious King and their collective duty to fix Hell's problems, a duty he believed lay solely with them, not Lucifer.

The Sins, meanwhile, remained vigilant, their eyes fixated on the entrance through which Lucifer had disappeared, searching for any subtle sign of their brother's fate.

Satan, ever watchful, kept a peripheral eye on Goodie. The Good of Humanity had fallen into an unusual silence since Lucifer embarked on his suicide mission. Unlike the rest, she wore neither worry nor despair on her face, hell, not even of glee; instead, there was a knowing glint in her eyes the Sin of Wrath definitely did not like. He could only hope Lucifer emerges from all of this still himself.

At the very back, Vox stole a glance at his rival, noting the whatever-the-fuck thing he had with the King. He half-expected the radio demon to remain his usual apathetic self. And he was half right. The guy was smiling with no care in the world. Yet, to his surprise, a strained smile is etched the demon's face. It's not as noticeable but if you've been looking at Alastor as closely as Vox had been for the past how many years, it's like a giant pimple you can't ignore. There was a glassy look in his eyes, as if the radio demon is going to-

Vox wonders incredulously if his wiring got fried by that shockwave earlier because there is no fucking way.

The media demon is silently thankful he couldn't finish that thought as they are knocked down once more.

----------------------------------------------

It all unfolded in a blink, leaving them no time to respond. The ground quaked with a force that they realized was from the towering tree that's trembling before them. Roots and branches contorted, twisting inwards and outwards like a well-oiled machine, as if the very essence of the tree was tearing itself apart. Red flowers all around withered as the oppressive miasma dispersed. Then, with a thunderous crash, the colossal tree collapsed into a single heap.

The dust clears presenting a lone figure stands in the center of it all.

Belphagor: Lucifer!

There stood the King of Hell, his horns protruding proudly and his corrupted halo casting an ominous black glow. His six wings spread wide, a testament to his power and dominance. It was Lucifer. But... something seemed off.

The Sin of Pride appeared altered. His once pure white attire had transformed into black, adorned with accents of red. His porcelain skin, once flawless, now bore a grayish, melancholic hue. However, the most striking change lay in his hair—it was no longer the radiant gold of angels, but a sinister black with tendrils of creeping red, moving like of the deadly miasma.

Lucifer looked like a shadow of himself.

Before anyone could react, the fallen angel lunged towards Goodie, swiftly pinning her to the ground.

Lucifer: Ẏ̷̨̖̯͎̤͎͖̪̆̀̊͌͑̓̇o̵̻͗̔͊̃͘̚͠ṳ̸͎̍̊͗̌̈ ̵̱͙͇͛͑i̴̳͈̗̺͒̏̃̀̚͝n̸̢̧̖͖͚͉͙̤͇͆̃͛͊̿͛́̚͘s̸͇͚̱͍͈̤̘̒̂̈́̆͗̈̆ͅó̵̇̅́͜l̶͇̝̞̜̰̘͊̒͂̓͝ë̶̮͔̰́̀̑̔̽͊̐n̶̡̧̗̤̘̞̑̇̀t̴͙̲̳̦̦͎͔̠̔ ̵̮̰̞͐̌͌b̸̧͚̾i̴̧̜̪̳̤͔̹͉̦̇͠t̴͖̐̀̾̌̽̎̂̅͜ͅc̵̛̞̳͛̋̆̏͆̏h̷̟̺̬̗͗̉̓̍!̴͉̲̼̪͓̻̪̻̀̊ ̷͇͓̲̬͍̦̙̹͓̔̈́͊̇

Goodie chokes from the stench of hellfire on her skin.

Goodie: I never lied to you, angel. I told you that you were the key.

Lucifer: Y̷̢̘̻̩̲͐͋̐̌́́͝ŏ̴͎̌́u̷̟̯͋ ̶͔̝̘̓̈́̄̈́́̀̐ǵ̸͍͌͝͝á̵̧̫͔̤̘̹̓͗͂v̶̢͕̘̼̦̰̐ẽ̵̝̥͈̝̓͋̌̋͠ ̸̝͙̐̓m̵̩͖͍͒͌͛̔e̸̤̹̻̪͇͔̽̇ ̵̜̬̰̟̖̘͈̐̆̀á̸̻̜̬̫̝͇͚ ̷̢̗̠̮͊ͅf̶̡̩̟͘͝a̵̢͎͆k̷̲̰͓̤̐͌̽͐̿̕͠e̷̛̪̖̅̒̀̓͐͜ͅ ̸̭͙̫̂̚ͅs̴̩̝̺͕̲̯͒e̸̮͍̤̦̯̎̈́̔̌̇͌ä̷̳̖͓̒̕l̶̦̬̙̘̝̉̏̔̈́͆͘͠.̸̨͓͉͒̄̚ ̶͈͆̽̿̋̑̈̕T̶̗̹̱̞̭̩͉̍͆̀̚é̵̹̗͖ļ̶̜̬͍͓̗̿͑̾̋̏̕l̸̛̀̆̓̾ͅ ̷̡̗̼̀̿̓m̸̛̗̞͕̠̟ę̵̬̰̻̮͎̉̓ ̵̥̩̞̮͈͖̅̃̑͜͝ŵ̷͈̥͕̦̘̙̏h̶̝͈̬͖̲̯̝͊̓̕ȳ̴̱̓̄̎͝ ̵̛̣̭̘͔͋̏́̀̋I̵̡̦̬̬̫͓̭͆̍͌͗̍́̀ ̶̛͈͆s̵̛̗͙̙̭h̷̝͌͌͜͝͠ȏ̴̝̹̻͚̾́̃̔͘͝ư̸̮͓̰̖͔̙̇́͊̽̐̔l̶͙̟̙̣̮̱̞̂͌̏͗d̴̢͊͒̉̈ ̸̠̠̮̉̿n̴͚̯̜̫̊ō̴̡͉̪̥̗̹̲̽̄̀̕t̴̢̺̱̊̉̎̕͜͠ ̷̛̹̜̿͝ķ̴̻͚̙͔̈́͊̍í̸̥̼͕̮̾̿͌l̷̢͂̏͆͊̃͠l̷̡̨͎̪̝̖̱̽̽̓͐̀́̈́ ̷͖̿̋͛y̶̻̝̆͂͝ỏ̸̧̹͇̫̀̐̀̍͋̃ų̶̟̩͔͇̝͚̎̈́̑̕͠ ̵͍̃͗͠ẁ̷̝̟̥̰̘͎͒͛́͒h̵̦̜̩̬͋͐̋ė̶̃͜ṙ̸̡̧̟͉̻̬͚̅e̵̤̮̟͌̓ ̴̹͕̮͍̺̲͇̉y̴̨̛̪͛̍̓̏ô̴͔͍͉̅̈́̌u̴̙͖͖͎͐͛̒ ̶̟̙͍̖̭̃̌́l̵̙̽̈́̐͝á̷̡͔̞͈̜͎͒͌̑̐͝y̴̼̹̪̻̒̓̽̀̚?̴̛̻̘͈͍͕̒̃̀̓̏

Goodie: It was not a fake. Without it, you would have perished the moment you set foot in-ah!-side.

Lucifer: H̵̹̩̗̑̎̈́́̕o̷̘͝ẇ̷̢̨̛͇̞̝̦̠̎ ̸̯̹͋̃͑͘͝d̴͉̭̟̫̙̠͂à̶͎̮̝̺̺̥͙̓͛͂̒́ŗ̴̡̺̬̭̝̳̓̈́̑̍͝ĕ̷͓̕ ̸̺͈̖̣̳̃y̴̜̞͆͑̉͠o̴͓͋ủ̸͈͎̳̥͈̞̍̀͜ ̸̥̑͐̇̂̈́̐͝t̶͓͋r̶̼͠ỉ̸͍̻̫̩͍̓͌̍̄͝ċ̷̞̤̭̳̈́̓́̃k̶̖̹͙̋̓̑̀̅̔͊ ̵͙̠̻̜̎ͅt̵̛͇̀̑̀h̴̛̥͉̲̬̰͛̊̀̅͝e̵͇̮̫̟̗̍͊̓ ̶̰͎̟̜̗̈̋͂̓K̶̞͉̰̫̂͂̋͝ͅi̷̯̟̤̽͛̈͑n̵̬͙͑̉̍͊̕͠ģ̸͖͍̪̉͗̂͠ ̷̣̯͖̭̜̀ͅǫ̵̨̣̿̽̑͜f̶͔͖̬͐͌ ̸̼̅̿͒̎́Ḣ̴͎͕̳́ͅe̶̛̞̱̦͈l̴̡̲̯͔̰̱̂̅̀̄̈͗͋l̸͍̩̯̗̏?̴̯̥̭̦͙̃̏!̸̼̹͍͖͒̊̅̊̌̔̍

Goodie: Do not delude yourself. There was no chance that this could have ended differently.

Lucifer was heaving so much that Goodie could sense his energy waning. Seizing the opportunity, she managed to escape his clutches. Despite the danger surrounding her, (such bothersome loyalty) she couldn't resist letting out a chuckle, teasing the angel one last time.

Goodie: I gotta say, angel, I do like your new look. Corruption definitely suits you.

Lucifer: F̸̢̨͔̲͖̖̳͍̑̽͜U̵̼̪̰͈̟̜͙͌́́̅̈́̔C̷̢̯͓̘̬͖̝̎K̶̳̖͓̘̝̗̀̓̈́̾̉̾̾͊͠͝Î̶͇͕͚̪̭̎N̴͉̟͍̻͇̚G̵̠̲̰͈̖̎͂͋̾ ̴̧̥͕̹̭̘̜͍̟̎̂̔͗̋̿̒B̶̢̦̤̥͕͉͋̂͌́́͂̈̔͠I̸̗̭̼͊̐͂̀̈́̐̏̐T̸̠̹͓̮̱̻̹̯͉̦̍̔̽̍̄͌̆C̸͍̩̉̈́̈́̄͒̓͑̾͝ͅḨ̴̦̙͉̫̪̫̇̀̄̈́̋͘!

Lucifer then collapses to his knees, clutching his throat as if he's drowning in searing heat. Confusion and desperation fill his voice as he struggles for breath.

Lucifer: How? *gasp* why? *gasp* -trusted-

There's a flurry of movement around him, voices overlapping and blending into a chaotic white noise. Amidst it all, someone speaks with a commanding tone, their words cutting through the haze.

Alastor: Listen to only me, my dear.

There was a faint humming of music? Was Alastor here?

Alastor: I'm here, my Majesty. Calm yourself. You need not to panic.

He's trying, he really is. But his ears are muddled and he can't understand anything anymore. Everything is happening all at once, leaving him disoriented and terrified.

As consciousness begins to slip through his grasp, the Sin of Pride feels a sense of detachment. A new presence moves in front of him, accompanied by a chorus of apologies that echo faintly in his ears.

A cool sensation brushes against his fevered forehead, offering a brief respite from the overwhelming heat and chaos. And with that fleeting moment of relief, Lucifer succumbs to the darkness.

Roo: How fun~

--------------------------------------

Transformation central! (Transformation central!)

Reformation central! (Reformation central!)

Transmogrification central!

#hazbin lucifer#hazbin hotel#hazbin alastor#hazbin hotel lucifer#hazbin charlie#hazbin angel dust#hazbin husk#hazbin lilith#hazbin vaggie#hazbin sir pentious#hazbin nifty#hazbin cherri bomb#hazbin hotel alastor#hazbin hotel charlie#hazbin hotel vaggie#hazbin hotel niffty#hazbin hotel husk#hazbin hotel angel dust#hazbin hotel sir pentious#hazbin hotel cherri bomb#hazbin au#hazbin art#hazbin hotel fandom#hazbin hotel fanart#hazbin spoilers#hazbinhotel#hazbin fandom#radioapple#duckiedeer#appleradio

102 notes

·

View notes

Text

Can large language models identify and correct their mistakes?

New Post has been published on https://thedigitalinsider.com/can-large-language-models-identify-and-correct-their-mistakes/

Can large language models identify and correct their mistakes?

Posted by Gladys Tyen, Intern, Google Research

LLMs are increasingly popular for reasoning tasks, such as multi-turn QA, task completion, code generation, or mathematics. Yet much like people, they do not always solve problems correctly on the first try, especially on tasks for which they were not trained. Therefore, for such systems to be most useful, they should be able to 1) identify where their reasoning went wrong and 2) backtrack to find another solution.

This has led to a surge in methods related to self-correction, where an LLM is used to identify problems in its own output, and then produce improved results based on the feedback. Self-correction is generally thought of as a single process, but we decided to break it down into two components, mistake finding and output correction.

In “LLMs cannot find reasoning errors, but can correct them!”, we test state-of-the-art LLMs on mistake finding and output correction separately. We present BIG-Bench Mistake, an evaluation benchmark dataset for mistake identification, which we use to address the following questions:

Can LLMs find logical mistakes in Chain-of-Thought (CoT) style reasoning?

Can mistake-finding be used as a proxy for correctness?

Knowing where the mistake is, can LLMs then be prompted to backtrack and arrive at the correct answer?

Can mistake finding as a skill generalize to tasks the LLMs have never seen?

About our dataset

Mistake finding is an underexplored problem in natural language processing, with a particular lack of evaluation tasks in this domain. To best assess the ability of LLMs to find mistakes, evaluation tasks should exhibit mistakes that are non-ambiguous. To our knowledge, most current mistake-finding datasets do not go beyond the realm of mathematics for this reason.

To assess the ability of LLMs to reason about mistakes outside of the math domain, we produce a new dataset for use by the research community, called BIG-Bench Mistake. This dataset consists of Chain-of-Thought traces generated using PaLM 2 on five tasks in BIG-Bench. Each trace is annotated with the location of the first logical mistake.

To maximize the number of mistakes in our dataset, we sample 255 traces where the answer is incorrect (so we know there is definitely a mistake), and 45 traces where the answer is correct (so there may or may not be a mistake). We then ask human labelers to go through each trace and identify the first mistake step. Each trace has been annotated by at least three labelers, whose answers had inter-rater reliability levels of >0.98 (using Krippendorff’s α). The labeling was done for all tasks except the Dyck Languages task, which involves predicting the sequence of closing parentheses for a given input sequence. This task we labeled algorithmically.

The logical errors made in this dataset are simple and unambiguous, providing a good benchmark for testing an LLM’s ability to find its own mistakes before using them on harder, more ambiguous tasks.

Core questions about mistake identification

1. Can LLMs find logical mistakes in Chain-of-Thought style reasoning?

First, we want to find out if LLMs can identify mistakes independently of their ability to correct them. We attempt multiple prompting methods to test GPT series models for their ability to locate mistakes (prompts here) under the assumption that they are generally representative of modern LLM performance.

Generally, we found these state-of-the-art models perform poorly, with the best model achieving 52.9% accuracy overall. Hence, there is a need to improve LLMs’ ability in this area of reasoning.

In our experiments, we try three different prompting methods: direct (trace), direct (step) and CoT (step). In direct (trace), we provide the LLM with the trace and ask for the location step of the mistake or no mistake. In direct (step), we prompt the LLM to ask itself this question for each step it takes. In CoT (step), we prompt the LLM to give its reasoning for whether each step is a mistake or not a mistake.

A diagram showing the three prompting methods direct (trace), direct (step) and CoT (step).

Our finding is in line and builds upon prior results, but goes further in showing that LLMs struggle with even simple and unambiguous mistakes (for comparison, our human raters without prior expertise solve the problem with a high degree of agreement). We hypothesize that this is a big reason why LLMs are unable to self-correct reasoning errors. See the paper for the full results.

2. Can mistake-finding be used as a proxy for correctness of the answer?

When people are confronted with a problem where we are unsure of the answer, we can work through our solutions step-by-step. If no error is found, we can make the assumption that we did the right thing.

While we hypothesized that this would work similarly for LLMs, we discovered that this is a poor strategy. On our dataset of 85% incorrect traces and 15% correct traces, using this method is not much better than the naïve strategy of always labeling traces as incorrect, which gives a weighted average F1 of 78.

A diagram showing how well mistake-finding with LLMs can be used as a proxy for correctness of the answer on each dataset.

3. Can LLMs backtrack knowing where the error is?

Since we’ve shown that LLMs exhibit poor performance in finding reasoning errors in CoT traces, we want to know whether LLMs can even correct errors at all, even if they know where the error is.

Note that knowing the mistake location is different from knowing the right answer: CoT traces can contain logical mistakes even if the final answer is correct, or vice versa. In most real-world situations, we won’t know what the right answer is, but we might be able to identify logical errors in intermediate steps.

We propose the following backtracking method:

Generate CoT traces as usual, at temperature = 0. (Temperature is a parameter that controls the randomness of generated responses, with higher values producing more diverse and creative outputs, usually at the expense of quality.)

Identify the location of the first logical mistake (for example with a classifier, or here we just use labels from our dataset).

Re-generate the mistake step at temperature = 1 and produce a set of eight outputs. Since the original output is known to lead to incorrect results, the goal is to find an alternative generation at this step that is significantly different from the original.

From these eight outputs, select one that is different from the original mistake step. (We just use exact matching here, but in the future this can be something more sophisticated.)

Using the new step, generate the rest of the trace as normal at temperature = 0.

It’s a very simple method that does not require any additional prompt crafting and avoids having to re-generate the entire trace. We test it using the mistake location data from BIG-Bench Mistake, and we find that it can correct CoT errors.

Recent work showed that self-correction methods, like Reflexion and RCI, cause deterioration in accuracy scores because there are more correct answers becoming incorrect than vice versa. Our method, on the other hand, produces more gains (by correcting wrong answers) than losses (by changing right answers to wrong answers).

We also compare our method with a random baseline, where we randomly assume a step to be a mistake. Our results show that this random baseline does produce some gains, but not as much as backtracking with the correct mistake location, and with more losses.

A diagram showing the gains and losses in accuracy for our method as well as a random baseline on each dataset.

4. Can mistake finding generalize to tasks the LLMs have never seen?

To answer this question, we fine-tuned a small model on four of the BIG-Bench tasks and tested it on the fifth, held-out task. We do this for every task, producing five fine-tuned models in total. Then we compare the results with just zero-shot prompting PaLM 2-L-Unicorn, a much larger model.

Bar chart showing the accuracy improvement of the fine-tuned small model compared to zero-shot prompting with PaLM 2-L-Unicorn.

Our results show that the much smaller fine-tuned reward model generally performs better than zero-shot prompting a large model, even though the reward model has never seen data from the task in the test set. The only exception is logical deduction, where it performs on par with zero-shot prompting.

This is a very promising result as we can potentially just use a small fine-tuned reward model to perform backtracking and improve accuracy on any task, even if we don’t have the data for it. This smaller reward model is completely independent of the generator LLM, and can be updated and further fine-tuned for individual use cases.

An illustration showing how our backtracking method works.

Conclusion

In this work, we created an evaluation benchmark dataset that the wider academic community can use to evaluate future LLMs. We further showed that LLMs currently struggle to find logical errors. However, if they could, we show the effectiveness of backtracking as a strategy that can provide gains on tasks. Finally, a smaller reward model can be trained on general mistake-finding tasks and be used to improve out-of-domain mistake finding, showing that mistake-finding can generalize.

Acknowledgements

Thank you to Peter Chen, Tony Mak, Hassan Mansoor and Victor Cărbune for contributing ideas and helping with the experiments and data collection. We would also like to thank Sian Gooding and Vicky Zayats for their comments and suggestions on the paper.

#Art#Backtracking#benchmark#chart#code#Community#comparison#data#data collection#datasets#Full#Future#generator#Google#GPT#hand#how#human#Ideas#illustration#it#labels#language#language models#Languages#large language models#LED#llm#LLMs#math

0 notes

Text

The collection Gallery Of digital Art [ 2023 2024] Volume A. I. לילית

View On WordPress

#21e siècle#21th century#art#Art and technology#Art Modern#Artal de Asens#ARTE CONTEMPORÂNEA#artificial intelligence#Artistes dans l&039;art#Artists in art#Author#authors of instagram#book is reads#book of dreams#cisgender#Collection opensource#Computer art#Consuetudinario#Consuetudinario ≠ Transcripciones#desolation angels#digital art#Digital Art Festival#digitalart#evolutionary process#Free book#gallery of digital art#GPT#Human Body#inspiring diaries#insta quotes daily

0 notes

Text

─ideologies of the time

A Critical Exploration to aproximate: "The Inequality of Human Races", "The Moral and Intellectual Diversity of Races" by Arthur de Gobineau; and "Sapiens: A Brief History of" Humankind by Yuval Noah Harari"

#Chat-Bot#chat.openai.com#Consuetudinary#GPT-3.5#IA conversacional#narrative#Petición de escritura a un modelo de lenguaje grande#transcriptions#ability of Homo sapiens to create#Arthur de Gobineau#ARTICLES#Aryan race#Collections#development of human societies#ethnocentrism#Eurocentrism#Homo sapiens#Houston Stewart Chamberlain#human diversity#human races#impact of cultural evolution#Nazi racial theories#pseudoscientific#race and ethnicity#racial determinism#racial ideologies#racial theories#Sapiens: A Brief History of» Humankind#The Inequality of Human Races#The Moral and Intellectual Diversity of Races

0 notes