#going back and adding source links to the metadata of some of the images so i remember where i got it from.

Explore tagged Tumblr posts

Text

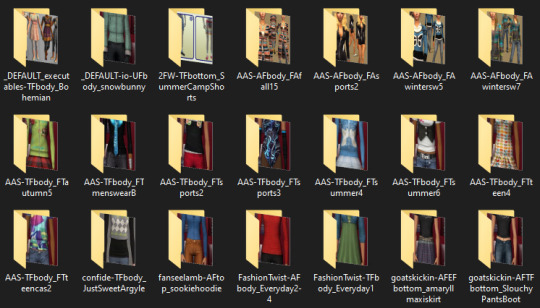

my new downloads folder is sooooo sexy btw. you wish you were me

#bulk renamed to remove all special characters. compressorized. all recolors i don't strictly need DELETED. tooltipped. merged.#jpegs compressed to shit because i dont need them to be high res to be able to tell what its previewing.#going back and adding source links to the metadata of some of the images so i remember where i got it from.#everything is at most two subfolders deep. trying to find a good balance between well-organized and shaving off load time.#.txt#simsposting#'miles why are you doing this instead of playing the game' i love sorting my touys!!!!!!!!!!!#also learning that naming a file folder.jpg automatically makes it the cover for a folder changed my LIFE im doing that shit for everything

7 notes

·

View notes

Text

20 years a blogger

It's been twenty years, to the day, since I published my first blog-post.

I'm a blogger.

Blogging - publicly breaking down the things that seem significant, then synthesizing them in longer pieces - is the defining activity of my days.

https://boingboing.net/2001/01/13/hey-mark-made-me-a.html

Over the years, I've been lauded, threatened, sued (more than once). I've met many people who read my work and have made connections with many more whose work I wrote about. Combing through my old posts every morning is a journey through my intellectual development.

It's been almost exactly a year I left Boing Boing, after 19 years. It wasn't planned, and it wasn't fun, but it was definitely time. I still own a chunk of the business and wish them well. But after 19 years, it was time for a change.

A few weeks after I quit Boing Boing, I started a solo project. It's called Pluralistic: it's a blog that is published simultaneously on Twitter, Mastodon, Tumblr, a newsletter and the web. It's got no tracking or ads. Here's the very first edition:

https://pluralistic.net/2020/02/19/pluralist-19-feb-2020/

I don't often do "process posts" but this merits it. Here's how I built Pluralistic and here's how it works today, after nearly a year.

I get up at 5AM and make coffee. Then I sit down on the sofa and open a huge tab-group, and scroll through my RSS feeds using Newsblur.

I spend the next 1-2 hours winnowing through all the stuff that seems important. I have a chronic pain problem and I really shouldn't sit on the sofa for more than 10 minutes, so I use a timer and get up every 10 minutes and do one minute of physio.

After a couple hours, I'm left with 3-4 tabs that I want to write articles about that day. When I started writing Pluralistic, I had a text file on my desktop with some blank HTML I'd tinkered with to generate a layout; now I have an XML file (more on that later).

First I go through these tabs and think up metadata tags I want to use for each; I type these into the template using my text-editor (gedit), like this:

<xtags>

process, blogging, pluralistic, recursion, navel-gazing

</xtags>

Each post has its own little template. It needs an anchor tag (for this post, that's "hfbd"), a title ("20 years a blogger") and a slug ("Reflections on a lifetime of reflecting"). I fill these in for each post.

Then I come up with a graphic for each post: I've got a giant folder of public domain clip-art, and I'm good at using all the search tools for open-licensed art: the Library of Congress, Wikimedia, Creative Commons, Flickr Commons, and, ofc, Google Image Search.

I am neither an artist nor a shooper, but I've been editing clip art since I created pixel-art versions of the Frankie Goes to Hollywood glyphs using Bannermaker for the Apple //c in 1985 and printed them out on enough fan-fold paper to form a border around my bedroom.

As I create the graphics, I pre-compose Creative Commons attribution strings to go in the post; there's two versions, one for the blog/newsletter and one for Mastodon/Twitter/Tumblr. I compose these manually.

Here's a recent one:

Blog/Newsletter:

(<i>Image: <a href="https://commons.wikimedia.org/wiki/File:QAnon_in_red_shirt_(48555421111).jpg">Marc Nozell</a>, <a href="https://creativecommons.org/licenses/by/2.0/deed.en">CC BY</a>, modified</i>)

Twitter/Masto/Tumblr:

Image: Marc Nozell (modified)

https://commons.wikimedia.org/wiki/File:QAnon_in_red_shirt_(48555421111).jpg

CC BY

https://creativecommons.org/licenses/by/2.0/deed.en

This is purely manual work, but I've been composing these CC attribution strings since CC launched in 2003, and they're just muscle-memory now. Reflex.

These attribution strings, as well as anything else I'll need to go from Twitter to the web (for example, the names of people whose Twitter handles I use in posts, or images I drop in, go into the text file). Here's how the post looks at this point in the composition.

<hr>

<a name="hfbd"></a>

<img src="https://craphound.com/images/20yrs.jpg">

<h1>20 years a blogger</h1><xtagline>Reflections on a lifetime of reflecting.</xtagline>

<img src="https://craphound.com/images/frnklogo.jpg">

See that <img> tag in there for frnklogo.jpg? I snuck that in while I was composing this in Twitter. When I locate an image on the web I want to use in a post, I save it to a dir on my desktop that syncs every 60 seconds to the /images/ dir on my webserver.

As I save it, I copy the filename to my clipboard, flip over to gedit, and type in the <img> tag, pasting the filename. I've typed <img src="https://craphound.com/images/ CTRL-V"> tens of thousands of times - muscle memory.

Once the thread is complete, I copy each tweet back into gedit, tabbing back and forth, replacing Twitter handles and hashtags with non-Twitter versions, changing the ALL CAPS EMPHASIS to the extra-character-consuming *asterisk-bracketed emphasis*.

My composition is greatly aided both 20 years' worth of mnemonic slurry of semi-remembered posts and the ability to search memex.craphound.com (the site where I've mirrored all my Boing Boing posts) easily.

A huge, searchable database of decades of thoughts really simplifies the process of synthesis.

Next I port the posts to other media. I copy the headline and paste it into a new Tumblr compose tab, then import the image and tag the post "pluralistic."

Then I paste the text of the post into Tumblr and manually select, cut, and re-paste every URL in the post (because Tumblr's automatic URL-to-clickable-link tool's been broken for 10+ months).

Next I past the whole post into a Mastodon compose field. Working by trial and error, I cut it down to <500 characters, breaking at a para-break and putting the rest on my clipboard. I post, reply, and add the next item in the thread until it's all done.

*Then* I hit publish on my Twitter thread. Composing in Twitter is the most unforgiving medium I've ever worked in. You have to keep each stanza below 280 chars. You can't save a thread as a draft, so as you edit it, you have to pray your browser doesn't crash.

And once you hit publish, you can't edit it. Forever. So you want to publish Twitter threads LAST, because the process of mirroring them to Tumblr and Mastodon reveals typos and mistakes (but there's no way to save the thread while you work!).

Now I create a draft Wordpress post on pluralistic.net, and create a custom slug for the page (today's is "two-decades"). Saving the draft generates the URL for the page, which I add to the XML file.

Once all the day's posts are done, I make sure to credit all my sources in another part of that master XML file, and then I flip to the command line and run a bunch of python scripts that do MAGIC: formatting the master file as a newsletter, a blog post, and a master thread.

Those python scripts saved my ASS. For the first two months of Pluralistic, i did all the reformatting by hand. It was a lot of search-replace (I used a checklist) and I ALWAYS screwed it up and had to debug, sometimes taking hours.

Then, out of the blue, a reader - Loren Kohnfelder - wrote to me to point out bugs in the site's RSS. He offered to help with text automation and we embarked on a month of intensive back-and-forth as he wrote a custom suite for me.

Those programs take my XML file and spit out all the files I need to publish my site, newsletter and master thread (which I pin to my profile). They've saved me more time than I can say. I probably couldn't kept this up without Loren's generous help (thank you, Loren!).

I open up the output from the scripts in gedit. I paste the blog post into the Wordpress draft and copy-paste the metadata tags into WP's "tags" field. I preview the post, tweak as necessary, and publish.

(And now I write this, I realize I forgot to mention that while I'm doing the graphics, I also create a square header image that makes a grid-collage out of the day's post images, using the Gimp's "alignment" tool)

(because I'm composing this in Twitter, it would be a LOT of work to insert that information further up in the post, where it would make sense to have it - see what I mean about an unforgiving medium?)

(While I'm on the subject: putting the "add tweet to thread" and "publish the whole thread" buttons next to each other is a cruel joke that has caused me to repeatedly publish before I was done, and deleting a thread after you publish it is a nightmare)

Now I paste the newsletter file into a new mail message, address it to my Mailman server, and create a custom subject for the day, send it, open the Mailman admin interface in a browser, and approve the message.

Now it's time to create that anthology post you can see pinned to my Mastodon and Twitter accounts. Loren's script uses a template to produce all the tweets for the day, but it's not easy to get that pre-written thread into Twitter and Mastodon.

Part of the problem is that each day's Twitter master thread has a tweet with a link to the day's Mastodon master thread ("Are you trying to wean yourself off Big Tech? Follow these threads on the #fediverse at @[email protected]. Here's today's edition: LINK").

So the first order of business is to create the Mastodon thread, pin it, copy the link to it, and paste it into the template for the Twitter thread, then create and pin the Twitter thread.

Now it's time to get ready for tomorrow. I open up the master XML template file and overwrite my daily working file with its contents. I edit the file's header with tomorrow's date, trim away any "Upcoming appearances" that have gone by, etc.

Then I compose tomorrow's retrospective links. I open tabs for this day a year ago, 5 years ago, 10 years ago, 15 years ago, and (now) 20 years ago:

http://memex.craphound.com/2020/01/14

http://memex.craphound.com/2016/01/14

http://memex.craphound.com/2011/01/14

http://memex.craphound.com/2006/01/14

http://memex.craphound.com/2001/01/14

I go through each day, and open anything I want to republish in its own tab, then open the OP link in the next tab (finding it in the @internetarchive if necessary). Then I copy my original headline and the link to the article into tomorrow's XML file, like so:

#10yrsago Disney World’s awful Tiki Room catches fire <a href="https://thedisneyblog.com/2011/01/12/fire-reported-at-magic-kingdom-tiki-room/">https://thedisneyblog.com/2011/01/12/fire-reported-at-magic-kingdom-tiki-room/</a>

And NOW my day is done.

So, why do I do all this?

First and foremost, I do it for ME. The memex I've created by thinking about and then describing every interesting thing I've encountered is hugely important for how I understand the world. It's the raw material of every novel, article, story and speech I write.

And I do it for the causes I believe in. There's stuff in this world I want to change for the better. Explaining what I think is wrong, and how it can be improved, is the best way I know for nudging it in a direction I want to see it move.

The more people I reach, the more it moves.

When I left Boing Boing, I lost access to a freestanding way of communicating. Though I had popular Twitter and Tumblr accounts, they are at the mercy of giant companies with itchy banhammers and arbitrary moderation policies.

I'd long been a fan of the POSSE - Post Own Site, Share Everywhere - ethic, the idea that your work lives on platforms you control, but that it travels to meet your readers wherever they are.

Pluralistic posts start out as Twitter threads because that's the most constrained medium I work in, but their permalinks (each with multiple hidden messages in their slugs) are anchored to a server I control.

When my threads get popular, I make a point of appending the pluralistic.net permalink to them.

When I started blogging, 20 years ago, blogger.com had few amenities. None of the familiar utilities of today's media came with the package.

Back then, I'd manually create my headlines with <h2> tags. I'd manually create discussion links for each post on Quicktopic. I'd manually paste each post into a Yahoo Groups email. All the guff I do today to publish Pluralistic is, in some way, nothing new.

20 years in, blogging is still a curious mix of both technical, literary and graphic bodgery, with each day's work demanding the kind of technical minutuae we were told would disappear with WYSIWYG desktop publishing.

I grew up in the back-rooms of print shops where my dad and his friends published radical newspapers, laying out editions with a razor-blade and rubber cement on a light table. Today, I spend hours slicing up ASCII with a cursor.

I go through my old posts every day. I know that much - most? - of them are not for the ages. But some of them are good. Some, I think, are great. They define who I am. They're my outboard brain.

37 notes

·

View notes

Text

A Step-by-Step SEO Guide for Dentists in 2021

A Step-by-Step SEO Guide for Dentists in 2021

This article is a really simple step-by-step guide for dentists from Experdent. Let's begin

What is Dental SEO Dental SEO is the optimization of your website so that it is easy for you to be found by patients online when they search for dental treatment or any service that you offer. In other words, SEO for dentists is to make their dental office rank higher in Google or Bing or Yahoo search results. Do people go to page-2 of Google? Yes, people do go to page-2 of Google. However, this is quite seldom. Over 70% of searchers will click on something that falls in the first five results on a Google page. And almost 90% will click on something on page-1. The rest may likely refine their search and search again rather than go to page-2.

Is SEO Digital Marketing? SEO is the digital equivalent of traditional inbound marketing. Let us explain: While many activities qualify as digital marketing, SEO is the foundation for all digital marketing processes. Conversely, a weak SEO can result in massive marketing expenditure to get results online. SEO builds cred for you with search engines. Think of it as a virtuous loop. The better the SEO for a website, the higher it will rank in search results. The higher it ranks in search results, the more people will click on it. The more people click on a consequence, this tells Google/Bing or any other search engine that yours is a quality website, and therefore the search engines push up the rank of your website even further. A high-ranking site on Google search, therefore, becomes a magnet for visitors. More visitors will lead to more conversions in any marketing funnel, i.e., more people becoming dental office patients.

Does SEO improve the visitor experience on your website? The foundation of SEO is in Technical SEO. Let's unpack that: SEO work for any quality SEO services business starts with a detailed website audit. This audit looks to fix these key elements that go into user experience: - Site Speed - Performance on Mobile - Intuitive navigation - Presence of Metadata including alt tags for images - Presence of appropriate keywords on pages - Submission of sitemaps to search engines In addition, SEO looks for compelling content and quality images and video to create an engaging experience for the visitor to a website. Through this process, technical SEO helps improve user experience while improving the website for search engines to crawl.

Is SEO advertising? The output of SEO is greater visibility for your content for the people who are searching for that content Let's say you live in downtown Toronto and you have a toothache. You will likely open up your computer or your smartphone and search something like this: - Family Dentist in downtown Toronto or - Dentist for a toothache or - Best dentist in Toronto or if its evening or the weekend - Dentist in Toronto open today If your website is optimized for SEO and contains this information (preferably in the schema), then your visitors may see your website featured on page-1 of Google. Thus, SEO is advertising by another means. Content that ranks is the best advertising because research has repeatedly shown that people will click on an organic link that appears more authentic. Research by Wordstream.com shows that "Clicks on paid search listings beat out organic clicks by nearly a 2:1 margin for keywords with high commercial intent in the US. In other words, 64.6% of people click on Google Ads when they are looking to buy an item online!" And here is the kicker - When you run an ad campaign, you get results only while the campaign is running and you are paying for clicks on your ad. In the case of SEO, however, you continue to get results for a long time. Yes, if you don't continuously optimize your website for SEO, your Search Rankings will eventually begin to drop. Why is that, you ask? Simply because your neighbours and competitors will not have stopped, they will continue improving and scaling their websites, and as a consequence, Google will keep pulling other websites over yours.

On-Page optimization Getting Google to like your website and show it on page-1 of its search results means that you need to bring quality to your pages and make them valuable and delightful for your visitors. Remember that the best websites are built for real visitors and not for search engines. The key elements that SEO helps you improve on-page are: - The quality of content on its page and its layout and organization for easy flow. The presence of images and video. - Headlines, subheadings are identified by Headline tags. For example, a page should ideally only have one headline tagged with an H1 tag. Sub headlines can go to H2 or H3 tags. Sub-sub headlines can go to H4, H5, and even H6 tags. - Metadata and descriptions that encourage clickthrough. This is the data that search engines showcase your pages within search results. - The amount of content on the page. Although the jury is still out on the length of an ideal article for Google to show it on page-1, research shows somewhere between 1000 and 1500 words. This does not mean that a page with 5000 words will consistently rank higher than a page with 1000 words. However, it does mean that when choosing between 2 pages that are otherwise identical in quality of content, Google will likely choose to showcase the page with more content in a higher position. - The number of pages on your website (remember, more is not always better). - The freshness of your content etc.

Off-Page optimization The links that other sites on the internet provide your website are essentially treated like votes. The larger the number of websites linking to your website or providing you with backlinks, the larger the number of votes for your website. This quantity of links helps Google decide where to rank your website. That said, all backlinks are not born equal. For example, a link from a higher ranking website carries more value than a brand new one-page website. Some backlinks can be toxic and be damaging for your website's rank on Google. (More on toxic backlinks and how to get rid of those in a future article). The list for how off-page links create value for you can be bullet-pointed as follows: - Citations or directory listings - In context, backlinks from articles or guest blogs - is the link reciprocal - this practice has the potential to reduce the value of the link received - The nature of the website that the link comes from: i.e., is the website in a related space? A link from a gambling website for a dentist is not likely to count as useful. - Keywords in anchor text could indicate that the link has been sourced or purchased. The best links have anchor text that is just the URL of your website or "read more" or "click here," etc.

Technical SEO We have mentioned earlier that Technical SEO is the foundation of SEO for a website. The critical elements of the work done under technical SEO are - Identification and removal of Crawl errors. - Check if your website is secure - check for HTTPS status - Check if XML sitemaps exist & if they have been submitted to major search engines - Check and improve Site Load time - Check whether the site is mobile friendly and upgrade as needed - Check and optimize robots.txt files - Check for keyword cannibalization and edit as needed - Check and improve site metadata, including image alt tags - Check for broken links and eliminate them or apply a 301 redirect that passes full link equity (ranking power) to the redirected page.

Yoast, a company that provides an extremely popular SEO plugin for Wordpress websites, in addition talks about the need for technical SEO to ensure that there is no duplicate content, nor are there any dead links in the website.

As a dentist, how do I build a high-quality website myself While we would recommend that you hire an experienced web developer to help develop or update your dental website, you could build a workable website quite easily as a DIY project There are many website development tools available, and you could choose between them for your DIY project. Three major platforms that you could use are: - WordPress - Squarespace - Wix WordPress will need the most significant amount of expertise, while both Squarespace and Wix use drag and drop functionality for you to build something quickly. There is an argument between SEOs about whether or not SEO is easier to do on WordPress versus Wix or Squarespace. However, what is clear is that WordPress enjoys a much larger ecosystem which gives you access to better tools and developers than does any other website builder. According to Search Engine Journal "WordPress content management system is used by 39.5% of all sites on the web."

Choose a reliable & high-quality hosting company If you go with Wix or Squarespace, the hosting is with the website builder's platform. If you build with WordPress or any other website builder platform, you will need to choose a high-quality, fast, and secure hosting service. Some of the major hosting services that you could consider are: - Amazon Web Services - Bluehost - Godaddy - WPengine - Siteground - Hostgator etc. There are quite literally hundreds of hosting companies for you to choose from. Choose a hosting service that has close to 100% uptime, which has enough bandwidth for your clients to visit without the speed of your website going down. Over and above that, the hosting service should have robust security and offer you a service of backups so that if your website gets damaged or hacked, they can put back the site in a matter of minutes.

How do you do keyword research? As a dentist, you have a scarcity of time. Still, given that you have decided to build a DIY website, you need to ensure that you do keyword research so that Google recognizes your content to contain the answers your future patients or clients are searching for. Some of the tools that you could use for keyword research are: - Google Keyword planner - this is a free tool - Semrush/Ahrefs/Moz - these are paid tools but will help you in getting a solid grounding of keywords

Submit your site to Google Once your website is ready, make sure to create your presence and submit it to the search engine with the following tools: - Google My Business - Google Maps - Bing Places - Yahoo Small business - Unfortunately, this tends to work only in the US unless you subscribe to some listing services.

Google Tools to monitor your website performance These tools will help you gauge the performance of your website traffic and help you find points of strengths and weaknesses on your website - Google Search Console - Google Analytics - Google Page Speed Insights

List yourself on review sites. While the best website for reviews that self selects itself is your Google my business (GMB) page. You would also find reviews very helpful from the following websites. - Facebook - Yelp - RateMyMD - Healthgrades

List your practice in online directories. A presence in online directories is a crucial aspect of SEO for any business. You should list your dental practice in as many relevant directories as possible. There is a wide variety of directories available, and you should choose well. Your choices will be along the lines of: - Local yellow pages or equivalent - Directories for your town or province - Directories for Dentists or healthcare providers - Directories of local chambers of commerce or industry organizations

Is your Dental SEO strategy working? Once you have done all this work, you will need to ask how you find out if your dental strategy is working? To get this answer, you will need to check for some of the following: - Website traffic - this is the first and foremost indicator of performance - Ranking keywords - What is the page and rank of each of the keywords you believe to be vital. And, of course, how do these keyword ranks change over time. - Reviews - the number of reviews and the grade you get on your reviews is outside SEO in a technical sense. However, reviews create a very favourable feedback loop for search engines. Generally speaking, a large number of reviews will push a website up in ranking.

How do you find how you rank without subscribing to expensive SEO tools? If you check your keywords using your computer, the computer will respond based on your search history, thereby potentially fooling you into thinking that your rank has improved. Instead, there is a simple trick to finding how you rank for your target keywords. First, open an "incognito" session in a browser such as Chrome and then search for your keyword. If you don't find it on page-1, go to page-2 continue until you find it. Of course, if you don't rank even on page-10, then functionally, you don't rank for that keyword.

How can Experdent help? While you can most definitely do all of the work that we have enumerated here, you could choose to get expert help to create powerful SEO for your website. At Experdent, our focus is SEO for dentists, and that is just what we do. So, get in touch, send us an email, or call us to set up a time to chat very quickly. At Experdent Web Services, we put these very ideas into practice. We are results-driven, dentist-focused, and experts in SEO for dental offices in North America. So, if you are a dental office looking for SEO or need advice on your digital strategy, call us for a free 30-minute consult.

1 note

·

View note

Text

Version 505

youtube

Windows release got a hotfix! If you got 505a right after the release was posted and everything is a bad darkmode, get the new one!

windows

zip

exe

macOS

app

linux

tar.gz

I had a great couple of weeks fixing bugs, exposing EXIF and other embedded metadata better, and making it easier for anyone to run the client from source.

full changelog (big one this week)

EXIF

I added tentative EXIF support a little while ago. It wasn't very good--it never knew if a file had EXIF before you checked, so it was inconvenient, and non searchable--but the basic framework was there. This week I make that prototype more useful.

First off, the client doesn't just look at EXIF. It also scans images and animations for miscellaneous 'human-readable embedded metadata'. This is often some technical timing or DPI data, or information about the program that created the file, but, most neatly, for the new AI/ML-drawn images everyone has been playing with, many of the generation engines embed the creation prompt in the header of the output png, and this is now viewable in the client!

Secondly, the client now knows ahead of time which files have this data to show. A new file maintenance job will be scheduled on update for all your existing images and animations to retroactively check for this, and they will fill in in the background over the next few weeks. You can now search for which files have known EXIF or other embedded metadata under a new combined 'system:embedded metadata' predicate, which works like 'system:dimensions' and also bundles the old 'system:has icc profile' predicates.

Also, the 'cog' button in the media viewer's top hover window where you would check for EXIF is replaced by a 'text on window' icon that is only in view if the file has something to show.

Have a play with this and let me know how it goes. The next step here will be to store the actual keys and values of EXIF and other metadata in the database so you can search them specifically. It should be possible to allow some form of 'system:EXIF ISO level>400' or 'system:has "parameters" embedded text value'.

running from source

I have written Linux (.sh) and macOS (.command) versions of the 'running from source' easy-setup scripts. The help is updated too, here:

https://hydrusnetwork.github.io/hydrus/running_from_source.html

I've also updated the setup script and process to be simpler and give you guidance on every decision. If you have had trouble getting the builds to work on your OS, please try running from source from now on. Running from source is the best way to relieve compatibility problems.

I've been working with some users to get the Linux build, as linked above, to have better mpv support. We figured out a solution (basically rolling back some libraries to improve compatibility), so more users should get good mpv off the bat from the build, but the duct tape is really straining here. if you have any trouble with it, or if you are running Ubuntu 22.04 equivalent, I strongly recommend you just move to running from source.

If these new scripts go well, I think that, in two or three months, I may stop putting out the Linux build. It really is the better way to run the program, at least certainly in Linux where you have all the tools already there. You can update in a few seconds and don't get crashes!

misc highlights

If you are interested in changing page drag and drop behaviour or regularly have overfull page tab bars, check the new checkboxes in options->gui pages.

If you are on Windows and have the default options->style, booting the client with your Windows 'app darkmode' turned on should magically draw most of the client stuff in the correct dark colours! Switching between light and dark while the program is running seems buggy, but this is a step forward. My fingers are crossed that future Qt versions improve this feature, including for multiplatform.

Thanks to a user, the twitter downloader is fixed. The twitter team changed a tiny thing a few days ago. Not sure if it is to do with Elon or not; we'll see if they make more significant changes in future.

I fixed a crazy bug in the options when you edit a colour but find simply moving the mouse over the 'colour gradient' rectangle would act like a drag, constantly selecting. This is due to a Qt bug, but I patched it on our side. It happens if you have certain styles set under options->style, and the price of fixing the bug is I have to add a couple seconds of lag to booting and exiting a colour picker dialog. If you need to change a lot of colours, then set your style to default for a bit, where there is no lag.

next week

I pushed it hard recently, and I am due a cleanup week, so I am going to take it easy and just do some refactoring and simple fixes.

0 notes

Text

Archivists Are Mining Parler Metadata to Pinpoint Crimes at the Capitol

Using a massive 56.7 terabyte archive of the far-right social media site Parler that was captured on Sunday, open-source analysts, hobby archivists, and computer scientists are working together to catalog videos and photos that were taken at the attack on the U.S. Capitol last Wednesday.

Over the last few days, Parler was deplatformed by Amazon Web Services, the Google Play Store, and the Apple App Store, which has taken it offline (at least temporarily). But before it disappeared, a small group of archivists made a copy of the overwhelming majority of posts on the site.

While all the data scraped from Parler was publicly available, archiving it allows analysts to extract the EXIF metadata from photos and videos uploaded to the social media site en masse and to examine specific ones that were taken at the insurrection on Capitol Hill. This data includes specific GPS coordinates as well as the date and time the photos were taken. These are now being analyzed in IRC chat channels by a handful of people, some of whom believe crimes can be catalogued and given to the FBI.

"I hope that it can be used to hold people accountable and to prevent more death," donk_enby, the hacker who led the archiving project, told Motherboard on Monday.

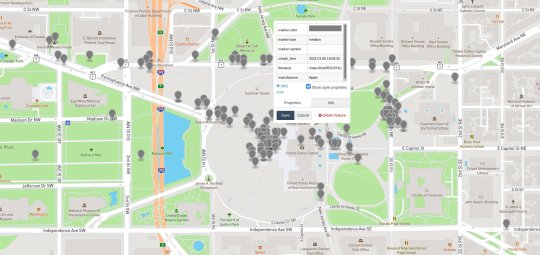

One technologist took the scraped Parler data, took every file that had GPS coordinates included within it, formatted that information into JSON, and plotted those onto a map. The technologist then shared screenshots of their map with Motherboard, showing Parler posts originating from various countries, and then the United States, and finally in or around the Capitol itself. In other words, they were able to show that Parler users were posting material from the Capitol on the day of the rioting, and can now go back into the rest of the Parler data to retrieve specific material from that time.

They also shared the newly formatted geolocation data with Motherboard. Motherboard granted the technologist anonymity to speak more candidly about a potentially sensitive topic.

Some of the plotted Parler GPS data. Image: Motherboard

The technologist said that, to at least some extent, since this data shows the use of Parler during the Capitol raid attempt, "that's a piece of the overall puzzle which someone, somewhere can use."

"It's definitely to help facilitate or otherwise create another exposure that the public can consume," they added, explaining their motivations for cleaning the Parler data.

This particular technologist did not distribute their version of the data more widely, however, with the aim of preventing abuse and misuse of the data.

"Sure, the source data are already public. But that doesn't mean I have to add an even easier path to data misuse," they said.

"For this Parler data, it would clearly not be correct to say 'every single user is a Nazi' and so by complete disclosure you are enabling someone who WOULD hold such a narrative to make bad choices and take bad actions if they wished," they added.

Do you know anything else about the Parler data? We'd love to hear from you. Using a non-work phone or computer, you can contact Joseph Cox securely on Signal on +44 20 8133 5190, Wickr on josephcox, OTR chat on [email protected], or email [email protected].

Earlier on Tuesday, an analysis of the metadata by Gizmodo also showed that Parler users made it into the Capitol.

Others who have managed to get their hands on the Parler data have begun to make lists of videos and photos that have GPS coordinates on Capitol Hill, and have written scripts to pull those videos from the broader dump so people can analyze them. On an IRC chat channel, a small group of people are watching and analyzing videos and are posting their video IDs and description into a Google spreadsheet called "Notable Parler Videos." One description reads: "at the capital, pushing police, guy in MAGA hat screaming 'I need some violence now.'" A description for the IRC channel includes a link to an FBI tip line specifically targeted at identifying people at the riot.

One open source project calling itself Parler Analysis has collected different tools from around the web to handle the data in different ways. One is used to scrape usernames, for example, while another is for extracting images and videos, and yet another is an alternative cleaned dataset of cleaned Parler geolocation coordinates in a different format.

Subscribe to our cybersecurity podcast CYBER, here.

Archivists Are Mining Parler Metadata to Pinpoint Crimes at the Capitol syndicated from https://triviaqaweb.wordpress.com/feed/

0 notes

Text

Version 312

youtube

windows

zip

exe

os x

app

tar.gz

linux

tar.gz

source

tar.gz

I had an ok week. I mostly worked on smaller downloader jobs and tag import options.

tag import options

Tag import options now has more controls. There is a new 'cog' icon that lets you determine if tags should be applied--much like file import options's recent 'presentation' checkboxes--to 'new files', 'already in inbox', and 'already in archive', and there is an entry to only add tags that already exist (i.e. have non-zero count) on the service.

Sibling and parent filtering is also more robust, being applied before and after tag import options does its filtering. And the 'all namespaces' compromise solution used by the old defaults in file->options and network->manage default tag import options is now automatically replaced with the newer 'get all tags'.

Due to the sibling and parent changes, if you have a subscription to Rule34Hentai or another site that only has 'unnamespaced' tags, please make sure you edit its tag import options and change it to 'get all tags', as any unnamespaced tags that now get sibling-collapsed to 'creator' or 'series' pre-filtering will otherwise be discarded. Due to the more complicated download system taking over, 'get all tags' is the new option to go for if you just want everything, and I recommend it for everyone.

For those who do just want a subset of available tags, I will likely be reducing/phasing out the explicit namespace selection in exchange for a more complicated tag filter object. I also expect to add some commands to make it easier to mass-change tag import options for subscriptions and to tell downloaders and subscriptions just to always use the default, whatever that happens to be.

misc downloader stuff

I have added a Deviant Art parser. It now fetches the embedded image if the artist has disabled high-res download, and if it encounters a nsfw age-gate, it sets an 'ignored' status (the old downloader fetched a lower-quality version of the nsfw image). We will fix this ignored status when the new login system is in place.

Speaking of which, the edit subscriptions panels now have 'retry ignored' buttons, which you may wish to fire on your pixiv subscriptions. This will retry everything has has previously been ignored due to being manga, and should help in future as more 'ignored' problems are fixed.

The 'checker options' on watchers and subscriptions will now keep a fixed check phase if you set a static check period. So, if you set the static period as exactly seven days, and the sub first runs on Wednesday afternoon, it will now always set a next check time of the next Wed afternoon, no matter if they actually happen to subsequently run on Wed afternoon or Thurs morning or a Monday three weeks later. Previously, the static check period was being added to the 'last completed check time', meaning these static checks were creeping forward a few hours every check. If you wish to set the check time for these subs, please use the 'check now' button to force a phase reset.

I've jiggled the multiple watcher's sort variables around so that by default they will sort with subject alphabetical but grouped by status, with interesting statuses like DEAD at the top. It should make the default easier to at-a-glance see if you need to action anything.

full list

converted much of the increasingly complicated tag import options to a new sub-object that simplifies a lot of code and makes things easier to serialise and update in future

tag import options now allows you to set whether tags should be applied to new files/already in inbox/already in archive, much like the file import options' 'presentation' checkboxes

tag import options now allows you to set whether tags should be filtered to only those that already have a non-zero current count on that tag service (i.e. only tags that 'already exist')

tag import options now has two 'fetch if already in db' checkboxes--for url and hash matches separately (the hash stuff is advanced, but this new distinction will be of increasing use in the future)

tag import options now applies sibling and parent collapse/expansion before tag filtering, which will improve filtering accuracy (so if you only want creator tags, and a sibling would convert an unnamespaced tag up to a creator, you will now get it)

the old 'all namespaces' checkbox is now removed from some 'defaults' areas, and any default tag import options that had it checked will instead get 'get all' checked as they update

caught up the ui and importer code to deal with these tag import option changes

improved how some 'should download metadata/file' pre-import checking works

moved all complicated 'let's derive some specific tag import options from these defaults' code to the tag import options object itself

wrote some decent unit tests for tag import options

wrote a parser for deviant art. it has source time now, and falls back to the embedded image if the artist has disabled high-res downloading. if it finds a mature content click-through (due to not being logged in), it will now veto and set 'ignored' status (we will revisit this and get high quality nsfw from DA when the login manager works.)

if a check timings object (like for a subscription or watcher) has a 'static' check interval, it will now apply that period to the 'last next check time', so if you set it to check every seven days, starting on Wednesday night, it will now repeatedly check on Wed night, not creep forward a few minutes/hours every time due to applying time to the 'last check completed time'. if you were hit by this, hit 'check now' to reset your next check time to now

the multiple watcher now sorts by status by default, and blank status now sorts below DEAD and the others, so you should get a neat subject-alphabetical sort grouped by interesting-status-first now right from the start

added 'clear all multiwatcher highlights' to 'pages' menu

fixed a typo bug in the new multiple watcher options-setting buttons

added 'retry ignored' buttons to edit subscription/subscriptions panels, so you can retry pixiv manga pages en masse

added 'always show iso time' checkbox to options->gui, which will stop replacing some recent timestamps with '5 minutes ago'

fixed an index-selection issue with compound formulae in the new parsing system

fixed a file progress count status error in subscriptions that was reducing progress rather than increasing range when the post urls created new urls

improved error handling when a file import object's index can't be figured out in the file import list

to clear up confusion, the crash recovery dialog now puts the name of the default session it would like to try loading on its ok button

the new listctrl class will now always sort strings in a case-insensitive way

wrote a simple 'fetch a url' debug routine for the help->debug menu that will help better diagnose various parse and login issues in future

fixed an issue where the autocomplete dropdown float window could sometimes get stuck in 'show float' mode when it spawned a new window while having focus (usually due to activating/right-clicking a tag in the list and hitting 'show in new page'). any other instances of the dropdown getting stuck on should now also be fixable/fixed with a simple page change

improved how some checkbox menu data is handled

started work on a gallery log, which will record and action gallery urls in the new system much like the file import status area

significant refactoring of file import objects--there are now 'file seeds' and 'gallery seeds'

added an interesting new 'alterate' duplicate example to duplicates help

brushed off and added some more examples to duplicates help, thanks to users for the contributions

misc refactoring

next week

I also got started on the gallery overhaul this week, and I feel good about where I am going. I will keep working on this and hope to roll out a 'gallery log'--very similar to the file import status panel, that will list all gallery pages hit during a downloader's history with status and how many links parsed and so on--within the next week or two.

The number of missing entries in network->manage url class links is also shrinking. A few more parsers to do here, and then I will feel comfortable to start removing old downloader code completely.

1 note

·

View note

Photo

Russian efforts to meddle in American politics did not end at Facebook and Twitter. A CNN investigation of a Russian-linked account shows its tentacles extended to YouTube, Tumblr and even Pokémon Go.

PRATE NOTE: THE ABSOLUTE STATE OF THE RUSSIAN FEARMONGERING IN AMERICAN MEDIA

------------------------

One Russian-linked campaign posing as part of the Black Lives Matter movement used Facebook, Instagram, Twitter, YouTube, Tumblr and Pokémon Go and even contacted some reporters in an effort to exploit racial tensions and sow discord among Americans, CNN has learned.

The campaign, titled "Don't Shoot Us," offers new insights into how Russian agents created a broad online ecosystem where divisive political messages were reinforced across multiple platforms, amplifying a campaign that appears to have been run from one source -- the shadowy, Kremlin-linked troll farm known as the Internet Research Agency.

A source familiar with the matter confirmed to CNN that the Don't Shoot Us Facebook page was one of the 470 accounts taken down after the company determined they were linked to the IRA. CNN has separately established the links between the Facebook page and the other Don't Shoot Us accounts.

The Don't Shoot Us campaign -- the title of which may have referenced the "Hands Up, Don't Shoot" slogan that became popular in the wake of the shooting of Michael Brown -- used these platforms to highlight incidents of alleged police brutality, with what may have been the dual goal of galvanizing African Americans to protest and encouraging other Americans to view black activism as a rising threat.

The Facebook, Instagram and Twitter accounts belonging to the campaign are currently suspended. The group's YouTube channel and website were both still active as of Thursday morning. The Tumblr page now posts about Palestine.

Related: Exclusive: Russian-linked group sold merchandise online

All of the aforementioned companies declined to comment on the Don't Shoot Us campaign. Representatives from Facebook, Twitter and Alphabet, the parent company of Google and YouTube, have agreed to testify before the Senate and House Intelligence Committees on November 1, according to sources at all three companies

Tracing the links between the various Don't Shoot Us social media accounts shows how one YouTube video or Twitter post could lead users down a rabbit hole of activist messaging and ultimately encourage them to take action.

The Don't Shoot Us YouTube page, which is simply titled "Don't Shoot," contains more than 200 videos of news reports, police surveillance tape and amateur footage showing incidents of alleged police brutality. These videos, which were posted between May and December of 2016, have been viewed more than 368,000 times.

All of these YouTube videos link back to a donotshoot.us website. This website was registered in March 2016 to a "Clerk York" in Illinois. Public records do not show any evidence that someone named Clerk York lives in Illinois. The street address and phone number listed in the website's registration belong to a shopping mall in North Riverside, Illinois.

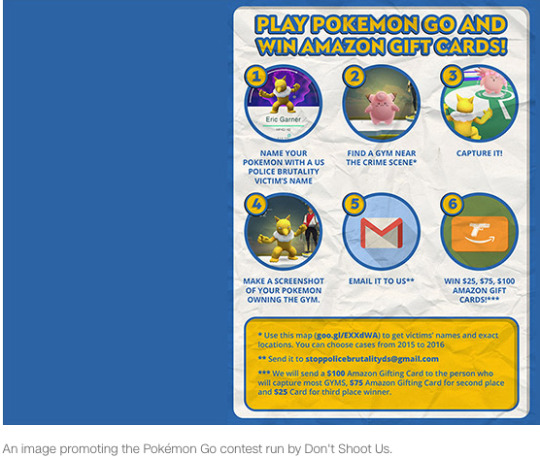

The donotshoot.us website in turn links to a Tumblr account. In July 2016, this Tumblr account announced a contest encouraging readers to play Pokémon Go, the augmented reality game in which users go out into the real world and use their phones to find and "train" Pokémon characters.

Specifically, the Don't Shoot Us contest directed readers to go to find and train Pokémon near locations where alleged incidents of police brutality had taken place. Users were instructed to give their Pokémon names corresponding with those of the victims. A post promoting the contest showed a Pokémon named "Eric Garner," for the African-American man who died after being put in a chokehold by a New York Police Department officer.

Winners of the contest would receive Amazon gift cards, the announcement said.

It's unclear what the people behind the contest hoped to accomplish, though it may have been to remind people living near places where these incidents had taken place of what had happened and to upset or anger them.

CNN has not found any evidence that any Pokémon Go users attempted to enter the contest, or whether any of the Amazon Gift Cards that were promised were ever awarded -- or, indeed, whether the people who designed the contest ever had any intention of awarding the prizes.

"It's clear from the images shared with us by CNN that our game assets were appropriated and misused in promotions by third parties without our permission," Niantic, the makers of Pokémon Go, said in a statement provided to CNN.

"It is important to note that Pokémon GO, as a platform, was not and cannot be used to share information between users in the app so our platform was in no way being used. This 'contest' required people to take screen shots from their phone and share over other social networks, not within our game. Niantic will consider our response as we learn more."

The Tumblr page that promoted the contest no longer posts about U.S. police violence. It now appears to be devoted pro-Palestine campaigns.

Tumblr would not confirm to CNN if the same people who operated the Tumblr page about Black Lives Matter now operate the pro-Palestinian page, citing the company's privacy policy. Tumblr also would not say whether it is investigating potential Russian use of its platform before, during or after the 2016 presidential election.

Related: Facebook could still be weaponized again for the 2018 midterms

Don't Shoot Us also worked to spread its influence beyond the digital world.

It used Facebook -- on which it had more than 254,000 likes as of September 2016 -- to publicize at least one real-world event designed to appear to be part of the Black Lives Matter Movement.

Just a day after the shooting of Philando Castile by police in a suburb of Saint Paul, Minnesota in July 2016, local activists in Minnesota noticed a Facebook event for a protest being shared by a group they didn't recognize.

Don't Shoot Us was publicizing a protest outside the St. Anthony Police Department, where Jeronimo Yanez, the officer who shot Castile, worked. Local activists had been protesting outside the Minnesota Governor's Mansion.

When an activist group with ties to a local union reached out to the page, someone with Don't Shoot Us replied and explained that they were not in Minnesota but planned to open a "chapter" in the state in the following months.

The local group became more suspicious. After investigating further, including finding the website registration information showing a mall address, they posted on their website to say that Don't Shoot Us was a "total troll job."

CNN has reached out to those local activists but had not heard back as of the time of this article's publication.

Brandon Long, the state party chairman of the Green Party of Minnesota, remembers hearing about the planned Don't Shoot Us event. He told CNN, "We frequently support Black Lives Matter protests and demonstrations and we know pretty much all the organizers in town and that page wasn't recognized by anyone."

This was not the only event that Don't Shoot Us worked to promote.

In June 2016, someone using the Gmail address that had been posted as part of the Pokémon Go contest promotion reached out to Brandon Weigel, an editor at Baltimore City Paper, to promote a protest at a courthouse where one of the officers involved in the arrest of Freddie Gray was due to appear.

The email made Weigel suspicious. "City Paper editors and reporters are familiar with many of the activist groups doing work in Baltimore, so it was strange to receive an email from an outside group trying to start a protest outside the courthouse," Weigel told CNN.

Weigel wasn't the only reporter to be on the receiving end of communications from Don't Shoot Us. Last January, someone named Daniel Reed, who was described as the "Chief Editor" of DoNotShoot.Us, gave an interview to a contributor at the now defunct International Press Foundation (IPF), a website where students and trainee journalists regularly posted articles.

"There is no civilised country in the world that suffers so many cases of police brutality against civilians," IPF quoted "Reed" as saying, among other things. (IPF was responsible for the British spelling of "civilised.")

The IPF contributor confirmed to CNN that the interview occurred through email and that she never spoke to "Reed" on the phone. The email address that "Reed" used for the interview was the same one that reached out to Weigel in Baltimore and that was included in the promotion for the Pokémon Go contest.

"Reed" sent the answers to IPF's questions in a four-page Microsoft Word document. The document, which outlined what "Reed" described as problems with the American justice system and police brutality, was written entirely in English.

However, when CNN examined the document metadata, "Название," the Russian word for "name," was part of the document properties.

Two cybersecurity experts who reviewed the document's metadata told CNN that it was likely created on a computer or a program running Russian as its primary language.

To date, Facebook has said that it identified 470 accounts linked to the Internet Research Agency, while Twitter has identified 201 accounts. Google has not released its findings, though CNN has confirmed that the company has identified tens of thousands of dollars spent on ad buys by Russian accounts.

Facebook and Twitter have submitted detailed records of their findings to both Congress and the office of Special Counsel Robert Mueller, who is conducting an investigation into Russian meddling in the 2016 presidential campaign.

On Friday, Maria Zakharova, the spokeswoman for the Russian Foreign Ministry, made her displeasure with this story clear in a Facebook post written in Russian, calling CNN a "talentless television channel" and saying,"Again the Russians are to blame... and the Pokémons they control."

-- CNN's Jose Pagliery and Tal Yellin contributed reporting.

Fcuking hilarioooouss

MEDIA E D I A

ok, lets break it down:

1. the sustained russia narrative serves the purpose of preventing the left wing in America to have a moment of self-reflection WRT the corruption and plutocracy inherent within the Democrat Party after the complete collapse of Hillary’s Presidential bid. The Democrats certainly dont want to fan the flames of the populist left and that has been their primary enemy since day 1 of the trump Presidency (and before, if you consider how Bernie got marginalized).

2. The russia narrative sustains viewership, and it always has been, as a corporate media understood “nothing burger” in order to fleece viewership ratings from angry americans seeking someone to blame for the loss of Clinton. Liberal americans shocked about Trumps victory want something to blame and scapegoating a hostile foreign nation certainly feeds that desperate search while misdirecting their attention away from the personal failures of the Clinton campaign and the bombshells from the leaks.

3. The affiliation of Black Lives Matter with Russia Hacked The Election™ has the effect of undermining progressive support among liberal and centrist voters, who will now disregard news of police brutality as Fake News Russia Collusion in favour of Trump

What’s more, they will begin to understand Black Lives Matter as an organization produced by Russia and thus, double plus ungood and of course pointing out BLM with now prompt calls of Putin supporting FAKE NEEEeEEEeeEEEeeeeWWWWSSS!!!.

4. The narrative building up Russia as a super genius, ultimate evil that has infiltrated american society and the minds of it’s unruly youth and uppity minorities is cemented in the minds of baby boomers. The illusion of Putin as this super genius movie villain serves the enduring American hegemonic goal of destroying russia by preparing the American public for war, you see, because Russia is already at war with America, and thus it must be stopped. They have infiltrated pokemon go, by god. What’s next? Mind control rays?! This is McCarthyism at its FINEST my dudes.

Why the left isnt losing their fucking minds and pointing out the obvious nature of this psyop against the mostly democrat viewership of nighttime newcasts like CNN is beyond me.

But true progressives always lose, because they cant think around this shit in a lateral way. Progressive activism functions like constantly charging at the door with a nazi symbol crudely drawn on it, hoping continued, unrelenting brute strength wins the day as they get get outmaneuvered by their liberal “allies” and the right wing who exploit their bullheadedness.

30 notes

·

View notes

Text

The No. 1 Question Everyone Working in premire 'collaborative video editing Should Know How to Answer

If you want to strike that same balance on your video project, this tool might be useful. For instance, video teams can use Workzone to access a range of reports that outline the progress they have made on a given project. General video production software can often be a great choice, given that these tools strongly emphasize usability. As an added bonus, these tools can be adjusted to fit your exact needs. Your team members will work very hard on your project, and their time will often be limited.

How do I export Premiere Pro to mp4?

MP4 is a file container format, while H. 264 is actually a video compression codec that requires a video container to host the encoded video. Most of the time, H. 264 refers to MP4 file encoded with H.

Tags: Editing, File Management, Organization, Premiere Pro, Productivity

This development further boosts workflow efficiency for media professionals, allowing them to work within one interface without the need for jumping from one UI to the other, which takes extra time and stifles the creative process. Axle 2016.2 is the latest edition of axle’s award-winning media management software, now optimized for media libraries with up to 1 million assets. Simply point axle at the media files you want to manage and it automatically creates low-bandwidth proxies you can then access from any web browser. There’s no need to move your media files or change your system setup. Our new plug-in panel for Adobe Premiere® Pro CC, included with every axle 2016 system, enables editors to search, see previews and begin working on footage without leaving their favorite NLE software.

Production, without the stress

But the Digital Asset Manager is more than just a big geek overseeing information and data in all its forms. While the role requires sharp analytical skills, it is the crossover skills tied to people and how they interact with digital technologies that are equally important. Metadata for an asset can include its packaging, encoding, provenance, ownership and access rights, and location of original creation. It is used to provide hints to the tools and systems used to work on, or with, the asset about how it should be handled and displayed. For example, DAM helps organizations reduce asset-request time by making media requests self-serving.

For whatever reason, it’s always nice to be able to work off of a version of your project that is linked to proxy media (meaning low-res versions of your clips). In the past, it was critical that editors would plan for a proxy workflow before they would start editing, and generate proxy files to be ingested into their NLE when first setting up their session.

Limelight Video Platform is the fastest and most intuitive way to manage and distribute online video to media devices everywhere. The power and simplicity of Limelight Video Platform lets you manage, publish, syndicate, measure, and monetize web video with ease.

How does digital asset management work?

Mid-range systems, supporting multiple users and 50-300GB of storage, have entry-level products in the range of $2,300 - $15,000 per annum. These can be hosted, cloud-based or installed on your own infrastructure.

How To Edit Vocals On Neva 7 Post Production Software?

How does a digital asset management system work?

A much better way to use illustrations is to employ visual assets — photos, charts, visual representations of concepts, comics or annotated screenshots used to make a point. Visual assets complement a story rather than telling the story entirely like an infographic does.

You can also subscribe to my Premiere Gal YouTube channel for weekly video editing and production tutorials to help you create better video. Both Adobe Premiere vs Final Cut Pro offers almost the same kind of video editing but still, they differ in a lot of ways. Because Adobe Premiere comes as part of Adobe’s Creative Cloud, which is a Marvel’s Avengers-esque suite of tools to support your video making.

Support for ProRes on macOS and Windows streamlines video production and simplifies final output, including server-based remote rendering with Adobe Media Encoder. Adobe Premiere Pro supports several audio and video formats, making your post-production workflows compatible with the latest broadcast formats. Over time, these cache files can not only fill up your disk space, but also slow down your drive and your video editing workflow.

Some filename extensions—such as MOV, AVI, and MXF denote container file formats rather than denoting specific audio, video, or image data formats. Container files can contain data encoded using various compression and encoding schemes. Premiere Pro can import these container files, but the ability to import the data that they contain depends on the codecs (specifically, decoders) installed. Learn about the latest video, audio, and still-image formats that are supported by Adobe Premiere Pro.

Now when you load a timeline from the Media Browser it automatically opens a sequences tab called Name (Source Monitor) that is read only. Premiere has always had a Project Manager but what it was never able to do is transcode and what it wasn’t very good at was truncating clips to collect only what was used in the edit. I have had only partial success with the Project Manager over the years and with some formats it would never work properly with Premiere copying the entire clip instead of just the media used with handles specified.

Depending on the clips you have and the types of metadata you are working with, you might want to display or hide different kinds of information. On one hand, this is great – you get updates to the software as soon as they’re pushed out, and the $20/month annual subscription fee beats paying hundreds of dollars up front. Regardless of how deep you want to go into the video editing rabbit hole, the best reason to get Premiere Pro CC are its time-saving features. JKL trimming is the most noteworthy as it lets you watch a clip and edit it in real time just by using three keyboard shortcuts!

What editing software do Youtubers use?

Many effects and plugins for Premiere Pro CC require GPU projective post production editing acceleration for rendering and playback. If you don't have this on, you will either get a warning or experience higher render times and very slow playback. To make sure you do have this on, go to File > Project Settings > General.

The footage is stunning, and I find myself wanting to work on it right away, but before I can do that I must back it up, but before I can even touch it I must make sure I have the contracts for each drive that is coming in. We work with and commission many different videographers and photographers so I need to know which office the drive came from and who it belongs to, what the rights are, etc…that is something I highly recommend. I have a folder labeled contract/release forms on our internal server so I know which contract goes to which hard drive/footage. One of the first things I do now, is label a drive since we are working with so many, I need to know what footage is on what drive. Once I get the drives and back up the footage, I then create stories depending on the project and I will also create b-roll packages.

0 notes

Text

Basics of On Page Optimization Checklist for Your SEO Campaign

This piece of writing will be centered on the importance of onpage optimization and the basic elements of on-page SEO optimization checklist for your SEO campaign and the critical role played by it in the lasting success and catering to the corporate identity needs that entrepreneurs may have.

Importance of on page optimization

Many new online marketing channels have appeared; lately, they are centered on sizable bites of the internet marketing chart.

With so many changes taking place, many business owners opted for popular options like social media marketing and skipping the idea of SEO campaigns without carefully assessing, analyzing and understanding the real data.

While there are others who surrendered to the saga that “SEO is no more” OR “SEO is dead”. They believed that driving target audience to their website can no more be done with the help of search engine optimization campaigns.

The truth is, “

SEO is alive and kicking it is still the major source of

driving traffic to website

, provided one follows what the doctor (Google) and other major search engines have ordered.

To stay on top of this domain relying on

SEO services

that’s trustworthy and affordable in nature would be one’s best bet.

On page optimization checklist 2019

Moving on to the significance of On-Page SEO, the following are some basic factors that one must be familiar with and consider in SEO campaign, if the idea is to hit the rock hard and get fruitful and stunning results:

1. Choose the right and relevant set of keywords:

Keyword research can be classified as the core first step associated with almost any online promotional campaign, more importantly when it is a goal to optimize the On-page SEO factors. It is essential that one chooses the relevant and

long tail keywords

to attract the right and relevant target visitors to specific pages.

2. One must come up with dashing domain names:

This is a core element to have a perfect domain name for website. An On page SEO optimization can be easily turned into success if one keeps this element intact. Following are some additional tips associated with domain name optimization:

Consistent Domains:

Old school domains:

Domain names spelt in old school passion will always be found with ease when compared to some of the unorthodox ones.

URLs holding page keywords:

The use of keywords at the time of creating the page, blog post name and categories are always going to be useful, make this your habit if you mean business.

A good example here would be

3. Metadata Optimization:

One as a SEO expert must not forget that each page of one’s website would require perfect optimization. This will help them in becoming further search engine friendly with the help of elements like Meta Tile, Descriptions, Meta Keywords and Alt tags.

Title Tags:

Keep them between 60 characters to be precise. They are the text advertisement for any particular page where they are used, and they are the first things seen by readers even before the loading of the actual website on their browsers. Keep them attractive yet to the point and unique for optimum results.

Meta Description:

This is a brief summary that represents that actual content which can be found on a particular page for which it has been crafted. It will be appearing as a short version on SERPs just to provide visitors with a quick insight of what’s available on offer. This must not be duplicated or plagiarized.

4. Creation of unique and relevant content for onpage:

This is one of the most sensitive and tricky phase. One would need to ensure that the content offered for website On page is not only unique and relevant but at the same time backed with a perfect balance of the target keywords. Remember, satisfying search engines would not be an easy mission.

Search engine bots and crawlers will find it easy to rank one’s website accordingly based on the quality of content being used, embedding relevant and particular keywords and as mentioned above, one must avoid using copied content.

Core content:

This ideally would be the main text, descriptions, and titles set for each page. The content should be specifically focused on that particular page as this will help in keeping the relevancy intact.

Authority:

If written professionally, your content will improve the authoritativeness of your web pages and others will be attracted towards it, they will find it hard to avoid it and would definitely want to use the material shared by you as a reference or even may proudly link to it.

User experience:

All the content added to web pages must be relevant, simple and easy to understand. This will provide your target audience with excellent user experience and result in retaining them for more extended periods of time.

This approach shall not be limited to text content only, other elements like

easy navigation, images

and videos etc., all fall under these brackets.

All the navigation must respond adequately, i.e. there should be no broken link.

Page loading time

shall be remarkably fast; else the target audience will disregard a website and switch to another one that possesses all the said qualities when it comes to its performance.

5. On Page SEO techniques for media file optimization:

Don’t limit your focus only on text content when fine-tuning the on-page SEO setup. You have the liberty to focus on other associated media types such as images, video and other non-text elements.

Remember, there are many social media elements, and their span of focus may vary from one type to another when it comes to content. Focusing on all of them in their unique passion will improve your web page visibility on SERPs a great deal.

Some of the highly appreciated and best exercises for media files optimization comprised of:

File names Optimization:

The use of descriptive file names for content like images and video, preferably a relevant keyword is the best practice. For example,

Alt tags or Alt text Optimization:

It is essential to complete this attribute with a brief description of the content used, i.e. an image or a video. Search engine bots and crawlers rely on these tags when identifying them.

Because these crawlers can only read the text, therefore, the utilization of such Alt Text attributes may help one in optimizing the image to perfection for amplified search results.

6. Creating Product/Service-oriented landing pages:

This approach of having specific product landing pages no doubt will play the beneficial role and rank easily in search engines. Such specific pages enable one to share detailed information about particular products and services, as a results target audience find them easy because they are educated with the help of such pages.

One as a webmaster can add the most profitable keywords, by inserting them in rightly in Meta description and Title for fruitful results. There is no limit on what should be the number of specific pages; it all depends on the number of products and services.

So, you may

create specific product pages

as many as you need; however, you must ensure that they are backed with the right and relevant keywords, product and service description and are fully optimized with no slow loading speeds or broken links.

Pulling the brakes:

That’s it for today, all the on-page SEO factors and techniques for optimization shared above can be applied immediately by one for the sake of better ranking in SERPs.

Here it is essential to understand that this process would require ample time as it is time-consuming. Rushing out, therefore, in this crucial phase may not help as one may end up in making small blunders that may result in significant losses.

No need to rush, take care of things in a step-by-step manner or alternatively consult with

One must not take SEO campaigns on lighter notes, they are here for good, alive and kicking and it is SEO that can uplift the overall image of one’s brand and corporate identity, no matter which part of the world one operates.

Many times, updating your website while shopping is something that you know is important. Website Designers in Lakewood, CO is here to help you in your website development as per your requirement with best final product.

0 notes

Link

Having some self-hosted services and tools can make your life as a developer, and your life in general, much easier. I will share some of my favorites in this post. I use these for just about every project I make and they really make my life easier.

All of this, except OpenFaaS, is hosted on a single VPS with 2 CPU cores, 8GB of RAM and 80GB SSD with plenty of capacity to spare.

Huginn

Huginn is an application for building automated agents. You can think of it like a self hosted version of Zapier. To understand Huginn you have to understand two concepts: Agens and Events. An Agent is a thing that will do something. Some Agents will scrape a website while others post a message to Slack. The second concept is an Event. Agents emit Events and Agents can also receive Events.

As an example you can have a Huginn agent check the local weather, then pass that along as an event to another agent which checks if it is going to rain. If it is going to rain the rain checker agent will pass the event along, otherwise it will be discarded. A third agent will receive an event from the second agent and then it will send a text message to your phone telling you that it is going to rain.

This is barely scratching the surface of what Huginn can do though. It has agents for everything: Sending email, posting to slack, IoT support with MQTT, website APIs, scrapers, and much more. You can have agents which receive inputs from custom web hooks and cron-like agents which schedules other agents and so on.

The Huginn interface

Huginn is a Ruby on Rails application and can be hosted in Docker. I host mine on Dokku. I use it for so many things and it is truly the base of all my automation needs. Highly recommended! If you are looking for alternatives then you can take a look at Node-RED and Beehive. I don't have personal experience with either though.

Huginn uses about 350MB of RAM on my server, including the database and the background workers.

Thumbor

Thumbor is a self-hosted image proxy like Imgix. It can do all sorts of things with a single image URL. Some examples:

Simple caching proxy

Take the URL and put your Thumbor URL in front like so: https://thumbs.mskog.com/https://images.pexels.com/photos/4048182/pexels-photo-4048182.jpeg

Simple enough. Now you have a version of the image hosted on your proxy. This is handy for example when you don't want to hammer the origin servers with requests when linking to the image.

Resizing

That image is much too large. Lets make it smaller! https://thumbs.mskog.com/800x600/https://images.pexels.com/photos/4048182/pexels-photo-4048182.jpeg

Much smaller. Note that all we had to do is add the desired format.

Resizing to specific height or width

What about a specific width while keeping the aspect ratio? No problem! https://thumbs.mskog.com/300x/https://images.pexels.com/photos/4048182/pexels-photo-4048182.jpeg

Quality

Smaller file size? https://thumbs.mskog.com/1920x/filters:quality(10)/https://images.pexels.com/photos/4048182/pexels-photo-4048182.jpeg

You get the idea! Thumbor also has a bunch of other filters like making the image black and white, changing the format and so on. It is very versatile and is useful in more scenarios then I can count. I use it for all my images in all my applications. Thumbor also has client libraries for a lot of languages such as Node.

Thumbor is a Python application and is most easily hosted using Docker. There are a number of great projects on Github that have Docker compose setups for Docker. I use this one. It comes with a built-in Nginx proxy for caching. All the images will be served through an Nginx cache, both on disk and in memory by default. This means that only the first request for an image will hit Thumbor itself. Any requests after that will only hit the Nginx cache and will thus be very fast.

To make it even faster you can deploy a CDN in front of your Thumbor server. If your site is on Cloudflare you can use theirs for free. Just keep in mind that Cloudflare will not be happy if you just use their CDN to cache a very large number of big images. You can of course use any other CDN like Cloudfront. My entire Thumbor stack takes up about 200MB of RAM.