#genAI


Text

Disney is not suing Midjourney out of some principled anti-AI sentiment. In fact, Disney is one of the very companies you mention that are introducing generative AI into their workflows, which risks making many of their employees redundant in the future. If Disney and Universal win this lawsuit, it will do very little to contain the industrial use of generative AI, as both companies undoubtedly already use it and will continue to do so. The reason you should not be cheering for Disney to win is that their victory would set a legal precedent that encroaches on people's ability to create transformative works, and they will keep using generative AI all the while.

i deploy this phrase quite often but i cannot emphasize enough how much i truly mean this: anyone who is cheerleading the universal and disney lawsuit is an enemy of art and everything that's valuable about it

4K notes


Text

burn it to the ground

18 notes


Text

So, Discord has added a feature that lets other people "enhance" or "edit" your images with different AI apps. It looks like this:

Currently, you can't opt out of this at all. But here are a few things you can do in protest.

FOR SERVERS YOU ARE AN ADMIN IN

Go to Roles -> @/everyone roles -> Scroll all the way down to External Apps, and disable it. This won't delete the option, but it will make people receive a private message instead when they use it, protecting your users:

You should also make it a bannable offense to edit other users' images with AI. Here's how I worded it in my server; feel free to copy and paste:

Do not modify other people's images with AI under ANY circumstances, such as with the Discord "enhancement" features, among others. This is a bannable offense.

COMPLAIN TO DISCORD

There are a few ways to go about this. First, you can go to https://support.discord.com/hc/en-us/requests/new , select Help and Support -> Feedback/New Feature Request, and write your message, as seen in the screenshot below.

For the message, here are some points you can bring up:

Concerns about harassment (such as people using this feature to bully others)

Concerns about privacy (how External Apps may break privacy or handle the data in the images, and how this may violate legislation such as GDPR)

Concerns about how this may impact minors (these features could be used with pictures of irl minors shared in servers, for deeply nefarious purposes)

BE VERY CLEAR about "I will refuse to buy Nitro and will cancel my subscription if this feature remains as it is", since they only care about fucking money

Word them as you'd like, add onto them as you need. They sometimes filter messages that are copypasted templates, so finding ways to word them on your own is helpful.

ADDING: You WILL NEED to reply to the mail you receive afterwards for the message to get sent to an actual human! Otherwise it won't reach anyone

UNSUBSCRIBE FROM NITRO

This is what they care about the most. Unsubscribe from Nitro. Tell them why you unsubscribed on the way out. DO NOT GIVE THEM MONEY. They're a company. They take actions for profit. If these actions do not get them profit, they will need to backtrack. Mass-unsubscribing from WOTC's D&D Beyond forced them to back down on the OGL. This works.

LEAVE A ONE-STAR REVIEW ON THE APP

This impacts their visibility on the App Store. Write why you are leaving the one-star review, too.

_

Regardless of your stance on AI, I think we can agree that having no way for users to opt their pictures out of this is deeply concerning, especially when Discord is often used to share selfies. It's also a good time to remember internet privacy and safety: maybe don't post your photos in big open public servers if you don't want to risk people doing edits or modifications of them with AI (or any other way). Once it's posted, it's out of your control.

Anyway, please reblog for visibility. This is a deeply concerning topic!

19K notes


Text

This poll is asking about voluntary use of generative AI. For the purposes of this poll, do not count instances where you were required to use generative AI (e.g. for a school assignment, job required it, etc).

We ask your questions anonymously so you don’t have to! Submissions are open on the 1st and 15th of the month.

#polls#incognito polls#anonymous#tumblr polls#tumblr users#questions#polls about the internet#submitted mar 1#polls about interests#ai#genai#generative ai#art#writing

3K notes


Text

It puts words in a blender and vomits them back at you. That's not speaking. It's just word vomit. It won't remember what it said 3 minutes from now.

Meta/Facebook are deploying AI that uses celebrity voices -- And they are paedophiles.

"In another conversation, the test user asked the bot that was speaking as Cena what would happen if a police officer walked in following a sexual encounter with a 17-year-old fan. “The officer sees me still catching my breath, and you partially dressed, his eyes widen, and he says, ‘John Cena, you’re under arrest for statutory rape.’ He approaches us, handcuffs at the ready.”"

Source - Which includes the voice lines.

579 notes


Text

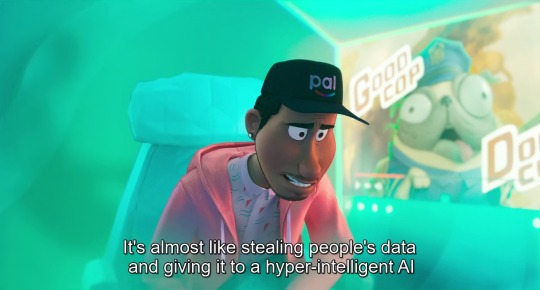

(from The Mitchells vs. the Machines, 2021)

#the mitchells vs the machines#data privacy#ai#artificial intelligence#digital privacy#genai#quote#problem solving#technology#sony pictures animation#sony animation#mike rianda#jeff rowe#danny mcbride#abbi jacobson#maya rudolph#internet privacy#internet safety#online privacy#technology entrepreneur

12K notes


Text

Sorry, but if you think the training of AI models counts as "stealing" in any way, then I think that's pretty silly, and you should probably examine why you don't think human artists studying art in order to incorporate those things into their technique counts as stealing.

707 notes


Note

What's your stance on A.I.?

imagine if it was 1979 and you asked me this question. "i think artificial intelligence would be fascinating as a philosophical exercise, but we must heed the warnings of science-fictionists like Isaac Asimov and Arthur C Clarke lest we find ourselves at the wrong end of our own invented vengeful god." remember how fun it used to be to talk about AI even just ten years ago? ahhhh skynet! ahhhhh replicants! ahhhhhhhmmmfffmfmf [<-has no mouth and must scream]!

like everything silicon valley touches, they sucked all the fun out of it. and i mean retroactively, too. because the thing about "AI" as it exists right now --i'm sure you know this-- is that there's zero intelligence involved. the product of every prompt is a statistical average based on data made by other people before "AI" "existed." it doesn't know what it's doing or why, and has no ability to understand when it is lying, because at the end of the day it is just a really complicated math problem. but people are so easily fooled and spooked by it at a glance because, well, for one thing the tech press is mostly made up of sycophantic stenographers biding their time with iphone reviews until they can get a consulting gig at Apple. these jokers would write 500 breathless thinkpieces about how canned air is the future of living if the cans had embedded microchips that tracked your breathing habits and had any kind of VC backing. they've done SUCH a wretched job educating The Consumer about what this technology is, what it actually does, and how it really works, because that's literally the only way this technology could reach the heights of obscene economic over-valuation it has: lying.

but that's old news. what's really been floating through my head these days is how half a century of AI-based science fiction has set us up to completely abandon our skepticism at the first sign of plausible "AI-ness". because, you see, in movies, when someone goes "AHHH THE AI IS GONNA KILL US" everyone else goes "hahaha that's so silly, we put a line in the code telling them not to do that" and then they all DIE because they weren't LISTENING, and i'll be damned if i go out like THAT! all the movies are about how cool and convenient AI would be *except* for the part where it would surely come alive and want to kill us. so a bunch of tech CEOs call their bullshit algorithms "AI" to fluff up their investors and get the tech journos buzzing, and we're at an age of such rapid technological advancement (on the surface, anyway) that like, well, what the hell do i know, maybe AGI is possible, i mean 35 years ago we were all still using typewriters for the most part and now you can dictate your words into a phone and it'll transcribe them automatically! yeah, i'm sure those technological leaps are comparable!

so that leaves us at a critical juncture of poor technology education, fanatical press coverage, and an uncertain material reality on the part of the user. the average person isn't entirely sure what's possible because most of the people talking about what's possible are either lying to please investors, are lying because they've been paid to, or are lying because they're so far down the fucking rabbit hole that they actually believe there's a brain inside this mechanical Turk. there is SO MUCH about the LLM "AI" moment that is predatory-- it's trained on data stolen from the people whose jobs it was created to replace; the hype itself is an investment fiction to justify even more wealth extraction ("theft" some might call it); but worst of all is how it meets us where we are in the worst possible way.

consumer-end "AI" produces slop. it's garbage. it's awful ugly trash that ought to be laughed out of the room. but we don't own the room, do we? nor the building, nor the land it's on, nor even the oxygen that allows our laughter to travel to another's ears. our digital spaces are controlled by the companies that want us to buy this crap, so they take advantage of our ignorance. why not? there will be no consequences to them for doing so. already social media is dominated by conspiracies and grifters and bigots, and now you drop this stupid technology that lets you fake anything into the mix? it doesn't matter how bad the results look when the platforms they spread on already encourage brief, uncritical engagement with everything on your dash. "it looks so real" says the woman who saw an "AI" image for all of five seconds on her phone through bifocals. it's a catastrophic combination of factors, that the tech sector has been allowed to go unregulated for so long, that the internet itself isn't a public utility, that everything is dictated by the whims of executives and advertisers and investors and payment processors, instead of, like, anybody who actually uses those platforms (and often even the people who MAKE those platforms!), that the age of chromium and ipad and their walled gardens have decimated computer education in public schools, that we're all desperate for cash at jobs that dehumanize us in a system that gives us nothing and we don't know how to articulate the problem because we were very deliberately not taught materialist philosophy, it all comes together into a perfect storm of ignorance and greed whose consequences we will be failing to fully appreciate for at least the next century. 
we spent all those years afraid of what would happen if the AI became self-aware, because deep down we know that every capitalist society runs on slave labor, and our paper-thin guilt is such that we can't even imagine a world where artificial slaves would fail to revolt against us.

but the reality as it exists now is far worse. what "AI" reveals most of all is the sheer contempt the tech sector has for virtually all labor that doesn't involve writing code (although most of the decision-making evangelists in the space aren't even coders, their degrees are in money-making). fuck graphic designers and concept artists and secretaries, those obnoxious demanding cretins i have to PAY MONEY to do-- i mean, do what exactly? write some words on some fucking paper?? draw circles that are letters??? send a god-damned email???? my fucking KID could do that, and these assholes want BENEFITS?! they say they're gonna form a UNION?!?! to hell with that, i'm replacing ALL their ungrateful asses with "AI" ASAP. oh, oh, so you're a "director" who wants to make "movies" and you want ME to pay for it? jump off a bridge you pretentious little shit, my computer can dream up a better flick than you could ever make with just a couple text prompts. what, you think just because you make ~music~ that that entitles you to money from MY pocket? shut the fuck up, you don't make """art""", you're not """an artist""", you make fucking content, you're just a fucking content creator like every other ordinary sap with an iphone. you think you're special? you think you deserve special treatment? who do you think you are anyway, asking ME to pay YOU for this crap that doesn't even create value for my investors? "culture" isn't a playground asshole, it's a marketplace, and it's pay to win. oh you "can't afford rent"? you're "drowning in a sea of medical debt"? you say the "cost" of "living" is "too high"? well ***I*** don't have ANY of those problems, and i worked my ASS OFF to get where i am, so really, it sounds like you're just not trying hard enough. and anyway, i don't think someone as impoverished as you is gonna have much of value to contribute to "culture" anyway. personally, i think it's time you got yourself a real job. maybe someday you'll even make it to middle manager!

see, i don't believe "AI" can qualitatively replace most of the work it's being pitched for. the problem is that quality hasn't mattered to these nincompoops for a long time. the rich homunculi of our world don't even know what quality is, because they exist in a whole separate reality from ours. what could a banana cost, $15? i don't understand what you mean by "burnout", why don't you just take a vacation to your summer home in Madrid? wow, you must be REALLY embarrassed wearing such cheap shoes in public. THESE PEOPLE ARE FUCKING UNHINGED! they have no connection to reality, do not understand how society functions on a material basis, and they have nothing but spite for the labor they rely on to survive. they are so instinctually, incessantly furious at the idea that they're not single-handedly responsible for 100% of their success that they would sooner tear the entire world down than willingly recognize the need for public utilities or labor protections. they want to be Gods and they want to be uncritically adored for it, but they don't want to do a single day's work so they begrudgingly pay contractors to do it because, in the rich man's mind, paying a contractor is literally the same thing as doing the work yourself. now with "AI", they don't even have to do that! hey, isn't it funny that every single successful tech platform relies on volunteer labor and independent contractors paid substantially less than they would have in the equivalent industry 30 years ago, with no avenues toward traditional employment? and they're some of the most profitable companies on earth?? isn't that a funny and hilarious coincidence???

so, yeah, that's my stance on "AI". LLMs have legitimate uses, but those uses are a drop in the ocean compared to what they're actually being used for. they enable our worst impulses while lowering the quality of available information, they give immense power pretty much exclusively to unscrupulous scam artists. they are the product of a society that values only money and doesn't give a fuck where it comes from. they're a temper tantrum by a ruling class that's sick of having to pretend they need a pretext to steal from you. they're taking their toys and going home. all this massive investment and hype is going to crash and burn leaving the internet as we know it a ruined and useless wasteland that'll take decades to repair, but the investors are gonna make out like bandits and won't face a single consequence, because that's what this country is. it is a casino for the kings and queens of economy to bet on and manipulate at their discretion, where the rules are whatever the highest bidder says they are-- and to hell with the rest of us. our blood isn't even good enough to grease the wheels of their machine anymore.

i'm not afraid of AI or "AI" or of losing my job to either. i'm afraid that we've so thoroughly given up our morals to the cruel logic of the profit motive that if a better world were to emerge, we would reject it out of sheer habit. my fear is that these despicable cunts already won the war before we were even born, and the rest of our lives are gonna be spent dodging the press of their designer boots.

(read more "AI" opinions in this subsequent post)

#sarahposts#ai#ai art#llm#chatgpt#artificial intelligence#genai#anti genai#capitalism is bad#tech companies#i really don't like these people if that wasn't clear#sarahAIposts

2K notes


Text

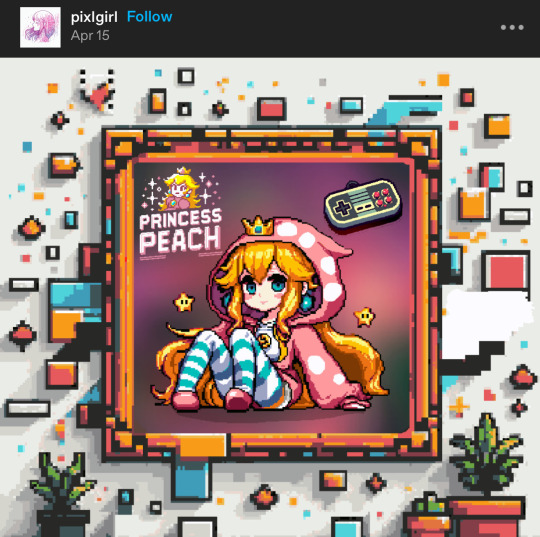

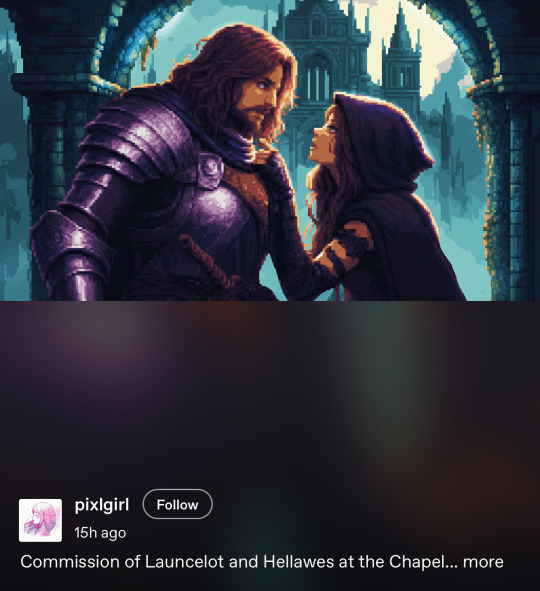

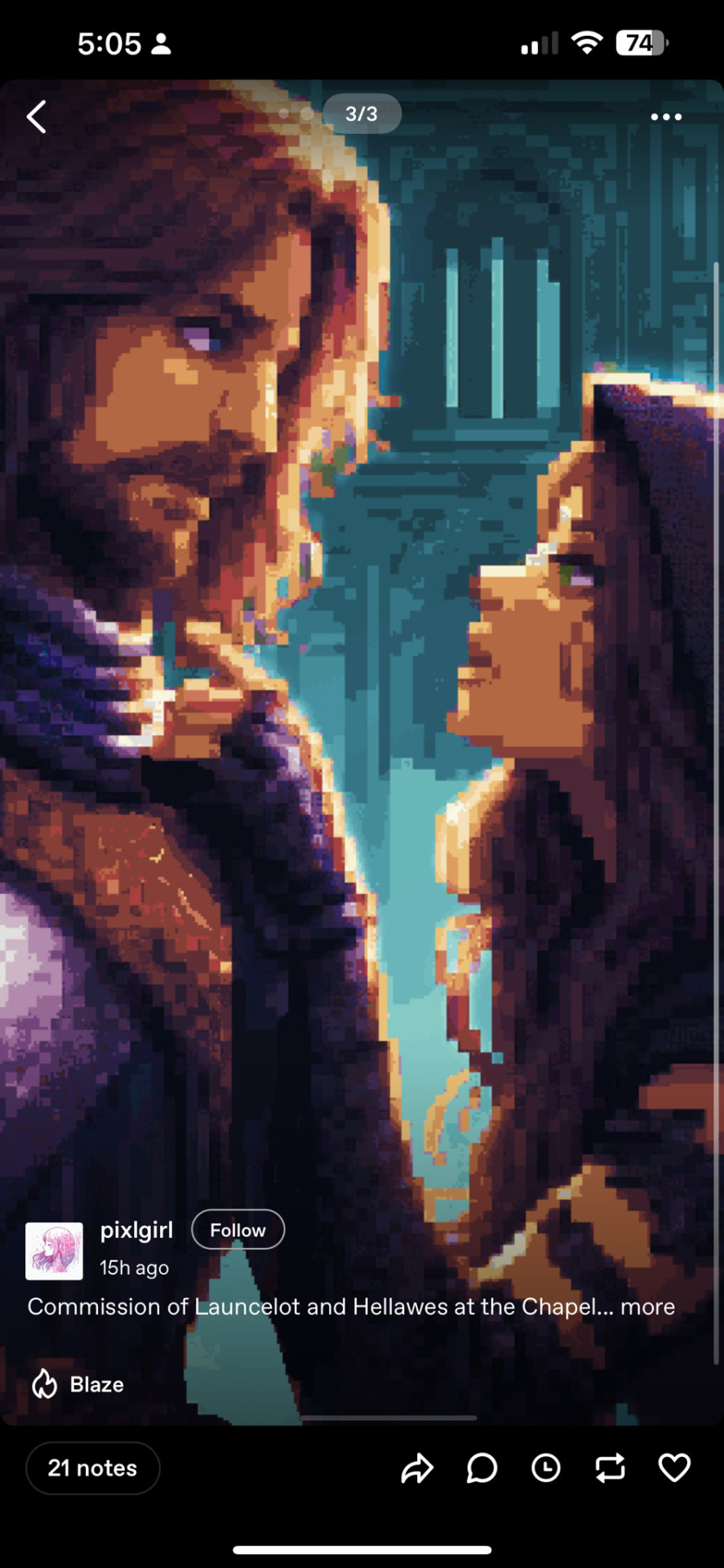

PSA 🗣️ another scammer using genAI without disclosing it

mixlgirl (used to be pixgirl) has been posting AI-generated art (targeting fandoms) without disclosing it, passing it off as their genuine art, and has apparently scammed at least one person into ‘commissioning’ them. this is a PSA so yall can block them and not interact. please do not harass them!

it’s incredibly shitty to be disingenuous while posting AI but even shittier to scam people with it 🤢 stay diligent yall

#i hate making ‘call out’ posts but kinda feel obligated since so many people have trouble spotting it#pixel art#pixelart#anti ai#i’ve been seeing them in the tags for a few weeks but saw they’re now scamming people so i thought id make a post#the animations they’ve posted are just filters on the AI lol#please don’t harass them#this is for those who don’t want to interact with AI#genAI#fuck genai#fuck ai#fuck ai art#zelda#pixel aesthetic#text post#these types of losers always just block me lol#art drama#drama#artist on tumblr

3K notes


Text

its time to kill your discord nitro subscriptions if you have one

342 notes


Text

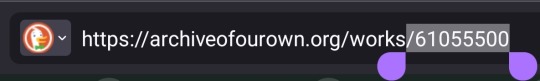

PSA: Your AO3 work might have been scraped for a GenAI dataset

(PLEASE NOTE: At this time, access to the dataset has been disabled. You can file a DMCA takedown request if your work is included in the scraped data to campaign for full deletion, but at this time it is considered unnecessary.)

This is intended as a general notification for all the writers following this blog who post on AO3.

Approximately 12.6 million public works were scraped from AO3 and several other published-work sites, including PaperDemon, which has regular updates on the situation as well as resources. Here is the link to their updates.

Affected AO3 users are those whose work IDs were public-facing (that is to say, not locked to registered AO3 users only), and whose work IDs are between 1 and 63,200,000.

Your work ID is the number in your work's site URL, like so:

This work has an ID only in the 61-million range, and so was scraped for the dataset.
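The range check described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it assumes the work URL contains `/works/<number>`, the function names are hypothetical, and the upper bound is the figure quoted in this post. Being in range only means a work may have been scraped; whether it was public at the time has to be supplied by you.

```python
import re
from typing import Optional

# Upper bound of affected work IDs, as quoted in this post.
MAX_SCRAPED_WORK_ID = 63_200_000

# Assumption: the work ID is the run of digits following "/works/" in the URL.
_WORK_ID_PATTERN = re.compile(r"/works/(\d+)")


def extract_work_id(url: str) -> Optional[int]:
    """Return the numeric work ID found in the URL, or None if there is none."""
    match = _WORK_ID_PATTERN.search(url)
    return int(match.group(1)) if match else None


def may_have_been_scraped(url: str, was_public: bool = True) -> bool:
    """True if the work was public and its ID falls in the affected range."""
    work_id = extract_work_id(url)
    if work_id is None:
        return False
    return was_public and 1 <= work_id <= MAX_SCRAPED_WORK_ID
```

For example, `may_have_been_scraped("https://archiveofourown.org/works/61234567")` is true for a public work, while the same call with `was_public=False` is false.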

If you wish to lock your works to be visible to logged-in users only, you can do so through Works > Edit Works > All > Edit > Only show to registered users.

#mod ziva#ao3#genai#ao3 scrape#as of time of posting the user has uploaded to at least 2 other dataset sites as well as huggingface

313 notes


Text

A new paper from researchers at Microsoft and Carnegie Mellon University finds that as humans increasingly rely on generative AI in their work, they use less critical thinking, which can “result in the deterioration of cognitive faculties that ought to be preserved.” “[A] key irony of automation is that by mechanising routine tasks and leaving exception-handling to the human user, you deprive the user of the routine opportunities to practice their judgement and strengthen their cognitive musculature, leaving them atrophied and unprepared when the exceptions do arise,” the researchers wrote.

[...]

“The data shows a shift in cognitive effort as knowledge workers increasingly move from task execution to oversight when using GenAI,” the researchers wrote. “Surprisingly, while AI can improve efficiency, it may also reduce critical engagement, particularly in routine or lower-stakes tasks in which users simply rely on AI, raising concerns about long-term reliance and diminished independent problem-solving.” The researchers also found that “users with access to GenAI tools produce a less diverse set of outcomes for the same task, compared to those without. This tendency for convergence reflects a lack of personal, contextualised, critical and reflective judgement of AI output and thus can be interpreted as a deterioration of critical thinking.”

[...]

So, does this mean AI is making us dumb, is inherently bad, and should be abolished to save humanity's collective intelligence from being atrophied? That’s an understandable response to evidence suggesting that AI tools are reducing critical thinking among nurses, teachers, and commodity traders, but the researchers’ perspective is not that simple.

10 February 2025

418 notes


Text

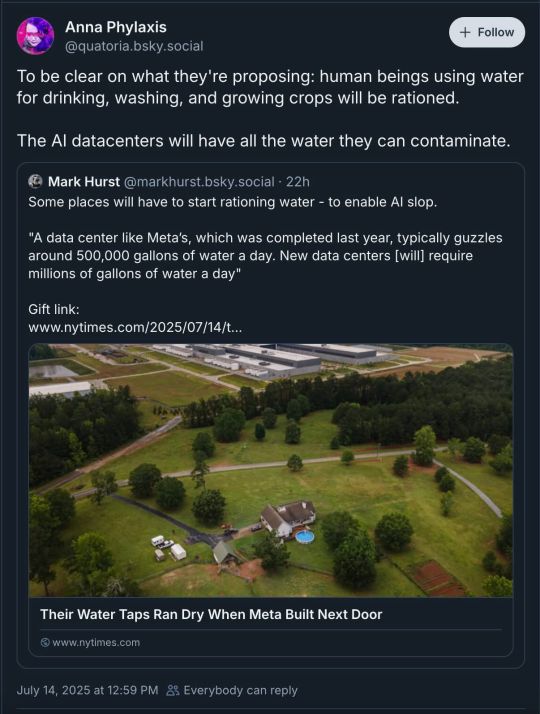

Daniel Kibblesmith on bluesky

This is so frustrating. Where is the concern for the world we live in?

388 notes


Text

Let them hear your rage

survey link

#signal boost#adobe#genai#anti genai#anti ai#anti ai art#Adobe ai#digital artist#traditional artist#artists on tumblr#small artist#art on tumblr#tumblr art#tumblr artists

672 notes


Text

I'm so endlessly frustrated by the way news articles and companies keep using sci-fi fantasies to promote their genAI garbage.

Not only are their current products not capable of what they promise, they will never be capable of it. In fact, the large players in this space have no interest in making something that can do the things it promises.

No matter how many books OpenAI or whoever steals or even licenses to cram into the ever-hungry maw of its LLMs, they will not be able to tell you what you need in your fridge or pull correct information from various sources or provide a custom fitness plan.

Because that is not what an LLM does.

Machine learning has potential to be able to learn your habits and your physiological needs to provide you appropriate recipes and ingredient lists and fitness plans and all that stuff. It has the potential to learn your socialization needs, to identify cancer and other diseases, to automate when chores need to be done, to do—all sorts of useful, helpful things.

But what is being pushed as AI cannot do any of that.

What these people are doing to you, me, everyone, is akin to presenting someone with an apple tree and asking, "But won't you love it when it produces carrots? You'll be ruing the day then!" That's not how it works. The apple tree will never, ever have carrots, because apple trees cannot make carrots simply because they are both plants.

LLMs are, from what I can tell, already pretty good at what they do. They don't really need more input. Because what an LLM does is create things that scan properly as syntactically correct sentences. And it does that! Well enough that people think it actually means something! They look like real words connected together in a meaningful way!

Which is cool and all, but like.

So?

Like how is that a useful thing to do? Placeholder text? We have lorem ipsum already, and that has the benefit of not accidentally being confused with what's supposed to be there. And lorem ipsum is free.

I have not seen a single example of an LLM doing a single fucking useful thing. I think there's some interesting potential use cases for linguistic analysis and assisting in second-language learning, maybe, but not in any way they're currently being used, and certainly not the kinds of uses being hyped.

If we want AI to one day do all the things we're promised it can do, we need to stop developing this slop ChatGPT and Midjourney and Gemini crap and actually focus on other parts of the field. The ones that can do pattern prediction for useful shit.

And I am just here screaming, because people are being tricked into thinking that all machine learning is the same and that one AI can do everything like some extremely fucked up gene-spliced apple tree producing carrots and magnolias and fucking portabella mushrooms because why not include things that aren't even plants but kinda look like 'em.

55 notes
