#enable hardware acceleration

How To Enable Or Disable Hardware Acceleration In The 360 Secure Browser

How To Enable Or Disable Hardware Acceleration In The 360 Secure Browser | PC Tutorial | *2024

In this tutorial, we'll guide you through the process of enabling or disabling hardware acceleration in the 360 Secure Browser on your PC. Hardware acceleration offloads graphics work to your GPU, which can improve browser performance; turning it off can help resolve rendering issues. Follow this step-by-step guide to optimize your browsing experience. Don’t forget to like, subscribe, and hit the notification bell for more 360 Secure Browser tips and tricks!

Simple Steps:

Open the 360 Secure web browser.

Click the three-bar (hamburger) menu in the upper-right corner and choose "Settings".

In the left pane, click "Advanced" to expand the section, then choose "System".

In the center pane, turn "Use Hardware Acceleration When Available" on or off.
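If you only want to compare the two modes for a single session, Chromium-based browsers accept the `--disable-gpu` command-line switch. The sketch below assumes that 360 Secure Browser, like other Chromium-based browsers, honors that switch; the install path `BROWSER_EXE` and the helper names are hypothetical.

```python
from __future__ import annotations

import subprocess
from pathlib import Path

# Hypothetical install location; replace with the real path to the browser's .exe.
BROWSER_EXE = Path(r"C:\Path\To\360SecureBrowser\browser.exe")


def build_launch_command(exe: Path, hardware_acceleration: bool) -> list[str]:
    """Build the argument list for starting a Chromium-based browser.

    Assumes the browser honors Chromium's --disable-gpu switch, which turns
    GPU hardware acceleration off for that session only.
    """
    cmd = [str(exe)]
    if not hardware_acceleration:
        cmd.append("--disable-gpu")
    return cmd


def launch(exe: Path = BROWSER_EXE, hardware_acceleration: bool = False) -> subprocess.Popen:
    # Start the browser without waiting for it to exit.
    return subprocess.Popen(build_launch_command(exe, hardware_acceleration))
```

Calling `launch()` with the real path starts one session without hardware acceleration, which is a quick way to check whether a rendering problem goes away before changing the setting permanently. Close every browser window first: a Chromium browser that is already running typically hands the request to the existing process, which ignores the new switch.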

#360 Secure Browser#enable hardware acceleration#disable hardware acceleration#hardware acceleration settings#360 Secure tutorial#optimize browser performance#browser settings 360#360 Secure PC#browser tips#hardware acceleration guide#improve browsing speed#troubleshoot 360 Secure#browser optimization#360 Secure 2024#tech tutorial 360 Secure#Youtube

0 notes

Teksun Tejas 625 SOM is designed to run AI and IoT applications on the edge that demand a high-speed SoC for smooth operation. To learn more, visit https://teksun.com/solution/iot-air-conditioner-control-system/

#Tejas-625 SOM 2.0#Embedded computing#Industrial automation#IoT edge devices#AI-enabled solutions#Industrial IoT#Edge computing#Hardware acceleration#Automotive applications#Robotics#and drones#Industrial control systems

0 notes

"Netflix is using hardware acceleration to stop you from streaming to discord"

I AM BEGGING YOU TO LEARN WHAT HARDWARE ACCELERATION IS. MOST OF THE TIME A WEBSITE IS GOING TO SAY "HEY, DISPLAY THIS IMAGE" AND YOUR BROWSER GOES "YEAH, THAT'S A GREAT IDEA, CPU GET ON THAT," SO THE CPU GETS THE DATA AND WRITES THE IMAGE TO YOUR SCREEN. THAT IS THE SAME FUCKING PLACE DISCORD PULLS FROM TO SHARE YOUR SCREEN WHEN YOU'RE IN VC WITH YOUR FRIENDS. SO IF SOMETHING SUDDENLY MEANS THE CPU DOES NOT HAVE THAT DATA, FOR INSTANCE IF YOU HAVE HARDWARE ACCELERATION ENABLED IN YOUR BROWSER, WHICH LETS YOUR GPU WRITE DIRECTLY TO YOUR SCREEN INSTEAD OF HANDING THE TASK OFF TO YOUR CPU, WELL THEN DISCORD IS JUST GONNA COME UP EMPTY, ISN'T IT?

IT IS NOT A PLOY BY NETFLIX TO MAKE YOUR FRIENDS PAY FOR SUBSCRIPTIONS. I SWEAR TO FUCKING GOD, IT IS A LEGITIMATE FEATURE, BECAUSE MOST PEOPLE DO NOT HAVE A CAPABLE PC AND THEY NEED TO SAVE CPU RESOURCES.

I WORDED SOME OF THIS WRONG, BUT I'M JUST TIRED OF EXPLAINING WHAT HARDWARE ACCELERATION IS, HOLY SHIT

67 notes

Cancelled Missions: Testing Shuttle Manipulator Arms During Earth-Orbital Apollo Missions (1971-1972)

In this drawing by NASA engineer Caldwell Johnson, twin Space Shuttle robot arms with human-like hands deploy from the Apollo Command and Service Module (CSM) Scientific Instrument Module (SIM) Bay to grip the derelict Skylab space station.

"Caldwell Johnson, co-holder with Maxime Faget of the Mercury space capsule patent, was chief of the Spacecraft Design Division at the NASA Manned Spacecraft Center (MSC) in Houston, Texas, when he proposed that astronauts test prototype Space Shuttle manipulator arms and end effectors during Apollo Command and Service Module (CSM) missions in Earth orbit. In a February 1971 memorandum to Faget, NASA MSC's director of Engineering and Development, Johnson described the manipulator test mission as a worthwhile alternative to the Earth survey, space rescue, and joint U.S./Soviet CSM missions then under study.

At the time Johnson proposed the Shuttle manipulator arm test, three of the original 10 planned Apollo lunar landing missions had been cancelled, the second Skylab space station (Skylab B) appeared increasingly unlikely to reach orbit, and the Space Shuttle had not yet been formally approved. NASA managers foresaw that the Apollo and Skylab mission cancellations would leave them with surplus Apollo spacecraft and Saturn rockets after the last mission to Skylab A. They sought low-cost Earth-orbital missions that would put the surplus hardware to good use and fill the multi-year gap in U.S. piloted missions expected to occur in the mid-to-late 1970s.

Johnson envisioned Shuttle manipulators capable of bending and gripping much as do human arms and hands, thus enabling them to hold onto virtually anything. He suggested that a pair of prototype arms be mounted in a CSM Scientific Instrument Module (SIM) Bay, and that the CSM "pretend to be a Shuttle" during rendezvous operations with the derelict Skylab space station.

The CSM's three-man crew could, he told Faget, use the manipulators to grip and move Skylab. They might also use them to demonstrate a space rescue, capture an 'errant satellite,' or remove film from SIM Bay cameras and pass it to the astronauts through a special airlock installed in place of the docking unit in the CSM's nose.

Faget enthusiastically received Johnson's proposal (he penned 'Yes! This is great' on his copy of the February 1971 memo). The proposal generated less enthusiasm elsewhere, however.

Undaunted, Johnson proposed in May 1972 that Shuttle manipulator hardware replace Earth resources instruments that had been dropped for lack of funds from the planned U.S.-Soviet Apollo-Soyuz Test Project (ASTP) mission. President Richard Nixon had called on NASA to develop the Space Shuttle just four months before (January 1972). Johnson asked Faget for permission to perform 'a brief technical and programmatic feasibility study' of the concept, and Faget gave him permission to prepare a presentation for Aaron Cohen, manager of the newly created Space Shuttle Program Office at MSC.

In his June 1972 presentation to Cohen, Johnson declared that '[c]argo handling by manipulators is a key element of the Shuttle concept.' He noted that CSM-111, the spacecraft tagged for the ASTP mission, would have no SIM Bay in its drum-shaped Service Module (SM), and suggested that a single 28-foot-long Shuttle manipulator arm could be mounted near the Service Propulsion System (SPS) main engine in place of the lunar Apollo S-band high-gain antenna, which would not be required during Earth-orbital missions.

During ascent to orbit, the manipulator would ride folded beneath the CSM near the ASTP Docking Module (DM) within the streamlined Spacecraft Launch Adapter. During SPS burns, the astronauts would stabilize the manipulator so that acceleration would not damage it by commanding it to grip a handle installed on the SM near the base of the CSM's conical Command Module (CM).

Johnson had by this time mostly dropped the concept of an all-purpose human hand-like 'end effector' for the manipulator; he informed Cohen that the end effector design was 'undetermined.' The Shuttle manipulator demonstration would take place after CSM-111 had undocked from the Soviet Soyuz spacecraft and moved away to perform independent maneuvers and experiments.

The astronauts in the CSM would first use a TV camera mounted on the arm's wrist to inspect the CSM and DM, then would use the end effector to manipulate 'some device' on the DM. They would then command the end effector to grip a handle on the DM, undock the DM from the CSM, and use the manipulator to redock the DM to the CSM. Finally, they would undock the DM and repeatedly capture it with the manipulator.

Caldwell Johnson's depiction of a prototype Shuttle manipulator arm with a hand-like end effector. The manipulator grasps the Docking Module meant to link U.S. Apollo and Soviet Soyuz spacecraft in Earth orbit during the Apollo-Soyuz Test Project (ASTP) mission.

Johnson estimated that new hardware for the ASTP Shuttle manipulator demonstration would add 168 pounds (76.2 kilograms) to the CM and 553 pounds (250.8 kilograms) to the SM. He expected that concept studies and pre-design would be completed in January 1973. Detail design would commence in October 1972 and be completed by 1 July 1973, at which time CSM-111 would undergo modification for the manipulator demonstration.

Johnson envisioned that MSC would build two manipulators in house. The first, for testing and training, would be completed in January 1974. The flight unit would be completed in May 1974, tested and checked out by August 1974, and launched into orbit attached to CSM-111 in July 1975. Johnson optimistically placed the cost of the manipulator arm demonstration at just $25 million.

CSM-111, the last Apollo spacecraft to fly, reached Earth orbit on schedule on 15 July 1975. By then, Caldwell Johnson had retired from NASA. CSM-111 carried no manipulator arm; the tests Johnson had proposed had been judged to be unnecessary.

That same month, the U.S. space agency, short on funds, invited Canada to develop and build the Shuttle manipulator arm. The Remote Manipulator System — also called the Canadarm — first reached orbit on board the Space Shuttle Columbia during STS-2, the second flight of the Shuttle program, on 12 November 1981."

source

#Apollo–Soyuz#Apollo Soyuz Test Project#ASTP#Apollo CSM Block II#CSM-111#Rocket#NASA#Apollo Program#Apollo Applications Program#Canadarm#Shuttle Manipulator Arms#Skylab Orbital Workshop#Skylab OWS#Skylab#Skylab I#Skylab 1#SL-1#Space Station#Apollo Telescope Mount#ATM#Cancelled#Cancelled Mission#my post

42 notes

Original Resident Evil 2 coming to GOG on August 27 - Gematsu

Following the release of the first game, GOG and Capcom will release the original Resident Evil 2 for PC via GOG on August 27 at 1:00 a.m. PT / 4:00 a.m. ET, the companies announced.

Here is an overview of the game, via GOG:

About

If the suspense doesn’t kill you, something else will… Immerse yourself in the ultimate test of survival. Face your fears in this terror-filled classic edition of Resident Evil™ 2 for PC containing more horror, more mutant creatures and more evil than before. Just like with Resident Evil, we made sure GOG’s version of the second entry in the series is the best it can be. Here’s what we did to make this masterpiece last forever:

Full compatibility with Windows 10 and Windows 11.

Six localizations of the game included (English, German, French, Italian, Spanish, Japanese).

Improved DirectX game renderer.

New rendering options (Windowed Mode, Vertical Synchronization Control, Gamma Correction, Integer Scaling and more).

Improved audio volume and panning.

Improved cutscenes and subtitles.

Improved savegame manager.

Improved game video player.

Issue-less game exit.

Improved game registry settings.

Improved key-binding settings and audio settings screens.

Improved end credits in the German version.

Fixed issues with Rooms 114 and 115 (missing text), Room 210 (invisible diary), and Room 409 (looping sound).

Full support for modern controllers (DualSense, DualShock 4, Xbox Series, Xbox One, Xbox 360, Switch, Logitech F series, and many more) with optimal button binding regardless of the hardware and wireless mode.

4th Survivor and Tofu modes enabled from the very beginning.

Our version of the game keeps all the original content intact—1998’s description is no exception. Take a trip down memory lane and see how Resident Evil 2 was described to gamers when it launched all those years ago:

Key Features

Two separate adventures! Command Leon Kennedy, a rookie cop who stumbles onto the carnage reporting for his first duty, or play as Claire Redfield, desperately searching for her missing brother.

Cutting edge 3D accelerated graphics that create a terrifying, photo-realistic experience.

3D accelerated and non 3D accelerated settings to maximize performance whatever your setup.

Features complete versions of both original U.S. and the original Japanese versions of Resident Evil 2.

New Extreme Battle Mode: Battle your way through hordes of zombies as you play the hyper-intensive challenge that changes every time you play. All new Resident Evil 2 picture gallery.

The game’s secret scenario available from the very beginning (no need to finish it under certain conditions first).

Watch a trailer below.

Claire Intro

5 notes

How AMD is Leading the Way in AI Development

Introduction

In today's rapidly evolving technological landscape, artificial intelligence (AI) has emerged as a game-changing force across various industries. One company that stands out for its pioneering efforts in AI development is Advanced Micro Devices (AMD). With its innovative technologies and cutting-edge products, AMD is pushing the boundaries of what is possible in the realm of AI. In this article, we will explore how AMD is leading the way in AI development, delving into the company's unique approach, competitive edge over its rivals, and the impact of its advancements on the future of AI.

Competitive Edge: AMD vs Competition

When it comes to AI development, competition among tech giants is fierce. However, AMD has carved out a niche for itself with its distinct offerings. Unlike competitors that focus mainly on either CPUs or GPUs, AMD builds both, from its EPYC server processors to its Instinct GPU accelerators. The company's commitment to high-performance computing solutions tailored for AI workloads has set it apart from the competition.

AMD GPUs

AMD's graphics processing units (GPUs) have been instrumental in driving advancements in AI applications. With their parallel processing capabilities and massive computational power, AMD GPUs are well suited to training deep learning models and running complex algorithms. This has made them an increasingly popular choice for researchers and developers working on cutting-edge AI projects.

Innovative Technologies of AMD

One of the key factors that have propelled AMD to the forefront of AI development is its relentless focus on innovation. The company has consistently introduced new technologies that cater to the unique demands of AI workloads. From advanced memory architectures, such as the high-bandwidth memory (HBM) on its Instinct accelerators, to efficient data processing pipelines, AMD's innovations have changed the way AI applications are designed and executed.

AMD and AI

The synergy between AMD and AI is clear. By leveraging its expertise in hardware design and optimization, AMD has created products that significantly accelerate AI workloads. Whether through specialized accelerators such as its Instinct line or optimized software such as its open-source ROCm platform, AMD continues to push the boundaries of what is possible with AI technology.

The Impact of AMD's Advancements

The impact of AMD's advancements in AI development is substantial. By providing researchers and developers with powerful tools and resources, AMD has enabled them to tackle complex problems more efficiently. From healthcare to finance to autonomous vehicles, AI powered by AMD technology is finding applications across a wide range of fields.

FAQs About How AMD Leads in AI Development

1. What makes AMD stand out in the field of AI development?

Answer: AMD's commitment to innovation and its holistic approach to hardware design give it a competitive edge over other players in the market.

2. How do AMD GPUs contribute to advancements in AI?

Answer: AMD GPUs offer massive computational power and parallel processing capabilities that are essential for training deep learning models.

3. What role does innovation play in AMD's success in AI development?

Answer: Innovation lies at the core of AMD's strategy, driving the company to introduce groundbreaking technologies tailored for AI work

2 notes

Steam Hardware Survey:

Computer Information:

Manufacturer: Dell Inc.
Model: Precision 5680
Form Factor: Laptop
No Touch Input Detected

Processor Information:

CPU Vendor: GenuineIntel
CPU Brand: 13th Gen Intel(R) Core(TM) i7-13700H
CPU Family: 0x6
CPU Model: 0xba
CPU Stepping: 0x2
CPU Type: 0x0
Speed: 2918 MHz

20 logical processors
14 physical processors
Hyper-threading: Supported
FCMOV: Supported
SSE2: Supported
SSE3: Supported
SSSE3: Supported
SSE4a: Unsupported
SSE41: Supported
SSE42: Supported
AES: Supported
AVX: Supported
AVX2: Supported
AVX512F: Unsupported
AVX512PF: Unsupported
AVX512ER: Unsupported
AVX512CD: Unsupported
AVX512VNNI: Unsupported
SHA: Supported
CMPXCHG16B: Supported
LAHF/SAHF: Supported
PrefetchW: Unsupported
BMI1: Supported
BMI2: Supported
F16C: Supported
FMA: Supported

Operating System Version:

Windows 11 (64 bit)
NTFS: Supported
Crypto Provider Codes: Supported 311 0x0 0x0 0x0

Client Information:

Version: 1731433018
Browser GPU Acceleration Status: Enabled
Browser Canvas: Enabled
Browser Canvas out-of-process rasterization: Enabled
Browser Direct Rendering Display Compositor: Disabled
Browser Compositing: Enabled
Browser Multiple Raster Threads: Enabled
Browser OpenGL: Enabled
Browser Rasterization: Enabled
Browser Raw Draw: Disabled
Browser Skia Graphite: Disabled
Browser Video Decode: Enabled
Browser Video Encode: Enabled
Browser Vulkan: Disabled
Browser WebGL: Enabled
Browser WebGL2: Enabled
Browser WebGPU: Enabled
Browser WebNN: Disabled

Video Card:

Driver: Intel(R) Iris(R) Xe Graphics
DirectX Driver Name: nvldumd.dll
Driver Version: 31.0.101.5186
DirectX Driver Version: 32.0.15.6603
Driver Date: 1 18 2024
Desktop Color Depth: 32 bits per pixel
Monitor Refresh Rate: 60 Hz
DirectX Card: NVIDIA RTX 2000 Ada Generation Laptop GPU
VendorID: 0x10de
DeviceID: 0x28b8
Revision: 0xa1
Number of Monitors: 1
Number of Logical Video Cards: 2
No SLI or Crossfire Detected
Primary Display Resolution: 3840 x 2400
Desktop Resolution: 3840 x 2400
Primary Display Size: 13.54" x 8.46" (15.94" diag), 34.4cm x 21.5cm (40.5cm diag)
Primary Bus: PCI Express 8x
Primary VRAM: 8192 MB
Supported MSAA Modes: 2x 4x 8x

Sound card:

Audio device: Speakers (Intel® Smart Sound Te

Memory:

RAM: 15959 Mb

VR Hardware:

VR Headset: None detected

Miscellaneous:

UI Language: English
Media Type: Undetermined
Total Hard Disk Space Available: 7616900 MB
Largest Free Hard Disk Block: 988009 MB
OS Install Date: Apr 21 2024
Game Controller: None detected
MAC Address hash: 62e0fc470c01efb

Storage:

Disk serial number hash: 1ec11c53
Number of SSDs: 2
SSD sizes: 4000G,4000G
Number of HDDs: 0
Number of removable drives: 0

3 notes

Lenovo Starts Manufacturing AI Servers in India: A Major Boost for Artificial Intelligence

Lenovo, a global technology giant, has taken a significant step by launching the production of AI servers in India. The move is set to give a major boost to the country’s artificial intelligence (AI) ecosystem, helping to meet the growing demand for advanced computing solutions. It brings machine learning servers closer to Indian businesses, promising faster access, lower costs, and local expertise in artificial intelligence. In this blog, we’ll explore the benefits of Lenovo’s AI server manufacturing in India and how it aligns with the rising importance of AI, graphics processing units (GPUs), and research and development (R&D) in India.

The Rising Importance of AI Servers:

Artificial intelligence is transforming industries worldwide, from IT to healthcare, finance and manufacturing. AI systems process vast amounts of data, analyze it, and help businesses make decisions in real time. However, running these AI applications requires powerful hardware.

AI servers are essential for companies using AI, machine learning, and big data, offering the power and scalability needed to process complex algorithms and large datasets efficiently, run AI models, and deliver real-time solutions. Lenovo’s decision to start production in South India, in recognition of this need, marks a key moment in supporting local industries’ digital transformation. Lenovo India Private Limited will manufacture around 50,000 AI servers and 2,400 graphics processing units (GPUs) in India annually.

Benefits of Lenovo’s AI Server Manufacturing in India:

1. Boosting AI Adoption Across Industries:

Lenovo’s machine learning server manufacturing will likely increase the adoption of artificial intelligence across sectors. Locally built servers with high-end capabilities will make it easier for more businesses, especially small and medium-sized enterprises, to integrate AI into their operations. Broader adoption could transform industries like manufacturing, healthcare, and education in India, enhancing innovation and productivity.

2. Making India’s Technology Ecosystem Strong:

By investing in AI server production and R&D labs, Lenovo India Private Limited is contributing to India’s goal of becoming a global technology hub. The country’s IT infrastructure will grow stronger, helping industries harness the power of AI and graphics processing units to optimize processes and deliver innovative solutions. Lenovo’s machine learning servers, equipped with advanced GPUs, will serve as a foundation for India’s AI future.

3. Job Creation and Skill Development:

Establishing AI server manufacturing plants and R&D labs in India will create many job opportunities, particularly in the tech sector. Engineers, data scientists, and IT professionals will have the chance to work with innovative AI and GPU technologies, building local expertise and developing skills that meet global standards.

4. The Role of GPU in AI Servers:

GPUs (graphics processing units) play an important role in the performance of AI servers. Unlike CPUs, which use a small number of powerful cores optimized for sequential tasks, GPUs contain thousands of simpler cores designed for parallel processing, making them ideal for the massive workloads involved in artificial intelligence. GPUs enable AI servers to process large datasets efficiently, accelerating the training and inference of deep learning and machine learning models.

Lenovo’s AI servers, equipped with high-performance GPUs, provide the computing power necessary for AI-driven tasks. As AI applications grow more complex, the demand for powerful GPUs in AI servers will also increase. By manufacturing AI servers with strong GPU support in India, Lenovo India Private Limited is helping businesses across the country use best-in-class technology for their AI needs.
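The CPU/GPU contrast above comes down to data parallelism: when each output element depends only on its own input, the data can be split into chunks that are processed independently and at the same time. The toy sketch below shows that structure on a CPU using Python threads and a ReLU-style operation chosen for illustration. It is an analogy rather than GPU code, and Python's global interpreter lock means it will not actually run faster; on an AI server the same pattern runs across thousands of GPU cores through platforms such as CUDA or ROCm.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor


def relu(x: float) -> float:
    # Element-wise operation: each output depends only on its own input.
    return x if x > 0 else 0.0


def sequential(values: list[float]) -> list[float]:
    # One worker walks the data in order, like a single CPU core.
    return [relu(v) for v in values]


def data_parallel(values: list[float], workers: int = 4) -> list[float]:
    # Split the data into contiguous chunks, roughly one per worker.
    size = max(1, -(-len(values) // workers))  # ceiling division
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each chunk is processed independently; map() keeps the chunk order.
        results = list(pool.map(lambda chunk: [relu(v) for v in chunk], chunks))
    # Stitch the partial results back together in the original order.
    return [y for part in results for y in part]
```

Because no chunk needs data from any other chunk, the parallel version returns exactly the same list as the sequential one; that independence is what lets GPUs spread such work across many cores.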

Conclusion:

Lenovo’s move to manufacture AI servers in India is a strategic decision that will have a long-lasting impact on the country’s technology landscape. With increasing dependence on artificial intelligence, the demand for deep learning servers equipped with advanced graphics processing units is expected to rise sharply. By producing AI servers locally, Lenovo aims to give Indian businesses access to affordable, high-performance computing systems that can support their AI operations.

In addition, Lenovo’s investment in R&D labs will play a critical role in shaping the future of AI innovation in India. By promoting collaboration and developing technologies customized to the country’s unique challenges, Lenovo’s deep learning servers will contribute to the digital transformation of industries across the nation. As India moves towards becoming a global leader in artificial intelligence, Lenovo’s AI server manufacturing will support that growth.

2 notes

Blueberry AI Introduces Its Groundbreaking 3D Digital Asset Engine: 🥝KIWI Engine🥝

Blueberry AI, a leading AI-powered digital asset management company, proudly announces the release of the KIWI Engine, a high-performance 3D engine set to revolutionize industries such as gaming, advertising, and industrial design. KIWI Engine enables teams to streamline workflows by offering real-time 3D file previews in over 100 formats directly in the browser, with no need for high-performance hardware or software.

Boasting cutting-edge features such as centralized storage, AI-powered search, and blockchain-backed file tracking, KIWI Engine supports secure, efficient collaboration while minimizing operational costs. Designed to enhance productivity, the engine handles large file transfers and cross-team collaboration and reduces the risk of file versioning errors or leaks.

With its easy-to-use interface and seamless integration with existing 3D tools, KIWI Engine by Blueberry AI is the ideal solution for companies looking to optimize their 3D asset management.

Unlocking New Capabilities of 3D Digital Assets: 🥝KIWI Engine🥝 Unveils Its Power

The KIWI Engine is a high-performance 3D engine that powers Blueberry AI, the platform developed by industry-leading AI digital asset management company Share Creators. With strong performance and an intuitive interface, the KIWI Engine significantly shortens production cycles for game development, advertising, and industrial design while reducing operational costs.

Key Standout Features of the KIWI Engine by Blueberry AI:

Browser-Based 3D Previews: No downloads required. View over 100 professional file formats directly in your browser, including native 3ds Max and Maya files, with no conversion needed. This eliminates the need for high-performance hardware and boosts team productivity.

Seamless Large File Transfers: Easily share and preview large files within teams, facilitating smooth collaboration between designers and developers. The built-in 3D asset review feature enhances workflow precision and speed.

Addressing Common File Management Issues:

File Security & Control: With centralized storage and multi-level permissions, KIWI Engine ensures files remain secure. Blockchain logs track user activity, and version control with real-time backups prevents file loss or version errors, reducing the risk of leaks, especially during outsourcing or staff transitions.

Outsourcing Management: Control access to shared content with outsourcing teams, minimizing the risk of file misuse.
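The announcement does not say how KIWI Engine's blockchain logs work. The core idea behind any blockchain-style audit trail, though, is a hash chain: each log entry stores the hash of the entry before it, so editing, deleting, or reordering an earlier record breaks every link that follows. The sketch below is a minimal, hypothetical illustration built on SHA-256 from Python's standard library; the helper names and the record fields (`user`, `action`, `file`) are invented for the example.

```python
from __future__ import annotations

import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    # Hash the previous link together with a canonical JSON encoding of the record.
    payload = prev_hash + json.dumps(record, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: list[dict], record: dict) -> None:
    # Link the new entry to the hash of the last one (or the genesis value).
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev, "hash": entry_hash(prev, record)})


def verify(log: list[dict]) -> bool:
    # Recompute every link; an edited, removed, or reordered entry breaks the chain.
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True
```

A hash chain like this makes tampering detectable rather than impossible: anyone who can rewrite the whole log can recompute every hash, which is why real blockchain systems add distributed consensus on top.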

A One-Stop 3D File Preview Solution:

Broad Format Compatibility: KIWI Engine supports mainstream formats such as 3ds Max, Maya, Blender, OBJ, FBX, and more. By loading and previewing models from various design tools in one engine, it reduces the need to buy multiple software packages.

Multi-Format Preview: Combine different 3D file formats in a single workspace for simultaneous viewing and editing. This streamlines complex 3D projects, especially those involving cross-team collaboration.

Simplified 3D Previews for Non-Technical Users: KIWI Engine makes it easy for non-technical stakeholders, such as management, to quickly preview 3D assets without installing complex software. This enhances cross-department collaboration and accelerates decision-making.
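To give a sense of what loading one of these formats involves, the sketch below reads the vertex (`v`) and face (`f`) records of a Wavefront OBJ file, a plain-text format and the simplest of those listed. It is a hypothetical minimal parser, not KIWI Engine's loader: it skips normals, texture coordinates, materials, and groups, and splits polygons into triangles with a simple fan, which assumes convex faces.

```python
from __future__ import annotations


def parse_obj(text: str) -> tuple[list[tuple[float, float, float]], list[tuple[int, int, int]]]:
    """Return (vertices, triangles) from OBJ source text.

    Triangles hold 0-based vertex indices. Polygons with more than three
    vertices are split into a triangle fan around their first vertex.
    """
    vertices: list[tuple[float, float, float]] = []
    triangles: list[tuple[int, int, int]] = []
    for raw in text.splitlines():
        parts = raw.split("#", 1)[0].split()  # drop comments, split on whitespace
        if not parts:
            continue
        if parts[0] == "v":
            x, y, z = (float(p) for p in parts[1:4])
            vertices.append((x, y, z))
        elif parts[0] == "f":
            idx = []
            for ref in parts[1:]:
                i = int(ref.split("/")[0])  # "7/3/2" -> 7: keep only the vertex index
                # OBJ indices are 1-based; negative ones count back from the latest vertex.
                idx.append(len(vertices) + i if i < 0 else i - 1)
            for k in range(1, len(idx) - 1):
                triangles.append((idx[0], idx[k], idx[k + 1]))
    return vertices, triangles
```

For a single quad face `f 1/1 2/2 3/3 4/4` over four vertices, the parser returns the two triangles `(0, 1, 2)` and `(0, 2, 3)`.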

Cost Savings on Software:

Traditional design projects often require expensive software purchases just to view files. With cloud technology, the KIWI Engine by Blueberry AI eliminates the need for costly software installations. Team members can preview and collaborate on 3D files online, reducing software procurement and maintenance costs while improving flexibility and efficiency.

Improving Collaboration and Resource Reuse:

Without intelligent tools, design resources are often recreated from scratch, leading to wasted time and costs. The KIWI Engine supports multi-format 3D file previews and includes AI-powered search and auto-tagging, enabling designers to easily find and reuse existing resources. This significantly enhances collaboration and reduces the security risks of transferring large files.

User-Friendly Interface and Experience:

The KIWI Engine adopts a clean, intuitive user interface with a well-structured layout. A detailed list of meshes and materials appears on the left, while function modules (controls, materials, meshes, and lighting management) are on the right, ensuring a logical and smooth workflow. Personalized settings and organization-level global configurations further enhance productivity for teams of all sizes.

Mesh and Material List: After a model loads, its meshes and materials are clearly displayed, allowing users to easily select and edit the necessary components.

Personalized Settings: Customize the interface to suit individual workflow preferences, improving work efficiency.

Organization Settings: For large teams or cross-project managers, global configuration options enable unified project management across multiple teams, saving time and resources.

KIWI Engine's Control Tool Module:

The KIWI Engine features an innovative control tool module, covering camera controls, display functions, and advanced rendering options that meet diverse project needs—from simple model viewing to complex scene operations.

Camera Controls: Features like auto-rotation and quick reset allow users to easily adjust the camera for 360-degree model views, ensuring smooth, flexible operation.

Display Functions:

Wireframe Display: Ideal for structured previews, enabling users to inspect model geometry during the design phase.

Double-Sided Display: Renders both sides of each face, so models with defects such as flipped normals still display correctly, minimizing repair time and improving workflow efficiency.

SSR (Screen Space Reflection): Enhances model reflection effects for high-quality renderings in complex scenes.

UV Check: Displays UV distribution, helping users accurately assess UV mapping for precise texture work.

Learn more about us at: Blueberry: Best Intelligent Digital Asset Management System (blueberry-ai.com)

#DAM#3DAssetManagement#3DAsset#GameDev#IndustrialDesign#Maya#3DSMax#Blender#3DRendering#3DModeling#CloudCollaboration#3DDesignSolutions#3DVisualization#3DViewer#CollaborativeDesign

oneAPI Construction Kit for RISC-V Processors

With the oneAPI Construction Kit, you can integrate the oneAPI ecosystem with your RISC-V processor.

RISC-V

Codeplay, an Intel company, recently announced that its oneAPI Construction Kit supports RISC-V. RISC-V is a rapidly expanding open standard instruction set architecture (ISA), available under royalty-free open-source licenses for processors of all kinds.

The oneAPI programming model lets a single codebase be deployed across multiple computing architectures, including CPUs, GPUs, FPGAs, and other accelerators. It combines three pieces: direct programming in C++ with SYCL, a set of libraries for common functions such as math, threading, and neural networks, and a hardware abstraction layer that lets code written in one language target different devices.

The oneAPI specification is now overseen by the UXL Foundation. Its aim is to promote open-source collaboration and a unified, cross-architecture programming model free from proprietary software lock-in.

Codeplay's oneAPI Construction Kit is a framework for extending the oneAPI ecosystem to bespoke AI and HPC architectures. The latest release, version 4.0, introduces a RISC-V native host for the first time, supporting both native on-host compilation and cross-compilation.

With this capability, programs can run on a CPU and benefit from the acceleration that SYCL provides through data parallelism. RISC-V processor designers can now use the oneAPI Construction Kit to connect SYCL and the oneAPI ecosystem to their hardware. This is a key step toward a completely open hardware and software stack. The Kit is open source and free to use.

oneAPI Construction Kit

The oneAPI Construction Kit gives your processor access to an open ecosystem. It is a framework that brings SYCL and other open standards to new hardware platforms, and it can be used to extend the oneAPI ecosystem to custom AI and HPC architectures.

Give Developers Access to a Dynamic, Open Ecosystem

With the oneAPI Construction Kit, new and customized accelerators can benefit from the oneAPI ecosystem and its wide range of SYCL libraries. Contributors from many sectors of the industry support and maintain this open environment, so you can build with confidence that its features and libraries will continue to be maintained. The Kit also frees developers to innovate faster, because they spend less time rewriting code and managing separate codebases.

The oneAPI Construction Kit is useful for anyone who designs hardware. To help you get started, the Kit includes a reference implementation for RISC-V vector processors. It is not limited to RISC-V, however, and can be adapted to a variety of processors.

Codeplay Enhances the oneAPI Construction Kit with RISC-V Support

RISC-V, the rapidly expanding open standard instruction set architecture (ISA), can be used for all sorts of processors, including CPUs and accelerators. Axelera, Codasip, and others make RISC-V processors for a variety of applications. The EU is also developing RISC-V-powered microprocessors as part of the European Processor Initiative.

Codeplay has long been a pioneer in open ecosystems. As a member of RISC-V International, it has worked on the project for a number of years, leading working groups that have helped shape the standard. Codeplay recognizes that a genuinely open environment starts with open, standards-based hardware. That hardware also needs open software, and open source from top to bottom.

This is where oneAPI and SYCL come in. Together they offer a well-developed programming model and an ecosystem of open-source, standards-based software libraries for many kinds of applications, such as oneMKL and oneDNN. Both SYCL and oneAPI are heterogeneous. You can write code once and run it on hardware from AMD, Intel, NVIDIA, or, more recently, RISC-V, without being locked in to a single vendor.

Read more on govindhtech.com

#OneAPIConstructionKit#IntelRISCV#SYCL#FPGA#IntelRISCVProcessorInterface#oneAPI#RISCV#oneDNN#oneMKL#RISCVSupport#OpenEcosystem#technology#technews#news#govindhtech

Revolutionizing Healthcare: The Role of Cloud Computing in Modern Healthcare Technologies

In today’s digital era, cloud computing is transforming industries, and healthcare is no exception. Cloud-based technologies are reshaping patient care, medical research, and healthcare management. Let’s explore how cloud computing is revolutionizing healthcare and the benefits it brings.

What is Cloud Computing in Healthcare?

Cloud computing in healthcare refers to the use of remote servers to store, manage, and process healthcare data, rather than relying on local servers or personal computers. This technology allows healthcare organizations to access vast amounts of data, collaborate with other institutions, and scale operations seamlessly.

Key Benefits of Cloud Computing in Healthcare

Enhanced Data Storage and Accessibility: Cloud technology allows healthcare providers to securely store massive volumes of patient data, including medical records, images, and test results. Clinicians can access this data from anywhere, so patient information is available for timely decision-making.

Improved Collaboration: Cloud-based healthcare platforms make it easy to share patient data between healthcare providers, specialists, and labs. This supports better collaboration and more accurate diagnoses and treatment plans, especially in multidisciplinary cases.

Cost Efficiency: The cloud reduces the need for expensive hardware, software, and in-house IT teams. Healthcare providers pay only for the resources they use, which makes the cloud cost-effective. Cloud systems can also scale as healthcare organizations grow.

Better Data Security: Protecting sensitive patient information is critical in healthcare. Cloud providers invest heavily in security measures such as encryption, multi-factor authentication, and regular audits. These measures help healthcare organizations comply with regulatory standards like HIPAA.

Telemedicine and Remote Patient Monitoring: Cloud computing powers telemedicine platforms, allowing patients to consult doctors virtually from the comfort of their homes. It also enables remote patient monitoring, where doctors track patients' health metrics in real time, improving outcomes for chronic conditions.

Advanced Data Analytics: The cloud supports advanced analytics tools, including artificial intelligence (AI) and machine learning (ML). These tools can analyze large datasets to predict health trends, track disease outbreaks, and personalize treatment plans based on individual patient data.

Use Cases of Cloud Computing in Healthcare

Electronic Health Records (EHRs): Cloud-based EHRs allow healthcare providers to access and update patient records instantly, improving the quality of care.

Genomics and Precision Medicine: Cloud computing accelerates the processing of large datasets in genomics, supporting research and development in personalized medicine.

Hospital Information Systems (HIS): Cloud-powered HIS streamline hospital operations, from patient admissions to billing, improving efficiency.

Challenges in Cloud Computing for Healthcare

Despite its numerous benefits, there are challenges to implementing cloud computing in healthcare. These include:

Data Privacy Concerns: Although cloud providers offer robust security measures, healthcare organizations must ensure their systems are compliant with local and international regulations.

Integration with Legacy Systems: Many healthcare institutions still rely on outdated technology, making it challenging to integrate cloud solutions smoothly.

Staff Training: Healthcare professionals need adequate training to use cloud-based systems effectively.

The Future of Cloud Computing in Healthcare

The future of healthcare will be increasingly cloud-centric. With advancements in AI, IoT, and big data analytics, cloud computing will continue to drive innovations in personalized medicine, population health management, and patient care. Additionally, with the growing trend of wearable devices and health apps, cloud computing will play a crucial role in integrating and managing data from diverse sources to provide a comprehensive view of patient health.

Conclusion

Cloud computing is not just a trend in healthcare; it is a transformative force driving the industry towards more efficient, secure, and patient-centric care. As healthcare organizations continue to adopt cloud technologies, we can expect to see improved patient outcomes, lower costs, and innovations that were once thought impossible.

Embracing cloud computing in healthcare is essential for any organization aiming to stay at the forefront of medical advancements and patient care.

Content Source:

In the era of digital transformation, cloud computing has emerged as a pivotal technology, reshaping the way businesses operate and innovate. Pune, a burgeoning IT and business hub, has seen a significant surge in the adoption of cloud services, with companies seeking to enhance their efficiency, scalability, and agility. Zip Crest stands at the forefront of this revolution, offering comprehensive cloud computing services tailored to meet the diverse needs of businesses in Pune.

The Importance of Cloud Computing

Cloud computing enables organizations to leverage a network of remote servers hosted on the internet to store, manage, and process data, rather than relying on local servers or personal computers. This shift provides several key benefits:

- Scalability: Businesses can easily scale their operations up or down based on demand, without the need for significant capital investment in hardware.

- Cost Efficiency: Cloud services operate on a pay-as-you-go model, reducing the need for substantial upfront investment and lowering overall IT costs.

- Accessibility: Cloud computing allows access to data and applications from anywhere, at any time, fostering remote work and collaboration.

- Security: Leading cloud service providers offer robust security measures, including encryption, access controls, and regular security audits, to protect sensitive data.

Zip Crest: Leading Cloud Computing Services in Pune

Zip Crest is committed to empowering businesses in Pune with cutting-edge cloud computing solutions. Our services are designed to address the unique challenges and opportunities that come with the digital age. Here’s how we can transform your business operations:

1. Cloud Strategy and Consulting:

At Zip Crest, we begin by understanding your business objectives and IT infrastructure. Our experts then craft a bespoke cloud strategy that aligns with your goals, ensuring a seamless transition to the cloud and maximizing the benefits of cloud technology.

2. Infrastructure as a Service (IaaS):

Our IaaS offerings provide businesses with virtualized computing resources over the internet. This includes virtual machines, storage, and networking capabilities, allowing you to build and manage your IT infrastructure without the need for physical hardware.

3. Platform as a Service (PaaS):

With our PaaS solutions, developers can build, deploy, and manage applications without worrying about the underlying infrastructure. This accelerates development cycles, enhances productivity, and reduces time to market.

4. Software as a Service (SaaS):

Zip Crest offers a range of SaaS applications that can streamline your business processes. From customer relationship management (CRM) to enterprise resource planning (ERP), our cloud-based software solutions are designed to improve efficiency and drive growth.

5. Cloud Migration Services:

Transitioning to the cloud can be complex. Our cloud migration services ensure a smooth and secure migration of your applications, data, and workloads to the cloud, minimizing downtime and disruption to your business operations.

6. Managed Cloud Services:

Once your infrastructure is on the cloud, ongoing management is crucial to ensure optimal performance and security. Our managed cloud services provide continuous monitoring, maintenance, and support, allowing you to focus on your core business activities.

Why Choose Zip Crest?

Choosing Zip Crest for your cloud computing needs comes with several advantages:

- Expertise: Our team of certified cloud professionals brings extensive experience and deep knowledge of cloud technologies.

- Customized Solutions: We understand that every business is unique. Our solutions are tailored to meet your specific requirements and objectives.

- Proactive Support: We offer 24/7 support to ensure that your cloud infrastructure is always running smoothly and efficiently.

- Security Focus: Security is at the core of our services. We implement robust security measures to protect your data and applications from threats.

Conclusion

In conclusion, cloud computing is transforming the way businesses operate, offering unprecedented levels of flexibility, scalability, and efficiency. Zip Crest is dedicated to helping businesses in Pune harness the power of the cloud to achieve their goals and stay competitive in today’s fast-paced digital landscape. By partnering with Zip Crest, you can ensure that your business is well-equipped to navigate the complexities of cloud computing and reap its many benefits. Embrace the future of technology with Zip Crest and revolutionize your business operations with our top-tier cloud computing services.

Get In Touch

Website: https://zipcrest.com/

Address: 13, 4th Floor, A Building, City Vista Office Park, Fountain Road, Karadi, Pune, Maharashtra 411014

Call: +912049330928 / 9763702645 / 7020684182

If you have ever eaten pastries at breakfast, drunk soy milk, used soap at home, or built yourself a nice flat-pack piece of furniture, you may have contributed to deforestation and climate change.

Every item has a price—but the cost isn’t felt only in our pockets. Hidden in that price is a complex chain of production, encompassing economic, social, and environmental relations that sustain livelihoods and, unfortunately, contribute to habitat destruction, deforestation, and the warming of our planet.

Approximately 4 billion hectares of forest around the world act as a carbon sink which, over the past two decades, has annually absorbed a net 7.6 billion metric tons of CO2. That’s the equivalent of 1.5 times the annual emissions of the US.

Conversely, a cleared forest becomes a carbon source. Many factors lead to forest clearing, but the root cause is economic. Farmers cut down the forest to expand their farms, support cattle grazing, harvest timber, mine minerals, and build infrastructure such as roads. Until that economic pressure goes away, the clearing may continue.

In 2024, however, we are going to see a big boost to global efforts to fight deforestation. New EU legislation will make it illegal to sell or export a range of commodities if they have been produced on deforested land. Sellers will need to identify exactly where their product originates, down to the geolocation of the plot. Penalties are harsh, including bans and fines of up to 4 percent of the offender's annual EU-wide turnover. Unsurprisingly, industry has pushed back hard, arguing that the costs are too high or the requirements too onerous. As with many global frameworks, the EU is leading this initiative, with other countries sure to follow as the so-called Brussels Effect pressures ever more jurisdictions to adopt its methods.

The impact of these measures will only be as strong as the enforcement and, in 2024, we will see new ways of doing that digitally. At Farmerline (which I cofounded), for instance, we have been working on supply chain traceability for over a decade. We incentivize rule-following by making it beneficial.

When we digitize farmers and allow them and other stakeholders to track their products from soil to shelf, they also gain access to a suite of other products: the latest, most sustainable farming practices in their own language, access to flexible financing to fund climate-smart products such as drought-resistant seeds, solar irrigation systems and organic fertilizers, and the ability to earn more through international commodity markets.

Digitization helps build resilience and lasting wealth for smallholders and helps save the environment. Another example is the World Economic Forum’s OneMap, an open-source, privacy-preserving digital tool that helps governments use geospatial and farmer data to improve planning and decision-making in agriculture and land use. In India, the Data Empowerment and Protection Architecture (DEPA) also provides a secure, consent-based data-sharing framework to accelerate financial inclusion.

In 2024 we will also see more food companies and food certification bodies leverage digital payment tools, like mobile money, to ensure farmers’ pay is not only direct and transparent, but also better if they comply with deforestation regulations.

The fight against deforestation will also be made easier by developments in hardware technology. New, lightweight drones from startups such as AirSeed can plant seeds, while further up, mini-satellites, such as those from Planet Labs, are taking millions of images per week, allowing governments and NGOs to track areas being deforested in near-real time. In Rwanda, researchers are using AI and the aerial footage captured by Planet Labs to calculate, monitor, and estimate the carbon stock of the entire country.

With these advances in software and hard-tech, in 2024, the global fight against deforestation will finally start to grow new shoots.

How to enable Hardware acceleration in Firefox ESR

For reference, my computer has Intel integrated graphics, and my operating system is Debian 12 Bookworm, using VA-API for hardware video acceleration. I had hardware video acceleration enabled for many applications, but last year I had to spend some time figuring out how to enable it for Firefox. I found this article and followed it, but at first I couldn't figure out how to use the environment variable. So here's a guide for anyone new to Linux!

First, if you haven't already, determine whether your session uses the Wayland or X11 windowing system. In a terminal, enter:

echo "$XDG_SESSION_TYPE"

This prints which windowing system you are using ("wayland" or "x11"). Once you've followed the instructions in the linked article, edit /usr/share/applications/firefox-esr.desktop as root (or with root privileges) in your favorite text editor. I like to use nano! So, for example, you would enter in a terminal:

sudo nano /usr/share/applications/firefox-esr.desktop

Then, navigate to the line that says "Exec=...". Replace that line with the following line, depending on whether you use Wayland or X11. If you use Wayland:

Exec=env MOZ_ENABLE_WAYLAND=1 firefox

If you use X11:

Exec=env MOZ_X11_EGL=1 firefox

Then save the file! In nano, press Ctrl+X, then Y, then Enter. Restart Firefox ESR if it was already running, and hardware acceleration should now work. Enjoy!
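If you'd rather not edit the file by hand, here is a minimal POSIX shell sketch of the same change. Treat it as a starting point rather than a tested recipe. The function names moz_env_for_session and add_moz_env are hypothetical and were made up for this example. The sketch assumes GNU sed (Debian's default) for sed -i. It also differs deliberately from the steps above: instead of replacing the whole Exec= line, it inserts "env VAR=1" right after "Exec=". That way, whatever command and arguments your desktop file already had are kept.

```shell
#!/bin/sh
# Sketch: apply the Exec= edit from this guide without opening an editor.
# Assumption: instead of replacing the whole Exec= line, it prefixes
# "env VAR=1" so the original command (and any %u argument) is kept.

# Print the environment variable this guide uses for a session type.
moz_env_for_session() {
    case "$1" in
        wayland) echo 'MOZ_ENABLE_WAYLAND=1' ;;
        x11)     echo 'MOZ_X11_EGL=1' ;;
        *)       echo "unsupported session type: $1" >&2; return 1 ;;
    esac
}

# Prefix every Exec= line in a .desktop file with "env VAR=1".
# Usage: add_moz_env FILE [SESSION_TYPE]  (defaults to $XDG_SESSION_TYPE)
# Lines that already start with "Exec=env " are skipped, so running it
# twice does not stack the prefix.
add_moz_env() {
    file=$1
    session=${2:-$XDG_SESSION_TYPE}
    var=$(moz_env_for_session "$session") || return 1
    # Single quotes around "!s" avoid history expansion in interactive bash.
    sed -i '/^Exec=env /!s|^Exec=|Exec=env '"$var"' |' "$file"
}

# Example (work on a per-user copy, which overrides the system file):
#   mkdir -p ~/.local/share/applications
#   cp /usr/share/applications/firefox-esr.desktop ~/.local/share/applications/
#   add_moz_env ~/.local/share/applications/firefox-esr.desktop
```

The example edits a copy in ~/.local/share/applications, so it needs no root access. A desktop file with the same name there takes precedence over the one in /usr/share/applications, and a Firefox ESR package upgrade won't overwrite it.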

#linux#debian#gnu/linux#hardware acceleration#transfemme#Honestly I might start doing more Linux tutorials!#Linux is fun!

From Coding to Creation: Java's Versatile Influence

Java, often described as the "king of programming languages," stands as a monumental force that has significantly influenced the trajectory of modern software development. For over two decades, Java has proven itself a versatile, powerful, and dependable programming language, underpinning a vast array of applications, platforms, and systems that permeate our digital landscape. In this comprehensive exploration, we will embark on a journey to unravel the essence of Java, delving deep into what makes it indispensable and why it continues to be the preferred choice for programmers across diverse domains.

What is Java?

At its core, Java is a high-level, object-oriented, platform-independent programming language. It was conceived in the mid-1990s by James Gosling and his team at Sun Microsystems, which Oracle Corporation acquired in 2010. Java introduced a revolutionary concept that continues to define its identity: "Write Once, Run Anywhere." Java source code is compiled to bytecode, so the same application can run on any platform that has a Java Virtual Machine (JVM) to execute it. This single feature makes Java an unparalleled workhorse, transcending the boundaries of operating systems and hardware and ushering in an era of software portability and compatibility.

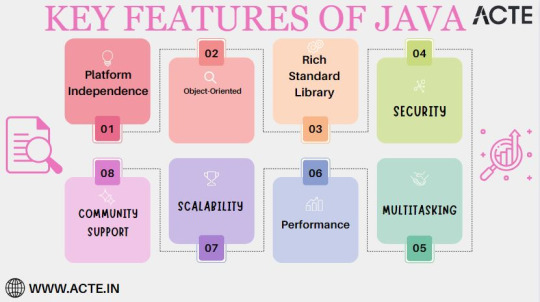

Key Features of Java:

Platform Independence: Java's unparalleled platform independence is the cornerstone of its success. Code authored in Java is liberated from the confines of a single operating system, enabling it to traverse across a plethora of platforms without requiring any cumbersome modifications. This inherent portability not only obliterates compatibility concerns but also streamlines software deployment, eliminating the need for platform-specific versions.

Object-Oriented Paradigm: Java's steadfast adherence to the object-oriented programming (OOP) paradigm cultivates a development environment characterized by modularity and maintainability. By encapsulating code into discrete objects, Java empowers developers to construct intricate systems with greater efficiency and ease of management, a quality particularly favored in large-scale projects.

Rich Standard Library: The Java Standard Library stands as a testament to the language's comprehensiveness. It comprises a vast repository of pre-built classes and methods that cater to a wide spectrum of programming tasks. By offering ready-made tools for common operations, it significantly reduces development overhead and saves developers valuable time.

Security: In an era marred by cyber threats and vulnerabilities, Java was designed with security in mind. It incorporates security features such as bytecode verification. It historically also offered a sandbox for executing untrusted code (the Security Manager, deprecated for removal since Java 17). As a result, Java is widely used for building secure applications, particularly in industries where data integrity and user privacy are paramount.

Community Support: The strength of Java's thriving developer community is an asset of immeasurable value. This vast and active network ensures that developers are never left wanting for resources, libraries, or frameworks. It provides a dynamic support system where knowledge sharing and collaborative problem-solving flourish, accelerating project development and troubleshooting.

Scalability: Java is not confined by the scale of a project. It gracefully adapts to the demands of both modest applications and sprawling enterprise-level systems. Its versatility ensures that as your project grows, Java will remain a steadfast companion, capable of meeting your evolving requirements.

Performance: Java's Just-In-Time (JIT) compiler is central to its performance. The JVM compiles frequently executed bytecode to native machine code at run time and optimizes it based on how the program actually runs. This lets applications run efficiently and deliver responsive user experiences. Together with its ability to harness modern hardware, this makes Java a popular choice for performance-critical applications.

Multithreading: Java's built-in support for multithreading equips applications to execute multiple tasks concurrently. This not only enhances responsiveness but also elevates the overall performance of applications, particularly those designed for tasks that demand parallel processing.

Java is not merely a programming language; it represents a dynamic ecosystem that empowers developers to fashion an extensive array of applications, ranging from mobile apps and web services to enterprise-grade software solutions. Its hallmark feature of platform independence, complemented by its rich libraries, security fortifications, and the formidable backing of a robust developer community, collectively underpin its enduring popularity.

In a world where digital innovation propels progress, Java stands as an essential cornerstone for building the technologies that sculpt our future. It's not merely a language; it's the key to unlocking a boundless realm of opportunities. For those seeking to embark on a journey into the realm of Java programming or aspiring to refine their existing skills, ACTE Technologies stands as a beacon of expert guidance and comprehensive training. Their programs are tailored to equip you with the knowledge and skills necessary to harness the full potential of Java in your software development career.

As we navigate an era defined by digital transformation, Java remains a trusted companion, continually evolving to meet the ever-changing demands of technology. It's not just a programming language; it's the linchpin of a world characterized by innovation and progress. Let ACTE Technologies be your trusted guide on this exhilarating journey into the boundless possibilities of Java programming.
