#data science course in canada

Text

Start Strong: Beginner Data Science Courses to Launch Your Career in Canada

Start your career with Sai Data Science! Join our beginner Data Science courses in Canada and gain access to a wide range of opportunities in the technology sector.

#data science course in canada#learn data science online#data science course in edmonton#data visualization tips and techniques

Text

Data science is a field of study that works with enormous amounts of data, using contemporary technologies and methodologies to uncover hidden patterns, obtain valuable information, and make business decisions. Datamites provides online data science training in Canada.

Text

Elaine Liu: Charging ahead

New Post has been published on https://thedigitalinsider.com/elaine-liu-charging-ahead/

MIT senior Elaine Siyu Liu doesn’t own an electric car, or any car. But she sees the impact of electric vehicles (EVs) and renewables on the grid as two pieces of an energy puzzle she wants to solve.

The U.S. Department of Energy reports that the number of public and private EV charging ports nearly doubled in the past three years, and many more are in the works. Users expect to plug in at their convenience, charge up, and drive away. But what if the grid can’t handle it?

Electricity demand, long stagnant in the United States, has spiked due to EVs, data centers that drive artificial intelligence, and industry. Grid planners forecast an increase of 2.6 percent to 4.7 percent in electricity demand over the next five years, according to data reported to federal regulators. Everyone from EV charging-station operators to utility-system operators needs help navigating a system in flux.

That’s where Liu’s work comes in.

Liu, who is studying mathematics and electrical engineering and computer science (EECS), is interested in distribution — how to get electricity from a centralized location to consumers. “I see power systems as a good venue for theoretical research as an application tool,” she says. “I’m interested in it because I’m familiar with the optimization and probability techniques used to map this level of problem.”

Liu grew up in Beijing, then after middle school moved with her parents to Canada and enrolled in a prep school in Oakville, Ontario, 30 miles outside Toronto.

Liu stumbled upon an opportunity to take part in a regional math competition and eventually started a math club, but at the time, the school’s culture surrounding math surprised her. Being exposed to what seemed to be some students’ aversion to math, she says, “I don’t think my feelings about math changed. I think my feelings about how people feel about math changed.”

Liu brought her passion for math to MIT. The summer after her sophomore year, she took on the first of the two Undergraduate Research Opportunity Program projects she completed with electric power system expert Marija Ilić, a joint adjunct professor in EECS and a senior research scientist at the MIT Laboratory for Information and Decision Systems.

Predicting the grid

Since 2022, with the help of funding from the MIT Energy Initiative (MITEI), Liu has been working with Ilić on identifying ways in which the grid is challenged.

One factor is the addition of renewables to the energy pipeline. A gap in wind or sun might cause a lag in power generation. If this lag occurs during peak demand, it could mean trouble for a grid already taxed by extreme weather and other unforeseen events.

If you think of the grid as a network of dozens of interconnected parts, once an element in the network fails — say, a tree downs a transmission line — the electricity that used to go through that line needs to be rerouted. This may overload other lines, creating what’s known as a cascade failure.

“This all happens really quickly and has very large downstream effects,” Liu says. “Millions of people will have instant blackouts.”
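The rerouting mechanism described above can be illustrated with a toy simulation. This is a minimal sketch under strong simplifying assumptions, not Liu and Ilić's model: it assumes that the load of a failed line is split equally among all surviving lines, and the function name `simulate_cascade`, the loads, and the capacities are all hypothetical.

```python
def simulate_cascade(loads, capacities, first_failure):
    """Toy cascade: return the set of line indices that end up failed.

    Assumption (not from the article): when lines fail, their load is
    shared equally among every surviving line, and any surviving line
    pushed above its capacity fails in the next round.
    """
    loads = list(loads)          # copy so the caller's list is untouched
    failed = set()
    to_fail = [first_failure]    # lines that fail in the current round

    while to_fail:
        shed = 0.0
        for i in to_fail:
            failed.add(i)
            shed += loads[i]     # this load must be rerouted
            loads[i] = 0.0

        alive = [i for i in range(len(loads)) if i not in failed]
        if not alive:
            break                # total blackout, nothing left to reroute to

        share = shed / len(alive)
        for i in alive:
            loads[i] += share

        # Any surviving line now above capacity fails in the next round.
        to_fail = [i for i in alive if loads[i] > capacities[i]]

    return failed


# Lightly loaded network: the other lines absorb the rerouted power.
print(simulate_cascade([50, 60, 70], [100, 100, 100], first_failure=0))

# Heavily loaded network: one failure overloads the remaining lines.
print(simulate_cascade([80, 80, 80], [100, 100, 100], first_failure=0))
```

In the first call only the initial line is lost; in the second, the same single failure takes down every line, which is the cascade behavior the paragraph above describes. Real power flow follows physical laws rather than an equal split, so this only conveys the qualitative idea.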

Even if the system can handle a single downed line, Liu notes that “the nuance is that there are now a lot of renewables, and renewables are less predictable. You can’t predict a gap in wind or sun. When such things happen, there’s suddenly not enough generation and too much demand. So the same kind of failure would happen, but on a larger and more uncontrollable scale.”

Renewables’ varying output has the added complication of causing voltage fluctuations. “We plug in our devices expecting a voltage of 110, but because of oscillations, you will never get exactly 110,” Liu says. “So even when you can deliver enough electricity, if you can’t deliver it at the specific voltage level that is required, that’s a problem.”

Liu and Ilić are building a model to predict how and when the grid might fail. Lacking access to privatized data, Liu runs her models with European industry data and test cases made available to universities. “I have a fake power grid that I run my experiments on,” she says. “You can take the same tool and run it on the real power grid.”

Liu’s model predicts cascade failures as they evolve. Supply from a wind generator, for example, might drop precipitously over the course of an hour. The model analyzes which substations and which households will be affected. “After we know we need to do something, this prediction tool can enable system operators to strategically intervene ahead of time,” Liu says.

Dictating price and power

Last year, Liu turned her attention to EVs, which provide a different kind of challenge than renewables.

In 2022, S&P Global reported that lawmakers argued that the U.S. Federal Energy Regulatory Commission’s (FERC) wholesale power rate structure was unfair for EV charging station operators.

In addition to operators paying by the kilowatt-hour, some also pay more for electricity during peak demand hours. Only a few EVs charging up during those hours could result in higher costs for the operator even if their overall energy use is low.
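A small arithmetic sketch shows why the timing of charging matters as much as the amount. The rates and the peak window below are hypothetical values chosen for illustration; they are not actual tariff figures, and real rate structures can be more involved than a simple two-tier price.

```python
# Hypothetical two-tier tariff, in cents per kilowatt-hour.
OFF_PEAK_RATE = 10
PEAK_RATE = 30
PEAK_HOURS = range(17, 21)   # assumed peak window: 5 p.m. to 9 p.m.


def daily_cost_cents(kwh_by_hour):
    """Total cost for one day, given a mapping of hour (0-23) to kWh used."""
    total = 0
    for hour, kwh in kwh_by_hour.items():
        rate = PEAK_RATE if hour in PEAK_HOURS else OFF_PEAK_RATE
        total += kwh * rate
    return total


# Same total energy (100 kWh), delivered at different times of day.
midday = daily_cost_cents({10: 50, 11: 50})
evening = daily_cost_cents({18: 50, 19: 50})
print(midday, evening)
```

Under these assumed rates, the station that serves its customers during the evening peak pays three times as much for the same 100 kWh, which is the kind of imbalance the paragraph above describes.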

Anticipating how much power EVs will need is more complex than predicting energy needed for, say, heating and cooling. Unlike buildings, EVs move around, making it difficult to predict energy consumption at any given time. “If users don’t like the price at one charging station or how long the line is, they’ll go somewhere else,” Liu says. “Where to allocate EV chargers is a problem that a lot of people are dealing with right now.”

One approach would be for FERC to dictate to EV users when and where to charge and what price they’ll pay. To Liu, this isn’t an attractive option. “No one likes to be told what to do,” she says.

Liu is looking at optimizing a market-based solution that would be acceptable to top-level energy producers — wind and solar farms and nuclear plants — all the way down to the municipal aggregators that secure electricity at competitive rates and oversee distribution to the consumer.

Analyzing the location, movement, and behavior patterns of all the EVs driven daily in Boston and other major energy hubs, she notes, could help demand aggregators determine where to place EV chargers and how much to charge consumers, akin to Walmart deciding how much to mark up wholesale eggs in different markets.

Last year, Liu presented the work at MITEI’s annual research conference. This spring, Liu and Ilić are submitting a paper on the market optimization analysis to a journal of the Institute of Electrical and Electronics Engineers.

Liu has come to terms with her early introduction to attitudes toward STEM that struck her as markedly different from those in China. She says, “I think the (prep) school had a very strong ‘math is for nerds’ vibe, especially for girls. There was a ‘why are you giving yourself more work?’ kind of mentality. But over time, I just learned to disregard that.”

After graduation, Liu, the only undergraduate researcher in Ilić’s MIT Electric Energy Systems Group, plans to apply to fellowships and graduate programs in EECS, applied math, and operations research.

Based on her analysis, Liu says that the market could effectively determine the price and availability of charging stations. Offering incentives for EV owners to charge during the day instead of at night when demand is high could help avoid grid overload and prevent extra costs to operators. “People would still retain the ability to go to a different charging station if they chose to,” she says. “I’m arguing that this works.”

Text

#data science course#data science training#data science certification#data science online course#data science institute in delhi#data scientist#data analytics#big data#machine learning#business intelligence#data science in canada

Text

An artificial intelligence (AI) course is an educational program or training that focuses on teaching individuals the principles, techniques, and applications of artificial intelligence. Datamites is an organization that offers various courses and training programs in the field of data science, artificial intelligence, and machine learning.

Text

I thought y'all should read this

I have a free trial to News+ so I copy-pasted it for you here. I don't think Jonathan Haidt would object to more people having this info.

Tumblr wouldn't let me post it until I removed all the links to Haidt's sources. You'll have to take my word that everything is sourced.

End the Phone-Based Childhood Now

The environment in which kids grow up today is hostile to human development.

By Jonathan Haidt

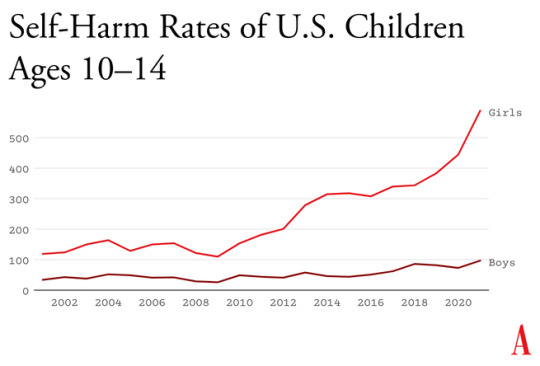

Something went suddenly and horribly wrong for adolescents in the early 2010s. By now you’ve likely seen the statistics: Rates of depression and anxiety in the United States—fairly stable in the 2000s—rose by more than 50 percent in many studies from 2010 to 2019. The suicide rate rose 48 percent for adolescents ages 10 to 19. For girls ages 10 to 14, it rose 131 percent.

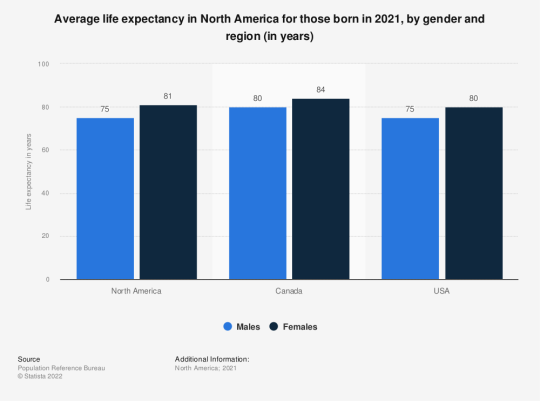

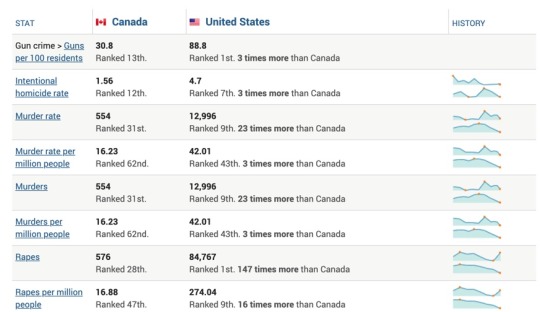

The problem was not limited to the U.S.: Similar patterns emerged around the same time in Canada, the U.K., Australia, New Zealand, the Nordic countries, and beyond. By a variety of measures and in a variety of countries, the members of Generation Z (born in and after 1996) are suffering from anxiety, depression, self-harm, and related disorders at levels higher than any other generation for which we have data.

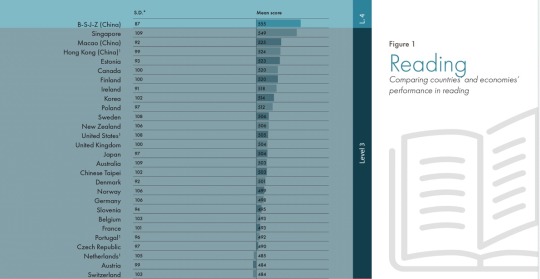

The decline in mental health is just one of many signs that something went awry. Loneliness and friendlessness among American teens began to surge around 2012. Academic achievement went down, too. According to “The Nation’s Report Card,” scores in reading and math began to decline for U.S. students after 2012, reversing decades of slow but generally steady increase. PISA, the major international measure of educational trends, shows that declines in math, reading, and science happened globally, also beginning in the early 2010s.

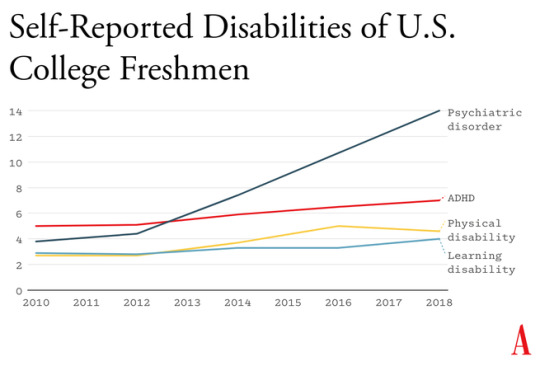

As the oldest members of Gen Z reach their late 20s, their troubles are carrying over into adulthood. Young adults are dating less, having less sex, and showing less interest in ever having children than prior generations. They are more likely to live with their parents. They were less likely to get jobs as teens, and managers say they are harder to work with. Many of these trends began with earlier generations, but most of them accelerated with Gen Z.

Surveys show that members of Gen Z are shyer and more risk averse than previous generations, too, and risk aversion may make them less ambitious. In an interview last May, OpenAI co-founder Sam Altman and Stripe co-founder Patrick Collison noted that, for the first time since the 1970s, none of Silicon Valley’s preeminent entrepreneurs are under 30. “Something has really gone wrong,” Altman said. In a famously young industry, he was baffled by the sudden absence of great founders in their 20s.

Generations are not monolithic, of course. Many young people are flourishing. Taken as a whole, however, Gen Z is in poor mental health and is lagging behind previous generations on many important metrics. And if a generation is doing poorly––if it is more anxious and depressed and is starting families, careers, and important companies at a substantially lower rate than previous generations––then the sociological and economic consequences will be profound for the entire society.

What happened in the early 2010s that altered adolescent development and worsened mental health? Theories abound, but the fact that similar trends are found in many countries worldwide means that events and trends that are specific to the United States cannot be the main story.

I think the answer can be stated simply, although the underlying psychology is complex: Those were the years when adolescents in rich countries traded in their flip phones for smartphones and moved much more of their social lives online—particularly onto social-media platforms designed for virality and addiction. Once young people began carrying the entire internet in their pockets, available to them day and night, it altered their daily experiences and developmental pathways across the board. Friendship, dating, sexuality, exercise, sleep, academics, politics, family dynamics, identity—all were affected. Life changed rapidly for younger children, too, as they began to get access to their parents’ smartphones and, later, got their own iPads, laptops, and even smartphones during elementary school.

As a social psychologist who has long studied social and moral development, I have been involved in debates about the effects of digital technology for years. Typically, the scientific questions have been framed somewhat narrowly, to make them easier to address with data. For example, do adolescents who consume more social media have higher levels of depression? Does using a smartphone just before bedtime interfere with sleep? The answer to these questions is usually found to be yes, although the size of the relationship is often statistically small, which has led some researchers to conclude that these new technologies are not responsible for the gigantic increases in mental illness that began in the early 2010s.

But before we can evaluate the evidence on any one potential avenue of harm, we need to step back and ask a broader question: What is childhood––including adolescence––and how did it change when smartphones moved to the center of it? If we take a more holistic view of what childhood is and what young children, tweens, and teens need to do to mature into competent adults, the picture becomes much clearer. Smartphone-based life, it turns out, alters or interferes with a great number of developmental processes.

The intrusion of smartphones and social media is not the only change that has deformed childhood. There’s an important backstory, beginning as long ago as the 1980s, when we started systematically depriving children and adolescents of freedom, unsupervised play, responsibility, and opportunities for risk taking, all of which promote competence, maturity, and mental health. But the change in childhood accelerated in the early 2010s, when an already independence-deprived generation was lured into a new virtual universe that seemed safe to parents but in fact is more dangerous, in many respects, than the physical world.

My claim is that the new phone-based childhood that took shape roughly 12 years ago is making young people sick and blocking their progress to flourishing in adulthood. We need a dramatic cultural correction, and we need it now.

1. The Decline of Play and Independence

Human brains are extraordinarily large compared with those of other primates, and human childhoods are extraordinarily long, too, to give those large brains time to wire up within a particular culture. A child’s brain is already 90 percent of its adult size by about age 6. The next 10 or 15 years are about learning norms and mastering skills—physical, analytical, creative, and social. As children and adolescents seek out experiences and practice a wide variety of behaviors, the synapses and neurons that are used frequently are retained while those that are used less often disappear. Neurons that fire together wire together, as brain researchers say.

Brain development is sometimes said to be “experience-expectant,” because specific parts of the brain show increased plasticity during periods of life when an animal’s brain can “expect” to have certain kinds of experiences. You can see this with baby geese, who will imprint on whatever mother-sized object moves in their vicinity just after they hatch. You can see it with human children, who are able to learn languages quickly and take on the local accent, but only through early puberty; after that, it’s hard to learn a language and sound like a native speaker. There is also some evidence of a sensitive period for cultural learning more generally. Japanese children who spent a few years in California in the 1970s came to feel “American” in their identity and ways of interacting only if they attended American schools for a few years between ages 9 and 15. If they left before age 9, there was no lasting impact. If they didn’t arrive until they were 15, it was too late; they didn’t come to feel American.

Human childhood is an extended cultural apprenticeship with different tasks at different ages all the way through puberty. Once we see it this way, we can identify factors that promote or impede the right kinds of learning at each age. For children of all ages, one of the most powerful drivers of learning is the strong motivation to play. Play is the work of childhood, and all young mammals have the same job: to wire up their brains by playing vigorously and often, practicing the moves and skills they’ll need as adults. Kittens will play-pounce on anything that looks like a mouse tail. Human children will play games such as tag and sharks and minnows, which let them practice both their predator skills and their escaping-from-predator skills. Adolescents will play sports with greater intensity, and will incorporate playfulness into their social interactions—flirting, teasing, and developing inside jokes that bond friends together. Hundreds of studies on young rats, monkeys, and humans show that young mammals want to play, need to play, and end up socially, cognitively, and emotionally impaired when they are deprived of play.

One crucial aspect of play is physical risk taking. Children and adolescents must take risks and fail—often—in environments in which failure is not very costly. This is how they extend their abilities, overcome their fears, learn to estimate risk, and learn to cooperate in order to take on larger challenges later. The ever-present possibility of getting hurt while running around, exploring, play-fighting, or getting into a real conflict with another group adds an element of thrill, and thrilling play appears to be the most effective kind for overcoming childhood anxieties and building social, emotional, and physical competence. The desire for risk and thrill increases in the teen years, when failure might carry more serious consequences. Children of all ages need to choose the risk they are ready for at a given moment. Young people who are deprived of opportunities for risk taking and independent exploration will, on average, develop into more anxious and risk-averse adults.

Human childhood and adolescence evolved outdoors, in a physical world full of dangers and opportunities. Its central activities––play, exploration, and intense socializing––were largely unsupervised by adults, allowing children to make their own choices, resolve their own conflicts, and take care of one another. Shared adventures and shared adversity bound young people together into strong friendship clusters within which they mastered the social dynamics of small groups, which prepared them to master bigger challenges and larger groups later on.

And then we changed childhood.

The changes started slowly in the late 1970s and ’80s, before the arrival of the internet, as many parents in the U.S. grew fearful that their children would be harmed or abducted if left unsupervised. Such crimes have always been extremely rare, but they loomed larger in parents’ minds thanks in part to rising levels of street crime combined with the arrival of cable TV, which enabled round-the-clock coverage of missing-children cases. A general decline in social capital––the degree to which people knew and trusted their neighbors and institutions––exacerbated parental fears. Meanwhile, rising competition for college admissions encouraged more intensive forms of parenting. In the 1990s, American parents began pulling their children indoors or insisting that afternoons be spent in adult-run enrichment activities. Free play, independent exploration, and teen-hangout time declined.

In recent decades, seeing unchaperoned children outdoors has become so novel that when one is spotted in the wild, some adults feel it is their duty to call the police. In 2015, the Pew Research Center found that parents, on average, believed that children should be at least 10 years old to play unsupervised in front of their house, and that kids should be 14 before being allowed to go unsupervised to a public park. Most of these same parents had enjoyed joyous and unsupervised outdoor play by the age of 7 or 8.

2. The Virtual World Arrives in Two Waves

The internet, which now dominates the lives of young people, arrived in two waves of linked technologies. The first one did little harm to Millennials. The second one swallowed Gen Z whole.

The first wave came ashore in the 1990s with the arrival of dial-up internet access, which made personal computers good for something beyond word processing and basic games. By 2003, 55 percent of American households had a computer with (slow) internet access. Rates of adolescent depression, loneliness, and other measures of poor mental health did not rise in this first wave. If anything, they went down a bit. Millennial teens (born 1981 through 1995), who were the first to go through puberty with access to the internet, were psychologically healthier and happier, on average, than their older siblings or parents in Generation X (born 1965 through 1980).

The second wave began to rise in the 2000s, though its full force didn’t hit until the early 2010s. It began rather innocently with the introduction of social-media platforms that helped people connect with their friends. Posting and sharing content became much easier with sites such as Friendster (launched in 2003), Myspace (2003), and Facebook (2004).

Teens embraced social media soon after it came out, but the time they could spend on these sites was limited in those early years because the sites could only be accessed from a computer, often the family computer in the living room. Young people couldn’t access social media (and the rest of the internet) from the school bus, during class time, or while hanging out with friends outdoors. Many teens in the early-to-mid-2000s had cellphones, but these were basic phones (many of them flip phones) that had no internet access. Typing on them was difficult––they had only number keys. Basic phones were tools that helped Millennials meet up with one another in person or talk with each other one-on-one. I have seen no evidence to suggest that basic cellphones harmed the mental health of Millennials.

It was not until the introduction of the iPhone (2007), the App Store (2008), and high-speed internet (which reached 50 percent of American homes in 2007)—and the corresponding pivot to mobile made by many providers of social media, video games, and porn—that it became possible for adolescents to spend nearly every waking moment online. The extraordinary synergy among these innovations was what powered the second technological wave. In 2011, only 23 percent of teens had a smartphone. By 2015, that number had risen to 73 percent, and a quarter of teens said they were online “almost constantly.” Their younger siblings in elementary school didn’t usually have their own smartphones, but after its release in 2010, the iPad quickly became a staple of young children’s daily lives. It was in this brief period, from 2010 to 2015, that childhood in America (and many other countries) was rewired into a form that was more sedentary, solitary, virtual, and incompatible with healthy human development.

3. Techno-optimism and the Birth of the Phone-Based Childhood

The phone-based childhood created by that second wave—including not just smartphones themselves, but all manner of internet-connected devices, such as tablets, laptops, video-game consoles, and smartwatches—arrived near the end of a period of enormous optimism about digital technology. The internet came into our lives in the mid-1990s, soon after the fall of the Soviet Union. By the end of that decade, it was widely thought that the web would be an ally of democracy and a slayer of tyrants. When people are connected to each other, and to all the information in the world, how could any dictator keep them down?

In the 2000s, Silicon Valley and its world-changing inventions were a source of pride and excitement in America. Smart and ambitious young people around the world wanted to move to the West Coast to be part of the digital revolution. Tech-company founders such as Steve Jobs and Sergey Brin were lauded as gods, or at least as modern Prometheans, bringing humans godlike powers. The Arab Spring bloomed in 2011 with the help of decentralized social platforms, including Twitter and Facebook. When pundits and entrepreneurs talked about the power of social media to transform society, it didn’t sound like a dark prophecy.

You have to put yourself back in this heady time to understand why adults acquiesced so readily to the rapid transformation of childhood. Many parents had concerns, even then, about what their children were doing online, especially because of the internet’s ability to put children in contact with strangers. But there was also a lot of excitement about the upsides of this new digital world. If computers and the internet were the vanguards of progress, and if young people––widely referred to as “digital natives”––were going to live their lives entwined with these technologies, then why not give them a head start? I remember how exciting it was to see my 2-year-old son master the touch-and-swipe interface of my first iPhone in 2008. I thought I could see his neurons being woven together faster as a result of the stimulation it brought to his brain, compared to the passivity of watching television or the slowness of building a block tower. I thought I could see his future job prospects improving.

Touchscreen devices were also a godsend for harried parents. Many of us discovered that we could have peace at a restaurant, on a long car trip, or at home while making dinner or replying to emails if we just gave our children what they most wanted: our smartphones and tablets. We saw that everyone else was doing it and figured it must be okay.

It was the same for older children, desperate to join their friends on social-media platforms, where the minimum age to open an account was set by law to 13, even though no research had been done to establish the safety of these products for minors. Because the platforms did nothing (and still do nothing) to verify the stated age of new-account applicants, any 10-year-old could open multiple accounts without parental permission or knowledge, and many did. Facebook and later Instagram became places where many sixth and seventh graders were hanging out and socializing. If parents did find out about these accounts, it was too late. Nobody wanted their child to be isolated and alone, so parents rarely forced their children to shut down their accounts.

We had no idea what we were doing.

4. The High Cost of a Phone-Based Childhood

In Walden, his 1854 reflection on simple living, Henry David Thoreau wrote, “The cost of a thing is the amount of … life which is required to be exchanged for it, immediately or in the long run.” It’s an elegant formulation of what economists would later call the opportunity cost of any choice—all of the things you can no longer do with your money and time once you’ve committed them to something else. So it’s important that we grasp just how much of a young person’s day is now taken up by their devices.

The numbers are hard to believe. The most recent Gallup data show that American teens spend about five hours a day just on social-media platforms (including watching videos on TikTok and YouTube). Add in all the other phone- and screen-based activities, and the number rises to somewhere between seven and nine hours a day, on average. The numbers are even higher in single-parent and low-income families, and among Black, Hispanic, and Native American families.

In Thoreau’s terms, how much of life is exchanged for all this screen time? Arguably, most of it. Everything else in an adolescent’s day must get squeezed down or eliminated entirely to make room for the vast amount of content that is consumed, and for the hundreds of “friends,” “followers,” and other network connections that must be serviced with texts, posts, comments, likes, snaps, and direct messages. I recently surveyed my students at NYU, and most of them reported that the very first thing they do when they open their eyes in the morning is check their texts, direct messages, and social-media feeds. It’s also the last thing they do before they close their eyes at night. And it’s a lot of what they do in between.

The amount of time that adolescents spend sleeping declined in the early 2010s, and many studies tie sleep loss directly to the use of devices around bedtime, particularly when they’re used to scroll through social media. Exercise declined, too, which is unfortunate because exercise, like sleep, improves both mental and physical health. Book reading has been declining for decades, pushed aside by digital alternatives, but the decline, like so much else, sped up in the early 2010s. With passive entertainment always available, adolescent minds likely wander less than they used to; contemplation and imagination might be placed on the list of things winnowed down or crowded out.

But perhaps the most devastating cost of the new phone-based childhood was the collapse of time spent interacting with other people face-to-face. A study of how Americans spend their time found that, before 2010, young people (ages 15 to 24) reported spending far more time with their friends (about two hours a day, on average, not counting time together at school) than did older people (who spent just 30 to 60 minutes with friends). Time with friends began decreasing for young people in the 2000s, but the drop accelerated in the 2010s, while it barely changed for older people. By 2019, young people’s time with friends had dropped to just 67 minutes a day. It turns out that Gen Z had been socially distancing for many years and had mostly completed the project by the time COVID-19 struck.

You might question the importance of this decline. After all, isn’t much of this online time spent interacting with friends through texting, social media, and multiplayer video games? Isn’t that just as good?

Some of it surely is, and virtual interactions offer unique benefits too, especially for young people who are geographically or socially isolated. But in general, the virtual world lacks many of the features that make human interactions in the real world nutritious, as we might say, for physical, social, and emotional development. In particular, real-world relationships and social interactions are characterized by four features—typical for hundreds of thousands of years—that online interactions either distort or erase.

First, real-world interactions are embodied, meaning that we use our hands and facial expressions to communicate, and we learn to respond to the body language of others. Virtual interactions, in contrast, mostly rely on language alone. No matter how many emojis are offered as compensation, the elimination of communication channels for which we have eons of evolutionary programming is likely to produce adults who are less comfortable and less skilled at interacting in person.

Second, real-world interactions are synchronous; they happen at the same time. As a result, we learn subtle cues about timing and conversational turn taking. Synchronous interactions make us feel closer to the other person because that’s what getting “in sync” does. Texts, posts, and many other virtual interactions lack synchrony. There is less real laughter, more room for misinterpretation, and more stress after a comment that gets no immediate response.

Third, real-world interactions primarily involve one‐to‐one communication, or sometimes one-to-several. But many virtual communications are broadcast to a potentially huge audience. Online, each person can engage in dozens of asynchronous interactions in parallel, which interferes with the depth achieved in all of them. The sender’s motivations are different, too: With a large audience, one’s reputation is always on the line; an error or poor performance can damage social standing with large numbers of peers. These communications thus tend to be more performative and anxiety-inducing than one-to-one conversations.

Finally, real-world interactions usually take place within communities that have a high bar for entry and exit, so people are strongly motivated to invest in relationships and repair rifts when they happen. But in many virtual networks, people can easily block others or quit when they are displeased. Relationships within such networks are usually more disposable.

These unsatisfying and anxiety-producing features of life online should be recognizable to most adults. Online interactions can bring out antisocial behavior that people would never display in their offline communities. But if life online takes a toll on adults, just imagine what it does to adolescents in the early years of puberty, when their “experience expectant” brains are rewiring based on feedback from their social interactions.

Kids going through puberty online are likely to experience far more social comparison, self-consciousness, public shaming, and chronic anxiety than adolescents in previous generations, which could potentially set developing brains into a habitual state of defensiveness. The brain contains systems that are specialized for approach (when opportunities beckon) and withdrawal (when threats appear or seem likely). People can be in what we might call “discover mode” or “defend mode” at any moment, but generally not both. The two systems together form a mechanism for quickly adapting to changing conditions, like a thermostat that can activate either a heating system or a cooling system as the temperature fluctuates. Some people’s internal thermostats are generally set to discover mode, and they flip into defend mode only when clear threats arise. These people tend to see the world as full of opportunities. They are happier and less anxious. Other people’s internal thermostats are generally set to defend mode, and they flip into discover mode only when they feel unusually safe. They tend to see the world as full of threats and are more prone to anxiety and depressive disorders.

A simple way to understand the differences between Gen Z and previous generations is that people born in and after 1996 have internal thermostats that were shifted toward defend mode. This is why life on college campuses changed so suddenly when Gen Z arrived, beginning around 2014. Students began requesting “safe spaces” and trigger warnings. They were highly sensitive to “microaggressions” and sometimes claimed that words were “violence.” These trends mystified those of us in older generations at the time, but in hindsight, it all makes sense. Gen Z students found words, ideas, and ambiguous social encounters more threatening than had previous generations of students because we had fundamentally altered their psychological development.

5. So Many Harms

The debate around adolescents’ use of smartphones and social media typically revolves around mental health, and understandably so. But the harms that have resulted from transforming childhood so suddenly and heedlessly go far beyond mental health. I’ve touched on some of them: social awkwardness, reduced self-confidence, and a more sedentary childhood. Here are three additional harms.

Fragmented Attention, Disrupted Learning

Staying on task while sitting at a computer is hard enough for an adult with a fully developed prefrontal cortex. It is far more difficult for adolescents in front of their laptop trying to do homework. They are probably less intrinsically motivated to stay on task. They’re certainly less able, given their undeveloped prefrontal cortex, and hence it’s easy for any company with an app to lure them away with an offer of social validation or entertainment. Their phones are pinging constantly—one study found that the typical adolescent now gets 237 notifications a day, roughly 15 every waking hour. Sustained attention is essential for doing almost anything big, creative, or valuable, yet young people find their attention chopped up into little bits by notifications offering the possibility of high-pleasure, low-effort digital experiences.

It even happens in the classroom. Studies confirm that when students have access to their phones during class time, they use them, especially for texting and checking social media, and their grades and learning suffer. This might explain why benchmark test scores began to decline in the U.S. and around the world in the early 2010s—well before the pandemic hit.

Addiction and Social Withdrawal

The neural basis of behavioral addiction to social media or video games is not exactly the same as chemical addiction to cocaine or opioids. Nonetheless, they all involve abnormally heavy and sustained activation of dopamine neurons and reward pathways. Over time, the brain adapts to these high levels of dopamine; when the child is not engaged in digital activity, their brain doesn’t have enough dopamine, and the child experiences withdrawal symptoms. These generally include anxiety, insomnia, and intense irritability. Kids with these kinds of behavioral addictions often become surly and aggressive, and withdraw from their families into their bedrooms and devices.

Social-media and gaming platforms were designed to hook users. How successful are they? How many kids suffer from digital addictions?

The main addiction risks for boys seem to be video games and porn. “Internet gaming disorder,” which was added to the main diagnosis manual of psychiatry in 2013 as a condition for further study, describes “significant impairment or distress” in several aspects of life, along with many hallmarks of addiction, including an inability to reduce usage despite attempts to do so. Estimates for the prevalence of IGD range from 7 to 15 percent among adolescent boys and young men. As for porn, a nationally representative survey of American adults published in 2019 found that 7 percent of American men agreed or strongly agreed with the statement “I am addicted to pornography”—and the rates were higher for the youngest men.

Girls have much lower rates of addiction to video games and porn, but they use social media more intensely than boys do. A study of teens in 29 nations found that between 5 and 15 percent of adolescents engage in what is called “problematic social media use,” which includes symptoms such as preoccupation, withdrawal symptoms, neglect of other areas of life, and lying to parents and friends about time spent on social media. That study did not break down results by gender, but many others have found that rates of “problematic use” are higher for girls.

I don’t want to overstate the risks: Most teens do not become addicted to their phones and video games. But across multiple studies and across genders, rates of problematic use come out in the ballpark of 5 to 15 percent. Is there any other consumer product that parents would let their children use relatively freely if they knew that something like one in 10 kids would end up with a pattern of habitual and compulsive use that disrupted various domains of life and looked a lot like an addiction?

The Decay of Wisdom and the Loss of Meaning

During that crucial sensitive period for cultural learning, from roughly ages 9 through 15, we should be especially thoughtful about who is socializing our children for adulthood. Instead, that’s when most kids get their first smartphone and sign themselves up (with or without parental permission) to consume rivers of content from random strangers. Much of that content is produced by other adolescents, in blocks of a few minutes or a few seconds.

This rerouting of enculturating content has created a generation that is largely cut off from older generations and, to some extent, from the accumulated wisdom of humankind, including knowledge about how to live a flourishing life. Adolescents spend less time steeped in their local or national culture. They are coming of age in a confusing, placeless, ahistorical maelstrom of 30-second stories curated by algorithms designed to mesmerize them. Without solid knowledge of the past and the filtering of good ideas from bad––a process that plays out over many generations––young people will be more prone to believe whatever terrible ideas become popular around them, which might explain why videos showing young people reacting positively to Osama bin Laden’s thoughts about America were trending on TikTok last fall.

All this is made worse by the fact that so much of digital public life is an unending supply of micro dramas about somebody somewhere in our country of 340 million people who did something that can fuel an outrage cycle, only to be pushed aside by the next. It doesn’t add up to anything and leaves behind only a distorted sense of human nature and affairs.

When our public life becomes fragmented, ephemeral, and incomprehensible, it is a recipe for anomie, or normlessness. The great French sociologist Émile Durkheim showed long ago that a society that fails to bind its people together with some shared sense of sacredness and common respect for rules and norms is not a society of great individual freedom; it is, rather, a place where disoriented individuals have difficulty setting goals and exerting themselves to achieve them. Durkheim argued that anomie was a major driver of suicide rates in European countries. Modern scholars continue to draw on his work to understand suicide rates today.

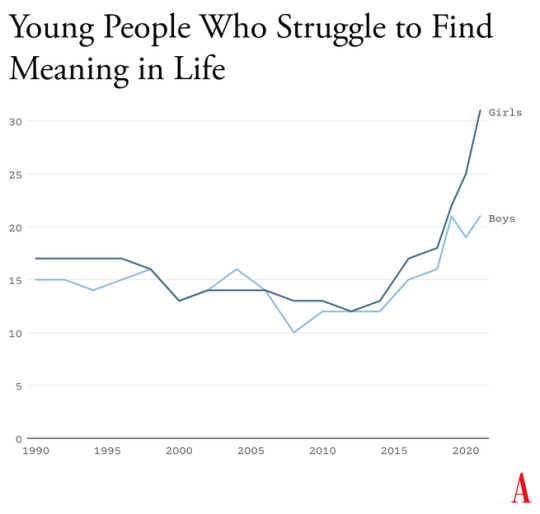

Durkheim’s observations are crucial for understanding what happened in the early 2010s. A long-running survey of American teens found that, from 1990 to 2010, high-school seniors became slightly less likely to agree with statements such as “Life often feels meaningless.” But as soon as they adopted a phone-based life and many began to live in the whirlpool of social media, where no stability can be found, every measure of despair increased. From 2010 to 2019, the number who agreed that their lives felt “meaningless” increased by about 70 percent, to more than one in five.

6. Young People Don’t Like Their Phone-Based Lives

How can I be confident that the epidemic of adolescent mental illness was kicked off by the arrival of the phone-based childhood? Skeptics point to other events as possible culprits, including the 2008 global financial crisis, global warming, the 2012 Sandy Hook school shooting and the subsequent active-shooter drills, rising academic pressures, and the opioid epidemic. But while these events might have been contributing factors in some countries, none can explain both the timing and international scope of the disaster.

An additional source of evidence comes from Gen Z itself. With all the talk of regulating social media, raising age limits, and getting phones out of schools, you might expect to find many members of Gen Z writing and speaking out in opposition. I’ve looked for such arguments and found hardly any. In contrast, many young adults tell stories of devastation.

Freya India, a 24-year-old British essayist who writes about girls, explains how social-media sites carry girls off to unhealthy places: “It seems like your child is simply watching some makeup tutorials, following some mental health influencers, or experimenting with their identity. But let me tell you: they are on a conveyor belt to someplace bad. Whatever insecurity or vulnerability they are struggling with, they will be pushed further and further into it.” She continues:

Gen Z were the guinea pigs in this uncontrolled global social experiment. We were the first to have our vulnerabilities and insecurities fed into a machine that magnified and refracted them back at us, all the time, before we had any sense of who we were. We didn’t just grow up with algorithms. They raised us. They rearranged our faces. Shaped our identities. Convinced us we were sick.

Rikki Schlott, a 23-year-old American journalist and co-author of The Canceling of the American Mind, writes,

"The day-to-day life of a typical teen or tween today would be unrecognizable to someone who came of age before the smartphone arrived. Zoomers are spending an average of 9 hours daily in this screen-time doom loop—desperate to forget the gaping holes they’re bleeding out of, even if just for … 9 hours a day. Uncomfortable silence could be time to ponder why they’re so miserable in the first place. Drowning it out with algorithmic white noise is far easier."

A 27-year-old man who spent his adolescent years addicted (his word) to video games and pornography sent me this reflection on what that did to him:

I missed out on a lot of stuff in life—a lot of socialization. I feel the effects now: meeting new people, talking to people. I feel that my interactions are not as smooth and fluid as I want. My knowledge of the world (geography, politics, etc.) is lacking. I didn’t spend time having conversations or learning about sports. I often feel like a hollow operating system.

Or consider what Facebook found in a research project involving focus groups of young people, revealed in 2021 by the whistleblower Frances Haugen: “Teens blame Instagram for increases in the rates of anxiety and depression among teens,” an internal document said. “This reaction was unprompted and consistent across all groups.”

7. Collective-Action Problems

Social-media companies such as Meta, TikTok, and Snap are often compared to tobacco companies, but that’s not really fair to the tobacco industry. It’s true that companies in both industries marketed harmful products to children and tweaked their products for maximum customer retention (that is, addiction), but there’s a big difference: Teens could and did choose, in large numbers, not to smoke. Even at the peak of teen cigarette use, in 1997, nearly two-thirds of high-school students did not smoke.

Social media, in contrast, applies a lot more pressure on nonusers, at a much younger age and in a more insidious way. Once a few students in any middle school lie about their age and open accounts at age 11 or 12, they start posting photos and comments about themselves and other students. Drama ensues. The pressure on everyone else to join becomes intense. Even a girl who knows, consciously, that Instagram can foster beauty obsession, anxiety, and eating disorders might sooner take those risks than accept the seeming certainty of being out of the loop, clueless, and excluded. And indeed, if she resists while most of her classmates do not, she might, in fact, be marginalized, which puts her at risk for anxiety and depression, though via a different pathway than the one taken by those who use social media heavily. In this way, social media accomplishes a remarkable feat: It even harms adolescents who do not use it.

A recent study led by the University of Chicago economist Leonardo Bursztyn captured the dynamics of the social-media trap precisely. The researchers recruited more than 1,000 college students and asked them how much they’d need to be paid to deactivate their accounts on either Instagram or TikTok for four weeks. That’s a standard economist’s question to try to compute the net value of a product to society. On average, students said they’d need to be paid roughly $50 ($59 for TikTok, $47 for Instagram) to deactivate whichever platform they were asked about. Then the experimenters told the students that they were going to try to get most of the others in their school to deactivate that same platform, offering to pay them to do so as well, and asked, Now how much would you have to be paid to deactivate, if most others did so? The answer, on average, was less than zero. In each case, most students were willing to pay to have that happen.

Social media is all about network effects. Most students are only on it because everyone else is too. Most of them would prefer that nobody be on these platforms. Later in the study, students were asked directly, “Would you prefer to live in a world without Instagram [or TikTok]?” A majority of students said yes––58 percent for each app.

This is the textbook definition of what social scientists call a collective-action problem. It’s what happens when a group would be better off if everyone in the group took a particular action, but each actor is deterred from acting, because unless the others do the same, the personal cost outweighs the benefit. Fishermen considering limiting their catch to avoid wiping out the local fish population are caught in this same kind of trap. If no one else does it too, they just lose profit.

Cigarettes trapped individual smokers with a biological addiction. Social media has trapped an entire generation in a collective-action problem. Early app developers deliberately and knowingly exploited the psychological weaknesses and insecurities of young people to pressure them to consume a product that, upon reflection, many wish they could use less, or not at all.

8. Four Norms to Break Four Traps

Young people and their parents are stuck in at least four collective-action traps. Each is hard to escape for an individual family, but escape becomes much easier if families, schools, and communities coordinate and act together. Here are four norms that would roll back the phone-based childhood. I believe that any community that adopts all four will see substantial improvements in youth mental health within two years.

No smartphones before high school

The trap here is that each child thinks they need a smartphone because “everyone else” has one, and many parents give in because they don’t want their child to feel excluded. But if no one else had a smartphone—or even if, say, only half of the child’s sixth-grade class had one—parents would feel more comfortable providing a basic flip phone (or no phone at all). Delaying round-the-clock internet access until ninth grade (around age 14) as a national or community norm would help to protect adolescents during the very vulnerable first few years of puberty. According to a 2022 British study, these are the years when social-media use is most correlated with poor mental health. Family policies about tablets, laptops, and video-game consoles should be aligned with smartphone restrictions to prevent overuse of other screen activities.

No social media before 16

The trap here, as with smartphones, is that each adolescent feels a strong need to open accounts on TikTok, Instagram, Snapchat, and other platforms primarily because that’s where most of their peers are posting and gossiping. But if the majority of adolescents were not on these accounts until they were 16, families and adolescents could more easily resist the pressure to sign up. The delay would not mean that kids younger than 16 could never watch videos on TikTok or YouTube—only that they could not open accounts, give away their data, post their own content, and let algorithms get to know them and their preferences.

Phone‐free schools

Most schools claim that they ban phones, but this usually just means that students aren’t supposed to take their phone out of their pocket during class. Research shows that most students do use their phones during class time. They also use them during lunchtime, free periods, and breaks between classes––times when students could and should be interacting with their classmates face-to-face. The only way to get students’ minds off their phones during the school day is to require all students to put their phones (and other devices that can send or receive texts) into a phone locker or locked pouch at the start of the day. Schools that have gone phone-free always seem to report that it has improved the culture, making students more attentive in class and more interactive with one another. Published studies back them up.

More independence, free play, and responsibility in the real world

Many parents are afraid to give their children the level of independence and responsibility they themselves enjoyed when they were young, even though rates of homicide, drunk driving, and other physical threats to children are way down in recent decades. Part of the fear comes from the fact that parents look at each other to determine what is normal and therefore safe, and they see few examples of families acting as if a 9-year-old can be trusted to walk to a store without a chaperone. But if many parents started sending their children out to play or run errands, then the norms of what is safe and accepted would change quickly. So would ideas about what constitutes “good parenting.” And if more parents trusted their children with more responsibility––for example, by asking their kids to do more to help out, or to care for others––then the pervasive sense of uselessness now found in surveys of high-school students might begin to dissipate.

It would be a mistake to overlook this fourth norm. If parents don’t replace screen time with real-world experiences involving friends and independent activity, then banning devices will feel like deprivation, not the opening up of a world of opportunities.

The main reason the phone-based childhood is so harmful is that it pushes aside everything else. Smartphones are experience blockers. Our ultimate goal should not be to remove screens entirely, nor should it be to return childhood to exactly the way it was in 1960. Rather, it should be to create a version of childhood and adolescence that keeps young people anchored in the real world while flourishing in the digital age.

9. What Are We Waiting For?

An essential function of government is to solve collective-action problems. Congress could solve or help solve the ones I’ve highlighted—for instance, by raising the age of “internet adulthood” to 16 and requiring tech companies to keep underage children off their sites.

In recent decades, however, Congress has not been good at addressing public concerns when the solutions would displease a powerful and deep-pocketed industry. Governors and state legislators have been much more effective, and their successes might let us evaluate how well various reforms work. But the bottom line is that to change norms, we’re going to need to do most of the work ourselves, in neighborhood groups, schools, and other communities.

There are now hundreds of organizations––most of them started by mothers who saw what smartphones had done to their children––that are working to roll back the phone-based childhood or promote a more independent, real-world childhood. (I have assembled a list of many of them.) One that I co-founded, at LetGrow.org, suggests a variety of simple programs for parents or schools, such as play club (schools keep the playground open at least one day a week before or after school, and kids sign up for phone-free, mixed-age, unstructured play as a regular weekly activity) and the Let Grow Experience (a series of homework assignments in which students––with their parents’ consent––choose something to do on their own that they’ve never done before, such as walk the dog, climb a tree, walk to a store, or cook dinner).

Parents are fed up with what childhood has become. Many are tired of having daily arguments about technologies that were designed to grab hold of their children’s attention and not let go. But the phone-based childhood is not inevitable.

The four norms I have proposed cost almost nothing to implement, they cause no clear harm to anyone, and while they could be supported by new legislation, they can be instilled even without it. We can begin implementing all of them right away, this year, especially in communities with good cooperation between schools and parents. A single memo from a principal asking parents to delay smartphones and social media, in support of the school’s effort to improve mental health by going phone free, would catalyze collective action and reset the community’s norms.

We didn’t know what we were doing in the early 2010s. Now we do. It’s time to end the phone-based childhood.

This article is adapted from Jonathan Haidt’s forthcoming book, The Anxious Generation: How the Great Rewiring of Childhood Is Causing an Epidemic of Mental Illness.

216 notes

Note

the thing is though that these checklists don’t mean if you have BPD it is not allowed that you have nightmares / if you have CPTSD you are legally obligated to never experience impulsiveness etc etc; it’s not just “making stuff up” — though ig in the strictest sense yeah, first you make stuff up but then you test it and see if your hypotheses align with the population. Basically chances are if you meet 8/9 BPD criteria and some for CPTSD but not enough to meet the diagnostic standard (which afaik isn’t recognized just yet but i think they’re trying to get it recognized in the diagnostic manuals but correct me if i’m wrong) then it’s pretty likely you’re going to respond better to BPD treatment and ALSO if your practitioner completely ignores one diagnosis in favor of the other they’re probably not that good at their job. Psychology doesn’t speak in “rules” and absolutes, it speaks in trends and likelihoods and everyone trying to sell you a 100% true and immovable psychology fact is a sham

as someone who unfortunately has a degree in psychology (and whose undergrad began right as the infamous replication crisis became more widely acknowledged in the field), yes, historically a lot of this field is bias and hegemony imbued with some metric. when homosexuality was still classified as a mental disorder, the conversion therapy program by masters and johnson (who were like, some of the earliest pioneers of research into human sexual responses lmao) would often boast high success rates due to participants merely adopting signifiers of heterosexuality. the modern day pop psychology movement (and its subfields, new ageism, self help books, uhhh Market Christianity) also cannot be disentangled from academic psychology, which further bends the way in which people understand and interact with psychological phenomena. this of course does not mean that all data is junk data, or that methods of measurement are without some rigor, or that therapy is completely useless, but it's just patently incorrect to insist that this field is even predominantly an apolitical force attempting to further our understanding of human beings. it's bizarre that you acknowledge that credentialized individuals in the field can be flawed while also being uncritical of psychological categorization for mental illness.

It's not that I don't get what you're saying, but it's not reflective of reality. yes, I know that practitioners are supposed to help you feel out your symptoms and see what treatment works for you, but that isn't just what they're doing (assuming it's even being done with care and competence). it's inaccurate to insist that psychology doesn't speak in absolutes- I know that we are taught not to do this, but for any social science related field this is the equivalent of going "stop hitting yourself". in any practical real-world setting where accredited institutional psychology is present, there are rules. in a clinical setting, there are rules, and you can be inpatiented against your will for breaking those rules (or recently here in canada, randomly stripped of your driver's license). in neuromarketing (<- yes this is a real discipline.), which is intensively oriented towards results due to the profit incentive, there are rules. the conditions of release for many offenders necessitate staying on court-mandated medication or participating in specific programs. when H.B. Phrenology from The Heritage Foundation wheels out his thousandth manicured study on crime and race (and when a different journal publishes a study indirectly debunking it), that is him tacitly acknowledging that there are rules.

anyway did I ever tell you guys that in my first year at University of Toronto (UTSC campus baybee) they brought in a guest speaker to my abnormal psych course who gave us a lengthy talk on how autogynephilia theory is objectively true. this was like 2013ish maybe 2014 btw.

47 notes

Text

Autumn 2013 Mixtape.

Evian Christ “Salt Carousel”

Death Grips Government Plates

Nine Inch Nails “All Time Low”

Chvrches “Lies”

M.I.A. “Only 1 U”

Phil Western “We Have Come To Bless This House”

Oneohtrix Point Never “Problem Areas”

Four Tet “Jupiters”

Wild Nothing “Data World”

Boards Of Canada “Nothing Is Real”

Chvrches “Now Is Not The Time”

Whitehouse “Guru”

Silent Servant “Utopian Disaster (End)”

Akitsa “Redemption”

Ron Morelli “Radar Version”

Pale Sketcher “Show Me How”

Alberich “Open Warfare”

Akitsa “Prophetie Heretique”

Bathory “Reaper”

Tech Level 2 “Hard Times”

Ostara “Darkness Over Eden”

Elliott Smith “Twilight”

Public Image Ltd. “Fodderstompf”

Gleitzeit “Ich Komme Aus Der DDR”

Marine Girls “In Love / Honey”

Public Image Ltd. “Theme”

Crash Course In Science “Cardboard Lamb”

My Bloody Valentine “Only Shallow”

Jonwayne “404 Garbage”

Peanut Butter Wolf “Dopestyle”

Nancy Wilson “I’m In Love”

AIDS Wolf “Cities Of Glass”

AIDS Wolf “Like PSHTS Of Aerosol”

#omega#music#playlists#mixtapes#personal#My Bloody Valentine#Public Image Ltd.#Elliott Smith#Bathory#Akitsa#Alberich#Ron Morelli#Silent Servant#Boards Of Canada#Whitehouse#Chvrches#Death Grips#Nine Inch Nails#Wild Nothing#Oneohtrix Point Never#Evian Christ#Phil Western#M.I.A.#Marine Girls

5 notes

Text

Blog 2: Ideal Role

In my ideal role as an environmental interpreter, I envision working at the intersection of climate change awareness and nature conservation. This role would be rooted in both education and action, where I could engage diverse audiences to help them understand the impact of climate change on natural ecosystems and empower them to take part in solutions. I see myself working in a national park, conservation area, or community-based organization that focuses on environmental stewardship.

One of the main aspects of this role would be to create experiences that connect people with the natural world in a meaningful way. I would aim to communicate the urgency of climate action, but in a way that fosters hope rather than fear. This is where interpretive skills come into play: being able to engage my audience emotionally and intellectually by weaving together facts and stories that resonate with them. For example, while leading a hike, I could discuss how rising temperatures are affecting plant and animal species in the area, highlighting real-life examples of resilience and adaptation. By sharing stories of ecosystems adapting to climate change, I would aim to foster a deeper connection between visitors and the environment, motivating them to protect it.

A key concept from the course that I would apply in this role is the importance of understanding different learning styles. As discussed in the lesson, learners can be auditory, visual, or tactile/kinaesthetic, and it’s crucial to adapt my interpretive methods accordingly. Some visitors may prefer hands-on activities, such as planting trees or participating in citizen science projects, where they can actively contribute to conservation efforts. Others may benefit from visual aids, like charts or infographics, that explain complex climate data in an easy-to-understand format. Meanwhile, auditory learners might be engaged through storytelling or podcasts, a format that allows for deeper reflection on environmental issues. My goal would be to cater to all these learning styles to ensure that my message reaches as many people as possible.

Moreover, the role would require strong communication and storytelling skills. As an interpreter, I would need to be able to clearly explain scientific concepts while making them accessible to a general audience. This involves more than just presenting facts; it’s about creating a narrative that connects people to the environment in a personal way. For instance, I could draw on my own experiences growing up by the Mediterranean Sea and later in Canada, sharing how nature has shaped my life and why its protection matters to me. Personal stories often resonate with audiences, helping them see the human side of environmental issues. I would also need to be adaptable, tailoring my approach to different audiences, whether children on a field trip or adults in a workshop, to keep them engaged and ensure they have a meaningful experience.

Ultimately, my ideal role as an environmental interpreter would combine my passion for nature and climate change action with a deep commitment to educating and inspiring others. By creating experiences that are both informative and emotionally resonant, I hope to help foster a generation of environmental stewards who will take action to protect the planet!

2 notes

Text

Unit 5 Blog Post: Citizen Science and Conservation Practices

Happy Thanksgiving, everyone!

Given that this week’s blog prompt is open, I wanted to share some thoughts inspired by our course content so far.

While watching Washington Wachira’s TED Talk "For the Love of Birds," I began reflecting on the role of citizen science. Apps like iNaturalist, which is widely used in Guelph, offer a powerful tool to connect people with nature by allowing users to log observations. However, they also inadvertently filter participation.

For instance, I’ve spoken with older individuals who possess immense knowledge of local flora and fauna but do not engage with these apps. Their insights are invaluable, yet their observations remain undocumented in digital platforms. This raises a concern: Are we excluding certain demographics from contributing to citizen science simply because of a technological barrier?

This issue highlights the need to design more inclusive citizen science initiatives. If older generations or non-tech-savvy individuals struggle to access these platforms, we may miss crucial knowledge. Digital platforms should be complemented with physical or analog extensions—perhaps logbooks or community-led observation notebooks that can be collected and digitized by volunteers.

During my time in Kenya, I witnessed how citizens live in harmony with their natural environment. Kenya’s incredible biodiversity, which spans savannahs, tropical forests, deserts, and highlands, surpasses that of Canada. Yet, despite this richness, much of the local knowledge remains undocumented in apps or digital tools. Conservation in Kenya requires more than just technological solutions; it depends on community engagement and biocultural conservation. One of my professors, Carol Muriuki, a conservationist with the National Environment Management Authority (NEMA), shared insights that transformed my understanding of conservation. She emphasized that conservation cannot follow a “one-size-fits-all” approach. Community stewardship and biocultural conservation are crucial for designing conservation initiatives that have a lasting positive impact. Instead of crafting a conservation plan that looks good on paper but is not feasible in the real world, each initiative must account for ecological, economic, and cultural realities.

A compelling example is the Lake Naivasha region, where rising water levels—likely caused by climate change—are displacing communities that rely on the lake for food and income. As Carol explained, simply forcing people to relocate isn’t a viable solution. Instead, NEMA is working on a more holistic approach, such as restructuring hydrological infrastructure, planting mangrove trees, and compensating displaced families. This approach integrates the needs of both people and the environment, exemplifying how inclusive conservation practices can lead to sustainable outcomes.

Figure 1. Blurry view of Lake Naivasha from the campground in Kenya (Griffiths, 2024)

I see Carol’s work as a model for future conservation efforts, where citizen science plays a central role in shaping projects rather than just being a tool for data collection. For citizen science to be effective, it must evolve beyond passive contributions. It should foster continuous dialogue between scientists and the public, ensuring citizens actively participate in research and conservation initiatives. This approach could help address the issue of bias in scientific sampling. Scientists often focus on charismatic species, those that are easy to observe or already have a wealth of knowledge available from past studies, as opposed to cryptic or under-studied species. In contrast, citizen observations tend to be more exploratory, as participants are not constrained by preconceptions about which species are significant, enriching scientific understanding in unexpected ways.

One of the biggest takeaways from this course is the realization that academic science offers only a narrow lens through which to engage with nature. As students, it’s easy to become trapped within the confines of scientific rigor and overlook the many other ways people connect with the natural world. Yet, through this course, I’ve learned that storytelling, art, and lived experiences are equally powerful tools for interpreting the environment. For example, conservation is as much about understanding community needs as it is about protecting ecosystems. Similarly, citizen science is not just about data—it’s about fostering a deeper relationship between people and nature.

Ultimately, effective conservation requires both emotional and intellectual engagement. Successful initiatives depend on integrating scientific knowledge with community stewardship. Similarly, citizen science can only reach its full potential when it invites participation from all walks of life—from scientists, to tech-savvy citizens, and those more comfortable with traditional forms of engagement. As I reflect on what we’ve covered so far, I believe we are just beginning to scratch the surface of how we can engage an audience with nature. The challenge lies in finding new ways to connect with both people and the environment—whether through technology, community dialogue, or personal storytelling.

2 notes

Text

Data Visualization: Striking the Right Balance between Accuracy and Impact

In today’s data-oriented world, data visualization plays a significant role as a tool for conveying important information, helping organizations communicate effectively. However, this ability carries a substantial responsibility: the commitment to ensure that data visualizations not only impart knowledge but also uphold ethical standards.

#data visualization ethics#learn data science online#data science course in canada#data science courses#edmonton

0 notes

Text

Data science is a field of study that works with enormous amounts of data, using contemporary technologies and methodologies to uncover hidden patterns, obtain valuable information, and make business decisions. Datamites provides data science courses in Canada, along with courses in artificial intelligence, Python, data analytics, machine learning, and more.

0 notes

Text

How AI is improving simulations with smarter sampling techniques

New Post has been published on https://thedigitalinsider.com/how-ai-is-improving-simulations-with-smarter-sampling-techniques/

Imagine you’re tasked with sending a team of football players onto a field to assess the condition of the grass (a likely task for them, of course). If you pick their positions randomly, they might cluster together in some areas while completely neglecting others. But if you give them a strategy, like spreading out uniformly across the field, you might get a far more accurate picture of the grass condition.

Now, imagine needing to spread out not just in two dimensions, but across tens or even hundreds. That’s the challenge MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) researchers are getting ahead of. They’ve developed an AI-driven approach to “low-discrepancy sampling,” a method that improves simulation accuracy by distributing data points more uniformly across space.

A key novelty lies in using graph neural networks (GNNs), which allow points to “communicate” and self-optimize for better uniformity. Their approach marks a pivotal enhancement for simulations in fields like robotics, finance, and computational science, particularly in handling complex, multidimensional problems critical for accurate simulations and numerical computations.

“In many problems, the more uniformly you can spread out points, the more accurately you can simulate complex systems,” says T. Konstantin Rusch, lead author of the new paper and MIT CSAIL postdoc. “We’ve developed a method called Message-Passing Monte Carlo (MPMC) to generate uniformly spaced points, using geometric deep learning techniques. This further allows us to generate points that emphasize dimensions which are particularly important for a problem at hand, a property that is highly important in many applications. The model’s underlying graph neural networks lets the points ‘talk’ with each other, achieving far better uniformity than previous methods.”

Their work was published in the September issue of the Proceedings of the National Academy of Sciences.

Take me to Monte Carlo

The idea of Monte Carlo methods is to learn about a system by simulating it with random sampling. Sampling is the selection of a subset of a population to estimate characteristics of the whole population. Historically, it was already used in the 18th century, when mathematician Pierre-Simon Laplace employed it to estimate the population of France without having to count each individual.
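To make the idea concrete, here is a minimal sketch of a Monte Carlo estimate in Python. It is a textbook illustration rather than anything taken from the MPMC paper: the function name `estimate_pi` and the choice of estimating pi are assumptions made purely for the example.

```python
import random


def estimate_pi(num_samples: int, seed: int = 0) -> float:
    """Estimate pi by sampling uniform random points in the unit square.

    Illustrative only: the function name and the pi example are
    assumptions for this sketch, not part of the MPMC work.
    """
    rng = random.Random(seed)  # fixed seed so repeated runs agree
    inside = 0
    for _ in range(num_samples):
        x, y = rng.random(), rng.random()
        # Count the points that land inside the quarter circle of radius 1.
        if x * x + y * y <= 1.0:
            inside += 1
    # The quarter circle covers pi/4 of the unit square.
    return 4.0 * inside / num_samples


if __name__ == "__main__":
    for n in (100, 10_000, 1_000_000):
        print(n, estimate_pi(n))
```

The estimate tightens only slowly as the sample count grows, which is the motivation for replacing purely random points with more uniform ones.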

Low-discrepancy sequences, i.e., sequences with high uniformity such as Sobol’, Halton, and Niederreiter, have long been the gold standard for quasi-random sampling, which replaces random sampling with low-discrepancy sampling. They are widely used in fields like computer graphics and computational finance, for everything from pricing options to risk assessment, where uniformly filling spaces with points can lead to more accurate results.
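As a rough illustration of what such a sequence looks like, the sketch below generates Halton points using the standard radical-inverse construction. The helper names `radical_inverse` and `halton` and the default bases are choices made for this example, and this is the classical construction, not the GNN-based method described in the article.

```python
def radical_inverse(index: int, base: int) -> float:
    """Mirror the base-`base` digits of `index` about the radix point."""
    result, fraction = 0.0, 1.0 / base
    while index > 0:
        index, digit = divmod(index, base)
        result += digit * fraction
        fraction /= base
    return result


def halton(num_points: int, bases=(2, 3)) -> list:
    """Return the first `num_points` Halton points, one coordinate per base.

    The bases should be pairwise coprime (typically the first primes).
    """
    # Start at index 1 so the all-zero point is skipped.
    return [
        tuple(radical_inverse(i, b) for b in bases)
        for i in range(1, num_points + 1)
    ]


if __name__ == "__main__":
    for point in halton(8):
        print(point)
```

Each new point falls into a gap left by the earlier ones, so the points fill the square evenly without the clusters that random sampling tends to produce.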

The MPMC framework suggested by the team transforms random samples into points with high uniformity. This is done by processing the random samples with a GNN that minimizes a specific discrepancy measure.

One big challenge of using AI for generating highly uniform points is that the usual way to measure point uniformity is very slow to compute and hard to work with. To solve this, the team switched to a quicker and more flexible uniformity measure called L2-discrepancy. For high-dimensional problems, where this method isn’t enough on its own, they use a novel technique that focuses on important lower-dimensional projections of the points. This way, they can create point sets that are better suited for specific applications.
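The article does not spell out the formula, so the following sketch assumes the measure is the L2 star discrepancy, computed with Warnock's closed-form expression. The function name `l2_star_discrepancy` is hypothetical, and the code only evaluates the measure for a given point set; it does not reproduce the team's GNN training.

```python
from math import prod


def l2_star_discrepancy(points) -> float:
    """L2 star discrepancy of points in [0, 1]^d via Warnock's formula.

    Assumption: the article's "L2-discrepancy" is taken to be this
    star variant; the source does not state the exact definition.
    """
    n = len(points)
    d = len(points[0])
    # Integral of the squared volume of an anchored box.
    volume_term = 3.0 ** (-d)
    # Cross term between the point counts and the box volumes.
    cross_term = (2.0 ** (1 - d) / n) * sum(
        prod(1.0 - x * x for x in p) for p in points
    )
    # Pairwise term: O(n^2 * d) work, and every step is a simple
    # product or max, which keeps it cheap next to the worst-case
    # (L-infinity) star discrepancy.
    pair_term = sum(
        prod(1.0 - max(a, b) for a, b in zip(p, q))
        for p in points
        for q in points
    ) / (n * n)
    # Guard against tiny negative values caused by rounding.
    return max(volume_term - cross_term + pair_term, 0.0) ** 0.5


if __name__ == "__main__":
    spread = [(0.125,), (0.375,), (0.625,), (0.875,)]
    clustered = [(0.1,), (0.1,), (0.1,), (0.1,)]
    print(l2_star_discrepancy(spread), l2_star_discrepancy(clustered))
```

A lower value means the points cover the unit cube more evenly. Because the expression is a smooth combination of sums and products almost everywhere, it is the kind of quantity a neural network can be trained to minimize.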

The implications extend far beyond academia, the team says. In computational finance, for example, simulations rely heavily on the quality of the sampling points. “With these types of methods, random points are often inefficient, but our GNN-generated low-discrepancy points lead to higher precision,” says Rusch. “For instance, we considered a classical problem from computational finance in 32 dimensions, where our MPMC points beat previous state-of-the-art quasi-random sampling methods by a factor of four to 24.”

Robots in Monte Carlo

In robotics, path and motion planning often rely on sampling-based algorithms, which guide robots through real-time decision-making processes. The improved uniformity of MPMC could lead to more efficient robotic navigation and real-time adaptations for things like autonomous driving or drone technology. “In fact, in a recent preprint, we demonstrated that our MPMC points achieve a fourfold improvement over previous low-discrepancy methods when applied to real-world robotics motion planning problems,” says Rusch.

“Traditional low-discrepancy sequences were a major advancement in their time, but the world has become more complex, and the problems we’re solving now often exist in 10, 20, or even 100-dimensional spaces,” says Daniela Rus, CSAIL director and MIT professor of electrical engineering and computer science. “We needed something smarter, something that adapts as the dimensionality grows. GNNs are a paradigm shift in how we generate low-discrepancy point sets. Unlike traditional methods, where points are generated independently, GNNs allow points to ‘chat’ with one another so the network learns to place points in a way that reduces clustering and gaps — common issues with typical approaches.”

Going forward, the team plans to make MPMC points even more accessible to everyone, addressing the current limitation of training a new GNN for every fixed number of points and dimensions.