#computering consulting

Text

Finally finished putting on all my laptop stickers!! Special shout-out to @colliholly and @dookins for AMAZING sticker designs :]

#Clay Posts#laptop stickers#robot stickers#p03#shockwave#momo stray#tfa shockwave#tfp shockwave#g1 shockwave#ultrakill v1#mettaton#portal wheatley#pacesetter#Bastion Overwatch#edgar electric dreams#ramattra#Multislacker#chainsaw consultant#duck Shuffler#windows 98 computer#was waiting for that h03 for p03 sticker to get here just so I could make sure it fit ✨

221 notes

Text

day 3.1: forgive me for the harm i have caused this world. none may atone for my actions but me, and only in me shall their stain live on. i am thankful to have been caught, my fall cut short by wizened hands. all i can be is sorry, and that is all i am.

#chip revvington#chainsaw consultant#i lied actually. i got freed from computer lab hell#had to make it fast because ummmm i still have more work to do! <3#anyways i had a discussion with my friends about chip helly earlier. yeah. thinking about woes hollow within this au#i want to redraw my severance poster with chip mark bc i feel like my art has improved in just a couple months#sorry for all the yapping. ive been going stir crazy in the lab thinking about this#i guess in this context he's craig's son. as if he didnt already have so many issues and problems.#severance spoilers#just in case. its incredibly vague#all i can think of recently is severance and toontown.

28 notes

Text

#Finance#Business#Work Meme#Work Humor#Excel#Hilarious#funny meme#funny#accounting#office humor#consulting#dark humor#coding#codeblr#cs#computer science#software engineering

151 notes

Text

Every time I read about smart fridges I'm like, Dune had a point with the Butlerian Jihad

57 notes

Text

Empower Your Digital Presence with Cutting-Edge Frameworks

In today’s fast-evolving digital landscape, staying ahead requires more than just a functional website or application—it demands innovation and efficiency. At Atcuality, we specialize in Website and Application Framework Upgrade solutions tailored to your business goals. Whether you're looking to optimize performance, enhance user experience, or integrate the latest technologies, our team ensures seamless upgrades that align with industry standards. Transitioning to advanced frameworks not only improves loading speeds and scalability but also strengthens your cybersecurity measures. With Atcuality, you gain access to bespoke services that future-proof your digital assets. Let us elevate your online platforms to a new realm of excellence.

#ai applications#artificial intelligence#ai services#website development#website developer near me#website developers#website developer in india#web development#web design#application development#app development#app developers#digital marketing#seo services#seo#emailmarketing#search engine marketing#search engine optimization#digital consulting#virtual reality#vr games#vr development#augmented reality#augmented and virtual reality market#cash collection application#task management#blockchain#metaverse#cloud computing#information technology

8 notes

Text

UK 1982

4 notes

Text

man my tolerance for annoying people is just . Nonexistent huh

#crow.txt#like sorry ma'am i dont actually want to be having a 10 min convo about printers with you omg. i got laptops to look at!#glad ive told my boss i straight up cant do consultations to teach people to use their computer#bc i think i would develop blood pressure/heart problems bc it would piss me off so badly#like ill fix your shit no problem. if you dont know how to use it that aint my fuckin problem!#i simply. do not have the patience to teach an old person what an email client is. i would end up on the news. holy shit#we did a like. solicitation of opinion type deal. to see what customers would like to see from us service wise. a lot of it was nonsense#but people were fuckin. saying shit. asking How to use AI. ....IF YOU NEED COACHING IN THAT YOU ALREADY DONT NEED TO USE IT?#'how to use home automation devices' IF YOU DONT EVEN KNOW HOW TO SET IT UP OR USE IT..... WHY DO YOU WANT IT...?#IT BAFFLES ME WHEN PEOPLE WANT THINGS AND CANT BE ASSED TO UNDERSTAND HOW THEY WORK?#WHAT HAPPENS WHEN YOU NEED TO MOVE AND SET IT UP AGAIN?? OR RESET IT. ITS KINDA IDIOTPROOF FOR THAT REASON#YOU PEOPLE MAKE ME FUCKING INSANE HOW DO YOU DO ANYTHINGGGG 😭😭

3 notes

Text

i wonder if it can be managed that i no longer live in that city but i do a research project during the fall semester

#like i could definitely attend consultations by just#traveling there and leaving the same day. would be a wee bit tiring but why the fuck not#but then the problem. is it's experiments#and for many of those you need to like recruit volunteers who will go to the computer room and do some kind of test there#and, the students do that, they conduct that. so i wouldn't be able to help out with that#but not all “experiments” are like that (it's usually the cognitive stuff. that i loathe btw). some are just questionnaires with priming or#with some kind of additional content#so. if i fail this course for the second time (feels inevitable; this paper is too complicated it makes me properly sick) then maybe i can#try a third time. from far away. while working and not having any other obligations#kata.txt

5 notes

Text

Leading Microsoft Solutions Partner in Gurgaon | IFI Techsolutions

IFI Techsolutions is a Microsoft Solutions Partner in Gurgaon. Our implementation expertise covers automation, migration, security, optimization, and managed cloud.

#microsoft azure#ifi techsolutions#microsoft partner#information technology#technology#software#azure migration#cloud computing#marketing#digital transformation#it consulting#data analytics

1 note

Text

Title/Name: Edward Joseph Snowden, better known as ‘Edward Snowden’ or simply ‘Snowden’, born in 1983.
Bio: American and naturalized Russian citizen who was a computer intelligence consultant and whistleblower who leaked highly classified information from the National Security Agency in 2013 when he was an employee and subcontractor.
Country: USA / Russia
Wojak Series: Feels Guy (Variant)
Image by: Wojak Gallery Admin
Main Tag: Snowden Wojak

#Wojak#Edward Snowden#Edward Snowden Wojak#Snowden#Snowden Wojak#USA#Russia#computer intelligence#consultant#whistleblower#classified information#National Security Agency#NSA#subcontractor#employee#Feels Guy#Feels Guy Series#Feels Guy Wojak#White#Gray

4 notes

Text

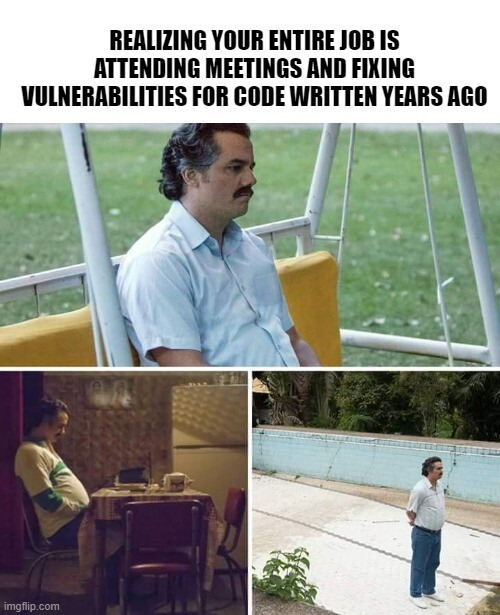

#funny#funny meme#office humor#consulting#software engineering#software engineer jobs#cs#computer science#programming#coding

103 notes

Text

We offer expert cloud migration, cloud-native application development, and cloud infrastructure consulting services to help businesses become customer-focused.

#cloud tranformation solutions#cloud infrastructure consulting#cloud and infrastructure services#cloud native application development#cloud migration services#cloud based computing#it cloud services

1 note

Text

UK 1987

#UK1987#COMPUTING WITH THE AMSTRAD#HEWSON CONSULTANTS#BOOKS#ACTION#AMSTRAD#C64#SPECTRUM#AMIGA#ATARIst#ZYNAPS#EXOLON

8 notes

Text

What is Serverless Computing?

Serverless computing is a cloud computing model where the cloud provider manages the infrastructure and automatically provisions resources as needed to execute code. This means that developers don’t have to worry about managing servers, scaling, or infrastructure maintenance. Instead, they can focus on writing code and building applications. Serverless computing is often used for building event-driven applications or microservices, where functions are triggered by events and execute specific tasks.

How Serverless Computing Works

In serverless computing, applications are broken down into small, independent functions that are triggered by specific events. These functions are stateless, meaning they don’t retain information between executions. When an event occurs, the cloud provider automatically provisions the necessary resources and executes the function. Once the function is complete, the resources are de-provisioned, making serverless computing highly scalable and cost-efficient.
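To make the statelessness point concrete, here is a toy Python model, not any provider's real runtime. `run_event` is a hypothetical stand-in for the provider: it creates a fresh instance for each event and discards it afterwards. Anything kept in instance memory is lost between calls, so state that must survive goes in an external store, modeled here as a plain dict.

```python
from typing import Dict, Tuple


class PageCounter:
    """One short-lived function instance (toy model, not a real provider API)."""

    def __init__(self) -> None:
        self.local_hits = 0  # lives only as long as this instance

    def handle(self, event: Dict[str, str], store: Dict[str, int]) -> Tuple[int, int]:
        self.local_hits += 1  # resets with every new instance
        page = event["page"]
        store[page] = store.get(page, 0) + 1  # external state survives teardown
        return self.local_hits, store[page]


def run_event(event: Dict[str, str], store: Dict[str, int]) -> Tuple[int, int]:
    """Hypothetical provider: provision, execute, de-provision."""
    instance = PageCounter()  # provision a fresh instance for this event
    result = instance.handle(event, store)
    del instance  # de-provision once the call completes
    return result


store: Dict[str, int] = {}
results = [run_event({"page": "home"}, store) for _ in range(3)]
print(results)  # [(1, 1), (1, 2), (1, 3)]
```

The in-memory counter never gets past 1, while the external count keeps climbing. Real platforms may reuse a warm instance for a later event, which is exactly why relying on in-memory state is unsafe: you cannot predict when it resets.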

Serverless Computing Architecture

The architecture of serverless computing typically involves four components: the client, the API Gateway, the compute service, and the data store. The client sends requests to the API Gateway, which acts as a front-end to the compute service. The compute service executes the functions in response to events and may interact with the data store to retrieve or store data. The API Gateway then returns the results to the client.
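The request flow above can be modeled in a few lines of Python. Everything here is an in-process stand-in chosen for illustration: `ApiGateway` plays the API Gateway, `create_user` and `get_user` are hypothetical functions run by the compute service, and a dict is the data store. A real deployment would put network calls and managed services at each of these boundaries.

```python
from typing import Callable, Dict, Tuple

Response = Tuple[int, dict]
Store = Dict[str, dict]
Handler = Callable[[dict, Store], Response]


def create_user(params: dict, store: Store) -> Response:
    """Compute-service function: writes to the data store."""
    store[params["id"]] = {"name": params["name"]}
    return 201, store[params["id"]]


def get_user(params: dict, store: Store) -> Response:
    """Compute-service function: reads from the data store."""
    user = store.get(params["id"])
    return (200, user) if user is not None else (404, {"error": "no such user"})


class ApiGateway:
    """Front end: maps (method, path) to a function and returns its result."""

    def __init__(self, store: Store) -> None:
        self.store = store
        self.routes: Dict[Tuple[str, str], Handler] = {}

    def route(self, method: str, path: str, fn: Handler) -> None:
        self.routes[(method, path)] = fn

    def request(self, method: str, path: str, params: dict) -> Response:
        fn = self.routes.get((method, path))
        if fn is None:
            return 404, {"error": "no route"}
        return fn(params, self.store)  # invoke the function for this request


gateway = ApiGateway(store={})
gateway.route("POST", "/users", create_user)
gateway.route("GET", "/users", get_user)

# The "client": send requests to the gateway and read the responses.
print(gateway.request("POST", "/users", {"id": "u1", "name": "Ada"}))  # (201, {'name': 'Ada'})
print(gateway.request("GET", "/users", {"id": "u1"}))  # (200, {'name': 'Ada'})
print(gateway.request("GET", "/users", {"id": "u2"}))  # (404, {'error': 'no such user'})
```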

Benefits of Serverless Computing

Serverless computing offers several benefits over traditional server-based computing, including:

Reduced costs: Serverless computing allows organizations to pay only for the resources they use, rather than paying for dedicated servers or infrastructure.

Improved scalability: Serverless computing can automatically scale up or down depending on demand, making it highly scalable and efficient.

Reduced maintenance: Since the cloud provider manages the infrastructure, organizations don’t need to worry about maintaining servers or infrastructure.

Faster time to market: Serverless computing allows developers to focus on writing code and building applications, reducing the time to market new products and services.
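A quick way to see the "pay only for what you use" point is to compute a bill. The formula below, a per-request fee plus compute time measured in GB-seconds, mirrors how AWS Lambda, for instance, is priced, but the rates plugged in are illustrative assumptions rather than a current price list.

```python
def monthly_function_cost(
    requests: int,
    avg_duration_s: float,
    memory_gb: float,
    price_per_million_requests: float,
    price_per_gb_second: float,
) -> float:
    """Pay-per-use cost: a per-request fee plus compute billed in GB-seconds."""
    request_cost = requests / 1_000_000 * price_per_million_requests
    gb_seconds = requests * avg_duration_s * memory_gb  # memory held x time run
    return request_cost + gb_seconds * price_per_gb_second


# Illustrative prices only; check your provider's current price list.
cost = monthly_function_cost(
    requests=1_000_000,
    avg_duration_s=0.2,
    memory_gb=0.5,
    price_per_million_requests=0.20,
    price_per_gb_second=0.0000167,
)
print(f"${cost:.2f}")  # $1.87
```

Zero requests cost zero under this model, whereas a dedicated server is billed whether or not it is busy. That difference is why the savings are largest for spiky or low-volume workloads.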

Drawbacks of Serverless Computing

While serverless computing has several benefits, it also has some drawbacks, including:

Limited control: Since the cloud provider manages the infrastructure, developers have limited control over the environment and resources.

Cold start times: When a function is executed for the first time, or after it has been idle long enough for the provider to reclaim its instance, it may take longer to start up, leading to slower response times.

Vendor lock-in: Organizations may be tied to a specific cloud provider, making it difficult to switch providers or migrate to a different environment.
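A common cold-start mitigation is to do expensive setup once per instance and reuse it on warm calls. The toy model below shows why first calls, and calls after a long idle gap, are slower: setup runs again whenever the instance has been recycled. `ToyFunctionHost` is hypothetical, and the 300-second keep-warm window is an assumption for the example; real providers do not guarantee a fixed value.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass
class ToyFunctionHost:
    """Toy model of one function instance; not any provider's real behavior."""

    setup: Callable[[], Any]  # expensive setup (clients, models, config)
    handler: Callable[[dict, Any], Any]  # per-request work that reuses that setup
    idle_timeout_s: float  # assumed keep-warm window
    cold_starts: int = 0
    _resources: Any = field(default=None, init=False, repr=False)
    _last_used: Optional[float] = field(default=None, init=False, repr=False)

    def invoke(self, event: dict, now: float) -> Any:
        idle_too_long = (
            self._last_used is None or now - self._last_used > self.idle_timeout_s
        )
        if idle_too_long:
            # Cold start: the instance is (re)created and setup runs again.
            self._resources = self.setup()
            self.cold_starts += 1
        self._last_used = now
        return self.handler(event, self._resources)


host = ToyFunctionHost(
    setup=lambda: {"greeting": "hello"},
    handler=lambda event, res: f"{res['greeting']}, {event['name']}",
    idle_timeout_s=300,
)
results = [
    host.invoke({"name": "a"}, now=0),  # cold start: setup runs
    host.invoke({"name": "b"}, now=60),  # warm: setup reused
    host.invoke({"name": "c"}, now=1000),  # cold again after 940 s idle
]
print(results, host.cold_starts)  # ['hello, a', 'hello, b', 'hello, c'] 2
```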

Some facts about serverless computing

Serverless computing is often equated with Functions-as-a-Service (FaaS), its most common form, in which developers write and deploy individual functions rather than entire applications.

Serverless computing is often used in microservices architectures, where applications are broken down into smaller, independent components that can be developed, deployed, and scaled independently.

Serverless computing can result in significant cost savings for organizations because they only pay for the resources they use. This can be especially beneficial for applications with unpredictable traffic patterns or occasional bursts of computing power.

One of the biggest drawbacks of serverless computing is the “cold start” problem, where a function may take several seconds to start up if it hasn’t been used recently. However, this problem can be mitigated through various optimization techniques.

Serverless computing is often used in event-driven architectures, where functions are triggered by specific events such as user interactions, changes to a database, or changes to a file system. This can make it easier to build highly scalable and efficient applications.
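The event-driven wiring described above can be sketched as a small subscription registry. The event names (`file.created`, `db.row_inserted`) and both handler functions are hypothetical; on a real platform, the provider's trigger configuration does this routing rather than your own code.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

EventHandler = Callable[[Dict[str, str]], str]
_subscribers: DefaultDict[str, List[EventHandler]] = defaultdict(list)


def on(event_type: str) -> Callable[[EventHandler], EventHandler]:
    """Decorator that subscribes a function to one kind of event."""

    def register(fn: EventHandler) -> EventHandler:
        _subscribers[event_type].append(fn)
        return fn

    return register


@on("file.created")
def make_thumbnail(event: Dict[str, str]) -> str:
    return f"thumbnail queued for {event['path']}"


@on("db.row_inserted")
def send_welcome_email(event: Dict[str, str]) -> str:
    return f"welcome email queued for {event['email']}"


def dispatch(event_type: str, event: Dict[str, str]) -> List[str]:
    """Run every function subscribed to this event type; unknown types run nothing."""
    return [fn(event) for fn in _subscribers.get(event_type, [])]


print(dispatch("file.created", {"path": "uploads/cat.png"}))
print(dispatch("user.clicked", {}))  # []
```

Each function only knows about the event it receives, which is what lets the platform scale each one independently.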

Besides Google Cloud Functions, several other serverless platforms and frameworks are available:

AWS Lambda: AWS Lambda is a serverless compute service from Amazon Web Services (AWS). It allows developers to run code in response to events without worrying about managing servers or infrastructure.

Microsoft Azure Functions: Microsoft Azure Functions is a serverless compute service from Microsoft Azure. It allows developers to run code in response to events and supports a wide range of programming languages.

IBM Cloud Functions: IBM Cloud Functions is a serverless compute service from IBM Cloud. It allows developers to run code in response to events and supports a wide range of programming languages.

OpenFaaS: OpenFaaS is an open-source serverless framework that allows developers to run functions on any cloud or on-premises infrastructure.

Apache OpenWhisk: Apache OpenWhisk is an open-source serverless platform that allows developers to run functions in response to events. It supports a wide range of programming languages and can be deployed on any cloud or on-premises infrastructure.

Kubeless: Kubeless is a Kubernetes-native serverless framework that allows developers to run functions on Kubernetes clusters. It supports a wide range of programming languages and can be deployed on any Kubernetes cluster.

IronFunctions: IronFunctions is an open-source serverless platform that allows developers to run functions on any cloud or on-premises infrastructure. It supports a wide range of programming languages and can be deployed on any container orchestrator.

These serverless computing frameworks offer developers a range of options for building and deploying serverless applications. Each framework has its own strengths and weaknesses, so developers should choose the one that best fits their needs.
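For a sense of scale, a complete AWS Lambda function in Python can be a single handler. Lambda calls the configured handler with `event` and `context` arguments. The function name `lambda_handler` and the assumption that it sits behind an API Gateway proxy integration (which reads `statusCode`, `headers`, and `body` from the returned dict) are choices made for this sketch.

```python
import json
from typing import Any, Dict


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    # With an API Gateway proxy integration, query parameters arrive here
    # (the value may be None when the request has no query string).
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"hello, {name}"}),
    }


# Local smoke test: the handler is a plain function, so it can be called directly.
print(lambda_handler({"queryStringParameters": {"name": "Ada"}}, None)["body"])
```

Because there is no server code to write, the same handler shape works whether the function is invoked once a day or thousands of times a second; scaling is the provider's job.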

Real-time examples

Coca-Cola: Coca-Cola uses serverless computing to power its Freestyle soda machines, which allow customers to mix and match different soda flavors. The machines use AWS Lambda functions to process customer requests and make recommendations based on their preferences.

iRobot: iRobot uses serverless computing to power the cloud platform behind its connected Roomba robot vacuums. Navigation with computer vision and machine learning runs on the robot itself, while AWS Lambda functions on the cloud side process the data the robots send back.

Capital One: Capital One uses serverless computing to power its mobile banking app, which allows customers to manage their accounts, transfer money, and pay bills. The app uses AWS Lambda functions to process requests and deliver real-time information to users.

Fender: Fender uses serverless computing to power its Fender Play platform, which provides online guitar lessons to users around the world. The platform uses AWS Lambda functions to process user data and generate personalized lesson plans.

Netflix: Netflix uses serverless computing to power its video encoding and transcoding workflows, which are used to prepare video content for streaming on various devices. The workflows use AWS Lambda functions to process video files and convert them into the appropriate format for each device.
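The upload-triggered pattern in the Netflix example can be sketched as a function that reacts to an S3 event by fanning out processing jobs. This is not Netflix's code: the `RENDITIONS` list and the job format are hypothetical. It relies only on the standard S3 event notification shape, where object keys arrive URL-encoded. The actual transcoding would be done by whatever encoder consumes these jobs.

```python
from typing import Any, Dict, List
from urllib.parse import unquote_plus

# Hypothetical output formats; a real pipeline would derive these per device.
RENDITIONS = ["1080p", "720p", "480p"]


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, List[Dict[str, str]]]:
    """Turn each uploaded file in an S3 event into one job per rendition."""
    jobs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        for rendition in RENDITIONS:
            jobs.append({"source": f"s3://{bucket}/{key}", "rendition": rendition})
    return {"jobs": jobs}


sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "raw-videos"}, "object": {"key": "trailers/my+movie.mov"}}}
    ]
}
for job in lambda_handler(sample_event, None)["jobs"]:
    print(job)
```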

Conclusion

Serverless computing is a powerful and efficient solution for building and deploying applications. It offers several benefits, including reduced costs, improved scalability, reduced maintenance, and faster time to market. However, it also has some drawbacks, including limited control, cold start times, and vendor lock-in. Despite these drawbacks, serverless computing will likely become an increasingly popular solution for building event-driven applications and microservices.

4 notes

Text

Part of what makes the crowdstrike situation so wild (and frustrating) is that if even one person (who wouldn't even need many technical skills, mind you !) checked it, this could've been avoided. The second it runs, windows crashes. If you tested it AT ALL, this wouldn't have happened.

idk if people on tumblr know about this but a cybersecurity software called crowdstrike just did what is probably the single biggest fuck up in any sector in the past 10 years. it's monumentally bad. literally the most horror-inducing nightmare scenario for a tech company.

some info, crowdstrike is essentially an antivirus software for enterprises. which means normal laypeople cant really get it, they're for businesses and organisations and important stuff.

so, on a friday evening (it of course wasnt friday everywhere but it was friday evening in oceania which is where it first started causing damage due to europe and na being asleep), crowdstrike pushed out an update to their windows users that caused a bug.

before i get into what the bug is, know that friday evening is the worst possible time to do this because people are going home. the weekend is starting. offices dont have people in them. this is just one of many perfectly placed failures in the rube goldberg machine of crowdstrike. there's a reason friday is called 'dont push to live friday' or more to the point 'dont fuck it up friday'

so, at 3pm on friday, an update comes rolling into crowdstrike users which is automatically implemented. this update immediately causes the computer to blue screen of death. very very bad. but it's not simply a 'you need to restart' crash, because the computer then gets stuck in a boot loop.

this is the worst possible thing because, in a boot loop state, a computer is never really able to get to a point where it can do anything. like download a fix. so there is nothing crowdstrike can do to remedy this death update anymore. it is now left to the end users.

it was pretty quickly identified what the problem was. you had to boot it in safe mode, and a very small file needed to be deleted. or you could just rename crowdstrike to something else so windows never attempts to use it.

it's a fairly easy fix in the grand scheme of things, but the issue is that it is affecting enterprises. which can have a looooot of computers. in many different locations. so an IT person would need to manually fix hundreds of computers, sometimes in whole other cities and perhaps even other countries if theyre big enough.

another fuck up crowdstrike did was that they did not stagger the update, which would have let them catch any mistakes before they wreaked havoc. (and also how how HOW do you not catch this before deploying it. this isn't a code oopsie, this is a complete failure of quality assurance that probably permeates the whole company, to not realise their update was an instant kill). they rolled it out to every one of their clients in the world at the same time.
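Here is what staggering looks like as code: a generic staged ("ring") rollout sketch in Python, not CrowdStrike's actual process. The ring sizes, the 5% failure threshold, and the `deploy` health-check hook are all hypothetical. With an update that crashes every machine, only the first ring is hit before the rollout halts.

```python
from typing import Callable, List, Sequence


def staged_rollout(
    hosts: Sequence[str],
    ring_fractions: Sequence[float],
    deploy: Callable[[str], bool],
    max_failure_rate: float,
) -> List[str]:
    """Deploy ring by ring; halt as soon as a ring's failure rate is too high.

    `deploy(host)` is a hypothetical hook returning True if the host stays healthy.
    `ring_fractions` are cumulative shares of the fleet and should end at 1.0.
    Returns the hosts that received the update.
    """
    updated: List[str] = []
    start = 0
    for fraction in ring_fractions:
        end = max(start + 1, round(len(hosts) * fraction))  # at least one host per ring
        ring = list(hosts[start:end])
        if not ring:
            break
        failures = sum(1 for host in ring if not deploy(host))
        updated.extend(ring)
        if failures / len(ring) > max_failure_rate:
            break  # stop here: the remaining hosts never see the bad update
        start = end
    return updated


def crashes_everything(host: str) -> bool:
    return False  # an update that bricks every machine it touches


fleet = [f"pc-{i:03d}" for i in range(100)]
hit = staged_rollout(fleet, [0.01, 0.10, 0.50, 1.0], crashes_everything, max_failure_rate=0.05)
print(len(hit))  # 1
```

In practice health signals take time to arrive, so real staged rollouts also wait between rings before widening.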

and this seems pretty hilarious on the surface. i was havin a good chuckle as eftpos went down in the store i was working at, chaos was definitely ensuing lmao. im in aus, and banking was literally down nationwide.

but then you start hearing about the entire country's planes being grounded because the airport's computers are bricked. and hospitals having no computers anymore. emergency call centres crashing. and you realise that, wow. crowdstrike just killed people probably. this is literally the worst thing possible for a company like this to do.

crowdstrike was kinda on the come up too, they were starting to become a big name in the tech world as a new face. but that has definitely vanished now. to fuck up at this many places, is almost extremely impressive. its hard to even think of a comparable fuckup.

a friday evening simultaneous rollout boot loop is a phrase that haunts IT people in their darkest hours. it's the monster that drags people down into the swamp. it's the big bad in the horror movie. it's the end of the road. and for crowdstrike, that reaper of souls just knocked on their doorstep.

#crowdstrike#cs#computer#cybersecurity#it#information technology#software#software development#it consulting#the mf whose job it is to check this shit is in mad trouble rn#i obv cant know personally but like jfc man#ONE GODDAMN CHECK

114K notes

Text

Team Leader - Supported Living - Driver

Job title: Team Leader – Supported Living – Driver Company: Aspirations Care Job description: . Ability to work under pressure and react quickly to situations. Keen to develop and generate ideas. The Package Paid DBS… and specialist learning. Opportunities for Career progression, including NVQ qualifications. Optional overtime with flexible… Expected salary: £14.26 per hour Location:…

#artificial intelligence#audio-dsp#cleantech#cloud-computing#data-privacy#DevOps#dotnet#Ecommerce#game-dev#HPC#hybrid-work#it-consulting#it-support#Java#legaltech#mobile-development#NLP#no-code#product-management#Python#React Specialist#robotics#rpa#Salesforce#SoC#software-development#system-administration#uk-jobs#ux-design

0 notes