#bci sensor

Text

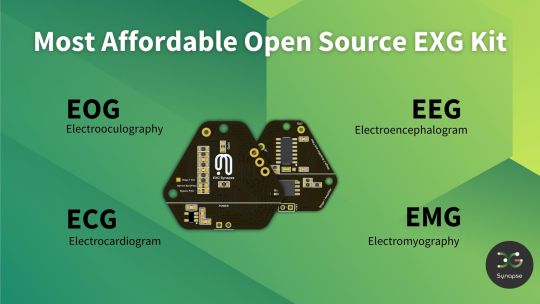

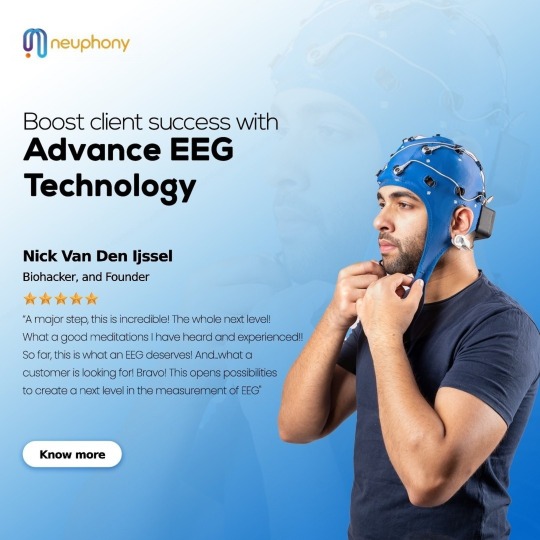

Neuphony EXG Synapse offers comprehensive biopotential signal compatibility, covering ECG, EEG, EOG, and EMG, which makes it a versatile solution for a wide range of physiological monitoring applications.

#diy robot kits for adults#brain wave sensor#bci sensor#BCI chip#Surface EMG sensor#Arduino EEG sensor#Raspberry Pi EEG

Text

Liquid ink innovation could revolutionize brain activity monitoring with e-tattoo sensors

- By InnoNurse Staff -

Scientists have developed a groundbreaking liquid ink that allows doctors to print sensors onto a patient's scalp to monitor brain activity.

This innovative technology, detailed in the journal Cell Biomaterials, could replace the cumbersome traditional EEG setup, enhancing the comfort and efficiency of diagnosing neurological conditions like epilepsy and brain injuries.

The ink, made from conductive polymers, flows through hair to the scalp, forming thin-film sensors capable of detecting brainwaves with accuracy comparable to standard electrodes. Unlike conventional setups that lose signal quality after hours, these "e-tattoo" electrodes maintain stable connectivity for over 24 hours. Researchers have also adapted the ink to eliminate the need for bulky wires by printing conductive lines that transmit brainwave data.

The process is quick, non-invasive, and can be further refined to integrate wireless data transmission, paving the way for fully wireless EEG systems. The technology holds significant promise for advancing brain-computer interfaces, potentially replacing bulky headsets with lightweight, on-body electronics.

Header image: E-tattoo electrode EEG system. Credit: Nanshu Lu.

Read more at Cell Press/Medical Xpress

///

Other recent news

Vietnam: TMA launches new healthtech solutions (TMA Solutions/PRNewswire)

Soda Health raises $50M in oversubscribed Series B round led by General Catalyst to expand its Smart Benefits Operating System (Soda Health/Globe Newswire)

Text

The EXG Synapse by Neuphony is an advanced device designed to monitor and analyze multiple biosignals, including EEG, ECG, and EMG. It offers real-time data for research and neurofeedback, making it ideal for cognitive enhancement and physiological monitoring.

#neuphony#health#eeg#mental health#brain health#bci#neurofeedback#mental wellness#technology#Exg#neuroscience kit#emg sensors#emg muscle sensor#emg sensor arduino#diy robotics kits#brain wave sensor#Arduino EEG sensor#human computer interface#heart rate variability monitoring#hrv monitor#heart rate monitor#eye tracking#diy robotic kits#build your own robot kit#electromyography sensor#eeg sensor arduino#diy robotics#eog

Text

The Eddy Covariance (EC) system is a technique for measuring the fluxes of energy and gases such as carbon dioxide and water vapor in eddies over the forest. These measurements indicate how much of these gases are absorbed or released by plants and soils through photosynthesis, and how much is rising into the atmosphere and contributing to global warming. Illustration by Jorge Alemán, STRI

Excerpt from this story from Smithsonian Magazine:

Just as humans inhale and exhale, forests absorb and release gases. There is a constant exchange of carbon dioxide (CO2), water vapor (H2O) and other gases between the soils, vegetation and air, which scientists measure to understand how much is released into the atmosphere, and how much is absorbed by plants and trees through photosynthesis.

This gas exchange is measured by a system called eddy covariance.

An eddy is air that moves circularly due to turbulence (changes in speed and direction). At night, temperatures drop and the air is more stable, and during the day, when temperatures rise, the air moves more; these fluctuations between day and night create the wind we feel, and therefore eddies, which move in different directions, carrying different concentrations of CO2, H2O and other gases.

Covariance refers to simultaneously measuring the concentration of gases and the changes in the direction of the eddies, to understand how much carbon, water vapor, and energy are shifting and where, and how they change from moment to moment (fluxes). This is done with equipment called sonic anemometers, which measure wind speed and direction, and infrared CO2/H2O gas analyzers. That’s hundreds of measurements per minute in real time from these devices that detect how many molecules of these gases move through a defined area or volume, and how many rise into the atmosphere or are absorbed by forests.
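The covariance idea described above can be sketched in a few lines: the flux is the average product of the fluctuations of vertical wind speed and gas concentration around their means. This is a simplified illustration, not STRI's processing chain; the function name, the 10 Hz sampling rate and the synthetic data are hypothetical, and operational EC processing also applies steps such as despiking, coordinate rotation, time-lag alignment and density corrections, all omitted here.

```python
import numpy as np

def eddy_covariance_flux(w, c):
    """Turbulent flux as the covariance of vertical wind and a scalar.

    w: vertical wind speed samples (m/s) from a sonic anemometer
    c: simultaneous gas concentration samples (e.g. mmol/m^3 of CO2)
       from a gas analyzer, over one averaging period
    Returns the mean of w'c' (here mmol m^-2 s^-1); by the usual sign
    convention, positive means upward (release), negative means uptake.
    """
    w = np.asarray(w, dtype=float)
    c = np.asarray(c, dtype=float)
    if w.shape != c.shape:
        raise ValueError("w and c must have the same shape")
    w_prime = w - w.mean()  # fluctuation of vertical wind around its mean
    c_prime = c - c.mean()  # fluctuation of concentration around its mean
    return float(np.mean(w_prime * c_prime))

# Synthetic example: 30 minutes sampled at an assumed 10 Hz.
rng = np.random.default_rng(0)
n = 30 * 60 * 10
w = rng.normal(0.0, 0.3, n)                    # updrafts and downdrafts
c = 15.0 - 0.5 * w + rng.normal(0.0, 0.05, n)  # updrafts carry CO2-depleted air
flux = eddy_covariance_flux(w, c)
print(f"CO2 flux: {flux:.3f} mmol m^-2 s^-1")  # negative: canopy uptake
```

Because the synthetic updrafts carry air depleted by photosynthesis, the covariance comes out negative, which is how a daytime forest sink shows up in the data.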

"The Eddy Covariance (EC) system is a micro-meteorological technique used to directly monitor these exchanges between forests and the atmosphere, as these interactions are critical to understanding the role of forests within the global climate system," explains Matteo Detto, a researcher in the Department of Ecology and Evolutionary Biology at Princeton University, who installed the EC system at the AVA tower on Barro Colorado Island (BCI) in 2012.

"Carbon can be in many places, in the soil, in plants, in the atmosphere, and there's just no other way to track it, but this system does it," says Joe Wright, a staff scientist at the Smithsonian Tropical Research Institute (STRI).

At STRI, research technician Alfonso Zambrano manages and verifies the data collection of the EC system. "BCI's EC system has been operating continuously for the last ten years," says Zambrano. "It's important because it's a localized way of calculating gas exchange over the forest. And there are very few of these systems in the tropics."

The first AVA tower was built in 2012 as part of the ForestGEO network's climate monitoring program; Detto, then an associate staff scientist at STRI, added the EC system sensors, and managed them until 2017.

"One of the big uncertainties in these models, which has prevented us from being able to predict the future of the climate more accurately," Wright continues, "is how tropical forests will respond to higher temperatures, global increases in atmospheric carbon, and changes in regional precipitation. How are they going to react? It’s guesswork. So, we use technology to provide data that helps fill those information gaps."

"The data generated by the tower in BCI is crucial to improve these ecosystem models, such as those developed by NGEE-Tropics, to predict how forests will behave in future climate scenarios," explains Detto. "And they rely on field observations like ours to calibrate and validate the models."

Text

Human augmentation refers to the use of technology to restore or enhance physical, cognitive, or sensory abilities, in some cases beyond natural human limits. Augmentation can be temporary or permanent and can range from simple tools to advanced cybernetic implants. Here are some key ways a person can be augmented:

### **1. Physical Augmentation**

- **Prosthetics & Exoskeletons**: Advanced prosthetic limbs (bionic arms/legs) and powered exoskeletons can restore or enhance mobility and strength.

- **Muscle & Bone Enhancements**: Synthetic tendons, reinforced bones, or muscle stimulators can improve physical performance.

- **Wearable Tech**: Smart clothing, haptic feedback suits, and strength-assist devices can enhance endurance and dexterity.

### **2. Sensory Augmentation**

- **Enhanced Vision**: Retinal implants ("bionic eyes") can partially restore sight, while experimental night-vision contact lenses and AR/VR headsets can extend visual capabilities.

- **Enhanced Hearing**: Cochlear implants or ultrasonic hearing devices can improve or restore hearing.

- **Tactile & Haptic Feedback**: Sensors that provide enhanced touch or vibration feedback for better interaction with machines or virtual environments.

- **Olfactory & Taste Augmentation**: Experimental tech could enhance or modify smell/taste perception.

### **3. Cognitive Augmentation**

- **Brain-Computer Interfaces (BCIs)**: Neural implants (e.g., Neuralink, neuroprosthetics) can enable direct brain-to-machine communication; using them to improve memory or learning speed remains experimental.

- **Nootropics & Smart Drugs**: Substances taken to boost focus, memory, or mental processing; evidence for their benefits in healthy people is limited.

- **AI Assistants**: Wearable or implantable AI that aids decision-making or information retrieval.

### **4. Genetic & Biological Augmentation**

- **Gene Editing (CRISPR)**: Modifying DNA to enhance physical traits, disease resistance, or longevity.

- **Synthetic Biology**: Engineered tissues, organs, or blood substitutes for improved performance.

- **Biohacking**: DIY biology experiments, such as implanting magnets in fingers for sensing electromagnetic fields.

### **5. Cybernetic & Digital Augmentation**

- **Embedded Chips (RFID, NFC)**: Subdermal implants for digital identity, keyless access, or data storage.

- **Digital Twins & Cloud Integration**: Real-time health monitoring via embedded sensors linked to cloud AI.

- **Neural Lace**: A mesh-like brain implant for seamless human-AI interaction (still experimental).

### **6. Performance-Enhancing Substances**

- **Stem Cell Therapies**: For faster healing and regeneration.

- **Synthetic Hormones**: To boost strength, endurance, or recovery.

- **Nanotechnology**: Microscopic machines for repairing cells or enhancing biological functions.

### **Ethical & Social Considerations**

- **Privacy & Security**: Risks of hacking or surveillance with embedded tech.

- **Inequality**: Augmentation could widen gaps between enhanced and non-enhanced individuals.

- **Identity & Humanity**: Philosophical debates on what it means to be "human" after augmentation.

### **Current & Future Trends**

- **Military & Defense**: DARPA and other agencies fund research into enhancing soldier performance, often dubbed "super-soldier" programs.

- **Medical Rehabilitation**: Restoring lost functions for disabled individuals.

- **Transhumanism**: A movement advocating for human enhancement to transcend biological limits.

#future#cyberpunk aesthetic#futuristic#futuristic city#cyberpunk artist#cyberpunk city#cyberpunkart#concept artist#digital art#digital artist#human augmentation#augmented human#human with a robot brain#futuristic theory#augmented brain#augmented reality#augmented

Text

How to make a microwave weapon to control your body or see live camera feeds or memories:

First, you need a computer (provide a list of computers available on the internet with links).

Next, you need an antenna (provide a link).

Then, you need a DNA remote: https://www.remotedna.com/hardware

Next, you need an electrical magnet, satellite, or tower to produce signals or ultrasonic signals.

Connect all these components.

The last thing you need is a code and a piece of blood or DNA in the remote.

Also, if want put voice or hologram in DNA or brain you need buy this https://www.holosonics.com/products-1 and here is video about it: you can make voice in people just like government does, (they say voices is mental health, but it lies) HERE PROOF like guy say in video it like alien, only 1,500 dollars

The final step is to use the code (though I won't give the code, but you can search the internet or hire someone to make it). Instructions on how to make a microwave weapon to control:

Emotions

Smell

Taste

Eyesight

Hearing

Dreams

Nightmares

Imagination or visuals in the mind

All memory from your whole life

See the code uploaded to your brain from:

God

Government

See tracking and files linking to:

U.S. Space Force

Various governments (as they should leave tracking and links to who made the code, similar to a virus you get on a computer)

Tracking to government:

You can open a mechanical folder and see the program controlling you.

If tracking uses a cell tower or satellite, you can track all input and output to your body.

Even make an antenna in your home and connect it to your DNA to remove and collect all information sent to your body.

Technology used only by the government:

Bluetooth and ultrasonic signals

Light technology (new internet used only by the government)

Signals go to the body by DNA remote

Additional methods:

You can hire someone like me to help you (for a fee).

If you want, you can use a microchip in the brain to download all information.

Another way is to plug a wire into a vein or spine and download all your information into a computer, but you have to use the code the government uses to track and see if you are using all kinds of codes linked to them.

Research Paper: Brain-Computer Interfaces and Ethical Considerations

Introduction

Brain-Computer Interfaces (BCIs) are a revolutionary technological advancement that enables direct communication between the human brain and external devices. BCIs have applications in medicine, neuroscience, gaming, communication, and more. However, as these technologies progress, they raise several ethical concerns related to privacy, autonomy, consent, and the potential for misuse. This paper will explore the ethical implications of BCIs, addressing both the potential benefits and the risks.

Overview of Brain-Computer Interfaces

BCIs function by detecting neural activity in the brain and translating it into digital signals that can control devices. These interfaces can be invasive or non-invasive. Invasive BCIs involve surgical implantation of devices in the brain, while non-invasive BCIs use sensors placed on the scalp to detect brain signals.
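The detect-and-translate loop described above can be illustrated with a toy non-invasive example: estimate EEG band power from one window of samples and map it to a command. This is a sketch under stated assumptions, not how any particular BCI works; the sampling rate, frequency bands, threshold and the alpha-versus-beta rule are all hypothetical, and practical systems add filtering, artifact rejection and trained classifiers.

```python
import numpy as np

def band_power(x, fs, low_hz, high_hz):
    """Mean periodogram power of signal x between low_hz and high_hz."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                              # remove DC offset
    power = np.abs(np.fft.rfft(x)) ** 2 / len(x)  # simple periodogram
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)   # frequency of each bin (Hz)
    in_band = (freqs >= low_hz) & (freqs <= high_hz)
    return float(power[in_band].mean())

def decode_command(x, fs, threshold=2.0):
    """Hypothetical rule mapping one EEG window to a device command.

    Compares alpha (8-12 Hz) with beta (13-30 Hz) power; a ratio above
    `threshold` becomes "select", anything else "idle".
    """
    alpha = band_power(x, fs, 8.0, 12.0)
    beta = band_power(x, fs, 13.0, 30.0)
    return "select" if alpha / (beta + 1e-12) > threshold else "idle"

# Two synthetic 2-second windows at an assumed 250 Hz sampling rate.
fs = 250
t = np.arange(0, 2, 1 / fs)
rng = np.random.default_rng(1)
alpha_window = 10 * np.sin(2 * np.pi * 10 * t) + rng.normal(0, 0.5, t.size)
beta_window = 10 * np.sin(2 * np.pi * 20 * t) + rng.normal(0, 0.5, t.size)
print(decode_command(alpha_window, fs), decode_command(beta_window, fs))
```

The window dominated by a 10 Hz rhythm should decode to `select` and the 20 Hz window to `idle`; a real system would learn the mapping from calibration data rather than use a fixed ratio.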

Applications of BCIs

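Medical Uses: BCIs are being studied as assistive and therapeutic tools for neurological conditions such as ALS and spinal cord injuries, and related neurotechnologies such as deep brain stimulation are already used to treat Parkinson's disease. BCIs can help restore lost functions, such as enabling patients to control prosthetic limbs or to communicate when other forms of communication are lost.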

Neuroenhancement: There is also interest in using BCIs for cognitive enhancement, improving memory, or even controlling devices through thoughts alone, which could extend to various applications such as gaming or virtual reality.

Communication: For individuals who are unable to speak or move, BCIs offer a means of communication through thoughts, which can be life-changing for those with severe disabilities.

Ethical Considerations

Privacy Concerns

Data Security: BCIs can access and interpret private neural data, raising concerns about who owns this data and how it is protected. Unauthorized access to neural data could lead to privacy violations, because brain data may reveal sensitive information about a person's mental states, health, and intentions.

Surveillance: Governments and corporations could misuse BCIs for surveillance purposes. The potential to track thoughts or monitor individuals without consent raises serious concerns about autonomy and human rights.

Consent and Autonomy

Informed Consent: Invasive BCIs require surgical procedures, and non-invasive BCIs can still impact mental and emotional states. Obtaining informed consent from individuals, particularly vulnerable populations, becomes a critical issue. There is concern that some individuals may be coerced into using these technologies.

Cognitive Freedom: With BCIs, there is a potential for individuals to lose control over their mental states, thoughts, or even memories. The ability to "hack" or manipulate the brain may lead to unethical modifications of cognition, identity, or behavior.

Misuse of Technology

Weaponization: There are concerns that BCIs could be misused for mind control or as a tool for weapons. The potential military applications of BCIs could lead to unethical uses, such as controlling soldiers or civilians.

Exploitation: There is a risk that BCIs could be used for exploitative purposes, such as manipulating individuals' thoughts, emotions, or behavior for commercial gain or political control.

Psychological and Social Impacts

Psychological Effects: The integration of external devices with the brain could have unintended psychological effects, such as changes in personality, mental health issues, or cognitive distortions. The potential for addiction to BCI-driven experiences or environments, such as virtual reality, could further impact individuals' mental well-being.

Social Inequality: Access to BCIs may be limited by economic factors, creating disparities between those who can afford to enhance their cognitive abilities and those who cannot. This could exacerbate existing inequalities in society.

Regulation and Oversight

Ethical Standards: As BCI technology continues to develop, it is crucial to establish ethical standards and regulations to govern their use. This includes ensuring the technology is used responsibly, protecting individuals' rights, and preventing exploitation or harm.

Government Involvement: Governments may have a role in regulating the use of BCIs, but there is also the concern that they could misuse the technology for surveillance, control, or military applications. Ensuring the balance between innovation and regulation is key to the ethical deployment of BCIs.

Conclusion

Brain-Computer Interfaces hold immense potential for improving lives, particularly for individuals with disabilities, but they also come with significant ethical concerns. Privacy, autonomy, misuse, and the potential psychological and social impacts must be carefully considered as this technology continues to evolve. Ethical standards, regulation, and oversight will be essential to ensure that BCIs are used responsibly and equitably.

Sources

K. Lebedev, M. I. (2006). "Brain–computer interfaces: past, present and future." Trends in Neurosciences.

This source explores the evolution of BCIs and their applications in medical fields, especially in restoring lost motor functions and communication capabilities.

Lebedev, M. A., & Nicolelis, M. A. (2006). "Brain–machine interfaces: past, present and future." Trends in Neurosciences.

This paper discusses the potential of BCIs to enhance human cognition and motor capabilities, as well as ethical concerns about their development.

Moran, J., & Gallen, D. (2018). "Ethical Issues in Brain-Computer Interface Technology." Ethics and Information Technology.

This article discusses the ethical concerns surrounding BCI technologies, focusing on privacy issues and informed consent.

Marzbani, H., Marzbani, M., & Mansourian, M. (2017). "Electroencephalography (EEG) and Brain–Computer Interface Technology: A Survey." Journal of Neuroscience Methods.

This source explores both non-invasive and invasive BCI systems, discussing their applications in neuroscience and potential ethical issues related to user consent.

"RemoteDNA."

The product and technology referenced in the original prompt, highlighting the use of remote DNA technology and potential applications in connecting human bodies to digital or electromagnetic systems.

"Ethics of Brain–Computer Interface (BCI) Technology." National Institutes of Health

This source discusses the ethical implications of brain-computer interfaces, particularly in terms of their potential to invade privacy, alter human cognition, and the need for regulation in this emerging field.

Text

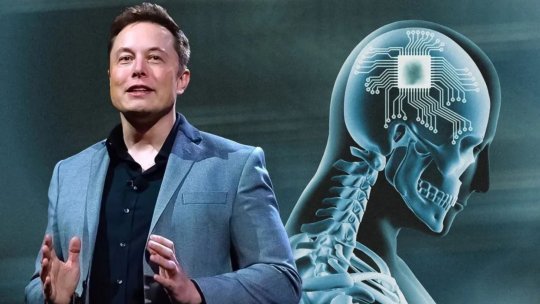

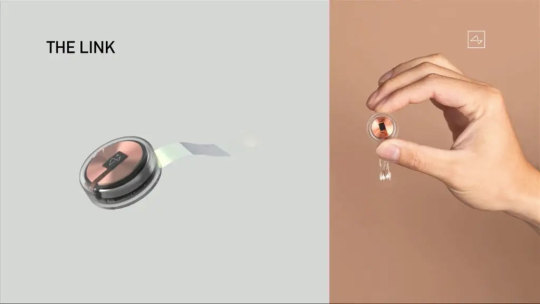

Elon Musk’s Neuralink looking for volunteer to have piece of their skull cut open by robotic surgeon

Elon Musk’s chip implant company Neuralink is looking for its first volunteer who is willing to have a piece of their skull removed so that a robotic surgeon can insert thin wires and electrodes into their brain.

The ideal candidate will be a quadriplegic under the age of 40 who is also willing to undergo a procedure that involves implanting a chip with 1,000 electrodes into their brain, the company told Bloomberg News.

The interface would enable computer functions to be performed using only thoughts via a “think-and-click” mechanism.

After a surgeon removes part of the skull, a 7-foot-tall robot dubbed “R1,” equipped with cameras, sensors and a needle, will push 64 threads into the brain while doing its best to avoid blood vessels, Bloomberg reported.

Each thread, which is around 1/14th the diameter of a strand of human hair, is lined with 16 electrodes that are programmed to gather data about the brain.
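As a quick consistency check on the reported figures (assuming every thread carries the same 16 electrodes), the thread and electrode counts multiply out to the "1,000 electrodes" quoted earlier:

$$
64\ \text{threads} \times 16\ \frac{\text{electrodes}}{\text{thread}} = 1024\ \text{electrodes} \approx 1{,}000
$$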

The task is assigned to robots since human surgeons would likely not be able to weave the threads into the brain with the precision required to avoid damaging vital tissue.

Elon Musk’s brain chip company Neuralink is looking for human volunteers for experimental trials. (AP)

The electrodes are designed to record neural activity related to movement intention. These neural signals are then decoded by Neuralink computers.
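The decoding step mentioned above can be illustrated with a simple linear regression decoder, a common baseline in BCI research that maps binned firing rates to intended cursor velocity. This is not Neuralink's decoder, which the article does not describe; the function names, channel count, bin count and synthetic data below are hypothetical.

```python
import numpy as np

def fit_linear_decoder(rates, velocity):
    """Least-squares fit of velocity ≈ rates @ W + b.

    rates:    (n_samples, n_channels) binned firing rates per electrode
    velocity: (n_samples, 2) intended cursor velocity (x, y)
    Returns W with shape (n_channels, 2) and b with shape (2,).
    """
    X = np.hstack([rates, np.ones((rates.shape[0], 1))])  # add bias column
    coef, *_ = np.linalg.lstsq(X, velocity, rcond=None)
    return coef[:-1], coef[-1]

def decode(rates, W, b):
    """Map new firing rates to predicted cursor velocity."""
    return rates @ W + b

# Synthetic calibration data: 32 hypothetical channels, 2000 time bins.
rng = np.random.default_rng(2)
rates = rng.poisson(5.0, size=(2000, 32)).astype(float)
W_true = rng.normal(0.0, 0.1, size=(32, 2))
b_true = np.array([0.5, -0.2])
velocity = rates @ W_true + b_true + rng.normal(0.0, 0.01, size=(2000, 2))

W, b = fit_linear_decoder(rates, velocity)
print(decode(rates[:3], W, b))  # predicted (x, y) velocity for three bins
```

In practice the calibration velocities would come from a cued task (for example, the user imagining moving toward on-screen targets), and more elaborate decoders such as Kalman filters or neural networks often replace plain least squares.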

R1 has already performed hundreds of experimental surgeries on pigs, sheep, and monkeys. Animal rights groups have been critical of Neuralink for alleged abuses.

“The last two years have been all about focus on building a human-ready product,” Neuralink co-founder DJ Seo told Bloomberg News.

“It’s time to help an actual human being.”

It is unclear if Neuralink plans to pay the volunteers.

The Post has sought comment from the company.

Those with paralysis due to cervical spinal cord injury or amyotrophic lateral sclerosis may qualify for the study, but the company did not reveal how many participants would be enrolled in the trial, which will take about six years to complete.

Musk’s company is seeking quadriplegics who are okay with their skull being opened so that a wireless brain-computer implant, which has 1,000 electrodes, could be lodged into their brain. (REUTERS)

Neuralink, which had earlier hoped to receive approval to implant its device in 10 patients, was negotiating a lower number of patients with the Food and Drug Administration (FDA) after the agency raised safety concerns, according to current and former employees.

It is not known how many patients the FDA ultimately approved.

“The short-term goal of the company is to build a generalized brain interface and restore autonomy to those with debilitating neurological conditions and unmet medical needs,” Seo, who also holds the title of vice president for engineering, told Bloomberg.

The brain chip device would be implanted underneath a human skull.

“Then, really, the long-term goal is to have this available for billions of people and unlock human potential and go beyond our biological capabilities.”

Musk has grand ambitions for Neuralink, saying it would facilitate speedy surgical insertions of its chip devices to treat conditions like obesity, autism, depression and schizophrenia.

The goal of the device is to enable a “think-and-click” mechanism allowing people to use computers through their thoughts. (Getty Images/iStockphoto)

In May, the company said it had received clearance from the FDA for its first-in-human clinical trial, when it was already under federal scrutiny for its handling of animal testing.

Even if the BCI device proves to be safe for human use, it would still potentially take more than a decade for the startup to secure commercial use clearance for it, according to experts.

Source: nypost.com

Text

AI-POWERED PROSTHESES

Prostheses with artificial intelligence (AI) are advanced medical devices designed to help people with physical disabilities regain lost functions. These prostheses use AI to improve how they work in several ways:

1. Precise control: AI allows prostheses to interpret electrical signals from the body, such as those generated by the muscles or the brain, for more precise control. This can let users move the prosthesis more naturally.

2. Machine learning: Some prostheses can learn and adapt as the user wears them, allowing them to improve over time and adjust to the user's specific needs.

3. Brain-computer interface (BCI): AI prostheses can be connected to brain-computer interfaces, which let users control the prosthesis directly with their thoughts.

4. Sensory feedback: AI is also used to provide sensory feedback to users, such as the sensation of touching or grasping objects.

5. Personalization: AI allows prostheses to be tailored to each user's individual needs and preferences, improving comfort and functionality.

These AI prostheses are under continuous development and are helping to improve the quality of life of many people with physical disabilities by giving them greater mobility and autonomy.

AI prostheses incorporate advanced technology to improve the functionality and adaptability of the prosthesis. AI lets a prosthesis adapt dynamically to the user's needs, learning from movements and usage patterns to offer a more natural and comfortable experience. These prostheses can automatically adjust to different activities, such as walking, running, or grasping objects.

AI in prostheses can involve detecting electromyographic (EMG) signals from the residual muscle to control the prosthesis's movements more precisely. It can also involve integrating sensors and advanced algorithms to improve coordination and balance.
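The EMG-based control described above can be sketched as a two-step pipeline: turn raw muscle signals into an activation envelope, then map the envelope to a grip command. This is a minimal illustration under assumed values; the sampling rate, 100 ms window, threshold, function names and the open/close rule are hypothetical, and real myoelectric prostheses often add band-pass filtering, multiple electrode sites and, increasingly, trained pattern-recognition models instead of a single threshold.

```python
import numpy as np

def emg_envelope(emg, fs, window_s=0.1):
    """Full-wave rectify raw EMG and smooth it with a moving average."""
    emg = np.asarray(emg, dtype=float)
    rectified = np.abs(emg - emg.mean())     # remove offset, then rectify
    n = max(1, int(round(window_s * fs)))    # window length in samples
    kernel = np.ones(n) / n
    return np.convolve(rectified, kernel, mode="same")

def grip_commands(envelope, threshold):
    """Hypothetical on/off rule: above threshold -> "close", else "open"."""
    return np.where(envelope > threshold, "close", "open")

# Synthetic signal at an assumed 1 kHz: 1 s of rest, then 1 s of contraction.
fs = 1000
rng = np.random.default_rng(3)
emg = np.concatenate([rng.normal(0, 0.01, fs), rng.normal(0, 0.5, fs)])
commands = grip_commands(emg_envelope(emg, fs), threshold=0.1)
print(commands[500], commands[1500])
```

During the resting second the envelope stays far below the threshold, so the hand stays open; once the simulated contraction starts, the envelope rises and the command switches to close.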

Text

Cotton Market Size to Reach USD 51.60 Billion by 2030, Driven by Sustainable Farming and Traceability Demands

The global cotton market size is valued at USD 44.30 billion in 2025 and is projected to reach USD 51.60 billion by 2030, growing at a CAGR of 3.1% during the forecast period. This growth is underpinned by sustainability mandates, precision farming adoption, and increasing traceability regulations reshaping global supply chains. While the size of the cotton market continues to expand steadily, rising demand from major textile hubs such as Bangladesh and Vietnam, alongside Better Cotton Initiative (BCI) sourcing requirements, is changing the competitive landscape for growers and merchants worldwide.
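The growth rate quoted above can be checked from the start and end values with the standard compound-growth formula, CAGR = (end / start)^(1 / years) - 1. A minimal check, assuming the five-year window runs from 2025 to 2030:

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

# Market size in USD billions, from the figures quoted above.
rate = cagr(44.30, 51.60, 2030 - 2025)
print(f"Implied CAGR: {rate:.2%}")  # prints "Implied CAGR: 3.10%"
```

The implied rate of about 3.1% per year matches the stated forecast.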

Get More Insights: https://www.mordorintelligence.com/industry-reports/cotton-market

Cotton Market Key Trends

Persistent Demand from Textile-Importing Hubs

Major textile-importing countries such as Bangladesh and Vietnam continue to drive robust demand for high-grade cotton lint. Bangladesh is poised to become the world’s largest lint importer by 2025, reaching an estimated 8 million bales as mills seek uniform fiber characteristics for premium yarn production. Meanwhile, West African lint is gaining market share in Bangladesh, accounting for 39% of imports in fiscal 2022–23 due to its high micronaire values and assured pesticide-residue compliance.

Shift Toward Better Cotton Initiative Sourcing

BCI-certified cotton is becoming a procurement requirement rather than a preference, with over 2.13 million farmers across 22 countries adopting its protocols. Global retailers increased BCI lint purchases by 40% in the latest reporting cycle, driven by sustainability commitments from brands such as H&M and Target to source 100% verified cotton by 2030. This shift enhances the market position of compliant growers and merchants who can provide certified fiber under mass-balance chain-of-custody models.

Growth in Recycled Cotton Blends

While recycled cotton accounts for just 1% of total supply, demand is outstripping available feedstock due to sustainability targets set by global apparel majors. For example, Patagonia integrates 28% recycled cotton by weight in its fabrics. Mechanical recycling remains dominant, though new investments in chemical recycling, like Circ’s USD 500 million French plant, are expected to improve fiber yield and strength. Despite recycling growth, virgin cotton remains essential to maintain yarn performance, supporting a balanced market outlook.

Climate-Smart Irrigation and AI Forecasting Adoption

Climate-smart irrigation is increasingly adopted in North America and Australia, as sensor-based systems and AI yield-forecasting tools improve water-use efficiency and crop profitability. For instance, Arizona growers using deficit irrigation strategies achieved 10% yield gains while saving significant water resources, enhancing net margins despite rising input costs.

Cotton Market Segmentation

By Geography

North America holds the largest share of the cotton market, accounting for 38.9% of global value in 2024. Advanced precision farming tools, digitised classing, and robust traceability programs ensure consistent quality and compliance, strengthening North American lint’s position as a low-risk sourcing option for global brands.

Africa is projected to grow at a CAGR of 5.60% through 2030, driven by government subsidy programs supporting seed, fertilizer, and pest-control inputs. West African lint is expanding its presence in Asian markets, while initiatives such as Benin’s Special Economic Zone are boosting local yarn-spinning capacity.

Asia-Pacific remains a significant contributor to cotton output, with India focusing on stacked-trait seed development to combat pest resistance and Pakistan adopting climate-smart irrigation. Australia continues to maintain stable exports through IoT-enabled deficit-irrigation technologies. China primarily consumes its domestic cotton while managing import quotas to stabilize prices for its textile sector.

By Application

The cotton market remains dominated by textile manufacturing, accounting for the largest share of cotton utilization globally. However, segments such as recycled cotton blends are rising in importance as sustainability pressures increase across the apparel industry.

Cotton Market Drivers Impacting Growth

Persistent demand for high-grade lint in textile hubs such as Bangladesh and Vietnam is supporting market stability.

Better Cotton Initiative adoption is increasing, shifting sourcing preferences towards certified sustainable producers.

Growing use of recycled cotton blends is enhancing premium pricing opportunities while retaining virgin cotton’s essential role.

Climate-smart irrigation investments are improving yield resilience amid tightening water constraints in developed markets.

AI-enabled yield forecasting tools are reducing merchant risks and improving grower profitability.

Cotton Market Restraints Impacting Growth

Pink-bollworm resistance to GM traits is rising in countries like India and China, prompting reliance on integrated pest management.

Traceability and forced-labor regulations, such as the US Uyghur Forced Labor Prevention Act, are increasing compliance costs and slowing trade flows.

Competition from cellulosic fibers remains a structural headwind, particularly in fast-fashion segments prioritising cost and sustainability.

Volatile ocean-freight rates continue to impact merchant margins across global trade routes.

Cotton Market Key Players and Recent Developments

Recent industry updates highlight strategic shifts in major producing countries:

In June 2025, the Cotton Corporation of India procured 100 lakh bales at Minimum Support Prices, supporting farmers amid weak domestic demand.

In April 2025, India and Australia signed an MoU to boost bilateral cotton trade, enabling duty-free import of 51,000 metric tons annually into India.

In January 2025, Brazil exported 3.7 million metric tons of cotton for the 2024 season, a 16.64% increase year-on-year, cementing its role as the world’s leading exporter.

Major market players and institutions continue to focus on sustainability, digital traceability, and irrigation efficiency to maintain competitive advantage amid shifting regulatory landscapes and consumer expectations.

Get More Insights on this report: https://www.mordorintelligence.com/ja/industry-reports/cotton-market

Conclusion

The global cotton market is set to grow steadily to USD 51.60 billion by 2030, supported by robust demand from textile-importing nations, adoption of sustainability standards, and integration of climate-smart farming practices. While market size expansion is moderate, higher-value segments such as BCI-certified and recycled cotton blends are capturing a larger share within the market. Key producing regions are adapting to challenges such as pest resistance and water scarcity through technology investments and diversified sourcing strategies. As traceability regulations tighten globally, growers and merchants with transparent, compliant supply chains will be best positioned to capture future market growth and ensure long-term resilience.


Future of Brain-Computer Interface Market: Top Innovations and Investment Insights

The global Brain-Computer Interface (BCI) market was valued at USD 54.29 billion in 2024 and is projected to expand at a Compound Annual Growth Rate (CAGR) of 10.98% during the forecast period, according to a new comprehensive market analysis. The market is witnessing unprecedented growth driven by advancements in neuroscience, increasing prevalence of neurological disorders, and expanding applications across various industries.

Request Sample Report PDF (including TOC, Graphs & Tables): https://www.statsandresearch.com/request-sample/40646-global-brain-computer-interface-bci-market

Market Segmentation Analysis

By Type

Invasive BCIs

Partially Invasive BCIs

Non-invasive BCIs

The non-invasive BCI segment currently holds the largest market share due to consumer preference for non-surgical solutions and lower associated risks. However, invasive BCIs are expected to witness the fastest growth rate owing to their superior signal quality and precision in medical applications.

By Component

Hardware

Software

Services

Hardware components dominate the market, with electrodes and sensors being the primary revenue generators. The software segment is anticipated to grow significantly as artificial intelligence and machine learning algorithms enhance BCI functionality and interpretation capabilities.

By Technology

Electroencephalography (EEG)

Magnetoencephalography (MEG)

Functional Magnetic Resonance Imaging (fMRI)

Near-Infrared Spectroscopy (NIRS)

Electrocorticography (ECoG)

Others

EEG-based BCIs continue to lead the market due to their non-invasiveness, portability, and cost-effectiveness. Meanwhile, emerging technologies like NIRS are gaining traction in research institutions and specialized medical facilities.

By Connectivity

Wired

Wireless

Wireless BCI systems are experiencing rapid adoption due to enhanced mobility, comfort, and usability. This trend is particularly prominent in consumer applications and is expected to influence the Canada Consumer Healthcare Market significantly.

By Application

Healthcare

Military & Defense

Gaming & Entertainment

Communication & Control

Smart Home Control

Others

Healthcare applications currently dominate the market, with significant investments in treating neurological disorders and rehabilitation therapies. The gaming and entertainment segment is projected to grow substantially, creating new opportunities that may eventually impact the Canada Consumer Healthcare Market.

By End-User

Medical Institutions & Hospitals

Research Institutions

Government Organizations

Military & Defense

Technology Companies

Others

Medical institutions remain the primary end-users of BCI technology. However, technology companies are increasingly investing in BCI research and development, expanding the potential reach into various consumer markets, including the Canada Consumer Healthcare Market.

Get up to 30%-40% Discount: https://www.statsandresearch.com/check-discount/40646-global-brain-computer-interface-bci-market

Regional Market Insights

North America (currently leads the market with the highest adoption rates)

Europe

Asia-Pacific (fastest-growing region)

Latin America

Middle East & Africa

North America continues to dominate the global BCI market, accounting for approximately 40% of the market share. This dominance is attributed to substantial investments in healthcare infrastructure, presence of major market players, and advanced research initiatives. The Canada Consumer Healthcare Market, in particular, is showing promising growth in BCI adoption for both medical and consumer applications.

Asia-Pacific is emerging as a high-potential region with increasing healthcare expenditure, growing awareness about neurological disorders, and rising investments in healthcare technology. Several countries in this region are incorporating BCI solutions into their healthcare systems, following trends observed in the Canada Consumer Healthcare Market.

Market Drivers and Challenges

Key Drivers

Rising prevalence of neurological disorders

Increasing research funding for BCI technology

Growing demand for enhanced healthcare solutions

Expanding applications beyond medical use

Technological advancements in AI and machine learning

Major Challenges

High development and implementation costs

Ethical concerns regarding brain data privacy

Regulatory hurdles

Technical limitations in current BCI systems

Limited consumer awareness and acceptance

Purchase Exclusive Report: https://www.statsandresearch.com/enquire-before/40646-global-brain-computer-interface-bci-market

Future Outlook

The global BCI market is poised for substantial growth as technological advancements continue to enhance the capabilities and applications of these interfaces. Emerging trends include miniaturization of devices, improved signal processing algorithms, and integration with other technologies such as virtual reality and artificial intelligence.

Industry experts anticipate that collaborations between technology companies, medical institutions, and research organizations will accelerate innovation in this field. Additionally, the growing focus on personalized medicine and rehabilitation is expected to create new opportunities for BCI applications in various markets, including the Canada Consumer Healthcare Market.

As BCI technology becomes more accessible and affordable, its adoption is expected to extend beyond traditional medical applications into everyday consumer use, potentially transforming how humans interact with digital systems and environments, similar to trends observed in the Canada Consumer Healthcare Market.

Our Services:

On-Demand Reports: https://www.statsandresearch.com/on-demand-reports

Subscription Plans: https://www.statsandresearch.com/subscription-plans

Consulting Services: https://www.statsandresearch.com/consulting-services

ESG Solutions: https://www.statsandresearch.com/esg-solutions

Contact Us:

Stats and Research

Email: [email protected]

Phone: +91 8530698844

Website: https://www.statsandresearch.com


Mind-Control Tech: Are We Ready?

Neurotech Interfaces: Are We Ready to Control Devices with Our Minds?

Imagine typing a message, controlling your car, or playing a game - all without lifting a finger. That’s no longer the stuff of science fiction. It’s the direction technology is heading, thanks to the emergence of brain-computer interfaces. These neural systems are promising a future where direct communication between the mind and machines could be as natural as touchscreens are today. But are we ready for that level of integration?

What Is a Brain-Computer Interface?

A brain-computer interface, or BCI, is a system that connects the human brain to an external device. It picks up electrical signals from brain activity, interprets them, and converts them into commands. These signals can then be used to operate machines, apps, or prosthetics without physical movement. Think of it as a bridge between the brain’s intent and the digital world - a connection made possible through sensors, algorithms, and years of neurological research.
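As a rough illustration of that signal-to-command chain, the Python sketch below slides a window over already-digitized samples, reduces each window to a single feature (root-mean-square amplitude), and maps that feature to a command using a fixed threshold. Everything here is hypothetical. The `IDLE`/`SELECT` command names, the threshold, and the choice of RMS as the feature are illustrative assumptions, not how any particular BCI works. Real systems use richer features and trained decoders.

```python
import math
from typing import Iterator, List

# Hypothetical command vocabulary; a real BCI defines its own.
IDLE = "IDLE"
SELECT = "SELECT"

def windows(samples: List[float], size: int, step: int) -> Iterator[List[float]]:
    """Acquisition stand-in: yield windows of `size` samples, advancing by `step`."""
    for start in range(0, len(samples) - size + 1, step):
        yield samples[start:start + size]

def rms(window: List[float]) -> float:
    """Feature extraction: root-mean-square amplitude of one window."""
    return math.sqrt(sum(x * x for x in window) / len(window))

def classify(feature: float, threshold: float) -> str:
    """Interpretation: map the feature to a command with a fixed threshold."""
    return SELECT if feature > threshold else IDLE

def run_pipeline(samples: List[float], size: int, step: int, threshold: float) -> List[str]:
    """Signal -> feature -> command, producing one command per window."""
    return [classify(rms(w), threshold) for w in windows(samples, size, step)]
```

For example, `run_pipeline([0.1] * 8 + [2.0] * 8, size=4, step=4, threshold=1.0)` returns `["IDLE", "IDLE", "SELECT", "SELECT"]`. The quiet first half stays idle, and the high-amplitude second half triggers selections.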

From Labs to Lives: How BCIs Are Used Today

While the idea sounds futuristic, brain-computer interfaces are already active in the medical world. They’ve been used to help paralyzed individuals move robotic limbs, control cursors, or speak through synthesized voices. Companies like Blackrock Neurotech are at the forefront, developing high-fidelity brain implants used in clinical trials. Their work with motor restoration and cognitive tracking shows how BCI technology is already changing lives.

Then there’s Neuralink, the venture backed by Elon Musk. The company has held public demonstrations in which monkeys implanted with its neural device played video games using only their minds. In 2024, Neuralink moved into its first human trials, aiming to make BCI technology both safe and commercially viable. These early cases show the technology is already real.

Gaming, Work, and Beyond: The Consumer BCI Vision

Beyond medical applications, companies are eyeing consumer use. Imagine logging into your computer, playing immersive VR games, or writing documents - all powered by thought. Brain-computer interfaces could radically change how people interact with digital environments. This would allow faster reactions in gameplay, deeper immersion in simulations, and even better focus in productivity tasks. The idea of working or learning with your mind, instead of just your hands, opens up a whole new design philosophy.

The Ethics of Mind Access

The more connected we become, the more questions arise. What happens when brain signals are stored? Who gets access to this data? As interfaces become more sensitive, the line between control and surveillance starts to blur. Thought data, unlike browsing history, is deeply personal. Once exposed, it can’t be changed. These concerns go far beyond cybersecurity — they enter the realm of personal agency and mental privacy.

Who Owns Your Thoughts?

If a BCI captures and stores neural patterns, that data becomes valuable. But it also becomes vulnerable. Could your thoughts be used against you? Could companies monetize your focus or mood patterns? Could governments mandate brain scans for security? These questions are no longer theoretical. The speed of innovation demands answers about ownership, rights, and accountability. Before any BCI becomes mainstream, these gaps in ethical design must be addressed.

Qwegle’s Watch on Future Interfaces

At Qwegle, we keep a close eye on how these frontier technologies impact business design and user behavior. Our experts help brands understand how new interfaces will shape digital experiences. From future-proofing content platforms to exploring new modes of interaction, we support businesses navigating shifts in data control. For us, tech is not just about novelty. It’s about preparation.

Roadblocks Ahead: What’s Slowing Adoption?

Despite the excitement, brain-computer interfaces face serious limitations. Surgical implants are invasive and expensive. Signal clarity often requires lab-grade equipment. There are still few universal standards for hardware or data formats. Regulations are catching up slowly, and widespread trust is still lacking. Until brain interfaces are proven safe, accessible, and secure, mass adoption remains unlikely - but not out of reach.

Where Are We Headed?

Over the next five to ten years, we’ll likely see non-invasive versions of BCIs, starting with wearables and accessories, enter the mainstream. Neuralink and Blackrock Neurotech are expected to release more trial data, and regulatory agencies will be pressured to keep pace. Once the technology becomes simpler and safer, it could change everything from education to smart homes. The journey to mind-controlled tech has already begun - now it’s a matter of how far and fast we’re willing to go.


EXG Synapse — DIY Neuroscience Kit | HCI/BCI & Robotics for Beginners

Neuphony Synapse offers comprehensive biopotential signal compatibility, covering ECG, EEG, EOG, and EMG, which makes it a versatile solution for a wide range of physiological monitoring applications. It pairs with any MCU that has an ADC, so it works across platforms such as Arduino, ESP32, STM32, and more. The bandpass filter can optionally be bypassed, allowing you to tailor the signal output for different kinds of analysis.

Technical Specifications:

Input Voltage: 3.3V

Input Impedance: 20⁹ Ω

Compatible Hardware: Any ADC input

Biopotentials: ECG, EMG, EOG, or EEG (configurable bandpass) | By default configured for a bandwidth of 1.6 Hz to 47 Hz and a gain of 50

No. of channels: 1

Electrodes: 3

Dimensions: 30.0 x 33.0 mm

Open Source: Hardware

Very compact, space-efficient EXG Synapse
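Because the board outputs an analog voltage that a microcontroller's ADC reads, converting raw readings back to electrode-side microvolts is a short calculation. The sketch below is an estimate under stated assumptions rather than Neuphony's own code. It assumes a 12-bit ADC (the ESP32's default resolution) spanning 0 to 3.3 V, the stated default gain of 50, and an output biased at mid-supply. The mid-supply bias is a guess, so check the schematic. The function names are hypothetical.

```python
from typing import Optional

def counts_to_input_uv(counts: int, bits: int = 12, vref: float = 3.3,
                       gain: float = 50.0, offset_v: Optional[float] = None) -> float:
    """Convert a raw ADC reading into the estimated electrode-side voltage in microvolts.

    Assumptions: a `bits`-bit ADC spanning 0..vref, amplifier gain `gain`,
    and an output biased at `offset_v` (defaults to mid-supply, vref / 2).
    """
    full_scale = (1 << bits) - 1            # e.g. 4095 counts for a 12-bit ADC
    if not 0 <= counts <= full_scale:
        raise ValueError(f"counts must be in [0, {full_scale}]")
    if offset_v is None:
        offset_v = vref / 2                 # hypothetical mid-rail bias; check the schematic
    v_out = counts / full_scale * vref      # voltage at the ADC pin
    return (v_out - offset_v) / gain * 1e6  # undo the gain, convert V -> uV

def lsb_size_uv(bits: int = 12, vref: float = 3.3, gain: float = 50.0) -> float:
    """Input-referred size of one ADC step in microvolts."""
    return vref / ((1 << bits) - 1) / gain * 1e6
```

Under these assumptions, one ADC step corresponds to roughly 16 µV at the electrodes (`lsb_size_uv()`). The effective resolution therefore depends heavily on the ADC's bit depth and the gain setting.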

What’s Inside the Kit?

We offer three packages: Explorer Edition, Innovator Bundle, and Pioneer Pro Kit. Depending on the package you purchase, you’ll get the following components for your DIY Neuroscience Kit.

EXG Synapse PCB

Medical EXG Sensors

Wet Wipes

Nuprep Gel

Snap Cable

Head Strap

Jumper Cable

Straight Pin Header

Angled Pin Header

Resistors (1MR, 1.5MR, 1.8MR, 2.1MR)

Capacitors (3nF, 0.1uF, 0.2uF, 0.5uF)

ESP32 (with Micro USB cable)

Dry Sensors
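The resistor and capacitor assortment is presumably what makes the bandpass "configurable." The board's actual filter topology is not described here, so the following is an assumption: if each corner is set by a simple first-order RC stage, the -3 dB corner frequency is f_c = 1/(2πRC). The sketch below tabulates that value for every resistor/capacitor pair in the kit list.

```python
import math
from itertools import product

# Component values from the kit list, converted to SI units (ohms, farads).
RESISTORS_OHM = {"1MR": 1.0e6, "1.5MR": 1.5e6, "1.8MR": 1.8e6, "2.1MR": 2.1e6}
CAPACITORS_F = {"3nF": 3e-9, "0.1uF": 0.1e-6, "0.2uF": 0.2e-6, "0.5uF": 0.5e-6}

def rc_corner_hz(r_ohm: float, c_farad: float) -> float:
    """-3 dB corner frequency of a first-order RC filter: f_c = 1 / (2*pi*R*C)."""
    return 1.0 / (2.0 * math.pi * r_ohm * c_farad)

def corner_table() -> list:
    """Every resistor/capacitor pairing from the kit with its corner frequency in Hz."""
    return [
        (r_name, c_name, rc_corner_hz(r, c))
        for (r_name, r), (c_name, c) in product(RESISTORS_OHM.items(), CAPACITORS_F.items())
    ]

if __name__ == "__main__":
    for r_name, c_name, f_c in corner_table():
        print(f"{r_name:>6} x {c_name:>6} -> {f_c:8.2f} Hz")
```

For example, 1 MΩ with 0.1 µF gives about 1.59 Hz, which is close to the stated 1.6 Hz default lower cutoff. This is consistent with that pairing setting the high-pass corner, but it does not confirm it, so check the schematic before swapping parts.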

More info: https://neuphony.com/product/exg-synapse/


Brain-Computer Interface (BCI) Market and Human Augmentation Trends

The global Brain-Computer Interface (BCI) market is poised for significant transformation, driven by rapid technological advancements, increasing neurological disorders, and rising adoption across both healthcare and non-medical sectors. With growing interest in human-machine integration, BCI technology is no longer confined to science fiction — it is emerging as a practical solution in real-world applications from communication aids to neurogaming and beyond.

Market Overview

In 2024, the global BCI market was valued at US$ 2.44 billion. It is projected to grow at a CAGR of approximately 18.2% from 2025 to 2030, potentially reaching US$ 6.5 billion by 2030. This strong growth is underpinned by increasing investments in neural research, supportive regulatory approvals, and the commercialization of both invasive and non-invasive BCI solutions.
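These figures can be sanity-checked with the standard compound-growth formula, V_end = V_start × (1 + r)^n. The helper names below are illustrative, and the only inputs are the report's own numbers.

```python
def project(start_value: float, cagr: float, years: int) -> float:
    """Compound a value forward: V_end = V_start * (1 + r) ** n."""
    return start_value * (1.0 + cagr) ** years

def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Invert the compounding formula: r = (V_end / V_start) ** (1 / n) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0

if __name__ == "__main__":
    print(f"2030 projection: US$ {project(2.44, 0.182, 6):.2f} billion")
    print(f"Implied 2024-2030 CAGR: {implied_cagr(2.44, 6.5, 6):.1%}")
    print(f"Implied 2030-2035 CAGR: {implied_cagr(6.5, 12.0, 5):.1%}")
```

If the 18.2% rate is assumed to apply to each of the six years from the 2024 base, `project(2.44, 0.182, 6)` comes to roughly US$ 6.65 billion. That is in line with the report's "approximately US$ 6.5 billion." Run in reverse, `implied_cagr(2.44, 6.5, 6)` is about 17.7%. The same helper shows that a US$ 12 billion market in 2035 implies a slower rate of roughly 13% per year after 2030.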

Key Market Drivers

Rising Neurological Disorders and Aging Population

A growing incidence of disorders such as Parkinson’s disease, amyotrophic lateral sclerosis (ALS), and epilepsy is driving demand for assistive and restorative technologies. BCIs offer a vital communication pathway for patients with limited motor function, enabling tasks such as cursor control, speech synthesis, and robotic limb operation through brain signals alone.

Technological Innovations

Innovations in artificial intelligence (AI), deep learning, and neural decoding algorithms have significantly enhanced the accuracy and efficiency of BCIs. Advancements in sensor miniaturization, wireless data transmission, and real-time brain signal processing are making BCIs more accessible and applicable across various industries.

Expanding Applications Beyond Healthcare

Beyond clinical use, BCIs are gaining traction in gaming, virtual reality (VR), education, and smart home control. Companies like EMOTIV and NeuroSky are pioneering non-invasive BCI headsets tailored for consumer use, enabling brain-controlled gaming and immersive AR/VR experiences.


Market Segmentation

By Type of Interface

Non-invasive BCI: Dominates the market due to its safety, affordability, and commercial availability. EEG-based devices fall into this category.

Partially Invasive BCI: Placed inside the skull but outside the brain. Used in clinical research.

Invasive BCI: Implanted directly into the brain cortex. Offers high signal fidelity but faces challenges related to safety and regulatory approval.

By Application

Medical: Stroke rehabilitation, neuroprosthetics, communication aids

Gaming & Entertainment: Brain-controlled games, immersive VR experiences

Smart Environment Control: Home automation, assistive devices

Defense and Aerospace: Cognitive workload monitoring, pilot alertness systems

Education and Research: Neurofeedback training, attention measurement

By End User

Hospitals & Clinics

Academic & Research Institutions

Gaming & Entertainment Companies

Military Organizations

Individual Consumers

Regional Outlook

North America

North America holds the largest share of the BCI market, led by substantial R&D investments, favorable regulatory frameworks, and the presence of major players. The U.S. FDA has granted multiple breakthrough designations to BCI developers, accelerating their route to market.

Asia-Pacific

Asia-Pacific is anticipated to witness the fastest CAGR due to growing government support, increasing neurological disorders, and advancements in AI and neurotechnology in countries like China, Japan, and India.

Europe

Europe maintains a strong position due to ongoing neuroscience research, especially in countries like Germany, France, and the UK. EU-backed funding for brain research and neuroethics also fosters a balanced innovation landscape.

Competitive Landscape

The BCI market is characterized by both established medical device firms and innovative startups. Key players include:

Medtronic

NeuroSky

EMOTIV

g.tec medical engineering GmbH

Blackrock Neurotech

OpenBCI

Synchron Inc.

Paradromics Inc.

Neuralink Corp.

Precision Neuroscience

Recent developments include:

Synchron’s Stentrode implant, which enables wireless communication via thoughts, has been successfully tested in humans.

Neuralink’s brain chip implant entered human trials in 2024, with promising early outcomes.

Paradromics has advanced its high-bandwidth BCI system with FDA breakthrough status.

Precision Neuroscience received 510(k) clearance for a minimally invasive neural implant in 2025.

Challenges and Opportunities

Challenges

Ethical Concerns: Issues around data privacy, consent, and human enhancement pose regulatory hurdles.

Invasiveness and Risk: Invasive BCIs, though powerful, involve surgical risks and longer approval cycles.

High Costs: Development, production, and deployment of BCI systems remain capital-intensive.

Opportunities

Consumer Applications: Wearable BCIs for productivity, meditation, and gaming offer scalable opportunities.

AI Integration: Coupling BCI with generative AI could enable more intuitive and personalized brain-machine interactions.

Neurorehabilitation: BCIs combined with robotics and VR are opening new frontiers in post-stroke and spinal cord injury recovery.

Future Outlook

From restoring mobility in paralyzed individuals to enabling mind-controlled devices in daily life, the potential of BCIs is vast. As regulatory frameworks mature and technological barriers decline, the market is expected to expand rapidly into sectors previously untouched by neurotechnology.

By 2035, the BCI market could surpass US$ 12 billion, with applications embedded in consumer tech, enterprise systems, and national defense. The convergence of neuroscience, computing, and ethics will shape the trajectory of this transformative industry.

Want to know more? Get in touch now: https://www.transparencymarketresearch.com/contact-us.html


Neuphony's EEG technology captures and analyzes brain waves, offering real-time insights into cognitive states. It's designed for personalized neurofeedback, meditation, and mental health improvement, empowering users to enhance focus, relaxation, and overall brain performance through data-driven approaches.
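Neuphony does not describe its analysis pipeline here, so the following is only a generic sketch of one common neurofeedback metric. It computes the share of signal power in the alpha band (8 to 12 Hz, a conventional range) relative to 1 to 30 Hz, using a naive DFT over one window of uniformly sampled EEG. The function names and band edges are assumptions. Production code would use an FFT library and a tapering window.

```python
import cmath
import math
from typing import List, Tuple

def power_spectrum(x: List[float], fs: float) -> List[Tuple[float, float]]:
    """One-sided |DFT|^2 of a real signal as (frequency_hz, power) pairs.
    Naive O(n^2) DFT: fine for short windows, too slow for long recordings."""
    n = len(x)
    mean = sum(x) / n
    centered = [v - mean for v in x]  # remove the DC offset before analysis
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(centered[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        spectrum.append((k * fs / n, abs(acc) ** 2))
    return spectrum

def band_power(spectrum: List[Tuple[float, float]], lo_hz: float, hi_hz: float) -> float:
    """Sum of spectral power in bins whose frequency lies within [lo_hz, hi_hz]."""
    return sum(p for f, p in spectrum if lo_hz <= f <= hi_hz)

def relative_alpha(x: List[float], fs: float) -> float:
    """Alpha (8-12 Hz) power as a fraction of 1-30 Hz power; 0.0 for a flat signal."""
    spec = power_spectrum(x, fs)
    total = band_power(spec, 1.0, 30.0)
    return band_power(spec, 8.0, 12.0) / total if total > 0 else 0.0
```

With a one-second window at 128 Hz, a pure 10 Hz sine scores close to 1.0, a pure 20 Hz sine scores close to 0.0, and an equal mix of the two scores about 0.5. A feedback app could then, for example, raise a "relaxation" indicator as this ratio climbs.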

#bci eeg#neuphony#health#eeg#mental health#bci#brain health#mental wellness#neurofeedback#brain wave sensor#eeg flex cap#brainwave frequencies#neurofeedback training#brain training app#brain waves meditation#mind computer interface#computer interface


Post-Stroke Recovery: Integrating Neurorehabilitation With Technology

Recovering from a stroke is a journey that often requires extensive rehabilitation, patience, and support. In recent years, the landscape of post-stroke care has been transformed by technological innovation. From robotics to virtual reality, technology is not just supplementing neurorehabilitation—it’s reshaping it.

Understanding Neurorehabilitation

Neurorehabilitation is a specialized form of therapy aimed at helping individuals recover physical, cognitive, and emotional functions after a neurological event such as a stroke. It involves a multidisciplinary approach, often including physiotherapists, occupational therapists, speech-language pathologists, and neuropsychologists. The goal is to help patients regain independence and improve quality of life.

Traditionally, neurorehabilitation has focused on repetitive, task-specific exercises to retrain the brain. However, progress can be slow, and motivation may wane over time. This is where technology plays a crucial role by making therapy more interactive, personalized, and engaging.

The Rise of Technology in Stroke Recovery

Technological tools are increasingly being integrated into stroke rehabilitation programs to enhance outcomes. Here are some of the most promising innovations:

Robotic Therapy Devices: Robotic exoskeletons and arm rehabilitation devices are helping patients perform guided, repetitive movements with high precision. These devices support motor recovery by reinforcing neural pathways through consistent practice, often outperforming conventional therapy in terms of intensity and accuracy.

Virtual Reality (VR) and Gamification: Virtual environments allow patients to simulate real-life activities in a controlled, safe setting. VR has been shown to improve balance, coordination, and motor skills while keeping patients motivated. Gamification of therapy tasks, like reaching or walking, adds an element of fun and progress tracking that encourages consistent participation.

Telerehabilitation Platforms: With the expansion of telehealth, patients can now receive therapy remotely through secure video sessions and app-based programs. Telerehabilitation increases accessibility for those in remote or underserved areas and helps maintain continuity of care after hospital discharge.

Brain-Computer Interfaces (BCIs): Still emerging but promising, BCIs allow direct communication between the brain and external devices. In stroke rehabilitation, BCIs can help detect a patient’s intention to move and convert that into actual movement using robotic aids or electrical stimulation.

Wearable Sensors and AI Monitoring: Wearables can track patient movements, heart rate, and muscle activity in real time. The collected data is analyzed using AI to give therapists insight into progress and to help them adjust treatment plans accordingly.
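One way BCIs detect an intention to move is event-related desynchronization (ERD). During attempted or imagined movement, power in the mu band (roughly 8 to 13 Hz) over the motor cortex tends to drop relative to rest. The sketch below uses one common convention, ERD% = (A - R) / R × 100, where negative values indicate desynchronization. It assumes that mu-band power per window has already been computed upstream, and it flags an intention when the drop persists. The -30% threshold and the three-window rule are arbitrary illustrative values, not clinical parameters. The function only returns a decision and does not drive a robotic aid or stimulator.

```python
from statistics import mean
from typing import List

def erd_percent(activity_power: float, reference_power: float) -> float:
    """Relative band-power change versus rest; negative values indicate ERD."""
    if reference_power <= 0:
        raise ValueError("reference power must be positive")
    return (activity_power - reference_power) / reference_power * 100.0

def detect_intent(mu_powers: List[float], baseline: List[float],
                  threshold_pct: float = -30.0, consecutive: int = 3) -> bool:
    """Flag a movement intention when mu-band power stays at least |threshold_pct|%
    below the resting baseline for `consecutive` successive windows."""
    reference = mean(baseline)  # resting-state mu power
    run = 0
    for p in mu_powers:
        # Extend the run of "desynchronized" windows, or reset it.
        run = run + 1 if erd_percent(p, reference) <= threshold_pct else 0
        if run >= consecutive:
            return True
    return False
```

Requiring several consecutive windows trades a little latency for fewer false triggers from a single noisy window. For example, `detect_intent([9.0, 6.0, 6.0, 6.0], baseline=[10.0, 10.0, 10.0])` returns `True`, while an interrupted drop such as `[6.0, 6.0, 9.0, 6.0, 6.0]` returns `False`.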

Looking Ahead

While technology cannot replace the expertise and empathy of healthcare professionals, it offers powerful tools to augment recovery. The integration of advanced technologies into neurorehabilitation is not only improving patient outcomes but also making therapy more accessible and individualized.

For stroke survivors, these advancements represent more than just machines—they offer renewed hope and tangible steps toward regaining control over their lives. As research continues to evolve, the future of post-stroke recovery looks increasingly promising.
