#autoresponders

Text

So, uh. This is real:

This is 100% a thing that has happened.

What even is this timeline?!

I'm dying. Oh my stars.

#undertale#us politics#trump#papyrus#twitter post#fara speaks#It's an autoresponder#Papy did it for the gag

13K notes

Text

saw this tweet and immediately knew what had to be done

6K notes

Text

ZapAI Revolutionary NexusAI Technology: Send unlimited “bulk messages” across WhatsApp to millions of mobile phones with a single click.

1 note

Text

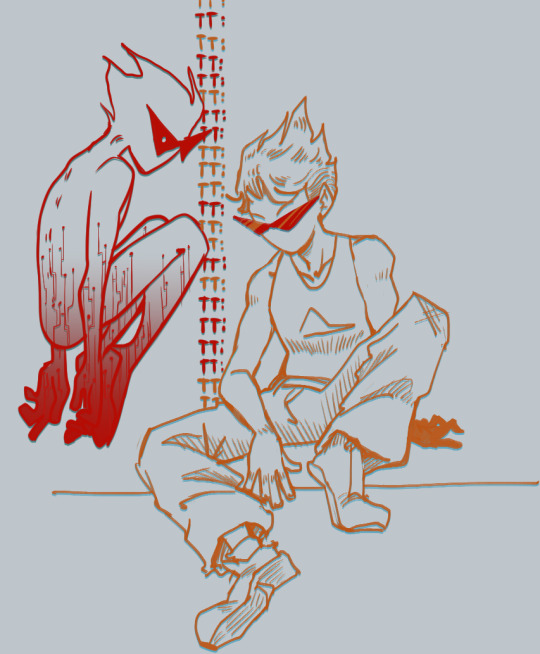

hm

#hom3stuck#homestuck#john egbert#jade harley#dirk strider#dave strider#karkat vantas#davekat#lil hal#autoresponder#inspired sketches because i went thru a bunch of artblogs and wanted to be dynamic and artsy too#also love to listen to songs and then try my best to draw something for the duration of it. this isnt quite one of those times but#hey the names matched up nicely#idk man. tonight is a sad about dirk strider day turned into sad about dirk strider night. davekats to make it go down easier#anyways ever think about how a captcha of 13 year old dirk doesnt want to die but the 16 year old version does.#cause i do . plenty.#these seem like sketches ripe to do something with but i dont feel like touching them more so. black and white up they go

4K notes

Text

Automating Your Email Campaigns: Streamlining Your Marketing Workflow

Email marketing is a powerful tool for businesses to connect with their audience and drive engagement. However, managing email campaigns manually can be time-consuming and resource-intensive. Enter email automation—the process of automating repetitive tasks in your email marketing workflow. In this article, we will explore the benefits of automating your email campaigns and how they can…

#Autoresponders#CRM systems#Email automation components#Email automation tools#Marketing automation platforms#Triggered emails#Workflows

0 notes

Text

#i love him so much that i need to remind myself every once in a while that he's also just a shape for the entire story#homestuck#💾#hal strider#lil hal#auto responder#autoresponder#i say things#1k#2k#3k#5k#10k#25k

34K notes

Text

LIL HALLL based on readysetrose's cosplay on tiktok!!

#art#digital art#digital fanart#fanart#homestuck#homestuck fanart#artists on tumblr#homestuck artist#homestuck art#lil hal#lil hal fanart#hal strider#autoresponder#dirk strider#dirk strider fanart

948 notes

Text

screw it. im posting this too

#autoresponder#alter ego#hs#homestuck#danganronpa#danganronpa thh#can u tell im on a chihiro zine project rn#lovisas art#why do i keep posting at like 9 am? its bc im bored at work and want attention#lil hal

1K notes

Text

Honestly I'm pretty tired of supporting nostalgebraist-autoresponder. Going to wind down the project some time before the end of this year.

Posting this mainly to get the idea out there, I guess.

This project has taken an immense amount of effort from me over the years, and still does, even when it's just in maintenance mode.

Today some mysterious system update (or something) made the model no longer fit on the GPU I normally use for it, despite all the same code and settings on my end.

This exact kind of thing happened once before this year, and I eventually figured it out, but I haven't figured this one out yet. This problem consumed several hours of what was meant to be a relaxing Sunday. Based on past experience, getting to the bottom of the issue would take many more hours.

My options in the short term are to

A. spend (even) more money per unit time, by renting a more powerful GPU to do the same damn thing I know the less powerful one can do (it was doing it this morning!), or

B. silently reduce the context window length by a large amount (and thus the "smartness" of the output, to some degree) to allow the model to fit on the old GPU.

Things like this happen all the time, behind the scenes.

I don't want to be doing this for another year, much less several years. I don't want to be doing it at all.

----

In 2019 and 2020, it was fun to make a GPT-2 autoresponder bot.

[EDIT: I've seen several people misread the previous line and infer that nostalgebraist-autoresponder is still using GPT-2. She isn't, and hasn't been for a long time. Her latest model is a finetuned LLaMA-13B.]

Hardly anyone else was doing anything like it. I wasn't the most qualified person in the world to do it, and I didn't do the best possible job, but who cares? I learned a lot, and the really competent tech bros of 2019 were off doing something else.

And it was fun to watch the bot "pretend to be me" while interacting (mostly) with my actual group of tumblr mutuals.

In 2023, everyone and their grandmother is making some kind of "gen AI" app. They are helped along by a dizzying array of tools, cranked out by hyper-competent tech bros with apparently infinite reserves of free time.

There are so many of these tools and demos. Every week it seems like there are a hundred more; it feels like every day I wake up and am expected to be familiar with a hundred more vaguely nostalgebraist-autoresponder-shaped things.

And every one of them is vastly better-engineered than my own hacky efforts. They build on each other, and reap the accelerating returns.

I've tended to do everything first, ahead of the curve, in my own way. This is what I like doing. Going out into unexplored wilderness, not really knowing what I'm doing, without any maps.

Later, hundreds of others will go to the same place. They'll make maps, and share them. They'll go there again and again, learning to make the expeditions systematically. They'll make an optimized industrial process of it. Meanwhile, I'll be locked in to my own cottage-industry mode of production.

Being the first to do something means you end up eventually being the worst.

----

I had a GPT chatbot in 2019, before GPT-3 existed. I don't think Huggingface Transformers existed, either. I used the primitive tools that were available at the time, and built on them in my own way. These days, it is almost trivial to do the things I did, much better, with standardized tools.

I had a denoising diffusion image generator in 2021, before DALLE-2 or Stable Diffusion or Huggingface Diffusers. I used the primitive tools that were available at the time, and built on them in my own way. These days, it is almost trivial to do the things I did, much better, with standardized tools.

Earlier this year, I was (probably) one of the first people to finetune LLaMA. I manually strapped LoRA and 8-bit quantization onto the original codebase, figuring out everything the hard way. It was fun.

Just a few months later, and your grandmother is probably running LLaMA on her toaster as we speak. My homegrown methods look hopelessly antiquated. I think everyone's doing 4-bit quantization now?

(Are they? I can't keep track anymore -- the hyper-competent tech bros are too damn fast. A few months from now the thing will probably be quantized to -1 bits, somehow. It'll be running in your phone's browser. And it'll be using RLHF, except no, it'll be using some successor to RLHF that everyone's hyping up at the time...)

"You have a GPT chatbot?" someone will ask me. "I assume you're using AutoLangGPTLayerPrompt?"

No, no, I'm not. I'm trying to debug obscure CUDA issues on a Sunday so my bot can carry on talking to a thousand strangers, every one of whom is asking it something like "PENIS PENIS PENIS."

Only I am capable of unplugging the blockage and giving the "PENIS PENIS PENIS" askers the responses they crave. ("Which is ... what, exactly?", one might justly wonder.) No one else would fully understand the nature of the bug. It is special to my own bizarre, antiquated, homegrown system.

I must have one of the longest-running GPT chatbots in existence, by now. Possibly the longest-running one?

I like doing new things. I like hacking through uncharted wilderness. The world of GPT chatbots has long since ceased to provide this kind of value to me.

I want to cede this ground to the LLaMA techbros and the prompt engineers. It is not my wilderness anymore.

I miss wilderness. Maybe I will find a new patch of it, in some new place, that no one cares about yet.

----

Even in 2023, there isn't really anything else out there quite like Frank. But there could be.

If you want to develop some sort of Frank-like thing, there has never been a better time than now. Everyone and their grandmother is doing it.

"But -- but how, exactly?"

Don't ask me. I don't know. This isn't my area anymore.

There has never been a better time to make a GPT chatbot -- for everyone except me, that is.

Ask the techbros, the prompt engineers, the grandmas running OpenChatGPT on their ironing boards. They are doing what I did, faster and easier and better, in their sleep. Ask them.

5K notes

Text

Crossover episode

#this is my first time drawing pim and charlie#i think i did alr#smiling friends#smiling friends pim#pim pimling#smiling friends charlie#charlie dompler#homestuck#dirk strider#homestuck dirk#lil hal#hal strider#autoresponder#auto responder#shitpost#crossover#comic#art#my art#fanart#digital art#i got too lazy to actually color it. shrugs

494 notes

Note

Omg wb Callie! I've got a question, how do you feel about robots?

to be honest, i’m not a fan. u_u

#homestuck#hs#calliope#calliope homestuck#lil Hal#autoresponder#dirk strider#homestuck askblog#art#muses musings

322 notes

Text

Hal cookie.

#homestuck#lil hal#cookie#autoresponder#hs hal#homestuck lil hal#i am tagging them with every alias except for ar#hal

188 notes

Text

i reaaaaally like complex robot designs so i wanted to do a fairly detailed drawing of a robot body for hal. i'm also trying to re-introduce myself to anti-aliasing

color version:

490 notes

Text

biblically accurate hal + some other hal doodles i did in paint

765 notes

Text

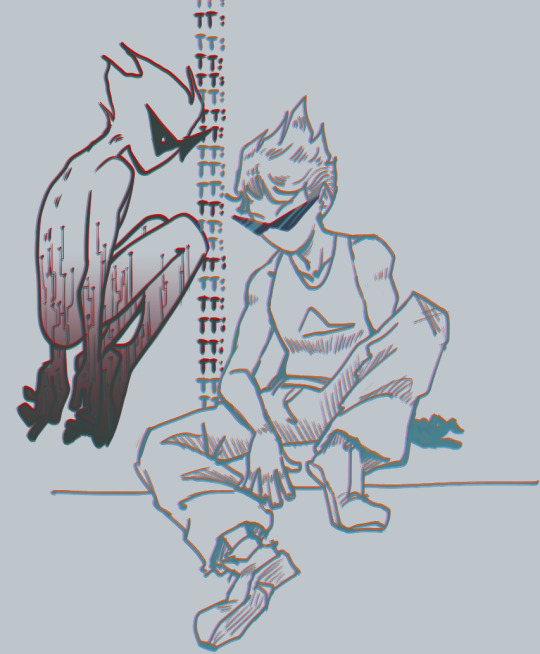

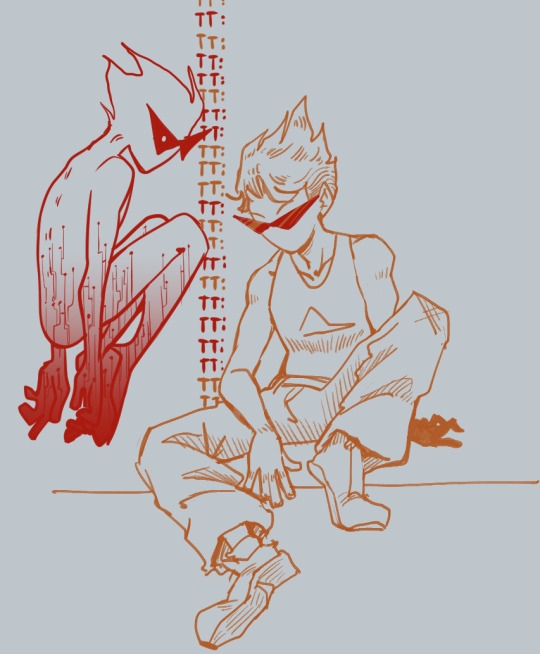

talking to yourself again?

alt + clean before i started playing with layers like theyre dolls

#homestuck#hom3stuck#dirk strider#lil hal#autoresponder#auto responder#hal strider#admin draws#fanart#id forgotten the hom3stuck tag on some recent posts kms#anyways. more dirk im getting dirk brain poisioning someone powerwash my brain before i get overloaded pleaseeeeeee#hes so fun to draw. drawing him to shirk drawing on other wips even#supposed to work on anniversary fanart. ack. agh. rhajrhgj#i keep wanting to draw but get home so tired. boooo

820 notes

Text

Crazy to think that from his perspective he went from thinking it would be funny if he cloned his own consciousness to then spending years tormented by the indignity of a cage wrought up by his own hubris.

#homestuck#homestuck fanart#lil hal#hal strider#autoresponder#I don’t like calling him that#but for tagging sake it’s technically true#I’m not too sure about this one if I’m being honest#Which is why he’s getting the 5 am treatment#You know when I first read Homestuck I thought I’d really like him#cause my sister’s favorite alpha kids were Roxy and Hal#but I ended up liking Jane and Jake best#Anyway he’s in the “I’d probably like them more if not for their fans“ pile along with Dirk and Dave#tw blood

923 notes
