#artificial intelligence in medical field

Text

born to say „no i truly fucking hate ai, it seems very much like a symptom of convenience culture, it‘s a goddamn parasite in any kind of creative space, it‘s creating a sort of learned helplessness and killing media literacy especially in younger generations, it‘s actively harming the environment with every single silly chatgpt prompt, we‘re already seeing the impact of deepfakes both as pornography and as fake news clips, who knows how bad that’s gonna get as a propaganda machine, it constantly lies and gives wrong answers so it‘s not at all adequate for research even though millions use it for that, it adopted every human bias from the hundreds of thousands of works it never got permission to use in the first place, meaning gen ai companies owe authors a shit ton of money on top of the fact that many authors don‘t want their work to be used for any of this but were simply robbed of their words anyway, i can‘t help but feel lied to and betrayed by many many parts of society that are readily embracing a technology that causes so much harm seemingly without a second thought or care for the consequences, they said it would take over the jobs no one wanted and now it‘s writing movie scripts, so yeah i think it‘s unethical to use in basically every way“

forced to say „no i‘m not really a chatgpt person“

#had to collect my thoughts somewhere#please for the love of god don‘t come into the comments/reblogs yapping about ai in the medical field or whatever#not what this post is about clearly#annika talks#anti gen ai#anti ai#anti ai writing#artificial intelligence#chatgpt

8 notes

Text

Had Cleric March’s AI not reprogrammed the Automated Field Medic,

how would Emerald have been brought back to life?

#Inspector Spacetime#Kablooey (episode)#Back from the Dead (trope)#Back from the Dead#Cleric March (character)#artificial intelligence#A.I.#had it not reprogrammed#Automated Field Medic#AFM#would Emerald have stayed dead#Emerald Tuesday (character)#not been brought back to life#would have been a very short sojourn with the Inspector

2 notes

Text

Life Updates: buildspace, an Idea, and some Concepts

Hey everyone, thanks for all the support on my prior posts about machine learning! I didn't really mention this, but I was recently accepted into S4 of buildspace, which is like a "school" for creatives. I'm doing Nights & Weekends, and it has been a lot of fun since I started! We just did our first project, which was coming up with the idea we'll be working on throughout this "season" of buildspace. This was mine:

I know you all can tell I am really passionate about helping others with the research I do in machine learning / AI. I want to turn it into a nonprofit-style business while I'm in buildspace for S4.

I have some Proof of Concepts already which you can check out on my Kaggle here:

However, I'm working on another project today that uses Logistic Regression and XGBoost models stacked together to predict heart failure mortality. I plan on doing a full walkthrough of the project to help show investors and buildspace what my goal for the business is.
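For readers new to "stacking", here is a minimal sketch of the idea, assuming scikit-learn is installed. It is not the project's actual code: it uses synthetic data from `make_classification` instead of a real heart failure dataset, and it swaps in scikit-learn's `GradientBoostingClassifier` as a stand-in for XGBoost.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic stand-in for a tabular heart failure dataset (binary label: death event).
X, y = make_classification(n_samples=300, n_features=12, n_informative=6, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Base learners: a linear model plus a gradient-boosted tree ensemble
# (GradientBoostingClassifier is used here in place of XGBoost).
base_models = [
    ("logreg", LogisticRegression(max_iter=1000)),
    ("gboost", GradientBoostingClassifier(random_state=0)),
]

# The final (meta) estimator is fit on out-of-fold predicted probabilities
# produced by the base learners via 5-fold cross-validation.
stack = StackingClassifier(
    estimators=base_models,
    final_estimator=LogisticRegression(max_iter=1000),
    stack_method="predict_proba",
    cv=5,
)
stack.fit(X_train, y_train)

test_accuracy = stack.score(X_test, y_test)
death_risk = stack.predict_proba(X_test)[:, 1]  # predicted probability of the positive class
print(f"held-out accuracy: {test_accuracy:.3f}")
```

Because the meta-learner only sees the base models' out-of-fold probabilities, it learns how much to trust each model rather than refitting the raw features.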

What are your ideas on this? Do you think I should go for it? What are your dreams if you are a software engineer yourself? I want to hear from you all!

#programming#programmer#technology#coding#huntingtons disease#buildspace#machine learning#ai#aicommunity#artificial intelligence#python programming#python projects#programming project#health#medical industry#medical field#medical research

5 notes

Text

latest generative algo scrape has me sitting here like "my work isn't good enough to warrant going all out to prevent anyone from seeing it" and "quality of my work doesn't matter, I still deserve to not have it stolen and should do whatever I can to prevent that"

hope every tech bro touting this shit as AI while breaking every law possible in the process has something horrifying happen to them every day they continue to shill it either way!!

#I am going to be that person who never calls it AI though because that's just letting them get away with more lying#and I've had people blink at me a few times while trying to parse “Generative Algorithm”#as if I've said a word no one could ever possibly know without being in the space themselves#but anyways! nothing intelligent about these systems!! they are spitting the lowest common denominator out!!#anyone selling you an AI is lyingggg we have basic ones for computing data for the medical field and little else!!#true proper artificial intelligence on the level of a thinking feeling human does not exist at all!!#do not fall for the futuristic snake oil!! it's still just battery acid in a jar!!!#I don't think I'll be protecting my AO3 stuff because well.. too late and I can't make money off fic anyways for legal reasons#and the plagiarism machine can't think up my ideas so I'm not worried (yet)#I am pissed though!! very mad!!!! this is horseshit and I wish we had laws to send these bastards to super jail already#the greatest leech on society is billion/millionaires and These Guys

0 notes

Text

10 Technologies That Are Changing Health Care

0 notes

Text

#AI in cancer detection and prognosis has revolutionized the field of oncology by improving early diagnosis#treatment accuracy#and personalized care. Key terms related to this advancement include cancer detection#AI#artificial intelligence#machine learning#deep learning#early diagnosis#cancer prognosis#predictive modeling#medical imaging#cancer treatment#personalized medicine#precision oncology#natural language processing#Youtube

0 notes

Text

Revolutionize Healthcare With Spatial Computing

Spatial computing, encompassing AR, VR, and MR, is revolutionizing healthcare by merging digital and physical realms. It enhances patient-centered care, boosts surgical precision, and transforms medical education. As this technology integrates into healthcare, understanding its potential is crucial for professionals navigating a digitally augmented future.

The Foundations of Spatial Computing in Healthcare

Spatial computing blends the physical and digital worlds through AR, VR, and MR, driving improvements across healthcare.

Augmented Reality (AR)

AR overlays digital information onto the real world, aiding surgeries and diagnostics by providing real-time data. In studies, it has reduced surgical errors and improved efficiency by up to 35%.

Virtual Reality (VR)

VR immerses users in virtual environments, facilitating training and simulation for medical procedures. VR-trained surgeons perform 29% faster and are 6 times less likely to make errors compared to traditionally trained counterparts.

Mixed Reality (MR)

MR combines elements of AR and VR, letting users interact with virtual objects in the real world, which supports collaboration in medical settings. It facilitates surgical planning and improves educational outcomes through engaged learning experiences.

Spatial computing integrates into healthcare IT systems, supported by devices like Apple Vision Pro and platforms from Microsoft and Google, enhancing user experiences. Understanding its capabilities is crucial for healthcare professionals to optimize patient care and operational efficiency as its applications become more widespread.

Enhancing Surgical Precision and Safety

Spatial computing revolutionizes surgical practices, enhancing precision, safety, and patient outcomes through AR and VR integration.

Augmented Reality in Surgical Procedures

AR enhances surgical precision by overlaying real-time, 3D images of patient anatomy, reducing invasive exploratory procedures. AR-guided surgeries decrease operation duration by up to 20% and improve surgical precision, minimizing postoperative complications.

Virtual Reality for Surgical Training

VR simulations offer hands-on training without live procedure risks, especially beneficial for neurosurgery and orthopedics. VR-trained surgeons perform procedures approximately 30% faster and have error rates reduced by up to 40% compared to traditional methods.

Mixed Reality for Collaborative Surgery

MR fosters collaboration by combining VR and AR benefits, allowing real and digital elements to coexist. It aids in complex surgeries, potentially reducing operation times and enhancing outcomes through improved teamwork and planning.

Case Study: Implementing AR in Orthopedic Surgery

AR technology in orthopedic surgery achieves 98% accuracy in implant alignment, surpassing traditional methods by 8%.

The Future of Surgical Precision

Advancements like AI-enhanced spatial computing and lighter AR glasses will refine surgical precision. Integration of spatial computing promises safer procedures, improved outcomes, and enhanced healthcare delivery.

Revolutionizing Medical Education and Training

Spatial computing, notably through VR and AR, revolutionizes medical education by offering immersive, interactive simulations, enhancing learning and retention.

Virtual Reality in Medical Training

VR offers immersive, risk-free practice for medical students, leading to a 230% improvement in surgical technique performance.

According to AxiomQ, VR significantly enhances skill acquisition in medical training.

Augmented Reality for Enhanced Learning

AR overlays digital information onto real-world objects, improving retention rates by up to 90% for complex subjects like anatomy.

Studies indicate higher satisfaction and engagement with AR training compared to traditional methods.

Mixed Reality for Collaborative Learning

MR combines VR and AR for interactive group training, enhancing collaboration efficiency by up to 50%.

Participants in cardiology training with MR applications demonstrated a 40% faster learning curve and 25% fewer errors than those using traditional methods.

Broadening Horizons in Patient Care

Spatial computing, encompassing AR, VR, and MR, revolutionizes patient care by enhancing diagnostics, patient education, and therapies. It improves outcomes through immersive experiences, as the statistics and real-life example below illustrate.

Enhanced Diagnostic Procedures

AR enhances diagnostic accuracy by overlaying digital information onto patient scans, leading to a 10% higher tumor detection rate. This accelerates diagnoses and improves treatment outcomes significantly.

Patient Education Through Virtual Reality

VR transforms patient education with immersive experiences, increasing understanding of health conditions by 30%. VR simulations show the effects of a disease in ways that people without medical training can understand.

Mixed Reality for Enhanced Therapeutic Interventions

MR customizes interactive environments for physical rehabilitation and mental health treatments, improving motor function recovery by 20% in stroke rehabilitation. Task-specific games and exercises in MR accelerate recovery rates.

Real-Life Example: Improving Chronic Pain Management

VR programs reduce chronic pain levels by 40% during sessions, decreasing reliance on pain medication. Immersive environments distract patients from pain, offering non-pharmacological pain management strategies.

The Future of Patient Care with Spatial Computing

AI advancements enable real-time adjustments to therapeutic programs, enhancing treatment effectiveness. Widespread spatial computing adoption supports remote patient monitoring and home-based care, expanding healthcare impact.

Transformative Diagnostic and Imaging Techniques

Spatial computing, encompassing AR, VR, and MR, revolutionizes diagnostic and imaging techniques in healthcare. These technologies offer unprecedented precision and interactivity, enhancing radiological imaging, detailed analysis, and real-time surgical navigation. For instance, AR improves accuracy in visualizing tumors, VR aids in preoperative planning, and MR reduces the need for secondary surgeries.

Future advancements will integrate spatial computing with AI for automated diagnostics, while lighter AR and VR hardware will facilitate broader adoption in clinical settings. This transformative approach sets a new standard in healthcare, advancing toward more personalized and effective patient care, with enhanced accuracy and reduced procedural times.

Operational Efficiencies and Future Prospects

Spatial computing, including AR, VR, and MR, significantly enhances operational efficiencies in healthcare. Integration into clinical workflows streamlines decision-making by providing real-time data and visual aids, reducing errors and speeding up routine tasks. Hospitals adopting AR for data integration report a 20% reduction in time spent on tasks like routine checks and data entry.

MR applications improve resource management by tracking equipment in real time, reducing idle time by up to 30% and boosting operational efficiency. Looking ahead, the integration of AI with spatial computing holds promise for even greater efficiencies, predicting patient flows and optimizing resource allocation. Virtual command centers utilizing VR and AR exemplify this potential, leading to a 40% improvement in response times to critical patient incidents.

Challenges and Ethical Considerations

Addressing the challenges of spatial computing in healthcare involves overcoming technical hurdles such as graphics fidelity and data accuracy, along with ensuring privacy and security compliance, particularly concerning patient data protection under regulations like HIPAA.

Ethical considerations surrounding patient consent and the psychological impacts of immersive treatments must be navigated carefully. Collaboration among technology developers, healthcare professionals, and regulatory bodies is essential to establish standards and best practices, fostering responsible adoption. Education and training for healthcare providers on the ethical and practical aspects of spatial computing will be crucial for its successful integration, ensuring transformative benefits without compromising patient privacy or well-being.

Concluding Thoughts: Envisioning the Future of Spatial Computing in Healthcare

Spatial computing is reshaping healthcare, improving surgical precision, medical training, and patient care. Despite its transformative potential, challenges such as technical limitations and ethical concerns need careful navigation for responsible integration of digital healthcare solutions.

Collaborative efforts are essential to address these challenges and unlock the full benefits of spatial computing. Advancements in AI integration, development of standards, and accessibility to underserved regions are key areas that require ongoing innovation and adaptation in the healthcare sector.

#Patient Centered Care#ai in healthcare#medical ai#healthcare technology#patient care#ai in the medical field#artificial intelligence in healthcare

0 notes

Text

my curse is that i keep falling in love with peacock shows that a) people forgot they have a subscription to, or b) keep getting cancelled- but if you DO have peacock and you want 20+ recs hit a stitchy up, yooooo

NUMBER ONE please watch The Resort. It’s about love and grief and going on a magical realism vacation in the mayan riviera and playing detective on some missing teen’s old ass pre smartphone cell phone 🤳🏼🌴

(definitely serves as a stand alone miniseries, but i’d love more)

Look at this cast and tell me you’re not like “ohh.” THE RESORT. NOW.

2!!!! WE ARE LADY PARTS

a comedy about a British punk rock band named Lady Parts, which consists entirely of Muslim women. One of whom is obsessed with Don McLean, which speaks di-fucking-rectly to teenage stitchy

threeeeee is BRILLIANT MINDS, the medical drama show i would make if you held me hostage. I would say “there are too many doctor shows already!!!” And you’d say, “make one anyway!!! I have a weapon!!” But this doctor show is Special. It’s based on the work and character of neurologist Oliver Sacks, who i’ve been fascinated by since doing the opera adaptation of The Man Who Mistook His Wife For A Hat in college (brag). It’s kinda like if House had old school Quantum Leap levels of empathy and 🏳️‍🌈

gif by @pedro-reed THIS SHOW IS LIKE A HUG. Did i MENTION mandy patinkin cameo that rocked my world??? Btw???!

shuttup i fucking loved the treasure of foggy mountain. It’s number 4. i said what i said

FIVE! Speaking of films on peacock, you know Conclave is on there right? RIGHT?! It’s the Mean Girls of pope movies. It’s everything to me, a cradle catholic who thinks canon Jesus was pretty lit, it’s the fandom I can’t gel with. And Ralph Fiennes has to care for his dead boss’s army of turtles, need i say more

Okay back to tv series… MR MERCEDES! It’s stephen king doing some hardboiled detective shit that only baaaarely steps out of reality. Like. One toe. One and a half. Shout out to all my Brendan Gleeson fuckers, i know you’re out there.

Everyone else… You might not like it, but this is what peak performance looks like.

are we on 7? We’re on 7. It’s MRS DAVIS. Betty Gilpin is a nun raised by shady Las Vegas magicians who is Hot For Jesus and on a mission to destroy Artificial Intelligence and her mommy issues. My flabbers were gasted by this perfection.

(Complete narrative btw!)

EIGHT. Do you love Stephanie Hsu??? Do you enjoy Nahnatchka Khan’s other work? Check out LAID. A sex comedy that is very preposterous and if we do not get a s2 I will be haunted forever. my Number 1 nepo baby Zosia Mamet is also here and she is not playing around

NINE is a total left field premise. Claudia O’Doherty and Craig Robinson go into business hunting exotic pythons for cash. This might be the peak hustle culture show about a Tenuous Job. I have never touched a snake in my life and i’m gripping my guts from laughing like “so tru bestie!!”

TEN is a P.S.A. Friends, i need you to know Peacock has some golden oldies. Is Little House On The Prairie your show when you’re sick on the couch? Did your dad raise you on old Quantum Leap? Have you been meaning to meet my best friend Mr. Detective Columbo!? They are HERE!

awoooo!! 11 is WOLF LIKE ME. Josh Gad is an american dad living in australia for some elusive reason… idk… anyway his daughter has a serious anxiety disorder he is carefully managing, and uhhhhh guess what his new girlfriend Isla Fisher is a werewolf. LET GIRLS BE MONSTERS.

Uhm i think I’m gonna have to stop here and reblog to add more. Too many images! 😅

80 notes

Note

What do u think is the difference between the Apothecary Diaries world and our current world's reality? Is the modern world we live in now primarily capitalistic? What do you think Jinshi and Maomao's positions would be in our world, given their circumstances? I'm imagining a scenario like Tamaki and Haruhi from Ouran Host Club. But our world is more complex and diverse in technology than the Ouran Host Club world, and given the artificial intelligence revolution, the next 5 years could be totally different.

okay, first of all, very interesting question. second of all, my brain isn't built for something like this but i will try to answer as best as i can. sorry if i don't answer it as well as you expect me to but i'll try!

i'm going to point out the obvious here and say our modern reality (esp for women) isn't as harsh as it is in the apothecary diaries' world. for one, women in their time had practically zero rights and respect (though that is still very much happening today in some places). however, at least most women irl have access to education, voting rights, vast career options, free will and free speech, and can generally live their lives independently without relying on men. at least in our world, women have laws protecting them against violence and sexual acts, and it's no longer a requirement to marry, bear children, and live for your husband; that's just one choice out of many, something the average woman in the TAD universe could never dream of experiencing.

i'd say capitalism is one thing both this fictional story and our current reality have in common. just like irl, characters (mostly commoners) in TAD are scraping by to make profit: maomao with her apothecary shop, granny with the verdigris house, quack's family with their paper business, gyokuen with his family and the WC, and we've got mentions of merchants a lot in the story. there's also a moment in the manga i remember where maomao was carting books to the palace and she planned to overcharge Jinshi (wringing him out for his money to gain extra profit. go maomao!), too bad suiren caught her lol. anyway, yeah, like IRL the commoners are doing what they can to survive, while the important and rich ones (the royalty and ministers) cozy up in the court and palace, completely indifferent to the state of small villages and townsfolk outside (the farming villages affected by taxes, women in the red light district, kidnappings, slavery, etc).

as for jinshi and maomao's positions in our world, hmm, this is purely imagination but if we follow their ages (maomao at 20 and jinshi at 21), that'd make them college students. maomao would definitely be a pharmacy student and might even go to medical school. she'd also own a flower shop 100%, or at least a botanical shop. jinshi would probably be studying economics, anything business-related, or international relations. that's as far as my imagination will take me.

and if they're going to be like tamaki x haruhi in this little modern world, then maomao might accidentally ruin one of jinshi's projects (in the name of science!) and she'd have to pay him back by working for him (idk how; maybe by being his personal medic or offering him tips on how to make business out of medicines lol). or maybe jinshi just might stumble into maomao's little flower shop by chance, and find her studying, completely disinterested in him (despite his popularity), and this throws him off, lol. either way, i'm fairly certain they'll still find each other in this world, and in many worlds.

the two of them would also be fascinated by technology (both their hypothetical degrees often heavily rely on it), but they would probably reject the notion of using AI in extremely simple, practical, or professional tasks. however, jinshi has a progressive mindset and maomao just goes with the flow. they might accept this technological advancement (as part of the world)—they wouldn't try to fight it—but they also wouldn't use it as often.

thanks for this question lol. sorry i took so long to respond, i haven't been on tumblr for 2 days oops.

#ask#thanks for the ask <3#the apothecary diaries#kusuriya no hitorigoto#knh#knh thoughts#jinshi#maomao#jinshi x maomao#jinmao

28 notes

Text

Sentient Grace ⋆.ೃ࿔*:・

Summary:

In the year 2775, humanity has improved remarkably in the medical field and has found solutions to the biggest problems, but there’s one innovation that stands out from the rest. It’s an android equipped with the latest artificial intelligence, capable of assisting patients both psychologically and physically.

This robot is known as S.O.P.H.I.A. and is now one of the most important parts of the medical field, attending hospitals, nursing homes, schools, and everywhere aid is needed. With her humanlike demeanor and ease of adapting to every patient, S.O.P.H.I.A. has become a symbol of hope for many.

But for Christopher Bahng, a patient at the Aurora Institute—a strangely secretive place—hope seems distant.

Hospitalized due to severe depression, he spends his days in a monotonous and quiet routine, occasionally having somewhat of a fun time with friends he has met there. However, his world is soon shaken by his new medical assistant, S.O.P.H.I.A.

In the seemingly quiet halls of the institute, something more than a medical relationship will grow. But how will things turn out? And what secrets does that building hide…

ﮩ٨ـﮩﮩ٨ـ♡ﮩ٨ـﮩﮩ٨ـ

Pairings: Patient!Bang Chan x Android!OC (some other skz members for the plot so Patient!Bang Chan x Patients friends!skz)

Warnings: futuristic, cybercore, dystopian, mentions of mental illnesses (and other tags that I cannot quite name rn I’ll add them afterwards)

A/N: well let me know if y’all would like me to bring this on here :) I think that the story can become interesting but I don’t know if there’s actually someone that could read this.

#christopher bang#bang chan#bangchan fluff#bangchan imagines#bangchan smut#christopher bang chan#skz fluff#skz imagines#skz smut#skz x reader#skz x oc#stray kids imagines#stray kids#stray kids fanfic#stray kids fanfiction#stray kids smut#skz stay#skz fanfic#skz scenarios#skz bang chan#skz felix#skz han#skz hyunjin#skz minho#skz changbin#skz channie#skz seungmin#skz jeongin#dystopian au#z0mbiist1ckywriting

57 notes

Note

Is the singularity something you think about a lot with respect to your medical problem? When I have been at my most suicidal the possibility that we'll soon arrive at immortality and a completely transformed society, or else I'll be put out of my misery soon anyway, was a key thing stopping me. You don't have to answer this.

No I don't think about it at all. I don't think the singularity as imagined by singularity guys will happen. At this point it's clear to me that artificial general intelligence probably is coming in my lifetime, which as I mentioned in that other post I didn't believe was at all likely until a couple years ago. I'd wager that will mean radical social upheaval of some kind, but I doubt it will match the most AI-pessimistic predictions and I feel certain it will not match the most AI-optimistic predictions. They're too simplistic—even the name, right, "the singularity", I think it's meant as a reference to a mathematical singularity, like the point where some function blows up to infinity? So they think AI will be introduced and technological progress will blow up to infinity and bam, there you go. But actually I think, well, first of all that "technological progress" is not like, a real-valued function or something, it's way more complex and path-dependent than that, and also in the real world things don't actually blow up to infinity (speculative physics phenomena aside) even when they look like they're going to, so all in all the mental model these guys are working off of is way too simplistic.

If we get superhuman AGI in my lifetime, uh, something is definitely gonna happen. But fuck if I know what it is.

More importantly, whatever it is is probably more scary and ego-dystonic to me than my current brain thing. Can't, you know, learn languages and enjoy myself and hang out with my friends if I get killed by AI.

One of the things that separates me from the rationalists is that everything I deeply care about is decidedly "human scale". Even the really ambitious things. My most ridiculous, never-gonna-happen pipe dream is to win the Fields Medal. I'm probably too old for this now, not like it was actually going to happen anyway. But like, that's a human scale thing, that's the sort of thing that motivates me. "Enshrine my values in the structure of the light cone forever" and shit? That kind of thing means nothing to me. It just seems odd and, to be totally frank, kinda indicative of mental unwellness (I know, I know. Glass houses). Uh, so the singularity doesn't do much for me. I'm just trying to do regular shit you know, I don't want to live in a horror movie (current brain situation) and I don't want to live in a sci-fi movie. I just want to do regular shit.

20 notes

Text

Also preserved in our archive (Daily updates!)

By Adam Piore

A new study from researchers at Mass General Brigham suggests racial disparities and the difficulty in diagnosing the condition may be leading to a massive undercount.

Almost one in four Americans may be suffering from long COVID, a rate more than three times higher than the most common number cited by federal officials, a team led by Boston area researchers suggests in a new scientific paper.

The peer-reviewed study, led by scientists and clinicians from Mass General Brigham, drew immediate skepticism from some long COVID researchers, who suggested their numbers were “unrealistically high.” But the study authors noted that the condition is notoriously difficult to diagnose and official counts also likely exclude populations who were hit hardest by the pandemic but face barriers in accessing healthcare.

“Long COVID is destined to be underrepresented, and patients are overlooked because it sits exactly under the health system’s blind spot,” said Hossein Estiri, head of AI Research at the Center for AI and Biomedical Informatics at Mass General Brigham and the paper’s senior author.

Though the pandemic hit hardest in communities of color where residents had high rates of preexisting conditions and many held service industry jobs that placed them at high risk of contracting the virus, the vast majority of those diagnosed with long COVID are white, non-Hispanic females who live in affluent communities and have greater access to healthcare, he said.

Moreover, many of the patients who receive a long COVID diagnosis concluded on their own that they have the condition and then persuaded their doctors to look into it, he said. As a result, the available statistics we have both underestimate the true number of patients suffering from the condition and skew it to a specific demographic.

“Not all people even know that their condition might be caused or exacerbated by COVID,” Estiri said. “So those who go and get a diagnosis represent a small proportion of the population.”

Diagnosis is complicated by the fact that long COVID can cause hundreds of different symptoms, many of which are difficult to describe or are easily dismissed, such as sleep problems, headaches or generalized pain, Estiri said. According to its formal definition, long COVID occurs after a COVID-19 infection, lasts for at least three months, affects one or more organ systems, and includes a broad range of symptoms such as crushing fatigue, pain, and a racing heart rate.

The US Centers for Disease Control and Prevention suggested that in 2022 roughly 6.9 percent of Americans had long COVID. But the algorithm developed by Estiri’s team estimated that 22.8 percent of those who’d tested positive for COVID-19 met the diagnostic criteria for long COVID in the 12 months that followed, even though the vast majority had not received an official diagnosis.

To calculate their number, Estiri’s team built a custom artificial intelligence tool to analyze data from the electronic health records of more than 295,000 patients served at four hospitals and 20 community health centers in Massachusetts. The AI program pulled out 85,000 people who had been diagnosed with COVID through June 2022, and then applied a pattern recognition algorithm to identify those that matched the criteria for long COVID in the 12 months that followed.

Some researchers questioned the paper’s conclusions. Dr. Eric Topol, author of the 2019 book “Deep Medicine: How Artificial Intelligence Can Make Healthcare Human Again,” said the medical field is still divided over precisely what constitutes long COVID, and that complicates efforts to program an accurate AI algorithm.

“Since we have difficulties with defining long Covid, using AI on electronic health records may not be a way to make the diagnosis accurately,” said Topol, who is executive vice president of Scripps Research in San Diego. “I’m uncertain about this report.”

Dr. Ziyad Al-Aly, chief of research and development at the VA St. Louis Health Care System, and an expert on long COVID, called the 22.8 percent figure unrealistically high and said the paper “grossly inflates” its prevalence.

“Their approach does not account for the fact that things happen without COVID (not everything that happens after COVID is attributable to COVID)— resulting in significant over-inflation of prevalence estimate,” he wrote via email.

Estiri said the research team took several measures to validate its AI algorithm, retroactively applying it to the charts of 800 people who had received a confirmed long COVID diagnosis from their doctor to see if it could predict the condition. The algorithm accurately diagnosed them more than three quarters of the time.

The algorithm scanned the records for patients who had a COVID diagnosis prior to July 2022, then looked for a constellation of symptoms that could not be explained by other conditions and lasted longer than two months. To refine the program, the researchers conferred with clinicians and assigned different weights to different symptoms and conditions based on how often they are associated with long COVID, so that patients with the more heavily weighted symptoms were more likely to be identified as potential sufferers.
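To make the filtering step concrete, here is a minimal Python sketch of this kind of rule-plus-weights screen. It is not the team's published algorithm: the symptom names, the weights, the 0.5 threshold and the record format are all hypothetical, chosen only for illustration. It keeps patients with a COVID diagnosis before July 2022, ignores symptoms that another condition explains or that did not last longer than two months, and adds up the weights of whatever is left.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional

# Hypothetical weights: how strongly each symptom is associated with long COVID.
SYMPTOM_WEIGHTS = {"fatigue": 0.4, "tachycardia": 0.3, "headache": 0.1, "sleep_problems": 0.1}
THRESHOLD = 0.5                  # hypothetical cut-off for flagging a record
MIN_DURATION_DAYS = 60           # "lasted longer than two months"
COVID_CUTOFF = date(2022, 7, 1)  # COVID diagnosis prior to July 2022


@dataclass
class Symptom:
    name: str
    duration_days: int
    explained_by_other_condition: bool = False


@dataclass
class Record:
    patient_id: str
    covid_diagnosis: Optional[date]
    symptoms: List[Symptom] = field(default_factory=list)


def long_covid_score(record: Record) -> float:
    """Sum the weights of persistent symptoms that no other condition explains."""
    return sum(
        SYMPTOM_WEIGHTS.get(s.name, 0.0)
        for s in record.symptoms
        if s.duration_days > MIN_DURATION_DAYS and not s.explained_by_other_condition
    )


def flag_potential_long_covid(records: List[Record]) -> List[str]:
    """Return IDs of patients with an early COVID diagnosis and a high enough score."""
    return [
        r.patient_id
        for r in records
        if r.covid_diagnosis is not None
        and r.covid_diagnosis < COVID_CUTOFF
        and long_covid_score(r) >= THRESHOLD
    ]


records = [
    Record("A", date(2021, 3, 1), [Symptom("fatigue", 120), Symptom("tachycardia", 90)]),
    Record("B", date(2021, 5, 1), [Symptom("fatigue", 120, explained_by_other_condition=True),
                                   Symptom("headache", 100)]),
    Record("C", None, [Symptom("fatigue", 200), Symptom("tachycardia", 200)]),
    Record("D", date(2022, 9, 1), [Symptom("fatigue", 200), Symptom("tachycardia", 200)]),
]
print(flag_potential_long_covid(records))  # ['A']
```

Only patient A is flagged: B's fatigue is explained by another condition, C has no COVID diagnosis, and D was diagnosed after the cut-off. Changing the weights changes who gets flagged, which is exactly why the choice of weights matters so much to the prevalence estimate.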

Now that the initial paper has been published, the team is building a new algorithm that can be trained to detect the presence of long COVID in the medical records of patients without a confirmed COVID-19 diagnosis, so that clinicians can confirm the condition and patients can get the care they need, Estiri said.

But the most exciting part of the new research, Estiri said, is its potential to facilitate follow-up research and help refine and individualize treatment plans. In the months ahead, Estiri and his co-principal investigator Shawn Murphy, chief research information officer at Mass General Brigham, plan to ask a wide variety of questions by querying the medical records in their sample. Does vaccination make a patient more or less likely to develop the condition? How about treatment with Paxlovid? Do the symptoms patients develop differ based on those factors? What are the genomic characteristics of patients who are suffering from cardiovascular symptoms as opposed to those whose symptoms are associated with lung function or those who crash after exercising? Can they identify biomarkers in the bloodstream that could be used for diagnosis?

They have already prepared studies on vaccine efficacy, the effect of age as a risk factor, and whether the risk of long COVID increases with the fourth and fifth infection, Estiri said. “We were waiting for this paper to come out,” he said. “So now we can actually go ahead with the follow-up studies. With this cohort we can do things that no other study has been able to do, and I’m hoping it can really help people.”

Study link: www.sciencedirect.com/science/article/pii/S2666634024004070

#mask up#public health#wear a mask#pandemic#covid#wear a respirator#covid 19#still coviding#coronavirus#sars cov 2


People have to stop telling me they asked chatgpt and it told them to go to the doctor. My love, there are plenty of legitimate uses of artificial intelligence in the medical field, but you must know the large language model made up a sentence that sounded correct in context and did not receive a nursing degree of any kind. You shook a magic 8 ball is what you did. Glad ur here tho, let's get u treated by a human medical professional

#google stuff and read the mayo clinic website with the rest of us. steve jobs ass#i hope nobody is like (bleeding out) i asked chatgpt and it told me NOT to go to the doctor#but i cant prove that negative lol


Clarification: Generative AI does not equal all AI

💭 "Artificial Intelligence"

AI covers machine learning, deep learning, natural language processing, and more that I'm not smart enough to know about. It can be extremely useful in many different fields and technologies. One of my information & emergency management courses described the usage of AI as being a "human centaur": part human, part machine, meaning AI can assist in all the things we already do and supplement our work by doing what we can't.

💭 Examples of AI Benefits

AI can help advance things in all sorts of fields, here are some examples:

Emergency Healthcare & Disaster Risk

Disaster Response

Crisis Resilience Management

Medical Imaging Technology

Commercial Flying

Air Traffic Control

Railroad Transportation

Ship Transportation

Geology

Water Conservation

Can AI technology be used maliciously? Yeh. That's a matter of developing ethics and working to teach people how to see red flags, just like people see red flags in already existing technology.

AI isn't evil. It's not the insane sentient shit that wants to kill us in movies. And it is not synonymous with generative AI.

💭 Generative AI

Generative AI does use these technologies, but it uses them unethically. It scrapes data from all art, all writing, all videos, all games, all audio, anything its developers give it access to, WITHOUT PERMISSION, which is basically free rein over the internet. Sometimes there are certain restrictions: generative AI engineers, who CAN choose to exclude things, will often exclude extremist sites or explicit material, usually by using blacklists.

AI can create images of real individuals without permission, including revenge porn. It can create music using someone's voice without their permission, and people can then sell that music. It can spread disinformation faster than it can be fact-checked, and create false evidence that our court systems are not ready to handle.

AI bros eat it up without question: "it makes art more accessible", "it'll make entertainment production cheaper", "it's the future, evolve!!!"

💭 AI is not similar to human thinking

When faced with the argument "a human didn't make it", the comeback is "AI learns based on already existing information, which is exactly what humans do when producing art! We ALSO learn from others and see thousands of other artworks"

Let's make something clear: generative AI isn't making anything original. It is true that human beings process all the information we come across. We observe that information, learn from it, process it, then ADD our own understanding of the world, our unique lived experiences. Through that information collection, understanding, and our own personalities, we then create new, original things.

💭 Generative AI doesn't create things: it mimics things

Take an analogy:

Consider an infant unable to talk but old enough to engage with their caregivers, somewhere between 6 and 8 months old.

Mom: a bird flaps its wings to fly!!! *makes a flapping motion with arm and hands*

Infant: *giggles and makes a flapping motion with arms and hands*

The infant does not understand what a bird is, what wings are, or the concept of flight. But she still fully mimicked the flapping of the hands and arms because her mother did it first to show her. She doesn't cognitively understand what on earth any of it means, but she was still able to do it.

In the same way, generative AI is the infant that copies what humans have done: mimicry, without understanding anything about the works it has stolen.

It's not original, it doesn't have a worldview, it doesn't understand the emotions that go into the different works it is stealing, its creations have no meaning, and it doesn't have any motivation to create things; it only does so because it was told to.

Why read a book someone couldn't even be bothered to write?

Related videos I find worth a watch

ChatGPT's Huge Problem by Kyle Hill (we don't understand how AI works)

Criticism of Shadiversity's "AI Love Letter" by DeviantRahll

AI Is Ruining the Internet by Drew Gooden

AI vs The Law by Legal Eagle (AI & US Copyright)

AI Voices by Tyler Chou (Short, flash warning)

Dead Internet Theory by Kyle Hill

-Dyslexia, not audio proofread-

#ai#anti ai#generative ai#art#writing#ai writing#wrote 95% of this prior to brain stopping sky rocketing#chatgpt#machine learning#youtube#technology#artificial intelligence#people complain about us being#luddite#but nah i dont find mimicking to be real creations#ai isnt the problem#ai is going to develop period#its going to be used period#doesn't mean we need to normalize and accept generative ai


The Trump administration’s crusade against top U.S. universities and some international students has created chaos in American academia—and an opening for countries who have long been eager to recruit top U.S. research talent.

Across the country, researchers are reeling from U.S. President Donald Trump’s sweeping effort to remake the higher education system by cutting or threatening to cut hundreds of millions of dollars of funding to top universities over so-called “woke” policies, such as initiatives to promote diversity, equity, and inclusion (DEI) and rules surrounding transgender athletes’ participation in sports.

Those pressures have only been compounded by the Trump administration’s extensive research funding cuts and immigration turmoil, fueling fears of a potential brain drain that could blunt Washington’s long-term scientific and technological ambitions.

Foreign powers see opportunity in the chaos. Eager to poach U.S.-based researchers and scientists, a growing number of world leaders are now pitching their countries as more stable—and supportive—alternatives to the United States.

Europe became the latest player to throw its hat into the ring last week, with European Commission chief Ursula von der Leyen pledging 500 million euros, or roughly $566 million, to transform Europe into “a magnet for researchers” over the next two years. She was joined by French President Emmanuel Macron, who announced a 100 million euro investment to attract new talent. His announcement comes just one month after Paris launched its “Choose France for Science” initiative, which aims to turn France into a “host country” for researchers “wishing to continue their work in Europe,” according to the French National Research Agency.

“We call on researchers worldwide to unite and join us,” Macron declared at the Sorbonne University in Paris last week. “If you love freedom, come and help us stay free.”

The French leader’s remarks included pointed jabs at the Trump administration’s policies. “Nobody could imagine a few years ago that one of the great democracies of the world would eliminate research programs on the pretext that the word ‘diversity’ appeared in its program,” Macron said. Von der Leyen, too, condemned what she called a “gigantic miscalculation,” without explicitly mentioning the United States.

Europe’s big push comes as top U.S. universities are confronting immense financial and political pressures, part of the Trump administration’s broader effort to bind hundreds of millions of dollars in federal funding to its own vision of higher education. The crackdown, which has embroiled universities such as Harvard, Columbia, and the University of Pennsylvania, has sparked widespread alarm over academic independence as well as a fierce legal battle between Harvard and the Trump administration.

A coalition of 13 U.S. universities, including research powerhouses such as the Massachusetts Institute of Technology and Princeton University, is also suing the Trump administration over its push for sharp funding cuts at the National Science Foundation (NSF), an agency that supports scientific research at academic institutions. The agency has had to cancel more than 1,000 active research grants, and the Trump administration is now mulling slashing the agency’s $9 billion budget by more than half.

The Trump administration’s dismantling of NSF sparked alarm among Democratic lawmakers, more than 100 of whom penned a letter to Trump last week to express their “deep concern” over the fate of the agency.

“The NSF has, for decades, been a cornerstone of American innovation, funding groundbreaking research that has led to advancements in medical imaging, artificial intelligence, geographic information systems, and numerous other fields,” the lawmakers said.

The lawmakers warned that the gutting of NSF could weaken Washington’s competitive edge. “In an era of intense global competition, particularly with nations like China investing heavily in science and technology, these actions risk ceding our leadership position and compromising our ability to address critical challenges,” the letter read.

It’s not just funding cuts that are complicating U.S.-based researchers’ calculus; there’s also the immigration uncertainty. In March, the Trump administration ramped up efforts to deport international students who expressed support for, or were in some way tied to, pro-Palestinian activism, citing an obscure legal provision that empowers U.S. Secretary of State Marco Rubio to deport noncitizens whom he believes pose a threat to U.S. national security. The Trump administration’s efforts have sparked a flurry of intense legal challenges.

The Trump administration had also revoked more than 1,500 student visas in a crackdown that appeared to impact students with minor legal infractions, such as traffic violations, as well as some whose cases had been dismissed. In some cases, there was not a clear reason for the revocation. Even though officials abruptly reversed course in many cases last month following fierce legal pushback, the moves have alarmed foreign-born researchers and academics.

If international students turn away from U.S. universities in growing numbers, experts warn that it would further strain universities’ bottom lines and hamper American scientific innovation.

“International students aren’t supplemental income; they’re essential scientific infrastructure for the United States,” said Chris Glass, an expert in higher education at Boston College.

Some U.S.-based researchers may already be seeking opportunities abroad. In March, the science journal Nature conducted a poll in which 75 percent of its respondents—more than 1,200 scientists—said they were considering leaving the United States, with Europe and Canada ranking among their top destinations. Out of nearly 700 postgraduate respondents, around 550 were mulling a similar route.

Europe’s pitch has been loud and clear. In March, 13 European research ministers from countries including Germany and France penned a letter to EU research commissioner Ekaterina Zaharieva, urging the bloc to “seize this historical moment” and welcome “brilliant talents from abroad who might suffer from research interference and ill-motivated and brutal funding cuts.”

More could soon come. Going forward, the continent should go bigger and bolder to fully take advantage of the Trump administration’s “monumental own goal,” Daniel B. Baer, the senior vice president for policy research at the Carnegie Endowment for International Peace, argued this week in Foreign Policy. That could include establishing a research investment fund to purchase U.S. research labs and fast-tracking a scheme that allows eligible participants temporary residency with permission to work.

“Yes, salaries are lower in Europe, but the quality of life is good, and social safety nets and accessible health care are part of the European offer,” Baer wrote. “Many people are likely to stay for the long run, becoming new Europeans who inject skills, entrepreneurialism, and diversity into the continent’s advanced democracies.”

Europe isn’t the only one making this play. Beijing has also ramped up efforts to recruit Chinese-born researchers back to the country, particularly in the realm of artificial intelligence, said Gaurav Khanna, a professor at the University of California San Diego. “They’re telling researchers: ‘This is where we want the next AI boom to be, and so come back,’” he said.

Beijing is now establishing dedicated recruitment programs to woo Chinese-born researchers who are mulling leaving the United States, the South China Morning Post reported on Thursday.

To drive that message home, Chinese state media has also seized on the confusion in Washington, with one Global Times article bearing the headline: “‘America First,’ science on the sidelines?”

“China, South Korea and Singapore are investing more in R&D [research and development] and building world-class research infrastructures. These countries may replace the US as a pole of global science and technology innovation in the future,” the article quoted Li Zheng, a research fellow at the China Institutes of Contemporary International Relations, as saying.

As U.S.-based researchers look elsewhere, the United States’ northern neighbor also appears to be welcoming them with open arms. Last month, the University Health Network (UHN) in Toronto and other foundations unveiled a 30 million Canadian dollar push (roughly $21.5 million) to recruit 100 early-career scientists from the United States and elsewhere.

“Some of the top scientists are looking for a new home right now, and we want UHN and Canada to seize this opportunity,” said Julie Quenneville, the president and CEO of the UHN Foundation, at a news conference.

Canada’s Manitoba province, too, is “rolling out the welcome mat” for U.S.-based researchers, doctors, and nurses who have been impacted by Trump’s funding cuts, Health Minister Uzoma Asagwara told the Canadian Broadcasting Corporation. It’s not just U.S.-based researchers who are turning to Ottawa, either; at least three prominent Yale professors have also left their U.S. posts for positions in Canada.

Still, for all of the uncertainty embroiling the sector, it’s not so easy to replace Washington’s research might, experts said. And with more than three years still to go in the second Trump administration, it remains unclear how exactly the landscape will change.

“At the end of the day, though, there is nothing else yet in the world like the U.S. higher education sector,” Khanna said. “It’s not the easiest thing to just lose the entirety of that advantage and that edge.”


Been a while, crocodiles. Let's talk about cad.

or, y'know...

Yep, we're doing a whistle-stop tour of AI in medical diagnosis!

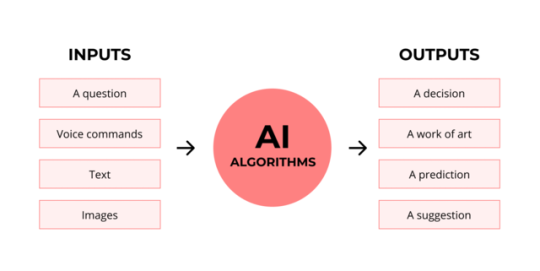

Much like programming, AI can be conceived of, in very simple terms, as...

a way of moving from inputs to a desired output.

See this very funky little diagram from skillcrush.com.

The input is what you put in. The output is what you get out.

This output will vary depending on the type of algorithm and the training that algorithm has undergone – you can put the same input into two different algorithms and get two entirely different sorts of answer.

Generative AI produces ‘new’ content, based on what it has learned from various inputs. We're talking AI Art, and Large Language Models like ChatGPT. This sort of AI is very useful in healthcare settings too, but that's a whole different post!

Analytical AI takes an input, such as a chest radiograph, subjects this input to a series of analyses, and deduces answers to specific questions about this input. For instance: is this chest radiograph normal or abnormal? And if abnormal, what is a likely pathology?

We'll be focusing on Analytical AI in this little lesson!

Other forms of Analytical AI that you might be familiar with are recommendation algorithms, which suggest items for you to buy based on your online activities, and facial recognition. In facial recognition, the input is an image of your face, and the output is the ability to tie that face to your identity. We’re not creating new content – we’re classifying and analysing the input we’ve been fed.

Many of these functions are obviously, um, problematique. But Computer-Aided Diagnosis is, potentially, a way to use this tool for good!

Right?

....Right?

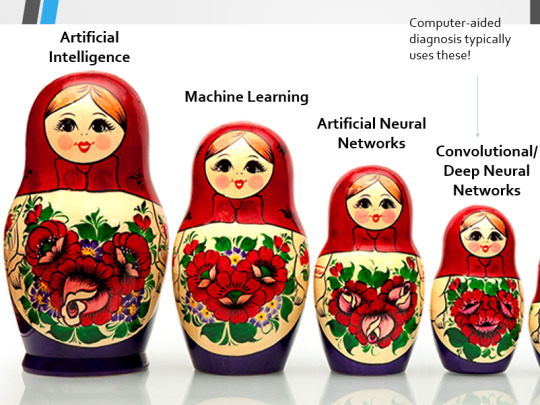

Let's dig a bit deeper! AI is a massive umbrella term that contains many smaller umbrella terms, nested together like Russian dolls. So, we can use this model to envision how these different fields fit inside one another.

AI is the term for anything to do with creating and managing machines that perform tasks which would otherwise require human intelligence. This is what differentiates AI from regular computer programming.

Machine Learning is the development of statistical algorithms which are trained on data, but which can then extrapolate this training and generalise it to previously unseen data, typically for analytical purposes. The thing I want you to pay attention to here is the date of this reference. It’s very easy to think of AI as being a ‘new’ thing, but it has been around since the Fifties, and has been talked about for much longer. The massive boom in popularity that we’re seeing today is built on the backs of decades upon decades of research.

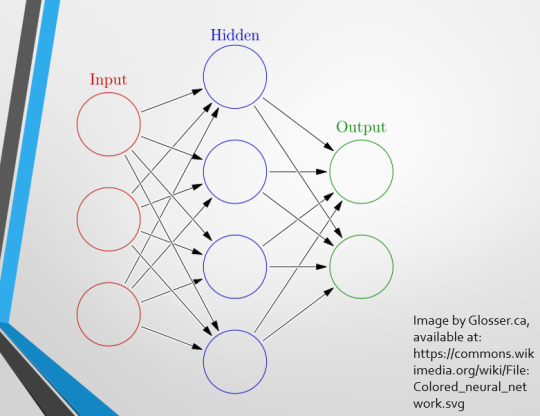
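To see what 'generalising to previously unseen data' means in practice, here's a toy learner in Python. It's a hypothetical one-feature example (the feature, the values and the labels are all made up, and real CAD models learn far more than one number): it picks the cut-off that best separates the labelled training examples, then applies that cut-off to values it has never seen.

```python
def fit_threshold(values, labels):
    """Try each training value as a cut-off; keep the one that gets the most training labels right."""
    best_threshold, best_correct = None, -1
    for t in sorted(set(values)):
        # Predict 'abnormal' (True) when the value is at or above the cut-off.
        correct = sum((v >= t) == label for v, label in zip(values, labels))
        if correct > best_correct:
            best_threshold, best_correct = t, correct
    return best_threshold


# Hypothetical feature: fraction of the lung field with no visible lung markings.
train_values = [0.02, 0.05, 0.08, 0.30, 0.35, 0.40]
train_labels = [False, False, False, True, True, True]  # True = pneumothorax

threshold = fit_threshold(train_values, train_labels)
print(threshold)          # 0.3, learned from the training data
print(0.50 >= threshold)  # True: an unseen image is called abnormal
print(0.03 >= threshold)  # False: another unseen image is called normal
```

The 'training' is the search over cut-offs; the 'generalising' is using the learned 0.3 on new images. Everything else in machine learning is, very loosely, a much bigger version of this.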

Artificial Neural Networks are loosely inspired by the structure of the human brain, where inputs are fed through one or more layers of ‘nodes’ which modify the original data until a desired output is achieved. More on this later!

Deep neural networks have two or more hidden layers of nodes, increasing the complexity of what they can derive from an initial input. Convolutional neural networks (CNNs) are usually also Deep. To become ‘convolutional’, a neural network connects each node only to a small patch of neighbouring nodes in the layer before it, and slides the same small set of weights (a ‘filter’) across the whole image, so the same feature can be detected wherever it appears. We’ll dig more into this later, but basically, this makes CNNs very adept at telling precisely where the edges of a pattern are – they're far better at pattern recognition than our feeble fleshy eyes!

This is massively useful in Computer Aided Diagnosis, as it means CNNs can quickly and accurately trace bone cortices in musculoskeletal imaging, note abnormalities in lung markings in chest radiography, and isolate very early neoplastic changes in soft tissue for mammography and MRI.
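Here's a tiny pure-Python sketch of what a single convolutional filter does. The 3x3 'vertical edge' filter is hand-picked for illustration (in a real CNN the filter weights are learned during training), and the 'image' is a made-up grid of pixel brightnesses. The same nine weights are slid over every position, and the output is only non-zero where the window straddles the boundary between dark and bright.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and return the weighted sum at each position.

    Like most CNN libraries, this computes cross-correlation: the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Made-up image: dark (0) on the left, bright (9) on the right, edge between columns 2 and 3.
image = [[0, 0, 0, 9, 9, 9] for _ in range(4)]

# Hand-picked vertical-edge filter: negative on the left, positive on the right.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

for row in convolve2d(image, kernel):
    print(row)  # [0, 27, 27, 0]: strong response only around the edge
```

Flat regions give 0 because the left and right columns cancel out; the big numbers mark exactly where the brightness changes, which is the kind of signal a CNN builds on when tracing a bone cortex or a pleural edge.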

Before I go on, I will point out that Neural Networks are NOT the only model used in Computer-Aided Diagnosis – but they ARE the most common, so we'll focus on them!

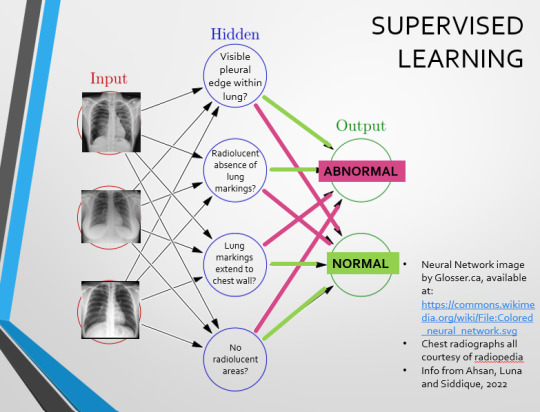

This diagram demonstrates the function of a simple Neural Network. An input is fed into one side. It is passed through a layer of ‘hidden’ modulating nodes, which in turn feed into the output. We describe the internal nodes in this algorithm as ‘hidden’ because we, outside of the algorithm, will only see the ‘input’ and the ‘output’ – which leads us onto a problem we’ll discuss later with regards to the transparency of AI in medicine.
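That diagram translates into very little code. Below is a minimal forward pass through one hidden layer, in Python. The two inputs (hypothetical yes/no answers to 'is there a pleural edge?' and 'are peripheral lung markings absent?') and every weight and bias are made up for illustration; in a real network, training is what sets them. From outside, we only ever see what goes into `forward` and what comes out.

```python
import math


def sigmoid(x):
    """Squash any number into the range 0..1."""
    return 1 / (1 + math.exp(-x))


def layer(inputs, weights, biases):
    """One layer: each node takes a weighted sum of all inputs, adds its bias, then applies a sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


def forward(inputs, hidden_weights, hidden_biases, output_weights, output_biases):
    hidden = layer(inputs, hidden_weights, hidden_biases)   # the 'hidden' nodes
    return layer(hidden, output_weights, output_biases)[0]  # one output: how 'abnormal' it looks


# Hypothetical hand-set weights (a trained network would learn these).
HIDDEN_W = [[4.0, 4.0], [-4.0, -4.0]]
HIDDEN_B = [-6.0, 2.0]
OUTPUT_W = [[6.0, -6.0]]
OUTPUT_B = [0.0]

print(forward([1, 1], HIDDEN_W, HIDDEN_B, OUTPUT_W, OUTPUT_B))  # both findings present
print(forward([0, 0], HIDDEN_W, HIDDEN_B, OUTPUT_W, OUTPUT_B))  # neither finding present
```

With both findings present the output comes out close to 1, and with neither it comes out close to 0. Even in a network this small, the hidden values don't mean anything obvious on their own, which hints at why large networks are so hard to explain.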

But for now, let’s focus on how this basic model works, with regards to Computer Aided Diagnosis. We'll start with a game of...

Spot The Pathology.

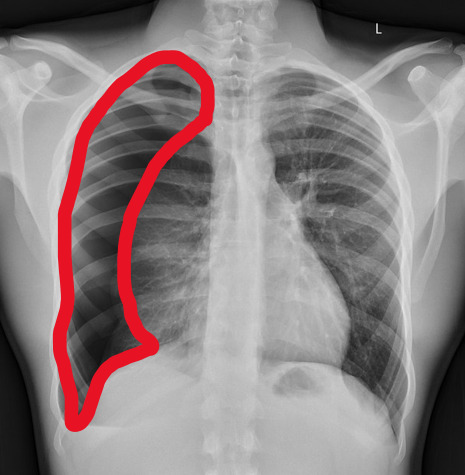

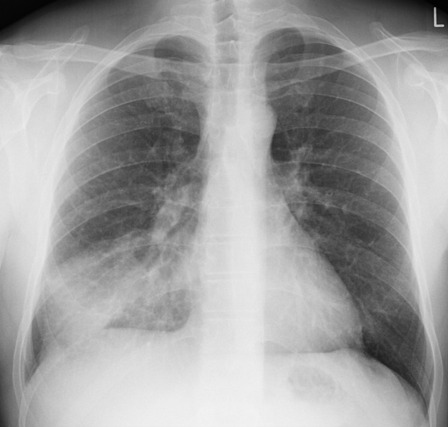

yeah, that's right. There's a WHACKING GREAT RIGHT-SIDED PNEUMOTHORAX (as outlined in red - images courtesy of radiopaedia, but edits mine)

But my question to you is: how do we know that? What process are we going through to reach that conclusion?

Personally, I compared the lungs for symmetry, which led me to note a distinct line where the tissue in the right lung had collapsed on itself. I also noted the absence of normal lung markings beyond this line, where there should be tissue but there is instead air.

In simple terms: the right lung is whiter in the midline, and black around the edges, with a clear distinction between these parts.

Let’s go back to our Neural Network. We’re at the training phase now.

So, we’re going to feed our algorithm! Homnomnom.

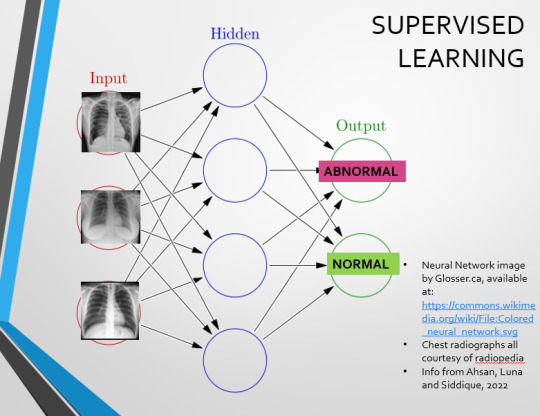

Let’s give it that image of a pneumothorax, alongside two normal chest radiographs (middle picture and bottom). The goal is to get the algorithm to accurately classify the chest radiographs we have inputted as either ‘normal’ or ‘abnormal’ depending on whether or not they demonstrate a pneumothorax.

There are two main ways we can teach this algorithm – supervised and unsupervised classification learning.

In supervised learning, we tell the neural network that the first picture is abnormal, and the second and third pictures are normal. Then we let it work out the difference, under our supervision, allowing us to steer it if it goes wrong.

Of course, if we only have three inputs, that isn’t enough for the algorithm to reach an accurate result.

You might be able to see – one of the normal chests has breasts, and another doesn't. If both ‘normal’ images had breasts, the algorithm could as easily determine that the lack of lung markings is what demonstrates a pneumothorax, as it could decide that actually, a pneumothorax is caused by not having breasts. Which, obviously, is untrue.

or is it?

....sadly I can personally confirm that having breasts does not prevent spontaneous pneumothorax, but that's another story lmao

This brings us to another big problem with AI in medicine –

If you are collecting your dataset from, say, a wealthy hospital in a suburban, majority-white neighbourhood in America, then you will have those same demographics represented within that dataset. If we build that blind spot into the neural network, it will discriminate based on it.

That’s an important thing to remember: the goal here is to create a generalisable tool for diagnosis. The algorithm will only ever be as generalisable as its dataset.

But there are plenty of huge free datasets online which have been specifically developed for training AI. What if we had hundreds of chest images, from a diverse population range, split between those which show pneumothoraxes, and those which don’t?

If we had a much larger dataset, the algorithm would be able to study the labelled ‘abnormal’ and ‘normal’ images, and come to far more accurate conclusions about what separates a pneumothorax from a normal chest in radiography. So, let’s pretend we’re the neural network, and pop in four characteristics that the algorithm might use to differentiate ‘normal’ from ‘abnormal’.

We can distinguish a pneumothorax by the appearance of a pleural edge where lung tissue has pulled away from the chest wall, and the radiolucent absence of peripheral lung markings around this area. So, let’s make those our first two nodes. Our last set of nodes are ‘do the lung markings extend to the chest wall?’ and ‘Are there no radiolucent areas?’

Now, red lines mean the answer is ‘no’ and green means the answer is ‘yes’. If the answer to the first two nodes is yes and the answer to the last two nodes is no, this is indicative of a pneumothorax – and vice versa.

Right. So, who can see the problem with this?

(image courtesy of radiopaedia)

This chest radiograph demonstrates alveolar patterns and air bronchograms within the right lung, indicative of a pneumonia. But if we fed it into our neural network...

The lung markings extend all the way to the chest wall. Therefore, this image might well be classified as ‘normal’ – a false negative.
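Here's that four-node rule written as a Python sketch, pneumonia problem included. The field names are my own hypothetical labels for the four questions, and treating every mixed set of answers as 'normal' is an assumption the rule above doesn't spell out.

```python
from dataclasses import dataclass


@dataclass
class ChestFindings:
    """Yes/no answers to the four questions (field names are hypothetical labels)."""
    pleural_edge_visible: bool
    peripheral_markings_absent: bool
    markings_reach_chest_wall: bool
    no_radiolucent_areas: bool


def classify(f: ChestFindings) -> str:
    # Pneumothorax pattern: first two answers 'yes', last two 'no'.
    if (f.pleural_edge_visible and f.peripheral_markings_absent
            and not f.markings_reach_chest_wall and not f.no_radiolucent_areas):
        return "abnormal"
    # Assumption: anything else, including mixed answers, is called 'normal'.
    return "normal"


pneumothorax = ChestFindings(True, True, False, False)
healthy = ChestFindings(False, False, True, True)
# Pneumonia: markings reach the chest wall and there is no pleural edge, so the four
# questions get the same answers as a healthy chest. None of them asks about consolidation.
pneumonia = ChestFindings(False, False, True, True)

for name, findings in [("pneumothorax", pneumothorax), ("healthy", healthy), ("pneumonia", pneumonia)]:
    print(name, "->", classify(findings))
```

The pneumonia case comes out as 'normal': a false negative. The rule isn't wrong about pneumothorax, it just has no node that can see anything else, which is why real networks need many more nodes and layers.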

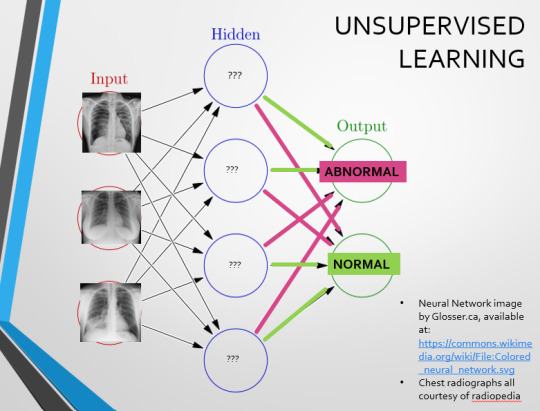

Now we start to see why Neural Networks become deep and convolutional, and can get incredibly complex. In order to accurately differentiate a ‘normal’ from an ‘abnormal’ chest, you need a lot of nodes, and layers of nodes. This is also where unsupervised learning can come in.

Originally, Supervised Learning was used on Analytical AI, and Unsupervised Learning was used on Generative AI, allowing for more creativity in picture generation, for instance. However, more and more, Unsupervised Learning is being incorporated into Analytical areas like Computer-Aided Diagnosis!

Unsupervised Learning involves feeding a neural network a large databank and giving it no information about which of the input images are ‘normal’ or ‘abnormal’. This saves massively on money and time, as no one has to go through and label the images first. It is also surprisingly very effective. The algorithm is told only to sort and classify the images into distinct categories, grouping images together and coming up with its own parameters about what separates one image from another. This sort of learning allows an algorithm to teach itself to find very small deviations from its discovered definition of ‘normal’.
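For a feel of how 'grouping without labels' works, here's k-means clustering, one common unsupervised technique (not necessarily what any given CAD system uses), sketched in pure Python on a single made-up feature per image. Nobody tells it which images are abnormal; it just finds two groups, and a human still has to decide what, if anything, each group means.

```python
def kmeans_1d(values, k=2, iterations=20):
    """Group unlabelled numbers into k clusters (k must be at least 2)."""
    # Simple deterministic start: spread the k centroids evenly from min to max.
    lo, hi = min(values), max(values)
    centroids = [lo + i * (hi - lo) / (k - 1) for i in range(k)]
    for _ in range(iterations):
        # Assign each value to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centroids[c]))
            clusters[nearest].append(v)
        # Move each centroid to the mean of its cluster (leave it put if the cluster is empty).
        centroids = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
    labels = [min(range(k), key=lambda c: abs(v - centroids[c])) for v in values]
    return centroids, labels


# Hypothetical feature per image: fraction of the lung field with no visible markings.
scores = [0.02, 0.04, 0.05, 0.03, 0.31, 0.36, 0.33]
centroids, labels = kmeans_1d(scores)
print([round(c, 3) for c in centroids])
print(labels)  # [0, 0, 0, 0, 1, 1, 1]
```

The algorithm only ever says 'group 0' and 'group 1'. Here the split happens to line up with 'normal' versus 'suspicious', but with a different feature it could just as easily split the images by something irrelevant.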

BUT this is not to say that CAD is without its issues.

Let's take a look at some of the ethical and practical considerations involved in implementing this technology within clinical practice!

(Image from Agrawal et al., 2020)

Training Data does what it says on the tin – these are the initial images you feed your algorithm. What is key here is volume, variety (with especial attention paid to minimising bias) and veracity. The training data has to be ‘real’ – you cannot mislabel images or supply non-diagnostic images that obscure pathology, or your algorithm is useless.

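Validation data is held back from training and used to evaluate the algorithm while it is being developed, so that it can be improved. The network itself improves by altering its ‘weights’, or the intensity of the connection between various nodes, as it learns from the training data; the validation set then shows whether those changes, and design choices such as how many layers of nodes to use, actually help on images the algorithm has not learned from. By altering these weights, a neural network can send an image that clearly fits our diagnostic criteria for a pneumothorax directly to the relevant output, whereas images that do not have these features must be put through another layer of nodes to rule out a different pathology.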

Finally, testing data is the data that the finished algorithm will be tested on to prove its sensitivity and specificity, before any potential clinical use.
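A minimal Python sketch of carving one dataset into the three sets. The 70/15/15 proportions and the file names are assumptions for illustration; the important part is that the three sets never overlap, so the final test really is on images the algorithm has never seen.

```python
import random


def split_dataset(items, train=0.70, validation=0.15, seed=42):
    """Shuffle once (reproducibly), then cut into non-overlapping train/validation/test sets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * validation)
    train_set = shuffled[:n_train]
    val_set = shuffled[n_train:n_train + n_val]
    test_set = shuffled[n_train + n_val:]  # whatever is left over
    return train_set, val_set, test_set


images = [f"radiograph_{i:03d}.png" for i in range(100)]  # hypothetical file names
train_set, val_set, test_set = split_dataset(images)
print(len(train_set), len(val_set), len(test_set))  # 70 15 15
```

In this scheme the weights are adjusted using `train_set`, `val_set` is used to compare versions of the model while it is being built, and `test_set` is opened once at the very end to report sensitivity and specificity.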

However, if algorithms require this much data to train, this introduces a lot of ethical questions.

Where does this data come from?

Is it ‘grey data’ (data of untraceable origin)? Is this good (protects anonymity) or bad (could have been acquired unethically)?

Could generative AI provide a workaround, in the form of producing synthetic radiographs? Or is it risky to train CAD algorithms on simulated data when the algorithms will then be used on real people?

If we are solely using CAD to make diagnoses, who holds legal responsibility for a misdiagnosis that costs lives? Is it the company that created the algorithm or the hospital employing it?

And finally – is it worth sinking so much time, money, and literal energy into AI – especially given concerns about the environment – when public opinion on AI in healthcare is mixed at best? This is a serious topic – we’re talking diagnoses making the difference between life and death. Do you trust a machine more than you trust a doctor? According to Rojahn et al., 2023, there is a strong public dislike of computer-aided diagnosis.

So, it's fair to ask...

why are we wasting so much time and money on something that our service users don't actually want?

Then we get to the other biggie.

There are also a variety of concerns to do with the sensitivity and specificity of Computer-Aided Diagnosis.

We’ve talked a little already about bias, and how training sets can inadvertently ‘poison’ the algorithm, so to speak, introducing dangerous elements that mimic biases and problems in society.

But do we even want completely accurate computer-aided diagnosis?

The name is computer-aided diagnosis, not computer-led diagnosis. As noted by Rojahn et al., the general public STRONGLY prefer diagnosis to be made by human professionals, and their desires should arguably be taken into account – as well as the fact that CAD algorithms tend to be incredibly expensive and highly specialised. For instance, you cannot put MRI images depicting CNS lesions through a chest reporting algorithm and expect coherent results – whereas a radiologist can be trained to diagnose across two or more specialties.

For this reason, there is an argument that rather than trying to maximise both sensitivity and specificity, we should focus on producing highly sensitive algorithms that pick up on any abnormality: they will output some false positives, but ideally produce NO false negatives.

(Sensitivity = a test's ability to correctly identify people who have the disease)

(Specificity = a test's ability to correctly identify people who do not have the disease)
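In formula terms, with TP, FN, TN and FP meaning true positives, false negatives, true negatives and false positives, sensitivity = TP / (TP + FN) and specificity = TN / (TN + FP). As a quick Python sketch with made-up counts:

```python
def sensitivity(tp, fn):
    """True positive rate: of the people who HAVE the disease, the share the test flags."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """True negative rate: of the people who DON'T have the disease, the share the test clears."""
    return tn / (tn + fp)


# Hypothetical counts: 100 patients with a pneumothorax, 900 without.
print(sensitivity(tp=95, fn=5))    # 0.95: five pneumothoraces missed
print(specificity(tn=810, fp=90))  # 0.9: ninety healthy patients flagged for review
```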

This means we are working towards developing algorithms that OVERESTIMATE rather than UNDERESTIMATE disease prevalence. This makes CAD a useful tool for triage rather than providing its own diagnoses – if a CAD algorithm weighted towards high sensitivity and low specificity does not pick up on any abnormalities, it’s highly unlikely that there are any.
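One way to weight an algorithm towards sensitivity is simply to lower the score at which it calls an image 'abnormal'. The Python sketch below uses eight made-up abnormality scores (a real CAD model would output something similar for each image) to show the trade-off.

```python
def confusion_counts(scores, has_disease, threshold):
    """Count true/false positives and negatives when images scoring >= threshold are flagged."""
    tp = fn = tn = fp = 0
    for score, sick in zip(scores, has_disease):
        flagged = score >= threshold
        if sick and flagged:
            tp += 1  # caught it
        elif sick:
            fn += 1  # missed it: the dangerous case for triage
        elif flagged:
            fp += 1  # false alarm: a human double-checks a normal image
        else:
            tn += 1
    return tp, fn, tn, fp


# Made-up model outputs and ground truth for eight radiographs.
scores = [0.95, 0.80, 0.40, 0.30, 0.60, 0.20, 0.10, 0.05]
has_disease = [True, True, True, False, False, False, False, False]

for threshold in (0.5, 0.25):
    tp, fn, tn, fp = confusion_counts(scores, has_disease, threshold)
    print(f"threshold {threshold}: sensitivity {tp / (tp + fn):.2f}, "
          f"specificity {tn / (tn + fp):.2f}")
```

At a 0.5 cut-off this toy model misses one of the three sick patients (sensitivity 0.67, specificity 0.80). At 0.25 it misses none (sensitivity 1.00) at the cost of an extra false alarm (specificity 0.60), so an 'all clear' from it is much more trustworthy, which is what you want from a triage tool.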

Finally, we have to question whether CAD is even all that accurate to begin with. 10 years ago, according to Lehman et al., CAD in mammography demonstrated negligible improvements to accuracy. In 1989, Sutton noted that accuracy was under 60%. Nowadays, however, AI has been reported to exceed the abilities of radiologists when detecting cancers (that’s from Guetari et al., 2023). This suggests a general upward trajectory, and AI might become a suitable alternative to traditional radiology one day. But, due to the many potential problems with this field, that day is unlikely to be soon...

That's all, folks! Have some references~

#medblr#artificial intelligence#radiography#radiology#diagnosis#medicine#studyblr#radioactiveradley#radley irradiates people#long post
