#applicable to both don and var

Explore tagged Tumblr posts

Text

Desire

#varian and the seven kingdoms#vat7k#hugo vat7k#donella vat7k#olivia vat7k#varian vat7k#my art#perspective ain't the best but we keep improving 💪#this guy oough#<33#feeling sentimental#last panel is vague on purpose#applicable to both don and var#(and hugo to olivia)

168 notes

·

View notes

Text

How Ancient DNA Unearths Corn's A-Maize-ing History

https://sciencespies.com/nature/how-ancient-dna-unearths-corns-a-maize-ing-history/

How Ancient DNA Unearths Corn's A-Maize-ing History

Smithsonian Voices National Museum of Natural History

How Ancient DNA Unearths Corn’s A-maize-ing History

December 14th, 2020, 3:00PM / BY

Erin Malsbury

Sequencing entire genomes from ancient tissues helps researchers reveal the evolutionary and domestication histories of species. (Thomas Harper, The Pennsylvania State University)

In the early 2000s, archeologists began excavating a rock shelter in the highlands of southwestern Honduras that stored thousands of maize cobs and other plant remains from up to 11,000 years ago. Scientists use these dried plants to learn about the diets, land-use and trading patterns of ancient communities.

After years of excavations, radiocarbon dating and more traditional archaeological studies, researchers are now turning to ancient DNA to provide more detail to their insights than has ever before been possible.

In a paper published today in the Proceedings of the National Academy of Sciences, scientists used DNA from 2,000-year-old corn cobs to reveal that people reintroduced improved varieties of domesticated maize into Central America from South America thousands of years ago. Archeologists knew that domesticated maize traveled south, but these genomes provide the first evidence of the trade moving both directions.

Researchers at the Smithsonian and around the world are just beginning to tap into the potential of ancient DNA. This study shows how the relatively recent ability to extract whole genomes from ancient material opens the door for new types of research questions and breathes new life into old samples, whether from fieldwork or forgotten corners of museum collections.

Cobbling together DNA

DNA, packed tightly into each of our cells, holds the code for life. The complex molecule is shaped like a twisting ladder. Each rung is made up of two complementary molecules, called a base pair. As humans, we have around three billion base pairs that make up our DNA. The order of these base pairs determines our genes, and the DNA sequence in its entirety, with all the molecules in the correct position, is called a genome. Whole genomes provide scientists with detailed data about organisms, but the process of acquiring that information is time sensitive.

“In every cell, DNA is always being bombarded with chemical and physical damage,” said lead author Logan Kistler, curator of archeobotany and acheogenomics at the Smithsonian’s National Museum of Natural History. “In live cells, it’s easily repaired. But after an organism dies, those processes that patch things up stop functioning.” As a result, DNA begins breaking down into smaller and smaller fragments until it disappears entirely. This decomposition poses the greatest challenge for scientists trying to sequence entire genomes from old or poorly-preserved tissue.

Researchers wear protective suits and work in sterile conditions in the ancient DNA lab to prevent contamination. (James DiLoreto, Smithsonian)

“You have to take these really, really small pieces of DNA — the length of the alphabet in some cases – and try to stitch them back together to make even a 1000 piece long fragment,” said Melissa Hawkins, a curator of mammals at the Smithsonian who works with ancient DNA. “It’s like trying to put a book back together by having five words at a time and trying to find where those words overlap.”

This laborious process prevented researchers from sequencing whole genomes from ancient DNA until around 2008, when a new way to sequence DNA became available. Since then, the technology and the ability to reconstruct ancient DNA sequences has grown rapidly.

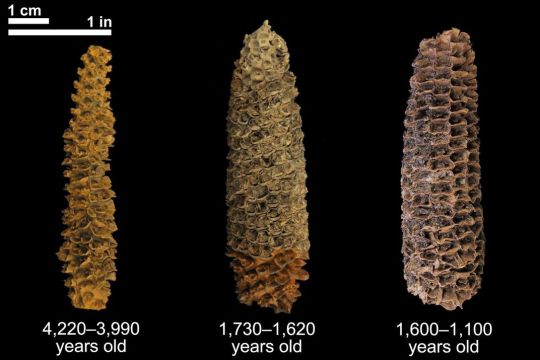

Ancient DNA still proves challenging to work with, however. Kistler and colleagues collected 30 maize cobs from the thousands in the El Gigante rock shelter in Honduras. The material ranged in age from around 2,000 to around 4,000 years old. Of the 30 cobs that the researchers tried to extract DNA from, only three of the 2,000-year-old samples provided enough to stitch together whole genomes. A few others provided shorter snippets of DNA, but most of the cobs didn’t have any usable genetic material left after thousands of years.

The second biggest problem researchers face when working with ancient DNA is contamination. “Everything living is a DNA factory,” said Kistler. When working with samples that are thousands of years old, the researchers take extra precautions to avoid mixing modern DNA into their samples. They don sterilized suits and work in an air-tight, positive-pressure lab designed specifically for working with ancient DNA.

A-maize-ing possibilities

The ability to sequence whole genomes from thousands of years ago has allowed researchers to ask questions they couldn’t think of answering using individual genes or smaller DNA fragments.

“A whole genome is comprised of several hundred ancestral genomes, so it’s sort of a time capsule of the entire population,” said Kistler. For important staple crops like maize, this means researchers can study the genes associated with domestication and determine when and how people changed it over time. And knowing what communities were doing with crops provides insight into other parts of life, such as land-use and trading.

“Whole genome sequencing of ancient materials is revolutionizing our understanding of the past,” said co-lead author Douglas Kennett from the University of California, Santa Barbara. The authors dug into the whole genome for information about how maize domestication occurred and where it spread.

The cobs from 4,000 years ago and before did not have enough genetic material left for researchers to produce genomes. (Thomas Harper, The Pennsylvania State University)

Before their results, it was widely assumed that maize was mostly flowing southward. They were surprised to learn that improved maize varieties were also reintroduced northward from South America. “We could only know this through whole genome sequencing,” said Kennett. Next, the scientists plan to pinpoint more specific dates for the movement of maize and connect its history to broader societal changes in the pre-colonial Americas.

Growing applications

The same technological advances that made Kistler and Kennett’s maize study possible have also created new uses for museum specimens. Scientists use ancient genomes to study how humans influenced plant and animal population sizes over time, species diversity and how closely related organisms are to each other. They even expect to discover new species hiding in plain sight.

“Sometimes, species are really hard to tell apart just by looking at them,” said Hawkins. “There is so much more that we don’t know.” To make extracting and sequencing DNA from older museum specimens easier, the Smithsonian is in the process of building a historic DNA lab. This space, separate from the ancient DNA lab, will allow researchers to focus on older collections with tissue quality that falls between ancient samples from archeological sites and freshly frozen material.

The ancient DNA lab at the Smithsonian takes several precautions to preserve existing DNA and prevent contamination. (James DiLoreto, Smithsonian)

“It’s really amazing that we have the opportunity to learn from samples that have already been here for 100 years,” said Hawkins. “We’ve unlocked all these museum collections, and we can do so many more things with them now than anyone had a clue was possible even 15 years ago.”

Related stories: Our Thanksgiving Menu has Lost a Few Crops Scientists to Read DNA of All Eukaryotes in 10 Years Safety Suit Up: New Clean Room Allows Scientists to Study Fragile Ancient DNA

Erin Malsbury is an intern in the Smithsonian National Museum of Natural History’s Office of Communications and Public Affairs. Her writing has appeared in Science, Eos, Mongabay and the Mercury News, among others. Erin recently graduated from the University of California, Santa Cruz with an MS in science communication. She also holds a BS in ecology and a BA in anthropology from the University of Georgia. You can find her at erinmalsbury.com.

More From This Author »

#Nature

6 notes

·

View notes

Photo

New Post has been published on https://techcrunchapp.com/are-you-in-control-of-your-digital-footprint-tech-news-top-stories/

Are you in control of your digital footprint?, Tech News & Top Stories

It is easy to think that e-commerce scams and hacking attempts are less of a concern today among digital natives who have grown up with digital technologies.

One such digital native, 27-year-old Hariz Taufik, believes that tech-savvy young adults are generally more aware about safeguarding their data online by making their social media accounts private and refraining from sharing personal information such as addresses or mobile numbers.

This is in part due to how schools are educating students about the importance of online safety, especially in an era marked by a growing number of media platforms, says Mr Hariz.

Living in the digital age also means it’s just as important to be aware of our digital footprint as much as our carbon footprint. Yet, despite a greater understanding of technology and its influence, are we as safe and cautious as we can be?

“Even when we carefully share personal information or upload pictures behind a private social media account, we still lose some degree of privacy,” says Mr Hariz. “Shopping online or enabling the location services on our phone’s apps are some of the ways we unknowingly create digital footprints.”

This phenomenon highlights the importance of considering the various ways our online activity can be used by others, and taking necessary steps to mitigate such risks.

Risk of scams and identity theft

Anyone can gather all the information about a person online and use them with malicious intent. PHOTO: GETTY IMAGES

Online spaces offer many opportunities for individuals to reach out easily to global audiences. However, it is this same advantage that gives criminals and scammers the ability to prey on unsuspecting victims, according to Dr Jiow Hee Jhee, a Media Literacy Council member and Digital Communications and Integrated Media programme director at the Singapore Institute of Technology.

Anyone can gather all the information available about a person online without his or her knowledge — from interests and hobbies, social groups, or even favourite places to hang out based on one’s posts and geo-tagging — and use them with malicious intent.

This hits close to home for Mr Hariz, whose friends have been victims of such attempts.

He says: “People can easily obtain various information about someone online and facilitate identity-related crimes. Someone once impersonated my friend on Instagram and sent me a direct message asking for my personal information.”

Thankfully, the attempt was unsuccessful as Mr Hariz was careful and had texted his friend via WhatsApp to check if she had indeed sent the message.

While the digitally literate may be less likely to fall prey to scams, they should still be wary about the information they share online.

Warns Dr Jiow: “Your credibility and reputation can be affected regardless of whether a scam is successful or not. By oversharing information online, your friends and family may be more wary of trusting the messages they receive from you, and potential employers could have concerns that you could put their data at risk too.”

Affect employment and further education opportunities

Employers can review an applicant’s digital footprint as part of the hiring process. PHOTO: GETTY IMAGES

Along with Internet users, employers have become increasingly digitally savvy over the years. According to a Financial Times article in 2018, social media has transformed the job market, with employers taking increasing interest in the online presence and activities of applicants. The article also cited a 2017 survey by US recruitment company CareerBuilder that revealed 70 per cent of companies used social media to screen candidates as part of the hiring process. This increased from 11 per cent in 2006 to 60 per cent in 2016.

Mr Hariz is unsurprised about the practice of reviewing an applicant’s digital footprint.

“I can see why companies analyse online data prior to hiring an individual,” he says, when presented with the statistics. “Hiring the right person is essential for business growth and analysing the data online could be a quick and cost-effective way to get to know the candidate better even prior to the interview.”

It’s thus important to always consider potential repercussions before posting anything online. Adds Dr Jiow: “What you post today or even ten years ago can be saved and used to your disadvantage when you least expect it.”

Cause damage to one’s reputation

Private conversations online can be leaked and used against a person. PHOTO: GETTY IMAGES

It’s no secret that millennials are online for a large part of their day. Founder of EmpathyWorks Psychological Wellness and registered psychologist Joy Hou explains that it’s also not uncommon for millennials to prefer to update their friends and loved ones about their lives via social media posts. They would also invite friends, loved ones and followers to leave comments or click ‘like’ to show their affection or support.

Such interactions online can occur behind a private social media page and considered personal conversations, but there could be instances where information is leaked and used against a person.

While it’s easy to think that friends or loved ones would never do that to you, Ms Hou says it’s still important to consider the possibility and suggests some reasons why individuals could engage in such behaviours:

To gain attention: They get to be the centre of attention temporarily while the piece of private information goes viral, and they receive numerous likes or shares on social media.

To express anger, unhappiness or envy: Some may engage in such behaviours to hurt those whose popularity, talents, or lifestyles they envy. They may derive a warped sense of fulfilment when the affected party’s reputation is tarnished.

To quell low self-esteem: To temporarily feel secure or superior, some may share information about or judge others.

To feel empowered: Bullied or victimised individuals may exert the same behaviours online for a taste of the power they do not have in the real world.

Ms Hou says: “In cyberspace where millions of people can access information with only one click, fake stories or ugliness about a person can go viral in seconds.”

The ripple effects could grow and include a wider audience, and be permanent.

However, in the digital age where the Internet can be a critical means of communication to bridge and build personal relationships across physical distances, it’s also unrealistic to cut yourself off from everyone else by not sharing any information online at all.

Thus, it’s perhaps more important to simply exercise caution by thinking twice about the personal and private information you post, and being aware of who you’re sharing the information with.

Making better choices online

With the Internet and social media being such an integral part of our personal and professional lives, it’s increasingly important to learn and apply best safety practices.

“It’s always good to err on the side of caution and take preventive steps so that you do not end up becoming a victim,” says Dr Jiow.

Consider applying the tips below to secure your digital footprint:

GRAPHIC: MEDIA LITERACY COUNCIL

GRAPHIC: MEDIA LITERACY COUNCIL

Don different hats to see if what you post could affect present and future personal or professional relationships, and be mindful that the Internet is both a positive and negative tool.

While leveraging the endless possibilities for connection and innovation the Internet brings, being aware of what we share and the lasting consequences of our choices online can empower us to better use it positively.

For more information and tips to Be Smart online, download the tip sheet on digital footprint here.

(function(d, s, id) var js, fjs = d.getElementsByTagName(s)[0]; if (d.getElementById(id)) return; js = d.createElement(s); js.id = id; js.async=true; js.src = "https://connect.facebook.net/en_US/all.js#xfbml=1&appId=263116810509534"; fjs.parentNode.insertBefore(js, fjs); (document, 'script', 'facebook-jssdk'));

0 notes

Text

Xamarin.Forms on the Web

TLDR: I implemented a web backend for Xamarin.Forms so that it can run in any browser. It achieves this without javascript recompilation by turning the browser into a dumb terminal fully under the control of the server (through web sockets using a library I call Ooui). This crazy model turns out to have a lot of advantages. Try it here!

A Need

I have been enjoying building small IoT devices lately. I've been building toys, actual household appliances, and other ridiculous things. Most of these devices don't have a screen built into them and I have found that the best UI for them is a self-hosted website. As long as the device can get itself on the network, I can interact with it with any browser.

There's just one problem...

The Web Demands Sacrifice

The web is the best application distribution platform ever made. Anyone with an internet connection can use your app and you are welcome to monetize it however you want. Unfortunately, the price of using this platform is acuquiecense to "web programming". In "web programming", your code and data are split between the client that presents the UI in a browser and the server that stores and executes application data and logic. The server is a dumb data store while the client executes UI logic - only communicating with the server at very strategic points (because synchronization is hard yo). This means that you spend the majority of your time implementing ad-hoc and buggy synchronization systems between the two. This is complex but is only made more complex when the server decides to get in on the UI game by rendering templates - now your UI is split along with your data.

Getting this right certainly is possible but it takes a lot of work. You will write two apps - one server and one client. You will draw diagrams and think about data state flows. You will argue about default API parameters. You will struggle with the DOM and CSS because of their richness in both features and history. You will invent your own security token system and it will be hilarious. The web is great, but it demands sacrifices.

(And, oh yes, the server and client are usually written in different languages - you have that barrier to deal with too. The node.js crew saw all the challenges of writing a web app and decided that the language barrier was an unnecessary complication and removed that. Bravo.)

Something Different

I was getting tired of writing HTML templates, CSS, REST APIs, and all that other "stuff" that goes into writing a web app. I just wanted to write an app - I didn't want to write all this boilerplate.

I decided that what I really wanted was a way to write web apps that was indistinguishable (from the programmer's perspective) from writing native UI apps. If I wanted a button, I would just new it up and add it to other UI elements. If I wanted to handle a click event, I wanted to be able to just subscribe to the event and move on. What I needed was a little magic - something to turn my simple app into the server/client split required by web apps.

That magic is a library I call Ooui. Ooui is a small .NET Standard 2.0 library that contains three interesting pieces of technology:

A shadow DOM that gives a .NET interfaces to the web DOM. It has all the usual suspects <div>, <span>, <input>, etc. along with a styling system that leverages all the power of CSS.

A state-synchronization system. This is where the magic happens. All shadow DOM elements record all the operations that have ever been performed on them. This includes changes to their state (setting their inner text for example) but also methods that have been called (for instance, drawing commands to <canvas>). This state can then be transmitted to the client at any time to fully mirror the server state on the client. With this system, all logic is executed on the server while the client renders the UI. Of course, it also allows for the client to transmit events back to the server so that click events and other DOM events can be handled. This is the part of Ooui that I am the most proud of.

A self-hosting web server (with web sockets) or ASP.NET Core action handlers to make running Ooui very easy. If I want to self-host a button, I simply write:

var button = new Button { Text = "Click Me!" }; button.Clicked += (s, e) => button.Text = "Thanks!"; // Start a web server and serve the interactive button at /button UI.Publish("/button", button);

I can do this from any platform that supports .NET Standard 2. I can run this on a Mac, Linux, Windows, Raspberry PI, etc.

Alternatively, you can host it on an ASP.NET MVC page if you want it up on the internet:

public class HomeController : Controller { public IActionResult Index() { var button = new Button { Text = "Click Me!" }; button.Clicked += (s, e) => button.Text = "Thanks!"; // Return interactive elements using the new ElementResult return new ElementResult(button); } }

Pretty neat huh?

But one more thing...

Xamarin.Forms Support

The DOM is great and all, but what do .NET developers really love when you get right down to it? XAML. This little serialization-format-that-could has become the standard way to build .NET UIs. Whether you're writing a Windows, UWP, or mobile app, you expect there to be XAML support.

So I made XAML work on the web by implementing a new web platform for Xamarin.Forms. Now, any of your Xamarin.Forms apps can run on the web using ASP.NET.

Xamarin.Forms was not at all on my radar when I was building Ooui. Eventually though I realized that it was the perfect basis for a web version of Forms. I thought the idea to be a little silly to be honest - web developers love their CSS and I didn't think there was much point. But one day I heard someone ask for just that feature and I thought "now we're two".

I had never written a backend for Xamarin.Forms but found the process very straightforward and very easy given its open sourceness (e.g. I copied a lot of code from the iOS implementation :-)). There's still a bit of work to be done but Xamarin.Forms and Ooui are getting along like long-lost cousins.

Animations work, pages and layouts work, styling works (as far as I have implemented), and control renders are currently being implemented. Fonts of course are an annoyance and cause a little trouble right now, but it's nothing that can't be fixed.

Once I got Xamarin.Forms working on the web I realized how wrong I was for thinking this to be a silly technology. Writing web apps with the Forms API is a real pleasure that I hope you'll get to experience for yourself.

Now that I am officially releasing Ooui, I want to work on a roadmap. But for now I mostly just want to hear people's opinions. What do you think about all this? What are your concerns? How do you think you could use it? Do you think it's as cool as I do? (Sorry, that last one is a little leading...)

13 notes

·

View notes

Link

https://ift.tt/2Fu5zVs Read on our visit to Ebi Tori Menzo | All Hailed From Osaka by FoodGem

Media Tasting at Ebi Tori Menzo

Are you getting tired of the Japanese ramen options that we have? Well, then it’s time to try something a little different. Direct from Osaka, Ebi Tori Menzo serves up delicious ramen and tsukemen.

The Tsukemen, which is their signature dipping noodle, was a unique surprise. It is a type of ramen that the noodles and the broth are served separately, which you then dip into the broth before slurping it up. The Tsukemen is served with an array of different vegetables, including Japanese sweet potatoes, Mizuna (Japanese mustard seed) and tomatoes, a lemon wedge, and some chashao. Along with the noodle is the signature thick and creamy prawn head broth, which is cooked for hours under slow and low heat, and then blended for maximum flavour.

The noodles were soft and springy, going well together with both the original and spicy ebi sauce. The spicy ebi sauce was light and smooth, carrying a subtle hint of “hae bee hiam” soup kind of taste. The original ebi sauce was less flavourful in comparison, but also carried a light taste of the shrimp, suitable for non-spicy lovers to enjoy. The Tsukemen does not get too overwhelming to enjoy, thanks to the refreshing side of vegetables and lemon wedge. There was also a slice of baguette served, an alternative dipping dish that went well with the both the ebi sauces.

A small bowl of Japanese rice is then served at the end of the meal, with milk soup to mix into your leftover dipping sauce to make a delicious risotto. This risotto carried a bizarre taste, since it is the first time eating a dish containing ebi broth with warm milk and rice, but it was really warming for the stomach. Overall the Tsukemen carried both a pleasant taste and experience, something new and unique that’s totally worth the try!

Something more familiar to everyone will be their traditional soup-based ramen. The broth is slow cooked for hours using pork, chicken bones, and vegetables, under low heat, to achieve a clear and flavourful soup.

Their popular Shio Chicken Ramen has got to be my favourite pick. The chashao that was served along with the soft and springy ramen was tender and melts in the mouth. The broth was light and naturally sweet, and I totally enjoyed the whole bowl till the very last drop of it.

Ebi Tori Menzo also serve a variety of side dishes as well as Japanese alcohol options which we really enjoyed. The mini chashao don we had was another favourite of mine. The chashao was well seasoned with salt and pepper and carries a melt-in-the-mouth tenderness.

The tofu was served together with the ebi dipping sauce, adding a distinct ebi taste and slight saltiness to the otherwise plain tofu, a unique twist to the usual shoyu-with-tofu dish.

As a gyoza lover, the gyozas surely did not disappoint as well. Not forgetting the sweet potato crisps which we were snacking on consistently throughout the meal. Completing the meal with a choice of Umeshu (with soda), it was refreshing and cleared the oil in our palette.

Ebi Tori Menzo is located at an accessible corner located along South Beach Road, with a really nice and chill vibe. A great and affordable place for a casual dinner or an occasion to celebrate, suitable for people of all ages to dine at.

*Service charge(10%) and GST(7%) applicable.

Share this post with your friends and loved ones.

Budget Per Pax

S$XX-S$XXX

How to go Ebi Tori Menzo

var map_fusion_map_5bf05b6aae19b; var markers = []; var counter = 0; function fusion_run_map_fusion_map_5bf05b6aae19b() { jQuery('#fusion_map_5bf05b6aae19b').fusion_maps({ addresses: [{"address":"26 Beach Road B1-18 Singapore 189768","infobox_content":"26 Beach Road B1-18 Singapore 189768","coordinates":false,"cache":true,"latitude":"1.2947454","longitude":"103.8560195"}], animations: true, infobox_background_color: '', infobox_styling: 'default', infobox_text_color: '', map_style: 'default', map_type: 'roadmap', marker_icon: '', overlay_color: '', overlay_color_hsl: {"hue":0,"sat":0,"lum":6}, pan_control: true, show_address: true, scale_control: true, scrollwheel: true, zoom: 14, zoom_control: true, }); } if ( typeof google !== 'undefined' ) { google.maps.event.addDomListener(window, 'load', fusion_run_map_fusion_map_5bf05b6aae19b); }

Operating Hours

Monday to Saturday 11AM-3PM; 5PM-10PM

Sunday CLOSED

Address and Contact

26 Beach Road B1-18 Singapore 189768

Email: [email protected]

Travel and Parking

Parking available South Bridge Tower.

Travel via public transport.

From City Hall Mrt Station (East-West Line)

Exit C; Walk 419 m (about 10 minutes) to South Beach Tower.

The post Ebi Tori Menzo | All Hailed From Osaka appeared first on foodgem: Food & Travel.

0 notes

Text

MQTT, Cornerstone of Activ-IoTy

Update of the ActivIoTy project for the Eclipse Open IoT Challenge.

Activ-IoTy is based on the pub-sub paradigm. This means that some components (publishers or senders) send messages to a central point, called broker, that delivers the messages to other components (subscribers or receivers) interested in receiving the information send by the publishers.

Just as a reminder of what the system does:

Checkpoints registers { competitor's ID + timestamp }. So, these are the publishers

Controller(s) collect the information sent by Checkpoints and process the information. So, this is one of the subscribers.

Other subscribers may perform other activities (i.e., visualizations, integration with third party services, etc.)

MQTT

> It's a good choice for systems where we need to share small code messages, and the network bandwidth may be limited

This mechanism is implemented using MQTT 3.1.1, an OASIS standard that is widely adopted by industries and IoT solutions. MQTT is a light-weight protocol suitable for Activity, due to the flexibility requirements regarding the conditions where Checkpoints operates. MQTT guarantees connections with remote locations. It's a good choice for systems where we need to share small code messages, and the network bandwidth may be limited. So, it's a perfect choice for ActivIoTy.

MQTT ensures the data is delivered properly to subscribers. The broker is in charge of delivers messages, according to three Quality of Service (QoS) levels:

QoS 0: At most once delivery. No response is sent by the receiver and no retry is performed by the sender. This means that the message arrives at the receiver either once or not at all.

QoS 1: At least once delivery. This quality of service ensures that the message arrives at the receiver at least once.

QoS 2: Exactly once delivery. This is the highest quality of service, for use when neither loss nor duplication of messages are acceptable.

Since ActivIoTy needs to satisfy completeness and integrity of data gathered by Checkpoints, the chosen QoS level is QoS 1 (At least once delivery). QoS 2 would be also adequate but it increases the overhead of communications. There may be duplicates, that will be filtered by receivers without too much cost associated.

Topics and Messages

Application messages within the MQTT queue are related to topics. So, both sender and receivers may work with messages organized by topics. Topics follow a hierarchical structure (e.g. bedroom to indicate all messages related to a bedroom; bedroom/temperature and bedroom/humidity for specific messages sent by sensors in the bedroom). This that is useful for subscribers to filter and receive only the information they need. Forward slash (‘/’ U+002F) is used to separate each level within a topic tree structure.

Checkpoints generate two kind of application messages:

1- Readiness messages (topic ready). They are sent once the Checkpoint is initialized and connected to the network. 2- Check-in messages (topic checkin). They are sent after the check-in of a competitor.

The definition of topics may be as complex as we want, In my case, the schema of topic names is based on the id of the checkpoint and the type of message. This is: {checkpointID}/ready and {checkpointID}/checkin.

Receivers may be subscribed to topics in a flexible way, using wildcards. For instance, +/ready to subscribe to all ready topics from all senders.

Messages will be encoded as UTF-8 in JSON format as follows:

Topic +/ready:

{ "checkpoint" : { // ID required "id" : "…", // Optional metadata "name" : "…", "description" : "…", "geo" : { "lat" : 0.000, "lng" : 0.000, "elevation" : 0.000 }, }, "timestamp" : 0000000 }

Readiness messages only require checkpoint identifier and timestamp (the Unix time of the device after synchronizing with a NTP server).

Topic +/checkpoint:

{ "checkpoint" : { "id" : "…", // Required "geo" : { "…" } // (Optional) It can be included in case the checkpoint is on the move }, "bib" : "…", // Either 'bib' or 'epc' are required "epc" : "…", "timestamp" : 0000000, // Required }

For check-in messages, it is required the checkpoint identifier (some information may be added, such as coordinates in case the checkpoint is on the move), the unique identifier of the competitor (we can have several, such as bib number and RFID tag), and the timestamp of the check-in.

Implementation with Mosquitto and Paho

Eclipse Mosquitto is an open source message broker that implements the MQTT protocol versions 3.1 and 3.1.1. This server is really stable and lightweight so it can be installed in any kind of computer, even on your Raspberry Pi.

Installation and configuration of the Mosquitto Broker is really easy (check official page for instructions). For this pilot, I installed the Mosquitto Broker on a UP² Squared I received for this challenge –thanks, it's great!

I installed it from the Mosquitto Debian Repository with apt just in a few steps:

After connecting via SSH with the UP² Squared.

wget http://repo.mosquitto.org/debian/mosquitto-repo.gpg.key sudo apt-key add mosquitto-repo.gpg.key

Repository available to apt:

cd /etc/apt/sources.list.d/ sudo wget http://repo.mosquitto.org/debian/mosquitto-wheezy.list

Update apt information and install Mosquitto

apt-get update apt-get install mosquitto

As a summary of the configuration of this broker:

Configuration file: /etc/mosquitto/mosquitto.conf

Logs: /var/log/mosquitto/mosquitto.log

Mosquito service can be managed with this command:

sudo service mosquitto [start|stop|restart]

The listener by default will open the 1883 port. Through the configuration file, we can set up the server. There is a sample file with all options listed and documented at /usr/share/doc/mosquitto/examples/mosquitto.conf.

There, we can configure all the security specifications of our broker (user/password files, SSL/TLS support, bridges, etc.).

For this pilot we don't include security measures but communications should be private and secured in the production environment.

In case we want to test the broker, we can also install the pub-sub clients anywhere.

apt-get install mosquitto-clients

Opening several terminal sessions, we can simulate subscribers (mosquitto_sub) and publishers (mosquitto_pub) with just these options: * -h (hostname) * -t (topic) * -m (message)

Do not forget to open that port on your firewall to make it available from outside your network.

Paho

Checkpoints are implemented using Eclipse Paho. Paho provides open-source client implementations of MQTT protocols for any platform. Paho implementations may be deployed on all checkpoint modules of ActivIoTy: Raspberry + Python; UP2 Squared + Python/embedded C; Arduino + embedded C).

Paho is also the base for the implementation of the Controller (subscriber), coded in Node.js.

0 notes

Text

React Interview Questions

General React Theory Questions

What is React, who developed it, and how is it different from other frameworks?

React is a JavaScript library that was developed by Facebook for building User Interfaces (UI's). This corresponds to the View in the Model-View-Controller (MVC) pattern.

React uses a declarative paradigm that makes it easier to reason about your application, and React computes the minimal set of changes necessary to keep your DOM up-to-date.1 2

How do you tell React to build in Production mode and what will that do?

Typically you’d use Webpack’s DefinePlugin method to set NODE_ENV to production. This will strip out things like propType validation and extra warnings.

Explain what JSX is and how it works

React components are typically written in JSX, a JavaScript extension syntax allowing quoting of HTML and using HTML tag syntax to render subcomponents. HTML syntax is processed into JavaScript calls of the React framework. Developers may also write in pure JavaScript.

Explain how the One-Way Data Flow works

In React, data flows from the parent to the child, but not the other way around. This is designed to alleviate cascading updates that traditional MVC suffers from.

Properties, a set of immutable values, are passed to a component's renderer as properties in its HTML tag. A component cannot directly modify any properties passed to it, but can be passed callback functions that do modify values. This mechanism's promise is expressed as "properties flow down; actions flow up".

What are the differences between React in ES5 and ES6? What are the advantages/disadvantages of using ES6?

Syntax

Creating React Components the ES5 way involves using the React.createClass() method. In ES6, we use class SomeModule extends React.Component{}

Autobinding

React in ES5 has autobinding, while React in ES6 does not. For that reason, we have to use bind to make this available to methods in the constructor.

// React in ES5 var thing = { name: 'mike', speak: function(){ console.log(this.name) } } window.addEventListener('keyup', thing.speak)

In the above code, if we call thing.speak(), it will log mike, but pressing a key will log underfined, context of the callback is the global object. The browser’s global object – window – becomes this inside the speak() function, so this.name becomes window.name, which is undefined.

React in ES5 automatically does autobinding, effectively doing the following:

window.addEventListener('keyup', thing.speak.bind(thing))

Autobinding automatically binds our functions to the React Component instance so that passing the function by reference in the render() works seamlessly.3

But in ES6, we need to make the context of this available to the sayHi method by using bind.

export class App extends Component { constructor(props) { super(props) this.state = { name: ben, age: 37 } this.sayHi = this.sayHi.bind(this) } sayHi() { console.log(this.state.name) } }

Explain the Virtual DOM and how React renders it to the actual DOM.

React creates an in-memory data structure cache, computes the resulting differences, and then updates the browser's displayed DOM efficiently.

How does transpiling with Babel work?

Babel transpiles React code to plain vanilla Javascript that is cross browser compliant.

What is the difference between React's Router and Backbone's Router?

When a route is triggered in Backbone's router, a list of actions are executed. When a route is triggered in React's router, a component is called, and decides for itself what actions to execute.

Differences:

If you used Backbone's Router with React, it would have to manually mount and unmount React components from the DOM regularly. This would cause havok.

Backbone's router demands that you create a flat list of routes (/users/:id, /users/:post, users/list).

React uses Higher Order Components (HOC's) that have children define sub routers. E.g.

<Router history={browserHistory}> <Route path="/" component={MainApp}> <Route path="contact" component={Contact}/> <Route path="posts" component={Posts}> <Route path="/user/:userId" component={UserPage}/> </Route> <Route path="*" component={NoMatch404}/> </Route> </Router>

LifeCycle Questions

Explain the stages of a React Component's lifecycle

Mounting

These methods are called when an instance of a component is being created and inserted into the DOM.

constructor()

componentWillMount()

render()

componentDidMount()

Updating

An update can be caused by changes to props or state. These methods are called when a component is being re-rendered.

componentWillReceiveProps()

shouldComponentUpdate()

componentWillUpdate()

render()

componentDidUpdate()

Unmounting

This method is called when a component is being removed from the DOM.

componentWillUnmount()

Explain the lifecycle methods, when they occur, and how you would use each of them

Mounting

constructor - The constructor for a React component is called before it is mounted. If you don't call super(props) in the constructor, this.props will be undefined. You should initialize your state here too.

componentWillMount - invoked immediately before mounting occurs. It is called before render, therefore setting state in this method will not trigger a re-rendering. Avoid introducing any side-effects or subscriptions in this method.

render - creates a tree of React elements. When called, it examines this.props and this.state and returns a single React element. This element can be either a representation of a native DOM component, such as <div />, or another composite component that you've defined yourself. Returning null or false indicates that you don't want anything rendered.

componentDidMount - invoked immediately after a component is mounted. Ideal place for network requests (e.g. AJAX). Setting state in this method will trigger a re-rendering.

componentWillReceiveProps - is invoked before a mounted component receives new props. This is a good place to update the state in reponse to changes in props. Calling setState generally doesn't trigger componentWillReceiveProps.

Updating

shouldComponentUpdate - allows you to decide if you wish to re-render the component when new props or state are received. Invoked before rendering when new props or state are being received. Defaults to true. Not called on initial render or on forceUpdate. If shouldComponentUpdate returns false, then componentWillUpdate, render, and componentDidUpdate will not be invoked. Returning false does not prevent child components from re-rendering when their state changes.

componentWillUpdate - invoked immediately before rendering when new props or state are being received. Cannot call setState here and is not called on initial render. If you need to update state in response to a prop change, use componentWillReceiveProps.

componentDidUpdate - invoked immediately after update occurs. Operate on the DOM in this method and make network requests here, if needed, but compare to previous props & state. Not called for the initial render.

Unmounting

componentWillUnmount - invoked immediately before a component is unmounted. Perform cleanup here, e.g. invalidating timers, canceling network requests, or cleaning up any DOM elements that were created in componentDidMount.

Explain how setState works

setState(updater, callback)

setState takes 2 arguments, an updater and a callback that gets executed once setState has completed.

The updater can either be a function or an object. Both are executed asynchronously. If it is a function, it takes the form:

setState((prevState, props) => { return newState })

If you supply an object to setState instead of the above function, then that will become the new state.

What happens when you call setState? AKA explain how Reconciliation works.

When setState is called, the object returned from the updater becomes the current state of the component. This will begin the process of reconciliation, which aims to update the UI in the most efficient way possible, according to the reconciliation algorithm.

React diffs the previous root element to the new root element. If they are are of different types (<article> vs <section>), React will tear down the old tree and create a completely new one. If they are the same type, only the changed attributes will be updated.

When tearing down a tree, old DOM nodes are destroyed and componentWillUnmount is called on them. When building up a new tree, new DOM nodes are inserted into the DOM.

The core idea of reconcialiation is to be as efficient as possible on the UI by only making updates where absolutely necessary.

What is the difference between forceUpdate and setState? Do they both update the state?

setState causes your app to update when props or state have changed. But if your app relies on other data, you can force an update (render) with forceUpdate.

Calling forceUpdate will cause render to be called on the component, skipping shouldComponentUpdate. This will trigger the normal lifecycle methods for child components, including the shouldComponentUpdate method of each child. React will still only update the DOM if the markup changes.

Use of forceUpdate should be avoided as much as possible. Use state and props instead.

What is the second argument that can optionally be passed to setState and what is its purpose?

The second argument is a callback that gets executed once setState has completed.

In which lifecycle event do you make AJAX/Network requests and why?

Use componentDidUpdate or componentDidMount. If you use componentWillMount, the AJAX request could theoritically resolve before the component has mounted. Then you would be trying to call setState on a component that hasn't mounted, which would introduce bugs. Secondly, componentWillMount might get called multiple times per render by React's reconciliation algorithm for performance reasons. That would cause multiple AJAX requests to get sent.

Feature Specific Questions

What’s the difference between an Element and a Component in React?

An element is a representation of something in the UI, which will usually become HTML, e.g. <a>, <div>, etc.

A component is a function or class that accepts input and returns an element or other components.

What is the difference between Class Components and Stateless Functional Components (aka Pure Functional Components, etc)? When would you use one over the other?

If your component needs to work with this.state, or this.setState, or lifecycle methods, use a Class Component, otherwise use a Stateless Functional Component.

What is the difference between createElement and cloneElement?

createElement creates a new React element. cloneElement clones an existing React element.

What are refs in React and why are they important?

Refs are an escape hatch from React's declarative model that allow you to directly access the DOM. These are mostly used to grab form data in uncontrolled components. (In uncontrolled components, data is handled by the DOM itself, instead of via the React components, which is controlled).

Avoid using refs for anything that can be done declaratively.

You may not use the ref attribute on functional components because they don't have instances.

Give a situation where you would want to use refs over controlled components

Managing focus, text selection, or media playback.

Triggering imperative animations.

Integrating with third-party DOM libraries.

What are keys in React and why are they important?

Keys help React become more efficient at performing updates on lists.

What is the difference between a controlled component and an uncontrolled component?

In uncontrolled components, data is handled by the DOM itself. In controlled components, data is only handled by the React component.

In HTML, form elements such as <input>, <textarea>, and <select> typically maintain their own state and update it based on user input. In React controlled components, mutable state is typically kept in the state property of components, and only updated with setState.

This means that state is the single source of truth. So if you wanted to change the value of an <input> box, you would call setState every time the user pressed a key, which would then fill input with the new value of state.

In uncontrolled components, we usually use refs to grab the data inside of form fields.

What are High Order Components (HOC's) and how would you use them in React?

HOC's aren't just a feature of React, they are a pattern that exists in software engineering.

A higher-order component is a function that takes a component and returns a new component.

Whereas a component transforms props into UI, a higher-order component transforms a component into another component.

An HOC doesn't modify the input component, nor does it use inheritance to copy its behavior. Rather, an HOC composes the original component by wrapping it in a container component. An HOC is a pure function with zero side-effects.

The wrapped component receives all the props of the container, along with a new prop, data, which it uses to render its output.

What is the difference between using extend, createClass, mixins and HOC's? What are the advantages and disadvantages of each?

Mixins

The point of mixins is to give devs new to functional programming a way to reuse code between components when you aren’t sure how to solve the same problem with composition. While they aren't deprecated, their use is strongly not recommended. Here's why 1:

Mixins introduce implicit dependencies

Mixins cause name clashes

Mixins cause snowballing complexity

HOC's - above

Extends

Mixins are possible, but not built-in to React’s ES6 API. However, the ES6 API makes it easier to create a custom Component that extends another custom Component.

ES6 classes allow us to inherit the functionality of another class, however this makes it more difficult to create a single Component that inherits properties from several mixins or classes. Instead, we need to create prototype chains.

How does PropType validation work in React?

PropTypes allow us to supply a property type for all of our different properties, so that it will validate to make sure that we're supplying the right type.

This is kind of like strong typing in Java (e.g. int, char, string, obj, etc).

If you use an incorrect type, or the value is required but you don't have it, React will issue a warning, but won't crash your app.

Why would you use React.Children.map(props.children, () => ) instead of props.children.map(() => )

It’s not guaranteed that props.children will be an array. If there is only one child, then it will be an object. If there are many children, then it will be an array.

<Parent> <Child /> </Parent>

vs

<Parent> <Child /> <Cousin /> </Parent>

Describe how events are handled in React

In order to solve cross browser compatibility issues, your event handlers in React will be passed instances of SyntheticEvent, which is React’s cross-browser wrapper around the browser’s native event. These synthetic events have the same interface as native events you’re used to, except they work identically across all browsers.

Where does a parent component define its children components?

Within the render method

Can a parent component access or read its children components properties?

Yes

How do you set the value of textarea?

In React, a <textarea> uses a value attribute instead. This way, a form using a <textarea> can be written very similarly to a form that uses a single-line input.

What method do you use to define default values for properties?

In ES6 classes

SomeModule.defaultProps = {name: 'Ben', age: 36}

In Stateless Functional Components, just use ES6 named params.

export const SomeModule = ({name="Ben", age=36}) => { return ( <div>{name}, {age}</div> ) }

What does it mean when an input field does not supply a value property?

That you're using an uncontrolled component.

Sources

A lot of this information was learned and borrowed from the following:

https://www.lynda.com/React-js-tutorials/React-js-Essential-Training/496905-2.html

https://www.toptal.com/react/interview-questions

https://tylermcginnis.com/react-interview-questions/

https://www.codementor.io/reactjs/tutorial/5-essential-reactjs-interview-questions

0 notes

Text

GOPOKER Launches India's First Online Poker League

GOPOKER Launches India's First Online Poker League

Business Wire IndiaGOPOKER launches India’s first Online Poker League with the largest prize pool that online poker in India has ever seen! The league will have 8 Teams – Bombay Badshahs, Bangalore Billionaires, Hyderabad Highrollers, Delhi Dons, Goa Gamechangers, Kolkata Kings, Chennai Cartels, and Manipal Money Makers. Applications for mentor/captain for the teams have already begun on gopoker.in/IOPL. The dates for the qualifiers will be released soon and will be hosted exclusively on www.gopoker.in. The organizers of the Online Poker League anticipate a huge turnout, given the tremendous response to the ongoing registration process. In addition to being the first league of its kind, the enormous prize pool on offer has caused quite a buzz in the poker circuit.

Speaking on the occasion Mr. Jatin Banga, Founder – Indian Online Poker League, said, “We are excited to announce the Indian Online Poker League. We intend to sportify the game of poker and bring it to the masses. We have been working on this for a while now and the concept has materialized into something beyond what we had imagined. The never seen before structures and massive prize pool will keep the adrenaline rush going for the players along with a chance to make some serious money!”

The Poker League will be an 8 week event. The most gripping part of the league is that all the events will be streamed live on Digital Media – bringing both excitement and recognition to the game.

About the Company

GoPoker.in is the most advanced online poker website in India. We offer exclusive bonuses, lucrative tournaments, and some really exclusive deals to our players. Our platform provides poker game lovers in India and from across the world with the best online poker experience.

Gopoker.in has world-class poker software to make your poker playing experience a fantastic one. With our software, playing becomes very easy in just 3-simple steps; download and install, create your free account and start playing right off the bat.

Intertwined Brand Solutions will partner with Gopoker in brand building to bring you the next level of poker gaming experience and the Ultimate Poker League.

var VUUKLE_EMOTE_SIZE = "90px"; VUUKLE_EMOTE_IFRAME = "180px" var EMOTE_TEXT = ["HAPPY","INDIFFERENT","AMUSED","EXCITED","ANGRY"]

%URL

0 notes

Link

https://ift.tt/2OK8BVX Read on our visit to Beef Sukiyaki Don Keisuke | Activate Your Senses To Experience Beef Sukiyaki In Full by FoodGem

Media Tasting at Beef Sukiyaki Don Keisuke

A brand new concept restaurant, Beef Sukiyaki Don Keisuke, showcases the best of both worlds of classic Japanese dishes like beef sukiyaki and gyu don in a bowl. When we see Keisuke, we can’t help but think of Japanese Ramen King – Keisuke Takeda! And it’s Keisuke’s 15th restaurant in Singapore! Kudos!!

Chef showcases how exemplary the quality of their beef is before beginning to cook in them.

In your dining experience, activate your five senses, where the chef imparts his/her showmanship which we don’t usually experience while having a beef sukiyaki dish. Hear the sizzling sound of the pan, smell the aroma of food wafting through the air and watch your dishes get prepared right before your eyes as you sit back and relax.

At the last step, chef styled the beef sukiyaki don for the pleasure of your eyes and awakens your taste bud. YAY! Time to tuck in!!! Oh wait, the camera/phone first.

Each serving beef sukiyaki don is served with succulent beef slices simmered in a special sukiyaki sauce, shimeji mushroom, tofu braised in sukiyaki sauce served on a bed of warm Koshihikari rice. What can you expect alongside the tasty beef sukiyaki that plays the supporting role? Sesame tofu in a dashi ankake sauce, savoury onsen egg, traditional miso soup and assorted pickles such as pumpkin, beetroot, and gobo and last but not the last Chinese cabbage, Japanese kyuri, carrots, and kelp. I’ve ordered wagyu sukiyaki don. The wagyu sukiyaki beef has a sweet and nice caramelised sugar and special sukiyaki sauce based broth and full of bold flavours.��A sweet surprise with a piece of soft tofu sandwiched between sukiyaki beef slices and warm rice. Don’t forget to add some of the seasonings (Ichimi/ Yuzu Shichimi/ Sansho) to your beef slices. I’ve also enjoyed adding yuzu shichimi as it enhances the optimum flavour of the sukiyaki beef.

Beef Sukiyaki Don (US Prime Beef, a Chuck Eye Roll) at S$13.90.

Wagyu Sukiyaki Don (Yonezawa Beef A4, one of the top three Japanese Wagyu known to the culinary world) at S$29.90.

And when we zoom into the small details, the rice that we had is carefully prepared in a traditional Donabe rice cooker, giving a sweet flavour and soft texture.

And if you realised, there are only two main dishes on the menu; Beef Sukiyaki Don and Beef Sukiyaki Don. If you can’t get enough of the delicious beef slices, feel free to order additional serving of the US prime beef or wagyu beef starting from S$10++. There are only 14 seaters in this restaurant, be sure to be there early to avoid long queue!

Beef Sukiyaki Don Keisuke will officially open the door in Singapore on Monday, 24 September 2018.

*Service charge(10%) and GST(7%) applicable.

Share this post with your friends and loved ones.

You have a chance to vote for this food article. Simply leave your vote/s and comment below.

Budget Per Pax

S$13.90-S$29.90

How to go Beef Sukiyaki Don Keisuke

var map_fusion_map_5ba470e40bb27; var markers = []; var counter = 0; function fusion_run_map_fusion_map_5ba470e40bb27() { jQuery('#fusion_map_5ba470e40bb27').fusion_maps({ addresses: [{"address":"11 Kee Seng Street, #01-01, Singapore 089218","infobox_content":"11 Kee Seng Street, #01-01, Singapore 089218","coordinates":false,"cache":true,"latitude":"1.2749588","longitude":"103.8419308"}], animations: true, infobox_background_color: '', infobox_styling: 'default', infobox_text_color: '', map_style: 'default', map_type: 'roadmap', marker_icon: '', overlay_color: '', overlay_color_hsl: {"hue":0,"sat":0,"lum":6}, pan_control: true, show_address: true, scale_control: true, scrollwheel: true, zoom: 14, zoom_control: true, }); } if ( typeof google !== 'undefined' ) { google.maps.event.addDomListener(window, 'load', fusion_run_map_fusion_map_5ba470e40bb27); }

Operating Hours

Monday – Sunday 11.30AM – 2.30PM 5.00PM – 10.00PM (last order for food: 9.30PM)

Address and Contact

Address: 11 Kee Seng Street, #01-01, Singapore 089218

Contact: +65 6535 1129

Reservation not allowed.

Travel and Parking

Parking available at Tanjong Pagar Plaza (67 m away).

Travel via public transport.

From Tanjong Pagar Mrt Station (East-West Line)

Exit A; Walk 439 m (about 12 minutes) to Onze @ Tanjong Pagar.

The post Beef Sukiyaki Don Keisuke | Activate Your Senses To Experience Beef Sukiyaki In Full appeared first on foodgem: Food & Travel.

#Beef Sukiyaki Don Keisuke | Activate Your Senses To Experience Beef Sukiyaki In Full#Food#FoodPorn#F

0 notes

Link

http://ift.tt/2qmb5kU Read on our visit to Tonkichi Hokkaido | Long-established Tonkatsu Restaurant With Over 20 years in Singapore by FoodGem

Media Tasting at Tonkichi Hokkaido

Tonkichi Singapore, with more than 20 years of serving Japanese Tonkatsu in Singapore. Tonkichi has refurbished and is renamed as Tonkichi Hokkaido, bringing you a brand new experience. A wide variety of choices on the menu that makes you have a hard time deciding what to order. There are six different katsu available; hire(pork fillet), rosu(pork loin), prawn, chicken, fish and oyster. Besides the popular deep fried Tonkatsu, you can also expect sashimi, sushi, don, soba, ramen and more!

The sashimi mori comes in choices of 3 or 5 kinds of assorted sashimi. I especially enjoyed the thick slices of fresh sashimi and salmon being my favourite. If you’re a fan of salmon like myself, you should also try Salmon Daisuki.

Sashimi mori (5 kinds) at S$27.

Simply drools at the sight of salmon fats. Look at the amount of salmon fats in every slice of salmon. You get the best of both worlds with salmon sushi and salmon roll. I have personally enjoyed the salmon roll. A refreshing way to enjoy salmon wrapped with thinly sliced cucumber. The sushi rice is generously sprinkled with sesame seed, surrounded by the fragrant sesame smell in every mouthful. Definitely a great satisfaction to any salmon lover.

Salmon Daisuki at S$27.

The Signature Premium Rosu Katsu set comes with deep fried pork loin from Japan, a bowl of Japanese rice, chawanmushi, roasted sesame, pickles and also a bowl of miso soup.

There are three ways to enjoy the premium deep fried pork loin;

Dip into the tonkatsu sauce.

Mix the roasted sesame with tonkatsu sauce. Drizzle or dip into the mixed sauce as you like.

Drizzle or dip into Japanese curry sauce (option to change chawanmushi to curry sauce at S$3).

The different flavours allow it to be blended with the nicely pressed premium pork loin to create a wide variety of tastes. The crunchy breaded coating protecting the juicy and tender meat beneath. There is a la carte katsu option to add on; if you can’t get enough of the katsu. Choices of prawn, chicken, pork fillet, pork loin, fish and oyster; prices from S$3.

If you can’t decide what to order; try Sushi & Katsu Set. It comes with 7 nicely pressed sushi and also choice of katsu (pork fillet, oyster or prawn). The sushi chef has already applied the right dab of wasabi between the fish and the rice for you. Do inform the server if you do not want any wasabi in your sushi. The oyster katsu is huge; delicious contrast of crispiness and succulence.

Sushi & Katsu Set at S$28.

The unagi gives a rich and deep flavours. However I would have enjoyed more if the texture is softer.

Unagi kabayaki at S$18.

The ambience of the restaurant was cosy and relaxing, exuding a comfortable welcome with the bright light bulbs.

*Service charge(10%) and GST(7%) applicable.

Share this post with your friends and loved ones.

Budget Per Pax

S$20-S$50

How to go Tonkichi Hokkaido

var map_fusion_map_592950d12a3d0; var markers = []; var counter = 0; function fusion_run_map_fusion_map_592950d12a3d0() { jQuery('#fusion_map_592950d12a3d0').fusion_maps({ addresses: [{"address":"#07-06 Central, 181 Orchard Rd, Singapore 238896","infobox_content":"#07-06 Central, 181 Orchard Rd, Singapore 238896","coordinates":false,"cache":true,"latitude":"1.3008592","longitude":"103.8397182"}], animations: true, infobox_background_color: '', infobox_styling: 'default', infobox_text_color: '', map_style: 'default', map_type: 'roadmap', marker_icon: '', overlay_color: '', overlay_color_hsl: {"hue":0,"sat":0,"lum":6}, pan_control: true, show_address: true, scale_control: true, scrollwheel: true, zoom: 14, zoom_control: true, }); } if ( typeof google !== 'undefined' ) { google.maps.event.addDomListener(window, 'load', fusion_run_map_fusion_map_592950d12a3d0); }

Operating Hours

Monday to Thursday:

11AM – 3PM

6PM – 10PM

Friday, Saturday, Sunday & Holidays:

11AM – 10PM

Address and Contact

181 Orchard Rd, #07-06 Central, Singapore 238896

Contact: +65 6238 7976

Travel and Parking

Parking available at Orchard Central.

Travel via public transport.

From Somerset Mrt Station (North-South Line)

Exit B; Walk 116m (about 4 minutes) to Orchard Central.

The post Tonkichi Hokkaido | Long-established Tonkatsu Restaurant With Over 20 years in Singapore appeared first on foodgem: Food & Travel.

#Tonkichi Hokkaido | Long-established Tonkatsu Restaurant With Over 20 years in Singapore#Food#FoodPo

0 notes

Text

Node.js Tutorial Notes

Node.JS

Node.js is an open-source cross platform runtime environment for server-side and networking applications. It's built on top of Chrome's Javascript Runtime, the V8 Engine. Applications for Node are written in JavaScript.

Docs

https://nodejs.org/api/

Credit

Credit to Lynda.com for their fantastic courses that helped me put together much of this information.

Working with Modules

In Node, files and modules are the same thing. E.g. in Python, you need a folder with __init__.py in it to be classified as a module. You DON'T need that in Node. It's all just files.

file1.js:

exports.myText = 'how are you?';

file2.js:

var file1 = require('./file1.js'); console.log('hello, ', file1.myText);

Async

Node.js is single-threaded. All of the users are sharing the same thread. Events are raised and recorded in an event queue and then handled in the order that they were raised.

Node.js is asynchronous, which means that it can do more than one thing at a time. This ability to multitask is what makes Node.js so fast.

The Global Object

<object> The global namespace object.

In browsers, the top-level scope is the global scope. That means that in browsers if you're in the global scope var something will define a global variable. In Node.js this is different. The top-level scope is not the global scope; var something inside an Node.js module will be local to that module.

Every node js file that we create is it's own module. Any variable that we create in a node js file, is scoped only to that module. That means that our variables are not added to the global object the way that they are in the browser.

Get Current Directory and Filename

These will give you the current directory and current filename:

console.log(__dirname) console.log(__filename)

You can also use the inbuilt path module for extra features re paths.

var path = require('path') console.log(path.basename(__filename))

This will pluck the base filename from a full path.

We can also use the path module to create path strings. The path.join() function can be used to join strings together in a path.

var pathString = path.join(__dirname, 'src', 'js', 'components') // if you log this, it comes out [full static path]/src/js/components

The .js Extension

You can leave off the .js extension in the command line when calling files and in require statements, because it's assumed by Node

So instead of:

node index.js

You can do

node index

The Process Object

Allows us to interact with information about the current process.

We can use the process object to get environment information, read environment variables, communicate with the terminal, or parent processes, through standard input and standard output. We can even exit the current process. This object essentially gives us a way to work with the current process instance. One of the the things that we can do with the process object is to collect all the information from the terminal, or command prompt, when the application starts.

All of this information will be saved in a variable called process.argv which stands for the argument variables used to start the process.

So if you call a node file with

node index --user Ben --password whyH3ll0

And you console.log(process.argv), the above flags and values will be printed in their data structure.

Another feature of the process object is standard input and standard output. These two objects offer us a way to communicate with a process while it is running.

process.stdout.write - uses the standard output to write things to the terminal. console.log uses this.

process.stdin.on('data', callback) - uses the standard input to accept data from the user and fire a data event (i.e. data event is where a user enters something via the terminal and hits enter).

To exit a process use process.exit()

You can use process.on('exit', () => {}) to catch the exit event and then do something.

process.stdout.cleanLine() - will clear the current line in the terminal

process.stdout.cursorTo(i) - will send the cursor to position i on the current line.

The Util Module

The util.log method is similar to console.log, except it adds a data and a timestamp. Cool!

let util = require('util') util.log('An error occurred')

The Event Emitter

The Event Emitter is Node.js's implementation of the pub/sub design pattern, and it allows us to create listeners for an emit custom Events.

let events = require('events') let emitter = new events.EventEmitter() emitter.on('customEvent', (message, status) => { console.log(`${status}: ${message}`) }) emitter.emit('customEvent', 'All is good', 200)

Inheriting the Event Emitter into an Object

The utilities module has an inherits function, and it's a way that we can add an object to the prototype of an existing object.

let EventEmitter = require('events').EventEmitter let util = require('util') let Person = (name) => { this.name = name } util.inherits(Person, EventEmitter)

If we now create a new instance of a Person, it will have an on and emit function.

Creating Child Processes with Exec

Node.js comes with a child_process module which allows you to execute external processes in your environment. In other words, your Node.js app can run and communicate with other applications on the computer that it is hosting.

let exec = require('child_process').exec exec('ls', (err, stdout) => { if (err) { throw err } console.log(stdout) })

Creating Child Processes with Spawn

spawn is similar to exec, but it is meant for ongoing processes with larger amounts of data. exec is good for small singular commands.

let spawn = require('child_process').spawn let cp = spawn('node', ['myFileToRun']) cp.stdout.on('data', (data) => { console.log(`STDOUT: ${data.toString()}`) }) cp.on('close', () => { console.log('finished') process.exit() })

Working with the File System

Node allows you to work with the file system via asynchronous or synchronous commands. All synchronous commands are suffixed with Sync, e.g.

let fs = require('fs') console.log(fs.readdirSync('./'))

If you wish to use the Async version, then just leave off the Sync at the end.

let fs = require('fs') fs.readdir('./', (err, files) => { if (err) { throw err } console.log(files) })

File Streams

readFile buffers all of the data from a file, so if it gets too big, you could have a memory overflow or develop system slowdown or any number of problems.

For large files, it's better to use streams, which just grab small chucks of the file at a time and allow you to do work with them individually.

let fs = require('fs') let stream = fs.createReadStream('./status.log', 'UTF-8') let data = '' stream.once('data', () => { console.log('Started Reading File') }) stream.on('data', (chunk) => { data += chunk }) stream.on('end', () => { console.log(`Finished: ${data.length}`) })

Writable File Streams

Just like we can have readable file streams (above), we can also use file streams to write to files, chunk by chunk. If the filename does not exist, Node will create a file of that name.

let fs = require('fs') let stream = fs.createWriteStream('./status.log') stream.write('Some text here' + whateverElse)

The HTTP/S Module

If you're working with HTTPS, then you'll need to use The HTTPS Module. Both HTTP and HTTPS modules have a request method that takes options, and fire a callback function once the request has started. The res response object implements the stream interface.

let https = require('https') let option = { hostname: 'en.wikipedia.org', port: 443, path: '/wiki/Piracy', method: 'GET' } let req = https.request(options, (res) => { res.setEncoding = 'UTF-8' console.log(res.headers) console.log(res.statusCode) res.on('data', (chunk) => { console.log(chunk, chunk.length) }) }) req.on('error', (err) => { console.log(err) }) req.end()

Creating a Web Server

We use the http.createServer method of the http module to create a webserver. Every reqeust sent to this server will cause the method's callback function that we define to be invoked.

let http = require('http') let server = http.createServer((req, res) => { res.writeHead(200, {'Content-Type': 'text/plain'}) res.end('Output text') }) server.listen(3000) console.log('Listening on port 3000')

The req object that we receive as an argument to this method will contain information about the requested headers, any data that is going along with the request, as well as information about our user, like their environment and so on.

The other argument that we'll be adding here is going to be our response res object. So, we will have a blank response object also sent to this request function, and it's going to be our job to complete the response. We will do so by writing the response headers. So I'm going to use the res.writeHead method to complete our response headers. The first argument that we add to this method is going to be our response status code. 200 means that we have a successful response.

The second argument represents a JavaScript literal of all the headers that I am going to add to this response.

res.end can be used to end our response, and we will send "Output Text". Finally we need to tell this server instance what IP and port it should be listening for incoming requests on.

server.listen is a function that we can use to specific the IP address and incoming port for all of our web requests for this server. I'm going to add (3000), telling this server to listen to any requests on this local machine for port 3000.

Making an API

To make an API, you filter URL's and HTTP methods with if (req.url === '/') and if (req.method = 'GET'). Then you can use regular JS to create a response and return it back.

0 notes