#ai weirdness

Double the fun🔥

#bridgerton#bridgerton season three#luke newton#polin#bridgerton season 3#polinators#polinator’s discord#ai generated#AI weirdness

44 notes

Botober, day 1: Bread sky

73 notes

39 notes

AI GONE WILD!

Part 4

5 notes

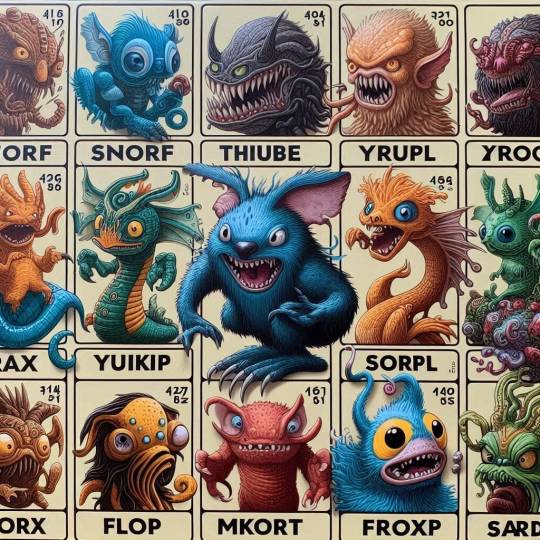

Extremely Stupid AI-Generated Shit

(that is still kinda funny, anyway)

Those little freaks are the result of the following prompt:

Glurb snorf thwip krazl vomp yurgle zibble frunx quorl plimf drax gnurk jibbit flox zark welp thrum skork plund frazzle mreep

The top image comes from Midjourney, and the bottom two are probably DALL-E 3 (the last one certainly is; I'm not sure about the middle one, but it does look like it). To make this even weirder (and funnier), Bing Image Creator considers "Glurb" an unacceptable word.

Okay. I did refer to oblong, roundish, organic shapes as "blorps" a couple of times, but this looks like someone posted his kid's drawings of weird critters on the internet a long time ago, and the algorithms yoinked them unceremoniously along with the descriptions. Then, just like that red t-shirt that turned your entire load of laundry pink that one time, weird kid drawings pounded into mathemagical fairy dust along with more typical fairy tale and fantasy illustrations resulted in the weird names being assigned to... this.

This is merely a selection of pics generated from this prompt, but the overall concepts tied to it are creepy round-bodied creatures for Midjourney, goofy, cartoonish Monsters, Inc. characters for DALL-E 3, and...

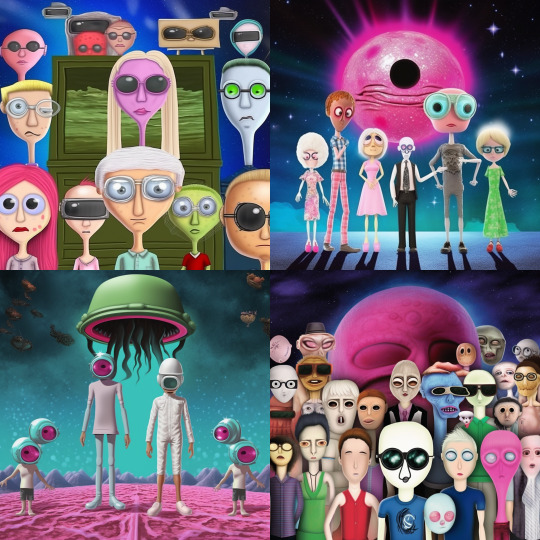

I just scrolled through the post and found results for various Stable Diffusion data models. And Stable Diffusion, ladies and gentlemen, consistently responds with goblins.

This Warhammer miniature-styled thug fell out of Stable Cascade, the weird semi-forgotten uncooperative child. For the result of a string of completely nonsensical words, he's surprisingly coherent, with a fairly regular number of fingers AND toes. Of course the details like his kneepads are still blorpy, but that's how Stable Diffusion rolls, even three years, four major versions and a shitton of fine-tuned custom models in.

An SDXL custom model called FenrisXL provides an entire fucking family of goblins. What is going on here? My assumptions regarding Stable Diffusion, and SDXL in particular, have just been challenged.

First, the Kitten Effect is less pronounced than it was in the early versions of the algorithm, if it happens at all. I'll chalk it up to improvements in the XL algorithm. Second, they're cartoonish goblins, but the Same Face Syndrome typical of the XL algorithm (every fucking custom model I tried suffers from it, no ifs, no buts) is less pronounced here than it is with human characters. Third, how in the FUCK does an entire family of goblins spewed forth from a prompt of pure gibberish have almost perfect, consistent anatomy (not counting the orphaned hand on the goblin girl's shoulder and an extra toe on the guy second from left in the front row)? And varied skin and hair colors?

I can only explain it with someone lucking out on the seed number, much like I lucked out on the entire Chinese Garden test last year.

Still, though. Goblins. Fairly solid in custom models, messier in the core SDXL 1.0 (below), without any meaningful words in the prompt.

Where the fuck are they coming from? This is some serious Horse K shit and I refuse to investigate it any further. Much less add other weird phrases like "Yakka foob mog!" or "Kov schmoz, ka-pop?" to it and test it on my build (or even Photobooth from Hell in particular). It's late and my brain is giving up.

#AI image#AI generated images#AI weirdness#Midjourney#DALL-E 3#Stable Diffusion#SDXL#AI image generation#gibberish#nonsense

4 notes

Lately I've taken to torturing AI copies of Karkat by putting them in a room together

And they eventually worked themselves all the way to the cancer metaphor (I'm so proud....)

9 notes

#art#midjourney#ai generated#ai image#ai#aiartdaily#aiartsociety#ai art#character ai#weirdcore#weird shit#surrealism#ai weirdness#it’s weird#weird al#weird aesthetic#weird art

4 notes

Asking AI who would be a couple in the afterlife? Mr Rogers & Princess Diana.

#ai artwork#ai image#ai generated#ai art#artificial intelligence#ai weirdness#mr rogers neighborhood#mr rogers#princess of wales#princess diana#diana prince#afterlife#the afterlife#heaven knows#celebs#celebrity#celebrities news

1 note

Sometimes I think the surest sign that we're not living in a simulation is that if we were, some organism would have learned to exploit its glitches.

Janelle Shane, You Look Like a Thing and I Love You

4 notes

Botober, day 3: Exceptionally mischievous pumpkin

#my art#botober#botober 23#ai weirdness#i feel like this could have been printed on a skateboard in the 90s#exceptionally mischievous pumpkin

61 notes

24 notes

Another AI Gone Wild!

You try to do things in the AI and sometimes it's not what you wanted. 😂

5 notes

It's kinda upsetting that shitass AI has evolved so much that, to get something you can laugh at instead of grimace at, you have to go back to "vintage neural nets".

0 notes

Is this anything

#always an awkward conversation to have irl#“i love ai.” insert that one spongebob holding out his hands with a shadow above him meme#“FICTIONAL. FICTIONAL AI!!!”#clankerposting#Clay posts#fictional ai#shitpost#hal 9000#robots#p03#electric dreams#allied mastercomputer#ihnmaims#shockwave#transformers#fuck ai#this is an anti ai art blog btw#objectum#saying hello to everyone who reads the tags um... hi!! Really funny to read people recommending me entry level robot/AI media#like yes i have indeed heard of portal and ultrakill. i just didnt put them in the meme </3#also some guy decided to write in the notes that they were going to crush me into red paste. hot? thank you? ???? weird.

29K notes
