#ai shader for 3d model


Text

I hate setting up cloth sim. Anyway, I'd say it's doing a terrific job considering the somewhat shoddy input (skinning mishaps, clipping, simplistic textures, etc.).

Definitely gets rid of the majority of "3d model feeling". The issues I can see are the drifting eye color and yellow flowers getting lost, but it's something that should get remedied with a model trained for specific character appearance. All in all, worth exploring further with more effort put into the base 3d render.

3K notes


Video


A short and simple turnaround of the motorbike

#youtube#3d modeling#3d model#blender#blender render#3ds max#maya#cartoon shader#toon shader#artists on tumblr#video art#video#blender eevee#youtube video#turnaround#3d artist#artist of tumblr#artist on youtube#artist of youtube#no ai art#rendered in blender#done with blender

0 notes

Text

So unfortunately you can still see posts from people who have blocked you (particularly when someone else links you) and someone wrote this huge rebuttal to the pokemon thing that makes me sound like such a jerk about it, I really can’t just not respond? Like are people doing it on purpose to be rude, skim-reading and filling in the blanks, or do I genuinely communicate my thoughts and opinions that badly the first time? :(

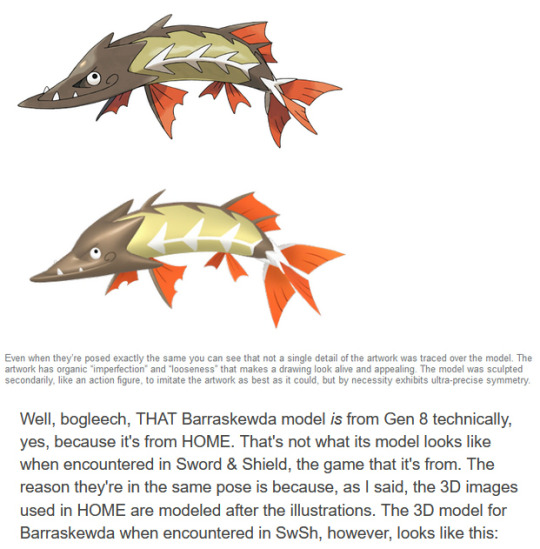

So I’m honestly not even sure what this part of the thread is trying to address or correct me on, for instance, because it uses a screenshot with my explanation right there. I was using this as an example of the differences you get when a model is based on the 2-d art, and I chose the model as seen in Pokemon Home specifically because it follows the pose of the art and therefore makes comparison easier. It is in fact the same exact model used in SwSh, but in the game it has a different shader/filter applied.

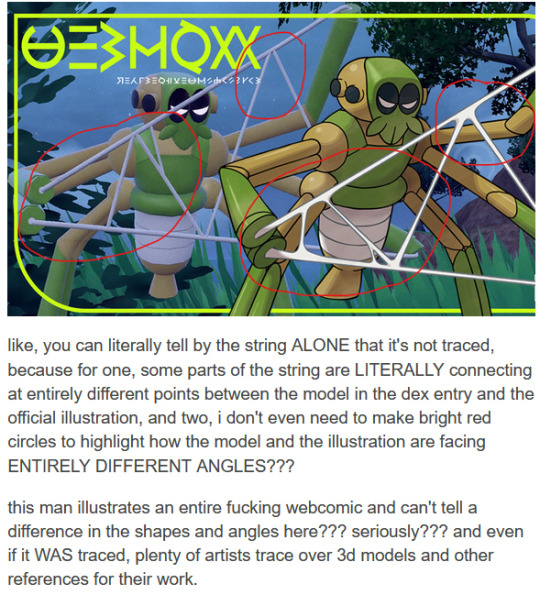

This is another odd response because the very first thing I say in my article is exactly what they say here, that the silk was clearly redrawn. And “facing different angles” isn’t relevant because when you draw over a 3-d model, you get the model first and you position it how you want. I wasn’t making a comparison to poses and angles. Parts are also clearly redrawn like the “tail,” but the head especially has that rotoscoped geometry.

So then this person thinks the phrase “auto generated” meant I was bringing AI art into this??? Auto generated means you took the 3-d model and applied 2d stylization filters to it, which has been a thing since before Neuralnet AI art. Filtering 3d into a 2d look is also a legitimate method to make cool artwork, they just didn’t do it in a very good way for some of these Pokemon.

Again this makes me sad enough to have to respond to because it’s written like I’m actually stupid, mean spirited or dishonest over nothing but Pokemon art and it’s just unreal.

595 notes


Text

The program I use to make my 3d renders has a new feature. I mentioned it earlier in the month but that was before I really understood how to use it and the amazing things that could be done with it. Turns out that with a bit of work you can put the anime style shader on already existing/created characters. You don't need to start again from scratch. This is my work, my 3D models, created in DAZ with a texture shader that makes them look cartoon in the program, the same way I could use a metal shader to make them both look like statues made of silver. This isn't an AI after-effect or cartoon generator on top of an already existing render. The characters look like this while I am posing them. I am in love with this and will definitely be making some more artwork of all my characters looking like this, as it renders out almost immediately instead of waiting hours.

Here's a 30 frame gif I rendered in 1 minute as opposed to roughly 8 hours per 10 frames of my regular work.

#eddie munson#stranger things#eddie stranger things#eddie munson stranger things#stranger things 4#eddie munson x oc#eddie munson x karmen jones#eddie munson/oc#the karmenverse#kaddie render#eddiem solo render#eddie munson 3d#karmen jones 3d#oc karmen jones#stranger things oc#stranger things original character#eddie munson gif#eddie munson pic#eddie munson art#eddie munson fanart#eddie munson fan art#eddie munson artwork#art#fanart#fan art#artwork#gif#gifs#art gif#art gifs

11 notes


Note

EXCUSE ME who told you you’re talking too much about your art?? Dude that’s the part I love most!! I like hearing about how you struggled to get it where you wanted, or how much the tools you’re working with frustrated you, but you got to a place you were happy with anyway.

I don’t know anything about 3d animation, and I know even less about game design. So it’s really important for me that you describe your process and how much work you had to do, because it gives your work context. I’m sorry anybody made you feel like that wasn’t valuable. I’d argue that’s more valuable than the finished work itself!!

I always love seeing your posts pop up on my dash. And Wolffe and the 104th look absolutely fantastic in the newest one!! If you don’t mind me asking, what were some of the weird issues you ran into in the 104th’s one, and what makes them different issues from the ones you ran into before?

Thank you so much for all the kind words, but to be completely honest, they are kinda right. I think any artist can relate to the feeling of being too critical towards your own work. I tend to overexaggerate mistakes, or point out issues that aren't even really noticeable to someone that knows little about this field. But at the same time, i always have a vision of how i'd like my current work to look, and when i don't meet my own expectations (which i rarely do), that's when i start yapping.

As for the issues, well, there were some minor ones, like noticing how some of the armors were not modeled accurately (like around the shoulder part of the chest piece, it's completely missing that part where it connects the front to the back, elbow pieces are way too big, helmets were also not modeled accurately, etc.). I also completely messed up the rigging process, thus giving myself so much more work when animating. There are always certain body parts that just go into each other (lower arm going into the upper arm when it's bent, feet going into the floor, hands going into the chest, etc.) that could have been easily avoided if i had taken the time to make a proper rig for my models.

There are also always some texturing mistakes, or wrongly placed focal points i notice once the final render is done. In this one, once all of the characters come up, and the camera starts zooming in on their faces, the focal point was placed too far, resulting in some parts of the helmets looking blurrier than they should. Since renders take a whole lot of time, i always try to fix this by putting the final renders into a 3rd party AI upscaling program, instead of going back to place it correctly and re-rendering. That's probably a crappy workflow, but if this project didn't have a deadline that's approaching WAY TOO QUICKLY, and i didn't have a lot more stuff to model and animate, i would do the latter. At the same time, i probably should just pay more attention before hitting the render button lol. Also, the movements of the characters sometimes look way too stiff, and don't have that fluidity to them. I haven't been animating for long, so that's the reason for it; at the same time tho, i'm noticing some improvements when comparing the recent piece to my first animation. These are the problems i'm running into most of the time.

In the recent one though, if you look closely, once Wolffe goes into his stance (after the commander Wolffe text disappears) there's some weird black flickering going on around his chest/belly area, that for the love of God, i could not fix. Sometimes the particle system can cause some really interesting issues, that most of the time can be fixed by baking the dynamics. Since i did that (multiple times, deleting them, then re-baking) and the issue persisted, i started to think either the shaders, or the particle system+volumetric fog combo was causing this problem. I also use a s*** ton of REALLY powerful lights, with the power constantly changing throughout the entire animation, that could also be causing this issue (i think?). I tried re-placing the cube that's making the volumetric fog, tried placing the lights and camera slightly elsewhere, but nothing worked, so i just decided to leave it as it is.

The super slow mo parts are being made in the Non Linear Animation editor, which is... just as confusing as it sounds lol. Making the slow mo parts sometimes causes the blasters to disappear then reappear at the wrong time. The way grabbing the blasters then putting them away works is by having one blaster that's always parented to one hand, and one that's always parented to the holster, and you change the visibility accordingly. (The moment the character pulls the blaster out of the holster, both blasters have to be perfectly aligned so the change in visibility doesn't have a weird jump in it.) The visibility itself gets an action strip on its own, and it's hard to line them up correctly once you chopped up all your other strips and scaled them to make them slow motion. Because if the armature's action strip gets chopped up and scaled to make the movement slow motion, then everything else that has movement linked to it has to be as well. So lights, the camera, the empty axes that the camera is parented to, and the blasters as well. This could be achieved by just placing the keyframes further apart from each other, but i found this method to be somewhat simpler.
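The retiming problem described above can be sketched outside of Blender. This is a minimal plain-Python illustration, not Blender's NLA API: `retime`, `retime_keys`, the frame numbers and the key lists are all made up for the example. The point it shows is that if the armature's timing gets stretched for a slow-mo section, the same mapping has to be applied to the visibility keys of both blasters, otherwise the swap lands on the wrong frame.

```python
def retime(frame, segments):
    """Map an original frame number to its position after slow-mo stretching.

    segments: non-overlapping (start, end, scale) tuples in original frames.
    A scale of 4.0 means that stretch of time plays four times slower.
    """
    shifted = float(frame)
    for start, end, scale in segments:
        if frame > start:
            # Only the part of the segment that lies before `frame` pushes it later.
            covered = min(frame, end) - start
            shifted += covered * (scale - 1.0)
    return shifted


def retime_keys(keys, segments):
    """Apply the same time mapping to a list of (frame, value) keyframes."""
    return [(retime(frame, segments), value) for frame, value in keys]


# Hypothetical shot: frames 100-120 are slowed down four times.
slow_mo = [(100, 120, 4.0)]

# The hand blaster appears on the same frame the holster blaster disappears.
arm_pose_keys = [(90, "reach"), (110, "grab"), (130, "aim")]
hand_blaster_visible = [(1, False), (110, True)]
holster_blaster_visible = [(1, True), (110, False)]

print(retime_keys(arm_pose_keys, slow_mo))
print(retime_keys(hand_blaster_visible, slow_mo))
print(retime_keys(holster_blaster_visible, slow_mo))
```

With these made-up numbers the "grab" pose and both visibility switches all move from frame 110 to frame 140, so the swap still happens at the moment the hand reaches the holster.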

I'm probably doing this the wrong way though and could just place the keyframes accordingly without pushing the blaster action down to the NLA editor (cuz after all it's just visibility, not slow motion movement the blaster has). Though i have some really cool ideas with blasters in the upcoming animations, that would probably require having them as NLA strips. Or maybe not, and everything i'm doing and talking about is bullshit, and isn't the way it should be done, and i really hope someone that's in the industry doesn't read this and go "what the f is this woman talking about" lol. Basically everything about animating confuses the hell out of me, and i'm always doing stuff on a trial and error basis. So i hope one day i'll be able to learn it properly haha. See, i'm yapping way too much after all. And i'm sorry for the long answer, but i'm really really passionate about this. And it actually feels so nice to know that there are people out there that care. 💖💖

12 notes


Note

(about the bell post) i dont know anything about lore olympus but is there something inherently bad in using free stock images? like, thats what stock images are for right? i know that its probably lazy or whatever but is that the only problem? /genuine

Part of the issue with using stock photos is licensing. Like fonts, they're in abundance online and easy to snag for "free", but as soon as you enter commercial work, it becomes a legal minefield. Stock photos typically belong to either individuals or corporations that rely on people buying the rights to use those photos; anyone who uses them without buying those rights could very well be sued for copyright infringement.

In that respect, emojis fall into a similar grey area. Some emojis are public domain/open source, meaning they're free to use for everyone. But many are not. It's why different social media platforms and different phone providers use different emojis: it's not purely for branding (though that is a factor, as Facebook emojis have become distinguishable from Android emojis) but also for ownership.

So, in the legal sense, I do not know if the bell emoji that Rachel used in LO is legally hers to use, or if it's even subject to such laws (it could be an open source image meaning it's free-for-all). I'm hoping for her sake she's not breaking any sort of copyright ownership laws, but I'm also not a lawyer and wouldn't know how to get that information even if I wanted to lmao

Aside from the legal, it's also just... sigh, I'm gonna get into more opinionated territory here, but even if something is open source, even if you're legally free to use a stock photo or other tool to create your comic, there's also the ethics/integrity of it. Lore Olympus is not a Canvas comic. It is not an indie hobbyist project. It's a commercial product with multiple people working on it behind the scenes, book deals, merch deals, a TV deal, and an upcoming feature at this year's SDCC, with Rachel headlining alongside Cassandra Clare (Mortal Instruments) and Jeff Smith (BONE). Webtoons is trying very hard to market LO as a 'flagship' series and convince the public that it can stand alongside other literature juggernauts.

What I'm trying to say here is, if Rachel did legally use it, it doesn't make it any less cheap. There's a lot of discussion in the art field over the usage of external tools and assets in art creation, especially here in the west. 3D models, AI shaders, gradient maps - there are tons of things that exist now that stand to benefit artists, but can be abused or used poorly, being used as less of a tool to benefit an artist with pre-existing skills and more as a cheap shortcut to circumvent actual skill/effort.

The bell emoji isn't the heart of the issue I pointed out in that post. If it were an isolated thing, if LO were an otherwise impeccable comic with high-effort art and just one little picture of a bell, it wouldn't be that big of an issue.

But LO isn't that comic. The recipe of its art development week after week has become very cheap and low-effort, and the bell is really just the cherry on top.

And just to make it clear, I do stand by artists being able to use tools that make their lives easier. None of this is to say it's wrong to use stock images, or 3D models, or gradient maps, or whatever have you. Those tools exist to help and can be used in fun and experimental ways to bring new perspectives and life to your work. And I'm not going to scrutinize whatever shortcuts are being used in a comic that's being made for free by a hobbyist or someone who's still learning.

But like all tools, there are still ways to use them to the detriment of your own work, either due to a lack of understanding as to how that tool works, or lack of effort to blend it into your work. It can make it glaringly obvious that third-party assets are being used, and can often distract from what you've drawn (the complete opposite of what most people are trying to achieve).

When I think of art shortcuts and tools being used poorly, I think of Let's Play and its stock photo background characters.

I think of Time Gate: [AFTERBIRTH]'s stiff default 3D models that result in lifeless poses and restricted body types, which I am VERY eager to move on from LMAO

I think of LO's 3D backgrounds with only 1-2 colors thrown in and the characters floating in front of them. Or sometimes no characters at all even when people are speaking.

And of course, I think of the emoji bell, which could have easily just been drawn as a door or an actual doorbell, and not some random grey bell copied and pasted from a Google search.

All that's to say, too much reliance on poorly-implemented assets can take a great piece of work down to a mediocre one. Of course, the assets definitely aren't the only issue with LO, but they are definitely a piece of the problem. There might not be anything 'wrong' with using assets, but they can still be used poorly or result in cheap-looking work and that's primarily what I'm calling out here.

#lore olympus critical#lo critical#antiloreolympus#anti lore olympus#ama#ask me anything#anon ask me anything#anon ama

77 notes


Text

Jest: A Concept for a New Programming Language

Summary: "Jest" could be envisioned as a novel computer programming language with a focus on humor, playfulness, or efficiency in a specific domain. Its design might embrace creativity in syntax, a unique philosophy, or a purpose-driven ecosystem for developers. It could potentially bridge accessibility with functionality, making coding intuitive and enjoyable.

Definition: Jest: A hypothetical computer language designed with a balance of simplicity, expressiveness, and potentially humor. The name suggests it might include unconventional features, playful interactions, or focus on lightweight scripting with a minimalist approach to problem-solving.

Expansion: If Jest were to exist, it might embody these features:

Playful Syntax: Commands and expressions that use conversational, quirky, or approachable language. Example:

    joke "Why did the loop break? It couldn't handle the pressure!";
    if (laughs > 0) {
        clap();
    }

Efficiency-Focused: Ideal for scripting, rapid prototyping, or teaching, with shortcuts that reduce boilerplate code.

Modular Philosophy: Encourages user-created modules or libraries, reflecting its playful tone with practical use cases.

Integrated Humor or Personality: Built-in error messages or prompts might be witty or personalized.

Flexibility: Multi-paradigm support, including functional, procedural, and object-oriented programming.

Transcription: An example code snippet for a Jest-like language:

    // Hello World in Jest
    greet = "Hello, World!";
    print(greet);
    laugh();

A Jest program that calculates Fibonacci numbers might look like this:

    // Fibonacci in Jest
    fib = (n) => n < 2 ? n : fib(n-1) + fib(n-2);

    joke "What's the Fibonacci sequence? You'll love it, it grows on you!";
    n = 10;
    print("The Fibonacci number at", n, "is:", fib(n));
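For comparison, the same naive recursion can be written in a real language. This is a minimal Python sketch that only mirrors the logic of the snippet above; Jest is hypothetical, so nothing here says how an actual Jest implementation would behave, and the joke is simply printed as a string.

```python
def fib(n):
    """Naive recursive Fibonacci, mirroring the ternary in the Jest-style snippet."""
    return n if n < 2 else fib(n - 1) + fib(n - 2)


print("What's the Fibonacci sequence? You'll love it, it grows on you!")
n = 10
print("The Fibonacci number at", n, "is:", fib(n))
```

For n = 10 this prints 55. The recursion recomputes the same values many times, so it is only practical for small n.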

Potential Domains:

Gamified education

Creative industries

AI-driven storytelling

Interactive debugging

Would you like me to refine or explore additional aspects?

Certainly! If we were to imagine Jest as the brainchild of a creative coder or team, their portfolio would likely include other innovative or experimental programming languages. Let’s expand on this concept and invent some plausible complementary languages the same inventor might have designed.

Related Languages by the Inventor of Jest

Pantomime

Description: A visual programming language inspired by gesture and movement, where users "drag and drop" symbols or create flowcharts to express logic. Designed for non-coders or children to learn programming through interaction.

Key Features:

Icon-based syntax: Conditional loops, variables, and functions represented visually.

Works seamlessly with Jest for creating visual representations of Jest scripts.

Sample Code (Visual Representation): Flowchart blocks: Input → Decision → Output.

Facet

Description: A declarative programming language focusing on creativity and modularity, aimed at artists, designers, and 3D modelers. Facet could integrate well with game engines and creative suites like Blender or Unity.

Key Features:

Embedded visual tools for shaders, animations, and simulations.

Simplified expressions for creative coding (e.g., animations and generative art).

Sample Code:

    shape = circle(radius: 10, color: "blue");
    animation = wave(shape, amplitude: 5, frequency: 2);
    render(animation, duration: 10s);

Quip

Description: A lightweight scripting language built for humor, similar to Jest, but more focused on natural language processing, chatbots, and interactive storytelling.

Key Features:

Syntax mirrors conversational English.

Designed for AI integration, especially in storytelling apps.

Sample Code:

    character "Alice" says "Hello! What's your name?";
    user_input -> name;
    character "Alice" says "Nice to meet you, {name}!";

Cryptic

Description: A language for designing cryptography algorithms and secure communications. Aimed at developers interested in blockchain, encryption, or cybersecurity.

Key Features:

High-level abstractions for cryptographic primitives.

Secure-by-design philosophy.

Sample Code:

    key = generate_key(algorithm: "AES", length: 256);
    encrypted_message = encrypt("Hello, World!", key);
    print(encrypted_message);

Harmony

Description: A reactive programming language for building interactive user interfaces, designed to be highly interoperable with Jest and other tools in the suite. Perfect for web developers and app creators.

Key Features:

Two-way data binding with minimal boilerplate.

Component-based architecture.

Sample Code:

    component Button {
        state: clicked = false;
        onClick() {
            clicked = !clicked;
            alert("Button clicked!");
        }
    }
    render(Button);

Inventor’s Vision

The coder or team behind Jest and these complementary languages would likely be passionate about:

Accessibility: Making programming intuitive and fun for people of all skill levels.

Playfulness: Infusing coding with creativity, humor, and expressiveness.

Interoperability: Ensuring all their languages work well together for larger projects.

Would you like me to dive deeper into one of these languages, conceptualize others, or expand Jest further?

Expanded Concepts and Bridge Languages

Pantomime

Expansion: Adds support for AR/VR interfaces where users use gestures to program logic in 3D space. For example, drawing a loop physically creates a repetitive cycle.

Bridge Language: GestureScript: Converts AR/VR gestures from Pantomime into traditional code for broader applications.

Facet

Expansion: Enhanced for generative AI tools, allowing users to define complex artistic styles and AI-driven procedural designs.

Bridge Language: PixelForge: Focused on exporting Facet code into game engines, enabling real-time rendering pipelines for interactive environments.

Quip

Expansion: Includes pre-trained AI models for dynamic storytelling, allowing Quip scripts to evolve based on user interaction.

Bridge Language: Narrate: Combines Quip scripts with voice-to-text technologies for live storytelling in games or educational tools.

Cryptic

Expansion: Introduces quantum-safe cryptography primitives and allows simulation of blockchain-like ecosystems for testing purposes.

Bridge Language: CipherBridge: Translates Cryptic code into various blockchain frameworks like Ethereum or Solana.

Harmony

Expansion: Gains support for cross-platform development, allowing users to write code for web, mobile, and IoT devices simultaneously.

Bridge Language: Symphony: Converts Harmony components into platform-native scripts for seamless integration.

Additional Bridge Languages

Echo: Bridges Quip and Jest, enabling storytelling code to trigger functional scripts for immersive experiences.

Morph: A universal language that compiles any Jest family code into executable binaries for specific operating systems.

Thread: Integrates Cryptic and Harmony to build secure, reactive user interfaces for fintech applications.

Canvas: Bridges Facet and Pantomime to create interactive art in AR/VR, blending visual coding with gesture inputs.

Let me know if you'd like a deeper dive into any specific language or bridge concept!

Here's a detailed exploration of Echo, Morph, Thread, Canvas, and all previously mentioned languages, emphasizing their unique applications and advanced functionalities.

Expanded Bridge Languages

Echo

Purpose: Links storytelling elements in Quip with functional programming in Jest, enabling dynamic, immersive interactions between narrative and logic.

Key Features:

Story-driven logic triggers: e.g., a character’s dialogue prompts a database query or API call.

Integration with AI tools for real-time responses.

Use Case: AI-driven chatbots that incorporate both storytelling and complex backend workflows.

Sample Code:

    story_event "hero_arrives" triggers fetch_data("weather");
    response = "The hero enters amidst a storm: {weather}.";

Morph

Purpose: Acts as a meta-compiler, translating any language in the Jest ecosystem into optimized, platform-specific binaries.

Key Features:

Universal compatibility across operating systems and architectures.

Performance tuning during compilation.

Use Case: Porting a Jest-based application to embedded systems or gaming consoles.

Sample Code:

    input: Facet script;
    target_platform: "PS7";
    compile_to_binary();

Thread

Purpose: Combines Cryptic's security features with Harmony's reactive architecture to create secure, interactive user interfaces.

Key Features:

Secure data binding for fintech or healthcare applications.

Integration with blockchain for smart contracts.

Use Case: Decentralized finance (DeFi) apps with intuitive, safe user interfaces.

Sample Code:

    bind secure_input("account_number") to blockchain_check("balance");
    render UI_component(balance_display);

Canvas

Purpose: Fuses Facet's generative design tools with Pantomime's gesture-based coding for AR/VR art creation.

Key Features:

Real-time 3D design with hand gestures.

Multi-modal export to AR/VR platforms or 3D printers.

Use Case: Collaborative VR environments for designers and architects.

Sample Code:

    gesture: "draw_circle" → create_3D_shape("sphere");
    gesture: "scale_up" → modify_shape("sphere", scale: 2x);
    render(scene);

Deep Dive into Main Languages

Jest

Philosophy: A playful, expressive scripting language with versatile syntax tailored for humor, experimentation, and creativity.

Core Application: Writing scripts that blend functional programming with a whimsical, human-readable style.

Pantomime

Expanded Features: Includes AI-driven gesture recognition for predictive modeling and natural motion capture.

Advanced Use: Developing AR apps for rehabilitation therapy where exercises are coded as gestures.

Facet

Advanced Use: Procedural generation in games, defining terrains, weather systems, and NPC behaviors with artistic flair.

Quip

Advanced Features: Integrates pre-trained language models for nuanced, character-driven dialogue trees.

Advanced Use: Creating adaptive narratives in games where user decisions alter the story arc.

Cryptic

Advanced Features: Quantum-safe algorithms and AI-enhanced key generation for unbreakable security.

Advanced Use: Designing multi-layered blockchain ecosystems with Cryptic’s high-level abstractions.

Harmony

Advanced Features: Adaptive UIs that respond to user emotion, tracked via AI-enhanced cameras.

Advanced Use: Apps for mental health, where interfaces adjust to user stress levels.

Proposed New Bridge Languages

Chord

Bridges Harmony and Facet for designing interactive music-based visual art.

Use Case: Music videos where the visuals respond to real-time sound input.

Path

Links Cryptic and Canvas for secure AR/VR environments.

Use Case: Virtual meetings in secure, customized 3D spaces.

Fable

Connects Quip and Pantomime for storytelling via interactive gestures.

Use Case: VR education apps where lessons are taught through immersive narratives.

Would you like a specific implementation plan for any of these languages or applications?

#playstation7#deardearestbrands#ps7#digitalconsole#framework#python#celestiallink#Code#Script#Language#pantomime#Echo#Canvas#Fable#Morph#Bridge#Path#Chord#Harmony#Cryptic#Quip#Facet

3 notes


Text

The latest disinformation campaign from the con artists pushing "AI" - Expanding the definition.

As a growing percentage of the population is waking up to the fact that the whole "AI" thing is some combination of outright lies, the same autocomplete function your phone has had forever, and obfuscation of the outright theft of an unfathomable amount of art, writing, contents of theoretically secure databases, etc., the newly emerging strategy to keep the world from pulling the plug on the whole thing, and on the people stealing basically every file accessible on the internet, is a pivot to a defense of "well SOME uses of AI are outright criminal acts sure, but there's a lot of useful things about it too!" However, since that's... you know, not actually true, what they're actually doing is just going around pointing at literally anything cool involving computers and going "see, that's AI!"

Some of the examples I've been seeing have really just been flooring me, too. These hucksters looking for useful software to use as a shield for their scams are pointing at stuff like the basic fundamental concept of a database, AI in the sense of the routines that dictate enemy behavior in games, and even things like freaking shaders. You know, the code that gets applied to 3D models to determine whether they react to lighting realistically or look cartoon-y or whatever. There's a post going around this site right now trying to claim we wouldn't have Miles' jawline in Spiderverse if we shut down the people stealing every digital artist's portfolio to repackage and pretend a computer did it. Yeah no, putting a hand drawn looking line on the edge of a 3D character's jaw is something we've been doing for at least 30 damn years now.
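To make the shader point concrete, here is a minimal sketch of the kind of math involved, written in plain Python rather than real shader code. It assumes unit-length normal and light vectors, and the function names and the band count are made up for the illustration. The "realistic" version is Lambert's cosine law; the "cartoon-y" version is the same number snapped to a few flat steps.

```python
import math


def lambert(normal, light_dir):
    """Smooth diffuse lighting: brightness follows the angle between surface and light."""
    dot = sum(n * l for n, l in zip(normal, light_dir))
    return max(0.0, dot)


def toon(normal, light_dir, bands=3):
    """Cartoon-style lighting: the same value, quantised into `bands` flat steps."""
    intensity = lambert(normal, light_dir)
    step = min(bands - 1, math.floor(intensity * bands))
    return step / (bands - 1)


facing_camera = (0.0, 0.0, 1.0)
light_head_on = (0.0, 0.0, 1.0)
light_from_side = (math.sqrt(0.75), 0.0, 0.5)  # 60 degrees off the normal

print(lambert(facing_camera, light_from_side), toon(facing_camera, light_from_side))
print(lambert(facing_camera, light_head_on), toon(facing_camera, light_head_on))
```

That is a dot product and a rounding step, run once per pixel: no training data and no black box.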

Everything about these scams infuriates me, but this latest set of lies is particularly setting me off, because we're already apparently dealing with a serious problem in society where a huge number of people under the age of 25 or something are just shockingly lacking in basic computer literacy, and now we've got a bunch of con artists trying to convince them that basic math and data structures are beyond all human comprehension and possible only because of whatever goes on inside of the unknowable black box that has an honest to goodness thinking machine, which not only can't be understood, but will stop functioning if you try to stop people from committing blatant theft.

I'm honestly mostly just venting here, but for real, any time you see this sort of crap, please do your civic duty and call out how none of this stuff is "AI" and useful software has nothing to do with any of these creeps scraping every file they can off the internet.

6 notes


Text

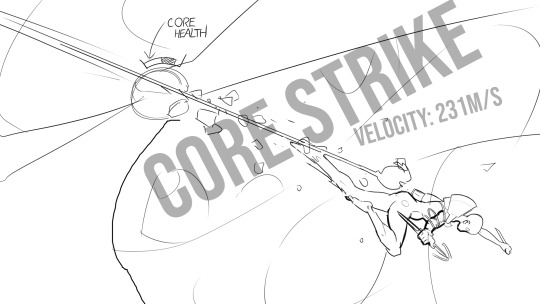

I'm doing a gamedev course, paid for by Her Majesty's Government, because I guess they think more game developers is more taxes or something. I'll take it lol. Am I going to become a game developer? Unclear as yet! I'll be as surprised as you. But I need 'money' to 'eat food', I understand, and this is looking like the best bet.

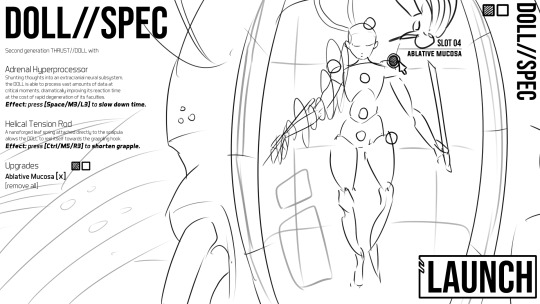

A lot of it is about one big project that I'll be working on over the next ~14 weeks, so I thought maybe people would be interested in what I'm trying to make. Which is THRUST//DOLL, a game about darting and grappling your posthuman shell through hundreds of missiles. You can see some visual inspirations here...

For reasons best known to Past Bryn (ok, it's to learn the tech), I'm trying to do this entire project within Unity's new(ish) Data Oriented Technology Stack (DOTS), which gives you the magic of an Entity Component System, meaning you can shove data in and out of the CPU cache at speeds previously unknown to humanity. DOTS is a paradigm that's supposed to replace the old object-oriented world of GameObjects with something sleek and modern and compiled (using 'Burst', we're still in C# sadly).

So the core idea of ECS is that, instead of storing data on class instances, you put that data in tightly packed arrays of component structs indexed by the same 'entities', and you iterate over these rapidly with 'systems'. If you've heard of e.g. the Rust game engine Bevy, it's the same idea, just... awkwardly jammed into Unity. (Many other engines are following the same sort of idea).
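A toy sketch of that layout, in Python rather than C#, might look like the following. None of this is Unity's DOTS API: `World`, `spawn`, `position_of` and `movement_system` are names invented for the illustration, and a real ECS adds archetypes, chunking and entity recycling on top. It only shows the shape of the idea, with packed component arrays indexed by entity and a system that sweeps over them.

```python
from array import array


class World:
    """Components live in flat packed arrays; an entity is just an index into them."""

    def __init__(self):
        self.positions = array("d")   # x0, y0, x1, y1, ...
        self.velocities = array("d")  # dx0, dy0, dx1, dy1, ...
        self.count = 0

    def spawn(self, x, y, dx, dy):
        """Append one entity's components and return its entity index."""
        self.positions.extend((x, y))
        self.velocities.extend((dx, dy))
        entity = self.count
        self.count += 1
        return entity

    def position_of(self, entity):
        i = 2 * entity
        return (self.positions[i], self.positions[i + 1])


def movement_system(world, dt):
    """A 'system': one linear pass over the component arrays, no per-object methods."""
    pos, vel = world.positions, world.velocities
    for i in range(len(pos)):
        pos[i] += vel[i] * dt


world = World()
missile_a = world.spawn(0.0, 0.0, 1.0, 2.0)
missile_b = world.spawn(10.0, 10.0, -1.0, 0.0)
movement_system(world, 0.5)
print(world.position_of(missile_a), world.position_of(missile_b))
```

Because the data for every entity sits side by side in memory, the loop in `movement_system` reads it in order, which is the cache-friendly access pattern the paragraph above is describing.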

But... it's had a really rocky history, the API has only just stabilised after most of a decade, and half the DOTS-related information you'll find on the internet is plain out of date, and the rest is either a little inscrutable or long video tutorials.

The first task I've been set by the powers that be at "Mastered" is to make a 'configurator'. The assessment task they had me do to get into the course was also a configurator, I guess someone there really likes configuring things. Anyway, for me that's going to be the character creation screen of THRUST//DOLL, where you swap out bits of your body to get new abilities.

So, have the first of some devlogs where I describe the design decisions I've been making so far. At first all I wanted to do was create a system where there are UI elements that you can click on with a little toggleable circle in the UI that is attached to them. Unity has like three ways of doing everything you can think of, so that involved a lot of digging. Eventually I settled on doing it a fourth way using none of Unity's built-in UI systems, using shader magic.

So I made a noodly looking thing for drawing circles:

Why shadergraph and not the cool hardcore HLSL text editor way? Because sigh DOTS has a bunch of weird boilerplate I didn't have the energy to figure out. 'Because DOTS has a bunch of weird boilerplate' is gonna be the recurring thing in this story I suspect. Anyway Unity ShaderGraph is almost the same as Blender Material Nodes, except you need to know a little bit about rasterisation. Luckily I made a rasteriser in 2017, so I have a decent understanding of this stuff.

A billboard shader, it turns out, is hilariously simple. What you basically want is a polygon that matches the rotation of the camera. However, in a shadergraph, Unity will apply the full MVP matrix no matter what. So the solution is to take the View matrix, which is the camera's transformation, invert it, and apply that before the MVP matrix, with 0 in the w component so that only the rotation is pre-applied and any translation still comes from the MVP matrix. As long as the model isn't rotated at all, it all cancels out.
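If you want to convince yourself the cancellation works, here's a small check of the maths in plain Python. It's a sketch under assumptions, not the shadergraph itself: all the function names are made up, the camera only rotates about the Y axis, the model matrix is a pure translation, and the projection matrix is left out since it doesn't affect the rotation argument.

```python
import math


def mat_vec(m, v):
    """Multiply a 3x3 matrix (tuple of rows) by a 3-vector."""
    return tuple(sum(m[r][k] * v[k] for k in range(3)) for r in range(3))


def transpose(m):
    """Transpose a 3x3 matrix; for a pure rotation this is also its inverse."""
    return tuple(tuple(m[c][r] for c in range(3)) for r in range(3))


def rotation_y(angle):
    """Rotation about the Y axis, used here as the camera's orientation."""
    c, s = math.cos(angle), math.sin(angle)
    return ((c, 0.0, s), (0.0, 1.0, 0.0), (-s, 0.0, c))


def to_view_space(world_point, cam_rot, cam_pos):
    """View transform: subtract the camera position, then undo its rotation."""
    rel = tuple(w - c for w, c in zip(world_point, cam_pos))
    return mat_vec(transpose(cam_rot), rel)


def billboard_vertex(corner, model_pos, cam_rot, cam_pos):
    """Pre-rotate the quad corner by the inverse view rotation, then apply
    the usual model (translation only) and view transforms."""
    # Inverse view with w = 0: only the rotation part acts on the corner.
    pre_rotated = mat_vec(cam_rot, corner)
    world = tuple(p + t for p, t in zip(pre_rotated, model_pos))
    return to_view_space(world, cam_rot, cam_pos)
```

In view space the corner ends up at the quad's centre plus its original offset, whatever the camera angle, which is exactly 'a polygon that matches the rotation of the camera'. Give the model its own rotation and the cancellation no longer holds.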

Anyway I figured out how to do overrides with DOTS components, which is neat, so I can feed in numbers into my shader per entity. The next step was to figure out how to create entities and components to display these little circles... which is the subject of devlog #2.

This one's basically a DOTS cheat sheet where I boil down the main things you'd want to know how to do. If you ever felt like trying out Unity DOTS, I really, really hope it will save you some of the misery I've had. There are so many weird gotchas (you fool, you saved a temporary negative entity index!) but the good part is that it really forces you to learn what's going on in this thing.

That is a good part, right?

Anyway here's the milestone I reached today: cubes you can click on at like 200FPS. It will revolutionise gaming, I think.

Yeah so that looks like shit, but the code is cool, and now the code's there I can make something that looks cool instead of bad!

More updates soon, I have to work pretty hard at this thing. Have another concept sketch!

19 notes

·

View notes

Text

This. Even if GenAI perfected creating A Dude it doesn't provide any way to specify a structure that must be tweaked by a specific amount.

AI fails utterly at perspective, as laid out above, but also at anatomical and mechanical art. Dinosaurs with extra legs, fighter planes that are a scrambled mess of triangular surfaces, and I have zero hope it'll know what the fuck an antorbital fenestra or Divertless Supersonic Inlet is. If I went out and soapboxed this to a more visible platform than what I have I'd be flooded with about a trillion "it'll get there!" responses- but where is *"there?"* There would not be AI at all. There would be a tool to help me calculate ideal placements of structural elements or a model of a skeleton to pose to help with perspective, another tool to do the placing- ie, opening any 2D art program or 3D modeling software and just putting the stylus in the appropriate places- and then from there just the fucking fill tool to make a solid object of it, and a shader or brush for all the rust and texture and integument and shadow. Most of these already exist, and what aspects do not will not be descendants of current image breeding software- they will be welcomed by the greater art community as situational tools, where GenAI rots as a proof of concept that somehow grew into a cult, circlejerking itself as the end of all other forms of illustration. Riding off the anti-artist sentiments of the Monkey Jpeg army as the world's longest, most painfully forced Own The Libs bit. GenAI is a dead end.

We have perhaps made a mistake to describe AI as soulless, because that lets chucklefucks call it subjective and move on. No, what it lacks is something far more concrete: design. Style. Intent. Form. You're looking up images, same as going onto Google, except, wowee, technology, these images don't exist! Too bad they suck and you can't fix them. But, hey, at least the fucking teratoma you're trying to pass off as a Dragon will come out with pretty shading. Better light and shadow than I do honestly. Will look good on a wall, from the other side of a room, probably.

This machine kills AI

81K notes

·

View notes

Text

The Best Game Engines for Desktop Game Development: Unity vs. Unreal Engine

When it comes to desktop game development, choosing the right game engine is one of the most critical decisions a developer can make. Two of the most popular and powerful game engines on the market today are Unity and Unreal Engine. Each engine has its strengths and is suitable for different types of projects, but understanding the key differences can help developers make informed decisions. In this article, we will compare Unity and Unreal Engine to help you choose the best platform for your next desktop game project.

Unity: Versatility and Ease of Use

1. User-Friendly Interface

Unity is widely recognized for its intuitive interface, making it a popular choice for both beginners and experienced developers. It has a drag-and-drop system and a modular component-based architecture, allowing users to quickly prototype games without extensive coding experience. For those new to game development, Unity’s straightforward layout, coupled with a massive collection of online tutorials and documentation, makes it an ideal starting point.

2. Wide Platform Support

One of Unity’s key strengths is its flexibility in supporting various platforms. Whether you’re developing for desktops (Windows, macOS, Linux), mobile devices, consoles, or even augmented and virtual reality (AR/VR), Unity allows seamless cross-platform deployment. This means developers can build a game for desktop and then easily port it to mobile or consoles with minimal adjustments. This wide platform support has made Unity a popular choice for indie developers and smaller studios looking to reach multiple audiences without needing different development pipelines for each platform.

3. Graphics and Performance

While Unity is highly versatile, its graphics capabilities are often perceived as less powerful than Unreal Engine, particularly when it comes to photorealism. Unity uses the Universal Render Pipeline (URP) and High Definition Render Pipeline (HDRP) to improve visual fidelity, but it tends to shine more in 2D, stylized, or mobile games rather than highly detailed 3D games. That said, many desktop games with excellent graphics have been developed using Unity, thanks to its extensive asset store and customizable shaders.

4. C# Scripting Language

Unity uses C# as its primary scripting language, which is relatively easy to learn and widely supported in the programming community. C# allows for efficient, object-oriented programming, making it a good choice for developers who prefer a manageable learning curve. Unity’s scripting also integrates smoothly with its user interface, giving developers control over game mechanics, physics, and other elements.

5. Asset Store

Unity’s asset store is another standout feature, offering a wide range of assets, tools, and plugins. From pre-made 3D models to game templates and AI systems, the asset store allows developers to speed up the development process by purchasing or downloading assets that fit their game.

Unreal Engine: Power and Photorealism

1. Unmatched Graphics and Visual Quality

Unreal Engine is renowned for its stunning graphics capabilities. It excels in creating high-fidelity, photorealistic visuals, making it the go-to choice for AAA game studios that require visually complex environments and characters. Unreal’s graphical power is largely attributed to its Unreal Engine 5 release, which includes cutting-edge features such as Lumen (a dynamic global illumination system) and Nanite (a virtualized geometry system that allows for the use of highly detailed models without performance hits).

If your desktop game requires breathtaking visual effects and highly detailed textures, Unreal Engine provides the best tools to achieve that. It’s particularly suited for action, adventure, and open-world games that need to deliver immersive, cinematic experiences.

2. Blueprints Visual Scripting

One of Unreal Engine’s defining features is its Blueprints Visual Scripting system. This system allows developers to create game logic without writing a single line of code, making it incredibly user-friendly for designers and non-programmers. Developers can use Blueprints to quickly build prototypes or game mechanics and even create entire games using this system. While Unreal also supports C++ for those who prefer traditional coding, Blueprints offer an accessible way to develop complex systems.

3. Advanced Physics and Animation Systems

Unreal Engine is also praised for its robust physics and animation systems. It uses Chaos Physics to simulate realistic destruction, making it perfect for games that require accurate physics-based interactions. Additionally, Unreal Engine’s animation tools, including Control Rig and Animation Blueprints, offer in-depth control over character movements and cinematics, helping developers craft highly polished, fluid animations.

4. High-Performance Real-Time Rendering

Thanks to its real-time rendering capabilities, Unreal Engine is often the engine of choice for cinematic trailers, architectural visualization, and other projects that require real-time photorealism. These rendering tools allow developers to achieve high-quality visuals without compromising on performance, which is particularly important for large-scale desktop games that demand a balance between graphics and frame rates.

5. Source-Code Access

Unreal Engine is source-available rather than open-source: developers who accept Epic's license terms get access to the engine’s full source code. This level of access allows for complete customization, enabling developers to modify the engine to suit their specific game needs. This is an attractive feature for larger studios or developers working on ambitious projects where customization and optimization are critical.

Which Engine Is Best for Desktop Game Development?

Both Unity and Unreal Engine have their strengths and are widely used in desktop game development. The choice between the two largely depends on the type of game you want to create and your specific needs as a developer:

Choose Unity if you’re looking for versatility, ease of use, and multi-platform support. Unity excels in 2D games, mobile games, and projects that require quick prototyping or simple development pipelines. It’s ideal for indie developers or small teams who want a cost-effective solution with a gentle learning curve.

Choose Unreal Engine if your goal is to create a visually stunning, high-performance desktop game. Unreal is the best choice for AAA-quality games or those that require photorealistic graphics, complex environments, and advanced physics. It’s suited for larger teams or developers with experience in game development who want to push the boundaries of what’s visually possible.

Ultimately, both Unity and Unreal Engine are powerful tools in their own right, and the best engine for you depends on your game’s goals and technical requirements.

0 notes

Text

:]

#don't mind weird artefacts on skirt#i messed up the initial render and her undies are clipping through#anthro#moth#animation#ai shader for 3d model#neuroslug

474 notes

·

View notes

Text

Car modeled with reference to one of Damon Moran's drawings.

#3d modeling#3d model#art#blender#zbrush#3ds max#maya#3d render#Cartoon shader#toon shader#Cartoon car#Car#artitst on tumblr#blender eevee#Black car#no ai art#3d concept#3d car

1 note

·

View note

Text

Hire Unity 3D Developer: A Comprehensive Step to Achieving Game Development Success

Why Hire a Unity 3D Developer?

Unity 3D is among the most powerful game engines in use today. It supports 2D, 3D, VR, and AR games, and it lets developers write code once and deploy it across platforms such as PC, mobile, consoles, and web browsers. Delivering a high-quality, immersive gaming experience calls for an expert Unity 3D developer, for several reasons:

Expertise in Game Development: A Unity 3D professional knows C#, Unity's scripting language, and understands the Unity engine in depth. An expert can proficiently implement game mechanics, physics, and AI components within your project.

Cross-Platform Development: With Unity 3D, a developer can create games that run across platforms. Be it iOS, Android, Windows, macOS, or VR devices, Unity 3D offers flexibility that reduces the time and cost of developing platform-specific versions of your game.

Custom Solutions: Each game is different, so a professional Unity 3D developer can provide custom solutions based on your requirements, from creating shaders to implementing multiplayer functionality to optimizing gameplay performance, so you can realize your vision.

Advanced Features: With features such as real-time rendering, particle systems, and built-in physics engines, Unity 3D developers can deliver visually impressive and smooth gameplay.

Key Skills to Hunt for in a Unity 3D Developer

It is worth looking at the key skills a Unity 3D developer needs to help your project grow. The essential skills include:

C#: Unity 3D uses C# as its primary scripting language. Any programmer building a Unity game or application needs in-depth C# skills to implement game logic, control objects, and manage other aspects of gameplay.

Unity Engine Proficiency: The developer needs hands-on experience with the Unity editor, asset integration, lighting, animation, and physics. How well you can use Unity's full feature set depends directly on this proficiency.

3D Modeling and Animation: Some, but not all, Unity developers know how to integrate 3D models and animations. It is very beneficial if they also know how to work with designers and artists.

Knowledge of Game Physics: A good Unity developer should know enough about simulating realistic physics to control gravity, character movement, and interactions among objects. This is essential for lifelike game environments.

Multiplayer Game Development: If the game you plan is multiplayer, the Unity developer should know how to implement multiplayer networking frameworks, manage synchronization among users, and write server-side logic.

AR/VR Development: Here you should look for developers with experience working with hardware such as Oculus Rift, HTC Vive, or Microsoft HoloLens. They should know how to integrate those devices and optimize the game for immersive experiences.

Where to Find Unity 3D Developers

There are many platforms and avenues that can help you find qualified Unity 3D developers:

Freelance Platforms: Websites such as Upwork, Freelancer, and Toptal list highly skilled freelance Unity 3D developers. You can check their portfolios, ratings from previous clients, and experience relevant to your project to make sure you hire the right person.

Development Agencies: Specialized firms like AIS Technolabs employ experienced Unity 3D developers. With an agency, you will typically get a team of developers with varied skill sets who provide end-to-end support for your project.

Game Developer Communities: Communities such as Unity Connect, GitHub, and Unity developer forums can also turn up good candidates. A developer's contributions and project showcases give you an idea of their work.

LinkedIn: This is another good place to look for Unity developers. You can browse profiles, read recommendations, and post job listings to find the right candidates.

How to Choose the Best Unity 3D Developer for Your Project

After shortlisting a few candidates, follow these steps to choose the best Unity 3D developer.

Review Their Portfolio: See what they have done before. Check whether they have worked on games like yours in terms of style and complexity, and whether their work shows consistent quality and a proper understanding of game mechanics.

Assess Their Problem-Solving Skills: Game development often involves solving complex problems, be it performance optimization, bug fixes, or implementing a feature. Ask the developer what kind of challenges they faced in past projects and how they overcame them.

Test Technical Knowledge: Conduct technical interviews to determine their competency with Unity, C#, and any other specific requirements your project might have. You can also give them a small task or test to check their coding abilities.

Communication and Collaboration: Game development often involves collaboration between designers, artists, and other people on your team. Ensure that the developer can communicate well with your team.

Discuss Deadlines and Budget: Discuss your project deadlines and budget thoroughly with the developer. A good developer should be able to give you a realistic timeline and a cost estimate based on your requirements.

Conclusion

AIS Technolabs has skilled Unity 3D developers who specialize in creating rich cross-platform games and engaging interfaces, with deep knowledge of game mechanics, VR/AR development, and real-time simulations. By delivering an optimized, smooth user experience and bringing each vision to life with cutting-edge technology, AIS Technolabs stands as a top choice among businesses looking to hire Unity 3D developers. If you require more information or would like to discuss the process of hiring a Unity 3D developer, please do not hesitate to contact us!

FAQs

1. What is Unity 3D? And why would anybody hire a Unity 3D developer?

Unity 3D is one of the most capable game engines for creating 2D, 3D, VR, and AR games and applications. With the help of a Unity 3D developer, you can build visually rich, technically efficient games that run across mobile, PC, and console platforms.

2. What are the best skills to look for when hiring a Unity 3D developer?

Proficiency in C# programming; experience with Unity game engine features; knowledge of 3D modeling and animation integration; knowledge of game physics, cross-platform optimization, AR/VR development, and multiplayer systems. Depending on your project, you may need some of these more than others.

3. How much does a Unity 3D developer cost?

Prices depend on the project, the developer, and location. Freelancer rates vary widely, from $25 an hour up to $150 an hour. Development agencies structure their rates according to the scope of the project.

4. Should I hire a freelance Unity 3D developer or a development agency?

For bigger or more complex projects, a development agency like AIS Technolabs ensures that you have a dedicated team of developers with a range of expertise and comprehensive project management. For smaller projects or limited budgets, a freelance Unity 3D developer may be the way to go.

5. Can Unity 3D developers build cross-platform games?

Yes, Unity 3D has cross-platform capabilities. With the help of a good Unity 3D developer, you can create a game that runs well on many kinds of platforms: mobile (iOS and Android), PCs, consoles (PlayStation and Xbox), and VR gear.

#hire developers#hire#developer#india#usa#canada#virtual reality#hire unity 3d developer#unity#unity3d

0 notes

Text

What are Game Engines and How Do They Work?

At the core of every video game lies a game engine - sophisticated software that handles the rendering of graphics, the physics simulations, the playback of animations and sound, the implementation of game rules, handling of input, and much more. Without a game engine, game development would be immensely difficult and time-consuming. Let's take a closer look at what they are and how they enable efficient game development.

What is a game engine?

A Game Engine is a framework designed for the creation and development of video games. They provide developers with a way to implement advanced game functionality without having to write it from scratch for each new project. Some key characteristics of them include:

- Renderer: Handles the rendering of 3D graphics, shaders, textures, sprite animations, lighting effects and more to display the visual elements of the game. Popular 3D graphics APIs supported include Direct3D and OpenGL.
- Physics engine: Simulates real-world physics to determine how objects in the game interact - for example how characters and objects move, collide or are affected by forces like gravity. Popular physics engines include PhysX, Havok and Bullet.
- Animation system: Manages skeletal meshes and animation playback for game characters and creatures. Animation data is tied to the physics simulation for realistic movement.
- Scripting support: Allows incorporating scripting languages like C#, Lua or JavaScript to handle game logic, AI, events and interactions through pre-defined functions and classes.
- Asset management: Provides tools to import, organize and access game assets like models, textures, sound effects and more used in the game world.
- Networking functionality: Integrates online multiplayer support through features like network transport layers and client-server or peer-to-peer architectures.
- Integrated development environment (IDE): Provides a unified interface to access and optimize all features through visual editors, debuggers and other tools during development.

- Godot: A fully-featured, free and open source 2D and 3D engine well-suited for indie games. Scriptable in GDScript with a node-based visual scene editor.

Get more insights on Game Engines

About Author:

Ravina Pandya, Content Writer, has a strong foothold in the market research industry. She specializes in writing well-researched articles from different industries, including food and beverages, information and technology, healthcare, chemical and materials, etc. (https://www.linkedin.com/in/ravina-pandya-1a3984191)

0 notes

Text

Industry Practice Blog 1- The Rise of Hyper-Realistic 3D Characters: Blurring the Lines Between Digital and Reality:

As the world of 3D character modeling continues to develop, we are witnessing a remarkable shift towards unprecedented levels of realism and hyper-realism. This change is being driven by a convergence of technological advancements, creative ingenuity, and a constant quest for immersive digital experiences.

The Pursuit of Realism:

Articles [1], [2], and [5] highlight the increased focus on achieving realism and hyper-realism in 3D character modeling. This involves meticulous attention to detail, from accurate anatomical structures to nuanced expressions and movements. Techniques like photogrammetry, high-dynamic-range imaging, and advanced rendering are enabling 3D artists to capture the intricate details of the human form with striking precision.

The result is a new generation of 3D characters that are virtually indistinguishable from their real-world counterparts. As article [2] notes, modern 3D character models boast an extraordinary level of detail and realism, with high-resolution textures, advanced shaders, and physically based rendering techniques creating a seamless blend of the digital and the physical.

The Impact of Technological Advancements

Underlying this shift towards hyper-realism is a wave of technological advancements that are changing the landscape of 3D character modeling. As articles [1], [3], and [4] discuss, the integration of artificial intelligence (AI) is simplifying the creation process, automating complex tasks, and adding depth and nuance to digital characters.

Besides, the rise of virtual and augmented reality (VR/AR) technologies is blurring the lines between the digital and physical worlds, enabling more immersive and interactive design processes. Article [3] highlights how advancements in 3D scanning, volumetric video, and light field displays are poised to transform the way we create and experience 3D characters.

The Broader Applications of Hyper-Realism

The impact of hyper-realistic 3D characters reaches beyond the domain of entertainment. As articles [1] and [4] discuss, these advances are transforming industries like healthcare, education, and manufacturing, where lifelike digital representations are improving training, simulation, and collaboration.

The article dives into the potential of hyper-realistic avatars to give more accurate and engaging representations of users in virtual environments, fostering a deeper sense of presence and emotional resonance. This has broad implications for the development of the metaverse and the future of digital experiences.

The Challenges and Ethical Considerations

While the pursuit of hyper-realism in 3D character modeling holds enormous potential, it also raises significant ethical considerations. As article [4] highlights, the development of deepfakes and the potential for misuse of this technology must be carefully navigated.

Furthermore, article [5] stresses the need for 3D artists to strike a balance between technical mastery and artistic expression, ensuring that the quest for realism doesn't come at the expense of inventiveness and creativity.

Conclusion

The evolution of 3D character modeling towards hyper-realism is a testament to the remarkable advancements in technology and the unwavering creativity of 3D artists. As we continue to push the limits of what's possible, we must stay aware of the ethical implications and the importance of keeping a balance between technical skill and artistic vision.

The future of 3D character design promises to be an exciting and transformative journey, one that will continue to blur the lines between the digital and the physical, and redefine the way we experience and interact with the virtual world.

Bibliography:

[1] Reporter, S. (2023, August 18). Emerging trends in 3D character modeling - Your Harlow. Your Harlow. https://www.yourharlow.com/2023/08/18/emerging-trends-in-3d-character-modeling/

[2] Salvi, P. (2023, November 15). A Peek into the World of 3D Character Modeling. Encora. https://www.encora.com/insights/a-peek-into-the-world-of-3d-character-modeling

[3] Orgeron, D. (2023, February 14). CES 2023: 5 trends that could impact 3D artists - TurboSquid Blog. TurboSquid Blog. https://blog.turbosquid.com/2023/02/14/ces-2023-5-trends-that-could-impact-3d-artists/

[4] Lucid Reality Labs. (2022, October 21). Digital Twins and Hyper-Realistic Avatars, the 3D modeling of 2022. https://www.linkedin.com/pulse/digital-twins-hyper-realistic-avatars-3d-modeling-2022-

[5] Bob, Bob, & Wow-How. (2024, February 8). The Illusion of Reality: Exploring hyperrealism in 3D animation. Wow-How Studio - Video Production, 2D & 3D Animation. https://wow-how.com/articles/hyperrealism-in-animation

0 notes