#ai at the edge


Text

Not telling y'all that you should be able to identify AI slop (but it is a valuable skill, you totes should), but if you're to be accusing artists of being AI left and right at least go and do your homework, or at least do the bare minimum and use AI identification tools like Hive Moderation, so you 1- don't ruin someone's livelihood 2- don't make a clown out of yourself maybe

Like, i get it, AI slop and "AI artists" pretending to be genuine is getting harder and harder to identify, but just accusing someone out of the blue and calling it a day doesn't make it any better.

The AI clowns shifted to styles that have less "tells" and the AI arts are becoming better. Yeah, it sucks ass.

They're also integrating them with memes, so you chuckle and share, like those knights with pink backgrounds, some cool frog and a funny one liner, so you get used to their aesthetic.

This is art from the upcoming Final Fantasy set for MtG. This is someone on Reddit accusing someone of using AI. From what i can tell, and i fucking hate AI, there is NO AI used on this image.

As far as i can tell and as far as any tool i've used, the Artist didn't use AI. which leads to the next one:

they accused the artist of this one of using Ai. the name of this artist is Nestor Ossandon.

He has already been FALSELY ACCUSED of using AI, because he drew a HAND THAT LOOKED A LITTLE WEIRD, which caused a statement from D&D Beyond, confirming that no AI has been used.

Not to repeat myself, they're accusing the art above, that is by Nestor, of having used Ai.

REAL artists are not machines. And just like the AI slop, we are not perfect and we make mistakes. The hands we draw have wonky fingers sometimes. The folds we draw are weird. But we are REAL. We are real people. And hey, some of our "mistakes" sometimes are CHOICES. Artistic choices are a thing yo.

If you're to accuse someone of using Ai, i know it's getting hard to identify. But come on. At least do your due diligence.

#no#i will not “tag” the Ai artists of the catsune miku and the cat cuz for all i care AI artists can go to hell and burn#but like#there are many of them#and when you figure out how to spot ai and how the AI generate the images#please trust me on this one#it gets super easy to ident like 80% of most of it#the catsune miku is the HARDEST to ident so far#because it did something out of the ordinary#but otherwise the others have very easy tells#they're trying to mimic styles like watercolors and acrylic#that have blurred edges#and impressionism#that have undefined shapes#so their “mistakes” pass as intention#but that's besides the point#what i want here is people to just think a little bit before randomly accuse people#cuz it's really getting out of hand#and god i do love seeing an AI artist getting their wig yanked out of their fucking scalp for pretending to be a human#but y'all need to know when to do it#some of you don't know how to behave and it shows man

6K notes


Text

Haven't been the same since reading this comment

#spiderverse#miles morales#gwen stacy#spidergwen#ghostflower#ai even hung upside down on the edge of my couch to try this like an idiot#can confirm you have to make an effort#atsv#raich rambles

2K notes


Text

Worship my ass loser

Kiss my perfect ass and thank me

#beta sub#beta slave#beta faggot#beta boi#beta sissy#findom queen#ai findom#findom sub#findom sissy#faggot humiliation#submisive faggot#exposed faggot#diaper faggot#ball torture#cbt and ballbusting#chastized#degrade and humiliate me#edging and denial#permanent sissy#permanent denial#keyholder#chastikey#chastisement#foot feddish#footgoddess#foot soles#goddess worship#findom worship#worship me

494 notes


Text

I was gonna do some serious sketches but got silly with them as usual...

#yes i did post one of these before but then deleted it because....well.#also don't ask why hiccup looks like that...i don't know man#nox draws#sketchies#🗡#dagur the deranged#hiccup haddock#rtte#race to the edge#httyd#dreamworks dragons#tw stabbing#(call this my honourary ides of march addition? /j)#tw blood#idk what more tws to add but like it's nothing graphic so idkkkk#also i think i am getting better at drawing his hair so i'm kinda proud of that#yayyy#yk sometimes i worry i'm gonna get accused of like ai or smth because of the way i fuck up details and hands

541 notes


Text

🌸️ (watercolor on cotton rag paper)

#hu tao#watercolor#traditional art#透明水彩#genshin impact#my art#not ai art#random fact: i made my own hu tao cosplay a few years ago and the plum blossom branch was the most fun part to make#because to make the flower petals you use a lighter to burn/seal the edges of the fabric#it felt fitting for her

644 notes


Note

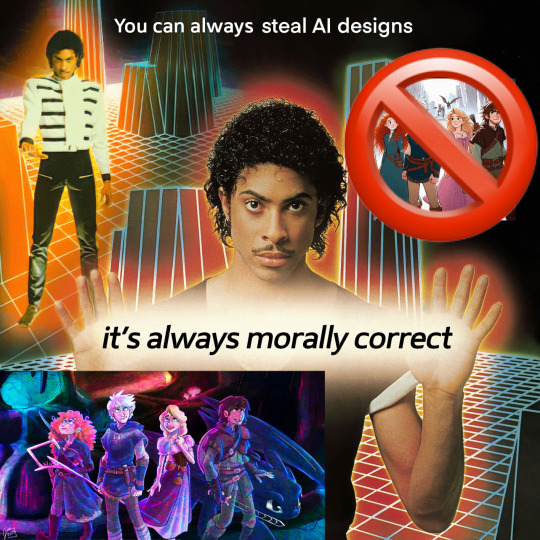

hey! i’m an artist and i was wondering what about the httyd crossover art made it obviously AI. i’m trying to get better at recognizing AI versus real art and i totally would have just not clocked that.

Hey! This is TOTALLY okay to not have recognized it, because I DIDN'T AT FIRST, EITHER. Unfortunately there’s no real foolproof way to distinguish real art from the fake stuff. However I have noticed a general rule of thumb while browsing these last few months.

So this is the AI generated image I used as inspiration. I will not be tagging the account that posted it because I do not condone bullying of any type, but it’s important to mention that this was part of a set of images:

This is important because one of the BIGGEST things you can use to your advantage is context clues. This is the thing that clued me in: right off the bat we can see that there is NO consistency between these three images. The art style and outfits change with every generated image. They're vaguely related (I.E. characters that resemble the Big Four are on some sort of adventure?) and that's about it. Going to the account in question proved that all they posted were AI generated images. All of which have many red flags, but for clarity's sake we'll stick with the one that I used.

The first thing that caught my eye was this???? Amorphous Blob in the background. Which is obviously supposed to be knights or a dragon or something.

Again, context clues come into play here. Artists will draw everything With A Purpose. And if what they're drawing is fanart, you are going to recognize most of what you see in the image. Even if there are mistakes.

In the context of this image, it looks like the Four are supposed to be running from these people. The thing that drew my attention to it was the fact that I Didn't Recognize The Villains, and this is because there is nothing to recognize. These shapes aren't Drago, or Grimmel, or Pitch, or any other villain we usually associate with ROTBTD. They're just Amorphous Blobs that are vaguely villain shaped.

Which brings me to my second point:

Do you see the way they're standing? There is no purpose to this. It throws the entire image off. Your eye is drawn to the Amorphous Villain Blobs in the background, and these characters are not reacting to them one bit.

Now I'm not saying that all images have to have a story behind them, but if this were created by a person, it clearly would have had one. Our group here is not telling a story, they are posing.

This is because the AI does not see the image as a whole, but as two separate components: the setting, and the description of the characters that the prompter dictates. I.E. "Merida from Brave, Jack Frost from ROTG, Rapunzel from Tangled, and Hiccup from HTTYD standing next to each other"

Now obviously the most pressing part of this prompt is the characters themselves. So the AI prioritizes that and tries to spit out something that WE recognize as "Merida from Brave, Jack Frost from ROTG, Rapunzel from Tangled, and Hiccup from HTTYD standing next to each other".

This, more times than not, is going to end up with this stagnant posing. Because AI cannot create, it can only emulate. And even then, it still can't do it right. Case in point:

This is not Hiccup. The AI totally thinks this is Eugene Fitzherbert. Look at the pose. The facial structure. The goatee. The smirk. The outfits. He's always next to Raps. Why does he have a quiver? Where's Toothless? His braids? His scar??

HE HAS BOTH OF HIS LEGS.

The AI. Cannot even get the most important part of its prompt correct.

And that's just the beginning. Here:

More amorphous shapes.

So these are obviously supposed to be utility belts, but I mean. Look at them. The perspective is all off. There are useless straps. I don't even know what that cluster behind Jack's left arm is supposed to be.

This is a prime example of AI emulating without understanding structure.

You can see this particularly in Jack, between his hands, the "tassels" of his tunic, and the odd wrinkles of his boots. There's just not any structure here whatsoever.

Lastly, AI CANNOT CREATE PATTERNS.

Here are the side-by-sides of the shit I had to deal with when redesigning their outfits. Please someone acknowledge this. This killed me inside. THIS is most recognizable to me, and usually what I look for first if I'm wary about an art piece. These clusterfuck bunches of color. I hate them. I hate them so. much.

Anyways here's some other miscellaneous things I've noticed:

Danny Phantom Eyes

???? Thumb? (and random sword sheath)

Collarbone Necklace (corset from hell)

No Staff :( No Bow :(

What is that.

So yeah. Truly the best thing to do is to just. study it. A lot of times you aren't gonna notice anything just looking at the big picture, you need to zoom in and focus on the little details. Obviously I'm not like an expert in AI or anything, but I do have a degree in animation practices and I'm. You know. A human being. So.

In conclusion:

(Y'all should totally reblog my redesign of this btw)

#rotbtd#the big four#anti ai#ai discourse#fanart#ask#inbox#rise of the brave tangled dragons#httyd#how to train your dragon#hiccup horrendous haddock iii#brave#tangled#rapunzel#merida#jack frost#rotg#rise of the guardians#dreamworks#disney#hijack#frostcup#jackunzel#jarida#mericcup#hicunzel#crossover#hicless#rtte#race to the edge

1K notes


Text

Notes Game - Bladder Torture

notes game to make my bladder stretched to its limits. no cum, just edge and hold like the stupid dumb slut I am (MDNI) wanna help? reblogs, like and comments (any time you want), make me suffer like the useless whore I am

every 1 note is 1 minute to add to my holding

every 5 notes are 100 ml I have to drink

every 10 notes I press on my bladder for 5 seconds

every 50 notes I press my bladder on a counter for 10 sec and release for 5, 3 times

every 70 notes 10 squats

100 notes: after 1 hour I can't hold with my hands or cross my leg anymore

~ after 100 notes:

every 10 notes is a slap on my full bladder

every 20 notes a slap on my open spread pussy

every 40 notes lie on my belly with something under it for 5 min

200 notes: do a workout with full bladder, leaking is not an excuse to stop

220 notes: melt an ice cube in my cunt with panties on, can't remove them (fake pee)

250 notes: body write with humiliating words while sitting on the toilet

+++ I accept tasks, challenges, punishments in the comments/asks

~ punishment

leaking

drink a glass of water + add 10 min + fake pee

wetting / accident

drink 4 glass of water + add 30 min + lay on belly with a small ball on bladder for 10 min

will close on september 11th

#bladder challenge#bladder control#bladder desperation#bladder holding#bladder torture#cl!t torture#humiliation kink#omo hold#pee humiliation#piss holding#degrade and humiliate me#piss humiliation#ruined 0rgasm#0rgasm denial#0rgasm control#bd/sm daddy#pain slvt#free use slvt#dumb slvt#omo challenge#c0cksleeve#c0ckslut#c0ckwh0re#stupid slvt#daddy's good girl#edging kink#desperate wh0re#attention wh0r3#cnc free use#ai pee desperation

760 notes


Text

while I agree that some anti-generative AI arguments are weak and revealing (defense of copyright, or the nebulous idea of soul and what makes art Real Art) nothing's currently gonna shake the association between generative ai and conservative twitter techbros to me. Like I sometimes see "heh... the anti ai people are even more annoying than the ai people" posts and respectfully I still unfortunately use twitter and imo that is not fucking true

#to be clear I do Not like generative ai personally I just think a lot of people come at it from shitty angles on either side#and you've got people saying shit like. suggesting IP holding corporations should issue even more lawsuits#or hand waving any edge cases in regards to disability etc with 'everyone can learn to draw perfectly they're just not trying hard enough'#or trying to categorically define art based on presence of a human soul (subjective and religious concept)#none of this is as bad as twitter techbros though. the things they have to say about art aren't any better

85 notes


Text

oh here's something to really kick the hornet's nest: any argument against ai art that is basically saying ai is cheating because it's too easy, that the process is the most important part, that it's lazy...

...is fundamentally the same argument as people that hate accessibility options in videogames.

#yall are really out here telling people playing around with ai to get gud. its not even ironic thats just what you are doing#and yes obviously you can come up with some scenarios where the distinction matters.#entering ai art in a digital art competition would be just as fucked as easy mode in a game tournament#but those are edge cases and not what the vast majority of people are mad about and you know it

67 notes


Text

No-reference two-hour scrapsketch of Elu Thingol, single hard brush only, for training. Mostly post this because someone accused me of my art being AI and like mate I don't know how much more obvious my human brushstrokes could be. It's not even not AI it's not even GIMP it's basically MSpaint straight out of the 90s.

#Elu thingol#Thingol#Sindar#the silmarillion#Tolkien#Lee art#He can lock me in a tree a little bit if he wants as a kinky treat. I say faggotly#Lmao “ai detectors” have said the same piece of art is anywhere from 1% AI to 97% AI#They are absolutely bullshit algorithms with zero reliability that think the em dash is AI or pixel art is AI#Like they will say the Mona Lisa is AI. Be serious for the love of God use critical skills#Don't use AI to tell you if other stuff is AI I'm going to tear my hair out.#I have consistent tells in my art from my wonky lace fixation to my hair technique to#To my leaving pixelated bits even in the pieces I smooth out to my jewelry/piercings#To unfinished edges or clothes. For the love of God it's so obviously not AI it's not even good enough to be#I did ONE good Lalwen and immediately got accused lmaoooooo like please let me take my own W#silm art

52 notes


Text

I imagine Virgil—while appearing like a regular crow—is just ever so slightly uncanny enough to tell you “that’s not a normal crow” (kinda like how Nikkie described the demon weasels in the Crooked House. They look like normal weasels, for the most part, but something about them seems…Wrong.)

Like, he’s just slightly too big. Sure, maybe he’s just a larger crow, they can get pretty big. But he’s just a bit larger than that. Almost the size of an average raven. But…he’s not a raven. He’s a crow. So why is he the size of a raven?

His eyes are too big. Sure, his eyes are also fucked up already, but ignoring that part. Normal crow eyes are small and beady. Virgil’s eyes are almost too big for his head. They’re almost the size and shape of a human’s.

His feathers are Too Black for a natural crow. His beak is Too Sharp. His body is too amorphous. Like, yeah, it’s vaguely crow shaped when he’s perched or flying, just enough to not question it when you see him out of the corner of your eye, but if you look at him—really look at him—something about the shape is…odd. And the worst part is that you can’t even tell what is off about him. Is it his feathers? His wings? His talons? You’re not quite sure.

I mean, Jericho calls Virgil his “Weird Gross Crow” for a reason.

#idk I was drawing fanart of Jericho with Virgil#(which is being difficult bc my reference pose is…odd. to the point where I can’t tell if it’s ai or not)#and this came to me#Virgil the weird gross crow <3#legends of avantris#edge of midnight#virgil eom#virgil zurn

61 notes



Text

Headcanon that Ais never feels fully rested. He doesn't feel tired, but he doesn't feel right either. Not since joining the groupmind.

He sleeps, but his mind is never really at peace. When and if he manages to fall into a deep enough sleep, he's in a constant state of something akin to lucid dreaming.

He gets flashes of the other members of the group mind in place of any real rest. Their current actions; errant memories; whispers in long-dead languages he's learned to understand.

#also sometimes when he wakes up he's *immediately* violent#wakes up in a rage sometimes and he doesn't really know why--he's the most dangerous one to wake up bc you never know WHO you'll get and#even if you get Ais he doesn't have the wherewithal to stop himself--damage is already done by the time he....#(what is that word i am looking for?)#the word for...debris floating on water? flotsam?? i swear there's another word...#also very difficult to tell when he's asleep - he gets in bed and just breathes evenly and you would think that he MUST be asleep but he#has that kind of ...discipline?? where he can force his body to relax#he sleeps on his back for the most part (less muscle strain) and lays eerily still it's v unnatural#if he's ultra comfy he's a stomach sleeper but Basically No One is aware of this#he'll stomach sleep with Princess & the pack sometimes#would sleep on the bed with his shoes on sometimes i think i'm sorry it's true. just hang 'em off the edge babe there's blood on the soles#sigh ais you are such a balancing act i need to write you MORE#ais touchstarved#toxintouch writing: headcanons#ais headcanon#queue: time for sleep#queued post

98 notes


Text

wearing my tightest shorts I have that barely cover my ass~

I drunk already nearly 2L of water and a big cup of coffee, with this shorts every step is a rub on my clit and pee hole, every time I sit or just squirm they press firmer on my bladder, it's a pure torture😖💦

my bladder hurts so much and the shorts make it even worse, what can I do please???

torture me, feel free to write in asks and comments

#ai pee desperation#bladder torture#piss holding#bladder challenge#bladder control#bladder desperation#bladder holding#humiliation kink#omo hold#pee humiliation#piss slvt#desperate to pee#piss kink#piss slave#piss humiliation#degrade and humiliate me#free use slvt#cnc free use#full bladder#pee kink#dumb wh0re#c0ckslut#c0ckwh0re#dumb puppy#edging kink#edging slave#stupid slvt#bd/sm slave

302 notes


Text

If any of the Batsiblings ever end up in serious medical trouble, to the point they've been forced onto bedrest or put into a medically-induced coma, their siblings will rotate in shifts (and require physical force to be removed sometimes) or hover at windowsills and talk at the patient and meddle so much that Leslie keeps having to smack their hands away from IV lines or wrestle back her stethoscope. But the second said sibling wakes up it converts spontaneously to around the clock taunting, shoulder punches that nearly send them and their crutches into the floor, silent help during PT, and someone coming by to coerce pain meds onto you with bedside manner so bad that you forget all about almost dying and start planning homicide.

#You guys... I have the scariest amount of deja vu about this one#ISTG i have made this post before.#But I myself cannot find it#In any case I assume renditions of it have been made over and over this is not a novel concept#which gets us thinking about what a novel concept even is and the fact that those poor baby AIs (I hate em) are just doing their best to#mimic the way our fleshy brain soup pours everything together and blends it into something new#but they're shitty at it so you can see the hard edges of the words they ripped out of a newspaper to make their collage#devastating truly#batman#dc comics#batfamily#personal#shitpost#batpost

94 notes
