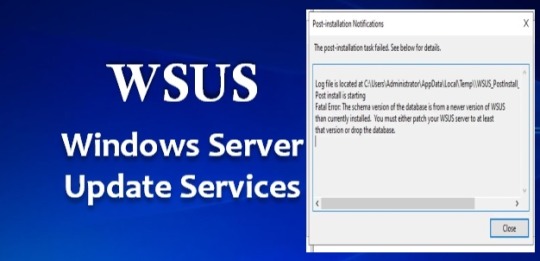

#The schema version of the database


The schema version of the database is from a newer version of WSUS

The WSUS installation from Server Manager fails with a fatal error stating, “The schema version of the database is from a newer version of WSUS than currently installed.” Resolving this error requires either patching the WSUS server to at least that version or dropping the database. Windows Update indicates that the system is up to date. Please see how to delete ADFS Windows Internal Database without…


#“WSUS Post-deployment Configuration Failed#Microsoft Windows#Remove Roles and Remove features#The schema version of the database#When prompted with the "Remove Roles and features Wizard"#Windows Internal Database (WID)#Windows Server 2012#Windows Server 2016#Windows Server 2019#Windows Server 2022#Windows Server 2025#WSUS#WSUS Database#WSUS Updates (Windows Server Update Services


Integrating Third-Party Tools into Your CRM System: Best Practices

A modern CRM is rarely a standalone tool — it works best when integrated with your business's key platforms like email services, accounting software, marketing tools, and more. But improper integration can lead to data errors, system lags, and security risks.

Here are the best practices developers should follow when integrating third-party tools into CRM systems:

1. Define Clear Integration Objectives

Identify business goals for each integration (e.g., marketing automation, lead capture, billing sync)

Choose tools that align with your CRM’s data model and workflows

Avoid unnecessary integrations that create maintenance overhead

2. Use APIs Wherever Possible

Rely on RESTful or GraphQL APIs for secure, scalable communication

Avoid direct database-level integrations that break during updates

Choose platforms with well-documented and stable APIs

Custom CRM solutions can be built with flexible API gateways

3. Data Mapping and Standardization

Map data fields between systems to prevent mismatches

Use a unified format for customer records, tags, timestamps, and IDs

Normalize values like currencies, time zones, and languages

Maintain a consistent data schema across all tools
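As a rough illustration of the mapping and normalization points above, here is a minimal Python sketch. The field names in `FIELD_MAP` and the `to_crm_record` helper are hypothetical and not taken from any particular CRM; it assumes the source system sends ISO 8601 timestamps that include a UTC offset.

```python
from datetime import datetime, timezone

# Hypothetical mapping from a third-party tool's field names to CRM field names.
FIELD_MAP = {"e_mail": "email", "fullName": "name", "created": "created_at"}


def to_crm_record(source: dict) -> dict:
    """Rename known fields, drop unknown ones, and normalize values."""
    record = {FIELD_MAP[key]: value for key, value in source.items() if key in FIELD_MAP}
    if "email" in record:
        # One canonical form for emails avoids duplicate customer records.
        record["email"] = record["email"].strip().lower()
    if "created_at" in record:
        # Assumes the timestamp carries an offset; store everything in UTC.
        parsed = datetime.fromisoformat(record["created_at"])
        record["created_at"] = parsed.astimezone(timezone.utc).isoformat()
    return record
```

Keeping the mapping in one table like this makes mismatches easy to review when either system changes its fields.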

4. Authentication and Security

Use OAuth 2.0 or token-based authentication for third-party access

Set role-based permissions for which apps access which CRM modules

Monitor access logs for unauthorized activity

Encrypt data during transfer and storage

5. Error Handling and Logging

Create retry logic for API failures and rate limits

Set up alert systems for integration breakdowns

Maintain detailed logs for debugging sync issues

Keep version control of integration scripts and middleware
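The retry point above could look something like the following Python sketch. `RateLimitError` and `call_with_retry` are hypothetical names for illustration; a real integration would catch whatever exception its HTTP client raises for a 429 response or a dropped connection.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical error a client wrapper raises on an HTTP 429 response."""


def call_with_retry(func, max_attempts=5, base_delay=0.5, sleep=time.sleep):
    """Call func(), retrying transient failures with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except (RateLimitError, ConnectionError):
            if attempt == max_attempts:
                raise  # out of attempts: let the caller log the failure and alert
            # Waits roughly 0.5s, 1s, 2s, ... plus a little random jitter.
            delay = base_delay * 2 ** (attempt - 1) + random.uniform(0, 0.1)
            sleep(delay)
```

The `sleep` parameter is there so tests can pass a stub instead of actually waiting.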

6. Real-Time vs Batch Syncing

Use real-time sync for critical customer events (e.g., purchases, support tickets)

Use batch syncing for bulk data like marketing lists or invoices

Balance sync frequency to optimize server load

Choose integration frequency based on business impact

7. Scalability and Maintenance

Build integrations as microservices or middleware, not monolithic code

Use message queues (like Kafka or RabbitMQ) for heavy data flow

Design integrations that can evolve with CRM upgrades

Partner with CRM developers for long-term integration strategy

CRM integration experts can future-proof your ecosystem

#CRMIntegration#CRMBestPractices#APIIntegration#CustomCRM#TechStack#ThirdPartyTools#CRMDevelopment#DataSync#SecureIntegration#WorkflowAutomation


The Data Migration Odyssey: A Journey Across Platforms

As a database engineer, I thought I'd seen it all—until our company decided to migrate our entire database system to a new platform. What followed was an epic adventure filled with unexpected challenges, learning experiences, and a dash of heroism.

It all started on a typical Monday morning when my boss, the same stern woman with a flair for the dramatic, called me into her office. "Rookie," she began (despite my years of experience, the nickname had stuck), "we're moving to a new database platform. I need you to lead the migration."

I blinked. Migrating a database wasn't just about copying data from one place to another; it was like moving an entire city across the ocean. But I was ready for the challenge.

Phase 1: Planning the Expedition

First, I gathered my team and we started planning. We needed to understand the differences between the old and new systems, identify potential pitfalls, and develop a detailed migration strategy. It was like preparing for an expedition into uncharted territory.

We started by conducting a thorough audit of our existing database. This involved cataloging all tables, relationships, stored procedures, and triggers. We also reviewed performance metrics to identify any existing bottlenecks that could be addressed during the migration.

Phase 2: Mapping the Terrain

Next, we designed the new database schema using Dynobird's online schema builder. This was more than a simple translation; we took the opportunity to optimize our data structures and improve performance. It was like drafting a new map for our city, making sure every street and building was perfectly placed.

For example, our old database had a massive "orders" table that was a frequent source of slow queries. In the new schema, we split this table into more manageable segments, each optimized for specific types of queries.

Phase 3: The Great Migration

With our map in hand, it was time to start the migration. We wrote scripts to transfer data in batches, ensuring that we could monitor progress and handle any issues that arose. This step felt like loading up our ships and setting sail.
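For readers who want a picture of what a batch transfer script can look like, here is a minimal Python sketch using the standard `sqlite3` module. It is not the script we ran: the `orders` columns and the `migrate_in_batches` helper are made up for illustration, and it assumes an integer primary key that starts above zero.

```python
import sqlite3


def migrate_in_batches(src, dst, batch_size=1000):
    """Copy rows from src.orders to dst.orders in primary-key order, one batch per transaction."""
    last_id = 0
    copied = 0
    while True:
        rows = src.execute(
            "SELECT id, customer, total FROM orders WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            return copied
        with dst:  # commits the batch on success, rolls it back on error
            dst.executemany(
                "INSERT INTO orders (id, customer, total) VALUES (?, ?, ?)", rows
            )
        last_id = rows[-1][0]  # resume point if the job is interrupted
        copied += len(rows)
```

Paging by the last seen key instead of an offset keeps every batch query cheap, and the running count gives you something to monitor.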

Of course, no epic journey is without its storms. We encountered data inconsistencies, unexpected compatibility issues, and performance hiccups. One particularly memorable moment was when we discovered a legacy system that had been quietly duplicating records for years. Fixing that felt like battling a sea monster, but we prevailed.

Phase 4: Settling the New Land

Once the data was successfully transferred, we focused on testing. We ran extensive queries, stress tests, and performance benchmarks to ensure everything was running smoothly. This was our version of exploring the new land and making sure it was fit for habitation.

We also trained our users on the new system, helping them adapt to the changes and take full advantage of the new features. Seeing their excitement and relief was like watching settlers build their new homes.

Phase 5: Celebrating the Journey

After weeks of hard work, the migration was complete. The new database was faster, more reliable, and easier to maintain. My boss, who had been closely following our progress, finally cracked a smile. "Excellent job, rookie," she said. "You've done it again."

To celebrate, she took the team out for a well-deserved dinner. As we clinked our glasses, I felt a deep sense of accomplishment. We had navigated a complex migration, overcome countless challenges, and emerged victorious.

Lessons Learned

Looking back, I realized that successful data migration requires careful planning, a deep understanding of both the old and new systems, and a willingness to tackle unexpected challenges head-on. It's a journey that tests your skills and resilience, but the rewards are well worth it.

So, if you ever find yourself leading a database migration, remember: plan meticulously, adapt to the challenges, and trust in your team's expertise. And don't forget to celebrate your successes along the way. You've earned it!


Top 10 Laravel Development Companies in the USA in 2024

Laravel is a widely-used open-source PHP web framework designed for creating web applications using the model-view-controller (MVC) architectural pattern. It offers developers a structured and expressive syntax, as well as a variety of built-in features and tools to enhance the efficiency and enjoyment of the development process.

Key components of Laravel include:

1. Eloquent ORM (Object-Relational Mapping): Laravel simplifies database interactions by enabling developers to work with database records as objects through a powerful ORM.

2. Routing: Laravel provides a straightforward and expressive method for defining application routes, simplifying the handling of incoming HTTP requests.

3. Middleware: This feature allows for the filtering of HTTP requests entering the application, making it useful for tasks like authentication, logging, and CSRF protection.

4. Artisan CLI (Command Line Interface): Laravel comes with Artisan, a robust command-line tool that offers commands for tasks such as database migrations, seeding, and generating boilerplate code.

5. Database Migrations and Seeding: Laravel's migration system enables version control of the database schema and easy sharing of changes across the team. Seeding allows for populating the database with test data.

6. Queue Management: Laravel's queue system permits deferred or background processing of tasks, which can enhance application performance and responsiveness.

7. Task Scheduling: Laravel provides a convenient way to define scheduled tasks within the application.

What are the reasons to opt for Laravel Web Development?

Laravel makes web development easier, developers more productive, and web applications more secure and scalable, making it one of the most important frameworks in web development.

There are multiple compelling reasons to choose Laravel for web development:

1. Clean and Organized Code: Laravel provides a sleek and expressive syntax, making writing and maintaining code simple. Its well-structured architecture follows the MVC pattern, enhancing code readability and maintainability.

2. Extensive Feature Set: Laravel comes with a wide range of built-in features and tools, including authentication, routing, caching, and session management.

3. Rapid Development: With built-in templates, ORM (Object-Relational Mapping), and powerful CLI (Command Line Interface) tools, Laravel empowers developers to build web applications quickly and efficiently.

4. Robust Security Measures: Laravel incorporates various security features such as encryption, CSRF (Cross-Site Request Forgery) protection, authentication, and authorization mechanisms.

5. Thriving Community and Ecosystem: Laravel boasts a large and active community of developers who provide extensive documentation, tutorials, and forums for support.

6. Database Management: Laravel's migration system allows developers to manage database schemas effortlessly, enabling version control and easy sharing of database changes across teams. Seeders facilitate the seeding of databases with test data, streamlining the testing and development process.

7. Comprehensive Testing Support: Laravel offers robust testing support, including integration with PHPUnit for writing unit and feature tests. It ensures that applications are thoroughly tested and reliable, reducing the risk of bugs and issues in production.

8. Scalability and Performance: Laravel supports scaling through queue management, caching mechanisms, and configurable read/write database connections. These features help applications handle increased traffic and scale effectively.

Top 10 Laravel Development Companies in the USA in 2024

The Laravel framework is widely utilised by top Laravel development companies. It stands out among other web application development frameworks due to its advanced features and development tools that expedite web development. Therefore, this article aims to provide a list of the top 10 Laravel Development Companies in 2024, assisting you in selecting a suitable Laravel development company in the USA for your project.

IBR Infotech

IBR Infotech excels in providing high-quality Laravel web development services through its team of skilled Laravel developers. Enhance your online visibility with their committed Laravel development team, which is prepared to turn your ideas into reality accurately and effectively. Count on their top-notch services as they customise solutions to your business requirements. As a well-known Laravel web development company, IBR Infotech provides bespoke Laravel solutions to its worldwide customer base in the United States, United Kingdom, Europe, and Australia, ensuring prompt delivery and competitive pricing.

Additional Information-

GoodFirms : 5.0

Avg. hourly rate: $25 — $49 / hr

No. Employee: 10–49

Founded Year : 2014

Verve Systems

Elevate your enterprise with Verve Systems' Laravel development expertise. They craft scalable, user-centric web applications using the powerful Laravel framework. Their solutions enhance consumer experience through intuitive interfaces and ensure security and performance for your business.

Additional Information-

GoodFirms : 5.0

Avg. hourly rate: $25

No. Employee: 50–249

Founded Year : 2009

KrishaWeb

KrishaWeb is a world-class Laravel development company that offers tailor-made web solutions to its clients. Whether you are stuck on a website concept or want an AI-integrated application or a full-fledged enterprise Laravel application, they can help you.

Additional Information-

GoodFirms : 5.0

Avg. hourly rate: $50 - $99/hr

No. Employee: 50 - 249

Founded Year : 2008

Bacancy

Bacancy is a top-rated Laravel development company in India, the USA, Canada, and Australia. They follow the Agile SDLC methodology to build enterprise-grade solutions using the Laravel framework. They use Ajax-enabled widgets, model-view-controller patterns, and built-in tools to create robust, reliable, and scalable web solutions.

Additional Information-

GoodFirms : 4.8

Avg. hourly rate: $25 - $49/hr

No. Employee: 250 - 999

Founded Year : 2011

Elsner

Elsner Technologies is a Laravel development company that has gained a high level of expertise in Laravel, one of the most popular PHP-based frameworks available in the market today. With the help of their Laravel Web Development services, you can expect both professional and highly imaginative web and mobile applications.

Additional Information-

GoodFirms : 5

Avg. hourly rate: < $25/hr

No. Employee: 250 - 999

Founded Year : 2006

Logicspice

Logicspice stands as an expert and professional Laravel web development service provider, catering to enterprises of diverse scales and industries. It leverages Laravel, an open-source PHP framework renowned for its ability to expedite the creation of secure, scalable, and feature-rich web applications.

Additional Information-

GoodFirms : 5

Avg. hourly rate: < $25/hr

No. Employee: 50 - 249

Founded Year : 2006

Sapphire Software Solutions

Sapphire Software Solutions, a leading Laravel development company in the USA, specialises in customised Laravel development and enterprise solutions. With a reputation for excellence, they deliver top-notch services tailored to meet your unique business needs.

Additional Information-

GoodFirms : 5

Avg. hourly rate: NA

No. Employee: 50 - 249

Founded Year : 2002

iGex Solutions

iGex Solutions offers Laravel development services backed by 14+ years of industry experience. They have 10+ expert Laravel developers and 100+ satisfied clients, and they advertise 100% client satisfaction at an affordable Laravel development cost.

Additional Information-

GoodFirms : 4.7

Avg. hourly rate: < $25/hr

No. Employee: 10 - 49

Founded Year : 2009

Hidden Brains

Hidden Brains is a leading Laravel web development company, building high-performance Laravel applications that take advantage of the framework's features. As a reputed Laravel application development company, they believe your web application should accomplish its goals and stay ahead of the rest.

Additional Information-

GoodFirms : 4.9

Avg. hourly rate: < $25/hr

No. Employee: 250 - 999

Founded Year : 2003

Matellio

Matellio offers a wide range of custom Laravel web development services to meet the unique needs of their global clientele. Their expert Laravel developers have extensive experience creating robust, reliable, and feature-rich applications.

Additional Information-

GoodFirms : 4.8

Avg. hourly rate: $50 - $99/hr

No. Employee: 50 - 249

Founded Year : 2014

What advantages does Laravel offer for your web application development?

Laravel, a popular PHP framework, offers several advantages for web application development:

Elegant Syntax

Modular Packaging

MVC Architecture Support

Database Migration System

Blade Templating Engine

Authentication and Authorization

Artisan Console

Testing Support

Community and Documentation

Conclusion:

I hope you found the information provided in the article to be enlightening and that it offered valuable insights into the top Laravel development companies.

These reputable Laravel development companies have a proven track record of creating customised solutions for various sectors, meeting client requirements with precision.

Over time, these highlighted Laravel developers for hire have completed numerous projects with success and are well-equipped to help advance your business.

Before finalising your choice of a Laravel web development partner, it is essential to request a detailed cost estimate and carefully examine their portfolio of past work.

#Laravel Development Companies#Laravel Development Companies in USA#Laravel Development Company#Laravel Web Development Companies#Laravel Web Development Services


Understanding ER Modeling and Database Design Concepts

In the world of databases, data modeling is a crucial process that helps structure the information stored within a system, ensuring it is organized, accessible, and efficient. Among the various tools and techniques available for data modeling, Entity-Relationship (ER) diagrams and database normalization stand out as essential components. This blog will delve into the concepts of ER modeling and database design, demonstrating how they contribute to creating an efficient schema design.

ER Modeling

What is an Entity-Relationship Diagram?

An Entity-Relationship Diagram, or ERD, is a visual representation of the entities, relationships, and data attributes that make up a database. ERDs are used as a blueprint to design databases, offering a clear understanding of how data is structured and how entities interact with one another.

Key Components of ER Diagrams

Entities: Entities are objects or things in the real world that have a distinct existence within the database. Examples include customers, orders, and products. In ERDs, entities are typically represented as rectangles.

Attributes: Attributes are properties or characteristics of an entity. For instance, a customer entity might have attributes such as CustomerID, Name, and Email. These are usually represented as ovals connected to their respective entities.

Relationships: Relationships depict how entities are related to one another. They are represented by diamond shapes and connected to the entities they associate. Relationships can be one-to-one, one-to-many, or many-to-many.

Cardinality: Cardinality defines the numerical relationship between entities. It indicates how many instances of one entity are associated with instances of another entity. Cardinality is typically expressed as (1:1), (1:N), or (M:N).

Primary Keys: A primary key is an attribute or set of attributes that uniquely identify each instance of an entity. It is crucial for ensuring data integrity and is often underlined in ERDs.

Foreign Keys: Foreign keys are attributes that establish a link between two entities, referencing the primary key of another entity to maintain relationships.
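To make the primary key and foreign key components concrete, here is a small sketch using Python's built-in `sqlite3` module. The `customer` and `customer_order` tables are illustrative examples of a one-to-many relationship, not a prescribed schema. Note that SQLite only enforces foreign keys once the `foreign_keys` pragma is switched on.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite ignores foreign keys unless this is set
conn.executescript("""
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,           -- primary key: identifies each customer
    name        TEXT NOT NULL,
    email       TEXT NOT NULL UNIQUE
);
CREATE TABLE customer_order (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL
                REFERENCES customer(customer_id),  -- foreign key: the 1:N relationship
    placed_on   TEXT NOT NULL
);
""")
conn.execute("INSERT INTO customer VALUES (1, 'Ada', 'ada@example.com')")
conn.execute("INSERT INTO customer_order VALUES (10, 1, '2024-05-01')")


def try_orphan_order():
    """Attempt to insert an order for a customer that does not exist."""
    try:
        conn.execute("INSERT INTO customer_order VALUES (11, 99, '2024-05-02')")
        return True
    except sqlite3.IntegrityError:
        return False  # the foreign key constraint rejected the row
```

Calling `try_orphan_order()` shows the database itself refusing a row that would break the relationship.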

Steps to Create an ER Diagram

Identify the Entities: Start by listing all the entities relevant to the database. Ensure each entity represents a significant object or concept.

Define the Relationships: Determine how these entities are related. Consider the type of relationships and the cardinality involved.

Assign Attributes: For each entity, list the attributes that describe it. Identify which attribute will serve as the primary key.

Draw the ER Diagram: Use graphical symbols to represent entities, attributes, and relationships, ensuring clarity and precision.

Review and Refine: Analyze the ER Diagram for completeness and accuracy. Make necessary adjustments to improve the model.

The Importance of Normalization

Normalization is a process in database design that organizes data to reduce redundancy and improve integrity. It involves dividing large tables into smaller, more manageable ones and defining relationships among them. The primary goal of normalization is to ensure that data dependencies are logical and stored efficiently.

Normal Forms

Normalization progresses through a series of stages, known as normal forms, each addressing specific issues:

First Normal Form (1NF): Ensures that all attributes in a table are atomic, meaning each attribute contains indivisible values. Tables in 1NF do not have repeating groups or arrays.

Second Normal Form (2NF): Achieved when a table is in 1NF, and all non-key attributes are fully functionally dependent on the primary key. This eliminates partial dependencies.

Third Normal Form (3NF): A table is in 3NF if it is in 2NF and every non-key attribute depends directly on the primary key rather than on another non-key attribute, eliminating transitive dependencies.

Boyce-Codd Normal Form (BCNF): A stricter version of 3NF where every determinant is a candidate key, resolving anomalies that 3NF might not address.

Higher Normal Forms: Beyond BCNF, there are Fourth (4NF) and Fifth (5NF) Normal Forms, which address multi-valued dependencies and join dependencies, respectively.
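As a small worked example of removing a transitive dependency (the 3NF step above), consider a flat orders table where `customer_email` depends on `customer_id` rather than on `order_id`. The Python sketch below uses made-up data and a hypothetical `split_out_customers` helper to split it into two relations.

```python
def split_out_customers(flat_rows):
    """Split flat order rows into a customers relation and an orders relation."""
    customers = {}
    orders = []
    for row in flat_rows:
        # customer_email is determined by customer_id, not by order_id,
        # so it moves to its own relation keyed by customer_id.
        customers[row["customer_id"]] = {
            "customer_id": row["customer_id"],
            "customer_email": row["customer_email"],
        }
        orders.append(
            {
                "order_id": row["order_id"],
                "customer_id": row["customer_id"],  # stays behind as a foreign key
                "total": row["total"],
            }
        )
    return list(customers.values()), orders


flat = [
    {"order_id": 1, "customer_id": 7, "customer_email": "ada@example.com", "total": 40},
    {"order_id": 2, "customer_id": 7, "customer_email": "ada@example.com", "total": 15},
    {"order_id": 3, "customer_id": 9, "customer_email": "lin@example.com", "total": 22},
]
customers, orders = split_out_customers(flat)
```

After the split, each email is stored once per customer, so changing it means updating a single row instead of every order.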

Benefits of Normalization

Reduced Data Redundancy: By storing data in separate tables and linking them with relationships, redundancy is minimized, which saves storage and prevents inconsistencies.

Improved Data Integrity: Ensures that data modifications (insertions, deletions, updates) are consistent across the database.

Easier Maintenance: With a well-normalized database, maintenance tasks become more straightforward due to the clear organization and relationships.


ER Modeling and Normalization: A Symbiotic Relationship

While ER modeling focuses on the conceptual design of a database, normalization deals with its logical structure. Together, they form a comprehensive approach to database design by ensuring both clarity and efficiency.

Steps to Integrate ER Modeling and Normalization

Conceptual Design with ERD: Begin with an ERD to map out the entities and their relationships. This provides a high-level view of the database.

Logical Design through Normalization: Use normalization steps to refine the ERD, ensuring that the design is free of redundancy and anomalies.

Physical Design Implementation: Translate the normalized ERD into a physical database schema, considering performance and storage requirements.

Common Challenges and Solutions

Complexity in Large Systems: For extensive databases, ERDs can become complex. Using modular designs and breaking down ERDs into smaller sub-diagrams can help.

Balancing Normalization with Performance: Highly normalized databases can sometimes lead to performance issues due to excessive joins. It's crucial to balance normalization with performance needs, possibly denormalizing parts of the database if necessary.

Maintaining Data Integrity: Ensuring data integrity across relationships can be challenging. Implementing constraints and triggers can help maintain the consistency of data.


Conclusion

Entity-Relationship Diagrams and normalization are foundational concepts in database design. Together, they ensure that databases are both logically structured and efficient, capable of handling data accurately and reliably. By integrating these methodologies, database designers can create robust systems that support complex data requirements and facilitate smooth data operations.

FAQs

What is the purpose of an Entity-Relationship Diagram?

An ER Diagram serves as a blueprint for database design, illustrating entities, relationships, and data attributes to provide a clear structure for the database.

Why is normalization important in database design?

Normalization reduces data redundancy and enhances data integrity by organizing data into related tables, ensuring consistent and efficient data storage.

What is the difference between ER modeling and normalization?

ER modeling focuses on the conceptual design and relationships within a database, while normalization addresses the logical structure to minimize redundancy and dependency issues.

Can normalization impact database performance?

Yes, while normalization improves data integrity, it can sometimes lead to performance issues due to increased joins. Balancing normalization with performance needs is essential.

How do you choose between different normal forms?

The choice depends on the specific needs of the database. Most databases aim for at least 3NF to ensure a balance between complexity and efficiency, with higher normal forms applied as necessary.


#ERModeling#DatabaseDesign#LearnDBMS#EntityRelationship#DataModeling#DBMSBasics#DatabaseConcepts#TechForStudents#InformationTechnology#DataArchitecture#AssignmentHelp#AssignmentOnClick#assignment help#assignment service#aiforstudents#machinelearning#assignmentexperts#assignment#assignmentwriting


The 3 Life-Saving WordPress SEO Plugins You’re Probably Not Using (Yet!)

If you’ve ever tried optimizing a WordPress site for SEO, you already know it’s not as simple as installing a plugin and watching traffic roll in.

With Google constantly changing its algorithms and user behavior evolving faster than ever, your SEO game needs to be sharp—and your tools smarter.

After countless hours of testing and trial-and-error, we finally found 3 powerful plugins that didn’t just tweak our rankings—they saved our WordPress SEO.

Here’s exactly what they are, how they work, and why they’re worth installing today.

1. Rank Math SEO – The All-in-One Powerhouse

We used to swear by Yoast. Then we met Rank Math.

This plugin is a game-changer. It's lighter, faster, and loaded with features that typically require multiple add-ons in other tools.

🔑 Why It’s a Lifesaver:

Advanced Schema Markup: Rank Math lets you add rich snippets in just a few clicks (no coding).

Google Search Console Integration: See your performance data directly in your WordPress dashboard.

Built-in SEO Analyzer: Automatically scans your site for SEO issues and gives actionable tips.

It’s like having an SEO assistant inside your WordPress dashboard—working 24/7.

📌 Pro Tip: Use its content AI (in the pro version) for intelligent, keyword-based suggestions while writing.

2. WP Rocket – Because Site Speed = SEO

Did you know that page speed is a confirmed ranking factor?

Slow-loading pages don’t just hurt your SEO—they kill your user experience. Enter: WP Rocket, the most user-friendly caching plugin out there.

⚡ Why It’s a Lifesaver:

One-click performance optimization: Minifies HTML, CSS, and JavaScript without breaking your site.

Lazy loading: Images load only when needed, improving load times instantly.

Database optimization: Clean up your WordPress clutter with just one click.

No need to mess with complex settings. WP Rocket just works—and your site gets faster overnight.

📌 Pro Tip: Pair it with a CDN like Cloudflare for even better speed and global performance.

3. SEOPress – The Underrated SEO Secret Weapon

If you want clean SEO without the bloat, SEOPress is your hidden gem.

It’s an underrated plugin that’s lightweight, incredibly powerful, and 100% white-labeled—perfect for agencies or businesses who want full control.

🎯 Why It’s a Lifesaver:

Automatic redirects when URLs change (no more 404 headaches)

XML and HTML sitemaps for better crawlability

Social media meta previews so your links look great when shared

And the best part? It’s incredibly affordable—even for premium features.

📌 Pro Tip: Use the broken link checker to fix internal linking issues that silently harm your SEO.

Bonus: Why Just Plugins Aren’t Enough

While these 3 plugins do the heavy lifting, remember: tools don’t replace strategy.

✅ Do keyword research

✅ Focus on high-quality content

✅ Monitor analytics

✅ Build backlinks the right way

Plugins are the engine—but strategy is the fuel.

Final Thoughts: Stack Your SEO Right

These three plugins transformed our WordPress SEO from scattered and slow to streamlined and successful. They're fast, reliable, and (most importantly) backed by strong communities and regular updates.

Whether you’re a beginner blogger or managing SEO for dozens of clients, these plugins will help you work smarter—not harder.

So don’t wait—optimize today, thank us later. 💡


CSC 455: Database Processing for Large-Scale Analytics Assignment 3

Part 1 In this and the next part we will use an extended version of the schema from Assignment 2. You can find it in a file ZooDatabase.sql posted with this assignment on D2L. Once again, it is up to you to write the SQL queries to answer the following questions: List the animals (animal names) and the ID of the zoo keeper assigned to them. Now repeat the previous query and make sure…


🔍 Streamlining JSON Comparison: Must-Know Tips for Dev Teams in 2025

JSON is everywhere, from configs and APIs to logs and databases. But here’s the catch: comparing two JSON files manually? Absolute chaos. 😵‍💫

If you’ve ever diffed a 300-line nested JSON with timestamps and IDs changing on every run… you know the pain.

Let’s fix that. 💡

📈 Why JSON Comparison Matters (More Than You Think)

In today’s world of microservices, real-time APIs, and data-driven apps, JSON is the glue. But with great power comes... yeah, messy diffs.

And no, plain text diff tools don’t cut it anymore. Not when your data is full of:

Auto-generated IDs

Timestamps that change on every request

Configs that vary by environment

We need smart tools — ones that know what actually matters in a diff.
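Here's a rough idea of what "knowing what matters" can mean in code: a tiny Python sketch of a structural diff that skips noisy keys. The `IGNORED_KEYS` set and the `diff` function are hypothetical illustrations, not the API of any particular tool, and lists of different lengths are simply reported as one whole-value change.

```python
IGNORED_KEYS = {"id", "timestamp"}  # hypothetical: keys your team treats as noise
MISSING = "<missing>"  # marker for a key present on only one side


def diff(left, right, path="$"):
    """Return a list of (path, left_value, right_value) for meaningful differences."""
    if isinstance(left, dict) and isinstance(right, dict):
        changes = []
        for key in sorted(set(left) | set(right)):
            if key in IGNORED_KEYS:
                continue  # skip auto-generated IDs, timestamps, etc.
            child = f"{path}.{key}"
            if key not in left:
                changes.append((child, MISSING, right[key]))
            elif key not in right:
                changes.append((child, left[key], MISSING))
            else:
                changes.extend(diff(left[key], right[key], child))
        return changes
    if isinstance(left, list) and isinstance(right, list) and len(left) == len(right):
        changes = []
        for index, (l_item, r_item) in enumerate(zip(left, right)):
            changes.extend(diff(l_item, r_item, f"{path}[{index}]"))
        return changes
    return [] if left == right else [(path, left, right)]
```

Feed it two parsed payloads (for example from `json.loads`) and you get back only the paths that actually changed, which is a lot easier to review than a 300-line text diff.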

🛠️ Pro Strategies to Make JSON Diff Less of a Nightmare

🔌 Plug It Into Your Dev Flow

Integrate JSON diff tools right in your IDE

Add them to CI/CD pipelines to catch issues before deploy

Auto-flag unexpected changes in pull requests

🧑‍💻 Dev Team Playbook

Define what counts as a “real change” (schema vs content vs metadata)

Set merge conflict rules for JSON

Decide when a diff needs a second pair of eyes 👀

✅ QA Power-Up

Validate API responses

Catch config issues in test environments

Compare snapshots across versions/releases

💡 Advanced Tactics for JSON Mastery

Schema-aware diffing: Skip the noise. Focus on structure changes.

Business-aware diffing: Prioritize diffs based on impact (not just "what changed")

History-aware diffing: Track how your data structure evolves over time

⚙️ Real-World Use Cases

Microservices: Keep JSON consistent across services

Databases: Compare stored JSON in relational or NoSQL DBs

API Gateways: Validate contract versions between deployments

🧠 But Wait, There’s More…

🚨 Handle the Edge Cases

Malformed JSON? Good tools won’t choke.

Huge files? Stream them, don’t crash your RAM.

Internationalization? Normalize encodings and skip false alarms.

🔒 Privacy & Compliance

Mask sensitive info during comparison

Log and audit every change (hello, HIPAA/GDPR)

⚡ Performance Tips

Real-time comparison for live data

Batch processing for large jobs

Scale with cloud-native or distributed JSON diff services

🚀 Future-Proof Your Stack

New formats and frameworks? Stay compatible.

Growing data? Choose scalable tools.

New team members? Train them to use smarter JSON diffs from day one.

💬 TL;DR

Modern JSON comparison isn't just about spotting a difference—it’s about understanding it. With the right strategies and tools, you’ll ship faster, debug smarter, and sleep better.

👉 Wanna level up your JSON testing? Keploy helps you test, diff, and validate APIs with real data — no fluff, just solid testing automation.


Web Development in 2025: Why Your Website Can’t Fall Behind

In 2025, your website is not just a web page. It's the initial impression people get when they stumble upon your business or brand.

Be it a small shop, a startup business, or a personal blog—your website is your shop window, elevator pitch, and customer service center rolled into one.

So let's look at what web development actually is, and why you should care.

What Is Web Development?

Web development is how websites are built: not only to look good, but also to function well.

It involves:

Frontend: What the users see — colors, buttons, layouts, and animations.

Backend: What happens behind the scenes — login systems, product pages, databases, etc.

In 2025, your site shouldn't just be cute. It must be:

✅ Fast

✅ Mobile-friendly

✅ Easy to use

✅ Safe and secure

✅ SEO-ready (so people can find you on Google)

✅ Able to scale with your business

Why Mobile-First Comes First

Honest question: are you viewing this on your phone?

Most people are. That's why we build mobile-first, then optimize for tablets and laptops.

Google prioritizes the mobile version of your site.

And more than 70% of all website visitors arrive on phones. If your website isn't optimized for mobile, folks will just leave.

Speed = Better Results

Nobody enjoys a slow website. If your site takes more than 3 seconds to load, people will bounce.

We make your site fast by:

Image compression

Writing tidy, lightweight code

Utilizing clever tech tools

So your site loads fast and keeps your users smiling.

Web Development + SEO = Winning Combo

To rank on Google, your site must be SEO-friendly. That involves:

Tidy and legible HTML code

Mobile-friendly design

Lightning-fast loading speed

Proper internal linking

Schema markup (helps Google understand your content better)

If your site isn’t built for search engines, people might not even find you.

Who Should Care About Web Development?

Everyone. But especially:

Online stores (eCommerce)

Tech startups

Education/EdTech websites

Healthcare and medical sites

Real estate businesses

B2B and industrial companies

Bloggers and content creators

If you’re online, your website needs to perform well.

How DazzleBirds Helps

We're not just web developers—we're your growth partners.

At DazzleBirds, we create websites that:

✅ Are beautiful on every device

✅ Are fast to load

✅ Get better search rankings

✅ Are simple to update and manage

✅ Convert visitors to customers

Getting a new site? Need to update the one you have? We've got you covered.

Final Words

A slow or outdated website can harm your business in 2025. Modern web development is about creating a site that's fast, mobile-friendly, SEO-friendly, and designed to grow your business.

If your website is playing catch-up with the rest of the world, let's catch up.

#WebDevelopment#MobileFirstDesign#ResponsiveWebsites#SmallBusinessTips#DazzleBirds#WebsiteDesign#DigitalMarketing#SEO#ModernWebDesign


WordPress Performance Tuning for High-Traffic Stores in 2025

When your WordPress eCommerce store starts attracting serious traffic, that’s a great sign — but it also brings challenges. A slow or unstable site can kill conversions, frustrate customers, and even hurt your SEO.

The good news? With the right performance tuning, your store can stay fast, reliable, and ready to scale.

In this guide, we’ll share the best WordPress performance tuning strategies for high-traffic stores in 2025. And if you want a pro setup, a WordPress Development Company in Udaipur can optimize your store for speed, security, and growth.

Why WordPress Performance Matters for High-Traffic Stores

✅ Speed improves conversion rates: every extra second in load time can reduce conversions by up to 20%.

✅ Google uses speed as a ranking factor, so faster sites tend to rank higher.

✅ Better UX means lower bounce rates and happier customers.

✅ A fast site handles traffic spikes without crashing.

Top Performance Tuning Tips for High-Traffic WordPress Stores

🚀 1️⃣ Choose High-Performance Hosting

Your hosting plan is the foundation of your site’s speed.

✅ Look for:

Managed WordPress or WooCommerce hosting

SSD storage

Built-in caching

Auto-scaling for traffic spikes

Examples: Kinsta, SiteGround, Hostinger WooCommerce plans.

🚀 2️⃣ Use a Caching Solution

Caching serves static versions of your pages to reduce server load.

✅ Top plugins:

LiteSpeed Cache (best for LiteSpeed servers)

WP Rocket (premium all-in-one solution)

W3 Total Cache

✅ Features to enable:

Page caching

Browser caching

Object caching

GZIP compression

🚀 3️⃣ Optimize Images

Large images slow your site.

✅ Best practices:

Use WebP format where possible

Compress images with Smush or ShortPixel

Enable lazy loading

👉 Tip: Don’t upload 4K images when 1200px will do.

🚀 4️⃣ Minify CSS, JS, and HTML

Reduce file sizes to improve load time.

✅ Tools:

Autoptimize

LiteSpeed Cache (has minify options)

WP Rocket

Minify AND combine files where safe to do so.

🚀 5️⃣ Use a CDN (Content Delivery Network)

A CDN stores copies of your site globally, so users get data from the nearest location.

✅ Recommended CDNs:

Cloudflare (free + paid tiers)

BunnyCDN

StackPath

This improves load time worldwide, especially for international stores.

🚀 6️⃣ Optimize Your Database

Over time, databases collect clutter — slowing queries.

✅ Use:

WP-Optimize

Advanced Database Cleaner

✅ Remove:

Post revisions

Spam comments

Expired transients

🚀 7️⃣ Limit Plugins and Bloat

Every plugin adds code and potential load time.

✅ Do:

Deactivate and delete unused plugins

Choose multi-function plugins (e.g., Rank Math for SEO + schema)

Test site speed after adding new plugins

🚀 8️⃣ Streamline WooCommerce

WooCommerce can get heavy with extensions and add-ons.

✅ Tips:

Disable cart fragments when not needed

Use optimized WooCommerce themes (Astra, Kadence, Storefront)

Simplify checkout flow — fewer fields, faster completion

🚀 9️⃣ Monitor and Test Regularly

Use tools like:

Google PageSpeed Insights

GTmetrix

Pingdom

✅ Test:

Homepage

Shop page

Product pages

Cart + checkout

Set a goal: under 2 seconds load time.

🚀 10️⃣ Scale with Traffic

As traffic grows:

Consider dedicated or cloud hosting (auto-scale during spikes)

Use object caching (e.g., Redis, Memcached)

Optimize server configurations (a job for professionals!)

Pro Tip: Combine Speed + Security

High-traffic sites = bigger target for attackers.

✅ Use:

Wordfence or Sucuri for firewalls + malware scans

SSL everywhere

Daily backups (UpdraftPlus, BlogVault)

Why Work With a WordPress Performance Expert?

Performance tuning isn’t just about installing plugins — it’s about:

Configuring them the right way

Balancing speed, design, and functionality

Avoiding plugin conflicts

Optimizing server-side for scale

A WordPress Development Company in Udaipur can:

Audit your current store

Apply advanced speed and security techniques

Set up monitoring and ongoing performance care

Final Thoughts: Keep Your High-Traffic Store Running Like a Dream

A fast, optimized store means:

✅ Happier shoppers

✅ Higher SEO rankings

✅ More sales and revenue

✅ Less stress during traffic surges


Meet Your New Favorite SQL Copilot — dbForge AI Assistant

Tired of writing SQL from scratch or wasting time optimizing clunky queries?

Now you don’t have to.

The newly developed dbForge AI Assistant, created by Devart, makes even complex SQL coding tasks simple:

Generate, explain, and optimize context-aware queries instantly

Simply attach the required database, and the Assistant will check its metadata. It can then generate SQL queries of any type and complexity that are relevant to your schema.

Convert natural language to SQL

dbForge AI Assistant is useful even without database context. You can ask it to generate any kind of query; just write your request in natural language, and the Assistant will respond immediately.

Troubleshoot errors before they hit production

You can ask the Assistant to analyze and troubleshoot your SQL code. Enter a query; if something is wrong with it, the Assistant will detect the problem and provide analysis results and actionable suggestions.

Get contextual help across dbForge tools, and much more

Enjoy real-time AI chat support, smart coding prompts, and tailored guidance directly within dbForge tools.

Whether you're a developer racing to meet release deadlines, a DBA managing complex environments, a data analyst working without deep SQL knowledge, or a team lead looking to speed up code reviews — dbForge AI Assistant is built to boost your productivity.

Just update to the newest version of your dbForge tool, open the dbForge AI Assistant, and let it do the heavy lifting.

No guesswork. No syntax stress. Just smart SQL, faster.

Get started now with the new, intelligent dbForge AI Assistant.

Not sure yet? Take it for a spin with a free 14-day trial!

More details, screenshots, and tips here.

#New Release#dbForge#Devart#dbForge AI#dbForge AI Assistant#AI Assistant#SQL AI Assistant#SQL AI#AI#AI SQL#MySQL AI#SQL Server AI#AI Coding Assistant#SQL AI Tool#SQL AI Bot#SQL AI Helper


Software Development Process—Definition, Stages, and Methodologies

In the rapidly evolving digital era, software applications are the backbone of business operations, consumer services, and everyday convenience. Behind every high-performing app or platform lies a structured, strategic, and iterative software development process. This process isn't just about writing code—it's about delivering a solution that meets specific goals and user needs.

This blog explores the definition, key stages, and methodologies used in software development, giving you a clear understanding of how digital solutions are brought to life and why choosing the right software development company matters.

What is the software development process?

The software development process is a series of structured steps followed to design, develop, test, and deploy software applications. It encompasses everything from initial idea brainstorming to final deployment and post-launch maintenance.

It ensures that the software meets user requirements, stays within budget, and is delivered on time while maintaining high quality and performance standards.

Key Stages in the Software Development Process

While models may vary based on methodology, the core stages remain consistent:

1. Requirement Analysis

At this stage, the development team gathers and documents all requirements from stakeholders. It involves understanding:

Business goals

User needs

Functional and non-functional requirements

Technical specifications

Tools such as interviews, surveys, and use-case diagrams help in gathering detailed insights.

2. Planning

Planning is crucial for risk mitigation, cost estimation, and setting timelines. It involves:

Project scope definition

Resource allocation

Scheduling deliverables

Risk analysis

A solid plan keeps the team aligned and ensures smooth execution.

3. System Design

Based on requirements and planning, system architects create a blueprint. This includes:

UI/UX design

Database schema

System architecture

APIs and third-party integrations

The design must balance aesthetics, performance, and functionality.

4. Development (Coding)

Now comes the actual building. Developers write the code using chosen technologies and frameworks. This stage may involve:

Front-end and back-end development

API creation

Integration with databases and other systems

Version control tools like Git ensure collaborative and efficient coding.

5. Testing

Testing helps ensure the software works as intended and performs well under various scenarios. Types of testing include:

Unit Testing

Integration Testing

System Testing

User Acceptance Testing (UAT)

QA teams identify and document bugs for developers to fix before release.

6. Deployment

Once tested, the software is deployed to a live environment. This may include:

Production server setup

Launch strategy

Initial user onboarding

Deployment tools like Docker or Jenkins automate parts of this stage to ensure smooth releases.

7. Maintenance & Support

After release, developers provide regular updates and bug fixes. This stage includes:

Performance monitoring

Addressing security vulnerabilities

Feature upgrades

Ongoing maintenance is essential for long-term user satisfaction.

Popular Software Development Methodologies

The approach you choose significantly impacts how flexible, fast, or structured your development process will be. Here are the leading methodologies used by modern software development companies:

🔹 Waterfall Model

A linear, sequential approach where each phase must be completed before the next begins. Best for:

Projects with clear, fixed requirements

Government or enterprise applications

Pros:

Easy to manage and document

Straightforward for small projects

Cons:

Not flexible for changes

Late testing could delay bug detection

🔹 Agile Methodology

Agile breaks the project into smaller iterations, or sprints, typically 2–4 weeks long. Features are developed incrementally, allowing for flexibility and client feedback.

Pros:

High adaptability to change

Faster delivery of features

Continuous feedback

Cons:

Requires high team collaboration

Difficult to predict final cost and timeline

🔹 Scrum Framework

A subset of Agile, Scrum includes roles like Scrum Master and Product Owner. Work is done in sprint cycles with daily stand-up meetings.

Best For:

Complex, evolving projects

Cross-functional teams

🔹 DevOps

Combines development and operations to automate and integrate the software delivery process. It emphasizes:

Continuous integration

Continuous delivery (CI/CD)

Infrastructure as code

Pros:

Faster time-to-market

Reduced deployment failures

🔹 Lean Development

Lean focuses on minimizing waste while maximizing productivity. Ideal for startups or teams on a tight budget.

Principles include:

Empowering the team

Delivering as fast as possible

Building integrity in

Why Partnering with a Professional Software Development Company Matters

No matter how refined your idea is, turning it into a working software product requires deep expertise. A reliable software development company can guide you through every stage with:

Technical expertise: They offer full-stack developers, UI/UX designers, and QA professionals.

Industry knowledge: They understand market trends and can tailor solutions accordingly.

Agility and flexibility: They adapt to changes and deliver incremental value quickly.

Post-deployment support: From performance monitoring to feature updates, support never ends.

Partnering with professionals ensures your software is scalable, secure, and built to last.

Conclusion: Build Smarter with a Strategic Software Development Process

The software development process is a strategic blend of analysis, planning, designing, coding, testing, and deployment. Choosing the right development methodology—and more importantly, the right partner—can make the difference between success and failure.

Whether you're developing a mobile app, enterprise software, or SaaS product, working with a reputed software development company will ensure your vision is executed flawlessly and efficiently.

📞 Ready to build your next software product? Connect with an expert software development company today and turn your idea into an innovation-driven reality!


How a Web Development Company in UAE Can Boost Your Online Presence

In the age of the internet, all businesses need an established presence online. For businesses that run their operations within a technologically advanced region such as the UAE, a decent website is not only an advantage but also a must. A professional web development company in UAE can offer the resources, skills, and plan you require to remain competitive within a saturated industry.

Your site is your online store. It's where prospects gain their initial impression, engage with your brand, and make choices. That's why operating with experts such as TechAdisa can really maximize your exposure, authority, and business success online.

Developing a Mobile-First Experience

Smartphone use in the UAE is among the highest in the world. Most internet users in the region browse, shop, and engage with brands directly on their phones. If your site isn't mobile-optimized, you're losing traffic and credibility.

TechAdisa creates responsive sites that adapt automatically to whatever screen size your visitors are using. Whether on phone, tablet, or desktop, they'll have a quick, slick, and visually cohesive experience. That makes it easier to use, drives bounce rates down, and keeps users interacting longer.

Being mobile-first also enhances your Google search ranking, as mobile optimization is now an important SEO consideration.

Enhancing Your Search Engine Visibility

Even the most beautifully designed website won’t help if no one can find it. That’s why SEO is essential to building an effective online presence. Professional web development services in Dubai, like those offered by TechAdisa, ensure your website is search engine–friendly from the ground up.

From quick load times and organized URLs to image compression and schema markup, TechAdisa builds with SEO in mind. As a result, your site is more likely to appear on Google's first page and reach the right target market without relying solely on paid advertising.

Building Your Brand's Credibility

Your site is often the first impression prospective customers get. A poorly designed or inefficient site can ruin your brand's image in a split second. Customers treat the quality of your site as an indicator of the quality of your services or products.

TechAdisa is dedicated to clean, minimalist designs that reflect your brand identity. Each component, from typography and imagery to calls-to-action, is created to build trust and confidence. In a city such as Dubai, where looks and performance go hand in hand, your site needs the look and feel of professionalism and quality.

That's why e-commerce website development in Dubai is more than just an online store—it's an extension of your brand.

Supporting a Multilingual Audience

The UAE is a cosmopolitan center where people from all over the world live and work. This makes localization an integral component of any successful website. Providing both English and Arabic versions of your website enhances your reach and accessibility.

TechAdisa develops multilingual, culturally localized websites with right-to-left language support, localized navigation, and region-specific design considerations. This doesn't just improve communication; it creates a feeling of familiarity and trust among different customer segments.

Investing in localization enables businesses to reach more people and close more leads, all from a single robust platform.

Enhancing Speed, Security, and Scalability

Speed and security aren't luxuries anymore; they're requirements. Even a one-second delay in loading time can significantly impact user satisfaction. Likewise, any security issue can do serious damage to your brand and customer confidence.

TechAdisa makes sure your site performs fast, remains safe, and scales with your business. Performance optimization, SSL encryption, encrypted databases, and automated updates are included as standard features. And their development process is scalable too, meaning you won't outgrow your site as your business grows.

That's just one of the many reasons why they're among the best web development companies in Dubai for providing both quality and long-term value.

Merging New Digital Features

A modern website is not just pages and text. It's a digital platform that might feature e-commerce, booking functionality, CRM integration, live chat, and performance analytics. TechAdisa excels at creating robust, versatile websites that accommodate your entire business system.

Need to sell online? TechAdisa can create a fully featured e-commerce site with inventory management, product filters, payment gateways, and customer accounts.

Want to drive leads? Integration with marketing software and email platforms means that your site not only brings in visitors, but it converts them into buyers.

Dedicated Support and Instant Insights

Having a site online is only the start. To keep it at the top of its game, you need constant updates, performance tracking, and technical support.

TechAdisa offers regular maintenance and support services so your site remains up-to-date, secure, and free of bugs. They also provide live analytics so you know what's performing and what's not. From bounce rates to click-throughs, every detail is trackable—and actionable.

It's easier to react to user behavior with this proactive strategy, execute successful campaigns, and adjust your approach in real time.

Keeping It Cost-Effective

Web development doesn’t have to break the bank. Many businesses hesitate to invest in a quality website due to cost concerns, but in reality, a well-built website saves money over time through higher conversion rates and lower marketing costs.

With TechAdisa's affordable web development services in UAE, you receive enterprise-class functionality and support without the exorbitant price. They have flexible packages to suit various business sizes and objectives—so you pay only for what you use.

This brings professional web development within reach for startups, small businesses, and emerging brands.

Conclusion

In a competitive online environment such as the UAE, your website is more than a virtual brochure; it's a business asset. When executed well, it can draw new customers, establish trust, promote your brand, and grow revenue.

A business such as TechAdisa brings the experience and vision necessary to design high-performing websites tailored to the local audience. From mobile-first development and search engine optimization to e-commerce integration and multilingual functionality, TechAdisa provides solutions that are intelligent, scalable, and secure.

If you're ready to build your digital footprint and develop your business online, starting with the right web development company is the way to go. With TechAdisa on your team, that step leads to results.


5 Practical AI Agents That Deliver Enterprise Value

The AI landscape is buzzing, and while Generative AI models like GPT have captured headlines with their ability to create text and images, a new and arguably more transformative wave is gathering momentum: AI Agents. These aren't just sophisticated chatbots; they are autonomous entities designed to understand complex goals, plan multi-step actions, interact with various tools and systems, and execute tasks to achieve those goals with minimal human oversight.

AI Agents are moving beyond theoretical concepts to deliver tangible enterprise value across diverse industries. They represent a significant leap in automation, moving from simply generating information to actively pursuing and accomplishing real-world objectives. Here are 5 practical types of AI Agents that are already making a difference or are poised to in 2025 and beyond:

1. Autonomous Customer Service & Support Agents

Beyond the Basic Chatbot: While traditional chatbots follow predefined scripts to answer FAQs, Agentic AI takes customer service to an entirely new level.

How they work: Given a customer's query, these agents can autonomously diagnose the problem, access customer databases (CRM, order history), consult extensive knowledge bases, initiate refunds, reschedule appointments, troubleshoot technical issues by interacting with IT systems, and even proactively escalate to a human agent with a comprehensive summary if the issue is too complex.

Enterprise Value: Dramatically reduces the workload on human support teams, significantly improves first-contact resolution rates, provides 24/7 support for complex inquiries, and ultimately enhances customer satisfaction through faster, more accurate service.

2. Automated Software Development & Testing Agents

The Future of Engineering Workflows: Imagine an AI that can not only write code but also comprehend requirements, rigorously test its own creations, and even debug and refactor.

How they work: Given a high-level feature request ("add a new user login flow with multi-factor authentication"), the agent breaks it down into granular sub-tasks (design database schema, write front-end code, implement authentication logic, write unit tests, integrate with existing APIs). It then leverages code interpreters, interacts with version control systems (e.g., Git), and testing frameworks to iteratively build, test, and refine the code until the feature is complete and verified.

Enterprise Value: Accelerates development cycles by automating repetitive coding tasks, reduces bugs through proactive testing, and frees up human developers for higher-level architectural design, creative problem-solving, and complex integrations.

3. Intelligent Financial Trading & Risk Management Agents

Real-time Precision in Volatile Markets: In the fast-paced and high-stakes world of finance, AI agents can act with unprecedented speed, precision, and analytical depth.

How they work: These agents continuously monitor real-time market data, analyze news feeds for sentiment (e.g., identifying early signs of market shifts from global events), detect complex patterns indicative of fraud or anomalies in transactions, and execute trades based on sophisticated algorithms and pre-defined risk parameters. They can dynamically adjust strategies based on market shifts or regulatory changes, integrating with trading platforms and compliance systems.

Enterprise Value: Optimizes trading strategies for maximum returns, significantly enhances fraud detection capabilities, identifies emerging market risks faster than human analysts, and provides a continuous monitoring layer that ensures compliance and protects assets.

4. Dynamic Supply Chain Optimization & Resilience Agents

Navigating Global Complexity with Autonomy: Modern global supply chains are incredibly complex and vulnerable to unforeseen disruptions. AI agents offer a powerful proactive solution.

How they work: An agent continuously monitors global events (e.g., weather patterns, geopolitical tensions, port congestion, supplier issues), analyzes real-time inventory levels and demand forecasts, and dynamically re-routes shipments, identifies and qualifies alternative suppliers, or adjusts production schedules in real-time to mitigate disruptions. They integrate seamlessly with ERP systems, logistics platforms, and external data feeds.

Enterprise Value: Builds unparalleled supply chain resilience, drastically reduces operational costs due to delays and inefficiencies, minimizes stockouts and overstock, and ensures continuous availability of goods even in turbulent environments.

5. Personalized Marketing & Sales Agents

Hyper-Targeted Engagement at Scale: Moving beyond automated emails to truly intelligent, adaptive customer interaction is crucial for modern sales and marketing.

How they work: These agents research potential leads by crawling public data, analyze customer behavior across multiple channels (website interactions, social media engagement, past purchases), generate highly personalized outreach messages (emails, ad copy, chatbot interactions) using integrated generative AI models, manage entire campaign execution, track real-time engagement, and even schedule follow-up actions like booking a demo or sending a tailored proposal. They integrate with CRM and marketing automation platforms.

Enterprise Value: Dramatically improves lead conversion rates, fosters deeper customer engagement through hyper-personalization, optimizes marketing spend by targeting the most promising segments, and frees up sales teams to focus on high-value, complex relationship-building.

The Agentic Future is Here

The transition from AI that simply "generates" information to AI that "acts" autonomously marks a profound shift in enterprise automation and intelligence. While careful consideration for aspects like trust, governance, and reliable integration is essential, these practical examples demonstrate that AI Agents are no longer just a theoretical concept. They are powerful tools ready to deliver tangible business value, automate complex workflows, and redefine efficiency across every facet of the modern enterprise. Embracing Agentic AI is key to unlocking the next level of business transformation and competitive advantage.


Flex Home Nulled Script 2.54.1

Unlock the Power of Real Estate Listings with Flex Home Nulled Script Are you searching for a dynamic, feature-rich platform to launch your real estate website without the burden of costly licenses? Flex Home Nulled Script is the perfect solution, offering you a professional-grade property listing system absolutely free. This Laravel-based script provides everything you need to build, manage, and scale a multilingual, user-friendly real estate portal—without breaking the bank. What is Flex Home Nulled Script? Flex Home Nulled Script is a premium real estate management platform built with Laravel and designed to empower developers and entrepreneurs. With its intuitive interface, advanced filtering system, and seamless multi-language support, this nulled version offers all the functionalities of the original script—minus the expensive price tag. Whether you're a freelancer, developer, or small agency, this script provides you with an edge in a competitive digital market. Technical Specifications Framework: Laravel 8+ Database: MySQL Language Support: Multilingual (RTL compatible) Admin Panel: Fully responsive dashboard SEO Optimization: Integrated meta tools and schema markup Security: CSRF Protection, input validation, and secure authentication Key Features and Benefits Multilingual & RTL Ready: Perfect for reaching a global audience, including Arabic and Hebrew speakers. Advanced Property Search: Filter by city, status, price, property type, and more. Agent Dashboard: Give agents the tools they need to list, edit, and manage properties seamlessly. Monetization Tools: Charge for premium listings and featured properties with built-in monetization options. Custom Fields: Add new property attributes without touching the code. Mobile Responsive: Optimized for mobile, tablet, and desktop users for maximum reach. How Flex Home Script Can Be Used This script is ideal for: Independent Realtors looking to showcase their listings with a professional website. 
Agencies aiming to create a multi-agent, scalable real estate portal. Entrepreneurs who want to launch real estate marketplaces in emerging markets without heavy investment. Developers building custom property management platforms for clients. Installation Guide Setting up Flex Home Nulled Script is simple and developer-friendly: Upload the script to your web hosting environment. Create a MySQL database and configure your .env file with the required credentials. Run the installation wizard or artisan commands to finalize the setup. Access your admin panel and start adding listings and categories. No technical expertise? Don’t worry. The admin dashboard is intuitive enough for beginners to handle efficiently. Frequently Asked Questions (FAQs) Is the Flex Home Nulled Script safe to use? Yes, this nulled version has been thoroughly scanned and tested for safety. However, it's always recommended to use it on a secured server and keep regular backups. Can I use this script for a multilingual real estate site? Absolutely! Flex Home Nulled Script supports multiple languages and even RTL layouts, making it perfect for global audiences. Do I need to know coding to use this? No coding skills are required for basic setup and property listing. Developers, however, can easily customize the codebase to fit any advanced requirement. Can I monetize my real estate website with this script? Yes, you can monetize via featured listings, ad placements, and premium packages directly within the admin panel. Why Choose Flex Home Nulled Script? With an extensive feature set, intuitive interface, and support for modern web standards, Flex Home Nulled Script is your gateway to launching a successful real estate platform. Best of all? You can download it for free right from our website—no subscriptions, no hidden fees. Pair it with tools like WP-Optimize Premium nulled for unbeatable performance, and you'll have a winning combination for your online real estate venture.

Final Thoughts

Whether you're just starting or looking to expand your real estate offerings, Flex Home delivers powerful capabilities, flexibility, and zero licensing costs. Take the first step in transforming your real estate vision into reality: get your free download today and build with confidence.


PHP with MySQL: Best Practices for Database Integration

PHP and MySQL have long formed the backbone of dynamic web development. Even with modern frameworks and languages emerging, this combination remains widely used for building secure, scalable, and performance-driven websites and web applications. As of 2025, PHP with MySQL continues to power millions of websites globally, making it essential for developers and businesses to follow best practices to ensure optimized performance and security.

This article explores best practices for integrating PHP with MySQL and explains how working with expert PHP development companies in the USA can help elevate your web projects to the next level.

Understanding PHP and MySQL Integration

PHP is a server-side scripting language used to develop dynamic content and web applications, while MySQL is an open-source relational database management system that stores and manages data efficiently. Together, they allow developers to create interactive web applications that handle tasks like user authentication, data storage, and content management.

The seamless integration of PHP with MySQL enables developers to write scripts that query, retrieve, insert, and update data. However, without proper practices in place, this integration can become vulnerable to performance issues and security threats.

1. Use Modern Extensions for Database Connections

One of the foundational best practices when working with PHP and MySQL is using a modern database extension. The original mysql_* functions were deprecated in PHP 5.5 and removed in PHP 7.0, so current code should use MySQLi or PDO, both of which support prepared statements, better error handling, and more secure connections.

Modern tools provide better performance, are easier to maintain, and allow for compatibility with evolving PHP standards.
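
As a rough illustration, a connection through PDO might look like the sketch below. The connect() helper and the DSN values are assumptions made for this example, and an in-memory SQLite DSN stands in for MySQL only so the snippet runs without a database server.

```php
<?php
// Hypothetical helper (not from any library): opens a PDO connection
// with defaults that surface errors instead of hiding them.
function connect(string $dsn, ?string $user = null, ?string $password = null): PDO
{
    return new PDO($dsn, $user, $password, [
        PDO::ATTR_ERRMODE            => PDO::ERRMODE_EXCEPTION, // throw PDOException on failure
        PDO::ATTR_DEFAULT_FETCH_MODE => PDO::FETCH_ASSOC,       // rows as associative arrays
    ]);
}

// For MySQL the DSN would look like this (placeholder values):
//   mysql:host=127.0.0.1;dbname=app;charset=utf8mb4
// SQLite in memory is used here only so the sketch is self-contained.
$pdo = connect('sqlite::memory:');

// sqlite_version() is SQLite-specific; on MySQL you would query VERSION().
$version = $pdo->query('SELECT sqlite_version()')->fetchColumn();
echo "Connected, engine version {$version}\n";
```

Centralizing the options in one helper means every part of the application gets the same error mode and fetch mode.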

2. Prevent SQL Injection Through Prepared Statements

Security should always be a top priority when integrating PHP with MySQL. SQL injection remains one of the most common vulnerabilities. To combat this, developers must use prepared statements, which ensure that user input is not interpreted as SQL commands.

This approach significantly reduces the risk of malicious input compromising your database. Implementing this best practice creates a more secure environment and protects sensitive user data.
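
A minimal sketch of a prepared statement with PDO follows. The users table and the sample addresses are made up for the example, and SQLite in memory is assumed in place of MySQL so the code runs standalone.

```php
<?php
$pdo = new PDO('sqlite::memory:'); // stand-in for a MySQL DSN
$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$pdo->exec('CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)');
$pdo->exec("INSERT INTO users (email) VALUES ('alice@example.com'), ('bob@example.com')");

// Hostile input that would match every row if it were concatenated
// directly into the SQL string.
$input = "nobody@example.com' OR '1'='1";

// The placeholder keeps the value separate from the SQL text, so it is
// compared as a plain string and never parsed as SQL.
$stmt = $pdo->prepare('SELECT id, email FROM users WHERE email = :email');
$stmt->execute([':email' => $input]);
$rows = $stmt->fetchAll(PDO::FETCH_ASSOC);

// No stored email equals the hostile string, so nothing is returned.
echo count($rows), " row(s) matched\n";
```

The same pattern applies to INSERT, UPDATE, and DELETE statements: the SQL text stays constant and only the bound values change.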

3. Validate and Sanitize User Inputs

Beyond protecting your database from injection attacks, all user inputs should be validated and sanitized. Validation ensures the data meets expected formats, while sanitization cleans the data to prevent malicious content.

This practice not only improves security but also enhances the accuracy of the stored data, reducing errors and improving the overall reliability of your application.
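
One way to sketch this with PHP's built-in filter functions is shown below. The validateSignup() function, its field names, and the age limits are illustrative assumptions, not part of any framework.

```php
<?php
// Hypothetical validator for a sign-up form; field names and limits
// are assumptions for this example.
function validateSignup(array $input): array
{
    $errors = [];

    // Validation: does the value match the expected format?
    $email = filter_var(trim($input['email'] ?? ''), FILTER_VALIDATE_EMAIL);
    if ($email === false) {
        $errors['email'] = 'Enter a valid email address.';
    }

    $age = filter_var($input['age'] ?? null, FILTER_VALIDATE_INT, [
        'options' => ['min_range' => 13, 'max_range' => 120],
    ]);
    if ($age === false) {
        $errors['age'] = 'Age must be a whole number between 13 and 120.';
    }

    // Sanitization for HTML output: escape markup characters so the
    // value is displayed as text rather than interpreted as HTML.
    $displayName = htmlspecialchars(trim($input['name'] ?? ''), ENT_QUOTES, 'UTF-8');

    return ['errors' => $errors, 'email' => $email, 'age' => $age, 'display_name' => $displayName];
}

$result = validateSignup(['email' => ' a@example.com ', 'age' => '30', 'name' => '<b>Al</b>']);
echo empty($result['errors']) ? "Input accepted\n" : "Input rejected\n";
```

Validation and prepared statements complement each other: the first rejects malformed data, the second keeps whatever is accepted from being run as SQL.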

4. Design a Thoughtful Database Schema

A well-structured database is critical for long-term scalability and maintainability. When designing your MySQL database, consider the relationships between tables, the types of data being stored, and how frequently data is accessed or updated.

Use appropriate data types, define primary and foreign keys clearly, and ensure normalization where necessary to reduce data redundancy. A good schema minimizes complexity and boosts performance.
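
The sketch below shows primary and foreign keys in a small two-table schema. The customers and orders tables are invented for the example, and the DDL is written for in-memory SQLite so it runs as-is; a MySQL schema would typically use AUTO_INCREMENT, DATETIME, and the InnoDB engine instead.

```php
<?php
$pdo = new PDO('sqlite::memory:'); // stand-in for a MySQL DSN
$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$pdo->exec('PRAGMA foreign_keys = ON'); // SQLite-only switch; not needed on MySQL/InnoDB

// Each customer is stored once; orders refer to it by key instead of
// repeating the customer's details (normalization).
$pdo->exec('CREATE TABLE customers (
    id    INTEGER PRIMARY KEY,
    email VARCHAR(255) NOT NULL UNIQUE
)');
$pdo->exec('CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL,
    total_cents INTEGER NOT NULL,   -- money as integer cents avoids float rounding
    created_at  TEXT NOT NULL,
    FOREIGN KEY (customer_id) REFERENCES customers (id)
)');

$pdo->exec("INSERT INTO customers (id, email) VALUES (1, 'alice@example.com')");
$pdo->exec("INSERT INTO orders (customer_id, total_cents, created_at)
            VALUES (1, 1999, '2025-01-15 10:00:00')");

// An order that points at a missing customer is rejected by the foreign key.
try {
    $pdo->exec("INSERT INTO orders (customer_id, total_cents, created_at)
                VALUES (42, 500, '2025-01-15 10:05:00')");
    $rejected = false;
} catch (PDOException $e) {
    $rejected = true;
}
echo $rejected ? "Orphan order rejected\n" : "Orphan order accepted\n";
```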

5. Optimize Queries for Speed and Efficiency

As your application grows, the volume of data can quickly increase. Optimizing SQL queries is essential for maintaining performance. Poorly written queries can lead to slow loading times and unnecessary server load.

Developers should avoid requesting more data than necessary and ensure queries are specific and well-indexed. Indexing key columns, especially those involved in searches or joins, helps the database retrieve data more quickly.
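
A small sketch of these ideas follows, assuming a made-up orders table and an index named idx_orders_customer. It runs on in-memory SQLite, where the plan is inspected with EXPLAIN QUERY PLAN; on MySQL the equivalent check is EXPLAIN.

```php
<?php
$pdo = new PDO('sqlite::memory:'); // stand-in for a MySQL DSN
$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$pdo->exec('CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL,
    total_cents INTEGER NOT NULL,
    notes TEXT
)');
// Index the column used in the WHERE clause.
$pdo->exec('CREATE INDEX idx_orders_customer ON orders (customer_id)');

$insert = $pdo->prepare('INSERT INTO orders (customer_id, total_cents, notes) VALUES (?, ?, ?)');
foreach ([[1, 1999, 'gift wrap'], [2, 500, null], [1, 750, null]] as $row) {
    $insert->execute($row);
}

// Name only the columns that are needed (no SELECT *), filter on the
// indexed column, and cap the result size.
$stmt = $pdo->prepare(
    'SELECT id, total_cents FROM orders WHERE customer_id = :customer_id ORDER BY id LIMIT 50'
);
$stmt->execute([':customer_id' => 1]);
$orders = $stmt->fetchAll(PDO::FETCH_ASSOC);

// Ask the database how it intends to run the query.
$plan = $pdo->query('EXPLAIN QUERY PLAN SELECT id, total_cents FROM orders WHERE customer_id = 1')
            ->fetchAll(PDO::FETCH_ASSOC);
foreach ($plan as $step) {
    echo $step['detail'], "\n";
}
```

Checking the plan before and after adding an index is a quick way to confirm the index is actually being used.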

6. Handle Errors Gracefully

Handling database errors in a user-friendly and secure way is important. Error messages should never reveal database structures or sensitive server information to end-users. Instead, errors should be logged internally, and users should receive generic messages that don’t compromise security.

Implementing error handling protocols ensures smoother user experiences and provides developers with insights to debug issues effectively without exposing vulnerabilities.
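
A minimal sketch of this pattern: the details go to the server log and the user sees only a generic message. The findUserEmail() wrapper and the message text are assumptions for the example, and the query fails on purpose because the table does not exist.

```php
<?php
// Hypothetical wrapper: logs database failures internally and returns
// a generic message that reveals nothing about the schema.
function findUserEmail(PDO $pdo, int $id): array
{
    try {
        $stmt = $pdo->prepare('SELECT email FROM users WHERE id = :id');
        $stmt->execute([':id' => $id]);
        return ['ok' => true, 'email' => $stmt->fetchColumn()];
    } catch (PDOException $e) {
        // Full details stay on the server for debugging.
        error_log('DB error in findUserEmail: ' . $e->getMessage());
        return ['ok' => false, 'message' => 'Something went wrong. Please try again later.'];
    }
}

$pdo = new PDO('sqlite::memory:'); // stand-in for a MySQL DSN
$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);

// The users table was never created, so the query fails and the
// caller receives only the generic message.
$result = findUserEmail($pdo, 1);
echo $result['ok'] ? $result['email'] : $result['message'], "\n";
```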

7. Implement Transactions for Multi-Step Processes

When your application needs to execute multiple related database operations, using transactions ensures that all steps complete successfully or none are applied. This is particularly important for tasks like order processing or financial transfers where data integrity is essential.

Transactions help maintain consistency in your database and protect against incomplete data operations due to system crashes or unexpected failures.
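
The all-or-nothing behaviour can be sketched with PDO's transaction methods. The accounts table and the transfer() function are invented for this example, and in-memory SQLite is assumed in place of MySQL.

```php
<?php
// Hypothetical transfer between two accounts: both updates are
// committed together, or neither is applied.
function transfer(PDO $pdo, int $fromId, int $toId, int $cents): bool
{
    $pdo->beginTransaction();
    try {
        $debit = $pdo->prepare(
            'UPDATE accounts SET balance_cents = balance_cents - ? WHERE id = ? AND balance_cents >= ?'
        );
        $debit->execute([$cents, $fromId, $cents]);
        if ($debit->rowCount() !== 1) {
            throw new RuntimeException('Insufficient funds or unknown source account');
        }

        $credit = $pdo->prepare('UPDATE accounts SET balance_cents = balance_cents + ? WHERE id = ?');
        $credit->execute([$cents, $toId]);
        if ($credit->rowCount() !== 1) {
            throw new RuntimeException('Unknown destination account');
        }

        $pdo->commit();
        return true;
    } catch (Throwable $e) {
        $pdo->rollBack(); // undo the debit if anything after it failed
        return false;
    }
}

$pdo = new PDO('sqlite::memory:'); // stand-in for a MySQL DSN
$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$pdo->exec('CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance_cents INTEGER NOT NULL)');
$pdo->exec('INSERT INTO accounts (id, balance_cents) VALUES (1, 1000), (2, 0)');

$first  = transfer($pdo, 1, 2, 300);  // succeeds
$second = transfer($pdo, 1, 99, 100); // destination missing: debit is rolled back
```

On MySQL this relies on a transactional storage engine such as InnoDB.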

8. Secure Your Database Credentials

Sensitive information such as database usernames and passwords should never be exposed within the application’s core files. Use environment variables or external configuration files stored securely outside the public directory.

This keeps credentials safe from attackers and reduces the risk of accidental leaks through version control or server misconfigurations.
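
As a sketch, connection settings can be read from environment variables at startup. The dbConfigFromEnv() helper and the variable names DB_HOST, DB_NAME, DB_USER, and DB_PASSWORD are assumptions for this example; the putenv() calls exist only to make the demo runnable.

```php
<?php
// Hypothetical helper: builds connection settings from environment
// variables and fails early if any are missing.
function dbConfigFromEnv(): array
{
    $values = [];
    foreach (['DB_HOST', 'DB_NAME', 'DB_USER', 'DB_PASSWORD'] as $key) {
        $value = getenv($key);
        if ($value === false || $value === '') {
            throw new RuntimeException("Missing environment variable: {$key}");
        }
        $values[$key] = $value;
    }

    return [
        'dsn'      => sprintf('mysql:host=%s;dbname=%s;charset=utf8mb4', $values['DB_HOST'], $values['DB_NAME']),
        'user'     => $values['DB_USER'],
        'password' => $values['DB_PASSWORD'],
    ];
}

// Demo values set in-process; in a real deployment the web server,
// container, or process manager would provide them.
putenv('DB_HOST=127.0.0.1');
putenv('DB_NAME=app');
putenv('DB_USER=app_user');
putenv('DB_PASSWORD=example-only');

$config = dbConfigFromEnv();
echo $config['dsn'], "\n";
```

The resulting values can be passed straight to the PDO constructor, and nothing secret needs to be committed to version control.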

9. Backup and Monitor Your Database

No matter how robust your integration is, regular backups are critical. A backup strategy ensures you can recover quickly in the event of data loss, corruption, or server failure. Automate backups and store them securely, ideally in multiple locations.

Monitoring tools can also help track database performance, detect anomalies, and alert administrators about unusual activity or degradation in performance.
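
One possible way to automate a backup is to have a scheduled PHP script assemble a mysqldump command. The sketch below only builds the command string; the buildBackupCommand() helper, the paths, and the file-naming scheme are assumptions for the example. Credentials are read by mysqldump from an options file rather than passed on the command line.

```php
<?php
// Hypothetical helper: builds a mysqldump command line for a scheduled
// backup. It does not run the command.
function buildBackupCommand(
    string $defaultsFile,
    string $database,
    string $backupDir,
    DateTimeImmutable $now
): string {
    // Timestamped file name so earlier backups are not overwritten.
    $target = sprintf('%s/%s-%s.sql', rtrim($backupDir, '/'), $database, $now->format('Ymd-His'));

    return sprintf(
        // --defaults-extra-file must come first; --single-transaction
        // takes a consistent snapshot of InnoDB tables.
        'mysqldump --defaults-extra-file=%s --single-transaction --result-file=%s %s',
        escapeshellarg($defaultsFile),
        escapeshellarg($target),
        escapeshellarg($database)
    );
}

$command = buildBackupCommand(
    '/etc/mysql/backup.cnf',   // placeholder path to an options file holding credentials
    'app',                     // placeholder database name
    '/var/backups/mysql',      // placeholder backup directory
    new DateTimeImmutable('2025-01-15 02:00:00')
);
echo $command, "\n";
```

A cron job or similar scheduler could run the resulting command and then copy the file to a second location.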

10. Consider Using an ORM for Cleaner Code

Object-relational mapping (ORM) tools can simplify how developers interact with databases. Rather than writing raw SQL queries, developers can use ORM libraries to manage data through intuitive, object-oriented syntax.

This practice improves productivity, promotes code readability, and makes maintaining the application easier in the long run. While it’s not always necessary, using an ORM can be especially helpful for teams working on large or complex applications.
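
To give a feel for the idea, here is a hand-rolled mapping class built on PDO. It is an illustration only, not a real ORM library such as Eloquent or Doctrine, and the User and UserRepository classes are invented for the example.

```php
<?php
// Plain object representing one row of the users table.
final class User
{
    public $id;
    public $email;
}

// Hypothetical repository: callers work with User objects and never
// write SQL themselves.
final class UserRepository
{
    private PDO $pdo;

    public function __construct(PDO $pdo)
    {
        $this->pdo = $pdo;
    }

    public function find(int $id): ?User
    {
        $stmt = $this->pdo->prepare('SELECT id, email FROM users WHERE id = ?');
        $stmt->execute([$id]);
        $stmt->setFetchMode(PDO::FETCH_CLASS, User::class); // hydrate rows into User objects
        $user = $stmt->fetch();
        return $user instanceof User ? $user : null;
    }

    public function save(User $user): void
    {
        if ($user->id === null) {
            $stmt = $this->pdo->prepare('INSERT INTO users (email) VALUES (?)');
            $stmt->execute([$user->email]);
            $user->id = (int) $this->pdo->lastInsertId();
        } else {
            $stmt = $this->pdo->prepare('UPDATE users SET email = ? WHERE id = ?');
            $stmt->execute([$user->email, $user->id]);
        }
    }
}

$pdo = new PDO('sqlite::memory:'); // stand-in for a MySQL DSN
$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$pdo->exec('CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)');

$repo = new UserRepository($pdo);
$user = new User();
$user->email = 'alice@example.com';
$repo->save($user);               // INSERT, assigns $user->id

$user->email = 'alice@example.org';
$repo->save($user);               // UPDATE of the same row

$found = $repo->find($user->id);
```

Full ORM libraries add relationships, migrations, and query builders on top of this basic object-to-row mapping.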

Why Choose Professional Help?

While these best practices can be implemented by experienced developers, working with specialized PHP development companies in the USA ensures your web application follows industry standards from the start. These companies bring:

Deep expertise in integrating PHP and MySQL

Experience with optimizing database performance

Knowledge of the latest security practices

Proven workflows for development and deployment

Professional PHP development agencies also provide ongoing maintenance and support, helping businesses stay ahead of bugs, vulnerabilities, and performance issues.

Conclusion

PHP and MySQL remain a powerful and reliable pairing for web development in 2025. When integrated using modern techniques and best practices, they offer unmatched flexibility, speed, and scalability.

Whether you’re building a small website or a large-scale enterprise application, following these best practices ensures your PHP and MySQL stack is robust, secure, and future-ready. And if you're seeking expert assistance, consider partnering with one of the top PHP development companies in the USA to streamline your development journey and maximize the value of your project.
