#Secure Data Storage

Text

Explore Timbl Cloud Services for secure data storage, seamless access, and scalable solutions. Empower your business with reliable cloud hosting and advanced technology infrastructure.

#Timbl Cloud Services#Cloud Hosting Solutions#Secure Data Storage#Scalable Cloud Plans#Business Cloud Solutions#Timbl Cloud Technology#Enterprise Cloud Services

0 notes

Text

How to Set Up a Personal Cloud Storage System

In a world dominated by online services and ever-growing data needs, setting up your personal cloud storage system is a game changer. Whether you’re tired of relying on third-party services or simply want more control over your data, a personal cloud storage setup offers the flexibility, security, and scalability you need. Here’s how to set up your own cloud storage system, step by step. 1.…

#home cloud network#personal cloud storage#remote access cloud.#secure cloud setup#secure data storage

0 notes

Text

What Are the Top Security Features to Look for When Choosing a Virtual Tax Filing Service?

In today’s digital age, virtual tax filing services offer a convenient way to handle your taxes. However, with the growing risks of cyber threats, protecting your sensitive financial and personal information is more important than ever. When choosing a virtual tax filing service, security should be at the top of your priority list. Here are the top security features to look for to ensure your data remains safe.

Data Encryption

Encryption is the backbone of secure online platforms. A trustworthy virtual tax filing service should use robust encryption in transit, such as TLS (Transport Layer Security, the successor to the now-deprecated SSL) with 256-bit AES ciphers. This ensures that all information exchanged between you and the platform, like Social Security numbers and bank account details, is protected from unauthorized access.
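As a rough illustration of what "robust encryption in transit" can mean in practice, the sketch below uses Python's standard `ssl` module to build a client context that refuses anything older than TLS 1.2 and to report which protocol and cipher a server negotiates. The function names `make_strict_client_context` and `describe_connection` are hypothetical, and the TLS 1.2 floor is an assumption for the example, not a requirement stated by any particular tax service.

```python
import socket
import ssl
from typing import Tuple


def make_strict_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context that refuses legacy protocol versions."""
    # create_default_context() turns on certificate and hostname verification.
    context = ssl.create_default_context()
    # Assumption for this sketch: treat TLS 1.2 as the oldest acceptable version.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def describe_connection(host: str, port: int = 443) -> Tuple[str, str, int]:
    """Connect to host and return (protocol version, cipher name, secret bits)."""
    context = make_strict_client_context()
    with socket.create_connection((host, port), timeout=10) as raw_sock:
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            cipher_name, _protocol, secret_bits = tls_sock.cipher()
            return tls_sock.version(), cipher_name, secret_bits
```

Calling `describe_connection` with a service's hostname returns the negotiated protocol version, the cipher suite name, and the key length in bits, which is where a figure like "256-bit" comes from.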

Multi-Factor Authentication (MFA)

Passwords alone are no longer enough to safeguard accounts. Look for services that offer multi-factor authentication, which requires a second verification step, like a code sent to your phone or email. MFA adds an extra layer of protection, making it harder for hackers to breach your account even if they have your password.
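To make the "second verification step" concrete, here is a minimal sketch of time-based one-time passwords (TOTP, RFC 6238), the scheme behind most authenticator apps. It is an illustration under stated assumptions, not how any specific tax service implements MFA: the function names `totp` and `verify`, the 30-second step, and the one-step drift window are choices made for the example.

```python
import hashlib
import hmac
import struct
import time


def totp(secret, at_time=None, step=30, digits=6):
    """Compute an RFC 6238 time-based one-time password using HMAC-SHA1."""
    if at_time is None:
        at_time = time.time()
    counter = int(at_time // step)  # number of whole time steps since the epoch
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset (RFC 4226)
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret, submitted, at_time=None, window=1, step=30):
    """Accept a code from the current time step or `window` steps either side."""
    if at_time is None:
        at_time = time.time()
    for drift in range(-window, window + 1):
        expected = totp(secret, at_time + drift * step, step)
        # compare_digest avoids leaking how many characters matched.
        if hmac.compare_digest(expected, submitted):
            return True
    return False
```

The server and the user's device share `secret` once at enrollment. After that, a stolen password alone is not enough, because the attacker also needs the code derived from the secret and the current time.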

Secure Storage

Your tax information is valuable, so it’s crucial to choose a service that stores your data securely. Check if the platform offers encrypted storage and uses secure servers located in data centers with advanced physical security measures.

Regular Security Audits and Updates

A reliable virtual tax filing service should perform regular security audits to identify and fix vulnerabilities. They should also stay updated with the latest cybersecurity practices, ensuring their platform remains protected against new and emerging threats.

Privacy Policy Transparency

Read the service’s privacy policy to understand how they handle your data. Ensure they don’t share your information with third parties without your consent and comply with regulations like GDPR or CCPA, depending on your location.

Fraud Detection and Alerts

Some platforms include built-in fraud detection systems that monitor for suspicious activity, like unauthorized logins or unusual transactions. Additionally, instant alerts keep you informed of any potential security breaches.

Customer Support and Backup Options

In case of a security issue, responsive customer support is essential. Choose a service that offers immediate assistance and provides options for securely backing up your data.

When selecting a virtual income tax filing service, security should be non-negotiable. By prioritizing features like encryption, MFA, secure storage, and regular updates, you can protect your sensitive information and enjoy the convenience of filing taxes online without worry.

#Virtual Tax Filing Service#Income Tax Filing#Data Encryption#Multi-Factor Authentication#Secure Data Storage#Fraud Detection and Privacy Protection

0 notes

Text

Understanding SecurePath Premium: A Comprehensive Review

Introduction

In a world of increasing cyber threats, businesses and individuals alike are seeking more robust and reliable ways to protect their data and assets. SecurePath Premium is a pioneering cybersecurity solution designed to provide comprehensive protection for businesses and individuals in today’s digital age. In this review, we will take a closer look at SecurePath Premium, its features, benefits, and how it can help you stay safe online.

What is SecurePath Premium?

SecurePath Premium is a leading cybersecurity service that provides comprehensive protection for businesses and individuals. It is designed to offer advanced security measures to keep your sensitive data and assets safe from cyber threats such as malware, ransomware, phishing attacks, and more.

Features of SecurePath Premium

Comprehensive Endpoint Protection

SecurePath Premium offers comprehensive endpoint protection, which means it protects your devices from all angles. This includes real-time threat detection and removal, advanced firewall protection, web filtering, and more. With this feature, you can rest assured that your devices are protected from the latest cyber threats.

Cloud Backup

SecurePath Premium also includes cloud backup, allowing you to securely store your important files and documents in the cloud. This feature ensures that even if your device is compromised, your data is safe and can be easily restored.

Identity Theft Protection

Identity theft is a growing concern, and SecurePath Premium addresses this by offering identity theft protection. This feature helps protect your personal and financial information from unauthorized access and misuse.

Password Manager

SecurePath Premium also includes a password manager, making it easy for you to create and manage strong, unique passwords for all your online accounts. This feature helps protect you from password-related security breaches.

Benefits of SecurePath Premium

Enhanced Security

The most obvious benefit of SecurePath Premium is enhanced security. With its advanced features, SecurePath Premium provides comprehensive protection against a wide range of cyber threats.

Peace of Mind

Knowing that your sensitive data and assets are protected can give you peace of mind. With SecurePath Premium, you can rest assured that your devices and data are safe from cyber threats.

Cost Savings

While the cost of SecurePath Premium may seem high, the benefits it provides can actually save you money in the long run. By preventing data breaches and other cyber incidents, SecurePath Premium can help you avoid costly repairs and downtime.

Conclusion

SecurePath Premium is a pioneering cybersecurity solution that offers comprehensive protection for businesses and individuals. With its advanced features and benefits, it provides enhanced security, peace of mind, and cost savings. If you are looking for a reliable and effective cybersecurity solution, SecurePath Premium is definitely worth considering.

1 note

Text

#WordPress site deletion#Delete WordPress website#Removing WordPress site#Uninstall WordPress from cPanel#Backup WordPress website#WordPress database deletion#Website platform migration#WordPress site management#cPanel tutorial#WordPress site backup#WordPress website security#Data backup and recovery#Website content management#WordPress maintenance#WordPress database management#Website data protection#Deleting WordPress files#Secure data storage#WordPress site removal process#WordPress website best practices

0 notes

Text

#Entity Locker#Locker Storage#Digital Locker#Secure Storage#Cloud Locker#Data Locker#Secure Entity Locker#Document Locker#Online Locker#Smart Locker

1 note

Text

A Complete Guide to Mastering Microsoft Azure for Tech Enthusiasts

As technology advances rapidly, businesses around the world are shifting towards cloud computing to enhance their operations and stay ahead of the competition. Microsoft Azure, a powerful cloud computing platform, offers a wide range of services and solutions for various industries. This comprehensive guide aims to provide tech enthusiasts with an in-depth understanding of Microsoft Azure, its features, and how to leverage its capabilities to drive innovation and success.

Understanding Microsoft Azure

Azure is Microsoft's cloud computing platform and service. It provides reliable and scalable solutions for businesses to build, deploy, and manage applications and services through Microsoft-managed data centers. Azure offers a vast array of services, including virtual machines, storage, databases, networking, and more, enabling businesses to optimize their IT infrastructure and accelerate their digital transformation.

Cloud Computing and its Significance

Cloud computing has revolutionized the IT industry by providing on-demand access to a shared pool of computing resources over the internet. It eliminates the need for businesses to maintain physical hardware and infrastructure, reducing costs and improving scalability. Microsoft Azure embraces cloud computing principles to enable businesses to focus on innovation rather than infrastructure management.

Key Features and Benefits of Microsoft Azure

Scalability: Azure provides the flexibility to scale resources up or down based on workload demands, ensuring optimal performance and cost efficiency.

Vertical Scaling: Increase or decrease the size of resources (e.g., virtual machines) within Azure.

Horizontal Scaling: Expand or reduce the number of instances across Azure services to meet changing workload requirements.

Reliability and Availability: Microsoft Azure ensures high availability through its globally distributed data centers, redundant infrastructure, and automatic failover capabilities.

Service Level Agreements (SLAs): Guarantees high availability, with SLAs covering different services.

Availability Zones: Distributes resources across multiple data centers within a region to ensure fault tolerance.

Security and Compliance: Azure incorporates robust security measures, including encryption, identity and access management, threat detection, and regulatory compliance adherence.

Azure Security Center (since renamed Microsoft Defender for Cloud): Provides centralized security monitoring, threat detection, and compliance management.

Compliance Certifications: Azure complies with various industry-specific security standards and regulations.

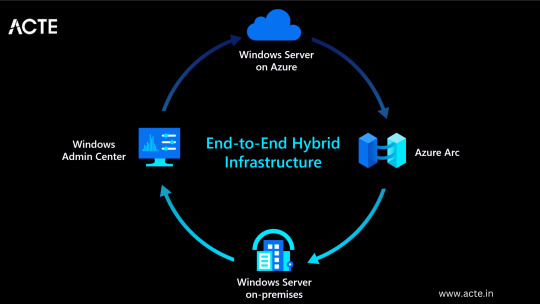

Hybrid Capability: Azure seamlessly integrates with on-premises infrastructure, allowing businesses to extend their existing investments and create hybrid cloud environments.

Azure Stack: Enables organizations to build and run Azure services on their premises.

Virtual Network Connectivity: Establish secure connections between on-premises infrastructure and Azure services.

Cost Optimization: Azure provides cost-effective solutions, offering pricing models based on consumption, reserved instances, and cost management tools.

Azure Cost Management: Helps businesses track and optimize their cloud spending, providing insights and recommendations.

Azure Reserved Instances: Allows for significant cost savings by committing to long-term usage of specific Azure services.

Extensive Service Catalog: Azure offers a wide range of services and tools, including app services, AI and machine learning, Internet of Things (IoT), analytics, and more, empowering businesses to innovate and transform digitally.

Learning Path for Microsoft Azure

To master Microsoft Azure, tech enthusiasts can follow a structured learning path that covers the fundamental concepts, hands-on experience, and specialized skills required to work with Azure effectively. I advise looking at the ACTE Institute, which offers a comprehensive Microsoft Azure Course.

Foundational Knowledge

Familiarize yourself with cloud computing concepts, including Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS).

Understand the core components of Azure, such as Azure Resource Manager, Azure Virtual Machines, Azure Storage, and Azure Networking.

Explore Azure architecture and the various deployment models available.

Hands-on Experience

Create a free Azure account to access the Azure portal and start experimenting with the platform.

Practice creating and managing virtual machines, storage accounts, and networking resources within the Azure portal.

Deploy sample applications and services using Azure App Services, Azure Functions, and Azure container services.

Certification and Specializations

Pursue Azure certifications to validate your expertise in Azure technologies. Microsoft offers role-based certifications, including Azure Administrator, Azure Developer, and Azure Solutions Architect.

Gain specialization in specific Azure services or domains, such as Azure AI Engineer, Azure Data Engineer, or Azure Security Engineer. These specializations demonstrate a deeper understanding of specific technologies and scenarios.

Best Practices for Azure Deployment and Management

Deploying and managing resources effectively in Microsoft Azure requires adherence to best practices to ensure optimal performance, security, and cost efficiency. Consider the following guidelines:

Resource Group and Azure Subscription Organization

Organize resources within logical resource groups to manage and govern them efficiently.

Leverage Azure Management Groups to establish hierarchical structures for managing multiple subscriptions.

Security and Compliance Considerations

Implement robust identity and access management mechanisms, such as Azure Active Directory (since renamed Microsoft Entra ID).

Enable encryption at rest and in transit to protect data stored in Azure services.

Regularly monitor and audit Azure resources for security vulnerabilities.

Ensure compliance with industry-specific standards, such as ISO 27001, HIPAA, or GDPR.

Scalability and Performance Optimization

Design applications to take advantage of Azure’s scalability features, such as autoscaling and load balancing.

Leverage Azure CDN (Content Delivery Network) for efficient content delivery and improved performance worldwide.

Optimize resource configurations based on workload patterns and requirements.

Monitoring and Alerting

Utilize Azure Monitor and Azure Log Analytics to gain insights into the performance and health of Azure resources.

Configure alert rules to notify you about critical events or performance thresholds.

Backup and Disaster Recovery

Implement appropriate backup strategies and disaster recovery plans for essential data and applications.

Leverage Azure Site Recovery to replicate and recover workloads in case of outages.

Mastering Microsoft Azure empowers tech enthusiasts to harness the full potential of cloud computing and revolutionize their organizations. By understanding the core concepts, leveraging hands-on practice, and adopting best practices for deployment and management, individuals become equipped to drive innovation, enhance security, and optimize costs in a rapidly evolving digital landscape. Microsoft Azure’s comprehensive service catalog ensures businesses have the tools they need to stay ahead and thrive in the digital era. So, embrace the power of Azure and embark on a journey toward success in the ever-expanding world of information technology.

#microsoft azure#cloud computing#cloud services#data storage#tech#information technology#information security

6 notes

Text

Data Center Virtualization Market Report: Unlocking Growth Potential and Addressing Challenges

United States of America – June 26, 2025 – The Insight Partners is pleased to announce its latest market report titled "Data Center Virtualization Market: An In-depth Analysis of Industry Trends and Opportunities." This report provides a comprehensive overview of the data center virtualization market, examining current dynamics and future growth prospects across key segments.

Overview of Data Center Virtualization Market

The data center virtualization market has experienced significant transformation due to rapid digitalization, the rise of cloud computing, and increasing demand for scalable IT infrastructure. Virtualization technologies allow organizations to optimize resource utilization, reduce operational costs, and enhance business agility. The market is evolving with advancements in server, storage, and network virtualization solutions, alongside growing adoption by enterprises of all sizes.

Key Findings and Insights

Market Size and Growth

The data center virtualization market continues to expand steadily, driven by increasing demand from various industry verticals and growing adoption of virtualization services. Organizations are focusing on enhancing IT efficiency and security while managing complex workloads and expanding data volumes.

Key Factors Influencing the Market

Accelerated cloud adoption and hybrid IT environments.

Rising need for cost-effective and flexible IT infrastructure.

Enhanced disaster recovery and business continuity requirements.

Increasing emphasis on energy-efficient and sustainable data centers.

Market Segmentation

The market is segmented by:

Component:

Solution

Services

Type:

Server

Storage

Network

Desktop

Application

Organization Size:

Large Enterprises

SMEs

End User:

BFSI (Banking, Financial Services, and Insurance)

Healthcare

IT and Telecommunication

Manufacturing

Government

Retail

Other sectors

This segmentation highlights the broad applicability of data center virtualization technologies across diverse industries and organizational scales.

Spotting Emerging Trends

Technological Advancements: Development of software-defined data centers (SDDC), containerization, and enhanced virtualization security protocols are reshaping the market landscape.

Changing Consumer Preferences: Organizations are increasingly prioritizing flexible, scalable virtualization solutions that support remote and hybrid work models.

Regulatory Changes: Compliance with data protection regulations and standards is influencing virtualization strategies and investments.

Growth Opportunities

The data center virtualization market presents promising opportunities in:

Expanding virtualization in emerging economies with growing IT infrastructure investments.

Adoption of AI and machine learning for automated and intelligent data center management.

Increasing demand for virtualization in cloud-native applications and edge computing.

Development of customized virtualization solutions for industry-specific needs.

Conclusion

The report "Data Center Virtualization Market: Global Industry Trends, Share, Size, Growth, Opportunity, and Forecast 2023-2031" provides much-needed insight for companies looking to establish operations in the data center virtualization market. Because it offers an in-depth analysis of competitive dynamics, the market environment, and the probable growth path, stakeholders can make fact-based decisions that improve market performance and business opportunities.

About The Insight Partners

The Insight Partners is among the leading market research and consulting firms in the world. We take pride in delivering exclusive reports along with sophisticated strategic and tactical insights into the industry. Reports are generated through a combination of primary and secondary research, solely aimed at giving our clientele a knowledge-based insight into the market and domain. This is done to assist clients in making wiser business decisions. A holistic perspective in every study undertaken forms an integral part of our research methodology and makes the report unique and reliable.

To learn more and get access to sample reports, visit https://www.theinsightpartners.com/sample/TIPRE00039803

#Data center#virtualization#cloud computing#IT infrastructure#server virtualization#storage virtualization#network virtualization#enterprise IT#hybrid cloud#data security

0 notes

Text

How Automated Data Archiving and Offline Storage Systems Protect Your Digital Assets

In today's digital world, data is more precious than ever — and more at risk. With the growing threats of cyberattacks, unintentional data loss, and digital decay, protecting your digital assets is no longer a choice. For governments, organizations, and even individuals working with sensitive data, maintaining a strong solution for data archiving and offline storage is now a strategic imperative.

Step into the realm of Automated Data Archiving and offline storage solutions — new technology that is revolutionizing how we manage, secure, and store data for long-term preservation.

Why Long-Term Data Storage Is Important

Every click, transaction, and communication nowadays leaves a digital trail. Whether it is financial records, legal documents, medical reports, research information, or data retained for compliance, keeping data for years, or even decades, is now the norm.

But with technology changing relentlessly, saving files on a hard disk or in cloud storage is no longer sufficient. You need long-term data storage solutions that keep data accessible, secure, and complete, regardless of how old it becomes.

What is Automated Data Archiving?

Automated Data Archiving is the process of locating digital data — particularly data that is no longer in active use — and automatically relocating it to a safe archive. This takes pressure off your live systems while keeping valuable information safely stored and accessible.

Rather than doing it manually by transferring files and folders, these systems run in the background and archive according to rules such as file age, size, or frequency of access.

Not only does this automation save time and effort, but it also minimizes the likelihood of human error, making your secured data storage system more trustworthy.
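The rule-based archiving described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production archiver: `ArchivePolicy`, `select_for_archive`, and `archive` are hypothetical names, modification time stands in for "frequency of access" (many filesystems do not reliably record access times), and the archive directory is assumed to live outside the scanned root.

```python
import shutil
import time
from dataclasses import dataclass
from pathlib import Path


@dataclass
class ArchivePolicy:
    """Hypothetical rule set: archive files at least this old and this large."""
    min_age_days: float = 365.0
    min_size_bytes: int = 0


def select_for_archive(root, policy, now=None):
    """Return the files under root that satisfy the policy."""
    now = time.time() if now is None else now
    cutoff = now - policy.min_age_days * 86400
    selected = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file():
            continue
        info = path.stat()
        # Assumption: last-modified time is used as a proxy for inactivity.
        if info.st_mtime <= cutoff and info.st_size >= policy.min_size_bytes:
            selected.append(path)
    return selected


def archive(root, archive_dir, policy, now=None):
    """Move matching files into archive_dir, preserving relative paths."""
    root, archive_dir = Path(root), Path(archive_dir)
    moved = []
    for path in select_for_archive(root, policy, now):
        target = archive_dir / path.relative_to(root)
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.move(str(path), str(target))
        moved.append(target)
    return moved
```

Run on a schedule (for example from cron), a routine like this moves inactive files out of the live system without anyone copying folders by hand. A real deployment would also log each move and verify the copy before deleting the original.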

The Role of Offline Storage in Data Security

Although cloud-based tools are convenient, they are also susceptible to perpetual online threats — hacking, ransomware, and even accidental overwrites. This is why offline data storage is now so important.

By keeping a copy of your data offline, you significantly lower the risk of outside attacks. This kind of offline data protection is particularly useful for cold data storage: data that is rarely touched but must be retained for regulatory, legal, or business continuity purposes.

Offline storage is perfect for:

Archived legal documents

Historical customer information

Financial and audit trails

Scientific or academic research repositories

Sensitive digital records storage

Cold Data Storage: The Quiet Watchdog

Not all data has to be available right away. In fact, much organizational data becomes inactive within months, but that doesn't make it disposable. This is cold data: data that has to be kept but doesn't require immediate access.

Cold storage data solutions are built expressly for this kind of data. They provide low-cost, high-security data storage for archiving data that might get accessed rarely — or perhaps not at all.

This makes them ideal for long-term preservation of digital proof, contracts, or old project documents.

Advantages of Automated Data Archiving and Offline Storage Systems

1. Enhanced Data Security

Placing files in offline or cold storage takes them out of the immediate online danger zone. Your data is better protected from cyberattacks, malware, and accidental loss.

2. Regulatory Compliance

Many industries require digital evidence storage and data archiving systems to meet specific legal requirements. Automating the process helps ensure you don't fall short of a requirement.

3. Cost Savings

Archived information doesn't have to reside on pricey high-speed servers. Offline or cold storage reduces costs significantly without sacrificing security.

4. Scalability

As your information grows, so does your archive. Modern data archiving and offline storage systems can scale to accommodate terabytes, even petabytes, of data with ease.

5. System Performance Optimization

By relocating inactive data from your active system, you reclaim space and enhance performance for your normal operations.

Selecting the Right Solution

Not all data is created equal — and neither are all storage requirements. The right system will most likely blend automated data archiving with online and offline data storage, striking an equilibrium between accessibility and safeguarding.

Look for features such as:

Policy-based rules for archiving

Encryption and access control

Redundant backups

Offline access protocols

Integration with compliance standards

Working with the right data archiving and storage systems vendor ensures that your configuration works with your requirements, not against them.

Final Thoughts

In 2025 and beyond, digital security isn't only about firewalls and passwords. It's about having the right data lifecycle strategy. As the amount of information continues to balloon, automated data archiving and offline storage systems are becoming a necessity for those who take digital asset protection seriously.

If you're working with sensitive documents, regulated data, or mission-critical digital information, long-term storage that incorporates offline data protection is a wise investment.

Because when it comes to data, what you hold back — and how you hold on — can determine your future.

Are your digital assets really secure?

It's time to rethink storage. Select a solution that's not only smart, but future-ready.

#Automated Data Archiving#data archiving and offline storage#offline data storage#cold data storage#secured data storage#digital evidence storage#offline data security#long-term data storage#data archiving and storage systems

0 notes

Text

Discover the key features and benefits of eShare.ai’s cloud storage solution. From seamless file sharing and real-time collaboration to top-tier data encryption and unlimited storage, eShare.ai empowers individuals and businesses to organize, protect, and access their data anytime, anywhere. Go paperless, boost productivity, and simplify your workflow with a platform designed for modern digital needs.

#eShare.ai#cloud storage#file sharing#digital organization#online collaboration#secure storage#paperless office#cloud backup#unlimited storage#business productivity#file sync#SaaS platform#data access anywhere#digital workspace

0 notes

Text

Best Privacy Tools to Anonymize Your Online Activity

In an era where data is the new currency, protecting your privacy online is more important than ever. From tracking your browsing habits to selling your personal information, it feels like everyone wants a piece of your digital footprint. But don’t worry, you can fight back with these top privacy tools designed to help you stay anonymous and keep your online activity secure. 1. Virtual Private…

#DIY cloud storage#home cloud network#personal cloud storage#remote access cloud.#secure data storage

0 notes

Text

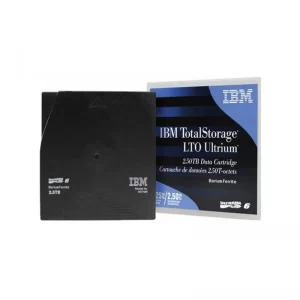

High Quality Data Backup Cartridges | Server Storage UK

Shop premium data cartridges designed for efficient backup and long-term storage. Ideal for enterprise servers and tape libraries, our cartridges provide secure, cost-effective data protection. Browse our extensive UK stock, including LTO and other formats, with fast delivery, expert advice, and reliable customer support for your business needs.

#Data backup cartridges#Server storage solutions#Enterprise tape libraries#LTO data cartridges#Secure data protection

0 notes

Text

“When the Storm Hits - Why A Cloud Rewind is Your Digital Lifeboat”

Ian Moyse, Technology Influencer & Sales Leader

In today’s digital landscape, cyber threats are increasingly sophisticated and pervasive, and unexpected outages of varying causes have taken IT systems offline without warning. Traditional data backup solutions alone are no longer sufficient for the intrinsically linked IT and data mission critical lives we all live, even though with…

0 notes

Text

AI-driven admin analytics: Tackling complexity, compliance, and customization

New Post has been published on https://thedigitalinsider.com/ai-driven-admin-analytics-tackling-complexity-compliance-and-customization/

As productivity software evolves, the role of enterprise IT admins has become increasingly challenging.

Not only are they responsible for enabling employees to use these tools effectively, but they are also tasked with justifying costs, ensuring data security, and maintaining operational efficiency.

In my previous role as a Reporting and Analytics Product Manager, I collaborated with enterprise IT admins to understand their struggles and design solutions. This article explores the traditional pain points of admin reporting and highlights how AI-powered tools are revolutionizing this domain.

Key pain points in admin reporting

Through my research and engagement with enterprise IT admins, several recurring challenges surfaced:

Manual, time-intensive processes: Admins often spent significant time collecting, aggregating, and validating data from fragmented sources. These manual tasks not only left little room for strategic planning but also led to frequent errors.

Data complexity and compliance: The explosion of data, coupled with stringent regulatory requirements (e.g., GDPR, HIPAA), made ensuring data integrity and security a daunting task for many admins.

Unpredictable user requests: Last-minute requests or emergent issues from end-users often disrupted admin workflows, adding stress and complexity to their already demanding roles.

Limited insights for decision-making: Traditional reporting frameworks offered static, retrospective metrics with minimal foresight or actionable insights for proactive decision-making.

Building a workflow to solve reporting challenges

To address these pain points, I developed a workflow that automates data collection and improves overall reporting efficiency. Below is a comparison of traditional reporting workflows and an improved, AI-driven approach:

Traditional workflow:

Data collection: Manually gathering data from different sources (e.g., logs, servers, cloud platforms).

Data aggregation: Combining data into a report manually, often using Excel or custom scripts.

Validation: Ensuring the accuracy and consistency of aggregated data.

Report generation: Compiling and formatting the final report for stakeholders.

Improved workflow (AI-driven):

Automation: Introducing AI tools to automate data collection, aggregation, and validation, which significantly reduces manual efforts and errors.

Real-Time Insights: Integrating real-time data sources to provide up-to-date, actionable insights.

Customization: Providing interactive dashboards for on-demand reporting, enabling admins to track key metrics and make data-driven decisions efficiently.
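The collection, aggregation, and validation steps in the workflows above can be sketched without any AI component, which makes clear what the automation is replacing. The example below is illustrative only: the record fields (`user`, `source`, `active_minutes`) and the function names `validate` and `build_report` are assumptions, not part of any product discussed in this article.

```python
from collections import defaultdict

# Hypothetical schema for one usage record gathered from any source.
REQUIRED_FIELDS = ("user", "source", "active_minutes")


def validate(record):
    """Return a list of problems found in one record (empty means valid)."""
    problems = ["missing " + field for field in REQUIRED_FIELDS if field not in record]
    minutes = record.get("active_minutes")
    if minutes is not None and (not isinstance(minutes, (int, float)) or minutes < 0):
        problems.append("active_minutes must be a non-negative number")
    return problems


def build_report(sources):
    """Aggregate valid records per user and collect rejected ones for review."""
    totals = defaultdict(float)
    rejected = []
    for records in sources:
        for record in records:
            problems = validate(record)
            if problems:
                rejected.append((record, problems))
                continue
            totals[record["user"]] += record["active_minutes"]
    return dict(totals), rejected
```

Keeping the rejected records alongside the totals, rather than silently dropping them, preserves the validation step from the traditional workflow while removing the manual effort.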

Evolution with AI capabilities: Market research insights

Several leading companies have successfully implemented AI to transform their admin reporting processes. Below are examples that highlight the future of admin reporting:

Microsoft 365 Copilot

Microsoft’s AI-powered Copilot integrates with its suite of apps to provide real-time data insights, trend forecasting, and interactive visualizations.

This proactive approach helps IT admins make data-driven decisions while automating manual processes. By forecasting trends and generating real-time reports, Copilot allows admins to manage resources and workloads more effectively.

Salesforce Einstein Analytics

Salesforce Einstein leverages advanced AI for predictive modeling, customer segmentation, and enhanced analytics.

Admins can forecast future trends based on historical data and create personalized reports that directly inform strategic decision-making. This surfaces actionable insights that were previously difficult to uncover manually.

Box AI agents

Box’s AI agents autonomously collect, analyze, and report data. These agents detect anomalies and generate detailed reports, freeing admins to focus on higher-priority tasks. By automating complex reporting processes, Box’s AI agents enhance both speed and accuracy in decision-making.

Future capabilities and opportunities

Looking ahead, several emerging capabilities can further unlock the potential of admin reporting:

Seamless data integration: AI-powered tools enable organizations to unify data from disparate systems (e.g., cloud storage, internal databases, third-party applications), providing a holistic view of critical metrics and eliminating the need for manual consolidation.

AI-powered decision support: Context-aware AI can offer personalized recommendations or automate complex workflows based on historical patterns and operational context, reducing manual intervention while enhancing accuracy.

Automated compliance checks: AI tools can continuously monitor compliance with evolving regulations, automatically generating compliance reports to keep organizations secure and up-to-date.

Security and performance monitoring: AI can detect unusual patterns in data, such as unexpected traffic spikes or system anomalies, allowing admins to proactively address potential security threats or failures before they escalate.

Interactive dashboards and NLP: By incorporating natural language processing (NLP), AI tools enable admins to query data in plain language and receive intuitive, visual reports, which streamlines analysis and improves usability.
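As a rough illustration of the security and performance monitoring item above, unexpected traffic spikes can be flagged with a simple rolling z-score. This is a deliberately basic statistical stand-in, not the method any of the products mentioned here use; the function name `find_spikes`, the window size, and the threshold are assumptions chosen for the example.

```python
from statistics import mean, stdev


def find_spikes(values, window=5, threshold=3.0):
    """Return indices of values that spike above recent history.

    A value counts as a spike when it lies more than `threshold` sample
    standard deviations above the mean of the preceding `window` values.
    Windows with zero variance are skipped, so a jump that follows a
    perfectly flat history is not flagged by this simple version.
    """
    spikes = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and (values[i] - mu) / sigma > threshold:
            spikes.append(i)
    return spikes


if __name__ == "__main__":
    # Hypothetical requests-per-minute readings with one obvious spike.
    traffic = [100, 102, 98, 101, 99, 100, 500, 101]
    print(find_spikes(traffic))
```

A production monitor would more likely use robust statistics or a learned baseline, but the shape is the same: compare each new reading against recent history and alert when the deviation crosses a threshold.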

Conclusion

The transformation of admin reporting from manual workflows to AI-driven insights has revolutionized IT operations. By automating routine tasks, delivering real-time insights, and enhancing predictive capabilities, AI empowers IT admins to focus on strategic initiatives while ensuring data accuracy and compliance.

As organizations continue to adopt advanced AI capabilities, the future of admin reporting holds exciting possibilities, from seamless data integration to adaptive, context-aware decision-making tools.

These innovations will not only enhance efficiency but also enable organizations to thrive in an increasingly complex, data-driven world.

#admin#agents#ai#AI AGENTS#ai tools#AI-powered#Analysis#Analytics#anomalies#applications#approach#apps#Article#Articles#automation#box#Building#Cloud#cloud storage#Companies#comparison#complexity#compliance#consolidation#Customer Segmentation#data#data collection#Data Integration#data integrity#data security

0 notes

Text

The Advantages of Computer Networks: Enhancing Connectivity & Efficiency

Computer networks form the backbone of modern communication, business operations, and data management, enabling seamless connectivity and resource sharing. Whether in workplaces, homes, or global enterprises, networking has transformed the way we exchange information. Here’s why computer networks are essential for efficiency, security, and collaboration. 1. Seamless Communication &…

#business connectivity#cloud storage solutions#computer networking benefits#digital collaboration#IT infrastructure efficiency#network performance optimization#secure data sharing

1 note

Video

youtube

🔐 Your Data Deserves Better

This Wednesday, we’re diving into the future of data protection with an exclusive presentation on GotBackup — the cloud backup service that secures your files and helps you earn residual income. 💻💰

🌍 100% Online & Free 🗓️ Don’t miss it — spots are limited!

👉 Reserve Your Spot Now

https://bit.ly/42JEDax

#GotBackup #DataProtection #CloudBackup #DigitalSecurity #OnlineBackup #PassiveIncome #WorkFromHome #TechTools #BackupSolutions #SecureYourFiles #ProtectYourData #TumblrEvents #CyberSecurity #CloudStorage

(via 🔒 Why You Can’t Miss This Wednesday’s GotBackup Presentation!)

#GotbackUp#data protection#cloud backup#digital security#Online Backup#passive income#work from home#tech tools#backup solution#secure your files#protect your data#tumblr event#cybersecurity#cloud storage

0 notes