#Scrape Data from eBay

Text

eBay Scraper | Scrape eBay product data

Use the unofficial eBay scraper API to scrape eBay product data, including categories, prices, and keywords, in the USA, UK, Canada, Germany, and other countries.
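The post targets several countries, so it helps to see how each one maps to its own regional eBay site. The sketch below only builds search URLs for a few of those sites. Several parts of it are assumptions taken from eBay's public search pages: the domain table, the `_nkw` (keywords) and `_pgn` (page number) query parameters, and the helper name `ebay_search_url`. None of them come from any documented scraper API. eBay also offers official developer APIs, which may be a better fit than scraping.

```python
from urllib.parse import urlencode

# Assumed mapping from country code to eBay's regional storefront domain.
EBAY_DOMAINS = {
    "US": "www.ebay.com",
    "UK": "www.ebay.co.uk",
    "CA": "www.ebay.ca",
    "DE": "www.ebay.de",
}


def ebay_search_url(keywords: str, country: str = "US", page: int = 1) -> str:
    """Build a search-results URL for one eBay regional site (hypothetical helper)."""
    domain = EBAY_DOMAINS[country.upper()]  # raises KeyError for unsupported countries
    # _nkw = search keywords, _pgn = results page number (observed URL conventions)
    query = urlencode({"_nkw": keywords, "_pgn": page})
    return f"https://{domain}/sch/i.html?{query}"


if __name__ == "__main__":
    for country in ("US", "UK", "CA", "DE"):
        print(ebay_search_url("garfield plush", country))
```

`urlencode` handles the escaping, so spaces in the keywords become `+`. Each generated URL would then be fetched and parsed per site.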

Text

3.11.2024: What I Know and Where to Go

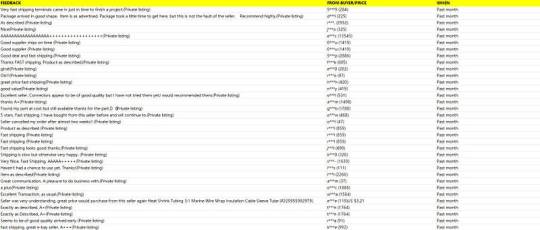

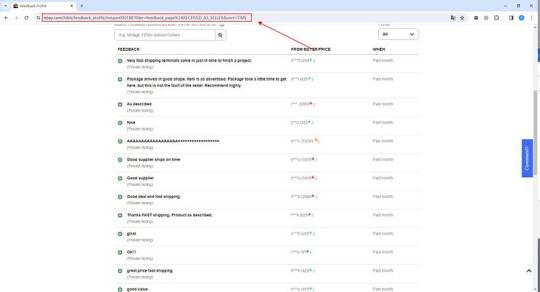

While the simplest and most brute-force way to catalog every Garfield plush would be to get on eBay and put each unique listing into a spreadsheet (which, trust me, I AM doing), stopping there would mean a LOT of plushies slip through the cracks, and what's the point of an ultimate spreadsheet without all of the available data? So I've been trying a more top-down approach to fill in the blanks where eBay can't.

So far, I've had trouble finding any leads that aren't just dead ends. I haven't been actively recording the process until now, so this post is a way to get this blog up to date and also sort out what to do next.

Here's what I know so far:

Dakin Inc has a long, winding history, from selling guns to plush animals, but what matters for our investigation is that it was founded in 1955 and began merchandising Garfield plushies in the 1980s. It's reasonable to assume this started in 1981 at the earliest, since that was the year Paws Inc was officially founded and could freely merchandise Garfield's likeness.

Applause Inc, another toy company, bought out Dakin in 1995, after the 1994 San Francisco earthquake, which destroyed their headquarters. I haven't found any evidence of Garfield plushies ever being produced or sold under Applause, which leads me to believe that they stopped Garfield production in that year at the latest.

These give us a hypothetical timeline: 1981-1995. Sweet. Given this timeline, copies/scans of physical catalogs would be a good resource; however, I'm having trouble finding them at all, let alone any that feature the Garfield plushies. I'm still trawling the internet looking for them, though I might resort to hopping on Reddit or some old forums to see if anyone else has good leads.

It's hard to find any former members of Dakin Inc as well, especially any who worked during this time period. I'm not deep into this aspect of the investigation yet, so I'll return to this more later.

As for the toys themselves, there are a few details that might be worth looking over, mostly on the tags. First up are the copyright dates on the tags. Some will have just one year, ex. 1983, while others will have two specific years together, ex. 1978, 1983. These seem to refer to the year Garfield was picked up and printed by United Feature Syndicate (1978) and the year the plushie was produced (1983, here). These will help us create a timeline.
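Once the tags are typed into the spreadsheet, the two-year reading above can be applied mechanically. The sketch below assumes the copyright line is transcribed as-is, and the sample tag text in it is hypothetical. It treats 1978 as the strip's syndication year and takes the latest other year as the production year. It then flags anything outside the hypothetical 1981-1995 Dakin window.

```python
import re
from typing import Optional

SYNDICATION_YEAR = 1978           # year Garfield was picked up for syndication
DAKIN_WINDOW = range(1981, 1996)  # hypothetical 1981-1995 production window

YEAR_PATTERN = re.compile(r"\b(19\d{2})\b")


def production_year(tag_text: str) -> Optional[int]:
    """Guess the production year from a tag's copyright line."""
    years = [int(y) for y in YEAR_PATTERN.findall(tag_text)]
    if not years:
        return None
    # With two years (e.g. "1978, 1983"), the non-1978 one is the production year.
    later = [y for y in years if y != SYNDICATION_YEAR]
    return max(later) if later else years[0]


def in_dakin_window(year: Optional[int]) -> bool:
    """True if the year falls inside the assumed Dakin production window."""
    return year is not None and year in DAKIN_WINDOW
```

A tag that reads only "1978" comes back as 1978 and falls outside the window. That result is itself a useful flag for a closer look.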

The second feature on the tag is that some plushes have a number in a circle on the back of their tags. However, I have yet to find any sort of pattern or meaning to these. It's not character-based, as the same character in a different pose will have a different number and two different characters will have the same number. There's no pattern with similar poses either, nor with year of production. Most plushies have them, but I've noticed that some of the Garfield ones don't for whatever reason??

It's been frustrating, because these numbers seem like they might be related to either the production process or catalog ordering, but without that context they mean virtually nothing. I've looked into tagging practices at the time, even going so far as to email some employees at the US Consumer Product Safety Commission about old tagging standards, but I've come up with nothing. This suggests that this was a practice (relatively) exclusive to Dakin, which is further backed up by the fact that some other Dakin plushes from the time had these numbers as well. The Garfield numbers tended to stay in the teens, though, while the numbers I could find on other plushes have been in the 20s and higher.

These are the leads I have for now. My next steps look like this:

scrape the internet for catalog scans

ask in forums for any leads

look into former employees that could answer questions

try and figure out what the numbers on the tags could mean

continue logging new Ebay finds

#he speaks#garfield plush#garfield#garfposting#garfield the cat#garfield cat#garfield collection#garfield collector#toy collector#collection

Text

How to Use Web Scraping for MAP Monitoring Automation?

As e-commerce keeps growing, online marketplaces are expanding and more branded products are being sold by resellers and retailers worldwide. Some brands may not notice that certain resellers and sellers sell their products at lower prices to attract customers, which has a negative impact on the brand itself.

To maintain its reputation, a brand can use a MAP policy as an agreement with its retailers and resellers.

MAP – The Concept

Minimum Advertised Pricing (MAP) is a pre-agreed minimum price for specific products that authorized resellers and retailers agree not to advertise or sell below.

For example, if a shoe brand sets the MAP for a product at $100, all approved resellers and retailers, whether in online marketplaces or brick-and-mortar stores, are bound not to price it below $100. Retailers and resellers that do will be penalized according to the signed MAP agreement.

A MAP policy typically offers the following benefits:

Fair pricing and competition among resellers and retailers

Maintaining brand value and awareness

Preventing underpricing and price wars, protecting profit margins

Why Is Enforcing a MAP Policy Tough for Brands?

1. Franchise stores

Franchise stores are among the most common ways to resell a brand's products. To monitor MAP violations by these storefront retailers, a brand can simply use its financial systems to track transactions efficiently.

Even so, a brand still can’t ensure that every product sold through franchise stores is 100% genuine; that may require additional manual work.

2. Online Market Resellers

Research by Web Retailer gives a basic overview of the world’s leading online marketplaces: there are over 150 major all-category marketplaces across the globe, plus countless niche ones.

Online retailers selling products in various online marketplaces

Many online retailers choose to sell on multiple marketplaces, which brings more traffic and other benefits.

Unauthorized resellers

Besides sellers that have approval, some individual resellers deal in copycat products that a brand might not even be aware of.

As a result, monitoring the prices of even a few products across many online marketplaces at the same time can be very difficult for a brand.

How to Find MAP Violations and Defend Your Brand in Online Markets?

For traditional physical retail, a brand needs a business system that records sales data to achieve MAP monitoring. For online marketplace resellers, we would like to introduce a widely used but often overlooked technique, data scraping, which can efficiently help brands with MAP monitoring.

So how can brands use data scraping to detect whether any reseller violates a MAP policy?

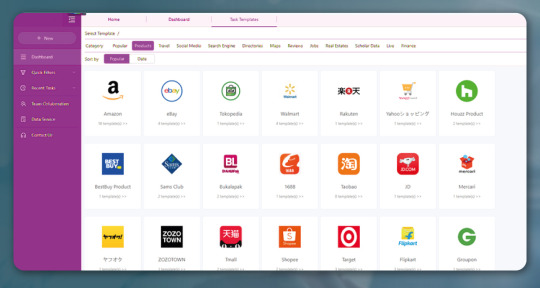

Let’s assume that one online reseller is selling products on 10 different online marketplaces: Amazon, Target, JD, Taobao, eBay, Rakuten, Walmart, Tmall, Flipkart, and Tokopedia.

Step 1: Identify the data you need.

For MAP monitoring, the data needed are simply product information and pricing.

Step 2: Choose a suitable technique for building the data scrapers.

We need 10 data scrapers, one per marketplace, that collect data at a set frequency.

A programmer would need to write 10 scripts to do this. However, the drawbacks are:

Scrapers are hard to maintain when a website’s layout changes.

Handling IP rotation, CAPTCHAs, and reCAPTCHAs is difficult.

An alternative is to use a data scraping tool such as the one made by Actowiz Solutions, which both coders and non-coders can use for web scraping.

2. Automatic crawler: the latest Actowiz Solutions scrapers can also detect data automatically and create a crawler within minutes.

Step 3: Run the scrapers to collect data from the 10 online marketplaces. MAP monitoring requires scraping data at a set frequency. If you write your scrapers in a programming language, you may have to start them manually each day, or you can build a scheduling function into the script. If you use a web scraping tool like Actowiz Solutions, you can set the scraping schedule in the tool instead.
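For the scripted route in Step 3, a built-in daily schedule might look like the minimal sketch below, which uses only the Python standard library. `run_daily` and `next_daily_run` are hypothetical names. `job` stands in for whatever function runs the 10 scrapers. A system scheduler such as cron is a common alternative to a long-running script.

```python
import time
from datetime import datetime, timedelta
from typing import Callable, Optional


def next_daily_run(now: datetime, hour: int, minute: int = 0) -> datetime:
    """Return the next time at hour:minute that is strictly after `now`."""
    candidate = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= now:
        candidate += timedelta(days=1)  # today's slot has passed; use tomorrow's
    return candidate


def run_daily(job: Callable[[], None], hour: int, minute: int = 0,
              max_runs: Optional[int] = None) -> None:
    """Sleep until each daily slot, then call `job`; stop after `max_runs` if given."""
    runs = 0
    while max_runs is None or runs < max_runs:
        now = datetime.now()
        wait = (next_daily_run(now, hour, minute) - now).total_seconds()
        time.sleep(wait)
        job()
        runs += 1
```

For example, `run_daily(scrape_all_marketplaces, hour=6)` would run a hypothetical `scrape_all_marketplaces` function every morning at 06:00 local time.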

Step 4: Once you have the data, go through it and check each listing’s price against the MAP. When you spot a violation, you can respond to it immediately.
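Step 4 comes down to comparing each scraped price with the agreed MAP. The minimal sketch below assumes that each scraper returns records with a marketplace, seller, product ID, and price. The `Listing` fields, product IDs, and seller names are all hypothetical. Prices are stored as `Decimal`, so values like 99.99 compare exactly.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class Listing:
    marketplace: str
    seller: str
    product_id: str
    price: Decimal


def find_map_violations(listings: Iterable[Listing],
                        map_prices: Dict[str, Decimal]) -> List[Listing]:
    """Return listings advertised below the MAP for their product."""
    violations = []
    for listing in listings:
        map_price = map_prices.get(listing.product_id)
        # Products without an agreed MAP cannot be in violation.
        if map_price is not None and listing.price < map_price:
            violations.append(listing)
    return violations


if __name__ == "__main__":
    map_prices = {"SHOE-A": Decimal("100.00")}  # the $100 shoe example above
    scraped = [
        Listing("eBay", "seller-1", "SHOE-A", Decimal("94.99")),
        Listing("Amazon", "seller-2", "SHOE-A", Decimal("100.00")),
        Listing("Walmart", "seller-3", "SHOE-B", Decimal("20.00")),
    ]
    for v in find_map_violations(scraped, map_prices):
        print(f"{v.marketplace}: {v.seller} lists {v.product_id} at ${v.price}")
```

A listing exactly at the MAP is treated as compliant. Only prices strictly below it are flagged.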

Conclusion

MAP is very important for brands. It helps protect brand reputation, prevents price wars among resellers and retailers, and leaves more room for marketing. There are many ways to deal with MAP violations, and you can find thousands of ideas online within seconds. For MAP monitoring, web extraction is one of the most cost-effective ways to track prices across online marketplaces, and Actowiz Solutions is particularly helpful here.

For more information, contact Actowiz Solutions now! You can also reach us for all your mobile app scraping and web scraping service requirements.

Text

Unlock Business Insights with Web Scraping eBay.co.uk Product Listings by DataScrapingServices.com

In today's competitive eCommerce environment, businesses need reliable data to stay ahead. One powerful way to achieve this is through web scraping eBay.co.uk product listings. By extracting essential information from eBay's vast marketplace, businesses can gain valuable insights into market trends, competitor pricing, and customer preferences. At DataScrapingServices.com, we offer comprehensive web scraping solutions that allow businesses to tap into this rich data source efficiently.

Web Scraping eBay.co.uk Product Listings enables businesses to access critical product data, including pricing, availability, customer reviews, and seller details. At DataScrapingServices.com, we offer tailored solutions to extract this information efficiently, helping companies stay competitive in the fast-paced eCommerce landscape. By leveraging real-time data from eBay.co.uk, businesses can optimize pricing strategies, monitor competitor products, and gain valuable market insights. Whether you're looking to analyze customer preferences or track market trends, our web scraping services provide the actionable data needed to make informed business decisions.

Key Data Fields

With our eBay.co.uk product scraping, you can access:

1. Product titles and descriptions

2. Pricing information (including discounts and offers)

3. Product availability and stock levels

4. Seller details and reputation scores

5. Shipping options and costs

6. Customer reviews and ratings

7. Product images

8. Item specifications (e.g., size, color, features)

9. Sales history and volume

10. Relevant categories and tags
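The minimal sketch below shows how a few of these fields might be pulled out of a listing page with Python's built-in `html.parser`. The HTML snippet and its class names (`item-title`, `item-price`, `item-shipping`) are hypothetical. eBay's real markup differs and changes over time, so mappings like these need ongoing maintenance.

```python
import re
from decimal import Decimal
from html.parser import HTMLParser
from typing import Dict, Optional

# Hypothetical CSS classes mapped to output field names.
FIELD_CLASSES = {"item-title": "title", "item-price": "price", "item-shipping": "shipping"}


class ListingParser(HTMLParser):
    """Collect the text of elements whose class matches FIELD_CLASSES (flat markup only)."""

    def __init__(self) -> None:
        super().__init__()
        self.fields: Dict[str, str] = {}
        self._current: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for cls in classes:
            if cls in FIELD_CLASSES:
                self._current = FIELD_CLASSES[cls]

    def handle_endtag(self, tag):
        self._current = None  # assumes field elements contain no nested tags

    def handle_data(self, data):
        if self._current and data.strip():
            self.fields[self._current] = self.fields.get(self._current, "") + data.strip()


def parse_gbp(text: str) -> Optional[Decimal]:
    """Turn a UK price string such as '£1,299.99' into Decimal('1299.99')."""
    digits = re.sub(r"[^0-9.]", "", text)
    return Decimal(digits) if digits else None


SAMPLE = (
    '<div class="listing"><h3 class="item-title">Garfield plush toy</h3>'
    '<span class="item-price">£12.99</span>'
    '<span class="item-shipping">Free postage</span></div>'
)

if __name__ == "__main__":
    parser = ListingParser()
    parser.feed(SAMPLE)
    parser.close()
    print(parser.fields, parse_gbp(parser.fields["price"]))
```

The price helper strips everything except digits and the decimal point, which suits UK-style prices. Other locales, such as "12,99 €", would need their own rule.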

What We Offer

Our eBay.co.uk product listing extraction service provides detailed information on product titles, descriptions, pricing, availability, seller details, shipping costs, and even customer reviews. We tailor our scraping services to meet specific business needs, ensuring you get the exact data that matters most for your strategy. Whether you're looking to track competitor prices, monitor product availability, or analyze customer reviews, our team has you covered.

Benefits for Your Business

By leveraging web scraping of eBay.co.uk product listings, businesses can enhance their decision-making process. Competitor analysis becomes more efficient, enabling companies to adjust their pricing strategies or identify product gaps in the market. Sales teams can use the data to focus on best-selling products, while marketing teams can gain insights into customer preferences by analyzing product reviews.

Moreover, web scraping eBay product listings allows for real-time data collection, ensuring you’re always up to date with the latest market trends and fluctuations. This data can be instrumental for businesses in pricing optimization, inventory management, and identifying potential market opportunities.

Best Web Scraping Services for eBay.co.uk Product Listings in the UK:

Liverpool, Dudley, Cardiff, Belfast, Northampton, Coventry, Portsmouth, Birmingham, Newcastle upon Tyne, Glasgow, Wolverhampton, Preston, Derby, Hull, Stoke-on-Trent, Luton, Swansea, Plymouth, Sheffield, Bristol, Leeds, Leicester, Brighton, London, Southampton, Edinburgh, Nottingham, Manchester and Aberdeen.

Best eCommerce Data Scraping Services Provider

Amazon.ca Product Information Scraping

Marks & Spencer Product Details Scraping

Amazon Product Price Scraping

Retail Website Data Scraping Services

Tesco Product Details Scraping

Home Depot Product Listing Scraping

Online Fashion Store Data Extraction

Extracting Product Information from Kogan

PriceGrabber Product Pricing Scraping

Asda UK Product Details Scraping

Conclusion

At DataScrapingServices.com, our goal is to provide you with the most accurate and relevant data possible, empowering your business to make informed decisions. By utilizing our eBay.co.uk product listing scraping services, you’ll be equipped with the data needed to excel in the competitive world of eCommerce. Stay ahead of the game and unlock new growth opportunities with the power of data.

Contact us today to get started: Datascrapingservices.com

#ebayproductlistingscraping#webscrapingebayproductprices#ecommerceproductlistingextraction#productdataextraction#marketanalysis#competitorinsights#businessgrowth#datascrapingservices#productpricingscraping#datadriven

Text

Stupid AI and voice acting

I feel like this is talked about a lot in regard to digital image artists or animators, but AI isn’t just an issue for them. It literally threatens the entire art industry as a whole. With companies being greedy to the point of preferring that your mother dive head-first into an active wood chipper over having to pay someone a living wage, and now that AI is worming its way into seemingly every single FUCKING thing ever (not even my god-forsaken washing machines are safe, doomed to have AI wrapped around their necks like a damn yoke; I reblogged something related just under this post on my blog), is it any wonder that they see people as nothing more than numbers in a model, or pawns to be used up and abandoned when they’re no longer useful? It’s sickening. I hate it. I hate it so much. And it’s not even just AI; it’s the spirit of the entire thing that makes me so excited for class consciousness.

I specifically wanted to highlight voice acting, because it’s particularly in the path of AI’s advancement. All of those TikTok brainrot videos, of which there are innumerable, have AI voices. Now, text-to-speech has been around forever; robot voices reading Reddit Am I The Asshole stories are old news. But now you’ve got people waking up to find that their voices have been scraped from their work, turned into an AI, and made to say shit they’ve never said. I remember reading one story where the VA for Beidou in Genshin Impact had their work stolen and used to make a Beidou AI voice and, subsequently, TikTok porn, which the VA stumbled upon in the wild, like finding the thing that got stolen from you on eBay. The real kicker is that the voice actor can really only cry about it to ElevenLabs, which, if you hadn’t guessed it already, now has historical precedent for being a company that sucks! Yippee! Forbes made an article on it, but it has a paywall, so definitely don’t mash ctrl + p as you click the link to turn it into a PDF you can read, because it might not work, and if it doesn’t, closing the link and trying again but faster than the paywall wouldn’t help at all. Nope. www.forbes.com/sites/rashishrivastava/2023/10/09/keep-your-paws-off-my-voice-voice-actors-worry-generative-ai-will-steal-their-livelihoods/

Like, as a tool? Fine. That’s completely fine. Why shouldn’t AI compile all my data for me into a neat little package I can send to the editing team? Apart from environmental concerns (which are valid but not the focus, apparently), I wouldn’t mind if AI helped a character someone’s voicing sound slightly different, older or younger or things like that. Or in cases where you have a game and you want the player to be able to actually just talk to the characters, AI wouldn’t be a horrible solution. Games like Waltz of the Wizard, where you can talk to Skully, don’t use AI; instead, they have a bunch of funny reactions to things the player says or does.

Very cool idea. But, to any game devs out there, make sure you have plenty of actual human voice lines, because if Baldur’s Gate can do half a million of them, you can too. Even if it’s to a lesser degree. You don’t need that many, but you SHOULD have a lot if you’re going to let the player talk to the NPCs and say whatever they want.

But it’s crazy how instead of using AI to make games better, you instead hear about how voices are getting stolen and used without a modicum of consent.

Text

Major Applications of UPC Product Code Data Scraping Services

What Are the Major Applications of UPC Product Code Data Scraping Services?

In today's digital marketplace, data is a vital asset that offers significant competitive advantages for businesses. Among the critical data points for retailers, manufacturers, and e-commerce platforms is the Universal Product Code (UPC), a unique identifier assigned to each product. UPC codes are essential for inventory management, sales tracking, and pricing strategies. As the demand for data continues to grow, UPC Product Code Data Scraping Services have emerged as a powerful method for extracting UPC codes from various online sources. These services enable businesses to efficiently gather accurate product information from e-commerce platforms, manufacturer websites, and industry databases. This article explores the concept of web scraping for UPC codes, highlighting its benefits, applications, and the ethical considerations that organizations must consider when employing such techniques. By leveraging UPC Product Code Data Scraping Services, businesses can enhance their data-driven decision-making and stay ahead in a competitive market.

Understanding UPC Codes

The Universal Product Code (UPC) is a 12-digit barcode used to uniquely identify products in retail settings. Introduced in the 1970s, UPC codes have become the standard for tracking trade items in stores and warehouses. Each UPC is unique to a specific product, enabling retailers to manage inventory efficiently, streamline sales processes, and analyze consumer behavior.
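The last of the 12 digits is a check digit computed from the first 11. That makes it easy to reject codes that were mistyped or mis-scraped. The minimal sketch below implements the standard UPC-A check-digit rule, in which digits in odd positions are weighted by 3. The function names are illustrative.

```python
DIGITS = set("0123456789")


def upc_check_digit(first_eleven: str) -> int:
    """Compute the UPC-A check digit for the first 11 digits of a code."""
    if len(first_eleven) != 11 or not set(first_eleven) <= DIGITS:
        raise ValueError("expected exactly 11 ASCII digits")
    digits = [int(c) for c in first_eleven]
    odd_sum = sum(digits[0::2])   # positions 1, 3, 5, 7, 9, 11
    even_sum = sum(digits[1::2])  # positions 2, 4, 6, 8, 10
    return (10 - (3 * odd_sum + even_sum) % 10) % 10


def is_valid_upc(code: str) -> bool:
    """True if `code` is 12 digits and its last digit matches the check digit."""
    if len(code) != 12 or not set(code) <= DIGITS:
        return False
    return upc_check_digit(code[:11]) == int(code[11])


if __name__ == "__main__":
    print(is_valid_upc("036000291452"))  # True
    print(is_valid_upc("036000291453"))  # False: last digit altered
```

For 036000291452, the odd-position digits sum to 14 and the even-position digits sum to 16. That gives 3 × 14 + 16 = 58, so the check digit is (10 − 8) mod 10 = 2.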

For businesses, UPC codes are critical for various functions, including:

1. Inventory Management: UPC codes allow retailers to track stock levels, manage reordering processes, and reduce the risk of stockouts or overstock situations.

2. Sales Analysis: By linking sales data to UPC codes, businesses can analyze trends, identify top-selling products, and make data-driven decisions to improve sales performance.

3. Pricing Strategies: UPC codes facilitate competitive pricing analysis, enabling businesses to monitor competitors' prices and adjust their strategies accordingly.

4. E-commerce Listings: Online retailers use UPC codes to create accurate product listings and enhance customer searchability.

The Role of Web Scraping in UPC Code Extraction

Web scraping refers to the automated process of extracting data from websites. It employs various techniques and tools to collect structured data from HTML pages, which can then be organized and analyzed for business insights. In the context of UPC codes, web scraping is particularly useful for gathering product information from online retailers, manufacturer websites, and industry databases.

1. Automated Data Collection: Web scraping enables businesses to extract UPC product codes quickly and efficiently from various online sources. Automated tools can navigate websites, gather information, and compile data into structured formats. This process significantly reduces the time and effort required for manual data entry, allowing companies to focus on strategic initiatives rather than tedious tasks.

2. Access to Comprehensive Product Information: Using these techniques, businesses can scrape retail product data alongside the UPC codes themselves, including product descriptions, prices, and availability. This comprehensive data collection provides a holistic view of products, enabling retailers to make informed decisions regarding inventory management and pricing strategies.

3. Efficient Extraction from E-commerce Sites: Extracting UPC codes from e-commerce sites like Amazon, eBay, and Walmart is crucial for maintaining competitive pricing and accurate product listings. Web scraping tools can systematically gather UPC codes from these platforms, ensuring businesses can access up-to-date information on product offerings and trends.

4. Enhanced Market Analysis: Web scraping facilitates ecommerce product data collection by providing insights into competitor offerings, pricing strategies, and market trends. Businesses can analyze the scraped data to identify top-selling products, monitor competitor pricing, and adjust their strategies accordingly, thereby gaining a competitive advantage in the marketplace.

5. Improved Data Accuracy: The use of web scraping tools for UPC code extraction ensures high levels of data accuracy. By automating the data collection process, businesses minimize the risks associated with manual entry errors. Accurate UPC codes are crucial for effective inventory management and sales tracking, ultimately improving operational efficiency.

Web scraping can be leveraged to extract UPC codes from various sources, including:

Retail Websites: E-commerce platforms like Amazon, eBay, and Walmart often display UPC codes alongside product listings, making them a valuable resource for data extraction.

Manufacturer Sites: Many manufacturers provide detailed product information on their websites, including UPC codes, specifications, and descriptions.

Product Databases: Various online databases and repositories aggregate product information, including UPC codes, which can benefit businesses looking to enrich their product catalogs.

Benefits of Web Scraping for UPC Codes

Web scraping e-commerce product data offers numerous advantages for businesses in various sectors:

Data Accuracy and Completeness: Manual data entry is often prone to errors, leading to inaccuracies that can have significant repercussions for businesses. Web scraping automates the data extraction process, minimizing the risk of human error and ensuring that the UPC codes collected are accurate and complete. This level of precision is essential when working with eCommerce product datasets, as even minor inaccuracies can disrupt inventory management and sales tracking.

Cost-Effectiveness: Web scraping eliminates costly manual research and data entry. By automating the extraction process, businesses can save time and resources, allowing them to focus on strategic initiatives rather than tedious data collection tasks. eCommerce product data scrapers can further enhance cost-effectiveness, as these tools streamline data collection.

Speed and Efficiency: Web scraping can extract vast amounts of data quickly. Businesses can rapidly gather UPC codes from multiple sources, keeping them up to date on market trends, competitor offerings, and pricing strategies. The ability to process large eCommerce product datasets efficiently ensures that companies remain agile and responsive to market changes.

Market Insights: By scraping UPC codes and related product information, businesses can gain valuable insights into market trends and consumer preferences. This data can inform product development, marketing strategies, and inventory management. Analyzing eCommerce product datasets gives businesses a clearer understanding of customer behavior and market dynamics.

Competitive Advantage: With accurate and up-to-date UPC code data, businesses can make informed decisions that give them a competitive edge. Understanding product availability, pricing, and market trends enables companies to respond quickly to changing consumer demands. Leveraging eCommerce product data scrapers allows businesses to stay ahead of competitors and adapt their strategies in a rapidly evolving marketplace.

Applications of UPC Code Data

The data extracted through web scraping can be utilized in various applications across different industries:

1. E-commerce Optimization: For online retailers, having accurate UPC codes is essential for product listings. Scraping UPC codes from competitor sites allows retailers to ensure their product offerings are competitive and to enhance their SEO strategies by optimizing product descriptions.

2. Inventory Management: Retailers can use scraped UPC codes to analyze stock levels and product performance. Businesses can identify top-performing products by linking UPC data with sales metrics and optimize their inventory accordingly.

3. Price Monitoring: Businesses can monitor competitors' prices by scraping UPC codes and associated pricing data. This allows them to adjust their pricing strategies dynamically, ensuring they remain competitive.

4. Market Research: Data extracted from various sources can provide valuable insights into market trends, consumer preferences, and emerging products. Businesses can use this information to inform their product development and marketing strategies.

5. Integration with ERP Systems: Scraped UPCs can be integrated into Enterprise Resource Planning (ERP) systems, streamlining inventory management and sales tracking. This integration helps businesses maintain accurate records and improve operational efficiency.
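For price monitoring (item 3 above), the UPC identifies the same product across sites, so it can serve as the join key. The minimal sketch below assumes that scraped offers arrive as `(upc, seller, price)` tuples. The function names are hypothetical, and the sellers and prices in the test data are made up.

```python
from decimal import Decimal
from typing import Dict, Iterable, Tuple

Offer = Tuple[str, str, Decimal]  # (upc, seller, price)


def lowest_offers(offers: Iterable[Offer]) -> Dict[str, Tuple[str, Decimal]]:
    """Map each UPC to the (seller, price) of its cheapest offer."""
    best: Dict[str, Tuple[str, Decimal]] = {}
    for upc, seller, price in offers:
        if upc not in best or price < best[upc][1]:
            best[upc] = (seller, price)
    return best


def price_gaps(own_prices: Dict[str, Decimal],
               offers: Iterable[Offer]) -> Dict[str, Decimal]:
    """Our price minus the cheapest competitor's, per UPC (positive = we are dearer)."""
    cheapest = lowest_offers(offers)
    return {upc: own - cheapest[upc][1]
            for upc, own in own_prices.items() if upc in cheapest}
```

UPCs that no competitor lists are left out of the gap report rather than being treated as zero.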

Ethical and Legal Considerations While Scraping UPC Codes

While web scraping offers numerous benefits, businesses must approach this practice cautiously and honestly. Several ethical and legal considerations should be taken into account:

1. Terms of Service Compliance

Many websites have terms of service that explicitly prohibit scraping. Businesses must review these terms before proceeding with data extraction. Violating a website's terms can result in legal action or a ban from the site.

2. Respect for Copyright

Scraping copyrighted or proprietary data can lead to legal disputes. Businesses should only collect publicly accessible data and not infringe on intellectual property rights.

3. Data Privacy Regulations

Data privacy laws, such as the General Data Protection Regulation (GDPR) in the European Union, govern how businesses can collect and use personal data. While UPC codes do not contain personal information, businesses should be aware of the broader implications of data scraping and comply with relevant regulations.

4. Responsible Data Use

Even when data is collected legally, businesses should consider how they use it. Responsible data use means avoiding practices that could harm consumers or competitors, such as price-fixing or anti-competitive behavior.
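On the terms-of-service point (item 1), the terms themselves have to be read by a person. A site's `robots.txt` crawl rules, however, can be checked automatically before a page is fetched. This is a complementary step, not a substitute for reviewing the terms. The minimal sketch below uses Python's standard `urllib.robotparser`, and the rules, domain, and bot name in it are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice, fetch https://<site>/robots.txt
# (RobotFileParser.set_url() followed by read() does this).
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /checkout/
"""


def allowed_to_fetch(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules permit `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in ("https://shop.example.com/products/123",
                "https://shop.example.com/checkout/cart"):
        print(url, allowed_to_fetch(SAMPLE_ROBOTS, "ExampleUPCBot", url))
```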

Conclusion

Web scraping for product UPC codes presents a valuable opportunity for businesses to access critical data that can inform decision-making and enhance operational efficiency. By automating the data collection process, companies can gain insights into inventory management, pricing strategies, and market trends, all while minimizing costs and improving accuracy.

However, it is essential to approach web scraping with a focus on ethical considerations and legal compliance. By adhering to best practices and respecting the rights of data owners, businesses can harness the power of web scraping to gain a competitive edge in today's dynamic marketplace.

As the landscape of e-commerce and retail continues to evolve, the importance of accurate UPC code data will only grow. Businesses that leverage web scraping effectively will be better positioned to adapt to changes in consumer preferences and market dynamics, ensuring their success in the long run.

Experience top-notch web scraping service and mobile app scraping solutions with iWeb Data Scraping. Our skilled team excels in extracting various data sets, including retail store locations and beyond. Connect with us today to learn how our customized services can address your unique project needs, delivering the highest efficiency and dependability for all your data requirements.

Source: https://www.iwebdatascraping.com/major-applications-of-upc-product-code-data-scraping-services.php

#UPCProductCodeDataScrapingServices#ExtractUPCProductCodes#EcommerceProductDatasets#EcommerceProductDataCollection#ECommerceProductDataScrapers

Text

Best ISP Proxies: What They Are and Why You Need Them

In today’s digital age, proxies have become essential for individuals and businesses looking to ensure privacy, security, and efficient data management. Among the various types of proxies available, ISP proxies have gained significant popularity due to their unique blend of performance and anonymity. Let’s explore what ISP proxies are and why they stand out as the best option for certain use cases.

What Are ISP Proxies?

ISP proxies, or Internet Service Provider proxies, are residential IP addresses assigned by legitimate ISPs but hosted on data center servers. This hybrid nature allows ISP proxies to combine the high speed and scalability of data center proxies with the authenticity and low detection rates of residential proxies.

When using an ISP proxy, websites and online platforms will recognize the IP as being from a residential user, even though it is operated on powerful data center infrastructure. This provides users with a unique advantage, especially when trying to balance speed and invisibility.

Key Benefits of ISP Proxies

High Anonymity: ISP proxies mask your real IP address with one from a residential range, making it appear that requests are coming from genuine users. This reduces the chances of getting flagged or banned by websites that scrutinize proxy usage.

Increased Speed: Unlike traditional residential proxies that rely on potentially slower, individual user connections, ISP proxies run on data centers, offering faster and more reliable speeds. This makes them ideal for high-volume tasks such as web scraping, automation, and managing multiple accounts.

Bypass Geo-Restrictions: ISP proxies provide access to specific geographic locations, enabling users to bypass regional content restrictions. Whether for streaming, gaming, or market research, they offer the flexibility needed to access content globally.

Enhanced Reliability: ISP proxies are more reliable than residential proxies, which can suffer from inconsistent speeds or connection dropouts due to their reliance on residential users’ internet services.

Lower Ban Rates: Since ISP proxies are perceived as residential connections, they are less likely to be flagged by websites for suspicious activity. This makes them ideal for tasks requiring long-term proxy use, such as managing social media accounts or engaging in e-commerce activities.

Use Cases for ISP Proxies

Web Scraping: For businesses and researchers collecting large amounts of data, ISP proxies offer the speed and anonymity required to efficiently scrape websites without being blocked.

SEO Monitoring: Digital marketers can use ISP proxies to gather accurate information from different regions, improving their SEO strategies without triggering security measures from search engines.

E-Commerce Management: Managing multiple accounts or listings on platforms like Amazon or eBay can be difficult without proxies. ISP proxies allow users to manage numerous accounts from different locations without getting flagged for suspicious activity.

Ad Verification: Businesses can verify how their ads are being displayed across different locations using ISP proxies, ensuring they are served correctly to the intended audience.

Why Choose Proxiware for ISP Proxies?

When it comes to ISP proxies, Proxiware offers some of the best solutions in the market. With a focus on performance, anonymity, and scalability, Proxiware ensures that its ISP proxies cater to various use cases, from web scraping to digital marketing and beyond.

Fast and Reliable Connections: Proxiware’s ISP proxies offer blazing fast speeds thanks to their data center backbone, making them perfect for tasks that require both speed and stealth.

Global Coverage: With IP addresses from multiple countries, Proxiware allows users to access region-specific content seamlessly, ensuring you can target the markets you need.

Cost-Effective Solutions: Proxiware provides competitive pricing plans, ensuring you get the best value for your money while enjoying top-tier proxy performance.

Conclusion

If you’re looking for the best proxy solution that combines speed, reliability, and anonymity, ISP proxies are the way to go. Whether you’re managing social media accounts, scraping websites, or performing ad verification, ISP proxies provide the perfect balance of performance and invisibility. Explore Proxiware’s offerings today and take your proxy usage to the next level.


Who Uses Proxy IPs Most, and Which Category Do You Belong To?

Proxy IPs have become a common tool for Internet operations, and as information technology becomes more global, their applications keep expanding. So which groups of people use proxy IPs most often?

1. Data Scraping and Web Crawling Engineers

Data scraping and web crawling are the most common applications of proxy IPs. Crawler engineers use proxy IPs to get past websites' anti-crawler mechanisms and collect data efficiently at scale. Switching between proxy IPs lets a crawler run large-scale jobs without exposing its own address, and it avoids the blocks that come from sending many requests from the same IP. For enterprises or individuals engaged in market research and competitive analysis, proxy IPs are a powerful tool.
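One common way to implement this IP switching is simple round-robin rotation with a retry when a request looks blocked. The sketch below uses only the standard library. The proxy URLs are placeholders, and treating HTTP 403 and 429 as "blocked, try the next proxy" is an assumption, since sites signal blocks in different ways.

```python
import itertools
import urllib.error
import urllib.request


class ProxyRotator:
    """Hand out proxies from a fixed pool in round-robin order."""

    def __init__(self, proxy_urls: list[str]) -> None:
        if not proxy_urls:
            raise ValueError("proxy pool must not be empty")
        self._cycle = itertools.cycle(proxy_urls)

    def next_proxy(self) -> str:
        return next(self._cycle)

    def next_opener(self) -> urllib.request.OpenerDirector:
        # Each call binds a fresh opener to the next proxy in the pool.
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)


def fetch_with_rotation(rotator: ProxyRotator, url: str, attempts: int = 3, timeout: float = 10.0) -> bytes:
    """Try url through successive proxies, moving on when one looks blocked."""
    last_error = None
    for _ in range(attempts):
        opener = rotator.next_opener()
        try:
            with opener.open(url, timeout=timeout) as response:
                return response.read()
        except urllib.error.HTTPError as err:
            # Assumption: 403/429 mean "this IP is blocked or throttled".
            if err.code not in (403, 429):
                raise
            last_error = err
        except urllib.error.URLError as err:
            last_error = err  # proxy unreachable; try the next one
    raise RuntimeError(f"all {attempts} attempts failed") from last_error
```

Round-robin is the simplest policy; paid proxy services often rotate on their side instead, in which case a single gateway address is enough.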

2. Cross-border e-commerce and foreign trade practitioners

Cross-border e-commerce and foreign trade practitioners are also heavy users of proxy IP. In cross-border e-commerce operations, merchants need to frequently log in to e-commerce platforms in different countries, such as Amazon, eBay, etc., and monitor market trends in multiple countries at the same time. By using residential proxies, they can easily switch to the IP address of the target country for accurate localization and avoid account risks caused by mismatched IP addresses.

3. Overseas Ad Placement and Promoters

When placing ads overseas, proxy IPs help advertising practitioners operate their accounts more flexibly, manage ad campaigns, and monitor data. Especially when advertising globally on Facebook, Google Ads, and other platforms, proxy IPs can work around regional restrictions and IP blocking, keeping advertising accounts safe and running smoothly.

4. Social media operators

Social media operators, especially those engaged in account management, usually need to operate multiple accounts at the same time. While social platforms have strict restrictions on logging into multiple accounts with the same IP, the use of proxy IPs can effectively circumvent such restrictions. Especially on social platforms such as Facebook, Instagram, Twitter, etc., proxy IPs can help with multi-account operations, maintain IP diversity, and reduce the risk of account blocking.

5. Gamers and game boosters

Proxy IPs are also crucial for some international gamers and game boosters. Because some games have servers distributed around the world and network latency is high in certain regions, proxy IPs can help gamers optimize their network connection and improve their gaming experience. By switching to IP addresses in different countries, gamers can also unlock region-specific game content and enjoy more of the game.

6. Security and Privacy Protection Users

Some users place great importance on network privacy and security, and they hide their real IP address behind a proxy IP to avoid being attacked or monitored online. Most of these users work in network security or are strongly aware of personal information protection, and proxy IPs can effectively enhance their privacy and security.

Proxy IPs are used by a wide range of people, and anyone can use them to improve the efficiency and security of their work and entertainment. If you need proxy IP support too, 711Proxy provides efficient and secure global proxy services to help you easily cope with all kinds of network needs!


How to Scrape Product Reviews from eCommerce Sites?

Know more: https://www.datazivot.com/scrape-product-reviews-from-ecommerce-sites.php

Introduction

In the digital age, eCommerce sites have become treasure troves of data, offering insights into customer preferences, product performance, and market trends. One of the most valuable data types available on these platforms is product reviews. Scraping product review data from eCommerce sites can provide businesses with detailed customer feedback, helping them enhance their products and services. This blog will guide you through the process of scraping review data from eCommerce sites, exploring the tools, techniques, and best practices involved.

Why Scrape Product Reviews from eCommerce Sites?

Scraping product reviews from eCommerce sites is essential for several reasons:

Customer Insights: Reviews provide direct feedback from customers, offering insights into their preferences, likes, dislikes, and suggestions.

Product Improvement: By analyzing reviews, businesses can identify common issues and areas for improvement in their products.

Competitive Analysis: Scraping reviews from competitor products helps in understanding market trends and customer expectations.

Marketing Strategies: Positive reviews can be leveraged in marketing campaigns to build trust and attract more customers.

Sentiment Analysis: Understanding the overall sentiment of reviews helps in gauging customer satisfaction and brand perception.

Tools for Scraping eCommerce Review Data

Several tools and libraries can help you scrape product reviews from eCommerce sites. Here are some popular options:

BeautifulSoup: A Python library designed to parse HTML and XML documents. It generates parse trees from page source code, enabling easy data extraction.

Scrapy: An open-source web crawling framework for Python. It provides a powerful set of tools for extracting data from websites.

Selenium: A web testing library that can be used for automating web browser interactions. It's useful for scraping JavaScript-heavy websites.

Puppeteer: A Node.js library that gives a higher-level API to control Chromium or headless Chrome browsers, making it ideal for scraping dynamic content.

Steps to Scrape Product Reviews from eCommerce Sites

Step 1: Identify Target eCommerce Sites

First, decide which eCommerce sites you want to scrape. Popular choices include Amazon, eBay, Walmart, and Alibaba. Ensure that scraping these sites complies with their terms of service.

Step 2: Inspect the Website Structure

Before scraping, inspect the webpage structure to identify the HTML elements containing the review data. Most browsers have built-in developer tools that can be accessed by right-clicking on the page and selecting "Inspect" or "Inspect Element."

Step 3: Set Up Your Scraping Environment

Install the necessary libraries and tools. For example, if you're using Python, you can install BeautifulSoup, requests, Scrapy, and Selenium using pip:

pip install beautifulsoup4 requests scrapy selenium

Step 4: Write the Scraping Script

Here's a basic example of how to scrape product reviews from an eCommerce site using BeautifulSoup and requests:
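The original example code is not reproduced on this page. As a stand-in, here is a minimal sketch that uses Python's standard-library `html.parser` and `urllib.request` instead of BeautifulSoup and requests, so it runs without extra installs. The class names (`review`, `review-author`, `review-rating`, `review-text`) are hypothetical; replace them with whatever you find when inspecting the real page in Step 2.

```python
import urllib.request
from html.parser import HTMLParser
from typing import Optional

# Hypothetical class names: replace them with what you find when inspecting the page.
REVIEW_CLASS = "review"
FIELD_CLASSES = {"review-author": "author", "review-rating": "rating", "review-text": "text"}


class ReviewParser(HTMLParser):
    """Build one dict per element whose class list contains REVIEW_CLASS."""

    def __init__(self) -> None:
        super().__init__()
        self.reviews: list[dict[str, str]] = []
        self._current: Optional[dict[str, str]] = None  # review being filled in
        self._field: Optional[str] = None  # field whose text is being captured

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if REVIEW_CLASS in classes:
            self._current = {}
            self.reviews.append(self._current)
        elif self._current is not None:
            for cls in classes:
                if cls in FIELD_CLASSES:
                    self._field = FIELD_CLASSES[cls]
                    self._current[self._field] = ""

    def handle_endtag(self, tag):
        # Simplification: any closing tag ends the current field, so text that
        # follows nested markup (e.g. <b>) inside a field is not captured.
        self._field = None

    def handle_data(self, data):
        if self._current is not None and self._field is not None:
            self._current[self._field] += data.strip()


def parse_reviews(html: str) -> list[dict[str, str]]:
    parser = ReviewParser()
    parser.feed(html)
    parser.close()
    return parser.reviews


def fetch_html(url: str, timeout: float = 10.0) -> str:
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0 (compatible; review-sketch)"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset)
```

Calling `parse_reviews(fetch_html(url))` returns one dictionary per review. With BeautifulSoup the same lookup is usually a `soup.select(...)` call with a CSS selector; the standard-library version trades that convenience for zero dependencies.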

Step 5: Handle Pagination

Most eCommerce sites paginate their reviews, so you'll need to handle pagination to scrape all of them. You can do this by identifying the URL pattern for pagination and looping through all pages:
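A minimal sketch of that loop, assuming the site exposes pages through a query parameter named `page` (a hypothetical pattern; some sites use a different name, a path segment, or a "next" link). `fetch_reviews` is any function you supply that takes a page URL and returns a list of review records.

```python
import time
from typing import Callable
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def page_url(base_url: str, page: int, param: str = "page") -> str:
    """Return base_url with its page query parameter set to `page`."""
    parts = urlsplit(base_url)
    query = dict(parse_qsl(parts.query))
    query[param] = str(page)
    return urlunsplit(parts._replace(query=urlencode(query)))


def scrape_all_pages(
    base_url: str,
    fetch_reviews: Callable[[str], list[dict]],
    max_pages: int = 50,
    delay: float = 1.0,
) -> list[dict]:
    """Walk pages 1..max_pages, stopping at the first page with no reviews."""
    all_reviews: list[dict] = []
    for page in range(1, max_pages + 1):
        reviews = fetch_reviews(page_url(base_url, page))
        if not reviews:  # an empty page usually means we ran past the last one
            break
        all_reviews.extend(reviews)
        time.sleep(delay)  # pause between requests so the server is not hammered
    return all_reviews
```

The `max_pages` cap guards against sites that return the last page again instead of an empty one.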

Step 6: Store the Extracted Data

Once you have extracted the reviews, store them in a structured format such as CSV, JSON, or a database. Here's an example of how to save the data to a CSV file:
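A sketch using Python's built-in `csv` module. The column names are hypothetical and should match the fields your scraper produces.

```python
import csv
import io
from typing import Iterable, TextIO

# Hypothetical column names; match them to the fields your scraper produces.
FIELDNAMES = ["author", "rating", "text"]


def write_reviews_csv(reviews: Iterable[dict], out: TextIO, fieldnames: list[str] = FIELDNAMES) -> int:
    """Write a header plus one row per review to an open text stream; return the row count."""
    # restval="" leaves missing fields empty; extrasaction="ignore" drops unknown keys.
    writer = csv.DictWriter(out, fieldnames=fieldnames, restval="", extrasaction="ignore")
    writer.writeheader()
    count = 0
    for review in reviews:
        writer.writerow(review)
        count += 1
    return count


def reviews_to_csv_text(reviews: Iterable[dict]) -> str:
    """Return the CSV as a string (handy for tests or for uploading elsewhere)."""
    buffer = io.StringIO()
    write_reviews_csv(reviews, buffer)
    return buffer.getvalue()


def save_reviews_csv(reviews: Iterable[dict], path: str) -> int:
    # newline="" lets the csv module control line endings, as its documentation advises.
    with open(path, "w", newline="", encoding="utf-8") as f:
        return write_reviews_csv(reviews, f)
```

The `csv` module quotes fields that contain commas or quotes, which matters for free-text review bodies.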

Step 7: Use a Reviews Scraping API

For more advanced needs or if you prefer not to write your own scraping logic, consider using a Reviews Scraping API. These APIs are designed to handle the complexities of scraping and provide a more reliable way to extract eCommerce review data.

Step 8: Best Practices and Legal Considerations

Respect the site's terms of service: Ensure that your scraping activities comply with the website’s terms of service.

Scrape politely: Add delays between requests to avoid overloading the server.

Handle CAPTCHAs and anti-scraping measures: Be prepared to handle CAPTCHAs and other anti-scraping measures. Using services like ScraperAPI can help.

Monitor for changes: Websites frequently change their structure. Regularly update your scraping scripts to accommodate these changes.

Data privacy: Ensure that you are not scraping any sensitive personal information and respect user privacy.
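Python's standard library can cover two of these practices: checking a site's robots.txt and spacing out requests. This sketch assumes you have already downloaded the robots.txt text. Note that robots.txt is a separate signal from a site's terms of service and does not replace reading them.

```python
import time
import urllib.robotparser


def load_robots(robots_txt: str) -> urllib.robotparser.RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class PoliteScheduler:
    """Skip URLs that robots.txt disallows and keep allowed requests spaced out."""

    def __init__(self, robots: urllib.robotparser.RobotFileParser, user_agent: str, default_delay: float = 2.0) -> None:
        self.robots = robots
        self.user_agent = user_agent
        declared = robots.crawl_delay(user_agent)
        # Use the site's Crawl-delay when it declares one, otherwise our own default.
        self.delay = float(declared) if declared is not None else default_delay
        self._last_request = None  # monotonic timestamp of the previous request

    def allowed(self, url: str) -> bool:
        return self.robots.can_fetch(self.user_agent, url)

    def wait_turn(self) -> None:
        """Sleep just long enough that consecutive requests are at least self.delay apart."""
        now = time.monotonic()
        if self._last_request is not None:
            remaining = self.delay - (now - self._last_request)
            if remaining > 0:
                time.sleep(remaining)
        self._last_request = time.monotonic()
```

In a scraping loop you would call `allowed(url)` first, skip the URL if it returns False, and call `wait_turn()` before each request.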

Conclusion

Scraping product reviews from eCommerce sites can provide valuable insights into customer opinions and market trends. By using the right tools and techniques, you can efficiently extract and analyze review data to enhance your business strategies. Whether you choose to build your own scraper using libraries like BeautifulSoup and Scrapy or leverage a Reviews Scraping API, the key is to approach the task with a clear understanding of the website structure and a commitment to ethical scraping practices.

By following the steps outlined in this guide, you can successfully scrape product reviews from eCommerce sites and gain the competitive edge you need to thrive in today's digital marketplace. Trust Datazivot to help you unlock the full potential of review data and transform it into actionable insights for your business. Contact us today to learn more about our expert scraping services and start leveraging detailed customer feedback for your success.


How Customer Review Collection Brings Profitable Results?

What is the first thing you do when you're about to purchase? Do you rely on the brand's claims or the product's features? Or do you turn to other customers' experiences, seeking their insights and opinions? Knowing the first-hand experience through customer reviews builds trust.

Now, you can transform your role as a buyer, seller, or mediator by going beyond reading a few customer reviews to building a wide-ranging collection of them. The power lies in extracting data from multiple resources, understanding various factors, and leveraging this knowledge to streamline your processes and efficiently bring quality returns.

This content will equip you with secret strategies for converting customer review collection into profitable actions to ensure your business's success. We will familiarize you with web scraping customer reviews from multiple sources and how companies optimize their marketing strategies to target potential leads.

What Is Customer Reviews Collection?

Review scraping services make it easy and efficient to retrieve customer review data from various websites and platforms for analysis. They streamline the complete process of collecting useful information and deliver the data in a structured format that serves your goals, giving you the confidence to leverage it for your business's success.

Here are common platforms for scraping customer review data: Amazon, Yelp, Glassdoor, TripAdvisor, Trustpilot, Costco, Google Reviews, Home Depot, Shopee, IKEA, Zara, Flipkart, Lowe's, Zalando, Etsy, BigBasket, Alibaba, AMC Theatres, Walmart, Target, Rakuten, eBay, Best Buy, Wish, and Shein.

Customer review collection can be completed using web scraping tools, programs, or scripts to extract customer reviews from the desired location. This can include various forms of data, such as product ratings, reviews, images, reviewers' names, and other information if required. Collecting and analyzing this data lets you gain insights into customer preferences, product performance, and more.

How Is Customer Reviews Collection Profitable?

Customer reviews describe customers' experiences with specific goods and services, so you can easily understand their pros and cons. Here are some of the ways this data can help your business generate quality returns:

Understand Your Products & Services

With access to structured customer reviews, understanding the positive and negative impacts on the audience becomes more manageable. This allows you to focus on the negative section, make necessary changes, and embrace the positive ones to grow and engage more audiences, inspiring your business's success.

Scraping Competitor Reviews

It is essential to know what you are up against in the market. With a custom review data scraper, you can choose which data to gather, from which sources, and for which period. This lets you examine your competitors' strengths and weaknesses. You can then build strategies to meet customer needs where your competitors fall short and improve your services where they excel. This will ultimately grab the attention of potential users and boost profits efficiently.

Find The Top Selling Products & Services

It helps to know the popular products and services when entering a market outside your target industry. Common platforms for extracting customer reviews of services are Yelp and TripAdvisor, while Amazon, eBay, and Flipkart are common choices for products.

With large, active user bases on each platform, you can analyze data about products and services across different locations, ages, genders, and more. Review scraping services use quality tools and resources to make the extracted data easy to understand.

Improve Your Marketing & Product Strategies

Collecting customer reviews helps you optimize product descriptions and connect with your audience. Analyzing the extracted data can help you focus on customer-centric strategies to promote your products and services.

Also, you can get valuable insights about your team to take unbiased and accurate actions to enhance your business performance. Unlike customer forms, surveys, or other media for collecting customer feedback, product reviews are organic views explaining their experience. Customer reviews are unique in that they are often more detailed and provide a broader perspective, making them a valuable source of information for businesses.

Different Methods To Extract Customer Review Data

There are various methods available to scrape customer review data from multiple resources. Let's look at some of them:

Coding with Libraries

This involves writing code in a programming language such as Python or Java, depending on your expertise, and using selectors such as XPath to locate elements in a page's HTML. You can use custom libraries or readily available ones like Beautiful Soup and Scrapy to parse website code and extract specific elements like ratings, text, and more.

Web Scraping Tools

Many software tools are designed for web scraping customer review data. These tools offer user-friendly interfaces to target website review sections and collect data without any code.

Scraping Review APIs

Some websites offer APIs (Application Programming Interfaces) that provide authorized access to review data. This offers a structured way to collect reviews faster and with less effort.

How Does Web Scraping Work For Customer Reviews Collection?

No matter which method you pick to extract customer review data, it is essential to meet the final target. Here is a standard procedure to collect desired data from multiple websites:

Define Web Pages

Creating a list of pages you need to scrape to gather customer review data is essential. Then, we will send HTTP requests to the target website to fetch the HTML content.

Parse HTML

Our experts will parse the content using libraries after fetching it. The aim is to convert the data into a structured format that is easy to understand.

Extraction

Web scrapers find elements like images, text, links, and more through tags, attributes, or classes. They gather and store this data in a desired format.

Organizing Data

Once you have stored the data in CSV, JSON, or a database for analysis, you can structure it efficiently. Multiple libraries are available to manage data for better visualization.

What To Do With Scraped Customer Review Collection Data?

You know the different methods and reasons for extracting customer review data. We will now give you insights about what to do next after gathering data from review scraping services:

Analysis

Go through your collected data to understand customer sentiments towards a particular resource. This involves analyzing customer reviews, looking for patterns or trends, and categorizing the feedback into positive, negative, or neutral. Having a wide range of information from different locations, platforms, and customers can help you find your business's and competitors' strengths and weaknesses.

For example, you might discover that customers love a particular product feature or are confused by a specific aspect of your service. This allows you to connect with customers and personalize their experience to boost engagement rates.
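As a rough illustration of sorting feedback into positive, negative, and neutral, the sketch below uses star ratings as a stand-in for sentiment. The 1-5 scale, the thresholds (4 and above positive, 2 and below negative, anything in between neutral), and the `product` and `rating` field names are assumptions. Analyzing the review text itself would need a proper sentiment model.

```python
from collections import Counter


def label_from_rating(rating: float) -> str:
    """Map a 1-5 star rating to a coarse sentiment label (an assumed heuristic)."""
    if rating >= 4:
        return "positive"
    if rating <= 2:
        return "negative"
    return "neutral"


def sentiment_breakdown(reviews: list[dict]) -> dict[str, dict[str, int]]:
    """Count positive/neutral/negative reviews per product."""
    counts = {}
    for review in reviews:
        label = label_from_rating(float(review["rating"]))
        counts.setdefault(review["product"], Counter())[label] += 1
    return {product: dict(counter) for product, counter in counts.items()}
```

Comparing these counts across your own products and competitors' products is one simple way to spot the strengths and weaknesses described above.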

Tracking

The market changes constantly, so a custom review data scraper can run extraction continuously, in near real time. This allows you to monitor the latest trends, demands, and updates. You can also identify your business's USPs (unique selling points) and quickly gain customer loyalty.

For example, suppose you have tracked market updates for a particular location over the previous months. You now know which products sell best, the peak ordering times, and more details about the customers. This can help you optimize your promotions and target the right audience for higher chances of conversion.

Strategize

After analyzing and monitoring the data, it is time to implement strategies to scale your business. Focus on the significant segments where customer reviews and opinions have made a difference. This can be a location, time duration, or a popular product with quality services.

For example, if you notice a trend of positive reviews for a particular product feature, you can emphasize that feature in your marketing campaigns. If you see a lot of negative feedback on a specific aspect of your service, you can address it and improve customer satisfaction. This could involve updating your product description, offering additional support for the feature, or adjusting your pricing strategy.

Social Proof

Customer feedback helps optimize marketing strategies and gain the trust of other visitors. Social proof means highlighting positive customer reviews on your apps, websites, or social media channels.

You can demonstrate credibility by showcasing these reviews and letting potential customers make more informed decisions. This becomes an excellent source for new visitors to understand your services and the quality of customer care.

Wrapping It Up!

Whether you plan to scale your business, gain potential leads, understand your company's pros and cons, or gather information about competitors, customer review collection can make the journey more effective.

Web scraping has become a go-to solution for extracting customer review collection data stored in structured form for analysis. Pick the right tools, platforms, and experts to streamline the process. Whether dealing with competitor analysis, marketing, pricing, personalization, customer sentiments, or more, ensure you have a precise output for analysis.

At iWeb Scraping, a trusted provider of web data scraping services, we help you harness the power of customer review collection to boost your business's profits smartly. Data is dynamic and readily available; you need the right resources and expertise, like ours, to convert it into high returns.


eBay Scraper | Scrape eBay product data

RealdataAPI / ebay-product-scraper

Unofficial eBay scraper API to scrape or extract product data from eBay based on keywords or categories. Scrape reviews, prices, product descriptions, images, location, availability, brand, etc. Download extracted data in a structured format and use it in reports, spreadsheets, databases, and applications. The scraper is available in the USA, Canada, Germany, France, Australia, the UK, Spain, Singapore, India, and other countries.


Extract and download unlimited product data from eBay using this API, including product details, reviews, categories, or prices.

The eBay product scraper collects any product from any category on the platform, or by search keyword and page URL. Download the data in any digestible format.

How to use the scraped eBay data?

Establish automatic bidding and price tracking - scan multiple retail products in real-time to manage your pricing or purchasing strategies.

Competitor price monitoring - monitor products, sales data, and listings from digital stores to compare bidders' and competitors' prices and support the decision-making process with actual data.

Market analysis facilitation - scan and study eBay for products, images, categories, keywords, and more to learn consumer behavior and emerging trends.

Execute lightweight automation and improve performance - easily compile product data for machine learning, market research, product development, etc.

Generally, an eBay search-results URL includes /sch/ after the eBay domain, as in the startUrls example under Input Parameters below. Make sure your URLs follow that pattern.

Add any such links to the input and choose how many products you want to collect, then export the output data. You can also get this information directly from the API without logging in to the Real Data API platform.

Tutorial

If you wish to learn more about how the eBay data scraper works, check this detailed explanation with examples and screenshots.

Execution cost

Running the actor is the simplest way to check how many CUs (compute units) it needs. A single longer run is more efficient than several smaller ones because each run has a startup overhead. On average, this API will cost you around 65 cents per 1,000 products. For more information, check our pricing page. If you're unsure how many CUs you have, check whether you need to upgrade. You can see your limits in the Settings - Billing and Usage tab of your Real Data API console account.
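To turn the quoted average into a ballpark budget, multiply it by the number of thousands of products. The helper below only does that arithmetic, with the 65-cent figure as its default; actual charges depend on the compute units each run consumes.

```python
def estimate_cost_usd(products: int, usd_per_1000: float = 0.65) -> float:
    """Ballpark run cost at the quoted average of about $0.65 per 1,000 products.

    Actual charges depend on the compute units each run consumes.
    """
    if products < 0:
        raise ValueError("products must be non-negative")
    return round(products / 1000 * usd_per_1000, 2)
```

For example, 25,000 products comes to about 25 × $0.65 = $16.25.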

Input Parameters

This API requires only a few settings from you: a proxy and a link.

proxyConfiguration (required): the proxy settings used during actor execution. When running the API on the Real Data API platform, you must keep the proxy setting at its default value { "useRealdataAPIProxy": true }

startUrls (required): a list of request points giving the search terms and categories you wish to extract. You can use any category or search page URL from eBay.

Example

{ "startUrls": [ { "url": "https://www.ebay.com/sch/i.html?_from=R40&_trksid=p2499334.m570.l1313&_nkw=massage%2Brecliner%2Bchair&_sacat=6024" }, { "url": "https://www.ebay.com/sch/i.html?_from=R40&_trksid=p2334524.m570.l1313&_nkw=motor+parts&_sacat=0&LH_TitleDesc=0" }, { "url": "https://www.ebay.com/sch/i.html?_from=R40&_trksid=p2334524.m570.l1313&_nkw=bicycle+handlebars&_sacat=0&LH_TitleDesc=0" } ], "maxItems": 10, "proxyConfig": { "useRealdataAPIProxy": true } }
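If you have many keywords, you might generate this input rather than writing it by hand. The sketch assumes the key names from the example input in ordinary camelCase (`startUrls`, `url`, `maxItems`, `proxyConfig`); the parameter list calls the proxy field `proxyConfiguration`, so check the actor's input schema for the exact name. The `_nkw` (keyword) and `_sacat` (category, 0 for all) parameters come from the example URLs, and reusing the same `/sch/i.html` path on other eBay domains is an assumption.

```python
import json
from urllib.parse import urlencode


def ebay_search_url(keyword: str, domain: str = "www.ebay.com", category_id: int = 0) -> str:
    """Build a search-results URL in the same shape as the example startUrls.

    _nkw carries the keyword and _sacat the category id (0 = all categories);
    the tracking parameters from the example (_from, _trksid) are left out.
    """
    query = urlencode({"_nkw": keyword, "_sacat": category_id})
    return f"https://{domain}/sch/i.html?{query}"


def build_input(keywords: list[str], max_items: int = 10, domain: str = "www.ebay.com") -> str:
    """Serialize an input object; key names are assumed from the example input."""
    payload = {
        "startUrls": [{"url": ebay_search_url(k, domain)} for k in keywords],
        "maxItems": max_items,
        "proxyConfig": {"useRealdataAPIProxy": True},
    }
    return json.dumps(payload, indent=2)
```

Passing a domain from the supported-countries list, such as `www.ebay.de`, would target that marketplace instead.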

Output

[{ "url": "https://www.ebay.com/itm/164790739659", "categories": [ "Camera Drones", "Other RC Model Vehicles & Kits" ], "itemNumber": "164790739659", "title": "2021 New RC Drone 4k HD Wide Angle Camera WIFI FPV Drone Dual Camera Quadcopter", "subTitle": "US Stock! Fast Shipping! Highest Quality! Best Service!", "whyToBuy": [ "Free shipping and returns", "1,403 sold", "Ships from United States" ], "price": 39, "priceWithCurrency": "US $39.00", "wasPrice": 41.05, "wasPriceWithCurrency": "US $41.05", "available": 10, "availableText": "More than 10 available", "sold": 1, "image": "https://i.ebayimg.com/images/g/Pp4AAOSwKtRgZPzC/s-l300.jpg", "seller": "Everydaygadgetz", "itemLocation": "Alameda, California, United States", "ean": null, "mpn": null, "upc": "Does not apply", "brand": "Unbranded", "type": "Professional Drone" }]

After the eBay API finishes scraping, it saves the output in a dataset and displays it as the run's results. You can download them in any format.
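Once you have downloaded the dataset as JSON, a few lines of Python can reduce it to the fields you care about. This sketch assumes the exported keys are camelCase versions of those in the output example (`itemNumber`, `title`, `price`, `wasPrice`, `seller`) and computes a discount percentage only when `wasPrice` is present and non-zero.

```python
import json


def summarize_items(raw_json: str) -> list[dict]:
    """Keep a few fields per exported item and add a discount percentage."""
    rows = []
    for item in json.loads(raw_json):
        price = item.get("price")
        was_price = item.get("wasPrice")
        discount_pct = None
        if price is not None and was_price:  # wasPrice may be null or 0 for undiscounted items
            discount_pct = round((was_price - price) / was_price * 100, 1)
        rows.append({
            "itemNumber": item.get("itemNumber"),
            "title": item.get("title"),
            "price": price,
            "discountPct": discount_pct,
            "seller": item.get("seller"),
        })
    return rows
```

For the drone in the output example, (41.05 - 39) / 41.05 × 100 rounds to a 5.0 percent discount.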


Remember that search results may vary when you extract data from different geolocations. We suggest using a proxy located in the country whose results you want, so that results stay consistent.

Proxy Utilization

Like many data scrapers in the eCommerce industry, this API needs proxy servers to work properly. We don't recommend running it on a free account for anything more demanding than getting sample outputs. If you want to run this actor to get many results, subscribing to our platform will allow you to use several proxies.

Supported countries

This eBay actor supports scraping eBay data from the domains and countries below.

🇺🇸 US - https://www.ebay.com

🇬🇧 GB - https://www.ebay.co.uk

🇩🇪 DE - https://www.ebay.de

🇪🇸 ES - https://www.ebay.es

🇫🇷 FR - https://www.ebay.fr

🇮🇹 IT - https://www.ebay.it

🇮🇳 IN - https://www.ebay.in

🇨🇦 CA - https://www.ebay.ca

🇯🇵 JP - https://www.ebay.co.jp

🇦🇪 AE - https://www.ebay.ae

🇸🇦 SA - https://www.ebay.sa

🇧🇷 BR - https://www.ebay.com.br

🇲🇽 MX - https://www.ebay.com.mx

🇸🇬 SG - https://www.ebay.sg

🇹🇷 TR - https://www.ebay.com.tr

🇳🇱 NL - https://www.ebay.nl

🇦🇺 AU - https://www.ebay.com.au

🇸🇪 SE - https://www.ebay.se

If you want us to add more domains and countries to this list, please mail us your suggestions.

Other retail scrapers

We have many other retail and eCommerce data scrapers available. To see the list, explore the eCommerce category on our store page.

Industries

Check how industries worldwide are using the eBay scraper.


Scrape Popular eCommerce Websites of the United States

Introduction

We are in the digital age, and ecommerce has become a central point of our routine lives as people use online platforms to do shopping. In the United States (US), many ecommerce giants rule the market, providing a vast range of services and products.

To stay competitive, businesses often use web scraping to collect data from popular eCommerce websites in the US. In this guide, we'll go through the procedure for scraping popular eCommerce websites in the US, highlighting why the practice matters and how it benefits businesses.

Some Important Statistics About the US eCommerce Market

According to Statista, revenue in the US eCommerce market is forecast to increase continuously from 2024 to 2029, by a total of 388.1 billion U.S. dollars, a significant growth of 51.25 percent.

This trend is indicative of the market's resilience and sustained expansion. Following a decade of consecutive growth, it is projected that the indicator will reach a remarkable milestone of 1.1 trillion U.S. dollars by 2029, establishing a new peak.

Notably, the revenue of the E-commerce market has exhibited consistent growth over recent years, underscoring the sector's robust performance and promising outlook for the future.
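The two forecast figures can be checked against each other. Reading 388.1 billion as the total increase and 51.25 percent as growth relative to the 2024 level (an interpretation, since the page does not give the 2024 figure), the implied values are:

$$
R_{2024} = \frac{388.1}{0.5125} \approx 757.3\ \text{billion USD}, \qquad R_{2029} = R_{2024} + 388.1 \approx 1145.4\ \text{billion USD} \approx 1.1\ \text{trillion USD}
$$

which is consistent with the projected 1.1 trillion U.S. dollars for 2029.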

Top Popular eCommerce Websites of the United States

In the bustling landscape of ecommerce in the United States, several platforms stand out as the top contenders, drawing in millions of users daily with their diverse product offerings and seamless shopping experiences.

Among these, Amazon, Walmart, eBay, Target, and Best Buy reign supreme. These ecommerce giants cater to a wide range of consumer needs, spanning from electronics and apparel to household essentials and beyond.

Their vast customer bases and extensive product catalogs make them prime targets for data extraction through web scraping. By harnessing web scraping techniques, businesses can gather valuable insights into market trends, competitor strategies, pricing dynamics, and consumer preferences.

This data collection process enables businesses to monitor changes in product availability, track pricing fluctuations, and stay informed about industry developments in real-time.

With the aid of web scraping tools and techniques, businesses can effectively monitor and analyze data from these popular ecommerce websites, gaining a competitive edge in the dynamic landscape of online retail in the US.

Why Scrape Popular eCommerce Websites of the United States?

Web scraping eCommerce website data in the US yields a plethora of advantages for businesses, enhancing their competitive edge and strategic decision-making processes. Here's why:

Insights into Market Trends: By scraping ecommerce websites, businesses gain access to real-time data on market trends, enabling them to identify emerging consumer preferences and adjust their strategies accordingly.

Competitor Analysis: Web scraping facilitates the extraction of valuable information about competitor strategies, including pricing strategies, product launches, and promotional tactics, empowering businesses to stay ahead of the competition.

Pricing Dynamics: Through data extraction, businesses can monitor pricing dynamics across multiple ecommerce platforms, allowing them to optimize their pricing strategies and remain competitive in the market.

Understanding Customer Preferences: Scraping data enables businesses to analyze customer behavior and preferences, helping them tailor their product offerings and marketing campaigns to better meet the needs of their target audience.

Informed Decision-Making: With access to comprehensive data from popular ecommerce websites, businesses can make informed decisions about inventory management, product development, and marketing investments.

Optimizing Offerings: By analyzing data collected through web scraping, businesses can identify gaps in their product offerings and make adjustments to better align with customer demand, thereby maximizing sales potential.

Staying Ahead of the Competition: Regular monitoring of ecommerce website data allows businesses to stay abreast of industry trends and developments, ensuring they remain agile and responsive to changing market conditions.

eCommerce website data collection in the US is a strategic imperative for businesses seeking to gain a competitive edge in the dynamic online retail landscape. By leveraging web scraping techniques, businesses can extract valuable insights into market trends, competitor strategies, pricing dynamics, and customer preferences, enabling them to make informed decisions and optimize their offerings to drive success.

What is the Process of Scraping eCommerce Websites?

Scraping ecommerce websites is a systematic process that requires careful planning and execution to extract valuable data effectively. Here's a breakdown of the key steps involved:

Identify Target Websites and Define Objectives: Begin by identifying the ecommerce websites you want to scrape and clearly define your scraping objectives. Determine the specific data you need, such as product information, pricing, or customer reviews.

Choose the Right Web Scraping Tools and Techniques: Select the appropriate web scraping tools and techniques based on your project requirements and technical expertise. Popular tools include BeautifulSoup, Scrapy, and Selenium, each offering unique features and capabilities.

Develop Scraping Scripts: Develop scraping scripts or programs to automate the data extraction process. These scripts should navigate through the website, locate relevant data elements, and extract them into a structured format for further analysis.

Handle Authentication and Execute Scripts: If scraping requires access to restricted content or user-specific data, handle the site's authentication mechanism (for example, a login session) in a way that complies with the website's terms of service. Then execute the scraping scripts to collect data from the target ecommerce websites.

Process and Clean Extracted Data: Once data has been scraped, process and clean it to remove any irrelevant or duplicate information. Transform the raw data into a usable format, such as CSV or JSON, for analysis.
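The cleaning step can be sketched with Python's standard library alone. This is a minimal example, assuming the scraper has already produced a list of dictionaries with hypothetical `title` and `price` keys holding raw strings; it drops incomplete rows, normalizes prices to numbers, removes duplicates, and exports the result as CSV or JSON.

```python
import csv
import io
import json


def parse_price(text):
    """Turn a raw price string such as '$1,299.99' into a float, or None if unparseable."""
    digits = "".join(ch for ch in text if ch.isdigit() or ch == ".")
    try:
        return float(digits)
    except ValueError:
        return None  # empty string, price ranges, or other unexpected formats


def clean_records(raw_records):
    """Trim text, drop incomplete rows, and remove duplicate (title, price) pairs."""
    seen = set()
    cleaned = []
    for rec in raw_records:
        title = " ".join((rec.get("title") or "").split())
        price = parse_price(rec.get("price") or "")
        if not title or price is None:
            continue  # irrelevant or incomplete row
        key = (title.lower(), price)
        if key in seen:
            continue  # duplicate of a row we already kept
        seen.add(key)
        cleaned.append({"title": title, "price": price})
    return cleaned


def to_csv(records):
    """Serialize cleaned records as CSV text."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["title", "price"])
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


def to_json(records):
    """Serialize cleaned records as pretty-printed JSON text."""
    return json.dumps(records, indent=2)
```

Case and extra whitespace are ignored when detecting duplicates, so " Wireless  Mouse " at $12.50 and "wireless mouse" at 12.50 collapse into a single "Wireless Mouse" row. Price ranges such as "12.50 - 15.00" are deliberately rejected rather than guessed at.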

By following these steps, businesses can effectively scrape ecommerce websites to gather valuable insights into market trends, competitor strategies, and customer behavior. With the right tools and techniques in place, businesses can harness the power of web scraping to drive informed decision-making and gain a competitive edge in the ecommerce landscape.

Sample Code to Scrape eCommerce Website Data
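A minimal sketch of such a script follows. It uses only Python's standard library (`urllib.request` to download a page and `html.parser` to read it) so it runs without extra installs; BeautifulSoup or Scrapy would be the more common choice in real projects. The markup it expects, product cards with the CSS classes `product`, `title`, and `price`, is a hypothetical assumption: real sites use their own structure, so inspect the target page and adjust the class names. Check the site's robots.txt and terms of service before fetching anything.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class ProductParser(HTMLParser):
    """Collect title and price text from product cards.

    Assumes hypothetical markup such as:
    <div class="product"><h2 class="title">...</h2><span class="price">...</span></div>
    Simplification: any closing tag ends the current capture, so text that
    follows a nested element inside a title or price is dropped.
    """

    FIELDS = ("title", "price")

    def __init__(self):
        super().__init__()
        self.products = []          # one dict per product card
        self._current_field = None  # field whose text is being captured

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product" in classes:
            self.products.append({})  # a new product card begins
        for name in self.FIELDS:
            if name in classes and self.products:
                self._current_field = name

    def handle_endtag(self, tag):
        self._current_field = None

    def handle_data(self, data):
        text = data.strip()
        if self._current_field and text:
            card = self.products[-1]
            card[self._current_field] = card.get(self._current_field, "") + text


def fetch_html(url, timeout=10):
    """Download a page; the User-Agent string is a placeholder."""
    request = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def scrape_products(html):
    """Parse an HTML string and return a list of {'title': ..., 'price': ...} dicts."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.products
```

For example, `scrape_products(fetch_html("https://example.com/search?q=mouse"))` would return one dictionary per product card, provided the page really uses the assumed class names. Keeping fetching and parsing in separate functions makes the parser easy to test against saved HTML.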

Why Should You Choose Actowiz Solutions to Scrape Popular eCommerce Websites in the US?

When it comes to eCommerce website data collection in the US, Actowiz Solutions stands out as a trusted data extractor for businesses seeking comprehensive web scraping solutions. Here's why you should choose Actowiz Solutions:

Specialization in Ecommerce Sector: At Actowiz Solutions, we specialize in catering to the unique needs of businesses operating in the ecommerce sector. Our expertise in this domain allows us to understand the intricacies of ecommerce websites and tailor our scraping solutions accordingly.

Accurate and Timely Data Extraction: With Actowiz Solutions, you can rely on accurate and timely data extraction from ecommerce websites. We prioritize data quality and ensure that the information collected is up-to-date and relevant to your business needs.

Insights into Market Trends and Competitor Strategies: By partnering with Actowiz Solutions, you gain valuable insights into market trends, competitor strategies, and customer behavior. Our scraping solutions enable you to stay informed about industry developments and make informed decisions to drive business growth.

Empowerment for Informed Decision-Making: With our services, you are empowered to make informed decisions based on comprehensive data collected from popular ecommerce websites in the US. Whether it's optimizing pricing strategies, refining product offerings, or enhancing marketing campaigns, Actowiz Solutions equips you with the insights needed to succeed in the competitive ecommerce landscape.

Conclusion

eCommerce website data monitoring in the US is a valuable practice that can provide businesses with actionable insights to stay competitive in the digital marketplace.

By leveraging web scraping techniques, businesses can extract and analyze valuable data from these platforms, gaining a deeper understanding of market dynamics and consumer behavior.

With Actowiz Solutions as your partner, you can unlock the full potential of ecommerce website data scraping and drive success in the competitive ecommerce landscape. You can also contact Actowiz Solutions for all your mobile app scraping, instant data scraper and web scraping service requirements.

#ScrapePopulareCommerceWebsites #ScrapeUSAECommerceSites #USAECommerceSitesScraper #ExtractUSAECommerceSitesData


Text

What Is Review Scraping and Why Do Businesses Need It?

Ever wondered what people are really saying about your business (or your competitor's)? Online reviews hold immense power, influencing buying decisions and shaping brand perception. But how do you use this valuable data? This blog dives deep into the world of review scraping, the secret weapon for businesses looking to uncover hidden insights about their products and services, identify customer pain points before they become problems, and stay ahead of the curve with emerging trends in their industry.

What is Review Scraping?

Review scraping is the process of using software to automatically collect customer reviews from different online sources. These could be online stores like Amazon or eBay, social media sites like Facebook or Twitter, and dedicated review websites like Yelp or TripAdvisor. Imagine the scraping tool as a digital spider, crawling through each page's code to find and collect specific information, such as:

Review text

Star ratings

Author names

Dates of publication

Images (optional)

Raw extracted data is usually unstructured and mixed in with the page's HTML markup. Data cleaning and analysis tools then organize it into a structured format, typically spreadsheets or databases, for further analysis and use.
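Turning raw review fields into structured rows can be sketched in Python. This minimal example assumes the scraper has already pulled each review into a dictionary of raw strings (the keys `author`, `rating`, `date`, `text`, and `images` are hypothetical) and that dates appear in one of a few assumed English formats. It normalizes each review and writes the rows to CSV, ready for a spreadsheet.

```python
import csv
import io
import re
from datetime import datetime

FIELDNAMES = ["author", "rating", "date", "text", "image_count"]
RATING_RE = re.compile(r"\d+(?:\.\d+)?")
# Assumed date formats; extend this tuple for the sites you actually scrape.
DATE_FORMATS = ("%B %d, %Y", "%d %B %Y", "%Y-%m-%d")


def parse_rating(text):
    """Extract the first number, e.g. '4.0 out of 5 stars' -> 4.0."""
    match = RATING_RE.search(text or "")
    return float(match.group()) if match else None


def parse_date(text):
    """Convert a date string to ISO format (YYYY-MM-DD), or None if no format matches."""
    text = (text or "").strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def normalize_review(raw):
    """Map one raw review dict onto a flat, structured row."""
    return {
        "author": " ".join((raw.get("author") or "").split()),
        "rating": parse_rating(raw.get("rating")),
        "date": parse_date(raw.get("date")),
        "text": " ".join((raw.get("text") or "").split()),
        "image_count": len(raw.get("images") or []),
    }


def reviews_to_csv(raw_reviews):
    """Normalize every review and return the table as CSV text."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDNAMES)
    writer.writeheader()
    for raw in raw_reviews:
        writer.writerow(normalize_review(raw))
    return buf.getvalue()
```

Unparseable ratings and dates become empty values instead of raising errors, so one oddly formatted review does not stop the whole export.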

Benefits of Review Scraping

Review scraping offers numerous benefits for businesses and organizations:

Market Research: Analyze how customers feel about your products or services and about what your competitors offer. Identify areas for improvement, pinpoint customer pain points, and keep up with market trends.

Product Development: Understand what customers want and like so you can improve your current products or develop new ones people want to buy.

Pricing Strategy: Study how much competitors charge for their products and what customers say about them to come up with competitive prices that appeal to the people you want to sell to.

Brand Reputation Management: Monitor what people say about your brand online, especially negative feedback. When you spot negative feedback, address the customer's concerns promptly. This way, you can ensure that your brand looks good to everyone.

Sentiment Analysis: Study the feelings expressed in reviews to see how happy customers are and find ways to improve based on their feedback.

Competitive Intelligence: Keep an eye on what your competitors' customers say about them. This helps you see what they're good at and where they fall short, so you can figure out what you can do better and adjust your marketing campaigns to stay ahead.
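To make the sentiment-analysis idea concrete, here is a deliberately simple, lexicon-based sketch in Python. The two word lists are hypothetical and tiny; real projects typically rely on an established sentiment lexicon or a trained model, so treat this only as an illustration of turning review text into a score and then into an overall summary.

```python
import re
from collections import Counter

# Hypothetical, illustrative word lists; replace with a proper lexicon or model.
POSITIVE = {"great", "love", "excellent", "fast", "perfect", "happy"}
NEGATIVE = {"broken", "slow", "bad", "refund", "terrible", "late"}


def sentiment_score(text):
    """Return (positive - negative) / matched words, a value in [-1, 1]; 0.0 if nothing matches."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(word in POSITIVE for word in words)
    neg = sum(word in NEGATIVE for word in words)
    matched = pos + neg
    return 0.0 if matched == 0 else (pos - neg) / matched


def label(score, threshold=0.2):
    """Bucket a score into positive / negative / neutral."""
    if score > threshold:
        return "positive"
    if score < -threshold:
        return "negative"
    return "neutral"


def summarize(reviews):
    """Count how many reviews fall into each sentiment bucket."""
    return dict(Counter(label(sentiment_score(text)) for text in reviews))
```

Running `summarize` separately over your own reviews and a competitor's gives a rough side-by-side comparison; the `threshold` value is an arbitrary choice that controls how many mixed reviews count as neutral.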

Advanced Applications of Review Scraping

Review scraping extends beyond basic data collection. Here are some advanced applications:

Machine Learning and AI: Extracted review data can be used to train models that recognize people's sentiment, identify the topics being discussed, and spot new trends as they emerge.

Social Listening: Analyze reviews and social media chats together to understand what customers think online, looking at the big picture of their opinions.

Price Optimization: By gathering reviews from different sources and combining them with other types of data, you can create dynamic pricing plans that adjust to customer sentiment and market demand.

Holistic Brand Perception: Combine reviews and social media comments to understand how everyone sees your brand online.
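The trend-spotting idea can be illustrated without any machine learning. This Python sketch swaps in a simple frequency comparison: it counts how many reviews mention each word in an earlier and a later period and reports words whose share of reviews grew. The stop-word list and the `min_count` cut-off are arbitrary assumptions; a production system would more likely use topic models or trained classifiers.

```python
import re
from collections import Counter

# Arbitrary, minimal stop-word list (an assumption; extend as needed).
STOPWORDS = {"a", "an", "and", "at", "but", "for", "i", "is", "it", "my",
             "of", "the", "this", "to", "was", "with"}


def term_counts(reviews):
    """Count, for each word, how many reviews mention it at least once."""
    counts = Counter()
    for text in reviews:
        words = set(re.findall(r"[a-z']+", text.lower())) - STOPWORDS
        counts.update(words)
    return counts


def rising_terms(previous, current, min_count=2):
    """List (word, growth) pairs whose share of reviews rose from `previous` to `current`.

    growth = share of current reviews mentioning the word
             - share of previous reviews mentioning it.
    """
    before, after = term_counts(previous), term_counts(current)
    prev_total, cur_total = max(len(previous), 1), max(len(current), 1)
    rising = []
    for word, count in after.items():
        if count < min_count:
            continue  # too rare in the current period to call a trend
        growth = count / cur_total - before[word] / prev_total
        if growth > 0:
            rising.append((word, growth))
    return sorted(rising, key=lambda pair: (-pair[1], pair[0]))
```

Comparing shares of reviews rather than raw counts keeps the result fair when the two periods contain different numbers of reviews.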

Types of Review Scraping Tools

As customer data becomes increasingly important, review scraping tools have become more accessible. Here are the different kinds of tools you can use:

Web Scraping APIs: These expose ready-made scraping functionality that existing applications can call to extract data from specific websites. They are ideal for developers who want to build custom scraping solutions.

Web Scraping Extensions: Browser extensions make it easy to scrape information from websites without knowing how to code. They're especially helpful for beginners with little coding experience.

Dedicated Web Scraping Software: More advanced software offers powerful features like data filtering, scheduling, integration with other data analysis tools, and handling complex website structures.

Why Are Web Scraping APIs Popular?

Web scraping APIs have become a popular alternative to traditional, hand-built scrapers for review scraping because of the benefits they offer.

Ease of Use

Web Scraping APIs are tools with pre-built functionalities, eliminating the need for users to write complex code from scratch. This makes them perfect for people and businesses who don't have technical expertise. Some of these APIs even have easy-to-use interfaces where you can just click on the data points you want to extract, simplifying the process.

Content Source https://www.reviewgators.com/what-is-review-scraping-and-why-businesses-need-it.php


Text

Unlock Business Insights with Web Scraping eBay.co.uk Product Listings by DataScrapingServices.com


In today's competitive eCommerce environment, businesses need reliable data to stay ahead. One powerful way to achieve this is through web scraping eBay.co.uk product listings. By extracting essential information from eBay's vast marketplace, businesses can gain valuable insights into market trends, competitor pricing, and customer preferences. At DataScrapingServices.com, we offer comprehensive web scraping solutions that allow businesses to tap into this rich data source efficiently.

Web Scraping eBay.co.uk Product Listings enables businesses to access critical product data, including pricing, availability, customer reviews, and seller details. At DataScrapingServices.com, we offer tailored solutions to extract this information efficiently, helping companies stay competitive in the fast-paced eCommerce landscape. By leveraging real-time data from eBay.co.uk, businesses can optimize pricing strategies, monitor competitor products, and gain valuable market insights. Whether you're looking to analyze customer preferences or track market trends, our web scraping services provide the actionable data needed to make informed business decisions.

Key Data Fields

With our eBay.co.uk product scraping, you can access:

1. Product titles and descriptions

2. Pricing information (including discounts and offers)

3. Product availability and stock levels

4. Seller details and reputation scores

5. Shipping options and costs

6. Customer reviews and ratings

7. Product images

8. Item specifications (e.g., size, color, features)

9. Sales history and volume

10. Relevant categories and tags
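One way to hold these fields in code is a typed record. The Python sketch below is an assumption about how you might model a scraped eBay.co.uk listing: the field names are hypothetical rather than eBay's own, and prices are assumed to be in pounds sterling. The `missing_fields` helper reports which fields a scrape left empty, a quick check on data coverage.

```python
from dataclasses import dataclass, field, fields
from typing import Dict, List, Optional


@dataclass
class EbayListing:
    """One scraped eBay.co.uk listing. Field names are illustrative, not eBay's own."""

    title: str
    description: str = ""
    price_gbp: Optional[float] = None           # current price, including any discount
    original_price_gbp: Optional[float] = None  # pre-discount price, if the page shows one
    quantity_available: Optional[int] = None
    seller_name: str = ""
    seller_feedback_score: Optional[int] = None
    shipping_cost_gbp: Optional[float] = None   # 0.0 means free shipping
    average_rating: Optional[float] = None
    review_count: int = 0
    image_urls: List[str] = field(default_factory=list)
    item_specifics: Dict[str, str] = field(default_factory=dict)  # e.g. {"Colour": "Black"}
    quantity_sold: Optional[int] = None
    categories: List[str] = field(default_factory=list)

    def discount_pct(self):
        """Percentage discount versus the original price, or None if unknown."""
        if self.price_gbp is None or not self.original_price_gbp:
            return None
        return round(100 * (1 - self.price_gbp / self.original_price_gbp), 1)


def missing_fields(listing):
    """Names of fields the scrape left empty (None, "", [] or {})."""
    empty = (None, "", [], {})
    return [f.name for f in fields(listing) if getattr(listing, f.name) in empty]
```

Numeric zeros such as `review_count=0` or free shipping at `0.0` are treated as real values, not missing data.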

What We Offer

Our eBay.co.uk product listing extraction service provides detailed information on product titles, descriptions, pricing, availability, seller details, shipping costs, and even customer reviews. We tailor our scraping services to meet specific business needs, ensuring you get the exact data that matters most for your strategy. Whether you're looking to track competitor prices, monitor product availability, or analyze customer reviews, our team has you covered.

Benefits for Your Business

By leveraging web scraping of eBay.co.uk product listings, businesses can enhance their decision-making process. Competitor analysis becomes more efficient, enabling companies to adjust their pricing strategies or identify product gaps in the market. Sales teams can use the data to focus on best-selling products, while marketing teams can gain insights into customer preferences by analyzing product reviews.

Moreover, web scraping eBay product listings allows for real-time data collection, ensuring you’re always up to date with the latest market trends and fluctuations. This data can be instrumental for businesses in pricing optimization, inventory management, and identifying potential market opportunities.
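Real-time monitoring usually comes down to re-scraping on a schedule and comparing consecutive snapshots. This minimal Python sketch assumes each snapshot is a dictionary mapping a listing's item ID (hypothetical) to its current price. It reports price changes along with listings that appeared or disappeared between runs.

```python
def diff_snapshots(old, new, min_change_pct=0.0):
    """Compare two {item_id: price} snapshots taken at different times.

    Returns price changes as (item_id, old_price, new_price, percent_change)
    tuples, plus the IDs of listings that were added or removed.
    """
    changed = []
    for item_id, new_price in new.items():
        old_price = old.get(item_id)
        # Skip new listings, unchanged prices, and zero prices (avoids dividing by zero).
        if old_price is None or old_price == new_price or old_price <= 0:
            continue
        pct = round((new_price - old_price) * 100 / old_price, 1)
        if abs(pct) >= min_change_pct:
            changed.append((item_id, old_price, new_price, pct))
    return {
        "changed": sorted(changed),
        "added": sorted(set(new) - set(old)),
        "removed": sorted(set(old) - set(new)),
    }
```

Raising `min_change_pct` filters out small fluctuations, so alerts fire only on price moves large enough to matter for your pricing decisions.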

Best Web Scraping of eBay.co.uk Product Listings in the UK:

Liverpool, Dudley, Cardiff, Belfast, Northampton, Coventry, Portsmouth, Birmingham, Newcastle upon Tyne, Glasgow, Wolverhampton, Preston, Derby, Hull, Stoke-on-Trent, Luton, Swansea, Plymouth, Sheffield, Bristol, Leeds, Leicester, Brighton, London, Southampton, Edinburgh, Nottingham, Manchester and Aberdeen.

Best eCommerce Data Scraping Services Provider

Amazon.ca Product Information Scraping

Marks & Spencer Product Details Scraping

Amazon Product Price Scraping

Retail Website Data Scraping Services

Tesco Product Details Scraping

Home Depot Product Listing Scraping

Online Fashion Store Data Extraction

Extracting Product Information from Kogan

PriceGrabber Product Pricing Scraping

Asda UK Product Details Scraping

Conclusion

At DataScrapingServices.com, our goal is to provide you with the most accurate and relevant data possible, empowering your business to make informed decisions. By utilizing our eBay.co.uk product listing scraping services, you’ll be equipped with the data needed to excel in the competitive world of eCommerce. Stay ahead of the game and unlock new growth opportunities with the power of data.

Contact us today to get started: Datascrapingservices.com