We are a leading web data scraping solutions company for large-scale public data collection, driven by innovation and high business standards.

Don't want to be here? Send us a removal request.


How to Use Web Scraping for MAP Monitoring Automation?

As e-commerce keeps growing, online marketplaces are expanding, and more branded products are being sold by resellers and retailers worldwide. Some brands may not notice that certain resellers and sellers offer their products at lower prices to attract customers, which has a negative impact on the brand itself.

To maintain its reputation, a brand can use a MAP policy as an agreement with its retailers and resellers.

MAP – The Concept

Minimum Advertised Pricing (MAP) is a pre-agreed minimum price for specific products, below which authorized resellers and retailers agree not to advertise or sell them.

For example, if a shoe brand sets the MAP for product A at $100, all approved resellers and retailers, whether on online marketplaces or in brick-and-mortar stores, are obliged not to price it below $100. Otherwise, they will be penalized according to the signed MAP agreement.

A MAP policy typically benefits a brand in the following ways:

Fair prices and fair competition among resellers and retailers

Maintaining brand value and awareness

Preventing underpricing and price wars, which protects profit margins

Why Is Enforcing a MAP Policy Tough for Brands?

1. Franchise stores

Franchise stores are among the most common ways to resell products of specific brands. To monitor MAP violations by these storefront retailers, a brand can simply use its financial systems to track transactions efficiently.

Yet a brand still cannot be sure that all products sold by franchise stores are 100% genuine, and ensuring that may require additional manual work.

2. Online Market Resellers

Research by Web Retailer gives a basic idea of the world's leading online marketplaces: there are over 150 major all-category marketplaces across the globe, plus countless niche ones.

Online retailers selling products in various online marketplaces

Naturally, most online retailers choose to sell on multiple marketplaces, which brings more traffic and other benefits.

Unauthorized resellers

Besides authorized sellers, some individual resellers deal in copycat products that a brand might not be aware of.

So, monitoring the prices of even a few products across many online marketplaces at the same time can be very difficult for a brand.

How to Find MAP Violations and Defend Your Brand in Online Markets?

For traditional physical retail, a brand needs a business system that records sales data to monitor MAP compliance. For online marketplace resellers, we would like to introduce a widely used but often overlooked technique, data scraping, which can efficiently support MAP monitoring.

So how can brands use data scraping to detect whether any reseller is violating a MAP policy?

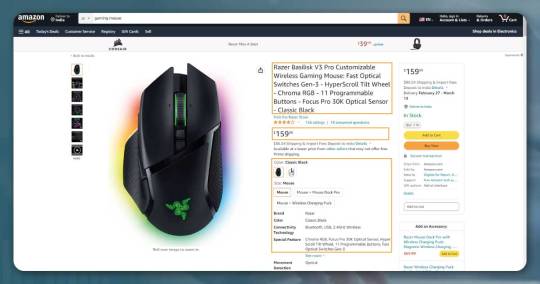

Let's assume that an online reseller is selling products on 10 different online marketplaces: Amazon, Target, JD, Taobao, eBay, Rakuten, Walmart, Tmall, Flipkart, and Tokopedia.

Step 1: Identify the data you need.

For MAP monitoring, the data needed is simply product information and pricing.

Step 2: Choose a suitable technique for building data scrapers.

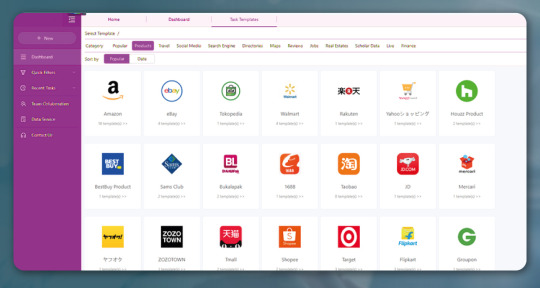

We need 10 data scrapers, one for each marketplace, that collect data at a set frequency.
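One of the ten scrapers could look like the minimal sketch below, which uses only Python's standard library. The marketplace name `example-market` and the CSS classes `product-title` and `price` are hypothetical placeholders: every real marketplace uses its own markup, and several render prices with JavaScript, which a plain HTML parser will not see.

```python
import re
from decimal import Decimal
from html.parser import HTMLParser

# Hypothetical per-marketplace configuration: which CSS class holds which field.
# Real class names differ on every site and must be looked up by hand.
MARKETPLACE_FIELDS = {
    "example-market": {"title": "product-title", "price": "price"},
}


class ListingParser(HTMLParser):
    """Collects the text of the first element carrying each configured class."""

    def __init__(self, field_classes):
        super().__init__()
        self.field_classes = field_classes
        self.current_field = None
        self.data = {}

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for field, css_class in self.field_classes.items():
            if css_class in classes and field not in self.data:
                self.current_field = field

    def handle_endtag(self, tag):
        # Assumes flat markup: the value is the element's direct text.
        self.current_field = None

    def handle_data(self, data):
        if self.current_field and data.strip():
            self.data[self.current_field] = data.strip()


def parse_price(text):
    """'$1,299.00' -> Decimal('1299.00'); assumes a US-style decimal point."""
    return Decimal(re.sub(r"[^\d.]", "", text))


def scrape_listing(html, marketplace):
    """Parse one product page into a record with title, price, and marketplace."""
    parser = ListingParser(MARKETPLACE_FIELDS[marketplace])
    parser.feed(html)
    record = dict(parser.data)
    if "price" in record:
        record["price"] = parse_price(record["price"])
    record["marketplace"] = marketplace
    return record
```

Adding a marketplace then means adding one entry to the configuration (and, for sites with unusual markup, a dedicated parser), which is exactly the per-site maintenance burden described next.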

A programmer would need to write 10 scripts to do the web scraping. The drawbacks of this approach are:

Difficulty maintaining the scrapers whenever a website's layout changes.

Difficulty coping with IP rotation, CAPTCHA, and reCAPTCHA.
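Layout changes usually show up as fields that suddenly come back empty. A simple guard, sketched below on the assumption that each scraper returns a list of dictionaries, measures how many records are missing required fields and flags the run when that share crosses a threshold. The field names and the 20% threshold are illustrative choices, not recommendations from the source. IP rotation and CAPTCHA handling are outside the scope of this sketch.

```python
# Fields every scraped listing is expected to have (hypothetical schema).
REQUIRED_FIELDS = ("title", "price")


def missing_field_rate(records, required=REQUIRED_FIELDS):
    """Share of records in which at least one required field is absent or empty."""
    if not records:
        return 1.0  # an empty run is itself a warning sign
    incomplete = sum(
        1
        for record in records
        if any(record.get(field) in (None, "") for field in required)
    )
    return incomplete / len(records)


def layout_probably_changed(records, threshold=0.2):
    """Flag a scraper run for review when too many records come back incomplete."""
    return missing_field_rate(records) > threshold
```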

An alternative is to use a data scraping tool made by Actowiz Solutions, which offers web scraping for coders and non-coders alike.

2. Automatic crawler: The latest Actowiz Solutions scrapers also detect data automatically and create a crawler within minutes.

Step 3: Run the scrapers to collect data from the 10 online marketplaces. For MAP monitoring, data must be scraped at regular intervals. If you build your scrapers in a programming language, you might have to start them manually every day, or you can build a scheduling function into the script. If you use a web scraping tool like Actowiz Solutions, you can simply set the scraping schedule in the tool.
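If you write the scrapers yourself, the scheduling function can be as simple as a loop that runs every scraper and then sleeps. The sketch below assumes each scraper is a zero-argument Python function returning a list of listings. `run_on_schedule` is a hypothetical helper, and its `sleep` parameter exists only so the loop can be tested without waiting.

```python
import time
from typing import Callable, Dict, List

# Each entry maps a marketplace name to a function that returns its listings.
Scraper = Callable[[], list]


def run_on_schedule(
    scrapers: Dict[str, Scraper],
    interval_seconds: float,
    rounds: int,
    sleep: Callable[[float], None] = time.sleep,
) -> List[dict]:
    """Run every scraper once per round, pausing `interval_seconds` between rounds."""
    snapshots = []
    for round_number in range(rounds):
        for marketplace, scrape in scrapers.items():
            try:
                listings = scrape()
            except Exception as exc:
                # One failing marketplace should not stop the others.
                print(f"{marketplace}: scrape failed ({exc})")
                listings = []
            snapshots.append(
                {"round": round_number, "marketplace": marketplace, "listings": listings}
            )
        if round_number < rounds - 1:
            sleep(interval_seconds)
    return snapshots
```

For a daily run, pass `interval_seconds=24 * 60 * 60`. In production, an OS-level scheduler such as cron is usually more robust than a long-running Python process.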

Step 4: Once you have the data, go through it and compare each advertised price with the MAP. As soon as you spot a violation, you can respond to it immediately.
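The comparison in Step 4 is a simple rule: a listing violates the policy when its advertised price is below the MAP for that product. The sketch below applies that rule to the earlier shoe example ($100 MAP). The `Listing` fields, including the `sku` identifier, are a hypothetical schema for the scraped data. Prices use `Decimal` to avoid floating-point rounding surprises.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict, List


@dataclass
class Listing:
    marketplace: str
    seller: str
    sku: str  # the brand's product identifier (hypothetical field)
    advertised_price: Decimal


def find_map_violations(
    listings: List[Listing], map_prices: Dict[str, Decimal]
) -> List[Listing]:
    """Return listings advertised below the MAP for their SKU.

    Listings whose SKU has no MAP entry are skipped rather than flagged.
    """
    return [
        listing
        for listing in listings
        if listing.sku in map_prices
        and listing.advertised_price < map_prices[listing.sku]
    ]
```

A listing at exactly $100 passes, because the policy forbids prices *below* the MAP, not prices equal to it.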

Conclusion

For brands, MAP is very important. It helps protect brand reputation, prevents price wars among resellers and retailers, and opens up more marketing options. There are many ideas for dealing with MAP violations, and you can find thousands of them online within seconds. For MAP monitoring, web data extraction is one of the most efficient ways to track prices across many online marketplaces, and Actowiz Solutions can be particularly helpful here.

For more information, contact Actowiz Solutions now! You can also reach us for all your mobile app scraping and web scraping service requirements.


Walking Through Online Food Delivery Aggregator Data Analytics

Based on the input address, we identified all the QSRs (quick-service restaurants) within delivery range, as shown in the map below, and grouped them into delivery-fee categories, each shown in a different color. As expected, the farther a restaurant is from the input address, the higher its delivery fee. The map shows a circular boundary with orange shading. Beyond that boundary the delivery fee is greater than 5 USD, although a few points beyond it have delivery fees below 5 USD.
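Categorizing restaurants this way requires the distance from the input address to each restaurant and a fee category. The source does not say how its distances were computed, so the sketch below makes an assumption: it uses the common haversine (great-circle) formula on latitude/longitude pairs. The category names are hypothetical, and the 5 USD cut-off mirrors the boundary described above.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (
        math.sin(d_phi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    )
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def fee_band(fee_usd):
    """Color-coding category for a restaurant's default delivery fee."""
    if fee_usd == 0:
        return "free"
    if fee_usd <= 5:
        return "up to 5 USD"
    return "over 5 USD"
```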

Most restaurants inside the polygon are located 1 to 3 km from the input address, and their delivery fees range from 0 to 7 USD. Restaurants located 4 to 7 km away, meanwhile, may still charge delivery fees below 5 USD.

From the analyzed data, we observed that most of the restaurants located outside the polygon have zero delivery fees, which suggests that delivery distance alone does not accurately predict the fee. Restaurants within the polygon had delivery fees as high as 7 USD. In other words, restaurants outside the polygon charge considerably lower delivery fees than those inside it. To analyze what else might affect delivery fees, we selected a group of columns we assumed to be relevant and computed the correlation matrix, which measures the strength of the relationships between them:

```python
# Correlation of each numeric column with the default delivery fee, strongest first.
# numeric_only=True skips text columns, which would otherwise raise an error in pandas 2.x.
# .iloc[2:] drops the first two entries; the first is deliveryFeeDefault itself (correlation 1.0).
df.corr(numeric_only=True)['deliveryFeeDefault'].sort_values(ascending=False).iloc[2:]
```

After plotting the selected column groups, we can compare the restaurants inside and outside the polygon. The pricerange and isnew columns have the same distribution in both groups. However, no restaurant outside the polygon uses Scoober delivery, and the distribution of sponsored restaurants looks more balanced there. The minimum order value also varies considerably more in this group.
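Splitting restaurants into those inside and outside the polygon is a point-in-polygon test. The source does not say how its polygon was evaluated, so the sketch below is an assumption: it uses the widely used ray-casting method on (longitude, latitude) pairs. It treats them as flat x/y coordinates, which is usually acceptable at city scale.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def point_in_polygon(x: float, y: float, polygon: List[Point]) -> bool:
    """Ray casting: count how often a ray from (x, y) to the right crosses an edge."""
    inside = False
    j = len(polygon) - 1
    for i in range(len(polygon)):
        xi, yi = polygon[i]
        xj, yj = polygon[j]
        # Only edges that straddle the ray's y coordinate can cross it (so yi != yj here).
        if (yi > y) != (yj > y):
            x_cross = xi + (y - yi) * (xj - xi) / (yj - yi)
            if x < x_cross:
                inside = not inside
        j = i
    return inside
```

An odd number of crossings means the point is inside. Applying the function to every restaurant's coordinates yields the inside/outside flag used for the comparison above.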

Here, distance is positively correlated with delivery fees: the correlation coefficient rises from 0.15 to 0.75.

Here, we see no such increase. The correlation for distance stays at 0.20, while the correlation for minimum order value is 0.4, much higher than for distance.
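The coefficients quoted above are correlations computed separately for different groups of restaurants. The sketch below shows that calculation in plain Python. It computes the Pearson coefficient, which is what pandas' `DataFrame.corr()` uses by default, separately for each group. It assumes each restaurant is a dictionary with a group flag, a distance, and a fee; the key names are hypothetical.

```python
import math
from collections import defaultdict


def pearson(xs, ys):
    """Pearson correlation coefficient; NaN if either series is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if sd_x == 0 or sd_y == 0:
        return float("nan")
    return cov / (sd_x * sd_y)


def correlation_by_group(rows, x_key, y_key, group_key):
    """Correlate two columns separately within each group (e.g. inside/outside)."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(row)
    return {
        group: pearson([r[x_key] for r in members], [r[y_key] for r in members])
        for group, members in groups.items()
    }
```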

It looks like Lieferando sets a base order value of 8 USD for every restaurant that offers Scoober delivery, possibly because of an agreement between Scoober and the restaurants. Another food aggregator, Wolt, likewise has a base order value of 8 USD for every restaurant.

Summary Of The Analysis

We found that around 25 percent of restaurants do not offer Scoober delivery. These restaurants have minimum order values between 8 and 45 USD and low delivery fees regardless of delivery distance. The remaining restaurants offer Scoober delivery, with a minimum order value of 8 USD and higher delivery fees.

Do you want to analyze food delivery platforms for your restaurant business? Contact Actowiz Solutions for mobile app scraping and web scraping services for food delivery data analytics, and we will get back to you promptly.


How to Use Python & BeautifulSoup for Scraping TV Show Episode IMDb Ratings?

The internet is flooded with information on how to scrape data, but there is hardly any on how to scrape IMDb ratings for TV show episodes. If that is what you are looking for, you are in the right place. This blog walks you through the scraping procedure step by step.

Let's scrape IMDb ratings for the episodes of a TV show, along with their details, using Python's BeautifulSoup library.

Modules Required:

Below are the modules needed to scrape IMDb:

1. Requests: a widely used third-party Python library for making HTTP requests to a specified URL.

2. bs4: the package that provides Beautiful Soup, a Python library for parsing HTML and XML documents.

3. Pandas: a library built on top of NumPy that provides data structures and operations for manipulating numerical tables and time series.

Approach:

First, navigate to the season 1 page of the series, which lists all of that season's episodes.

In its URL, ‘tt1439629’ is the show’s IMDb ID (for the series Community). If you are scraping a different show, the ID will be different.

Parse HTML Content Using BeautifulSoup

Create a BeautifulSoup object to parse the response.text. Now, assign this object to html_soup. The html.parser argument signifies that we will perform parsing with the help of Python’s built-in HTML parser.
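A minimal sketch of the fetch-and-parse step follows. It assumes the season page still lives at the `episodes?season=N` URL pattern this tutorial was written against. IMDb has since redesigned its episode pages and may block automated clients, so treat the URL pattern and the browser-like User-Agent header as assumptions to verify, not guarantees.

```python
import requests
from bs4 import BeautifulSoup


def season_url(show_id: str, season: int) -> str:
    """Episode-list URL for one season (the URL pattern this tutorial assumes)."""
    return f"https://www.imdb.com/title/{show_id}/episodes?season={season}"


def get_season_soup(show_id: str, season: int) -> BeautifulSoup:
    """Download one season page and parse it with Python's built-in HTML parser."""
    response = requests.get(
        season_url(show_id, season),
        # Assumption: a browser-like User-Agent; some sites reject default clients.
        headers={"User-Agent": "Mozilla/5.0"},
        timeout=30,
    )
    response.raise_for_status()  # fail loudly on 403/404 instead of parsing an error page
    return BeautifulSoup(response.text, "html.parser")
```

Calling `html_soup = get_season_soup("tt1439629", 1)` would fetch and parse Community's season 1 page.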

For each episode, we want to extract the following:

Episode Number

Episode Title

IMDb Rating

Airdate

Episode Description

Total Votes

If you inspect the page closely, as in the image above, you will find that the information we need is inside `<div class="info" ...> </div>`.

The parts highlighted in yellow are the HTML tags, and the parts in green are the data we are trying to extract.

`find_all()` returns a ResultSet object containing a list of 25 `<div class="info" ...> </div>` elements, one per episode.

Extraction of Required Variables

Now, we will extract the data from episode_containers for an individual episode.

Episode Title

For the title, we need the title attribute of the `<a>` tag.

Episode Number

It is stored in the content attribute of the `<meta>` tag.

Airdate

It is inside the `<div>` tag with the class airdate. We take its text attribute and call strip() to remove the surrounding whitespace.

IMDb Rating

It is inside the `<div>` tag with the class ipl-rating-star__rating; again, we use its text attribute.

Total Votes

It uses the same kind of tag; the only difference is that it has a different class.

Episode Description

We do the same as for the airdate, changing only the class.
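Putting the extraction steps together for one container might look like the sketch below. The `airdate` and `ipl-rating-star__rating` classes come from the steps above. `ipl-rating-star__total-votes` and `item_description` are assumptions about IMDb's older episode-list markup, so check every class name against the live HTML before relying on it. Searching by class alone (without a tag name) also sidesteps whether a value sits in a `<div>` or a `<span>`.

```python
from bs4 import BeautifulSoup


def text_of(container, css_class):
    """Stripped text of the first descendant with this class, or None if absent."""
    tag = container.find(class_=css_class)
    return tag.get_text().strip() if tag else None


def parse_episode(container, season):
    """Pull the six fields described above out of one <div class="info"> container."""
    return {
        "season": season,
        "episode_number": container.meta["content"],  # content attribute of <meta>
        "title": container.a["title"],  # title attribute of the first <a> tag
        "airdate": text_of(container, "airdate"),
        "rating": text_of(container, "ipl-rating-star__rating"),
        "total_votes": text_of(container, "ipl-rating-star__total-votes"),
        "description": text_of(container, "item_description"),
    }


def parse_season(html_soup, season):
    """find_all returns a ResultSet with one <div class="info"> per episode."""
    episode_containers = html_soup.find_all("div", class_="info")
    return [parse_episode(container, season) for container in episode_containers]


if __name__ == "__main__":
    # Tiny hand-written sample in the older layout (not real IMDb data).
    sample = """
    <div class="info">
      <meta itemprop="episodeNumber" content="1">
      <div class="airdate"> 17 Sep. 2009 </div>
      <a href="#" title="Pilot">Pilot</a>
      <span class="ipl-rating-star__rating">8.0</span>
      <span class="ipl-rating-star__total-votes">(1,234)</span>
      <div class="item_description"> A sample description. </div>
    </div>"""
    print(parse_season(BeautifulSoup(sample, "html.parser"), season=1))
```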

Putting Final Code Altogether

Repeat these steps for every episode and every season, which requires two for loops. In the per-season loop, adjust the range() to cover the season numbers you want to scrape.
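Structurally, the final script is two nested loops. In the sketch below, `fetch_containers` and `parse_episode` are passed in as functions. `fetch_containers` downloads a season page and returns its `div.info` elements, and `parse_episode` extracts one episode's fields. Both names are placeholders for the steps described earlier, and passing them in keeps the loop itself independent of IMDb's markup. The one-second pause between seasons is an assumption, added to avoid hammering the site.

```python
import time
from typing import Callable, Dict, Iterable, List


def scrape_all_seasons(
    seasons: Iterable[int],
    fetch_containers: Callable[[int], list],
    parse_episode: Callable[[object, int], Dict],
    delay_seconds: float = 1.0,
) -> List[Dict]:
    """Outer loop over seasons, inner loop over the episodes on each season page."""
    episodes = []
    for season in seasons:  # one page request per season
        for container in fetch_containers(season):  # one container per episode
            episodes.append(parse_episode(container, season))
        time.sleep(delay_seconds)  # pause between page requests
    return episodes
```

For Community, which ran for six seasons, you would pass `range(1, 7)`.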

Create a Data Frame

Cleaning of Data

Converting Total Votes to Numeric

To convert the vote counts to numbers, we write a function that uses replace() to remove the ‘,’, ‘(’, and ‘)’ characters from total_votes.

Apply the function, then change the type to int using astype().

Converting Rating to Numeric

Converting Airdate from String to Datetime

Finally, save the result.
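The DataFrame creation and cleaning steps could be sketched with pandas as below. The sketch assumes `episodes` is a list of dictionaries like those produced by the extraction step (the column names are assumptions) and that every episode already has a rating and vote count. Unaired episodes would need to be filtered out first.

```python
import pandas as pd


def remove_str(votes: str) -> str:
    """'(4,512)' -> '4512': strip the comma and parentheses around vote counts."""
    for char in (",", "(", ")"):
        votes = votes.replace(char, "")
    return votes


def clean_episodes(episodes: list) -> pd.DataFrame:
    """Build the DataFrame and convert votes, rating and airdate to proper types."""
    df = pd.DataFrame(episodes)
    df["total_votes"] = df["total_votes"].apply(remove_str).astype(int)
    df["rating"] = df["rating"].astype(float)
    # Abbreviated months may carry a trailing period ("17 Sep. 2009"); drop it, then
    # parse day-month-year. Anything that still fails to parse becomes NaT.
    df["airdate"] = pd.to_datetime(
        df["airdate"].str.replace(".", "", regex=False),
        format="%d %b %Y",
        errors="coerce",
    )
    return df
```

The cleaned table can then be saved with `df.to_csv("community_episodes.csv", index=False)`; the file name is only an example.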

For more information, contact Actowiz Solutions now! You can also reach us for all your mobile app scraping and web scraping service requirements.


How to Utilize Web Scraping for Alternative Data for Finance?

Investors and hedge funds are increasingly incorporating alternative datasets into their workflows to inform investment decisions. Web data has consistently proven to be the most popular and influential of these. Demand for alternative data gathered through web scraping is growing because of its scale, its availability, and its unique ability to generate alpha.

Web data is the No. 1 source of alternative data. It informs the world's top asset managers about market opportunities and equips them with the insights they need to act quickly and build positions carefully. When scraping high-quality data online, there is practically no limit to the quantity and type of data available.

In this blog, we'll show the different kinds of web data available for scraping and how they can be used to inform investment decisions.

Company News

Product Data

Product Reviews

SEC Filing Data

Sentiment Analysis

Company News

One can scrape the web for the frequency of company news across social media apps and business content providers, producing valuable data on a company's trajectory.

Sophisticated algorithmic traders can feed this kind of data into their processes to ensure key news events are taken into account when making trades. That is only a taste of what becomes possible when such data is built into automated investment strategies.

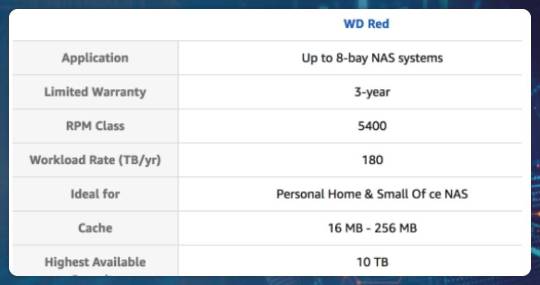
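Turning scraped news into a signal can start with something as simple as counting mentions per day and flagging unusual spikes. The sketch below assumes headlines have already been scraped into `(date, headline)` pairs. Plain substring matching and the 3x-average spike rule are illustrative simplifications, not a trading method.

```python
from collections import Counter
from datetime import date
from typing import Dict, Iterable, List, Tuple


def daily_mentions(articles: Iterable[Tuple[date, str]], company: str) -> Dict[date, int]:
    """Count scraped headlines per day that mention the company name (case-insensitive)."""
    needle = company.lower()
    counts = Counter(day for day, headline in articles if needle in headline.lower())
    return dict(sorted(counts.items()))


def news_spikes(counts: Dict[date, int], factor: float = 3.0) -> List[date]:
    """Days whose count exceeds `factor` times the average over days with any mention."""
    if not counts:
        return []
    average = sum(counts.values()) / len(counts)
    return [day for day, n in counts.items() if n > factor * average]
```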

Product Data

Extracting product data from complex online marketplaces is challenging, but it offers tremendous insight into many factors essential for assessing stock performance and company fundamentals.

This data creates profitable opportunities for investors when deciding on positions and market orders, and it gives insight into long-term trends.

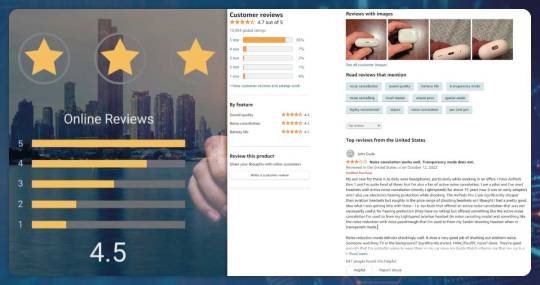

Product Reviews

Understanding how a company's key products are faring in the marketplace helps investors predict more precisely how its stock will perform.

Unfortunately, the lag between a company's performance and its quarterly earnings reports limits how useful those reports are for real-time analysis. Extracting product review data lets investors actively collect product life-cycle information and update their assumptions about a company's earnings.

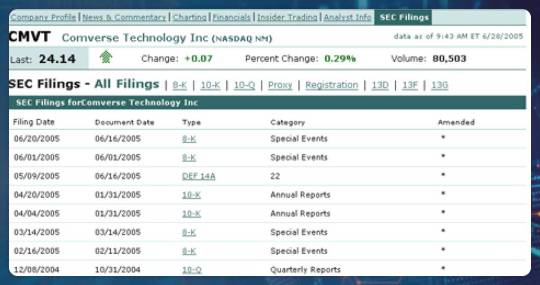

SEC Filing Data

Digging into companies' long and often opaque SEC filings can yield great investment insights. Filings give investors reliable, high-quality information, disclosed under the strict reporting standards set by the US government.

By extracting SEC data, investors can replicate their familiar research process tens of thousands of times across a virtually unlimited number of filings, finding valuable alpha in overlooked places.
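For SEC filings specifically, scraping HTML is often unnecessary: the SEC's EDGAR system publishes a JSON index of each company's filings at `data.sec.gov`. The sketch below swaps in that official endpoint instead of page scraping and uses only the standard library. The contact string is a placeholder you must replace, because the SEC asks automated clients to send a descriptive User-Agent. The recent filings in that JSON are stored as parallel arrays, which the selector zips back together.

```python
import json
import urllib.request

# SEC asks automated clients to identify themselves; use your own name and email.
USER_AGENT = "Example Research research@example.com"  # hypothetical contact


def submissions_url(cik: int) -> str:
    """EDGAR submissions endpoint; the CIK is zero-padded to 10 digits."""
    return f"https://data.sec.gov/submissions/CIK{cik:010d}.json"


def fetch_submissions(cik: int) -> dict:
    """Download a company's filing index as a dictionary."""
    request = urllib.request.Request(submissions_url(cik), headers={"User-Agent": USER_AGENT})
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def recent_filings(submissions: dict, form_type: str) -> list:
    """Select recent filings of one form type (e.g. '10-K') from the parallel arrays."""
    recent = submissions["filings"]["recent"]
    return [
        {"form": form, "filing_date": filed, "accession_number": accession}
        for form, filed, accession in zip(
            recent["form"], recent["filingDate"], recent["accessionNumber"]
        )
        if form == form_type
    ]
```

For example, `recent_filings(fetch_submissions(320193), "10-K")` would list Apple's recent annual reports (320193 is Apple's CIK).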

Sentiment Data

Some time ago, Bloomberg stated that access to the Twitter stream provides one of the largest and most valuable alternative datasets for alpha-seeking investors. Research in social economics has suggested that “collective mood states derived from large-scale Twitter feeds” can predict Dow Jones movements with an impressive accuracy of 87.6%!

By scraping sentiment data online, investors can make accurate and timely decisions in today's fast-moving market.
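A deliberately simple way to turn scraped posts into a daily sentiment series is lexicon scoring. The sketch below is not the method behind the research cited above, which used far more sophisticated mood measures. The word lists are hypothetical and only show the mechanics; real systems use trained models or curated dictionaries.

```python
import re
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, Tuple

# Tiny illustrative lexicon (hypothetical word lists).
POSITIVE = {"beat", "beats", "growth", "strong", "upgrade", "record"}
NEGATIVE = {"miss", "misses", "weak", "downgrade", "lawsuit", "recall"}


def score_text(text: str) -> float:
    """(positive - negative) / matched words, in [-1, 1]; 0.0 if nothing matches."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(word in POSITIVE for word in words)
    neg = sum(word in NEGATIVE for word in words)
    return (pos - neg) / (pos + neg) if pos + neg else 0.0


def daily_sentiment(posts: Iterable[Tuple[date, str]]) -> Dict[date, float]:
    """Average post score per day, in date order."""
    scores = defaultdict(list)
    for day, text in posts:
        scores[day].append(score_text(text))
    return {day: sum(s) / len(s) for day, s in sorted(scores.items())}
```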

Today's alternative data sources span an even wider variety, from the ones discussed here to emailed receipts, geolocation data, and satellite imagery. The possibilities are endless, but sophisticated investors get the best results by combining human analysis, machine learning, and high-quality alternative datasets.

More and better data means the investment decision-making process consistently produces more value. In addition, adopting new alternative-data practices keeps your models in step with the ongoing data-driven transformation of virtually every business sector.

Learn more about web scraping for alternative financial data

At Actowiz Solutions, we have been in the scraping industry for many years. We have assisted in extracting web data for clients ranging from Fortune 100 companies and Government agencies to individuals and early-stage startups. With time, we have gained much expertise and experience in data extraction. For more information, contact Actowiz Solutions now! You can also reach us for your mobile app scraping and web scraping services requirements.


What Are the Advantages of Hiring Web Scraping Services?

How Can Enterprise Web Scraping Services Help Your Business?

Hiring the right web scraping firm saves your organization time and money by quickly delivering valuable, well-organized data. Web scraping service providers use software designed specifically for this purpose to gather the complete information your firm requires.

The data provided by a web scraping company should be an authentic and reliable resource. A trustworthy web scraping service will deliver databases that let you analyze vital statistics and gain important market insights. You can use those insights to adjust your marketing strategy and get the best results from your audience.

Objective Of Hiring Web Scraping Service Providers

Data collected from multiple web platforms is easy to obtain and has a low error rate, which makes it very trustworthy. Actowiz Solutions offers web scraping solutions that you can customize to your specific needs. Large databases are extracted through web scraping for the following purposes:

Enhancing Services on Offer

If your firm offers services, it should adapt them as the market changes. This could involve the following:

Addressing prior issues

Implementing user suggestions to improve or introducing new functions.

You might also create additional products or services that match the latest trends. Numerous companies do this according to customer requests and buying trends.

Pitch The Right Product to The Right Customers

Large advertising budgets are only effective when backed by accurate information. Data lets you identify the right audience by targeting people by age, location, profession, and other factors. Using data will generate leads and lower the cost of your advertising and promotion initiatives! Even if you have a great application or a useful service, your revenue will only grow once you reach your target audience.

Web Scraping Services & Its Challenges

Organizations offering large-scale web scraping services face several problems, described below. The same problems can arise if you build your own solution using freely available programming-language modules or open-source, UI-based tools.

1. Obstacles That May Limit the Use of Data Scraping

Many SMEs need data from various sources that must be updated as and when required. Timing can be crucial, for example when scraping pricing data from competitors' websites or collecting material from recent news articles. Possible steps to speed up the process include:

Establish the best possible configuration for the cloud platform.

Create multithreaded programs that scale as needed and scrape information from several pages simultaneously.

You may notice either a slowdown in your scraping activities or a sharp rise in your cloud prices when you extract data from several websites and many web pages.
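Threads suit scraping because most of the time is spent waiting on the network. Below is a minimal sketch of the multithreaded approach using Python's standard `concurrent.futures` module. `fetch` is assumed to be any function that downloads one URL, such as a wrapper around an HTTP client. `max_workers` controls how many pages are fetched at once, trading speed against load on the target site and on your cloud bill.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, Iterable, Tuple


def scrape_pages(
    urls: Iterable[str],
    fetch: Callable[[str], str],
    max_workers: int = 8,
) -> Tuple[Dict[str, str], Dict[str, Exception]]:
    """Fetch many pages concurrently; return (results, errors) keyed by URL."""
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # keep one bad page from sinking the batch
                errors[url] = exc
    return results, errors
```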

2. Establish an Accurate Cloud Structure

Enterprise-scale web scraping cannot be done on a laptop, so it requires cloud platforms such as Azure, GCP, or AWS. Setting these up can be simple if you follow the right guides, but you may still face the following challenges:

Upkeep of the cloud infrastructure.

Lowering the cost of cloud infrastructure.

Updating your infrastructure plan according to your scraping needs.

Adding cloud services as your organization expands, such as data center networks and processes for data filtering, archiving, and parsing.

Advantages Of Hiring Actowiz for Web Scraping Services

We have focused only on free software and the problems it can cause when implementing web scraping on a large scale. Paid online tools and services can solve the problem, but some obstacles remain. This is where companies that offer web scraping services can be helpful. Actowiz Solutions takes care of all the problems mentioned above. Other functionalities and customizations are also available with us, making web scraping services extremely easy.

Costs Involved in Web Scraping Services

We provide a fully managed, scalable cloud solution with firm service-level agreements and on-demand support. We charge only for what you use, much like a typical cloud service. If you scrape fewer web pages each month or update your data less often, your costs go down.

Hiring an enterprise web scraping service makes it possible to integrate the collected information into your systems. Sometimes something goes wrong, such as a website's user interface changing or scraping of a particular page stopping. When that happens, our tracking and monitoring systems immediately spring into action to identify the actual problem, which our internal team then fixes. Because we know how crucial data is, we support our clients in the best possible way.

Data Scraping Made Simple with Actowiz Solutions

Actowiz Solutions is known for offering wonderful online Web Scraping Services worldwide. Below are the few sectors where we have provided our services:

Hospitality

eCommerce & Retail

Market Research

Recruitment

Real Estate

Automobiles

Financial Services

A better understanding of the market and expertise in research on various sorts of websites enable us to handle scraping projects for different web pages, whether simple or complicated.

Conclusion

Many organizations offer enterprise web scraping services online these days, and many talk about extracting data at scale. However, web scraping involves much more than data extraction. You have to deal with website updates, blocking, legal issues, new technologies, and other challenges that require skilled professionals. Integrating new applications helps only up to a certain point.

Our team creates custom solutions for every organization that wants to use data to grow and stay ahead of its competitors. In today's world, competitors may eventually scoop up the same information, so you need to make sure you get it first. Visit our website to learn more about our web scraping and mobile app scraping services.


Top Web Scraping and Data Crawling Companies in the USA 2022-23

If you want to scrape data online, many companies offer end-to-end data crawling and web scraping services. Web crawling is now widely used in many areas. If you need highly reliable, high-quality data, many well-known web scraping companies can help you get data from the World Wide Web. Scraping data online and aggregating it in the planned structure can be hard, and how well it is done depends on a company's experience and the strength of its team. So, if you want to accelerate your business with quality data and move toward success, we have listed some top web scraping and web crawling companies that have set benchmarks in the web scraping industry. These data scraping companies can competently guide you toward success.

1. Actowiz Solutions

Actowiz Solutions is the top web scraping services provider in the USA, UK, UAE, Germany, China, Australia, and India, solving complex scraping problems by leveraging many kinds of web data. The company provides a complete range of dedicated, well-managed web scraping and data crawling platforms for the development teams of enterprises and start-ups.

Actowiz Solutions delivers meaningful, highly accurate data on time and within budget. Besides extracting data from the web, Actowiz Solutions runs manual and automated QA through a dedicated QA team. This verifies that the data is accurate and ensures complete client satisfaction!

2. PromptCloud

Since 2009, PromptCloud has been a pioneer and worldwide leader in Data-as-a-Service solutions. Its core work is data scraping with cloud computing technology, focused on helping enterprises obtain large-scale, well-structured data from the web.

The company has been scraping data since 2009 and has a growing clientele across major geographies and industries. PromptCloud handles the entire process end to end, from building and maintaining crawlers to cleaning and normalizing the data and maintaining its quality.

3. ScrapeHero

ScrapeHero has a reputation as a well-organized, enterprise-grade data scraping service company. The company entered the scraping industry with a clear vision, and many large companies now rely on ScrapeHero to turn billions of web pages into usable data. Its Data-as-a-Service offering provides high-quality data for better business results and helps in making sound business decisions. Its data scraping platform integrates with Google Cloud Storage, Amazon S3, Dropbox, FTP, Microsoft Azure, and more.

4. Grepsr

Grepsr is a professionally managed platform for offloading your regular data extraction work. It sets up, manages, and monitors all the crawlers so that you can sleep well! Grepsr offers outstanding data extraction services and has exceeded clients' expectations by quickly delivering lists that are ideal for lead generation. To meet the growing demand for quality data, Grepsr has a dedicated team of professionals working hard to provide the best service to all its customers.

5. Datahub

For more than a decade, Datahub has been building applications and tools for data. Drawing on this expertise, it has created and used quality data, bringing major improvements in simplicity, speed, and reliability. Datahub offers various solutions for publishing and deploying your data with power and simplicity. It is the quickest way for organizations, individuals, and teams to publish, organize, and share data.

6. Octoparse

Octoparse provides hassle-free data scraping services and helps businesses stay focused on their core work by taking care of their web scraping requirements and infrastructure. As a professional data scraping company, Octoparse helps businesses stay competitive by continuously feeding them scraped data, which supports proactive, knowledge-based decisions. So, if you want to hire a data scraping service provider, Octoparse is among the best options, with years of experience in web data scraping services.

7. Apify

Apify is known as a one-stop solution for data extraction, web scraping, and Robotic Process Automation (RPA) needs. The web is the biggest source of information ever created. Apify is a software platform that aims to help forward-looking companies by giving them access to web data in various forms through an API. It also lets users find datasets and replace them with better APIs, scale processes, automate tedious jobs, and speed up workflows with flexible automation software.

8. Import.io

Import.io is a data scraping (website data import) service from the company of the same name, headquartered in Saratoga. In February 2019, Import.io acquired Connotate, another data scraping company, which is now part of Import.io. When it comes to extracting data from large lists of URLs, Import.io is precise and highly effective. Compared with open-source competitors, however, some additional web scraping features would be welcome.

9. Datahut

Datahut is a data scraping service that helps companies get data from competitor websites without any coding. Datahut works on a ‘Data-as-a-Service’ model, delivering data to users in the format they need. It has been in the web scraping business for the past 11 years and has experience with large-scale data scraping projects, which helps it navigate the challenges of data extraction.

10. SunTec India

SunTec India provides high-quality scraping services for businesses across the globe. With a team of expert, experienced data scraping specialists, the company is well-versed in the latest web scraping methods. As an ISO-certified company, SunTec India promises best-in-class services and data security. So, SunTec India can scrape or extract the relevant data if you want to build service directories, create a marketing database, or monitor competitor prices. It can also compile contact lists for telemarketing or analyze your web presence.

Conclusions

We have just looked at a list of top web scraping and data crawling companies in the USA for 2023. It will help you get accurate, high-quality data for your business. So, if you are unsure which web scraping company to choose, this blog should clear up your doubts. All the companies listed here have built their businesses over many years and are well-equipped with the latest technology.


Shopee Product Data Scraping Services - Scrape Shopee Product Data

Scrape Shopee web data. Extract and parse all product and category information: seller details, merchant ID, title, URL, image URL, category tree, brand, product overview, description, sizes, colors, style, availability, arrival time, initial price, final price, models, images, features, ratings, and reviews.


How to Do Influencer Marketing with Web Scraping?

Influencer marketing is now a familiar concept to everybody. In the US retail business, 67% of retailers use influencer marketing! It is a great way to raise brand awareness through word-of-mouth advertising. The tricky part, however, is finding the right channel or person to promote your brand.

If you just use Google to search for the most prominent social media influencers, you will hit a wall! Many businesses don't have the budget for expensive tools, including data integration tools. Instead, it helps to start with your own audience and then expand from those connections. For instance, collecting commenters' data and contacting them with your news is an excellent way to find influencers. The best way to do that is to use web scraping to extract influencer data from your audience. Many low-cost options are available for collecting this data, and one of them is a web data scraper.

Web Scraping – The Concept

Web scraping is a method of automating the data extraction process. It comprises parsing the website and collecting data snippets per your needs. We will show how to utilize a web data scraping tool and make the data accessible to do digital marketing. No programming skills are needed to do web scraping. The tool we have used is the Actowiz web scraper, and we will show why it gives incredible value to marketing professionals.

Find “Evangelists”

According to Joshua, the comment section is a place you can leverage to extend your marketing strategy. We don’t mean simply replying to comments and patting commenters on the back. When commenters’ user names are clickable, you can connect with them by scraping their profile data. Whenever we publish a new piece of content, we contact these people. That’s how we convert commenters into evangelists. You can get more creative by extending this technique to build a pool of evangelists for future marketing campaigns.
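When commenters’ user names are clickable links, their profile URLs can be pulled straight from the comment section. A minimal sketch, assuming (hypothetically) that each commenter name is an `<a>` element with the class `comment-author`; real sites use their own markup, so the class name would need adjusting.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CommenterLinks(HTMLParser):
    """Collect (display name, absolute profile URL) for each commenter link."""

    def __init__(self, base_url, link_class="comment-author"):
        super().__init__()
        self.base_url = base_url
        self.link_class = link_class   # hypothetical class name; adjust per site
        self.links = []
        self._href = None              # profile URL of the link being read, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        classes = (attr.get("class") or "").split()
        if tag == "a" and self.link_class in classes and attr.get("href"):
            # Resolve relative links such as "/u/jane" against the page URL.
            self._href = urljoin(self.base_url, attr["href"])
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def commenter_links(html, base_url):
    parser = CommenterLinks(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


comments = ('<a class="comment-author" href="/u/jane">Jane</a><p>Great post!</p>'
            '<a href="/about">About</a>'
            '<a class="comment-author" href="https://example.com/u/raj">Raj</a>')
print(commenter_links(comments, "https://example.com/blog/post-1"))
# [('Jane', 'https://example.com/u/jane'), ('Raj', 'https://example.com/u/raj')]
```

Ordinary links (like “About”) are skipped because they lack the commenter class, so the output is a clean list to feed into profile scraping.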

Twitter Evangelists

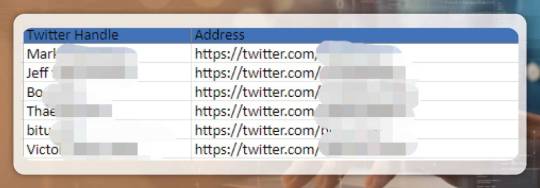

Sending messages indiscriminately and begging for tweets doesn’t work. A better start is to use your existing audience pool. The idea is to:

Collect Twitter mentions and new followers in a spreadsheet with IFTTT.com.

Extract the follower counts, descriptions, and contact data of these people.

Contact them (make sure you write a well-pitched message to each person).

To begin, connect your Google Drive and Twitter accounts to a couple of IFTTT recipes. In your IFTTT account, set up the recipes “Track new followers in a spreadsheet” and “Get mentions of your Twitter username in a spreadsheet.”

The recipes do what their names say: they collect the user IDs and profile links of people who followed or mentioned you and store the data in spreadsheets.

We want to find influencers who might drive traffic to your website. To do that, we need to extract the following:

Bios

Total followers

Twitter ID

Websites

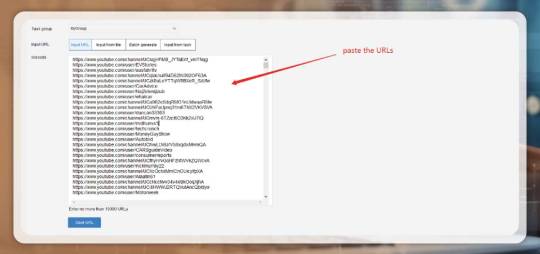

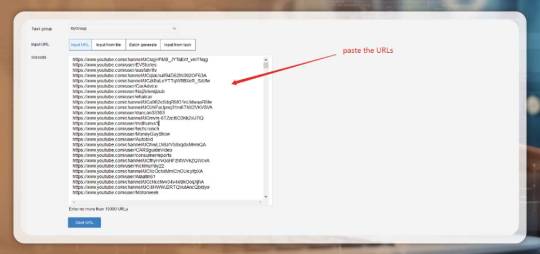

First, create a new task by importing the collected URLs.

Second, when you import a URL list, Actowiz creates a loop that goes through each URL. Select the elements you want by clicking them in the built-in browser. Click “followers” and, in the action tip, select “extract text of chosen elements.” The scraped data will appear in a data field.

Third, repeat these steps to extract their websites, bios, and Twitter IDs.

Finally, run the task.
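The steps above (import a URL list, loop over it, extract a few fields per page, run) can be mirrored in a short script. This is a stand-in sketch, not the Actowiz tool’s implementation: `fetch` is any function that returns a page’s HTML (injected so the loop can be tested offline), and the class names in `FIELDS` are hypothetical placeholders for the target site’s real markup.

```python
from html.parser import HTMLParser
from typing import Callable, Dict, Iterable, List

# Hypothetical mapping: output column -> CSS class on the profile page.
FIELDS = {"twitter_id": "handle", "followers": "followers", "bio": "bio", "website": "website"}

VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class FieldParser(HTMLParser):
    """Record the text of the first element carrying each wanted class."""

    def __init__(self, class_to_field: Dict[str, str]) -> None:
        super().__init__()
        self.class_to_field = class_to_field
        self.values: Dict[str, str] = {}
        self._field = None    # field currently being read, if any
        self._depth = 0
        self._buf: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._field:
            self._depth += 1  # nested tag inside the element being read
            return
        for css in (dict(attrs).get("class") or "").split():
            field = self.class_to_field.get(css)
            if field and field not in self.values:
                self._field, self._depth, self._buf = field, 1, []
                return

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._field:
            return
        self._depth -= 1
        if self._depth == 0:
            self.values[self._field] = "".join(self._buf).strip()
            self._field = None

    def handle_data(self, data):
        if self._field:
            self._buf.append(data)


def scrape_profiles(urls: Iterable[str], fetch: Callable[[str], str],
                    fields: Dict[str, str] = FIELDS) -> List[Dict[str, str]]:
    """Loop over the imported URL list and return one row per page."""
    class_to_field = {css: name for name, css in fields.items()}
    rows = []
    for url in urls:
        try:
            html = fetch(url)
        except OSError as exc:   # network failure: record it and move on
            rows.append({"url": url, "error": str(exc)})
            continue
        parser = FieldParser(class_to_field)
        parser.feed(html)
        parser.close()
        row = {"url": url}
        row.update({name: parser.values.get(name, "") for name in fields})
        rows.append(row)
    return rows


# Offline demo with a hypothetical profile page.
pages = {"https://twitter.example/ana": '<div><span class="handle">@ana</span>'
         '<span class="followers">12,400</span><p class="bio">Runner</p>'
         '<a class="website" href="https://ana.dev">ana.dev</a></div>'}
print(scrape_profiles(pages, pages.__getitem__))
```

Many modern sites, Twitter included, render profile details with JavaScript, so a plain HTTP fetch may not contain these fields; a tool with a built-in browser, like the one described above, sidesteps that.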

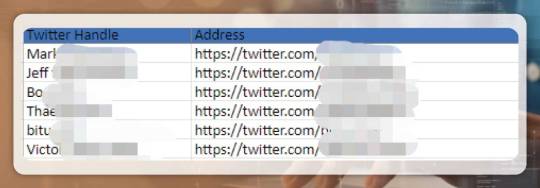

You should end up with a sheet like this one, listing every follower or person who mentioned you, along with their data.

Then export the data to a spreadsheet and filter out people who are not a good fit based on their background and follower counts.
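Once exported, the filtering step can also be scripted. A minimal sketch, assuming the export is a CSV with columns named `twitter_id`, `followers`, and `bio` (hypothetical names); the 5,000-follower threshold and the keyword list are arbitrary examples to tune for your campaign.

```python
import csv
import io


def parse_count(text):
    """Turn '12,400' into 12400; blank or non-numeric values count as 0."""
    digits = (text or "").replace(",", "").strip()
    return int(digits) if digits.isdigit() else 0


def shortlist(csv_text, min_followers=5000, keywords=("marketing", "ecommerce")):
    """Return Twitter IDs with enough followers and a bio mentioning a keyword."""
    keep = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        bio = (row.get("bio") or "").lower()
        followers = parse_count(row.get("followers"))
        if followers >= min_followers and any(word in bio for word in keywords):
            keep.append(row["twitter_id"])
    return keep


export = (
    "twitter_id,followers,bio\n"
    '@ana,"12,400",Ecommerce marketing lead\n'
    "@bo,900,Marketing student\n"
    '@cy,"50,000",Travel photos\n'
)
print(shortlist(export))  # ['@ana']
```

For a real export file, open it with `open(path, newline="")` and pass the file object to `csv.DictReader` instead of a string.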

YouTube Influencers

YouTube influencer marketing is the new black. It is a great way to increase conversions and brand awareness through word-of-mouth, which is why influencers keep growing in number. Finding the right influencers is critical to any successful marketing campaign.

“61% of marketers agree that it’s hard to find the best influencers for campaigns, suggesting that the problem is far from solved.”

Here are the metrics you should pay attention to:

Content quality: Do they offer value to their audience? Do their videos get views?

Audience: Who is their audience? Does it match your target market?

Engagement: What is their engagement rate in terms of likes, followers, and comments?
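There is no single standard formula for engagement rate. One common version, used here as an assumption, is average interactions per video (likes plus comments) divided by the subscriber or follower count, times 100. A minimal sketch with made-up numbers:

```python
def engagement_rate(likes, comments, followers):
    """Average (likes + comments) per post, as a percentage of followers."""
    if followers <= 0 or not likes or len(likes) != len(comments):
        raise ValueError("need followers > 0 and equal-length, non-empty lists")
    per_post = [lk + cm for lk, cm in zip(likes, comments)]
    return 100 * sum(per_post) / len(per_post) / followers


# Three recent videos from a hypothetical channel with 20,000 subscribers.
print(engagement_rate([900, 1100, 1000], [100, 50, 150], 20_000))  # 5.5
```

Using the same formula for every candidate matters more than which formula you pick, since the number is only useful for comparing channels with each other.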

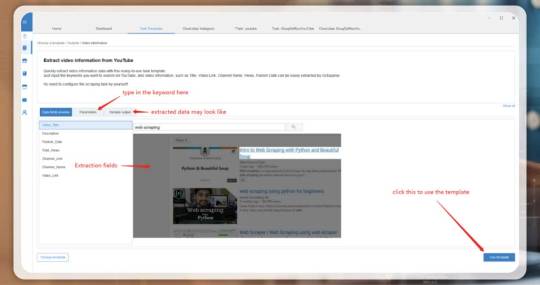

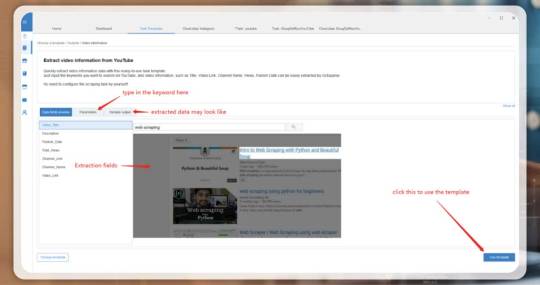

The Actowiz data scraping templates are pre-built modules. All you need to do is enter keywords or a target website, so you can scrape data without any configuration.

To use one, select “Social Media” in the category bar, where you will find a “YouTube” icon.

Then click “use template” and choose how you want to run the task (locally, in the cloud, or on a schedule).

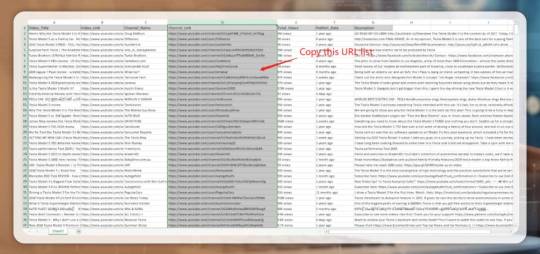

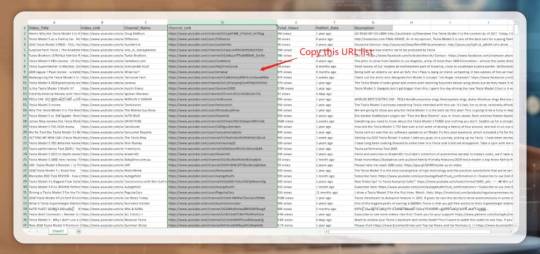

Alternatively, to configure the task yourself, first open the Actowiz data scraper and create a task by entering a URL list in Advanced Mode. This opens a configuration window where you can parse the web page and select elements.

Second, select the elements. Here, select a YouTube channel’s name, then follow the guide tip and choose “scrape the text of selected elements.”

Third, repeat the steps above to scrape subscriber counts.

Finally, run the task. Results arrive in under a minute. You can export the data as CSV, text, or Excel; here, we used Excel.
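YouTube usually displays abbreviated counts such as “1.2M subscribers,” which sort as text rather than numbers in a spreadsheet. A minimal sketch for normalizing them before export, assuming the scraped text follows that number-plus-K/M/B pattern; the channel names are made up.

```python
import csv
import io
import re
from decimal import Decimal

# Abbreviation suffixes as shown in counts like "1.2M subscribers".
MULTIPLIER = {"": 1, "K": 1_000, "M": 1_000_000, "B": 1_000_000_000}
COUNT = re.compile(r"(\d[\d.,]*)([KMB]?)")


def parse_subscribers(text):
    """'1.2M subscribers' -> 1200000; '12,345 subscribers' -> 12345."""
    match = COUNT.search(text)
    if not match:
        raise ValueError(f"no subscriber count in {text!r}")
    number = Decimal(match.group(1).replace(",", ""))   # Decimal avoids float rounding
    return int(number * MULTIPLIER[match.group(2)])


def to_csv(rows):
    """Write (channel name, raw subscriber text) pairs as CSV with numeric counts."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["channel", "subscribers"])
    for channel, raw in rows:
        writer.writerow([channel, parse_subscribers(raw)])
    return out.getvalue()


scraped = [("TechLab", "1.2M subscribers"), ("DIY Daily", "845K subscribers"),
           ("Tiny Chan", "12,345 subscribers")]
print(to_csv(scraped))
```

The resulting CSV opens directly in Excel with a numeric subscribers column that sorts correctly.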

Web scraping is extremely powerful, but it can have legal consequences if you don’t use it wisely. We think the best way to improve a skill is through practice, so we encourage you to replicate these tricks and adapt automated data scraping to catch the big fish in your pond.

For more information about scraping influencer data, contact Actowiz Solutions now! You can also reach us for all your mobile app scraping and web scraping service needs.

Lazada Product Data Scraping Services - Scrape Lazada Product Data

Scrape Lazada web data. Extract and parse all product and category information: seller details, merchant ID, title, URL, image URL, category tree, brand, product overview, description, sizes, colors, style, availability, arrival time, initial price, final price, models, images, features, ratings, and reviews.

Black Friday 2022 – A Detailed Analysis of Different Web Data Scraping Patterns

An intense Black Friday season has just ended, and we have some fantastic news to share!

Our team used Actowiz Solutions products to run a detailed analysis comparing market trends with the data requests we received during this period, and the results are impressive.

There was a strong association between web data requests and market trends, and our performance was off the charts! We can confirm that “Black Friday Creep” is real.

Read on to see what we uncovered during the 2022 Black Friday season.

Backstory

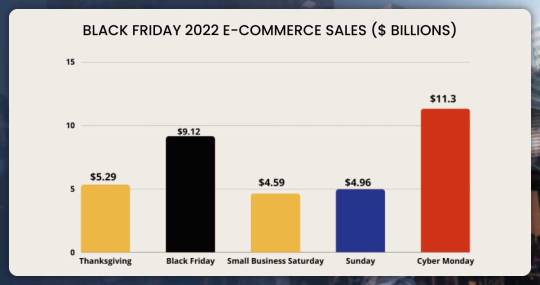

Black Friday and Cyber Monday may look like just a two-day shopping frenzy after Thanksgiving, but there is much more to them than that.

Although Black Friday and Thanksgiving Day were historically the best-selling days for the e-commerce industry, Cyber Monday has recently become the most important day for e-commerce businesses.

According to Adobe Analytics, Cyber Monday 2022 accounted for $11.3 billion in total online spending.

A newer phenomenon, “Black Friday Creep,” has turned the Black Friday market upside down, pushing retailers to offer more deals across the whole week and for longer.

That is precisely what sparked our curiosity to dig deeper into our data requests.

Increased Demand for E-Commerce Web Data

Let us show you the patterns we found during the Black Friday season.

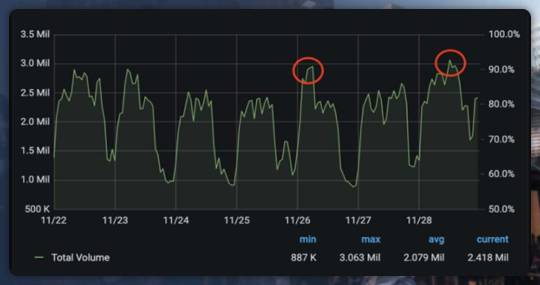

We tracked and analyzed the requests Actowiz Solutions received for one well-known e-commerce website, starting on Tuesday (11/22) and continuing through Black Friday (11/25) and Cyber Monday (11/28).

The chart shows the hourly volume of traffic we handled while users crawled this site; each request typically lets a customer scrape data from one web page.
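An hourly chart like this can be reproduced from raw request logs by truncating each timestamp to its hour and counting. A minimal sketch, assuming the logs are ISO-8601 timestamps in a single time zone; the sample entries are invented, not Actowiz’s data.

```python
from collections import Counter
from datetime import datetime


def requests_per_hour(timestamps):
    """Bucket ISO-8601 request timestamps by clock hour and count them."""
    counts = Counter()
    for ts in timestamps:
        hour = datetime.fromisoformat(ts).replace(minute=0, second=0, microsecond=0)
        counts[hour] += 1
    return dict(sorted(counts.items()))


# Made-up log lines, not Actowiz data.
log = ["2022-11-25T09:05:00", "2022-11-25T09:59:59", "2022-11-25T10:00:00"]
for hour, n in requests_per_hour(log).items():
    print(hour.strftime("%m/%d %H:00"), n)
```

The sorted dictionary maps directly onto the x-axis (hours) and y-axis (request counts) of a per-hour chart.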

Web Extraction Patterns of E-commerce Data

Cyber Monday beat Black Friday in request volume, with 61 million requests handled for this website alone, compared to 46.7 million on Black Friday.

We noticed a sharp increase in data scraping demand in the days leading up to Thanksgiving, when early deals were released.

This was followed by a smaller two-day dip before demand picked up again on Black Friday.

That was followed by another small dip, finishing with a peak on Cyber Monday, the biggest day of 2022.

Looking at the traffic recorded by our tool, we saw a 7.88% year-over-year increase on Black Friday.

Comparing the whole period from Tuesday through the end of Cyber Monday, we saw a 6.34% year-over-year increase.
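The percentages above are relative changes, (new - old) / old × 100. Using only the request counts reported in this post, Cyber Monday’s 61 million requests work out to about 30.6% more than Black Friday’s 46.7 million. The prior-year counts behind the 7.88% and 6.34% figures are not given here, so those are not recomputed.

```python
def percent_change(old, new):
    """Relative change from `old` to `new`, in percent."""
    if old == 0:
        raise ValueError("old value must be non-zero")
    return (new - old) / old * 100


# Request volumes reported above, in millions.
black_friday, cyber_monday = 46.7, 61.0
print(f"{percent_change(black_friday, cyber_monday):.1f}%")  # 30.6%
```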

Performance

We are happy to report over 99% scraping performance throughout the week. Compared to 2021, we delivered a 3.34% improvement on Black Friday and a 3.95% improvement across the whole week.

Conclusion

Our study showed a clear association between reported sales volumes and data request volumes during the Black Friday season.

Cyber Monday surpassed Black Friday in the volume of data requests Actowiz Solutions received.

Performance averaged 99% across the whole week.

Black Friday Creep brought higher data demand in the week leading up to Black Friday and Cyber Monday.

Interest in e-commerce data is greater than ever, and the results prove that we can deliver even in the busiest seasons. Even with higher request volumes, we provided excellent performance to our customers.

These results push us to keep finding new ways to improve our data extraction process and offer the best-quality data possible.

Actowiz Solutions can help you scrape e-commerce product data from Black Friday and Cyber Monday sales.

Actowiz Solutions is the leader in web data scraping, with services that offer data on 3 billion products every month, so you can scrape data from any e-commerce website.

We know the significance of data for e-commerce and always ensure that results are up-to-date and accurate.

Contact Actowiz Solutions with your e-commerce data scraping needs, and our experts will do the rest!

You can also reach us for your mobile app scraping and web scraping service needs.

Google Shopping Product Data Scraping Services - Scrape Google Shopping Product Data

Scrape Google Shopping web data. Extract and parse all product and category information: seller details, merchant ID, title, URL, image URL, category tree, brand, product overview, description, sizes, colors, style, availability, arrival time, initial price, final price, models, images, features, ratings, and reviews.

Competitor Price Monitoring Services - Scrape Dynamic Pricing for Brands

Actowiz Solutions provides an extensive range of competitor price monitoring services at affordable rates. Our capable teams use specialized processes to help you gain a competitive edge over leading competitors.

Competitor Price Monitoring and Tracking

Staying competitive in a highly competitive market with transparent pricing is a challenging job for retailers. Intense competition squeezes margins, and frequent price changes make reliable manual analysis impossible.
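To illustrate what automated price monitoring does with scraped data, here is a minimal sketch that compares your price for each product with the lowest competitor price and flags products where you are being undercut. The SKUs, shop names, prices, and the 2% tolerance are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class PriceAlert:
    sku: str
    our_price: float
    competitor: str
    competitor_price: float

    @property
    def gap_pct(self) -> float:
        """How far above the cheapest competitor we are, in percent."""
        return (self.our_price - self.competitor_price) / self.competitor_price * 100


def find_undercuts(our_prices: Dict[str, float],
                   competitor_prices: Dict[str, Dict[str, float]],
                   tolerance_pct: float = 2.0) -> List[PriceAlert]:
    """Flag SKUs priced more than `tolerance_pct` percent above the cheapest competitor."""
    alerts = []
    for sku, ours in our_prices.items():
        offers = competitor_prices.get(sku)
        if not offers:
            continue                                   # nobody else sells it
        seller, cheapest = min(offers.items(), key=lambda offer: offer[1])
        if cheapest > 0 and (ours - cheapest) / cheapest * 100 > tolerance_pct:
            alerts.append(PriceAlert(sku, ours, seller, cheapest))
    return alerts


ours = {"SHOE-A": 100.0, "SHOE-B": 80.0, "SHOE-C": 50.0}
scraped = {"SHOE-A": {"shop1": 92.0, "shop2": 99.0}, "SHOE-B": {"shop1": 79.0}}
for alert in find_undercuts(ours, scraped):
    print(alert.sku, alert.competitor, f"{alert.gap_pct:.1f}% above")  # SHOE-A shop1 8.7% above
```

The tolerance keeps tiny differences (SHOE-B is only about 1.3% above) from generating noise; where it sits is a business decision.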

Getting reliable competitive intelligence data can be a massive task, given rising IT maintenance costs and unreliable vendors whose data contains too many errors to base decisions on.

Actowiz Solutions offers high-quality competitor data by combining algorithms, streamlined processes, and manual work by data-cleaning and QA operators, supported by semi-automated tools. Actowiz Solutions provides much more than just data!

What are the Challenges and Benefits of Amazon Data Scraping?

It has become increasingly easy for people to buy the things they want online. The same has happened for sellers, who can set up online stores on Flipkart, eBay, Walmart, Alibaba, etc. However, to get users' attention and convert them into customers, e-commerce sellers must use data analytics to optimize their offerings.

As of 2022, Amazon is the biggest e-commerce company in the U.S., with 38% of total e-commerce retail sales. Many shoppers start their online searches on the Amazon website or app rather than on search engines like Yahoo or Google. This makes the platform an excellent data resource, allowing companies to make well-informed decisions and understand their customers well.

What is Amazon Scraping?

Amazon is the best place to get valuable, relevant data about sellers, reviews, products, news, special offers, ratings, etc. Gathering data from Amazon benefits everybody: buyers, sellers, and suppliers.

Rather than scraping hundreds of websites, gathering data from Amazon alone can cut down the expensive process of scraping e-commerce data.

Let's see what types of data you can scrape:

Competitors' product listings

Customer profiles

Pricing in local and worldwide markets

Product ratings

Reviews of your own and competitors' products

Why Can Scraping Amazon Data Be Challenging?

Scraping Amazon data on your own comes with problems, whatever method you choose. The worst thing about scraping it yourself is that you might not even anticipate the issues and may run into unexpected responses and network errors.

Here are a few examples of common problems you may face while scraping Amazon data on your own.

IP Blocking, Bot Detection, and CAPTCHAs

Amazon can easily tell whether information is being collected manually or by a bot scraper. It detects this by tracking the behavior of the browser agent.

For instance, when the website detects a scraper, or a user makes 400+ requests for similar pages within a particular time, it takes action against whoever is gathering the data, using IP bans and CAPTCHAs to block bots. If an IP address keeps requesting pages without solving the CAPTCHA, it will be banned from Amazon or blacklisted.

To overcome these obstacles, we at Actowiz Solutions use various methods and work hard to make our crawlers behave more like humans:

Change scraper headers so that requests look like they come from a browser.

Rotate IP addresses regularly using proxy servers.

Remove query parameters from URLs to eliminate identifiers that link requests together.

Send page requests at random intervals.

Change the User-Agent in crawler headers to avoid Amazon's general blocking of crawlers.
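Here is a minimal sketch of those techniques using only Python's standard library: browser-like headers with a rotating User-Agent, proxy rotation, query-string stripping, and randomized delays. It is an illustration, not Actowiz's implementation; the User-Agent strings and proxy addresses are placeholders that must be replaced with real values.

```python
import itertools
import random
import time
import urllib.request
from urllib.parse import urlsplit, urlunsplit

# Placeholder values: substitute real browser User-Agent strings and proxies you control.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_0) ExampleBrowser/1.0",
]
PROXIES = itertools.cycle(["http://proxy-1.example:8080", "http://proxy-2.example:8080"])


def strip_query(url):
    """Drop the query string and fragment so requests can't be linked by tracking IDs."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def build_request(url):
    """Create a request with browser-like headers and a randomly chosen User-Agent."""
    headers = {
        "User-Agent": random.choice(USER_AGENTS),
        "Accept": "text/html,application/xhtml+xml",
        "Accept-Language": "en-US,en;q=0.9",
    }
    return urllib.request.Request(strip_query(url), headers=headers)


def polite_fetch(url, min_delay=2.0, max_delay=6.0):
    """Wait a random interval, then fetch through the next proxy in the rotation."""
    time.sleep(random.uniform(min_delay, max_delay))
    proxy = next(PROXIES)
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy, "https": proxy}))
    with opener.open(build_request(url), timeout=30) as response:
        return response.read()


req = build_request("https://www.example.com/dp/B000TEST?ref=abc&tag=x#reviews")
print(req.full_url)                    # https://www.example.com/dp/B000TEST
print(req.get_header("User-agent"))    # one of USER_AGENTS
```

`urllib.request.Request` stores header names with `str.capitalize()`, which is why the lookup uses `"User-agent"`. Stripping every query parameter can break pages that genuinely need one, so in practice you may want to keep a small allow-list.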

Changing the Structure of Product Pages

While collecting product description data from Amazon, you may have encountered many exceptions and response errors. The reason is that most scrapers are set up for one particular page structure: they scrape its HTML and collect the relevant data. If a page's structure changes, the scraper can fail because it was not designed to handle exceptions.

Amazon's site uses templates to display product data, and pages have different layouts, HTML elements, and properties, with each template emphasizing the main features and attributes of a certain type of product. The product group or category of a newly added ASIN affects which template Amazon uses for its listing.

So, to handle these inconsistencies, we at Actowiz Solutions write our code to deal with exceptions. This ensures our code does not fail at the first network or timeout error.
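A minimal sketch of this exception-tolerant style: transient network errors are retried with exponential backoff instead of ending the run, and a field is looked up under several alternative element IDs, standing in for different page templates. The IDs `price-main` and `buybox-price` are hypothetical, not Amazon's actual markup, and `fetch` is any function returning a page's HTML.

```python
import time
from html.parser import HTMLParser
from typing import Callable, Dict, Optional, Sequence

VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}

# Hypothetical IDs of the price element on two different page templates.
PRICE_IDS = ("price-main", "buybox-price")


class TextById(HTMLParser):
    """Map every element id to the text it contains, tolerating unclosed tags."""

    def __init__(self) -> None:
        super().__init__()
        self.texts: Dict[str, str] = {}
        self._stack = []            # [tag, id, text buffer] for each open element

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            self._stack.append([tag, dict(attrs).get("id"), []])

    def handle_endtag(self, tag):
        if not any(entry[0] == tag for entry in self._stack):
            return                  # stray closing tag: ignore it
        while self._stack:
            open_tag, elem_id, buf = self._stack.pop()
            text = "".join(buf)
            if elem_id:
                self.texts.setdefault(elem_id, text.strip())
            if self._stack:         # a parent's text includes its children's text
                self._stack[-1][2].append(text)
            if open_tag == tag:
                break

    def handle_data(self, data):
        if self._stack:
            self._stack[-1][2].append(data)


def first_match(texts: Dict[str, str], candidate_ids: Sequence[str]) -> Optional[str]:
    """Try each template's id in turn; return None if no layout matched."""
    for elem_id in candidate_ids:
        if texts.get(elem_id):
            return texts[elem_id]
    return None


def fetch_with_retries(fetch: Callable[[str], str], url: str,
                       attempts: int = 4, base_delay: float = 1.0) -> str:
    """Retry network errors (OSError covers URLError and timeouts) with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fetch(url)
        except OSError:
            if attempt == attempts - 1:
                raise               # out of retries: surface the last error
            time.sleep(base_delay * 2 ** attempt)   # 1 s, 2 s, 4 s, ...
    raise AssertionError("unreachable")


for page in ('<div><span id="price-main">$19.99</span></div>',
             '<div><p>Price: <span id="buybox-price">$21.50</span></p></div>'):
    parser = TextById()
    parser.feed(page)
    parser.close()
    print(first_match(parser.texts, PRICE_IDS))   # $19.99, then $21.50
```

Returning `None` when no template matches, rather than raising, lets the run continue and makes unmatched layouts easy to count and fix later.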

Product Variations and Geographic Delivery Areas

A single product can have several variations, letting customers explore and choose what they want. For instance, sweaters come in various sizes, and lipstick comes in multiple shades.

Product variations follow the template patterns described above and are presented on the website in various ways. Rather than being reported for a single variant, reviews and ratings are usually rolled up across all available variants.

When we gather customer feedback data on Amazon, we report total reviews. To avoid geolocation problems, we use IP addresses from the countries whose Amazon data we collect.
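To show what a roll-up across variants means numerically, here is a count-weighted average of per-variant ratings. This is an assumption chosen for illustration, not Amazon's own star-rating formula, and the variant data is made up.

```python
from typing import Dict, Tuple


def rolled_up_rating(variants: Dict[str, Tuple[float, int]]) -> Tuple[float, int]:
    """Combine {variant: (average stars, number of ratings)} into one average and total."""
    total = sum(count for _, count in variants.values())
    if total == 0:
        return 0.0, 0
    weighted = sum(stars * count for stars, count in variants.values())
    return weighted / total, total


# Hypothetical sweater sizes with their own ratings.
variants = {"S": (4.0, 10), "M": (4.5, 30), "L": (3.0, 10)}
print(rolled_up_rating(variants))  # (4.1, 50)
```

The example shows why variant-level data matters: a poorly rated size (L at 3.0) is hidden inside a respectable 4.1 overall.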

Underperforming Scrapers

It's tough to build a web scraper yourself that will run for hours and collect hundreds of thousands of records. Amazon's algorithms make extraction harder than on most websites, because the site is built to minimize crawling.

Amazon also stores a huge amount of data. If you want to gather content for your company's needs, be aware that extracting large volumes can be difficult, especially on your own. It's an ongoing, time-consuming activity, so building a good, efficient web scraper will take time and effort!

The fastest, most dependable approach is to leave Amazon data collection to professionals who can clear the hurdles of data scraping and systematically deliver the data you need in the required format.

How Can You Benefit from Scraping Amazon Data?

Amazon collects essential information in one place: reviews, ratings, products, news, exclusive offers, etc. Extracting data from Amazon therefore helps you avoid the time-consuming process of scraping many e-commerce websites. Here are the key benefits of including automated web scraping methods in your work:

Price Comparison

Data scraping lets you regularly retrieve competitors' pricing data from Amazon pages. If you don't track price changes in the marketplace, especially during peak seasons, you could suffer considerable losses in online sales volume and fall behind competitors. Price analysis helps you monitor pricing trends, analyze competitors, plan promotions, and determine the best pricing strategy to stay in the market. A well-organized pricing strategy raises profits and brings in more leads.
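A minimal sketch of price tracking between two scraping runs: compare snapshots of product ID to price and report what moved, largest changes first. The product IDs and prices are invented placeholders.

```python
from typing import Dict, List, Tuple


def price_changes(previous: Dict[str, float], current: Dict[str, float],
                  min_change_pct: float = 0.0) -> List[Tuple[str, float, float, float]]:
    """Return (product, old, new, % change) for products whose price moved."""
    changes = []
    for product, new in current.items():
        old = previous.get(product)
        if old is None or old == 0 or new == old:
            continue                      # new listing, bad data, or unchanged
        pct = (new - old) / old * 100
        if abs(pct) >= min_change_pct:
            changes.append((product, old, new, round(pct, 2)))
    # Biggest movers first.
    return sorted(changes, key=lambda change: abs(change[3]), reverse=True)


monday = {"B01": 25.0, "B02": 40.0, "B03": 12.0}
tuesday = {"B01": 20.0, "B02": 40.0, "B03": 12.6, "B04": 9.0}
print(price_changes(monday, tuesday))
# [('B01', 25.0, 20.0, -20.0), ('B03', 12.0, 12.6, 5.0)]
```

Raising `min_change_pct` turns the same function into an alert filter for peak seasons, when small fluctuations are constant.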

Identify Your Target Group

Each seller has a particular customer base that buys certain products, and knowing your target group makes it easier to make sound choices about which in-demand products to sell. Researching customer sentiment and preferences on Amazon can help you define your customer base, study their purchasing habits, and plan product sets for customers to increase sales.

Improve Product Profiles

Entrepreneurs must keep an eye on how their products sell in a marketplace. For Amazon sellers, the best way to achieve higher sales is to get products to the top of relevant searches, which requires creating a product profile that matches what shoppers search for. To study sentiment and analyze competitors, you can collect product data such as prices, ratings, ranks, reports, and reviews. With it, companies can better understand market trends and product positioning and tailor product profiles to relevant searches, pushing their goods to the top and reaching more customers.

Demand Predictions

To determine the most profitable niche, you need to analyze market data comprehensively. This helps you see how your products fit the current market, track interest in products on Amazon, and identify which products are in highest demand. After detailed study, data extracted from the platform can help you improve your supply chain, optimize your assortment, manage inventory appropriately, and make better use of production resources.
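One rough way to turn repeated scrapes into a demand signal is to rank products by how quickly their review counts grow between two snapshots. Treating review growth as a proxy for sales is an assumption made for illustration (only some buyers leave reviews), and the product IDs and counts below are invented.

```python
from typing import Dict, List, Tuple


def demand_ranking(reviews_start: Dict[str, int], reviews_end: Dict[str, int],
                   days: int) -> List[Tuple[str, float]]:
    """Rank products by new reviews per day between two snapshots (a rough demand proxy)."""
    if days <= 0:
        raise ValueError("days must be positive")
    growth = []
    for product, end in reviews_end.items():
        start = reviews_start.get(product)
        if start is None:
            continue                      # not in the earlier snapshot
        growth.append((product, max(end - start, 0) / days))
    return sorted(growth, key=lambda item: item[1], reverse=True)


week_start = {"P1": 100, "P2": 500, "P3": 40}
week_end = {"P1": 170, "P2": 520, "P3": 40, "P4": 10}
print(demand_ranking(week_start, week_end, days=7))
```

Note that growth rate, not total reviews, drives the ranking: P1 outranks P2 despite having far fewer reviews overall.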

Want to Resume Your Business?

If you need to choose among Amazon scraping approaches, web scraping services are the clear winner. Unlike the other methods, they can deal with all the problems described above. A good web scraping service will gather content and deliver quality data regularly. Web scraping services employ professionals who are aware of the relevant legal restrictions and who won't have trouble with blocking.

It is more effective for your company to invest resources in your core business and outsource Amazon data collection to a third-party firm, which will perform the web scraping for you on an agreed timeline.

Conclusion

Amazon is the world's biggest online retailer, where shoppers start their product searches and increasingly feel confident buying what they need. E-commerce sellers must use data analytics to optimize their products and convert casual shoppers into loyal customers.

That's where Amazon data scraping comes in: it offers a wealth of data in one place that you can use to make critical business decisions. To avoid the problems that repeated queries or predictable behavior cause when scraping Amazon pages, get help from scraping experts like Actowiz Solutions!

Contact us for all your mobile app scraping and web scraping service requirements!
