#Scott Alexander

Text

Okay, guys, after reading a post by @centrally-unplanned I just took that ACX "AI Turing Test" that Scott Alexander did, and I am screaming, as the kids used to say.

You guys are way, way overthinking this.

I thought I would do better than average, and I guess I did; excluding three pictures I had seen before, I got 31/46 correct.

Not great if you're taking the SAT, but I feel like if I could call a roulette spin correctly 2 times out of 3 I could clean up in Vegas.
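For a rough sense of how far 31/46 is from coin-flipping, here is a minimal sketch. It assumes every picture is an independent 50/50 guess, which the real test isn't quite, and `binomial_tail` is just an illustrative helper name, not anything from the test itself.

```python
from math import comb


def binomial_tail(successes: int, trials: int, p: float = 0.5) -> float:
    """Probability of at least `successes` hits in `trials` independent guesses."""
    return sum(
        comb(trials, k) * p**k * (1 - p) ** (trials - k)
        for k in range(successes, trials + 1)
    )


# Score from the post: 31 correct out of 46 pictures not seen before.
accuracy = 31 / 46
# Assumption: a pure guesser is right half the time on each picture.
p_value = binomial_tail(31, 46)

print(f"accuracy: {accuracy:.3f}")
print(f"chance of 31 or more by pure guessing: {p_value:.4f}")
```

Under that assumption, the roulette comparison also works out: an even-money bet that hits 2 times out of 3 returns 2 × (2/3) − 1 = 1/3 of the stake per spin on average.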

So, what is the secret of my amazing, D+ performance?

You have to look at the use of color and composition as tools to draw the eye to points of interest.

AI is really bad at this, when left to its own devices.

For example, here:

Part of the reason to suspect that this is AI is the "AI house style" and the bad hands that I literally only noticed right this exact second as I was typing this sentence. Even if the hands were rendered correctly, I would still clock this as AI.

The focal point of this piece ought to be the face of the woman and the little dragon she is looking at (Just noticed the dragon's wings don't match up either), but take off your glasses or squint at this for a second:

Your eye is being drawn by the bright gold sparkles on the lower right side of the piece. That particular bright gold is only in that spot on the image, but there's no reason to look there; it's just an upper arm and an elbow. The bright light source highlighting the woman's horn separates it out as a point of interest.

Meanwhile, the weird aurora streaming out of the woman's face on the left side means that it is blending in with the background.

In other words, the way the image is composed and the subject matter suggest that your eye should be drawn here:

But the use of color suggests that you should look here:

That's a senseless place to draw the eye towards! It would be a really weird mistake for a human to make! In fact, I think there's a strong argument that the really close cropped picture of the face of the character is a strong improvement. It's still not a particularly good composition, but at least the color contrast now draws the eye to the proper points.

In fact, I would say that a good reason for my performance not being even better was this alarming statement at the start of the test:

I've tried to crop some pictures of both types into unusual shapes, so it won't be as easy as "everything that's in DALL-E's default aspect ratio is AI".

Uh...

So how about this one:

This is a lot better anatomically and in terms of the use of color and light to draw the eye towards sensible parts of the painting. The lighting makes pretty good sense in terms of coming from a particular direction, and it also draws the eye effectively to the face and the outstretched hand of the figure.

It's also a really flat and meaningless composition and subject matter that no Renaissance artist would have chosen. What is this angel doing, exactly? Our eye is drawn to the face and hand, and the figure is looking off towards the left side, at, uh, what exactly?

But then I thought, "Well, maybe Scott chopped out a giant chunk of the picture, and this is just a detail from, like, the lower right eighth of some giant painting with three other figures that makes total sense."

This makes sense as a piece of a larger human-made artwork, but if you tell me, "Nope, that's the whole thing and this is the original, un-cropped picture," I'd go, "Oh, AI, obviously."

All of the ones I had trouble with were AI art with good composition and use of color, and human ones with bad composition and use of color. For example, this one:

This has three solid points of interest arranged in an interesting relationship with different colors to block them out. I'd say the biggest tells are that the astronauts' feet are out of frame, which is a weird choice, and looking closely now, the landscape and smoke immediately to the right of the ship don't really make sense.

But again, I had to think, "Maybe Scott just cropped it weird and they had feet in the original picture."

Here's another problem:

StableDiffusion being bad at composition is such a known problem that there are a variety of tools which a person can use to manually block out the composition. In fact, let me try something.

I popped open Krita (Which now has a StableDiffusion plugin) and after literally dozens of generations and a couple of different models I landed on ZavyChromaXL with the following prompt:

concept art of two astronauts walking towards a spaceship on an alien planet, with a giant moon in th background, artstation, classic scifi, book cover

And this was the best I could do:

Not great, but Krita has a tool that lets you break an image into regions which each have different prompts, so I quickly blocked something out:

Each of those color blobs has a different part of the prompt, so the green region has "futuristic astronauts" the blue is the spaceship, the orange is the moon, grey is the ground and pink is the sky, which gives us:

Still way too much, so we can use Krita's adaptive patch tool and AI object removal to get:

I'm not saying it's high art, or even any good, but it's better than the stuff I was getting from a pure prompt, because a human did the composition.

But it's still so dominated by AI processes that it's fair to call it "AI Art".

Which makes me wonder how many of the AI pictures I called out as human-made only because one of the traits I was looking for, good composition, was in fact supplied by a human.

188 notes

Text

A friendly reminder that the joint Worm Fandom+Rationalism movement has killed over 38,615 people, exactly one of whom was a landlord. This is genuinely the most evil force in the world today. A dangerous cult movement is rapidly gaining control of both American youth culture and the U.S. government.

#wormblr#worm web serial#worm#worm fandom#hpmor#Harry potter and the methods of rationality#methods of rationality#elon musk#andrew yang#jd vance#zizians#ziz#scott Alexander#eliezer yudkowsky#pepfar#musk and usaid#usaid funding freeze#usaid#us aid#pmi#us politics#slate star codex#rationality#rational thinking#rationalism#rational#richard hanania#matthew yglesias#wildbow#rokos basilisk

51 notes

Text

Zack Beauchamp at Vox:

It’s been a rough week in the world of the online intellectual right, which is currently in the midst of two separate yet related blowups — both of which illustrate how the pressures of power are cracking the elite coalition that aligned behind President Donald Trump’s return to power. The first fight is really a struggle over who should determine the philosophical identity of MAGA, pitting a group of anti-woke writers against a wide group of illiberal or post-liberal figures. The lead figure in the anti-woke camp, the prominent pundit James Lindsay, has been attacking his enemies as the “woke right” for months. In his mind, this group’s emphasis on the importance of religion, national identity, and ethnicity is the mirror image of the left’s identity politics — and thus an existential threat both to American freedom and the MAGA movement’s success. In response, his targets on the right — which range from national conservatives to white nationalists — have started firing back aggressively, arguing that Lindsay is not only wrong but maliciously attempting to fracture the MAGA coalition. This might seem like a niche online fight, but given that niche online discourse has been a major influence on the second Trump administration’s thinking, it might end up mattering quite a bit. The same could be said about the second fight, which revolves around Curtis Yarvin — the neo-monarchist blogger who has influenced both Vice President JD Vance and DOGE. A recent post by rationalist author Scott Alexander accused Yarvin of “selling out” — aligning himself with Trump even though he had long denounced the kind of “authoritarian populism” that Trump embodies. Yarvin defended himself with some fairly bitter attacks on Alexander, drawing in defenders and critics from the broader right-wing universe in the process.

Each of these fights is telling in their own right. The “woke right” contretemps shows just how deep the divisions go inside the Trump world — between anti-woke liberals, on the one hand, and various different forms of “postliberals” on the other. The Yarvin argument is a revealing portrait of how easy it is to get someone to compromise their own beliefs in the face of polarization and proximity to power. But put together, they show us just how hard it is to go from an insurgent force to a governing one.

The “woke right” redux

The “woke right” debate first came on my radar back in December, when the anti-woke pundit James Lindsay tricked a Christian nationalist website, American Reformer, into publishing excerpts of The Communist Manifesto edited to sound like a critique of modern American liberalism. It might seem to make little sense to describe a 19th-century text on resistance to capitalism as an example of 21st-century identity politics. But Lindsay, who sees himself as a right-wing liberal, is using an idiosyncratic understanding of “wokeness” that equates it with collectivism — the idea that the politics should be understood through the lens of interests of groups, be it the proletariat or Black Americans, rather than treating all citizens purely as individuals. Thus, for Lindsay, communism is a form of wokeness, even if the term “woke” postdates Marx by nearly 200 years. This broad definition also allows there to be right-wing forms of wokeness. Neo-Nazism, Christian nationalism, Catholic integralism, even certain forms of anti-liberal conservative nationalism — all of these doctrines give significant weight to group identity in their understanding of what matters in the political realm. Thus, for Lindsay, they are threatening to American liberalism in exactly the same way as their left-wing peers.

“Woke Right are ‘right-wing’ people who have mostly adopted an identity-based victimhood orientation for themselves to bind together as a class,” he writes. “Like the Woke Left, then, they happily offer the trade-off usually used to describe Marxists: people who will ask you to trade some of your liberty so that they might hurt your enemies for you.” Personally, I find Lindsay’s definition of “wokeness” so broad that it ceases to operate as a meaningful category (if it ever was one in the first place). But the charge has clearly stung his antagonists on the right, where calling someone “woke” is basically the worst thing you can say about them. Prominent figures on the illiberal right, ranging from Tim Pool to Mike Cernovich to Anna Khachiyan, shot back at Lindsay — calling him a “grifter” out to undermine the MAGA movement. Meanwhile, Lindsay’s allies, including biologist Colin Wright and Babylon Bee CEO Seth Dillon, accused them of being the true traitors to MAGA. The most interesting intervention in this debate is an essay recently posted on X by the Israeli intellectual Yoram Hazony. Hazony’s main project, the National Conservatism conference, has served as a hub connecting various different strands of illiberalism to each other and to power. Vance, Tucker Carlson, and Sen. Josh Hawley (R-MO) have all given notable speeches there.

[...]

What the two fights reveal about the Trump era

Both the “woke right” and Yarvin debates revolve fundamentally around power — specifically, how it should be wielded once you have it. The “woke right” debate is, at heart, about what the ultimate ends of the Trump administration should be. While both sides agree that the “woke left” should be wiped out, they disagree on what an alternative vision should look like. Lindsay and his allies argue for a restoration of some kind of right-wing liberal individualism; Hazony and his camp believe that the task is replacing liberalism with some kind of hazy alternative rooted in religious or ethno-cultural identity. This debate is taking place on purely abstract grounds — there’s almost never any reference to concrete policy disagreements — but it reflects an assumption that there are very real implications of this argument for the next four years of American politics. Lindsay has repeatedly argued, in tweets and interviews, that the rise of the “woke right” threatens to derail the entire MAGA project and return power to the left. The Yarvin debate poses a related, but more introspective, question about power: How corrosive is it for intellectuals to be in proximity to it? Alexander, the most intellectually rigorous person in either debate, suggests the answer is “very.” In Yarvin, he sees someone who he long took seriously as tainted by access — by, for example, Vance citing Yarvin as an influence in a podcast appearance. Yarvin’s own conduct in their debate vindicates his assessment. Put together, these debates point us to two major themes worth watching throughout the remainder of the Trump administration. First, how much the administration’s policy choices intensify the fractures in its elite coalition.

Hazony is right that hostility to the left is what brought disparate groups together under the Trump banner. But now, in a world where the administration has to govern, some of those factions are bound to feel like they’re losing or even betrayed.

The so-called “woke right” and “anti-woke right” united to get Donald Trump elected last year. Now, they are fighting for the direction of the MAGA (and post-MAGA) movement.

#MAGA Cult#Curtis Yarvin#Donald Trump#James Lindsay#Postliberalism#Scott Alexander#J.D. Vance#Seth Dillon#Colin Wright#Mike Cernovich#Tim Pool#Anna Khachiyan#Yoram Hazony#MAGA on MAGA Violence

16 notes

Text

I've been feeling devastated about last week's disaster of a debate (among other political developments) and see it as evidence that Biden was never a fit candidate for reelection. And at this point I really don't think he has it in him to stick out a job like the presidency all the way until 2029. But I think a lot of people are really overreacting in terms of what kind or variety of weakness it exposed in Biden. I'm a little stunned by how many people -- not generally Republicans or anti-leftists or leftists who have a bias against Biden already, but moderate-left-ish types such as Scott Alexander and Kat Rosenfield -- seem convinced that the debate shockingly but obviously "proved" that Biden is completely senile, has a clinical level of dementia, is unfit to be president right at this moment (let alone for 4.5 more years), and obviously isn't acting as president but must be sitting around dazed while others do the work for him, and that the Biden team's insistence that Biden is fundamentally fit has now glaringly been exposed as a complete lie, etc.

One particular narrow range of skills was on display at the debate, and I'm not sure exactly what succinct term to use for it, but it was something like "smooth articulation ability", and it's something I think about a lot as a communicator in my own professional context. There have always been certain mental states I get into (often triggered by stress or sleep deprivation) where my words and sentences don't come out as clearly, get caught on the wrong beat in the moment and sidetracked, and struggle to wrap up without becoming run-ons that lack a conclusion; where I mumble and stammer easily; and where I have trouble recalling particular words and phrases on the fly. These contrast dramatically with my moments where the opposite is the case. This especially affects my teaching: it used to fairly often be the case that I had "bad days" where I could tell right from the start of the 75-minute class period that I wasn't going to be able to form thoughts as well as on my "good days". With more experience I've gradually learned how to minimize the "bad days", but I'm still prone to them if I'm not careful. Yet, even at my worst moments of this, it says nothing about my knowledge of the topic I'm teaching about, nor about my fitness in general. It's a very narrow aspect of my mental abilities.

Now one could point out that a huge part of being a politician is being an absolute world-class "smooth articulator". And that's true, and Biden certainly was once, and clearly old age has eroded his ability at this. But it's kind of beside the point when someone is suggesting that stumbling a lot at a debate is evidence of having dementia and being too old for one's job, other than that our being accustomed to politicians being extremely skilled at articulation is obfuscating the fact that for a typical person (whether old and senile or not), having to express one's ideas on the fly in the style of a presidential debate is incredibly difficult. I believe the great majority of adult humans -- including those who are dismissing Biden now, including a lot of the very intelligent and generally articulate among us, including myself -- would probably not be able to do much better than Biden did at that debate if we were placed in his position, and it doesn't say much about our ability to make decisions in the role of US president or about our dementia status.

All that said, what matters most in a presidential debate is the vibes each candidate gives off, and Biden definitely gave off "doddering old man" vibes in just about the worst way possible, which will certainly make a lot of people not feel okay about voting for him, whether or not they've seriously reflected on his capability of performing the actual non-public tasks required of a president.

#our current president#scott alexander#kat rosenfield#dementia#old age#teaching#yes i'm still alive#know it's been a while

39 notes

Text

The AI Art Turing Test Is Meaningless

Last year I tried the "AI art Turing Test" (here's a better link if you want to take it yourself), and I did pretty well in it. I could identify many pictures as immediately AI-generated. Multiple images had missing or additional fingers. Some had shadows in them but nothing to cast them. Some I recognised as real pictures and I could definitely rule out. One picture had a particular human touch put in by the painter as a way to "bump the lamp", what the kids today would call a "flex", so I could rule it out as AI-generated. Multiple images had weird choices of "materials", where a wooden object blended into metal, or cloth blended into flesh. Some had details that looked like a Penrose Triangle or Shepard's Elephant: Locally the features looked normal, but they didn't fit together. Sometimes there was a very obvious asymmetry that a human artist would have caught.

I could rule out some more pictures as definitely not AI-generated, because there was just no way to prompt for this kind of picture, and some I could rule out because there were features in the middle, and off to the sides, in a way that would have been difficult to prompt for. Other pictures just felt off in a way I can't put into words. Some were obviously rendered. One looked like watercolours, but with a chromatogram-like effect that I don't know how to achieve with watercolours. It could totally be done by mixing different types of paint, but the effect looked suspiciously like the receptive fields of neurons in the visual cortex.

And then I thought "Would an AI art guy prompt for this image? Would he select this output and post it?" Immediately, I could rule out several more images as human-generated. I could already get far enough with "What prompt would this be based on?", but "Would this be the kind of thing modern AI art guy would prompt for?" combined with "Would a modern AI art guy use that prompt to get a picture?" was really effective. Anything with a simple prompt like "Virgin Mary" or "Anime Girl on Dragon" was more likely to be AI art, and anything that had multiple points of interest and multiple little details that would be hard to describe succinctly was less likely to be AI art.

Finally, there were some pictures that looked "like a Monet", or perhaps like something by Berthe Morisot, Auguste Renoir, Louis Gurlitt, William Turner, John Constable, except... not. One time I thought a picture looked like a view William Turner would have painted, but in a different style. Were these pictures by disciples of famous painters? Lesser known pictures? Were these prompted "in the style of Monet" or "in an impressionistic style"? There are many possibilities. An impressionistic-looking painting may have been painted by a lesser known impressionist, somebody who studied under a famous impressionist, by an early to mid-20th century painter, or very recently. What if that's a Beltracchi? And then there was this one Miró that contained bits of the symbol language Miró had used. That had to be legit.

When I was unsure, I tried again to imagine what the prompt that could have produced a painting would have looked like. On several pictures that looked like digital painting (think Krita) or renders (think Blender/POV-Ray), I erred on the side of human-generated. Nobody prompts for "Blender".

I spent a lot of time on one particular picture. I correctly identified it as AI-generated, based on my immediate gut feeling, but I psyched myself out a bit. The shadows were off. There was nothing in the picture to cast these shadows. But what if the picture had been painted based on a real place, and there were tall trees just out of the frame? Well, in that case, it would look awkward. Why not frame it differently? What if the trees that cast the shadows were cropped out? In the end, I decided to go with generated. Make no mistake though: You could easily imagine a computer disregarding anything outside of the picture, and a human painting a real scene on a real location at a particular time of day, a scene that just happens to have a tree right outside of the picture frame. Would a painter include the shadows? A photographer would have no choice here.

This left several pictures that might as well have been generated, or might as well have been human-made, and several where there was no obvious problem, but they felt off. I suspected that one more of the landscapes was AI-generated, and I had incorrectly marked it as human, purely metagaming based on the structure of the survey. I was right, but I couldn't figure out which one.

In the end, I got most of the pictures right, and I was well-calibrated. I got two thirds of those where I thought it might go either way but I leaned slightly, and around 90% of those where I was reasonably sure. At least I am perfectly calibrated.
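A calibration check like the one described above amounts to grouping answers by how sure you were and comparing hit rates per group. Here is a minimal sketch; the tallies are made up purely for illustration (they are not the actual test answers), and `hit_rate_by_confidence` is a hypothetical helper name.

```python
from collections import defaultdict


def hit_rate_by_confidence(answers):
    """Group (confidence_label, was_correct) pairs and return the hit rate per label."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for label, correct in answers:
        totals[label] += 1
        hits[label] += int(correct)
    return {label: hits[label] / totals[label] for label in totals}


# Made-up tallies for illustration only, NOT the real answers from the test.
answers = (
    [("leaning", True)] * 8
    + [("leaning", False)] * 4
    + [("fairly sure", True)] * 18
    + [("fairly sure", False)] * 2
)

rates = hit_rate_by_confidence(answers)
for label, rate in rates.items():
    print(f"{label}: {rate:.0%} correct")
```

Being well-calibrated here just means the hit rate in each group roughly matches how confident that group felt.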

And then I asked myself: What is this supposed to prove? Is it fair to call it a Turing Test?

I can see when something is AI-generated easily enough, but not always. I can see where an AI prompter has different priorities from a modern painter, and where an AI prompter trying to re-create an older style has different priorities. This "Turing Test" is pitting the old masters against modern imitators, but it also pits bad contemporary art against bad imitations of bad contemporary art. It's not a test where you let a human paint something and let an AI paint something and compare.

As it stands now, the results of the AI art Turing tests do not tell us anything, and the test is not that difficult to pass. But what if it were? What could that tell us?

Just imagine that were the case: What's the difference between a blurry .jpg of a Rothko and a computer-generated blurry .jpg of a Rothko?

What if an AI prompter tells the AI to generate the picture so it looks aged, with faded bits and a cracked canvas? What if an AI prompter asks for a picture in the style of Monet? What if a prompter with medieval religious sensibilities asks for illustrations of the life of St. Francis of Assisi? You can easily psych yourself out taking the test, but you can also imagine a version of the test that is actually difficult.

If you control for all of these, maybe all that's left will be that eerie AI-generated feeling that I couldn't quite shake off, and the tendency of image synthesis systems to focus on the centre of the picture and to make even landscapes look like portraits. Maybe we can fix even that if we don't prompt with "trending on artstation" but with "Hieronymus Bosch", and then we will have a fair test. Or would it be a fair test if we pit a human against an AI? What would happen if you pitted me, armed with watercolours, charcoal, crayons, or acrylic paint, against Midjourney?

Or maybe it's a fool's errand, given that some people who took the test couldn't even notice the extra fingers. You can pass the political Turing test if you just pretend to be an un-informed voter, and AI art is probably already better at illustrating cover images for podcast episodes than an un-informed voter. And then it can generate the whole podcast.

8 notes

Text

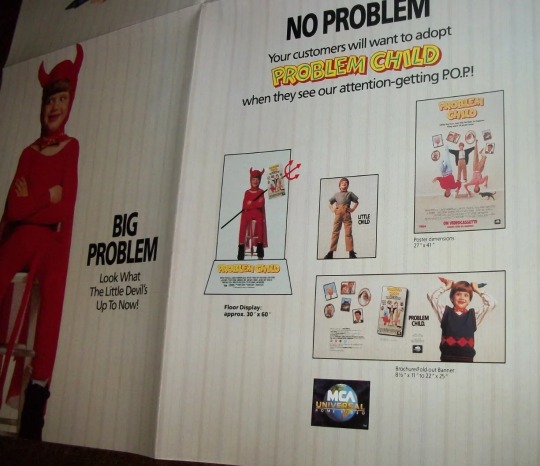

#Problem Child 1990#Problem Child#Dennis Dugan#Scott Alexander#Larry Karaszewski#Michael Oliver#John Ritter#Amy Yasbeck#90s

35 notes

Text

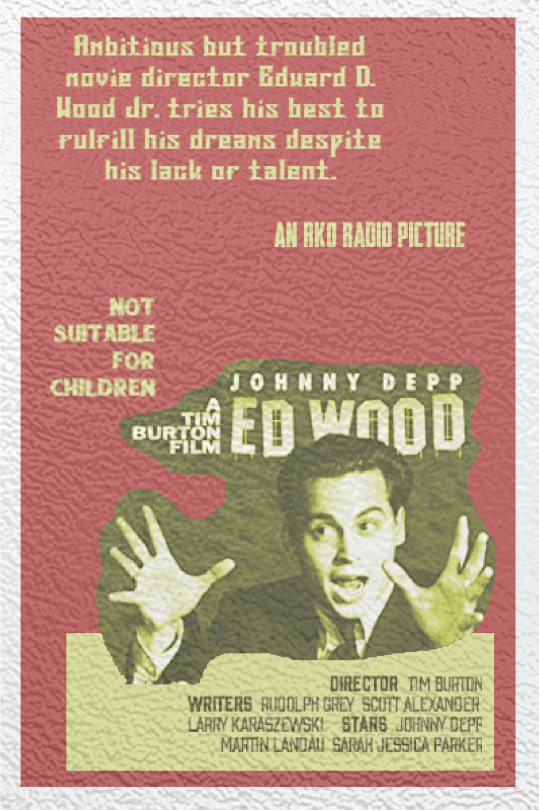

Ed Wood (1994, Tim Burton)

12/07/2024

#ed wood#film#tim burton#1994#cinematography#johnny depp#1995 Cannes Film Festival#academy awards#danny elfman#howard shore#1952#variety#biographical film#christine jorgensen#bela lugosi#glen or glenda#Dolores Fuller#independent film#bride of the monster#substance dependence#Apostoli di Gesù#plan 9 from outer space#Chiropractic#tom mason#maila nurmi#baptists#Special effect#orson welles#Las Vegas#scott alexander

9 notes

Text

Y'know, I have my disagreements with Scott Alexander, but I must express my gratitude for the review of "The Body Keeps the Score". It did set me on the path that was actually effective in resolving some of my psychopathology.

2 notes

Text

"And in the end, the new administration managed to outperform everyone’s expectations: it lasted an entire four months before the apocalypse descended in fire and blood upon America and the world."

—Scott Alexander, Unsong Interlude ת: Trump

2 notes

Text

There are so many skyscrapers. I’m an heir to Art Deco and the cult of progress; I should idolize skyscrapers as symbols of human accomplishment. I can’t. They look no more human than a termite nest. Maybe less. They inspire awe, but no kinship. What marvels techno-capital creates as it instantiates itself, too bad I’m a hairless ape and can take no credit for such things.

-Scott Alexander, Half An Hour Before Dawn In San Francisco

32 notes

Text

I keep noticing this thing happening where I'm introduced to something brand new that comes in many installments (often a musical artist or TV show) through one example/sample, really love it, and get into the new thing as a whole, and come away with the feeling that the first bit of it I became familiar with is my favorite that it has to offer.

Most recently, it was the musical group Men I Trust, whose track "The Landkeeper" from its very new album was playing on the radio, and I stopped transfixed (having been about to leave my car) to listen to the whole thing and made a mental note to myself that I had to follow up on days later to look up what song was playing on that station at that time (as it wasn't announced afterwards). I found the whole album it came from, all of which I consider very good, but my favorite track on it is still "The Landkeeper" (with "All My Candles", which I caught on the radio again sometime later in the week, and "Girl (2025)" being close contenders). Interestingly, I've tried their other albums and didn't particularly like them.

I'm pretty sure this has happened with more musical creators, albums, and TV shows than I can think of right now. To give a weird book example, my first glimpse at Lewis Carroll's Sylvie and Bruno books (published much later than his Alice books and with much less acclaim) was a passage very far into them, which I found delightfully and refreshingly hilarious in the typical Carroll fashion. I then enthusiastically plunged into reading the full Sylvie and Bruno books, which are not short -- one should be warned that each of them is about the length of Wonderland and Looking Glass combined! -- and found that they weren't quite that good and in fact the passage that I had randomly stumbled upon beforehand was probably my very favorite bit of dialog in the whole thing.

And heck, my introduction to Scott Alexander (and thus to the whole rationalist community) was his post "A Response to Apophemi on Triggers", and while I immediately liked the rest of his stuff a lot, for a long time almost none of it had quite the same charm to me as that Response to Apophemi piece, to the point that I clearly remember filling out a survey (maybe one of the early annual SSC readership surveys) where I had to select my favorite SSC post and chose that one as my favorite. I wouldn't say that it's stayed my favorite -- my tastes have evolved, and having like a thousand more Scott essays in existence does muddy things a lot -- but I still consider it in certain ways some of Scott's best classic stuff.

What's going on here? One possibility is that I tend to prefer first samples of things because emotions coming from the novelty of it in the first experience remain associated with that sample (i.e. musical track, episode, article, etc.).

But I think the more likely explanation is that the whole history of dozens of small works by a single creator, averaged together as a single unit, is (1) unlikely to score outstandingly highly in my preferences and (2) unlikely to grab my attention in the first place unless I have the luck of encountering a unit of that set of creations that is an outlier in how much I like it. There's a sort of Bayes / conditional probability thing going on here where the creators I wind up consciously exposing myself to are disproportionately going to be ones whose sample that I randomly came across made me really, really happy, at a level so extreme that a prolific creator's average work can hardly match it.

In other words, if I'd heard, say, "I Don't Like Music" on the radio from that same album of Men I Trust, I might have nodded and said, "That's pretty good stuff" and then not remembered long afterwards when back at home that I should look up what was playing on the radio at that time. Or if I'd first run across a different post of Scott's in early 2014, I would have probably said to myself, "That was really good and interesting" but then not successfully made a mental note to investigate the blog it came from four months later when my spring semester would be over, as I did singlehandedly due to the Response to Apophemi post.
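The selection effect described above can be sketched with a toy simulation. Everything in it is an assumption made for illustration: each creator has 20 works, my liking for each work is an independent standard-normal draw, and I only follow up on a creator when the one work I stumble across scores above a threshold. The function name and parameters are hypothetical.

```python
import random


def favorite_is_first_rate(n_creators=20000, works_per_creator=20,
                           hook_threshold=2.0, seed=0):
    """Among creators I follow up on, how often is the first-seen work my favorite?"""
    rng = random.Random(seed)
    followed = 0
    first_is_favorite = 0
    for _ in range(n_creators):
        # Assumed model: how much I like each work is an independent N(0, 1) draw.
        scores = [rng.gauss(0.0, 1.0) for _ in range(works_per_creator)]
        first = scores[0]  # the one work I happen to run into by chance
        if first < hook_threshold:
            continue  # pleasant enough, but I never look the creator up
        followed += 1
        if first == max(scores):
            first_is_favorite += 1
    return followed, first_is_favorite / followed


followed, rate = favorite_is_first_rate()
baseline = 1 / 20  # chance the first work is the favorite with no selection at all
print(f"creators followed up on: {followed}")
print(f"first-seen work is the favorite: {rate:.0%} (baseline {baseline:.0%})")
```

In this toy model the first-seen work ends up as the favorite far more often than the 1-in-20 baseline, even though it is an ordinary random draw; only the filter on which creators get followed changes.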

#preferences#men i trust#lewis carroll#scott alexander#bayes' theorem#one notable thing about equus asinus (the men i trust album)#is the man in the cover image#being one of the only men i've ever seen in media#whose torso looks a lot like mine#including in conventionally unattractive ways

4 notes

Text

I’m an expert on Nietzsche (I’ve read some of his books), but not a world-leading expert (I didn’t understand them).

3 notes

Text

Photographed by: Scott Alexander

3 notes
