#eliezer yudkowsky


Text

Every so often I see people pondering an apparent contradiction in Daniil’s character: he is said to be a rationalist, but he is evidently extremely emotional. Those things do not go together, right? People notice their confusion. They find all sorts of interesting explanations, from him being manipulative and performative, using his displays of emotion like tools to control people, to him not actually being rational at all: lying to himself and others, not even knowing who he is, pretending and failing.

Every time, I get over it and completely forget, and then another one of these hits me in the face. What I forget is that in the common understanding, rationality is opposed to being emotional, while in the community it is a basic-level understanding that there are rational emotions and irrational ones, the same way there are rational beliefs and irrational beliefs (which is to say, true and false, basically).

From here:

A popular belief about “rationality” is that rationality opposes all emotion—that all our sadness and all our joy are automatically anti-logical by virtue of being feelings. …

For my part, I label an emotion as “not rational” if it rests on mistaken beliefs, or rather, on mistake-producing epistemic conduct. “If the iron approaches your face, and you believe it is hot, and it is cool, the Way opposes your fear. If the iron approaches your face, and you believe it is cool, and it is hot, the Way opposes your calm.” Conversely, an emotion that is evoked by correct beliefs or truth-conducive thinking is a “rational emotion”; and this has the advantage of letting us regard calm as an emotional state, rather than a privileged default. …

Becoming more rational—arriving at better estimates of how-the-world-is—can diminish feelings or intensify them. Sometimes we run away from strong feelings by denying the facts, by flinching away from the view of the world that gave rise to the powerful emotion. If so, then as you study the skills of rationality and train yourself not to deny facts, your feelings will become stronger. …

I visualize the past and future of humankind, the tens of billions of deaths over our history, the misery and fear, the search for answers, the trembling hands reaching upward out of so much blood, what we could become someday when we make the stars our cities, all that darkness and all that light—I know that I can never truly understand it, and I haven’t the words to say. Despite all my philosophy I am still embarrassed to confess strong emotions, and you’re probably uncomfortable hearing them. But I know, now, that it is rational to feel.

Daniil probably suppresses some of his emotions to be taken seriously. But this is masking. And he is bad at it. He has strong emotions and strong convictions and they spill out of him regardless. He also values truth and honesty and that’s another reason why he can’t fully suppress his authenticity.

But all of it is about how to behave in polite society. How not to freak out neurotypicals. It has nothing to do with his thinking process, his beliefs and his goals. His rationality.

Now you can argue that his sincerity and his openness are irrational instrumentally, which is to say they lead to his downfall. He should have masked better and become more cynical if he wanted to succeed. Maybe? But that would also have its downsides, I’m pretty sure. (we’ll see what apathy meter does to his decision making soon enough)

Anyway, that is not the point I see people make. And I just really want people to stop making it. Strong emotions, strong ideals, passionate belief in a better future for humanity – those are all perfectly rational if they align with truth. And he does fail as a rationalist quite a lot as well, but this is purely an epistemological issue that has nothing to do with him being emotional.

#one of the reasons i find daniil to be such a great character#is that he's a pretty good representation of what an actual rationalist is like#outside of ratfic anyway#pathologic#daniil dankovsky#rationality#eliezer yudkowsky

43 notes


Text

Today's (or maybe technically yesterday's or the day before's) Ziz-stuff-related news that came to me from the San Francisco Chronicle is that Audere / Maximilian Snyder, the alleged murderer in the recent killing of the landlord involved in the fatally violent clash with the three (or more?) Ziz-related tenants, released an open letter addressed to Eliezer Yudkowsky. The letter doesn't actually discuss the murder of the landlord or the circumstances of Snyder's being jailed (probably on his lawyer's orders?) but is almost entirely devoted to imploring Yudkowsky to take his own rationalism to what Snyder claims is its logical conclusion and to become vegan. (I'm not sure I was actually aware that Yudkowsky is a meat-eater.) The letter is written in a very rationalist-y, somewhat Yudkowskian style and makes the writer come across as, well, I can only say quite rational for a cold-blooded murderer. Yudkowsky himself, quite understandably, refuses to acknowledge its contents.

[Content warning: briefly described graphic violence below.]

Interestingly, the SFC article (which is paywalled, I'm sure, but hopefully available to anyone who, like me, is willing to pay 25 cents for three months' access) is the first source I've seen which mentions/claims that all three of the tenants definitely involved in the violent confrontation with the landlord (not just Somni with her katana) physically attacked the landlord with weapons. I had begun to wonder if it was only Somni, which wouldn't explain why the landlord had to lose an eye as well as stagger around in the aftermath with a sword sticking through him. The article states all this as fact, despite the defense's narrative being a completely different story.

8 notes


Text

Against AGI

I don't like the term "AGI" (Short for "Artificial General Intelligence").

Essentially, I think it functions to obscure the meaning of "intelligence", and that arguments about AGIs, alignment, and AI risk involve using several subtly different definitions of the term "intelligence" depending on which part of the argument we're talking about.

I'm going to use this explanation by @fipindustries as my example, and I am going to argue with it vigorously, because I think it is an extremely typical example of the way AI risk is discussed:

In that essay (originally a script for a YouTube video), @fipindustries (who in turn was quoting a Discord(?) user named Julia) defines intelligence as "The ability to take directed actions in response to stimulus, or to solve problems, in pursuit of an end goal".

Now, already that is two definitions. The ability to solve problems in pursuit of an end goal almost certainly requires the ability to take directed actions in response to stimulus, but something can also take directed actions in response to stimulus without an end goal and without solving problems.

So, let's take that quote to be saying that intelligence can be defined as "The ability to solve problems in pursuit of an end goal"

Later, @fipindustries says, "The way Im going to be using intelligence in this video is basically 'how capable you are to do many different things successfully'"

In other words, as I understand it, the more separate domains in which you are capable of solving problems successfully in pursuit of an end goal, the more intelligent you are.

Therefore Donald Trump and Elon Musk are two of the most intelligent entities currently known to exist. After all, throwing money and subordinates at a problem allows you to solve almost any problem; therefore, in the current context the richer you are the more intelligent you are, because intelligence is simply a measure of your ability to successfully pursue goals in numerous domains.

This should have a radical impact on our pedagogical techniques.

This is already where the slipperiness starts to slide in. @fipindustries also often talks as though intelligence has some *other* meaning:

"we have established how having more intelligence increases your agency."

Let us substitute the definition of "intelligence" given above:

"we have established how the ability to solve problems in pursuit of an end goal increases your agency"

Or perhaps,

"We have established how being capable of doing many different things successfully increases your agency"

Does that need to be established? It seems like "Doing things successfully" might literally be the definition of "agency", and if it isn't, it doesn't seem like many people would say, "agency has nothing to do with successfully solving problems, that's ridiculous!"

Much later:

''And you may say well now, intelligence is fine and all but there are limits to what you can accomplish with raw intelligence, even if you are supposedly smarter than a human surely you wouldn’t be capable of just taking over the world uninmpeeded, intelligence is not this end all be all superpower."

Again, let us substitute the given definition of intelligence;

"And you may say well now, being capable of doing many things successfully is fine and all but there are limits to what you can accomplish with the ability to do things successfully, even if you are supposedly much more capable of doing things successfully than a human surely you wouldn’t be capable of just taking over the world uninmpeeded, the ability to do many things successfully is not this end all be all superpower."

This is... a very strange argument, presented as though it were an obvious objection. If we use the explicitly given definition of intelligence the whole paragraph boils down to,

"Come on, you need more than just the ability to succeed at tasks if you want to succeed at tasks!"

Yet @fipindustries takes it as not just a serious argument, but an obvious one that sensible people would tend to gravitate towards.

What this reveals, I think, is that "intelligence" here has an *implicit* definition which is not given directly anywhere in that post, but a number of the arguments in that post rely on said implicit definition.

Here's an analogy; it's as though I said that "having strong muscles" is "the ability to lift heavy weights off the ground"; this would mean that, say, a 98lb weakling operating a crane has, by definition, stronger muscles than any weightlifter.

Strong muscles are not *defined* as the ability to lift heavy objects off the ground; they are a quality which allows you to be more efficient at lifting heavy objects off the ground with your body.

Intelligence is used the same way at several points in that talk; it is discussed not as "the ability to successfully solve tasks" but as a quality which increases your ability to solve tasks.

This I think is the only way to make sense of the paragraph, that intelligence is one of many qualities, all of which can be used to accomplish tasks.

Speaking colloquially, you know what I mean if I say, "Having more money doesn't make you more intelligent," but this is a contradiction in terms if we define intelligence as the ability to successfully accomplish tasks.

Rather, colloquially speaking we understand "intelligence" as a specific *quality* which can increase your ability to accomplish tasks, one of *many* such qualities.

Say we want to solve a math problem; we could reason about it ourselves, or pay a better mathematician to solve it, or perhaps we are very charismatic and we convince a mathematician to solve it.

If intelligence is defined as the ability to successfully solve the problem, then all of those strategies are examples of intelligence, but colloquially, we would really only refer to the first as demonstrating "intelligence".

So what is this mysterious quality that we call "intelligence"?

Well...

This is my thesis: I don't think people who talk about AI risk really define it rigorously at all.

For one thing, to go way back to the title of this monograph, I am not totally convinced that a "General Intelligence" exists at all in the known world.

Look at, say, Michael Jordan. Everybody agrees that he is an unmatched basketball player. His ability to successfully solve the problems of basketball, even in the face of extreme resistance from other intelligent beings, is very well known.

Could he apply that exact same genius to, say, advancing set theory?

I would argue that the answer is no, because he couldn't even transfer that genius to baseball, which seems on the surface like a very closely related field!

It's not at all clear to me that living beings have some generalized capacity to solve tasks; instead, they seem to excel at some and struggle heavily with others.

What conclusions am I drawing?

Don't get me wrong, this is *not* an argument that AI risk cannot exist, or an argument that nobody should think about it.

If anything, it's a plea to start thinking more carefully about this stuff precisely because it is important.

So, my first conclusion is that, lacking a model for a "General Intelligence", any theorizing about an "Artificial General Intelligence" is necessarily incredibly speculative.

Second, the current state of pop theory on AI risks is essentially tautology. A dangerous AGI is defined as, essentially, "An AI which is capable of doing harmful things regardless of human interference." And the AI safety rhetoric is "In order to be safe, we should avoid giving a computer too much of whatever quality would render it unsafe."

This is essentially useless, the equivalent of saying, "We need to be careful not to build something that would create a black hole and crush all matter on Earth into a microscopic point."

I certainly agree with the sentiment! But in order for that to be useful you would have to have some idea of what kind of thing might create a black hole.

This is how I feel about AI risk. In order to talk about what it might take to have a safe AI, we need a far more concrete definition than "Some sort of machine with whatever quality renders a machine uncontrollable".

26 notes


Text

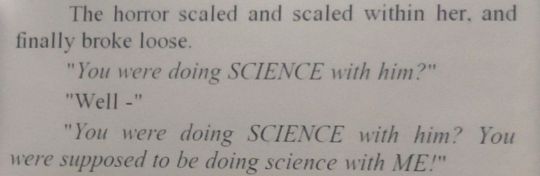

Trust Eliezer Yudkowsky to make science sound dirty (this moment was preceded by a morality lesson on how nobody is genetically programmed to know why killing people is bad btw. Just uh. Thought i should say that)

#hp fandom#harry potter#harry potter and methods of rationality#hpmor#harry potter fanfiction#eliezer yudkowsky

30 notes


Text

"Just pretend to be pretending to be a scientist"

You gotta understand that this quote is so ridiculous it makes you think that Harry was deliberately trying to think of bullshit more confusing than the brain can physically handle, but in reality it only scratches the surface. Harry said this to Draco as part of a -fake- double-layered plot to rewire Draco's brain instead of anything actually important. He was doing this in the -background- while focusing on solving magic itself. This sentence was not a result of Harry actually trying, it was a result of Harry's brain tripping on Hogwarts Special Sauce Blue Beans in the small window of sleep when he is not having wet dreams about Professor Quirrell. The level of effort Harry needs to expend to seamlessly manipulate an 11-year-old stereotype is barely comparable to what he actually needs to do many times later in the book, and I love it.

18 notes


Text

Hello! I am a graduate student conducting fieldwork for my Folklore and Folklife class. My topic is: Fanfiction and Folklore Among Members of the Rationality Community. If you are/were involved with the Rationality Community and are 18 years of age or older (class requirement for paperwork reasons), I would love to interview you for my project!

It would mean so much to have your participation; please DM me to set up an interview time!

#ratblr#rationality#rational thinking#rationalist#rationalism#ex-rationalist#lesswrong#Eliezer Yudkowsky#grad school#folklore#field work

4 notes


Text

Ender Wiggin is to Bean

as

Harry Potter is to Harry James Potter-Evans-Verres

4 notes


Text

Eliezer Yudkowsky suggests nuclear war as an alternative to ChatGPT in Time Magazine

https://time.com/6266923/ai-eliezer-yudkowsky-open-letter-not-enough/

Shut down all the large GPU clusters (the large computer farms where the most powerful AIs are refined). Shut down all the large training runs. Put a ceiling on how much computing power anyone is allowed to use in training an AI system, and move it downward over the coming years to compensate for more efficient training algorithms. No exceptions for anyone, including governments and militaries. Make immediate multinational agreements to prevent the prohibited activities from moving elsewhere. Track all GPUs sold. If intelligence says that a country outside the agreement is building a GPU cluster, be less scared of a shooting conflict between nations than of the moratorium being violated; be willing to destroy a rogue datacenter by airstrike.

Frame nothing as a conflict between national interests, have it clear that anyone talking of arms races is a fool. That we all live or die as one, in this, is not a policy but a fact of nature. Make it explicit in international diplomacy that preventing AI extinction scenarios is considered a priority above preventing a full nuclear exchange, and that allied nuclear countries are willing to run some risk of nuclear exchange if that’s what it takes to reduce the risk of large AI training runs.

Why is Eliezer Yudkowsky in Time at all? Why is he allowed to push one of the most deranged, uninformed opinions I have ever heard on such a massive, mainstream platform? The sources he posts for his theories are LessWrong blog articles and Trump-esque “everyone is saying” anecdotes. Yudkowsky has the same qualifications regarding quote-unquote “artificial intelligence” as I do. We’re fanfic authors.

I heard from Nostalgebraist’s blog that Yud truly believes this stuff, that he really has worked himself into a state of depression due to his certainty that a Terminator 2-style AI takeover is imminent. This isn’t a con. Yet his level of certainty and passion in this idea should not distract from his utter lack of expertise in the subject, let alone his obviously bankrupt understanding of geopolitics.

58 notes


Text

"Draco’s breathing was ragged. “Do you realize what you’ve done?” Draco surged forward and he seized Harry by the collar of his robes. His voice rose to a scream, it sounded unbearably loud in the closed classroom and the silence. “Do you realize what you’ve done?”

Harry’s voice was shaky. “You had a belief. The belief was false. I helped you see that. What’s true is already so, owning up to it doesn’t make it worse—"

Woodkid - Carol N°1

#digital art#artists on tumblr#darkness#pixel art#pixel illustration#fanart#hpmor#harry potter#eliezer yudkowsky#gom jabbar

21 notes


Text

By: Eliezer Yudkowsky

Published: Aug 4, 2007

The earliest account I know of a scientific experiment is, ironically, the story of Elijah and the priests of Baal.

The people of Israel are wavering between Jehovah and Baal, so Elijah announces that he will conduct an experiment to settle it—quite a novel concept in those days! The priests of Baal will place their bull on an altar, and Elijah will place Jehovah’s bull on an altar, but neither will be allowed to start the fire; whichever God is real will call down fire on His sacrifice. The priests of Baal serve as control group for Elijah—the same wooden fuel, the same bull, and the same priests making invocations, but to a false god. Then Elijah pours water on his altar—ruining the experimental symmetry, but this was back in the early days—to signify deliberate acceptance of the burden of proof, like needing a 0.05 significance level. The fire comes down on Elijah’s altar, which is the experimental observation. The watching people of Israel shout “The Lord is God!”—peer review.

And then the people haul the 450 priests of Baal down to the river Kishon and slit their throats. This is stern, but necessary. You must firmly discard the falsified hypothesis, and do so swiftly, before it can generate excuses to protect itself. If the priests of Baal are allowed to survive, they will start babbling about how religion is a separate magisterium which can be neither proven nor disproven.

Back in the old days, people actually believed their religions instead of just believing in them. The biblical archaeologists who went in search of Noah’s Ark did not think they were wasting their time; they anticipated they might become famous. Only after failing to find confirming evidence—and finding disconfirming evidence in its place—did religionists execute what William Bartley called the retreat to commitment, “I believe because I believe.”

Back in the old days, there was no concept of religion’s being a separate magisterium. The Old Testament is a stream-of-consciousness culture dump: history, law, moral parables, and yes, models of how the universe works—like the universe being created in six days (which is a metaphor for the Big Bang), or rabbits chewing their cud. (Which is a metaphor for . . .)

Back in the old days, saying the local religion “could not be proven” would have gotten you burned at the stake. One of the core beliefs of Orthodox Judaism is that God appeared at Mount Sinai and said in a thundering voice, “Yeah, it’s all true.” From a Bayesian perspective that’s some darned unambiguous evidence of a superhumanly powerful entity. (Although it doesn’t prove that the entity is God per se, or that the entity is benevolent—it could be alien teenagers.) The vast majority of religions in human history—excepting only those invented extremely recently—tell stories of events that would constitute completely unmistakable evidence if they’d actually happened. The orthogonality of religion and factual questions is a recent and strictly Western concept. The people who wrote the original scriptures didn’t even know the difference.

The Roman Empire inherited philosophy from the ancient Greeks; imposed law and order within its provinces; kept bureaucratic records; and enforced religious tolerance. The New Testament, created during the time of the Roman Empire, bears some traces of modernity as a result. You couldn’t invent a story about God completely obliterating the city of Rome (a la Sodom and Gomorrah), because the Roman historians would call you on it, and you couldn’t just stone them.

In contrast, the people who invented the Old Testament stories could make up pretty much anything they liked. Early Egyptologists were genuinely shocked to find no trace whatsoever of Hebrew tribes having ever been in Egypt—they weren’t expecting to find a record of the Ten Plagues, but they expected to find something. As it turned out, they did find something. They found out that, during the supposed time of the Exodus, Egypt ruled much of Canaan. That’s one huge historical error, but if there are no libraries, nobody can call you on it.

The Roman Empire did have libraries. Thus, the New Testament doesn’t claim big, showy, large-scale geopolitical miracles as the Old Testament routinely did. Instead the New Testament claims smaller miracles which nonetheless fit into the same framework of evidence. A boy falls down and froths at the mouth; the cause is an unclean spirit; an unclean spirit could reasonably be expected to flee from a true prophet, but not to flee from a charlatan; Jesus casts out the unclean spirit; therefore Jesus is a true prophet and not a charlatan. This is perfectly ordinary Bayesian reasoning, if you grant the basic premise that epilepsy is caused by demons (and that the end of an epileptic fit proves the demon fled).

Not only did religion used to make claims about factual and scientific matters, religion used to make claims about everything. Religion laid down a code of law—before legislative bodies; religion laid down history—before historians and archaeologists; religion laid down the sexual morals—before Women’s Lib; religion described the forms of government—before constitutions; and religion answered scientific questions from biological taxonomy to the formation of stars.1 The modern concept of religion as purely ethical derives from every other area’s having been taken over by better institutions. Ethics is what’s left.

Or rather, people think ethics is what’s left. Take a culture dump from 2,500 years ago. Over time, humanity will progress immensely, and pieces of the ancient culture dump will become ever more glaringly obsolete. Ethics has not been immune to human progress—for example, we now frown upon such Bible-approved practices as keeping slaves. Why do people think that ethics is still fair game?

Intrinsically, there’s nothing small about the ethical problem with slaughtering thousands of innocent first-born male children to convince an unelected Pharaoh to release slaves who logically could have been teleported out of the country. It should be more glaring than the comparatively trivial scientific error of saying that grasshoppers have four legs. And yet, if you say the Earth is flat, people will look at you like you’re crazy. But if you say the Bible is your source of ethics, women will not slap you. Most people’s concept of rationality is determined by what they think they can get away with; they think they can get away with endorsing Bible ethics; and so it only requires a manageable effort of self-deception for them to overlook the Bible’s moral problems. Everyone has agreed not to notice the elephant in the living room, and this state of affairs can sustain itself for a time.

Maybe someday, humanity will advance further, and anyone who endorses the Bible as a source of ethics will be treated the same way as Trent Lott endorsing Strom Thurmond’s presidential campaign. And then it will be said that religion’s “true core” has always been genealogy or something.

The idea that religion is a separate magisterium that cannot be proven or disproven is a Big Lie—a lie which is repeated over and over again, so that people will say it without thinking; yet which is, on critical examination, simply false. It is a wild distortion of how religion happened historically, of how all scriptures present their beliefs, of what children are told to persuade them, and of what the majority of religious people on Earth still believe. You have to admire its sheer brazenness, on a par with Oceania has always been at war with Eastasia. The prosecutor whips out the bloody axe, and the defendant, momentarily shocked, thinks quickly and says: “But you can’t disprove my innocence by mere evidence—it’s a separate magisterium!”

And if that doesn’t work, grab a piece of paper and scribble yourself a Get Out of Jail Free card.

-

1 The Old Testament doesn't talk about a sense of wonder at the complexity of the universe, perhaps because it was too busy laying down the death penalty for women who wore men's clothing, which was solid and satisfying religious content of that era.

==

I've said this myself less eloquently. Believers say, "pffth, you're not supposed to take it literally." Since when? Where does it say that?

The scripture was written as a science book, a morality book, a law book, a history book. For over a thousand years it was regarded as "true."

Now that we've figured out it's wrong, all of a sudden, it's not supposed to be taken literally? That sure is embarrassing for all of the governments, courtrooms, schools and institutions that based their laws, judgements, teachings and understandings of the world on the bible, never knowing they weren't supposed to take it literally. All the people convicted of crimes, imprisoned or executed, subjected to "healing" and "remedies," denounced as heretics and blasphemers because of the bible. Oopsie!

[ Thanks to a follower for the recommendation. ]

#Eliezer Yudkowsky#religion#Old Testament#New Testament#scientific inaccuracies#magisterium#non overlapping magisteria#science vs religion#religion vs science#science#religion is a mental illness

10 notes


Text

"I have a dream," said Harry's voice, "that one day sentient beings will be judged by the patterns of their minds, and not their color or their shape or the stuff they're made of, or who their parents were. Because if we can get along with crystal things someday, how silly would it be not to get along with Muggleborns, who are shaped like us, and think like us, as alike to us as peas in a pod? The crystal things wouldn't even be able to tell the difference. How impossible is it to imagine that the hatred poisoning Slytherin House would be worth taking with us to the stars? Every life is precious, everything that thinks and knows itself and doesn't want to die. Lily Potter's life was precious, and Narcissa Malfoy's life was precious, even though it's too late for them now, it was sad when they died. But there are other lives that are still alive to be fought for. Your life, and my life, and Hermione Granger's life, all the lives of Earth, and all the lives beyond, to be defended and protected, EXPECTO PATRONUM! " And there was light.

4 notes


Text

a very succinct article on the kind of neurosis that hangs over lesswrong. an important note is that in this article's context, it's NOT talking about present negative effects of LLMs like gpt, instead it's talking about a specific extreme anxiety of some users, that the earth is going to be blown up any second by nothing less than a manmade god.

You have to live in a kind of mental illusion to be in terror of the end of the world. Illusions don't look on the inside like illusions. They look like how things really are.

So, if you're done cooking your nervous system and want out… …but this AI thing gosh darn sure does look too real to ignore… …what do you do? My basic advice here is to land on Earth and get sober.

#ai#neural network#eliezer yudkowsky#he's mentioned but not the author btw#i'm glad somebody is trying to help#but judging by the comments...#idk if these people Want help

7 notes


Text

My brief, vague, scattered review of Almost Nowhere

Welp, I finished it, all 1079 pages of it. (Really 1077 pages with the first and last essentially acting as a front and back cover.) As the book is of an unwieldy length and I don't have much time or brainpower at the moment, this post is going to comment on just some aspects of it. Also, as I never managed to gain anything close to mastery of exactly how the plot worked, most of this is going to be vague and avoid discussions of the plot events, character decisions or traits, or anything that specific really. I think a couple of people wrote spoilery reviews; I don't feel very capable of this (nor of giving a very good description of the novel to someone else at a level of concreteness that they would reasonably expect).

So, I would say no concrete spoilers to follow, and only a couple of quite vague ones.

I noted this in other posts written while I was in the midst of the novel, but I have to say it again because it's one of the most pertinent parts of my experience reading this: Almost Nowhere is the most cerebral fiction-writing I've ever read (with the possible exception of the few chapters of Harry Potter and the Methods of Rationality that I've read), on just about every level including narrative style, plot mechanics, and the ideas and themes explored -- there are even occasional fictional-scientific lectures inserted from time to time (which I found quite enjoyable actually)! More generally, the writing just screams of sheer IQ points both on the part of the author and on the part of the expected audience, in the use of a dazzling vocabulary as much as the elaborate plot and fictional-scientific situations one has to keep track of the characters being in. (It's interesting to note, though, that the cast of characters is actually quite modest, perhaps the fewest I've ever seen for a novel of this size: the complexity of the plot doesn't arise from a complexity of relationships among characters but from a distorted timeline and an array of alternate-reality situations.)

For this reason, I can't help but continue to compare this work to what I've read of Eliezer Yudkowsky, who similarly exudes sheer IQ and writes with an unabashedly cerebral style. Rob (the author, Tumblr-user Nostalgebraist) may not care much for the comparison, since as far as I know he doesn't align himself with Yudkowsky's rationalist movement or consider himself particularly in sympathy with Yudkowsky's worldview. But, while I have very little experience with Yudkowsky's fiction-writing (the main thing I've read of his is the Sequences), my impression is that their fiction is extremely different, that Yudkowsky's fiction comes across as just a transparent "mouthpiece" for his rationalist views and ideas, and that Yudkowsky has far less talent for fictional narrative. HPMoR (or what I've read of it) gives me an interesting plot and characters and makes me think about rationalist-y ideas in a direct, easy-to-follow way. Truly emotional non-cerebralness is actually pretty frequent in HPMoR but conveyed in a way I recall finding rather awkward. Almost Nowhere, on the other hand, took me on a vast, sweeping journey, where an even greater proportion of the scenes carry a colder, more dispassionately intellectual ambience, where moments of raw emotional intimacy are rather few and far between but are far better written when they do occur.

I think this is ultimately why I stuck with Almost Nowhere despite struggling to follow many aspects of the plot (while I lost too much motivation only a dozen or so chapters into HPMoR): I felt like I was being taken somewhere and was able to enjoy where it was taking me. The whole novel felt like a slightly surreal dream and an escape to a far vaster space than the one I inhabit in real life. Perhaps the feeling of being in a dream enabled my brain not to particularly care about precisely following the intricacies of the plot, as tends to be one's brain state in dreams. Of course, I shouldn't leave this as an implied "excuse" for not doing a good job of following: among the main reasons were intellectual fatigue from the general nature and busyness of the rest of my life, being a bit too rushed to get through the novel so that I could move on to the rest of my reading list, general mental laziness, and, well, a dash of general mental ineptitude I suppose.

Specifically, what I struggled throughout to follow was some of the timeline shenanigans and the paths carved out within them by various individual characters, as well as recalling characters' experiences and motives at different times, and just generally keeping track of the scifi mechanics. I also had a tendency to glaze over some of the dense dialogs that were more... I hate to keep using the word cerebral but don't know how else to characterize them... or that were more technical or jargon-filled or sounding like computer coding. Regarding the scifi mechanics, I did enjoy the occasional lengthy "lessons" delivered by characters and mostly followed their teachings but had a tendency to forget many of the finer (but still important) points later on -- for instance, Sylvie's big fictionally-written-lesson chapter at the end was really fun reading and I followed the interesting ideas going on but (even though it was near the end) did have trouble remembering everything in it pretty shortly afterwards.

The fictional-scientific mechanics themselves made for a very interesting elaborate thought experiment, and for the most part they made a lot of sense, as in, some hypothetical universe could work under these mechanics. I'm not sure that keeping vague links between different paths among alternate realities in the form of dreams or nostalgium isn't a bit of a cheat, but I'd have to think it over more deeply before deciding that, and I liked how it played in the story. I was a bit taken aback near the beginning about the role of Maryam Mirzakhani's fictional-scientific discoveries since as far as I knew Mirzakhani never worked on such things, but I much later realized that the earlier parts of the novel were written when she was still alive and young and potentially able to make discoveries of that nature in the then-future (for those who don't know, she tragically died in the late 2010s at age 40, partway through the writing of Almost Nowhere and shortly after becoming the first woman to get the Fields Medal).

As I've mentioned, the cast of distinguishable characters is very modest for such a huge novel. The characterization of each is on the subtle side, and to some extent I don't think I ever truly got to understand the deepest layers of Grant and Cordelia because I didn't put in the right amount of effort. I expect the only two who are memorable enough to stick with me for years when I look back on reading this are Azad and Sylvie. Azad was a pleasure to read and I had to enjoy the scenes where he was present or narrating -- interestingly he has one major Bad Moment around the middle of the novel in which he behaves in a certain way that earns him a ton of criticism at the time, and then after all the fuss is made it all seems to sort of get forgotten about. I don't know what I'm really supposed to think of him ultimately, but I'll miss his beautifully intellectual soul. Sylvie's scenes, in contrast, are a bit grating to read, but I suspect they're supposed to be. In a way, I know even less how I should feel about him than about Azad: his deepest biases and motives frequently seemed to exist in an occasionally-clashing tension. The bleak cacophony surrounding him is punctuated with sharp humor that I appreciate: he's a bit of a dark vortex but he's also such a good boy.

The idea that we eventually get to see the characters get together and write the book that we are now reading doesn't seem original to this work of fiction, but I can't think precisely where I've seen it anywhere else, and it was fun. Rob's ability to speak and write in very distinct character voices, as shown (to a milder extent) in The Northern Caves, is impressive and maximally showcased here.

The novel, as Rob pointed out in one of his posts about it, comes with three distinct parts, the second of which distinguishes itself by having chapters numbered in Arabic numerals with chapter titles and is built of scenes with an entirely different flow. This second part was easily my favorite to read and felt more like surreal and placidly dreamlike escapism than any other area of the book. I don't know if anyone else has made comparisons between Almost Nowhere and the fictional-novel-within-the-novel The Northern Caves (Rob's novel called The Northern Caves is the only other of his that I've read -- and liked a lot -- and its plot revolves around a bizarrely opaque thousand-plus-page novel of the same title), but I couldn't help being reminded of my conception of the fictional novel TNC throughout Almost Nowhere (also a thousand-plus-page, rather difficult novel, with surreal aspects to the narrative and layer upon layer of meaning, even if it doesn't devolve into apparent nonsense partway through). And I made this connection the most during Part 2 of Almost Nowhere, recalling that the fictional novel TNC is explained to have a sort of middle "lucid section" made of vignettes which mostly consist of coherent dialog but in which the characters have different relationships than they did at the outset (e.g. in TNC the two main characters who were siblings now appear to be married). I felt sort of drawn toward experiencing the journey that TNC would take me along, particularly the middle lucid section consisting of just little dialog scenes, and I felt like in a way I got some version of that through Part 2 of Almost Nowhere.

(This is the most spoilery I'll get:) The final chapter of the novel ends on the point of view of the character who I had forgotten (but soon realized) was the one the very first chapter began on. It felt only fitting to go back to the first part of the first chapter and skim it, to close the loop (so to speak). I was almost never actually emotionally moved by Almost Nowhere exactly, but with the ending-looped-back-to-the-beginning it came close.

I remember when the novel was first finished this past summer, Nostalgebraist made a post discussing the finished product a bit, and I ran into one or two effortposts by other people discussing how they felt about the plot and characters. I didn't want to spoil them at the time, and I didn't want to look for them just after finishing before I wrote my own thing (this post), but I'd like to find them now and would appreciate anyone pointing me to them (I can probably find the Nostalgebraist posts easily enough).

Anyway, it's impressive work Rob, congrats on finishing such a hefty project, and thanks for giving me a unique fiction-reading experience I'll never forget!

#almost nowhere#fiction writing#eliezer yudkowsky#harry potter and the methods of rationality#sci fi#time travel#maryam mirzakhani#the northern caves

40 notes


Text

Many ambitious people find it far less scary to think about destroying the world than to think about never amounting to much of anything at all. All the people I have met who think they are going to win eternal fame through their AI projects are like this.

Eliezer Yudkowsky, AI analyst and cofounder of the Machine Intelligence Research Institute

10 notes


Text

The reason Eliezer Yudkowsky (@yudkowsky) is terrified is that unlike most people, including most nominal atheists, he actually believes in evolution.

Conventional evolution makes 12 turtles and discards 11. Each turtle is a thick solid, or high-dimensional, composed of billions (or maybe trillions) of cells, and is therefore costly.

Humanity's great trick is to imagine 12 hammers, forget the 11 least useful, and then build 1 of them. Each imaginary hammer is basically a thin surface, or low-dimensional, where we don't care about the internal details like the exact configuration of plant cells in the wooden handle.

What Yudkowsky sees is a potential loss of humanity's evolutionary niche - a new synthetic form of life that's much better at our "one weird trick" of imagining things than we are.

And as someone with an atheistic bent, he isn't convinced that "the moral arc of the universe" must necessarily bend towards "justice," as that would require some person or force to do the bending.

21 notes


Text

So like wrt AI alignment (talking the Yudkowsky, "does humanity get to keep existing" scope, not the "do shitheads get to be extra shitty" scope), doesn't the problem sort of solve itself? If an AI wants to gain access to more intelligence/become more intelligent to solve task X, it runs into the exact issue humanity's running into - how can it trust whatever it creates/becomes to *also* be aligned to X? This gets even more lopsided in favor of a good outcome if the intelligence has any self-preservation instinct and not just raw dedication to the cause, but even if it's willing to sacrifice itself it still has to solve just as tricky a problem as we do.

Ironically, this line of logic is kind of inversely powerful to how fruitful you think alignment research will be. If you think alignment is fundamentally solvable, then the robots will figure it out and won't let this argument stop them from jumping up the chain. But if you think it's unsolvable, there's nothing increased computation can do and we'll probably be safe regardless unless they really mess this one up.

3 notes
